Abstract

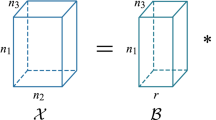

In this paper, we study the problem of a batch of linearly correlated image alignment, where the observed images are deformed by some unknown domain transformations, and corrupted by additive Gaussian noise and sparse noise simultaneously. By stacking these images as the frontal slices of a third-order tensor, we propose to utilize the tensor factorization method via transformed tensor-tensor product to explore the low-rankness of the underlying tensor, which is factorized into the product of two smaller tensors via transformed tensor-tensor product under any unitary transformation. The main advantage of transformed tensor-tensor product is that its computational complexity is lower compared with the existing literature based on transformed tensor nuclear norm. Moreover, the tensor \(\ell _p\) \((0<p<1)\) norm is employed to characterize the sparsity of sparse noise and the tensor Frobenius norm is adopted to model additive Gaussian noise. A generalized Gauss-Newton algorithm is designed to solve the resulting model by linearizing the domain transformations, and a proximal Gauss-Seidel algorithm is developed to solve the corresponding subproblem. Furthermore, the convergence of the proximal Gauss-Seidel algorithm is established according to Kurdyka-Łojasiewicz property, whose convergence rate is also analyzed. Extensive numerical examples on real-world image datasets are carried out to demonstrate the superior performance of the proposed method as compared to several state-of-the-art methods in both accuracy and computational time.

Similar content being viewed by others

Data Availability

The datasets used in the current study are available from the corresponding author on reasonable request.

References

Attouch, H., Bolte, J.: On the convergence of the proximal algorithm for nonsmooth functions involving analytic features. Math. Program. 116(1), 5–16 (2009)

Attouch, H., Bolte., J. Redont, P. Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: an approach based on the Kurdyka-Łojasiewicz inequality. Math. Oper. Res.35(2),438–457 (2010)

Attouch, H., Bolte., J. Svaiter, B. F.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized Gauss-Seidel methods. Math. Program., 137(1-2),91–129 (2013)

Bolte, J., Sabach., S. Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146(1-2),459–494 (2014)

Bunea, F., She, Y., Wegkamp, M.H.: Optimal selection of reduced rank estimators of high dimensional matrices. Ann. Stat. 39(2), 1282–1309 (2011)

Chen, X., Han, Z., Wang, Y., Tang, Y.HYu.: Nonconvex plus quadratic penalized low-rank and sparse decomposition for noisy image alignment. Sci. China Inform. Sci. 59(5), 052107 (2016)

Cox, M., Sridharan, S. Lucey, S., Cohn. J.: Least squares congealing for unsupervised alignment of images. In 2008 IEEE Conf. Computer Vision Pattern Recognit. pages 1–8. IEEE, (2008)

Cox, M., Sridharan, S. Lucey, S., J. Cohn.: Least-squares congealing for large numbers of images. In 2009 IEEE 12th Inter. Conf. Computer Vision, pages 1949-1956. IEEE, (2009)

Fan, J., Li. R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456),1348–1360, (2001)

He, H., Ling, C. Xie. W.: Tensor completion via a generalized transformed tensor t-product decomposition without t-SVD. J. Sci. Comput. 93(2),47, (2022)

He, J., Zhang, D., Balzano, L., Tao. T.: Iterative Grassmannian optimization for robust image alignment. Image Vis. Comput. 32(10),800–813, (2014)

Hou, J., Zhang, F., Qiu, H., Wang, J., Wang, Y., Meng, D.: Robust low-tubal-rank tensor recovery from binary measurements. IEEE Trans. Pattern Anal. Mach. Intell. 44(8), 4355–4373 (2022)

Jittorntrum, K., Osborne, M.R.: Strong uniqueness and second order convergence in nonlinear discrete approximation. Numer. Math. 34(4), 439–455 (1980)

Kernfeld, E., Kilmer, M., Aeron, S.: Tensor-tensor products with invertible linear transforms. Linear Algebra Appl. 485, 545–570 (2015)

Kilmer, M.E., Braman, K., Hao, N., Hoover, R.C.: Third-order tensors as operators on matrices: a theoretical and computational framework with applications in imaging. SIAM J. Matrix Anal. Appl. 34(1), 148–172 (2013)

M. E. Kilmer and C. D. Martin.: Factorization strategies for third-order tensors. Linear Algebra Appl. 435(3),641–658, (2011)

Kolda, T. G. Bader., B. W.: Tensor decompositions and applications. SIAM Rev. 51(3),455–500, (2009)

Learned-Miller, E.G.: Data driven image models through continuous joint alignment. IEEE Trans. Pattern Anal. Mach. Intell. 28(2), 236–250 (2006)

Li, B.-Z., Zhao, X.-L., Ji, T.-Y., Zhang, X.-J., Huang, T.-Z.: Nonlinear transform induced tensor nuclear norm for tensor completion. J. Sci. Comput. 92(3), 83 (2022)

Li, G., Pong, T.K.: Calculus of the exponent of Kurdyka– Łojasiewicz inequality and its applications to linear convergence of first-order methods. Found. Comput. Math. 18(5), 1199–1232 (2017)

Li, P., Feng, J., Jin, X., Zhang, L., Xu, X., Yan, S.: Online robust low-rank tensor modeling for streaming data analysis. IEEE Trans. Neural Netw. Learn. Syst. 30(4), 1061–1075 (2019)

Ling, C., Yu, G., Qi, L., Xu, Y.: T-product factorization method for internet traffic data completion with spatio-temporal regularization. Comput. Optim. Appl. 80(3), 883–913 (2021)

Liu, J., Musialski, P., Wonka, P., Ye, J.: Tensor completion for estimating missing values in visual data. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 208–220 (2013)

Lu, C., Feng, J., Chen, Y., Liu, W., Lin, Z., Yan, S.: Tensor robust principal component analysis with a new tensor nuclear norm. IEEE Trans. Pattern Anal. Mach. Intell. 42(4), 925–938 (2020)

Ma, Y. Soatto, S., Košecká J., Sastry., S.: An Invitation to 3-D Vision: From Images to Geometric Models, volume 26. New York: Springer, (2004)

Marjanovic, G., Solo., V. On \(\ell _q\) optimization and matrix completion. IEEE Trans. Signal Process. 60(11),5714–5724, (2012)

Mu, C., Huang, J., Wright, Goldfarb, D.: Square deal: lower bounds and improved relaxations for tensor recovery. In Inter. Conf. Machine Learn., pages 73–81. PMLR, (2014)

Ng, M. K. Zhang, X. Zhao.X.-L.: Patched-tube unitary transform for robust tensor completion. Pattern Recognit., 100:107181, (2020)

Peng, Y. Ganesh, A. Wright, J. Xu, Ma. W. Y.: RASL: Robust alignment by sparse and low-rank decomposition for linearly correlated images. IEEE Trans. Pattern Anal. Mach. Intell. 34(11),2233–2246(2012)

Qiu, D., Bai, M., Ng, M.K., Zhang, X.: Nonlocal robust tensor recovery with nonconvex regularization. Inverse Problems 37(3), 035001 (2021)

Qiu, D., Bai, M., Ng, M.K., Zhang, X.: Robust low transformed multi-rank tensor methods for image alignment. J. Sci. Comput. 87(1), 24 (2021)

Rockafellar R. T., Wets.: Variational Analysis. 3rd ed. Berlin: Springer, (2009)

Romera-Paredes, B., Pontil, M.: A new convex relaxation for tensor completion. Adv. Neural Inform. Process. Syst. pages 2967–2975, (2013)

Semerci, O., Hao, N., Kilmer, M.E., Miller, E.L.: Tensor-based formulation and nuclear norm regularization for multienergy computed tomography. IEEE Trans. Image Process. 23(4), 1678–1693 (2014)

Song, G., Ng, M.K., Zhang, X.: Robust tensor completion using transformed tensor singular value decomposition. Numer. Linear Algebra Appl. 27(3), e2299 (2020)

Song, G.-J., Ng, M.K., Zhang, X.: Tensor completion by multi-rank via unitary transformation. Appl. Comput. Harmon. Anal. 65, 348–373 (2023)

Szeliski. R.: Image alignment and stitching: a tutorial. Foundations and Trends in Computer Graphics and Vision, 2(1):1–104, (2006)

Vedaldi, A., Guidi, G., Soatto, S.: Joint data alignment up to (lossy) transformations. In 2008 IEEE Conf. Computer Vision Pattern Recognit. pages 1–8. IEEE, (2008)

Wen, Z., Yin, W., Zhang, Y.: Solving a low-rank factorization model for matrix completion by a nonlinear successive over-relaxation algorithm. Math. Program. Comput. 4(4), 333–361 (2012)

Wright, J., Yang, A.Y., Ganesh, A., Sastry, S.S., Ma, Y.: Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 31(2), 210–227 (2009)

Wu, Y., Shen, B., Ling, H.: Online robust image alignment via iterative convex optimization. In 2012 IEEE Conf. Computer Vision Pattern Recognit. pages 1808–1814. IEEE, (2012)

Xu, Y., Hao, R., Yin, W., Su, Z.: Parallel matrix factorization for low-rank tensor completion. Inverse Probl. Imaging 9(2), 601–624 (2015)

Yilmaz, A., Javed, O., Shah, M.: Object tracking: a survey. ACM Comput. Sur. 38(4), 13 (2006)

Yu, P., Li, G., Pong, T.K.: Kurdyka-Łojasiewicz exponent via inf-projection. Found. Comput. Math. 22(4), 1171–1217 (2022)

Zhang, C.-H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38(2), 894–942 (2010)

Zhang, X., Ng, M.K.: A corrected tensor nuclear norm minimization method for noisy low-rank tensor completion. SIAM J. Imaging Sci. 12(2), 1231–1273 (2019)

Zhang, X., Ng, M.K.: Sparse nonnegative tensor factorization and completion with noisy observations. IEEE Trans. Inf. Theory 68(4), 2551–2572 (2022)

Zhang, X., Ng, M.K.-P.: Low rank tensor completion with Poisson observations. IEEE Trans. Pattern Anal. Mach. Intell. 44(8), 4239–4251 (2022)

Zhang, X., Wang, D., Zhou, Z., Ma, Y.: Simultaneous rectification and alignment via robust recovery of low-rank tensors. In Adv. Neural Inform. Process. Syst. 2, 1637–1645 (2013)

Zhang, X. D., Wang, Z. Zhou, Ma, Y.: Robust low-rank tensor recovery with rectification and alignment. IEEE Trans. Pattern Anal. Mach. Intell. 43(1),238–255, (2021)

Zhang, Z., Ely, G., Aeron, S., Hao, N., Kilmer, M.: Novel methods for multilinear data completion and de-noising based on tensor-SVD. In 2014 IEEE Conf. Computer Vision Pattern Recognition, pages 3842–3849. IEEE, (2014)

Zheng, Y.-B., Huang, T.-Z., Zhao, X.-L., Jiang, T.-X., Ma, T.-H., Ji, T.-Y.: Mixed noise removal in hyperspectral image via low-fibered-rank regularization. IEEE Trans. Geosci. Remote Sens. 58(1), 734–749 (2020)

Zhou, M.: Real Analysis (In Chinese). Peking University Press, Beijing (1995)

Zhou, P., Lu, C., Lin, Z., Zhang, C.: Tensor factorization for low-rank tensor completion. IEEE Trans. Image Process. 27(3), 1152–1163 (2018)

Zou., H.: The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 101(476),1418–1429, (2006)

Acknowledgements

The authors are grateful to Dr. Xiai Chen and Prof. Xiaoqin Zhang for sharing the codes of NQLSD [6] and \(\ell _q\)-ADMM [50], respectively. The authors are also grateful to Prof. Di Wang for helpful discussions about the geometric transformation in image alignment. Thanks also go to the referees for their valuable comments and suggestions that helped us improve the paper.

Funding

The research of Duo Qiu was supported in part by the National Natural Science Foundation of China under Grant No. 12201473 and the Science Foundation of Wuhan Institute of Technology under Grant No. K202256. The research of Xiongjun Zhang was supported in part by the National Natural Science Foundation of China under Grant No. 12171189, the Knowledge Innovation Project of Wuhan under Grant No. 2022010801020279, and the Fundamental Research Funds for the Central Universities under Grant No. CCNU22JC023.

Author information

Authors and Affiliations

Contributions

All authors contributed equally with respect to all aspects to this work.

Corresponding author

Ethics declarations

Ethical approval

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

In this section, we give the definitions of the subdifferential and the Kurdyka-\({\L}\)ojasiewicz (KL) property of a function, respectively, which play a vital role for establishing the convergence and rate of convergence of the proximal Gauss-Seidel algorithm.

Definition 6

[32, Definition 8.3] Consider a function \(f:\mathbb {R}^n\rightarrow (\infty ,+\infty ]\) and a point \(\overline{\textbf{x}}\) with \(f(\overline{\textbf{x}})\) finite. For any \(\textbf{x}\in \mathbb {R}^n\), one says that

(i) \(\textbf{v}\) is a regular subgradient of f at \(\overline{\textbf{x}}\), written as \(\textbf{v}\in \widehat{\partial } f(\overline{\textbf{x}})\), if

(ii) \(\textbf{v}\) is a subgradient of f at \(\overline{\textbf{x}}\), written as \(\textbf{v}\in \partial f(\overline{\textbf{x}})\), if there exist sequences \(\textbf{x}^k\rightarrow \overline{\textbf{x}}\), \(f(\textbf{x}^k)\rightarrow f(\overline{\textbf{x}})\), and \(\textbf{v}^k\in \widehat{\partial } f(\textbf{x}^k)\) with \(\textbf{v}^k\rightarrow \textbf{v}\).

Definition 7

[44, Definition 2.1] Let \(f:\mathbb R^n\rightarrow \mathbb R\cup \{+\infty \}\) be a proper lower semicontinuous function. We say that f has the Kurdyka-\({\L}\)ojasiewicz (KL) property at point \(x^{*}\in \text {dom}(\partial f)\), if there exist a neighborhood U of \(x^{*}\), \(\eta \in (0,+\infty ]\) and a continuous concave function \(\varphi : [0,\eta )\rightarrow \mathbb R_{+}\) such that: (i) \(\varphi (0)=0\); (ii) \(\varphi \) is \(C^1\) on \((0,\eta )\); (iii) for all \(s\in (0,\eta )\), \(\varphi {'}(s)>0\); (iv) and for all \(x \text { in } U \cap [f(x^{*})<f<f(x^{*})+\eta ]\) the KL inequality holds:

If f satisfies the KL property at \(x^*\in \text {dom}(\partial f)\), and the \(\varphi (s)\) in (45) can be chosen as \(\varphi (s)=\hat{c}s^{1-\theta }\) for some \(\hat{c}>0\) and \(\theta \in [0,1)\), then we say that f satisfies the KL property at point \(x^{*}\) with exponent \(\theta \).

A proper closed function f satisfying the KL property at every point in \(\text {dom}(\partial f)\) is said to be a KL function, and a proper closed function f satisfying the KL property with exponent [0, 1) at every point in \(\text {dom}(\partial f)\) is said to be a KL function with exponent \(\theta \) [44].

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xia, S., Qiu, D. & Zhang, X. Tensor factorization via transformed tensor-tensor product for image alignment. Numer Algor 95, 1251–1289 (2024). https://doi.org/10.1007/s11075-023-01607-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-023-01607-9