Abstract

We consider the time discretization of a linear parabolic problem by the discontinuous Galerkin (DG) method using piecewise polynomials of degree at most r − 1 in t, for r ≥ 1 and with maximum step size k. It is well known that the spatial \(L_2\)-norm of the DG error is of optimal order \(k^{r}\) globally in time, and is, for r ≥ 2, superconvergent of order \(k^{2r-1}\) at the nodes. We show that on the \(n\)th subinterval \((t_{n-1},t_n)\), the dominant term in the DG error is proportional to the local right Radau polynomial of degree r. This error profile implies that the DG error is of order \(k^{r+1}\) at the right-hand Gauss–Radau quadrature points in each interval. We show that the norm of the jump in the DG solution at the left end point \(t_{n-1}\) provides an accurate a posteriori estimate for the maximum error over the subinterval \((t_{n-1},t_n)\). Furthermore, a simple post-processing step yields a continuous piecewise polynomial of degree r with the optimal global convergence rate of order \(k^{r+1}\). We illustrate these results with some numerical experiments.


1 Introduction

Consider an abstract, linear initial-value problem

\[u'(t)+Au(t)=f(t)\quad\text{for } 0<t\le T,\qquad u(0)=u_0.\tag{1}\]

We assume a continuous solution \(u:[0,T]\to \mathbb {L}\), with \(u(t)\in \mathbb {H}\) if t > 0, for two Hilbert spaces \(\mathbb {L}\) and \(\mathbb {H}\) with a compact and dense imbedding \(\mathbb {H}\subseteq \mathbb {L}\). By using the inner product 〈⋅,⋅〉 in \(\mathbb {L}\) to identify this space with its dual \(\mathbb {L}^{*}\), we obtain in the usual way an imbedding \(\mathbb {L}\subseteq \mathbb {H}^{*}\). The linear operator \(A:\mathbb {H}\to \mathbb {H}^{*}\) is assumed to be bounded and self-adjoint, as well as strictly positive-definite. For instance, if \(A=-\nabla^{2}\), so that (1) is the classical heat equation on a bounded Lipschitz domain \({\Omega }\subset \mathbb {R}^{d}\) where d ≥ 1, and if we impose homogeneous Dirichlet boundary conditions, then in the usual way we can choose \(\mathbb {L}=L_{2}({\Omega })\) and \(\mathbb {H}={H^{1}_{0}}({\Omega })\), in which case \(\mathbb {H}^{*}=H^{-1}({\Omega })\).

For an integer r ≥ 1, let U denote the discontinuous Galerkin (DG) time stepping solution to (1) using piecewise polynomials of degree at most r − 1 with coefficients in \(\mathbb {H}\). Thus, we consider only the time discretization, with no additional error arising from a spatial discretization. Section 2 summarizes known results on the convergence properties of the DG solution U, and Section 3 introduces a local Legendre polynomial basis that is convenient for the practical implementation of DG time stepping as well as for our theoretical study. These sections serve as preparation for Section 4, where we show that

\[U(t)-u(t)=a_{nr}(u)\bigl[p_{n,r-1}(t)-p_{nr}(t)\bigr]+O\bigl(k_n^{r+1}\bigr)\quad\text{for } t\in I_n.\tag{2}\]

Here, \(k_n\) denotes the length of the \(n\)th time interval \(I_n=(t_{n-1},t_n)\), the function \(p_{nr}\) denotes the Legendre polynomial of degree r, shifted to \(I_n\), and \(a_{nr}(u)\) denotes the coefficient of \(p_{nr}\) in the local Legendre expansion of u on \(I_n\). Since \(a_{nr}(u)=O({k_{n}^{r}})\), the result (2) shows that the dominant term in the DG error is proportional to the Gauss–Radau polynomial \(p_{nr}(t)-p_{n,r-1}(t)\) for \(t\in I_n\). However, the coefficient \(a_{nr}(u)\) and the \(O\left (k_{n}^{r+1}\right )\) term in (2) typically grow as t → 0 at rates depending on the regularity of the solution u, which in turn depends on the regularity and compatibility of the data. A possible extension permitting a time-dependent operator A(t) is discussed briefly in Remark 4.8.

In 1985, Eriksson, Johnson and Thomée [1] presented an error analysis for DG time stepping of (1), showing optimal \(O(k^{r})\) convergence in \(L_{\infty }\left ((0,T);L_{2}({\Omega })\right )\) and \(O(k^{2r-1})\) superconvergence for the nodal values \(\lim _{t\to t_{n}^{-}}U(t)\), where \(k=\max_{1\le n\le N}k_n\). Subsequently, numerous authors [2–8] have refined these results, including a recent \(L_{\infty }\) stability result of Schmutz and Wihler [9] that we use in the proof of Theorem 4.4. Shortly before completing the present work, we learned that the expansion (2) was proved by Adjerid et al. [10, 11] for a linear, scalar hyperbolic problem, and also for nonlinear systems of ODEs [12]; see Remark 4.7 for more details.

Section 5 discusses some practical consequences of (2), in particular the superconvergence of the DG solution at the right Radau points in each interval. This phenomenon was exploited by Springer and Vexler [13] in the piecewise-linear (r = 2) case to achieve higher-order accuracy for a parabolic optimal control problem. We will see in Lemma 5.1 how the norm of the jump in U at the break point \(t_{n-1}\) provides an accurate estimate of the maximum DG error over the interval \(I_n\). Moreover, a simple, low-cost post-processing step yields a continuous piecewise polynomial \(U_*\) of degree at most r, called the reconstruction of U, that satisfies \(U_{*}(t)-u(t)=O\left (k_{n}^{r+1}\right )\) for \(t\in I_n\); see Corollary 5.3. Finally, Section 6 reports the results of some numerical experiments for a scalar ODE and for heat equations in one and two spatial dimensions, confirming the convergence behavior predicted by the theory based on (2).

Our motivation for the present study originated in a previous work [14] dealing with the implementation of DG time stepping for a subdiffusion equation \(u^{\prime }(t)+\partial _{t}^{1-\nu }Au(t)=f(t)\) with 0 < ν < 1, where \(\partial _{t}^{1-\nu }\) denotes the Riemann–Liouville fractional time derivative of order 1 − ν. We observed in numerical experiments that (2) holds except with \(O\left (k_{n}^{r+\nu }\right )\) in place of \(O\left (k_{n}^{r+1}\right )\).

Treatment of the spatial discretization of (1) is beyond the scope of this paper, apart from its use in our numerical experiments. To make practical use of our result (2) it is necessary to ensure that the spatial error is dominated by the \(O\left (k_{n}^{r+1}\right )\) term. Also, although we allow nonuniform time steps in our analysis, we will not consider questions such as local mesh refinement or adaptive step size control, which are generally required to resolve the solution accurately for t near 0.

2 Discontinuous Galerkin time stepping

As background and preparation for our results, we formulate in this section the DG time stepping procedure and summarize key convergence results from the literature. Our standard reference is the monograph of Thomée [15, Chapter 12].

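Choosing time levels \(0=t_0<t_1<t_2<\cdots<t_N=T\), we put

\[I_n=(t_{n-1},t_n),\qquad k_n=t_n-t_{n-1}\quad\text{for } 1\le n\le N,\qquad k=\max_{1\le n\le N}k_n.\]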

Let \(\mathbb {P}_{j}(\mathbb {V})\) denote the space of polynomials of degree at most j with coefficients from a vector space \(\mathbb {V}\). We fix an integer r ≥ 1, put \(\boldsymbol {t}=(t_{n})_{n=0}^{N}\) and form the piecewise-polynomial space \(\mathcal {X}_{r}=\mathcal {X}_{r}(\boldsymbol {t},\mathbb {H})\) defined by

\[\mathcal{X}_r=\bigl\{X:(0,T]\to\mathbb{H} \;:\; X\vert_{I_n}\in\mathbb{P}_{r-1}(\mathbb{H})\text{ for } 1\le n\le N\bigr\}.\]

Denoting the one-sided limits of X at \(t_n\) by

\[X^{n}_{+}=\lim_{t\to t_n^{+}}X(t)\quad\text{and}\quad X^{n}_{-}=\lim_{t\to t_n^{-}}X(t),\]

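we discretize (1) in time by seeking \(U\in \mathcal {X}_{r}\) satisfying [15, p. 204]

\[\langle U^{n-1}_{+},X^{n-1}_{+}\rangle+\int_{I_n}\bigl(\langle U',X\rangle+\langle AU,X\rangle\bigr)\,\mathrm{d}t=\langle U^{n-1}_{-},X^{n-1}_{+}\rangle+\int_{I_n}\langle f,X\rangle\,\mathrm{d}t\tag{3}\]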

for \(X\in \mathcal {X}_{r}\) and 1 ≤ n ≤ N, with \(U^{0}_{-}=u_{0}\). Section 3 describes how, given \(U^{n-1}_{-}\) and f, we can solve a linear system to obtain \(U\vert _{I_{n}}\) and so advance the solution by one time step.

Remark 2.1

If the integral on the right-hand side of (3) is evaluated using the right-hand, r-point, Gauss–Radau quadrature rule on \(I_n\), then the sequence of nodal values \(U^{n}_{-}\) coincides with the finite difference solution produced by the r-stage Radau IIA (fully) implicit Runge–Kutta method; see Vlasák and Roskovec [16, Section 3].

Let ∥⋅∥ denote the norm in \(\mathbb {L}\) and let \(u^{(\ell)}\) denote the ℓth derivative of u with respect to t. It will be convenient to write

\[\|v\|_{J}=\sup_{t\in J}\|v(t)\|\quad\text{for an interval } J\subseteq[0,T],\]

and to define the fractional powers of A in the usual way via its spectral decomposition [15, Chapter 3]. The DG time stepping scheme has the nodal error bound [15, Theorem 12.1]

and the uniform bound [15, Theorem 12.2]

where in both cases 1 ≤ n ≤ N. We therefore have optimal convergence

provided \(u^{(r)}\in L_{\infty }((0,T);\mathbb {L})\) and \(A^{1/2}u^{(r)}\in L_{2}((0,T);\mathbb {L})\). In fact, U is superconvergent at the nodes [15, Theorem 12.3] when r ≥ 2, with

Thus,

provided \(A^{r-1/2}u^{(r)}\in L_{2}((0,T);\mathbb {L})\).

Suppose for the remainder of this section that f ≡ 0, and consider error bounds involving the (known) initial data \(u_0\) instead of the (unknown) solution u. By separating variables, one finds that [15, Lemma 3.2]

assuming that \(u_0\) belongs to the domain of \(A^{s}\). It follows that, for sufficiently regular initial data, we have the basic error bound [1, Theorem 1],

For non-smooth initial data \(u_0\in L_2(\Omega)\), the full rate of convergence still holds but with a constant that blows up as t tends to zero [1, Theorem 3]: provided \(k_n\le Ck_{n-1}\) for all n ≥ 2,

and hence, by interpolation,

At the nodes [1, Theorem 2],

and [1, Theorem 3], provided \(k_n\le Ck_{n-1}\) for all n ≥ 2,

Taking s = q in (10) and (11), we see by interpolation that

3 Local Legendre polynomial basis

We now return to considering the general inhomogeneous problem and describe a practical formulation of the DG scheme using local Legendre polynomial expansions that will also play an essential role in our subsequent analysis.

Let \(P_j\) denote the Legendre polynomial of degree j with the usual normalization \(P_j(1)=1\), and recall that

Using the affine map \(\beta_n:[-1,1]\to[t_{n-1},t_n]\) given by

\[\beta_n(\tau)=\tfrac12\bigl[(1-\tau)t_{n-1}+(1+\tau)t_n\bigr],\tag{13}\]

we define local Legendre polynomials on the \(n\)th subinterval,

\[p_{nj}(t)=P_j(\tau)\quad\text{for } t=\beta_n(\tau),\ \tau\in[-1,1],\ j\ge0,\]

and note that

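The local Fourier–Legendre expansion of a function v is then, for \(t\in I_n\),

\[v(t)=\sum_{j=0}^{\infty}a_{nj}(v)\,p_{nj}(t),\qquad a_{nj}(v)=\frac{2j+1}{k_n}\int_{I_n}v(t)\,p_{nj}(t)\,\mathrm{d}t.\]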

In particular, for the DG solution U we put \(U^{nj}=a_{nj}(U)\in \mathbb {H}\) so that

\[U(t)=\sum_{j=0}^{r-1}U^{nj}\,p_{nj}(t)\quad\text{for } t\in I_n.\tag{14}\]

Define [14, Lemma 5.1]

and \(H_{ij}=\int_{-1}^{1}P_{j}(\tau)P_{i}(\tau)\,\mathrm{d}\tau=2\delta_{ij}/(2j+1)\); e.g., if r = 4 then

By choosing a test function of the form \(X(t)=p_{ni}(t)\chi\), for \(t\in I_n\) and \(\chi \in \mathbb {H}\), we find that the DG equation (3) implies

for 0 ≤ i ≤ r − 1 and 1 ≤ n ≤ N, where

Thus, given \(U^{n-1,j}\) for 0 ≤ j ≤ r − 1, by solving the (block) r × r system (15) we obtain \(U^{nj}\) for 0 ≤ j ≤ r − 1, and hence U(t) for \(t\in I_n\). The existence and uniqueness of this solution follow from the stability of the scheme [15, p. 205]. Notice that, since \(p_{nj}(t_n)=P_j(1)=1\),

\[U^{n}_{-}=\sum_{j=0}^{r-1}U^{nj}.\]
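For a scalar problem \(u'+\lambda u=f\), the local system is small enough to write out explicitly. The Python/NumPy sketch below is our own illustration, not the authors' Julia code [17]; it assumes uniform steps, and the helper names `legendre_matrices` and `dg_solve` are hypothetical. Testing (3) with \(X=p_{ni}\) and using (14) gives, in our derivation, \(\sum_j[G_{ij}+\lambda(k_n/2)H_{ij}]U^{nj}=(-1)^iU^{n-1}_{-}+\int_{I_n}f\,p_{ni}\,\mathrm{d}t\) with \(G_{ij}=P_j(-1)P_i(-1)+\int_{-1}^{1}P_j'P_i\,\mathrm{d}\tau\); the displayed form of (15) may be scaled differently.

```python
import numpy as np
from numpy.polynomial import legendre as L


def legendre_matrices(r):
    """Reference-interval matrices for the local DG system (our own derivation).

    G[i, j] = P_j(-1) P_i(-1) + int_{-1}^{1} P_j'(tau) P_i(tau) dtau
    H[i, j] = int_{-1}^{1} P_j(tau) P_i(tau) dtau = 2 delta_ij / (2j + 1)
    """
    tau, w = L.leggauss(r)               # exact for polynomials of degree <= 2r - 1
    basis = np.eye(r)
    P = np.array([L.legval(tau, basis[j]) for j in range(r)])             # P[j, q] = P_j(tau_q)
    dP = np.array([L.legval(tau, L.legder(basis[j])) for j in range(r)])  # dP[j, q] = P_j'(tau_q)
    sign = (-1.0) ** np.arange(r)        # P_j(-1) = (-1)^j
    G = np.outer(sign, sign) + (P * w) @ dP.T
    H = np.diag(2.0 / (2 * np.arange(r) + 1))
    return G, H


def dg_solve(lam, f, u0, T, N, r):
    """DG time stepping (degree r - 1) for u' + lam*u = f(t), u(0) = u0, uniform steps.

    Returns the nodes t_n, the Legendre coefficients U^{nj} (one row per interval)
    and the nodal values U^n_- (with U^0_- = u0).
    """
    G, H = legendre_matrices(r)
    k = T / N
    t = np.linspace(0.0, T, N + 1)
    tq, wq = L.leggauss(r + 5)           # extra points for the load integrals
    Pq = np.array([L.legval(tq, np.eye(r)[i]) for i in range(r)])
    sign = (-1.0) ** np.arange(r)
    M = G + lam * (k / 2) * H            # same matrix on every step (uniform k)
    coeffs = np.empty((N, r))
    nodal = np.empty(N + 1)
    nodal[0] = u0
    for n in range(1, N + 1):
        s = 0.5 * ((1 - tq) * t[n - 1] + (1 + tq) * t[n])   # beta_n(tau) at quadrature points
        F = (k / 2) * (Pq * wq) @ f(s)                       # int_{I_n} f(t) p_ni(t) dt
        coeffs[n - 1] = np.linalg.solve(M, sign * nodal[n - 1] + F)
        nodal[n] = coeffs[n - 1].sum()                       # U^n_- because p_nj(t_n) = 1
    return t, coeffs, nodal


# Test problem in the spirit of Section 6.1 (u0 = 1 and T = 1 are our own choices).
lam, T, u0 = 0.5, 1.0, 1.0
A_ = 2 / (1 + 4 * np.pi**2)
B_ = 4 * np.pi / (1 + 4 * np.pi**2)


def f(t):
    return np.cos(np.pi * t)


def exact(t):
    return A_ * np.cos(np.pi * t) + B_ * np.sin(np.pi * t) + (u0 - A_) * np.exp(-lam * t)


for r in (2, 3):
    errs = []
    for N in (20, 40):
        t, _, nodal = dg_solve(lam, f, u0, T, N, r)
        errs.append(np.max(np.abs(nodal - exact(t))))
    print(r, np.log2(errs[0] / errs[1]))     # observed nodal rate, expected near 2r - 1
```

With smooth data, doubling N should reduce the maximum nodal error by roughly \(2^{2r-1}\), in line with (6). For r = 1 the scheme reduces to a backward-Euler-type step with an averaged source term.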

4 Behavior of the DG error

To prove our main results, we will make use of two projection operators. The first is just the orthogonal projector \({\Pi }_{r}:L^{2}((0,T);\mathbb {L})\to \mathcal {X}_{r}\) defined by

which has the explicit representation

\[{\Pi}_r v(t)=\sum_{j=0}^{r-1}a_{nj}(v)\,p_{nj}(t)\quad\text{for } t\in I_n,\ 1\le n\le N.\]

The second projector \(\widetilde {\Pi }_{r}:C([0,T];\mathbb {L})\to \mathcal {X}_{r}\) is defined by the conditions [15, Equation (12.9)]

for all \(X\in \mathcal {X}_{r}\) and for 1 ≤ n ≤ N. The next lemma shows that \(\widetilde {\Pi }_{r}u\) is in fact the DG solution of the trivial equation with A = 0; cf. Chrysafinos and Walkington [3, Section 2.2].

Lemma 4.1

If \(u^{\prime }:(0,T]\to \mathbb {L}\) is integrable, then

Proof

Integrating by parts and using the properties (16) of \(\widetilde {\Pi }_{r}\), we have

and a second integration by parts then yields the desired identity.

The Legendre expansion of \(\widetilde {\Pi }_{r}v\) coincides with that of \(\Pi_r v\), except for the coefficient of pn,r− 1. Below, we denote the closure of the nth time interval by \(\bar {I}_{n}=[t_{n-1},t_{n}]\).

Lemma 4.2

If \(v:\bar {I}_{n}\to \mathbb {L}\) is continuous, then

where

Proof

By choosing \(X^{\prime }\vert _{I_{n}}=p_{nj}\) in the second property of (16), we see that

implying that \(\widetilde {\Pi }_{r}v={\Pi }_{r-1}v+\lambda p_{n,r-1}\) for some \(\lambda \in \mathbb {H}\). Since pnj(tn) = Pj(1) = 1, the first property in (16) gives

showing that \(\lambda =\tilde {a}_{n,r-1}(v)\).

By mapping to the reference element (−1,1), applying the Peano kernel theorem, and then mapping back to \(I_n\), we find [14, p. 137]

and

Theorem 4.3

For 1 ≤ n ≤ N, if \(v:\bar {I}_{n}\to \mathbb {L}\) is \(C^{r+1}\), then

Proof

By Lemma 4.2, if \(t\in I_n\) then

and

so

Taking the limit as \(t\to t_{n}^{-}\), and recalling that pnj(tn) = 1, we see that

Using (20) to eliminate \(\tilde {a}_{n,r-1}(v)\) in (19), we find that

on \(I_n\), and the desired estimate follows at once from (18).
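Lemma 4.2 and Theorem 4.3 are easy to check numerically for a smooth scalar function. The sketch below assumes v = exp on a single interval, computes the Legendre coefficients \(a_{nj}(v)\) by Gauss quadrature, builds \(\widetilde{\Pi}_r v\) by replacing the coefficient of \(p_{n,r-1}\) with \(v(t_n)-\sum_{j<r-1}a_{nj}(v)\), and measures the residual of Theorem 4.3. The function names are hypothetical; halving \(k_n\) should reduce the residual by about \(2^{r+1}\).

```python
import numpy as np
from numpy.polynomial import legendre as L


def local_legendre_coeffs(v, a, b, m, nq=30):
    """a_{nj}(v) = (2j+1)/k_n * int_a^b v(t) p_{nj}(t) dt for j = 0, ..., m (Gauss rule)."""
    tau, w = L.leggauss(nq)
    t = 0.5 * ((1 - tau) * a + (1 + tau) * b)        # beta_n(tau)
    P = np.array([L.legval(tau, np.eye(m + 1)[j]) for j in range(m + 1)])
    return (2 * np.arange(m + 1) + 1) / 2 * ((P * w) @ v(t))


def pi_tilde_coeffs(v, a, b, r):
    """Legendre coefficients of tilde-Pi_r v on (a, b): those of Pi_{r-1} v plus the
    modified coefficient tilde-a_{n,r-1}(v) = v(t_n) - sum_{j<r-1} a_{nj}(v)."""
    c = local_legendre_coeffs(v, a, b, r - 1)
    c[r - 1] = v(b) - c[: r - 1].sum()                # uses p_nj(t_n) = 1
    return c


def theorem_4_3_residual(v, a, b, r, nsample=201):
    """Sampled max over [a, b] of |v - tilde-Pi_r v - a_nr(v) (p_nr - p_{n,r-1})|."""
    c = pi_tilde_coeffs(v, a, b, r)
    a_r = local_legendre_coeffs(v, a, b, r)[r]
    tau = np.linspace(-1.0, 1.0, nsample)
    t = 0.5 * ((1 - tau) * a + (1 + tau) * b)
    radau = L.legval(tau, np.eye(r + 1)[r]) - L.legval(tau, np.eye(r + 1)[r - 1])
    return np.max(np.abs(v(t) - L.legval(tau, c) - a_r * radau))


r = 3
res = [theorem_4_3_residual(np.exp, 0.5, 0.5 + k, r) for k in (0.2, 0.1)]
print(np.log2(res[0] / res[1]))   # expected to be close to r + 1 = 4
```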

The following theorem and its corollary, together with the superconvergence result (6), show that

provided u is sufficiently regular.

Theorem 4.4

For 1 ≤ n ≤ N, if \(Au:\bar {I}_{n}\to \mathbb {L}\) is \(C^{r}\), then

Proof

It follows from Lemma 4.1 that \(\widetilde {\Pi }_{r}u\) satisfies

whereas U satisfies

for all \(X\in \mathcal {X}_{r}\). Letting \(\rho =A(u-\widetilde {\Pi }_{r} u)\) and noting \((\widetilde {\Pi }_{r} u)^{n-1}_{-}=u(t_{n-1})\), we see that the piecewise polynomial \(\varepsilon =U-\widetilde {\Pi }_{r} u\in \mathcal {X}_{r}\) satisfies

for all \(X\in \mathcal {X}_{r}\), with \(\varepsilon ^{n-1}_{-}=U^{n-1}_{-}-u(t_{n-1})\). A stability result of Schmutz and Wihler [9, Proposition 3.18] yields the estimate

that is,

By putting v = Au in (18) we find \(k_{n}{\int \limits }_{I_{n}}\|\rho \|^{2}\;\mathrm {d} t\le {k_{n}^{2}}\|\rho \|_{I_{n}}^{2}\le C(k_{n}^{r+1}\|Au^{(r)}\|_{I_{n}})^{2}\), and the desired estimate follows at once.

We are now able to establish the claim (2) from the Introduction.

Theorem 4.5

For 1 ≤ n ≤ N, if \(Au^{(r)}\) and \(u^{(r+1)}\) are continuous on \(\bar {I}_{n}\), then

Proof

Write

\[U-u=\bigl(U-\widetilde{\Pi}_r u\bigr)+\bigl(\widetilde{\Pi}_r u-u\bigr)\]

and apply Theorems 4.3 and 4.4.

We therefore have the following estimate for the homogeneous problem expressed in terms of the initial data.

Corollary 4.6

Assume \(k_n\le Ck_{n-1}\) for 2 ≤ n ≤ N so that (12) holds. If f ≡ 0, then for 0 ≤ s ≤ r + 1 and 2 ≤ n ≤ N,

Proof

Taking q = r + 1 in (12) yields

and using (7) we have \(\|Au^{(r)}(t)\|=\|u^{(r+1)}(t)\|\le Ct^{s-(r+1)}\|A^{s}u_0\|\). The result follows for n ≥ 2 after noting that \(t_n=t_{n-1}+k_n\le t_{n-1}+Ck_{n-1}\le Ct_{n-1}\).

Remark 4.7

In their proof of (2) for the scalar linear problem

Adjerid et al. [10, Theorem 3] use an inductive argument to show an expansion of the form

where \(Q_{nj}\in \mathbb {P}_{j-1}\) and \(Q_{nr}(t)=c_{np}[p_{nr}(t)-p_{n,r-1}(t)]\) for a constant \(c_{np}\). They extend this result to a homogeneous linear system of ODEs \(\boldsymbol {u}^{\prime }-\boldsymbol {A}\boldsymbol {u}=\boldsymbol {0}\), then a nonlinear scalar problem \(u^{\prime }-f(u)=0\), and finally a nonlinear system \(\boldsymbol {u}^{\prime }-\boldsymbol {f}(\boldsymbol {u})=\boldsymbol {0}\).

Remark 4.8

The proof of Theorem 4.4 is largely unaffected if the elliptic term is permitted to have time-dependent coefficients, resulting in a time-dependent operator A(t). The main issue is to verify the stability property (23) for this more general setting. The only other complication is the estimation of ρ(t). Consider, for example, \(A(t)u(x,t)=-\nabla \cdot \left (a(x,t)\nabla u(x,t)\right )\). Since A(t)u(x,t) is of the form \({\sum }_{m=1}^{M} c_{m}(x,t)B_{m}u(x,t)\), where each \(B_m\) is a second-order linear differential operator involving only the spatial variables x, it follows that

and the final step of the proof becomes

Of course, to exploit this generalization of Theorem 4.4, it would also be necessary to verify the superconvergent error bounds for \(U^{n}_{-}\) in this case.

5 Practical consequences

Throughout this section, we will assume that

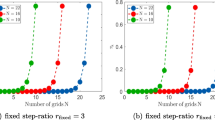

for 2 ≤ n ≤ N, where the factor ϕ(t,u) will depend on the regularity of u, which in turn depends on the regularity and compatibility of the initial data \(u_0\) and the source term f. Figure 1 plots the right-hand Gauss–Radau polynomials

\[P_r(\tau)-P_{r-1}(\tau)\]

as functions of τ ∈ [−1,1] for r ∈ {1,2,3,4}. In general, there are r + 1 points

\[-1=\tau_0<\tau_1<\tau_2<\cdots<\tau_r=1\]

such that \(\tau_1,\tau_2,\ldots,\tau_r\) are the r zeros of \(P_r-P_{r-1}\), and hence are also the abscissas of the right-hand, r-point Gauss–Radau quadrature rule for the interval [−1,1]. Recalling our previous notation (13), let \(t_{n\ell}=\beta_n(\tau_\ell)\) so that \(t_{n-1}=t_{n0}<t_{n1}<\cdots<t_{nr}=t_n\) with

Thus, whereas \(U(t)-u(t)=O({k_{n}^{r}})\) for general \(t\in I_n\), the DG time stepping scheme is superconvergent at the r special points \(t_{n1},t_{n2},\ldots,t_{nr}\) in the half-open interval \((t_{n-1},t_n]\). More precisely,

Since \(p_{nj}(t_{n-1})=P_j(-1)=(-1)^{j}\), another consequence of (24) is that

which, in combination with the estimate \(\|U^{n-1}_{-}-u(t_{n-1})\|\le C\phi (t_{n},u)k_{n}^{r+1}\), shows that the jump \(\llbracket U\rrbracket ^{n-1}=U^{n-1}_{+}-U^{n-1}_{-}\) in the DG solution at \(t_{n-1}\) satisfies

We are therefore able to show, in the following lemma, that \(\|\llbracket U\rrbracket^{n-1}\|\) is a low-cost and accurate error indicator for the DG solution on \(I_n\).

Lemma 5.1

For ϕ as in (24) and 2 ≤ n ≤ N,

Thus,

Proof

First note that since

we have

Hence, for \(t\in I_n\),

and so \(\|U-u\|_{I_{n}}\le 2\|a_{nr}(u)\|+C\phi (t_{n},u)k_{n}^{r+1}\). Conversely,

and therefore

Since, by (25),

the result follows.
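The points \(\tau_\ell\) and \(t_{n\ell}\) used in this section are easy to compute. The following sketch (hypothetical helper names) finds the zeros of \(P_r-P_{r-1}\) with NumPy's Legendre-series root finder and maps them to \(\bar I_n\) with \(\beta_n\) from (13). On [0,1] these are the Radau IIA abscissae, consistent with Remark 2.1.

```python
import numpy as np
from numpy.polynomial import legendre as L


def right_radau_points(r):
    """tau_1 < ... < tau_r: the zeros of P_r - P_{r-1} on [-1, 1] (tau_r = 1)."""
    c = np.zeros(r + 1)
    c[r], c[r - 1] = 1.0, -1.0           # Legendre-series coefficients of P_r - P_{r-1}
    return np.sort(L.legroots(c).real)


def superconvergence_points(t_prev, t_next, r):
    """t_{n,l} = beta_n(tau_l), l = 1, ..., r, for the interval [t_prev, t_next]."""
    tau = right_radau_points(r)
    return 0.5 * ((1 - tau) * t_prev + (1 + tau) * t_next)


print(right_radau_points(2))                 # approximately [-1/3, 1]
print(superconvergence_points(0.0, 1.0, 3))  # Radau IIA abscissae (4 -/+ sqrt(6))/10 and 1
```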

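A unique continuous function \(U_{*}\in \mathcal {X}_{r+1}\) satisfies the r + 1 interpolation conditions

\[U_*(t_{n-1})=U^{n-1}_{-}\quad\text{and}\quad U_*(t_{n\ell})=U(t_{n\ell})\ \text{for } 1\le\ell\le r,\]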

for 1 ≤ n ≤ N, and we see that

Makridakis and Nochetto [4] introduced this interpolant in connection with a posteriori error analysis of diffusion problems, and called \(U_*\) the reconstruction of U. The next theorem provides a more explicit description of \(U_*\) that we then use to prove that \(U_*\) achieves the optimal convergence rate of order \(k_{n}^{r+1}\) over the whole subinterval \(I_n\).

Theorem 5.2

For \(t\in I_n\) and 1 ≤ n ≤ N, the reconstruction \(U_*\) of the DG solution U has the representation

where

Proof

Since the polynomial \((U-U_{*})\vert _{I_{n}}\in \mathbb {P}_{r}(\mathbb {H})\) vanishes at \(t_{n\ell}\) for 1 ≤ ℓ ≤ r, there must be a constant \(\gamma\in\mathbb{H}\) such that \(U(t)-U_*(t)=\gamma(p_{nr}-p_{n,r-1})(t)\) for \(t\in I_n\). Taking the limit as \(t\to t_{n-1}^{+}\) and using \(U_*(t_{n-1})=U^{n-1}_{-}\), we have \(U^{n-1}_{+}-U^{n-1}_{-}=\gamma [(-1)^{r}-(-1)^{r-1}] =2(-1)^{r}\gamma\), and so \(\gamma=(-1)^{r}\llbracket U\rrbracket^{n-1}/2\). It follows from (14) that

implying the formulae for \(U^{nj}_{*}=a_{nj}(U_{*})\).
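In terms of Legendre coefficients, the representation of Theorem 5.2 changes only the coefficient of \(p_{n,r-1}\) and appends one of degree r. The sketch below implements our reading of it, \(U_*=U-\gamma(p_{nr}-p_{n,r-1})\) with \(\gamma=(-1)^r\llbracket U\rrbracket^{n-1}/2\) from the proof, for scalar coefficients; the function name and the data are hypothetical. It also returns the jump, whose norm is the indicator of Lemma 5.1, and checks the interpolation conditions at \(t_{n-1}\) and at the Radau points.

```python
import numpy as np
from numpy.polynomial import legendre as L


def reconstruct_coeffs(U_coeffs, U_prev_minus):
    """Legendre coefficients of U_* on I_n (degree r) from those of U (degree r - 1).

    U_* = U - gamma (p_nr - p_{n,r-1}) with gamma = (-1)^r [[U]]^{n-1} / 2,
    where [[U]]^{n-1} = U^{n-1}_+ - U^{n-1}_- and U^{n-1}_+ = sum_j (-1)^j U^{nj}.
    Returns the new coefficients and the jump (the error indicator of Lemma 5.1).
    """
    U_coeffs = np.asarray(U_coeffs, dtype=float)
    r = U_coeffs.size
    jump = U_coeffs @ (-1.0) ** np.arange(r) - U_prev_minus
    gamma = (-1.0) ** r * jump / 2
    U_star = np.append(U_coeffs, 0.0)
    U_star[r - 1] += gamma                   # coefficient of p_{n,r-1}
    U_star[r] -= gamma                       # coefficient of p_{nr}
    return U_star, jump


# Hypothetical data for one interval with r = 3.
U_coeffs, U_prev_minus = [0.3, -1.2, 0.5], 0.7
U_star, jump = reconstruct_coeffs(U_coeffs, U_prev_minus)
radau = np.array([(-1 - np.sqrt(6)) / 5, (-1 + np.sqrt(6)) / 5, 1.0])   # zeros of P_3 - P_2
print(L.legval(-1.0, U_star), U_prev_minus)                # continuity at t_{n-1}
print(L.legval(radau, U_star) - L.legval(radau, U_coeffs)) # approximately 0 at the Radau points
```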

Corollary 5.3

\(\|U_{*}-u\|_{I_{n}}\le C\phi (t_{n},u)k_{n}^{r+1}\) for 2 ≤ n ≤ N.

Proof

We see from Theorem 5.2 and (26) that

so it suffices to apply (24) and (25).

Example 5.4

Let f ≡ 0 and let \(u_0\) belong to the domain of \(A^{s}\). By (9),

and by (12),

Furthermore, Corollary 4.6 shows that our assumption (24) is satisfied with

so

6 Numerical experiments

The computational experiments described in this section were performed in standard 64-bit floating point arithmetic using Julia v1.7.2 on a desktop computer having a Ryzen 7 3700X processor and 32 GiB of RAM. The source code is available online [17]. In all cases, we use uniform time steps \(k_n=k=T/N\).

6.1 A simple ODE

We begin with the ODE initial-value problem

where in place of a linear operator A we have just the scalar λ = 1/2, and where \(f(t)=\cos (\pi t)\). For the piecewise-cubic case with N = 5 subintervals, Fig. 2 shows that \(U-U_*\) provides an excellent approximation to the error U − u, and that the error profile is approximately proportional to \(p_{nr}-p_{n,r-1}\) with r = 4; cf. (21) and Fig. 1. In particular, superconvergence at the Radau points is apparent. By sampling at 50 points in each subinterval, we estimated the maximum errors

and, as expected from (5) and Corollary 5.3, the values shown in Table 1 exhibit convergence rates r = 4 and r + 1 = 5, respectively. The table also shows a convergence rate 2r − 1 = 7 for the nodal error \(\max_{1\le n\le N}\vert U^{n}_{-}-u(t_{n})\vert\) up to the row where this error approaches the unit roundoff. By using Julia’s BigFloat datatype, we were able to observe \(O(k^{7})\) convergence of \(U^{n}_{-}\) up to N = 128, for which value the nodal error was 1.56e-19.

Fig. 2 The DG error U − u, the difference \(U-U_*\) between the DG solution and its reconstruction, along with the superconvergence points \(t_{nj}\) (1 ≤ j ≤ r), for the ODE of Section 6.1 using piecewise-cubics (r = 4)

6.2 A parabolic PDE in 1D

Now consider the 1D heat equation with constant thermal conductivity κ > 0,

subject to the boundary conditions u(0,t) = 0 = u(L,t) for 0 ≤ t ≤ T, and to the initial condition \(u(x,0)=u_0(x)\) for 0 ≤ x ≤ L. To obtain a reference solution, we introduce the Laplace transform \(\hat {u}(x,z)=\int_{0}^{\infty} e^{-zt}u(x,t)\,\mathrm{d}t\), which satisfies the two-point boundary-value problem (with complex parameter z),

where \(\omega=(z/\kappa)^{1/2}\) and \(g(x,z)=\kappa ^{-1}[u_{0}(x)+\hat {f}(x,z)]\). Consequently, the variation-of-constants formula yields the representation [14, Section 7.3]

and we then invert the Laplace transform by numerical evaluation of the Bromwich integral [18],

for a hyperbolic contour \(\mathcal {C}\) homotopic to the imaginary axis and passing to the right of all singularities of \(\hat {u}(x,z)\).

To discretize in space, we introduce a finite difference grid

and define \(u_p(t)\approx u(x_p,t)\) via the method of lines, replacing \(u_{xx}\) with a second-order central difference approximation to arrive at the system of ODEs

where \(f_p(t)=f(x_p,t)\), with the boundary conditions \(u_0(t)=0=u_P(t)\) and the initial condition \(u_p(0)=u_0(x_p)\). For our test problem, we choose

where the value of the thermal conductivity κ normalizes the time scale by making the smallest eigenvalue of \(A=-\kappa(\mathrm{d}/\mathrm{d}x)^{2}\) equal 1. We will see below that \(u_0\in D(A^{s})\) iff s < 5/4, so the regularity of the solution u is limited.

We apply DG to discretize \(u_p(t)\) in time and denote the resulting fully discrete solution by \(\boldsymbol{U}(t)=[U_p(t)]\approx\boldsymbol{u}(t)=[u_p(t)]\). Figure 3 plots the error in \(\boldsymbol{U}\) and in its reconstruction \(\boldsymbol{U}_*\) using piecewise-quadratics (r = 3) and N = 8 equal subintervals in time, with P = 500 for the spatial grid. The errors are measured in the discrete \(L_2\)-norm, that is,

and we observe a clear deterioration in accuracy as t approaches zero.

Fig. 3 Time dependence of the errors in the DG solution U(t) and its reconstruction \(U_*(t)\) for the 1D heat equation (28), using piecewise-quadratics (r = 3) over N = 8 time intervals

To speed up the convergence as h → 0, we also compute a second DG solution \(U^{\text {fine}}_{p}(t)\) using a finer spatial grid with \(P_{\text{fine}}=2P\) subintervals, and then perform one step of Richardson extrapolation (on the coarser grid), defining

Table 2 shows errors in this spatially extrapolated DG solution over the time interval [T/4,T], that is,

as well as the corresponding errors in the reconstruction \(\boldsymbol {U}^{\mathrm {R}}_{*}(t)\) and the nodal values \((\boldsymbol {U}^{\mathrm {R}})^{n}_{-}\). Again, the observed convergence rates are as expected.
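The extrapolated solution is a cheap post-processing of two spatial grids. The defining formula for \(\boldsymbol{U}^{\mathrm{R}}\) is not reproduced in this excerpt; assuming it is the standard single Richardson step for an \(O(h^2)\) central-difference error, namely \(U^{\mathrm{R}}_p=(4U^{\text{fine}}_{2p}-U_p)/3\) at the coarse nodes, the sketch below applies it to the steady model problem \(-u''=\pi^2\sin(\pi x)\), chosen only because its exact solution is known. The names are hypothetical; in the DG setting the same combination would be applied to \(U_p(t)\) and \(U^{\text{fine}}_{2p}(t)\) at each time of interest.

```python
import numpy as np


def richardson(U_coarse, U_fine):
    """One Richardson step for an O(h^2) spatial error, evaluated on the coarse grid.

    U_fine lives on a grid with twice as many subintervals, so U_fine[::2]
    holds its values at the coarse nodes x_p = p*h.
    """
    return (4.0 * U_fine[::2] - U_coarse) / 3.0


def solve_poisson_1d(P, f, L=1.0):
    """Central differences for -u'' = f on (0, L) with u(0) = u(L) = 0."""
    h = L / P
    x = np.linspace(0.0, L, P + 1)
    n = P - 1
    A = (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    u = np.zeros(P + 1)
    u[1:-1] = np.linalg.solve(A, f(x[1:-1]))
    return x, u


def f(x):
    return np.pi**2 * np.sin(np.pi * x)          # exact solution u(x) = sin(pi x)


x, u_coarse = solve_poisson_1d(10, f)
_, u_fine = solve_poisson_1d(20, f)
exact = np.sin(np.pi * x)
print(np.max(np.abs(u_coarse - exact)), np.max(np.abs(richardson(u_coarse, u_fine) - exact)))
```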

To investigate the time dependence of the error for t near zero, we consider the weighted error in the DG solution

and likewise incorporate the weight \(w_{\alpha}(t)\) when measuring the reconstruction error and the nodal error. The top part of Table 3 shows results for the homogeneous problem, that is, with the same data as in (30) except f(x,t) ≡ 0. The mth Fourier sine coefficient of \(u_0\) is proportional to \(m^{-3}\), so \(\|A^{s}u_0\|\le C\epsilon^{-1/2}\) for \(s=\frac {5}{4}-\epsilon\) and \(\epsilon>0\). Based on the estimates in Example 5.4, we choose the weight exponents \(\alpha =r-\frac {5}{4}\) for the DG error, \(r+1-\frac {5}{4}\) for the reconstruction error, and \(2r-1-\frac {5}{4}\) for the nodal error, and observe excellent agreement in the top set of results in Table 3 with the expected convergence rates of order r, r + 1 and 2r − 1, respectively.

Similar results are found if u0(x) ≡ 0 with nonzero f. Curiously, in the bottom part of Table 3, choosing both u0 and f as in (30) (so both nonzero) disturbs the observed convergence rates for \((\boldsymbol {U}^{\mathrm {R}})^{n}_{-}\), although not for UR or \(\boldsymbol {U}^{\mathrm {R}}_{*}\).

6.3 A parabolic PDE in 2D

Now consider the 2D heat equation,

subject to the boundary conditions u(x,y,t) = 0 for (x,y) ∈ ∂Ω, and to the initial condition u(x,y,0) = u0(x,y) for (x,y) ∈ Ω. We introduce a spatial finite difference grid

with \(h_x=L_x/P_x\) and \(h_y=L_y/P_y\). The semidiscrete finite difference solution \(u_{pq}(t)\approx u(x_p,y_q,t)\) is then constructed using the standard 5-point approximation to the Laplacian, so that

for 0 ≤ t ≤ T and \((x_p,y_q)\in\Omega\), where \(f_{pq}(t)=f(x_p,y_q,t)\), together with the boundary condition \(u_{pq}(t)=0\) for \((x_p,y_q)\in\partial\Omega\), and the initial condition \(u_{pq}(0)=u_0(x_p,y_q)\) for \((x_p,y_q)\in\Omega\). For \((x_p,y_q)\in\Omega\), we use column-major ordering to arrange the unknowns \(u_{pq}(t)\), the source terms \(f_{pq}(t)\) and initial data \(u_{0,pq}\) into vectors \(\boldsymbol{u}_h(t)\), \(\boldsymbol{f}(t)\) and \(\boldsymbol {u}_{0}\in \mathbb {R}^{M}\) for \(M=(P_x-1)(P_y-1)\). There is then a sparse matrix \(\boldsymbol{A}\) such that the system of ODEs (33) leads to the initial-value problem

For our test problem, we take \(L_x=L_y=2\) and \(P_x=P_y=50\) with

where the choice of κ ensures that the smallest Dirichlet eigenvalue of \(-\kappa\nabla^{2}\) on Ω equals 1. Table 4 compares the piecewise-quadratic (r = 3) DG solution \(\boldsymbol{U}_h(t)\) of the semidiscrete problem (34) with \(\boldsymbol{u}_h(t)\), evaluating the latter using numerical inversion of the Laplace transform as before, except that now, instead of \(\hat {u}(z)\), we work with the spatially discrete approximation \(\hat {\boldsymbol {u}}_{h}(z)\) obtained by solving the (complex) linear system \((z\boldsymbol {I}+\boldsymbol {A})\hat {\boldsymbol {u}}_{h}(z)=\boldsymbol{u}_{0}+\hat {\boldsymbol {f}}(z)\). As with the 1D results in Table 2, we compute the maximum error over the time interval [T/4,T], and observe the expected rates of convergence, keeping in mind that by treating \(\boldsymbol{u}_h(t)\) as our reference solution we are ignoring the error from the spatial discretization.
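The matrix \(\boldsymbol{A}\) of (34) can be assembled from one-dimensional second-difference matrices with Kronecker products. This sketch assumes that column-major ordering means the x index p varies fastest, and takes \(\kappa=1/(\pi^2(L_x^{-2}+L_y^{-2}))=2/\pi^2\), which is what the stated normalization of the continuous Dirichlet eigenvalue implies for \(L_x=L_y=2\). It uses dense NumPy arrays and a coarser 20 × 20 grid for a quick check; at \(P_x=P_y=50\) one would normally use sparse storage, for example `scipy.sparse.kron`. The function name is hypothetical.

```python
import numpy as np


def five_point_matrix(Px, Py, Lx, Ly, kappa):
    """-kappa * (5-point Laplacian) on the interior nodes of a Px-by-Py-cell grid.

    Unknowns u_pq are ordered column-major (p, the x index, varies fastest),
    so index m = (p - 1) + (Px - 1) * (q - 1). Dense here for simplicity.
    """
    def second_difference(P, h):
        n = P - 1
        return (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2

    Tx = second_difference(Px, Lx / Px)
    Ty = second_difference(Py, Ly / Py)
    # x-differences act within each block of Px - 1 unknowns; y-differences couple blocks.
    return kappa * (np.kron(np.eye(Py - 1), Tx) + np.kron(Ty, np.eye(Px - 1)))


Lx = Ly = 2.0
kappa = 1.0 / (np.pi**2 * (1 / Lx**2 + 1 / Ly**2))   # = 2/pi^2 for the 2 x 2 square
A = five_point_matrix(20, 20, Lx, Ly, kappa)
print(np.linalg.eigvalsh(A)[0])   # close to 1; the small gap is the O(h^2) spatial error
```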

Data availability

The paper does not make use of any data sets. The software used to generate the numerical results is available on GitHub [17].

References

[1] Eriksson, K., Johnson, C., Thomée, V.: Time discretization of parabolic problems by the discontinuous Galerkin method. ESAIM: M2AN 19, 611–643 (1985). https://doi.org/10.1051/m2an/1985190406111

[2] Schötzau, D., Schwab, C.: Time discretization of parabolic problems by the hp-version of the discontinuous Galerkin finite element method. SIAM J. Numer. Anal. 38, 837–875 (2000). https://doi.org/10.1137/S0036142999352394

[3] Chrysafinos, K., Walkington, N.J.: Error estimates for the discontinuous Galerkin methods for parabolic equations. SIAM J. Numer. Anal. 44, 349–366 (2006). https://doi.org/10.1137/030602289

[4] Makridakis, C., Nochetto, R.H.: A posteriori error analysis for higher order dissipative methods for evolution problems. Numer. Math. 104, 489–514 (2006). https://doi.org/10.1007/s00211-006-0013-6

[5] Akrivis, G., Makridakis, C., Nochetto, R.H.: Galerkin and Runge–Kutta methods: unified formulation, a posteriori error estimates and nodal superconvergence. Numer. Math. 118, 429–456 (2011). https://doi.org/10.1007/s00211-011-0363-6

[6] Richter, T., Springer, A., Vexler, B.: Efficient numerical realization of discontinuous Galerkin methods for temporal discretization of parabolic problems. Numer. Math. 124, 151–182 (2013). https://doi.org/10.1007/s00211-012-0511-7

[7] Saito, N.: Variational analysis of the discontinuous Galerkin time-stepping method for parabolic equations. IMA J. Numer. Anal. 41, 1267–1292 (2021). https://doi.org/10.1093/imanum/draa017

[8] Leykekhman, D., Vexler, B.: Discrete maximal parabolic regularity for Galerkin finite element methods. Numer. Math. 135, 923–952 (2017). https://doi.org/10.1007/s00211-016-0821-2

[9] Schmutz, L., Wihler, T.P.: The variable-order discontinuous Galerkin time stepping scheme for parabolic evolution problems is uniformly L∞-stable. SIAM J. Numer. Anal. 57, 293–319 (2019). https://doi.org/10.1137/17M1158835

[10] Adjerid, S., Devine, K.D., Flaherty, J.E., Krivodonova, L.: A posteriori error estimation for discontinuous Galerkin solutions of hyperbolic problems. Comput. Methods Appl. Mech. Engrg. 191, 1097–1112 (2002). https://doi.org/10.1016/S0045-7825(01)00318-8

[11] Adjerid, S., Baccouch, M.: Asymptotically exact a posteriori error estimates for a one-dimensional linear hyperbolic problem. Appl. Numer. Math. 60, 903–914 (2010). https://doi.org/10.1016/j.apnum.2010.04.014

[12] Baccouch, M.: The discontinuous Galerkin finite element method for ordinary differential equations. In: Petrova, R. (ed.) Perusal of the Finite Element Method, pp. 32–68. InTechOpen (2016). https://doi.org/10.5772/64967

[13] Springer, A., Vexler, B.: Third order convergent time discretization for parabolic optimal control problems with control constraints. Comput. Optim. Appl. 57, 205–240 (2014). https://doi.org/10.1007/s10589-013-9580-5

[14] McLean, W.: Implementation of high-order, discontinuous Galerkin time stepping for fractional diffusion problems. ANZIAM J. 62, 121–147 (2020). https://doi.org/10.1017/S1446181120000152

[15] Thomée, V.: Galerkin Finite Element Methods for Parabolic Problems. Springer, New York (2006)

[16] Vlasák, M., Roskovec, F.: On Runge–Kutta, collocation and discontinuous Galerkin methods: mutual connections and resulting consequences to the analysis. In: Programs and Algorithms of Numerical Mathematics, pp. 231–236. Institute of Mathematics AS CR, Prague (2015). https://eudml.org/doc/269918

[17] McLean, W.: DGErrorProfile. GitHub. https://github.com/billmclean/DGErrorProfile (2022)

[18] Weideman, J.A.C., Trefethen, L.N.: Parabolic and hyperbolic contours for computing the Bromwich integral. Math. Comp. 76, 1341–1356 (2007). https://doi.org/10.1090/S0025-5718-07-01945-X

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Author information


Contributions

William McLean wrote an initial outline of the paper, which subsequently underwent multiple revisions arising from correspondence with Kassem Mustapha. William McLean carried out the numerical computations reported in the paper.


Ethics declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

The authors declare no competing interests.

Human and animal ethics

Not applicable


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

McLean, W., Mustapha, K. Error profile for discontinuous Galerkin time stepping of parabolic PDEs. Numer Algor 93, 157–177 (2023). https://doi.org/10.1007/s11075-022-01410-y


DOI: https://doi.org/10.1007/s11075-022-01410-y