Abstract

Many problems in science and engineering give rise to linear integral equations of the first kind with a square integrable kernel. Discretization of the integral operator yields a matrix, whose singular values cluster at the origin. We describe the approximation of such matrices by adaptive cross approximation, which avoids forming the entire matrix. The choice of the number of steps of adaptive cross approximation is discussed. The discretized right-hand side represents data that commonly are contaminated by measurement error. Solution of the linear system of equations so obtained is not meaningful because the matrix determined by adaptive cross approximation is rank-deficient. We remedy this difficulty by using Tikhonov regularization and discuss how a fairly general regularization matrix can be used. Computed examples illustrate that the use of a regularization matrix different from the identity can improve the quality of the computed approximate solutions significantly.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Linear integral equations of the first kind,

with a square integrable kernel κ arise in many applications, including remote sensing, computerized tomography, and image restoration. Here Ωi denotes a subset of \({\mathbb R}^{d_{i}}\) for some positive integer di. The integral operator in (1.1) is compact. Therefore, it has a singular value expansion, whose singular values cluster at the origin. This makes the solution of (1.1) an ill-posed problem; see, e.g., [13, 22] for introductions to ill-posed problems.

Discretization of (1.1) yields a linear system of equations

with a matrix, whose singular values coalesce at the origin. This makes the matrix A severely ill-conditioned and possibly rank-deficient; we measure the conditioning of a matrix with its condition number, which is the ratio of the largest and smallest singular values. Linear systems of equations with a matrix of this kind are often referred to as discrete ill-posed problems; see, e.g., [23]. We will for notational simplicity assume the matrix A to be square, however, the method described also can be applied, after minor modifications, when A is rectangular, in which case the linear system of (1.2) is replaced by a least-squares problem.

In many applications, the right-hand side vector g in (1.2) represents measured data and is contaminated by a measurement error e. Due to the severe ill-conditioning of A, straightforward solution of (1.2) typically yields a computed solution that is severely contaminated by propagated error, and therefore is not useful. To circumvent this difficulty, the linear system of (1.2) commonly is replaced by a nearby problem, whose solution is less sensitive to the error e in g. This replacement is referred to as regularization. Tikhonov regularization is possibly the most popular and well-understood regularization method. It replaces the linear system of equations (1.2) by a penalized least-squares problem of the form

where \(L\in {\mathbb R}^{p\times n}\) is referred to as the regularization matrix and μ > 0 as the regularization parameter. The problem (1.3) is said to be in standard form when L is the identity; otherwise (1.3) is in general form. Throughout this paper ∥⋅∥ denotes the Euclidean vector norm or the spectral matrix norm.

The choice of regularization parameter μ is important for the quality of the computed solution: a too small value results in a computed solution that is contaminated by needlessly much propagated error, while a too large value yields an unnecessarily smooth solution that may lack details of interest. Generally, a suitable value of μ is not known a priori, but has to be computed during the solution process. This typically requires that (1.3) be solved for several μ-values. Methods for determining a suitable value of μ include the L-curve criterion, generalized cross validation, and the discrepancy principle; see, e.g., [6, 7, 15, 28, 32, 33] for discussions of properties of these and other methods.

The matrix L is assumed to be chosen such that

where \({\mathcal N}(M)\) denotes the null space of the matrix M. Then the Tikhonov minimization problem (1.3) has the unique solution

for any μ > 0; see, e.g, [23] for details. Here and below the superscript T denotes transposition. We are interested in the situation when the matrices A and L are so large that it is impossible or undesirable to compute the solution (1.5) by Cholesky factorization of the matrix ATA + μLTL. In fact, we would like to avoid evaluating all the entries of A. We describe how A can be approximated by a much smaller matrix, without evaluating all matrix entries, by applying adaptive cross approximation.

The application of cross approximation to matrices that stem from the discretization of Fredholm integral equations of the second kind has received considerable attention in the literature, see, e.g., [3, 4, 17, 20, 37]; however, the use of cross approximation in the context of solving linear discrete ill-posed problems has not been thoroughly studied.

The use of adaptive approximation to determine the approximate solution of (1.3) when L is the identity is discussed in [29]. This paper extends this discussion to general regularization matrices L. Our interest in this extension of the method in [29] stems from the fact that the use of a suitably chosen regularization matrix L can deliver solutions of higher quality than L = I; see, e.g., [25, 31, 35] for illustrations and discussions on various ways of constructing regularization matrices. Roughly, L should be chosen so as not to damp known important features of the desired solution, while damping the propagated error stemming from the error in g.

In the computed examples of Section 4, we use the discrepancy principle to determine μ > 0. Let \(\widehat {\boldsymbol {g}}\in {\mathbb R}^{n}\) denote the unknown error-free vector associated with the right-hand side g in (1.2), i.e., \(\boldsymbol {g}=\widehat {\boldsymbol {g}}+\boldsymbol {e}\). Assume that the linear system of equations with the error-free right-hand side,

is consistent and that a fairly accurate bound ∥e∥≤ δ is known. Let \(\widehat {\boldsymbol {x}}\) denote the minimal-norm solution of (1.6). Then

The discrepancy principle prescribes that the regularization parameter μ > 0 be determined such that the Tikhonov solution (1.5) satisfies

where η > 1 is a user-specified parameter that is independent of δ. It can be shown that when δ tends to zero, xμ converges to \(\widehat {\boldsymbol {x}}\); see, e.g., [13] for a proof in a Hilbert space setting. We remark that the determination of μ > 0 such that xμ satisfies (1.8) typically requires the solution of (1.3) for several μ-values.

The present paper is concerned with the situation when the matrix \(A\in {\mathbb R}^{n\times n}\) in (1.2) is large. Then the evaluation of all entries of A can be quite time-consuming. Cross approximation, also known as skeleton approximation, of A reduces this time by approximating A by a matrix \(M_{k}\in {\mathbb R}^{n\times n}\) that consists of k ≪ n rows and columns of A. We would like to choose the rows and columns of A so that Mk approximates A well and is easy to compute with.

This paper is organized as follows. Section 2 reviews the application of adaptive cross approximation to the approximation of A by a matrix of low rank. In Section 3, we describe the application of adaptive cross approximation to the approximation of the Tikhonov (1.3). Section 4 reports a few computed examples, and concluding remarks can be found in Section 5.

We conclude this section with an example that leads to a large linear discrete ill-posed problem, whose solution is difficult to compute using straightforward discretization.

Example 1.1. Consider the solution of the Fredholm integral equation of the first kind

where ϕ is a given electric potential, \(S\in {\mathbb R}^{3}\) is a surface with electrodes, σ(y) denotes the density of the charge on S, and 𝜖0 stands for the electric permittivity in vacuum. We would like to determine σ from ϕ, and assume that ϕ is chosen so that (1.9) has a solution. The integral operator in (1.9) is compact. Therefore its singular values cluster at the origin; due to the singularity of the kernel at x = y, we expect the singular values to decay to zero with increasing index at a moderate rate. Nevertheless, the computation of a solution of (1.9) is an ill-posed problem. Using a weak formulation and discretization lead to a dense symmetric matrix \(K=[k_{ij}]_{i,j=1}^{n}\in {\mathbb R}^{n\times n}\) with entries

The singular values of the matrix K cluster at the origin; the matrix may be numerically rank-deficient when n is large.

This matrix k can be expensive to store and handle when the discretization is fine. Employing a hierarchical compression with \({\mathscr{H}}^{2}\)-matrices reduces the required storage to O(n), with a large constant hidden in the O(⋅), and allows matrix-vector product evaluations in O(n) arithmetic floating point operations (flops); see [5].

A fine discretization with n = 262,146 nodes results in a large, n × n, dense matrix. We used the H2Lib library [27] for the computations and base this example on one of the standard examples provided in this library. Without compression, 512 GB of memory is needed to store the matrix. On a laptop computer with an Intel Core i710710U CPU and 16 GB of RAM it took 1103 s to assemble the matrix K in the compressed \({\mathscr{H}}^{2}\)-matrix format. The matrix required 15.45 GB of storage, thus almost all the available RAM. Carrying out one matrix-vector product evaluation required 1596 s, that is 44% more time than for assembling the matrix. The reason for this is that the O(n) flops require a significant amount of communication between faster and slower storage. This example illustrates that there are linear discrete ill-posed problems of interest that are difficult to solve on a laptop computer, even if a significant amount of memory is available. It therefore is important to develop methods that are able to determine approximations of dense matrices that requires less computer storage and less CPU time for the evaluation of matrix-vector products. Unfortunately, it is not possible to solve Tikhonov regularized problems obtained from (1.9) for large values of n in reasonable time. We therefore are not able to carry out computations with this example.

2 Adaptive cross approximation

Cross approximation of a large matrix \(A\in {\mathbb R}^{n\times n}\) determines an approximation \(M_{k}\in {\mathbb R}^{n\times n}\) of rank at most k ≪ n. All entries of Mk can be evaluated much faster than all entries of A, because Mk is constructed from only k rows and columns of A. We would like Mk to be an accurate approximation of A. This is achieved by a careful choice of the k rows and columns of A that define the matrix Mk. A cross approximation method is said to be adaptive when the rows and columns of A that determine Mk (and k) are chosen depending on properties of A revealed during the computations; see [2, 4].

We outline the adaptive cross approximation method for a general square nonsymmetric matrix described in [29]. This method is an adaptation of the scheme in [17] to the approximation of the matrix of linear discrete ill-posed problems. When A is symmetric, the matrix Mk can be chosen to be symmetric. This roughly halves the storage requirement for Mk. Both the situations when A is symmetric positive definite or symmetric indefinite are discussed in [29]. We therefore will not dwell on these special cases in the present paper.

Let the matrix \(A\in {\mathbb R}^{n\times n}\) be nonsymmetric and choose k rows of A with indices in \({\mathbb N}=\{1,2,\dots ,n\}\). We let \(\mathbf {i},\mathbf {j}\in {\mathbb N}^{k}\) denote index vectors with k entries in \({\mathbb N}\). The submatrices A(i,:) and A(:,j) of A are made up of the k rows with indices i and the k columns with indices j, respectively. Moreover, the core matrix A(i,j) is made up of k rows and columns of A. Assume that this matrix is nonsingular. Then the rows and columns of the matrix

are equal to the corresponding rows and columns of A; when A is of rank k, we have Mk = A.

Goreinov et al. [21] show that it is beneficial to choose the index vectors i and j so that A(i,j) is a submatrix of A of maximal volume, i.e., so that the modulus of the determinant of A(i,j) is maximal. However, it is difficult to determine such index vectors i and j. We therefore seek to determine a low-rank matrix Mk that is a sufficiently accurate approximation of A by a greedy algorithm. Suppose that we already have computed an approximation

of rank at most k − 1 of A. To compute the next approximation, Mk, of A of rank at most k, we determine a row index i∗ and a column index j∗ by looking for the index of the maximum element in magnitude in the previously computed vectors \(\boldsymbol {w}^{(c)}_{k-1}\) (for index i∗) and \({\boldsymbol {w}^{(r)}_{k-1}}\) (for index j∗). The vector \({(\boldsymbol {w}^{(r)}_{1})^{T}}\) can be chosen as an arbitrary row of A. We will let \({(\boldsymbol {w}^{(r)}_{1})^{T}}\) be the first row of A in the computed examples of Section 4. The vector \(\boldsymbol {w}^{(c)}_{1}\) can be chosen in a similar way.

In the simplest form of cross approximation, the determination of the vectors \(\boldsymbol {w}^{(c)}_{k}\) and \(\boldsymbol {w}^{(r)}_{k}\) only requires the entries in row i∗ and column j∗ of A and the elements of already computed vectors \(\boldsymbol {w}^{(c)}_{\ell }\) and \({(\boldsymbol {w}}^{(r)}_{\ell })^{T}\), ℓ = 1,2,…,k − 1:

A new skeleton is obtained from the remainder,

without explicitly computing all entries of the matrix Rk. The required number of rank-one matrices, k, that make up Mk is generally not known a priori. We would like the difference A − Mk to be of small norm. However, we cannot evaluate this difference, because most entries of A are not known. Following [17], we include t randomly chosen matrix entries \(A_{i_{\ell },j_{\ell }}\), for \(\ell =1,2,\dots ,t\), with \(i_{\ell },j_{\ell }\in {\mathbb N}\). Define for future reference the set

When a new skeleton is determined, the values of these entries are updated by subtraction from the available skeletons,

with \((R_{0})_{i_{\ell },j_{\ell }}=A_{i_{\ell },j_{\ell }}\). The values \((R_{k})_{i_{\ell },j_{\ell }}\) are used in Section 3.3 as part of the stopping criterion to determine the final value k∗ for k. The value of t is a percentage of the total number of entries. The choice of t should depend on properties of the matrix A; see [17, 29] for further details. An algorithm is presented in [29].

3 Tikhonov regularization in general form

This section discusses how to combine adaptive cross approximation with Tikhonov regularization in general form.

3.1 Using adaptive cross approximation

The matrix Mk, whose computation was outlined in the previous section, is of the form

where

Compute the skinny QR factorizations

where the matrices \({Q_{k}^{(c)},Q_{k}^{(r)}\in {\mathbb R}^{n\times k}}\) have orthonormal columns and the matrices \({R_{k}^{(c)},R_{k}^{(r)}\in {\mathbb R}^{k\times k}}\) are upper triangular. The factorizations (3.2) can be computed by the Householder-QR method or by factorization methods that are designed to perform efficiently on modern computers, such as the methods described in [9, 14, 38].

Combining (3.1) and (3.2) yields

Replacing A by Mk in (1.3) gives the minimization problem

which can be solved in several ways. If the matrix L has a special structure, such as being banded with small bandwidth, then it may be attractive to transform (3.4) to standard form by a technique described by Eldén [12]. Regularization matrices L with a small bandwidth arise, e.g., when L represents a finite difference approximation of a differential operator in one space-dimension. It also is easy to transform (3.4) to standard form when L is an orthogonal projector; see [30].

In the remainder of this section, we discuss the situation when L is such that transformation of (3.4) to standard form as described in [12] is too expensive to be attractive. This is the case, for instance, when L represents a finite difference approximation of a differential operator in two or more space-dimensions. This kind of matrices L will be used in computed examples of Section 4.

We describe several approaches to compute a solution of (3.4) and start with the simplest one. The matrix Mk has a null space of large dimension (at least n − k). Therefore the Tikhonov minimization problem (3.4) is not guaranteed to have a unique solution. To remedy this difficulty, we require the solution of (3.4) to live in a subspace of fairly low dimension. A simple solution method is obtained when using the solution subspace \({\mathcal R}(Q_{k}^{(r)})\). Then we obtain the minimization problem

which has a unique solution if and only if

This holds, in particular, when the triangular matrices \(R_{k}^{(c)}\) and \(R_{k}^{(r)}\) in (3.3) are nonsingular. We found (3.6) to hold in all computed examples that we solved.

Introduce the QR factorization

where the matrix \(Q_{k}^{(L)}\in {\mathbb R}^{n\times k}\) has orthonormal columns and \(R_{k}^{(L)}\in {\mathbb R}^{k\times k}\) is upper triangular. We note that since the matrix L typically is very sparse and k is not large, the left-hand side of (3.7) generally can be evaluated quite quickly also when n is large. The minimization problem (3.5) yields the small problem

This problem can be solved in several ways: We may compute a generalized SVD (GSVD) of the matrix pair \(\{R_{k}^{(c)}(R_{k}^{(r)})^{T},R_{k}^{(L)}\}\) (see, e.g., [10, 23]), or apply a cheaper reduction of the matrix pair that can be used when the generalized singular values of the matrix pair are not explicitly required; see [11].

When the matrix \(R_{k}^{(L)}\) is nonsingular and not very ill-conditioned, which is the case in many applications, one may consider transforming the minimization problem (3.8) to standard form by the substitution \(\boldsymbol {z}=R_{k}^{(L)}\boldsymbol {y}\). This yields the problem

which easily can be solved, e.g., by computing the singular value decomposition of the matrix \(R_{k}^{(c)}(R_{k}^{(r)})^{T}(R_{k}^{(L)})^{-1}\). The solution zμ of (3.9) yields the solution \(\boldsymbol {y}_{\mu }=(R_{k}^{(L)})^{-1}\boldsymbol {z}_{\mu }\) of (3.5), from which we determine the approximate solution \(\widetilde {\boldsymbol {x}}_{\mu }=Q_{k}^{(r)}\boldsymbol {y}_{\mu }\) of (1.3). The solution of (3.9) is cheaper than the solution of (3.8) with the aid of the GSVD; see [19] for counts of the arithmetic floating point operations necessary to compute the GSVD of a pair of k × k matrices, and the SVD of a k × k matrix.

3.2 The discrepancy principle

We turn to the computation of the regularization parameter μ > 0 by the discrepancy principle. Assume for the moment that the matrix A is available. Then we can solve (1.8) for μ > 0 by using a zero-finder such as Newton’s method or one of the zero-finders described in [8, 34]. The theoretical justification of the discrepancy principle requires that the unavailable error-free vector \(\widehat {\boldsymbol {g}}\) associated with the available error-contaminated vector g satisfies \(\widehat {\boldsymbol {g}}\in {\mathcal R}(A)\).

Now consider the application of the discrepancy principle to the determination of the regularization parameter in (3.4). Generally, \(\widehat {\boldsymbol {g}}\not \in {\mathcal R}(M_{k})\) and, therefore, the discrepancy principle cannot be applied when solving (3.4) without modification. In the computed examples, we determine μ so that the computed solution xμ of (3.4) satisfies

cf. (1.8). If the matrices \(R_{k}^{(c)}\) and \(R_{k}^{(r)}\) in (3.3) are nonsingular, which generally is the case, then \(Q_{k}^{(c)}(Q_{k}^{(c)})^{T}\) is an orthogonal projector onto \({\mathcal R}(M_{k})\), and \(Q_{k}^{(c)}(Q_{k}^{(c)})^{T}\widehat {\boldsymbol {\textit {g}}}\) lives in \({\mathcal R}(M_{k})\). (3.10) can be solved for μ ≥ 0 by using a zero-finder.

Assume for simplicity that the matrices \(R_{k}^{(c)}\) and \(R_{k}^{(r)}\) are nonsingular. Then the minimal-norm solution of the problem

is given by

It satisfies

This is analogous to (1.7). However, the vector \(\widehat {\boldsymbol {x}}_{k}\) depends on k and may differ from \(\widehat {\boldsymbol {x}}\).

3.3 Stopping criterion for the adaptive cross approximation algorithm

In view of (1.8), we would like to determine a value of the regularization parameter μ > 0 such that

Even though the matrix A is not available, we can determine an approximate upper bound for the left-hand side of (3.11) as follows:

where Sk is an approximation of ∥A − Mk∥. Based on the values of \((R_{k})_{i_{\ell },j_{\ell }}\) from (2.2) such an approximation Sk can be computed as

where ∥⋅∥F denotes the matrix Frobenius norm, and the set π is defined by (2.1) with |π| = t elements.

The number of step k = k∗ of the adaptive cross approximation algorithm is chosen to be as small as possible such that there exists a μ > 0 such that

In this case we have, based on (3.12)

In the computed examples of the next section, we set η = η1 = η2 = 1.0. The computed results are not very sensitive to this choice.

4 Numerical experiments

This section describes a few computed examples with the adaptive cross approximation method. For problems in one space-dimension, we will use the regularization matrices L0 = I, where \(I\in {\mathbb R}^{n\times n}\) denotes the identity matrix, as well as

or

which approximate a multiple of the first and second order derivative operators, respectively, assuming that xμ is the discretization of a function xμ at equidistant points on a bounded interval Ω1. For problems in two space-dimensions, we use the regularization matrices

or

where ⊗ stands for the Kronecker product. These choices of regularization matrices are fairly common; see, e.g., [23, 26, 32] for illustrations.

The examples are taken from the Regularization Tools [24] and from IR Tools [18]. In all examples, except for Experiment 6, the number of elements in the set π is 50n. The matrix A is of size n × n with n ≫ 50 in these experiments. In Experiment 6, the number of elements in the set π is 4n.

Experiment 1

We consider the problem “gravity” of size n = 1024 from [24]. The aim of this experiment is to illustrate that the quantities Sk defined by (3.13) provide quite accurate approximations of ∥A − Mk∥. This is displayed by Fig. 1.

Experiment 2

We again consider the example “gravity” from [24] of size n = 1024. Let δ = 10− 2. This example illustrates that for certain problems only a fairly small number of steps of the adaptive cross approximation algorithm suffices to yield a satisfactory result. The example also shows that it may be very beneficial to use a regularization matrix different from the identity matrix. The maximum number of adaptive cross approximation steps is 30. Results are shown for L ∈{L0,L1,L2}. The quality of the computed solution xcomputed is measured by the relative error \(\|{\boldsymbol {x}}_{\text {computed}}-\hat {{\boldsymbol {x}}}\|/\|\hat {{\boldsymbol {x}}}\|\). The horizontal axis of Fig. 2 shows the number of steps of adaptive cross approximation; the vertical line indicates that for each one of the choices of L, the stopping criterion for the method is satisfied at step k∗ = 20.

For the “gravity” problem the relative error between the exact solution and the computed approximate solution is plotted as a function of the iteration step k and the chosen regularization matrix L0,L1,L2. The size of the problem is n = 1024 with δ = 1.0e − 2 and η = η1 = η2 = 1.0. The vertical line indicates where the stopping criterion is satisfied for adaptive rank approximation

Experiment 3

This experiment is similar to Experiment 2, but for problem “baart” from [24]. Results are displayed in Fig. 3. Also in this example it is beneficial to use a regularization matrix different from the identity.

For the “baart” problem the relative error between the exact solution and the computed approximate solution is plotted as a function of the iteration step k and the chosen regularization matrix L0,L1,L2. The size of the problem is n = 1024 with δ = 1.0e − 2 and η = η1 = η2 = 1.0. The vertical line indicates where the stopping criterion is satisfied for adaptive rank approximation

Experiment 4

This experiment is similar to Experiment 2, but for problem “phillips” from [24]. The result is shown in Fig. 4. For this example all three regularization matrices used perform about equally well. The singular values of the matrix A decay to zero slower for this example than for the previous examples. Therefore more steps with the adaptive cross approximation algorithm have to be carried out.

For the “phillips” problem the relative error between the exact solution and the computed approximate solution is plotted as a function of the iteration step k and the chosen regularization matrix L0,L1,L2. The size of the problem is n = 1024 with δ = 1.0e − 2 and η = η1 = η2 = 1.0. The vertical line indicates where the stopping criterion is satisfied for adaptive rank approximation

Experiment 5

We consider the example EXdiffusion_rrgmres from IR Tools [18]. The size of the problem is 4096 and \(\delta = 5.0e-3\|\widehat {\boldsymbol {g}}\|\) (Fig. 5). The other parameters are as in Experiment 2. The “best” solution determined by the example script in IR Tools has relative error (when compared with the exact solution) 0.1875, while for L = L2 our algorithm in iteration step k = 76 reaches a relative error of 0.1910. Although RRGMRES gives a slightly better solution, using a Krylov method such as RRGMRES requires knowledge of the whole matrix allowing to compute matrix-vector products. Our method only requires knowing part of the elements of the matrix.

For the “diffusion” problem the relative error between the exact solution and the computed approximate solution is plotted as a function of the iteration step k and the chosen regularization matrix L. The size of the problem is n = 4096 with \(\delta = 5.0e-3\| \widehat {\boldsymbol {g}} \|\) and η = η1 = η2 = 1.0. The vertical line indicates where the stopping criterion is satisfied for adaptive rank approximation

Experiment 6

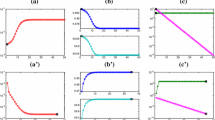

We consider A = B ⊗ B with \(B\in {\mathbb R}^{40\times 40}\) the matrix of the “baart” problem in [24]. Let \(\widehat {\boldsymbol {z}}\in {\mathbb R}^{40}\) be the “exact solution” furnished by the “baart” problem and compute the associated right-hand side \(\widehat {\boldsymbol {b}}=B\widehat {\boldsymbol {z}}\). Then \(B\widehat {\boldsymbol {z}}\widehat {\boldsymbol {z}}^{T}B^{T}=\widehat {\boldsymbol {b}}\widehat {\boldsymbol {b}}^{T}\). Let \(\widehat {\boldsymbol {x}}=\text {vec}(\widehat {\boldsymbol {z}}\widehat {\boldsymbol {z}}^{T})\) and \(\widehat {\boldsymbol {g}}=\text {vec}(\widehat {\boldsymbol {b}}\widehat {\boldsymbol {b}}^{T})\), where vec denotes the vectorization operator. Then \(A\widehat {\boldsymbol {x}}=\widehat {\boldsymbol {g}}\). The singular values of B decay to zero quite quickly with increasing index number. Since the singular values of A are pairwise products of the singular values of A, they also decrease to zero quickly with increasing index. Let δ = 1e − 2 and generate the error contaminated right-hand side vector g associated with \(\widehat {\boldsymbol {g}}\). We use (4.4) for L0, L1 and L2 as regularization matrices. The relative errors of the computed solutions are displayed in Fig. 6.

For the 2D “baart” problem the relative error between the exact solution and the computed approximate solution is plotted as a function of the iteration step k and the chosen 2D regularization matrix based on L0,L1,L2. The size of the problem is n = 402 with δ = 1.0e − 3 and η = η1 = η2 = 1.0. The vertical line indicates where the stopping criterion is satisfied for adaptive rank approximation

5 Conclusion

This paper discusses the application of adaptive cross approximation to Tikhonov regularization problems in general form. The computed examples illustrate that often only quite few cross approximation steps are required to yield useful approximate solutions. Particular attention is given to the stopping criterion for adaptive cross approximation.

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Baart, M.L.: The use of auto-correlation for pseudo-rank determination in noisy ill-conditioned least-squares problems. IMA J. Numer. Anal. 2, 241–247 (1982)

Bebendorf, M.: Approximation of boundary element matrices. Numer. Math. 86, 565–589 (2000)

Bebendorf, M., Grzibovski, R.: Accelerating Galerkin BEM for linear elasticity using adaptive cross approximation. Math. Methods Appl. Sci. 29, 1721–1747 (2006)

Bebendorf, M., Rjasanow, S.: Adaptive low-rank approximation of collocation matrices. Computing 70, 1–24 (2003)

Börm, S.: Efficient Numerical Methods for Non-Local Operators: \({\mathscr{H}}_{2}\)-Matrix Compression Algorithms and Analysis. European Math. Society, Zürich (2010)

Brezinski, C., Rodriguez, G., Seatzu, S.: Error estimates for linear systems with applications to regularization. Numer. Algorithms 49, 85–104 (2008)

Brezinski, C., Redivo-Zaglia, M., Rodriguez, G., Seatzu, S.: Multi-parameter regularization techniques for ill-conditioned linear systems. Numer. Math. 94, 203–224 (2003)

Buccini, A., Pasha, M., Reichel, L.: Generalized singular value decomposition with iterated Tikhonov regularization. J. Comput. Appl. Math 373, 112276 (2020)

Calvetti, D., Petersen, J., Reichel, L.: A parallel implementation of the GMRES algorithm. In: Reichel, L., Ruttan, A., Varga, R.S., de Gruyter (eds.) Numerical Linear Algebra, pp 31–46, Berlin (1993)

Dykes, L., Noschese, S., Reichel, L.: Rescaling the GSVD with application to ill-posed problems. Numer. Algorithms 68, 531–545 (2015)

Dykes, L., Reichel, L.: Simplified GSVD computations for the solution of linear discrete ill-posed problems. J. Comput. Appl. Math. 255, 15–27 (2013)

Eldén, L.: A weighted pseudoinverse, generalized singular values, and constrained least squares problems. BIT Numer. Math. 22, 487–501 (1982)

Engl, H.W., Hanke, M., Neubauer, A.: Regularization of Inverse Problems. Kluwer, Dordrecht (1996)

Erhel, J.: A parallel GMRES version for general sparse matrices. Electron. Trans. Numer. Anal. 3, 160–176 (1995)

Fenu, C., Reichel, L., Rodriguez, G., Sadok, H.: GCV For Tikhonov regularization by partial SVD. BIT Numer. Math. 57, 1019–1039 (2017)

Fox, L., Goodwin, E.T.: The numerical solution of non-singular linear integral equations. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 245:902, 501–534 (1953)

Frederix, K., Van Barel, M.: Solving a large dense linear system by adaptive cross approximation. J. Comput. Appl. Math. 234, 3181–3195 (2010)

Gazzola, S., Hansen, P.C., Nagy, J.G.: IR Tools: A MATLAB package of iterative regularization methods and large-scale test problems. Numer. Algorithms 81, 773–811 (2019)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. Johns Hopkins University Press, Baltimore (2013)

Goreinov, S.A., Tyrtyshnikov, E.E., Zamarashkin, N.L.: A theory of pseudo-skeleton approximation. Linear Algebra Appl. 261, 1–21 (1997)

Goreinov, S.A., Zamarashkin, N.L., Tyrtyshnikov, E.E.: Pseudo-skeleton approximations by matrices of maximal volume. Math. Notes 62, 515–519 (1997)

Groetsch, C.W.: The Theory of Tikhonov Regularization for Fredholm Equations of the First Kind. Pitman, Boston (1984)

Hansen, P.C.: Rank-Deficient And discrete Ill-Posed problems, SIAM, Philadelphia (1998)

Hansen, P.C.: Regularization Tools version 4.0 for Matlab 7.3. Software is available in Netlib at http://www.netlib.org. Numer. Algorithms 46, 189–194 (2007)

Huang, G., Noschese, S., Reichel, L.: Regularization matrices determined by matrix nearness problems. Linear Algebra Appl. 502, 41–57 (2016)

Huang, G., Reichel, L., Yin, F.: On the choice of subspace for large-scale Tikhonov regularization problems in general form. Numer. Algorithms 81, 33–55 (2019)

H2Lib: http://www.h2lib.org/, 2015–2020

Kindermann, S., Raik, K.: A simplified L-curve method as error estimator. Electron. Trans. Numer. Anal. 53, 217–238 (2020)

Mach, T., Reichel, L., Van Barel, M., Vandebril, R.: Adaptive cross approximation for ill-posed problems. J. Comput. Appl. Math. 303, 206–217 (2016)

Morigi, S., Reichel, L., Sgallari, F.: Orthogonal projection regularization operators. Numer. Algorithms 44, 99–114 (2007)

Noschese, S., Reichel, L.: Inverse problems for regularization matrices. Numer. Algorithms 60, 531–544 (2012)

Park, Y., Reichel, L., Rodriguez, G., Yu, X.: Parameter determination for Tikhonov regularization problems in general form. J. Comput. Appl. Math. 343, 12–25 (2018)

Reichel, L., Rodriguez, G.: Old and new parameter choice rules for discrete ill-posed problems. Numer. Algorithms 63, 65–87 (2013)

Reichel, L., Shyshkov, A.: A new zero-finder for Tikhonov regularization. BIT Numer. Math. 48, 627–643 (2008)

Reichel, L., Ye, Q.: Simple square smoothing regularization operators, Electron. Trans. Numer. Anal. 33, 63–83 (2009)

Shaw Jr, C.B.: Improvements of the resolution of an instrument by numerical solution of an integral equation. J. Math. Anal. Appl. 37, 83–112 (1972)

Tyrtyshnikov, E.E.: Incomplete cross approximation in the mosaic-skeleton method. Computing 64, 367–380 (2000)

Yamamoto, Y., Nakatsukasa, Y., Yanagisawa, Y., Fukaya, T.: Roundoff error analysis of the choleskyQR2 algorithm, Electron. Trans. Numer. Anal. 44, 306–326 (2015)

Acknowledgements

We would like to thank the referee for comments that lead to improvements of the presentation.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research was partially supported by the Fund for Scientific Research–Flanders (Belgium), Structured Low-Rank Matrix/Tensor Approximation: Numerical Optimization-Based Algorithms and Applications: SeLMA, EOS 30468160, the KU Leuven Research Fund, Numerical Linear Algebra and Polynomial Computations, OT C14/17/073. The research of Thomas Mach has been partially funded by the Deutsche Forschungsgemeinschaft (DFG) - Project-ID 318763901 - SFB1294.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Dedicated to Claude Brezinski on the occasion of his 80th birthday.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mach, T., Reichel, L. & Van Barel, M. Adaptive cross approximation for Tikhonov regularization in general form. Numer Algor 92, 815–830 (2023). https://doi.org/10.1007/s11075-022-01395-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01395-8