Abstract

Cauchy-like matrices often arise as building blocks in decomposition formulas and fast algorithms for various displacement-structured matrices. This paper gives a complete characterization of orthogonal Cauchy-like matrices. In particular, we show that orthogonal Cauchy-like matrices are exactly the eigenvector matrices of certain symmetric matrices related to the solution of secular equations. Moreover, the construction of orthogonal Cauchy-like matrices is related to that of orthogonal rational functions with variable poles.



1 Introduction

A matrix \(C\in \mathbb {R}^{n\times n}\) is of Cauchy type if its entries \(C_{ij}\) have the form

\[ C_{ij} = \frac{1}{x_i - y_j}, \qquad i,j = 1,\ldots,n, \tag{1} \]

where \(x_1,\ldots,x_n,y_1,\ldots,y_n\) are mutually distinct real numbers, called nodes. Besides their pervasive occurrence in computations with rational functions [1], Cauchy matrices play an important role in deriving algebraic and computational properties of many relevant structured matrix classes. For example, they occur as fundamental blocks (together with trigonometric transforms) in decomposition formulas and fast solvers for Toeplitz, Hankel, and related matrices, see, e.g., [2].

The main goal of this contribution is to provide a complete answer to the following question: can the rows and columns of a Cauchy matrix be scaled so that the matrix becomes orthogonal? Interest in this question arises from the paper [3], where orthogonal matrices obtained by scaling the rows and columns of Cauchy matrices are needed in the design of all-pass filters for signal processing purposes. Moreover, in [4] Cauchy matrices have been characterized as transition matrices between eigenbases of certain pairs of diagonalizable matrices, as described in more detail below. It is therefore interesting to characterize the Cauchy matrices that can be orthogonalized by a row/column scaling, since in that case the related eigenbases are equally well conditioned.

Matrices obtained by scaling the rows and columns of a Cauchy matrix of order n can be parametrized by about 4n coefficients (the two node vectors and the two scaling vectors). On the other hand, an invertible matrix X is orthogonal if and only if it fulfills the identity \(X^TX = I\), which boils down to about \(n^2/2\) quadratic scalar equations. It may therefore seem that orthogonalizing a Cauchy matrix by scaling its rows and columns is feasible only for small n, since the number of constraints grows faster than the number of free variables. On the contrary, the main result of this paper shows that, for any fixed order n, there are infinitely many Cauchy matrices that can be orthogonalized in this way.

Let us briefly explain the structure of the paper. A few basic facts and concepts on Cauchy matrices are recalled in the next section. Section 3 contains the main results of this paper, namely, the complete description of the set of orthogonal Cauchy-like matrices of any order n, that is, the orthogonal matrices obtained by diagonal scalings of Cauchy matrices. Section 4 presents various algebraic and computational properties of orthogonal Cauchy-like matrices. There, we illustrate their relationships with secular equations, the diagonalization of a subclass of symmetric quasiseparable matrices and the construction of orthogonal rational functions with free poles. Moreover, in that section we specialize to orthogonal Cauchy-like matrices the characterization of Cauchy matrices obtained in [4], and provide a complete description of the matrix sets that are simultaneously diagonalized by orthogonal Cauchy-like matrices. Finally, Section 5 exhibits a particular sequence of orthogonal Cauchy-like matrices of arbitrary order, whose nodes are based on Chebyshev points.

In the sequel, we adopt the following notation. The matrix in (1) is referred to simply as Cauchy(x,y). Let \(\mathbb {R}^{n}_{0}\) be the set of vectors in \(\mathbb {R}^{n}\) without null entries. We denote by \(\mathbf{1}\) the all-ones vector in \(\mathbb {R}^{n}\). For \(0\neq x\in \mathbb {R}\) we set sign(x) = 1 if x > 0 and sign(x) = −1 if x < 0. For any \(z= (z_{1},\ldots ,z_{n})^{T}\in \mathbb {R}^{n}\) let \(D_{z} \in \mathbb {R}^{n\times n}\) be the diagonal matrix with diagonal entries \(z_1,\ldots,z_n\).

2 Cauchy matrices and their properties

Algebraic and computational properties of Cauchy matrices are better understood by making use of the so-called displacement operators. Let \(M,N\in \mathbb {R}^{n\times n}\) and define

\[ \mathcal{D}_{M,N}:\mathbb{R}^{n\times n}\to\mathbb{R}^{n\times n}, \qquad \mathcal{D}_{M,N}(X) = MX - XN. \]

The matrix operator \(\mathcal {D}_{M,N}\) is widely known as a (Sylvester-type) displacement operator [5]. The map \(\mathcal {D}_{M,N}\) is invertible if and only if the spectra of M and N are disjoint. For any nonnegative integer r the set

\[ \mathcal{S}_{M,N}^{r} = \{X\in\mathbb{R}^{n\times n} : \operatorname{rank}(\mathcal{D}_{M,N}(X)) \le r\} \]

is a displacement-structured matrix space. Various interesting matrix sets, such as circulant, Toeplitz, Vandermonde, Hankel, and also Cauchy matrices, are actually subsets of displacement-structured matrix spaces with a small displacement rank r, for suitable M and N. These spaces share two important features. First, matrices in \(\mathcal {S}_{M,N}^{r}\) can be parametrized by a small set of coefficients (typically, their number is \(\mathcal {O}(nr)\)) by means of appropriate inversion formulas for the related displacement operator. Second, if an invertible matrix X belongs to \(\mathcal {S}_{M,N}^{r}\) then its inverse belongs to \(\mathcal {S}_{N,M}^{r}\), since \(\mathcal{D}_{N,M}(X^{-1}) = -X^{-1}\mathcal{D}_{M,N}(X)X^{-1}\). Exploiting these simple facts, linear systems and least squares problems with displacement-structured matrices can be solved by fast algorithms requiring \(\mathcal {O}(n^{2})\) arithmetic operations or even less [6,7,8].

Cauchy matrices are very special displacement-structured matrices. For \(x,y\in \mathbb {R}^{n}\) we adopt the simplified notations \(\mathcal {D}_{x,y}\) and \(\mathcal {S}_{x,y}^{r}\) for the displacement operator \(\mathcal {D}_{x,y}(X) = D_{x}X-XD_{y}\) and the related displacement-structured matrix space, respectively. If the numbers \(x_1,\ldots,x_n,y_1,\ldots,y_n\) are all distinct then the operator \(\mathcal {D}_{x,y}\) is invertible. Indeed, for any given matrix \(A\in \mathbb {R}^{n\times n}\) the solution of the matrix equation \(\mathcal {D}_{x,y}(X) = A\) is \(X_{ij} = A_{ij}/(x_i - y_j)\). It is immediate to see that the matrix C in (1) is characterized as the solution of the matrix equation \(\mathcal {D}_{x,y}(C) = \mathbf {1}\mathbf {1}^{T}\), hence \(C \in \mathcal {S}_{x,y}^{1}\). More generally, a matrix \(A\in \mathbb {R}^{n\times n}\) belonging to a space \(\mathcal {S}_{x,y}^{r}\) with r ≪ n is said to possess a Cauchy-like displacement structure [5,6,7]. In this work, we use the term Cauchy-like matrix for any matrix that belongs to some space \(\mathcal {S}_{x,y}^{1}\).
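To make the displacement view concrete, here is a minimal NumPy sketch (the helper names `cauchy`, `displacement` and `inverse_displacement` are our own, not from the paper). It builds Cauchy(x, y) for sample nodes, checks that \(\mathcal D_{x,y}(C) = \mathbf 1\mathbf 1^T\) has rank one, and inverts \(\mathcal D_{x,y}\) entrywise as described above.

```python
import numpy as np

def cauchy(x, y):
    """Cauchy(x, y): entries 1/(x_i - y_j); assumes x_i != y_j for all i, j."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return 1.0 / (x[:, None] - y[None, :])

def displacement(x, y, X):
    """Sylvester-type displacement D_{x,y}(X) = D_x X - X D_y."""
    return np.diag(x) @ X - X @ np.diag(y)

def inverse_displacement(x, y, A):
    """Solve D_{x,y}(X) = A entrywise: X_ij = A_ij / (x_i - y_j)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return A / (x[:, None] - y[None, :])

x = np.array([0.0, 1.0, 2.5, 4.0])
y = np.array([0.5, 1.7, 3.0, 4.2])
C = cauchy(x, y)
G = displacement(x, y, C)
# D_{x,y}(C) should be the all-ones matrix, hence of rank one
print(np.allclose(G, np.ones((4, 4))), np.linalg.matrix_rank(G, tol=1e-10))

A = np.arange(16.0).reshape(4, 4)
X = inverse_displacement(x, y, A)
print(np.allclose(displacement(x, y, X), A))
```

The entrywise inverse works only because every difference \(x_i - y_j\) is nonzero, which is exactly the invertibility condition on \(\mathcal D_{x,y}\).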

Definition 1

Let \(x_{i},y_{j}\in \mathbb {R}\) be pairwise distinct numbers, for i,j = 1,…,n. A Cauchy-like matrix with nodes \(x_1,\ldots,x_n\) and \(y_1,\ldots,y_n\) is any matrix \(K\in \mathbb {R}^{n\times n}\) such that \(\mathcal {D}_{x,y}(K) = vw^{T}\) for some \(v,w\in \mathbb {R}^{n}\), i.e.,

\[ K_{ij} = \frac{v_i w_j}{x_i - y_j}, \qquad i,j = 1,\ldots,n. \tag{2} \]

The matrices of the previous definition are also called generalized Cauchy matrices by some authors, see, e.g., [9]. The vector parameters x, y, v and w in (2) are not unique. Indeed, adding the same constant to all entries of x and y does not modify the denominators in (2). Moreover, the vectors v and w are defined only up to a nonzero scaling factor, since replacing v and w by γv and w/γ, with γ ≠ 0, leaves K unchanged. However, this ambiguity is harmless in what follows. The lemma below, whose proof is immediate, provides a useful factored form for Cauchy-like matrices.

Lemma 1

A Cauchy-like matrix with nodes \(x_1,\ldots,x_n\) and \(y_1,\ldots,y_n\) can be factored as \(K = D_vCD_w\), where C = Cauchy(x,y) and \(v,w\in \mathbb {R}^{n}\) are such that \(\mathcal {D}_{x,y}(K) = vw^{T}\). Conversely, a matrix factored as \(K = D_vCD_w\) with C = Cauchy(x,y) and \(v,w\in \mathbb {R}^{n}\) is Cauchy-like.

A notable feature of the set of Cauchy-like matrices is its invariance under row and column permutations. This fact underlies stable numerical methods for solving linear systems with Cauchy-like matrices and, more generally, matrices with a Cauchy-like displacement structure [6, 7]. Indeed, let K be the matrix in (2) and let P,Q be two permutation matrices. Introduce the permuted vectors \(\widehat{x} = Px\), \(\widehat{y} = Qy\), \(\widehat {v} = Pv\) and \(\widehat {w} = Qw\). Direct inspection proves the identity

\[ PKQ^{T} = D_{\widehat v}\,\text{Cauchy}(\widehat x,\widehat y)\,D_{\widehat w}. \tag{3} \]

Hence, when dealing with Cauchy-like matrices there is no loss of generality in supposing that the vectors x and y are ordered monotonically, i.e.,

\[ x_1 < x_2 < \cdots < x_n, \qquad y_1 < y_2 < \cdots < y_n. \tag{4} \]

Such an ordering can always be obtained by a row and column permutation of the matrix.
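The permutation identity (3) can be checked numerically. The sketch below (NumPy; `cauchy_like` is a hypothetical helper implementing (2)) permutes a Cauchy-like matrix with random scaling vectors and compares it with the Cauchy-like matrix built from the permuted nodes and scaling vectors; sorting the nodes as in (4) is just one particular choice of P and Q.

```python
import numpy as np

def cauchy_like(x, y, v, w):
    """K_ij = v_i w_j / (x_i - y_j), as in (2)."""
    return np.outer(v, w) / (x[:, None] - y[None, :])

rng = np.random.default_rng(0)
n = 5
x = np.array([3.0, 0.0, 4.0, 1.0, 2.0])
y = np.array([0.5, 2.5, 4.5, 1.5, 3.5])
v = rng.standard_normal(n)
w = rng.standard_normal(n)
K = cauchy_like(x, y, v, w)

# Permutation matrices from index permutations: (P @ u)[i] == u[p[i]]
p, q = rng.permutation(n), rng.permutation(n)
P, Q = np.eye(n)[p], np.eye(n)[q]
lhs = P @ K @ Q.T
rhs = cauchy_like(P @ x, Q @ y, P @ v, Q @ w)     # right-hand side of (3)
print(np.allclose(lhs, rhs))

# Reordering rows and columns so that the nodes satisfy (4)
ix, iy = np.argsort(x), np.argsort(y)
K_sorted = K[np.ix_(ix, iy)]
print(np.allclose(K_sorted, cauchy_like(x[ix], y[iy], v[ix], w[iy])))
```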

2.1 The inverse and determinant of a Cauchy matrix

The invertibility of Cauchy matrices is a well-known fact that has been rediscovered many times. We refer to [10] for an early exposition of explicit formulas for the determinant and inverse of a generic Cauchy matrix. For example, the formula

\[ \det(C) = \frac{\prod_{1\le i<j\le n}(x_j - x_i)(y_i - y_j)}{\prod_{i,j=1}^{n}(x_i - y_j)} \tag{5} \]

shows that every Cauchy matrix is nonsingular. To illustrate the convenience of displacement operators, we recall hereafter a simple displacement-based derivation of the structure of the inverse of a Cauchy matrix. Let C = Cauchy(x,y). Multiplying both sides of \(\mathcal {D}_{x,y}(C) = \mathbf {1}\mathbf {1}^{T}\) by \(C^{-1}\) on the left and on the right, we derive

\[ C^{-1}D_x - D_yC^{-1} = C^{-1}(D_xC - CD_y)C^{-1} = C^{-1}\mathbf{1}\mathbf{1}^{T}C^{-1}. \]

Thus \(\mathcal {D}_{y,x}(C^{-1}) = D_{y}C^{-1}-C^{-1}D_{x} = - C^{-1}\mathbf {1}\mathbf {1}^{T}C^{-1}\), that is, \(C^{-1}\in \mathcal {S}_{y,x}^{1}\). Let \(a = C^{-1}\mathbf{1}\) and \(b = C^{-T}\mathbf{1}\). Then we conclude \(\mathcal {D}_{y,x}(C^{-1})= - ab^{T}\) and, by Lemma 1,

\[ C^{-1} = -D_a\,\text{Cauchy}(y,x)\,D_b = D_aC^TD_b. \tag{6} \]

The last step exploits the identity \(\text{Cauchy}(x,y)^T = -\text{Cauchy}(y,x)\). Incidentally, (6) inspired the authors of the paper [9] to investigate nonsingular matrices X such that \(X^{-1} = D_aX^TD_b\) for some diagonal matrices \(D_a\) and \(D_b\), with particular attention to the sign patterns that appear in these matrices.

Clearly, equation (6) implies that \(a,b\in \mathbb {R}^{n}_{0}\), since a nonsingular matrix has no zero rows or columns. In fact, explicit expressions for the vectors a and b above can be obtained from the solution of certain polynomial interpolation problems, see, e.g., [10, 11]. In particular, for i = 1,…,n,

\[ a_i = -\frac{p(y_i)}{q'(y_i)}, \qquad b_i = \frac{q(x_i)}{p'(x_i)}, \tag{7} \]

where p(x) and q(x) are the polynomials

\[ p(x) = \prod_{i=1}^{n}(x - x_i), \qquad q(x) = \prod_{i=1}^{n}(x - y_i). \tag{8} \]
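The following sketch, assuming NumPy and nodes with \(x_i \neq y_j\), cross-checks formulas (5), (6) and (7) against dense linear algebra; `cauchy_det` and `ab_vectors` are illustrative helpers of ours, not routines from the paper.

```python
import numpy as np
from itertools import combinations

def cauchy(x, y):
    """Cauchy(x, y) with entries 1/(x_i - y_j)."""
    return 1.0 / (x[:, None] - y[None, :])

def cauchy_det(x, y):
    """Determinant of Cauchy(x, y) via the closed formula (5)."""
    num = 1.0
    for i, j in combinations(range(len(x)), 2):       # all index pairs i < j
        num *= (x[j] - x[i]) * (y[i] - y[j])
    return num / np.prod(x[:, None] - y[None, :])

def ab_vectors(x, y):
    """a_i = -p(y_i)/q'(y_i), b_i = q(x_i)/p'(x_i), as in (7), with monic p, q from (8)."""
    n = len(x)
    p_y = np.prod(y[:, None] - x[None, :], axis=1)    # p(y_i) = prod_k (y_i - x_k)
    q_x = np.prod(x[:, None] - y[None, :], axis=1)    # q(x_i) = prod_k (x_i - y_k)
    dq_y = np.array([np.prod(np.delete(y[i] - y, i)) for i in range(n)])  # q'(y_i)
    dp_x = np.array([np.prod(np.delete(x[i] - x, i)) for i in range(n)])  # p'(x_i)
    return -p_y / dq_y, q_x / dp_x

x = np.array([0.0, 1.0, 2.5, 4.0])
y = np.array([0.5, 1.7, 3.0, 4.2])
C = cauchy(x, y)
a, b = ab_vectors(x, y)
ones = np.ones(len(x))

print(np.allclose(C @ a, ones), np.allclose(C.T @ b, ones))          # a = C^{-1} 1, b = C^{-T} 1
print(np.allclose(np.linalg.inv(C), np.diag(a) @ C.T @ np.diag(b)))  # formula (6)
print(np.isclose(np.linalg.det(C), cauchy_det(x, y)))                # formula (5)
```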

3 Main results

On the basis of the facts recalled in the previous section, the construction of orthogonal Cauchy-like matrices with given nodes \(x_1,\ldots,x_n\) and \(y_1,\ldots,y_n\) amounts to solving the quadratic matrix equation \(K^TK - I = O\) under the constraint \(K\in \mathcal {S}_{x,y}^{1}\). In this section, we provide necessary and sufficient conditions for the solvability of this problem, together with a complete description of the solution set. First, we characterize the Cauchy matrices that can be diagonally scaled to orthogonality. Subsequently, we describe all orthogonal Cauchy-like matrices with prescribed nodes. Later, in Section 4.2, we solve the inverse problem of choosing one set of nodes given the other, so that the resulting Cauchy matrix can be scaled to have orthogonal columns.

Definition 2

Let \(\mathcal {K}_{n}\) be the set of Cauchy matrices \(C\in \mathbb {R}^{n\times n}\) such that there exist \(v,w\in \mathbb {R}^{n}_{0}\) such that DvCDw is orthogonal.

Thus, \(\mathcal {K}_n\) consists of all Cauchy matrices that can be made orthogonal by scaling rows and columns. Owing to (3), the set \({\mathcal {K}_{n}}\) is closed under row/column permutations, i.e., permuting rows and columns of a Cauchy matrix has no effect on whether the matrix belongs to \({\mathcal {K}_{n}}\) or not. We formalize this fact in the next proposition.

Proposition 2

Let C = Cauchy(x,y) and let P,Q be two arbitrary permutation matrices. Then \(PCQ^{T}\in \mathcal {K}_n\) if and only if \(C\in \mathcal {K}_n\).

Hence, to characterize the matrices in \(\mathcal {K}_n\) we can restrict our attention to Cauchy matrices whose nodes verify the inequalities (4). The following results provide necessary and sufficient conditions for an n × n Cauchy matrix to belong to \(\mathcal {K}_{n}\). The condition in the forthcoming Lemma 3 concerns the signs of the numbers \(a_i\) and \(b_i\) defined in (7), while that in Theorem 4 involves only the position of the nodes on the real line.

Lemma 3

The matrix C = Cauchy(x,y) belongs to \(\mathcal {K}_n\) if and only if the numbers a1,…,an and b1,…,bn from (7) are either all positive or all negative.

Proof 1

From (6) we have \(C^{-1} = D_aC^TD_b\) with \(a,b\in \mathbb {R}^{n}_{0}\) given by (7). Furthermore, \(C\in \mathcal {K}_{n}\) if and only if there exist \(v,w\in \mathbb {R}^{n}_{0}\) such that the Cauchy-like matrix \(K = D_vCD_w\) is orthogonal. The latter factorization yields the representation

\[ K^{-1} = D_w^{-1}C^{-1}D_v^{-1} = D_w^{-1}D_aC^TD_bD_v^{-1}. \]

On the other hand, \(K^T = D_wC^TD_v\). Thus \(K^{-1} = K^T\) if and only if

\[ D_w^{-1}D_aC^TD_bD_v^{-1} = D_wC^TD_v \]

or, equivalently,

\[ D_aC^TD_b = D_w^{2}C^TD_v^{2}. \]

Comparing entrywise the matrices on the two sides of the latter equation, and recalling that all entries \((C^T)_{ij} = 1/(x_j - y_i)\) are nonzero, we see that the identity \(K^T = K^{-1}\) holds if and only if \(w_i^2v_j^2 = a_ib_j\), in particular \(a_ib_j > 0\), for all i,j = 1,…,n.

Conversely, let \(a_ib_j > 0\) for all i,j = 1,…,n, that is, all the numbers \(a_i, b_j\) have the same sign σ. Let \(w_{i} = \pm \sqrt {\sigma a_{i}}\) and \(v_{i} = \pm \sqrt { \sigma b_{i}}\) for i = 1,…,n and define \(K = D_vCD_w\). We obtain \({w_{i}^{2}}{v_{j}^{2}} = \sigma^2 a_{i}b_{j} = a_ib_j\), and the identity \(K^T = K^{-1}\) can be derived by reversing the order of the previous arguments. □

Theorem 4

Let C = Cauchy(x,y) where the vectors x and y fulfill (4). Then, \(C\in \mathcal {K}_{n}\) if and only if the nodes xi and yj interlace, that is, either x1 < y1 < x2 < y2 < ⋯ < xn < yn or y1 < x1 < y2 < x2 < ⋯ < yn < xn. More precisely, we have the first sequence of inequalities when the numbers ai,bi in (7) are all negative and the second sequence when they are all positive.

Proof 2

Firstly, we prove that the node interlacing condition is necessary for having \(C\in \mathcal {K}_n\). Arguing by contradiction, let \(v,w\in \mathbb {R}^{n}_{0}\) be such that \(K = D_vCD_w\) is orthogonal and suppose that the nodes \(x_i\) and \(y_j\) do not interlace. Then at least one of the following conditions is true: (1) for some i = 1,…,n − 1 the interval \([x_i,x_{i+1}]\) contains none of \(y_1,\ldots,y_n\); (2) for some i = 1,…,n − 1 the interval \([y_i,y_{i+1}]\) contains none of \(x_1,\ldots,x_n\). In the first case, since \(KK^T = I\), consider the identity

\[ 0 = (KK^T)_{i,i+1} = \sum_{j=1}^{n}K_{ij}K_{i+1,j} = v_iv_{i+1}\sum_{j=1}^{n}\frac{w_j^2}{(x_i - y_j)(x_{i+1} - y_j)}. \]

By hypothesis, \(y_j < x_i\) if and only if \(y_j < x_{i+1}\). Hence, the factors \(1/(x_i - y_j)\) and \(1/(x_{i+1} - y_j)\) have the same sign for j = 1,…,n, so that every term of the last sum is positive. Consequently, the rightmost expression in the previous equation is nonzero and we have a contradiction. Case (2) can be treated analogously by considering the formula for \((K^TK)_{i,i+1}\) and interchanging the roles of x and y.

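Conversely, suppose that the entries of x and y interlace as follows:

\[ x_1 < y_1 < x_2 < y_2 < \cdots < x_n < y_n. \tag{9} \]

Let \(p(x) = {\prod }_{i}(x-x_{i})\) and \(q(x) = {\prod }_{i}(x-y_{i})\) be as in (8). Then, for i = 1,…,n,

\[ p(y_i) = \prod_{k=1}^{n}(y_i - x_k), \qquad q(x_i) = \prod_{k=1}^{n}(x_i - y_k). \]

Consequently, by (9), exactly n − i factors of \(p(y_i)\) (those with k > i) and exactly n − i + 1 factors of \(q(x_i)\) (those with k ≥ i) are negative, so that

\[ \operatorname{sign}(p(y_i)) = (-1)^{n-i}, \qquad \operatorname{sign}(q(x_i)) = (-1)^{n-i+1}. \]

Furthermore, \(q'(y_i) = \prod_{k\neq i}(y_i - y_k)\) and \(p'(x_i) = \prod_{k\neq i}(x_i - x_k)\), whence

\[ \operatorname{sign}(q'(y_i)) = \operatorname{sign}(p'(x_i)) = (-1)^{n-i}. \]

From (7) we obtain \(\text{sign}(a_i) = \text{sign}(b_i) = -1\) and the claim follows from Lemma 3. The case where \(y_1 < x_1 < y_2 < x_2 < \cdots < y_n < x_n\) can be treated analogously by interchanging the roles of x and y. Here we have \(\text{sign}(a_i) = \text{sign}(b_i) = +1\), and this completes the proof. □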
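Lemma 3 and Theorem 4 can be compared experimentally. The sketch below (NumPy; all helper names are ours) tests the sign condition of Lemma 3 and the interlacing condition of Theorem 4 on random nodes; by the two results, they should agree in every trial. Signs of the products in (7) are computed exactly in floating point, since no difference of distinct nodes rounds to zero.

```python
import numpy as np

def ab_vectors(x, y):
    """a_i = -p(y_i)/q'(y_i), b_i = q(x_i)/p'(x_i), as in (7)-(8)."""
    n = len(x)
    p_y = np.prod(y[:, None] - x[None, :], axis=1)
    q_x = np.prod(x[:, None] - y[None, :], axis=1)
    dq_y = np.array([np.prod(np.delete(y[i] - y, i)) for i in range(n)])
    dp_x = np.array([np.prod(np.delete(x[i] - x, i)) for i in range(n)])
    return -p_y / dq_y, q_x / dp_x

def interlace(x, y):
    """Node condition of Theorem 4, after sorting x and y as in (4)."""
    xs, ys = np.sort(x), np.sort(y)
    xy = np.column_stack([xs, ys]).ravel()    # x1, y1, x2, y2, ...
    yx = np.column_stack([ys, xs]).ravel()    # y1, x1, y2, x2, ...
    return bool(np.all(np.diff(xy) > 0) or np.all(np.diff(yx) > 0))

def same_sign(x, y):
    """Sign condition of Lemma 3: all a_i, b_i positive or all negative."""
    a, b = ab_vectors(x, y)
    s = np.sign(np.concatenate([a, b]))
    return bool(np.all(s == s[0]))

rng = np.random.default_rng(1)
agree = 0
for _ in range(500):
    x, y = rng.standard_normal(4), rng.standard_normal(4)
    agree += interlace(x, y) == same_sign(x, y)
print(agree)   # Lemma 3 together with Theorem 4 predicts 500
```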

Remark 1

As shown in the preceding theorem, the set \(\mathcal {K}_n\) splits into two disjoint subsets, \(\mathcal {K}_n = \mathcal {K}_n^{1} \cup \mathcal {K}_n^{2}\) and \(\mathcal {K}_n^{1} \cap \mathcal {K}_n^{2} = \varnothing \), where \(\mathcal {K}_n^{1}\) contains all Cauchy matrices whose nodes (reordered as in (4)) fulfill the inequalities \(x_1 < y_1 < \cdots < x_n < y_n\) and \(\mathcal {K}_{n}^{2}\) consists of the Cauchy matrices such that \(y_1 < x_1 < \cdots < y_n < x_n\). Thus, up to permutations of rows and columns, the sign patterns of matrices in \(\mathcal {K}_n^{1}\) and \(\mathcal {K}_n^{2}\) are

\[ \begin{pmatrix} - & - & \cdots & - \\ + & - & \cdots & - \\ \vdots & \ddots & \ddots & \vdots \\ + & \cdots & + & - \end{pmatrix} \qquad\text{and}\qquad \begin{pmatrix} + & - & \cdots & - \\ + & + & \ddots & \vdots \\ \vdots & & \ddots & - \\ + & \cdots & + & + \end{pmatrix}, \]

respectively, that is, \(C_{ij} > 0\) exactly when j < i (respectively, when j ≤ i). Any sign pattern that can be traced to one of the above by permuting rows and columns can be realized by a Cauchy matrix in \(\mathcal {K}_{n}^{1}\) or \(\mathcal {K}_{n}^{2}\), respectively. Observing these patterns, or using the identity \(\text{Cauchy}(x,y)^T = \text{Cauchy}(-y,-x)\), it is not difficult to realize that these two sets are invariant under matrix transposition, that is, \(C\in \mathcal {K}_n^{i} \Leftrightarrow C^{T}\in \mathcal {K}_n^{i}\) for i = 1,2. Furthermore, using the inversion formula (6) and Lemma 3, we also conclude \(C\in \mathcal {K}_{n}^{i} \Leftrightarrow C^{-1}\in \mathcal {K}_{n}^{i}\) for i = 1,2. On the other hand, \(C\in \mathcal {K}_n^{1} \ \Leftrightarrow -C\in \mathcal {K}_n^{2}\), since \(-\text{Cauchy}(x,y) = \text{Cauchy}(-x,-y)\).
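The two sign patterns and the identities used in Remark 1 are easy to inspect numerically. In the sketch below (NumPy; equally spaced nodes chosen by us only for readability), `C1` has nodes \(x_i < y_i\) and `C2` has nodes \(y_i < x_i\), both interlaced.

```python
import numpy as np

def cauchy(x, y):
    return 1.0 / (x[:, None] - y[None, :])

n = 5
x = 2.0 * np.arange(n)           # 0, 2, 4, 6, 8
y1 = x + 1.0                     # x1 < y1 < x2 < ... : a matrix in K_n^1
y2 = x - 1.0                     # y1 < x1 < y2 < ... : a matrix in K_n^2
C1, C2 = cauchy(x, y1), cauchy(x, y2)

low_strict = np.tril(np.ones((n, n)), -1) > 0    # positions with j < i
low_incl = np.tril(np.ones((n, n))) > 0          # positions with j <= i
print(np.array_equal(C1 > 0, low_strict))        # pattern of K_n^1
print(np.array_equal(C2 > 0, low_incl))          # pattern of K_n^2

# Transposition and negation rules from Remark 1
print(np.allclose(C1.T, cauchy(-y1, -x)), np.allclose(-C1, cauchy(-x, -y1)))
```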

The next result characterizes all orthogonal Cauchy-like matrices having prescribed nodes xi and yi that verify the interlacing inequalities in Theorem 4.

Corollary 5

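Let \(C = \text {Cauchy}(x,y) \in \mathcal {K}_{n}\), and let \(a_i, b_i\) be as in (7). The Cauchy-like matrix \(K = D_vCD_w\) is orthogonal if and only if there exists a scalar α ≠ 0 such that

\[ v_i^2 = \alpha b_i, \qquad w_i^2 = \frac{a_i}{\alpha}, \qquad i = 1,\ldots,n. \tag{10} \]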

Proof 3

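The equations above can be rewritten as \({D_{v}^{2}} = \alpha D_{b}\) and \({D_{w}^{2}} = \frac {1}{\alpha } D_{a}\). Since \(a,b\in \mathbb {R}^{n}_{0}\), condition (10) implies \(v,w\in \mathbb {R}^{n}_{0}\), so the matrices \(D_v\) and \(D_w\) are invertible, and (10) implies the identity \(K^T = K^{-1}\). Indeed, by (6),

\[ K^{-1} = D_w^{-1}C^{-1}D_v^{-1} = D_w^{-1}D_aC^TD_bD_v^{-1} = D_w^{-1}(\alpha D_w^{2})\,C^T\,(\alpha^{-1}D_v^{2})D_v^{-1} = D_wC^TD_v = K^T. \]

Conversely, if \(K^T = K^{-1}\) then, using the identity \(K = D_vCD_w\) and (6), we obtain

\[ D_wC^TD_v = D_w^{-1}D_aC^TD_bD_v^{-1}, \qquad\text{that is,}\qquad D_w^{2}C^TD_v^{2} = D_aC^TD_b. \]

By matching the (i,j)-entries of the two sides of the last identity we find \({w_{i}^{2}}{v_{j}^{2}}/(x_{j}-y_{i}) = a_{i}b_{j}/(x_{j}-y_{i})\) for i,j = 1,…,n. Hence \(v_j^2/b_j = a_i/w_i^2\) for all i and j, so both ratios equal a common constant α ≠ 0, which is (10). □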
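Corollary 5 gives a one-parameter family of orthogonal Cauchy-like matrices for fixed nodes. The sketch below (NumPy; `orthogonal_cauchy_like` is a hypothetical helper) assumes interlaced nodes with \(x_i < y_i\), so that a, b < 0 and α must be negative; taking positive square roots is an arbitrary choice, as any sign choice in (10) works.

```python
import numpy as np

def cauchy(x, y):
    return 1.0 / (x[:, None] - y[None, :])

def ab_vectors(x, y):
    """a_i = -p(y_i)/q'(y_i), b_i = q(x_i)/p'(x_i), see (7)-(8)."""
    n = len(x)
    p_y = np.prod(y[:, None] - x[None, :], axis=1)
    q_x = np.prod(x[:, None] - y[None, :], axis=1)
    dq_y = np.array([np.prod(np.delete(y[i] - y, i)) for i in range(n)])
    dp_x = np.array([np.prod(np.delete(x[i] - x, i)) for i in range(n)])
    return -p_y / dq_y, q_x / dp_x

def orthogonal_cauchy_like(x, y, alpha):
    """K = D_v C D_w with v_i^2 = alpha*b_i and w_i^2 = a_i/alpha, as in (10).
    Assumes interlaced nodes and sign(alpha) equal to the common sign of a and b."""
    a, b = ab_vectors(x, y)
    v, w = np.sqrt(alpha * b), np.sqrt(a / alpha)
    return np.diag(v) @ cauchy(x, y) @ np.diag(w)

x = np.array([0.0, 1.0, 2.5, 4.0])
y = np.array([0.4, 1.6, 3.1, 5.0])      # x_1 < y_1 < ... < x_4 < y_4, so a, b < 0
for alpha in (-1.0, -0.3, -7.0):
    K = orthogonal_cauchy_like(x, y, alpha)
    print(alpha, np.allclose(K.T @ K, np.eye(4)))
```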

4 Supplementary results

This section is divided into sub-sections dedicated to showing various algebraic and computational properties of orthogonal Cauchy-like matrices. First, we illustrate their relationships with secular equations and a family of quasiseparable matrices. Next, we show their occurrence in the construction of orthogonal rational functions with free poles, and specialize to orthogonal Cauchy-like matrices the characterization of Cauchy matrices obtained in [4]. Lastly, we extend results from [12, 13] to provide a complete description of matrix sets that are simultaneously diagonalized by orthogonal Cauchy-like matrices.

4.1 Secular equations and quasiseparable matrices

Let \(\mathcal {A}_n \subset \mathbb {R}^{n\times n}\) be the set of all matrices A that admit the decomposition

\[ A = D_x + \alpha vv^T \]

for some \(x\in \mathbb {R}^{n}\), \(v\in \mathbb {R}^{n}_{0}\) and \(0\neq \alpha \in \mathbb {R}\), where in addition the entries of \(x = (x_1,\ldots,x_n)^T\) are pairwise distinct. Thus \({\mathcal {A}_{n}}\) consists of particular symmetric, irreducible matrices that can be decomposed into the sum of a diagonal and a rank-one matrix. Our next goal is to prove that orthogonal Cauchy-like matrices are exactly the eigenvector matrices of matrices belonging to \({\mathcal {A}_{n}}\). One half of this claim is already known, as the following theorem shows.

Theorem 6

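Let \(A= D_{x} + \alpha vv^{T}\in \mathcal {A}_n\). The eigenvalues of A are equal to the n roots \(y_1,\ldots,y_n\) of the rational function

\[ r(t) = 1 + \alpha\sum_{i=1}^{n}\frac{v_i^2}{x_i - t}. \tag{11} \]

The corresponding normalized eigenvectors \(k_1,\ldots,k_n\) are given by

\[ k_i = \frac{(D_x - y_iI)^{-1}v}{\|(D_x - y_iI)^{-1}v\|_2}, \qquad i = 1,\ldots,n. \tag{12} \]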

The preceding theorem merely restates results of Golub [14] and of Bunch, Nielsen and Sorensen [15], who added formula (12); see also [16, Lemma 10.3]. The nonlinear equation r(t) = 0 with r(t) as in (11) is known as a secular equation and recurs in a variety of modified matrix eigenvalue problems [14, 15]. A close look at (12) shows that the eigenvector matrix of A is an orthogonal Cauchy-like matrix. Indeed, the vector \(k_i\) is the i-th column of the matrix \(K = D_v\,\text{Cauchy}(x,y)\,D_w\), where \(w_i = 1/\|(D_x - y_iI)^{-1}v\|_2\) normalizes the i-th column of K to unit 2-norm. We prove hereafter that a converse also holds, that is, every orthogonal Cauchy-like matrix is the eigenvector matrix of some matrix \(A\in {\mathcal {A}_{n}}\).
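Theorem 6 suggests a direct way to diagonalize a matrix in \(\mathcal A_n\): solve the secular equation (11) and apply (12). The sketch below does this with plain bisection, assuming α > 0 and increasing x, so that \(x_i < y_i < x_{i+1}\) and \(y_n \in (x_n, x_n + \alpha v^Tv]\) (at the right end of that last bracket, r ≥ 0). Bisection and the helper names are our choices for transparency only; dedicated secular solvers [19, 20] are faster, and (12) can lose orthogonality when an eigenvalue lies extremely close to a node.

```python
import numpy as np

def bisect_increasing(g, lo, hi, iters=200):
    """Zero of an increasing g in (lo, hi), assuming g < 0 near lo and g > 0 near hi.
    Only interior points are evaluated, so g may be singular at the endpoints."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid <= lo or mid >= hi:          # no representable point left in between
            break
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def rank_one_eig(x, v, alpha):
    """Eigenpairs of A = D_x + alpha v v^T via (11) and (12); sketch for alpha > 0."""
    r = lambda t: 1.0 + alpha * np.sum(v**2 / (x - t))     # secular function (11)
    upper = np.append(x[1:], x[-1] + alpha * (v @ v))       # right end of each bracket
    y = np.array([bisect_increasing(r, lo, hi) for lo, hi in zip(x, upper)])
    K = v[:, None] / (x[:, None] - y[None, :])              # columns (D_x - y_i I)^{-1} v
    K = K / np.linalg.norm(K, axis=0)                       # unit 2-norm columns, as in (12)
    return y, K

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
v = np.array([1.0, 0.5, 0.8, 0.3, 0.6])
alpha = 0.7
A = np.diag(x) + alpha * np.outer(v, v)
y, K = rank_one_eig(x, v, alpha)
print(np.allclose(y, np.linalg.eigvalsh(A)))
print(np.allclose(K.T @ K, np.eye(5)), np.allclose(A @ K, K * y))
```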

Theorem 7

Let \(K = D_vCD_w\) be an orthogonal Cauchy-like matrix, with C = Cauchy(x,y). Then K is the eigenvector matrix of the matrix \(A = D_x + \alpha vv^T\), where

\[ \alpha = \frac{\sum_{i=1}^{n}(y_i - x_i)}{v^Tv}. \tag{13} \]

Proof 4

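From the displacement equation \(D_xK - KD_y = vw^T\), multiplying on the right by \(K^T = K^{-1}\), we easily get

\[ D_x - KD_yK^T = vw^TK^T. \]

The left-hand side of this equation is symmetric. Hence, the rank-one term \(vw^TK^T = v(Kw)^T\) must be symmetric too, which forces Kw to be a multiple of v: we can set \(Kw = -\alpha v\) for some scalar α, and α ≠ 0 because K is nonsingular and w ≠ 0. Thus \(KD_yK^T = D_x + \alpha vv^T\). The value of α can be obtained from the identity \(\alpha vv^T = KD_yK^T - D_x\) by taking traces:

\[ \alpha\, v^Tv = \operatorname{tr}(\alpha vv^T) = \operatorname{tr}(KD_yK^T) - \operatorname{tr}(D_x) = \sum_{i=1}^{n}y_i - \sum_{i=1}^{n}x_i. \]

Hence (13) follows. We conclude that \(A = KD_yK^T\) is the spectral factorization of \(A = D_{x} + \alpha vv^{T} \in \mathcal {A}_n\). □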
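Theorem 7 and Remark 2 can be checked together. The sketch below (NumPy; helpers are ours) builds orthogonal Cauchy-like matrices via (10) with α = ±1 for one matrix in \(\mathcal K_n^1\) and one in \(\mathcal K_n^2\), computes the coefficient of (13), and verifies \(KD_yK^T = D_x + \alpha vv^T\), \(Kw = -\alpha v\) and the sign rule of Remark 2. Note that this α is the coefficient of the rank-one term, not the scaling constant of (10).

```python
import numpy as np

def cauchy(x, y):
    return 1.0 / (x[:, None] - y[None, :])

def ab_vectors(x, y):
    """a_i = -p(y_i)/q'(y_i), b_i = q(x_i)/p'(x_i), see (7)-(8)."""
    n = len(x)
    p_y = np.prod(y[:, None] - x[None, :], axis=1)
    q_x = np.prod(x[:, None] - y[None, :], axis=1)
    dq_y = np.array([np.prod(np.delete(y[i] - y, i)) for i in range(n)])
    dp_x = np.array([np.prod(np.delete(x[i] - x, i)) for i in range(n)])
    return -p_y / dq_y, q_x / dp_x

x = np.array([0.0, 1.0, 2.5, 4.0])
cases = {
    "K_n^1": np.array([0.4, 1.6, 3.1, 5.0]),     # x_1 < y_1 < ... < x_n < y_n
    "K_n^2": np.array([-1.0, 0.6, 2.0, 3.3]),    # y_1 < x_1 < ... < y_n < x_n
}
for name, y in cases.items():
    a, b = ab_vectors(x, y)
    s = np.sign(b[0])                            # common sign of a and b (Theorem 4)
    v, w = np.sqrt(s * b), np.sqrt(s * a)        # (10) with scaling constant s = +-1
    K = np.diag(v) @ cauchy(x, y) @ np.diag(w)
    alpha = np.sum(y - x) / (v @ v)              # formula (13)
    A = np.diag(x) + alpha * np.outer(v, v)
    print(name, np.allclose(K @ np.diag(y) @ K.T, A),
          np.allclose(K @ w, -alpha * v), alpha > 0)
```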

Remark 2

Theorem 7 proves that the orthogonal matrix \(K = D_vCD_w\) with \(C = \text {Cauchy}(x,y) \in \mathcal {K}_n\) diagonalizes the matrix \(A = D_x + \alpha vv^T\). In view of the splitting \({\mathcal {K}_{n}} = {\mathcal {K}_{n}}^{1} \cup {\mathcal {K}_{n}}^{2}\) shown in Remark 1, it is worth pointing out that the sign of α determines whether C belongs to \(\mathcal {K}_n^{1}\) or \(\mathcal {K}_n^{2}\). Indeed, from (13) we have \(\text {sign}(\alpha ) = \text {sign}\big ({\sum }_{i=1}^{n} (y_{i} - x_{i})\big )\). Owing to the interlacing conditions in Theorem 4, we conclude that \(C\in \mathcal {K}_n^{1} \Leftrightarrow \alpha > 0\).

4.2 An inverse problem for orthogonal rational functions

The QR factorization of Cauchy-like matrices can be performed in \(\mathcal {O}(n^{2})\) arithmetic operations by taking advantage of the displacement structure, and allows one to efficiently orthogonalize a given set of rational functions with respect to a discrete inner product [17, 18]. In fact, the numerical computation of orthogonal rational functions with prescribed poles is an interesting problem related to the numerical solution of inverse eigenvalue problems with quasiseparable matrices and of secular equations, see Chapter 14 of [16]. In this section, we propose a different approach to the construction of orthogonal rational functions, considering the poles as variables. More precisely, we want to solve the following problem.

Problem 1

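Given a discrete inner product

\[ \langle \varphi,\psi\rangle = \sum_{k=1}^{n}\omega_k^2\,\varphi(x_k)\,\psi(x_k), \]

with \(\omega_k \neq 0\) and pairwise distinct real nodes \(x_1 < \cdots < x_n\), construct rational functions \(\varphi_j(t) = 1/(t - y_j)\) for j = 1,…,n, with pairwise distinct poles \(y_{1},\ldots ,y_{n}\in \mathbb {R}\) such that the functions \(\varphi_1(t),\ldots,\varphi_n(t)\) are mutually orthogonal, that is, \(\langle\varphi_i,\varphi_j\rangle = 0\) for i ≠ j.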

Let \(\omega = (\omega _{1},\ldots ,\omega _{n})^{T}\in \mathbb {R}^{n}_{0}\), C = Cauchy(x,y) and consider the Cauchy-like matrix \(K = D_\omega C\), i.e.,

\[ K_{ij} = \frac{\omega_i}{x_i - y_j}, \qquad i,j = 1,\ldots,n. \tag{14} \]

It is readily seen that the inner product \(\langle\varphi_i,\varphi_j\rangle\) coincides with the (i,j)-entry of the matrix \(K^TK\). Hence, solving Problem 1 amounts to constructing the matrix K so that its columns are orthogonal, given the coefficients \(\omega_i\) and \(x_i\). In what follows, we present a complete description of the set of solutions to Problem 1 that is also amenable to numerical methods for computing the required poles \(y_1,\ldots,y_n\). Introduce the rational function

\[ f(t) = \sum_{i=1}^{n}\frac{\omega_i^2}{x_i - t}. \tag{15} \]

Note that this function depends only on the data \(x,\omega \in \mathbb {R}^{n}\) of Problem 1. In particular, its poles are the nodes of the discrete inner product and not the poles of the sought functions \(\varphi_1(t),\ldots,\varphi_n(t)\). As the following result shows, the latter are the solutions of the secular equation f(t) = α for some α ≠ 0.

Theorem 8

If y1,…,yn are the poles of a solution to Problem 1 then there exists a scalar α≠ 0 such that f(yi) = α, i = 1,…,n. Conversely, let y1,…,yn be the solutions of the equation f(t) = α for some α≠ 0. Then y1,…,yn are the poles of a solution to Problem 1.

Proof 5

In the above notation, the matrix \(K = D_\omega C\) in (14) has orthogonal columns if and only if there exists a nonsingular diagonal matrix Δ such that KΔ has orthonormal columns. From Corollary 5 we derive that the matrix K has orthogonal columns if and only if \({\omega _{i}^{2}} = \alpha b_{i}\) for some scalar α ≠ 0, where \(b = (b_1,\ldots,b_n)^T\) is the solution of the linear system \(C^Tb = \mathbf 1\). Equivalently, \(C^{T}D_{\omega }^{2}\mathbf {1} = \alpha C^{T} b = \alpha \mathbf {1}\), hence

\[ \sum_{i=1}^{n}\frac{\omega_i^2}{x_i - y_j} = f(y_j) = \alpha, \qquad j = 1,\ldots,n. \]

This proves that the numbers \(y_1,\ldots,y_n\) solve Problem 1 if and only if they verify the equation \(f(y_i) = \alpha\) for some α ≠ 0.

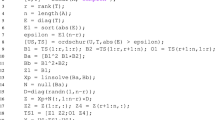

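To prove that, for any α ≠ 0, this equation admits exactly n real solutions, it suffices to note that f(t) is strictly increasing in every open interval contained in its domain, since \(f'(t) = \sum_{i}\omega_i^2/(x_i - t)^2 > 0\), and, for every i = 1,…,n, we have \(\lim_{t\to x_i^-}f(t) = +\infty\) and \(\lim_{t\to x_i^+}f(t) = -\infty\). Furthermore, \(\lim _{t\to \pm \infty } f(t) = 0\). Hence each of the n − 1 intervals \((x_i, x_{i+1})\) contains exactly one solution of f(t) = α, and the remaining solution lies in \((-\infty, x_1)\) if α > 0 and in \((x_n, +\infty)\) if α < 0. Thus, for any fixed nonzero scalar α there exist distinct numbers \(y_1,\ldots,y_n\) such that \(f(y_i) = \alpha\). Figure 1 illustrates a scenario where α > 0 (left panel) or α < 0 (right panel). In particular, if α < 0 then the numbers \(x_i\) and \(y_i\) can be reordered so that

\[ x_1 < y_1 < x_2 < y_2 < \cdots < x_n < y_n, \]

while for α > 0 we obtain the other inequalities in the claim of Theorem 4. □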

Fig. 1 Solution of the secular equation f(t) = α with \(f(t) = {\sum }_{i=1}^{6} 1/(\cos (i\pi /6)-t)\). Black line: graph of the function y = f(t). Diamonds indicate the position of the poles of f(t). Circles mark the intersections of the graph of y = f(t) with the line y = α. Left panel: α = 10. Right panel: α = −10
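The setting of Fig. 1 can be reproduced with a few lines of code. The sketch below (NumPy; the bisection-based `secular_poles` helper is ours, not the paper's method) solves f(t) = α for the nodes \(\cos(i\pi/6)\) and unit weights, using the brackets from the proof of Theorem 8: one root in each \((x_i, x_{i+1})\), plus one root in \([x_1 - \omega^T\omega/\alpha, x_1)\) when α > 0 or in \((x_n, x_n + \omega^T\omega/|\alpha|]\) when α < 0. It then checks that the resulting functions \(\varphi_j\) are mutually orthogonal.

```python
import numpy as np

def bisect_increasing(g, lo, hi, iters=200):
    """Zero of an increasing g in (lo, hi); endpoints are never evaluated."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid <= lo or mid >= hi:
            break
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def secular_poles(x, omega, alpha):
    """Solve f(t) = alpha with f as in (15); x sorted increasingly, alpha != 0."""
    f = lambda t: np.sum(omega**2 / (x - t))
    g = lambda t: f(t) - alpha                   # increasing on each interval of its domain
    s = omega @ omega
    brackets = list(zip(x[:-1], x[1:]))          # one root in each (x_i, x_{i+1})
    if alpha > 0:
        brackets.insert(0, (x[0] - s / alpha, x[0]))    # f(x_1 - s/alpha) <= alpha
    else:
        brackets.append((x[-1], x[-1] - s / alpha))     # f(x_n + s/|alpha|) >= alpha
    return np.array([bisect_increasing(g, lo, hi) for lo, hi in brackets])

x = np.sort(np.cos(np.arange(1, 7) * np.pi / 6))   # nodes of Fig. 1
omega = np.ones(6)
for alpha in (10.0, -10.0):
    y = secular_poles(x, omega, alpha)
    K = omega[:, None] / (x[:, None] - y[None, :])  # matrix (14)
    G = K.T @ K                                     # G_ij = <phi_i, phi_j>
    d = np.sqrt(np.diag(G))
    print(alpha, np.round(y, 4), np.allclose(G / np.outer(d, d), np.eye(6)))
```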

The solutions of the secular equation f(t) = α with α ≠ 0 and f(t) as in (15) generally have no closed form. However, due to the relevance of the secular equation in numerical linear algebra, a wealth of efficient and accurate numerical methods are available to solve it, see, e.g., [19, 20]. In particular, it is worth noting that the matrices in \(\mathcal {A}_n\) also belong to the wider class of quasiseparable matrices, for which very efficient methods of computing eigenvalues are available, see [16]. The equivalence of the eigenproblem for matrices in \({\mathcal {A}_{n}}\), the solution of the secular equation and the construction of orthogonal Cauchy-like matrices has been established in the previous sections. To complete this circle of ideas, the next result shows that solving Problem 1 is equivalent to computing the eigenvalues of a matrix in \(\mathcal {A}_n\).

Corollary 9

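Let \(y_1,\ldots,y_n\) be the set of poles of a solution to Problem 1. Then \(y_1,\ldots,y_n\) are the eigenvalues of the matrix \(A = D_x + \beta\omega\omega^T\), where

\[ \beta = \frac{\sum_{i=1}^{n}(y_i - x_i)}{\omega^T\omega}. \]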

Conversely, let \(y_1,\ldots,y_n\) be the eigenvalues of \(A = D_x + \beta\omega\omega^T\), where β ≠ 0 is arbitrary. Then \(y_1,\ldots,y_n\) are the poles of a solution to Problem 1.

Proof 6

If \(y_1,\ldots,y_n\) solve Problem 1 then the matrix K in (14) has orthogonal columns. Hence there exists a vector \(w\in \mathbb {R}^{n}_{0}\) such that \(\widehat {K} = KD_{w}\) is an orthogonal matrix. By Theorem 7, applied to \(\widehat K = D_\omega CD_w\), there exists \(A\in \mathcal {A}_n\) such that \(A = \widehat {K} D_{y} \widehat {K}^{T}\), namely, \(A = D_x + \beta\omega\omega^T\) with \(\beta = {\sum }_{i=1}^{n} (y_{i} - x_{i})/\omega ^{T}\omega \).

Conversely, let \(y_1,\ldots,y_n\) be the eigenvalues of \(A = D_x + \beta\omega\omega^T\) for some β ≠ 0. From Theorem 6 we have

\[ 1 + \beta\sum_{i=1}^{n}\frac{\omega_i^2}{x_i - y_j} = 1 + \beta f(y_j) = 0, \qquad j = 1,\ldots,n, \]

where f(t) is as in (15). We only need to apply Theorem 8 with α = −1/β to conclude that the poles \(y_1,\ldots,y_n\) solve Problem 1, and the proof is complete. □
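Corollary 9 turns Problem 1 into a symmetric eigenvalue problem. The sketch below, assuming NumPy and arbitrary illustrative weights ω chosen by us, takes the eigenvalues of \(D_x + \beta\omega\omega^T\) from `numpy.linalg.eigvalsh` as poles and checks both the orthogonality of the functions \(\varphi_j\) and the relation \(f(y_j) = -1/\beta\).

```python
import numpy as np

x = np.sort(np.cos(np.arange(1, 7) * np.pi / 6))      # nodes of the inner product
omega = np.array([1.0, 0.7, 1.3, 0.9, 1.1, 0.5])      # illustrative nonzero weights
for beta in (0.25, -2.0):
    y = np.linalg.eigvalsh(np.diag(x) + beta * np.outer(omega, omega))
    K = omega[:, None] / (x[:, None] - y[None, :])    # matrix (14)
    G = K.T @ K                                       # G_ij = <phi_i, phi_j>
    d = np.sqrt(np.diag(G))
    f_y = np.array([np.sum(omega**2 / (x - t)) for t in y])
    print(beta, np.allclose(G / np.outer(d, d), np.eye(6)), np.allclose(f_y, -1.0 / beta))
```

Any β ≠ 0 works; its sign decides on which side of the nodes the extra pole falls, as in the proof of Theorem 8.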

4.3 Characterization of \(\mathcal {K}_n\) in terms of Cauchy pairs

We borrow from [4] the following definition.

Definition 3

Let \(A,B\in \mathbb {R}^{n\times n}\) be two diagonalizable matrices. Let \(\alpha_1,\ldots,\alpha_n\) and \(\beta_1,\ldots,\beta_n\) be the eigenvalues of A and B, respectively. The pair (A,B) is a Cauchy pair if rank(A − B) = 1 and the numbers \(\alpha_i, \beta_j\) are pairwise distinct, for i,j = 1,…,n.

Actually, the original definition in the paper cited above is stated in terms of invariant subspaces rather than eigenvalues and applies to arbitrary fields. The one given here is equivalent to the real-valued case of that in [4]. The main result of that paper characterizes Cauchy matrices in terms of Cauchy pairs. More precisely, the pair (A,B) is a Cauchy pair if and only if the matrices A and B admit diagonalizations \(A = XD_\alpha X^{-1}\) and \(B = YD_\beta Y^{-1}\) such that \(X^{-1}Y = \text{Cauchy}(\alpha,\beta)\). This fact allows the author of [4] to derive a bijection between suitably defined equivalence classes of Cauchy pairs and permutationally equivalent Cauchy matrices. The goal of this section is to prove a similar characterization for matrices in \({\mathcal {K}_{n}}\).

Lemma 10

Let (A,B) be a Cauchy pair where \(A,B\in \mathbb {R}^{n\times n}\) are symmetric matrices. Let \(\alpha_1,\ldots,\alpha_n\) and \(\beta_1,\ldots,\beta_n\) be the eigenvalues of A and B, respectively. Then \(C = \text {Cauchy}(\alpha ,\beta )\in {\mathcal {K}_{n}}\). Moreover, there exist diagonalizations \(A = XD_\alpha X^{-1}\) and \(B = YD_\beta Y^{-1}\) such that \(C = X^{-1}Y\).

Proof 7

Let \(A = UD_\alpha U^T\) and \(B = VD_\beta V^T\) be spectral factorizations of the given matrices A and B, where U and V are orthogonal matrices. Since rank(A − B) = 1, there exist \(z\in \mathbb {R}^{n}\) and a scalar σ ≠ 0 such that \(A - B = \sigma zz^T\), implying that

\[ D_\alpha U^TV - U^TVD_\beta = U^T(A - B)V = \sigma\,U^Tz\,z^TV. \]

Now, let \(K = U^TV\), \(p = \sigma U^Tz\) and \(q = V^Tz\). It is immediate to see that K is an orthogonal matrix that verifies the identity \(\mathcal {D}_{\alpha ,\beta }(K) = pq^{T}\), that is, K is an orthogonal Cauchy-like matrix. By Lemma 1, that matrix admits the factorization \(K = D_vCD_w\) where C = Cauchy(α,β), v = p and w = q; moreover \(v,w\in \mathbb {R}^{n}_{0}\) because K is nonsingular. In particular, \(C\in {\mathcal {K}_{n}}\). Finally, define \(X = UD_v\) and \(Y = VD_{w}^{-1}\). Note that X and Y are invertible. Since diagonal matrices commute, we have

\[ XD_\alpha X^{-1} = UD_vD_\alpha D_v^{-1}U^T = UD_\alpha U^T = A \]

and, with similar passages, \(YD_\beta Y^{-1} = B\). Moreover,

\[ X^{-1}Y = D_v^{-1}U^TVD_w^{-1} = D_v^{-1}KD_w^{-1} = C, \]

and the proof is complete. □
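The construction in the proof of Lemma 10 can be replayed numerically. In the sketch below (NumPy; the random symmetric A, the vector z and σ = 1.5 are illustrative choices of ours), B is a rank-one modification of A, K = U^T V is formed from the eigenvector matrices returned by `numpy.linalg.eigh`, and the displacement identity, the factorization \(K = D_pCD_q\) and \(X^{-1}Y = C\) are checked.

```python
import numpy as np

rng = np.random.default_rng(2)
n, sigma = 5, 1.5
M = rng.standard_normal((n, n))
A = (M + M.T) / 2                        # random symmetric matrix
z = rng.standard_normal(n)
B = A - sigma * np.outer(z, z)           # rank(A - B) = 1, so (A, B) is a Cauchy pair generically

al, U = np.linalg.eigh(A)                # eigenvalues alpha (ascending), orthogonal U
be, V = np.linalg.eigh(B)                # eigenvalues beta (ascending), orthogonal V
K = U.T @ V                              # orthogonal Cauchy-like matrix
p, q = sigma * U.T @ z, V.T @ z
C = 1.0 / (al[:, None] - be[None, :])    # Cauchy(alpha, beta)

print(np.allclose(np.diag(al) @ K - K @ np.diag(be), np.outer(p, q)))
print(np.allclose(K, np.diag(p) @ C @ np.diag(q)))
X, Y = U @ np.diag(p), V @ np.diag(1.0 / q)
print(np.allclose(np.linalg.solve(X, Y), C))    # X^{-1} Y = Cauchy(alpha, beta)
```

Since σ > 0 here, the eigenvalues come out interlaced as \(\beta_1 < \alpha_1 < \cdots < \beta_n < \alpha_n\), in agreement with Theorem 4.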

Lemma 11

Let \(C = \text {Cauchy}(\alpha ,\beta )\in \mathcal {K}_n\). For any given symmetric matrix \(A\in \mathbb {R}^{n\times n}\) with eigenvalues α1,…,αn there exists a symmetric matrix \(B\in \mathbb {R}^{n\times n}\) with eigenvalues β1,…,βn such that (A,B) is a Cauchy pair.

Proof 8

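By assumption, there exist \(v,w\in \mathbb {R}^{n}_{0}\) such that \(K = D_vCD_w\) is orthogonal. From the displacement equation \(D_\alpha K - KD_\beta = vw^T\), multiplying on the right by \(K^T\), we get

\[ D_\alpha - KD_\beta K^T = vw^TK^T = v(Kw)^T, \]

a matrix of rank one. Thus \((D_\alpha, KD_\beta K^T)\) is a Cauchy pair of symmetric matrices. Now, consider a spectral factorization \(A = UD_\alpha U^T\) with an orthogonal matrix U. Define \(B = UKD_\beta K^TU^T\); then B is symmetric with eigenvalues \(\beta_1,\ldots,\beta_n\) and \(A - B = U(D_\alpha - KD_\beta K^T)U^T\) has rank one, so the claim follows. □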
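Lemma 11 in action: given interlaced nodes and any symmetric A with the prescribed eigenvalues, the sketch below (NumPy; the random orthogonal U comes from a QR factorization and is our illustrative choice) builds B as in the proof and checks that A − B has rank one and that B has the eigenvalues β.

```python
import numpy as np

def cauchy(x, y):
    return 1.0 / (x[:, None] - y[None, :])

def ab_vectors(x, y):
    """a_i = -p(y_i)/q'(y_i), b_i = q(x_i)/p'(x_i), see (7)-(8)."""
    n = len(x)
    p_y = np.prod(y[:, None] - x[None, :], axis=1)
    q_x = np.prod(x[:, None] - y[None, :], axis=1)
    dq_y = np.array([np.prod(np.delete(y[i] - y, i)) for i in range(n)])
    dp_x = np.array([np.prod(np.delete(x[i] - x, i)) for i in range(n)])
    return -p_y / dq_y, q_x / dp_x

al = np.array([1.0, 2.0, 3.5, 5.0])      # eigenvalues of A (nodes alpha)
be = np.array([0.3, 1.4, 2.9, 4.2])      # nodes beta, interlaced with beta_i < alpha_i
a, b = ab_vectors(al, be)                # all positive here (Theorem 4)
K = np.diag(np.sqrt(b)) @ cauchy(al, be) @ np.diag(np.sqrt(a))   # orthogonal by (10)

rng = np.random.default_rng(3)
U, _ = np.linalg.qr(rng.standard_normal((4, 4)))   # random orthogonal matrix
A = U @ np.diag(al) @ U.T                          # symmetric, eigenvalues alpha
B = U @ K @ np.diag(be) @ K.T @ U.T                # B as in the proof of Lemma 11
print(np.linalg.matrix_rank(A - B, tol=1e-10), np.allclose(np.linalg.eigvalsh(B), be))
```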

Putting the previous two lemmas together, we easily get our next result.

Corollary 12

Let C be a Cauchy matrix. We have \(C\in \mathcal {K}_n\) if and only if there is a Cauchy pair of symmetric matrices (A,B) admitting diagonalizations \(A = XD_\alpha X^{-1}\) and \(B = YD_\beta Y^{-1}\) such that \(X^{-1}Y = C\).

4.4 Related matrix algebras

Previous results show that every matrix in the set \(\mathcal {A}_n\) is diagonalized by an orthogonal Cauchy-like matrix. Equivalently, for any given orthogonal Cauchy-like matrix K there exists a diagonal matrix Λ such that \(K\Lambda K^T\) belongs to \({\mathcal {A}_{n}}\). The goal of this section is to characterize all matrices that are diagonalized by a given orthogonal Cauchy-like matrix. More precisely, for a given orthogonal matrix \(X\in \mathbb {R}^{n\times n}\) let

\[ {\mathscr{L}}(X) = \{XD_\lambda X^T : \lambda\in\mathbb{R}^n\}. \]

This set is a commutative matrix algebra of dimension n, that is, an n-dimensional vector space that is closed under matrix multiplication and whose elements commute pairwise. If X is an orthogonal Cauchy-like matrix then Theorem 7 proves that \({\mathscr{L}}(X)\) has nonempty intersection with \(\mathcal {A}_n\).

Corollary 13 below provides a complete description of \({\mathscr{L}}(X)\) when X is an orthogonal Cauchy-like matrix. A similar characterization has been carried out in [12, 13] in the case where \(X = D_vCD_w\) is an orthogonal Cauchy-like matrix such that \(v = \mathbf 1\) or, more generally, \(v = \alpha\mathbf 1\) for some α ≠ 0. That special case is of interest in the construction of matrix algebras of Loewner matrices.

Corollary 13

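Let \(K = D_vCD_w\) be an orthogonal Cauchy-like matrix where \(C = \text {Cauchy}(x,y) \in \mathcal {K}_n\) and v and w are normalized so that the constant α in (10) equals 1 (by (10), this is possible only when a, b > 0, that is, when \(C\in\mathcal K_n^2\)). Then \(A\in {\mathscr{L}}(K)\) if and only if

\[ \mathcal{D}_{x,x}(A) = D_xA - AD_x = vz^T - zv^T, \tag{16} \]

where Av = z. In this case, the eigenvalues of A are the entries of the vector \(\lambda = C^TD_vz\).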

Proof 9

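Let \(A = K\Lambda K^T\) be the spectral factorization of an arbitrary matrix \(A\in {\mathscr{L}}(K)\). Then A is a symmetric matrix such that

\[ D_xA - AD_x = (KD_y + vw^T)\Lambda K^T - K\Lambda(D_yK^T + wv^T) = vz^T - zv^T, \]

where we set \(z = K\Lambda w\); here we used \(D_xK = KD_y + vw^T\) and the fact that \(D_y\) and Λ commute. By assumption and (7),

\[ K^Tv = D_wC^TD_vv = D_wC^Tb = D_w\mathbf{1} = w, \]

since \(v_i^2 = b_i\) and \(C^Tb = \mathbf 1\). Hence \(z = K\Lambda w = K\Lambda K^Tv = Av\).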

To prove the formula for the eigenvalues, let \(\lambda = \Lambda\mathbf{1}\) be the vector containing the eigenvalues of A. By the previous arguments we have the identity \(z = K\Lambda w = KD_w\lambda\). Then \(\lambda = D_{w}^{-1} K^{T} z = C^{T}D_{v} z\), as claimed. Moreover, this identity shows that the linear map \(z\mapsto\lambda\) is invertible. Noting that the map \(\lambda\mapsto KD_\lambda K^T\) is linear and invertible, we conclude that the compound map \(z\mapsto KD_\lambda K^T\) is a vector space isomorphism between \(\mathbb {R}^{n}\) and \({\mathscr{L}}(K)\), and the proof is complete. □

The characterization provided by the corollary above allows one to recover the entries of a generic matrix \(A\in {\mathscr{L}}(K)\) from the knowledge of the vectors x, v and z. Indeed, let \(1 \le i,j \le n\) be distinct integers. The displacement formula (16) yields

\[ (x_i - x_j)A_{ij} = v_iz_j - z_iv_j. \]

Thus the off-diagonal entries of A admit the expression

\[ A_{ij} = \frac{v_iz_j - z_iv_j}{x_i - x_j}, \qquad i \neq j. \]

The diagonal entries of A cannot be retrieved from the previous formula, since the displacement operator \(\mathcal {D}_{x,x}\) is singular and its kernel consists precisely of the diagonal matrices. However, they can be determined from the additional information provided by the identity \(Av = z\). In fact, with the previous notation we have

\[ A_{ii} = \frac{1}{v_i}\Bigl(z_i - \sum_{j\neq i} A_{ij}v_j\Bigr), \qquad i = 1,\ldots,n. \]

Recall that \(v_i\neq 0\) due to the nonsingularity of \(K = D_vCD_w\). Finally, the identity \(Av = z\) suggests a way of computing the eigenvalues of A other than the one given in Corollary 13. From \(K\Lambda K^{T}v = z\) we obtain \(K^{T}z = \Lambda K^{T}v\). Hence the i-th eigenvalue of A is \(\lambda_i = (K^{T}z)_i/(K^{T}v)_i\), for \(i = 1,\ldots,n\), where the denominators \((K^{T}v)_i = w_i\) are nonzero.
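The recovery of A from x, v and z, together with both eigenvalue formulas, can be sketched in plain Python as follows. The sketch assumes strictly interlaced nodes \(y_1 < x_1 < y_2 < \cdots < y_n < x_n\) and builds K with a hypothetical helper `orthogonal_cauchy_like`. Its weights \(b_i = q(x_i)/p'(x_i)\) and \(a_j = -p(y_j)/q'(y_j)\), with monic \(p(t) = \prod_k (t - x_k)\) and \(q(t) = \prod_k (t - y_k)\), are our own choice: they satisfy \(C^{T}b = \mathbf{1}\), hence \(K^{T}v = w\) as in the proof above, but they may be written differently in (7). The nodes and eigenvalues in the example are arbitrary.

```python
import math

def orthogonal_cauchy_like(x, y):
    # Hypothetical helper. Assumes y[0] < x[0] < y[1] < ... < y[n-1] < x[n-1].
    # Residue-form weights for monic p, q with roots x, y (our assumption):
    #   b_i = q(x_i)/p'(x_i) > 0,  a_j = -p(y_j)/q'(y_j) > 0,  v = sqrt(b), w = sqrt(a).
    n = len(x)
    b = [math.prod(x[i] - t for t in y)
         / math.prod(x[i] - x[k] for k in range(n) if k != i) for i in range(n)]
    a = [-math.prod(y[j] - t for t in x)
         / math.prod(y[j] - y[k] for k in range(n) if k != j) for j in range(n)]
    v = [math.sqrt(t) for t in b]
    w = [math.sqrt(t) for t in a]
    K = [[v[i] * w[j] / (x[i] - y[j]) for j in range(n)] for i in range(n)]
    return K, v, w

def matrix_from_x_v_z(x, v, z):
    # Off-diagonal entries from D_x A - A D_x = v z^T - z v^T.
    n = len(x)
    A = [[(v[i] * z[j] - z[i] * v[j]) / (x[i] - x[j]) if i != j else 0.0
          for j in range(n)] for i in range(n)]
    # Diagonal entries from A v = z (v_i != 0 because K is nonsingular).
    for i in range(n):
        A[i][i] = (z[i] - sum(A[i][j] * v[j] for j in range(n) if j != i)) / v[i]
    return A

def eigenvalues_from_z(x, y, v, z):
    # lambda = C^T D_v z, i.e. lambda_j = sum_i v_i z_i / (x_i - y_j).
    return [sum(vi * zi / (xi - yj) for xi, vi, zi in zip(x, v, z)) for yj in y]

# Example with hypothetical interlaced nodes and prescribed eigenvalues.
x = [0.5, 1.7, 3.2, 4.1]
y = [0.0, 1.0, 2.5, 3.9]
lam = [2.0, -1.0, 0.5, 3.0]
K, v, w = orthogonal_cauchy_like(x, y)
n = len(x)
# A = K diag(lam) K^T, then z = A v.
A = [[sum(K[i][j] * lam[j] * K[k][j] for j in range(n)) for k in range(n)]
     for i in range(n)]
z = [sum(A[i][k] * v[k] for k in range(n)) for i in range(n)]

A_rec = matrix_from_x_v_z(x, v, z)
lam_rec = eigenvalues_from_z(x, y, v, z)
err_A = max(abs(A[i][k] - A_rec[i][k]) for i in range(n) for k in range(n))
err_lam = max(abs(p - q) for p, q in zip(lam, lam_rec))
print(f"max |A - A_rec| = {err_A:.1e}, max |lam - lam_rec| = {err_lam:.1e}")
```

The sketch uses plain lists to stay dependency-free; both errors should be of the order of rounding errors. With NumPy, the same steps reduce to a few vectorized expressions.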

5 A numerical example

In this section, we consider a sequence of orthogonal Cauchy-like matrices of arbitrary order n. The construction is based on properties of Chebyshev polynomials, which make the matrices easily computable.

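Let \(T_n(x)\) and \(U_n(x)\) denote the Chebyshev polynomials of degree n of the first and second kind, respectively: for \(x = \cos\theta \in [-1,1]\), \(\theta\in[0,\pi]\),

\[ T_n(x) = \cos(n\theta), \qquad U_n(x) = \frac{\sin((n+1)\theta)}{\sin\theta}, \]

where the quotient is understood as its limit at \(\theta = 0\) and \(\theta = \pi\).

For any fixed integer \(n \ge 1\), define the polynomials p(x) and q(x) in (8) as follows: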

The polynomials in (8) are monic, whereas these are not. However, our construction does not depend on p(x) and q(x) being monic. Indeed, as a consequence of (7), the products \(a_ib_j\) entering the entries of the sought orthogonal matrix are unaffected by scaling p(x) and q(x) by arbitrary nonzero constants.
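A one-line computation makes this invariance explicit. It assumes (our assumption; the precise form of (7) is not reproduced in this section) that the weights are given in residue form, \(b_i = q(x_i)/p'(x_i)\) and \(a_j = -p(y_j)/q'(y_j)\), which is the form yielding \(C^{T}b = \mathbf{1}\) when p and q are monic. Replacing p and q by \(\gamma p\) and \(\delta q\) with \(\gamma,\delta \neq 0\) gives

```latex
\tilde b_i = \frac{\delta\, q(x_i)}{\gamma\, p'(x_i)} = \frac{\delta}{\gamma}\, b_i,
\qquad
\tilde a_j = -\frac{\gamma\, p(y_j)}{\delta\, q'(y_j)} = \frac{\gamma}{\delta}\, a_j,
\qquad
\tilde a_j\, \tilde b_i = a_j\, b_i .
```

Hence the entries \(\sqrt{b_ia_j}/(x_i - y_j)\) of the orthogonal matrix do not change, although the normalization constant of \(C^{T}b\) does.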

Consider the roots of p(x) and q(x) as nodes of a Cauchy matrix. Numbering them in ascending order, we have

respectively, for \(i = 1,\ldots,n\). Hence \(-1 = y_1 < x_1 < y_2 < x_2 < \cdots < y_n < x_n < 1\). Furthermore,

Using known formulas for the differentiation of Chebyshev polynomials, after some simplification we get

and

By (7), the coefficients of the vectors a and b are

Note that \(a_i > 0\) and \(b_i > 0\) for \(i = 1,\ldots,n\), as expected from Theorem 4. The resulting construction of orthogonal Cauchy-like matrices is summarized in the following statement.

Corollary 14

For any fixed integer \(n \ge 1\) and \(i = 1,\ldots,n\), let \(x_i, y_i, a_i, b_i\) be as in (17) and (18). Moreover, let \(v_{i} = \sqrt {b_{i}}\) and \(w_{i} = \sqrt {a_{i}}\) for \(i = 1,\ldots,n\).

- The Cauchy-like matrix \(K = (v_iw_j/(x_i - y_j))\) is orthogonal.

- The matrix \(A = D_{x} - vv^{T} \in \mathcal {A}_n\) admits the spectral factorization \(A = KD_{y}K^{T}\). Moreover, the matrix \(B = D_{y} + ww^{T} \in \mathcal {A}_n\) admits the spectral factorization \(B = K^{T}D_{x}K\).

Proof 10

The first claim follows from (6) and Corollary 5. The first part of the second claim is a consequence of Theorem 7. Indeed, we have \(\sum_i (y_i - x_i) = -1\) and \(v^{T}v = \sum_i b_i = 1\), thus \(\alpha = -1\) in (13). The second part can be deduced from the first one by noting that \(B = K^{T}(A + vv^{T})K = D_y + K^{T}vv^{T}K\) and \(K^{T}v = D_wC^{T}D_vv = D_wC^{T}b = D_w\mathbf{1} = w\). □
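A numerical check of Corollary 14 can be sketched as follows. Because the explicit formulas (17) and (18) are not reproduced here, the sketch ASSUMES \(p(x) = T_n(x)\) and \(q(x) = (1 + x)U_{n-1}(x)\), i.e. \(x_i = -\cos((2i-1)\pi/(2n))\) and \(y_i = -\cos((i-1)\pi/n)\). This choice agrees with the ordering \(-1 = y_1 < x_1 < \cdots < x_n < 1\) and with \(\sum_i (y_i - x_i) = -1\) stated above, but it is our assumption, not a quotation of (17). The weights are computed in the residue form \(b_i = q(x_i)/p'(x_i)\), \(a_j = -p(y_j)/q'(y_j)\) for monic p, q (also an assumption about (7)). The code checks the orthogonality of K, \(\sum_i b_i = 1\), and the two spectral factorizations.

```python
import math

def chebyshev_nodes(n):
    # ASSUMPTION (not quoted from (17)): x = zeros of T_n, y = {-1} and zeros of U_{n-1},
    # both listed in ascending order.
    x = [-math.cos((2 * i - 1) * math.pi / (2 * n)) for i in range(1, n + 1)]
    y = [-math.cos((i - 1) * math.pi / n) for i in range(1, n + 1)]
    return x, y

def residue_weights(x, y):
    # b_i = q(x_i)/p'(x_i), a_j = -p(y_j)/q'(y_j) for monic p, q with roots x, y.
    n = len(x)
    b = [math.prod(x[i] - t for t in y)
         / math.prod(x[i] - x[k] for k in range(n) if k != i) for i in range(n)]
    a = [-math.prod(y[j] - t for t in x)
         / math.prod(y[j] - y[k] for k in range(n) if k != j) for j in range(n)]
    return a, b

def matmul(P, Q):
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]

def transpose(P):
    return [list(col) for col in zip(*P)]

def diag(d):
    return [[d[i] if i == j else 0.0 for j in range(len(d))] for i in range(len(d))]

def max_abs_diff(P, Q):
    return max(abs(p - q) for rp, rq in zip(P, Q) for p, q in zip(rp, rq))

n = 8
x, y = chebyshev_nodes(n)
a, b = residue_weights(x, y)
v = [math.sqrt(t) for t in b]
w = [math.sqrt(t) for t in a]
K = [[v[i] * w[j] / (x[i] - y[j]) for j in range(n)] for i in range(n)]
Kt = transpose(K)

err_orth = max_abs_diff(matmul(Kt, K), diag([1.0] * n))
# A = D_x - v v^T should coincide with K D_y K^T.
A = [[(x[i] if i == j else 0.0) - v[i] * v[j] for j in range(n)] for i in range(n)]
err_A = max_abs_diff(A, matmul(matmul(K, diag(y)), Kt))
# B = D_y + w w^T should coincide with K^T D_x K.
B = [[(y[i] if i == j else 0.0) + w[i] * w[j] for j in range(n)] for i in range(n)]
err_B = max_abs_diff(B, matmul(matmul(Kt, diag(x)), K))
print(f"sum(b) = {sum(b):.15f}")
print(f"orthogonality {err_orth:.1e}, A {err_A:.1e}, B {err_B:.1e}")
```

All three errors should be of the order of rounding errors for moderate n; the product formulas for the weights are convenient for small examples but are not meant as the paper's evaluation scheme.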

The denominators \(x_i - y_j\) appearing in the entries of the matrix Cauchy(x,y) can be computed using the right-hand side of the formula

which does not involve the subtraction of nearly equal quantities and thus avoids numerical cancellation. Analogously, the formulas for \(a_i\) and \(b_i\) in (18) can be rewritten as follows:

These alternative formulas significantly improve the accuracy of the computation in floating-point arithmetic. Figure 2 shows the growth of \(\|K^{T}K - I\|\), where K is the orthogonal Cauchy-like matrix defined in Corollary 14, as a function of \(n = 2^{k}\) for \(k = 2,\ldots,12\). This quantity measures the loss of orthogonality of the computed K due to finite precision. The matrix norms are the \(\infty \)-norm (left panel) and the Frobenius norm (right panel). Results obtained with the formulas in Corollary 14 are plotted as blue diamonds, while red circles represent results obtained with the subtraction-free formulas (19) and (20). Dotted lines plot the functions \(y = nu\) and \(y = n^{2}u\), where \(u \approx 2.2\cdot 10^{-16}\) is the machine precision; they are included as visual guides. Computations were performed in standard floating-point arithmetic using MATLAB© R2021a on a computer equipped with a 1.4 GHz dual-core Intel i5 processor and 8 GB of RAM. The similarity of the two panels suggests that the rounding errors in forming \(K^{T}K\) are strongly localized. Indeed, closer inspection shows that the errors accumulate mainly in the computed diagonal entries of the matrix product. In any case, the errors arising from the modified formulas (19) and (20) are consistent with a relative perturbation of order u in the entries of K.

Fig. 2 Orthogonality loss \(\|K^{T}K - I\|\) due to finite precision computation. Orthogonal matrices \(K\in \mathbb {R}^{n\times n}\) with \(n = 2, 4, 8, \ldots, 4096\) are computed via the original formulas in Corollary 14 (blue diamonds) or via (19)–(20) (red circles). Left panel: \(\| K^{T}K - I \|_{\infty }\). Right panel: \(\|K^{T}K - I\|_F\).
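The idea behind the subtraction-free evaluation can be explored with a small sketch. It uses ASSUMED Chebyshev nodes \(x_i = -\cos((2i-1)\pi/(2n))\), \(y_j = -\cos((j-1)\pi/n)\), for which (by our own derivation) \(b_i = (1 + x_i)/n\), \(a_1 = 1/n\) and \(a_j = (1 - y_j)/n\) for \(j \ge 2\), and it replaces every difference by a product via the trigonometric identities \(x_i - y_j = 2\sin\frac{(2i+2j-3)\pi}{4n}\sin\frac{(2i-2j+1)\pi}{4n}\), \(1 + x_i = 2\sin^2\frac{(2i-1)\pi}{4n}\) and \(1 - y_j = 2\cos^2\frac{(j-1)\pi}{2n}\). These are not necessarily the formulas (19) and (20); the sketch only illustrates the technique and prints \(\|K^{T}K - I\|_\infty\) for both variants, without any claim about the exact values.

```python
import math

def k_naive(n):
    # ASSUMED nodes (1-based): x_i = -cos((2i-1)pi/(2n)), y_j = -cos((j-1)pi/n).
    x = [-math.cos((2 * i + 1) * math.pi / (2 * n)) for i in range(n)]
    y = [-math.cos(j * math.pi / n) for j in range(n)]
    b = [(1 + xi) / n for xi in x]                     # cancellation near x = -1
    a = [1 / n] + [(1 - yj) / n for yj in y[1:]]       # cancellation near y = 1
    return [[math.sqrt(b[i] * a[j]) / (x[i] - y[j])    # cancellation when x_i is close to y_j
             for j in range(n)] for i in range(n)]

def k_subtraction_free(n):
    # Same matrix in exact arithmetic; each difference is replaced by a product.
    h = math.pi / (4 * n)
    b = [2 * math.sin((2 * i + 1) * h) ** 2 / n for i in range(n)]          # (1 + x_i)/n
    a = [1 / n] + [2 * math.cos(2 * j * h) ** 2 / n for j in range(1, n)]   # (1 - y_j)/n
    return [[math.sqrt(b[i] * a[j])
             / (2 * math.sin((2 * i + 2 * j + 1) * h) * math.sin((2 * i - 2 * j + 1) * h))
             for j in range(n)] for i in range(n)]

def orthogonality_loss_inf(K):
    # ||K^T K - I||_inf, i.e. the maximum absolute row sum of K^T K - I.
    n = len(K)
    G = [[sum(K[i][j] * K[i][k] for i in range(n)) for k in range(n)] for j in range(n)]
    return max(sum(abs(G[j][k] - (j == k)) for k in range(n)) for j in range(n))

for k in range(2, 7):
    n = 2 ** k
    print(n, orthogonality_loss_inf(k_naive(n)), orthogonality_loss_inf(k_subtraction_free(n)))
```

The pure-Python Gram product costs \(\mathcal{O}(n^3)\) operations, so the loop stops at n = 64; how visible the difference between the two variants is depends on n and on the platform.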

6 Discussion

In this work, we have provided a complete characterization of orthogonal matrices with a Cauchy-like structure. Moreover, we have highlighted their relationships with the solution of secular equations, the diagonalization of symmetric quasiseparable matrices, and the computation of orthogonal rational functions with free poles. Furthermore, we have found all matrices that are diagonalized by matrices of that type. These results were obtained by making extensive use of the displacement structure of the matrices involved.

Interest in orthogonal Cauchy-like matrices originally stemmed from their appearance in the design of special filters for signal processing [3]. However, our results may be of more than theoretical interest. In fact, linear systems with various displacement-structured matrices can be solved efficiently by algorithms built around so-called fast orthogonal transforms, i.e., orthogonal matrices whose matrix-vector products can be computed with \(\mathcal {O}(n\log n)\) arithmetic operations [2, 5, 21]. Using the notation introduced in Section 2, the basic technique is as follows. Let \(A\in \mathcal {S}_{M,N}^{r}\) and let U, V be invertible matrices. Then \(UAV \in \mathcal {S}_{P,Q}^{r}\), where \(P = UMU^{-1}\) and \(Q = V^{-1}NV\). In this way, different displacement-structured matrix spaces can be transformed into one another. This technique underlies practical algorithms for linear algebra with displacement-structured matrices. Indeed, the matrices U and V above are often related to Fourier-type trigonometric transforms, which are fast, numerically stable, and amenable to effective parallelization. Since Cauchy-like matrices are diagonally scaled Cauchy matrices, matrix-vector products with them can also be performed in nearly linear arithmetic complexity, e.g., with \(\mathcal {O}(n\log^{2} n)\) operations, see, e.g., [21, 22]. Therefore, fast transforms based on orthogonal Cauchy-like matrices could be considered in the design of new structured linear solvers that map matrices from one displacement structure to another. Admittedly, the numerical stability of this kind of computation is a controversial issue. While some authors report that fast algorithms for matrix-vector multiplication with Cauchy-like matrices perform satisfactorily in practice [21], known error analyses are not always supportive.
On the other hand, a possible loss of numerical stability in fast linear solvers can be compensated for by iterative refinement, as suggested in, e.g., [7]. In addition, knowledge of the matrix algebras simultaneously diagonalized by orthogonal Cauchy-like matrices could be exploited in structured eigensolvers.
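The rank invariance behind this technique can be verified in one line. Assuming the convention \(\mathcal {D}_{M,N}(X) = MX - XN\) (the argument is identical with the opposite sign convention), one has

```latex
\mathcal{D}_{P,Q}(UAV) = (UMU^{-1})(UAV) - (UAV)(V^{-1}NV)
                       = U\,(MA - AN)\,V
                       = U\,\mathcal{D}_{M,N}(A)\,V ,
```

so that \(\operatorname{rank}\mathcal {D}_{P,Q}(UAV) = \operatorname{rank}\mathcal {D}_{M,N}(A)\) because U and V are invertible.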

Finally, we briefly discuss the possible construction of orthogonal Cauchy-like matrices with displacement rank greater than 1, as well as orthogonal matrices with other displacement structures, which leaves room for further work. For this purpose, it is helpful to recall further properties of the displacement operators \(\mathcal {D}_{M,N}\) and of the associated rank-structured spaces \(\mathcal {S}_{M,N}^{r}\) introduced in Section 2. A matrix having displacement rank r > 1 can be written as the sum of (at most) r matrices having displacement rank 1. However, this decomposition is unsuitable for the numerical treatment of orthogonal matrices. On the other hand, matrices of higher displacement rank can be factored into low displacement-rank factors by considering appropriate displacement operators. Indeed, let X and Y be two displacement-structured matrices, \(X\in \mathcal {S}_{M,N}^{p}\) and \(Y\in \mathcal {S}_{N,P}^{q}\). It is not difficult to verify that \(\mathcal {D}_{M,P}(XY) = \mathcal {D}_{M,N}(X)Y + X\mathcal {D}_{N,P}(Y)\). Hence \(XY\in \mathcal {S}_{M,P}^{p+q}\). An immediate consequence is that the product of k orthogonal Cauchy-like matrices, in which the column nodes of each factor coincide with the row nodes of the next one, is an orthogonal matrix with displacement rank at most k. Accordingly, the orthogonal Cauchy-like matrices discussed in this paper can be used to build orthogonal matrices of higher displacement rank in factored form. Conversion to other displacement-structured spaces can be carried out as described above.
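The product rule and its consequence for products of orthogonal Cauchy-like matrices can be checked numerically. The sketch assumes \(\mathcal {D}_{M,N}(X) = MX - XN\) and builds two orthogonal Cauchy-like factors, \(K_1\) with nodes (x, y) and \(K_2\) with nodes (y, z), sharing the intermediate node vector y. The hypothetical helper `orthogonal_cauchy_like` uses residue-form weights \(b_i = q(x_i)/p'(x_i)\), \(a_j = -p(y_j)/q'(y_j)\) for monic p, q (our choice), and the interlaced nodes are arbitrary. The code verifies that \(D_x(K_1K_2) - (K_1K_2)D_z = v_1w_1^{T}K_2 + K_1v_2w_2^{T}\), a sum of two rank-one terms, while \(K_1K_2\) remains orthogonal.

```python
import math

def orthogonal_cauchy_like(x, y):
    # Hypothetical helper; assumes y[0] < x[0] < y[1] < x[1] < ... < y[-1] < x[-1].
    # Residue-form weights for monic p, q with roots x, y:
    #   b_i = q(x_i)/p'(x_i), a_j = -p(y_j)/q'(y_j);  v = sqrt(b), w = sqrt(a).
    n = len(x)
    b = [math.prod(x[i] - t for t in y)
         / math.prod(x[i] - x[k] for k in range(n) if k != i) for i in range(n)]
    a = [-math.prod(y[j] - t for t in x)
         / math.prod(y[j] - y[k] for k in range(n) if k != j) for j in range(n)]
    v, w = [math.sqrt(t) for t in b], [math.sqrt(t) for t in a]
    K = [[v[i] * w[j] / (x[i] - y[j]) for j in range(n)] for i in range(n)]
    return K, v, w

def matmul(P, Q):
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]

n = 5
z = [3.0 * i for i in range(n)]        # hypothetical nodes with z_i < y_i < x_i < z_{i+1}
y = [3.0 * i + 1 for i in range(n)]
x = [3.0 * i + 2 for i in range(n)]
K1, v1, w1 = orthogonal_cauchy_like(x, y)   # D_x K1 - K1 D_y = v1 w1^T
K2, v2, w2 = orthogonal_cauchy_like(y, z)   # D_y K2 - K2 D_z = v2 w2^T
X = matmul(K1, K2)

# Left-hand side D_x X - X D_z, entrywise (x_i - z_j) X_ij.
lhs = [[(x[i] - z[j]) * X[i][j] for j in range(n)] for i in range(n)]
# Product rule: (v1 w1^T) K2 + K1 (v2 w2^T), a sum of two rank-one matrices.
w1K2 = [sum(w1[k] * K2[k][j] for k in range(n)) for j in range(n)]   # row vector w1^T K2
K1v2 = [sum(K1[i][k] * v2[k] for k in range(n)) for i in range(n)]   # column vector K1 v2
rhs = [[v1[i] * w1K2[j] + K1v2[i] * w2[j] for j in range(n)] for i in range(n)]

gen_err = max(abs(lhs[i][j] - rhs[i][j]) for i in range(n) for j in range(n))
XtX = matmul([list(col) for col in zip(*X)], X)
orth_err = max(abs(XtX[i][j] - (i == j)) for i in range(n) for j in range(n))
print(f"generator error {gen_err:.1e}, orthogonality error {orth_err:.1e}")
```

Note that the factors must share the node vector y: with unrelated intermediate nodes, the product rule does not apply with a common middle operator.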

References

[1] Olshevsky, V., Pan, V.Y.: Polynomial and rational evaluation and interpolation (with structured matrices). In: Automata, Languages and Programming (Prague, 1999). Lecture Notes in Comput. Sci., vol. 1644, pp. 585–594. Springer (1999)

[2] Bini, D., Pan, V.Y.: Polynomial and Matrix Computations. Vol. 1. Progress in Theoretical Computer Science. Birkhäuser, Boston, MA (1994)

[3] Schlecht, S.J.: Allpass feedback delay networks. IEEE Trans. Signal Process. 69, 1028–1038 (2021)

[4] Lynch, A.G.: Cauchy pairs and Cauchy matrices. Linear Algebra Appl. 471, 320–345 (2015)

[5] Kailath, T., Sayed, A.H.: Displacement structure: Theory and applications. SIAM Rev. 37, 297–386 (1995)

[6] Gohberg, I., Kailath, T., Olshevsky, V.: Fast Gaussian elimination with partial pivoting for matrices with displacement structure. Math. Comp. 64(212), 1557–1576 (1995)

[7] Aricò, A., Rodriguez, G.: A fast solver for linear systems with displacement structure. Numer. Algorithms 55(4), 529–556 (2010)

[8] Rodriguez, G.: Fast solution of Toeplitz- and Cauchy-like least-squares problems. SIAM J. Matrix Anal. Appl. 28(3), 724–748 (2006)

[9] Fiedler, M., Hall, F.J.: G-matrices. Linear Algebra Appl. 436(3), 731–741 (2012)

[10] Schechter, S.: On the inversion of certain matrices. Math. Tables Aids Comput. 13, 73–77 (1959)

[11] Gow, R.: Cauchy’s matrix, the Vandermonde matrix and polynomial interpolation. Irish Math. Soc. Bull. 28, 45–52 (1992)

[12] Bevilacqua, R., Bozzo, E.: On algebras of symmetric Loewner matrices. Linear Algebra Appl. 248, 241–251 (1996)

[13] Fiedler, M., Vavřín, Z.: A subclass of symmetric Loewner matrices. Linear Algebra Appl. 170, 47–51 (1992)

[14] Golub, G.H.: Some modified matrix eigenvalue problems. SIAM Rev. 15, 318–334 (1973)

[15] Bunch, J.R., Nielsen, C.P., Sorensen, D.C.: Rank-one modification of the symmetric eigenproblem. Numer. Math. 31(1), 31–48 (1978)

[16] Vandebril, R., Van Barel, M., Mastronardi, N.: Matrix Computations and Semiseparable Matrices, vol. II. Johns Hopkins University Press, Baltimore, MD (2008)

[17] Fasino, D., Gemignani, L.: A Lanczos-type algorithm for the QR factorization of regular Cauchy matrices. Numer. Linear Algebra Appl. 9(4), 305–319 (2002)

[18] Fasino, D., Gemignani, L.: A Lanczos-type algorithm for the QR factorization of Cauchy-like matrices. In: Fast Algorithms for Structured Matrices: Theory and Applications (South Hadley, MA, 2001). Contemp. Math., vol. 323, pp. 91–104. Amer. Math. Soc., Providence, RI (2003)

[19] Melman, A.: Numerical solution of a secular equation. Numer. Math. 69(4), 483–493 (1995)

[20] Melman, A.: A numerical comparison of methods for solving secular equations. J. Comput. Appl. Math. 86(1), 237–249 (1997)

[21] Pan, V.Y., Tabanjeh, M.A., Chen, Z.Q., Providence, S., Sadikou, A.: Transformations of Cauchy matrices, Trummer’s problem and a Cauchy-like linear solver. In: Solving Irregularly Structured Problems in Parallel (Berkeley, CA, 1998). Lecture Notes in Comput. Sci., vol. 1457, pp. 274–284. Springer (1998)

[22] Gerasoulis, A., Grigoriadis, M.D., Sun, L.: A fast algorithm for Trummer’s problem. SIAM J. Sci. Statist. Comput. 8(1), 135–138 (1987)

Funding

Open access funding provided by Università degli Studi di Udine within the CRUI-CARE Agreement. Partial financial support was received from GNCS-INdAM, Italy.


Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fasino, D. Orthogonal Cauchy-like matrices. Numer Algor 92, 619–637 (2023). https://doi.org/10.1007/s11075-022-01391-y
