Abstract

The prediction of the temporal dynamics of chaotic systems is challenging because infinitesimal perturbations grow exponentially. The analysis of the dynamics of infinitesimal perturbations is the subject of stability analysis. In stability analysis, we linearize the equations of the dynamical system around a reference point and compute the properties of the tangent space (i.e. the Jacobian). The main goal of this paper is to propose a method that infers the Jacobian, and thus the stability properties, from observables (data). First, we propose the echo state network (ESN) with recycle validation as a tool to accurately infer the chaotic dynamics from data. Second, we mathematically derive the Jacobian of the echo state network, which provides the evolution of infinitesimal perturbations. Third, we analyse the stability properties of the Jacobian inferred from the ESN and compare them with the benchmark results obtained by linearizing the equations. The ESN correctly infers the nonlinear solution and its tangent space with negligible numerical errors. In detail, we compute from data only (i) the long-term statistics of the chaotic state; (ii) the covariant Lyapunov vectors; (iii) the Lyapunov spectrum; (iv) the finite-time Lyapunov exponents; and (v) the angles between the stable, neutral, and unstable splittings of the tangent space (the degree of hyperbolicity of the attractor). This work opens up new opportunities for the computation of stability properties of nonlinear systems from data, instead of equations.


1 Introduction

Chaotic behaviour has been observed and extensively studied in diverse scientific fields, initially in meteorology [1] and later in physics [2, 3], chemistry, biology and engineering [4] to name a few. Chaos appears from deterministic nonlinear equations in the form of sensitivity to initial conditions, aperiodic behaviour, and short predictability. A successful mathematical tool for the analysis of chaos is provided by stability analysis. By applying infinitesimal perturbations to a system’s trajectory, we can classify its stability along different directions and compute the properties of its linear tangent space.

Stability analysis relies on the linearization of the dynamical equations, which requires the Jacobian of the system. The key quantities that characterize chaotic dynamics, and many other related physical properties, such as dynamical entropies and fractal dimensions, are the Lyapunov Exponents (LEs) [5, 6], which are the eigenvalues of the Oseledets matrix [7]. There are several numerical methods to extract the LEs based on the Gram–Schmidt orthogonalization procedure [6, 8, 9]. The relevant eigenvectors are the corresponding Lyapunov vectors, which constitute a coordinate-dependent orthogonal basis of the linear tangent space. In contrast, an intrinsic and norm-independent basis, which is also time-invariant and covariant with the dynamics, is given by the covariant Lyapunov vectors (CLVs). Crucially, CLVs are able to provide information on the local structure of chaotic attractors [10]. This viewpoint allows the study of an attractor’s topology with the occurrence of critical transitions [11,12,13,14], paving the way for CLVs to be considered as precursors to such phenomena.

The previous exposition is traditionally related to model-based approaches, as it relies on the knowledge of a system’s dynamical equations. However, studying the stability properties of observed data, where equations are not necessarily known, is hard; there are few approaches, e.g. [15, 16], relying on the delayed coordinates attractor reconstruction by Takens [17]. The recent breakthrough of data-driven (model-free) approaches raises the question: Can we use the rich knowledge of dynamical systems theory for model-free approaches? Indeed, although still at an early stage, the use of advanced machine learning (ML) techniques for complex systems has shown promising potential in applications ranging from weather and climate prediction and classification [18,19,20] to fluid flow prediction and optimization [21,22,23], among others. The overarching goal of this work is to propose a machine learning approach to accurately learn and infer the ergodic properties of prototypical chaotic attractors, and in particular to extract LEs and CLVs from data.

Recurrent neural networks (RNNs) are a promising class of ML models to address chaotic behaviour. Thanks to their architecture, RNNs are suitable for processing sequential data, typically encountered in speech and language recognition, or time-series prediction [24]. In particular, they are proven to be universal approximators [25, 26] and are able to capture long-term temporal patterns, i.e. they possess memory. A key feature of their architecture is that they maintain a hidden state that evolves dynamically, effectively allowing RNNs to be treated as dynamical systems, and in particular as discrete neural differential equations [27]. Thus, RNNs lend themselves to being analysed with dynamical systems theory, allowing the study of stability properties from the dynamics they have learned. By exploiting this property, we derive the RNN’s Jacobian and infer the linear dynamics from data.

Recently, there have been significant advancements in employing RNNs to learn chaotic dynamics [28,29,30,31,32,33,34,35,36], where two core objectives are studied: (1) the time-accurate prediction of chaotic fluctuations and maximization of the prediction horizon and (2) accurately learning the ergodic properties of chaotic attractors. The first objective has been addressed by one of the co-authors in [34,35,36] for several prototypical chaotic dynamical systems using the same RNN architecture as the present work. Here we address the second objective by extending the recent works [29, 30, 32], where the LEs of the Lorenz 63 [1] and the one-dimensional Kuramoto–Sivashinsky equation [37] were retrieved from trained RNNs.

In this work, we employ a specific RNN architecture, the echo state network (ESN) [38], which is a type of reservoir computer, and train it on a diverse set of four prototypical chaotic attractors. The objective of this paper is twofold. The first objective is to accurately learn and infer the ergodic properties of the chaotic attractors with the ESN, which we assess by thoroughly comparing the long-term statistics of (i) the degrees of freedom, (ii) the LEs, (iii) the finite-time LEs, and (iv) the angles of the CLVs. The second objective is to compare the distribution of (i) the finite-time LEs and (ii) the angles of the CLVs on the topology of the attractor, which provides a strict test of the ESN’s capability to accurately learn intrinsic chaotic properties.

The paper is organized as follows: Section 2 presents the necessary tools for our study. In particular, Sect. 2.1 provides a brief introduction to the relevant concepts and quantities from dynamical systems, such as LEs and CLVs. Then, Sect. 2.2 describes the architecture of the ESN, while Sect. 2.3 describes its validation strategies. Section 3 presents our main results, which are divided into two subsections: Sect. 3.1 is devoted to low-dimensional systems, namely the Lorenz 63 [1] and Rössler [39] attractors, and Sect. 3.2 shows results on the Charney-DeVore [40] and the Lorenz 96 [41] attractors. Finally, we summarize our results and provide future perspectives in the conclusions in Sect. 4. Appendix A presents the two algorithms to extract the LEs and CLVs from the ESN. Appendix B provides further tests on the robustness of the methodology.

2 Background

In the following two subsections, we summarize the key theory that underpins the stability of chaotic systems (Sect. 2.1) and reservoir computers (Sect. 2.2).

2.1 Stability of chaotic systems

We consider a state \({\varvec{x}}(t) \in {\mathbb {R}}^D\) with D degrees of freedom, which is governed by a set of nonlinear ordinary differential equations

\[ \frac{\textrm{d}{\varvec{x}}}{\textrm{d}t} = f({\varvec{x}}), \qquad (1) \]

where \(f({\varvec{x}}): {\mathbb {R}}^D \rightarrow {\mathbb {R}}^D\) is a smooth nonlinear function. Equation (1) defines an autonomous dynamical system. Hence, the dynamical system exists in a phase space of dimension D, equipped with a suitable metric, and is associated with a certain measure \(\mu \) that we assume to be preserved (invariant). To investigate the stability of the dynamical system (1), we perturb the state by first-order perturbations as

\[ {\varvec{x}}(t) + \epsilon {\varvec{u}}(t), \qquad \epsilon \ll 1. \qquad (2) \]

By substituting decomposition (2) into (1) and collecting the first-order terms \(\sim {\mathcal {O}}(\epsilon )\), we obtain the governing equation for the first-order perturbations (i.e. linear dynamics)

\[ \frac{\textrm{d}{\varvec{u}}}{\textrm{d}t} = {\varvec{J}}({\varvec{x}}(t)) {\varvec{u}}, \qquad (3) \]

where \(J_{ij}=\frac{\partial f_i({\varvec{x}})}{\partial x_j}\) are the components of the Jacobian, \({\varvec{J}}({\varvec{x}}(t)) \in {\mathbb {R}}^{D\times D}\), which is in general time-dependent. The perturbations \({\varvec{u}}\) evolve on the linear tangent space at each point \({\varvec{x}}(t)\). The goal of stability analysis is to compute the growth rate of infinitesimal perturbations, which is achieved by computing the Lyapunov exponents and a basis of the tangent space with the covariant Lyapunov vectors. To do so, we numerically time-march \(K\le D\) tangent vectors, \({\varvec{u}}_i\in {\mathbb {R}}^D\), as columns of the matrix \({\varvec{U}}\in {\mathbb {R}}^{D\times K}\), \({\varvec{U}}= [{\varvec{u}}_1, {\varvec{u}}_2,\dots ,{\varvec{u}}_K]\)

\[ \frac{\textrm{d}{\varvec{U}}}{\textrm{d}t} = {\varvec{J}}({\varvec{x}}(t)) {\varvec{U}}. \qquad (4) \]

Geometrically, Eq. (4) describes the tangent space around the state \({\varvec{x}}(t)\). Starting from \({\varvec{x}}(t=t_0)={\varvec{x}}_0\) and \({\varvec{U}}(t_0)={\mathbb {I}}\), Eqs. (1) and (4) are numerically solved with a time integrator. As explained in the subsequent paragraphs, in a chaotic system, almost all nearby trajectories diverge exponentially fast with an average rate equal to the leading Lyapunov exponent. Hence, the tangent vectors align exponentially fast with the leading Lyapunov vector, \({\varvec{u}}_1\). (‘Almost all’ means that the set of perturbations that do not grow with the largest Lyapunov exponent has zero measure.) To circumvent this numerical issue, it is necessary to periodically orthonormalize the tangent space basis during time evolution, using a QR-decomposition of \({\varvec{U}}\), as \({\varvec{U}}(t)={\varvec{Q}}(t) {\varvec{R}}(t,\varDelta t)\) (see [8, 9]) and updating the columns of \({\varvec{U}}\) with the columns of \({\varvec{Q}}\), i.e. \({\varvec{U}}\leftarrow {\varvec{Q}}\). The matrix \({\varvec{R}}(t,\varDelta t)\in {\mathbb {R}}^{K\times K}\) is upper-triangular and its diagonal elements \([{\varvec{R}}]_{i,i}\) are the local growth rates over a time span \(\varDelta t\) of the (now) orthonormal vectors \({\varvec{U}}\), which are also known as backward Gram–Schmidt vectors (GSVs) [10, 42]. The Lyapunov spectrum is given by

\[ \lambda _i = \lim _{N\rightarrow \infty } \frac{1}{N \varDelta t} \sum _{n=1}^{N} \ln [{\varvec{R}}(t_n,\varDelta t)]_{i,i}, \qquad (5) \]

where \(t_n\) are the times of the successive orthonormalizations.

Algorithm 1 in Appendix A gives the pseudocode for the calculation of the LEs for the ESN following [29, 32]. The sign of the Lyapunov exponents indicates the type of the attractor. If the leading exponent is negative, \(\lambda _1<0\), the attractor is a fixed point. If \(\lambda _1=0\), and the remaining exponents are negative, the attractor is a periodic orbit. If at least one Lyapunov exponent is positive, \(\lambda _1>0\), the attractor is chaotic. In chaotic systems, the Lyapunov time \(\tau _\lambda = \frac{1}{\lambda _{1}}\) defines a characteristic timescale for two nearby orbits to separate, which gives a scale of the system’s predictability horizon [43].
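The QR-based procedure described above can be sketched for the Lorenz 63 system, whose equations and parameters are those of Sect. 3.1. This is a minimal illustration under stated assumptions, not the paper's Algorithm 1: the function names, the transient length and the number of steps are hypothetical choices, and the state and tangent equations are advanced together with a hand-written RK4 step.

```python
import numpy as np


def lorenz_rhs(x, sigma=10.0, beta=8.0 / 3.0, rho=28.0):
    """Right-hand side f(x) of the Lorenz 63 system."""
    return np.array([sigma * (x[1] - x[0]),
                     x[0] * (rho - x[2]) - x[1],
                     x[0] * x[1] - beta * x[2]])


def lorenz_jac(x, sigma=10.0, beta=8.0 / 3.0, rho=28.0):
    """Jacobian J_ij = d f_i / d x_j of the Lorenz 63 system."""
    return np.array([[-sigma, sigma, 0.0],
                     [rho - x[2], -1.0, -x[0]],
                     [x[1], x[0], -beta]])


def coupled_rhs(x, U):
    """State equation (1) and tangent equation (4) evaluated together."""
    return lorenz_rhs(x), lorenz_jac(x) @ U


def rk4_step(x, U, dt):
    """One RK4 step for the coupled state/tangent system."""
    k1x, k1U = coupled_rhs(x, U)
    k2x, k2U = coupled_rhs(x + 0.5 * dt * k1x, U + 0.5 * dt * k1U)
    k3x, k3U = coupled_rhs(x + 0.5 * dt * k2x, U + 0.5 * dt * k2U)
    k4x, k4U = coupled_rhs(x + dt * k3x, U + dt * k3U)
    x_new = x + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
    U_new = U + dt / 6.0 * (k1U + 2 * k2U + 2 * k3U + k4U)
    return x_new, U_new


def lyapunov_spectrum(x0, dt=0.005, n_steps=20000, n_transient=2000, m=1):
    """Estimate the Lyapunov spectrum with a QR decomposition every m steps.

    n_steps is assumed to be a multiple of m (illustrative choice).
    """
    x = np.array(x0, dtype=float)
    for _ in range(n_transient):            # discard the initial transient
        x, _ = rk4_step(x, np.eye(3), dt)
    U = np.eye(3)
    log_sum = np.zeros(3)
    for i in range(n_steps):
        x, U = rk4_step(x, U, dt)
        if (i + 1) % m == 0:
            Q, R = np.linalg.qr(U)
            # diag(R) may be negative with numpy's QR, hence the abs
            log_sum += np.log(np.abs(np.diag(R)))
            U = Q                            # U <- Q
    return log_sum / (n_steps * dt)
```

For a finite run the estimates fluctuate, so only loose checks are meaningful: the leading exponent should be positive, the second close to zero, and the sum close to the trace of the Jacobian, \(-(\sigma +1+\beta )\).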

The GSVs, \({\varvec{U}}\), constitute a norm-dependent orthonormal basis, which is not time-reversible, due to the frequent orthogonalizations via the QR decomposition. Instead, the covariant Lyapunov vectors (CLVs) \({\varvec{V}}= [{\textbf{v}}_1, {\textbf{v}}_2,\dots ,{\textbf{v}}_K]\) (each CLV \({\textbf{v}}_i\in {\mathbb {R}}^D\) is a column of \({\varvec{V}}\)) form a norm-independent and time-invariant basis of the tangent space, which is covariant with the dynamics. The latter features of the CLVs, which are not possessed by the GSVs, allow us to examine individual expanding and contracting directions of a given dynamical system, thus providing an intrinsic geometrical interpretation of the attractor [6, 10], as well as a hierarchical decomposition of spatiotemporal chaos, thanks to their generic localization in physical space [42]. Each bounded nonzero CLV, i.e. \(0<||{\textbf{v}}_i||<\infty \), satisfies the following equation:

\[ \frac{\textrm{d}{\textbf{v}}_i}{\textrm{d}t} = {\varvec{J}}({\varvec{x}}(t)){\textbf{v}}_i - \lambda _i{\textbf{v}}_i, \qquad (6) \]

which shows that the CLV is evolved by the tangent dynamics \({\varvec{J}}({\varvec{x}}(t)){\textbf{v}}_i\), while the extra term \(- \lambda _i{\textbf{v}}_i\) guarantees that its norm is bounded [44]. The name “covariant” means that the ith CLV at time \(t_1\), \({\textbf{v}}_i({\varvec{x}}(t_1))\), maps to \({\textbf{v}}_i({\varvec{x}}(t_2))\) at time \(t_2\), and vice versa. Mathematically, if \({\varvec{M}}(t,\varDelta t)=\exp (\int _{t}^{t+\varDelta t} {\varvec{J}}({\varvec{x}},\tau )d\tau )\) is the system’s tangent evolution operator (which contains a path-ordered exponential), covariant means \({\varvec{M}}(t, \varDelta t) {\textbf{v}}_i(t) = {\textbf{v}}_i(t+\varDelta t)\); time-invariance of CLVs naturally arises from the previous expression, as \({\varvec{M}}(t, -\varDelta t) {\textbf{v}}_i(t+\varDelta t) = {\textbf{v}}_i(t)\). If the Lyapunov spectrum is non-degenerate (such as for the cases considered here), each CLV \({\textbf{v}}_i\) is associated with the Lyapunov exponent \(\lambda _i\) and is uniquely defined (up to a phase).

An important subclass of chaotic systems are uniformly hyperbolic systems, which have a uniform splitting between expanding and contracting directions, i.e. there are no tangencies between the unstable, neutral, and stable subspaces [45] that form the tangent space. Because of their simple geometrical structure, many theoretical tools have been developed in recent years. Hyperbolic systems have structurally stable dynamics and linear response, meaning that their statistics vary smoothly with parameter variations [46]. In practice, violations of hyperbolicity are commonly reported in the literature [44, 47, 48], whereas true hyperbolic systems are rare [49]. Thanks to the chaotic hypothesis [5, 50, 51], high-dimensional chaotic systems can be practically treated as hyperbolic systems, i.e. using techniques developed for hyperbolic systems, regardless of hyperbolicity violations. This is because many convenient statistical properties of uniformly hyperbolic systems, such as ergodicity, existence of physical invariant measures, exponential mixing and well-defined time averages with large deviation laws [52, 53], can be found in the macroscopic scale dynamics of certain large non-uniformly hyperbolic systems [46].

An application of CLVs is to assess the degree of hyperbolicity of the underlying chaotic dynamics. The tangent space of hyperbolic systems, at each point \({\varvec{x}}\), can be directly decomposed into three invariant subspaces, \({\varvec{E}}^U_{\varvec{x}}\oplus {\varvec{E}}^N_{\varvec{x}}\oplus {\varvec{E}}^S_{\varvec{x}}\). Here \({\varvec{E}}^U_{\varvec{x}}\) is the unstable subspace composed by the CLVs associated with positive LEs, \({\varvec{E}}^N_{\varvec{x}}\) is the neutral subspace spanned by the CLVs associated with the zero LEs, and \({\varvec{E}}^S_{\varvec{x}}\) is the stable subspace spanned by the CLVs associated with negative LEs. In hyperbolic systems, the distribution of angles between subspaces is bounded away from zero. In Sect. 3, we will study in detail the angles \(\theta _{U,N}\), \(\theta _{U,S}\), and \(\theta _{N,S}\) between pairs of the subspaces, and compare the ability of the ESN to accurately learn both the long-term statistics, and the phase space finite-time variability of the angles. Because the GSVs are mutually orthogonal, they cannot assess the degree of hyperbolicity of the attractor. Moreover, CLVs are key to the optimization of chaotic acoustic oscillations [44], as well as in reduced-order modelling [54]; they can reveal two uncoupled subspaces of the tangent space, one that comprises the physical modes carrying the relevant information of the trajectory, and another composed of strongly decaying spurious modes [10]. Two recent attempts to extract CLVs from data-driven approaches, which do not employ a neural network, can be found in [55, 56].
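As an illustration of how such angles could be evaluated numerically, the sketch below computes the angle between two CLVs and the smallest principal angle between two subspaces spanned by CLVs. It assumes that principal angles (via `scipy.linalg.subspace_angles`) are an acceptable measure and that angles are folded into \([0, \pi /2]\); the paper does not state its exact implementation, and the helper names `clv_angle` and `min_subspace_angle` are hypothetical.

```python
import numpy as np
from scipy.linalg import subspace_angles


def clv_angle(v1, v2):
    """Angle in [0, pi/2] between two CLVs, insensitive to their sign."""
    cos_theta = abs(v1 @ v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
    return np.arccos(np.clip(cos_theta, 0.0, 1.0))


def min_subspace_angle(A, B):
    """Smallest principal angle between the column spaces of A and B.

    A and B hold the spanning CLVs as columns, e.g. the unstable and
    stable CLVs at one point of the trajectory.
    """
    return np.min(subspace_angles(A, B))
```

An angle that approaches zero along the trajectory signals a near-tangency between the two subspaces, i.e. a local violation of hyperbolicity.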

We explain the algorithm we employ to compute the CLVs; for further details, we refer the interested reader to [10, 42, 44]. The GSVs are generated by numerically solving Eqs. (1) and (4) simultaneously and performing a QR-decomposition every m timesteps. In this way, after a time-lapse \(\varDelta t\), the GSVs at time \(t+\varDelta t\) are given by:

\[ {\varvec{U}}(t+\varDelta t) = {\varvec{M}}(t,\varDelta t) {\varvec{U}}(t) {\varvec{R}}^{-1}(t,\varDelta t). \qquad (7) \]

We can define the CLVs \({\varvec{V}}(t)\) in terms of the GSVs as

\[ {\varvec{V}}(t) = {\varvec{U}}(t) {\varvec{C}}(t), \qquad (8) \]

where \({\varvec{C}}\) is an upper triangular matrix that contains the CLV expansion coefficients, \([{\varvec{C}}(t)]_{j,i} = c_{j,i}(t)\), for \(j\le i\). Hence, the objective is to calculate \({\varvec{C}}(t)\). Because the CLVs have by choice a unit norm, each column of the matrix \({\varvec{C}}\) has to be normalized independently, i.e. \(\sum _{j=1}^{i} (c_{j,i}(t))^2 = 1, \forall i\).

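We start by writing the evolution equation of the CLVs as

\[ {\varvec{V}}(t+\varDelta t) {\textbf{D}}(t,\varDelta t) = {\varvec{M}}(t,\varDelta t) {\varvec{V}}(t). \qquad (9) \]

We can re-write Eq. (9) via Eq. (8)

\[ {\varvec{U}}(t+\varDelta t) {\varvec{C}}(t+\varDelta t) {\textbf{D}}(t,\varDelta t) = {\varvec{M}}(t,\varDelta t) {\varvec{U}}(t) {\varvec{C}}(t) = {\varvec{U}}(t+\varDelta t) {\varvec{R}}(t,\varDelta t) {\varvec{C}}(t), \qquad (10) \]

and solve with respect to \({\varvec{C}}(t)\)

\[ {\varvec{C}}(t) = {\varvec{R}}^{-1}(t,\varDelta t) {\varvec{C}}(t+\varDelta t) {\textbf{D}}(t,\varDelta t). \qquad (11) \]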

This equation is evolved backwards in time starting from the end of the forward-in-time simulation. We employ the solve_triangular routine of scipy [57] to invert \({\varvec{R}}(t,\varDelta t)\) and solve with respect to \({\varvec{C}}(t)\). The \({\varvec{C}}\) and \({\textbf{D}}\) matrices are initialized to the identity matrix \({\mathbb {I}}\). We leave a sufficient spin-up and spin-down transient time at the beginning and end of our total time window, before we compute the CLVs via Eq. (8), to ensure that they are converged. Algorithm 2 in Appendix A gives the pseudocode for the calculation of the CLVs.
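The forward and backward passes described above can be sketched as follows. This is an illustrative sketch rather than the paper's Algorithm 2: the function names `forward_qr` and `backward_clvs` are hypothetical, the tangent propagator over each interval is assumed to be available as a matrix, and the column normalization of \({\varvec{C}}\) stands in for the multiplication by \({\textbf{D}}\).

```python
import numpy as np
from scipy.linalg import solve_triangular


def forward_qr(propagators, K, seed=0):
    """Forward pass: propagate K tangent vectors, storing the GSVs Q and R.

    propagators[n] plays the role of M(t_n, dt), so that
    M U(t_n) = U(t_n + dt) R(t_n, dt).
    """
    D = propagators[0].shape[0]
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((D, K)))
    Qs, Rs = [Q], []
    for M in propagators:
        Q, R = np.linalg.qr(M @ Q)
        Qs.append(Q)
        Rs.append(R)
    return Qs, Rs


def backward_clvs(Qs, Rs, n_spin_down):
    """Backward pass: C(t) = R^{-1} C(t + dt) D, then V = U C.

    The last n_spin_down steps are used only to converge C and are not
    returned; the caller should also discard an initial spin-up.
    """
    n_steps = len(Rs)
    C = np.eye(Rs[0].shape[0])                 # C initialized to identity
    Vs = [None] * (n_steps - n_spin_down)
    for n in range(n_steps - 1, -1, -1):
        C = solve_triangular(Rs[n], C, lower=False)
        C /= np.linalg.norm(C, axis=0)         # unit-norm CLVs (role of D)
        if n < n_steps - n_spin_down:
            Vs[n] = Qs[n] @ C
    return Vs
```

A simple sanity check is a constant propagator with real, distinct eigenvalues, for which the CLVs must coincide (up to sign) with its eigenvectors, whereas the GSVs remain orthogonal.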

To estimate the expansions and contractions of the tangent space on finite-time intervals of length \(\varDelta t = t_2 - t_1\), we compute the finite-time Lyapunov exponents (FTLEs) as \(\varLambda _i = \frac{1}{\varDelta t} \ln [{\varvec{R}}]_{i,i}\). Hence, \(\lambda _i\) is the long-time average of \(\varLambda _i\). The FTLE \(\varLambda _1\) physically quantifies the exponential growth rate of a vector \({\varvec{u}}_1\) during the time interval \(\varDelta t\), whereas \(\varLambda _2\) quantifies the exponential growth rate of the vector \({\varvec{u}}_2\) that is orthogonal to \({\varvec{u}}_1\) by construction. Hence, as the GSVs form an orthogonal basis, looking at individual FTLEs for \(\varLambda _i\), \(i\ge 2\), lacks a physical meaning. Instead, the sum of the first n FTLEs is a growth rate in \(\varDelta t\) for a typical n-dimensional volume \(\mathrm{Vol_n}\) in the tangent space [9, 58]

\[ \sum _{i=1}^{n} \varLambda _i = \frac{1}{\varDelta t} \ln \left( \frac{\mathrm{Vol_n}(t_2)}{\mathrm{Vol_n}(t_1)} \right) . \qquad (12) \]

Accordingly, the diagonal matrix \({\textbf{D}}(t, \varDelta t)\) contains the CLV local growth factors of \(\gamma _i(t, \varDelta t) = ||{\varvec{M}}(t, \varDelta t) {\textbf{v}}_i(t)||\), i.e. \([{\textbf{D}}(t, \varDelta t)]_{i,j}=\delta _{i,j} \gamma _i(t, \varDelta t)\). We can extract the finite-time covariant Lyapunov exponents (FTCLEs) from the logarithm of these growth factors for a time interval \(\varDelta t\)

\[ \varLambda ^c_i(t) = \frac{1}{\varDelta t} \ln \gamma _i(t, \varDelta t). \qquad (13) \]

Each FTCLE quantifies a finite-time exponential expansion or contraction rate along a covariant direction given by \({\textbf{v}}_i\). Hence, each individual FTCLE has a physical interpretation, in contrast to the FTLEs, as explained before. On the other hand, now the sums of FTCLEs lack a physical meaning [58]. The long-time average of the FTCLEs is equal to the Lyapunov exponents, \(\lambda _i = \lim \limits _{T\rightarrow \infty }\frac{1}{T} \int _{t_0}^{T}\varLambda ^c_i(t)\textrm{d}t\).

2.2 Echo state network

The solution of a dynamical system is a time series. From a data analysis point of view, a time series is a sequentially ordered set of values, in which the order is provided by time. In a discrete setting, time can be thought of as an ordering index. For sequential data, and hence, time series, recurrent neural networks (RNNs) are designed to infer the temporal dynamics through their internal hidden state. However, training RNNs, such as long short-term memory (LSTM) [59] networks and gated recurrent units (GRUs) [60], requires backpropagation through time, which can be a demanding computational task due to the long-lasting time dependencies of the hidden states [61]. This issue is overcome by echo state networks (ESNs) [38, 62], an RNN that is a type of reservoir computer, whose recurrent weights of the hidden state (commonly named “reservoir”) are randomly assigned and possess low connectivity. Therefore, only the hidden-to-output weights are trained, leading to a simple quadratic optimization problem, which does not require backpropagation (see Fig. 1a for a graphical representation). The reservoir acts as a memory of the observed state history. ESNs have demonstrated accurate inference of chaotic dynamics, such as in [28,29,30,31,32,33,34,35,36, 63].

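An echo state network maps the state from time index \(\textrm{t}_i\) to index \(\textrm{t}_{i+1}\) as follows (with a slight abuse of notation, the discrete time is denoted \(\textrm{t}_i\)). The evolution equations of the reservoir state and output are governed, respectively, by [36, 38]

\[ {\varvec{r}}(\textrm{t}_{i+1}) = \tanh \left( [\hat{{\textbf{y}}}_{\textrm{in}}(\textrm{t}_{i});b_{\textrm{in}}]^T \textbf{W}_{\textrm{in}}+{\varvec{r}}(\textrm{t}_{i})^T\textbf{W}\right) , \qquad (14) \]

\[ {\textbf{y}}_{\textrm{p}}(\textrm{t}_{i+1}) = [{\varvec{r}}(\textrm{t}_{i+1});1]^T \textbf{W}_{\textrm{out}}, \qquad (15) \]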

where at any discrete time \(\textrm{t}_i\) the input vector, \({\textbf{y}}_{\textrm{in}}(\textrm{t}_{i}) \in {\mathbb {R}}^{N_y}\), is mapped into the reservoir state \({\varvec{r}}\in {\mathbb {R}}^{N_r}\), by the input matrix, \(\textbf{W}_{\textrm{in}} \in {\mathbb {R}}^{(N_y+1)\times N_r }\), where \(N_r \gg N_y\). The updated reservoir state \({\varvec{r}}(\textrm{t}_{i+1})\) is calculated at each time iteration as a function of the current input \(\hat{{\textbf{y}}}_{\textrm{in}}(\textrm{t}_{i})\) and its previous value \({\varvec{r}}(\textrm{t}_{i})\) via Eq. (14) and then is involved in the calculation of the predicted output, \({\textbf{y}}_{\textrm{p}}(\textrm{t}_{i+1})\in {\mathbb {R}}^{N_y}\) via Eq. (15). Here, \(\hat{(\;\;)}\) indicates normalization by the maximum-minus-minimum range of \({\textbf{y}}_{\textrm{in}}\) in training set component-wise, \((^T)\) indicates matrix transposition, (;) indicates array concatenation, \(\textbf{W} \in {\mathbb {R}}^{N_r\times N_r}\) is the state matrix, \(b_{\textrm{in}}\) is the input bias and \(\textbf{W}_{\textrm{out}} \in {\mathbb {R}}^{(N_{r}+1)\times N_y}\) is the output matrix. In our applications, the dimension of the input and output vectors is equal to the dimension of the physical system of Eq. (1), i.e. \(N_y \equiv D\).

The matrices \(\textbf{W}_{\textrm{in}}\) and \(\textbf{W}\) are (pseudo)randomly generated and fixed, whilst the weights of the output matrix, \(\textbf{W}_{\textrm{out}}\), are the only trainable elements of the network. The input matrix, \(\textbf{W}_{\textrm{in}}\), has only one element different from zero per row, which is sampled from a uniform distribution in \([-\sigma _{\textrm{in}},\sigma _{\textrm{in}}]\), where \(\sigma _{\textrm{in}}\) is the input scaling. The state matrix, \({\textbf {W}}\), is an Erdős–Rényi matrix with average connectivity d, in which each neuron (each row of \(\textbf{W}\)) has on average only d connections (i.e. nonzero elements), which are obtained by sampling from a uniform distribution in \([-1,1]\). The echo state property enforces the independence of the reservoir state on the initial conditions, which is satisfied by rescaling \({\textbf {W}}\) by a multiplication factor, such that the largest absolute value of its eigenvalues, i.e. the spectral radius [38], is smaller than unity. Following [29, 36, 63, 64], we add a bias in the input and output layers to break the inherent symmetry of the basic ESN architecture. Specifically, the input bias, \(b_{\textrm{in}}\), is a hyperparameter, selected in order to have the same order of magnitude as the normalized inputs, \(\hat{{\textbf{y}}}_{\textrm{in}}\). In contrast, the output bias is determined by training the weights of the output matrix, \(\textbf{W}_{\textrm{out}}\).
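The construction of the fixed matrices can be sketched as below. This is a minimal illustration under stated assumptions: the function name `generate_esn_matrices` is hypothetical, \(\textbf{W}_{\textrm{in}}\) is stored with shape \((N_y+1)\times N_r\) so that each reservoir neuron (a column in this layout) receives exactly one input or the bias, and the Erdős–Rényi structure is obtained by keeping each entry of \(\textbf{W}\) with probability \(d/N_r\).

```python
import numpy as np


def generate_esn_matrices(n_y, n_r, sigma_in, rho, d, seed=0):
    """Generate the fixed input and state matrices of an ESN (sketch)."""
    rng = np.random.default_rng(seed)

    # Input matrix: one nonzero weight per reservoir neuron (assumption:
    # the neuron is a column in this (n_y + 1, n_r) layout).
    W_in = np.zeros((n_y + 1, n_r))
    source = rng.integers(0, n_y + 1, size=n_r)
    W_in[source, np.arange(n_r)] = rng.uniform(-sigma_in, sigma_in, size=n_r)

    # State matrix: sparse, on average d nonzero entries per row,
    # with values drawn uniformly from [-1, 1].
    mask = rng.random((n_r, n_r)) < d / n_r
    W = np.where(mask, rng.uniform(-1.0, 1.0, size=(n_r, n_r)), 0.0)

    # Rescale so that the spectral radius equals rho (< 1).
    radius = np.max(np.abs(np.linalg.eigvals(W)))
    W *= rho / radius
    return W_in, W
```

For large reservoirs, a sparse storage format and a sparse eigenvalue solver would be the natural choice; dense arrays are used here only to keep the sketch short.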

In Fig. 1b and c, we present the two types of configurations with which the ESN can run, i.e. in open loop or closed loop, respectively. Running in open-loop is necessary for the training stage, as the input data is fed at each step, allowing for the calculation of the reservoir time series \({\varvec{r}}(\textrm{t}_{i})\), \(\textrm{t}_{i} \in [0,T_{\textrm{train}}]\), which need to be stored. There is an initial transient time window, the “washout interval”, where the output \({\textbf{y}}_{\textrm{p}}(\textrm{t}_{i})\) is not computed. This allows for the reservoir state to satisfy the echo state property, i.e. making it independent of the arbitrarily chosen initial condition, \({\varvec{r}}(t_0) = {0}\), while also synchronizing it with respect to the current state of the system.

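The training of the output matrix, \(\textbf{W}_{\textrm{out}}\), is performed after the washout interval and involves the minimization of the mean square error between the outputs and the data over the training set

\[ \textrm{MSE} = \frac{1}{N_{\textrm{tr}}+1} \sum _{i=0}^{N_{\textrm{tr}}} || {\textbf{y}}_{\textrm{p}}(\textrm{t}_{i}) - {\textbf{y}}_{\textrm{in}}(\textrm{t}_{i}) ||^2, \qquad (16) \]

where \(||\cdot ||\) is the \(L_2\) norm, \(N_{\textrm{tr}}+1\) is the total number of data in the training set, and \({\textbf{y}}_{\textrm{in}}\) the input data on which the ESN is trained. Training the ESN is performed by solving with respect to \(\textbf{W}_{\textrm{out}}\) via ridge regression of

\[ (\textbf{R}\textbf{R}^T + \beta {\mathbb {I}}) \textbf{W}_{\textrm{out}} = \textbf{R} \textbf{Y}_{\textrm{d}}^T, \qquad (17) \]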

where \(\textbf{R}\in {\mathbb {R}}^{(N_r+1)\times N_{\textrm{tr}}}\) and \(\textbf{Y}_{\textrm{d}}\in {\mathbb {R}}^{N_y\times N_{\textrm{tr}}}\) are the horizontal concatenation of the reservoir states with bias, \([{\varvec{r}}(\textrm{t}_{i});1]\), \(\textrm{t}_i \in [0,T_{\textrm{train}}]\) and of the output data, respectively; \({\mathbb {I}}\) is the identity matrix and \(\beta \) is the Tikhonov regularization parameter [65].
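The open-loop pass and the ridge regression can be sketched as follows. This is a minimal sketch, assuming the inputs are already normalized and that the reservoir update and output follow Eqs. (14) and (15); the function names `open_loop` and `train_ridge` are hypothetical, and a dense linear solve is used for brevity.

```python
import numpy as np


def open_loop(Y_in, W_in, W, b_in=1.0):
    """Drive the reservoir with the data Y_in of shape (N, n_y).

    Returns the states with shape (N + 1, n_r); row i + 1 is the state
    obtained after feeding the input Y_in[i], starting from r = 0.
    """
    r = np.zeros(W.shape[0])
    states = [r]
    for y in Y_in:
        r = np.tanh(np.concatenate([y, [b_in]]) @ W_in + r @ W)
        states.append(r)
    return np.array(states)


def train_ridge(states, Y_target, beta):
    """Solve (R R^T + beta I) W_out = R Y_d^T for W_out.

    states has shape (N_tr, n_r) and Y_target (N_tr, n_y); the output
    bias is handled by appending a constant 1 to each reservoir state.
    """
    R = np.hstack([states, np.ones((states.shape[0], 1))]).T  # (n_r + 1, N_tr)
    lhs = R @ R.T + beta * np.eye(R.shape[0])
    rhs = R @ Y_target                                         # R Y_d^T
    return np.linalg.solve(lhs, rhs)                           # (n_r + 1, n_y)
```

In use, the rows of `states` belonging to the washout interval are discarded, and row `i + 1` of the remaining states is paired with the data point `Y_in[i + 1]` as its one-step-ahead target.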

On the other hand, in the closed-loop configuration (Fig. 1c) the output \({\textbf{y}}_{\textrm{p}}\) at time step \(\textrm{t}_{i}\) is used as an input at time step \(\textrm{t}_{i+1}\), in a recurrent manner, allowing for the autonomous temporal evolution of the network. The closed-loop configuration is used for validation (i.e. hyperparameter tuning, see Sect. 2.3) and testing, but not for training. For our purposes, we independently train \(N_{\textrm{ESN}}\in [5,10]\) networks, whose ensemble average we take to increase the statistical accuracy of the prediction and evaluate its uncertainty. We start with \(N_{\textrm{ESN}}=10\) trained networks, but during post-processing we may discard any network that shows spurious temporal evolution. The \(N_{\textrm{ESN}}\) networks are statistically independent thanks to: (1) initializing the random matrices \(\textbf{W}_{\textrm{in}}\) and \(\textbf{W}\) with different seeds, and (2) training each network with chaotic time series starting from different initial points on the attractor.

2.2.1 Jacobian of the ESN

In this subsection, we mathematically derive the Jacobian of the echo state network. Equations (14)–(15) are a discrete map [2, 32],

and the continuous-time formulae derived for the Lyapunov exponents and CLVs in Sect. 2.1 can be adapted for a discrete-time system. The Jacobian of the ESN reservoir is the total derivative of the hidden state dynamics at a single timestep [29]

where from Eq. (14) \({\varvec{r}}(\textrm{t}_{i+1})^2 =\tanh ^2([\hat{{\textbf{y}}}_{\textrm{in}}(\textrm{t}_{i});b_{\textrm{in}}]^T \textbf{W}_{\textrm{in}}+{\varvec{r}}(\textrm{t}_{i})^T\textbf{W})\) is the updated squared hidden state at timestep \(\textrm{t}_{i+1}\). The Jacobian of the ESN is cheap to calculate as the expression \(\left( \textbf{W}^T_{\textrm{in}}\textbf{W}^T_{\textrm{out}} + \textbf{W}^T \right) \) is a constant matrix, which is fixed after the training of \(\textbf{W}_{\textrm{out}}\). The only time-varying component is the hidden state. The Jacobian \({\textbf{J}}\in {\mathbb {R}}^{N_r\times N_r}\) is used for the extraction of the Lyapunov spectrum and the CLVs of a trained ESN. We time-march D Lyapunov vectors \({\varvec{u}}_i\in {\mathbb {R}}^{N_r}\) and periodically perform QR decompositions, where \({\varvec{Q}}\in {\mathbb {R}}^{N_r\times D}\), and \({\varvec{R}}\in {\mathbb {R}}^{D\times D}\). The same CLV algorithm described in Sect. 2.1 is employed to extract D covariant Lyapunov vectors \({\textbf{v}}_i\in {\mathbb {R}}^{N_r}\) from a trained ESN. The pseudocode is given in algorithm 2.
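The structure of this Jacobian can be checked numerically with the sketch below. It rests on explicit assumptions that are not taken from the paper: the input normalization is omitted (the output is fed back unscaled), the bias rows of \(\textbf{W}_{\textrm{in}}\) and \(\textbf{W}_{\textrm{out}}\) are dropped from the constant matrix because they do not depend on the reservoir state, and the function names are hypothetical.

```python
import numpy as np


def closed_loop_step(r, W_in, W, W_out, b_in=1.0):
    """One closed-loop step: the output of Eq. (15) is fed back into Eq. (14)."""
    y = np.concatenate([r, [1.0]]) @ W_out
    return np.tanh(np.concatenate([y, [b_in]]) @ W_in + r @ W)


def esn_jacobian(r_next, W_in, W, W_out):
    """Jacobian d r(t_{i+1}) / d r(t_i) of the closed-loop reservoir map.

    The constant matrix W_in^T W_out^T + W^T is built without the bias
    rows; each row k is then scaled by 1 - r_k(t_{i+1})^2.
    """
    const = W_in[:-1].T @ W_out[:-1].T + W.T      # (n_r, n_r), fixed after training
    return (1.0 - r_next ** 2)[:, None] * const
```

Because `const` does not change in time, it can be computed once after training, and only the elementwise factor \(1 - {\varvec{r}}(\textrm{t}_{i+1})^2\) needs to be updated at each step.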

2.3 Validation

The dataset is split into three subsets, which are the training, validation, and testing subsets in a time-ordered fashion. During training, the ESN runs in open-loop, while during validation and testing, the ESN runs in closed-loop and the prediction at each step becomes the input for the next step. After training the ESN, its validation is necessary for the determination of the hyperparameters. The objective is to compute the hyperparameters that minimize the logarithm of the MSE (16). The logarithm of the MSE is preferred because the error varies by orders of magnitude for different hyperparameters, as explained in [36]. In general, instead of Eq. (16), other types of error functions can be used for the hyperparameter tuning, such as the maximization of the prediction horizon [29, 34, 36] or the minimization of the kinetic energy differences [64]. Here the input scaling, \(\sigma _{\textrm{in}}\), the spectral radius, \(\rho \), and the Tikhonov parameter, \(\beta \), are the ESN hyperparameters that are being tuned [38, 64]. In order to select the optimal hyperparameters, \(\sigma _{\textrm{in}}\) and \(\rho \), we employ a Bayesian optimization, which is a strategy for finding the extrema of objective functions that are expensive to evaluate [64, 66]. Within the optimal \([\sigma _{\textrm{in}},\rho ]\), we perform a grid search to select \(\beta \) [64]. In particular, \([\sigma _{\textrm{in}},\rho ]\) are searched in the hyperparameter space \([0.1,5]\times [0.1,1]\) in logarithmic scale, while for \(\beta \) we test \(\{10^{-6},10^{-8}, 10^{-10},10^{-12}\}\). The Bayesian optimization starts from a grid of \(6\times 6\) points in the \([\sigma _{\textrm{in}},\rho ]\) domain, and then, it selects five additional points through the gp-hedge algorithm [66]. 
We set \(b_{\textrm{in}}=1\), \(d=3\) and add Gaussian noise with zero mean and standard deviation, \(\sigma _n=0.0005\sigma _y\), where \(\sigma _y\) is the standard deviation of the data component-wise, to the training and validation data. Adding noise to the data improves the performance of ESNs in chaotic dynamics by alleviating overfitting [32]. A summary of the hyperparameters is shown in Table 1.

One of the most commonly used validation strategies for RNNs is the single shot validation (SSV) [67], in which the data are split into a training set, followed by a single small validation set; see Fig. 2a. As the ESN now runs in closed loop, the size of the validation set is limited by the chaotic nature of the signal. In particular, at the beginning of the validation set, the input \({\textbf{y}}(\textrm{t}_{0})\) of the ESN is initialized to the target value. However, chaos causes the predicted trajectory to quickly diverge from the target trajectory in a few Lyapunov times \(\tau _\lambda \). The validation interval is therefore small and not representative of the full training set, which causes poor performance in the test set [64]. An improvement to the performance with cheap computations is achieved by the recycle validation (RV), which was recently proposed by [64]. In the RV, the network is trained only once on the entire training dataset (in open loop), and validation is performed on multiple intervals already used for training (but now in closed loop); see Fig. 2b. In this work, we use the chaotic recycle validation (RVC), where the validation interval simply shifts as a small multiple of the first Lyapunov exponent, \(N_{\textrm{val}}=3\lambda _{1}\).

Schematic representation of the (a) single shot and (b) recycle validation strategies. Here, \({\textbf{y}}\) represents the degrees of freedom of the data. Three sequential validation intervals are shown for the recycle validation [64]

3 Results

In this section, we present the numerical results, which include a thorough comparison between the statistics produced by the autonomous temporal evolution of the ESN and the target dynamical system. The selected observables are the statistics of the degrees of freedom, the Lyapunov exponents, the angles between the CLVs or subspaces composed of CLVs, and the finite-time covariant Lyapunov exponents. We separate our analysis into two subsections, which contain two low-dimensional systems and then two higher-dimensional systems.

3.1 Low-dimensional chaotic systems

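As a first case, we consider two low-dimensional dynamical systems that exhibit chaotic behaviour: the Lorenz 63 (L63) [1] and Rössler [39] attractors. The Lorenz 63 system is a reduced-order model of atmospheric convection for a single thin layer of fluid that is heated uniformly from below and cooled from above, which is defined by:

\[\frac{\textrm{d}x}{\textrm{d}t} = \sigma (y-x), \qquad \frac{\textrm{d}y}{\textrm{d}t} = x(\rho -z)-y, \qquad \frac{\textrm{d}z}{\textrm{d}t} = xy-\beta z. \qquad (19)\]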

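We choose the parameters \([\sigma , \beta , \rho ] = [10, 8/3, 28]\) to ensure a chaotic behaviour. The Rössler attractor, which models equilibrium in chemical reactions, is governed by:

\[\frac{\textrm{d}x}{\textrm{d}t} = -y-z, \qquad \frac{\textrm{d}y}{\textrm{d}t} = x+ay, \qquad \frac{\textrm{d}z}{\textrm{d}t} = b+z(x-c). \qquad (20)\]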

We choose the parameters \([a, b, c] = [0.1, 0.1, 14]\) to ensure a chaotic behaviour.

To generate the target set, we evolve the dynamical systems forward in time with a fourth-order Runge–Kutta (RK4) integrator and a timestep \(\textrm{d}t= 0.005\) for both L63 and Rössler, which is sufficiently small for a good temporal resolution. (We tested slightly larger/smaller timesteps with no significant differences. Results not shown.) We perform a QR decomposition every \(m=1\) timesteps for L63 and every \(m=5\) timesteps for Rössler. For all systems, we generate a training set of size \(1000\tau _\lambda \) and a test set of size \(4000\tau _\lambda \), for the CLV statistics to converge, where \(\tau _\lambda =1/\lambda _1\) is the Lyapunov time, which is the inverse of the maximal Lyapunov exponent \(\lambda _1\).
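For concreteness, the target computation for L63 can be sketched as below: the state and the tangent vectors are advanced together with RK4, and a QR decomposition every \(m\) timesteps accumulates the growth rates. The sketch assumes the standard Lorenz 63 equations and their analytical Jacobian; the function names are illustrative, and the run is far shorter than the \(1000\tau _\lambda \) and \(4000\tau _\lambda \) sets used in the text, so the estimates are only approximate.

```python
import numpy as np

SIGMA, BETA, RHO = 10.0, 8.0 / 3.0, 28.0

def f(x):
    """Right-hand side of the standard Lorenz 63 system."""
    return np.array([SIGMA * (x[1] - x[0]),
                     x[0] * (RHO - x[2]) - x[1],
                     x[0] * x[1] - BETA * x[2]])

def jac(x):
    """Analytical Jacobian of f."""
    return np.array([[-SIGMA, SIGMA, 0.0],
                     [RHO - x[2], -1.0, -x[0]],
                     [x[1], x[0], -BETA]])

def rk4_step(x, M, dt):
    """One RK4 step for the state x and the tangent matrix M (dM/dt = J M)."""
    k1 = f(x)
    K1 = jac(x) @ M
    x2 = x + 0.5 * dt * k1
    k2 = f(x2)
    K2 = jac(x2) @ (M + 0.5 * dt * K1)
    x3 = x + 0.5 * dt * k2
    k3 = f(x3)
    K3 = jac(x3) @ (M + 0.5 * dt * K2)
    x4 = x + dt * k3
    k4 = f(x4)
    K4 = jac(x4) @ (M + dt * K3)
    x_new = x + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    M_new = M + dt / 6.0 * (K1 + 2 * K2 + 2 * K3 + K4)
    return x_new, M_new

def lyapunov_spectrum(x0, dt=0.005, n_steps=20000, n_transient=2000, m=1):
    """Estimate the Lyapunov exponents with a QR decomposition every m steps.

    n_steps is assumed to be a multiple of m.
    """
    x, identity = np.asarray(x0, dtype=float), np.eye(3)
    for _ in range(n_transient):              # discard the approach to the attractor
        x, _ = rk4_step(x, identity, dt)
    M, log_growth = identity.copy(), np.zeros(3)
    for i in range(1, n_steps + 1):
        x, M = rk4_step(x, M, dt)
        if i % m == 0:
            Q, R = np.linalg.qr(M)
            log_growth += np.log(np.abs(np.diag(R)))
            M = Q                             # restart from an orthonormal basis
    return log_growth / (n_steps * dt)
```

A useful sanity check is that the exponents sum to the trace of the Jacobian, \(-(\sigma +1+\beta )\), which is constant for this system.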

First, we test whether the ESN correctly learns the chaotic attractor from a statistical point of view, i.e. whether the ESN correctly learns the long-term statistics of the degrees of freedom when it evolves in the closed-loop (autonomous) mode. By estimating the probability density function (PDF) of the degrees of freedom of the ESNs, as a normalized histogram, and comparing it with the corresponding PDF of the target set, we extract information on the invariant measure of the considered chaotic system. This is shown in Fig. 3 for L63 and Rössler attractors, in which the black lines show the target statistics and the red dashed lines show the ESN statistics. In Figs. 3, 5 and 7, and Table 2, we have used \(N_{\textrm{ESN}}\) ESNs trained on \(N_{\textrm{ESN}}\) independent target systems, starting from different initial conditions, and averaged among the estimated observables, where \(N_{\textrm{ESN}}=6\), and \(N_{\textrm{ESN}}=8\) for Rössler and L63, respectively. We perform the ensemble calculation to quantify the uncertainty of the predictions and the robustness of the ESN for different initializations.
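The ensemble-averaged PDF described above amounts to a normalized histogram per realization, computed on a common set of bins and then averaged. A minimal sketch, in which the function name and the use of the ensemble standard deviation as the uncertainty band are assumptions:

```python
import numpy as np

def ensemble_pdf(series_list, bins):
    """Normalized histograms on shared bins, averaged over the ensemble."""
    pdfs = np.array([np.histogram(s, bins=bins, density=True)[0]
                     for s in series_list])
    return pdfs.mean(axis=0), pdfs.std(axis=0)
```

Sharing the bin edges across all realizations is what makes the bin-wise average meaningful.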

Second, we test whether the ESN correctly learns the Lyapunov spectrum. Table 2 shows the ESN predictions on the Lyapunov exponents for the L63 and Rössler attractors, which are compared with the target exponents. The leading exponent is accurately predicted with a 0.2% error in the L63 and 1.5% error in the Rössler system. In chaotic systems, there exists a neutral Lyapunov exponent, which is associated with the direction of \(\frac{\textrm{d}{\varvec{x}}}{\textrm{d}t}\). In these cases, the neutral Lyapunov exponents are \(\lambda _2 = 0\) for both systems, which are correctly inferred by the ESN within an \({\mathcal {O}}(10^{-5})\) error, or less. For the smallest (negative) exponent, which is generally harder to extract because it is highly damped, the relative error is about 0.6% for L63 and 2.1% for Rössler. Therefore, the ESNs can accurately capture the tangent dynamics of a low-dimensional chaotic attractor.

Third, we investigate the angles between the CLVs. We assess whether the ESNs learn not only the long-term statistics of these quantities, but also the distribution and fluctuations of those observables in the phase space; in other words, whether the ESNs learn the geometrical structure of the attractor and its tangent space.

Comparison of Target (left column), ESN (middle column), and their statistical mean absolute difference (right column), for a \(300\tau _\lambda \) trajectory of the Lorenz 63 system (19) in the test set, coloured by the CLV principal angles (in \(\deg \)). First row: \(\theta _{U,N}\), second row: \(\theta _{U,S}\), and third row: \(\theta _{N,S}\)

Comparison of the target (straight black line) and ESN (red dashed line) probability density functions (PDF) of the three principal angles between the covariant Lyapunov vectors, where U refers to unstable, N to neutral and S to stable CLVs. The top row (a–c) is for Lorenz 63 (19) and the bottom row (d–f) for Rössler (20). All y-axes are in logarithmic scale and the x-axis is in degrees. The shaded region indicates the error bars derived by the standard deviation. (Color figure online)

In Fig. 4, we present an analysis of the distribution of principal angles between the CLVs, \(\theta _{a,b} \in [0^{\circ },90^{\circ }]\), on the topology of the L63 attractor. The attractor is well reproduced by a selected ESN (middle column), compared to the target (left column). The size of both trajectories is \(300\tau _\lambda \). In this case, there are three principal angles between the CLVs: \(\theta _{U,N}\) is the angle between the unstable and neutral CLV; \(\theta _{U,S}\) is the angle between the unstable and stable CLV; \(\theta _{N,S}\) is the angle between the neutral and stable CLV. The colouring of the attractor is associated with the measured \(\theta _{a,b}\). The black and dark red colours identify small angles, i.e. regions of the attractor where near-tangencies between the CLVs occur. Possible tangencies between CLVs or invariant manifolds composed of CLVs (as will be discussed later for higher dimensional chaotic systems) are of significant importance, as they signify that the attractor is non-hyperbolic [10] (see Sect. 2.1). The right column is the mean absolute difference between the target and the ESN. The x, y, z domain is discretized with 50 bins in each direction; then, the mean \(\theta _{a,b}\) is calculated from each of the three-dimensional bins for the \(300\tau _\lambda \) long trajectory. Finally, the absolute difference between ESN and target is calculated for each bin. The plots follow the same colour scheme as the colourbar, with black and dark red colours indicating \(<2^\circ \) differences with a maximum of \(\sim 10^\circ \). Figure 4 shows that the ESN is able to accurately learn the dynamics of the tangent linear space of the attractor.
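The bin-wise comparison of the right column can be sketched with a three-dimensional histogram. The code below is an illustration under stated assumptions: `binned_mean` is a hypothetical helper, both trajectories are binned on the same ranges, and empty bins are marked as NaN.

```python
import numpy as np

def binned_mean(points, values, n_bins=50, ranges=None):
    """Mean of `values` inside each bin of a regular 3D grid over `points`."""
    counts, edges = np.histogramdd(points, bins=n_bins, range=ranges)
    sums, _ = np.histogramdd(points, bins=n_bins, range=ranges, weights=values)
    with np.errstate(invalid="ignore", divide="ignore"):
        mean = np.where(counts > 0, sums / counts, np.nan)  # NaN marks empty bins
    return mean, edges

def binned_abs_difference(points_a, values_a, points_b, values_b,
                          n_bins=50, ranges=None):
    """Absolute difference of the bin-wise means of two trajectories."""
    mean_a, _ = binned_mean(points_a, values_a, n_bins, ranges)
    mean_b, _ = binned_mean(points_b, values_b, n_bins, ranges)
    return np.abs(mean_a - mean_b)
```

Here `points` would hold the \((x,y,z)\) samples of a trajectory and `values` the corresponding angle \(\theta _{a,b}\) at each sample.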

In Fig. 5, we show the PDF of the principal angles between the three CLVs, for which there is agreement between target and ESN results in all cases for both L63 and Rössler, even for smaller angles. The nonzero count of events close to \(\theta \rightarrow 0\) indicates that the two considered systems are non-hyperbolic, which is consistent with the literature [58].

Fourth, we analyse the distribution on the attractor, as well as the statistics, of the Finite-time Covariant Lyapunov Exponents, for a time-lapse of \(\varDelta t = m \,\textrm{d}t\) timestep, and assess the accuracy of the trained ESNs. For the considered low-dimensional systems there are three FTCLEs with each showing the finite-time growth rate of the corresponding Covariant Lyapunov Vectors.

In Fig. 6, we visualize the distribution of the single timestep FTCLEs, in the case of the Rössler attractor, which is well reproduced by a selected ESN (middle column), compared to the target (left column). The size of both trajectories is \(300\tau _\lambda \). FTCLE 1 is the finite-time exponent for the unstable CLV, FTCLE 2 is for the neutral CLV, and FTCLE 3 is for the stable CLV. The colouring is associated with the values of the FTCLEs. Large positive FTCLEs correspond to high finite-time growth rates and, thus, reduced predictability. The distribution of the leading FTCLE on the attractor is similar between the target and ESN. The second and third FTCLEs, which correspond to the neutral and stable CLVs, respectively, also show good agreement between the two. The mean difference between the target and the ESN on the attractor is plotted in the right column, in which black identifies \(\varLambda _i^c\approx 0\). The right column shows that most of the small differences between the ESN and the target are located in the region of large variation of z.

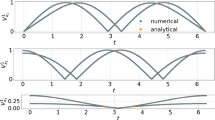

Finally, Fig. 7 shows the PDF of the three FTCLEs. There is agreement between the ESN-inferred quantities and the target in all cases, in particular in the Rössler attractor for the most-probable statistics. The small deviation in Fig. 7a for L63 corresponds to the statistics around the peak of the first FTCLE, \(\varLambda _1^c\), but the tails of the distributions are well reproduced. The mean of the \(\varLambda _i^c\) distributions coincides with the LEs \(\lambda _{i}\), which holds true for all our results. A behaviour as in Fig. 7a implies that in this case the finite-time values \(\varLambda _1^c\) are less peaked around the mean value, even though their long-time average coincides with the Lyapunov exponent \(\lambda _{1}\). Nevertheless, in Figs. 7d–f for Rössler the statistics around the peak (and beyond) are well captured.

Comparison of target (left column), ESN (middle column), and their statistical mean absolute difference (right column) for a \(300\tau _\lambda \) trajectory of the Rössler system (20) in the test set, coloured by the three FTCLEs. First row: FTCLE 1, second row: FTCLE 2, and third row: FTCLE 3

Comparison of the target (straight black line) and ESN (red dashed line) probability density functions (PDF) of the three finite-time covariant Lyapunov Exponents. The top row a–c is for Lorenz 63 (19) and the bottom row d–f for Rössler (20). All y-axes are in logarithmic scale. (Color figure online)

We refer the interested reader to our supplementary material where the corresponding results of Figs. 4 and 6 for both attractors are shown. Also, the statistics of FTLEs, as well as their distribution on the chaotic attractors, are presented in the supplementary material.

3.2 Higher-dimensional chaotic systems

We follow the same analysis and approach as in Sect. 3.1 for two higher-dimensional chaotic systems, both of which are related to atmospheric physics and meteorology. The first is a reduced-order model of atmospheric blocking events by Charney and DeVore [40] (CdV), which is a six-dimensional truncation of the equations for barotropic flow with orography. We employ the formulation of [68, 69], which is forced by a zonal flow profile that can be barotropically unstable. The governing equations are:

where the model coefficients are

Equation (22) is integrated with RK4 and \(\textrm{d}t = 0.1\). The constants are set to \((x_1^*, x_4^*,C,\beta ,\gamma ,b) = (0.95, -0.76095, 0.1, 1.25, 0.2, 0.5)\), for which the CdV model generates regime transitions [68, 69]. In particular, the CdV model allows for two metastable states, the so-called “zonal” state, which represents the approximately zonally symmetric jet stream in the mid-latitude atmosphere, and the “blocked” state, which refers to a diverse class of weather patterns that are a persistent deviation from the zonal state. Blocking events are known to be associated with regional extreme weather, from heatwaves in summer to cold spells in winter [70]. The dynamical properties of CLVs in connection to blocking events were recently investigated for a series of more complex atmospheric models than CdV [11, 12, 71], which demonstrated that CLVs are good candidates for blocking precursors, as well as a good basis for model reduction. In previous work [34], the CdV system was used as a training model for the ESN, with the purpose of studying short-term accurate prediction of chaos, and quantifying the benefit of physics-informed echo state networks.

The second higher-dimensional system that we consider is the Lorenz 96 (L96) model [41], which is a system of coupled ordinary differential equations that describes the large-scale behaviour of the mid-latitude atmosphere, and the transfer of a scalar atmospheric quantity. Three characteristic processes of atmospheric systems (advection, dissipation, and external forcing) are included in the model, whose equations are

\[\frac{\textrm{d}x_i}{\textrm{d}t} = \left( x_{i+1}-x_{i-2}\right) x_{i-1}-x_i+F, \qquad i=1,\dots ,D, \qquad (24)\]

where \({\varvec{x}}= [ x_1, x_2, \dots ,x_D ] \in {\mathbb {R}}^D\). We set periodic boundary conditions, i.e. \(x_{1}=x_{D+1}\). In our analysis, we chose \(D=20\) degrees of freedom. The external forcing is set to \(F=8\), which ensures a chaotic evolution [32]. We integrate the system with RK4 and \(\textrm{d}t = 0.01\). We perform a QR decomposition every \(m=5\) timesteps for CdV and every \(m=10\) timesteps for L96. Similar to the previous section, we generate a training set of size \(1000\tau _\lambda \) and a test set of size \(4000\tau _\lambda \).
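A compact way to generate such data is sketched below. It assumes the standard form of the Lorenz 96 equations, in which periodic indexing is handled with `np.roll`; the function names are illustrative.

```python
import numpy as np

def l96_rhs(x, forcing=8.0):
    """Standard Lorenz 96 right-hand side with periodic boundary conditions."""
    # np.roll(x, -1)[i] = x[i+1], np.roll(x, 1)[i] = x[i-1], np.roll(x, 2)[i] = x[i-2]
    return (np.roll(x, -1) - np.roll(x, 2)) * np.roll(x, 1) - x + forcing

def rk4_step(rhs, x, dt):
    """One explicit fourth-order Runge-Kutta step."""
    k1 = rhs(x)
    k2 = rhs(x + 0.5 * dt * k1)
    k3 = rhs(x + 0.5 * dt * k2)
    k4 = rhs(x + dt * k3)
    return x + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def integrate_l96(x0, dt=0.01, n_steps=1000):
    """Return the trajectory (n_steps + 1, D) starting from x0."""
    traj = np.empty((n_steps + 1, len(x0)))
    traj[0] = x0
    for n in range(n_steps):
        traj[n + 1] = rk4_step(l96_rhs, traj[n], dt)
    return traj
```

The uniform state \(x_i=F\) is a fixed point of the equations, so trajectories are started from a small perturbation of it (or from any other non-uniform state).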

First, Fig. 8 shows the PDF of the six degrees of freedom of CdV, and the first six from L96 (the PDFs of the remaining 14 degrees of freedom have similar shape and agreement between ESN and target). We use a semilogarithmic scale to emphasize that the agreement between target (black line) and ESN (red dashed line) is accurate for the tails of the distributions, which effectively correspond to the edges of each attractor. As in Sect. 3.1, in order to evaluate uncertainty and robustness, we start with \(N_{\textrm{ESN}}=10\) trained networks, but during post-processing we discard any network that shows spurious temporal evolution, and perform a further averaging of the PDFs of each network’s observable. Therefore, the PDFs of Fig. 8 are the outcome of averaging \(N_{\textrm{ESN}}=5\) and \(N_{\textrm{ESN}}=9\) PDFs with the same binning, for CdV and L96, respectively.

Second, Fig. 9 shows the Lyapunov exponents spectrum of (a) CdV and (b) L96 for \(D=20\) and compares the target (black squares) with the ESN prediction (red circles). The CdV model has a single positive Lyapunov exponent, with the average value of 5 ESNs resulting in \(\lambda _1 = 0.0214\), and for the 5 independent target sets, \(\lambda ^{\textrm{targ}}_1 = 0.0232\) with an \(8\%\) absolute error. The second Lyapunov exponent is zero (to numerical error) and corresponds to the neutral direction, with \(\lambda _2 = -3\times 10^{-5}\) for ESN, and \(\lambda ^{\textrm{targ}}_2 = -7\times 10^{-6}\) for the target. The low order of magnitude achieved by the ESN assures its ability to capture the neutral exponent. Finally, the four remaining negative exponents are well learned by the ESN, i.e. \(\lambda _{3-6} =[-0.077\), \(-0.103\), \(-0.224\), \(-0.234]\) and \(\lambda ^{\textrm{targ}}_{3-6} =[-0.079\), \(-0.101\), \(-0.218\), \(-0.226]\) for the target. Overall, excluding \(\lambda _2\), the mean absolute error of the CdV Lyapunov spectrum here is 3.7%, which is negligibly small.
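The quoted 3.7% can be checked directly from the exponents listed above. The sketch below interprets the figure as the mean of the relative absolute errors of the five non-neutral exponents, which is an assumption about how the number was obtained.

```python
import numpy as np

# CdV exponents quoted in the text, excluding the neutral exponent lambda_2
lambda_esn = np.array([0.0214, -0.077, -0.103, -0.224, -0.234])
lambda_target = np.array([0.0232, -0.079, -0.101, -0.218, -0.226])

relative_error = np.abs(lambda_esn - lambda_target) / np.abs(lambda_target)
mean_error_percent = 100.0 * relative_error.mean()
```

With these values the leading exponent contributes a relative error of about 7.8%, and the mean over the five exponents is about 3.7%.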

With respect to the L96 Lyapunov spectra in Fig. 9b, the agreement between target and ESN across all 20 exponents is good. In particular, there are 6 positive, 1 zero and 13 negative exponents. The maximal exponent predicted from the ensemble of \(N_{\textrm{ESN}}=9\) ESNs is equal to \(\lambda _1 = 1.551\), and for the 9 independent target sets, \(\lambda ^{\textrm{targ}}_1 = 1.557\), meaning a \(0.4\%\) absolute error. The rest of the positive exponents are well captured by the ESN, with \(\lambda _{2-6} =[1.221\), 0.936, 0.668, 0.416, 0.151] and \(\lambda ^{\textrm{targ}}_{2-6} =[1.217\), 0.937, 0.673, 0.413, 0.152] for the target. The zero exponent is sufficiently small with \(\lambda _7 = -10^{-4}\) for ESN, and \(\lambda ^{\textrm{targ}}_7 = 4\times 10^{-4}\) for target. Albeit more difficult to predict because of large numerical dissipation, the negative Lyapunov exponents are accurately learned by the ESN, with the smallest ones reading \(\lambda _{15-20} =[-1.84\), \(-2.22\), \(-2.71\), \(-3.45\), \(-4.24\), \(-4.73]\) and accordingly \(\lambda ^{\textrm{targ}}_{15-20} =[-1.85\), \(-2.21\), \(-2.71\), \(-3.45\), \(-4.25\), \(-4.75]\) for the target. Those directions in tangent space decay exponentially fast and the accuracy that the ESN achieves is consistent. For L96 the mean absolute error of the Lyapunov spectrum is approximately 0.5%.

Comparison of the target (straight black line) and ESN (red dashed line) PDF of the three minimum principal angles between the three subspaces composed by the CLVs, where U refers to unstable, N to neutral and S to stable CLVs. The top row a–c is for Charney–DeVore (22) and the bottom row d–f for Lorenz 96 (24) at \(D=20\). Both x and y axes are in logarithmic scale and the x-axis is in degrees. Only in panel a was a logarithmic binning used, which is denser close to \(\theta _{U,N}\rightarrow 0\), while the PDFs in b–f are linearly binned in the x-axis. (Color figure online)

Comparison of the target (straight black line) and ESN (red dashed line) PDF of six finite-time covariant Lyapunov exponents (\(\varLambda ^c_i\)) for a Charney–DeVore (22) for which \(\lambda _1>0\), \(\lambda _2=0\) and the rest are \(\lambda _i<0\), and b Lorenz 96 (24) at \(D=20\), where for \(i=1,2,3\) \(\lambda _i>0\), for \(i=7\) \(\lambda _i=0\), and for \(i=11,17\) \(\lambda _i<0\). All y-axes are in logarithmic scale. (Color figure online)

To further elaborate, the L96 is known to be an extensive system [72, 73], which means that quantities such as the surface width, the entropy and the attractor dimension scale linearly with its dimensionality D. For the Lyapunov spectrum, this means that the proportion of positive to negative exponents is roughly the same (\(\approx 1/2\)) as D changes. For this reason, our chosen \(D=20\) is sufficient for our purposes.

Third, we investigate the statistics of the principal angles, \(\theta \in [0^{\circ },90^{\circ }]\), between the three subspaces that partition the invariant manifolds, which are the unstable \({\varvec{E}}^U_{\varvec{x}}\), neutral \({\varvec{E}}^N_{\varvec{x}}\) and stable \({\varvec{E}}^S_{\varvec{x}}\), spanned by the corresponding CLVs. The extraction of the principal angles between two linear subspaces requires a singular value decomposition of their matrix product \({\varvec{\varGamma }}^{a,b} = {\varvec{E}}^a_{\varvec{x}}{\varvec{E}}^b_{\varvec{x}}\) (assuming the CLVs are ordered as stacked columns, according to their Lyapunov exponent order), because all paired products between the CLVs spanning the subspaces do not provide all the angles [10, 74]. The angles are given by

and we analyse the smallest singular value. Here, we use the routine scipy.linalg.subspace_angles of the SciPy package [57] in Python and analyse the minimum angle in order to track homoclinic tangencies between the subspaces. This implementation is based on the algorithm presented in [75], which has improved accuracy with respect to Eq. (25) in the estimation of small angles.
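To make the SVD-based definition concrete, here is a NumPy-only sketch of the minimum principal angle between two subspaces. It is not the SciPy routine used in this work: the function name is hypothetical, the bases are first orthonormalized with a QR decomposition (CLVs are in general not orthogonal), and the plain arccosine is less accurate for small angles than the algorithm of [75].

```python
import numpy as np

def min_principal_angle_deg(A, B):
    """Smallest principal angle (degrees) between span(A) and span(B).

    A and B hold the spanning vectors (e.g. CLVs) as columns.
    """
    Qa, _ = np.linalg.qr(A)                       # orthonormal basis of span(A)
    Qb, _ = np.linalg.qr(B)                       # orthonormal basis of span(B)
    cosines = np.linalg.svd(Qa.T @ Qb, compute_uv=False)
    cosines = np.clip(cosines, -1.0, 1.0)         # guard against round-off
    return float(np.degrees(np.arccos(cosines.max())))
```

The smallest angle corresponds to the largest cosine, which is why the maximum of the singular values of the orthonormalized product is taken.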

In Fig. 10, we study the PDFs of the three principal angles between the linear subspaces for CdV and L96. In CdV, the unstable and neutral subspaces are spanned only by the corresponding CLVs, while the stable subspace is spanned by the remaining four CLVs, for which \(\lambda _i<0\). In L96 with \(D=20\), the unstable subspace is spanned by the first six CLVs, the neutral subspace is spanned only by the 7th CLV, and the stable subspace is spanned by the remaining 13 CLVs. Focusing on Fig. 10a–c for CdV, we notice that this system is non-hyperbolic because the PDFs are populated close to \(\theta \rightarrow 0\). Specifically for Fig. 10a, the binning is geometrically spaced and denser close to \(\theta \rightarrow 0\). Interestingly, the PDF of \(\theta _{U,N}\) of CdV for small angles follows a power-law \(PDF(\theta ) \sim \theta ^{-\alpha } \) for \(\theta \rightarrow 0\) and until \(\approx 10^\circ \), before it saturates. A different shape that is still highly non-hyperbolic is shown for the PDFs of \(\theta _{U,S}\) and \(\theta _{N,S}\) in Fig. 10b and c, in which the binning is linear and both axes are in logarithmic scale. Figure 10d–f shows the same statistics in the case of L96, which is also non-hyperbolic, as there is a high frequency of near-tangencies, \(\theta \rightarrow 0\). In all plots of Fig. 10, the agreement of the subspace angle statistics between target and ESN is good, which demonstrates that the ESN has achieved a robust and accurate learning of the ergodic properties from higher-dimensional data.

Fourth, the statistics of FTCLEs (\(\varLambda ^c_i\)), for a time-lapse of \(\varDelta t = m\, \textrm{d}t\) timesteps, in the cases of CdV and L96 are shown in Fig. 11. All six \(\varLambda ^c_i\) are shown for CdV, while a representative set of six \(\varLambda ^c_i\) are shown for L96, such that \(\lambda _i>0\) for \(i=1,2,3\), \(\lambda _i=0\) for \(i=7\), and \(\lambda _i<0\) for \(i=11, 17\). For CdV, the most probable statistics are well captured by the ESN, which is in agreement with the target data. There are slight deviations at the tails of the distributions, which are still in agreement within error bars (shaded region). In the case of L96, the agreement is good for both the most probable statistics and the tails, for all FTCLEs (also those not shown). The first moment of the distributions, i.e. the mean of the FTCLEs time series, must be equal to the Lyapunov exponents, \(\lambda _i=\frac{1}{T}\int _{0}^{T}\varLambda ^c_i\,\textrm{d}t\), which indeed holds for all the cases considered here. The agreement between ESN and target sets in Fig. 11 shows that the ESN is able to accurately learn the finite-time variability of the CLV growth rates also for higher dimensional systems that are characterized by many Lyapunov exponents.

Finally, in Table 3 we show the estimated Kaplan–Yorke dimension [76] for all the considered systems and compare the outcomes of the ESN and target. This dimension is an upper bound of the attractor’s fractal dimension [2], which is defined as

\[D_{KY} = k + \frac{\sum _{i=1}^{k}\lambda _i}{|\lambda _{k+1}|},\]

where k is such that the sum of the first k LEs is positive and the sum of the first \(k+1\) LEs is negative. We observe a good agreement in all cases with \(\le 1\%\) error. This observation further confirms the ability of the ESN to accurately learn the properties of the chaotic attractor.
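The definition of \(k\) above translates into a short routine. The sketch below uses the standard Kaplan–Yorke formula, \(k\) plus the sum of the first \(k\) exponents divided by \(|\lambda _{k+1}|\); the function name is an assumption, and the example uses the CdV target exponents quoted in Sect. 3.2 rather than the entries of Table 3.

```python
import numpy as np

def kaplan_yorke_dimension(lyapunov_exponents):
    """Kaplan-Yorke dimension from a Lyapunov spectrum."""
    lam = np.sort(np.asarray(lyapunov_exponents, dtype=float))[::-1]
    partial_sums = np.cumsum(lam)
    if partial_sums[0] < 0:          # no expanding direction
        return 0.0
    if partial_sums[-1] >= 0:        # the sum never becomes negative
        return float(len(lam))
    # k: number of leading exponents whose sum is still non-negative
    k = int(np.max(np.nonzero(partial_sums >= 0)[0])) + 1
    return k + partial_sums[k - 1] / abs(lam[k])

# CdV target exponents as quoted in the text
cdv_target = [0.0232, -7e-6, -0.079, -0.101, -0.218, -0.226]
d_ky = kaplan_yorke_dimension(cdv_target)
```

For these exponents \(k=2\), and the fractional part is the ratio of the sum of the first two exponents to \(|\lambda _3|\).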

4 Conclusion

Stability analysis is a principled mathematical tool to quantitatively answer key questions on the behaviour of nonlinear systems: Will infinitesimal perturbations grow in time (i.e. is the system linearly unstable)? If so, what are the perturbations’ growth rates (i.e. how linearly unstable is the system)? What are the directions of growth? To answer these questions, traditionally, we linearize the equations of the dynamical system around a reference point, and compute the properties of the tangent space, the dynamics of which is governed by the Jacobian. The overarching goal of this paper is to propose a method that infers the stability properties directly from data, which does not rely on the knowledge of the dynamical differential equations. We tackle chaotic systems, which have a linearized behaviour that is more general and intricate than periodic or quasi-periodic oscillations. First, we propose the echo state network with the recycle validation as a tool to accurately learn the chaotic dynamics from data. The data are provided by the integration of low- and higher- dimensional prototypical chaotic dynamical systems. These systems are qualitatively different from each other and are toy models that describe diverse physical settings, ranging from climatology and meteorology to chemistry. Second, we mathematically derive the Jacobian of the echo state network (Eq. (18)). In contrast to other recurrent neural networks, such as long short-term memory networks or gated recurrent units, the Jacobian of the ESN is mathematically simple and computationally straightforward. Third, we analyse the stability properties inferred from the ESN and compare them with the target properties (ground truth) obtained by linearizing the equations. 
The ESN correctly infers quantities that characterize the chaotic dynamics and its tangent space: (i) the long-term statistics of the solution, for which we compute the probability density function of each state variable; (ii) the covariant Lyapunov vectors, which are a physical basis for the tangent space that is covariant with the dynamics; (iii) the Lyapunov spectrum, which is the set of eigenvalues of the Oseledets matrix that are the perturbations’ average exponential growths; (iv) the finite-time Lyapunov exponents, which are the finite-time growth along the covariant Lyapunov vectors; and (v) the angles between the stable, neutral, and unstable splittings of the tangent space, which inform about the degree of hyperbolicity of the attractor. We show that these quantities can be accurately learned from data by the ESN, with negligible numerical errors.

As mathematically and numerically shown in [44], the stability properties of fixed points (with eigenvalue analysis) and periodic solutions (with Floquet analysis) can be inferred from covariant Lyapunov analysis. Therefore, this work opens up new opportunities for the inference of stability properties from data in nonlinear systems, from simple fixed points, through periodic oscillations, to chaos.

Data availability

The implementation of the ESN follows [36] and the code can be found in the github repository https://github.com/gmargazo/ESN-CLVs.git.

Notes

The Oseledets’ theorem [6, 7, 10] establishes the existence of Lyapunov exponents (LEs) for a generic set of orbits under fairly general assumptions. In particular, the Oseledets’ theorem enables the extension of Lyapunov stability analysis to any trajectory of a dynamical system defined on a Riemannian manifold of dimension N and equipped with a suitable metric, including fixed points and periodic orbits.

References

Lorenz, E.N.: Deterministic nonperiodic flow. J. Atmos. Sci. 20(2), 130 (1963). https://doi.org/10.1175/1520-0469(1963)020<0130:DNF>2.0.CO;2

Ott, E.: Chaos in Dynamical Systems, 2nd edn. Cambridge University Press, Cambridge (2002). https://doi.org/10.1017/CBO9780511803260

Papaphilippou, Y.: Detecting chaos in particle accelerators through the frequency map analysis method. Chaos Interdiscip. J. Nonlinear Sci. 24(2), 024412 (2014). https://doi.org/10.1063/1.4884495

Strogatz, S.H.: Nonlinear Dynamics and Chaos: with Applications to Physics, Biology, Chemistry, and Engineering. CRC Press, Boca Raton (2015). https://doi.org/10.1201/9780429492563

Ruelle, D.: Measures describing a turbulent flow. Ann. N. Y. Acad. Sci. 357(1), 1 (1980). https://doi.org/10.1111/j.1749-6632.1980.tb29669.x

Eckmann, J.P., Ruelle, D.: Ergodic theory of chaos and strange attractors. Rev. Mod. Phys. 57, 617 (1985). https://doi.org/10.1103/RevModPhys.57.617

Oseledets, V.I.: A multiplicative ergodic theorem. Characteristic Ljapunov exponents of dynamical systems. Trudy Mosk. Mat. Obshchestva 19, 179 (1968)

Benettin, G., Galgani, L., Giorgilli, A., Strelcyn, J.M.: Lyapunov characteristic exponents for smooth dynamical systems and for Hamiltonian systems; a method for computing all of them. Part 1: Theory. Meccanica 15(1), 9 (1980). https://doi.org/10.1007/BF02128236

Shimada, I., Nagashima, T.: A numerical approach to ergodic problem of dissipative dynamical systems. Progress Theoret. Phys. 61(6), 1605 (1979). https://doi.org/10.1143/PTP.61.1605

Ginelli, F., Chaté, H., Livi, R., Politi, A.: Covariant Lyapunov vectors. J. Phys. A Math. Theor. 46(25), 254005 (2013). https://doi.org/10.1088/1751-8113/46/25/254005

Schubert, S., Lucarini, V.: Covariant Lyapunov vectors of a quasi-geostrophic baroclinic model: analysis of instabilities and feedbacks. Quart. J. R. Meteorol. Soc. 141(693), 3040 (2015). https://doi.org/10.1002/qj.2588

Vannitsem, S., Lucarini, V.: Statistical and dynamical properties of covariant Lyapunov vectors in a coupled atmosphere-ocean model–multiscale effects, geometric degeneracy, and error dynamics. J. Phys. A Math. Theor. 49(22), 224001 (2016). https://doi.org/10.1088/1751-8113/49/22/224001

Sharafi, N., Timme, M., Hallerberg, S.: Critical transitions and perturbation growth directions. Phys. Rev. E 96, 032220 (2017). https://doi.org/10.1103/PhysRevE.96.032220

Brugnago, E.L., Gallas, J.A.C., Beims, M.W.: Machine learning, alignment of covariant Lyapunov vectors, and predictability in Rikitake’s geomagnetic dynamo model. Chaos Interdiscip. J. Nonlinear Sci. 30(8), 083106 (2020). https://doi.org/10.1063/5.0009765

Wolf, A., Swift, J.B., Swinney, H.L., Vastano, J.A.: Determining Lyapunov exponents from a time series. Phys. D Nonlinear Phenom. 16(3), 285 (1985). https://doi.org/10.1016/0167-2789(85)90011-9

Rosenstein, M.T., Collins, J.J., De Luca, C.J.: A practical method for calculating largest Lyapunov exponents from small data sets. Phys. D Nonlinear Phenom. 65(1), 117 (1993). https://doi.org/10.1016/0167-2789(93)90009-P

Takens, F.: Detecting strange attractors in turbulence. In: Rand, D., Young, L.S. (eds.) Dynamical Systems and Turbulence, Warwick 1980, pp. 366–381. Springer, Berlin (1981). https://doi.org/10.1007/BFb0091924

Rasp, S., Pritchard, M.S., Gentine, P.: Deep learning to represent subgrid processes in climate models. Proc. Natl. Acad. Sci. 115(39), 9684 (2018). https://doi.org/10.1073/pnas.1810286115

Dueben, P.D., Bauer, P.: Challenges and design choices for global weather and climate models based on machine learning. Geosci. Model Dev. 11(10), 3999 (2018). https://doi.org/10.5194/gmd-11-3999-2018

Margazoglou, G., Grafke, T., Laio, A., Lucarini, V.: Dynamical landscape and multistability of a climate model. Proc. R. Soc. A Math. Phys. Eng. Sci. 477(2250), 20210019 (2021). https://doi.org/10.1098/rspa.2021.0019

Verma, S., Novati, G., Koumoutsakos, P.: Efficient collective swimming by harnessing vortices through deep reinforcement learning. Proc. Natl. Acad. Sci. 115(23), 5849 (2018). https://doi.org/10.1073/pnas.1800923115

Kochkov, D., Smith, J.A., Alieva, A., Wang, Q., Brenner, M.P., Hoyer, S.: Machine learning-accelerated computational fluid dynamics. Proc. Natl. Acad. Sci. 118(21), e2101784118 (2021). https://doi.org/10.1073/pnas.2101784118

Huhn, F., Magri, L.: Gradient-free optimization of chaotic acoustics with reservoir computing. Phys. Rev. Fluids 7, 014402 (2022). https://doi.org/10.1103/PhysRevFluids.7.014402

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge (2016). http://www.deeplearningbook.org

Schäfer, A.M., Zimmermann, H.G.: Recurrent Neural Networks Are Universal Approximators. In: Kollias, S.D., Stafylopatis, A., Duch, W., Oja, E. (eds.) Artificial Neural Networks-ICANN 2006, pp. 632–640. Springer, Berlin (2006)

Grigoryeva, L., Ortega, J.P.: Echo state networks are universal. Neural Netw. 108, 495 (2018). https://doi.org/10.1016/j.neunet.2018.08.025

Chen, R.T.Q., Rubanova, Y., Bettencourt, J., Duvenaud, D.: Neural ordinary differential equations. In: Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 31. Curran Associates, Inc. (2018). https://proceedings.neurips.cc/paper/2018/file/69386f6bb1dfed68692a24c8686939b9-Paper.pdf

Lu, Z., Hunt, B.R., Ott, E.: Attractor reconstruction by machine learning. Chaos Interdiscip. J. Nonlinear Sci. 28(6), 061104 (2018). https://doi.org/10.1063/1.5039508

Pathak, J., Lu, Z., Hunt, B.R., Girvan, M., Ott, E.: Using machine learning to replicate chaotic attractors and calculate Lyapunov exponents from data. Chaos Interdiscip. J. Nonlinear Sci. 27(12), 121102 (2017). https://doi.org/10.1063/1.5010300

Pathak, J., Hunt, B., Girvan, M., Lu, Z., Ott, E.: Model-free prediction of large spatiotemporally chaotic systems from data: A reservoir computing approach. Phys. Rev. Lett. 120, 024102 (2018). https://doi.org/10.1103/PhysRevLett.120.024102

Vlachas, P.R., Byeon, W., Wan, Z.Y., Sapsis, T.P., Koumoutsakos, P.: Data-driven forecasting of high-dimensional chaotic systems with long short-term memory networks. Proc. R. Soc. A Math. Phys. Eng. Sci. 474(2213), 20170844 (2018). https://doi.org/10.1098/rspa.2017.0844

Vlachas, P., Pathak, J., Hunt, B., Sapsis, T., Girvan, M., Ott, E., Koumoutsakos, P.: Backpropagation algorithms and Reservoir Computing in Recurrent Neural Networks for the forecasting of complex spatiotemporal dynamics. Neural Netw. 126, 191 (2020). https://doi.org/10.1016/j.neunet.2020.02.016

Borra, F., Vulpiani, A., Cencini, M.: Effective models and predictability of chaotic multiscale systems via machine learning. Phys. Rev. E 102, 052203 (2020). https://doi.org/10.1103/PhysRevE.102.052203

Doan, N., Polifke, W., Magri, L.: Physics-informed echo state networks. J. Comput. Sci. 47, 101237 (2020). https://doi.org/10.1016/j.jocs.2020.101237

Doan, N.A.K., Polifke, W., Magri, L.: Short- and long-term predictions of chaotic flows and extreme events: a physics-constrained reservoir computing approach. Proc. R. Soc. A Math. Phys. Eng. Sci. 477(2253), 20210135 (2021). https://doi.org/10.1098/rspa.2021.0135

Racca, A., Magri, L.: Robust optimization and validation of echo state networks for learning chaotic dynamics. Neural Netw. 142, 252 (2021). https://doi.org/10.1016/j.neunet.2021.05.004

Kuramoto, Y.: Diffusion-induced chaos in reaction systems. Prog. Theor. Phys. Suppl. 64, 346 (1978). https://doi.org/10.1143/PTPS.64.346

Lukoševičius, M.: A Practical Guide to Applying Echo State Networks, pp. 659–686. Springer, Berlin (2012). https://doi.org/10.1007/978-3-642-35289-8_36

Rössler, O.: An equation for continuous chaos. Phys. Lett. A 57(5), 397 (1976). https://doi.org/10.1016/0375-9601(76)90101-8

Charney, J.G., DeVore, J.G.: Multiple flow equilibria in the atmosphere and blocking. J. Atmos. Sci. 36(7), 1205 (1979). https://journals.ametsoc.org/view/journals/atsc/36/7/1520-0469_1979_036_1205_mfeita_2_0_co_2.xml?tab_body=pdf

Lorenz, E.: Predictability: a problem partly solved. In: Seminar on Predictability, 4-8 September 1995, vol. 1, pp. 1–18. ECMWF, Shinfield Park, Reading (1995). https://www.ecmwf.int/node/10829

Ginelli, F., Poggi, P., Turchi, A., Chaté, H., Livi, R., Politi, A.: Characterizing dynamics with covariant Lyapunov vectors. Phys. Rev. Lett. 99, 130601 (2007). https://doi.org/10.1103/PhysRevLett.99.130601

Boffetta, G., Cencini, M., Falcioni, M., Vulpiani, A.: Predictability: a way to characterize complexity. Phys. Rep. 356(6), 367 (2002). https://doi.org/10.1016/S0370-1573(01)00025-4

Huhn, F., Magri, L.: Stability, sensitivity and optimisation of chaotic acoustic oscillations. J. Fluid Mech. 882, A24 (2020). https://doi.org/10.1017/jfm.2019.828

Ruelle, D.: Differentiation of SRB states. Commun. Math. Phys. 187(1), 227 (1997). https://doi.org/10.1007/s002200050134

Lucarini, V., Blender, R., Herbert, C., Ragone, F., Pascale, S., Wouters, J.: Mathematical and physical ideas for climate science. Rev. Geophys. 52(4), 809 (2014). https://doi.org/10.1002/2013RG000446

Blonigan, P.J., Fernandez, P., Murman, S.M., Wang, Q., Rigas, G., Magri, L.: Toward a chaotic adjoint for LES. Center Turbul. Res. Proc. Summer Program (2017). https://doi.org/10.17863/CAM.35422

Wormell, C.L.: Non-hyperbolicity at large scales of a high-dimensional chaotic system. Proc. R. Soc. A Math. Phys. Eng. Sci. 478(2261), 20210808 (2022). https://doi.org/10.1098/rspa.2021.0808

Kuznetsov, S.P.: Possible Occurrence of Hyperbolic Attractors, pp. 35–56. Springer, Berlin (2012). https://doi.org/10.1007/978-3-642-23666-2_2

Gallavotti, G., Cohen, E.G.D.: Dynamical ensembles in nonequilibrium statistical mechanics. Phys. Rev. Lett. 74, 2694 (1995). https://doi.org/10.1103/PhysRevLett.74.2694

Gallavotti, G., Cohen, E.G.D.: Dynamical ensembles in stationary states. J. Stat. Phys. 80(5), 931 (1995)

Lebowitz, J.L., Spohn, H.: A Gallavotti-Cohen-type symmetry in the large deviation functional for stochastic dynamics. J. Stat. Phys. 95(1), 333 (1999). https://doi.org/10.1023/A:1004589714161

Lepri, S., Livi, R., Politi, A.: Energy transport in anharmonic lattices close to and far from equilibrium. Phys. D Nonlinear Phenom. 119(1), 140 (1998). https://doi.org/10.1016/S0167-2789(98)00076-1

Yang, H.L., Takeuchi, K.A., Ginelli, F., Chaté, H., Radons, G.: Hyperbolicity and the effective dimension of spatially extended dissipative systems. Phys. Rev. Lett. 102, 074102 (2009). https://doi.org/10.1103/PhysRevLett.102.074102

Viennet, A., Vercauteren, N., Engel, M., Faranda, D.: Guidelines for data-driven approaches to study transitions in multiscale systems: the case of Lyapunov vectors (2022). https://doi.org/10.48550/ARXIV.2203.10322. arXiv:2203.10322

Martin, C., Sharafi, N., Hallerberg, S.: Estimating covariant Lyapunov vectors from data. Chaos Interdiscip. J. Nonlinear Sci. 32(3), 033105 (2022). https://doi.org/10.1063/5.0078112

Virtanen, P., Gommers, R., Oliphant, T.E., Haberland, M., Reddy, T., Cournapeau, D., Burovski, E., Peterson, P., Weckesser, W., Bright, J., van der Walt, S.J., Brett, M., Wilson, J., Millman, K.J., Mayorov, N., Nelson, A.R.J., Jones, E., Kern, R., Larson, E., Carey, C.J., Polat, İ., Feng, Y., Moore, E.W., VanderPlas, J., Laxalde, D., Perktold, J., Cimrman, R., Henriksen, I., Quintero, E.A., Harris, C.R., Archibald, A.M., Ribeiro, A.H., Pedregosa, F., van Mulbregt, P., SciPy 1.0 Contributors: SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261 (2020). https://doi.org/10.1038/s41592-019-0686-2

Kuptsov, P.V., Kuznetsov, S.P.: Lyapunov analysis of strange pseudohyperbolic attractors: angles between tangent subspaces, local volume expansion and contraction. Regul. Chaotic Dyn. 23(7), 908 (2018). https://doi.org/10.1134/S1560354718070079

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735 (1997). https://doi.org/10.1162/neco.1997.9.8.1735

Cho, K., van Merriënboer, B., Gulcehre, C., Bahdanau, D., Bougares, F., Schwenk, H., Bengio, Y.: Learning phrase representations using RNN encoder–decoder for statistical machine translation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 1724–1734. Association for Computational Linguistics, Doha, Qatar (2014). https://doi.org/10.3115/v1/D14-1179. https://aclanthology.org/D14-1179

Werbos, P.: Backpropagation through time: what it does and how to do it. Proc. IEEE 78(10), 1550 (1990). https://doi.org/10.1109/5.58337

Jaeger, H., Haas, H.: Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science 304(5667), 78 (2004). https://doi.org/10.1126/science.1091277

Huhn, F., Magri, L.: Learning Ergodic Averages in Chaotic Systems. In: Krzhizhanovskaya, V.V., Závodszky, G., Lees, M.H., Dongarra, J.J., Sloot, P.M.A., Brissos, S., Teixeira, J. (eds.) Computational Science-ICCS 2020, pp. 124–132. Springer International Publishing, Cham (2020)

Racca, A., Magri, L.: Data-driven prediction and control of extreme events in a chaotic flow (2022). https://doi.org/10.48550/ARXIV.2204.11682. arXiv:2204.11682

Tikhonov, A.N., Goncharsky, A., Stepanov, V., Yagola, A.G.: Numerical methods for the solution of ill-posed problems, vol. 328. Springer Science & Business Media, London (1995). https://doi.org/10.1007/978-94-015-8480-7

Hoffman, M., Brochu, E., de Freitas, N.: Portfolio allocation for Bayesian optimization. In: Proceedings of the Twenty-Seventh Conference on Uncertainty in Artificial Intelligence, pp. 327–336. AUAI Press, Arlington, Virginia, USA, UAI’11 (2011)

Lukoševičius, M., Uselis, A.: Efficient Cross-Validation of Echo State Networks. In: Tetko, I.V., Kůrková, V., Karpov, P., Theis, F. (eds.) Artificial Neural Networks and Machine Learning - ICANN 2019: Workshop and Special Sessions, pp. 121–133. Springer International Publishing, Cham (2019)

De Swart, H.: Analysis of a six-component atmospheric spectral model: chaos, predictability and vacillation. Phys. D Nonlinear Phenom. 36(3), 222 (1989). https://doi.org/10.1016/0167-2789(89)90082-1

Crommelin, D.T., Opsteegh, J.D., Verhulst, F.: A Mechanism for Atmospheric Regime Behavior. J. Atmos. Sci. 61(12), 1406 (2004). https://doi.org/10.1175/1520-0469(2004)061<1406:AMFARB>2.0.CO;2

Woollings, T., Barriopedro, D., Methven, J., Son, S.W., Martius, O., Harvey, B., Sillmann, J., Lupo, A.R., Seneviratne, S.: Blocking and its response to climate change. Curr. Clim. Chang. Rep. 4(3), 287 (2018). https://doi.org/10.1007/s40641-018-0108-z

Schubert, S., Lucarini, V.: Dynamical analysis of blocking events: spatial and temporal fluctuations of covariant Lyapunov vectors. Q. J. R. Meteorol. Soc. 142(698), 2143 (2016). https://doi.org/10.1002/qj.2808

Ruelle, D.: Large volume limit of the distribution of characteristic exponents in turbulence. Commun. Math. Phys. 87(2), 287 (1982). https://doi.org/10.1007/BF01218566

Karimi, A., Paul, M.R.: Extensive chaos in the Lorenz-96 model. Chaos Interdiscip. J. Nonlinear Sci. 20(4), 043105 (2010). https://doi.org/10.1063/1.3496397

Kuptsov, P.V., Kuznetsov, S.P.: Violation of hyperbolicity in a diffusive medium with local hyperbolic attractor. Phys. Rev. E 80, 016205 (2009). https://doi.org/10.1103/PhysRevE.80.016205

Knyazev, A.V., Argentati, M.E.: Principal angles between subspaces in an A-based scalar product: Algorithms and perturbation estimates. SIAM J. Sci. Comput. 23(6), 2008 (2002). https://doi.org/10.1137/S1064827500377332

Kaplan, J.L., Yorke, J.A.: Chaotic behavior of multidimensional difference equations. In: Functional Differential Equations and Approximation of Fixed Points, pp. 204–227. Springer (1979)

Acknowledgements

This research has received financial support from the ERC Starting Grant No. PhyCo 949388. LM gratefully acknowledges financial support from the TUM Institute for Advanced Study (German Excellence Initiative and the EU 7th Framework Programme No. 291763). We are grateful to Alberto Racca for insightful discussions regarding the ESN. GM is also grateful to Valerio Lucarini for insightful discussions regarding dynamical systems theory.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendices

A Algorithms to compute LEs and CLVs

In this section, we present two algorithms for the computation of LEs and CLVs. Algorithm 1 calculates the first D LEs of an ESN, where \(N_r\) is the dimensionality of the hidden state and D is the dimensionality of the input state. This algorithm follows the methods described in [29, 32]. Algorithm 2 computes the first D CLVs for both the ESN and the target chaotic systems, using the approach outlined in [42]. Together, the two algorithms provide the Lyapunov spectrum and the tangent-space splitting on which the stability analysis of this paper relies.
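As an illustration of the kind of computation involved, the following is a minimal sketch of a QR-based estimate of the leading Lyapunov exponents. It is not a reproduction of Algorithm 1. The function name `lyapunov_spectrum_qr`, its arguments, and the use of the Hénon map as a stand-in system are assumptions made for this example only. For an ESN, `step` would be the reservoir update, `jacobian` would return the \(N_r\times N_r\) Jacobian of the hidden state, and `n_exponents` would be set to D.

```python
import numpy as np


def lyapunov_spectrum_qr(step, jacobian, x0, n_exponents, n_steps,
                         n_transient=1000, dt=1.0, seed=0):
    """Estimate the leading Lyapunov exponents by repeated QR decomposition.

    step(x)      -> next state (hypothetical user-supplied map)
    jacobian(x)  -> Jacobian of `step` evaluated at x
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)

    # Discard a transient so that the trajectory settles on the attractor.
    for _ in range(n_transient):
        x = step(x)

    # Random orthonormal basis of n_exponents tangent vectors.
    Q, _ = np.linalg.qr(rng.standard_normal((x.size, n_exponents)))
    log_growth = np.zeros(n_exponents)

    for _ in range(n_steps):
        # Evolve the tangent vectors with the Jacobian at the current state.
        Q = jacobian(x) @ Q
        x = step(x)
        # Re-orthonormalize; the diagonal of R holds the local growth factors.
        Q, R = np.linalg.qr(Q)
        log_growth += np.log(np.abs(np.diag(R)))

    return log_growth / (n_steps * dt)


# Stand-in system (assumption, not from the paper): the Henon map.
A, B = 1.4, 0.3


def henon_step(x):
    return np.array([1.0 - A * x[0] ** 2 + x[1], B * x[0]])


def henon_jacobian(x):
    return np.array([[-2.0 * A * x[0], 1.0], [B, 0.0]])


les = lyapunov_spectrum_qr(henon_step, henon_jacobian, x0=[0.0, 0.0],
                           n_exponents=2, n_steps=20000)
print(les)
```

A simple consistency check for this stand-in is that the two exponents sum to \(\log |\det J| = \log 0.3\), because the determinant of the Hénon Jacobian is constant.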

B Robustness

An important aspect of data-driven approaches is their ability to perform accurately under a variety of conditions. In this section, we evaluate the robustness of our approach by using smaller training sets (less data) subject to noise levels that are higher than those of Sect. 3. We also test the effect of using a loss function other than the mean square error (MSE), as defined in Eq. (16), on the accuracy of the learning. The ESN architecture follows [36], where it was trained with chaotic data from the Lorenz 63 and Lorenz 96 systems, and was robustly optimized to maximize the prediction horizon under different validation strategies.

B.1 Training with less data and higher noise intensity

It has been demonstrated that adding a small amount of zero-mean Gaussian noise, proportional to the standard deviation of the chaotic signal, during training can improve the performance of an ESN [32, 36]. Noise helps the ESN generalize to unseen data. In Sect. 3 we add Gaussian noise with zero mean and standard deviation \(\sigma _n=\delta \sigma _y\), where \(\delta =0.05\%\) and \(\sigma _y\) is the component-wise standard deviation of the data. We consider the Lorenz 96 system with \(D=10\) degrees of freedom and \(F=8\), such that the system is chaotic. We increase the noise intensity to \(\delta =\{0.5\%, 5\%, 10\%\}\). We also quantify the effect of less training data by using \(100\tau _\lambda \) and \(500\tau _\lambda \) long time series, i.e. one tenth and one half of the \(1000\tau _\lambda \) long time series that we used in Sect. 3. Figure 12 shows the effects on the Lyapunov spectrum. In Fig. 12a, where the training set is \(100\tau _\lambda \) long, there is good agreement between the positive exponents of the target (black squares) and of the ESN (coloured points). As expected, the agreement gradually deteriorates as the noise increases. In Fig. 12b, for a \(500\tau _\lambda \) long training set, the agreement is good for all exponents, with a smaller difference for the negative exponents than in Fig. 12a. After training \(N_{\textrm{ESN}}=10\) statistically independent networks with chaotic time series, some networks might eventually evolve towards a fixed point or a periodic orbit instead (i.e. they show spurious behaviour). Here, for the \(100\tau _\lambda \) long training time series, no ESN evolves spuriously at 0.05% and 0.5% noise. However, at 10% noise, half of the networks show spurious evolution and are discarded at post-processing. For the \(500\tau _\lambda \) long training time series, instead, one and two out of ten networks evolve spuriously at 0.05% and 0.5% noise, respectively, but none at 5% and 10% noise, which indicates that the network is robust.
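The noise model \(\sigma _n=\delta \sigma _y\) can be sketched as follows. This is an illustrative example under stated assumptions, not the code used for the results: the function name `add_training_noise` is hypothetical, and a synthetic two-component signal stands in for the Lorenz 96 training data.

```python
import numpy as np


def add_training_noise(y, delta, seed=0):
    """Add zero-mean Gaussian noise with std delta * sigma_y, component-wise.

    y     : array of shape (n_steps, D), the clean training time series
    delta : noise level as a fraction (e.g. 0.05% -> 5e-4)
    """
    rng = np.random.default_rng(seed)
    sigma_y = y.std(axis=0)       # standard deviation of each component
    sigma_n = delta * sigma_y     # noise standard deviation per component
    return y + rng.standard_normal(y.shape) * sigma_n


# Synthetic stand-in for the training data (assumption for this sketch).
t = np.linspace(0.0, 100.0, 20000)
y_clean = np.stack([np.sin(t), 3.0 * np.cos(0.5 * t)], axis=1)

# Noise levels considered in this appendix: 0.05%, 0.5%, 5% and 10%.
y_noisy = {delta: add_training_noise(y_clean, delta)
           for delta in (5e-4, 5e-3, 5e-2, 1e-1)}
```

Because the noise is scaled by the component-wise standard deviation, each component of the signal receives the same relative perturbation, regardless of its amplitude.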

As a further test, in Fig. 13 we consider the minimum angles between the subspaces spanned by the CLVs. In Fig. 13a–c the ESNs are trained with \(100\tau _\lambda \) long time series, and in Fig. 13d–f with \(500\tau _\lambda \) long time series. Overall, the results are in good agreement with the target, which supports the robustness of the ESN. A slight and gradual disagreement is observed as the noise intensity increases, in particular for \(\theta _{U,N}\).
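One possible way to evaluate the minimum angle between two subspaces at a single time instant is via principal angles, for instance with `scipy.linalg.subspace_angles`. The sketch below is an assumption about how such a computation could be set up, not the procedure used for Fig. 13. The helper `min_angle_deg` and the toy basis vectors are hypothetical; in practice the columns of each basis would be the CLVs spanning the unstable, neutral, or stable subspace at a given time step, and the computation would be repeated along the trajectory.

```python
import numpy as np
from scipy.linalg import subspace_angles


def min_angle_deg(basis_a, basis_b):
    """Smallest principal angle (in degrees) between two column spaces."""
    angles = subspace_angles(basis_a, basis_b)  # principal angles in radians
    return np.degrees(angles.min())


# Toy one-dimensional subspaces in R^3 (assumption for this sketch).
e_unstable = np.array([[1.0], [0.0], [0.0]])
e_neutral = np.array([[np.cos(np.pi / 6)], [np.sin(np.pi / 6)], [0.0]])
e_stable = np.array([[0.0], [0.0], [1.0]])

theta_un = min_angle_deg(e_unstable, e_neutral)  # unstable vs neutral
theta_us = min_angle_deg(e_unstable, e_stable)   # unstable vs stable
theta_ns = min_angle_deg(e_neutral, e_stable)    # neutral vs stable
print(theta_un, theta_us, theta_ns)
```

Angles that approach zero along the trajectory signal near-tangencies between the subspaces, which is why the distribution of these angles is used as a measure of the degree of hyperbolicity.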