Abstract

A crucial challenge in many areas of engineering is inferring the governing equations of a system from partial observations. To address it, a variant of the sparse identification of nonlinear dynamics (SINDy) algorithm is developed. First, the Akaike information criterion (AIC) is integrated to perform model selection by hierarchically ranking a manageable set of candidate models and choosing the most informative one. This integration avoids restricting the number of candidate models, which is a disadvantage of traditional model selection methods. Second, the structure of the dynamics is extended from ordinary differential equations (ODEs) to partial differential equations (PDEs), and group sparsity is employed to identify the nonconstant coefficients of the PDEs. Third, data processing is handled within the same framework: noise is separated from the signal and the probability distribution of the noise is parametrized. In particular, the coefficients of a class of canonical ODEs and PDEs, such as the Van der Pol, Rössler, Burgers’ and Kuramoto–Sivashinsky equations, can be identified correctly in the presence of noise. Furthermore, in addition to normal noise, the proposed approach is able to capture the distribution of uniform noise. The experimental results indicate that the approach is computationally fast and robust.

1 Introduction

Across a range of engineering fields, if the form of the governing equations is known, it is possible to analyze, forecast and control the dynamics. However, because the complexity of nonlinear dynamics makes it impossible to observe a system completely, identifying the governing equations of nonlinear dynamics has become a challenging research task. Although models have traditionally been derived from first principles [1], recent advances in machine learning have shifted attention toward data-driven model discovery [2], which covers a broad class of methods, including Koopman mode decomposition [3,4,5], dynamic mode decomposition (DMD) [6, 9], neural networks [7, 8, 12, 31], equation-free modeling [10], and nonlinear Laplacian spectral analysis [11]. Advancements regarding parsimonious models, which strike a balance between accuracy and complexity, are particularly notable.

In this line of research, symbolic regression [12] made it possible to identify nonlinear dynamics directly from data. More recently, sparsity-promoting techniques based on regularization have been employed to robustly find sparse representations in the space of candidate functions for nonlinear dynamics, including the least absolute shrinkage and selection operator (LASSO) [13], sequentially thresholded least squares (STLSQ) [14, 24], stagewise regression [15], basis pursuit denoising (BPDN) [16], and matching pursuits [17].

Reliable model discovery demands a rigorous validation technique. Model selection [18,19,20,21,22,23], built on Occam’s razor, filters parsimonious, explanatory and generalizable models from an unknown feature library via Pareto analysis. Furthermore, a selected model can be evaluated by the information lost between the observed data and the model-generated data via information criteria, such as the Akaike information criterion (AIC) [19] or the Bayesian information criterion (BIC) [20].

The sparse identification of nonlinear dynamics (SINDy) [24] algorithm is adopted in the context of data-driven model discovery and sparse regression. Its developments are discussed in Sect. 1.1, and the contributions of this paper are outlined in Sect. 1.2.

1.1 Overview of SINDy

Because the SINDy algorithm obtains the governing equations of nonlinear dynamics directly from partially known data, it has been extended across various engineering and science fields. It frames the identification of the governing equations as a sparse regression problem over a predefined library of nonlinear functions. Table 1 summarizes the developments of the SINDy algorithm.

As seen in Table 1, the extensions of the SINDy algorithm can be classified by modified architecture and by application. Control terms or external forcing have been introduced to augment the library of candidate nonlinear functions [25], and rational nonlinearities have been handled [26] by integrating the alternating direction method [27] to solve the implicit problem in the null space. In addition, since Lorenz-like systems [28] are exceedingly sensitive to initial conditions, dismissed terms or discontinuous points [29] may also need to be considered.

More recently, Poincaré mappings [30], intrinsic coordinates [31], constrained physical laws [32], and integral forms of the SINDy model [33] have been incorporated into the candidate function library to obtain well-selected models.

The sparse regression problem can be recast as a convex problem. Therefore, convex relaxation regularization [34, 35] has been embedded in the native SINDy framework for parameter estimation, although its robustness analysis remains inadequate. Bootstrap aggregating has been utilized to robustify the SINDy algorithm [36]. Furthermore, spike-and-slab priors, regularized horseshoe priors [37] and Bayesian inference [38] have been considered to improve robustness for limited data. Additionally, clustering has been integrated into the SINDy model to identify the turning point of infectious diseases [39, 40]. The idea underlying SINDy has been applied in a wide range of areas, such as biology [26], optics [41] and fluid dynamics [42, 43]. Zhang et al. [14] provided a proof of the convergence of SINDy, and optimization techniques have been utilized to reduce the derivative error in the original SINDy algorithm [44]. Subsequently, a Python package for SINDy was developed [45, 46].

1.2 Contributions of this work

This work proposes an improved SINDy framework that identifies the coefficients of a class of typical nonlinear dynamics together with the noise distribution. The principal contributions and innovations of this paper are as follows.

(1) A variant of SINDy is developed with the Akaike information criterion to identify governing equations directly from data.

(2) Model selection is integrated with the original SINDy framework to identify the number of nonlinear terms in the dynamics.

(3) Group sparsity is embedded in the sparse regression to learn the coefficients of PDEs, whose nonlinear dynamics are more complex.

(4) Input data may be polluted by noise or other perturbations [53]. To avoid errors in the derivative estimation, the observations are split into a noise estimate and an estimate of the clean measurements, so that the measurements are denoised and the probability distribution of the noise is determined simultaneously.

1.3 Structure of the article

The remainder of this article is organized as follows. Section 2 provides the overall framework of the proposed method. Section 3 elaborates the mathematical theory of the proposed method, including SINDy, sparse model selection with the Akaike information criterion (SINDy-AIC), and group sparsity identification. Experimental results are presented in Sect. 4. The discussion and further research are described in Sect. 5. Finally, the symbols used in this work are summarized in “Appendix A”.

2 Framework of the proposed approach

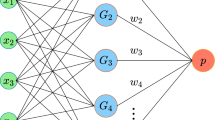

In this work, the SINDy algorithm is extended to integrate with the Akaike information criterion (AIC) and the group sparsity to identify a class of typical ODE and PDE models. Noise with normal and uniform distributions is introduced to test the robustness of the proposed approach. The framework and the flowchart of the suggested method in this paper are displayed in Figs. 1 and 2. The primary process is described as follows:

(1) Step 1: Sparse identification of nonlinear dynamics (SINDy). Time-series data extracted from several ODE systems, denoted \({\mathbf{X,{\dot{X}}}}\), are imported into the SINDy model, and a predefined library \({\mathbf{\Theta (X)}}\) is constructed on the basis of a priori physical information. Next, the sparsity threshold \(\lambda\) is used to iteratively threshold the entries \(\xi_{l}\) of the matrix \({{\varvec{\Xi}}}\) to obtain a sparse model \(\xi_{i}\).

(2) Step 2: Sparse model selection. The SINDy algorithm provides a combinatorially large number of candidate models \(Model(j)\) from the training set \({\mathbf{X}}\). The Akaike information criterion (AIC) is then incorporated into SINDy to select the optimal model \(Model(inds(1))\) by hierarchically ranking the AIC scores \(IC(j)\), grouped into different categories of support values, on the validation set \({\mathbf{Y}}\).

(3) Step 3: Sequentially thresholded group ridge regression. In the original SINDy architecture, sequentially thresholded least squares is employed to learn the sparse terms \(\xi_{l}\) associated with each candidate function library \(\theta_{l}\). Because of the complex structure of parametric PDE dynamics, group sparsity is used to learn the parameter-dependent coefficients, with function libraries and sparse vectors bundled into groups \(\Gamma\), and ridge regression is used to threshold the coefficients \(\xi_{i}\).

(4) Step 4: Automatic noise identification. The robustness of the approach should also be considered. First, the noise estimate \({\hat{\mathbf{N}}}\) is obtained by presmoothing the noisy observations \({\mathbf{U}}\), which yields the estimated clean measurements \({\hat{\mathbf{X}}}\) and their derivative \({\dot{\hat{\mathbf{X}}}}\). Then, optimization and the SINDy algorithm are used to simultaneously recognize the distribution of the noise and denoise the observations.

3 Methods

3.1 SINDy

Here, we consider dynamical systems of the form

\({\dot{\mathbf{x}}}(t) = {\mathbf{f}}({\mathbf{x}}(t))\quad (1)\)

The function f(x(t)) denotes the governing equations of the nonlinear dynamical system, and the vector \({\mathbf{x}}(t) \in {\mathbb{R}}^{n}\) indicates the state of the system at time t.

In practice, the governing equations of physical systems of interest contain only a few important terms, so the right-hand side of the equations is assumed to be sparse in the space of candidate functions. To identify the form of f from measurements, the observations x(t), collected over the time history of the system and sampled at times \(t_{1} ,t_{2} , \ldots ,t_{m}\), are arranged in the following matrix:

However, because the observed data are used to learn a model that captures the entire trajectory of the system, the number of observations should not be too small. If necessary, the data can be augmented by a coordinate transformation or matrix transformation [59]. Here, the derivative \({\dot{\mathbf{x}}}(t)\), computed by numerical approximation or total variation regularization, is used to augment the data dimension, and can be represented by

Realistically, X and \({\dot{\mathbf{X}}}\) are often contaminated with noise. Depending on the noise level, it may be necessary to filter X and \({\dot{\mathbf{X}}}\); otherwise, the sparse regression relation does not hold exactly. Total variation regularization [60] is utilized to denoise the derivative \({\dot{\mathbf{X}}}\) and counteract the differentiation error.
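As a minimal sketch of the numerical approximation of the derivative mentioned above, the following Python function applies second-order finite differences to a uniformly sampled scalar series. The function name `finite_difference` and the quadratic test signal are illustrative assumptions, not part of the original method; for noisy data a regularized scheme such as total variation regularization [60] would be preferable.

```python
def finite_difference(x, dt):
    """Approximate dx/dt for a uniformly sampled scalar series x.

    Central differences are used in the interior and second-order
    one-sided differences at the two end points.
    """
    m = len(x)
    if m < 3:
        raise ValueError("need at least three samples")
    dx = [0.0] * m
    dx[0] = (-3.0 * x[0] + 4.0 * x[1] - x[2]) / (2.0 * dt)
    dx[-1] = (3.0 * x[-1] - 4.0 * x[-2] + x[-3]) / (2.0 * dt)
    for j in range(1, m - 1):
        dx[j] = (x[j + 1] - x[j - 1]) / (2.0 * dt)
    return dx


# Hypothetical test signal x(t) = t^2, whose derivative is 2t.
dt = 0.001
t = [j * dt for j in range(1000)]
x = [tj ** 2 for tj in t]
dx = finite_difference(x, dt)
```

Because the stencil is exact for quadratics, `dx` matches `2 * t` up to rounding here; on measured data the error grows with the noise level, which is why the text resorts to filtering.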

Because the control variables that constitute the dynamics can be chosen by the user, it is reasonable to assume that they are known. Accordingly, a candidate function library can be constructed that includes all possible nonlinearities formed from combinations of these variables, including constant, polynomial and trigonometric terms. Its specific form is guided by the form of the governing equations of the nonlinear dynamics,

where \({\mathbf{X}}^{{Q_{2} }} {\mathbf{,X}}^{{Q_{3} }}\) denote higher-order polynomial terms. For instance, \({\mathbf{X}}^{{Q_{2} }}\) represents the quadratic nonlinearities in the state x, the exact form of which is shown as

At this point, Eq. (1) can be transformed into the form of Eq. (6). In this case, the left-hand side of the equation and the first factor on the right-hand side, the user-defined library, are assumed to be known, and the quantity to be obtained is the coefficient matrix that characterizes the control terms, \({{\varvec{\Xi}}} = \left[ {\xi_{1} \;\;\xi_{2} \;\; \ldots \;\;\xi_{n} } \right].\)
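The polynomial part of the candidate library \({\mathbf{\Theta (X)}}\) can be sketched as follows. This is only an illustration under stated assumptions: the helper name `polynomial_library` and the column ordering are hypothetical, and trigonometric or other terms mentioned in the text are omitted.

```python
from itertools import combinations_with_replacement
from math import prod


def polynomial_library(X, degree):
    """Evaluate all monomials of the state up to `degree` on each sample.

    X is a list of samples, each a list of n state values. Returns
    (names, Theta), where Theta[j] holds the library row for sample j.
    """
    n = len(X[0])
    index_sets = [()]  # the empty product gives the constant term
    for d in range(1, degree + 1):
        index_sets.extend(combinations_with_replacement(range(n), d))
    names = ["1" if not idx else "*".join(f"x{i + 1}" for i in idx)
             for idx in index_sets]
    Theta = [[prod(row[i] for i in idx) for idx in index_sets] for row in X]
    return names, Theta


# One sample of a two-dimensional state, quadratic library.
names, Theta = polynomial_library([[2.0, 3.0]], degree=2)
```

For two state variables and degree 2 the library has six columns (constant, two linear and three quadratic terms), which agrees with the binomial count of monomials used later for model selection.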

Simply put, the problem of obtaining the governing equations reduces to finding the sparse coefficient vectors. Symbolic regression is exploited to simplify the issue, so Eq. (6) is replaced with Eq. (7).

Here, \(\Theta ({\mathbf{x}}^{T} )\) is a vector of symbolic functions of the elements of x, in contrast to \(\Theta ({\mathbf{X}})\), which is a numerical data matrix. The final form of the equations to be solved is

where the superscript T denotes the matrix transpose.

Each column of Eq. (6) requires a separate optimization to obtain the sparse coefficient vector \(\xi_{l}\) for the lth row equation. To mitigate computational errors caused by inconsistent data scales or excessively small entries of X, the columns of \({\mathbf{\Theta (X)}}\) may be normalized. Since the coefficient matrix is presumed to be sparse in an appropriate basis, a sparse solution to an overdetermined system with noise is sought. Here, sequentially thresholded least squares [24] is used, the particular form of which is given by

where \(\lambda\) is the sparsity knob and R(·) denotes the regularization function.

Once the coefficient matrix has been acquired, the identified model through SINDy is obtained, which can also be considered a reconstruction or prediction of the ground truth system. The detailed flow is shown in Figs. 1 and 2, and Algorithm 1 outlines its pseudo-code in Table 2.
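The sequentially thresholded least-squares step described above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' Algorithm 1: the function name `stlsq`, the fixed iteration count and the synthetic linear test system are assumptions made for the example.

```python
import numpy as np


def stlsq(Theta, dX, lam, n_iter=10):
    """Sequentially thresholded least squares (sketch).

    Theta : (m, q) candidate library evaluated on the data
    dX    : (m, n) time derivatives of the n state variables
    lam   : threshold below which coefficients are set to zero
    """
    Xi = np.linalg.lstsq(Theta, dX, rcond=None)[0]  # initial dense fit
    for _ in range(n_iter):
        small = np.abs(Xi) < lam
        Xi[small] = 0.0                             # prune small coefficients
        for l in range(dX.shape[1]):                # refit each state equation
            big = ~small[:, l]
            if big.any():
                Xi[big, l] = np.linalg.lstsq(
                    Theta[:, big], dX[:, l], rcond=None)[0]
    return Xi


# Hypothetical test problem: a linear oscillator seen through a quadratic library.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
x1, x2 = X[:, 0], X[:, 1]
Theta = np.column_stack([np.ones(200), x1, x2, x1 ** 2, x1 * x2, x2 ** 2])
Xi_true = np.zeros((6, 2))
Xi_true[1, 0], Xi_true[2, 0] = -0.5, 2.0   # dx1/dt = -0.5 x1 + 2 x2
Xi_true[1, 1], Xi_true[2, 1] = -2.0, -0.5  # dx2/dt = -2 x1 - 0.5 x2
dX = Theta @ Xi_true + 1e-3 * rng.standard_normal((200, 2))
Xi = stlsq(Theta, dX, lam=0.1)
```

Each column of `Xi` is fitted separately, mirroring the statement that every state equation requires its own optimization; the threshold `lam` plays the role of \(\lambda\).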

3.2 Sparse model selection (SINDy-AIC)

For higher accuracy, the models obtained by regression analysis may be further refined in conjunction with statistical learning methods. There are many model selection methods, including the Akaike information criterion (AIC) [19, 20], Bayesian information criterion (BIC) [21], deviance information criterion (DIC) [23] and cross-validation (CV) [22].

In this paper, the AIC is chosen as the statistical score for comparing the many combinatorially possible models. Pioneered by H. Akaike [19, 20], the AIC incorporates the principles of information entropy and the Kullback–Leibler (K-L) distance [18] and builds upon maximum likelihood estimation of the parameters. Given a candidate Model i, its corresponding AIC value is

\(AIC_{i} = 2k - 2\ln (L({\mathbf{x}},\hat{\mu }))\quad (10)\)

where \(L({\mathbf{x}},\hat{\mu }) = P({\mathbf{x}}|\hat{\mu })\) denotes the conditional probability function for the state variable x given the estimate \(\hat{\mu }\), and k indicates the number of free parameters. Note that 2k is a penalty term that raises the AIC value of models with more parameters. In many cases the sampled data are finite, and a correction to the AIC value is required by

where l refers to the number of observations. In linear regression, the residual sum of squares (RSS) is generally used as the objective function of the least-squares fit, \(RSS = \sum\nolimits_{j = 1}^{l} {(y_{j} - g(x_{j} ;\mu ))^{2} }\), where \(x_{j}\) denotes the independent variable for observation, \(y_{j}\) signifies the observed dependent variable, and the candidate model is defined by \(g\). Thus, Eq. (10) can be reformulated as \(AIC = l\ln (RSS/l) + 2k\), and this value is used in Eq. (11).
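The RSS form of the AIC given above can be evaluated directly. In the sketch below, `aic_from_rss` implements \(AIC = l\ln (RSS/l) + 2k\) as stated in the text, while `aicc` uses the commonly cited small-sample correction \(2k(k + 1)/(l - k - 1)\); that correction is an assumption and may differ in detail from the form used in Eq. (11). Both function names are illustrative.

```python
import math


def aic_from_rss(rss, l, k):
    """AIC for a least-squares fit: l * ln(RSS / l) + 2k."""
    if rss <= 0 or l <= 0:
        raise ValueError("rss and l must be positive")
    return l * math.log(rss / l) + 2 * k


def aicc(aic, l, k):
    """Small-sample corrected AIC (standard form, assumed here)."""
    if l - k - 1 <= 0:
        raise ValueError("need l > k + 1 observations")
    return aic + 2.0 * k * (k + 1) / (l - k - 1)


# Hypothetical fit: 10 observations, 2 free parameters, RSS = 10.
score = aic_from_rss(rss=10.0, l=10, k=2)
corrected = aicc(score, l=10, k=2)
```

The correction term vanishes as l grows, so the two scores coincide for long time series and differ mainly for short ones.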

Because every candidate model has its own AIC value, the scores are rescaled by the minimum AIC value, \(AIC_{\min }\). In addition, the relative AICc can be linked to the statistical p-value, so that the relative AIC values \(\Delta_{i} = AIC_{i} - AIC_{\min }\) can be used directly as a strength-of-evidence criterion for comparing informative models [61]. Models with \(\Delta_{i} \le 2\) have strong support, models with \(4 \le \Delta_{i} \le 7\) have weak support, and models with \(\Delta_{i} \ge 10\) have no support.
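The ranking by relative AIC can be illustrated with a short sketch. The function name `support_levels` and the example scores are hypothetical; values of \(\Delta_{i}\) that fall between the stated ranges are labelled "unclassified" here, since the text does not assign them a category.

```python
def support_levels(aic_scores):
    """Return (delta, label) for each model, with delta = AIC_i - AIC_min."""
    aic_min = min(aic_scores)
    levels = []
    for score in aic_scores:
        delta = score - aic_min
        if delta <= 2:
            label = "strong"
        elif 4 <= delta <= 7:
            label = "weak"
        elif delta >= 10:
            label = "none"
        else:
            label = "unclassified"  # gaps between the ranges in the text
        levels.append((delta, label))
    return levels


# Hypothetical AIC scores for five candidate models.
levels = support_levels([100.0, 101.5, 105.0, 108.0, 130.0])
```

Sorting the models by `delta` gives the hierarchical ranking, and the model with `delta == 0` is the one that would be selected.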

The observations are divided into a training set and a validation set to verify the performance of this approach. The time series in each validation set have the same length and sampling frequency as the corresponding training data but different initial conditions. For each example in Sect. 4.2, to assess the noise sensitivity, Gaussian noise with mean zero and standard deviation \(\varepsilon = 1.5 \times 10^{{{ - }4}}\) is added to both the training sets and the validation sets. The sparsity threshold \(\lambda\) is optimized by cross-validation on the validation sets.

3.3 Identification of parametric PDEs with group sparsity

In this section, the partial differential equation functional identification of nonlinear dynamics (PDE-FIND) [62] is first introduced. The PDEs with constant coefficients are formulated as

where the unknown nonlinear function N(·) is assumed to be a sum of monomial basis functions \(N_{k}\) composed of the variable v and its derivatives, and the subscripts indicate partial differentiation.

For simplicity, identifying a constant-coefficient PDE can be idealized as an overdetermined linear regression problem \({\mathbf{V}}_{t} \; = \;{\mathbf{\Theta (V)\xi }}\), whose detailed formulation is

Note that the measurement matrix \({\mathbf{V}}_{t} \in {\mathbb{R}}^{m \times n}\) contains data sampled at m time instances and n spatial grid points, \({{\varvec{\Theta}}}({\mathbf{V}})\) is a matrix composed of candidate terms, and \({{\varvec{\upxi}}}\) represents the sparse vector of coefficients.

In contrast to PDE-FIND, this work learns the coefficients of PDEs whose parameters depend on either time or space. The time-varying parametric form is

Eq. (14) is similar to Eq. (12) except for the parametric term \(\mu (t)\). If the coefficients vary in space rather than time, \(\xi (x)\) replaces \(\xi (t)\).

Similar to Eq. (4), the candidate function library is constructed as

It encapsulates cross terms constituted by derivatives and parameters, where the form of the series of m equations is shown by Eq. (16).

Unlike the sparse regression problems in SINDy, the constraint that all the \(\xi^{(k)}\) share the same sparsity threshold is considered in this article. Therefore, the notion of group sparsity [63] is introduced to group sets of features in \({{\varvec{\Theta}}}(v^{(k)} )\) together. Then Eq. (13) can be transformed into the block diagonal form

Similar to Eq. (13), the set of equations represented by Eq. (16) can also be regarded as a single linear system. Introducing group sparsity into the original SINDy algorithm changes Eq. (9) to

where \(\Gamma\) denotes the groups. For m time grids and q candidate features in the library, \(\Gamma\) is defined as \(\Gamma = \left\{ {k + q \cdot i:i = {1}, \ldots ,m:k = {1}, \ldots ,q} \right\}\), and \({\mathbf{\tilde{\Theta }}}(\mathbf {V})\) indicates the block diagonal matrix built from \({\mathbf{\Theta (V)}}\).
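To make the grouping concrete, the sketch below fits one ridge regression per time block (the diagonal blocks of the block diagonal system) and then thresholds each candidate feature as a group across all blocks. It is a simplified illustration under stated assumptions: the names `group_threshold_ridge`, `lam` and `tol`, the absence of column normalization, and the synthetic data are all choices made for the example rather than details taken from the paper.

```python
import numpy as np


def _ridge_fit(Thetas, vts, active, lam):
    """Ridge fit per block, restricted to the active features."""
    Xi = np.zeros((active.size, len(Thetas)))
    if active.any():
        for i, (Th, vt) in enumerate(zip(Thetas, vts)):
            A = Th[:, active]
            gram = A.T @ A + lam * np.eye(A.shape[1])
            Xi[active, i] = np.linalg.solve(gram, A.T @ vt)
    return Xi


def group_threshold_ridge(Thetas, vts, lam, tol, n_iter=10):
    """Drop a feature in every block when its group norm is below tol."""
    active = np.ones(Thetas[0].shape[1], dtype=bool)
    for _ in range(n_iter):
        Xi = _ridge_fit(Thetas, vts, active, lam)
        keep = np.linalg.norm(Xi, axis=1) >= tol  # one norm per feature group
        if np.array_equal(keep, active):
            break
        active = keep
    return _ridge_fit(Thetas, vts, active, lam)


# Hypothetical data: m = 4 time blocks, q = 3 features, feature 0 time-varying.
rng = np.random.default_rng(1)
m, n, q = 4, 100, 3
c0 = 1.0 + 0.5 * np.sin(np.arange(m))
Thetas = [rng.standard_normal((n, q)) for _ in range(m)]
vts = [Th @ np.array([c0[i], 0.5, 0.0]) + 1e-3 * rng.standard_normal(n)
       for i, Th in enumerate(Thetas)]
Xi = group_threshold_ridge(Thetas, vts, lam=1e-5, tol=0.2)
```

Row `Xi[k]` collects the coefficient of feature k over all time blocks, so a retained row can vary in time while a discarded row is zero everywhere, which is the behaviour group sparsity is meant to enforce.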

The approach divides the measurements evenly into time steps or spatial locations over which the coefficients vary. The validation set is constructed from 20% of the sampled data, and 20 cross-validation experiments are used to find the optimal sparsity threshold. The selected models are then evaluated with the AIC-inspired loss function:

where n represents the number of rows in \({{\varvec{\Theta}}}\), k denotes the number of nonzero elements in the learned PDEs, \(\lambda_{f}\) is a threshold applied to each model to prevent over-fitting, and \(\tilde{v}_{t}\) represents \(v_{t}\) normalized so that every term has unit length.
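A loss of this kind can be sketched as follows, assuming it takes the AIC-like form \(n\ln (\left\| {\tilde{\Theta }\xi - \tilde{v}_{t} } \right\|_{2}^{2} /n + \lambda_{f} ) + 2k\). That form is an assumption consistent with the symbols described above and should be checked against Eq. (19); the function name `aic_like_loss` is illustrative.

```python
import math


def aic_like_loss(residual, k, lam_f):
    """n * ln(mean squared residual + lam_f) + 2k (assumed form).

    residual : list of entries of Theta @ xi - v_t
    k        : number of nonzero coefficients in the model
    lam_f    : small positive floor that guards against over-fitting
    """
    n = len(residual)
    mse = sum(r * r for r in residual) / n
    return n * math.log(mse + lam_f) + 2 * k


# Two hypothetical models with the same residual but different sparsity.
sparse = aic_like_loss([0.1] * 4, k=2, lam_f=1e-5)
dense = aic_like_loss([0.1] * 4, k=5, lam_f=1e-5)
```

With equal residuals the sparser model receives the lower loss, and the floor `lam_f` keeps the logarithm finite when a model fits the data almost exactly.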

3.4 Identify noise

In practice, many systems of interest are affected by adverse factors such as noise, which usually cannot be eliminated. The challenging problems in real applications are how to identify the noise distribution and how to learn types of noise other than Gaussian noise with mean zero and standard deviation \(\varepsilon = 1\).

Crucially, noise can be regarded as additional data added to the clean data [64]. Based on this notion, the observations \({\mathbf{u}}(t) = [u_{1} (t),u_{2} (t), \ldots ,u_{p} (t)] \in {\mathbb{R}}^{1 \times p}\) can be separated into the noiseless data \({\mathbf{x}}(t) = [x_{1} (t),x_{2} (t), \ldots ,x_{p} (t)] \in {\mathbb{R}}^{1 \times p}\) and the noise \({\mathbf{n}}(t) = [n_{1} (t),n_{2} (t), \ldots ,n_{p} (t)] \in {\mathbb{R}}^{1 \times p}\).

Therefore, the candidate function library can also consist of two parts: the noiseless measurements \({\mathbf{X}} = [{\mathbf{x}}(t_{1} );{\mathbf{x}}(t_{2} ); \ldots ;{\mathbf{x}}(t_{l} )] \in {\mathbb{R}}^{l \times p}\) and the noise \({\mathbf{N}} = [{\mathbf{n}}(t_{1} );{\mathbf{n}}(t_{2} ); \ldots ;{\mathbf{n}}(t_{l} )] \in {\mathbb{R}}^{l \times p}\), which is illustrated as

Throughout training, the presence of noise affects the correctness of the model identification. Therefore, it is essential to separate the signal into two parts, the noiseless observations and the noise, and to relate each snapshot to its past and future states, where q denotes the number of steps forward or backward from the current snapshot. Accordingly, the model is written in integral form:

where \({{\varvec{\Theta}}}({\mathbf{x}}(\tau )){{\varvec{\Xi}}}\) indicates the vector field of the system, and F(·) denotes the nonlinear dynamics.

The two parts are then solved separately for the sparse coefficients, and the result is composed of the sum of the distinct products. The aim is to reduce the absolute error between the estimated derivative \({\mathbf{\dot{\hat{X}}}}\) and the actual derivative \({\mathbf{\Theta ({\hat{X}})\Xi }}\), denoted \(e_{d}\), which is shown as

An additional constraint is needed to regularize Eq. (23) and couple the optimization parameters \({\hat{\mathbf{N}}}\) and \({{\varvec{\Xi}}}\). Therefore, the formulation is revised to

where \(\omega_{l}\) weights the numerical error and is defined as \(\omega_{l} = h^{\left| l \right| - 1} (0 \le h \le 1)\), and h is the decay factor, which is set to 0.9. The hat symbols denote estimated values and the subscripts indicate the time step.
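The decay weights \(\omega_{l} = h^{\left| l \right| - 1}\) are simple to tabulate. The sketch below assumes that the step index l runs over the q past and q future steps and skips l = 0, which is an assumption about the summation range; the function name `decay_weights` is illustrative.

```python
def decay_weights(q, h=0.9):
    """Return {l: h**(|l| - 1)} for l = -q, ..., -1, 1, ..., q."""
    if not 0 <= h <= 1:
        raise ValueError("h must lie in [0, 1]")
    return {l: h ** (abs(l) - 1) for l in range(-q, q + 1) if l != 0}


# Weights for three steps backward and forward with the decay factor 0.9.
weights = decay_weights(3)
```

The nearest neighbours of the current snapshot receive weight 1 and the weights shrink geometrically with distance, so errors accumulated over longer simulation horizons are penalized less.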

As the validation set is separated into noiseless and noise estimates, the loss function is naturally composed of two elements: the numerical error \(e_{d}\) and the simulation error \(e_{s}\), where \(e_{s}\) is obtained by simulating the vector field along the whole trajectory.

Since certain errors are bound to exist because of the added noise, the performance criteria advocated by Rudy et al. [65] are applied to judge the model. Specifically, these are the noise estimation error \(E_{N}\), the vector field error \(E_{f}\) and the simulated trajectory error \(E_{P}\). The relative noise estimation error is

which measures the discrepancy between the true noise \({\mathbf{n}}_{l}\) and the identified noise \({\hat{\mathbf{n}}}_{l}\) as a mean \(\ell_{2}\) error. The vector field error is

which denotes the deviation of the identified vector field \({\hat{\mathbf{f}}}({\mathbf{x}}_{l} )\) from the true vector field \({\mathbf{f}}({\mathbf{x}}_{l} )\) as a relative squared \(\ell_{2}\) error. The simulated trajectory error is

which represents the discrepancy between the true trajectory \({\mathbf{x}}_{l}\) and the forward-simulated trajectory \({\hat{\mathbf{F}}}^{l} ({\mathbf{x}}_{l} )\), where \(\left\| {\; \cdot \;} \right\|_{F}\) denotes the Frobenius norm. The whole process is shown in Figs. 1 and 2.
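The three error measures can be sketched in a few lines. The exact normalizations below are assumptions inferred from the verbal descriptions (a mean of squared \(\ell_{2}\) norms for the noise, a relative squared \(\ell_{2}\) error for the vector field, and a squared Frobenius norm of the true trajectory as the denominator of the trajectory error); the function names are illustrative.

```python
def sq_norm(v):
    """Squared l2 norm of a vector given as a list."""
    return sum(c * c for c in v)


def _sq_diff(A, B):
    """Sum of squared l2 distances between corresponding rows of A and B."""
    return sum(sq_norm([a - b for a, b in zip(ra, rb)])
               for ra, rb in zip(A, B))


def noise_error(N_true, N_hat):
    """Mean squared l2 error between true and identified noise."""
    return _sq_diff(N_true, N_hat) / len(N_true)


def vector_field_error(F_true, F_hat):
    """Relative squared l2 error of the identified vector field."""
    return _sq_diff(F_true, F_hat) / sum(sq_norm(f) for f in F_true)


def trajectory_error(X_true, X_sim):
    """Squared trajectory mismatch over the squared Frobenius norm of X_true."""
    return _sq_diff(X_true, X_sim) / sum(sq_norm(x) for x in X_true)


# Tiny hypothetical example with two time steps and two state variables.
e_n = noise_error([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 0.0]])
e_f = vector_field_error([[2.0, 0.0]], [[1.0, 0.0]])
```

All three measures are zero for a perfect identification, and the two relative errors are dimensionless, which makes them comparable across systems with different amplitudes.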

4 Results

In this section, we demonstrate the effectiveness of the above methods on several dynamical systems: the Van der Pol system, the Rössler system, the temporally dependent Burgers’ equation and the spatially dependent Kuramoto–Sivashinsky equation. Finally, a class of physical systems is used to investigate the robustness of the proposed approach.

4.1 Experiment I: Sparse identification of nonlinear dynamics

4.1.1 Van der Pol system

The Van der Pol system [66] is defined by

The initial condition is \([x_{10} ,x_{20} ] = [ - 2, - 1]\), and the training set is sampled on the interval [− 25, 25] with a sampling interval of 0.001.

Figure 3a compares the actual trajectory with the identified trajectory of the Van der Pol system in a phase diagram, showing that the learned trajectory approximates the true trajectory with extremely small error. Figure 3b separates the two-dimensional phase portrait into its two components, x and y. Similarly, the comparison between the actual and the estimated time series for each component shows that the error between them is almost zero.

4.1.2 Rössler system

The Rössler system [67] is also used to verify the performance of SINDy, which is governed by

This system is simulated with the initial condition \(\left[ {x_{10} ,x_{20} ,x_{30} } \right] = [3,5,0]\). The training set is sampled with a time step of 0.001 from t = 0 to t = 25.

Figure 3c shows the actual Rössler system, while Fig. 3d shows the Rössler system identified via SINDy. Comparing the two panels shows that SINDy accurately reconstructs the Rössler system. The identified coefficients indicate that the model error lies between 0.01% and 0.05%.

4.2 Experiment II: Sparse model selection

4.2.1 Rössler system

The Rössler system includes three state variables (m = 3) with a third-order polynomial library (p = 3). The total number of models in the library is \(N_{c} = \sum\nolimits_{i = 1}^{{N_{a} }} {\left( {\begin{array}{*{20}c} {N_{a} } \\ i \\ \end{array} } \right) = 1023}\), where \(N_{a} = \left( {\begin{array}{*{20}c} {m + p} \\ p \\ \end{array} } \right) = 10\) is the number of possible monomials. One hundred initial conditions are randomly generated to produce distinct validation sets with the same dimensions as the training sets, so that 100 cross-validation experiments are performed on the validation sets to tune the hyper-parameter \(\lambda\) and to determine the optimal model.

As shown in Fig. 4c–e, the hierarchically ranked relative AICc values yield so many candidate models that the rescaled relative AICc is used to narrow the range of candidates. The relative AICc falls dramatically at the model with 6 terms. Moreover, as shown in Fig. 4e, the 6-term model has ‘strong support’. Therefore, the model involving 6 nonlinearities is the optimal model.

4.2.2 Van der Pol oscillator

The Van der Pol system, governed by Eq. (30), is taken as the test case, with two state variables (m = 2) and a sixth-order polynomial library (p = 6). In this case, there are \(N_{a} = \left( {\begin{array}{*{20}c} {m + p} \\ p \\ \end{array} } \right) = 28\) potential monomials and \(N_{c} = \sum\nolimits_{i = 1}^{{N_{a} }} {\left( {\begin{array}{*{20}c} {N_{a} } \\ i \\ \end{array} } \right) \approx 2.68435 \times 10^{8} }\) possible models. One hundred cross-validation trials are performed on validation sets of the same dimension as the training set to rank the AICc.
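The combinatorial counts quoted for the Van der Pol library can be checked with a few lines of Python. The function names are illustrative; the formulas are the binomial expressions given in the text.

```python
from math import comb


def count_monomials(m, p):
    """Number of monomials in m variables up to total degree p."""
    return comb(m + p, p)


def count_models(n_a):
    """Number of non-empty subsets of n_a candidate monomials."""
    return sum(comb(n_a, i) for i in range(1, n_a + 1))


# Van der Pol case from the text: m = 2 state variables, p = 6.
n_a = count_monomials(2, 6)
n_c = count_models(n_a)
```

The sum of binomial coefficients equals \(2^{N_{a} } - 1\), so the number of candidate models doubles with every monomial added to the library, which is why an exhaustive comparison quickly becomes impractical.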

Only the individual trajectory for each state variable (x and y) of the Van der Pol is employed as input, as shown in Fig. 5a. SINDy is able to subselect a series of models from the candidate library, including models with 1, 2 and 4 terms, as shown in Fig. 5b. Notably, there is an abrupt drop in the value of relative AICc at the number of nonlinear terms = 4, and the support values of the selected models fall into the strongly supported range, while other models fall into the unsupported domain according to Fig. 5b. Therefore, the optimal model selected is the one containing four nonlinear terms.

Finally, the prediction error of SINDy-AIC is lower than that of SINDy for noise levels from \(10^{ - 8}\) to 0.5, as shown in Fig. 5c. Nevertheless, the larger the noise level, the greater the prediction error of SINDy-AIC, which approaches 1. The robustness of SINDy-AIC could be the focus of future research.

4.3 Experiment III: Identify the coefficients of the partial differential equations

4.3.1 Burgers’ equation

The first example is Burgers’ equation, in which the nonlinear advection term has a sinusoidally oscillating coefficient \(\omega (t)\), defined by

Data are generated on the interval [− 8, 8] via a spectral method with periodic boundary conditions, using k = 256 snapshots and l = 256 time instances that evenly segment the time scale, to construct the time-dependent parametric PDE. The library of candidate functions consists of powers of v up to cubic order multiplied by derivatives of v up to fourth order. For each snapshot, 20% of the data are set aside as a validation set. The optimal \(\lambda\), which sets the level of sparsity of the coefficients, is chosen through cross-validation.

The time series of the identified coefficient \(\tilde{\omega }(t)\) and the true coefficient \(\omega (t)\) for noiseless and noisy data are illustrated in Fig. 6c, d, respectively. The results show that the parametric coefficients are identified accurately, with small error.

4.3.2 Kuramoto–Sivashinsky equation

The Kuramoto–Sivashinsky equation is a nonlinear PDE, considered here with spatially varying coefficients

where \(\omega (x) = {{1 + \sin ({{\frac{\pi }{2}x}/L})} / 5}\), \(\xi (x) = - 1 + {{e^{{ - (x - 3)^{2} /4}} } / 5}\), \(\gamma (x) = {{ - 1 - e^{{ - (x + 3)^{2} /4}} }/ 5}\) and L = 20. The spatially dependent Kuramoto–Sivashinsky equation is solved numerically using a spectral method on the periodic domain [− 20, 20] with k = 512 snapshots and l = 512 time instances. The library consists of products of powers of v up to cubic order and derivatives of v up to fourth order.

Figure 7b–d shows the differences between the true coefficients and the learned coefficients for the noise-free and noisy measurements, respectively. Traditionally, the goodness-of-fit of the model is judged by the mean square error (MSE) \({\left\| {\tilde{\Theta }\xi - \tilde{v}_{t} } \right\|_{2}^{2} }/n\) in Eq. (19). The mean square errors in the two cases are \(3.92465 \times 10^{ - 6}\) and \(8.54312 \times 10^{ - 3}\), respectively. The method correctly determines the coefficients of the Kuramoto–Sivashinsky equation in the former case, but does not learn them accurately after the introduction of noise.

Additionally, the AIC can be employed to evaluate the discrepancy between the true coefficients and the identified coefficients, as shown in Fig. 7e. When the noise magnitude \(\varepsilon\) is less than 1, the difference between the identified model and the true model is close to 0.

In particular, a range of threshold tolerances, from \(\kappa_{\min }\) to \(\kappa_{\max }\), is initially employed to select the potential models, where \(\kappa_{\min }\) denotes the minimum tolerance that affects the sparsity of the solution of \(\left\| {{{\varvec{\Theta}}}({\mathbf{V}}_{t} ){{\varvec{\upxi}}} - {\mathbf{V}}_{t} } \right\|\), and \(\kappa_{\max }\) denotes the minimum tolerance that forces all coefficients in \({{\varvec{\upxi}}}\) to zero. The two bounds are defined as \(\kappa_{\min / \max } = \mathop {\min / \max }\limits_{h \in \Gamma } \left\| {\xi_{ridge} } \right\|_{2}\), where \(\xi_{ridge} = \left( {\tilde{\Theta }^{T} \tilde{\Theta } + \lambda {\mathbf{I}}} \right)^{ - 1} \tilde{\Theta }^{T} \tilde{v}_{t}\). The notation \(\tilde{\Theta }\) and \(\tilde{v}_{t}\) is introduced in Sect. 3.3, \(\lambda\) is the threshold, and \({\mathbf{I}}\) is the identity matrix.
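A minimal sketch of how such a tolerance range could be computed is given below. It assumes that \(\kappa_{\min }\) and \(\kappa_{\max }\) are the smallest and largest \(\ell_2\) norms of the ridge solution taken over the groups \(h \in \Gamma\); the helper names, the toy data and the grouping are hypothetical.

```python
import numpy as np

def ridge_solution(theta, v_t, lam):
    """Closed-form ridge estimate (Theta^T Theta + lam I)^{-1} Theta^T v_t."""
    n_features = theta.shape[1]
    gram = theta.T @ theta + lam * np.eye(n_features)
    return np.linalg.solve(gram, theta.T @ v_t)

def tolerance_range(xi_ridge, groups):
    """Smallest and largest l2 norm of the ridge coefficients over the groups."""
    norms = [np.linalg.norm(xi_ridge[idx]) for idx in groups]
    return min(norms), max(norms)

# Toy problem: four coefficients split into two groups of two.
rng = np.random.default_rng(0)
theta = rng.normal(size=(100, 4))
xi_true = np.array([3.0, 4.0, 0.0, 0.0])
v_t = theta @ xi_true
groups = [np.array([0, 1]), np.array([2, 3])]

xi_ridge = ridge_solution(theta, v_t, lam=1e-8)
kappa_min, kappa_max = tolerance_range(xi_ridge, groups)
```

In this toy case the active group has norm close to 5 and the inactive group has norm close to 0, so any tolerance above roughly 5 would remove every group.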

Figure 7f provides the model evaluation with the loss function from Eq. (19). From 50 values of \(\kappa\), ranging from \(10^{ - 7}\) to \(10^{ - 2}\) evenly spaced in steps of \(10^{ - 1}\), the candidate models are selected in two intervals of \(\kappa\): from \(7.03 \times 10^{ - 4}\) to \(7.98 \times 10^{ - 4}\) and from \(3.87 \times 10^{ - 3}\) to \(5.00 \times 10^{ - 3}\). Within an interval, the models corresponding to \(\kappa_{\min }\) overfit the measurements, while the models corresponding to \(\kappa_{\max }\) are too sparse to predict the coefficients. The AIC is then applied to select the optimal model for the PDEs.
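The sweep-then-rank procedure can be sketched as follows. This is only an illustration under stated assumptions: a one-shot hard threshold with refit stands in for the group-sparse regression, the AIC uses the common least-squares form \(n\ln (\mathrm{RSS}/n) + 2k\), the 50 tolerances are taken as logarithmically spaced, and the data are synthetic.

```python
import numpy as np

def threshold_fit(theta, y, kappa):
    """Least squares, drop coefficients below kappa, refit on the survivors."""
    xi = np.linalg.lstsq(theta, y, rcond=None)[0]
    keep = np.abs(xi) >= kappa
    xi_sparse = np.zeros_like(xi)
    if keep.any():
        xi_sparse[keep] = np.linalg.lstsq(theta[:, keep], y, rcond=None)[0]
    return xi_sparse

def aic(theta, y, xi):
    """AIC for Gaussian residuals: n * ln(RSS / n) + 2k, with k active terms."""
    n = y.size
    rss = float(np.sum((theta @ xi - y) ** 2))
    return n * np.log(rss / n) + 2 * np.count_nonzero(xi)

# Synthetic regression problem with two active terms out of six.
rng = np.random.default_rng(1)
theta = rng.normal(size=(200, 6))
xi_true = np.array([1.5, 0.0, -0.8, 0.0, 0.0, 0.0])
y = theta @ xi_true + 0.01 * rng.normal(size=200)

kappas = np.logspace(-7, -2, 50)      # 50 tolerances; log spacing is an assumption
candidates = {}                       # keep one model per distinct sparsity pattern
for kappa in kappas:
    xi = threshold_fit(theta, y, kappa)
    candidates.setdefault(tuple(xi != 0), xi)

scores = {support: aic(theta, y, xi) for support, xi in candidates.items()}
best_support = min(scores, key=scores.get)
best_xi = candidates[best_support]
```

Collapsing the sweep to distinct sparsity patterns keeps the number of candidate models small before the AIC ranking is applied.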

4.3.3 Comparison results

To assess the validity of the augmented SINDy with group sparsity, the structure error and the success rate are introduced. The structure error denotes the number of entries in the model that are spuriously added or removed. As seen in Fig. 8a, the indigo area indicates no discrepancy between the discovered model and the actual model, and the violin plots show the distribution of incorrect entries under cross-validation among 20 models. The augmented SINDy with group sparsity can handle 30,000 more noisy data points than SINDy-AIC.

Figure 8b compares the success rates of SINDy, SINDy-AIC and the augmented SINDy with group sparsity in discovering the coefficients of Burgers’ equation, where the success rate is the average of the results of 15 runs. The augmented SINDy requires approximately 5 times less data than SINDy-AIC, and SINDy-AIC requires approximately 2 times less data than SINDy across different percentages of measurements.
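The two metrics of this subsection can be sketched as below. The structure error is counted as the number of added and removed terms; treating a run as successful only when both counts are zero is an assumption about how the success rate is scored, and the coefficient vectors are made up for the example.

```python
import numpy as np

def structure_error(xi_identified, xi_true):
    """Count library entries wrongly added to or removed from the model."""
    found = np.asarray(xi_identified) != 0
    actual = np.asarray(xi_true) != 0
    added = int(np.count_nonzero(found & ~actual))
    removed = int(np.count_nonzero(~found & actual))
    return added, removed

def success_rate(identified_models, xi_true):
    """Fraction of runs whose sparsity pattern matches the true model exactly."""
    hits = [structure_error(xi, xi_true) == (0, 0) for xi in identified_models]
    return float(np.mean(hits))

xi_true = np.array([0.0, -1.0, 0.1, 0.0])
runs = [
    np.array([0.0, -0.98, 0.11, 0.0]),    # correct structure
    np.array([0.02, -1.01, 0.09, 0.0]),   # one spurious term
    np.array([0.0, -0.97, 0.0, 0.0]),     # one missing term
]
errors = [structure_error(xi, xi_true) for xi in runs]
rate = success_rate(runs, xi_true)
```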

4.4 Experiment IV: Noise identification

4.4.1 Van der Pol system

The Van der Pol system is taken as the first test case, and its equation is given by Eq. (30).

The system is simulated with two state variables (x and y), and the prediction step q is set to 1. It is essential to compute the Jacobians \(\partial L / \partial \hat{N}\) and \(\partial L / \partial \hat{\Xi }\) to solve Eq. (26). The optimization problem in Eq. (26) is solved with TensorFlow 2.7 and the Adam optimizer with a learning rate of 0.001. To promote the sparsity of the identified model, sequential thresholding is applied to Eq. (26) \(N_{loop}\) times.
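The sequential thresholding step alone can be illustrated with the sketch below. It is not the optimization of Eq. (26): plain least squares replaces the Adam-based solver, no noise is estimated, the derivatives are evaluated exactly from the vector field, and the standard Van der Pol form \(\dot{x} = y\), \(\dot{y} = \mu (1 - x^{2})y - x\) with \(\mu = 0.5\), the initial condition and the threshold are all assumptions.

```python
import numpy as np

MU = 0.5  # assumed damping parameter for the illustration

def van_der_pol(s):
    x, y = s
    return np.array([y, MU * (1 - x**2) * y - x])

def rk4(f, s0, dt, n_steps):
    """Classical fourth-order Runge-Kutta integration."""
    traj = np.empty((n_steps + 1, len(s0)))
    traj[0] = s0
    for i in range(n_steps):
        s = traj[i]
        k1 = f(s)
        k2 = f(s + 0.5 * dt * k1)
        k3 = f(s + 0.5 * dt * k2)
        k4 = f(s + dt * k3)
        traj[i + 1] = s + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return traj

def poly_library(X, degree=3):
    """Monomials x^a y^b with a + b <= degree, ordered by total degree."""
    x, y = X[:, 0], X[:, 1]
    cols = [x ** (t - j) * y**j for t in range(degree + 1) for j in range(t + 1)]
    return np.column_stack(cols)

def stlsq(theta, dX, threshold, n_loop=10):
    """Sequentially thresholded least squares, repeated n_loop times."""
    xi = np.linalg.lstsq(theta, dX, rcond=None)[0]
    for _ in range(n_loop):
        small = np.abs(xi) < threshold
        xi[small] = 0.0
        for j in range(dX.shape[1]):
            keep = ~small[:, j]
            if keep.any():
                xi[keep, j] = np.linalg.lstsq(theta[:, keep], dX[:, j], rcond=None)[0]
    return xi

X = rk4(van_der_pol, np.array([0.5, 0.0]), dt=0.01, n_steps=2000)
dX = np.array([van_der_pol(s) for s in X])     # exact derivatives, no noise
theta = poly_library(X)                        # columns: 1, x, y, x^2, xy, y^2, x^3, x^2 y, x y^2, y^3
xi = stlsq(theta, dX, threshold=0.1)
```

On clean data the only surviving terms are y in the first equation and x, y and x²y in the second; with measurement noise the derivative estimates degrade, which is the situation the joint noise estimation is meant to address.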

In each iteration, the initial guess is set to the optimization result \({\hat{\mathbf{N}}}\) of the preceding iteration. Additional parameters for each example, such as the noise level, the initial conditions, the number of iterations \(N_{loop}\) and the sparsity threshold \(\lambda\), are shown in Table 5.

Figure 9 shows the results of applying the proposed method to the dataset corrupted by either 15% uniform or 15% normal measurement noise. The estimated noise is close to the true measurement noise for a single simulation trajectory, as seen in the time series, the histogram and the probability density shown in Fig. 9a, b, c, e. The error metrics for each noise distribution are shown in Table 3.
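A minimal sketch of how measurements might be corrupted at a given noise percentage is shown below. It assumes that the percentage refers to the noise standard deviation relative to the standard deviation of the clean signal, and that the uniform noise is scaled to the same standard deviation; the function name and test signal are hypothetical.

```python
import numpy as np

def add_noise(X, level, kind="normal", rng=None):
    """Return (noisy data, noise) with noise std equal to level * std(X)."""
    rng = np.random.default_rng() if rng is None else rng
    sigma = level * np.std(X)
    if kind == "normal":
        noise = rng.normal(0.0, sigma, size=X.shape)
    elif kind == "uniform":
        half_width = np.sqrt(3.0) * sigma      # U(-a, a) has std a / sqrt(3)
        noise = rng.uniform(-half_width, half_width, size=X.shape)
    else:
        raise ValueError(f"unknown noise kind: {kind}")
    return X + noise, noise

t = np.linspace(0.0, 20.0, 20000)
X = np.column_stack([np.sin(t), np.cos(t)])    # stand-in for a clean trajectory
rng = np.random.default_rng(0)
X_normal, n_normal = add_noise(X, 0.15, "normal", rng)
X_uniform, n_uniform = add_noise(X, 0.15, "uniform", rng)
```

Returning the noise alongside the corrupted data makes it straightforward to compare an estimated noise sequence with the true one, for example through histograms.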

4.4.2 Rössler system

The Rössler system is taken as the final example, whose form is given by Eq. (31).

Data are generated for three state variables (\(x_{1}\), \(x_{2}\) and \(x_{3}\)), and 30% artificial noise drawn from a uniform or normal distribution is added.

As in the first case in Sect. 4.4.1, TensorFlow 2.7 and the Adam optimizer with a learning rate of 0.001 are used to learn the models. Auxiliary parameters, including the noise magnitude, the prediction step q, the initial conditions, the number of iterations \(N_{loop}\) and the sparsity threshold \(\lambda\), are listed in Table 5.

A total of 2500 time steps from t = 0 to t = 25 are generated via high-fidelity simulation, and these data are then corrupted with 30% artificial noise from a normal or uniform distribution. The error metrics of the algorithm are outlined in Table 4, which shows that the values are close to zero.

Figure 10 displays the bias in the approximation of the measurement noise for a single trajectory corrupted by 30% artificial noise, in terms of the time series, the simulation evolving onto the attractor and the probability density. In the left column, the system is contaminated by 30% normal measurement noise. The other column shows the system with 30% uniform noise against the true system.

The learned results, both the simulation trajectory and the probability density of the various noise distributions, approach the actual values with minimal error. Additionally, the discrepancies for uniform observation noise are smaller than those for 30% normal noise, and in that case the error declines gradually to approximately 0 over 6 iterations.

Finally, a range of canonical systems is used to verify the robustness of the algorithm to significant measurement noise, and their expressions are given by Eqs. (30), (31), (34), (35), (36) and (37).

The results are summarized in Fig. 11, which shows that the systems are reconstructed successfully as the noise level increases from 5 to 30%. The parameters and the performance indices for these systems are detailed in Tables 5, 6 and 7.

Because the prediction step q, the number of iterations for denoising the data and the sparsity threshold affect the results of this algorithm, exhaustive trials are used to reduce the derivative error and to identify the optimal values of these parameters, at the cost of a heavy computational burden.

5 Discussion and conclusion

The proposed approach combines the following mathematical methods: (i) identification of nonlinear dynamics via SINDy, (ii) optimal model selection through the AIC, (iii) integration of sparse regression and group sparsity for the coefficients of PDEs and (iv) automated identification of noise via data splitting.

According to the experimental results, the proposed method accurately identifies the coefficients of both the ODEs and the PDEs. Nevertheless, the following limitations should be noted.

(1) Structural analysis. Clean measurements, a proper candidate function library and an appropriate regularized sparsity threshold are regarded as the three prerequisites for the structure of the SINDy model. Clean measurements play the most important role in the framework: once noise is introduced, the terms in the identified models are bound to be incorrect regardless of the choice of the candidate function library and the sparsity threshold. Furthermore, the sparsity threshold determines the coefficient values of the identified models, and the type of the identified coefficients is defined by the potential terms in the candidate function library. In practice, noise cannot be neglected. Crucially, parameter tuning is an indispensable step in sparse regression.

(2) Model analysis. As the structure of the majority of the models in engineering or biological fields is unknown or complex, the class of terms to include in the candidate function library is uncertain, and the solution of the identified model is therefore unclear. Moreover, the threshold selection is influenced by the polynomial order of the candidate function library. As the polynomial order increases, a regularization threshold may be employed to reduce the dimensionality of the function library.

(3) Robustness analysis. There are many kinds of noise probability distributions, but only the uniform and normal distributions are showcased in this paper. Moreover, the model cannot be reconstructed when the noise follows an asymmetric probability distribution, such as the gamma or Rayleigh distribution. The method may be extended by integrating machine learning models to detect the regime of various noise probability distributions, particularly those with a nonzero mean.
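The contrast between the symmetric and asymmetric cases can be made concrete with the short sketch below, which samples the four distributions mentioned above and reports their sample mean and skewness. The distribution parameters and sample size are arbitrary choices for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000
samples = {
    "normal": rng.normal(0.0, 1.0, n),
    "uniform": rng.uniform(-1.0, 1.0, n),
    "gamma": rng.gamma(shape=2.0, scale=1.0, size=n),
    "rayleigh": rng.rayleigh(scale=1.0, size=n),
}

def skewness(z):
    """Third standardized moment of a sample."""
    c = z - z.mean()
    return float(np.mean(c**3) / np.mean(c**2) ** 1.5)

# (mean, skewness) per distribution
summary = {name: (float(z.mean()), skewness(z)) for name, z in samples.items()}
```

The normal and uniform samples have mean and skewness near zero, whereas the gamma and Rayleigh samples have a clearly positive mean and skewness, so noise of this kind shifts the measurements systematically instead of averaging out.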

In conclusion, the proposed method contributes to data-driven model identification and signal-to-noise separation. The flexibility of its architecture yields a structure for discovering the underlying dynamics of intricate systems in an interpretable way. Moreover, it could potentially be applied in brain science to study epilepsy or Alzheimer’s disease.

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Schmidt, M., Lipson, H.: Distilling free-form natural laws from experimental data. Science 324(5923), 81–85 (2009)

Brunton, S.L., Kutz, J.N.: Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control. Cambridge University Press (2019)

Budišić, M., Mohr, R., Mezić, I.: Applied Koopmanism. Chaos 22(4), 047510 (2012)

Mezić, I.: Analysis of fluid flows via spectral properties of the Koopman operator. Annu. Rev. Fluid Mech. 45, 357–378 (2013)

Klus, S., Nüske, F., Koltai, P., et al.: Data-driven model reduction and transfer operator approximation. J. Nonlinear Sci. 28(3), 985–1010 (2018)

Schmid, P.J.: Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 656, 5–28 (2010)

Raissi, M., Yazdani, A., Karniadakis, G.E.: Hidden fluid mechanics: learning velocity and pressure fields from flow visualizations. Science 367(6481), 1026–1030 (2020)

Mardt, A., Pasquali, L., Wu, H., et al.: VAMPnets for deep learning of molecular kinetics. Nat. Commun. 9(1), 1–11 (2018)

Dylewsky, D., Barajas-Solano, D., Ma, T., et al.: Stochastically forced ensemble dynamic mode decomposition for forecasting and analysis of near-periodic systems (2020). arXiv preprint arXiv:2010.04248

Kevrekidis, I.G., Gear, C.W., Hyman, J.M., et al.: Equation-free, coarse-grained multiscale computation: enabling microscopic simulators to perform system-level analysis. Commun. Math. Sci. 1(4), 715–762 (2003)

Giannakis, D., Majda, A.J.: Nonlinear Laplacian spectral analysis for time series with intermittency and low-frequency variability. Proc. Natl. Acad. Sci. 109(7), 2222–2227 (2012)

Baddoo, P.J., Herrmann, B., McKeon, B.J., et al.: Kernel learning for robust dynamic mode decomposition: linear and nonlinear disambiguation optimization (LANDO) (2021). arXiv preprint arXiv:2106.01510

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. B 58(1), 267–288 (1996)

Zhang, L., Schaeffer, H.: On the convergence of the SINDy algorithm. Multiscale Model. Simul. 17(3), 948–972 (2019)

Boninsegna, L., Nüske, F., Clementi, C.: Sparse learning of stochastic dynamical equations. J. Chem. Phys. 148(24), 241723 (2018)

Chen, S.S., Donoho, D.L., Saunders, M.A.: Atomic decomposition by basis pursuit. SIAM Rev. 43(1), 129–159 (2001)

Mallat, S.G., Zhang, Z.: Matching pursuits with time-frequency dictionaries. IEEE Trans. Signal Process. 41(12), 3397–3415 (1993)

Kullback, S., Leibler, R.A.: On information and sufficiency. Ann. Math. Stat. 22(1), 79–86 (1951)

Akaike, H.: Information theory and an extension of the maximum likelihood principle. In: Selected Papers of Hirotugu Akaike, pp. 199–213. Springer, New York (1998)

Akaike, H.: A new look at the statistical model identification. IEEE Trans. Autom. Control 19(6), 716–723 (1974)

Schwarz, G.: Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978)

Bishop, C.M., Nasrabadi, N.M.: Pattern recognition and machine learning, vol. 4, no. 4. Springer, New York (2006)

Van Der Linde, A.: DIC in variable selection. Stat. Neerl. 59(1), 45–56 (2005)

Brunton, S.L., Proctor, J.L., Kutz, J.N.: Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. 113(15), 3932–3937 (2016)

Brunton, S.L., Proctor, J.L., Kutz, J.N.: Sparse identification of nonlinear dynamics with control (SINDYc). IFAC-PapersOnLine 49(18), 710–715 (2016)

Mangan, N.M., Brunton, S.L., Proctor, J.L., et al.: Inferring biological networks by sparse identification of nonlinear dynamics. IEEE Trans. Mol. Biol. Multi-Scale Commun. 2(1), 52–63 (2016)

Qu, Q., Sun, J., Wright, J.: Finding a sparse vector in a subspace: linear sparsity using alternating directions. IEEE Trans. Inf. Theory 62(10), 5855–5880 (2016)

Lorenz, E.N.: Deterministic nonperiodic flow. J. Atmos. Sci. 20(2), 130–141 (1963)

Quade, M., Abel, M., Nathan Kutz, J., et al.: Sparse identification of nonlinear dynamics for rapid model recovery. Chaos 28(6), 063116 (2018)

Bramburger, J.J., Kutz, J.N., Brunton, S.L.: Data-driven stabilization of periodic orbits. IEEE Access 9, 43504–43521 (2021)

Champion, K., Lusch, B., Kutz, J.N., et al.: Data-driven discovery of coordinates and governing equations. Proc. Natl. Acad. Sci. 116(45), 22445–22451 (2019)

Kaptanoglu, A.A., Morgan, K.D., Hansen, C.J., et al.: Physics-constrained, low-dimensional models for magnetohydrodynamics: first-principles and data-driven approaches. Phys. Rev. E 104(1), 015206 (2021)

Wei, B.: Sparse dynamical system identification with simultaneous structural parameters and initial condition estimation (2022). arXiv preprint arXiv:2204.10472.v

Zheng, P., Askham, T., Brunton, S.L., et al.: A unified framework for sparse relaxed regularized regression: SR3. IEEE Access 7, 1404–1423 (2018)

Champion, K., Zheng, P., Aravkin, A.Y., et al.: A unified sparse optimization framework to learn parsimonious physics-informed models from data. IEEE Access 8, 169259–169271 (2020)

Fasel, U., Kutz, J.N., Brunton, B.W., et al.: Ensemble-SINDy: Robust sparse model discovery in the low-data, high-noise limit, with active learning and control (2021). arXiv preprint arXiv:2111.10992

Hirsh, S.M., Barajas-Solano, D.A., Kutz, J.N.: Sparsifying priors for Bayesian uncertainty quantification in model discovery. R. Soc. Open Sci. 9(2), 211823 (2022)

Ram, P.R.M., Römer, U., Semaan, R.: Bayesian dynamical system identification with unified sparsity priors and model uncertainty (2021). arXiv preprint arXiv:2103.05090

Mangan, N.M., Askham, T., Brunton, S.L., et al.: Model selection for hybrid dynamical systems via sparse regression. Proc. R. Soc. A 475(2223), 20180534 (2019)

Jiang, Y.X., Xiong, X., Zhang, S., et al.: Modeling and prediction of the transmission dynamics of COVID-19 based on the SINDy-LM method. Nonlinear Dyn. 105(3), 2775–2794 (2021)

Sorokina, M., Sygletos, S., Turitsyn, S.: Sparse identification for nonlinear optical communication systems: SINO method. Opt. Express 24(26), 30433–30443 (2016)

Fukami, K., Murata, T., Zhang, K., et al.: Sparse identification of nonlinear dynamics with low-dimensionalized flow representations. J. Fluid Mech. (2021). https://doi.org/10.1017/jfm.2021.697

Loiseau, J.C.: Data-driven modeling of the chaotic thermal convection in an annular thermosyphon. Theor. Comput. Fluid Dyn. 34(4), 339–365 (2020)

Wu, Y.: Error Processing of sparse identification of nonlinear dynamical systems via L∞ approximation (2021). arXiv preprint arXiv:2107.06142

de Silva, B., Champion, K., Quade, M., et al.: PySINDy: A Python package for the sparse identification of nonlinear dynamical systems from data. JOSS 5, 2104 (2020)

Kaptanoglu, A.A., de Silva, B.M., Fasel, U., et al.: PySINDy: a comprehensive Python package for robust sparse system identification (2021). arXiv preprint arXiv:2111.08481

Kaiser, E., Kutz, J.N., Brunton, S.L.: Sparse identification of nonlinear dynamics for model predictive control in the low-data limit. Proc. R. Soc. A 474(2219), 20180335 (2018)

Hoffmann, M., Fröhner, C., Noé, F.: Reactive SINDy: discovering governing reactions from concentration data. J. Chem. Phys. 150(2), 025101 (2019)

Chu, H.K., Hayashibe, M.: Discovering interpretable dynamics by sparsity promotion on energy and the Lagrangian. IEEE Robot. Autom. Lett. 5(2), 2154–2160 (2020)

Dai, M., Gao, T., Lu, Y., et al.: Detecting the maximum likelihood transition path from data of stochastic dynamical systems. Chaos 30(11), 113124 (2020)

Brunton, S.L., Brunton, B.W., Proctor, J.L., et al.: Chaos as an intermittently forced linear system. Nat. Commun. 8(1), 1–9 (2017)

Loiseau, J.C., Noack, B.R., Brunton, S.L.: Sparse reduced-order modelling: sensor-based dynamics to full-state estimation. J. Fluid Mech. 844, 459–490 (2018)

Kaheman, K., Brunton, S.L., Kutz, J.N.: Automatic differentiation to simultaneously identify nonlinear dynamics and extract noise probability distributions from data. Mach. Learn.: Sci. Technol. 3(1), 015031 (2022)

Dam, M., Brøns, M., Juul Rasmussen, J., et al.: Sparse identification of a predator–prey system from simulation data of a convection model. Phys. Plasmas 24(2), 022310 (2017)

Messenger, D.A., Bortz, D.M.: Weak SINDy for partial differential equations. J. Comput. Phys. 443, 110525 (2021)

Cortiella, A., Park, K.C., Doostan, A.: Sparse identification of nonlinear dynamical systems via reweighted ℓ1-regularized least squares. Comput. Methods Appl. Mech. Eng. 376, 113620 (2021)

Bhadriraju, B., Narasingam, A., Kwon, J.S.I.: Machine learning-based adaptive model identification of systems: application to a chemical process. Chem. Eng. Res. Des. 152, 372–383 (2019)

Jadhav, Y., Barati Farimani, A.: Dominant motion identification of multi-particle system using deep learning from video. Neural Comput. Appl. (2022). https://doi.org/10.1007/s00521-022-07421-z

Takens, F.: Detecting strange attractors in turbulence. In: Dynamical Systems and Turbulence, Warwick 1980, pp. 366–381. Springer, Berlin (1981)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1–4), 259–268 (1992)

Burnham, K.P., Anderson, D.R.: Multimodel inference: understanding AIC and BIC in model selection. Sociol. Methods Res. 33(2), 261–304 (2004)

Rudy, S.H., Brunton, S.L., Proctor, J.L., et al.: Data-driven discovery of partial differential equations. Sci. Adv. 3(4), e1602614 (2017)

Schaeffer, H., Tran, G., Ward, R.: Learning dynamical systems and bifurcation via group sparsity (2017). arXiv preprint arXiv:1709.01558

Daniels, B.C., Nemenman, I.: Automated adaptive inference of phenomenological dynamical models. Nat. Commun. 6(1), 1–8 (2015)

Rudy, S.H., Kutz, J.N., Brunton, S.L.: Deep learning of dynamics and signal-noise decomposition with time-stepping constraints. J. Comput. Phys. 396, 483–506 (2019)

Van der Pol, B.: VII. Forced oscillations in a circuit with non-linear resistance. Lond. Edinb. Dublin Philos. Mag. J. Sci. 3(13), 65–80 (1927)

Rössler, O.E.: An equation for continuous chaos. Phys. Lett. A 57(5), 397–398 (1976)

Acknowledgements

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions.

Funding

This study was funded by the NSFC (National Natural Science Foundation of China) project (Grant Numbers: 41861047, 41461078).

Author information

Contributions

Conceptualization, XD and YB; methodology, XD; investigation, YL; software, XD; writing—original draft preparation, XD; writing—review and editing, YB; funding acquisition, YB; supervision, YB; validation, MF. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix A

Table: Summary of the variables

Symbol | Meaning | Symbol | Meaning |
|---|---|---|---|
State variable | | h | The decay factor in Sect. 3.4 |
\({\dot{\mathbf{X}}},\;{\mathbf{X}}\) | | \(\omega_{l}\) | The constrained condition in Eq. (24) |
\({\hat{\mathbf{X}}},\;{\dot{\hat{\mathbf{X}}}}\) | The estimated measurements in Eq. (23) | \(\mu (t)\) | The parametric dependencies in Eq. (14) |
Y | The validation set in Sect. 2 | \(N_{a}\) | The number of possible monomials in Sect. 4.2 |
U | The actually observed data in Eq. (20) | \(N_{c}\) | The total number of potential models in Sect. 4.2 |
\({\hat{\mathbf{N}}}\) | The estimated noise in Eq. (26) | p | The pth order polynomial library in Sect. 4.2 |
\({\mathbf{X}}^{{Q_{i} }}\) | The ith order of polynomial terms in Eq. (5) | m | The dimension of state variable in Sect. 4.2 |
\({{\varvec{\Theta}}}\) | The candidate function collection in Eq. (4) | \(\lambda\) | The threshold in Sect. 4.4 |
\({\mathbf{V}}_{t}\) | The measurements for partial differential equations (PDEs) in Eq. (13) | Performance criterion | |
\({\tilde{\mathbf{\Theta }}}\) | The block diagonal matrix \({{\varvec{\Theta}}}\) in Eq. (17) | \(e_{d}\) | The absolute error of the estimated derivative and system’s vector field in Eq. (23) |
\({{\varvec{\Xi}}}\) | The vector of the sparse coefficients in Eq. (6) | \(e_{s}\) | The simulation error in Eq. (25) |
\(\xi_{i}\) | The ith element in the vector of the sparse coefficients in Eq. (7) | \(E_{N}\) | The noise identification error in Eq. (27) |
\(\theta_{l}\) | The lth potential features in the candidate function library in Sect. 2 | \(E_{f}\) | The vector field error in Eq. (28) |
\(x_{i}\) | The state variables of distinct dimension in Eq. (30) | \(E_{P}\) | The prediction error in Eq. (29) |
\(v_{{\underbrace {xx \ldots x}_{n}}}\) | The nth order derivative in Eq. (12) | Function | |
\(t_{i}\) | The time points in Sect. 3.1 | \({\mathbf{f}}( \cdot )\) | The function of nonlinear dynamics in Eq. (1) |
Parameter | | \({\mathbf{F}}( \cdot )\) | The flow map of the dynamic systems in Eq. (21) |
\(\Gamma\) | A collection of groups in Eq. (18) | RSS | The residual sum of squares in Sect. 3.2 |
\(\hat{\mu }\) | The estimated parameter in Eq. (10) | \(L( \cdot )\) | The loss function in Eq. (26) |
k | The degree free parameters in Eq. (10) | \(\Delta_{i}\) | The relative AICc in Sect. 4.2 |
l | The dimension of measurements in Eq. (11) | \(\text{AIC}_{\min }\) | The minimum AIC value in Sect. 3.2 |
\(\varepsilon\) | The noise magnitude in Sect. 3.2 | \(R( \cdot )\) | The regularization function in Eq. (9) |
q | The backward or forward time steps in Eq. (22) | \(N( \cdot )\) | The nonlinear function for the dynamics in Eq. (12) |

About this article

Cite this article

Dong, X., Bai, YL., Lu, Y. et al. An improved sparse identification of nonlinear dynamics with Akaike information criterion and group sparsity. Nonlinear Dyn 111, 1485–1510 (2023). https://doi.org/10.1007/s11071-022-07875-9
