Abstract

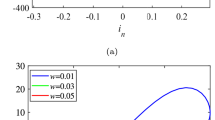

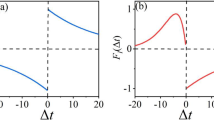

This paper focuses on the intrinsic chaotic behavior of recurrent neural networks (RNNs) under harmonic excitation. The chaotic behaviors of several untrained two-neuron RNNs with strange attractors are studied in detail. Taking the input as an additional dimension, the intrinsic manifold shaped by an RNN is visualized using 3-D phase portraits. A series of isomorphic topological structures is generated to provide different views of the intrinsic manifold. The local fractal dimension is introduced to visualize the repetitive folding of the attractors; it exhibits a spectrum of fractal dimensions concentrated around 1, 1.5 and 2. Using the maximal Lyapunov exponent (MLE), our results show that periodic and chaotic motions in the phase space emerge alternately as the amplitude or frequency of the harmonic input is tuned. For a particular RNN, a bifurcation accompanied by a topological transformation of the manifold can be observed as the MLE approaches zero. Algorithm 1 is designed to search massive numbers of randomly generated RNNs for various topological structures with potential chaotic patterns. Beautiful chaotic patterns are generated and presented in a gallery appended at the end. This paper provides a novel way to generate topological structures with beautiful chaotic patterns using RNNs.
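The abstract does not state the RNN's update equations, so the following is only a minimal sketch under explicit assumptions: a discrete-time two-neuron tanh RNN h_{t+1} = tanh(W h_t + u x_t + b) driven by the harmonic input x_t = A sin(ωt). The functions `step`, `simulate`, `max_lyapunov` and `random_search`, and every scale, threshold and step count, are hypothetical. The MLE is estimated with the standard tangent-vector method (propagate a vector with the Jacobian and renormalize each step), and the search loop merely follows the idea of Algorithm 1 (keep random untrained RNNs with a positive MLE); it is not a reproduction of the authors' algorithm.

```python
import math
import random


def step(h, x, W, u, b):
    """One update of a hypothetical 2-neuron tanh RNN: h' = tanh(W h + u x + b)."""
    pre = [W[i][0] * h[0] + W[i][1] * h[1] + u[i] * x + b[i] for i in range(2)]
    return [math.tanh(p) for p in pre]


def simulate(W, u, b, amp, omega, n_steps, h0=(0.1, 0.1)):
    """Drive the RNN with x_t = amp * sin(omega * t) and return (x_t, h1, h2)
    points, i.e. a 3-D phase portrait in which the input is the extra dimension."""
    h = list(h0)
    points = []
    for t in range(n_steps):
        x = amp * math.sin(omega * t)
        h = step(h, x, W, u, b)
        points.append((x, h[0], h[1]))
    return points


def max_lyapunov(W, u, b, amp, omega, n_steps=5000, n_transient=1000, h0=(0.1, 0.1)):
    """Estimate the maximal Lyapunov exponent (per time step) by propagating a
    tangent vector with J_t = diag(1 - h_{t+1}^2) W and renormalizing each step."""
    h = list(h0)
    v = [1.0, 0.0]
    log_sum = 0.0
    for t in range(n_transient + n_steps):
        x = amp * math.sin(omega * t)
        h = step(h, x, W, u, b)
        # Jacobian of tanh(W h + u x + b) with respect to h, applied to v
        d = [1.0 - h[0] ** 2, 1.0 - h[1] ** 2]
        v = [d[i] * (W[i][0] * v[0] + W[i][1] * v[1]) for i in range(2)]
        norm = math.hypot(v[0], v[1])
        if norm == 0.0:
            # Tangent vector collapsed (e.g. W = 0 or saturated neurons)
            return float("-inf")
        v = [v[0] / norm, v[1] / norm]
        if t >= n_transient:
            log_sum += math.log(norm)
    return log_sum / n_steps


def random_search(n_candidates, amp, omega, scale=3.0, threshold=0.01, seed=0):
    """Hypothetical search: sample random untrained RNNs and keep those whose
    estimated MLE exceeds a small positive threshold (candidate chaotic motion)."""
    rng = random.Random(seed)
    hits = []
    for _ in range(n_candidates):
        W = [[rng.gauss(0.0, scale) for _ in range(2)] for _ in range(2)]
        u = [rng.gauss(0.0, scale) for _ in range(2)]
        b = [rng.gauss(0.0, 1.0) for _ in range(2)]
        mle = max_lyapunov(W, u, b, amp, omega, n_steps=2000, n_transient=500)
        if mle > threshold:
            hits.append((mle, W, u, b))
    # Most strongly chaotic candidates first
    hits.sort(key=lambda item: item[0], reverse=True)
    return hits
```

Evaluating `max_lyapunov` over a grid of `amp` (or `omega`) values for one fixed set of weights gives a rough analogue of the amplitude and frequency sweeps described in the abstract. Under this sketch's assumptions, positive values suggest chaotic motion, negative values suggest motion that settles onto a periodic orbit, and values near zero mark the borderline regime where the abstract reports bifurcations.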

Acknowledgements

Authors Yuan Wang and Huiyue Tang were funded by the China Scholarship Council (CSC), Grant Nos. 201707000036 and 201707000037, respectively.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix A: The gallery of chaotic patterns

Cite this article

Wang, D., Tang, H., Wang, Y. et al. Beautiful chaotic patterns generated using simple untrained recurrent neural networks under harmonic excitation. Nonlinear Dyn 100, 3887–3905 (2020). https://doi.org/10.1007/s11071-020-05640-4
