Abstract

This article considers the sampled-data control of bidirectional associative memory (BAM) neural networks with leakage delay. The BAM model involves mixed delays: a distributed delay, a time-varying discrete delay, and a delay in the leakage term. The sampled-data system is converted to a continuous time-delay system using the input delay approach. To obtain sufficient conditions in the form of linear matrix inequalities (LMIs), we construct a new Lyapunov-Krasovskii functional (LKF) in conjunction with the free-weighting matrix approach. Finally, simulation results are given to show the efficiency of the theoretical approach.

1 Introduction

A neural network is a processing device whose design was inspired by the structure and functioning of the human brain and its components. There is no idle memory holding data and programs; instead, each neuron is programmed and continuously active. Neural networks have many applications, most notably (i) classification, (ii) association, and (iii) reasoning. Time delays are unavoidable in many practical systems, such as biological systems and artificial neural networks [4,5,6]. Recently, the stability analysis of dynamical systems has received considerable attention and has become an emerging area of research because of its successful applications in image processing, optimization, pattern recognition, and other areas [7,8,9]. Various types of time-delay systems and delayed dynamical networks have been investigated, and many significant results have been reported [10,11,12,13,14,15]. From the point of view of nonlinear dynamics, the study of neural networks with delays is useful and important for solving both theoretical and practical problems.

The bidirectional associative memory (BAM) neural network, first introduced by Kosko [16], is an extension of the unidirectional auto-associator of the Hopfield neural network. Since then, BAM neural networks with delays have attracted considerable attention and have been widely investigated. A BAM network is composed of neurons arranged in two layers: the X-layer and the Y-layer. The neurons in one layer are fully interconnected to the neurons in the other layer, while there are no interconnections among neurons in the same layer. Through iterations of forward and backward information flow between the two layers, it performs a two-way associative search for stored bipolar vector pairs and generalizes the single-layer auto-associative Hebbian correlation to a two-layer pattern-matched hetero-associative circuit. Therefore, it has good applications in the fields of pattern recognition and artificial intelligence [17,18,19,20,21]. In addition, the addressable memories or patterns of BAM neural networks can be stored with a two-way associative search. Accordingly, the BAM neural network has been widely studied both in theory and in applications. In practical applications, the BAM neural network has been successfully applied to image processing, pattern recognition, automatic control, associative memory, parallel computation, and optimization problems. Therefore, it is interesting and important to study the stability of BAM neural networks, which has been widely investigated [22,23,24,25,26,27,28,29,30].
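The two-way associative search described above can be illustrated with a minimal sketch of the classical discrete BAM: bipolar pairs are stored with the Hebbian outer-product rule and recalled by alternating forward and backward passes. This is the delay-free textbook form, not the delayed continuous-time model studied in this paper; the function names and the two stored pairs are hypothetical choices made only for illustration.

```python
def bipolar_sign(net, prev):
    # Bipolar threshold; a neuron keeps its previous state when its net input is zero.
    return [1 if s > 0 else -1 if s < 0 else p for s, p in zip(net, prev)]

def train_bam(pairs):
    # Hebbian outer-product rule: W = sum_k x_k y_k^T (an n x m weight matrix).
    n, m = len(pairs[0][0]), len(pairs[0][1])
    W = [[0] * m for _ in range(n)]
    for x, y in pairs:
        for i in range(n):
            for j in range(m):
                W[i][j] += x[i] * y[j]
    return W

def recall(W, x, max_iters=20):
    # Alternate X -> Y and Y -> X passes until the pair (x, y) stops changing.
    n, m = len(W), len(W[0])
    y = [1] * m
    for _ in range(max_iters):
        y_new = bipolar_sign([sum(W[i][j] * x[i] for i in range(n)) for j in range(m)], y)
        x_new = bipolar_sign([sum(W[i][j] * y_new[j] for j in range(m)) for i in range(n)], x)
        if x_new == x and y_new == y:
            break
        x, y = x_new, y_new
    return x, y

# Two hypothetical bipolar pairs (X-layer has 6 neurons, Y-layer has 4).
PAIRS = [
    ([1, -1, 1, -1, 1, -1], [1, 1, -1, -1]),
    ([1, 1, 1, -1, -1, -1], [1, -1, 1, -1]),
]
W = train_bam(PAIRS)
noisy_probe = [-1, -1, 1, -1, 1, -1]  # first stored X pattern with its first bit flipped
print(recall(W, noisy_probe))
```

Starting from the corrupted probe, the iteration settles on the first stored pair, which is the hetero-associative recall that motivates the stability question for the continuous-time model.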

Gopalsamy [31] first introduced delays in the "forgetting" or leakage terms, and some results have since been obtained (see, for example, [32,33,34,35,36] and the references cited therein). Unfortunately, the delays in the leakage terms of neural networks in most of the literature listed above are constant, and few authors have considered the dynamics of BAM neural networks with time-varying delays in the leakage terms [31]. Therefore, it is important and interesting to further investigate the dynamical behavior of BAM neural networks with time-varying leakage delays.

Up to now, various control approaches have been adopted to stabilize unstable systems, including dynamic feedback control, fuzzy logic control, impulsive control, \(H_\infty \) control, sliding mode control, and sampled-data control [37,38,39,40,41,42]. Sampled-data control handles a continuous-time system by sampling its data at discrete time instants, relying on computers, sensors, filters, and network communication. Hence, digital controllers are preferable to analogue circuits. This drastically reduces the amount of transmitted information and improves control efficiency. Compared with continuous control, sampled-data control is more efficient, secure, and useful [43,44,45,46,47,48,49]. In [50], the authors considered the synchronization problem of coupled chaotic neural networks with time delay in the leakage term using sampled-data control. Sakthivel et al. [36] established a state estimator for BAM neural networks with leakage delays with the help of the Lyapunov technique and the LMI framework.

However, to the best of our knowledge, the sampled-data control design of BAM neural networks with time delay in the leakage term has not yet been investigated. This motivates our work.

The main contribution of this paper lies in three aspects:

(i) It is the first attempt to study the sampled-data control of delayed BAM neural networks, and an efficient approach is presented to deal with it.

(ii) Based on the Lyapunov-Krasovskii theory and the LMI framework, a new set of sufficient conditions is obtained to ensure that the dynamical system is globally asymptotically stable.

(iii) It is worth pointing out that a novel Lyapunov-Krasovskii functional (LKF) is constructed with augmented terms.

The main objective of this paper is to study sampled-data control for bidirectional associative memory neural networks with leakage delay components. By constructing a suitable triple-integral LKF and utilizing the free-weighting matrix method and the reciprocally convex method, a unified linear matrix inequality (LMI) approach is developed to establish sufficient conditions under which the BAM neural network with leakage delay is globally stable. Finally, a numerical example is given to illustrate the usefulness and effectiveness of the proposed method (Table 1).

Notations: Throughout the paper, we have used the standard notations in [36].

2 Problem Description and Preliminaries

We investigate the sampled-data stabilization of BAM neural networks with leakage delays. The following BAM neural network with leakage delay components is considered:

Here \(x^{(1)}_i(t)\) and \(y^{(1)}_j(t)\) are the state variables representing the states of the neurons at time t. \(a_i>0\) and \(b_j>0\) are constants that signify the time scales of the respective network layers. \({{w}^{(1)}_{ij}},{{w}^{(2)}_{ij}},{{v}^{(1)}_{ij}}\) and \({{v}^{(2)}_{ij}}\) are the synaptic connection weights. The external inputs are represented by \(J^{(1)}_i\) and \(J^{(2)}_j\); \(\delta _1\) and \(\delta _2\) are the leakage delays; \(\tau _1(t)>0\) and \(\tau _2(t)>0\) are the time-varying delay components; and \(\tilde{f}^{(1)}_j(\cdot ),\ \tilde{f}^{(2)}_j(\cdot ),\ \tilde{g}^{(1)}_i(\cdot )\) and \(\tilde{g}^{(2)}_i(\cdot )\) are the neuron activation functions. The delays \(\tau _1(t)\) and \(\tau _2(t)\) are differentiable, bounded, and satisfy

where \(\tau _1,\ \tau _2,\ \delta _1,\ \delta _2,\ \mu _1\) and \(\mu _2\) are positive constants.

Let \((x^{(1)^*},y^{(1)^*})^T\) be an equilibrium point of equation (1). Then, \((x^{(1)^*},y^{(1)^*})^T\) satisfies the following equations:

By shifting the equilibrium point \((x^{(1)^*},y^{(1)^*})^T\) to the origin using the transformation \(x_{i}(t)=x^{(1)}_i(t)-x^{(1)^*}_i,\ y_{i}(t)=y^{(1)}_i(t)-y^{(1)^*}_i\), system (1) can be written as:

where \(A=diag\{a_1,...,a_n\}>0,\ B=diag\{b_1,...,b_n\}>0,\ W_1=(w^{(1)}_{ij})_{n\times n}\in R^{n\times n},\ W_2=(w^{(2)}_{ij})_{n\times n}\in R^{n\times n},\ V_1=(v^{(1)}_{ij})_{n\times n}\in R^{n\times n},\ V_2=(v^{(2)}_{ij})_{n\times n}\in R^{n\times n},\ f_1(y(t))=\tilde{f}_1(y(t)-y^{(1)^*}),\ f_2(y(t))=\tilde{f}_2(y(t)-y^{(1)^*}),\ g_1(x(t))=\tilde{g}_1(x(t)-x^{(1)^*})\) and \(g_2(x(t))=\tilde{g}_2(x(t)-x^{(1)^*})\) with \(f_1(0)=f_2(0)=g_1(0)=g_2(0)=0\).

Assumption (A). The neuron activation functions \(f_{ki}(\cdot ),\ g_{kj}(\cdot )\) in (4) are non-decreasing and bounded, and there exist constants \(F^{-}_{ki},\ F^{+}_{ki},\ G^{-}_{kj},\ G^{+}_{kj}\) such that

Here \(k=1,2\) and \(\alpha ,\beta \in \mathbb {R}\) with \(\alpha \ne \beta \). We define the following matrices for ease of notation:

The system (4) with control is given by

where u(t) and v(t) are the control inputs. The sampled-data control is defined as:

where \(\mathcal {K},\mathcal {M}\in \mathcal {R}^{n\times n}\) are the sampled-data gain matrices. The sampling instants \(t_k\) satisfy \(0=t_0<t_1<\cdots<t_k<\cdots \) and \(\lim \limits _{k\rightarrow \infty }t_k=+\infty .\) Additionally, there is a positive constant \(\tau _3\) such that \(t_{k+1}-t_k\le \tau _3\) for all \(k\in {\textbf {N}}\). Defining \(\tau _3(t)=t-t_k\) for \(t\in [t_k,t_{k+1})\), we have \(t_k=t-\tau _3(t)\) with \(0\le \tau _3(t)\le \tau _3\). Under this control law, the delayed BAM network with leakage delay (6) can be rewritten as follows:

We present the following lemmas, which will be employed in the main theorems.

Lemma 2.1

[51] For every positive definite matrix \(N>0\) and scalars \(\beta>\alpha >0\), the following holds, provided the integrals involved are well defined:

Lemma 2.2

[52] For every constant matrix \(R\in R^{n \times n}\) with \(R=R^T>0\) and every vector function \(\dot{e}:[-\tau _M,0]\rightarrow R^n\), the following integral inequalities are satisfied:

Lemma 2.3

[53] Let \(f_1,f_2,...,f_N:R^m\mapsto R \) have positive values in an open subset D of \(R^m\). Then the reciprocally convex combination of \(f_i\) over D satisfies

subject to

3 Main results

This section analyzes the stability of system (8) and derives sufficient conditions that guarantee it.

Theorem 3.1

Under Assumption (A), for given gain matrices \(\mathcal {K},\ \mathcal {M}\) and scalars \(\tau _1\), \(\tau _2\), \(\tau _3\), \(\delta _1\), \(\delta _2\), \(\mu _1\), and \(\mu _2\), the equilibrium point of system (8) is stable if there exist matrices \(\mathcal {P}>0\), \(\mathcal {Q}>0\), \(P_i>0\), \((i=1,2,...,8)\), \(Q_j>0\), \(R_j>0\), \(\left[ \begin{array} {cc} X_{j} &{} Y_{j}\\ \maltese &{} Z_{j} \end{array}\right] >0,\) positive-definite diagonal matrices \(U_{j},\ J_{j}, \) and any matrices \( \left[ \begin{array} {cc} M_{j} &{} N_{j}\\ H_{j} &{} T_{j} \end{array}\right] ,\ (j=1,2,...,4), {\tilde{R}}_1,\ {\tilde{R}}_2, S_1 \) and \(S_2\) such that the following LMIs hold:

where

Proof

We construct the Lyapunov-Krasovskii functional as:

where

Calculating the derivatives \(\dot{V}_i(x_{t},y_{t},t),\ i=1,2,...,7\) along the trajectories of system (8) gives

The integral terms in (15) can be expressed as follows:

By applying Lemma 2.2, we obtain

By applying the Lemmas 2.1 and 2.3 in \(\dot{V}_5(x_{t},y_{t},t)\), we obtain the following results

We can estimate the three terms in inequality (16) in a similar way to (27) as

The upper bound of the reciprocally convex combination in \(\dot{V}_6(x_{t},y_{t},t)\) can be obtained as

According to Lemma 2.1, the following inequalities hold:

On the other hand, for any matrices \(S_1\) and \(S_2\) with appropriate dimensions, the following equations hold:

Furthermore, based on Assumption (A), we have

where \(k=1,2,\)

which is equivalent to

where \(x_i\) and \(y_j\) denote the unit column vectors having 1 in the \(i^{th}\) and \(j^{th}\) row, respectively, and zeros elsewhere. Let \(U_k=diag\{u^k_{11},u^k_{12},...,u^k_{1n}\}>0\) and \(J_k=diag\{{\overline{j}}^k_{11},{\overline{j}}^k_{12},...,{\overline{j}}^k_{1n}\}>0\). It is then easy to see that

That is,

Similar to above, for \(U_{k+2}=diag\{u^{k+2}_{11},u^{k+2}_{12},...,u^{k+2}_{1n}\}>0\), \(J_{k+2}=diag\{{\overline{j}}^{k+2}_{11},{\overline{j}}^{k+2}_{12},...,{\overline{j}}^{k+2}_{1n}\}>0,\) we can obtain the following inequalities:

Now, combining (12)–(38), we have

where

and \(\Omega \) is defined as in (10).

Thus, the equilibrium point of (8) is globally asymptotically stable. It may be inferred from the inequality (39) that,

where

where

On the other hand, by the definition of \(V(x_{t},y_{t},t)\), we get

Then, combining (41) and (42), we obtain

It is clear that system (8) is globally asymptotically stable for \(\Omega <0\) by Lyapunov stability theory. This completes the proof. \(\square \)
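The reciprocally convex bound of Lemma 2.3, which the proof applies to \(\dot{V}_5\) and \(\dot{V}_6\), can be sanity-checked numerically in its simplest form. The sketch below assumes the scalar two-term case as it is commonly stated for the result in [53]: for \(f_1,f_2>0\), \(\alpha \in (0,1)\) and any \(g\) with \(g^2\le f_1f_2\), one has \(\frac{1}{\alpha }f_1+\frac{1}{1-\alpha }f_2\ge f_1+f_2+2g\). The function names and test values are hypothetical, and the matrix version used in the paper is not reproduced here.

```python
import math

def reciprocal_combination(f1, f2, alpha):
    # Left-hand side: (1/alpha) * f1 + (1/(1 - alpha)) * f2 for alpha in (0, 1).
    return f1 / alpha + f2 / (1.0 - alpha)

def lower_bound(f1, f2, g):
    # Right-hand side: f1 + f2 + 2*g, admissible only when g^2 <= f1*f2.
    if g * g > f1 * f2 + 1e-12:
        raise ValueError("g violates the constraint g^2 <= f1*f2")
    return f1 + f2 + 2.0 * g

def smallest_gap(f1, f2, n_grid=999):
    # Minimum of (LHS - tightest bound) over a uniform grid of alpha in (0, 1).
    bound = lower_bound(f1, f2, math.sqrt(f1 * f2))
    alphas = [(k + 1) / (n_grid + 1) for k in range(n_grid)]
    return min(reciprocal_combination(f1, f2, a) - bound for a in alphas)

# The gap stays non-negative (up to rounding) for every alpha on the grid.
print(smallest_gap(2.0, 0.5), smallest_gap(3.0, 7.0))
```

In this scalar case the bound is tight: the gap vanishes at \(\alpha =\sqrt{f_1}/(\sqrt{f_1}+\sqrt{f_2})\) with \(g=\sqrt{f_1f_2}\), which is why the approach avoids the conservatism of bounding each \(1/\alpha \) term separately.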

4 Sampled-Data Stabilization

Theorem 4.1

Under Assumption (A), for given scalars \(\tau _1,\ \tau _2,\ \tau _3,\ \delta _1,\ \delta _2,\ \mu _1\), and \(\mu _2\), the equilibrium point of system (8) is stable if there exist matrices \(\mathcal {P}>0,\ \mathcal {Q}>0,\ P_i>0,\ (i=1,2,...,8), \ Q_j>0,\ R_j>0,\ \left[ \begin{array} {cc} X_{j} &{} Y_{j}\\ \maltese &{} Z_{j} \end{array}\right] >0,\) positive-definite diagonal matrices \(U_{j},\ J_{j} \) and any matrices \( \left[ \begin{array} {cc} M_{j} &{} N_{j}\\ H_{j} &{} T_{j} \end{array}\right] ,\ (j=1,2,...,4), {\tilde{R}}_1,\ {\tilde{R}}_2, {L_1}, {L_2} \) such that the following LMIs hold:

where

Moreover, the desired controller gain matrices are given by

Proof

The proof is similar to that of Theorem 3.1 and is therefore omitted here. \(\square \)

Remark 4.2

By adopting the input delay approach, the proposed model can be transformed into a continuous-time system. Because the sampled-data control strategy deals with a continuous system by sampling data at discrete times, we make the first attempt to address the sampled-data synchronization control problem for the presented model. A novel sampled-data control strategy is adopted to ensure that the drive system achieves synchronization with the response system. Note that sampled-data control drastically reduces the amount of transmitted information and increases the efficiency of bandwidth usage, because its control signals are kept constant during the sampling period and are allowed to change only at the sampling instants. Moreover, owing to the rapid growth of digital hardware technologies, the sampled-data control method is more efficient, secure, and useful. A great deal of remarkable research has therefore been carried out in this area; see [54,55,56,57].
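The input delay approach mentioned in this remark rests on the sawtooth delay \(\tau _3(t)=t-t_k\) for \(t\in [t_k,t_{k+1})\), so that the held control value equals the state evaluated at \(t-\tau _3(t)\). A minimal sketch follows, assuming a scalar state and a hypothetical, non-uniform list of sampling instants whose gaps do not exceed \(\tau _3=0.03\); the helper names are illustrative only.

```python
import bisect

TAU3 = 0.03
# Hypothetical sampling instants t_0 < t_1 < ... with gaps of at most TAU3.
SAMPLE_TIMES = [0.0, 0.01, 0.04, 0.06, 0.09, 0.10]

def sawtooth_delay(t, sample_times):
    # tau_3(t) = t - t_k, where t_k is the latest sampling instant not after t.
    k = bisect.bisect_right(sample_times, t) - 1
    return t - sample_times[k]

def held_control(t, sample_times, gain, state):
    # Zero-order hold: u(t) = gain * x(t_k) = gain * x(t - tau_3(t)).
    t_k = t - sawtooth_delay(t, sample_times)
    return gain * state(t_k)

# The delay grows linearly between samples and resets to zero at each t_k.
for t in (0.0, 0.02, 0.039, 0.04, 0.05):
    print(t, sawtooth_delay(t, SAMPLE_TIMES))
```

Because \(\tau _3(t)\) is bounded by \(\tau _3\) but jumps at every sampling instant, it is treated as a bounded time-varying delay without a derivative bound, in contrast to \(\tau _1(t)\) and \(\tau _2(t)\).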

Remark 4.3

The leakage delay, which appears in the negative feedback term of the BAM, has recently emerged as a research topic of great significance. In [58], Gopalsamy was the first to investigate the stability of BAM neural networks with constant leakage delays. He found that time delays in the leakage terms have an essential impact on the dynamical performance and that the leakage term can destabilize the neural network model. The study of the dynamic behaviour of systems with a leakage (forgetting) term, which is incorporated in the negative feedback term of the system model, can be traced back to 1992. Later on, substantial progress was made regarding the dynamics of BAMs with delay in the leakage term [59,60,61,62]. On the other hand, it is natural that the BAM contains certain information about the derivative of the past state. This is expressed by including a delay in the neutral derivative of the BAM, which often appears in the study of automatic control, population dynamics, and vibrating masses attached to an elastic bar; the reader may consult [63,64,65,66] for more details.
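The destabilizing effect of a leakage delay can be seen in the simplest scalar test equation \(\dot{x}(t)=-a\,x(t-\delta )\), which is classically known to be asymptotically stable exactly when \(a\delta <\pi /2\). The sketch below is a toy illustration and not the BAM model (1): it integrates the test equation with a forward-Euler scheme, and the step size, horizon and constant initial history are assumptions chosen for convenience.

```python
def simulate_leakage(a, delta, t_end=80.0, dt=0.01):
    # Forward-Euler integration of x'(t) = -a * x(t - delta)
    # with constant initial history x(s) = 1 on [-delta, 0].
    n_delay = round(delta / dt)
    traj = [1.0] * (n_delay + 1)
    for _ in range(round(t_end / dt)):
        x_delayed = traj[-1 - n_delay]
        traj.append(traj[-1] - dt * a * x_delayed)
    return traj

def tail_amplitude(traj, dt=0.01, window=10.0):
    # Largest |x| over the final `window` seconds of the trajectory.
    n = round(window / dt)
    return max(abs(v) for v in traj[-n:])

small_delay = tail_amplitude(simulate_leakage(1.0, 0.5))  # a*delta = 0.5 < pi/2
large_delay = tail_amplitude(simulate_leakage(1.0, 2.0))  # a*delta = 2.0 > pi/2
print(small_delay, large_delay)
```

With the same decay rate \(a\), the trajectory dies out for the small delay and oscillates with growing amplitude for the large one, which is the mechanism that makes the leakage delays \(\delta _1,\delta _2\) appear explicitly in the stability conditions.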

5 Numerical Examples

The usefulness of the theoretical methods proposed is demonstrated in this section by numerical examples.

Example 5.1

We consider the following BAM neural networks with leakage delays:

with the following parameters:

Let the activation functions be \(f_1(x)=f_2(x)=g_1(x)=g_2(x)=\tanh (x).\) It is obvious that Assumption (A) is satisfied, and we obtain

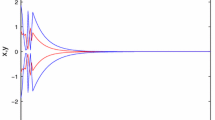

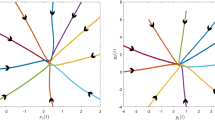

The allowable upper bounds of \(\tau _1,\ \tau _2 \) and the corresponding gain matrices for various values of \( \mu _1,\ \mu _2 \) and leakage delays \( \delta _1,\ \delta _2 \) are obtained by solving the LMIs in (45) with the above parameter values using the LMI Control Toolbox. These results are listed in Table 2. For \(\tau _1=\tau _2=0.5746,\ \mu _1=\mu _2=1\), the BAM neural network (1) is stable. The feasible solutions are shown below.

The following gain matrices are obtained by taking the sampling instants \( t_k=0.01k, k=1,2,...,\) and the sampling period \(\tau _3=0.03\).

Thus, every requirement of Theorem 4.1 is satisfied, and system (47) is stable under the specified sampled-data feedback control.
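To make the structure of the sampled-data closed loop concrete, the following sketch simulates a two-neuron toy BAM (one neuron per layer) with a leakage delay, a transmission delay and a zero-order-hold feedback. It does not use the parameters, gain matrices or distributed-delay terms of Example 5.1, which are not reproduced in this text; every numerical value, the constant delays, the forward-Euler scheme and the function name are assumptions made only for illustration.

```python
import math

def simulate_sampled_bam(K, M, t_end=40.0, dt=0.005, a=1.0, b=1.0,
                         w=0.5, v=0.5, delta=0.2, tau=0.5, h=0.03):
    # Toy closed loop (all parameters hypothetical):
    #   x'(t) = -a x(t - delta) + w tanh(y(t - tau)) + K x(t_k)
    #   y'(t) = -b y(t - delta) + v tanh(x(t - tau)) + M y(t_k)
    # with sampling instants t_k = k*h and the control held between samples.
    n_delta, n_tau, n_h = round(delta / dt), round(tau / dt), round(h / dt)
    n_hist = max(n_delta, n_tau)
    xs = [1.0] * (n_hist + 1)    # constant initial history for x
    ys = [-0.5] * (n_hist + 1)   # constant initial history for y
    u = uy = 0.0
    for step in range(round(t_end / dt)):
        if step % n_h == 0:      # sampling instant: refresh the held control
            u, uy = K * xs[-1], M * ys[-1]
        dx = -a * xs[-1 - n_delta] + w * math.tanh(ys[-1 - n_tau]) + u
        dy = -b * ys[-1 - n_delta] + v * math.tanh(xs[-1 - n_tau]) + uy
        xs.append(xs[-1] + dt * dx)
        ys.append(ys[-1] + dt * dy)
    return xs, ys

xs, ys = simulate_sampled_bam(K=-2.0, M=-2.0)
print(abs(xs[-1]), abs(ys[-1]))
```

In this toy setting the held feedback drives both states towards the origin. For the actual model, the gains would be taken from the LMI solution of Theorem 4.1 rather than chosen by hand.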

6 Conclusion

In this paper, a novel sampled-data control approach for BAM neural networks with leakage delays and its stability analysis are investigated. We started by looking at the instability caused by leakage delays. We then used a sampled-data control approach to stabilize the unstable systems. LMIs were derived to determine the gain matrices of the designed sampled-data controllers. Finally, a numerical example and accompanying simulations were provided to demonstrate the efficiency of our theoretical findings. Future work will take into account the complexity of some control systems. Our future study will also focus on systems biology models with leakage delays, including event-triggered control and bifurcation analysis.

References

Thendra M, Ganesh Babu TR, Chandrasekar A, Cao Y (2022) Synchronization of Markovian jump neural networks for sampled data control systems with additive delay components: Analysis of image encryption technique. Math Meth Appl Sci. https://doi.org/10.1002/mma.8774

Chandrasekar A, Radhika T, Zhu Q (2022) State estimation for genetic regulatory networks with two delay components by using second-order reciprocally convex approach. Neural Process Lett 54(1):327–345

Chandrasekar A, Rakkiyappan R, Li X (2016) Effects of bounded and unbounded leakage time-varying delays in memristor-based recurrent neural networks with different memductance functions. Neurocomputing 202:67–83

Haykin S (1994) Neural networks: a comprehensive foundation. Prentice Hall, New York

Gunasekaran N, Syed Ali M (2021) Design of Stochastic Passivity and Passification for Delayed BAM Neural Networks with Markov Jump Parameters via Non-uniform Sampled-Data Control. Neural Process Lett 53:391–404

Xu S, Lam J, Ho WC, Zou Y (2005) Delay-dependent exponential stability for a class of neural networks with time delays. J Comput Appl Math 183:16–28

Kwon OM, Lee SM, Park JH, Cha EJ (2012) New approaches on stability criteria for neural networks with interval time-varying delays. Appl Math Comput 218:9953–9964

Arik S (2014) An improved robust stability result for uncertain neural networks with multiple time delays. Neural Netw 54:1–10

Zeng HB, He Y, Wu M, Zhang CF (2011) Complete delay-decomposing approach to asymptotic stability for neural networks with time-varying delays. IEEE Trans Neural Netw 22:806–812

Liu Y, Lee SM, Kwon OM, Park JH (2015) New approach to stability criteria for generalized neural networks with interval time-varying delays. Neurocomputing 149:1544–1551

Arik S (2014) An analysis of stability of neutral-type neural systems with constant time delays. J Franklin Inst 351:4949–4959

Li X, Caraballo T, Rakkiyappan R, Xiuping H (2015) On the stability of impulsive functional differential equations with infinite delays. Math Methods Appl Sci 38(14):3130–3140

Dan Y, Li X, Qiu J (2019) Output tracking control of delayed switched systems via state-dependent switching and dynamic output feedback. Nonlinear Anal Hybrid 32:294–305

Li X, Yang X, Huang T (2019) Persistence of delayed cooperative models: Impulsive control method. Appl Math Comput 342:130–146

Ali MS, Gunasekaran N, Zhu Q (2017) State estimation of T-S fuzzy delayed neural networks with Markovian jumping parameters using sampled-data control. Fuzzy Sets Syst 306:87–104

Kosko B (1987) Adaptive bidirectional associative memories. Appl Opt 26:4947–4960

Gopalsamy K, He XZ (1994) Delay independent stability in bidirectional associative memory networks. IEEE Trans Neural Netw 5:998–1002

Cao J, Dong M (2003) Exponential stability of delayed bi-directional associative memory networks. Appl Math Comput 135:105–112

Arik S (2005) Global asymptotic stability of bidirectional associative memory neural networks with time delays. IEEE Trans Neural Netw 16:580–586

Senan S, Arik S (2007) Global robust stability of bidirectional associative memory neural networks with multiple time delays. IEEE Trans Syst Man Cybern B 37:1375–1381

Cao J, Wan Y (2014) Matrix measure strategies for stability and synchronization of inertial BAM neural network with time delays. Neural Netw 53:165–172

Cao J, Song Q (2006) Stability in Cohen-Grossberg-type bidirectional associative memory neural networks with time-varying delays. Nonlinearity 19:1601–1617

Syed Ali M, Gunasekaran N, Rani ME (2017) Robust stability of Hopfield delayed neural networks via an augmented LK functional. Neurocomputing 234:198–204

Syed Ali M, Gunasekaran N, Ahn CK, Shi P (2016) Sampled-data stabilization for fuzzy genetic regulatory networks with leakage delays. IEEE/ACM Trans Comput Biol Bioinform 15:271–285

Gunasekaran N, Ali MS (2021) Design of stochastic passivity and passification for delayed BAM neural networks with Markov jump parameters via non-uniform sampled-data control. Neural Process Lett 53:391–404

Bao H, Cao J (2011) Robust state estimation for uncertain stochastic bidirectional associative memory networks with time-varying delays. Phys Scripta 83:065004

Mathiyalagan K, Sakthivel R, Marshal Anthoni S (2012) New robust passivity criteria for stochastic fuzzy BAM neural networks with time-varying delays. Commun Nonlinear Sci Numer Simulat 17:1392–1407

Bao H, Cao J (2012) Exponential stability for stochastic BAM networks with discrete and distributed delays. Appl Math Comput 218:6188–6199

Thoiyab NM, Muruganantham P, Zhu Q, Gunasekaran N (2021) Novel results on global stability analysis for multiple time-delayed BAM neural networks under parameter uncertainties. Chaos, Solitons Fractals 17(152):111441

Thoiyab NM, Muruganantham P, Gunasekaran N (2021) Global robust stability analysis for hybrid bam neural networks. In: 2021 IEEE second international conference on control, measurement and instrumentation (CMI), pp. 93–98

Gopalsamy K (2007) Leakage delays in BAM. J Math Anal Appl 325:1117–1132

Wang H, Li C, Xu H (2009) Existence and global exponential stability of periodic solution of cellular neural networks with impulses and leakage delay. Int J Bifurcat Chaos 19:831–842

Li X, Cao J (2010) Delay-dependent stability of neural networks of neutral type with time delay in the leakage term. Nonlinearity 23:1709–1726

Li X, Fu X, Balasubramaniam P, Rakkiyappan R (2010) Existence, uniqueness and stability analysis of recurrent neural networks with time delay in the leakage term under impulsive perturbations. Nonlinear Anal Real World Appl 11:4092–4108

Lakshmanan S, Park JH, Jung HY, Balasubramaniam P (2012) Design of state estimator for neural networks with leakage, discrete and distributed delays. Appl Math Comput 218:11297–11310

Sakthivel R, Vadivel P, Mathiyalagan K, Arunkumar A, Sivachitra M (2015) Design of state estimator for bidirectional associative memory neural networks with leakage delays. Inform Sci 296:263–274

Jagannathan S, Vandegrift M, Lewis FL (2000) Adaptive fuzzy logic control of discrete-time dynamical systems. Automatica 36:229–241

Stamova I, Stamov G (2012) Impulsive control on global asymptotic stability for a class of impulsive bidirectional associative memory neural networks with distributed delays. Math Comput Model 53:824–831

Li B, Shen H, Song X, Zhao J (2014) Robust exponential \(H_\infty \) control for uncertain time-varying delay systems with input saturation: A Markov jump model approach. Appl Math Comput 237:190–202

Behrouz E, Reza T, Matthew F (2014) A dynamic feedback control strategy for control loops with time-varying delay. Int J Control 87:887–897

Peng C, Zhang J (2015) Event-triggered output-feedback \(H_\infty \) control for networked control systems with time-varying sampling. IET Control Theory Appl 9:1384–1391

Zhu XL, Wang Y (2011) Stabilization for sampled-data neural-network-based control systems. IEEE Trans Syst Man Cybern 41:210–221

Zhang W, Yu L (2010) Stabilization of sampled-data control systems with control inputs missing. IEEE Trans Automat Control 55:447–452

Zhu XL, Wang Y (2011) Stabilization for sampled-data neural-networks-based control systems. IEEE Trans Syst Man Cybern 41:210–221

Yoneyama J (2012) Robust sampled-data stabilization of uncertain fuzzy systems via input delay approach. Inform Sci 198:169–176

Su L, Shen H (2015) Mixed \(H_\infty \)/passive synchronization for complex dynamical networks with sampled-data control. Appl Math Comput 259:931–942

Hui G, Zhang H, Wu Z, Wang Y (2014) Control synthesis problem for networked linear sampled-data control systems with band-limited channels. Inform Sci 275:385–399

Wu Z, Shi P, Su H, Chu J (2013) Stochastic synchronization of Markovian jump neural networks with time-varying delay using sampled-data. IEEE Trans Cybern 43:1796–1806

Weng Y, Chao Z (2014) Robust Sampled-data \(H_\infty \) output feedback control of active suspension system. Int J Innov Comput Inf Control 10:281–292

Gan Q, Liang Y (2012) Synchronization of chaotic neural networks with time-delay in the leakage term and parametric uncertainties based on sampled-data control. J Franklin Inst 349:1955–1971

Feng Z, Lam J (2012) Integral partitioning approach to robust stabilisation for uncertain distributed time-delay systems. Int J Robust Nonlinear Control 22:679–689

Balasubramaniam P, Krishnasamy R, Rakkiyappan R (2012) Delay-dependent stability of neutral systems with time-varying delays using delay-decomposition approach. Appl Math Model 36:2253–2261

Park P, Ko JW, Jeong C (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47:235–238

Wang J, Tian L (2019) Stability of Inertial Neural Network with Time-Varying Delays Via Sampled-Data Control. Neural Process Lett 50:1123–1138

Saravanakumar R, Amini A, Datta R, Cao Y (2022) Reliable memory sampled-data consensus of multi-agent systems with nonlinear actuator faults. IEEE Trans Circuits Syst II Exp Briefs 69(4):2201–2205

Wang Z, Wu H (2017) Robust guaranteed cost sampled-data fuzzy control for uncertain nonlinear time-delay systems. IEEE Trans Syst Man Cybern Syst 49(5):964–975

Hua C, Wu S, Guan X (2020) Stabilization of T-S fuzzy system with time delay under sampled-data control using a new looped-functional. IEEE Trans Fuzzy Syst 28(2):400–407

Gopalsamy K (2007) Leakage delays in BAM. J Math Anal Appl 325:1117–1132

Peng S (2010) Global attractive periodic solutions of BAM neural networks with continuously distributed delays in the leakage terms. Nonlinear Anal Real World Appl 11:2141–2151

Balasubramaniam P, Kalpana M, Rakkiyappan R (2011) Global asymptotic stability of BAM fuzzy cellular neural networks with time delay in the leakage term, discrete and unbounded distributed delays. Math Comput Model 53:839–853

Liu B (2013) Global exponential stability for BAM neural networks with time-varying delays in the leakage terms. Nonlinear Anal Real World Appl 14:559–566

Lakshmanan S, Park J, Lee T, Jung H, Rakkiyappan R (2013) Stability criteria for BAM neural networks with leakage delays and probabilistic time-varying delays. Appl Math Comput 219:9408–9423

Zhang H (2014) Existence and stability of almost periodic solutions for CNNs with continuously distributed leakage delays. Neural Comput Appl 24:1135–1146

Feng J, Xu S, Zou Y (2009) Delay-dependent stability of neutral type neural networks with distributed delays. Neurocomputing 72:2576–2580

Li YK, Li YQ (2013) Existence and exponential stability of almost periodic solution for neutral delay BAM neural networks with time-varying delays in leakage terms. J Franklin Inst 350:2808–2825

Xu CJ, Li PL (2016) Existence and exponentially stability of anti-periodic solutions for neutral BAM neural networks with time-varying delays in the leakage terms. J Nonlinear Sci Appl 9(3):1285–1305

Acknowledgements

The work of the author was supported by National Research Council of Thailand (Talented Midcareer Researchers) under research Grant No. N42A650250.

Author information

Contributions

SRC and SP: Methodology and Writing - original draft, MSA and VU: supervision, GR: conceptual, Writing - review & editing, Funding acquisition and Writing - original draft, BP and AJ : validation and simulation.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chandra, S.R., Padmanabhan, S., Umesha, V. et al. New Insights on Bidirectional Associative Memory Neural Networks with Leakage Delay Components and Time-Varying Delays Using Sampled-Data Control. Neural Process Lett 56, 94 (2024). https://doi.org/10.1007/s11063-024-11499-y

DOI: https://doi.org/10.1007/s11063-024-11499-y