Abstract

Subspace clustering models based on self-representation learning often use the \(\ell _1\), \(\ell _2\) or nuclear norm to constrain the self-representation matrix of the dataset. In theory, the \(\ell _1\) norm can enforce the independence of subspaces, but the sparsity of the resulting self-representation matrix may lead to under-connection. \(\ell _2\) and nuclear norm regularization can improve connectivity, but may lead to over-connection of the self-representation matrix. Because a single regularization term may cause subspaces to be over- or under-segmented, this paper proposes an elastic deep sparse self-representation subspace clustering network (EDS-SC), which imposes sparse constraints on deep features and introduces elastic net regularization, mixing the \(\ell _1\) and \(\ell _2\) norms, to constrain the self-representation matrix. The network can extract deep sparse features and provide a balance between subspace independence and connectivity. Experiments on human face, object, and medical imaging datasets demonstrate the effectiveness of the EDS-SC network.

1 Introduction

Subspace clustering based on spectral clustering needs to learn a self-representation matrix for the data dictionary and obtains the similarity between data points from the self-representation coefficients of the samples; that is, each sample point \(x_j\) can be reconstructed as a linear combination of the other sample points \(\{x_1,\ldots ,x_{j-1},x_{j+1},\ldots ,x_n\}\). This kind of method can be divided into two steps. First, using the self-representation learning model (\(X=XZ\)), the problem is transformed into an optimization problem with norm regularization terms that minimizes the self-representation reconstruction loss. Second, the model is solved to obtain the self-representation matrix Z, the symmetrized Z is used as the adjacency matrix, and the N-cut algorithm is applied to segment the sample points. The main difference between subspace clustering methods lies in the choice of regularization term. Sparse subspace clustering (SSC) [1] adopts \(\ell _1\) norm regularization to make the representation matrix Z as sparse as possible, but the sparsity of the adjacency matrix may lead to over-segmentation, that is, sample points from the same subspace may not lie in the same connected component of the affinity graph [2]. Therefore, least squares subspace clustering (LSR) [3] uses the \(\ell _2\) norm as the regularization term. One advantage of LSR is that the representation matrix Z is usually dense, which alleviates the connectivity problem of SSC. However, it tends to connect sample points from different subspaces, making the sample points difficult to separate. Similarly, low-rank subspace clustering based on the nuclear norm [4] also has limitations in application. To alleviate the problems caused by single-form regularization, Zhu et al. [5] proposed sparse low-rank subspace clustering, introducing elastic net regularization that mixes the \(\ell _1\) and nuclear norms, which has better subspace representation ability than SSC. You et al. [6] gave a geometric explanation of the relationship between subspace independence and connectivity and analyzed the role of elastic net regularization. However, these traditional methods are only suitable for linear data; it remains difficult to learn the similarity matrix effectively for high-dimensional nonlinear data.

Recently, deep learning has attracted growing attention in subspace clustering. Lv et al. [7] proposed pseudo-supervised deep subspace clustering, which uses pairwise similarity to weight the reconstruction loss to capture local structure information and learns a similarity matrix through the self-expression layer. Cai et al. [8] proposed a deep clustering framework with joint optimization that introduces a contractive representation in feature learning and uses focal loss in the clustering layer, learning feature representation and label assignment simultaneously in an end-to-end way. The deep autoencoder, an important representative of deep learning, has achieved great success in unsupervised representation learning: it uses multi-layer neural networks to encode the original high-dimensional data into low-dimensional feature representations and then reconstructs the original data through a symmetric decoder. Ji et al. [9] first proposed a deep subspace clustering network by introducing a self-representation layer into the deep autoencoder. Peng et al. [10] proposed structured deep subspace clustering, which introduces a prior sparse constraint to learn a self-representation matrix and maintains the subspace structure of the original data. Zhou et al. [11] proposed deep subspace clustering that preserves the distribution of latent features, using a distribution consistency loss to make the distributions of the data in the original space and the feature space as similar as possible. Kang et al. [12] used the relationships between samples to guide the representation learning of deep autoencoders and learned the self-representation matrix adaptively. Zhou et al. [13] integrated generative adversarial networks with deep subspace clustering and proposed deep adversarial subspace clustering. Cai et al. [14] proposed a deep stacked sparse embedded clustering method, which considers both local structure preservation and the sparsity of the inputs. Valanarasu et al. [15] fused the features from undercomplete and overcomplete autoencoder networks before passing them through the self-expressive layer and proposed overcomplete deep subspace clustering networks. Efficient Deep Embedded Subspace Clustering (EDESC) [16] learns the subspace bases from deep representations in an iterative refining manner, while the refined subspace bases in turn help the deep neural network learn its representation. The above-mentioned deep subspace clustering (DSC) methods adopt only a single form of \(\ell _1\) or \(\ell _2\) regularization to constrain their self-representation matrices and rarely constrain the extracted deep features, which makes it difficult to fully eliminate irrelevant information in the original high-dimensional data.

Based on the above discussion, this paper proposes an elastic deep sparse self-representation subspace clustering network (EDS-SC). EDS-SC combines a deep autoencoder with self-representation subspace clustering: it uses the deep autoencoder network to extract sparse latent features of the original data and learns the self-representation matrix of the latent features for clustering. The EDS-SC network consists of an encoder, a self-representation layer and a decoder. The main contributions of this paper are as follows:

(1) To address the problems of existing deep subspace clustering (DSC) methods, EDS-SC introduces sparse regularization on the sample features extracted by the encoder, so that the dimension of the learned sample features is as low as possible and redundant information is removed.

(2) To overcome the shortcomings of single-form regularization and achieve better clustering, EDS-SC introduces elastic net regularization to constrain the self-representation matrix of the sample features in the self-representation layer, so that the encoder parameters and the self-representation learning parameters of EDS-SC can be optimized jointly during the solving process.

(3) The effectiveness of the proposed EDS-SC method is verified on object, face and CT image datasets. Its clustering accuracy is better than that of all comparison methods, and its advantages are most obvious on datasets with a small number of categories.

2 Related Works

2.1 Subspace Clustering Based on Self-Representation Learning

Given a data matrix \(X=[x_1,x_2,\ldots ,x_n]\in {R}^{d\times n}\), d is the feature dimension of the samples and n is the number of samples. The core of subspace clustering based on self-representation learning is to use the self-representation model \(X=XZ\) to learn the self-representation matrix Z, where Z measures the similarity between samples. Ideally, the similarity matrix Z is block diagonal, i.e., there is no correlation between clusters. The basic framework of the model is:

where \(Z\in R^{n\times n}\) represents the similarity between the original samples, and the constraint that the diagonal of Z is 0 prevents the trivial solution in which each sample is represented by itself. After solving for the coefficient matrix Z through the optimization framework, the adjacency matrix \(Z^*=(\left| Z\right| +\left| Z^T\right| )/2\) is constructed to calculate the Laplacian matrix L. Most subspace clustering algorithms use principal component analysis (PCA) to obtain the eigenvectors of the Laplacian matrix and then apply a classical clustering algorithm such as k-means to obtain the subspace segmentation. \(f(\cdot )\) corresponds to different matrix norms: the \(\ell _1\) norm corresponds to sparse subspace clustering, the nuclear norm to low-rank subspace clustering, and the Frobenius norm to least squares subspace clustering.
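The pipeline described above (learn Z, symmetrize it, build the Laplacian, inspect its spectrum) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's implementation: it uses the Frobenius-norm (least squares) choice of \(f(\cdot )\) because that case has a closed-form solution, it does not enforce the zero-diagonal constraint, and it stops before the k-means step. The function names and the toy data are hypothetical.

```python
import numpy as np

def lsr_self_representation(X, lam=0.1):
    """Closed-form minimizer of ||X - XZ||_F^2 + lam * ||Z||_F^2.

    Assumption: ridge-type regularization without the diag(Z) = 0 constraint.
    Columns of X are samples.
    """
    n = X.shape[1]
    gram = X.T @ X
    # Setting the gradient to zero gives (X^T X + lam * I) Z = X^T X.
    return np.linalg.solve(gram + lam * np.eye(n), gram)

def affinity_and_laplacian(Z):
    """Symmetrized affinity Z* = (|Z| + |Z^T|) / 2 and unnormalized Laplacian."""
    A = (np.abs(Z) + np.abs(Z.T)) / 2.0
    L = np.diag(A.sum(axis=1)) - A
    return A, L

# Toy data: two orthogonal one-dimensional subspaces of R^2, three points each.
X = np.array([[1.0, 2.0, 3.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 0.0, 1.0, 2.0, 3.0]])

Z = lsr_self_representation(X)
A, L = affinity_and_laplacian(Z)

# The number of (near-)zero Laplacian eigenvalues equals the number of
# connected components of the affinity graph.
eigvals = np.linalg.eigvalsh(L)
n_components = int(np.sum(eigvals < 1e-8))
```

On this toy input the affinity matrix is block diagonal, so the graph splits into one connected component per subspace. Real data rarely separate this cleanly, which is why the choice of regularizer matters.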

2.2 Regularization Theory

In machine learning algorithms, bias and variance together affect the accuracy of the model. The error caused by bias is defined as the difference between the prediction (or average prediction) of the model and the true value. The error caused by variance is defined as the variability of the predicted value for a given data point. Bias measures the distance between the predicted value and the true value, while variance describes the spread of the predicted values, i.e., their degree of dispersion around the expected value. Figure 1 is a visualization of bias and variance [17].

Graphical visualization of bias and variance [17]

High bias can easily lead to underfitting of the model, and high variance can lead to overfitting. Because of limited representation learning ability, the structure and parameter settings of a machine learning algorithm may lead to a locally optimal solution. Regularization can reduce the complexity of the model, avoid overfitting, and adapt the model to different tasks. Researchers have proposed a variety of regularization methods. Among them, \(\ell _1\) regularization, \(\ell _2\) regularization, and Elastic Net regularization are the most commonly used, and they are defined as follows:

(1) \(\ell _1\) regularization (Lasso regression)

Given samples X and labels y, let \(\beta \) be the weight matrix. \(\ell _1\) regularization adds the regularization term \(\lambda \left\| \beta \right\| _1\) to the objective loss function and learns a sparse weight matrix \(\beta \), where \(\lambda \) is a parameter that balances the objective loss against the regularization. The optimization model is as follows:

(2) \(\ell _2\) regularization (Ridge regression)

\(\ell _2\) regularization (also called ridge regression) adds the regularization term \(\lambda \left\| \beta \right\| _2^2\) to the loss function being minimized, which drives the entries of the weight matrix \(\beta \) close to zero but not necessarily equal to zero and has a grouping effect. The optimization model is as follows:

(3) Elastic Net regularization (Elastic Net [6])

Elastic Net regularization integrates \(\ell _1\) and \(\ell _2\) regularization, which can balance the sparsity and connectivity of the weight matrix \(\beta \). Elastic Net regularization is very useful when multiple features are correlated with one another. The optimization model is as follows:

where \(\alpha \in \left[ 0,1\right] \) is the factor controlling the ratio of \(\ell _1\) to \(\ell _2\) regularization, and \(\alpha \Vert \beta \Vert _{1}+(1-\alpha )\Vert \beta \Vert _{2}^{2}\) is a convex combination of the \(\ell _1\) and \(\ell _2\) regularization terms. When \(\alpha =1\), it reduces to \(\ell _1\) norm regularization; when \(\alpha =0\), it reduces to \(\ell _2\) norm regularization. When \(\alpha \in (0,1)\), Elastic Net integrates the advantages of \(\ell _1\) and \(\ell _2\) to achieve elasticity. By adjusting \(\lambda \) and \(\alpha \), it can better adapt to the characteristics of different data.
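As a small worked example of the convex combination \(\alpha \Vert \beta \Vert _{1}+(1-\alpha )\Vert \beta \Vert _{2}^{2}\), the sketch below evaluates the penalty at the two endpoints and at a mixed setting. The function name and the sample vector are illustrative assumptions; the multiplier \(\lambda \) and the data-fit loss are deliberately left out.

```python
import numpy as np

def elastic_net_penalty(beta, alpha):
    """Return alpha * ||beta||_1 + (1 - alpha) * ||beta||_2^2 for alpha in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    beta = np.asarray(beta, dtype=float)
    l1_term = np.abs(beta).sum()
    l2_squared_term = np.square(beta).sum()
    return float(alpha * l1_term + (1.0 - alpha) * l2_squared_term)

beta = np.array([0.5, -2.0, 0.0])

lasso_term = elastic_net_penalty(beta, 1.0)  # pure l1: 0.5 + 2.0 = 2.5
ridge_term = elastic_net_penalty(beta, 0.0)  # pure squared l2: 0.25 + 4.0 = 4.25
mixed_term = elastic_net_penalty(beta, 0.5)  # 0.5 * 2.5 + 0.5 * 4.25 = 3.375
```

The mixed value always lies between the two endpoint values, which is what makes \(\alpha \) a convenient single knob for trading sparsity against shrinkage.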

2.3 Autoencoder

The purpose of the autoencoder is to address the lack of supervision for back propagation: it automatically obtains latent features from a dataset by using the data itself as the supervisory signal. By encoding and decoding the input to reconstruct the data, it can essentially be used as a feature extractor [18]. With the development of deep learning applications, autoencoders have gradually been combined with deep frameworks, optimizing parameters through multi-layer neural network training in a self-supervised way. Assume that \(X=[x_1,x_2,\ldots ,x_n]\in R^{d\times n}\) is the original input data, where d is the dimension of the samples and n is the number of samples. Taking an encoder with two coding layers as an example, the latent feature space obtained by encoding is:

where \(W^{\left( 1\right) }\) and \(b^{\left( 1\right) }\), \(W^{\left( 2\right) }\) and \(b^{\left( 2\right) }\) are the weights and biases of the first and second coding layers respectively, \(f_1\) and \(f_2\) are the activation functions of the corresponding layers, and Y is the latent feature extracted by the encoding layers. The original input data itself guides feature extraction, and the latent feature Y is decoded to obtain the reconstruction \({\hat{X}}\). The goal of the autoencoder is to make X and \({\hat{X}}\) as close as possible. The optimization model is as follows:

where \(\mathrm {\Theta }_e\) is the parameter of the encoder, \(g(\cdot )\) is the nonlinear mapping of the encoder, \(\mathrm {\Theta }_d\) is the parameter of the decoder, \(h(\cdot )\) is the nonlinear mapping of the decoder. For example, \(g(\cdot )\) can be a convolution operation, while \(h(\cdot )\) is the deconvolution operation, and \({\hat{X}}\) is a function of the parameters \(\{\mathrm {\Theta }_e,\mathrm {\Theta }_d\}\).
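To make the two-layer encoding and the reconstruction objective concrete, here is a minimal NumPy sketch. It assumes fully connected layers with ReLU activations and a single linear decoding layer, whereas the networks in this paper are convolutional; the weights are random placeholders and all names and dimensions are hypothetical.

```python
import numpy as np

def relu(a):
    return np.maximum(a, 0.0)

def encode(X, W1, b1, W2, b2):
    """Two coding layers: Y = f2(W2 f1(W1 X + b1) + b2), columns of X are samples."""
    hidden = relu(W1 @ X + b1)
    return relu(W2 @ hidden + b2)

def reconstruction_loss(X, X_hat):
    """Squared Frobenius norm ||X - X_hat||_F^2."""
    return float(np.sum((X - X_hat) ** 2))

rng = np.random.default_rng(0)
d, n, hidden_dim, latent_dim = 8, 5, 6, 3  # illustrative sizes only

X = rng.standard_normal((d, n))
W1, b1 = rng.standard_normal((hidden_dim, d)), np.zeros((hidden_dim, 1))
W2, b2 = rng.standard_normal((latent_dim, hidden_dim)), np.zeros((latent_dim, 1))
W3 = rng.standard_normal((d, latent_dim))  # assumed linear decoder

Y = encode(X, W1, b1, W2, b2)      # latent features, shape (latent_dim, n)
X_hat = W3 @ Y                     # reconstruction, shape (d, n)
loss = reconstruction_loss(X, X_hat)
```

Training would adjust the encoder and decoder weights to reduce `loss`; that optimization loop is omitted here.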

2.4 Deep Subspace Clustering Algorithm Based on Autoencoder

In subspace clustering, methods that perform deep feature extraction and self-representation learning at the same time are called deep subspace clustering. They differ from traditional subspace clustering methods in that they study the subspace structure after feature extraction. Peng et al. [19] proposed a structured deep autoencoder (StructAE), which learns a self-representation matrix Z under a sparse or non-sparse constraint and uses it as a prior to guide the encoder's dimensionality reduction to the latent space. Clustering is then performed in the latent feature space. The optimization model is as follows:

where the first term of the model is the reconstruction error to be minimized, and \({\hat{X}}\) is a function of the parameters \(\{\Theta _e,\Theta _d,Z\}\). However, the weakness of this method is that the prior self-representation matrix Z describes the relationships between the original high-dimensional samples, whose data may be redundant, so the prior guidance may not be ideal.

The self-representation matrix can be used as a prior constraint to better guide the learning of the neural network parameters, and it can also be learned jointly with the neural network as a set of parameters. Ji et al. [9] proposed the Deep Subspace Clustering (DSC) model, which combines an autoencoder with the self-representation model: the data are mapped to the latent feature space Y by the encoder, and a self-representation layer is introduced between the encoder and the decoder. The projected points in the latent space are used as nodes that represent one another, and Y and Z are solved by minimizing the reconstruction error and the self-representation error. The optimization model is as follows:

where \(\left\| {Z} \right\| _p\) is the regularization term, which can be \(\ell _1\) or \(\ell _2\) regularization. DSC trains the autoencoder network and the self-representation model jointly and learns the self-representation coefficients of the samples from their latent features. The self-representation matrix thus captures the relationships between samples after feature extraction. It describes the similarity between samples in the low-dimensional space more faithfully and is closer to the intrinsic relationships in the data, which benefits spectral clustering.

3 Elastic Deep Sparse Self-Representation Subspace Clustering Network

Inspired by deep subspace clustering based on the autoencoder, this paper extends the DSC-Net framework and proposes elastic deep sparse self-representation subspace clustering (EDS-SC). \(\ell _2\) regularization can effectively control overfitting of the trained model, while \(\ell _1\) regularization can enforce sparsity of the self-representation matrix; the two play opposite roles in balancing the connectivity and independence of subspaces. In general, \(\ell _2\) regularization drives some parameters close to 0, and \(\ell _1\) regularization drives some parameters exactly to 0. By combining the two, the elastic net achieves both shrinkage and sparsity [20]. To make the deep features extracted by the autoencoder sparse, a sparse constraint on the latent feature space Y is introduced into the objective function, and a sparse deep subspace clustering algorithm based on elastic net regularization is proposed. The optimization model is as follows:

where \(\zeta _{AE}=\left\| {X-{\hat{X}}} \right\| _F^2\) is the reconstruction error of the autoencoder, and \(\zeta _{SC}=\left\| {Y-YZ} \right\| _F^2\) is the self-representation error of subspace clustering in the latent feature space produced by the coding layers. \(\zeta _{EN}=\alpha _{EN}\left\| {Z} \right\| _1+(1-\alpha _{EN})\left\| {Z} \right\| _F^2\) is the regularization term based on Elastic Net; the balance between the Lasso and ridge regularization terms is adjusted by the elastic net parameter \(\alpha _{EN}\). \(\zeta _S=\left\| {Y} \right\| _1\) encourages the extracted deep features to be sparse, so that the latent features help improve the distinguishability between different clusters. Substituting these terms, we obtain the EDS-SC model as follows:

where \(\alpha _{SC}\) is the balance parameter for the self-representation error in subspace clustering, \(\alpha _{EN}\) sets the proportion between the two regularization terms of the elastic net, \(\lambda \) is the regularization parameter that weights these two terms, and \(\alpha _S\) is the balance parameter of the sparse feature term. \(\mathrm {\Theta }_e\) and \(\mathrm {\Theta }_d\) are the network parameters of the encoding layers and the decoding layers respectively. The network structure is shown in Fig. 2.

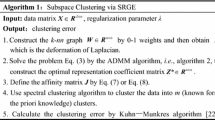
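The four terms \(\zeta _{AE}\), \(\zeta _{SC}\), \(\zeta _{EN}\) and \(\zeta _S\) are defined explicitly in the text, so their values are easy to compute. How they are weighted in the final objective is less certain in this copy, so the sketch below should be read as one plausible combination, assumed here to be \(\zeta _{AE}+\alpha _{SC}\zeta _{SC}+\lambda \zeta _{EN}+\alpha _S\zeta _S\), rather than as the paper's exact formula. The function name is hypothetical.

```python
import numpy as np

def eds_sc_loss(X, X_hat, Y, Z, alpha_sc, alpha_en, lam, alpha_s):
    """Evaluate an assumed EDS-SC objective for given arrays.

    X, X_hat: input and reconstruction (same shape).
    Y: latent features with samples as columns, shape (m, n).
    Z: self-representation matrix, shape (n, n).
    Assumption: the terms are combined as
    zeta_AE + alpha_sc * zeta_SC + lam * zeta_EN + alpha_s * zeta_S.
    """
    zeta_ae = np.sum((X - X_hat) ** 2)          # ||X - X_hat||_F^2
    zeta_sc = np.sum((Y - Y @ Z) ** 2)          # ||Y - YZ||_F^2
    zeta_en = (alpha_en * np.abs(Z).sum()
               + (1.0 - alpha_en) * np.sum(Z ** 2))  # elastic net on Z
    zeta_s = np.abs(Y).sum()                    # ||Y||_1 sparsity of features
    return float(zeta_ae + alpha_sc * zeta_sc + lam * zeta_en + alpha_s * zeta_s)

# Tiny hand-checkable example: perfect reconstruction, two latent samples.
X = np.ones((2, 2))
Y = np.array([[1.0, -1.0]])

loss_zero_Z = eds_sc_loss(X, X, Y, np.zeros((2, 2)),
                          alpha_sc=3.0, alpha_en=0.5, lam=0.5, alpha_s=1.0)
loss_identity_Z = eds_sc_loss(X, X, Y, np.eye(2),
                              alpha_sc=3.0, alpha_en=0.5, lam=0.5, alpha_s=1.0)
```

With Z equal to zero the self-representation error dominates, while the identity matrix makes that error vanish at the cost of the elastic net penalty. The identity is exactly the trivial solution that a zero-diagonal constraint on Z is meant to exclude.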

In the above objective function, the elastic net regularization weighs the \(\ell _1\) norm against the Frobenius norm, so that the self-representation matrix keeps the subspaces independent while keeping each subspace internally connected. The learning of the self-representation matrix can be optimized by adjusting the parameters jointly. Reference [9], however, considers only single-form regularization for the self-representation matrix Z, in two variants: (1) the \(\ell _1\) norm, denoted DSC-Net-L1, and (2) the \(\ell _2\) norm, denoted DSC-Net-L2. In subspace clustering based on spectral graph learning, the self-representation matrix is generally constrained to be sparse, low-rank or to have a grouping effect. However, applying any one of these constraints alone easily causes an imbalanced subspace structure and is not conducive to spectral clustering. The algorithm in this paper integrates two kinds of regularization terms to optimize the self-representation matrix of the latent feature space. Because no effective constraint is imposed on the image features after convolutional encoding, a sparse constraint on the coding layer is introduced. The extracted sparse feature Y is more helpful for learning the subspace structure of the self-representation model. The algorithm flow of EDS-SC is shown in Algorithm 1.

Assume that the network has \(2N+1\) layers: N layers each for the encoder and the decoder, and one self-representation layer. If the number of channels in the i-th layer of the encoder is \(c_i\) (with \(c_0=1\)) and the kernel size is \(k_i\times k_i\), then each layer has \(k_i^2c_{i-1}c_i\) kernel parameters. Because the encoder and decoder are symmetric, the total number of kernel parameters in the network is \(\sum _{i=1}^{N}2k_i^2c_{i-1}c_i\). The number of encoder biases is \(\sum _{i=1}^{N}c_i\), and the number of decoder biases is \(1+\sum _{i=1}^{N-1}c_i\). The self-representation layer mainly solves for the self-representation matrix. Assuming the dataset has n samples, the self-representation layer has \(n^2\) parameters. Therefore, the total number of parameters of EDS-SC is \(n^2+\sum _{i=1}^{N}2(k_i^2c_{i-1}+1)c_i-c_N+1\).
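The count can be checked by adding up the individual pieces listed above: kernel weights for encoder and decoder, encoder biases, decoder biases, and the self-representation layer. The helper below is an illustrative sketch; its name and the example configuration are hypothetical and do not correspond to any network in Table 1.

```python
def eds_sc_param_count(kernel_sizes, channels, n_samples, in_channels=1):
    """Sum the parameter counts of a symmetric convolutional autoencoder
    with an n x n self-representation layer.

    kernel_sizes[i] and channels[i] describe encoder layer i + 1;
    in_channels plays the role of c_0.
    """
    if len(kernel_sizes) != len(channels):
        raise ValueError("kernel_sizes and channels must have the same length")
    prev_channels = [in_channels] + list(channels[:-1])
    # Encoder and decoder kernels: 2 * k_i^2 * c_{i-1} * c_i per layer pair.
    kernel_params = sum(2 * k * k * c_prev * c
                        for k, c_prev, c in zip(kernel_sizes, prev_channels, channels))
    encoder_biases = sum(channels)                      # c_1 + ... + c_N
    decoder_biases = in_channels + sum(channels[:-1])   # c_0 + c_1 + ... + c_{N-1}
    self_representation = n_samples ** 2
    return kernel_params + encoder_biases + decoder_biases + self_representation

# Hypothetical configuration: N = 2, 3x3 kernels, 4 and 8 channels, 10 samples.
total = eds_sc_param_count(kernel_sizes=[3, 3], channels=[4, 8], n_samples=10)
```

For this configuration the pieces are 648 kernel weights, 12 encoder biases, 5 decoder biases and 100 self-representation coefficients. For realistic datasets the \(n^2\) term dominates, since n is in the hundreds or thousands.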

4 Experiment and Result Analysis

4.1 Experimental Datasets

Four datasets are used in our experiments: the face datasets Extended Yale B [21] (abbreviated as EYaleB) and ORL [22], the object dataset COIL20 [23], and the COVID19 dataset [24]. EYaleB includes face images of 38 people, with 64 images per person taken under different lighting conditions, for a total of 2432 images. ORL contains 40 subjects, and for each subject there are 10 samples with different facial expressions (eyes open/eyes closed, smiling/not smiling) and facial details (glasses/no glasses) under different lighting conditions. COIL20 contains 1440 grayscale images of 20 objects, with each object photographed on a turntable at \(5^{\circ }\) intervals. The COVID19 dataset consists of 17 patients from two categories (COVID-19 and non-COVID-19), each with 20 lung CT images, giving 200 confirmed and 140 unconfirmed images. Some examples from the datasets are shown in Fig. 3. For the COVID19 dataset, the upper two rows show non-COVID-19 patients and the lower two rows show patients with COVID-19. On comparison, the CT images of patients with COVID-19 show characteristics such as ground-glass opacities.

4.2 Experimental Setup

The EDS-SC network proposed in this paper is composed of a sparse convolutional encoder, a self-representation layer with Elastic Net regularization and a deconvolutional decoder. The stride of the convolution kernels is 2, the learning rate is \(\eta ={10}^{-3}\), and the ReLU activation function is used. Each input image is mapped by the sparse convolutional coding layers to the latent feature space Y. In the self-representation layer, nodes are fully connected by linear weights, with no bias and no nonlinear activation. Finally, the latent features are reconstructed back to the original size by the deconvolutional decoder. The experiments are based on the TensorFlow 1.15 framework and run on two GeForce RTX 2080 Ti GPUs. First, the sparse autoencoder is pre-trained to initialize the network parameters, and then the network is fine-tuned with the elastic net regularization and deep sparse feature terms of EDS-SC. For each dataset, the value of the elastic net balance parameter \(\alpha _{EN}\) is selected from \(\{0,0.1,0.2,0.3,0.4,0.5,0.6,0.7,0.8,0.9,1.0\}\), and the deep sparse feature parameter is fixed to \(\alpha _S=1\).

This paper compares shallow and deep methods that are closely related to the development of subspace clustering, including the following:

(1) Traditional subspace clustering methods: SSC [1] and LRR [4], i.e., sparse subspace clustering and low-rank representation subspace clustering.

(2) KSSC: Kernel Sparse Subspace Clustering (KSSC) [25], which introduces a kernel mapping on the basis of SSC to make the data linearly separable.

(3) EDSC: Efficient Dense Subspace Clustering (EDSC) [26], which introduces a new dictionary variable to approximate the original data, uses the dictionary variable to learn a representation coefficient matrix with a grouping effect, and constrains the sample points in the same subspace to be closely connected.

(4) AE+SSC: a convolutional autoencoder (AE) first extracts nonlinear features, and the SSC algorithm then clusters the latent feature samples. Feature extraction and clustering are separate processes.

(5) DSC: Deep Subspace Clustering (DSC) [9]. The variant using the \(\ell _1\) norm is denoted DSC-L1, and the variant using the \(\ell _2\) norm is denoted DSC-L2.

(6) RGRL: Relation-Guided Representation Learning (RGRL) [12]. The variant using the \(\ell _1\) norm is denoted RGRL-L1, and the variant using the \(\ell _2\) norm is denoted RGRL-L2.

Clustering accuracy (ACC) [27] and normalized mutual information (NMI) [28] are used as the clustering evaluation indices. ACC is calculated as:

where n is the number of samples, and \(s_i\) and \(r_i\) are the true labels and predicted labels respectively. The \(map(\cdot )\) function maps the cluster labels obtained by clustering to the equivalent true class labels; the Kuhn-Munkres algorithm is generally used to find this mapping. When \(s_i=map(r_i)\), \(\delta (s_i,map(r_i))=1\); otherwise \(\delta (s_i,map(r_i))=0\). Assume that \(\mathrm {\Omega }=\{w_1,w_2,\ldots ,w_k\}\) is the partition obtained by a clustering algorithm and \(C=\{c_1,c_2,\ldots ,c_k\}\) is the partition into the true clusters of the original data. NMI is defined as follows:

where \(I(\mathrm {\Omega };C)\) is mutual information, \(H(\mathrm {\Omega })\) is the entropy of \(\mathrm {\Omega }\), \((H(\mathrm {\Omega })+H(C))/2\) normalizes the mutual information. \(I(\mathrm {\Omega };C)\) and \(H(\mathrm {\Omega })\) are defined as follows:

where \(P(w_k)\) and \(P(c_j)\) are the probability that the sample belongs to class \(w_k\) and the probability that the sample belongs to class \(c_j\) respectively, \(P(w_k\cap c_j)\) is the probability of belonging to both class \(w_k\) and class \(c_j\).
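Both metrics can be computed directly from two label lists, as the following standard-library sketch illustrates. One substitution should be noted plainly: instead of the Kuhn-Munkres algorithm mentioned above, the label mapping is found by brute force over permutations, which gives the same optimum but is practical only for a handful of clusters. The function names are hypothetical, natural logarithms are used, and returning 1.0 when both partitions have zero entropy is a convention chosen for this sketch.

```python
import math
from collections import Counter
from itertools import permutations

def clustering_accuracy(true_labels, pred_labels):
    """ACC: best fraction of matches over one-to-one maps from clusters to classes."""
    true_ids = sorted(set(true_labels))
    pred_ids = sorted(set(pred_labels))
    if len(pred_ids) > len(true_ids):
        raise ValueError("this sketch assumes no more clusters than classes")
    pair_counts = Counter(zip(pred_labels, true_labels))
    best_hits = 0
    # Brute-force search standing in for the Kuhn-Munkres assignment.
    for mapped in permutations(true_ids, len(pred_ids)):
        hits = sum(pair_counts[(p, t)] for p, t in zip(pred_ids, mapped))
        best_hits = max(best_hits, hits)
    return best_hits / len(true_labels)

def normalized_mutual_information(true_labels, pred_labels):
    """NMI = I(Omega; C) / ((H(Omega) + H(C)) / 2)."""
    n = len(true_labels)
    cluster_counts = Counter(pred_labels)
    class_counts = Counter(true_labels)
    joint_counts = Counter(zip(pred_labels, true_labels))
    mutual_info = sum(
        (n_wc / n) * math.log(n * n_wc / (cluster_counts[w] * class_counts[c]))
        for (w, c), n_wc in joint_counts.items()
    )
    entropy_clusters = -sum((v / n) * math.log(v / n) for v in cluster_counts.values())
    entropy_classes = -sum((v / n) * math.log(v / n) for v in class_counts.values())
    normalizer = (entropy_clusters + entropy_classes) / 2.0
    return mutual_info / normalizer if normalizer > 0 else 1.0

true_labels = [0, 0, 1, 1, 2, 2]
permuted_pred = [1, 1, 0, 0, 2, 2]   # same partition, different label names

acc = clustering_accuracy(true_labels, permuted_pred)
nmi = normalized_mutual_information(true_labels, permuted_pred)
```

Because both metrics are invariant to renaming the clusters, the permuted prediction above scores as a perfect clustering.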

4.3 Experimental Result

The original size of the EYaleB images is \(192\times 168\), and we downsample them to \(42\times 42\). Similarly, we downsample ORL from \(112\times 92\) to \(32\times 32\), and COIL20 to \(32\times 32\). The CT images in the COVID19 dataset are \(1024\times 1024\), and we downsample them to \(224\times 224\) and normalize them. Because the datasets have different characteristics, the specific network structure differs for each dataset. The convolution kernel sizes, the number of channels per layer and the size of the self-representation matrix Z for the four datasets are shown in Table 1.

For the EYaleB dataset, during the fine-tuning phase, the number of training epochs is set to \(10+40k\), and the balance parameters of the self-representation layer are \(\alpha _{SC}=1.0\times {10}^{k/10-3}\) and \(\alpha _{EN}=0.5\). For the experiment with k classes (k subjects), the mean and median of the results over \(39-k\) trials are reported, as shown in Table 2.
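As a quick arithmetic illustration of these settings, the helper below evaluates the epoch count, \(\alpha _{SC}\) and the number of trials for a given k. The function name and the returned dictionary layout are hypothetical, and the formulas are taken directly from the sentence above.

```python
def eyaleb_finetune_settings(k, alpha_en=0.5):
    """Fine-tuning settings for a k-subject EYaleB experiment."""
    return {
        "epochs": 10 + 40 * k,                # 10 + 40k
        "alpha_sc": 1.0 * 10 ** (k / 10 - 3), # 1.0 x 10^(k/10 - 3)
        "alpha_en": alpha_en,
        "n_trials": 39 - k,                   # results averaged over 39 - k trials
    }

settings_10 = eyaleb_finetune_settings(10)
settings_38 = eyaleb_finetune_settings(38)
```

For k = 10 this gives 410 epochs, \(\alpha _{SC}=10^{-2}\) and 29 trials. For all 38 subjects it gives 1530 epochs and a single trial, so the weight on the self-representation error grows with the number of subjects.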

The results in Table 2 show that the clustering accuracy decreases as the number of subjects in the experiment increases. Compared with the traditional subspace clustering methods, the results of the deep subspace clustering methods are 10–30% higher. Compared with the deep subspace clustering methods DSC-L1 and DSC-L2, the accuracy of EDS-SC is higher for every number of clusters. In theory, Elastic Net can balance connectivity within classes and independence between classes in the self-representation matrix, and the deep sparse representation in the algorithm can suppress some outliers and noise.

Table 3 shows the clustering results of the comparison methods on all four image datasets. In most cases, the proposed EDS-SC method performs best. In addition, the following conclusions can be drawn from the table:

(1) Comparing the traditional shallow method SSC with KSSC, which is based on kernel mapping, KSSC does not improve the clustering results consistently, indicating that the kernel trick is not necessarily effective. Compared with the shallow subspace clustering methods, the deep subspace clustering methods improve the clustering results considerably, which is due to the powerful representation learning ability of the autoencoder neural network.

(2) On the basis of DSC, RGRL introduces sample relationships to guide representation learning, and the clustering results are improved. Both methods perform better with \(\ell _2\) regularization. The EDS-SC proposed in this paper introduces an Elastic Net regularization term and a deep feature sparsity term, which improves the accuracy on EYaleB, ORL, COIL20 and COVID19 by 0.47%, 1.75%, 0.98% and 3.62% respectively compared with RGRL-L2, the second-best method.

(3) EDS-SC brings the largest improvement on the COVID19 dataset. This is because EDS-SC introduces a sparsity term on the deep features, and extracting sparse latent features of medical images is helpful for clustering. Moreover, the COVID19 dataset has only two classes, and its self-representation matrix is sparse and low-rank, which is consistent with the goal of elastic net regularization. The nonlinear features of the face and object image datasets extracted by the autoencoder may not be as sparse as those of medical CT images, so the improvement on the COVID19 dataset is more obvious. Further, we conducted ablation experiments in Sect. 4.5 to verify the improvement contributed by each of the two innovations in this paper.

In addition, the code of some deep subspace clustering methods is not open source, including latent distribution preserving deep subspace clustering (DPSC) [11], Structured AutoEncoders for Subspace Clustering (StructAE) [10], Deep Adversarial Subspace Clustering (DASC) [13] and pseudo-supervised deep subspace clustering (PSSC) [7]. This paper compares against their experimental results on the COIL20 dataset, as shown in Table 4.

StructAE maintains the subspace structure of the original high-dimensional samples, which is not conducive to learning a low-dimensional subspace structure. DPSC keeps the distribution of samples consistent before and after feature extraction, and its clustering accuracy is high at 97.54%. DASC generates “fake” samples in the sampling layer and learns the subspace structure in the process of adversarial generation, which has high time complexity and does not converge easily. PSSC combines DSC with self-supervised learning that uses pseudo-labels as supervision. The EDS-SC method in this paper combines Elastic Net regularization with a sparsity term on the deep autoencoder features, and its clustering accuracy and normalized mutual information are 97.99% and 98.10%, respectively, which are better than the clustering results of the DSC and RGRL series of methods with single-form regularization. These clustering results show the effectiveness of the method in this paper.

Figure 4 takes COIL20 (first row) and ORL (second row) as examples to show the adjacency matrix A learned by the EDS-SC network proposed in this paper. Ideally, A should have a block diagonal structure. Each diagonal block of the similarity matrix corresponds to one class and one subspace. The similarity matrix reflects the relationships between data points: the denser the relationship, the greater the correlation. RGRL-L1 and RGRL-L2, which have the second-best clustering results, are selected for comparison with the EDS-SC method in this paper.

The energy of the COIL20 similarity graph is mainly distributed on the diagonal and in a very small neighborhood around it. Because each object in COIL20 is photographed at 5-degree rotation intervals, each image is similar only to the images at the nearest neighboring angles. The energy distribution nevertheless satisfies the block diagonal property, and the similarity within each class is relatively uniform and less noisy than in the ORL dataset. It can be seen from Fig. 4 that the adjacency matrix A learned by the EDS-SC method has a better block diagonal structure and fewer noise points than those learned with a single form of regularization, RGRL-L1 or RGRL-L2. Because EDS-SC constrains the encoder features to be sparse, the self-representation matrix learned from these sparse features performs better.
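The block diagonal property discussed above can also be checked numerically when ground-truth labels are available. The following is a minimal sketch, not the evaluation used in this paper: it assumes the adjacency matrix is formed by symmetrizing the absolute self-representation coefficients, and the function names `build_adjacency` and `block_diagonal_ratio` as well as the synthetic matrices are hypothetical illustrations.

```python
import numpy as np

def build_adjacency(Z):
    """Symmetrize a self-representation matrix into a non-negative adjacency matrix.

    Assumption: A = (|Z| + |Z|^T) / 2, a common choice in subspace clustering.
    """
    Z = np.abs(Z)
    return 0.5 * (Z + Z.T)

def block_diagonal_ratio(A, labels):
    """Fraction of the total adjacency weight that connects same-class points."""
    labels = np.asarray(labels)
    same_class = labels[:, None] == labels[None, :]
    total = A.sum()
    return float(A[same_class].sum() / total) if total > 0 else 0.0

# Synthetic example (illustrative data only): 3 classes with 4 points each.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1, 2], 4)
same_class = labels[:, None] == labels[None, :]

# Coefficients that live only inside the diagonal blocks.
Z_clean = same_class * rng.uniform(0.5, 1.0, size=(12, 12))
np.fill_diagonal(Z_clean, 0.0)

# The same coefficients plus small cross-class "noise" entries.
Z_noisy = Z_clean + 0.2 * rng.uniform(size=(12, 12))
np.fill_diagonal(Z_noisy, 0.0)

A_clean = build_adjacency(Z_clean)
A_noisy = build_adjacency(Z_noisy)
ratio_clean = block_diagonal_ratio(A_clean, labels)
ratio_noisy = block_diagonal_ratio(A_noisy, labels)
print(ratio_clean, ratio_noisy)
```

A ratio close to 1 indicates that almost all of the similarity energy lies inside the diagonal blocks, while cross-class noise points lower the ratio.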

4.4 Parametric Analysis

The EDS-SC model has four parameters \(\alpha _{SC},\alpha _{EN},\alpha _S,\lambda \), where the sparse feature parameter \(\alpha _S\) is fixed to 1. First, we explore how the balance parameter \(\alpha _{EN}\) of the Elastic Net regularization, which constrains the self-representation matrix, affects the clustering accuracy. \(\alpha _{EN}\) is selected from \(\{0,0.1,0.2,0.3,0.4,0.5,0.6,0.7,0.8,0.9,1.0\}\), and the clustering results on the four datasets at the different values are shown in Fig. 5.

The EDS-SC method reduces to a single form of \(\ell _1\) or \(\ell _2\) regularization when \(\alpha _{EN}=0\) or \(\alpha _{EN}=1\), respectively. It can be seen from Fig. 5 that the clustering performance is better when \(\alpha _{EN}\) lies in the intermediate range 0.1\(-\)0.9, which shows the effectiveness of Elastic Net regularization.

In the experiment, parameter analysis shows that the best clustering results on the four datasets EYaleB, COIL20, ORL and COVID19 are obtained with \(\alpha _{EN}\) equal to 0.4, 0.2, 0.7 and 0.5, respectively. Taking COIL20 and ORL as examples, we then examine the influence of the self-representation error parameter \(\alpha _{SC}\) and the regularization parameter \(\lambda \) on the clustering results with \(\alpha _{EN}\) set to its optimal value. It can be seen from Fig. 6 that the clustering accuracy on COIL20 and ORL is better when the balance parameter \(\alpha _{SC}\) is between 4 and 10 and the parameter \(\lambda \) is between 0.001 and 0.1.
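The two-stage procedure described in this section (first sweep \(\alpha _{EN}\), then vary \(\alpha _{SC}\) and \(\lambda \) at the best \(\alpha _{EN}\)) can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the authors' script: `two_stage_search`, the grid points for \(\alpha _{SC}\) and \(\lambda \), the initial values, and in particular `toy_evaluate` are hypothetical. `toy_evaluate` is a stand-in score with a known peak and does not represent any clustering accuracy reported in this paper; in practice it would be replaced by a function that trains the network and returns the clustering accuracy.

```python
import itertools

# Candidate values for alpha_EN as stated in the text: 0, 0.1, ..., 1.0.
ALPHA_EN_GRID = [round(0.1 * k, 1) for k in range(11)]

def two_stage_search(evaluate, alpha_sc_grid, lam_grid, alpha_sc0, lam0):
    """Stage 1: pick alpha_EN with alpha_SC and lambda held at initial values.
    Stage 2: grid-search alpha_SC and lambda at the selected alpha_EN."""
    best_en = max(ALPHA_EN_GRID, key=lambda a: evaluate(a, alpha_sc0, lam0))
    best_sc, best_lam = max(
        itertools.product(alpha_sc_grid, lam_grid),
        key=lambda pair: evaluate(best_en, pair[0], pair[1]),
    )
    return best_en, best_sc, best_lam

def toy_evaluate(alpha_en, alpha_sc, lam):
    """Hypothetical stand-in score (NOT a real accuracy); peaks at (0.4, 6, 0.01)."""
    return -((alpha_en - 0.4) ** 2 + 0.01 * (alpha_sc - 6) ** 2 + (lam - 0.01) ** 2)

# Assumed grids inside the ranges discussed above (4 to 10 and 0.001 to 0.1).
result = two_stage_search(
    toy_evaluate,
    alpha_sc_grid=[4, 6, 8, 10],
    lam_grid=[0.001, 0.01, 0.1],
    alpha_sc0=4,
    lam0=0.001,
)
print(result)
```

Searching \(\alpha _{EN}\) first keeps the number of training runs linear in the size of each grid, at the cost of possibly missing interactions between the three parameters.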

4.5 Ablation Experiment

The innovation of the EDS-SC method proposed in this paper has two parts: Elastic Net regularization balances the connectivity and independence of the self-representation matrix, and the deep sparse feature term constrains outliers and noise. This section conducts an ablation study on the effectiveness of these two parts:

(1) Only the Elastic Net regularization term, without the feature sparsity term. In this case \(\alpha _S=0\), and the algorithm is denoted ED-SC.

(2) Only the feature sparsity term, without the Elastic Net regularization term. When \(\alpha _{EN}=1\), this corresponds to DS-SC-L1, and when \(\alpha _{EN}=0\), it corresponds to DS-SC-L2.

(3) Neither the Elastic Net regularization term \((\alpha _{EN}=1\) or \(\alpha _{EN}=0)\) nor the feature sparsity term \((\alpha _S=0)\). When \(\alpha _{EN}=1\), EDS-SC degenerates to D-SC-L1. When \(\alpha _{EN}=0\), EDS-SC degenerates to D-SC-L2.
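The ablation variants above differ only in the values of \(\alpha _{EN}\) and \(\alpha _S\), which the following sketch makes explicit. It is a hedged illustration, not the paper's implementation: the functional form of the penalty (\(\alpha _{EN}\Vert Z\Vert _1+(1-\alpha _{EN})\Vert Z\Vert _F^2\), an \(\ell _1\) sparsity term on the features H, and \(\lambda \) weighting the Elastic Net term) is an assumption chosen to match the convention in items (2) and (3), where \(\alpha _{EN}=1\) gives the L1 variant. The names `elastic_net_penalty`, `feature_sparsity`, `regularization` and `ABLATION`, and the value 0.5 used for the mixed variants, are hypothetical.

```python
import numpy as np

def elastic_net_penalty(Z, alpha_en):
    """Assumed mix: alpha_en * ||Z||_1 + (1 - alpha_en) * ||Z||_F^2."""
    l1 = np.abs(Z).sum()
    l2 = np.square(Z).sum()
    return alpha_en * l1 + (1.0 - alpha_en) * l2

def feature_sparsity(H):
    """Assumed l1 sparsity penalty on the deep features H."""
    return np.abs(H).sum()

def regularization(Z, H, alpha_en, alpha_s, lam=1.0):
    """Regularization part of the objective (reconstruction losses omitted)."""
    return lam * elastic_net_penalty(Z, alpha_en) + alpha_s * feature_sparsity(H)

# Parameter settings for each ablation variant; 0.5 is a placeholder for
# any intermediate alpha_EN, and alpha_S = 1 follows Section 4.4.
ABLATION = {
    "EDS-SC":   {"alpha_en": 0.5, "alpha_s": 1.0},
    "ED-SC":    {"alpha_en": 0.5, "alpha_s": 0.0},
    "DS-SC-L1": {"alpha_en": 1.0, "alpha_s": 1.0},
    "DS-SC-L2": {"alpha_en": 0.0, "alpha_s": 1.0},
    "D-SC-L1":  {"alpha_en": 1.0, "alpha_s": 0.0},
    "D-SC-L2":  {"alpha_en": 0.0, "alpha_s": 0.0},
}

# Tiny worked example: ||Z||_1 = 3, ||Z||_F^2 = 5, ||H||_1 = 4.
Z = np.array([[0.0, 2.0], [-1.0, 0.0]])
H = np.array([[1.0, -3.0]])
penalties = {name: regularization(Z, H, **cfg) for name, cfg in ABLATION.items()}
for name, value in penalties.items():
    print(f"{name}: {value}")
```

Expressing every variant as a parameter setting of one function means a single training script can run the whole ablation study by looping over the dictionary.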

To explore the effectiveness of the two parts, we measure the clustering accuracy of the three types of methods above and the time each takes to train one epoch, as shown in Table 5.

The following conclusions can be drawn from the table:

(1) Compared with DSC-L1 and DSC-L2, the clustering accuracy of ED-SC, which is based on Elastic Net regularization, increases by 0.40%, 2.70%, 0.25%, and 1.55% on the four datasets, respectively. This shows that Elastic Net regularization strikes a better balance between \(\ell _1\) and \(\ell _2\) regularization. DS-SC-L1 or DS-SC-L2, which are based on the deep feature sparsity term, increase accuracy by 0.49%, 2.79%, 2.00%, and 4.35%, respectively, which is a larger gain than that of ED-SC. Theoretically, feature sparsity constraints can constrain outliers and noise and help to learn more effective latent features. The effect is strongest on the complex medical imaging dataset COVID19, which shows the largest improvement.

(2) The EDS-SC method proposed in this paper integrates the Elastic Net regularization term and the feature sparsity term, and its clustering accuracy reaches 97.99% on COIL20 and 93.12% on COVID19. Compared with DSC-L2, which has neither of these two terms, the improvement is significant, which verifies the effectiveness of the method proposed in this paper.

(3) The EDS-SC method takes only 0.21s, 0.08s, 0.02s and 0.15s longer than DSC-L1 to train one epoch on the four datasets. The images in the ORL and COIL20 datasets are not as complex as the medical CT images of COVID19, so the added time on them is relatively small. Because the EYaleB dataset has a large number of training samples, its added time cost is the largest.

5 Conclusion

The high dimensionality of data often makes it difficult to capture the essential geometric structure of the data, so clustering results are not ideal. Under theoretical conditions, the \(\ell _1\) norm helps to maintain the affinity within each subspace, while \(\ell _2\) and nuclear norm regularization improve the grouping effect and connectivity of the sample points but maintain the affinity only between independent subspaces. Elastic Net regularization can balance the preservation of subspace independence against subspace connectivity, and it prevents both excessive segmentation and excessive grouping. This paper proposes the EDS-SC algorithm, which balances the intra-class consistency and inter-class separation of data points through Elastic Net regularization and imposes sparsity on the features extracted by the autoencoder to obtain deep sparse features. Experiments on datasets from several fields show that the algorithm has good clustering performance and is suitable for high-dimensional datasets. In addition, because of the Elastic Net regularization, EDS-SC is especially suitable for datasets with a small number of classes. The reason is that the self-representation matrix of such datasets is sparse and low-rank, which is consistent with the goal of minimizing the Elastic Net regularization.

However, this paper has not studied how to balance the two regularizations, nor has it visualized the sparse feature selection performed by Elastic Net regularization. These will be important directions for future research. Furthermore, although deep autoencoder networks have been applied to subspace clustering, deep subspace clustering frameworks must load all images in a single batch, which is memory-consuming for neural networks and hinders parameter updates. Therefore, scalable deep subspace clustering is another direction for future work.

References

Elhamifar E, Vidal R (2009) Sparse subspace clustering. In: IEEE conference on computer vision and pattern recognition, pp 2790–2797

Elhamifar E, Vidal R (2012) Sparse subspace clustering: algorithm, theory, and applications. IEEE Trans Pattern Anal Mach Intell 35(11):2765–2781

Lu CY, Min H, Zhao ZQ et al (2012) Robust and efficient subspace segmentation via least squares regression. In: European conference on computer vision (ECCV), vol 7578, pp 347–360

Vidal R, Favaro P (2014) Low rank subspace clustering (LRSC). Pattern Recognit Lett 43(1):47–61

Zhu X, Zhang S, Li Y et al (2019) Low-rank sparse subspace for spectral clustering. IEEE Trans Knowl Data Eng 31(8):1532–1543

You C, Li CG, Robinson DP et al (2016) Oracle based active set algorithm for scalable elastic net subspace clustering. In: IEEE conference on computer vision and pattern recognition (CVPR)

Lv JC, Kang Z, Lu X et al (2021) Pseudo-supervised deep subspace clustering. IEEE Trans Image Process 66:5252–5263

Cai JY, Wang SP, Xu CY et al (2022) Unsupervised deep clustering via contractive feature representation and focal loss. Pattern Recognit 123:108386

Ji P, Zhang T, Li HD et al (2017) Deep subspace clustering networks. Neural Inf Process Syst 66:24–33

Peng X, Feng JS, Xiao SJ et al (2018) Structured autoEncoders for subspace clustering. IEEE Trans Image Process 27(10):5076–5086

Zhou L, Xiao B, Wang D et al (2019) Latent distribution preserving deep subspace clustering. In: 28th International joint conference on artificial intelligence

Kang Z, Lu X, Liang J et al (2020) Relation-guided representation learning. Neural Netw 131:93–102

Zhou P, Hou Y, Feng J (2018) Deep adversarial subspace clustering. In: IEEE Computer Society conference on computer vision and pattern recognition, pp 1596–1604

Cai JY, Wang SP, Guo WZ et al (2021) Unsupervised embedded feature learning for deep clustering with stacked sparse auto-encoder. Expert Syst Appl 186:115729

Valanarasu JMJ, Patel VM (2021) Overcomplete deep subspace clustering networks. In: IEEE winter conference on applications of computer vision, pp 746–755

Cai JY, Fan JC, Guo WZ et al (2022) Efficient deep embedded subspace clustering. In: The IEEE conference on computer vision and pattern recognition (CVPR)

Domingos P (2012) A few useful things to know about machine learning. Commun ACM 55(10):78–87

Zhang S, Wang M, Yang F et al (2019) Manifold sparse auto-encoder for machine fault diagnosis. IEEE Sens J 66(99):1

Xi P, Jiashi F, Shijie X et al (2018) Structured AutoEncoders for subspace clustering. IEEE Trans Image Process 27:5076–5086

Zou H, Hastie T (2005) Regularization and variable selection via the elastic net. J R Stat Soc Ser B Stat Methodol 67(2):301–320

Lee KC, Ho J, Kriegman DJ (2005) Acquiring linear subspaces for face recognition under variable lighting. IEEE Trans Pattern Anal Mach Intell 27(5):684–698

Cai D, He X, Hu Y et al (2007) Learning a spatially smooth subspace for face recognition. In: IEEE conference on computer vision and pattern recognition, pp 1–7

Nene SA, Nayar SK, Murase H (1996) Columbia object image library (coil-100)

Wang Q, Wang W, Chen X et al (2020) Deep learning based on high dimensional tensor for COVID-19 diagnosis. In: 2020 International conference on information science, parallel and distributed systems (ISPDS), pp 183–188

Patel VM, Vidal R (2014) Kernel sparse subspace clustering. In: IEEE international conference on image processing (ICIP), pp 2849–2853

Ji P, Salzmann M, Li H (2014) Efficient dense subspace clustering. In: IEEE winter conference on applications of computer vision (WACV), pp 461–468

Chen X, Jian C (2014) Gene expression data clustering based on graph regularized subspace segmentation. Neurocomputing 143:44–50

Luo S, Zhang C, Zhang W (2018) Consistent and specific multi-view subspace clustering. In: The thirty-second AAAI conference on artificial intelligence, pp 3730–3737

Acknowledgements

This research was supported by National Natural Science Foundation of China (Grant No. 71273053, 11571074) and Natural Science Foundation of Fujian Province (Grant No. 2022J01102).

Author information

Contributions

QW: Software, Validation, Writing—original draft. XC: Conceptualization, Methodology, Supervision, Writing—review. YL: Validation, Writing—final draft. YL: Validation, Writing—editing.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, Q., Chen, X., Li, Y. et al. Elastic Deep Sparse Self-Representation Subspace Clustering Network. Neural Process Lett 56, 58 (2024). https://doi.org/10.1007/s11063-024-11473-8
