Abstract

Kernelized fuzzy C-means clustering with weighted local information is an extensively applied robust segmentation algorithm for noisy image. However, it is difficult to effectively solve the problem of segmenting image polluted by strong noise. To address this issue, a reconstruction-aware kernel fuzzy C-mean clustering with rich local information is proposed in this paper. Firstly, the optimization modeling of guided bilateral filtering is given for noisy image; Secondly, this filtering model is embedded into kernelized fuzzy C-means clustering with local information, and a novel reconstruction-filtering information driven fuzzy clustering model for noise-corrupted image segmentation is presented; Finally, a tri-level alternative and iterative algorithm is derived from optimizing model using optimization theory and its convergence is strictly analyzed. Many Experimental results on noisy synthetic images and actual images indicate that compared with the latest advanced fuzzy clustering-related algorithms, the algorithm presented in this paper has better segmentation performance and stronger robustness to noise, and its PSNR and ACC values increase by about 0.16–3.28 and 0.01–0.08 respectively.

Similar content being viewed by others

1 Introduction

Image segmentation [1] is a common theoretical foundation in image understanding [2], machine vision [3, 4], and image analysis [5]. It divides the image into a certain number of non-overlapping, homogeneous regions or semantic objects with similar hue, color, intensity, and other characteristics. Over the past several decades, large numbers of researchers have put forward various image segmentation algorithms, which have promoted the rapid development of image interpretation technology. According to different features, existing image segmentation methods are divided into the following categories: thresholding method [6], watershed transformation [7], region growing method [8], edge detection method [9], neural network-based learning method [10], and clustering method [11]. Among them, clustering methods based on fuzzy theory have received widespread attention from many researchers in recent years due to the fuzziness of image itself.

Fuzzy C-means (FCM) clustering [12] is one of the popular fuzzy clustering techniques, which adopts membership as a discriminant to divide data. When the image is not contaminated by noise, the FCM algorithm can obtain the satisfactory segmented result [13]. However, when the image contains noise with different intensities, FCM is difficult to achieve satisfactory segmented result. Therefore, to address the susceptibility of FCM to noise in image, Ahmed et al. [14] presented a robust FCM algorithm incorporating spatial information (FCM-S). This algorithm introduces local spatial neighborhood information constraints to provide continuity between the feature values of the central pixel and its neighborhood pixels, thereby achieving the utilization of spatial information and improving its robustness to noise to a certain extent. Although FCM_S incorporates spatial neighborhood information, it is still short of strong robustness against noise, and its runtime is much longer than that of FCM. Therefore, to overcome such limitation, Chen and Zhang [15] modified FCM-S and presented two variants, FCM-S1 and FCM-S2, which reduces the calculation complexity of the algorithm. However, to effectively resist noise, it is imperative to select the regularization factor for local spatial information constraints based on experience. Considering the shortcomings of the above algorithms, Cai et al. [16] further presented a fast generalized FCM algorithm (FGFCM), which solves the challenge of relying on parameter experience adjustment. This improved the FGFCM algorithm guarantees anti-noise performance while preserving image details. However, the FGFCM algorithm is not directly applied to the original image, but rather balances the relationship between anti-noise robustness and image details preservation by selecting parameters that require repeated experiments to determine. To solve the above problem, Krinidis and Chatzis [17] introduced a new local fuzzy factor into the FCM algorithm and presented a robust FCM with local information constraints (FLICM). This algorithm can adaptively resist noise to some extent by fuzzy fusion of the spatial distance and gray information of neighborhood pixels. To further improve the anti-noise ability of the FLICM algorithm, Gong et al. [18] introduced a kernel method into the FLICM algorithm and presented a FCM algorithm with local information and kernel metrics to segment noisy image (KWFLICM). This algorithm uses a nonlinear function to map linearly non-separable data in lower space to high feature space, enhancing the data linearly separable. Although kernel method can effectively solve the problem of nonlinear classification, it still poses a challenge to solve the segmentation problem of strong noise-polluted images.

To reduce the influence of noise on the fuzzy clustering correlation algorithms, we usually need to resist noisy images. Gaussian filtering (GS) [19] is a classic low-pass filter, which can usually be used to process high levels of noise or rough textures for image restoration and reconstruction applications [20]. Wan et al. [21] presented an improved FCM algorithm based on Gaussian filtering (IFCM), but Gaussian filtering will lose part of feature information when smoothing the image, making the segmented results have obvious loss of details. Tomasi et al. [22] presented a bilateral filtering (BF) with better filtering performance, but the gradient of bilateral filtering near the main edge has some distortion. Therefore, He et al. [23] presented a guided filter (GF) method, which has the same good edge smoothing characteristics as bilateral filter, and it is not affected by gradient inversion artifacts. Therefore, this method is widely used in image enhancement [24], high dynamic range compression (HDR) [25], image extinction [26], and other scenes. Guo et al. [27] presented a new FCM algorithm with integrating guided filter for noise-polluted image segmentation (FCMGF). This algorithm directly uses the guided filter on the membership matrix during the iteration process, but the guided filter parameters are set to a fixed value, which weakens the noise tolerance ability of the FCMGF algorithm under different intensity noise conditions. However, guided filtering often leads to overly smooth edges and distorted appearance when processing images with complex textures and noise. Liu et al. [28] presented a local entropy-based window perception guided image filter (WGBF) by combining Gaussian entropy filter (GEF) with guided filtering. This filter has good performance in image de-noising, texture smoothing, and edge extraction.

Recently, large numbers of researchers have presented a combination of FCM and deep learning for cluster analysis and image understanding. Feng et al. [29] presented an affinity graph-regularized deep fuzzy clustering (GrDNFCS) to strength the robustness of deep features. Lei et al. [30] presented a deep learning based FCM algorithm based on loss function and entropy weight learning (DFKM) for image segmentation. This algorithm has good segmentation performance and strong anti-noise robustness, but its time overhead is too high. Huang et al. [31] presented a fully convolutional learning network guided by fuzzy information, which can better handle uncertainty. Gu et al. [32] presented a deep possibilistic clustering correlation algorithm that optimizes the clustering centers, thereby improving the efficiency and accuracy of clustering. Pitchai et al. [33] presented a combining framework method that combines artificial neural networks and fuzzy k-means to increase segmentation accuracy. Chen et al. [34] presented an FCM-based deep convolutional network to complete fast and robust segmentation of SPECT/CT images. However, these algorithms also have the following clear problems: (1) due to the randomness of noise, the construction of training datasets for noisy image segmentation has a certain degree of uncertainty; (2) excessive training time makes the real-time application of these algorithms very challenging; (3) due to the randomness and diversity of noise, the generalization learning ability of these algorithms significantly decreases, resulting in weaker noise-resistant performance; (4) due to the difficulty in fully utilizing the local spatial information of pixels in deep learning networks, they are unable to effectively segment high noisy images; (5) due to their high hardware resource overhead, these algorithms are difficult to apply in embedded systems. Therefore, robust FCM-related algorithms with abundant local information constraints still have significant advantages in intelligence transportation, industrial automation monitoring, and moving target tracking.

Compared with existing algorithms that combine deep learning with fuzzy clustering, the segmentation algorithm of fuzzy clustering does not require too much prior knowledge, has low time complexity, fast processing speed, and is widely applied. Therefore, this paper will continue to explore kernel based FCM with richer local information. To address the issue of high noise-polluted images, a reconstruction-aware kernel based fuzzy clustering algorithm incorporating adaptive local information is presented in this paper.

2 Research Motivation

A good segmentation algorithm with strong robustness and adaptability will be able to effectively process complex images with noise of various types and intensities. In these classic denoising algorithms [17, 18], the similarity between neighbourhood pixels is constantly used to enhance the denoising ability of the algorithm, and local self-similarity and structural redundancy on the image can be used to enhance the adaptability of the algorithm to different noisy images. We also believe that reasonable utilization of the local spatial and gray information in noisy image may effectively preserve the details of the image. In summary, improving the anti-robustness of the algorithm not only exploits local information of neighborhood pixels, but also relies on current pixel information of original information in noisy images.

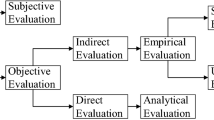

In this paper, the main work can be divided into the following modules as shown in Fig. 1.

-

(1)

The noisy image is preprocessed by the local entropy-based Gaussian filter and the corresponding filtered information is used to drive the bilateral filter.

-

(2)

The guided bilateral filter model is integrated into fuzzy C-means clustering with kernel metric and local information to construct the proposed algorithm.

-

(3)

The tri-level alternating iteration algorithm with adaptive information reconstruction is used to segment noisy images from standard datasets.

-

(4)

Evaluation and analysis of the segmentation results confirm progressiveness and advantages of the proposed algorithm.

The major contributions of this paper are emphasized as below:

-

Guided bilateral filter is embedded into robust kernelized fuzzy local information C-means clustering, and a robust kernel based fuzzy local information clustering model motivated by information reconstruction is originally proposed.

-

Robust iterative algorithm for optimal model is strictly derived through Lagrange multiplier method, so that the algorithm has a solid mathematical theoretical foundation.

-

The algorithm presented in this paper is rigorously proved to be locally convergent using Zangwill theorem, providing a sound theoretical support for its widespread application.

-

Extensive experiments indicate that the presented algorithm outperforms many existing robust fuzzy clustering correlation algorithms and addresses the issue of KWFLICM not being fully suitable for high noise-polluted image segmentation.

The remaining contents of this paper are structured as follows. Section 2 briefly introduces bilateral filtering and guided filtering methods, and robust FLICM-related algorithms. Section 3 puts forward the presented algorithm and rigorously analyzes its local convergence. Section 4 validates the effectiveness, robustness, and advantages of the presented algorithm through experimental testing and comparative analysis. Section 5 comes to the conclusions in this paper.

3 Preliminaries

In this section, we mainly introduce the fundamental theories related to this paper, including bilateral filtering, guided filtering, FCM and its robust algorithms.

3.1 Image Filtering Method

Given image \(X = \{ x_{i} |i = 1,2, \cdots ,n\}\), and the size of image is \(n\). The optimizing model of bilateral filtering [22] is formulated as follows.

where \(g_{\sigma } ( \cdot )\) and \(g_{r} ( \cdot )\) are the grayscale Gaussian function and spatial information Gaussian function respectively.

By using the classic least square method, the optimal output of this filter is given as follows.

where \(k_{i} = \sum\nolimits_{{j \in N_{i} }} {g_{r} (||i - j||)g_{\sigma } (||x_{i} - x_{j} ||)}\), \(x_{j}\) is the intensity value of the image at a pixel \(j\), \(N_{i}\) is a local neighborhood window around pixel \(i\), and the radius of local neighborhood window is \(r\).

Generally, bilateral filtering has better edge preserving performance than Gaussian filtering, but it cannot effectively solve the problem of image denoising in the presence of strong noise.

To find a better filtering effect and solve the problem that there is no gradient deformation near the main edge of bilateral filtering, He et al. [23] presented guided filtering. Given a guided image \(G = \{ G_{i} |i = 1,2, \cdots ,n\}\), and noisy image \(I = \{ I_{i} |i = 1,2, \cdots ,n\}\). The optimizing model of guided filtering is formulated as follows.

where \(A = \{ a_{i} |i = 1,2, \cdots ,n\}\) and \(B = \{ b_{i} |i = 1,2, \cdots ,n\}\).\(\varepsilon\) is a regularization parameter.

By using the least square method, the formulas of parameters \(a_{i}\) and \(b_{i}\) can be obtained as follows.

The final output of this filter is as follows.

where \(\hat{a}_{i} = |N_{i} |^{ - 1} \cdot \sum\nolimits_{{j \in N_{i} }} {a_{j} }\) and \(\hat{b}_{i} = |N_{i} |^{ - 1} \cdot \sum\nolimits_{{j \in N_{i} }} {b_{j} }\).

Guided filtering has been widely applied in image processing. However, when handling complex texture images and high noise-polluted images, it often leads to overly smooth edges and distorted appearance. Therefore, the combination of guided image filtering and bilateral filtering is an important approach to address the issue of noisy image restoration.

3.2 FCM and Its Robust Variants

The FCM algorithm is an unsupervised fuzzy partition clustering technique. The optimizing model is given as follows.

s.t. \(0 \le u_{ij} \le 1,1 \le i \le n,1 \le j \le c\); \(\sum\nolimits_{j = 1}^{c} {u_{ij} = 1} ,1 \le i \le n\); \(0 < \sum\nolimits_{i = 1}^{n} {u_{ij} } < n,1 \le j \le n\).

where \(d_{ij}^{2} = ||x_{i} - v_{j} ||^{2}\) represents the squared Euclidean distance of sample \(x_{i}\) to the clustering center \(v_{j}\), \(m\) is the fuzzy weighted exponent (also called fuzzifier) and is constantly selected between 1.5 and 2.5.

The iterated expressions of membership and the clustering center for FCM are as follows.

The classic FCM algorithm is susceptible to noise and outliers, and it is prone to being trapped in local optima. Introducing local spatial neighborhood information into the FCM algorithm enhances the algorithm’s capability to resist noise. However, under various types and intensities of noise, these spatial fuzzy clustering algorithms [14,15,16] is short of some adaptability to image segmentation.

Given that robust spatial fuzzy clustering algorithms make it challenging to choose the regularized factor constrained by spatial information, Chatzis et al. [17] introduced the local fuzzy factor in the FCM algorithm and presented a novel FLICM algorithm, which fuses the spatial and grayscale information of neighboring pixels and suppresses the impact of noise on image segmentation to some extent. The FLICM algorithm is modelled as follows.

s.t. \(0 \le u_{ij} \le 1,1 \le i \le n,1 \le j \le c\); \(\sum\nolimits_{j = 1}^{c} {u_{ij} = 1} ,1 \le i \le n\); \(0 < \sum\nolimits_{i = 1}^{n} {u_{ij} } < n,1 \le j \le n\).

where fuzzy local factor \(G_{ij} = \sum\nolimits_{{k \in N_{i} ,\;k \ne i}} {\frac{1}{{d_{ki} + 1}}\left( {1 - u_{kj} } \right)^{m} d_{kj}^{2} }\).

The iterated expressions of membership and the clustering center for FLICM are as follows:

To reduce algorithm complexity, the clustering center of Eq. (12) can be roughly approximated as

Compared with the FCM algorithm, the FLICM algorithm increases the spatial and grayscale information of neighborhood pixels and has stronger robustness and adaptability to various noises. But the FLICM algorithm cannot effectively handle the segmentation problem of images with strong noise.

Later, Gong et al. [18] presented the widely applied KWFLICM algorithm based on FLICM by using a kernel-based learning approach. KWFLICM can use nonlinear mapping to map linearly non-separable samples in low space to high feature space, enhancing the data linearly separable. The optimizing model for KWFLICM is as follows.

s.t. \(0 \le u_{ij} \le 1,\forall i,j\); \(\sum\nolimits_{j = 1}^{c} {u_{ij} = 1} ,\forall i\); \(0 < \sum\nolimits_{i = 1}^{n} {u_{ij} } < n,\forall j\).

where weighted kernel local fuzzy factor \(G^{\prime}_{ij}\) is

The iterated expressions of membership and the clustering center for KWFLICM are as follows:

In KWFLICM algorithm, due to Gaussian kernel metric learning, the running time is too long to meet the needs of many applications. Therefore, the iterative formula for clustering center \(v_{j}\) is constantly approximated as

So far, KWFLICM algorithm is widely applied in noisy image segmentation. But it is difficult to effectively restrain high Gaussian noise and speckle noise, and it is not entirely suitable for widespread application in the case of strong noise.

4 Related Work

In this section, we introduce the theory related to the algorithms in this paper, including guided bilateral filtering and fuzzy C-means clustering with the use of the reconstructed data. These support the research work of this paper.

4.1 Guided Bilateral Filtering

To effectively reconstruct the original image from noisy image, this paper first uses the local entropy of neighborhood pixels around the central pixel to construct a local entropy-based weighted Gaussian filter. Then this weighted Gaussian filter with local entropy is used to handle noisy image, and the preprocessed image with weak noise is obtained. Finally, the bilateral filter is guided by the preprocessed image, and a guided bilateral filter similar to the image guided filter is obtained.

Given noisy image \(I = \{ I_{i} |i = 1,2, \cdots ,n\}\), a local neighborhood window centered on pixel \(I_{i}\) is denoted as \(\Omega_{i}\). The local entropy of pixel \(I_{i}\) is defined as

where \(L\left( {\Omega_{i} } \right)\) is the number of gray levels in local window \(\Omega_{i}\), \(\xi_{l}\) is the probability of the \(l\)th gray level in \(\Omega_{i}\).

According to the local entropy [35] of the image, we construct the local entropy-based Gaussian filter, and the optimizing model is.

where \(g_{{\sigma_{1} }} \left( {\left\| {I_{i} - I_{k} } \right\|} \right) = \exp \left( { - \sigma_{1}^{ - 2} \cdot \left\| {I_{i} - I_{k} } \right\|^{2} } \right)\).

By using the least square method, the optimal solution of Eq. (20) is obtained as follows.

Therefore, \(\hat{G} = \{ \hat{G}_{i} |1 \le i \le n\}\) is the filtered image obtained by local entropy-based weighted Gaussian filter on noisy image \(I\). The preprocessed image \(\hat{G}\) is used to drive bilateral filter and a Gaussian filtering image-guided bilateral filtering optimization model is reconstructed as follows.

where \(g_{r} (||i - k||) = \exp ( - \gamma^{ - 2} \cdot ||i - k||^{2} )\) is the spatial Gaussian function of pixel position for two pixels \(I_{i}\) and \(I_{k}\). \(g_{{\sigma_{2} }} (||\hat{G}_{i} - \hat{G}_{k} ||) = \exp ( - \sigma_{2}^{ - 2} ||\hat{G}_{i} - \hat{G}_{k} ||^{2} )\) is the grayscale Gaussian function of two pixels \(\hat{G}_{i}\) and \(\hat{G}_{k}\).

By using the least square method, the optimal solution of Eq. (22) is obtained as follows.

Therefore, \(\hat{I} = \{ \hat{I}_{i} |1 \le i \le n\}\) is the filtering results of Gaussian filtering image guided bilateral filter on noisy image \(I\).

On the whole, Gaussian filtering image guided bilateral filter retains the advantages of bilateral filter in simplicity and parallelism, and has a good denoising effect for images with high noise.

4.2 Fuzzy C-Means Clustering with the Use of the Reconstructed Data

Zhang et al. [37] presented a new tri-level iterative method for FCM using reconstructed data (RDFCM). The optimizing model is.

s.t. \(0 \le u_{ij} \le 1,i = 1,2, \cdots ,n,j = 1,2, \cdots ,c\); \(\sum\nolimits_{j = 1}^{c} {u_{ij} = 1} ,i = 1,2, \cdots ,n\); \(0 < \sum\nolimits_{i = 1}^{n} {u_{ij} } < n,j = 1,2, \cdots ,c\).

where a set of data \(X^{*} = \{ x_{i}^{*} |i = 1,2, \cdots ,n\}\) is clustered, and \(X = \{ x_{i} |1 \le i \le n\}\) is the constructed data.\(T\) is a positive regularization factor.

From Eq. (24), the iterated expressions for the tri-level alternative and iterative algorithm are as follows.

In the RDFCM algorithm mentioned above, the reconstructed data are directly applied to update the clustering center and membership degree. Inspired by this RDFCM algorithm, this paper combines guided bilateral filtering with robust kernel space fuzzy clustering with local information to construct a new robust clustering algorithms for solving the segmentation problem of images with strong noise.

5 Proposed Algorithm

Although the widely applied KWFLICM algorithm has better segmentation performance and stronger robustness to noise than the FLICM algorithm, it is difficult to effectively handle images with heavy noise. Therefore, this section will research a reconstruction-aware kernelized FCM with adaptive local information for noise-corrupted image segmentation. Figure 2 gives the total framework of the algorithm presented in this paper.

To address the issue of segmenting blurred images, Lelandais and Ducongé [36] presented a segmentation model of combing the maximum likelihood expectation maximization deconvolution with FCM for blurred image segmentation. Inspired by this combination model for blurred image segmentation, this paper presents a new segmentation model of combing guided bilateral filtering and weighted kernelized FCM with local information for high noise-polluted image segmentation. In this presented main framework given in Fig. 2, the noise-corrupted image is smoothed by a Gaussian filter to obtain a preprocessed image, and it is further processed by a Gaussian entropy filter to provide filter weights for the guided bilateral filter. Combining the idea of guided bilateral filter and KWFLCM algorithm, a new robust fuzzy clustering algorithm is constructed. Reconstructed data, fuzzy membership, and the clustering centers are solved through alternating iteration until the algorithm converges. Finally, the noisy image is segmented into different regions based on the principle of maximum fuzzy membership.

5.1 Optimal Modeling

According to the idea of FCM motivated by the reconstructed data, this paper combines Gaussian filtering image guided bilateral filtering with the KWFLICM algorithm, and a reconstruction-aware kernelized FCM algorithm with adaptive local information is put forward for noise-corrupted image segmentation. The optimizing model is originally constructed as

s.t. \(0 \le u_{ij} \le 1,i = 1,2, \cdots ,n,j = 1,2, \cdots ,c\); \(\sum\nolimits_{j = 1}^{c} {u_{ij} = 1} ,i = 1,2, \cdots ,n\); \(0 < \sum\nolimits_{i = 1}^{n} {u_{ij} } < n,j = 1,2, \cdots ,c\).

where \(U = [u_{ij} ]_{n \times c}\) denotes fuzzy membership partition matrix, \(F = [f_{i} ]_{n \times 1}\) denotes the pixel value obtained after reconstructing pixel \(i\), \(V = [v_{j} ]_{c \times 1}\) denotes the clustering center, \(\hat{G}\) denotes a guided image from Gaussian filtering via local entropy in noisy image \(I\). \(I\) denotes an input noisy image. \(g_{\gamma } \left( {\left\| {i - k} \right\|} \right)\) is a spatial Gaussian kernel function, \(g_{{\sigma_{2} }} \left( {\left\| {\hat{G}_{i} - \hat{G}_{k} } \right\|} \right)\) is a grayscale Gaussian kernel function. \(N_{i}\) denotes the local neighbourhood window with the radius of \(r\), \(\Omega_{i}\) is the local neighborhood window around the current pixel for bilateral filtering, \(\tau\) is the positive regularized factor, \(G^{\prime}_{ij}\) denotes the weighted kernelized local fuzzy factor, and is defined as

where \(K(f_{i} ,v_{j} )\) is a Mercer Gaussian kernel function, defined as

In Eq. (30), the parameter \(\sigma\) is the bandwidth, the size of which has a significant impact on the image segmentation results.

where \(d_{i} = ||f_{i} - \overline{f}||\), \(\overline{d} = n^{ - 1} \sum\nolimits_{i = 1}^{n} {d_{i} }\), \(\overline{f} = n^{ - 1} \cdot \sum\nolimits_{i = 1}^{n} {f_{i} }\).

The local weighted coefficient \(w_{ik}\) is given as follows.

where \(w_{ki}^{(sc)} = \frac{1}{{d_{ki} + 1}}\). \(w_{ki}^{(gc)}\) is the local gray-level constraint defined as

where \(\eta_{ki} = \frac{{\xi_{ki} }}{{\sum\nolimits_{{k \in N_{i} }} {\xi_{ki} } }}\), \(\xi_{ki} = \exp ( - (C_{ki} - \overline{C}_{i} ))\), \(\overline{C}_{i} = |N_{i} |^{ - 1} \sum\nolimits_{{k \in N_{i} }} {C_{ki} }\), \(C_{ki} = \frac{{Var(f_{i} )}}{{\overline{f}_{i} }}\), \(Var(f_{i} ) = |N_{i} |^{ - 1} \cdot \sum\nolimits_{{k \in N_{i} }} {||f_{k} - \overline{f}_{i} ||}^{2}\), \(\overline{f}_{i} = |N_{i} |^{ - 1} \cdot \sum\nolimits_{{k \in N_{i} }} {f_{k} }\).

5.2 Model Solution

For Eq. (28), by Lagrange multiplier method, we construct the following unconstrained objective function.

We calculate the partial derivatives of \(L(U,V,F,\lambda )\) with respect to \(u_{ij}\), \(v_{j}\), \(\lambda_{i}\), and \(f_{i}\) respectively, and set them to zero.

5.2.1 Update \(U\)

From Eq. (35), it can obtain

Equation (37) is substituted into Eq. (36), we can obtain

So that, we have

Equation (39) is substituted into Eq. (37), and we obtain the iterative expression of fuzzy membership \(u_{ij}\) as follows.

5.2.2 Update \(V\)

According to \(\frac{\partial L}{{\partial v_{j} }} = 0\), we have

So then, the iterative expression of the clustering center is obtained as follows.

Considering the high computational complexity, according to references [17, 18], Eq. (42) can be roughly approximated as

5.2.3 Update \(F\)

According to \(\frac{\partial L}{{\partial f_{i} }} = 0\), we have

Hence,

According to Eqs. (40), (43), and (45), we can construct the tri-level alternating iteration algorithm to resolve the optimizing problem of Eq. (28).

From Fig. 3, it can be seen that neighborhood window with the size of \(3 \times 3\) is selected, the iterative changes of fuzzy membership, pixel reconstruction information, and clustering centers intuitively reflect that the presented algorithm is a tri-level alternative and iterative algorithm. In one neighborhood of noisy images, we identify normal pixels with gray values of 10 and 0, and noisy pixels with gray values of 120, 154, 109, and 85. In the first iteration, the membership of noise pixels is relatively low, and after three iterations using the RDKWFLICM method, the membership values of noisy pixels are much the same as those of the surrounding pixels. The testing results demonstrate that the presented RDKWFLICM algorithm can effectively suppress the effect of noise on the clustering algorithm.

5.3 Tri-Level Alternative Iteration Algorithm

The pseudocode of the presented tri-level alternation and iterative method for noise-corrupted image segmentation is given in Algorithm 1.

5.4 Analysis of Algorithm Convergence

If the algorithm presented in this paper can locally converge, the tri-level alternative iteration process needs to meet the constrained conditions in the Zangwill’s theorem [38,39,40] that the objective function \(J(U,V,F)\) is a decreasing function. As \(J(U,V,F)\) is a decreasing function, the following three propositions for function \(J(U,V,F)\) are true. So we construct the unconstrained Lagrange function as follows.

Proposition 1

Given \(V\) and \(F\), let \(L_{1} (U,\lambda ) = L(U,V,F,\lambda )\), \(U^{*}\) must be a strict local minimum point of \(L_{1} (U,\lambda )\) when and only when \(u_{ij}^{*}\) is calculated by Eq. (40).

Proposition 2

Given \(U\) and \(F\), let \(L_{2} (V) = L(U,V,F,\lambda )\), \(V^{*}\) must be a strict local minimum point of \(L_{2} (V)\) when and only when \(v_{j}^{*}\) is calculated by Eq. (42).

Proposition 3

Given \(U\) and \(V\), let \(L_{3} (F) = L(U,V,F,\lambda )\), \(F^{*}\) must be a strict local minimum point of \(L_{3} (F)\) when and only when \(f_{i}^{*}\) is calculated by Eq. (44).

-

(1)

Given \(V\) and \(F\), if \(U^{*}\) be the local minimum point of \(L_{1} (U,\lambda )\), we have \(\frac{{\partial L_{1} (U^{*} ,\lambda )}}{{\partial u_{ij} }} = 0\), namely

$$ \frac{{\partial L_{1} (U^{*} ,\lambda )}}{{\partial u_{ij} }} = mu_{ij}^{m - 1} [1 - K(f_{i} ,v_{j} ) + G^{\prime}_{ij} ] - \lambda_{i} = 0 $$(47)

So then

Equation (48) is substituted into the constrained condition \(\sum\nolimits_{j = 1}^{c} {u_{ij} = 1,\forall i}\), we obtain

Therefore, Eq. (49) is a necessary condition for function \(L_{1} (U,\lambda )\) to have a minimum value.

We find Hessian matrix of function \(L_{1} (U,\lambda )\) with respect to \(u_{ij}\) at \(U = U^{*}\), namely

From Eq. (50), this Hessian matrix is positive definite. Therefore, \(U^{*}\) is a strict local minimum point of \(L_{1} (U,\lambda )\).

If some constraints are considered, the bordered Hessian matrix [41,42,43] related to Lagrange multipliers method must also be evaluated. If \(V\) and \(F\) are fixed, the corresponding bordered Hessian matrix of \(u_{i} = [u_{i1} \;u_{i2} \; \cdots \;\;u_{ic} ]\) and \(\lambda_{i}\) are given as follows.

where \(\frac{{\partial^{2} L_{1} (u_{i} ,\lambda_{i} )}}{{\partial \lambda_{i} \partial u_{ij} }} = - 1,j = 1,2, \cdots ,c\).

The leading principal minors of this matrix \(H_{{L_{1} }} (u_{i} ,\lambda_{i} )\) are evaluated as follows.

Hence, \(L_{1} (U,\lambda )\) subject to \(\sum\limits_{i = 1}^{c} {u_{ij} = } 1\) is locally minimized at \({\varvec{U}}^{*} = [u_{ij}^{*} ]\) with

-

(2)

Given \(U\) and \(F\) given, if \(V^{*}\) be the local minimum point of \(L_{2} (V)\), we have \(\frac{{\partial L_{2} (V^{*} )}}{{\partial v_{j} }} = 0\), namely

$$ \frac{{\partial L_{2} (V^{*} )}}{{\partial v_{j} }} = - 2\sigma^{ - 1} \cdot \sum\nolimits_{i = 1}^{n} {u_{ij}^{m} [K(f_{i} ,v_{j} )(f_{i} - v_{j} ) + \sum\nolimits_{{k \in N_{i} ,k \ne i}} {w_{ik} (1 - u_{kj} )^{m} K(f_{k} ,v_{j} )(f_{k} - v_{j} )} ]} = 0 $$(56)

So then

Therefore, Eq. (57) is a necessary condition for function \(L_{2} (V)\) to have a minimum value. During the iteration process, the clustering center is updated as follows.

Therefore, we have

where \(v_{j}^{ + } \in N_{\delta } (v_{j} ) = \{ v_{j}^{*} \left| {||v_{j}^{*} - v_{j} || < \delta ,} \right.\delta > 0\}\).

We calculate Hessian matrix of \(L_{2} (V)\) at \(V = V^{*}\), that is

Equation (60) shows that this Hessian matrix is positive definite. \(V^{*}\) is the local minimum point of \(L_{2} (V)\).

-

(3)

Given \(U\) and \(V\), if \(F^{*}\) be the minimum point of \(L_{3} (F)\), we have \(\frac{{\partial L_{3} (F^{*} )}}{{\partial f_{i} }} = 0\), namely

$$ \begin{gathered} \frac{{\partial L_{3} (F^{*} )}}{{\partial f_{i} }} = 2\sigma^{ - 1} \cdot \sum\nolimits_{j = 1}^{c} {u_{ij}^{m} K(f_{i} ,v_{j} )(f_{i} - v_{j} )} \\ + 2\sum\nolimits_{{k \in N_{i} }} {g_{r} (||i - k||)g_{\sigma } (||G_{i} - G_{k} ||)(f_{i} - I_{k} ) = 0} \\ \end{gathered} $$(61)

So then, we obtain

Therefore, Eq. (62) is a necessary condition for function \(L_{3} (F)\) to have a minimum value.

During the iterative process, the reconstructed image \(F = \{ f_{i} |1 \le i \le n\}\) is updated as

Therefore, we have

where \(f_{i}^{ + } \in N_{\delta } (f_{i} ) = \{ f_{i}^{*} \left| {||f_{i}^{*} - f_{i} || < \delta } \right.,\delta > 0\}\).

We find Hessian matrix of function \(L_{3} (F)\) with respect to \(f_{i}\) at \(F = F^{*}\), namely

Equation (65) shows that this Hessian matrix is positive definite. \(F^{*}\) is the local minimum point of \(L_{3} (F)\).

Based on the above-mentioned analysis, the objective function \(J(U,V,F)\) of the algorithm presented in this paper is a decreasing function, meeting the constrained conditions of Zangwill’s theorem. Therefore, the algorithm presented in this paper must be convergent.

6 Experiments and Discussion

To test the effectiveness of the algorithm presented in this paper (the source code: http://github.com/qi7xiao/RDKWFLICM), four kinds of grayscale images and natural color images are selected for testing, and the testing results of the algorithm presented in this paper are compared with those of ARFCM [44], FLICMLNLI [45], FCM_VMF [46], DSFCM_N [47], KWFLICM [18], PFLSCM [48], FCM_SICM [49], FSC_LNML [50], and FLICM [17]. Additionally, speckle noise, Gaussian noise, Rician noise, and salt and pepper noise are added to these images respectively, and the corresponding noise-corrupted images are used to test these fuzzy clustering-related algorithms. Some representative evaluation indicators such as accuracy (Acc), sensitivity (Sen), Jaccard coefficient, segmentation accuracy (SA), Kappa coefficient, mean intersection over union (mIoU), peak signal noise ratio (PSNR), and Dice similarity coefficient (DICE) are adopted to assess the segmented results. During the testing process, the size of neighborhood window in the algorithm presented in this paper is selected as \(11 \times 11\) and its fuzzifier \(m\) is 2.0. Experimental environment is Matlab2018a, and hardware platform is CR7-5700U processor with 16G memory and a clock speed of 1.8GHZ. Among these comparative algorithms, the size of neighborhood window in ARFCM, FLICMLNLI, FCM_VMF, DSFCM_N, KWFLICM, PFLSCM, FCM_SICM, FSC_LNML, and FLICM algorithms is selected as 5 × 5, 3 × 3, 4 × 4, 3 × 3, 3 × 3, 3 × 3, 7 × 7, 7 × 7, and 3 × 3 respectively. The number of clusters in FCM-related segmentation algorithms is determined according to the clustering validity function toolbox [51]. For ease of exposition, this section uses \(\mu\) to denote the mean value of Gaussian noise, and \(\sigma_{n}^{2}\) represents the normalized variance; \(p\) denotes the intensity level of salt-and-pepper noise, and \(\sigma\) denotes the standard deviation of Rician noise. For example, Gaussian noise with mean value of 0 and normalized variance of 0.1 is expressed as Gaussian noise (\(\mu = 0,\sigma_{n}^{2} = 0.1\)) or GN(0.1), salt-and-pepper noise with the intensity of 30% is expressed as salt-and-pepper noise (\(p = 0.3\)) or SPN(0.3); speckle noise with the normalized variance of 0.2 is expressed as speckle noise (\(\sigma_{n}^{2} = 0.2\)) or SN(0.2), and Rician noise with standard deviation of 80 is expressed as Rician noise (\(\sigma = 80\)) or RN(80).

6.1 Algorithm Performance Metrics

To objective confirm the performance of the proposed algorithm, this paper uses the following performance indexes to evaluate the segmentation performance of different algorithms in the presence or absence of noise.

6.1.1 Peak Signal Noise Ratio (PSNR) [52]

PSNR is an important evaluation indicator for evaluating the quality of images and also used to assess the performance of image segmentation algorithms, and the modified PSNR represents a measure of the ground truth and the segmented image achieved by a given algorithm.

where \(R\) is set to 255, the number of total pixels in image is \(M_{1} \times N_{1}\), \(I(x,y)\) is the grayscale value of pixel position \((x,y)\) in the ground truth, \(O(x,y)\) is the grayscale value of pixel position \((x,y)\) in the segmented result achieved by some algorithm.

6.1.2 Segmentation Accuracy (SA) [44]

SA is the ratio of the number of correctly divided pixels to the number of total pixels in image.

In general, the larger the value of SA is, the closer the segmented result is to the ground truth, indicating that the algorithm has good segmentation performance.

6.1.3 Mean Intersection Over Union (mIoU) [50]

mIoU is defined as the intersection degree between the segmented result achieved by some algorithm and the ground truth.

The larger the value of mIoU is, the higher the matching degree between the segmented results achieved by a given algorithm and the ideal segmentation image, indicating that the performance of the algorithm is much better.

6.1.4 Accuracy (Acc), Sensitivity (Sen), and Jaccard Coefficient [53]

Given true negative (TN), true positive (TP), false positive (FP), and false negative (FN). We define the following evaluation indicators. ACC is an important performance evaluation index for clustering or segmentation algorithms, which measures the ratio of correctly classified samples to the total number of samples. When the result achieved through a given algorithm is closer to the ground truth, the value of ACC approaches 1, indicating higher accuracy. ACC is given as follows.

Accuracy

Sen refers to the proportion of samples that are truly positive and are correctly predicted as positive by a given algorithm, also known as recall. Sen is defined as follows.

Sensitivity

Jaccard coefficient is a widely applied performance indicator for measuring the similarity between the ground truth and clustering results obtained through a given algorithm. A value of 1 means that the test result is the same as the ground truth, indicating high accuracy. Jaccard coefficient is defined as follows.

Jaccard coefficient

DICE is commonly used to represent the overlap degree between the result obtained by a given algorithm and the ground truth. The larger the value of DICE is, the better the performance of the algorithm is. DICE index is defined as follows.

Dice similarity coefficient [47] (DICE)

6.1.5 Kappa Coefficient [54]

Kappa coefficient is an important performance indicator used for consistency evaluation, which can be used to assess the effectiveness of classification or partition.

where \(p_{o}\) is the sum of the number of correctly classified or clustered samples in each category divided by the total number of samples, which is the overall classification accuracy.

6.2 Test and Evaluation of Algorithm Anti-noise Robustness

6.2.1 Synthetic image

To verify the anti-noise robustness of various algorithms, four synthetic images are selected and added different types of noise to test the algorithm presented in this paper and all the comparative algorithms.

RN (\(\sigma = 80\)) is used to corrupt Fig. 4a, SPN(\(p = 0.3\)) is used to corrupt Fig. 4b, GN (\(\mu = 0,\sigma_{n}^{2} = 0.1\)) is used to corrupt Fig. 4c, and SN(\(\sigma_{n}^{2} = 0.2\)) is used to corrupt Fig. 4d. These noisy images are segmented using different fuzzy segmentation algorithms, and the segmented results are displayed in Fig. 5. Table 1 gives the corresponding performance evaluation results.

From Fig. 5, the image contains strong noise, the segmented results of FCM_VMF and FLSCM are very poor, indicating that these two comparative algorithms are short of certain robustness to noise. DSFCM_N, PFLICM, and FLICMLNLI achieve more satisfactory segmented results when handing image contaminated by Rician noise, but when images contain other types of noise, their segmented results are also poor, so DSFCM_N, PFLICM, and FLICMLNLI are robust to Rician noise, but lack a certain robustness to other types of noise; When images contains salt-and-pepper noise, ARFCM appears over-segmentation, but when images contain other types of noise, it can also achieve good segmented results, so ARFCM is susceptible to salt-and-pepper noise. The KWFLICM algorithm presented in this paper can effectively restrain a large amount of noise, but its segmented results are still dissatisfied; FCM_SICM and FSC_LNML can also restrain lots of noise, but its segmented results have uneven edges. In summary, The RDKWFLICM algorithm presented in this paper is more effective than several other comparative algorithms in segmenting synthetic images with various types of noise. From Table 1, all the evaluation values of the RDKWFLICM algorithm presented in this paper are higher than those of several other comparative algorithms. Therefore, the RDKWFLICM algorithm presented in this paper outdistances many existing FCM-related algorithms for strong noise-polluted image.

6.2.2 Natural Image

To further test the robustness performance of the algorithm presented in this paper, four natural images are selected from BSDS500 [55], COCO [56], and VOC2010 [57] for segmentation testing.

SPN(0.3) is used to corrupt Fig. 6a and b, and GN(0.1) is used to corrupt Fig. 6c and d. These noise-polluted images are segmented using various FCM-related algorithms, and the segmented results are displayed in Fig. 7. Table 2 provides the corresponding performance evaluation indexes.

As shown in Fig. 7, except for FCM_SICM, FSC_LNML, and RDKWFLICM, the segmented results of all other algorithms appear some noise. Among them, FLICMLNLI and FCM_VMF have the worst segmentation effect, and there are some misclassification in the segmented results; DSFCM_N achieves more satisfactory segmented results when handling images with Gaussian noise, but the segmented effect is still poor when images are polluted by salt-and-pepper noise; the FLICM algorithm can extract targets in images, but the segmented results appear a large amount of noise; the KWFLICM algorithm can restrain lots of noise, but the segmented effect is still dissatisfied; FCM_SICM and FSC_LNML can effectively restrain a large amount of noise, but there are non-uniform edges in their segmented results; The segmented results of the PFLSCM algorithm appear a lot of noise, which is hardly satisfactory; the RDKWFLICM algorithm presented in this paper is more effective than other compared algorithms. As shown in Table 2, all the evaluation values of the RDKWFLICM algorithm presented in this paper are all higher than those of other comparative algorithms for natural image with high noise.

6.2.3 Remote Sensing Image

To test the anti-noise performance of the algorithm, four remote sensing images are selected from UC Merced Land Use dataset [58] and SIRI_WHU dataset [59] for segmentation testing.

SPN(0.4) is used to corrupt Fig. 8a and b, and SN(0.2) is used to corrupt Fig. 8c and d. These noisy images are segmented using various FCM-related algorithms, and the segmented results are displayed in Fig. 9. Table 3 provides the corresponding performance evaluation indicators.

As shown in Fig. 9, FCM_VMF, FLICMLNLI, PFLSCM, KWFLICM, DSFCM_N, and FLICM have poor segmented results for remote sensing images with high noise. Among them, ARFCM has under-segmentation when segmenting Fig. 9b and d; FLICMLNLI achieves more satisfactory segmented results for images in Fig. 9c and d, but cannot segment images with salt-and-pepper noise; FCM_VMF algorithm has poor segmentation effect, and the segmented results also contain a lot of noise; DSFCM_N, KWFLICM, and FLICM algorithms can effectively extract targets in images, but the segmented results also contain a lot of noise; FCM_SICM and FSC_LNML can restrain a large amount of noise, but there are uneven edges among their segmented results, and the segmentation results are still dissatisfied. The RDKWFLICM algorithm presented in this paper can obtain better segmentation effect for four noisy remote sensing images than other comparative algorithms. In terms of performance indicators, the index values of the RDKWFLICM algorithm presented in this paper are all significantly higher than those of other comparative algorithms for remote sensing image with high noise.

6.2.4 Medical Images

To test the robustness performance of the algorithm for medical image, four MR images are selected from Brain Tumor MRI data set [60] for segmentation testing.

RN(80) is used to corrupt Fig. 10a and b, and GN(0.1) is used to corrupt Fig. 10c and d. These noisy images are segmented using various FCM-related algorithms, and the segmented results are displayed in Fig. 11. Table 4 provides the corresponding performance evaluation indexes at lengthen.

As shown in Fig. 11, ARFCM algorithm cannot extract targets in images when handling noisy medical images; FLICMLNLI has lost image details when processing noisy images, making the segmented results unsatisfactory; FCM_VMF algorithm is difficult to restrain the noise in images, and its segmented results are the worst; the segmented results of FCM_SICM and FSC_LNML are similar to the ground truth, suppressing almost all noises but some details are lost; DSFCM_N, KWFLICM, PFLSCM and FLICM can retain image details well and restrain some noise, but there is still some noise in their segmented results. However, the presented RDKWFLICM algorithm suppresses almost all the noises in medical images and can extract targets in images accurately, and achieves satisfactory segmented results, which are also verified in the evaluation indexes in Table 4. Overall, the RDKWFLICM algorithm presented in this paper outdistances many existing FCM-related algorithms for medical images with strong noise.

6.3 Test and Analysis of Algorithm Performance with Noise Intensity

In this section, GN(0.05–0.11) is used to corrupt the synthetic image in Fig. 4a. Ten fuzzy algorithms are used to segment these noisy images, and the change curves of evaluation metrics of various algorithms are obtained, as given in Fig. 12. Most of evaluation indexes of all algorithms decrease as \(\sigma_{n}^{2}\) of Gaussian noise increases, among which FCM_VMF algorithm has the lowest evaluation values; FLICM, FLICMLNLI, KWFLICM, and the RDKWFLICM algorithm presented in this paper have improved evaluation index values, and the performance indexes of the RDKWFLICM algorithm presented in this paper are better than those of all the comparative algorithms. Overall, the performance curves of the RDKWFLICM algorithm presented in this paper change slowly with \(\sigma_{n}^{2}\) of Gaussian noise, and the RDKWFLICM algorithm presented in this paper outdistances all the comparative algorithms.

SPN(0.12–0.3) is added to the natural image in Fig. 6c. Using ten fuzzy algorithms to process the noise-contaminated images, the change curves of evaluation metrics of various algorithms with \(p\) of salt-and-pepper noise are obtained, as displayed in Fig. 13. Most of the performance indexes of all algorithms decrease as \(p\) of salt-and-pepper noise increases. The FLICMLNLI algorithm has the lowest evaluation index values; When \(p\) of salt-and-pepper noise is lower than 21%, the PFLSCM algorithm has higher values for all evaluation indexes than other algorithms, and when \(p\) of salt-and-pepper noise is higher than 21%, the RDKWFLICM algorithm presented in this paper has higher values for all evaluation indexes than other comparative algorithms. Overall, the RDKWFLICM algorithm presented in this paper outdistances all the comparative algorithms in the presence of high salt-and-pepper noise.

SN(0.05–0.23) is added respectively to the remote sensing image in Fig. 8d. Using ten fuzzy algorithms to segment the noise-contaminated images, the change curves of evaluation metrics of various algorithms with \(\sigma_{n}^{2}\) of speckle noise are obtained, as displayed in Fig. 14. Most of evaluation indexes of all algorithms decrease as \(\sigma_{n}^{2}\) of speckle noise increases. FCM_VMF has the lowest evaluation values and RDKWFLICM obtains higher evaluation values than all the comparative algorithms. On the whole, the change of \(\sigma_{n}^{2}\) of speckle noise has the least impact on the performance of the RDKWFLCM algorithm presented in this paper, and its robustness to noise is stronger than all the comparing algorithms.

RN(50–80) is used to corrupt MRI images in Fig. 10c. Using ten fuzzy algorithms to segment the noise-polluted medical images, and the change curves of performance indexes of various algorithms with \(\sigma\) of Rician noise are obtained, as displayed in Fig. 15. The performance curves of evaluation indexes in most algorithms decrease progressively with the increase of \(\sigma\) of Rician noise. FLICMLNLI algorithm obtains the lowest values of different evaluation indexes for MRI image with Rician noise. However, the RDKWFLICM algorithm presented in this paper has higher values than all the comparative algorithms in all evaluation indexes, and has stronger stability and robustness against changes in \(\sigma\) of Rician noise.

6.4 Test and Analysis of Algorithm Complexity

Algorithm complexity is an important index for evaluating algorithm performance. For the algorithm presented in this paper and all the comparative algorithms related to this paper, their computational complexity is given in Table 5, where \(n\) is the number of total pixels in image, \(w\) is the size of neighborhood window in robust fuzzy clustering, \(c\) is number of categories;, \(t\) is iteration times when the algorithm converges, \(w_{1}\) is the size of neighborhood window in Gaussian filtering and guided bilateral filtering, \(w_{2}\) is the size of neighborhood window in no-local mean filtering, \(w_{3}\) is the size of searching window in no-local mean filtering.

The computational time complexity of the RDKWFLICM algorithm presented in this paper includes of three parts: the iteration of the RDKWFLICM algorithm, the update of the position of local window in guided bilateral filtering, and the update of the position of neighborhood window. For an image with the size of \(n\), the computational time complexity of iteration operation of the RDKWFLICM algorithm presented in this paper is \(O(w \times n \times c \times t + n \times w_{1} \times t)\). In the process of filtering, the image is divided into filtering window with the size of \(w_{1}\), then the window slides until all pixel points on the image are traversed, and its computational time complexity is \(O(n \times w_{1} )\). The size of neighborhood window is \(w\), then its computational time complexity is \(O(n \times w)\). Therefore, the RDKWFLICM algorithm presented in this paper has computational time complexity of \(O(n \times w_{1} + n \times t \times w_{1} + n \times w \times t + n \times c \times t \times w)\).

As shown in Table 5, the computation time complexity of FLICMLNLI, PFLSCM, and FSC_LNML algorithms is markedly higher than that of other comparative algorithms. To verify the computational time complexity of these fuzzy algorithms, we add various types and intensities of noise to various images for testing. By analyzing of time cost of these fuzzy algorithms for noise-polluted images, we confirm the computational time complexity of various FCM-related algorithms related to this paper. RN(80), SPN(0.3), GN(0.1), and SN(0.2) are used to corrupt two synthetic images in Fig. 4a and b; SPN(0.3) and GN(0.1) are used to corrupt two natural images in Fig. 6d and e; SPN(0.3) and SN(0.2) are used to corrupt remote sensing images in Fig. 8a and c; RN(80) and GN(0.1) are used to corrupt medical images in Fig. 10a and d. A histogram of time cost of various algorithms for these noise-polluted images is given in Fig. 16, where the parameters of algorithm are selected as \(m = 2\) and \(c = 3\), RN is Rician noise, SPN is salt-and-pepper noise, GN is Gaussian noise, and SN is speckle noise.

As seen in Fig. 16, the time cost of the FLICMLNLI and PFLSCM algorithms for all noisy images is markedly higher than that of other comparative algorithms, and these two algorithms spend approximately the same time in segmenting these noisy images. However, the RDKWFLICM algorithm presented in this paper requires less time to handle these noisy images, but meeting the requirements of large-scale real time image processing remains challenging. In next work, we will study fast algorithm of the presented RDKWFLICM algorithm using fast FCM-related method [61, 62], fast bilateral filter [63, 64], and SPARK platform [65, 66] to meet real time image processing demands.

6.5 Impact of neighbor window size on algorithm performance

To investigate the impact of neighborhood window size on algorithm performance, we select neighborhood window with the size of 3 × 3, 5 × 5, 7 × 7, 9 × 9, 11 × 11, 13 × 13, and 15 × 15 to test images with various types of noise and analyze the segmented results to objectively evaluate the impact of neighborhood window size on algorithm performance.

GN(0.1) is used to corrupt the synthetic image in Fig. 4a, SPN(0.3) is used to corrupt the natural image in Fig. 6b, SN(0.2) is used to corrupt the remote sensing image in Fig. 8c, and RN(80) is used to corrupt the MRI image in Fig. 10a respectively. The segmented results of these noise-polluted images are displayed in Fig. 17.

From Fig. 17, when the neighborhood window size is 3 × 3, the segmented result contains a much noise, resulting in poor segmentation effect; When the neighborhood window size is 5 × 5, the segmented result contains a small amount of noise, which is dissatisfied; As the neighborhood window size continues to increase, such \(7 \times 7\) and \(9 \times 9\), the algorithm’s ability to restrain noise has been enhanced, and it can achieve satisfactory segmented results; However, as the neighborhood window size further increases, such as 13 × 13 and 15 × 15, the algorithm's ability to restrain noise has been markedly enhanced, but some details have been lost in the segmented image, which is dissatisfied. From Table 6, it can be seen that when the neighborhood window size is \(7 \times 7\), the segmentation evaluation indexes for synthetic and medical images are the highest, while the neighborhood window size is 11 × 11, the segmentation evaluation indexes for natural and remote sensing images are the highest. Therefore, it can be concluded that when the neighborhood window size is 7 × 7 to 11 × 11, the presented RDKWFLICM algorithm can obtain satisfactory segmented results.

6.6 Impact of Fuzzifier on Algorithm Performance

Fuzzifier is an important parameter in FCM-related algorithms, which has a certain impact on the clustering performance. Pal and Bezdek [67] pointed out that from the perspective of clustering validity, the range of fuzzifier should be between 1.5 and 2.5. This paper selects fuzzifier as a series of values in [1.5, 2.5] to test the presented algorithm, and objectively analyzes the impact of fuzzifier on the performance of the presented algorithm.

GN(0.1) is used to corrupt the synthetic image in Fig. 4a, SPN(0.3) is used to corrupt the natural image in Fig. 6b, SN(0.2) is used to corrupt the remote sensing image in Fig. 8c, and RN(80) is used to corrupt the MRI image in Fig. 10a respectively. The corresponding noisy images are used to test the presented algorithm with different fuzzifiers. Figure 18 provides the variation curves of algorithm performance with fuzzifier.

As shown in Fig. 18, for images with speckle or Rician noise, the presented algorithm is less sensitive to fuzzifier than images with Gaussian noise or salt-and-pepper noise. Overall, the performance of the presented algorithm is stable as fuzzifier changes, and it is reasonable to select a fuzzifier of around 2.0 in the presented algorithm.

6.7 Algorithm Sensitivity to Initial Clustering Centers

To verify the susceptibility of the presented algorithm to initial clustering centers, the grayscale levels within the maximum and minimum values of noise-polluted image are divided equally into \(c\) segments, and the grayscale levels with a frequency of 0 are removed. At each execution, a group of values from the \(c\) segments are randomly selected as the initial clustering centers [68, 69], and six groups of initial clustering centers are selected for segmentation testing. We select the synthetic image in Fig. 4a, and it is corrupted by Gaussian noise with different normalized variances. Natural image in Fig. 6b is corrupted by salt-and-pepper noise with different intensity levels. Remote sensing in Fig. 8c is corrupted by speckle noise with different normalized variances. MRI image in Fig. 10a is corrupted by Rician noise with different standard deviations. These noise-polluted images are used to test the presented algorithm. The box plots of algorithm performance varying with initial clustering centers are shown in Figs. 19, 20, 21, and 22.

As shown in Figs. 19, 20, 21, and 22, the presented algorithm for MRI image with Rician noise is more sensitive to initial clustering centers compared with images with other types of noise, but the presented algorithm for Gaussian noise-polluted image is the most insensitivity to initial clustering centers. Overall, the proposed algorithm is not very susceptible to initial clustering centers.

6.8 Test and Analysis of the Generalization Performance

To verify the adaptability of the presented algorithm, we select large numbers of images from BSDS500 dataset to demonstrate the effectiveness and generality of the algorithm presented in this paper. Considering the limited length of this paper, we only provide 7 images from BSDS500 and segment them using ten FCM-related algorithms. GN(0.1) is used to corrupt #2018, #24004, #3063, and #238011, SPN(0.3) is used to corrupt #235098, #8086, and #56028. The segmented results of these noise-polluted images are displayed in Fig. 23.

From Fig. 23, when natural images contain strong noise, the segmented results of FCM_VMF and FLICMLNLI are very poor, indicating that these two algorithms are short of certain robustness to noise. FCM_VMF and FLICMLNLI have the worst segmented results for noisy image; ARFCM for natural image will lose some details in images, resulting in poor segmentation effect; The segmented results of DSFCM_N, PFLSCM, and FLICM contain much noise, which are dissatisfied; FCM_SCIM and FSC_LNML can restrain lots of noise, but the edges of the segmented image are not smooth; the KWFLICM algorithm has good performance in image segmentation, but its segmented results still contain some noise; Compared with other comparative algorithms, the segmented results of the RDKWFLICM algorithm presented in this paper are closer to the ground truth and fully preserve the details of images. From Figs. 24 and 25, the presented RDKWFLICM algorithm has better performance than other comparative algorithms. Therefore, the presented RDKWFLICM algorithm has marked potential advantages in noisy natural image segmentation.

To further verify the effectiveness and adaptability of the presented algorithm, we continue to select large numbers of images from BSDS500 for segmentation testing, and extensive experiments demonstrate that the presented RDKWFLICM algorithm has good performance in noiseless natural image segmentation. Considering the restricted space of this paper, we only provide the segmented results of eight noiseless natural images in Fig. 26.

As shown in Fig. 26, ARFCM segments noiseless natural images, resulting in partial details loss in images; FCM_VMF can extract targets from images #2018 and #51084, but it is difficult to segment other images; DSFCM_N and PFLISCM segment #238011, #235098 and #24004, resulting in obvious misclassification of image background, but it can effectively segment other images; FCM_SICM and FSC_LNML segment #35008, #235,098, #238011 and #24004, resulting in over-segmentation. DSFCM_N and PFLISCM cannot reasonably segment #238011, #235098, and #24004, but it can effectively segment other images; FCM_SICM and FSC_LNML segment #35008, #235098, #238011, and #24004, and they also have a certain over-segmentation; KWFLICM, FLICM, and RDKWFLICM obtained similar segmented results. From Figs. 27 and 28, the Acc and PSNR indicators of the presented RDKWFLICM algorithm are much higher than other comparative algorithms. Overall, the presented RDKWFLICM algorithm for noiseless images has better generalizability and adaptability than many existing FCM-related algorithms.

6.9 Test and Analysis of Algorithm for Color Image

To verify the adaptability of the presented algorithm for color images, we select large numbers of color images from BSDS500 and UC Merced Land Use remote sensing dataset to demonstrate the effectiveness and generality of the presented algorithm. Considering the restricted space of this paper, we only provide eight color images from BSDS500 dataset and UC Merced Land Use remote sensing dataset for segmentation testing. SPN(0.2) is used to corrupt #51084 and #3063, GN(0.1) is used to corrupt #124084 and #12003, and SN(0.2) is used to corrupt building 30. These noiseless images and noise-polluted images are segmented using ten FCM-related algorithms. The corresponding segmented results are displayed in Fig. 29.

As shown in Fig. 29, for noise-free color images, ARFCM, FLICMLNLI, DSFCM_N, KWFLICM, FCM_SICM, and FLICM can effectively extract targets in images. However, FCM_VMF is almost unable to extract targets in images; for noise-polluted color images, DSFCM_N, PFLSCM, FLICMLNLI and FLICM cannot completely restrain the noise, and there is still noise in their segmented results. ARFCM, FCM_SCIM, and FSC_LNML can restrain most of noise in images, but the edges of the segmented image are not smooth and dissatisfied; Compared with other comparative algorithms, the presented RDKWFLICM algorithm obtain good segmentation results, which are roughly the same as the ground truths and their details are retained completely. From Figs. 30 and 31, the presented RDKWFLICM has the higher performance indicators than other comparative algorithms for these noiseless images. Overall, the RDKWFLICM algorithm presented in this paper also has good generality and adaptability for color image segmentation.

6.9.1 Statistical Comparisons and Analysis

To systematically evaluate various algorithms, this paper uses Friedman test [70] to measure the running efficiency (time) and segmentation quality (Acc, PSNR and mIoU) of ten FCM-related segmentation algorithms for sixteen images in Figs. 4, 6, 8, and 10. More specifically, in Friedman test process, a significance level is set to \(\alpha = 0.05\). Critical difference (CD) diagrams are shown in Fig. 32.

From Fig. 32, the presented algorithm for sixteen images achieves a statistical advantage in running efficiency and segmentation quality over FCM_SICM. The FLICMLNLI, PFLSCM, ARFCM, and FCM_VMF outperform the presented algorithm in running efficiency, as shown in Fig. 32a. However, the presented algorithm is not too much higher in running time than these comparative algorithms. Therefore, the presented algorithm can achieve a good trade-off between segmentation quality and running efficiency in statistics.

6.9.2 Algorithm Convergence Test

This paper monitors the algorithm by counting the number of iterations corresponding to its convergence during iteration. The condition used to determine the convergence of the algorithm is whether the deviation between the clustering centers corresponding to the previous and current iterations is less than a predetermined threshold level. In the iteration of FCM-related clustering, updating the clustering centers is very important. When the deviation of the clustering centers is less than or equal to a predetermined threshold level, the clustering centers have reached a stable state, and the clustering algorithm can be considered to have converged. Usually, we can define an algorithm stopping error or the maximum number of iterations as the stopping condition for any iterative algorithm, and if the algorithm reaches the stopping condition, it is considered that the algorithm has converged. To test the convergence speed of various algorithms, three images are selected from BSDS500 dataset [55], UC Merced land use dataset [58], and brain tumor MRI dataset [60] for segmentation testing. The number of iterations of various algorithms for different noise-polluted images are detailed in Table 7.

From Table 7, the algorithm presented in this paper has fewer iterations than other comparative algorithms. By comparing iteration times of different algorithms, the algorithm presented in this paper is obviously better than other comparative algorithms in aspect of the rate of convergence. Overall, the algorithm presented in this paper not only has good segmentation performance, but also has high operational efficiency.

6.9.3 Impact of Cluster Number on the Algorithm

Determining the cluster number in FCM-related algorithms is the problem of clustering validity, and it is also important topic in fuzzy clustering theory. So far, many clustering validity functions [51] are constructed to solve the problem of selecting optimal number of clusters in many unsupervised clustering. Validity functions not only solve the problem of the number of clusters in FCM-related algorithm, but also uses to guide FCM-related algorithms for data analysis and image understanding [71].

In this paper, we use the software in reference [51] to determine the number of clusters for image segmentation. However, if the number of clusters is not selected properly, the segmented results of FCM-related algorithms will differ significantly from the ground truth, leading to catastrophic errors in image understanding.

Irregular synthetic image with five classes (abbreviated as SI), medical image with four classes (abbreviated as CT31), natural image (abbreviated as 24,063), and remote sensing image with three classes (abbreviated as buildings 69) are selected for segmentation testing. These four images are corrupted by various types and intensities of noise. The corresponding noisy images are processed using the RDKWFLICM algorithm presented in this paper. The segmented results are displayed in Fig. 33, and the evaluation indexes are detailed in Table 8.

As shown in Fig. 33, when the number of classes is small, the details in image cannot be fully extracted, resulting in inaccurate image segmentation. When the number of classes is close to the real number of classes in image, various features and details can be better extracted, thus obtaining higher segmentation accuracy and more accurate other evaluation indexes in Table 8. Therefore, when FCM-related algorithms are used for image segmentation, it is necessary to select an appropriate number of classes according to clustering validity functions to obtain satisfactory segmented results.

7 Conclusion and Outlook

This paper presents a reconstruction-aware kernelized FCM with weighted local information and guided filtering for image segmentation, which enhances the segmentation performance of KWFLICM algorithm in the stronger noise. This algorithm first uses local entropy-based Gaussian filter to process noisy image; Then the filtered image of Gaussian filter is embedded into bilateral filter and an optimization model of guided bilateral filter is established; Finally, the guided bilateral filtering is fused into KWFLICM algorithm, and a tri-level alternative and iterative algorithm of reconstruction data, fuzzy membership and the clustering centers are presented. This algorithm has a solid mathematical theoretical foundation and good local convergence, paving the way for its widespread application. Extensive experiments indicate that the presented algorithm has good segmentation performance and strong anti-noise robustness, and it outperforms many existing robust FCM-related algorithms such as KWFLICM. However, there are still the following issues that need to be addressed: (1) the low contrast images or edge blurred images with complex noise pollution may lead to the lack of clarity of the image edges, which in turn affects the accuracy of image segmentation and object recognition. (2) The proposed algorithms need to be manually parameterized and cannot be adaptively adjusted, which leads to inconvenience in use. (3) The proposed algorithm takes a long time to process the noisy image and the processing time increases with the increase of noise, image size and image complexity. Therefore, we have made further improvements to the algorithm in future work, strengthening its running efficiency and adaptability.

In near future, we will combine the proposed algorithm with sparse encoding based click prediction [72] for web noisy image reranking, and deeply integrate the proposed algorithm with layered deep click feature prediction [73] to solve the problem of noisy image recognition. This has significant value in promoting the widespread application of the algorithm proposed in this paper.

References

Qureshi I, Yan J, Abbas Q, Shaheed K, Riaz AB, Wahid A, Khan MWJJ, Szczuko P (2023) Medical image segmentation using deep semantic-based methods: a review of techniques, applications and emerging trends. Inf Fusion 90:316–352

Nie Y, Guo S, Chang J, Han X, Huang J, Hu SM, Zhang JJ (2020) Shallow2Deep: Indoor scene modeling by single image understanding. Pattern Recogn 103:107271

Li H, Zeng N, Wu P, Clawson K (2022) Cov-Net: a computer-aided diagnosis method for recognizing COVID-19 from chest X-ray images via machine vision. Expert Syst Appl 207:118029

Gubbi MR, Gonzalez EA, Bell MAL (2022) Theoretical framework to predict generalized contrast-to-noise ratios of photoacoustic images with applications to computer vision. IEEE Trans Ultrason Ferroelectr Freq Control 69(6):2098–2114

Tellez D, Litjens GJ, van der Laak JA, Ciompi F (2018) Neural image compression for gigapixel histopathology image analysis. IEEE Trans Pattern Anal Mach Intell 43(2):567–578

Wang D, Wang X (2019) The iterative convolution-thresholding method (ICTM) for image segmentation. Pattern Recogn 130:108794

Kucharski A, Fabijańska A (2021) CNN-watershed: a watershed transform with predicted markers for corneal endothelium image segmentation. Biomed Signal Process Control 68:102805

Li Z, Yue J, Fang L (2022) Adaptive regional multiple features for large-scale high-resolution remote sensing image registration. IEEE Trans Geosci Remote Sens 60:1–13

Shang R, Liu M, Jiao L, Feng J, Li Y, Stolkin R (2022) Region-level SAR image segmentation based on edge feature and label assistance. IEEE Trans Geosci Remote Sens 60:1–16

Li FY, Li W, Gao X, Xiao B (2021) A novel framework with weighted decision map based on convolutional neural network for cardiac MR segmentation. IEEE J Biomed Health Inform 26(5):2228–2239

Wang C, Pedrycz W, Li Z, Zhou M (2021) Kullback–Leibler divergence-based fuzzy C-means clustering incorporating morphological reconstruction and wavelet frames for image segmentation. IEEE Trans Cybern 52(8):7612–7623

Kumar N, Kumar H (2022) A fuzzy clustering technique for enhancing the convergence performance by using improved fuzzy C-means and particle swarm optimization algorithms. Data Knowl Eng 140:102050

Kumar P, Agrawal RK, Kumar D (2023) Fast and robust spatial fuzzy bounded k-plane clustering method for human brain MRI image segmentation. Appl Soft Comput 133:109939

Ahmed MN, Yamany SM, Mohamed N, Farag AA, Moriarty T (2002) A modified fuzzy C-means algorithm for bias field estimation and segmentation of MRI data. IEEE Trans Med Imaging 21(3):193–199

Chen S, Zhang D (2004) Robust image segmentation using FCM with spatial constraints based on new kernel-induced distance measure. IEEE Trans Syst Man Cybern Part B (Cybern) 34(4):1907–1916

Cai W, Chen S, Zhang D (2007) Fast and robust fuzzy C-means clustering algorithms incorporating local information for image segmentation. Pattern Recogn 40(3):825–838

Krinidis S, Chatzis V (2010) A robust fuzzy local information C-means clustering algorithm. IEEE Trans Image Process 19(5):1328–1337

Gong M, Liang Y, Shi J, Ma W, Ma J (2012) Fuzzy C-means clustering with local information and kernel metric for image segmentation. IEEE Trans Image Process 22(2):573–584

D’Haeyer JP (1989) Gaussian filtering of images: a regularization approach. Signal Process 18(2):169–181

Xie S, Huang W, Yang T, Wu D, Liu H (2020) Compressed sensing based image reconstruction with projection recovery for limited angle cone-beam CT imaging. In: 2020 42nd annual international conference of the IEEE engineering in medicine biology society (EMBC), pp 1307–1310

Wan C, Ye M, Yao C, Wu C (2017) Brain MR image segmentation based on Gaussian filtering and improved FCM clustering algorithm. In: 2017 10th international congress on image and signal processing, biomedical engineering and informatics (CISP-BMEI), pp 1–5

Tomasi C, Manduchi R (1998) Bilateral filtering for gray and color images. In: Sixth international conference on computer vision (IEEE Cat. No.98CH36271), pp 839–846

He K, Sun J, Tang X (2013) Guided image filtering. IEEE Trans Pattern Anal Mach Intell 35(6):1397–1409

Cheng Y, Jia Z, Lai H, Yang J, Kasabov NK (2020) A fast sand-dust image enhancement algorithm by blue channel compensation and guided image filtering. IEEE Access 8:196690–196699

Mukherjee R, Debattista K, Rogers TB, Bessa M, Chalmers A (2018) Uniform color space-based high dynamic range video compression. IEEE Trans Circuits Syst Video Technol 29(7):2055–2066

Gao H, Zhang G, Huang M (2021) Hyperspectral image superresolution via structure-tensor-based image matting. IEEE J Sel Top Appl Earth Obs Remote Sens 14:7994–8007

Guo L, Chen L, Chen CP, Zhou J (2018) Integrating guided filter into fuzzy clustering for noisy image segmentation. Digital Signal Process 83:235–248

Liu C, Yang C, Wang J (2021) Window-aware guided image filtering via local entropy. IET Image Proc 15(7):1459–1470

Feng Q, Chen L, Chen CP, Guo L (2020) Deep fuzzy clustering-a representation learning approach. IEEE Trans Fuzzy Syst 28(7):1420–1433

Lei L, Wu C, Tian X (2022) Robust deep kernel-based fuzzy clustering with spatial information for image segmentation. Appl Int 53:23–48

Huang K, Zhang Y, Cheng HD, Xing P, Zhang B (2021) Semantic segmentation of breast ultrasound image with fuzzy deep learning network and breast anatomy constraints. Neurocomputing 450:319–335

Gu Y, Ni T, Jiang Y (2022) Deep possibilistic-means clustering algorithm on medical datasets. Comput Math Methods Med 2022(5):1–10

Pitchai R, Supraja P, Victoria AH, Madhavi M (2021) Brain tumor segmentation using deep learning and fuzzy k-means clustering for magnetic resonance images. Neural Process Lett 53(4):2519–2532

Chen J, Li Y, Luna LP, Chung HW, Rowe SP, Du Y, Solnes LB, Frey EC (2021) Learning fuzzy clustering for SPECT/CT segmentation via convolutional neural networks. Med Phys 48(7):3860–3877

Hržić F, Štajduhar I, Tschauner S, Sorantin E, Lerga J (2019) Local-entropy based approach for X-ray image segmentation and fracture detection. Entropy 21(4):338

Lelandais B, Ducongé F (2015) Deconvolution regularized using fuzzy C-means algorithm for biomedical image deblurring and segmentation. In: 2015 IEEE 12th international symposium on biomedical imaging (ISBI), pp 1457–1461

Zhang L, Zhong W, Zhong C, Lu W, Liu X, Pedrycz W (2017) Fuzzy C-means clustering based on dual expression between cluster centers and reconstructed data. Int J Approx Reason 90:389–410

Zangwill WI (1969) Nonlinear programming a unified approach. Prentice-Hall, Inc., Engle-wood Cliffs

Abu MS, Aik LE, Arbin N (2015) A theorem for improving kernel based fuzzy C-means clustering algorithm convergence. AIP Conf Proc 1660:050044

Saha A, Das S (2018) Stronger convergence results for the center-based fuzzy clustering with convex divergence measure. IEEE Trans Cybern 49(12):4229–4242

Yang MS, Tian YC (2015) Bias-correction fuzzy clustering algorithms. Inf Sci 309:138–162

Van Pham N, Pham LT, Pedrycz W, Ngo LT (2021) Feature-reduction fuzzy co-clustering approach for hyper-spectral image analysis. Knowl Based Syst 216:106549

Chang-Chien SJ, Nataliani Y, Yang MS (2021) Gaussian kernel C-means clustering algorithms. Soft Comput 25(3):1699–1716

Gao Y, Wang Z, Xie J, Pan J (2022) A new robust fuzzy C-means clustering method based on adaptive elastic distance. Knowl Based Syst 237:107769

Zhang X, Sun Y, Liu H, Hou Z, Zhao F, Zhang C (2021) Improved clustering algorithms for image segmentation based on non-local information and back projection. Inf Sci 550(6):129–144

Zhang H, Li H, Chen N, Chen S, Liu J (2022) Novel fuzzy clustering algorithm with variable multi-pixel fitting spatial information for image segmentation. Pattern Recogn 121:108201

Ghosh S, Hazarika AP, Chandra A, Mudi RK (2021) Adaptive neighbor constrained deviation sparse variant fuzzy C-means clustering for brain MRI of AD subject. Vis Inform 5(4):67–80

Tang Y, Ren F, Pedrycz W (2020) Fuzzy C-means clustering through SSIM and patch for image segmentation. Appl Soft Comput 87:105928

Wang Q, Wang X, Fang C, Yang W (2020) Robust fuzzy C-means clustering algorithm with adaptive spatial intensity constraint and membership linking for noise image segmentation. Appl Soft Comput 92:106318

Wei T, Wang X, Li X, Zhu S (2022) Fuzzy subspace clustering noisy image segmentation algorithm with adaptive local variance non-local information and mean membership linking. Eng Appl Artif Intell 110:104672

José-García A, Gómez-Flores W (2023) CVIK: a Matlab-based cluster validity index toolbox for automatic data clustering. SoftwareX 22:101359

Wang Q, Wang X, Fang C, Jiao J (2021) Fuzzy image clustering incorporating local and region-level information with median memberships. Appl Soft Comput 105:107245

Gharieb RR, Gendy G, Selim H (2018) A hard C-means clustering algorithm incorporating membership KL divergence and local data information for noisy image segmentation. Int J Pattern Recognit Artif Intell 32(04):1850012

Zheng X, Chen T (2021) High spatial resolution remote sensing image segmentation based on the multiclassification model and the binary classification model. Neural Comput Appl 35(5):3597–3604

https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/grouping/resources.html#bsds500

https://figshare.com/articles/dataset/SIRI_WHU_Dataset/8796980