Abstract

Machine-aided geological interpretation provides an opportunity for rapid and data-driven decision-making. In disciplines such as geostatistics, the integration of machine learning has the potential to improve the reliability of mineral resources and ore reserve estimates. In this study, inspired by existing geostatistical approaches that use radial basis functions to delineate domain boundaries, we reformulate the problem into a machine learning task for automated domain boundary delineation to partition the orebody. We use an actual dataset from an operating mine (Driefontein gold mine, Witwatersrand Basin in South Africa) to showcase our new method. Using various machine learning algorithms, domain boundaries were created. We show that based on a combination of in-discipline requirements and heuristic reasoning, some algorithms/models may be more desirable than others, beyond merely cross-validation performance metrics. In particular, the support vector machine algorithm yielded simple (low boundary complexity) but geologically realistic and feasible domain boundaries. In addition to the empirical results, the support vector machine algorithm is also functionally the most resemblant of current approaches that makes use of radial basis functions. The delineated domains were subsequently used to demonstrate the effectiveness of domain delineation by comparing domain-based estimation versus non-domain-based estimation using an identical automated workflow. Analysis of estimation results indicate that domain-based estimation is more likely to result in better metal reconciliation as compared with non-domained based estimation. Through the adoption of the machine learning framework, we realized several benefits including: uncertainty quantification; domain boundary complexity tuning; automation; dynamic updates of models using new data; and simple integration with existing machine learning-based workflows.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Mineral resources and ore reserves estimation requires sound knowledge of deposit geology (e.g., lithofacies, alteration and weathering profiles, structure, geometry and ore variability) and geospatial statistics (spatial continuity, grade [or quality], volume-variance effect and estimation techniques) (Chilès & Delfiner, 2012; Rossi et al., 2013, Sanchez & Deutsch, 2022). Given that ore deposits are formed by a fortuitous combination of complex processes, the characterization and prediction of in-situ resource distribution will invariably be uncertain (Nwaila et al., 2020, 2022a). This is partly due to the fact that an exact description and thorough sampling of a geological system is impossible at all scales of mapping (Garrett, 1983). From the perspective of complex systems, processes responsible for the formation of ore deposits are multi-scaled (regional- to microscopic-scale) and multivariate (a complex interaction of dynamic crustal and surficial processes) (Frimmel & Nwaila, 2020). This has strong implications on the reliability or certainty of all spatial representations of geological bodies, from exploration maps to resource models, specifically the accuracy of lithological or domain boundaries. At the orebody scale, oftentimes, the true geometry and metal tenor of an ore deposit or a constituent lithofacies can only be known after it has been fully extracted. In order to estimate the metal grade or quality of a mineral deposit, one of the common practices in mineral resource estimation consists of partitioning the orebody into spatially separable domains, the action of which is called “domaining” or “geodomaining” (Larrondo & Deutsch, 2005; Yunsel & Ersoy, 2011). There is no universal solution for domaining; however, some of the current practices include (Chilès & Delfiner, 2012; Rajabinasab & Asghari, 2019; Talebi et al., 2019): (a) defining ore-grade intervals/cut-offs bounded by a heuristic boundary to form a domain; (b) partitioning the orebody based on structural and alteration properties; (c) grade shells based on spatial dependency between paired samples; and (d) coupled grade intervals weighted based on the probability of occurrence within a specific spatial position in an unsampled location. These processes are applied prior to geostatistical modeling and estimation/simulation at unsampled locations, but can lead to erroneous estimates if applied incorrectly.

The premise of geostatistical estimation using kriging is the assumption of second-order stationarity and spatial autocorrelation, where partitioned domains are assumed to exhibit homoscedastic distributions (Dias & Deutsch, 2022). Each domain’s statistical stationarity is tested using various geological, statistical and mathematical techniques such as variability of lithofacies, structural polygons and F-test statistics based on the analysis of variance (ANOVA) similarity as opposed to comparing the first-moment of stationarity (i.e., the mean or central tendency). This demonstrates that defining geologically coherent and statistically stationary domains is of prime importance (Talebi et al., 2019). Although significant progress has been made in partitioning an orebody into different domains, there are still larger uncertainties associated with domain boundary definition. Domain boundaries can be classified into three categories (Larrondo & Deutsch, 2005; Emery, 2008):

-

(a)

Hard domain boundaries refer to geologically coherent and statistically stationary boundaries that have been confirmed through physical mapping of an orebody’s characteristics, mineralogy and metallurgical test work validated via statistical tests or marked by known geological contacts (e.g., regular/sharp or uneven contacts) or abrupt changes in the metallurgical response of ore (Ortiz & Emery, 2006).

-

(b)

Soft domain boundaries are those that are either yet to be confirmed or inferred from the conventional geological interpretation of an orebody’s characteristics, geometry and metallurgical response that may be subject to change pending physical verification via geological mapping and numerical modeling (Elliot et al. 2001).

-

(c)

Transition or overlapping domain boundaries are those that occur along phase transitions (i.e., gradational contact) of an orebody either to primary formation mechanisms or via post-emplacement/formation overprint such as metamorphism. However, in many cases, the geologic structures that generate a deposit are transitional (overlapping several geologic domains; Kasmaee et al., 2019).

From the above domain categories, mineral resources and ore reserves estimation in the presence of hard boundaries is straightforward because only samples within a domain are used, and there is no continuity between variables in adjacent domains (Emery & Maleki, 2019). Estimating regionalized variables bounded by soft domain boundaries is simple to some extent, provided that the uncertainty can be quantified based on a-priori information (Ortiz & Emery, 2006). However, any estimation in transition or overlapping boundary is most likely to be subjected to undesirable artifacts due to spatial variabilities on either side of boundaries. To compensate for this uncertainty, resource geologists and geostatisticians have resorted to defining such domains based on changing the local mean grade, which is usually gradational rather than abrupt, thus estimating variables in this type of domain by a moving neighborhood analysis (Journel & Rossi, 1989). Others use probabilistic indicator kriging to define boundaries of transition zones (Kasmaee et al., 2019). This approach is subject to contention as it does not always hold true, especially in variably-altered and vein-type mineralization ores that are characterized by a high nugget variance. The overarching aim of this study is to demonstrate an explainable, empirically and theoretically feasible machine learning-based and data-driven approach for the delineation of domain boundaries in order to improve the quality of estimated mineral resources using a case study from a highly variable narrow tabular orebody (Middelvlei Reef) of the Witwatersrand goldfields (South Africa). Our workflow consists of three main components: (1) geostatistical data processing; (2) machine learning-based predictive modeling of domain boundaries (delineation); and (3) block modeling and visualization. This workflow is unconcerned with the assignment of samples into domains. It is worth noting that any sound domaining strategy should also consider the feasibility of extracting economically valuable blocks in terms of mine planning, logistics associated with ore handling and storage of extracted ore blocks.

Geological Background

The Mesoarchean-aged Witwatersrand Basin is located at the center of the Kaapvaal Craton in South Africa and is the largest known gold province in the world, having produced about one third (~ 53,000 metric tons) of all gold mined in history (Frimmel, 2019). Annual production was once the world’s highest for decades, has since steadily decreased from > 1000 metric tons annually in the 1970s to the current levels (i.e., 2018 to date) of < 150 metric tons (Frimmel & Nwaila, 2020). Nonetheless, remaining resources within the basin are estimated to be close to 30% of global known resources (Frimmel, 2014), albeit at ever decreasing ore grades (average of < 4 g/t) and increasing mining depths (> 3.7 km depth; Frimmel & Nwaila, 2020).

The Witwatersrand Basin is defined by a set of cratonic successor basins that rest on the Paleo- to Mesoarchean granitoid-greenstone terranes of the Kaapvaal Craton. At the base of the basin are the Dominion Group rocks (3086 ± 3 to 3074 ± 6 Ma; Armstrong et al., 1991; Robb et al., 1992) which are characterized by a thin basal siliciclastic unit and a bimodal volcanic sequence. Overlying the Dominion Group is the Witwatersrand Supergroup, which is further divided into the West Rand and Central Rand groups. The West Rand Group (2914 ± 8 Ma; Armstrong et al., 1991) is characterized by quartzites and shales with minor conglomerate beds which are mostly restricted to the upper part of the group (Frimmel, 2019). The Central Rand Group (2790 ± 8 Ma; Gumsley et al., 2018) is dominated by quartzites and conglomerate beds which are separated by erosional unconformities (Catuneanu, 2001). Compared to the West Rand Group, conglomerate beds (or reefs) from the Central Rand Group are exceptionally endowed in Au and U. A stratigraphic equivalence of the Witwatersrand Supergroup is the Pongola Supergroup, which is situated toward the eastern margin of the Kaapvaal Craton but is less endowed in both Au and U.

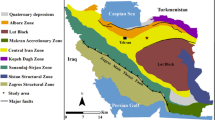

Although most of the high-grade conglomerate beds of the Witwatersrand Basin are exhausted, several marginal to low-grade orebodies remain unmined and poorly explored. Current orebodies are geometallurgically more challenging to process than historic orebodies, because of significant nugget effects and a generally more complex mineralogical assemblage. The Middelvlei Reef is one of the largest unmined gold resources in the Witwatersrand goldfields, especially in the Carletonville goldfield (Fig. 1). The Middelvlei Reef in the Driefontein mining area is a 20 cm- to 4 m-thick auriferous conglomerate bed with interbedded quartzites that is stratigraphically located approximately 50 m to 70 m above the Carbon Leader Reef, near the base of the Central Rand Group (Jolly, 1984; Myers et al., 1993). In the northern part of the Driefontein mine area, the Middelvlei Reef subcrops against the sub-horizontal Black Reef Formation (Transvaal Supergroup), whereas in the eastern part, it subcrops against the Ventersdorp Contact Reef (Ventersdorp Supergroup) (Myers et al., 1993). Both above and below the Middelvlei Reef are immature to mature quartzites. Rocks in the area strike east–west and dip between 20° and 30° south (Jolly, 1984). Lithologies in the Carletonville goldfield were deposited in an aggradational sandy braided stream and floodplain environment, with the highest gold grades associated with paleo-stream channels (east in the Driefontein mine area; Els, 1983; Jolly, 1984; Myers et al., 1993).

Simplified geological maps of the Witwatersrand Supergroup (modified after Frimmel et al., 2005) and Carletonville goldfield (modified after McCarthy, 2006). (a) Map and location of the Witwatersrand Basin. (b) Map of the Carletonville goldfield with the location of the Driefontein mine area (shaded). The location of the Ventersdorp Contact Reef is included. The inset demonstrates the stratigraphic succession in the Carletonville goldfield area. In both (a) and (b) the cover sequences of the younger Ventersdorp and Transvaal supergroups have been removed

In the study area, the Middelvlei Reef is bounded by major (≥ 50 m displacement) and minor (< 50 m displacement) faults. Prominent geological structures within the study area consist of a parallel system of sinistral “wrench or tear” faults, a twin strike-slip E–W tending faults with left-lateral displacement of up to 500 m and a reverse sense of vertical displacement of 50 to 20 m down-throw to the NW (Fig. 1b). These faults can be tracked from the West Rand goldfield into the Carletonville goldfield. Similar geometry of faults was observed in the Far East Rand goldfields, and are described in Dankert and Hein (2010) and references therein. In the study area, the tear faults close-out to 1 m vertical displacements toward the western margin of the Middelvlei Reef in the Driefontein mining area.

Data and Methodology

Source Data

The data for this proof-of-concept study originates from a mine that is extracting from the Middelvlei Reef. Samples are typically taken as decimeter-scale vertical averages from reefs (also known as channels, Fourie & Minnitt, 2016). The data contain unified spatial and geodomain information, and gold assays of 25 056 samples, of which about 10% are from underground exploration diamond drill holes and the remainder from grade-control channel chips. A sample horizontal spacing of 5 m was used for panel (block) sampling and horizontal spacing of 3 m in the development end. The orebody has a high nugget effect (~ 50% nugget variance) due to the close spacing occurrence of the physical gold nuggets coupled with possible sampling errors. Regardless, the overall spatial variability is known to be lower at the mine relative to the surrounding orebodies such as the Ventersdorp Contact Reef (Fig. 1b). All samples (from drill holes and channel samples) represent vertical averages of a minimum of 10 cm of material, composited to centimeter grams per metric tons (cm g/t), which is a standard industry practice to represent gold accumulation in narrow tabular orebodies. This ensures that the vertical variation is accounted for as modeling the third-dimension (depth) explicitly becomes undesirable for narrow tabular orebodies. This is different from other forms of orebodies such as porphyry-type copper deposits that are composited into accessible widths to enable the creation of ore-grade shells. Outliers in the dataset were assessed spatially (e.g., clusters of high or low-grade areas were not treated as outliers) and where detected, the outliers were trimmed based on a 98% confidence interval of the modeled distribution.

Geodomaining at the Point Level

The challenge of geodomaining is traditionally a geostatistical problem, whose solution best separates data points spatially using their variable domain characteristics (e.g., Fouedjio et al., 2021). Based on the information provided by the mine, geometallurgical information (i.e., ore grade, lithofacies or rock type, mineralization type, alteration and structural information) were used to assign samples in our dataset to domains. Geodomaining partitions the orebody into spatially contiguous regions that are more similar within than between. Notions of similarity can be a combination of data-driven and knowledge-driven approaches. One data-driven approach would be the clustering of samples to domains using unsupervised machine learning (e.g., Madani et al., 2022). For geometallurgical domaining, it is important that the resulting clusters are generally compact, spatially contiguous and correspond with knowledge (Madani et al., 2022). Regardless of the approach, geometallurgical domains are typically created to benefit downstream activities, from mapping (or modeling) to extraction and processing. Differences in downstream activities between companies imply that domains are generally not unique and largely heuristic. For our data, the largest-scale sample variability was attributable to geological factors (e.g., faults, orebody geometry and lithofacies). The in-mine geological and operating knowledge was used to divide the data points to one of seven domains. After the assignment, an F-test was performed on gridded samples to determine in-domain and between-domain homoscedasticity using a grid spacing at approximately twice the sampling density (10 m). Based on Krige’s relationship, it is expected that the variance of a portion of an orebody can be expected to be smaller than the orebody as a whole (Krige, 1951, 1981, 1997). Stationarity is a continuous measure and hence, there is no general consensus of the exact condition and confidence that must be used to partition samples (Dias & Deutsch, 2022). A 95% confidence limit (α = 0.05) was adopted in this study (Fig. 2). Domain 3 contained only seven data points, which are all closely located near data points in domain 0 (Fig. 2). The summary statistics of data in each domain are given in Table 1. Notice that the domains at the point level are labeled as “p0” to “p6” to avoid confusion with delineated spatial domains. Because only the fully domained data were supplied for this study, it is impossible to replicate the geodomaining process, since other information, including expert knowledge of the area was unavailable. Aside from replication, assignment of points to domains would be out of the scope of this paper. For each domain, the coefficient of variation (CV) was used to measure the dispersion of data points (Isaaks & Srivastava, 1989).

Automated Domain Boundary Delineation Using Machine Learning

Once the samples are separated, domain boundaries can be drawn. This is important for activities that require a gridded or continuous spatial representation of the data, such as mapping or resource modeling. The first requirement to enable machine learning-based domain boundary delineation is to formulate this problem into a machine learning task. Fortunately, this is relatively simple in our case. From the machine learning perspective, each data point contains a class label, which is the domain. The features are the data point’s coordinates. Therefore, the machine learning problem is essentially a spatial classification problem, where decision boundaries occur in spatial domain. Therefore, the machine learning task is to create predictive models that best predict the domain label (class label in machine learning terminology) using spatial coordinates. Model selection would be based on a combination of quantitative (metric-based) and qualitative (knowledge-based) methods. Qualitative methods cater to physical requirements of the domain boundaries, which are more subjective and can include, for example, the complexity of domain boundaries (e.g., to within extraction capability and geotechnical feasibility).

Mathematical methods to delineate domains include interpolation of signed distance functions using radial basis functions (RBF; Cowan et al., 2003). In this method, the distance between samples and the nearest sample of an adjacent domain is calculated. Negative values are set for samples that fall inside the modeled domain and positive values for samples that fall outside. Subsequently, the distances are interpolated and the boundary between domains is extracted where the distance equals to zero (Sanchez & Deutsch, 2022). There are different methods for distance interpolation, including: discrete smooth interpolation (Mallet, 1989), classical geostatistical methods (Blanchin & Chilès, 1993) and RBF methods (Cowan et al., 2003), which include RBF with gradients and constraints (Hillier et al., 2014) and RBF with local anisotropy (Martin & Boisvert, 2017). Using RBF methods as an example, the desire to interpolate between signed distances is a discipline-specific implementation of domain boundary delineation. In all previous delineation methods, the boundary transition zone is modeled using traditional mathematical and geostatistical methods (Sanchez & Deutsch, 2022 and references therein).

The idea of using RBF to model domain boundaries is closely resemblant of some machine learning algorithms. Perhaps the closest algorithm is the support vector machine (SVM) algorithm using a RBF kernel (Vapnik, 1998; Hsu & Lin, 2002; Karatzoglou et al., 2006). The SVM algorithm can be used for classification and regression tasks and is particularly adept at delineating nonlinear boundaries in high-dimensional space (Hsu & Lin, 2002; Karatzoglou et al., 2006). Nonlinear kernels such as the RBF kernel permits the SVM algorithm to separate linearly non-separable data. In classification tasks, SVM attempts to maximize the Euclidean distance between the closest samples (support vectors) to the decision plane, which, in the context of domain boundary delineation, is the domain boundary. Optimization occurs from the minimization of an objective function that measures the sum of the Euclidean distances between the support vectors and the decision plane (margin). The property of the SVM algorithm to maximize distance between support vectors using flexible nonlinear kernels makes it a theoretically perfect choice for data-driven domain delineation. The SVM algorithm has a few key hyperparameters, such as C, which defines a penalty for misclassifying support vectors and larger values increase the decision boundary complexity. Another hyperparameter in SVM for classification tasks is \(\gamma\), which specifies the nonlinear kernel’s coefficients. Because of the use of an Euclidean distance metric, SVM implicitly assumes Euclidean geometry for the feature space, which is not an issue for the task of domain boundary delineation, as the spatial coordinates can be converted into the UTM coordinate system (or similar), which is locally Euclidean. In essence, the SVM algorithm with the RBF kernel elegantly formulates the RBF-based approach as proposed by Sanchez and Deutsch (2022) into a machine learning task. There are benefits of choosing the machine learning framework, such as: (1) a data-driven and complete automation of domain boundary delineation; (2) explicit controls on the hyperparameters through either cross-validation or user selection (e.g., to tune boundary complexity to suit extraction capabilities); and (3) the ability to add non-spatial features to boundary delineation, which integrates other characteristics of the orebody or mining operation. Here, we demonstrate benefits (1) and (2), as our dataset is unsuitable to realize benefit (3), because no additional data attributes are available.

Aside from SVM and the inspiration of Sanchez and Deutsch (2022), there are other classification algorithms that are variably explainable and may be suitable for domain delineation. Some of them may also give physically unrealistic results, such as the tree-based methods, which delineate boundaries always parallel to feature coordinates. Unexplainable algorithms such as neural networks produce models that are inherently complex and difficult to interpret (Zuo et al., 2019), which gave rise to the field of explainable artificial intelligence (e.g., Linardatos et al., 2021). It is not only model complexity that may be a challenge to explain to justify the results, but in the case of geodomaining, an anticipated application would be dynamic model tuning and retraining during mining operations. For unexplainable models, their behavior may be difficult to extrapolate to new data and areas. In our study, for the sake of representation and completeness, but also to illustrate anticipatable behavior from common algorithms, we explore other common algorithms, including the k-nearest neighbors (kNN; Fix & Hodges, 1951; Cover & Hart, 1967), tree-based algorithms such as random forest and adaptive boosting or AdaBoost (Freund & Schapire, 1995; Ho, 1995; Breiman, 1996a, 1996b, 2001; Kotsiantis, 2014; Sagi & Rokach, 2018), logistic regression (for classification, Cramer, 2002), naïve Bayes (for classification, Rennie et al., 2003; Hastie et al., 2009), and artificial neural network (ANN, Curry, 1944; Rosenblatt, 1961; Rumelhart et al., 1986; Hastie et al., 2009; Lemaréchal, 2012). These algorithms, their functionality and hyperparameters in classification tasks are fully explained in Zhang et al. (2022) and Nwaila et al. (2022b) and references therein. In general, the hyperparameters control the geometric complexity of decision boundaries, which for geodomaining are domain boundaries.

To select algorithms and their best models over a parameter grid (Table 2), we used stratified k-fold cross-validation (k = 5) combined with the F1 score as a metric. Even though we used cross-validation for model selection in this work, it is not ideal for geospatial data. In geostatistical learning (Hoffimann et al., 2021), the spatial correlation of covariates leads to serious under-estimation of model errors and incorrect rankings of models. The F1 score is a harmonic mean of precision and recall, where the best score is 1 and the worst score is 0. These details are important for domain delineation. Stratification handles class imbalance (e.g., disproportionate sample coverage across domains), which is generally unacceptable for domain delineation because delineation should always occur between adjacent domains regardless of their populations. The choice of the F1 metric for model tuning is also important for domain delineation because it is important to symmetrically minimize both the number of false positives and negatives. This ensures that there is minimal bias of the samples from any domain that is misclassified into adjacent domains. To understand model performance, we averaged 10 runs of fivefold cross-validation across the entire dataset using the accuracy metric, the F1 score and confusion matrices (Fawcett, 2006). To demonstrate the ability to manually tune domain boundary complexity, we also adopted a manually tuned model using the same algorithm and parameter grid as the SVM algorithm, but with a substantially less regularization (C = 1,000,000). In this study, the machine learning workflow was implemented in Python using the Scikit-Learn package (Buitinck et al., 2013).

Spatial Autocorrelation and Domain-Based Resource Estimation

For demonstrating the effect of domaining versus un-domained estimation of resources, we employed a standard geostatistical resource estimation approach using GSLIB (Deutsch & Journel, 1992) via Python in the form of GeostatsPy (Pyrcz et al., 2021). In this study, we used block ordinary kriging using a spherical variogram model (Krige, 1997; Olea, 1999). Subsequently, the kriging variance \(({\sigma }_{\mathrm{K}}^{2}\)), efficiency (KEFF; Krige, 1997) and slope of regression (SLOR; Snowden, 2001) were calculated. KEFF is the ratio of the difference of the kriging (or estimation) variance and the block variance \(({\sigma }_{\mathrm{B}}^{2})\), and the block variance (\(\mathrm{KEFF}=\frac{{\sigma }_{\mathrm{B}}^{2}-{\sigma }_{\mathrm{K}}^{2}}{{\sigma }_{\mathrm{B}}^{2}}\), Deutsch & Deutsch, 2012). As \({\sigma }_{\mathrm{K}}^{2}\) tends to zero, KEFF approaches 1, which implies that a perfect match exists between the estimated and true grade distributions. Where data become sparser or clustered, or as blocks are extrapolated more than interpolated, KEFF decreases. Sometimes kriging efficiency can be negative, signaling extremely unreliable estimates. SLOR measures the conditional bias of the kriging estimate and can be written as \(\mathrm{SLOR}=\frac{{{\sigma }_{\mathrm{B}}}^{2}-{{\sigma }_{\mathrm{K}}}^{2}+\lambda }{{{\sigma }_{\mathrm{B}}}^{2}-{{\sigma }_{\mathrm{K}}}^{2}+2\lambda }\), where \(\lambda\) is the Lagrange multiplier (Snowden, 2001). For simple kriging, SLOR is always exactly 1, whereas for ordinary kriging, SLOR is generally less than 1. Higher values of SLOR implies that the estimated high and low grades correspond more accurately to the respective true high and low grades. Maps of these metric scores facilitate the interpretation of block kriging results (Isaaks, 2005).

The number of samples for estimation per domain is a kriging hyperparameter (using machine learning terminology). In this study, we used a grid search from 1 to 20 samples combined with leave-one-out cross-validation to select optimum number of samples at a point level using ordinary kriging, which is a heuristic method (Deutsch et al., 2014). In general, fewer number of samples produces less accurate interpolation results, while higher number of samples introduces progressive smoothing. To assess the cross-validation results, we chose the mean absolute error or MAE. Using the elbow method, the maximum number of samples is the number of samples with the lowest MAE, while the minimum number of samples was determined as the number of samples that first reached the flat portion of the elbow curve.

Results

Data-Driven Geodomain Delineation

Data pre-processing includes the removal of samples with assay values (in units of cm g/t) of 0 or negative gold grades, as well as trimming the outliers above the 98th percentile. While this is not a requirement for predictive modeling, grades that were at 10 cm g/t (equivalent to 0.5 g/t) or below were either samples that were taken off from the orebody, or were erroneously recorded. The trimming of outliers is necessary for geostatistical interpolation (Chiquini & Deutsch, 2017). After this process, feature scaling was used on the spatial coordinates of the samples, such that they were rescaled to between 0 and 1 for both the X and Y (in UTM) directions. This is a requirement for spatially-aware machine learning algorithms (in the feature space, because of the use of spatial metrics to measure distance). After pre-processing, about 94.5% of the data points were retained, resulting in a loss of 1380 samples.

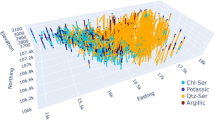

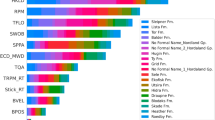

Algorithm and model selection showed that the least accurate algorithm was the naïve Bayes algorithm, while the most accurate was the random forest algorithm (Fig. 3). The optimized hyperparameters are shown in Table 3. The confusion matrices derived from averages of 10 randomized fivefold (stratified) cross-validation runs (Fig. 4) show that domain p3, which only featured seven samples, was the most challenging to classify correctly. The delineated domain boundaries are presented in Figure 5. In addition to featuring only seven data points, domain p3 occupied the least space (see Fig. 2), which also made it generally unreliable to extrapolate its boundaries beyond the data points. In the completely data-driven perspective, this domain was best isolated by the kNN algorithm and ignored by a few algorithms, namely the SVM, logistic regression and ANN algorithms. This is because domain p3 presents a pathological condition for classification: very infrequent occurrence of the class label; and the extremely close spacing of the data points. Hence, some algorithms may treat domain p3 as noise or outliers. Manually lowering the regularization (C = 1,000,000) of the SVM decision boundaries permitted the isolation of domain p3 (Fig. 5h). However, the manual approach is less data-driven and requires knowledge of the periphery of the orebody (whether domain p3 is significant, e.g., it represents a real but under-sampled orebody). It can be seen that the curvature of the domain boundaries, especially for delineated domains 0 and 6, increased and were more contoured to individual data points than that with more regularization (Fig. 5b). Within this more complex domain model, six of the seven data points were captured within the space designated for domain p3.

F1 and accuracy scores of various algorithms and their best models through fivefold (stratified) cross-validation. Here NB is naïve Bayes; LR is logistic regression; ANN is artificial neural networks; SVM is support vector machine; Man is manually tuned SVM; kNN is k-nearest neighbors; AB is AdaBoost; and RF is random forest

Domain boundary delineated by various algorithms: (a) k-nearest neighbors (kNN); (b) support vector machine (SVM) with domain labels at the point level (domains p0 to p6); (c) random forest (RF); (d) AdaBoost (AB); (e) naïve Bayes (NB); (f) logistic regression (LR); (g) artificial neural networks (ANN); and (h) manually tuned SVM (Man)

Although domain p3 exhibited the lowest accuracy according to the confusion matrices, it is nevertheless consistently delineated (even with 0 internal data points) with the exception of the logistic regression algorithm (Fig. 5a–g). In particular, the domain boundary as delineated by the random forest algorithm would be impossible to realize in the extraction setting and is probably not physically realistic in terms of orebody geometry (Fig. 5c). Therefore, although the random forest algorithm performed the best during algorithm selection, its results were not particularly desirable for downstream uses of geodomains. The results from the logistic regression, naïvse Bayes and ANN algorithms were not particularly selective (Figs. 3 and 4) and feature substantial amount of data crossing over into adjacent domains (Fig. 5e–g) and, therefore, were also undesirable for further downstream use. However, ANN is theoretically infinitely tunable by enlarging the hidden layer(s), but this would result in increasingly more complex domain boundaries with correspondingly unexplainable model behavior. The results from the remainder—kNN, SVM and AdaBoost (Fig. 5a, b and d)—were more reasonable. Model choice depends on extraction capabilities and mine planning layout (which are not data-driven). However, compared with boundaries obtained by kNN and AdaBoost, the SVM-derived boundaries were substantially less complex geometrically (especially relative to those obtained by kNN). Although it was unable to fully separate the sparsely sampled domain p3, it was able to create an empty spatial domain that corresponded to domain p3. This may be useful in cases where sparsely sampled domains occur near the edges of orebodies. The manually tuned SVM model also featured an unexplainable triangular cusp in the north-western portion of the map (Fig. 5h), which is an artifact of model over-fitting. The membership of all domains as delineated by all models is largely similar (Figs. 4 and 5).

Variogram Modeling and Fitting of the Spherical Model

For our dataset, we chose the domain model provided by the SVM algorithm (Fig. 5b). As the domain membership is largely comparable between all models, we expect only minor quantitative differences between their resulting resource models. Using the SVM model, the membership of samples at the point level within each domain was re-assigned according to the model (summary statistics provided in Table 4). Subsequently, we performed automated anisotropic variogram modeling using a single structure and the spherical model (domains 1, 5 and 6 shown in Fig. 6). Others are qualitatively similar with the exception of domain 3, which is empty (the data were included in the adjacent domain, domain 0). Even if any model isolated all seven data points in the original domain p3 (e.g., the membership of domain 3 is equal to domain p3), there would have been insufficient data for further modeling. The fitting method weighs the data proportional to their lag distance, which biases the fit to better capture the pre-range portion of the variogram. For the model parameters for all domains, see Table 5.

Optimization of the Number of Samples to be Used for Kriging

The results of the grid search are illustrated for domains 1, 5 and 6 in Figure 7. The qualitative behavior of all domains was identical with the exception of domain 3, which contained no samples. In general, the MAE reached the beginning of the elbow at around 5 samples. Thereafter, the MAE at increasing number of samples dropped slowly with increasing number of samples. These per-domain optimized number of samples were subsequently used in the interpolation process for resource estimation-type of block modeling.

Mean absolute error (MAE) versus the number of samples in a leave-one-out cross-validation using ordinary kriging for domains 1 (a), 5 (b) and 6 (c). For domain 1, the maximum number of samples is 11 and the minimum is 4. For domain 5, the maximum number of samples is 18 and the minimum is 4. For domain 6, the maximum number of samples is 6 and the minimum is 4

Kriging Block Model

Using data from each domain, as delineated by the SVM algorithm with domain-optimized variograms and hyperparameters (variogram models and number of samples), we created a geodomained block models at a resolution of 20 m (square) using ordinary kriging (Fig. 8a). This is the block size that is commonly used by the mine. Similarly, the KEFF and SLOR maps are shown in Figure 8b and c. The summary statistics for the geodomained model are given in Table 6. An examination of the geodomained block model (Fig. 8a) shows that the cross-domain continuity of gold grades was excellent (e.g., upper right corner of Figure 8a), such that it would be difficult to unambiguously identify domain boundaries by solely relying on the block model.

To appreciate the empirical impact of geodomaining on resource estimation, we created a non-geodomained block model (Fig. 9) using the same data and workflow (pre-processing, automated variogram modeling and hyperparameter tuning). Due to differences in variogram ranges between the geodomained and non-geodomained block models, the total number of blocks estimated are different (Figs. 8a and 9). The non-geodomained model contains 4060 estimated blocks, versus the 3992 blocks in the geodomained model (excluding all blocks of domain 3), which is about 1.7% relative difference using the geodomained model as a reference. Visually, the mid- to high-grade zones near the north-western and north-eastern portions of the maps extend further in absence of domain boundaries (Fig. 9). At the first-order of stationarity, it is clear that blocks with higher gold concentrations tend to be more abundant and connected in appearance compared to that of the geodomained model and the maximum block grade is lower (Fig. 8a). As such, there is more smoothing in the non-geodomained model compared to the geodomained model (Figs. 8a and 9). Higher smoothing results in a reduction in the block standard deviation and maximum grade. In our case, the geodomained model featured an increased maximum block grade (about 6% relative difference), which occurred in domain 0 (2154.18 cm g/t), relative to the non-geodomained model (2038.22 cm g/t, Tables 6 and 7). A map of the relative difference (geodomained grades minus the non-geodomained grades, divided by the non-geodomained grades) shows that the difference is the most pronounced in the vicinity of the vertical boundary between domains 1 and 5 and the least prominent inside domain 5 (Fig. 10). Quantitatively, there was an excess of 3.03 × 104 cm g/t of gold in the non-geodomained model relative to the geodomained model (derived from Tables 6 and 7). This amounted to about 1.4% relative error between the two models, using the geodomained block model as a reference. Since ground truth was unavailable, it was impossible to verify either resource model. However, a feed-forward analysis is possible. The extent of smoothing, which is an undesirable consequence of spatial interpolation, was reduced through geodomaining. This is an improvement in favor of geodomaining. At the second-order of stationarity, the block standard deviations were substantially different between domains (Table 6). This implies that the variability of the resource grade was dissimilar between domains and combining their constituent data points into the same domain for block modeling would not be rigorous. For a detailed comparison of cumulative distribution curves and quantiles, see Supplementary Information. Based on the feed-forward analysis, we expect that metal reconciliation at the block level would be better using the geodomained model. The reduction in total estimated blocks is also a potential benefit of geodomaining for resource estimation, as this would result in a reduction in the extraction of poorly sampled blocks that may also compromise metal reconciliation.

Cumulative Distribution Curves of Sample Points and Kriged Blocks

The quality of a resource model, in addition to using metrics such as the SLOR and KEFF, can be assessed through the cumulative distribution curve and quantile–quantile plots. The more similar the cumulative distributions between the points and the blocks for each domain, the better a model. In our case, there was a tendency toward a higher frequency of higher-grade blocks compared to points and the opposite behavior at the low grades (Fig. 11).

Discussion

Our application of machine learning algorithms to delineate domain boundaries was inspired by the works of Cowan et al. (2003), Sanchez and Deutsch (2022). In particular, the use of RBF to interpolate between samples at the edges of adjacent domains is thematically very similar to the mechanisms of decision boundary delineation using the SVM algorithm in the realm of machine learning. An exploration of a variety of classification algorithms that were capable of domain delineation demonstrates that the quality of domain boundaries cannot be solely determined using performance metrics. The qualitative difference in domain boundaries is substantial (compare between Fig. 5a–h). Some of the algorithms produced geometrically complex and likely useless boundaries (e.g., kNN and RF). In comparison, while the decision boundary from the SVM algorithm is simpler, it still separated domains within the area in a manner that was objective based on the data. While typical domain complexity in the mining sector is impossible to determine due to the closed nature of resource estimation, we suspect that simpler domain boundaries are more likely to be implementable, particularly since domain memberships are largely comparable across our models. In addition to implementation feasibility (due to extraction capability and geotechnical constraints), it is also important to anticipate that with continued extraction, more data would be generated that may allow the domain models to be refined. Hence, as usual with machine learning, model generalizability is important. The fundamental principle of the variance versus bias trade-off in machine learning can be leveraged to understand the desirability of domain boundary models. Thus, more complex models do not generalize as well as simpler models, and therefore, it is more probable that simpler models would result in less overall resource model errors with continued extraction. It is also our experience that typical domains created using in-discipline methods and orebody geometries do not resemble that of the tree-based methods.

The fundamental premise behind geodomaining is essential in resource estimation and mapping (although more implicitly). Geodomaining has the potential to incorporate high-dimensional data, especially in the geometallurgical sense. The desire for increased use of geometallurgical constraints at earlier stages of the mineral industry originates from the desire for more integrated approaches to maximize business outcomes (e.g., efficiency and profit; e.g., Lishchuk et al., 2020; Nwaila et al., 2022c). In this manner and as orebodies are becoming more complex, challenging to extract and process, geodomaining, which is a first pass of material separation and concentration, is likely to increase in significance and could become a determinant for the course of the mineral industry. Combining dissimilar samples into the same spatial model for mapping to resource estimation is likely to yield unreliable or misleading results. The downstream impacts include extraction feasibility, rigor of metal accounting and reconciliation, processing and beneficiation efficiency, and ultimately, business and resource sustainability. Results from our application show that there was 1.4% relative error in total resource between the geodomained and non-geodomained block models, among other higher-moment statistical differences between them. Compared to point-level geodomaining, the delineation of domain boundaries is not yet fully data-driven. Presently, manual and mathematically-aided interpretation techniques exist but especially in the case of manual interpretations, operator expertise and experience are key to method and outcome replicability (e.g., Sanchez and Deutsch, 2022). In other words, the biggest factor limiting scientific replicability in the delineation of domains has not been data replicability, but domaining-method replicability.

The ability to leverage data-driven methods to delineate geodomains would finally provide absolute delineation replicability, and, if used appropriately, increased model objectivity to the best availability of the data. The ability to consistently delineate domains using an established framework not only standardizes replicability but also introduces uncertainty analysis tools provided by the framework. In this sense, solving the domain delineation problem within the machine learning framework provides several critical capabilities: (1) the ability to automatically or manually tune the complexity of domain boundaries to match downstream capabilities (e.g., mechanized extraction); (2) the ability to anticipate the uncertainty of domain boundaries due to the ability to use stratified cross-validation testing to produce standardized metric scores (however, cross-validation over-estimates model performance for geospatial data); (3) the ability to quickly incorporate new data and dynamically update models due to typical machine learning workflow automation and the ease of updating machine learning models; and (4) the ability to seamlessly incorporate additional constraints aside from spatial coordinates to delineate domains in high-dimensional feature space (e.g., other sample characteristics that are desirable aside from spatial coordinates). In addition to these capabilities, the integration of a machine learning-based geodomaining workflow with additional machine learning tasks, such as target prediction (e.g., Nwaila et al., 2019; Zhang et al., 2020) and anomaly detection (e.g., Zhang et al., 2021a, b) is straight forward as data pre-processing, workflow sequencing, visualization and predictive modeling can all be automated. This should facilitate geodomaining for geodata scientists (who may not be geoscientists but are trained in mainly trans-disciplinary disciplines, such as machine learning, artificial intelligence and data science); conversely, it facilitates the adoption of other machine learning methods for resource estimation and spatial interpolation specialists. This bipartite benefit is probably the most important outcome of approaches, such as ours, that bring a mutually understandable and reliable bridge between traditional disciplines and modern trans-disciplinary talents, which would facilitate talent recruitment, retainment and the development of dry laboratories to the benefit of the entire minerals industry (Ghorbani et al., 2022).

Conclusions

Geodomaining is at least a two-staged process, whereby in the first stage, the samples are classified into discrete domains and in the second stage, boundaries are drawn to best isolate adjacent domains. This study focuses on the second stage and was inspired by an existing approach to use RBF to delineate domain boundaries, which is an in-discipline practice. We solved the domain delineation problem using the machine learning framework by transcribing the domain delineation problem into a classification problem. We demonstrated that a variety of algorithms are capable of this task (of data-driven domaining) in a variable narrow tabular orebody (i.e., Middelvlei Reef) from the Witwatersrand goldfields. Of all the explored algorithms, the SVM algorithm is theoretically the most resemblant of the in-discipline approach using RBF. Furthermore, we showed that a purely data-driven approach using solely metrics to assess the feasibility of algorithms and models is insufficient, and discipline-specific expertise around the geometry of orebodies and extraction capability is important to adopt suitable domain boundaries. Nevertheless, by adopting the machine learning framework, we realized several benefits including: (1) uncertainty quantification (through cross-validation performance metrics); (2) data-driven or manual domain boundary complexity tuning; automation; (3) dynamic updates of models using new data; and (4) simple integration with existing machine learning-based workflows. In addition, we replicated the general consensus in geostatistics that domaining point-wise data is important to create accurate resource models. Non-geodomained estimates were generally more smoothed than geodomained estimates and there was an overall difference in terms of total estimated resource. Lastly, our study is an example of the adoption of data-driven methods in geosciences that pays homage to and builds heavily on existing in-discipline knowledge.

References

Armstrong, R. A., Compston, W., Retief, E. A., Williams, I. S., & Welke, H. (1991). Zircon ion microprobe studies bearing on the age and evolution of the Witwatersrand Triad. Precambrian Research, 53, 243–266.

Blanchin, R., & Chilès, J.-P. (1993). The channel tunnel: Geostatistical prediction of the geological conditions and its validation by the reality. Mathematical Geology, 25(7), 963–974.

Breiman, L. (1996a). Bagging predictors. Machine Learning, 24(2), 123–140.

Breiman, L. (1996b). Stacked regressions. Machine Learning, 24(1), 49–64.

Breiman, L. (2001). Random forests. Machine Learning, 45, 5–32.

Buitinck, L., Louppe, G., Blondel, M., Pedregosa, F., Mueller, A., Grisel, O., Niculae, V., Prettenhofer, P., Gramfort, A., Grobler, J., Layton, R., Vanderplas, J., Joly, A., Holt, B., & Varoquaux, G. (2013). API design for machine learning software: experiences from the scikit-learn project. http://arxiv.org/abs/1309.0238.

Catuneanu, O. (2001). Flexural partitioning of the late Archaean Witwatersrand foreland system, South Africa. Sedimentary Geology, 141, 95–112.

Chilès, J.-P., & Delfiner, P. (2012). Geostatistics: Modelling spatial uncertainty (2nd ed.). Wiley.

Chiquini, A. P., & Deutsch, C. V. (2017). A simulation approach to calibrate outlier capping. In J. L. Deutsch (Ed.), Geostatistics lessons. Retrieved August 7, 2022, from https://geostatisticslessons.com/lessons/simulationcapping.

Cover, T., & Hart, P. (1967). Nearest neighbor pattern classification. IEEE Transactions on Information Theory, 13(1), 21–27.

Cowan, J., Beatson, R., Ross, H. J., Fright, W. R., McLennan, T. J., Evans, T. R., Carr, J. C., Lane, R. G., Bright, D. V., Gillman, A.J., Oshust, P.A., & Titley, M. (2003). Practical implicit geological modelling. In 5th international mining geology conference, the Australian institute of mining and metallurgy (Vol. 8, pp. 89–99).

Cramer, J. S. (2002). The origins of logistic regression (pp. 16). Tinbergen Institute, working paper no. 2002-119/4. https://doi.org/10.2139/ssrn.360300.

Curry, H. B. (1944). The method of steepest descent for non-linear minimization problems. Quarterly of Applied Mathematics, 2, 258–261.

Dankert, B. T., & Hein, K. A. A. (2010). Evaluating the structural character and tectonic history of the Witwatersrand Basin. Precambrian Research, 177(1–2), 1–22.

Deutsch, J. L., & Deutsch, C. V. (2012). Kriging, stationary and optimal estimation: measures and suggestions. CCG Annual Report 14, Paper 306.

Deutsch, C. V., & Journel, A. G. (1992). GSLIB: Geostatistical software library and user’s guide. Oxford University Press.

Deutsch, J. L., Szymanski, J., & Deutsch, C. V. (2014). Checks and measures of performance for kriging estimates. The Journal of the Southern African Institute of Mining and Metallurgy, 114, 223–230.

Dias, P. M., & Deutsch, C. V. (2022). The decision of stationarity. In J. L. Deutsch (Ed.), Geostatistics lessons. Retrieved August 7, 2022, from http://www.geostatisticslessons.com/lessons/stationarity.

Eliott, S. M., Snowden, D. V., Bywater, A., Standing, C. A., & Ryba, A. (2001). Reconciliation of the McKinnons gold deposit, Cobar, New South Wales. In A. C. Edwards (Ed.), Mineral resource and ore reserve estimation—The AusIMM guide to good practice. The Australasian Institute of Mining and Metallurgy.

Els, B. G. (1983). The sedimentology of the Middelvlei Reef on doornfontein gold mine. M.Sc. thesis. University of Johannesburg.

Emery, X., & Maleki, M. (2019). Geostatistics in the presence of geological boundaries. Application to mineral resources modeling. Ore Geology Reviews, 114, 103124.

Emery, X., Ortiz, J. M., & Cáceres, A. M. (2008). Geostatistical modelling of rock type domains with spatially varying proportions: Application to a porphyry copper deposit. Journal of the Southern African Institute of Mining and Metallurgy, 108(5), 284–292.

Fawcett, T. (2006). Introduction to ROC analysis. Pattern Recognition Letters, 27, 861–874.

Fix, E., & Hodges, J. L. (1951). An important contribution to nonparametric discriminant analysis and density estimation. International Statistical Review, 57(3), 233–238.

Fouedjio, F., Scheidt, C., Yang, L., Achtziger-Zupančič, P., & Caers, J. (2021). A geostatistical implicit modeling framework for uncertainty quantification of 3D geo-domain boundaries: Application to lithological domains from a porphyry copper deposit. Computers & Geosciences, 157, 104931.

Fourie, A., & Minnitt, R. C. A. (2016). Review of gold reef sampling and its impact on the mine call factor. Journal of the Southern African Institute of Mining and Metallurgy, 116(11), 1001–1009.

Freund, Y., & Schapire, R. E. (1995). A decision-theoretic generalization of on-line learning and an application to boosting. In P. Vitányi (Ed.), Second European conference on computational learning theory. Springer.

Frimmel, H. E., & Nwaila, G. T. (2020). Geologic evidence of syngenetic gold in the Witwatersrand Goldfields, South Africa. In T. Sillitoe, R. Goldfarb, F. Robert, & S. Simmons (Eds.), Geology of the major gold deposits and provinces of the world, (Special Publication 23, pp. 645–668). Society of Economic Geologists. https://doi.org/10.5382/SP.23.31

Frimmel, H. E. (2005). Archaean atmospheric evolution: Evidence from the Witwatersrand gold fields, South Africa. Earth-Science Reviews, 70, 1–46.

Frimmel, H. E. (2014). A giant Mesoarchean crustal gold-enrichment episode: Possible causes and consequences for exploration. Society of Economic Geologists, Special Publication, 18, 209–234.

Frimmel, H. E. (2019). The Witwatersrand basin and its gold deposits. In A. Kröner & A. Hofmann (Eds.), The Archean geology of the Kaapvaal craton, Southern Africa (pp. 325–345). Springer.

Garrett, R. G. (1983). Sampling methodology. In R. J. Howarth (Ed.), Handbook of exploration geochemistry (Vol. 2, pp. 83–110). Elsevier.

Ghorbani, Y., Zhang, S. E., Nwaila, G. T., & Bourdeau, J. E. (2022). Framework components for data-centric dry laboratories in the minerals industry: A path to science-and-technology-led innovation. The Extractive Industries and Society, 10, 101089.

Gumsley, A., Stamsnijder, J., Larsson, E., Söderlund, U., Naeraa, T., de Kock, M. O., & Ernst, R. (2018). The 2789–2782 Ma Klipriviersberg large igneous province: Implications for the chronostratigraphy of the Ventersdorp Supergroup and the timing of Witwatersrand gold deposition. In GeoCongress 2018, geological society of south Africa.

Hastie, T., Tibshirani, R., & Friedman, J. (2009). The elements of statistical learning: data mining, inference, and prediction. Springer.

Hillier, M. J., Schetselaar, E. M., de Kemp, E. A., & Perron, G. (2014). Three-dimensional modelling of geological surfaces using generalized interpolation with radial basis functions. Mathematical Geosciences, 46(8), 931–953.

Ho, T. K. (1995). Random decision forests. In Proceedings of the 3rd international conference on document analysis and recognition (pp. 278–282). https://doi.org/10.1109/ICDAR.1995.598994

Hoffimann, J., Zortea, M., de Carvalho, B., & Zadrozny, B. (2021). Geostatistical learning: Challenges and opportunities. Frontiers in Applied Mathematics and Statistics, 7, 689393.

Hsu, C. W., & Lin, C. J. (2002). A comparison of methods for multiclass support vector machines. IEEE Transactions on Neural Networks, 13, 415–425.

Isaaks E. H., Srivastava, R. M. (1989). Applied geostatistics (Vol. 561). Oxford University Press.

Isaaks, E. (2005). The kriging oxymoron: a conditionally unbiased and accurate predictor. In Quantitative geology and geostatistics (pp. 363–374). Geostatistics Banff 2004.

Jolly, M. K. (1984). The sedimentology and economic potential of the auriferous Middlevlei Reef on Driefontein Consolidated Limited. M.Sc. thesis. University of Johannesburg.

Journel, A. G., & Rossi, M. E. (1989). When do we need a trend model in kriging? Mathematical Geology, 21, 715–739.

Karatzoglou, A., Meyer, D., & Hornik, K. (2006). Support vector machines in R. Journal of Statistical Software, 15, 1–28.

Kasmaee, S., de Raspa, G., Fouquet, C., Tinti, F., Bonduà, S., & Bruno, R. (2019). Geostatistical estimation of multi-domain deposits with transitional boundaries: A sensitivity study for the sechahun iron mine. Minerals, 9, 115.

Kotsiantis, S. B. (2014). Integrating global and local application of naive bayes classifier. International Arab Journal of Information Technology, 11(3), 300–307.

Krige, D. G. (1951). A Statistical approach to some mine valuations and allied problems at the Witwatersrand. M.Sc. thesis, University of Witwatersrand, South Africa.

Krige, D. G. (1981). Lognormal-de Wijsian Geostatistics for ore evaluation (pp. 1–58). South African Institute of Mining and Metallurgy.

Krige, D. G. (1997). A practical analysis of the effects of spatial structure and of data available and accessed, on conditional biases in ordinary kriging. In E. Y. Baafi & N. A. Schofield (Eds.), Geostatistics wollongong 96, fifth international geostatistics congress (pp. 799–810). Kluwer.

Larrondo, P., & Deutsch, C. V. (2005). Accounting for geological boundaries in geostatical modeling of multiple rock types. In O. Leuangthong, & C. V. Deutsch (Eds.), Geostatistics banff 2004, quantitative geology and geostatistics (vol. 14). Springer. https://doi.org/10.1007/978-1-4020-3610-1_1.

Lemaréchal, C. (2012). Cauchy and the gradient method. Doc Math Extra, 251(254), 1–10.

Linardatos, P., Papastefanopoulos, V., & Kotsiantis, S. (2021). Explainable AI: A review of ML interpretability methods. Entropy, 23(1), 1–18.

Lishchuk, V., Koch, P.-H., Ghorbani, Y., & Butcher, A. R. (2020). Towards integrated geometallurgical approach: Critical review of current practices and future trends. Minerals Engineering, 145, 106072.

Madani, N., Maleki, M., & Soltani-Mohammadi, S. (2022). Geostatistical modeling of heterogeneous geoclusters in a copper deposit integrated with multinomial logistic regression: An exercise on resource estimation. Ore Geology Reviews, 150, 105132.

Mallet, J.-L. (1989). Discrete smooth interpolation. ACM Transactions on Graphics (TOG), 8(2), 121–144.

Martin, R., & Boisvert, J. B. (2017). Iterative refinement of implicit boundary models for im- proved geological feature reproduction. Computers & Geosciences, 109, 1–15.

Matheron, G. (1963). Principles of geostatistics. Economic Geology, 58(8), 1246–1266.

McCarthy, T. S. (2006). The witwatersrand supergroup. In M. R. Johnson, C. R. Anhaeusser, & R. J. Thomas (Eds.), The geology of South Africa (Vol. 51, pp. 155–186). Geological Society of South Africa, Johannesburg and Council for Geoscience.

Myers, R. E., Zhou, T., & Phillips, G. N. (1993). Sulphidation in the Witwatersrand goldfields: Evidence from the Middelvlei Reef. Mineralogical Magazine, 57, 395–405.

Nwaila, G. T., Durrheim, R. J., Jolayemi, O. O., Maselela, H. K., Jakaite, L., Burnett, M. S., & Zhang, S. E. (2020). Significance of granite-greenstone terranes in the formation of Witwatersrand-type gold mineralisation—A case study of the Neoarchaean Black Reef Formation, South Africa. Ore Geology Reviews, 121, 103572.

Nwaila, G. T., Frimmel, H. E., Zhang, S. E., Bourdeau, J. E., Tolmay, L. C., Durrheim, R. J., & Ghorbani, Y. (2022b). The minerals industry in the era of digital transition: An energy-efficient and environmentally conscious approach. Resources Policy, 78, 102851.

Nwaila, G. T., Manzi, M. S. D., Zhang, S. E., Bourdeau, J. E., Bam, L. C., Rose, D. H., Maselela, K., Reid, D. L., Ghorbani, Y., & Durrheim, R. J. (2022a). Constraints on the geometry and gold distribution in the Black Reef Formation of South Africa using 3D reflection seismic data and Micro-X-ray computed tomography. Natural Resources Research, 31, 1225–1244.

Nwaila, G. T., Zhang, S. E., Bourdeau, J. E., Negwangwatini, E., Rose, D. H., Burnett, M., & Ghorbani, Y. (2022c). Data-driven predictive modelling of lithofacies and Fe in-situ grade in the Assen Fe ore deposit of the Transvaal Supergroup (South Africa) and implication on the genesis of banded iron formations. Natural Resources Research, 31(5), 2369–2395.

Nwaila, G. T., Zhang, S. E., Frimmel, H. E., Manzi, M. S. D., Dohm, C., Durrheim, R. J., Burnett, M. S., & Tolmay, L. C. (2019). Local and target exploration of conglomerate-hosted gold deposits using machine learning algorithms: A case study of the Witwatersrand gold ores, South Africa. Natural Resources Research, 29, 135–159.

Olea, R. A. (1999). Geostatistics for engineers and earth scientists. Springer.

Ortiz, J. M., & Emery, X. (2006). Geostatistical estimation of mineral resources with soft geological boundaries a comparative study. Journal of the South African Institute of Mining and Metallurgy, 106, 577–584.

Pyrcz, M. J., Jo, H., Kupenko, A., Liu, W., Gigliotti, A. E., Salomaki, T., & Santos, J. (2021). GeostatsPy python package. PyPI, Python Package Index, https://pypi.org/project/geostatspy/.

Rajabinasab, B., & Asghari, O. (2019). Geometallurgical domaining by cluster analysis: Iron ore deposit case study. Natural Resources Research, 28(3), 665–684.

Rennie, J. D., Shih, L., Teevan, J., & Karger, D. R. (2003). Tackling the poor assumptions of Naive Bayes text classifiers. In Proceedings of the twentieth international conference on machine learning (ICML-2003) (pp. 616–623).

Robb, L. J., Davis, D., Kamo, S. L., & Mayer, F. M. (1992). Ages of altered granites adjoining the Witwatersrand basin with implications for the origin of gold and uranium. Nature, 357(6380), 677–680.

Rosenblatt, F. (1961). Principles of neurodynamics: Perceptrons and the theory of brain mechanisms. Spartan Books. https://doi.org/10.1007/978-3-642-70911-1_20

Rossi, M. E., & Deutsch, C. V. (2013). Mineral resource estimation. Springer.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning internal representations by error propagation. In D. E. Rumelhart, J. L. McClelland, & PDP research group (Eds.), Parallel distributed processing: Explorations in the microstructure of cognition: Foundation (Vol. 1). MIT Press.

Sagi, O., & Rokach, L. (2018). Ensemble learning: A survey. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 8(4), e1249. https://doi.org/10.1002/widm.1249

Sanchez, S., & Deutsch, C.V. (2022). Domain delimitation with radial basis functions. In J. L. Deutsch (Ed.), Geostatistics lessons. Retrieved August 10, 2022, from http://www.geostatisticslessons.com/lessons/implicitrbf

Snowden, D. V. (2001). Practical interpretation of mineral resource and ore reserve classification guidelines. In A. C. Edwards. (Ed.), Mineral resource and ore reserve estimation—The AusIMM guide to good practice (Monograph 23, pp. 643–652). Australasian Institute of Mining and Metallurgy, Melbourne.

Talebi, H., Mueller, U., Tolodana Delgado, R., & van den Boofaart, K. G. (2019). Geostatistical simulation of geochemical compositions in the presence of multiple geological units: Application to mineral resource evaluation. Mathematical Geosciences, 51, 129–153.

Vapnik, V. (1998). Statistical learning theory. Springer.

Yunsel, T. Y., & Ersoy, A. (2011). Geological modeling of gold deposit based on grade domaining using plurigaussian simulation technique. Natural Resources Research, 20(4), 231–249.

Zhang, S. E., Bourdeau, J. E., Nwaila, G. T., & Corrigan, D. (2021a). Towards a fully data-driven prospectivity mapping methodology: A case study of the Southeastern Churchill Province, Québec and Labrador. Artificial Intelligence in Geosciences, 2, 128–147.

Zhang, S. E., Nwaila, G. T., Bourdeau, J. E., & Ashwal, L. D. (2021b). Machine learning-based prediction of trace element concentrations using data from the Karoo large igneous province and its application in prospectivity mapping. Artificial Intelligence in Geosciences, 2, 60–75.

Zhang, S. E., Nwaila, G. T., Bourdeau, J. E., Frimmel, H. E., Ghorbani, Y., & Elhabyan, R. (2022). Application of machine learning algorithms to the stratigraphic correlation of Archean shale units based on lithogeochemistry. Journal of Geology, 129(6), 647–672.

Zhang, S. E., Nwaila, G. T., Tolmay, L., Frimmel, H. E., & Bourdeau, J. E. (2020). Intergration of machine learning algorithms with Gompertz curves and kriging to estimate resources in gold deposits. Natural Resources Research, 30, 39–56.

Zuo, R., Xiong, Y., Wang, J., & Carranza, E. J. M. (2019). Deep learning and its application in geochemical mapping. Earth Science Reviews, 192, 1–14.

Acknowledgments

The authors would like to thank Sibanye-Stillwater Ltd. for providing the data used in this study. We thank several anonymous reviewers for their constructive and insightful comments, which have greatly improved the manuscript. We also thank all editors for editorial handling.

Funding

Open access funding is provided by University of the Witwatersrand. This study was supported by a Department of Science and Innovation (DSI)-National Research Foundation (NRF) Thuthuka Grant (Grant UID: 121973) and DSI-NRF CIMERA. We also thank Sibanye-Stillwater Ltd. for their funding through the Wits Mining Institute (WMI).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, S.E., Nwaila, G.T., Bourdeau, J.E. et al. Machine Learning-Based Delineation of Geodomain Boundaries: A Proof-of-Concept Study Using Data from the Witwatersrand Goldfields. Nat Resour Res 32, 879–900 (2023). https://doi.org/10.1007/s11053-023-10159-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11053-023-10159-7