Abstract

Accurate prediction of geological formation tops is a crucial task for optimizing hydrocarbon exploration and production activities. This research conducts a comprehensive comparative analysis of several machine learning approaches for geological formation top prediction within the complex Norwegian Continental Shelf (NCS) region. The study benchmarks the performance of four prominent machine learning models: Support Vector Machine (SVM), K-Nearest Neighbors (KNN), Random Forest (RF), and Multi-Layer Perceptron (MLP) neural network. To facilitate a rigorous assessment, the models are evaluated across two distinct datasets: a dedicated test dataset and an independent blind dataset used for validation. The evaluation criteria quantify the models' predictive accuracy in classifying multiple geological formation tops. Additionally, the study employs the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm as a baseline to contextualize the performance of the machine learning models against a conventional clustering approach. Using two model-agnostic feature importance techniques, Permutation Feature Importance (PFI) and SHapley Additive exPlanations (SHAP), the investigation identifies and ranks the most influential input variables driving the models' predictions. The analysis identifies the MLP neural network as the top-performing approach on the blind validation dataset, where it achieves an accuracy of 0.99, surpassing the other machine learning techniques as well as the DBSCAN benchmark. On the initial test dataset, however, the SVM model attains the best performance, also with an accuracy of 0.99. 
The PFI and SHAP analyses consistently identify depth (DEPT), revolutions per minute (RPM), and hook load (HKLD) as the three most impactful parameters influencing model predictions across the different algorithms. These findings underscore the potential of machine learning methodologies, particularly neural network-based models, to enhance the accuracy of geological formation top prediction within the geologically complex NCS region. However, the study emphasizes the need for further testing on larger datasets to validate the generalizability of the high performance observed. Overall, this research delivers a comparative evaluation of state-of-the-art machine learning techniques, offering insights to guide the selection, development, and real-world deployment of accurate and reliable predictive modeling strategies for hydrocarbon exploration and reservoir characterization in the NCS.

Introduction

The oil and gas industry generates vast amounts of data during operations, and applying machine learning algorithms to process this data has become increasingly important (Elkatatny 2018; Aniyom et al. 2022). Machine learning has been recognized as a promising tool in the oil and gas industry, with applications ranging from improving operational efficiency to predicting geological formations. Accurately predicting geological formation tops is an essential yet challenging task in hydrocarbon exploration and production activities (Mahmoud et al. 2020). Traditional manual interpretation of well logs to pick formation tops is labor intensive, prone to human subjectivity and errors, and unable to handle large datasets efficiently. This highlights the need for an automated and optimized approach. Applying machine learning for real-time prediction of geological formation tops using drilling data is a topic of significant interest in the oil and gas industry (Zhong et al. 2022). Several studies have explored different aspects of this topic, demonstrating the potential of machine learning to improve operational efficiency and reduce risks (Sircar et al. 2021; Losoya et al. 2021).

Al-AbdulJabbar et al. (2018) have developed a novel method for predicting formation tops in real-time that can replace more expensive techniques. Their method leverages drilling mechanics and rate of penetration data to identify lithological changes during drilling accurately. The authors gathered field data from two wells drilled with the same bit size and through the same formations. Data from Well A was used to train and test an artificial neural network (ANN) model (70% training, 30% testing), while data from Well B served as unseen test data. The optimized ANN model with one hidden layer and 20 neurons achieved high correlations of 0.94 and 0.98 on Wells A and B, respectively, demonstrating the method's ability to predict formation tops reliably. A key advantage of this approach is the real-time nature, as no log data processing or cuttings lag is required. By relying solely on low-cost, existing drilling data, formations can be accurately identified instantly without operational delays or expending resources on logs. This study highlights the potential for ANNs and drilling mechanics to enable rapid, precise, and inexpensive top detection during drilling. Mahmoud et al. (2021) developed artificial neural networks (ANN), adaptive neuro-fuzzy inference systems (ANFIS), and fuzzy neural network (FNN) models to predict lithology changes and formation tops during drilling operations. The models were trained on 3162 datasets across six input parameters. After optimization, the models were validated on 1356 datasets from a separate well. The ANN model achieved the highest accuracy, correctly predicting lithology distributions and formation tops for training and testing data over 98% of the time. Compared to ANFIS and FNN, the ANN model showed superior performance as a real-time predictive tool for lithology and formation changes during drilling. 
The study demonstrates the potential of ANN models to enable more informed decision-making and adjustments while drilling through multiple formations.

Vikara and Khanna (2022) developed an innovative framework to generate predictive models using various machine-learning classification algorithms. The goal was to identify specific stratigraphic units in the prolific Midland Basin of West Texas. After testing multiple algorithms, the random forest (RF) model achieved the highest prediction accuracy of 93% on holdout validation data. Notably, the RF model demonstrated exceptional performance in predicting major hydrocarbon-producing zones in the basin. This data-driven approach provides an accurate, cost-effective solution to complement traditional reservoir characterization methods across energy sector applications. Overall, the study by Vikara and Khanna establishes a robust framework leveraging machine learning for optimized subsurface analysis and resource identification. Ziadat et al. (2023) proposed a novel machine-learning approach for real-time detection of drilled formation tops and lithology types using only surface drilling data. They leveraged random forest and decision tree classifiers to develop highly accurate models predicting lithology from a dataset of five complex geological formations. Their methodology included rigorous data collection, preprocessing, exploratory analysis, feature engineering, model development, and hyperparameter tuning. Through comprehensive experiments, they demonstrated over 95% testing accuracy in lithology classification, even on intricate formation schemes. The study highlights the capability of machine learning techniques to enable real-time subsurface lithology prediction solely from surface data. This could significantly enhance drilling efficiency and reduce costs by guiding the optimal, geology-specific selection of drilling parameters in real time without needing downhole measurements. The proposed data-driven methodology provides a broadly generalizable framework to unlock the full potential of surface data for real-time formation characterization.

Ibrahim et al. (2023) developed machine learning models from drilling data to accurately predict lithology and formation tops in real-time. They collected data from two wells in the Middle East and trained Gaussian naive Bayes (GNB), logistic regression (LR), and linear discriminant analysis (LDA) models. The GNB model demonstrated exceptional accuracy in predicting lithology, achieving near-perfect scores. The LR and LDA models also performed well, although LDA misclassified some carbonate/shale formations. During the new data validation, the models maintained high accuracies of 0.96, 0.95, and 0.92 for GNB, LR, and LDA, respectively. Their innovative modeling enables real-time rock type determination while drilling, allowing rapid geosteering decisions. Khalifa et al. (2023) have developed an innovative machine-learning approach for real-time lithology prediction during drilling operations. Using a dataset from the Volve field, they trained models to classify drilling data into three lithology classes—claystone, marl, and sandstone—with remarkable accuracy. Through careful preprocessing, including balancing the class distribution and reducing redundant features, they prepared an unbiased training set. Their best model achieved 95% overall testing accuracy and 98% average precision, demonstrating exceptional predictive performance. To enhance accessibility, they built GeoVision, an easy-to-use web application that allows drilling engineers to utilize the models on-site. This pioneering methodology and software tool enable more informed and rapid drilling decision-making, marking a significant step toward real-time geosteering. With rigorous methodology and testing, Khalifa et al. have set a high benchmark for lithology prediction from drilling data using machine learning. Their innovative integration of ML with drilling engineering promises to transform future drilling operations.

Challenges are involved despite the potential benefits of machine learning in the oil and gas industry. These include the need for high-quality data, the complexity of the algorithms, and the need for skilled personnel to interpret the results (Khalifah et al. 2020; Alsaihati et al. 2021). However, these challenges can be mitigated with continuous technological advancements and increasing adoption of machine learning. This study aims to develop a comprehensive machine-learning framework to accurately and reliably predict formation tops on the complex Norwegian Continental Shelf (NCS) using well-log data. Four main algorithms—support vector machines (SVM), random forest (RF), k-nearest neighbor (KNN), and multi-layer perceptron (MLP)—are implemented, optimized, and rigorously evaluated to determine the most suitable method for this problem. The methodology involves multiple stages. First, the dataset is preprocessed by handling missing values, outliers, and noisy data and applying techniques like normalization. Next, exploratory analysis uncovers patterns and relationships within the data. Optimal features are then extracted using statistical metrics and domain expertise. The cleaned dataset is split into training and test sets for model development and evaluation. The four machine learning algorithms are implemented with appropriate hyperparameters and configurations tailored to the formation top classification task. Models are optimized using techniques like grid search and cross-validation. Evaluation metrics such as accuracy, F1-score, precision, and recall quantify model performance. The best model is selected based on these metrics. Additional techniques like clustering using DBSCAN provide supplementary insights. This framework ensures accurate, reliable, optimized models while comprehensively understanding the data’s underlying structure. 
By comparing multiple algorithms, their relative strengths and weaknesses are analyzed to identify the ideal approach for the NCS. The automated methodology overcomes human subjectivity and inefficiency. Accurate formation top picks have far-reaching impacts. They enhance subsurface geological models, minimize uncertainty, assist in assessing hydrocarbon potential, optimize drilling activities, and improve recovery strategies. This research enables geologists to focus on critical tasks rather than repetitive manual interpretation. The insights can inform data-driven decision-making to unlock value. In conclusion, this study develops an exhaustive machine learning approach for efficient, accurate, and automated formation top classification from well logs on the NCS. The results provide key insights into leveraging artificial intelligence to transform subjective processes into optimized, intelligent systems in the hydrocarbon industry. The methodology and findings significantly advance available techniques for subsurface characterization.

Approach and procedures

For this investigation, the dataset used to predict geological formation tops on the Norwegian Continental Shelf (NCS) was gathered from a trustworthy data source. The dataset comprises a range of variables and features pertinent to predicting formation tops. These features play a vital role in discerning the characteristics and properties of the various formations within the field. To guarantee the quality and reliability of the data, a sequence of preprocessing steps was undertaken, addressing missing values, outliers, and other data quality issues. Furthermore, data normalization or standardization techniques were implemented to ensure uniformity and comparability across the features. The exploratory data analysis (EDA) phase was crucial for comprehending the dataset and extracting insights into its characteristics. Summary statistics, including the mean, median, mode, and standard deviation, were computed to offer an overview of the data's central tendencies, dispersion, and variable distributions. Data visualization techniques, such as histograms, heatmaps, box plots, and correlation matrices, were employed during EDA to scrutinize relationships and patterns within the dataset. These visualizations yielded insights into variable distributions, potential outliers, and feature correlations.

During the EDA phase, the dataset is cleaned by removing or otherwise handling invalid records, in particular those containing missing values (NaN) or non-numeric entries. Identifying such records early is crucial. The default approach is to remove them, which keeps the dataset consistent and prevents invalid data from influencing subsequent analysis and modeling. After removal, the dataset comprises only valid numerical values. Nevertheless, the decision to remove data should be made judiciously and be grounded in a thorough understanding of the dataset. If the volume of NaN or non-numeric data is substantial, or if the affected records contain valuable information, alternative strategies such as imputation may be explored. Imputation fills in missing values with reasonable estimates and thereby preserves data completeness for analysis and modeling.

Feature selection or extraction techniques were implemented to pinpoint the most pertinent features for real-time prediction of geological formation tops. This process included scrutinizing the relationship between the features and the target variable using statistical measures or domain knowledge. The identified features were subsequently used as inputs for the machine learning models. The study incorporated several machine learning algorithms: multi-class support vector machines (SVM), random forest, k-nearest neighbors (KNN), and multilayer perceptron (MLP). Each algorithm was instantiated with configurations and hyperparameters tailored to the formation top prediction task. For example, the SVM algorithm employed a selected kernel and appropriate regularization parameters, while random forest had a designated number of trees and splitting criteria. Accuracy, precision, recall, and F1-score were computed to appraise the predictive performance of each model. These metrics furnished a quantitative gauge of model performance, enabling comparisons between the machine learning algorithms. The experimental setup used a train-test split: a portion of the dataset was allocated for training the models, while the remainder was used for testing and assessing their performance. Cross-validation techniques might have been employed to validate the models' generalizability. Furthermore, statistical analyses could have been conducted to validate the results or compare the performance of the various machine learning models. These analyses would offer additional insights into the significance of the findings and the reliability of the predictive models. 
The materials and methods outlined in this study were designed to support the robustness, reproducibility, and validity of the real-time geological formation top prediction models built with the various machine learning algorithms. Integrating exploratory data analysis (EDA) techniques, data preprocessing procedures, diverse machine learning models, and evaluation metrics constituted a comprehensive framework, facilitating precise and dependable predictions of geological formation tops in the NCS.
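
The four evaluation metrics named above can be computed as in the following minimal sketch. It assumes scikit-learn and macro averaging over classes; the averaging scheme is an assumption, since the text does not state which one was used. The label vectors are made-up values for illustration only.

```python
from sklearn.metrics import accuracy_score, precision_recall_fscore_support


def summarize_classification(y_true, y_pred):
    """Return accuracy plus macro-averaged precision, recall, and F1-score."""
    accuracy = accuracy_score(y_true, y_pred)
    # Macro averaging (an assumption here) weights every class equally.
    precision, recall, f1, _ = precision_recall_fscore_support(
        y_true, y_pred, average="macro", zero_division=0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical encoded formation-top labels (three classes).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
scores = summarize_classification(y_true, y_pred)
print(scores)
```

With imbalanced formation classes, macro and weighted averages can differ noticeably, so the averaging choice is worth reporting alongside the scores.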

The flowchart in Fig. 1, "Modeling Geological Formation Tops Prediction in the NCS: A Machine Learning Approach using Multi SVM, Random Forest, KNN, MLP", offers a systematic guide for constructing and assessing the predictive models. The process begins with importing the necessary libraries and loading the dataset. Subsequently, the data are divided into a training set and a blind set for model training and evaluation. Exploratory data analysis (EDA) is then executed to glean insights from the dataset, followed by multivariate data analysis to unveil more intricate relationships. The workflow includes essential data preprocessing tasks: handling missing values, treating outliers, and resolving duplicate records. Collinear independent variables are eliminated to prevent potential issues in model performance. Scaling and normalization techniques are then employed to ensure comparability among features. The feature and output matrices for model training are defined by selecting specific inputs from the dataset. In this case, the chosen feature inputs are DEPT, ROP5, HKLD, SWOB, TQA, RPM, BPOS, BVEL, SPPA, TFLO, TRPM_RT, Stick_RT, and ECD_MWD from the measurement while drilling (MWD) data, and the target is GFT. The details of the real-time MWD records employed in this study are outlined in Table 1. The dataset is then divided into training and test sets, with a test size of 0.2 (20%), a training size of 0.8 (80%), and a random state of zero for reproducibility. Four machine learning algorithms (KNN, Random Forest, SVM, and MLP) are applied to construct predictive models. Hyperparameter tuning is executed to optimize the performance of each model. The models are evaluated using appropriate metrics, and the top-performing models make predictions on the blind dataset. 
Furthermore, clustering analysis using the DBSCAN algorithm is applied to uncover inherent groupings within the data, with hyperparameter tuning used to optimize the clustering. Visualizations of the distribution of the various formation tops are created to extract insights, which concludes the modeling and evaluation process. In summary, this systematic approach supports the creation of precise formation top prediction models while providing a thorough understanding of the dataset.
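
The split, scaling, and tuning steps described above can be sketched as follows. This is a minimal illustration assuming scikit-learn, not the study's actual code. The data are synthetic stand-ins for the MWD features and the GFT target, and the hyperparameter grids are hypothetical. Only the 80/20 split and the random state of zero come from the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in data: 3 well-separated classes, 4 numeric features.
rng = np.random.default_rng(0)
n_per_class = 100
X = np.vstack(
    [rng.normal(loc=3.0 * k, scale=1.0, size=(n_per_class, 4)) for k in range(3)]
)
y = np.repeat(np.arange(3), n_per_class)

# 80/20 split with random_state=0, as stated in the text.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Hypothetical (small) hyperparameter grids, one per algorithm.
candidates = {
    "KNN": (KNeighborsClassifier(), {"kneighborsclassifier__n_neighbors": [3, 5]}),
    "RF": (
        RandomForestClassifier(random_state=0),
        {"randomforestclassifier__n_estimators": [50, 100]},
    ),
    "SVM": (SVC(), {"svc__C": [1.0, 10.0]}),
    "MLP": (
        MLPClassifier(max_iter=500, random_state=0),
        {"mlpclassifier__hidden_layer_sizes": [(16,), (32,)]},
    ),
}

test_accuracy = {}
for name, (estimator, grid) in candidates.items():
    # Scaling lives inside the pipeline so it is fitted on training folds only.
    pipeline = make_pipeline(StandardScaler(), estimator)
    search = GridSearchCV(pipeline, grid, cv=3)
    search.fit(X_train, y_train)
    test_accuracy[name] = search.score(X_test, y_test)

print(test_accuracy)
```

Keeping the scaler inside the pipeline is a design choice in this sketch: it avoids leaking test-set statistics into cross-validation, and the fitted pipeline can later be applied unchanged to a blind dataset.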

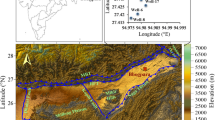

Dataset utilized in the study

The dataset employed in the modeling process comprises two distinct sets. The first serves as the training set, while the second is a blind set used to validate the model trained on the first. The data consist of real-time drilling records from two wells, denoted Wells A and B. Well A serves as the training dataset, while Well B functions as the blind dataset for validation. The dataset comprises 14 measurement while drilling (MWD) parameters: DEPT, ROP5, BPOS, BVEL, SWOB, HKLD, TQA, RPM, TFLO, TRPM_RT, SPPA, ECD_ARC, Stick_RT, and GFT. A detailed breakdown of these parameters is presented in Table 1. Table 2 shows descriptive statistics summarizing the input features and the target variable in Dataset A, and Table 3 provides the same statistics for Dataset B.

Preprocessing the dataset

The preprocessing phase, aimed at eliminating records that contain NaN values or no data, involves a series of steps. Initially, the dataset is examined to identify the location and quantity of NaN values. Subsequently, the NaN values are assessed, considering their pattern and their impact on the data and the modeling objective. If the NaN values are deemed insignificant or cannot be resolved through suitable filling techniques, the rows or columns containing them are removed using the ‘.dropna()’ method. Following the elimination, the dataset is reassessed to ensure that the quantity and distribution of the remaining data are still sufficient and representative. It is crucial to evaluate the impact of NaN removal on the class balance or target distribution, and to verify the dataset index after the removal. Because eliminating NaN data changes the quantity and representativeness of the available data, the decision should carefully consider the dataset's context and characteristics.
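
The steps above can be sketched with pandas as follows. The column names follow the parameter list in this section, but the values are made up for illustration, and resetting the index is one possible way to "verify the dataset index" after removal.

```python
import numpy as np
import pandas as pd

# Hypothetical miniature MWD table; values are illustrative only.
df = pd.DataFrame(
    {
        "DEPT": [1000.0, 1000.5, np.nan, 1001.5, 1002.0],
        "RPM": [120.0, np.nan, 118.0, 119.0, 121.0],
        "GFT": [0, 0, 1, 1, 1],
    }
)

# Step 1: locate and count NaN values per column.
nan_counts = df.isna().sum()

# Step 2: record the target distribution before removal.
class_counts_before = df["GFT"].value_counts().sort_index()

# Step 3: drop rows with any NaN and rebuild a contiguous index.
clean = df.dropna().reset_index(drop=True)

# Step 4: re-check the target distribution after removal.
class_counts_after = clean["GFT"].value_counts().sort_index()

print(nan_counts, class_counts_before, class_counts_after, sep="\n\n")
```

Comparing `class_counts_before` with `class_counts_after` shows whether dropping rows has shifted the class balance of the target.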

Identifying outliers using automated methods

Outliers are anomalous points within a dataset. They do not fit within the normal statistical distribution of the dataset and can occur for various reasons, such as sensor and measurement errors, poor data sampling techniques, and unexpected events. Within MWD logs, outliers can occur due to washed-out boreholes, tool and sensor issues, rare geological features, and issues in the data acquisition process. These outliers must be identified and investigated early in the workflow, as they can result in inaccurate predictions by machine learning models. Several unsupervised machine learning methods can be used to identify anomalies within a dataset. This study considers three common methods: Isolation Forest (IF), One Class SVM (SVM), and Local Outlier Factor (LOF). Table 4 summarizes the outcomes of applying these three methods to the training dataset (Dataset A) and the blind dataset (Dataset B). For each dataset and method, it lists the number of anomalous and non-anomalous values identified, together with their total. This overview enables a comparison of the outlier elimination methods across the two datasets. The performance of each method on surface weight on bit versus torque cross-plots is shown in Fig. 2.

The IF method provides the best result, followed by SVM and LOF. The first two methods remove most outliers on the plot's right-hand side. Figure 3 presents an analysis of the training dataset before and after outlier removal using the IF method. Subfigure (a) displays the boxplot of the training data before outlier removal, providing insights into the distribution and the potential presence of outliers. Subfigure (b) shows the boxplot after applying the IF outlier removal method, highlighting the impact on the dataset's distribution. Removing outliers contributes to a more refined representation of the training data. Subfigure (c) supplements the analysis with a count of the outliers removed, categorized by geological formation top name and outlier detection method. This breakdown shows which formations are affected and how effectively the IF method eliminates outliers from the dataset. The improved data quality resulting from IF outlier removal enhances the robustness and reliability of subsequent analyses and modeling efforts.
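
The comparison of the three detectors can be sketched as follows, assuming scikit-learn. The two synthetic columns merely stand in for a SWOB versus TQA cross-plot, and the contamination and `nu` values are hypothetical settings, not those of the study.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor
from sklearn.svm import OneClassSVM

# Synthetic two-column data: a dense cloud plus a few far-away points.
rng = np.random.default_rng(0)
inliers = rng.normal(loc=[10.0, 5.0], scale=[1.0, 0.5], size=(300, 2))
far_points = rng.uniform(low=[20.0, 10.0], high=[30.0, 15.0], size=(15, 2))
X = np.vstack([inliers, far_points])

# Hypothetical settings: each detector is asked to flag roughly 5% of points.
detectors = {
    "IF": IsolationForest(contamination=0.05, random_state=0),
    "SVM": OneClassSVM(nu=0.05, gamma="scale"),
    "LOF": LocalOutlierFactor(n_neighbors=20, contamination=0.05),
}

# fit_predict returns +1 for inliers and -1 for detected anomalies.
labels = {name: det.fit_predict(X) for name, det in detectors.items()}
anomaly_counts = {name: int((lab == -1).sum()) for name, lab in labels.items()}

# Keep only the rows that Isolation Forest considers non-anomalous.
X_clean = X[labels["IF"] == 1]

print(anomaly_counts, X_clean.shape)
```

Tabulating `anomaly_counts` per method gives the kind of anomalous versus non-anomalous summary reported in Table 4.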

Impacts of outlier removal on detection, range determination, and confidence

Outlier removal can have significant impacts that require careful consideration when developing machine learning models. Here is a summary of the outlier removal effects on detection, range determination, and confidence:

- Three outlier removal methods were tested: Isolation Forest (IF), One Class SVM (SVM), and Local Outlier Factor (LOF). They were applied on both the training dataset (Dataset A) and the blind validation dataset (Dataset B).
- For the training set (Dataset A), IF removed 24,778 outliers, SVM removed 24,777 outliers, and LOF removed 21,886 outliers. So IF and SVM detected a similar number of outliers, more than LOF.
- For the blind dataset (Dataset B), IF removed 14,494 outliers, SVM removed 14,494 outliers, and LOF removed 13,013 outliers. Again, IF and SVM detected nearly the same number of outliers, while LOF found fewer outliers.
- Visual analysis of SWOB vs TQA plots before and after IF outlier removal (Figs. 2 and 3) shows that applying IF helps eliminate most outliers, resulting in a cleaner distribution. This was the motivation to select IF for outlier elimination.
- By removing outliers, the data distribution becomes less skewed and more refined. This enhances the robustness and reliability of subsequent analysis and modeling using the "cleaned" dataset after IF outlier removal.

In summary, Isolation Forest (IF) was found to perform well in identifying and eliminating outliers from both the training and blind datasets. Applying IF outlier removal led to improved data quality and distributions for further analysis. This highlights the importance of detecting and handling outliers prior to applying machine learning algorithms. Therefore, utilizing multiple detection techniques, validating across datasets, inspecting distribution shifts visually, and incorporating domain expertise improves confidence that suitable outliers were identified and handled.

Encoding of geological formation tops

Encoding the categorical target variable, representing geological formation tops, is essential for modeling. The original formation names have been mapped to numerical values for computational efficiency. Table 5 presents the geological formation dictionary used for encoding:

In the dataset, each instance of the geological formation top is represented by the corresponding formation number. This encoding allows for seamless integration of the target variable into machine learning models, ensuring compatibility with various algorithms. Using numerical representations enhances computational efficiency and aids in interpreting model predictions. This encoding scheme will be employed throughout the subsequent modeling and analysis phases, providing a standardized and efficient representation of geological formation tops.
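
A dictionary-based encoding of this kind can be sketched as follows. The formation names appear elsewhere in this paper, but the numeric codes below are hypothetical placeholders; the actual mapping is the one listed in Table 5.

```python
import pandas as pd

# Hypothetical codes for illustration; the real dictionary is given in Table 5.
formation_to_code = {"Balder": 0, "Sele": 1, "Lista": 2, "Tor": 3, "Hod": 4}
code_to_formation = {code: name for name, code in formation_to_code.items()}

# Made-up sequence of formation-top names along a well.
tops = pd.Series(["Balder", "Sele", "Sele", "Lista", "Tor", "Hod"], name="formation")

# Encode names as integers for the classifiers.
gft = tops.map(formation_to_code)
if gft.isna().any():
    raise ValueError("Found a formation name missing from the dictionary")

# Decode predictions back to names for interpretation.
decoded = gft.map(code_to_formation)
print(gft.tolist(), decoded.tolist())
```

Keeping the inverse dictionary alongside the forward one makes it straightforward to report model predictions by formation name rather than by code.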

Feature selection

Feature selection in machine learning modeling often involves utilizing heatmaps to examine the correlation among independent variables. The initial step entails computing the correlation matrix for the independent variables within the dataset. Subsequently, the correlation results are presented visually through a heatmap (see Fig. 4), where bright colors represent high correlation levels and dark colors indicate low correlation levels. At this juncture, pairs of variables exhibiting substantial correlation, typically surpassing a predetermined threshold, are identified. Such a high correlation signifies redundancy in information. Following this, the task is to determine which variables should be eliminated within the identified pairs. This decision-making process considers factors such as domain relevance, model interpretation, and the contribution of variables to the overall model. Variables deemed more crucial or informative are retained, while those with lower or less significant contributions are excluded.
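
The correlation screening described above can be sketched as follows, assuming pandas and NumPy. The three columns are synthetic stand-ins, and the 0.9 threshold is an assumption, since the text does not state the value used.

```python
import numpy as np
import pandas as pd

# Synthetic features: HKLD is built to track DEPT closely, RPM is independent noise.
rng = np.random.default_rng(0)
dept = np.linspace(1000.0, 2000.0, 200)
features = pd.DataFrame(
    {
        "DEPT": dept,
        "HKLD": 0.05 * dept + rng.normal(0.0, 1.0, 200),
        "RPM": rng.normal(120.0, 5.0, 200),
    }
)

# Absolute Pearson correlation matrix of the independent variables.
corr = features.corr().abs()

# Keep only the strict upper triangle so each pair is listed once.
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))

threshold = 0.9  # assumed cut-off for "substantial" correlation
high_pairs = [
    (row, col, float(upper.loc[row, col]))
    for row in upper.index
    for col in upper.columns
    if upper.loc[row, col] > threshold
]
print(high_pairs)
```

The `corr` matrix is what a heatmap would display, for example through seaborn's `heatmap` function. Which member of each flagged pair to drop remains a judgment call based on domain relevance, as the text notes.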

Referring to Fig. 4, the attributes exhibiting the largest absolute correlations are HKLD, DEPT, TQA, and ECD_MWD. These features demonstrate strong correlations with the target variable, GFT. After the redundant variables are removed, the machine learning model is retrained using the selected feature subset, and its performance is evaluated. It should be emphasized that heatmaps and correlations only provide an initial overview, and additional steps such as statistical testing or other feature selection methods may be required to validate the feature selection decisions. In this modeling process, the input variables are DEPT, ROP5, HKLD, SWOB, TQA, RPM, BPOS, BVEL, SPPA, TFLO, TRPM_RT, Stick_RT, and ECD_MWD, while the output (target) variable is GFT. More details about the separate feature and target matrices are shown in Fig. 5.

The stacked bar chart in Fig. 5 shows the correlation coefficients between the input features and the target variable. The features are sorted from highest to lowest mean correlation on the x-axis. Hook load has the strongest positive correlation with the target variable, with an average correlation coefficient of around 0.8, suggesting that it could serve as a key predictive feature. In addition, bit depth, torque, and equivalent circulating density display moderately strong positive correlations on average, with coefficients ranging from 0.6 to 0.7. These three features also appear to have good predictive relationships with the target. Bottom turbine revolutions and total pump flow demonstrate weaker but still positive correlations of around 0.4–0.5, meaning they may retain some useful information. The remaining features, such as traveling block velocity and RPM, exhibit low or negative average correlations and likely have minimal or no predictive relationship with the target. In summary, the analysis indicates that hook load, bit depth, torque, and equivalent circulating density should be the primary features for modeling, while bottom turbine revolutions and total pump flow may provide secondary signals, and the remaining features can likely be excluded from the predictive modeling. A multivariate perspective on the relationships between the selected input features and the target geological formation tops is provided by the pair-plot in Fig. 6. Each subplot depicts the two-dimensional distribution of a feature pair, with points colored by formation top class. Distinct clustering by class is observed for bit depth, equivalent circulating density, hook load, and torque, indicating their efficacy in discriminating between formation tops. 
Based on the diagonal patterns, strong positive correlations are evident between bit depth and equivalent circulating density, between bit depth and hook load, and between equivalent circulating density and hook load. RPM exhibits significant overlap between multiple classes, implying limited differentiation capability. The pair-plot enables an assessment of the relevance and interrelationships of the selected features in predicting the target formation tops. These insights guide algorithm selection and parameter tuning to maximize classification performance.

Several insights can be extracted from Fig. 6:

- There is a clear separation between many of the formation tops based on the variable pairs. For example, the Hod and Lista formations (light green and purple points) separate from other tops on axes like DEPT vs SWOB.
- Some formations demonstrate more compact, concentrated clusters (e.g., Sele in red), while others show greater dispersion (e.g., Tor in dark green). This indicates heterogeneity within formations.
- DEPT, ECD_MWD, HKLD, and TQA display distinct trends and clustering by formation top, suggesting they provide good discrimination. Others, like RPM, show a high overlap between classes.
- Based on the diagonal clusters, strong positive correlations are visible between DEPT and ECD_MWD, DEPT and HKLD, and ECD_MWD and HKLD. This is expected due to the direct relationships between these features.
- Looking at the DEPT vs. ECD_MWD subplot, we see that points belonging to the Hod formation (light green) cluster in the upper left region while points from the Lista formation (purple) fall in the lower right area. This indicates that the Hod formation tends to have higher depth (DEPT) and lower equivalent circulating density (ECD_MWD) than the Lista formation.
- In the DEPT vs. SWOB plot, the cloud of points corresponding to the Sele formation (red) occupies a narrow range of low surface weight on bit (SWOB) values across depths, suggesting consistent rock strength. In contrast, the Balder formation points (black) are more dispersed in SWOB for a given depth, implying greater heterogeneity.
- For TQA vs. RPM, we see extensive overlap between many formations at lower torque (TQA) values. But at higher torque, formations like Ty (orange) and Draupne (blue) separate from others, likely due to differences in rock properties that impact drilling torque requirements.
- The HKLD vs. SPPA plot exhibits distinct clustering by formation top but also some overlap between formations with similar compressive strengths that dictate the hook-load (HKLD) and pump pressure (SPPA) relationship during drilling.
- Comparing SWOB vs. HKLD and SWOB vs. SPPA, we see diagonal patterns indicating a positive correlation between these features. Higher weight on the bit leads to higher hook load and pump pressure.

Based on a visual examination of the pair plot in Fig. 6, there appear to be some linear relationships between certain parameters:

- Depth (DEPT) and Equivalent Circulating Density (ECD_MWD) show a strong positive linear correlation, as evidenced by the diagonal elongated cluster in their subplot. As depth increases, ECD also increases.
- Similarly, Depth (DEPT) and Hookload (HKLD) display a positive linear relationship, with increasing depth associated with higher hookload values.
- Hookload (HKLD) and Pump Pressure (SPPA) also seem to have an approximate positive linear association, though the relationship looks weaker.

In contrast, many of the other parameter combinations do not demonstrate strong linear relationships:

- The variables Surface Weight on Bit (SWOB) and Depth (DEPT) do not appear to have a clear linear correlation, with substantial scatter in their subplot.
- Revolution per Minute (RPM) vs Torque (TQA) also does not show a definitive linear trend, with overlapping classes spanning a wide range of values.
- Other pairs, like DEPT vs. SWOB, TQA vs. SPPA, etc., lack distinct linear patterns.

In addressing the inherent challenges posed by the presence or absence of linear relationships between parameters in machine learning, this study employs a diverse set of models, including support vector machines (SVM), random forests (RF), k-nearest neighbors (KNN), and multilayer perceptron (MLP). The intricate balance between linear and nonlinear relationships in the data is effectively managed by leveraging these diverse algorithms. The risk of overfitting, particularly prevalent in strong linear associations, is mitigated by the ensemble nature of Random Forests, preventing fixation on specific patterns. Similarly, support vector machines and multilayer perceptron models excel in handling complex, nonlinear relationships. By incorporating k-nearest neighbors, the models collectively reduce underfitting concerns, ensuring a nuanced capture of underlying data intricacies. This comprehensive approach not only addresses the challenges associated with linear and nonlinear patterns but also results in the highest accuracy in prediction across various scenarios.

Splitting training dataset into training and test set

Using the train_test_split function from sklearn.model_selection, we partition the dataset into training and test sets, with the test set comprising 20% of the data. During this partitioning, the random_state parameter is set to a fixed value (0) so that the split is reproducible and model outcomes do not vary between runs; leaving it unset would produce a different split each time.
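The split described above might look like the following sketch. The feature matrix and labels here are synthetic placeholders (13 inputs and 19 classes are taken from the text; the sample count is arbitrary), and only `test_size=0.2` and `random_state=0` come from the description.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 13))     # synthetic stand-in for the 13 drilling inputs
y = rng.integers(0, 19, size=1000)  # synthetic stand-in for the GFT class labels

# Hold out 20% of the rows for testing; a fixed seed makes the split repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Repeating the call with the same seed returns the same partition.
X_train_again, _, _, _ = train_test_split(X, y, test_size=0.2, random_state=0)

print(X_train.shape, X_test.shape)
```

For strongly imbalanced classes, passing `stratify=y` is a common option that keeps class proportions similar in both parts; whether the study used it is not stated.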

Dataset standardization

Standardizing a dataset is an important step in machine learning modeling. This process, performed here with the StandardScaler class from scikit-learn, converts the variables in the dataset to a consistent scale, thereby eliminating scale-related discrepancies that might impact model performance. Such discrepancies can adversely affect algorithms that are sensitive to scale, such as those relying on distance computations or gradient-based optimization. StandardScaler transforms each variable to have a mean of zero and a standard deviation of one, ensuring uniform scaling across all variables. This standardization facilitates faster algorithm convergence and enhances overall model stability. It also simplifies interpretation for models with coefficients, because coefficients on standardized variables are directly comparable. Finally, standardization prevents variables with large numeric ranges from dominating the model.
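A minimal sketch of this step, on synthetic data with two deliberately mismatched scales, is given below. It assumes the common practice of fitting the scaler on the training portion only and reusing those statistics for the test portion; the text does not state which rows the scaler was fitted on.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Two synthetic features on very different scales (values are illustrative only).
X_train = rng.normal(loc=[2000.0, 5.0], scale=[500.0, 0.5], size=(200, 2))
X_test = rng.normal(loc=[2000.0, 5.0], scale=[500.0, 0.5], size=(50, 2))

scaler = StandardScaler()
X_train_std = scaler.fit_transform(X_train)  # learn mean and std from training data
X_test_std = scaler.transform(X_test)        # reuse the training statistics

# StandardScaler computes z = (x - mean) / std with the population standard deviation.
manual = (X_train - X_train.mean(axis=0)) / X_train.std(axis=0)

print(X_train_std.mean(axis=0).round(6), X_train_std.std(axis=0).round(6))
```

The test set is transformed but never used for fitting, so its standardized mean and standard deviation will be close to, but not exactly, zero and one.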

Classification algorithms and parameter tuning

Optimal tuning of parameters holds a crucial role in achieving high-accuracy results when employing support vector machines (SVM), random forests (RF), and k-nearest neighbors (KNN). Each classifier entails distinct tuning steps and parameters. A range of values was systematically tested for each classifier to determine the optimal parameters, and the parameters resulting in the highest overall classification accuracy were identified. In this study, the classified results obtained under the optimal parameters for each classifier were utilized to assess and compare the performance of the classifiers.

Support vector machine (SVM)

In land cover classification studies, as highlighted by Knorn et al. (2009) and Shi and Yang (2015), the radial basis function (RBF) kernel of the support vector machine (SVM) classifier is commonly employed due to its demonstrated good performance. Accordingly, we utilized the RBF kernel to implement the SVM algorithm. Two crucial parameters must be set when applying the SVM classifier with the RBF kernel: the optimal parameters of cost (C) and the kernel width parameter (\(\gamma\)) (Qian et al. 2015; Ballanti et al. 2016). The C parameter determines the permissible level of misclassification for non-separable training data, allowing for the adjustment of the rigidity of the training data (Li et al. 2014). On the other hand, the kernel width parameter (\(\gamma\)) influences the smoothness of the shape of the class-dividing hyperplane (Melgani and Bruzzone 2004). Larger values of C may lead to an overfitting model (Ghosh and Joshi 2014), while an increase in the γ value affects the shape of the class-dividing hyperplane, potentially impacting classification accuracy results (Huang et al. 2002). In line with the approach outlined by Li et al. (2014) and validated for our dataset through pretesting, this study explored three values of C (1, 5, 10) to identify the optimal parameters for the SVM classifier.

Random forest (RF)

To implement random forest (RF), it is necessary to configure two parameters: the number of trees (ntree) and the number of features considered in each split (mtry). Numerous studies have indicated that satisfactory outcomes can be attained using default parameters (Duro et al. 2012). However, as highlighted by Liaw and Wiener (2002), a higher number of trees can yield a more stable result in variable importance. Additionally, Breiman (2001) mentioned that exceeding the required number of trees might be unnecessary, but it does not adversely affect the model. Moreover, Feng et al. (2015) suggested that RF could achieve accurate results with ntree = 200. Regarding the mtry parameter, many studies opt for the default value mtry = \(\sqrt{p}\), where p is the number of predictor variables (Duro et al. 2012). However, in this study, to identify the optimal RF model for classification, a range of values for both parameters was systematically tested and evaluated: ntree = 100, 200, 500, and 1000; mtry = 1 to 10 with a step size of 1.

K-nearest neighbor (KNN)

The KNN approach is a nonparametric method that originated in the early 1970s for statistical applications (Franco Lopez et al. 2001). The fundamental principle behind KNN is locating a group of k samples in the calibration dataset closest to unknown samples, typically determined based on distance functions. From these k samples, the label (class) of unknown samples is determined by calculating the average of the response variables, representing the class attributes of the k-nearest neighbors (Akbulut et al. 2017; Wei et al. 2017). Consequently, the value of k plays a crucial role in the performance of KNN, serving as the key tuning parameter (Qian et al. 2015). The parameter k was determined through a bootstrap procedure. This study explored k values ranging from 1 to 20 to identify the optimal k value for all training sample sets.

Results

Comparative analysis of machine learning models

Various machine learning algorithm models are available when predicting geological formation tops or lithology in hydrocarbon reservoir exploration and production. The multilayer perceptron (MLP) proves beneficial in cases of high complexity or nonlinear relationships between input features and output targets. Random forest is well-suited for data with independent features or intricate interactions, offering class probability estimates and insights into significant features. K-nearest neighbor (KNN) presents an easily implementable and effective option for nonlinear scenarios, though it may be inefficient for large datasets or those with unbalanced class distributions. Support vector machine (SVM) is apt for high dimensionality and clear class separation datasets. It is important to note that no single algorithm model universally attains the highest or best accuracy in this context. Performance hinges on data characteristics, problem complexity, and appropriate parameter settings. Thus, it is advisable to undertake experiments and cross-validation to assess the relative performance of each model within the specific parameters of the given scenario.

The KNN classifier

In the KNN classifier, the algorithm classifies an object based on the class attributes of its k-nearest neighbors. Therefore, the k value is a crucial tuning parameter for the KNN algorithm. In this study, we conducted tests with k values (3–12) for nearest neighbors and explored two weight options (uniform and distance), as illustrated in Table 6. The optimal parameter selection for the KNN classifier involves using training datasets to assess the performance with different k values. Based on the conducted tuning experiments, the parameter configuration yielding the best results consists of setting the k to 3, with the weight parameter set to distance. Table 6 displays the outcomes of a hyperparameter tuning experiment using the k-nearest neighbors (KNN) algorithm through GridSearchCV. The investigation explored the impact of two key hyperparameters: the number of neighbors (param_n_neighbors) and the weight function (param_weights) employed in the KNN algorithm. Different configurations were tested in the "param_n_neighbors" column, representing the number of neighbors considered during predictions. The "param_weights" column indicates the weight function used in the KNN algorithm, with "uniform" suggesting an equal contribution from all neighbors and "distance" implying that closer neighbors have more influence on predictions.

The "mean_test_score" column represents the average score of the model on the held-out cross-validation folds for a given set of hyperparameters. Higher scores indicate superior model performance. The "std_test_score" column denotes the standard deviation of those scores across folds, providing insight into the model's consistency. The findings reveal that the most successful configuration involves three neighbors with distance weighting, achieving a mean test score of approximately 99.30%. Configurations with 6 and 9 neighbors closely follow, both using distance weighting. Notably, distance-weighted configurations consistently outperform those with uniform weighting. The results suggest that a smaller number of neighbors, particularly 3, coupled with distance weighting, leads to optimal performance for this KNN classifier and dataset. Standard deviations in the "std_test_score" column are relatively low, indicating consistent model performance across different folds. These insights are useful for configuring KNN models in similar contexts, emphasizing the significance of tuning the number of neighbors and the weighting scheme for enhanced classification accuracy.
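The tuning experiment summarized in Table 6 could be reproduced in outline as follows. This is a sketch on a synthetic dataset from `make_classification`, so the scores and the winning configuration will differ from the paper's; the grid (k from 3 to 12, uniform and distance weights) follows the text, while the fold count of 5 is an assumption.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier

# Synthetic placeholder data: 13 features, 4 classes.
X, y = make_classification(
    n_samples=400, n_features=13, n_informative=8, n_classes=4, random_state=0
)

param_grid = {
    "n_neighbors": list(range(3, 13)),   # k = 3 ... 12
    "weights": ["uniform", "distance"],  # equal vote vs. closer neighbors count more
}
search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

results = search.cv_results_  # holds the columns discussed in the text
best = search.best_params_
best_row = search.best_index_
print(best, results["mean_test_score"][best_row], results["std_test_score"][best_row])
```

Each entry of `mean_test_score` is an average over the validation folds, which is why a separate test set is still needed for a final performance estimate.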

Figure 7 shows receiver operating characteristic (ROC) curves for the multi-class classification problem. The ROC curve is a useful tool for evaluating the performance of a classifier, as it shows the trade-off between true positive rate (TPR) and false positive rate (FPR) at different classification thresholds. The curves show that the classifier performs very well for all classes, with AUC values of 0.99 or higher throughout (classes 13 and 14 are reported at 0.99). This means that the classifier can accurately identify positive examples (i.e., examples belonging to the target class) while minimizing the number of false positives (i.e., examples incorrectly classified as belonging to the target class).

One way to interpret the ROC curve is to imagine a classifier that is used to detect disease. The TPR is the proportion of diseased patients correctly identified by the classifier, while the FPR is the proportion of non-diseased patients incorrectly identified as diseased. A high TPR means the classifier is good at identifying diseased patients, while a low FPR implies that the classifier avoids false positives. In Fig. 7, the ROC curve for each class is close to the top-left corner of the graph, which means the classifier can achieve a high TPR while maintaining a low FPR. For example, class 0 has an AUC of 0.99. In the disease analogy, this means that a randomly chosen diseased patient receives a higher score than a randomly chosen non-diseased patient about 99% of the time; it does not by itself mean that 99% of patients are classified correctly at a given threshold. Overall, the ROC curves in the figure show that the classifier performs very well. It can accurately identify positive examples while minimizing the number of false positives, which is an important goal for many classification tasks.
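The AUC for one class in a one-vs-rest setting can be read as the fraction of (positive, negative) pairs that the classifier ranks in the right order. The sketch below computes it directly from that definition; the function name `pairwise_auc` and the scores are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def pairwise_auc(pos_scores, neg_scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair.
    """
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

# Scores the classifier assigns to "belongs to the target class".
pos = [0.9, 0.8, 0.4]  # examples that truly belong to the class
neg = [0.7, 0.3, 0.2]  # examples from all other classes

auc = pairwise_auc(pos, neg)  # 8 of the 9 pairs are ordered correctly
print(round(auc, 3))
```

Only the pair (0.4, 0.7) is mis-ordered here, so the AUC is 8/9. For real data, `sklearn.metrics.roc_auc_score` gives the same quantity far more efficiently.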

The diagonal elements of the confusion matrix represent the number of correct predictions for each class. The off-diagonal elements represent the number of incorrect predictions. For example, the entry in row 0, column 1, represents the number of examples incorrectly predicted as class 1, even though they belonged to class 0. The accuracy of the KNN model in the figure is 99.9%, which means that it correctly predicted the class of 99.9% of the examples in the test set. However, accuracy can be misleading for multi-class classification problems, especially when the classes are imbalanced. For example, if a single class dominates the test set, a model can achieve a high accuracy score by simply predicting that class for all examples, even though it never identifies the rare classes. A better way to evaluate the performance of the KNN model is to look at the precision, recall, and F1 score for each class. These metrics are more robust to class imbalance and provide a more complete picture of the model's performance (Figs. 8 and 9).
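The point about accuracy under class imbalance can be made concrete with a small worked example. The labels below are invented (95 examples of one class, 5 of another) and the helper `per_class_metrics` is a hypothetical, dependency-free stand-in for what a classification report computes.

```python
def per_class_metrics(y_true, y_pred, label):
    """Return (precision, recall, F1) for one class, using 0.0 when undefined."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# An imbalanced test set and a model that always predicts the majority class.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100

accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
majority = per_class_metrics(y_true, y_pred, 0)
minority = per_class_metrics(y_true, y_pred, 1)

print(accuracy, majority, minority)
```

Accuracy is 0.95 even though the minority class is never detected (precision, recall, and F1 all 0), which is exactly the failure that per-class metrics expose.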

In summary, the presented model exhibits exceptional performance across all classes, demonstrating high precision, recall, and F1-score. Notably, it classifies certain classes, such as 2, 11, 12, and 17, without error, as evidenced by their perfect precision, recall, and F1-score (Table 7). It is important to consider the specific requirements and characteristics of the classification problem when interpreting these performance metrics.

The random forest classifier

As highlighted in the "Random forest (RF)" section, two key parameters, namely ntree and mtry, significantly impact the performance of Random Forest (RF) classifiers. In this study, we engaged in hyperparameter tuning to achieve optimal accuracy. The tuning adjusted n_estimators (the number of trees, ntree), max_depth, and max_features (the number of features considered at each split, mtry), each over a specified range, as depicted in Table 8. Following the tuning process, the most effective parameters were identified as {'max_depth': 6, 'max_features': 6, 'n_estimators': 100}.

The configuration {'max_depth': 6, 'max_features': 6, 'n_estimators': 100} stands out as the best-performing, achieving a mean test score of 0.985 and ranking first. This suggests that a deeper tree ('max_depth': 6), using more features ('max_features': 6), and a moderate number of trees ('n_estimators': 100) contribute to higher accuracy. Higher standard deviations in some configurations, such as {'max_depth': 3, 'max_features': 1, 'n_estimators': 124}, indicate more variability in performance. The 'n_estimators' parameter varies between 100 and 145, with no clear pattern indicating an optimal number of trees. The hyperparameter tuning process identified a configuration that maximizes mean test scores, shedding light on effective hyperparameter values for this Random Forest model. Further validation on a separate test set is recommended for robust performance assessment (Table 9).
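A reduced version of this search might be set up as in the sketch below. The data are synthetic and the grid is deliberately tiny so that it runs quickly; it includes the reported best values (max_depth 6, max_features 6, n_estimators 100) alongside one alternative each, and is not the full range from Table 8. The 3-fold setting is an assumption.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic placeholder data: 13 features, 4 classes.
X, y = make_classification(
    n_samples=300, n_features=13, n_informative=8, n_classes=4, random_state=0
)

param_grid = {
    "n_estimators": [100],   # number of trees (ntree)
    "max_depth": [3, 6],     # maximum depth of each tree
    "max_features": [1, 6],  # features considered at each split (mtry)
}
rf_search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid, cv=3)
rf_search.fit(X, y)

best_rf_params = rf_search.best_params_
print(best_rf_params, round(rf_search.best_score_, 3))
```

On real data the grid would be widened, and the selected configuration checked on a held-out test set as the text recommends.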

Based on the classification report, the random forest classifier performs well overall, with a weighted average F1 score of 0.98 and an accuracy of 0.99.

- Precision and recall scores are strong (mostly at or very close to 1.00) for most classes, indicating the model reliably predicts those classes and does not make many errors.
- Classes 7 and 13 have lower precision, meaning that some of the model's predictions of those classes are incorrect. Recall is still high, meaning it correctly finds most examples of those classes.
- Classes 6 and 15 have lower recall, so the model misses some examples of those classes (about 26% of class 6 and 3% of class 15). Precision is still high when it does predict those classes.
- The model seems reasonably robust to class imbalance, with macro-average and weighted-average F1 scores of 0.93 and 0.98, respectively. Performance across minority classes does not drop off sharply.

In summary, the model performed very strongly overall, with just a few classes representing opportunities for improvement. Future work could focus on better distinguishing classes 6, 7, 13, and 15. In general, however, it is an excellent, well-balanced classifier.

Figure 10 shows a decision tree visualization from the random forest classifier. The displayed tree has a maximum depth of 2, meaning no node in the tree is more than two levels deep. The tree leaves are labeled with the class to which most of the training examples that reach that leaf belong. The tree classifies an example by starting at the root node and testing one of the features against a threshold. The outcome of the test determines which child node to visit next. This process is repeated at each child node until a leaf node is reached, and the class label of that leaf is assigned to the example. The "TRPM RT 0.516" at the top of the tree is the threshold on the "TRPM_RT" feature used to split the data at the root node. The "gini = 0.863" is the Gini impurity of the root node. The Gini impurity measures how mixed the classes are at a node: a lower value means that the data at that node is purer and that the examples reaching it are easier to classify. The "samples 39,743" value at the root node is the number of training examples that reach that node. The "value [949, 5774, 2975, 1041, 1953, 617, 606, 3269, 996, 787, 16,913, 4206, 1785, 1741, 298, 1053, 11,663, 5423, 5411]" is the number of examples in each class that reach the root node. The "class = y[10]" value at the root node is the majority class of the examples that reach the root node. This figure illustrates how decision trees classify data: the tree is relatively simple, yet trees of this kind can still achieve good accuracy on many tasks.
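The Gini impurity reported at each node is computed from the class counts as one minus the sum of squared class proportions. A minimal sketch follows; the function name `gini_impurity` and the toy counts are illustrative and are not taken from Fig. 10.

```python
def gini_impurity(class_counts):
    """Gini impurity 1 - sum(p_k^2) for a node with the given per-class counts."""
    total = sum(class_counts)
    if total == 0:
        return 0.0
    return 1.0 - sum((count / total) ** 2 for count in class_counts)

pure = gini_impurity([10, 0, 0])    # all examples in one class
even = gini_impurity([5, 5])        # two classes, evenly mixed
uniform_19 = gini_impurity([1] * 19)  # 19 classes, evenly mixed

print(pure, even, round(uniform_19, 3))
```

A pure node has impurity 0, and a node with 19 equally frequent classes has the maximum possible value of 1 - 1/19 (about 0.947), so a root-node value in the 0.8 to 0.9 range indicates a heavily mixed node.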

The support vector machine classifier

Similarly, in the case of SVM, our research involves hyperparameter tuning of several key parameters: C, kernel, and gamma. For the C parameter, we explore a range of values (1, 5, 10), while the kernel parameter is switched between linear and RBF. Lastly, the gamma parameter is varied between the options 'scale' and 'auto', with further details in Table 10. Following the tuning process, the optimal parameter configuration identified is {'C': 10, 'gamma': 'scale', 'kernel': 'rbf'}. Table 10 summarizes the results of hyperparameter tuning for the SVM algorithm using a grid search approach, listing each combination of hyperparameters with its mean test score and standard deviation. The best-performing model has an 'rbf' kernel, 'scale' gamma, and a C value of 10, achieving a mean test score of approximately 99.26% with a standard deviation of around 0.00097. The table also allows a comparison of linear and radial basis function kernels, different gamma settings, and various levels of regularization (C values). In general, the preferred combination is the one that maximizes the mean test score while keeping the standard deviation low, which indicates stability across cross-validation folds (Table 11).
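The grid described above has 3 x 2 x 2 = 12 combinations and can be expressed as in the following sketch. The dataset is synthetic and the 5-fold setting is an assumption, so the selected configuration and scores will not match Table 10.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic placeholder data: 13 features, 4 classes.
X, y = make_classification(
    n_samples=300, n_features=13, n_informative=8, n_classes=4, random_state=0
)

param_grid = {
    "C": [1, 5, 10],               # misclassification cost
    "kernel": ["linear", "rbf"],   # linear vs. radial basis function
    "gamma": ["scale", "auto"],    # kernel width heuristics (ignored by 'linear')
}
svm_search = GridSearchCV(SVC(), param_grid, cv=5)
svm_search.fit(X, y)

svm_best = svm_search.best_params_
n_combinations = len(svm_search.cv_results_["params"])
print(svm_best, n_combinations)
```

Because gamma has no effect on the linear kernel, the linear rows for 'scale' and 'auto' are duplicates; a list of two parameter dictionaries, one per kernel, would avoid the redundant fits.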

Figure 11 shows the confusion matrix for the SVM model's predictions on the test set. Most predictions fall on the diagonal, indicating correct classification. The classification report further backs this up: with ~15 k test samples, the SVM achieves 99% accuracy. Precision, recall, and F1-score for each class are also very high; most are above 95%, and many are at 100%. This indicates that the model discriminates well between the different classes and that its class-wise predictions are reliable, which supports the SVM's strong performance on this dataset.

Figure 12 shows the learning curve of the model. The training score measures how well the model performs on the data it was trained on, while the cross-validation score measures how well it performs on data it was not trained on. The latter is an important metric because it helps verify that the model is not simply overfitting the training data. The graph shows that the training score increases as the number of training examples increases. The cross-validation score also grows, but at a slower rate, so a gap remains between the two curves. Ideally, the training and cross-validation scores should be as close together as possible, which would indicate that the model is learning from the data without overfitting. Here the gap is relatively small, suggesting that the model learns from the data without substantial overfitting, although there is still room for improvement.

Multilayer perceptron (MLP) classifier

The multilayer perceptron (MLP) is a class of artificial neural networks that utilizes backpropagation for training. MLPs contain multiple layers of nodes, including an input layer, one or more hidden layers, and an output layer. In this study, the MLP classifier was implemented using the Keras deep learning library in Python. For the MLP model, a sequential model was defined with an input layer size equal to the number of features, two hidden layers with 32 and 16 nodes, respectively, and an output layer with a size equal to the number of target classes (19 class types). The hidden layers used the Rectified Linear Unit (ReLU) activation function, and the output layer used softmax activation to generate probabilistic predictions. The MLP model was trained for 50 epochs using the Adam optimizer and categorical cross-entropy loss function. To prevent overfitting, L2 regularization was applied to the weights. The model achieved a training accuracy of 1 and was evaluated on the test set data. Key strengths of MLPs include the ability to model complex nonlinear relationships, adaptability to various problems, and use of backpropagation to learn deep feature representations. However, challenges include longer training times, the need for parameter tuning, and susceptibility to overfitting. Overall, the MLP model performed comparably to the other classifiers on this dataset for predicting geological formation tops. Table 12 shows the different MLP architectures that were tested by varying the number of layers, nodes per layer, activation functions, and regularization techniques. A 3-layer network with 64 and 32 nodes and ReLU and softmax activations produced the best results, trained with the Adam optimizer and the categorical cross-entropy loss function. The tuning process helped determine the optimal structure and hyperparameters for the MLP model on this dataset.
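To make the described architecture concrete, the forward pass and loss can be written out in plain NumPy. This is a didactic sketch, not the Keras model used in the study: the weights are random and untrained, the layer sizes (13 inputs, hidden layers of 32 and 16, 19 outputs) follow the text, and the L2 coefficient `l2=1e-4` is an assumed value, since the paper does not report one.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
n_features, n_classes = 13, 19
sizes = [n_features, 32, 16, n_classes]
weights = [rng.normal(0.0, 0.1, (a, b)) for a, b in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(b) for b in sizes[1:]]

def forward(X):
    """ReLU hidden layers followed by a softmax output layer."""
    h = X
    for W, b in zip(weights[:-1], biases[:-1]):
        h = relu(h @ W + b)
    return softmax(h @ weights[-1] + biases[-1])

def loss(X, y_onehot, l2=1e-4):
    """Categorical cross-entropy plus an L2 penalty on the weights."""
    probs = forward(X)
    cross_entropy = -np.mean(np.sum(y_onehot * np.log(probs + 1e-12), axis=1))
    penalty = l2 * sum(np.sum(W ** 2) for W in weights)
    return cross_entropy + penalty

X = rng.normal(size=(8, n_features))          # 8 synthetic, standardized samples
labels = rng.integers(0, n_classes, size=8)
y_onehot = np.eye(n_classes)[labels]

probs = forward(X)
loss_value = loss(X, y_onehot)
print(probs.shape, round(float(loss_value), 3))
```

Training (the Adam updates over 50 epochs) is omitted; in Keras the same structure corresponds to a `Sequential` model with three `Dense` layers.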

In Table 12, three experiments with varying MLP architectures were tested, each characterized by specific hyperparameter settings. Experiment 1 reveals a moderate level of accuracy at 0.95. Given the chosen architecture and hyperparameters, the model performs reasonably well and serves as a baseline for comparison with the other experiments. Experiment 2 exhibits a significant improvement in accuracy, reaching 0.98. The increased number of epochs, hidden layers, and neurons per layer likely contributed to enhanced model performance. This suggests that a more complex architecture can capture intricate patterns in the data, leading to improved accuracy. Experiment 3 demonstrates a perfect accuracy of 1. A lower learning rate, fewer epochs, and a more intricate architecture might have contributed to this result, but a score of 100% may also indicate overfitting to the training data. It is essential to assess the model's generalization performance on unseen data to ensure its effectiveness in real-world scenarios. In summary, these experiments showcase the impact of hyperparameter tuning on MLP model performance. Experiment 2, with a learning rate of 0.01 and a more complex architecture than the baseline, stands out as the preferred configuration with an accuracy of 0.98, whereas the perfect score in Experiment 3 should be treated with caution. The choice of hyperparameters significantly influences the model's ability to learn and generalize from the training data (Fig. 13).

The multilayer perceptron (MLP) model demonstrates very strong performance in multi-class classification of geological formation tops, as evidenced by the confusion matrix and classification report. The model achieves an overall accuracy of 0.98 on the test set, correctly classifying 98% of the 15,648 test samples. This high accuracy indicates reliable predictive capabilities across the 19 formation top classes. Precision, recall, and F1 scores are mostly in the excellent 0.94–0.99 range across classes, signaling that the model predicts each class with high precision and retrieves most samples within each class (high recall), leading to robust F1 scores. The macro-averaged precision, recall, and F1 scores are 0.96, 0.95, and 0.95, respectively, confirming reliable differentiation, including for low-volume classes in the test dataset. Weighted averages approaching the accuracy score further indicate that most errors derive from smaller classes. While classes 6 and 15 exhibit comparatively lower scores, suggesting some difficulty differentiating these specific formation tops, this does not substantially impact overall performance given the much larger share of other classes in the dataset. In summary, class-wise metrics nearing or exceeding 0.95 and a test accuracy of 98% underscore the MLP's proficiency in distinguishing formation tops on the provided test set.

Figure 14 shows the side-by-side visualization of weight heatmaps for each layer in the neural network model. Each subfigure illustrates the distribution of weights in the corresponding layer, providing insights into the learned features and relationships. The color intensity represents the magnitude of the weights, with warmer colors indicating higher values. These weight heatmaps offer a glimpse into the patterns and structures captured by the neural network during the training process.

Figure 15 shows a model's performance over time, with an accuracy plot on top and a loss plot on the bottom.

- Accuracy plot analysis:
  - The training accuracy starts around 0.6 and reaches near 1.0 after around 15 epochs. This indicates the model is learning from the training data.
  - The validation accuracy starts near 0.6 as well. It reaches a peak of about 0.8 by around eight epochs, then levels off and decreases slightly after that.
  - The gap between training and validation accuracy grows over time. This suggests overfitting is occurring, where the model performs better on training data than on new validation data.
- Loss plot analysis:
  - The training loss decreases rapidly in the first five epochs, indicating the model is optimizing and learning quickly.
  - After five epochs, training loss continues decreasing but at a slower pace. This signals the model is still improving but at a slower rate.
  - Validation loss mirrors training loss early on, decreasing rapidly at first. But after 5–8 epochs, validation loss levels off and stops improving much.

This model exhibits signs of overfitting, performing better on training data than on validation data over time. The best weights are likely found at 5–8 epochs, when validation accuracy peaks and validation loss is still decreasing. After ~8 epochs, performance on new data does not improve much or begins to degrade, suggesting that training should be stopped by then. Additional regularization could also be applied to reduce overfitting.
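The stopping rule suggested by these curves can be sketched as a simple patience-based check on the validation loss. The function `best_epoch_with_patience` and the loss values below are hypothetical; they only mimic the shape described for Fig. 15 (rapid early decrease, then a plateau and slight rise), and epochs are counted from zero.

```python
def best_epoch_with_patience(val_losses, patience=3):
    """Return (best_epoch, best_loss), stopping after `patience` epochs without improvement."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, current in enumerate(val_losses):
        if current < best_loss:
            best_epoch, best_loss, waited = epoch, current, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss has stopped improving
    return best_epoch, best_loss

# Illustrative validation losses per epoch (not read from the figure).
val_losses = [1.20, 0.90, 0.70, 0.60, 0.55, 0.52, 0.53, 0.54, 0.56, 0.60]

stop_at = best_epoch_with_patience(val_losses, patience=3)
print(stop_at)
```

Here the loss last improves at epoch index 5, and training halts three epochs later with the weights from that epoch retained. Keras offers the same behavior through its `EarlyStopping` callback with `restore_best_weights=True`.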

High Accuracy in Machine Learning Models

Across the machine learning algorithms used in the modeling process, namely Support Vector Machine (SVM), Random Forest, K-Nearest Neighbors (KNN), and Multilayer Perceptron (MLP), differences in accuracy were observed. On the training dataset, the MLP achieved an accuracy of 1.00, while SVM, Random Forest, and KNN each scored slightly lower at 0.99. The models were trained on the dataset described in the "Approach and Procedures" section. These results suggest that, for predicting geological formation tops in hydrocarbon exploration and production, the MLP was the most accurate of the four algorithms on the training data. However, selecting the most suitable algorithm also involves considering computational efficiency, interpretability of results, and application-specific requirements, and further testing is needed to validate the reliability and applicability of the chosen model.

There is a notable disparity between these training results and the accuracy obtained on the blind dataset, which falls within the range of 0.91–0.99. Several factors may contribute to this decrease. Overfitting is one potential factor: the model becomes excessively tailored to the training data and struggles to generalize to unseen data, which may arise from the model's complexity or from insufficient training data relative to the problem's intricacy. Furthermore, an imbalance in sample numbers or class distribution in the blind dataset might lead the model to favor predictions for the majority class. Inadequate parameter tuning, suboptimal data quality, and other sources of error can also reduce accuracy on the blind dataset.

Therefore, a comprehensive analysis is essential to pinpoint the reasons behind the performance decline on the blind dataset. Strategies such as improved parameter tuning, regularization, appropriate data preprocessing, or additional data collection may then be applied to enhance the model's ability to accurately predict formation tops or rock types in real-world scenarios. Refer to Table 13 for a detailed comparison of accuracy scores across the machine learning models.

The test-set results in Table 13 come from models trained on the training dataset, as outlined in the section on evaluation on test sets. In contrast, the blind-set results come from predictions on data that the models had not previously encountered. Figure 16 compares the formation tops predicted by the four models (KNN, SVM, MLP, and RF) with the actual formation tops and shows that all four models could predict the formation tops accurately. The MLP model had the smallest average error, followed by the SVM, KNN, and RF models.

Feature importance analysis

To gain a deeper understanding of which parameters are most influential in driving the predictions of our formation tops models, we employed two model-agnostic feature importance analysis techniques: Permutation Feature Importance (PFI) and Shapley Additive exPlanations (SHAP). PFI evaluates the predictive power of each feature by calculating the decrease in the model's evaluation metric (such as accuracy) when the feature's values are randomly shuffled, which breaks the original association between the feature and the target variable. A large decrease in the evaluation metric after shuffling indicates higher feature importance. SHAP, on the other hand, explains the model's predictions by computing Shapley values for each feature. These values represent the feature's contribution in "pushing" the prediction away from the expected value, and features with large absolute Shapley values are considered highly impactful on the prediction. We supplemented the analysis with visualizations of each model's SHAP and PFI feature importance, as shown in Figs. 17–27 below. By leveraging both PFI and SHAP for our KNN, Random Forest, SVM, and MLP models, we obtained the following insights:

- KNN
  - According to PFI, HKLD, SWOB, SPPA, DEPT, and RPM emerged as the most important features. This implies that these features strongly influence which neighbors are nearest to a data point, which in turn drives the KNN model's formation tops prediction.
  - The SHAP analysis also identified DEPT as a top contributor. Additionally, RPM, ECD_MWD, SPPA, and HKLD greatly impacted predictions. The agreement between PFI and SHAP provides confidence about the significant role of DEPT, RPM, SPPA, and HKLD in the KNN model.

The resulting SHAP summary plot represents the combined SHAP values across all classes and visualizes each feature's importance and impact on the model's output. The y-axis lists the features, and the x-axis shows the average magnitude of the SHAP values. Each dot in the plot corresponds to a specific instance in the dataset.

The SHAP plot reveals that DEPT had the highest SHAP values, indicating its strong influence on predictions in the KNN model. RPM, ECD_MWD, SPPA, and HKLD also had a substantial impact, which aligns with the PFI analysis. Conversely, features such as BVEL, Stick_RT, SWOB, TFLO, BPOS, and TQA have lower SHAP values, indicating a less pronounced influence. The plot also shows that SHAP values are generally positive for higher feature values, meaning that increasing the value of these features tends to increase the model's output. A few exceptions exist, such as BVEL, where increasing the value decreases the model's output.

The PFI bar chart shows how KNN model accuracy changes when each feature is permuted. ROP5 and TFLO show low permutation importance, which is consistent with the SHAP analysis.

Here is a summary of the key points of the KNN model analysis:

- According to the PFI analysis, DEPT, RPM, HKLD, SPPA, and SWOB were the most important features for predicting geological formation tops. These features had the strongest influence on determining nearest neighbors and model predictions.
- SHAP analysis also identified ECD_MWD as having a high impact. PFI and SHAP agreed on the significant roles of DEPT, RPM, SPPA, and HKLD.
- The SHAP summary plot visualized each feature's impact. DEPT had the highest SHAP values, followed by RPM, ECD_MWD, SPPA, and HKLD. Features such as BVEL, Stick_RT, SWOB, and TFLO had less impact.
- Higher feature values tended to increase model output, with some exceptions such as BVEL.
- The PFI analysis was consistent with the SHAP findings: ROP5 and TFLO showed low permutation importance.

In summary, DEPT, RPM, HKLD, SPPA, and ECD_MWD emerged as the most influential features in the KNN model for predicting geological formation tops. The PFI and SHAP analysis techniques aligned on the key variables driving the model.
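The permutation procedure behind the PFI results discussed above can be sketched in a few lines of standard-library Python. This is an illustrative sketch under stated assumptions, not the implementation used in this study: the data are synthetic, the classifier is a hypothetical threshold rule standing in for a fitted model, and the names `permutation_importance`, `threshold_model`, `n_repeats`, and `seed` are introduced only for the example.

```python
import random


def accuracy(predict, X, y):
    """Fraction of rows for which the model's prediction equals the label."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle column j only, breaking its link with the target.
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Synthetic data (assumption): column 0 is informative, column 1 is noise.
X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [int(row[0] > 0.5) for row in X]


def threshold_model(row):
    """Hypothetical stand-in classifier that uses only column 0."""
    return int(row[0] > 0.5)


importances = permutation_importance(threshold_model, X, y)
print(importances)
```

Because the stand-in model ignores the noise column, shuffling it leaves accuracy unchanged and its importance is exactly zero, whereas shuffling the informative column lowers accuracy. Any fitted classifier exposing a per-row prediction function could be passed in place of `threshold_model`.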

- Random Forest
  - DEPT was flagged as a highly impactful feature by PFI. This can be attributed to its likely involvement in key decision tree splits within the Random Forest ensemble.
  - SHAP analysis concurred on the importance of DEPT. It also highlighted RPM as an additional key driver of predictions, aligning with the PFI results.
  - The SHAP plot highlights DEPT and RPM as highly influential features in the Random Forest model based on their SHAP values. TFLO also had a noticeable impact.

The PFI chart shows how Random Forest model accuracy changes when each feature is permuted. ROP5, TQA, and BVEL show low permutation importance, and the result for BVEL agrees with the SHAP results.

Figure 22 shows the effect of the input parameters on the model output when predicting the Sleipner formation top. The x-axis shows the feature value, and the y-axis shows the SHAP value (impact on model output), which measures how much each feature contributes to the model's output. The parameters with the highest impact on the model output are DEPT, RPM, ECD_MWD, and TFLO, with SHAP values greater than 0.2. The parameters with the lowest SHAP values are Stick_RT, TRPM_RT, and BVEL, with values below −0.2. The graph also shows that the direction of the relationship between feature value and SHAP value differs between features. For example, the SHAP value for DEPT decreases as the feature value increases, while the SHAP value for RPM increases as the feature value increases. Overall, the graph helps identify the model's most important parameters and understand how the model makes its predictions.

Figure 23 is a force plot showing the SHAP values for the features DEPT, RPM, HKLD, TQA, TRPM_RT, and TFLO. This plot illustrates the contributions of individual features to the model prediction for a specific instance. Positive SHAP values push the model prediction above the expected value, while negative SHAP values pull it below, and features with larger absolute SHAP values have a greater impact on the model output. The plot shows that DEPT and RPM have the highest impact on the model output, followed by HKLD and TQA. According to the plot, higher values of DEPT, RPM, HKLD, and TQA and lower values of TFLO and TRPM_RT are associated with a higher model output.

Here is a summary of key points about the Random Forest model analysis:

- Permutation Feature Importance (PFI) identified DEPT as the most impactful feature, with RPM also being highly important. This aligns with the SHAP analysis.
- SHAP values highlighted DEPT and RPM as the main drivers of model predictions. TFLO also had a noticeable impact.
- The SHAP summary dot plot visualized the impact of different features on predictions for the Sleipner formation top. DEPT, RPM, ECD_MWD, and TFLO had the highest SHAP values, indicating that they are the most important for the model.
- The SHAP force plot illustrated how individual features contribute to the model's predictions. It showed that DEPT and RPM had the greatest influence, followed by HKLD and TQA. Higher values of DEPT, RPM, HKLD, and TQA and lower values of TFLO and TRPM_RT were associated with a higher model output.

In summary, both PFI and SHAP analyses consistently pointed to DEPT and RPM as the most impactful features in the Random Forest model. The visual plots provided further confirmation and granular detail on how the features affect the predictions. This information can guide feature engineering efforts to improve model performance.
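The Shapley values that underlie the SHAP plots discussed above can be computed exactly for a small number of features by enumerating every feature coalition. The sketch below is a brute-force illustration under stated assumptions and not the estimator used in this study: the toy model `toy_model`, the all-zero `baseline` used to represent "absent" features, and the function name `shapley_values` are introduced only for the example, and practical SHAP tools rely on faster approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, x, baseline):
    """Exact Shapley values of the features of x relative to a baseline input.

    A feature that is "absent" from a coalition takes its baseline value.
    The cost grows exponentially with the number of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = [x[j] if (j in subset or j == i) else baseline[j]
                          for j in range(n)]
                without_i = [x[j] if j in subset else baseline[j]
                             for j in range(n)]
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value_fn(with_i) - value_fn(without_i))
    return phi


def toy_model(z):
    """Hypothetical model: one additive term and one interaction term."""
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

The contributions sum to the difference between the model output at `x` and at the baseline, which is the property that lets a force plot show features pushing the prediction above or below the expected value. In this toy model the interaction term is split equally between the two features that share it.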

- SVM
  - DEPT, HKLD, and SWOB emerged as the most relevant parameters by permutation importance. This indicates their role in orienting the SVM model's maximum-margin hyperplanes.
  - SHAP feature importance also pointed to DEPT, HKLD, and RPM as key features. TFLO was also identified as impactful, agreeing with the PFI findings.
  - The SVM SHAP plot indicates DEPT, HKLD, and RPM as key parameters based on their high SHAP values. The influence of TFLO is also observable. The parameters with the highest average impact for each class are:
    - Sleipner Fm.: DEPT
    - Lista Fm.: HKLD
    - Tor Fm.: RPM
    - Balder Fm.: TFLO
    - No Formal Name_Nordland Gp.: SPPA
    - No Formal Name_2_Hordaland Gp.: ECD_MWD
    - Hugin Fm.: TQA
    - Ty Fm.: TRPM_RT
    - Grid Fm.: Stick_RT
    - No Formal Name_1_Hordaland Gp.: BVEL
    - Sele Fm.: BPOS
    - Ekofisk Fm.: ROP5

These parameters are the most important for predicting the target class for each formation. For example, DEPT is the most important parameter for predicting the target class for Sleipner Fm., while HKLD is the most important parameter for predicting the target class for Lista Fm.
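A per-class ranking of this kind can be derived by averaging the absolute SHAP values of each feature over the instances of a class and taking the largest. The sketch below illustrates that aggregation only: the function name `top_feature_per_class`, the class labels `formation_A` and `formation_B`, and all numeric values are made up for the example and are not results from this study.

```python
def top_feature_per_class(shap_by_class, feature_names):
    """Map each class to the feature with the largest mean absolute SHAP value.

    shap_by_class maps a class label to a list of rows, one row per instance,
    each row holding one SHAP value per feature.
    """
    result = {}
    n_features = len(feature_names)
    for cls, rows in shap_by_class.items():
        # Average magnitude of each feature's contribution within this class.
        mean_abs = [sum(abs(row[j]) for row in rows) / len(rows)
                    for j in range(n_features)]
        best = max(range(n_features), key=lambda j: mean_abs[j])
        result[cls] = feature_names[best]
    return result


feature_names = ["DEPT", "HKLD", "RPM"]

# Made-up SHAP values for two hypothetical classes (illustration only).
shap_by_class = {
    "formation_A": [[0.4, -0.1, 0.05], [-0.3, 0.2, 0.0]],
    "formation_B": [[0.1, -0.5, 0.2], [0.0, 0.3, -0.1]],
}

top = top_feature_per_class(shap_by_class, feature_names)
print(top)
```

Using the absolute value matters here, because positive and negative contributions would otherwise cancel and understate a feature's influence.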

The PFI chart shows how SVM accuracy changes when each feature is permuted. ROP5 and BVEL show low permutation importance, whereas DEPT and HKLD show noticeable importance.

Here is a summary of the key points about the SVM model analysis:

- The permutation importance analysis identified DEPT, HKLD, and SWOB as the most relevant parameters for orienting the SVM model's maximum-margin hyperplanes.
- SHAP feature importance also highlighted DEPT, HKLD, and RPM as key features, along with TFLO. This aligns with the permutation importance findings.
- The SHAP plot shows that DEPT, HKLD, and RPM have a high impact based on their SHAP values. TFLO's influence is also observable.
- The parameters with the highest average impact for predicting each formation class are:
  - Sleipner Fm.: DEPT
  - Lista Fm.: HKLD
  - Tor Fm.: RPM
  - Balder Fm.: TFLO
  - No Formal Name_Nordland Gp.: SPPA
- The permutation importance chart confirmed the low importance of ROP5 and BVEL when they were permuted. DEPT and HKLD showed noticeable importance.

In summary, DEPT, HKLD, RPM, and TFLO emerge as key parameters in the SVM analysis, having an important role in prediction and model orientation. The permutation and SHAP methods validate one another in identifying influential features.

- MLP
  - PFI showed DEPT, HKLD, and RPM as central features in the neural network-based MLP model.

-