Abstract

We report on a novel method for solving one-dimensional moving boundary problems based on wave digital principles. Here, we exploit multidimensional wave digital algorithms to derive an efficient and robust algorithm for the solution of the considered problem. Our method lets the wave digital model, on which the algorithm is based, expand according to the size of the solution domain. The expanding model introduces new dynamical elements, which must be properly initialized to obtain a calculable algorithm. To deal with this problem, we make use of linear multistep methods to extrapolate the missing values. Our results show the proposed method to indeed be capable of correctly solving a one-dimensional partial differential equation describing a growing biological axon.

1 Introduction

Partial differential equations (PDEs) are the primary mathematical tool for describing spatio-temporal physical phenomena, such as electromagnetic wave propagation (Lindell, 2005) or heat transfer (Vaidya et al., 1913). In general, most partial differential equations cannot be solved analytically, which is why numerical solvers are necessary.

An interesting subset of differential problems is the set of moving boundary problems, also known as Stefan problems (Stefan, 1889). These problems represent expanding physical domains, which, from a mathematical perspective, implies that the solution domain is dynamical, i.e. its boundaries result as an integral part of the solution (Zerroukat & Chatwin, 1997). Such problems occur in many research fields. Examples are the analysis of melting and solidification problems (Tao, 1967; Alexiades, 1993) or diffusion-controlled release problems associated with drug release (Higuchi, 1963; Lee, 2011). The solution of moving boundary problems is quite challenging, even from a numerical standpoint. Over the years, a lot of work has been invested into the development of approximate, numerical, and semi-analytical solution methods, some of which turned out to be very accurate compared to real-world measurements; see Zerroukat and Chatwin (1997) and Tao (1986) for a good exposition.

In this paper, we aim to introduce a new numerical solution method based on the wave digital concept (Fettweis, 1986). To this end, we have picked one example where such a problem occurs, namely the diffusion of microtubules in a spatially extending axon (McLean & Graham, 2004). What makes this process interesting is the fact that it plays an important role in the maturation of neural networks. From an engineering perspective, both the mathematical and electrical modeling of this process can be used for the design of self-organizing bio-inspired neural networks in the context of neuromorphic computing, cf. Ochs et al. (2021), Michaelis et al. (2022), Jenderny and Ochs (2022), Singh Muralidhar et al. (2022), which has inspired us to pick this specific problem.

Overall, this work builds on a large foundation of research oriented towards the solution of ordinary and partial differential equations based on wave digital principles, of which we can only list a few (Fettweis, 1986; Lawson & Guzman, 2001; Balemarthy & Bass, 1995; O’Connor, 2005; Bose & Fettweis, 2004; Fettweis, 1991, 1992, 2002; Fettweis & Nitsche, 1991a, b; Hemetsberger & Hellfajer, 1994; Fettweis, 2006; Fettweis & Basu, 2011; Werner et al., 2015), [29], (Bernardini et al., 2019, 2021). Our goal is to present a first approach for dealing with one-dimensional moving boundary problems. Here, we make use of multidimensional wave digital algorithms, which are known for their robustness, massive parallelism, and fault-tolerance (Fettweis & Nitsche, 1991a).

The synthesis of a reference circuit constitutes the hardest part of the solution process. In the early stages of the wave digital concept, these circuits were derived based on a combination of physical considerations and circuit-theoretical intuition (Fettweis, 1992, 2002; Erbar & Horneber, 1995; Fettweis, 1994). However, this required a strong foundation and extensive experience in the field of circuit theory. Some circuit synthesis approaches were later presented for example in Vollmer (2004), Vollmer (2005). Later on these approaches were used as a basis for deriving an algorithm for the automated circuit synthesis of passive hyperbolic partial differential equations based on a special state-space representation (Leuer & Ochs, 2009, 2012). This paper applies the latter algorithm, while also combining numerical integration methods with the wave digital concept to solve the considered biological problem. Contrary to common approaches in literature, which utilize nonlinear time-varying transformations to deal with the moving boundary, our approach allows for retaining the simplicity of the baseline mathematical model.

The remainder of this paper is organized as follows. Section 2 recapitulates the considered problem from a biological as well as a mathematical standpoint. In Sect. 3, we derive an electrical model for the considered problem. Section 4 discusses the concept of dynamic wave digital models, which is then utilized to obtain the solutions presented in Sect. 5. Finally, Sect. 6 summarizes this work and its main contributions.

2 Tubulin-driven axon-growth

2.1 Biological background

Neurons or nerve cells are the primary building blocks of the human nervous system. In general, these cells have a wide range of responsibilities including sensing environmental signals, processing sensory signals, and communicating information to different parts of the body (Bullock et al., 2005). The neurons themselves are composed of many different parts: the soma representing the cell’s main body, the nucleus containing genetic information, the axon responsible for bridging the spatial gap between neurons, the synapse handling inter-neuron communication, etc. A simplified biological sketch of a neuron is presented in Fig. 1. In this work, we focus on one part of the neuron, namely the axon. In particular, we deal with the mathematical and electrical modeling of axonal outgrowth.

During the development of the nervous system, axon-growth takes place. The axon’s form is maintained by a cytoskeleton consisting of tubulin dimers (microtubules) (McLean & Graham, 2004). Its growth process involves the extension of the cytoskeleton and the spatial elongation. Here, tubulin is first produced within the cell body and then transported along the axon (Wang et al., 1996). The latter is driven by a combination of diffusion and active transport (Galbraith et al., 1999). The axon’s extension results from the polymerization of tubulin at the tip of the axon (Morrison et al., 2002), where microtubules are first assembled and then used to create the cytoskeleton, which results in axon-growth (Sayas et al., 2002). Considering that this process takes place on a timescale of months to years, we expect some form of tubulin degradation. Indeed, this effect was experimentally observed, where the degradation of tubulin took place on a timescale of days and thus slowed down the growth process (Miller & Samuels, 1997). Overall, the process of axon-growth is determined by the combination of tubulin production (at the soma), tubulin degradation, tubulin transport (along the axon via diffusion and active transport), and microtubule assembly (at the axon tip) (Miller & Samuels, 1997).

2.2 Mathematical modeling

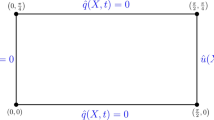

Fig. 2 A mathematical view on tubulin-driven axon-growth for three different time instants with \(t_{0}<t_{1}<t_{2}\). The three-dimensional cylinder approximates the axon’s geometry. We assume a steady flux of tubulin being produced at the soma and flowing through the soma-axon interface into the axon. At the tip of the axon, whose current position is denoted by \(\ell (t_{1})\), microtubules are assembled to form the elongation of the axon. Under the assumption of a homogeneous flow of tubulin, we denote the concentration of tubulin at the coordinates \((z_{1},t_{1})\) by \(c(z_{1},t_{1})\)

A mathematical view on the biological scenario of axon-growth is depicted in Fig. 2. Here, a three-dimensional cylinder is used to approximate the axon’s geometry. Moreover, we assume that the axon only grows straight and in the z-direction. Here, the variable \(\ell (t)\) denotes the axon’s length at the time t. At the soma-axon interface, see Fig. 2, we assume a steady supply of tubulin leading to a constant in-flux. At the axon tip, see \(\ell (t_{1})\) in Fig. 2, microtubules are assembled to form the extension of the axon, so it can elongate. The axon’s extension is represented by the cylinder with the black dashed edges. Under the assumption that the concentration of tubulin is identical for all coordinates (x, y) at the cross-section at \(z_{1}\), we refer to the concentration of tubulin at some time instant \(t_{1}\) as \(c(z_{1},t_{1})\).

The following section recapitulates the derivation of a one-dimensional PDE model for the tubulin distribution within the cylinder based on conservation laws. This derivation originates from the discussions of McLean and Graham (2004) and is first made for the three-dimensional case. Reducing the three-dimensional model to one dimension follows in a straightforward manner.

2.2.1 Conservation law

Let \(V_{\textrm{a}} \in \mathbb {R}^3\) and \(S_{\textrm{a}} \in \mathbb {R}^2\) denote the volume and enclosing surface of the three-dimensional cylinder of Fig. 2, respectively. Furthermore, let \({\varvec{r}}=[x,y,z]^{\textrm{T}}\) be the three-dimensional space vector. We start our derivation from the three-dimensional case, where the tubulin concentration at some coordinate \({\varvec{r}} \in \mathbb {R}^3\) is denoted by \(c({\varvec{r}},t)\). Our first goal is to derive an integral conservation law from which a partial differential equation for the tubulin distribution can then be inferred. To this end, we assume the axon to be an isolated system and exploit the principle of conservation of charge from physics. Here, we can draw an analogy between the concentration of tubulin and charge density. The principle of conservation of charge states that the change in electric charge in any volume is equal to the amount of in-flowing charge minus the amount of out-flowing charge. In our context, we can similarly state that the change of tubulin within \(V_{\textrm{a}}\) is equal to the flux of tubulin in and out of the axon:

\[ \int _{V_{\textrm{a}}} \mathcal {D}_{t} c({\varvec{r}},t) \,\textrm{d}V = - \oint _{S_{\textrm{a}}} {\varvec{j}}^{\textrm{T}}({\varvec{r}},t) \,\textrm{d}{\varvec{s}}_{\textrm{a}} \tag{1} \]

Here, \(\mathcal {D}_{t}\) denotes a partial derivative w.r.t. time, \({\varvec{j}}({\varvec{r}},t)\) denotes the flux of tubulin at the coordinate \(({\varvec{r}},t)\), and \({\varvec{s}}_{\textrm{a}}\) is the curve parametrization of \(S_{\textrm{a}}\). Equation (1) is an ideal conservation law, as it does not consider the possibility of tubulin degradation within the axon. We can incorporate this non-ideality by adding a degradation term,

\[ \int _{V_{\textrm{a}}} \mathcal {D}_{t} c({\varvec{r}},t) \,\textrm{d}V = - \oint _{S_{\textrm{a}}} {\varvec{j}}^{\textrm{T}}({\varvec{r}},t) \,\textrm{d}{\varvec{s}}_{\textrm{a}} - \frac{1}{T_{l}} \int _{V_{\textrm{a}}} c_{l}({\varvec{r}},t) \,\textrm{d}V \tag{2} \]

where \(c_{l}({\varvec{r}},t)\) is a (possibly nonlinear) function of \(c({\varvec{r}},t)\) describing the loss of tubulin within \(V_{\textrm{a}}\), while \(T_{l}\) represents the exponential decay constant, which is identical for every point \({\varvec{r}} \in V_\textrm{a}\). Under the assumption of a smooth vector field, we can apply the divergence theorem to rewrite the conservation law (2) as

\[ \int _{V_{\textrm{a}}} \left[ \mathcal {D}_{t} c({\varvec{r}},t) + {\varvec{\mathcal {D}}}_{{\varvec{r}}}^{\textrm{T}} {\varvec{j}}({\varvec{r}},t) + \frac{1}{T_{l}} c_{l}({\varvec{r}},t) \right] \textrm{d}V = 0 \tag{3} \]

where \({\varvec{\mathcal {D}}}_{{\varvec{r}}} = [\mathcal {D}_{x}, \mathcal {D}_{y}, \mathcal {D}_{z}]^{\textrm{T}}\) denotes the gradient w.r.t. the coordinate vector \({\varvec{r}}\). Equation (3) is the conservation law in integral form. In differential form, it reads:

\[ \mathcal {D}_{t} c({\varvec{r}},t) + {\varvec{\mathcal {D}}}_{{\varvec{r}}}^{\textrm{T}} {\varvec{j}}({\varvec{r}},t) + \frac{1}{T_{l}} c_{l}({\varvec{r}},t) = 0 \tag{4} \]

Thus, we obtain a partial differential equation (PDE), whose solution provides the tubulin distribution in \(V_{\textrm{a}}\). However, this PDE is incomplete, since we have yet to define the tubulin flux \({\varvec{j}}({\varvec{r}},t)\) and the tubulin loss function \(c_{l}({\varvec{r}},t)\) in terms of \(c({\varvec{r}},t)\).

2.2.2 Tubulin flux and degradation

Within the axon, the flux of tubulin is either due to diffusion \({\varvec{j}}_{\textrm{d}}({\varvec{r}},t)\) or active transport \({\varvec{j}}_{\textrm{a}}({\varvec{r}},t)\), where the latter is mediated by transport proteins. The overall flux is given by the superposition of both processes:

\[ {\varvec{j}}({\varvec{r}},t) = {\varvec{j}}_{\textrm{d}}({\varvec{r}},t) + {\varvec{j}}_{\textrm{a}}({\varvec{r}},t) \tag{5} \]

Fick’s law of diffusion is arguably the most common way of describing diffusion processes (Paul et al., 2014). It states that the flux goes from the region of high concentration to the region of low concentration. Moreover, the flux is said to be proportional to the spatial derivative of the concentration:

\[ {\varvec{j}}_{\textrm{d}}({\varvec{r}},t) = - \textbf{D}({\varvec{r}}) \, {\varvec{\mathcal {D}}}_{{\varvec{r}}} c({\varvec{r}},t) \tag{6} \]

where \(\textbf{D}({\varvec{r}}) \in \mathbb {R}^{3 \times 3}\) is the so-called diffusivity matrix, which has a spatial dependency. The active transport of tubulin \({\varvec{j}}_\textrm{a}({\varvec{r}},t)\) is assumed to be proportional to the local tubulin concentration:

\[ {\varvec{j}}_{\textrm{a}}({\varvec{r}},t) = \textbf{v}_{\textrm{a}}({\varvec{r}}) \, c({\varvec{r}},t) \tag{7} \]

where \(\textbf{v}_{\textrm{a}}({\varvec{r}})\) denotes the local transport velocity of tubulin. Inserting both definitions (6) and (7) into (5) yields

\[ {\varvec{j}}({\varvec{r}},t) = - \textbf{D}({\varvec{r}}) \, {\varvec{\mathcal {D}}}_{{\varvec{r}}} c({\varvec{r}},t) + \textbf{v}_{\textrm{a}}({\varvec{r}}) \, c({\varvec{r}},t) \tag{8} \]

Thus, we have now defined the tubulin flux \({\varvec{j}}({\varvec{r}},t)\) in terms of the tubulin concentration \(c({\varvec{r}},t)\).
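
As a minimal numerical sketch of this flux definition, the following Python snippet evaluates the superposition of a Fick-type diffusive flux and a concentration-proportional active transport flux on a one-dimensional grid. The reduction to one dimension with scalar constants `d` and `v_a`, the function name `total_flux`, and all numerical values are assumptions made for illustration only; they are not taken from the original model parameters.

```python
import numpy as np

def total_flux(c, z, d, v_a):
    """Return j = -d * dc/dz + v_a * c on the grid z (1-D, constant coefficients)."""
    dc_dz = np.gradient(c, z)   # finite-difference approximation of the spatial derivative
    j_diffusion = -d * dc_dz    # Fick-type flux: from high to low concentration
    j_active = v_a * c          # active transport: proportional to local concentration
    return j_diffusion + j_active

# Hypothetical values, chosen only for illustration.
z = np.linspace(0.0, 1.0, 101)
c = 2.0 - z                     # linear profile with dc/dz = -1
j = total_flux(c, z, d=0.5, v_a=0.1)
```

For the linear profile used here, the finite-difference derivative is exact, so `j` equals `0.5 + 0.1 * (2 - z)` up to rounding.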

Regarding the tubulin degradation, we adopt the tubulin loss model from McLean and Graham (2004), which states that tubulin degradation is proportional to its local concentration, i.e. \(c_{l}({\varvec{r}},t) = c({\varvec{r}},t)\). If we briefly assume a closed system (no tubulin entering or exiting the axon), then the considered form of tubulin loss implies that tubulin locally decays to 1/e of its initial value after an elapsed time of \(T_{l}\), i.e. \(c(z,t_{0}+T_{l}) = e^{-1}c(z,t_{0})\), for some initial time \(t_{0}\).
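
The decay statement can be checked with a few lines of Python. The sketch below assumes the closed-system case, in which the local dynamics reduce to the scalar equation dc/dt = -c/T_l. It compares the closed-form solution with a simple forward Euler integration. The function names and the values of `c0` and `T_l` are hypothetical.

```python
import math

def decayed_concentration(c0, elapsed, T_l):
    """Closed-form solution of dc/dt = -c / T_l after `elapsed` time units."""
    return c0 * math.exp(-elapsed / T_l)

def euler_decay(c0, elapsed, T_l, steps):
    """Forward Euler integration of dc/dt = -c / T_l over `elapsed`."""
    dt = elapsed / steps
    c = c0
    for _ in range(steps):
        c += dt * (-c / T_l)
    return c

# Hypothetical values, chosen only for illustration.
c0, T_l = 3.0, 2.0
ratio_exact = decayed_concentration(c0, T_l, T_l) / c0
ratio_euler = euler_decay(c0, T_l, T_l, steps=10_000) / c0
```

Both ratios are close to 1/e, which is the meaning of the decay constant in the loss term.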

2.2.3 One-dimensional model

Since we are assuming the axon to only extend in one direction, it is sufficient to work with a one-dimensional model. Moreover, both the diffusion constant and the active transport velocity are assumed to be constant along the axon, which leads to the special case:

This leads to the one-dimensional PDE system

where the last equation (10e) is the growth law describing the elongation or shortening of the axon. It states that the axon, with the length \(\ell (t)\), grows or shrinks at the velocity \(\textrm{v}_{\textrm{g}}\), when the tubulin concentration at the axon tip \(c(\ell (t),t)\) exceeds or drops below some constant threshold \(c_{\textrm{th}}\). For the sake of completeness, we state that \(\ell _{0}\) denotes the initial length of the axon, while \(N_{0} = 1\,\textrm{mol}\) is a normalization constant arising from the assumption that the tubulin concentration is measured in mol.
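
To make the growth law concrete, the following sketch performs one explicit Euler step of the length dynamics. It assumes that (10e) has the linear form dℓ/dt = v_g (c(ℓ(t),t) - c_th)/N_0, which is inferred from the verbal description above and is not a verbatim copy of the equation. The function name `grow` and all numbers are hypothetical.

```python
def grow(length, c_tip, dt, v_g, c_th, N_0=1.0):
    """One explicit Euler step of d(length)/dt = v_g * (c_tip - c_th) / N_0 (assumed form)."""
    return length + dt * v_g * (c_tip - c_th) / N_0

# Hypothetical values, chosen only for illustration.
longer = grow(1.0, c_tip=2.0, dt=0.1, v_g=0.5, c_th=1.0)   # tip concentration above threshold
shorter = grow(1.0, c_tip=0.5, dt=0.1, v_g=0.5, c_th=1.0)  # tip concentration below threshold
steady = grow(1.0, c_tip=1.0, dt=0.1, v_g=0.5, c_th=1.0)   # exactly at the threshold
```

The axon elongates when the tip concentration exceeds the threshold, shortens when it falls below, and keeps its length at the threshold.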

Looking at the PDE system (10), we see that the tubulin distribution plays a role in solving the differential equation describing the growth process (10e), but that the axon length \(\ell (t)\) resulting from the solution of the latter equation does not directly appear in (10a)-(10d) describing the tubulin distribution. Here, it is important to consider that the solution domain of the PDE is determined by the length of the axon \(\ell (t)\). In other words, the PDE is only solved on the domain \(z \in [0, \ell (t)]\) at every time t. This makes the problem a so-called moving boundary problem, which essentially denotes a differential problem with a time-varying solution domain (Zerroukat & Chatwin, 1997). To the best of our knowledge, no general analytical solution exists for this equation. However, for some special cases in which the appearing parameters are restricted to certain intervals, McLean and Graham were able to derive analytical steady-state solutions, cf. McLean and Graham (2004). Furthermore, the existence and uniqueness of one solution for the biologically relevant case, i.e. \(\ell (\infty ) > 0\), was proven in the same reference. Moreover, we would like to emphasize that the PDE system is not hyperbolic and does not (generally) constitute a passive PDE system, making it incompatible with the wave digital method of solving PDEs. We will show how the PDE system can be made hyperbolic without changing the steady-state solution. We will also derive necessary and sufficient passivity conditions.
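
The consequence of a time-varying domain for a discretized model can be illustrated with a small sketch. It assumes a uniform grid with spacing `dz` starting at z = 0 and appends nodes whenever the axon length passes the next grid point. The new node values are filled by plain linear extrapolation from the last two nodes, which is a deliberately simple stand-in and not the extrapolation scheme used in this work. The function name `extend_grid` and the values are hypothetical.

```python
import math

def extend_grid(values, length, dz):
    """Append nodes until the uniform grid 0, dz, 2*dz, ... covers [0, length].

    New node values are linearly extrapolated from the last two nodes.
    Shrinking domains are not handled in this sketch.
    """
    values = list(values)
    n_required = math.floor(length / dz + 1e-12) + 1   # number of nodes inside [0, length]
    while len(values) < n_required:
        values.append(2.0 * values[-1] - values[-2])   # linear extrapolation
    return values

# Hypothetical concentration samples at z = 0.0, 0.1, 0.2.
samples = [1.0, 0.8, 0.6]
unchanged = extend_grid(samples, length=0.2, dz=0.1)   # domain still ends at z = 0.2
extended = extend_grid(samples, length=0.35, dz=0.1)   # domain now also contains z = 0.3
```

Each appended node is a new state variable without history, which is why its initial value has to be estimated from neighboring or past values.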

2.3 Boundary conditions

Naturally, the considered PDE system can only be solved when boundary conditions are imposed. Here, we adopt the boundary conditions proposed by McLean and Graham (2004), which we now briefly recapitulate:

1. There is a steady (independent) flux of tubulin entering the axon at \(z=z_{0}\) (Neumann-type condition).
2. The flux of tubulin exiting the axon at \(z=z_{\textrm{e}}\) is proportional to the tubulin concentration at the tip of the axon (Robin-type condition).

Mathematically, they can be formulated as,

where \(c_{0}\) is a typical tubulin scale, \(r_{\textrm{p}}\) is a typical tubulin production rate, \(r_{\textrm{a}}\) is the tubulin assembly rate, and \(j_{\textrm{d,r}}\) is the constant returned flux due to tubulin disassembly. In biology, the latter quantity is generally not constant. To the best of our knowledge, its value stems from a steady-state measurement and is used as an approximation (McLean & Graham, 2004; Graham et al., 2006). Note that both assumptions at the boundaries describe diffusion processes, which is why the boundary conditions are formulated in terms of \(\mathcal {D}_{z}c(z,t) = -j_{\textrm{d}}(z,t)/\textrm{d}\) and not j(z, t). If we formulated the conditions in terms of j(z, t), we would also impose restrictions on the active transport of tubulin. However, the latter transport mechanism remains unchanged at the boundaries of the considered domain.

2.4 Initial conditions

In addition to the boundary conditions, we require initial values for the starting time \(t_{0}\). In the following, we use the linear initial conditions suggested in Graham et al. (2006):

These initial conditions fulfill the boundary conditions (11) but not the governing PDE (10). The initial conditions for j(z, t) can be obtained by using (10b), which, in compact form, reads:

The spatial derivative of c(z, t) at \(t=t_{0}\) can be obtained from (12a):

3 Electrical modeling

Solving PDEs with the wave digital concept requires first synthesizing a reference circuit, whose dynamics coincides with the considered PDE (Fettweis & Nitsche, 1991a). In the following, we exploit the methods discussed in Leuer and Ochs (2009), Leuer and Ochs (2012) to synthesize a reference circuit.

3.1 Physically-motivated hyperbolization

The wave digital concept is not equally suited to solve all types of PDEs (Fettweis & Nitsche, 1991a; Luhmann & Ochs, 2006). It excels at solving hyperbolic PDEs, because all other types of PDEs lead to wave digital algorithms with implicit relationships, which must be resolved, for example, through fixed-point iterations, cf. Luhmann and Ochs (2006). The implicitness of these relationships stems from the lack of deep physical modeling, which allows physical phenomena to propagate at infinite speeds. The considered PDE (10) is of the parabolic type. For a deeper physical modeling (and an enhanced wave digital algorithm later on), we now apply a hyperbolization by finding the root cause, which makes the PDE parabolic, and rectifying it such that the PDE becomes hyperbolic. The root cause is the use of Fick’s law, which does not consider any sort of delay in the diffusion of tubulin. This implies that even for very small times, there exists a finite amount of diffusing tubulin, whose origin is very far from its current position, cf. Gómez et al. (2007). This makes the diffusion process unphysical and distorts the true qualitative nature of the considered phenomenon, as tubulin particles would have to move faster than the speed of light. Thus, for a deeper physical modeling, we will now replace Fick’s diffusion law (10c) by a modified diffusion law, namely Cattaneo’s law (Cattaneo, 1958):

Cattaneo’s law is the most widely accepted generalization of Fick’s diffusion law (Gomez et al., 2010). It introduces a sort of inertia or relaxation time with the time constant \(T_{\textrm{r}}\) into the diffusion process, allowing the particles to only move at a finite propagation velocity, whose value will be derived later on. Considering that our PDE system represents a general advection–diffusion problem on the most abstract level, making use of Cattaneo’s law is a very common and natural way of dealing with the unphysical nature of this problem. A general theory on hyperbolic advection–diffusion problems utilizing Cattaneo’s law has been developed in Gomez et al. (2010), and we refer the interested reader to this reference for more details on this hyperbolization approach and its physical consequences. Note that the underlying conservation law governing our PDE system is retained when modifying the diffusion law, cf. Gómez et al. (2007), Gomez et al. (2010). Also, we would like to emphasize that there is a great amount of literature concerning the generalized hyperbolization of PDEs modeling physical processes besides Cattaneo’s method (Fettweis & Basu, 2015; Bright & Zhang, 2009; Rubin, 1992; Chester, 1963), some more recent and/or physical than others.
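
The effect of the relaxation time can be illustrated with a short Python sketch. It assumes the standard one-dimensional form of Cattaneo’s law, T_r dj_d/dt + j_d = -d dc/dz, and a concentration gradient that is held fixed from t = 0 on. Under these assumptions, the flux relaxes exponentially towards the value predicted by Fick’s law. The second function returns the propagation speed sqrt(d/T_r) of the pure-diffusion case without advection, which follows from the resulting telegraph-type equation; it is not meant to anticipate the maximal propagation velocity of the complete system. All names and values are hypothetical.

```python
import math

def cattaneo_flux(t, grad_c, d, T_r, j0=0.0):
    """Diffusive flux at time t for a gradient held fixed from t = 0 on.

    Solves T_r * dj/dt + j = -d * grad_c with the initial flux j0.
    """
    j_fick = -d * grad_c                              # value predicted by Fick's law
    return j_fick + (j0 - j_fick) * math.exp(-t / T_r)

def front_speed(d, T_r):
    """Propagation speed of the pure-diffusion telegraph equation (no advection)."""
    return math.sqrt(d / T_r)

# Hypothetical values, chosen only for illustration.
j_start = cattaneo_flux(0.0, grad_c=-1.0, d=0.5, T_r=2.0)    # no flux yet
j_late = cattaneo_flux(100.0, grad_c=-1.0, d=0.5, T_r=2.0)   # close to Fick's value 0.5
speed = front_speed(d=4.0, T_r=1.0)
```

In the limit of a vanishing relaxation time, the flux follows the gradient instantaneously and the speed grows without bound, which recovers the parabolic behavior of Fick’s law.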

With reference to (10), the hyperbolized one-dimensional PDE now reads:

One important property of this hyperbolic PDE system is the fact that it degenerates to the parabolic model in the steady state, i.e. when all time derivatives amount to zero. This means that its steady-state solution does not differ from the one of the parabolic model, such that the existence and uniqueness properties of its solution (McLean & Graham, 2004) carry over from the parabolic model. For the remainder of this section, we consider the two parts of (14) as two independent systems. This is because c(z, t) appears in the ODE computing \(\ell (t)\), but \(\ell (t)\) does not appear in the PDE computing c(z, t). Thus, we consider the ODE to be controlled by the PDE and therefore first start by synthesizing a reference circuit for the PDE before dealing with the ODE.

3.2 Canonical PDE formulation

Before applying the circuit synthesis procedure, one must first rewrite (14a) in the canonical form,

where the voltage vector \({\varvec{e}}\) is a vector of zeros due to the absence of source terms in the left part of (14). The relation between the current vector \({\varvec{i}}\) and the tubulin concentration and flux is given by:

Here, we introduce the normalization constants \(F_{0}=1/\textrm{s}\), \(L_{0}=1\,\textrm{H}\), and associate c(z, t) and j(z, t) with currents. Now, the matrices \({{\varvec{L}}}^{t}\), \({{\varvec{L}}}^{z}\), and \({{\varvec{R}}}\) are obtained by first rewriting (14) in terms of the currents defined in (15b) and then applying a coefficient comparison:

Note, the circuit synthesis procedure only works when the PDE fulfills the conditions of multidimensional passivity (MD passivity) (Fettweis & Basu, 2011; Leuer & Ochs, 2009, 2012). The latter is given when the circuit is MD causal (Fettweis & Basu, 2011) and the following sufficient conditions are fulfilled by the involved matrices:

We elaborate on the conditions of MD causality in Sect. 3.5.2. Now, we have decomposed the PDE so the first two conditions are met. The third condition is generally not fulfilled, since the matrix \({{\varvec{R}}}\) is only positive-semidefinite for:

This means that the circuit synthesis can result in an active (resistive) component. For the sake of completeness, we would like to mention that the parameters used in this manuscript violate this condition, which then indeed results in an active component. In this case, many of the favorable properties inherent to multidimensional wave digital algorithms are no longer guaranteed to hold. We will come back to this aspect in Sect. 3.6, once we complete the circuit synthesis procedure.
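
A numerical check of such matrix conditions can be sketched as follows. The exact conditions of (16) are not reproduced here; the sketch assumes that they amount to a symmetric positive-semidefinite \({{\varvec{L}}}^{t}\), a symmetric \({{\varvec{L}}}^{z}\), and a matrix \({{\varvec{R}}}\) whose symmetric part is positive-semidefinite. The function names and the example matrices are hypothetical and are not the matrices of the axon-growth model.

```python
import numpy as np

def is_symmetric(M, tol=1e-12):
    """True if M equals its transpose up to the tolerance."""
    return bool(np.allclose(M, M.T, atol=tol))

def is_psd(M, tol=1e-12):
    """True if the symmetric part of M has no eigenvalue below -tol."""
    sym = 0.5 * (M + M.T)
    return bool(np.all(np.linalg.eigvalsh(sym) >= -tol))

def check_conditions(Lt, Lz, R):
    """Evaluate the three assumed conditions and report each one separately."""
    return {
        "Lt_symmetric_psd": is_symmetric(Lt) and is_psd(Lt),
        "Lz_symmetric": is_symmetric(Lz),
        "R_psd": is_psd(R),
    }

# Hypothetical matrices, chosen only for illustration.
Lt = np.diag([1.0, 2.0])
Lz = np.array([[0.0, 1.0], [1.0, 0.0]])
R_weak = np.array([[1.0, 0.0], [-1.0, 1.0]])     # weak coupling: symmetric part is PSD
R_strong = np.array([[1.0, 0.0], [-3.0, 1.0]])   # strong coupling: symmetric part is indefinite
report_weak = check_conditions(Lt, Lz, R_weak)
report_strong = check_conditions(Lt, Lz, R_strong)
```

In this toy example only the third condition depends on the coupling strength, mirroring the situation described above, in which the first two conditions hold by construction and the third one depends on the parameters.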

3.3 Circuit realization of the axon-growth PDE

Fig. 3 Top: Reference circuit resulting from applying the circuit synthesis procedure. Center: An alternative reference circuit equivalent to \(\mathcal {C}_{1}\). Bottom left: Reference circuit \(\mathcal {C}_{2}\) after applying simplifications by an appropriate choice of the free parameters \(\alpha _{\mu }\). Bottom right: Reference circuit of the axon growth law (10e)

The canonical formulation (15) can now be used as a starting point for the systematic circuit synthesis. In the following, we only briefly sketch the procedure; the interested reader is referred to Leuer and Ochs (2009), Leuer and Ochs (2012). To this end, we first rewrite (15a) as:

Here, the left-hand and right-hand sides are termed the (hyperbolic) PDE subsystem and the ODE subsystem, respectively:

To ensure the hyperbolicity of the PDE subsystem, a new term \({{\varvec{L}}}^{z}_{t} \mathcal {D}_{t}\) has been introduced. The matrix \({{\varvec{L}}}^{z}_{t}\) is a positive-semidefinite diagonal matrix consisting of free/design parameters with the unit of an inductance:

The matrix is defined such that a positive free parameter \(\alpha _{\mu }^{z}\) is introduced every time the matrix \({{\varvec{L}}}^{z}\) has a non-zero column (or row, since it is symmetric). This ensures that a time derivative is added to every spatial derivative introduced by the term \({{\varvec{L}}}^{z} \mathcal {D}_{z}\), which guarantees the hyperbolicity of the PDE subsystem. When no spatial derivative appears in a certain row of the equation set, i.e. when the corresponding column fulfills \({{\varvec{L}}}^{z} {\varvec{e}}_{\mu } = \textbf{0}\), no time derivative is required and the corresponding free parameter \(\alpha _{\mu }^{z}\) is set to zero. Due to the introduction of \({{\varvec{L}}}^{z}_{t}\) and because the original PDE (15) should not be modified, one must introduce a new matrix \({{\varvec{L}}}^{t}_{t}\), which is defined as:

By inserting (18e) into (18a), it can be verified that we indeed re-obtain the canonical formulation (15). The newly introduced matrices are given by:
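
As a hedged illustration of the construction rule for \({{\varvec{L}}}^{z}_{t}\) described above, the following Python sketch places a free parameter on the diagonal wherever the corresponding column of \({{\varvec{L}}}^{z}\) is non-zero and a zero elsewhere. It further assumes that \({{\varvec{L}}}^{t}_{t} = {{\varvec{L}}}^{t} - {{\varvec{L}}}^{z}_{t}\), which is inferred from the requirement that the original PDE remains unchanged. The function name, the argument `alphas`, and the example matrices are hypothetical and do not reproduce the matrices of this section.

```python
import numpy as np

def split_time_matrices(Lt, Lz, alphas):
    """Return (Lz_t, Lt_t) with a diagonal Lz_t and Lt_t = Lt - Lz_t (assumed relation).

    alphas[mu] is only used if column mu of Lz is non-zero; otherwise the
    corresponding diagonal entry of Lz_t is set to zero.
    """
    nonzero_columns = np.any(Lz != 0, axis=0)             # columns carrying a spatial derivative
    Lz_t = np.diag(np.where(nonzero_columns, alphas, 0.0))
    Lt_t = Lt - Lz_t                                       # keeps the total time-derivative term unchanged
    return Lz_t, Lt_t

# Hypothetical 3x3 example: only the first two variables have spatial derivatives.
Lt = np.diag([3.0, 4.0, 5.0])
Lz = np.array([[0.0, 1.0, 0.0],
               [1.0, 0.0, 0.0],
               [0.0, 0.0, 0.0]])
Lz_t, Lt_t = split_time_matrices(Lt, Lz, alphas=[1.0, 2.0, 9.0])
```

The third entry of `alphas` is ignored because the third column of `Lz` is zero, and `Lz_t + Lt_t` reproduces `Lt`.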

For the sake of completeness, we now give a brief outline of the circuit synthesis procedure proposed by Leuer and Ochs (2009), Leuer and Ochs (2012). First, to ensure MD causality, the hyperbolic PDE subsystem (18b) undergoes a bijective coordinate transformation, cf. Fettweis and Basu (2011). Second, the transformed PDE subsystem is realized with a known equivalent circuit. This results in an uncoupled circuit representing the (uncoupled) PDE subsystem. Third, an equivalent circuit for the ODE subsystem is synthesized by applying the circuit synthesis procedure extensively described in Ochs (2012). Fourth, the circuit realization of the ODE subsystem is used to close the external ports of the circuit representing the PDE subsystem. This step is a direct consequence of the constitutive relationship (18a). Finally, the previously introduced free parameters \(\alpha _{\mu \nu }^{z}\) can be chosen in a way to eliminate redundant electrical components and obtain a simpler circuit realization. This step can be understood as a circuit optimization. Applying all aforementioned steps yields the reference circuit \(\mathcal {C}_{1}\) in Fig. 3 with the electrical parameters,

where the newly introduced velocity constant \(\textrm{v}_{1}\) is used as a transformation variable, cf. Fettweis and Nitsche (1991a), Leuer and Ochs (2012). In many cases, an appropriate choice of \(\textrm{v}_{1}\) can greatly simplify the resulting circuit. We elaborate on the optimal choice of this constant in Sect. 3.5. Note, we refer to the inductors with the derivative operators \(\mathcal {D}_{z_{\pm }}\) as spatio-temporal inductors, because the derivative operator is given by a linear combination of a spatial and a time derivative, cf. Fettweis and Nitsche (1991a), Luhmann and Ochs (2006), Leuer and Ochs (2009), Leuer and Ochs (2012):

As can be seen from the definition of \(R_{1}\) in (19), its resistance is only non-negative when (17) is fulfilled. In other words, the active part of the PDE translates into a negative resistance in the resulting circuit, which does not dissipate but rather supplies power to the circuit.

While the applied procedure indeed returns a valid reference circuit, the number of components is quite large in comparison to the order of the PDE. For that reason, we now adopt a different approach for obtaining a reference circuit. To be specific, we use the systematic procedure only to synthesize a reference circuit for the PDE subsystem. By doing so, we obtain the reference circuit depicted in the dashed box of Fig. 3. To obtain a reference circuit for the remaining ODE subsystem, we first explicitly write out the associated equation (18c):

Sorting the terms and neglecting the appearing zero vector, we obtain the two mesh equations:

Let us first consider the first mesh equation. We see that it corresponds to the series connection of an inductor \(L_{1}\), a resistor \(r_{1}\), and the port with the voltage \(u_{1}\) in \(\mathcal {C}_{2}\) of Fig. 3. Now, let us consider the second mesh equation, while ignoring its right-hand side. This equation has the same structure as the first mesh equation, and we effectively obtain the same electrical components with different parameters, which are interconnected to the port designated with the voltage \(u_{2}\) in Fig. 3. Contrary to the first equation, the second equation contains a source term \(r_{21} i_{1}\) coupling \(i_{1}\) to the other side of the circuit. To realize the cross-coupling, we can make use of a current-controlled voltage source with the transresistance \(r_{21}\) encoding the active part of the PDE. The reference circuit resulting from this approach is depicted in \(\mathcal {C}_{2}\) of Fig. 3. As can be seen, it contains fewer electrical components and has a simpler structure than \(\mathcal {C}_{1}\) in Fig. 3. For the remainder of this work, we make use of the alternative circuit, due to its structural simplicity.

3.4 Circuit realization of the axon-growth ODE

In this section, we derive an equivalent circuit for the growth dynamics given in (14b). To this end, we associate the axon’s length with a current quantity:

Then, we substitute the definition of \(\mathcal {D}_{t}\ell (t)\) from (10e) and rewrite its right-hand side in terms of the currents defined in (15b):

As can be seen, (22b) performs an integration of its right-hand side, when \(\ell (t)\) is known. Thus, a possible circuit realization is given by the simple integrator circuit depicted in \(\mathcal {C}_{4}\) of Fig. 3. Here, \(r_{\textrm{t}}\) denotes the transresistance of the appearing current-controlled voltage source. The axon’s length can be reconstructed from the current \(i_{3}\) flowing through the inductor \(L_{\textrm{a}}\) by applying the bijective mapping relation given in (22a).

3.5 Circuit optimization

The last step of the circuit synthesis procedure involves optimizing the circuit \(\mathcal {C}_{2}\) in Fig. 3 by eliminating redundant components, which can be achieved by an appropriate choice of the free parameters \(\alpha _{\mu \nu }^{z}\) and the velocity constant \(\textrm{v}_{1}\). Here, we start by determining the maximal propagation velocity \(\textrm{v}_{\textrm{max}}\) of the system, which allows us to derive a lower bound for the velocity constant \(\textrm{v}_{1}\) (Fettweis & Nitsche, 1991a; Gomez et al., 2010).

3.5.1 Maximal propagation velocity

For hyperbolic PDEs with constant coefficients of the form (18), the maximal propagation velocity \(\textrm{v}_{\textrm{max}}\) can be explicitly calculated by evaluating the dispersion relation (Bilbao, 2004),

where \(\det (\cdot )\) is the determinant operator, \(\textrm{j}\) is the imaginary unit, and \(k_{z}\) is the wavenumber in z-direction. The dispersion relation provides the angular frequencies \(\omega _{\nu }\) of the waves, which propagate in the system, from which we can derive the associated group velocities:

The maximal propagation velocity is the maximal (absolute) group velocity,

which turns out to be a function of \(k_{z}\) and not a constant. A variable maximal propagation velocity is not helpful for the upcoming circuit optimization procedure, which is why we now make a worst-case approximation. Considering that (24a) is a monotonically increasing, odd, and bounded function of \(k_{z}\), we can make a worst-case approximation by calculating the limit:
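
The high-wavenumber limit used for this worst-case approximation can be illustrated numerically. The sketch below assumes a first-order system of the form L^t D_t i + L^z D_z i + R i = 0 and a plane-wave ansatz, for which the lower-order term R becomes negligible as the wavenumber grows, so that the limiting velocities are the eigenvalues of (L^t)^{-1} L^z. The matrices describe a generic telegraph-type system with hypothetical parameters `d` and `T_r`; they are not the matrices of (16), and the function name is likewise an assumption.

```python
import numpy as np

def limiting_speeds(Lt, Lz):
    """Eigenvalues of inv(Lt) @ Lz, i.e. the propagation speeds for k_z -> infinity."""
    return np.linalg.eigvals(np.linalg.solve(Lt, Lz))

# Hypothetical telegraph-type example, chosen only for illustration.
d, T_r = 4.0, 1.0
Lt = np.diag([d, T_r])                    # coefficients of the time derivatives
Lz = np.array([[0.0, d], [d, 0.0]])       # symmetric coefficients of the spatial derivatives
speeds = limiting_speeds(Lt, Lz)
v_max = float(np.max(np.abs(speeds)))     # worst-case (maximal) propagation velocity
```

For these values the two limiting speeds are plus and minus sqrt(d/T_r) = 2, and `v_max` picks the larger magnitude.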

3.5.2 Simplifications

To eliminate redundancies in the circuit, we now choose our free parameters such that certain electrical parameters become zero. Considering that our two free parameters can be chosen to eliminate the inductances in (19), we conclude that the following two choices are optimal:

The two design parameters have influence on the other electrical parameters of the PDE subsystem:

The final parametric degree of freedom is given by the velocity constant \(\textrm{v}_{1}\). This constant must fulfill the MD causality condition (Fettweis & Basu, 2011; Leuer & Ochs, 2009), which in this case reads: \(\textrm{v}_{1} \ge \textrm{v}_{\textrm{max}}\). Furthermore, it must be chosen so the inductances \(L_{12}^{z}\) and \(L_{21}^{z}\) are non-negative, which is a necessary condition for their passivity:

Since \(\textrm{v}_{\textrm{max}} > 0\), the second condition is actually redundant. The non-negativity condition of \(L_{12}^{z}\) coincides with the condition of MD causality. Thus, we see that \(\textrm{v}_{1} = \textrm{v}_{\textrm{max}}\) is an optimal choice, since it eliminates \(L_{12}^{z}\) without violating the latter. In a final optimization step, we eliminate the ideal transformer appearing in \(\mathcal {C}_{2}\) of Fig. 3 by an equivalency transformation that incorporates its turns ratio into the electrical parameters on the left side of its primary port. The reference circuit resulting after these simplifications is depicted in \(\mathcal {C}_{3}\) of Fig. 3. The equivalency of its dynamics with those of the PDE system can be verified by evaluating the node and mesh equations.

3.6 Intermediate summary and discussion

In an intermediate summary, we would like to briefly elaborate on the properties of the synthesized circuit and its consequences for the resulting wave digital algorithm. In this work, we have synthesized two possible electrical circuits for the hyperbolic PDE system. If the circuit parameters are chosen in a way that fulfills the third passivity condition (17), we recommend using \(\mathcal {C}_{1}\) as depicted at the top of Fig. 3 to solve the hyperbolic PDE. However, in case this condition is not met, we recommend using circuit \(\mathcal {C}_{3}\) depicted at the bottom of the same figure due to its structural simplicity. The latter circuit contains a nonlinear and active component and, as mentioned earlier, there is no guarantee that any of the favorable properties inherent to multidimensional wave digital algorithms carry over to this case. However, we can see that all other circuit elements are passive and that the active part of the PDE is reflected by one active nonlinearity, the controlled voltage source. Thus, we can separate the circuit into a passive subcircuit that is interconnected with an active subcircuit, a controlled voltage source. All favorable properties of wave digital algorithms apply to the passive and dominant part of the overall circuit. In this configuration, the active subcircuit excites the passive subcircuit. As long as this excitation is bounded, the passive subcircuit will react with a bounded output due to passivity. Here, it is important to consider that passivity carries over to the wave digital domain (Meerkötter, 2018).

4 Wave digital modeling

The emulation of the electrical model derived in the previous section is discussed in this section. For a better understanding of the applied methods, we begin by briefly recapitulating the fundamentals of the wave digital concept. Then, we translate the electrical model into the wave digital domain, which results in a compact wave digital model. Uncoiling the latter results in the explicit wave digital model, which can be used for emulation purposes. Finally, we introduce the concept of dynamic wave digital models, which is our main contribution.

4.1 Fundamentals

The wave digital algorithm of a given reference circuit is the result of writing out the signal relationships of the associated wave digital model in a causal manner (Fettweis, 1986; Meerkötter, 2018). The latter can be obtained by decomposing the reference circuit into a set of one- and multiports, which are then translated into the wave digital domain by applying the bijective mapping relation:

Here, a, b, and R denote the incident wave, reflected wave, and the port resistance, respectively (Fettweis, 1986). Electrical components with differential relationships must first undergo a discretization via numerical integration, commonly the trapezoidal rule (Fettweis, 1986; Meerkötter, 2018). For an overview on electrical components and their translation into the wave digital domain, the interested reader is referred to Fettweis (1986), Meerkötter (2018). Considering that we will be working with multidimensional wave digital algorithms, we define the discrete coordinates, \(z_{k} = z_{0} + k Z\), where \(z_{0}\) denotes the initial z-coordinate. Thus, we have \(z_{k} - z_{k-1} = Z\) for all \(k \in \mathbb {N}^{+}_{0}\), where T and \(Z=\textrm{v}_{1} T\) denote the temporal and spatial step-size of the wave digital algorithm, respectively.
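As a hedged illustration of the mapping and of the trapezoidal discretization mentioned above, the following Python sketch assumes the standard voltage-wave definition \(a = u + R i\), \(b = u - R i\) from the wave digital filter literature. The function names and all numerical values are hypothetical and are not taken from this work. The sketch checks that an inductor discretized by the trapezoidal rule, with the port resistance \(R = 2L/T\), reduces to a delay with a sign inversion.

```python
def to_waves(u, i, R):
    """Voltage-wave mapping: a = u + R*i (incident), b = u - R*i (reflected)."""
    return u + R * i, u - R * i


def from_waves(a, b, R):
    """Inverse mapping back to voltage and current."""
    return (a + b) / 2.0, (a - b) / (2.0 * R)


def inductor_voltages(currents, L, T, u0=0.0):
    """Trapezoidal rule for u = L di/dt: u[n] = (2L/T)(i[n] - i[n-1]) - u[n-1]."""
    u = [u0]
    for n in range(1, len(currents)):
        u.append(2.0 * L / T * (currents[n] - currents[n - 1]) - u[-1])
    return u


L, T = 0.5, 0.01             # illustrative values (assumption)
R = 2.0 * L / T              # port resistance that turns the inductor into a delay
i = [0.0, 0.1, 0.3, 0.2, -0.1]
u = inductor_voltages(i, L, T)
waves = [to_waves(u_n, i_n, R) for u_n, i_n in zip(u, i)]

# With R = 2L/T the reflected wave is the delayed, sign-inverted incident wave,
# so b[n] + a[n-1] vanishes up to rounding errors.
residuals = [waves[n][1] + waves[n - 1][0] for n in range(1, len(waves))]
```

Because the reflected wave depends only on the previous incident wave, no implicit equation has to be solved at the port.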

One aspect we would like to highlight is the fact that the trapezoidal rule, used in combination with the wave digital concept, is a so-called A-stable integration method (Hairer & Wanner, 1991). This means that asymptotic stability properties exhibited by the solution of a differential equation carry over to the wave digital domain. Indeed, this special property of the trapezoidal rule has been shown to play a big role in the accuracy of numerical solutions, for example, more recently in the simulation of lumped transmission lines, cf. Zhou et al. (2021). In our context, this property also plays a big role, as the existence and uniqueness of a steady-state solution is ensured by the discussions of McLean and Graham (2004). Thus, multidimensional wave digital algorithms based on the trapezoidal rule will always have an edge over any explicit integration method for solving this problem for two reasons:

1. The trapezoidal rule is an implicit, lossless, and the most accurate A-stable linear multistep method (Dahlquist, 1963; Ochs, 2001), which becomes explicit in the wave digital domain (Fettweis, 1986). Consequently, the wave digital algorithm is fully explicit and does not require any sort of iteration methods, which makes it efficient.

2. Explicit integration methods can not be A-stable (Dahlquist, 1963; Ochs, 2001). Contrary to A-stable integration methods, explicit integration methods are only numerically stable for sufficiently small step-sizes (Dahlquist, 1963), which can make them inefficient from a computational perspective.
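The step-size restriction of explicit methods can be illustrated with the scalar test equation \(\dot{y} = \lambda y\). The following Python sketch compares the amplification factor of the forward Euler method, chosen here only as a representative explicit method, with that of the trapezoidal rule. The value of \(\lambda\) and the step-sizes are illustrative assumptions.

```python
def euler_gain(lam, T):
    """Amplification factor of forward Euler for y' = lam * y."""
    return 1.0 + lam * T


def trapezoidal_gain(lam, T):
    """Amplification factor of the trapezoidal rule for y' = lam * y."""
    return (1.0 + lam * T / 2.0) / (1.0 - lam * T / 2.0)


lam = -100.0                      # asymptotically stable test problem (assumption)
for T in (0.001, 0.01, 0.1, 1.0):
    # A magnitude below one means that the numerical solution decays.
    print(T, abs(euler_gain(lam, T)), abs(trapezoidal_gain(lam, T)))
```

For this test problem, the magnitude of the trapezoidal factor stays below one for every positive step-size, whereas the forward Euler factor exceeds one as soon as \(T > 2/|\lambda |\).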

4.2 Wave digital model

Compact representation (\(\mathcal {M}_{1}\)) of the PDE subsystem \(\mathcal {C}_{3}\) in the wave digital domain. The wave digital model \(\mathcal {M}_{2}\) corresponds to translating the equivalent circuit of the growth law (10e), denoted with \(\mathcal {C}_{4}\) in Fig. 3, to the wave digital domain. Uncoiling the compact wave digital model \(\mathcal {M}_{1}\) leads to the uncoiled wave digital model \(\mathcal {M}_{3}\), in the case of three spatial coordinates \(z_{\mu }\) with \(\mu \in \{1,2,3\}\)

Now, we discuss the translation of the circuits \(\mathcal {C}_{3/4}\) in Fig. 3 into the wave digital domain. Starting with circuit \(\mathcal {C}_{4}\), the ideal controlled voltage source and the inductor translate to a reflective wave source with the reflection coefficient \(-1\) and a delay element (with a sign inversion), respectively (Fettweis, 1986). The port resistance of the temporal inductor is given by \(R_{\textrm{a}} =2L_{\textrm{a}}/T\), where T denotes the sampling period of the wave digital algorithm. Now, we translate circuit \(\mathcal {C}_{3}\), where we start by replacing the Jaumann structure with a Jaumann adaptor. The resistor and the controlled voltage source are then replaced by the reflection coefficient \(\varrho _{R}\) and a reflective wave source, respectively, with:

Finally, the spatio-temporal inductors are replaced by spatio-temporal delays \(Z_{\pm }\) (Leuer & Ochs, 2009, 2012). The delays are associated with the transformed differential operators (20) and can be interpreted as a combination of a time delay and a positive or negative spatial delay (Luhmann & Ochs, 2006). To understand the reason behind the two signs of the spatial delay, one must consider that physical phenomena can propagate in positive or negative directions as time passes.

The compact wave digital model \(\mathcal {M}_{1}\) is depicted in Fig. 4. It is an implicit representation of the actual wave digital model, because it only describes the dynamics of the PDE in one grid point. The uncoiled wave digital model, on the other hand, is an explicit interpretation of a multidimensional wave digital model (Luhmann & Ochs, 2006), see \(\mathcal {M}_{3}\) in Fig. 4. Since we are working with a one-dimensional model, consider a one-dimensional grid with spatial step-size Z. Now, place the compact wave digital model of the previous section at every grid point and remove all the spatio-temporal delays \(Z_{\pm }\). The ports at which the latter elements were previously placed, are left open (as external docking ports). The uncoiled wave digital model is obtained by interconnecting the compact wave digital models at every grid point. In particular, every reactive-element-free model is only connected to its nearest neighbor. The interconnection elements are the delays, which we have previously removed from the model. These delays, however, are now pure temporal delays. This is because the spatial delay of the compact model is now embedded into the distribution of the compact models along the one-dimensional grid. For instance, the currents \(i_{\nu }(z_{1},t)\) can be inferred from the wave quantities of the first wave digital model (the one placed at the first grid point) in \(\mathcal {M}_{3}\) of Fig. 4. When interconnecting two neighboring models, it is important that every connection exiting an external port, which previously belonged to a positive/negative spatio-temporal delay, also connects to a port belonging to the same type of delay. Note, in this work, we do not discuss the realization of the boundary conditions. However, our approach is based on the discussions of Fettweis (2006).

4.3 Dynamic wave digital model

In the first section, we stated that the considered problem is of the moving boundary type. In this section, we first explain the consequences of a moving boundary with respect to the wave digital model and then demonstrate a way of dealing with these consequences. When an axon changes its length, the tip of the axon, which was previously at some position \(\ell (t_{0}) = \ell _{0}\), moves to some position \(\ell (t_{1}) = \ell _{1}\) at the next time instant, where \(\ell _{0}\) can be larger than, smaller than, or equal to \(\ell _{1}\).

Case 1: Growing axon

First, let us consider the case where \(\ell _{0}\) is smaller than \(\ell _{1}\). At the time step \(t_{0}\), the right boundary of the solution domain is at the position \(\ell _{0}\). In the next time step, the axon grows, such that the boundary is now at \(\ell _{1}\). Thus, the position at which the (right) boundary condition must be applied has changed. In terms of the wave digital model, this means that we must add a wave digital structure to the wave digital model every time the axon grows. Furthermore, we must shift the position of the right boundary to be at the new boundary coordinate, which comes after the newly introduced wave digital structure. Here, we have implicitly assumed the difference between the new axon length and the old axon length to be at most Z. Every time a wave digital structure is added, two new delays are introduced, see the right side of Fig. 5. The delays couple the wave digital model from the previous time step to the newly added wave digital structure. To initialize the delay that the models have in common (at the two consecutive time steps), we can simply use the wave reflected towards the right boundary at the previous time step. However, we have no way of initializing the other introduced delays, so that our wave digital model becomes incalculable. To deal with this problem, we suggest using an extrapolation technique to calculate the missing values, which is the topic of the next section.

Case 2: Shrinking axon

Now that we have covered the first case, let us discuss the second case, where the axon shrinks, i.e. \(\ell _{1} < \ell _{0}\). In this case, we can simply wipe the memory of all the wave digital structures representing the axon segments that come after \(\ell _{1}\). Now, we have a wave digital model that is fit to solve the PDE in the domain \([z_{0};\ell _{1}]\). Essentially, this corresponds to interpreting Fig. 5 in the reverse manner, i.e. the right side is the initial wave digital model and the left side is the wave digital model at the next time instant.

Case 3: Axon retains length

The third and final case is when the axon retains its length. This case is very trivial, as we can simply continue using the same wave digital model to solve the PDE on the same solution domain, until one of the other two cases comes into play.
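The three cases can be summarized in a short Python sketch. It is a minimal illustration under stated assumptions and not the implementation used in this work: the model is represented by a hypothetical list with one entry per wave digital structure, and a structure is added or removed only when the boundary crosses a grid point \(z_{k} = z_{0} + kZ\), which is an assumption of this sketch.

```python
import math


def cells_needed(length, z0, Z):
    """Number of grid points z_k = z0 + k*Z that lie inside [z0, length]."""
    return math.floor((length - z0) / Z) + 1


def resize_model(cells, old_length, new_length, z0, Z):
    """Adapt `cells` in place and return which of the three cases occurred."""
    if abs(new_length - old_length) > Z:
        raise ValueError("boundary moved by more than one spatial step Z")
    target = cells_needed(new_length, z0, Z)
    if target > len(cells):
        # Case 1: growth. The delays of the new structure still need initial values.
        cells.append({"initialized": False})
        return "grow"
    if target < len(cells):
        # Case 2: shrinkage. Discard the memory of structures beyond the boundary.
        del cells[target:]
        return "shrink"
    # Case 3: the model is reused unchanged.
    return "keep"


cells = [{"initialized": True} for _ in range(5)]   # grid points 0, 0.25, ..., 1.0
print(resize_model(cells, 1.0, 1.1, 0.0, 0.25), len(cells))
print(resize_model(cells, 1.1, 1.3, 0.0, 0.25), len(cells))
print(resize_model(cells, 1.3, 1.2, 0.0, 0.25), len(cells))
```

The entry flagged as uninitialized marks the structure whose delays must be filled by extrapolation before the next time step can be computed.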

Left: Representation of the wave digital model at a time instant \(t_{\mu }\). The multiports \(\mathcal {N}_{\mu }\) represent the compact wave digital models after removing the spatio-temporal delays. Right: Axon-growth takes place and a wave digital structure is added at the time instant \(t_{\mu +1}\). Adding a wave digital structure introduces three new delays. Furthermore, the right boundary \(\mathcal {N}_{\textrm{r}}\) moves and docks to the newly introduced wave digital structure at \(z_{k}\)

4.4 Extrapolation technique

Extrapolation techniques are useful for approximating unknown values from known values within the solution domain. In this section, we focus on a special type of extrapolation technique, namely linear multistep methods (LMS), cf. Fränken and Ochs (2002), and derive an LMS method for approximating the initial value of the newly introduced delays. These values depend on the tubulin concentration c(z, t) and the tubulin flux j(z, t). Thus, the initialization requires extrapolating the associated currents \(i_{1}\) and \(i_{2}\). In the following, we demonstrate the extrapolation for the missing current \(i_{1}\). The derived method can then be analogously used to extrapolate \(i_{2}\).

Let us consider the current \(i_{1}(z,t)\) associated with the tubulin concentration c(z, t) at some point \(z_{k}\). We can approximate the currents at the preceding coordinates \(i_{1}(z_{k-\nu }, t)\) by a Taylor series expansion, while using \(i_{1}(z_{k},t)\) as our pivot point:

Here, the notation \(\mathcal {D}_{z} c(z_{k},t)\) refers to the spatial derivative of c(z, t) evaluated at \(z_{k}\). Using \(z_{k-\nu } - z_{k} = -\nu Z\) and repeating this expansion for \(\nu = \{1,2,3\}\) yields the linear equation system:

Let us first consider the left side of the above equation. The coefficient matrix on the left-hand side is a so-called Vandermonde matrix. Its inverse can always be explicitly calculated. For \(t=t_{\mu }\), the vector on the right-hand side contains known current values from the solution domain. The vector of unknowns contains the boundary current \(i_{1}(z_{k},t)\) and its scaled spatial derivatives. To calculate the value of \(i_{1}(z_{k},t_{\mu })\), we can simply use the inverse of the coefficient matrix at every \(t_{\mu }\). Now, consider the right part of (28). Since we are primarily interested in calculating \(i_{1}(z_{k},t_{\mu })\), we only require the first row of the equation system, which reads:

Equation (30a) is a second-order accurate approximation for the value of \(i_{1}(z_{k},t_{\mu })\). This formula also applies when extrapolating the current \(i_{2}(z_{k},t_{\mu })\) associated with the tubulin flux. Using these two values, we can approximate the missing currents,

which we now associate with the new wave digital structure preceding the boundary, see right side of Fig. 5. Using the half-step approximation scheme (Hetmanczyk & Ochs, 2009, 2011) and the extrapolated currents, we are now able to initialize the new delays. We can approximate the internal states of the delay elements by knowing the corresponding currents half a step in the past, cf. Hetmanczyk and Ochs (2009), Hetmanczyk and Ochs (2011):

The extrapolated currents serve as an approximation for the unknown currents and prove to be sufficiently good for solving the considered PDE. Once the delays are initialized, the wave flow diagram must be recalculated before moving to the next time step. This means that two wave flow diagram iterations must be executed every time axon-growth takes place. This re-iteration is necessary to calculate the new value that is reflected by the right boundary, since axon-growth has caused it to shift its position to the right.
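The extrapolation step can be sketched in Python as follows. The sketch assumes the three-point expansion described above, in which row \(\nu \) of the Vandermonde system reads \(i_{1}(z_{k-\nu }) = x_{0} - \nu x_{1} + \nu ^{2} x_{2}\) with \(x_{0} = i_{1}(z_{k})\) and \(x_{1}\), \(x_{2}\) the scaled spatial derivatives. Under this assumption, the first row of the inverse yields \(i_{1}(z_{k}) \approx 3 i_{1}(z_{k-1}) - 3 i_{1}(z_{k-2}) + i_{1}(z_{k-3})\). The function names are hypothetical.

```python
from fractions import Fraction


def extrapolation_weights(n_points=3):
    """Weights w with i(z_k) ~ sum_v w[v-1] * i(z_{k-v}), v = 1..n_points.

    The weights form the first row of the inverse Vandermonde matrix V,
    where V[v][p] = (-v)**p. Equivalently, they solve V^T w = e0.
    """
    n = n_points
    # Augmented matrix [V^T | e0] in exact rational arithmetic
    rows = [[Fraction((-v) ** p) for v in range(1, n + 1)] + [Fraction(int(p == 0))]
            for p in range(n)]
    for col in range(n):                      # Gauss-Jordan elimination
        pivot_row = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot_row] = rows[pivot_row], rows[col]
        pivot = rows[col][col]
        rows[col] = [x / pivot for x in rows[col]]
        for r in range(n):
            if r != col:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    return [rows[r][n] for r in range(n)]


def extrapolate(known):
    """known = [i(z_{k-1}), i(z_{k-2}), ...], nearest grid point first."""
    return sum(w * x for w, x in zip(extrapolation_weights(len(known)), known))


weights = extrapolation_weights(3)            # equals [3, -3, 1]
# Samples of f(z) = 2 + 3z - z^2 at z = 3, 2, 1; the exact value f(4) is -2.
value = extrapolate([2, 4, 4])
```

Since the weights depend only on the number of points, they can be computed once before the simulation starts and reused whenever axon-growth takes place.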

At first glance, it may be appealing to use a higher order approximation to calculate the missing values at the boundary. In fact, calculating the inverse of the Vandermonde matrix would not be difficult even if we used all \(n-2\) points within the solution domain. Moreover, the inverse would only have to be calculated once at the beginning of the simulation, so it would not lead to any decrease in the algorithmic efficiency. However, one must not forget that the accuracy of the Taylor series expansion decreases the further we move from the pivot point. These inaccuracies are so large that even fourth-order approximations can lead to unstable solutions. Thus, we recommend the use of second-order or third-order approximation schemes when it comes to dynamic model expansion.
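A complementary way to see why higher orders are delicate is to inspect the extrapolation weights themselves. For equidistant grid points, the weights of the polynomial extrapolation are alternating binomial coefficients, so the sum of their magnitudes grows as \(2^{n}-1\) with the number of points n. The following Python sketch illustrates this observation; it is an addition to, and not a reproduction of, the Taylor series argument given above, and the function names are hypothetical.

```python
from math import comb


def binomial_weights(n_points):
    """Extrapolation weights for n_points equidistant samples, nearest first."""
    return [(-1) ** (v + 1) * comb(n_points, v) for v in range(1, n_points + 1)]


def noise_gain(n_points):
    """Worst-case amplification of errors contained in the known samples."""
    return sum(abs(w) for w in binomial_weights(n_points))


for n in (2, 3, 4, 5, 8):
    print(n, binomial_weights(n), noise_gain(n))
```

Any error in the known currents is thus amplified more strongly as additional points are included in the extrapolation.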

Finally, we would like to mention that our method only works well under the assumption made in Sect. 4.3, namely, that the difference between boundary position at two consecutive instants is maximally given by Z. In many physical problems, this assumption is justified and can be ensured by choosing the sampling period T in a way that allows the algorithm to closely capture the movement of the boundary. In case the boundary growth is rapid, such that the relative increase in the boundary position is more than Z, the only solution would be to modify the growth law such that the boundary grows at a slower rate. Otherwise, the extrapolation method would lead to large numerical errors possibly leading to inaccurate solutions.

5 Emulation results and discussion

In this section, we start by comparing our emulation results to the results of previous research (Graham et al., 2006). Here, we consider a growing axon with the initial length \(\ell _{0} = 1\,\textrm{mm}\). The biological parameters are listed in Table 1, from which the electrical and wave digital parameters can be calculated.

Emulation results for the biological parameters listed in Table 1. Top left: Axon length over time. Top right: Tubulin concentration at soma over time. Bottom left: Tubulin concentration at the axon tip over time. Bottom right: Steady-state tubulin concentration over space

In this scenario, the length of the axon is mainly determined by the production rate \(r_{\textrm{p}}\). Here, it was reported that the axon reaches a steady-state axon length of \(\ell _{\infty } \approx 35 \, \textrm{mm}\) for the given production rate. This results in a phenomenon, which is referred to as moderate growth, i.e. the steady-state axon length is moderately larger than its initial length. In Graham et al. (2006), the steady-state length was shown to be slightly under \(1 \, \textrm{mm}\). Furthermore, the peak axon length was approximately \(4 \, \textrm{mm}\). The steady-state concentrations at the two tips of the axon were shown to have the values

Our results depicted in Fig. 6 match those from Graham et al. (2006). The peak axon length and the steady-state length are the same. However, the tubulin concentration at the soma, which in our case amounts to \(c_{\textrm{s}}(\infty ) \approx 10.16 \, \upmu \textrm{mol}\), is \(0.004 \%\) smaller than in Graham et al. (2006). Furthermore, the characteristic behavior of the axon length, the tubulin concentration at the soma and axon tip, and the steady-state tubulin concentration are all the same.

5.1 Sensitivity analysis

Now, we exploit the wave digital concept to perform a simple sensitivity analysis, where we (independently) vary the diffusion constant \(\textrm{d}\), the active transportation velocity \(\textrm{v}_{\textrm{a}}\), and the tubulin loss time constant \(T_{l}\). This analysis is inspired by the sensitivity analysis performed in Graham et al. (2006). Its main purpose is to verify the validity of our model and compare its dynamical behavior to the parabolic model. Due to the absence of reference numerical data, we can only give a qualitative comparison between our results and the ones from literature.

Figure 7 depicts the results of our sensitivity analysis. Here, we examine the axon-growth dynamics for the three different growth scenarios: large (\(r_{\textrm{p}}=40\,\frac{1}{\textrm{m}}\)), moderate (\(r_{\textrm{p}}=20\,\frac{1}{\textrm{m}}\)), and small (\(r_{\textrm{p}}=2\,\frac{1}{\textrm{m}}\)). Each column depicts the results of varying the three different parameters for one growth scenario.

Large growth

The sensitivity analysis results for the large growth scenario are depicted in the first column of Fig. 7. Comparing these results to the ones from literature, we have found our results to be nearly identical. Some slight differences can be observed in the top plot, where we vary \(\textrm{d}\). In Graham et al. (2006), varying the diffusion constant has no effect on the curve characteristics or the steady-state length whatsoever. In our case, the convergence speed is affected, i.e. lowering the diffusion constant makes the axon length converge more slowly. This observation is reasonable, since we have replaced Fick’s law with Cattaneo’s law, which introduces a time derivative and can hence slow down the speed of convergence. Varying the other two parameters does not seem to lead to any deviations, just like in literature.

Moderate growth

The sensitivity analysis results for the moderate growth scenario are depicted in the middle column of Fig. 7. Comparing our results to the ones from literature, we can observe very large deviations in the transient behavior. While the curve characteristics of our results match the ones from literature, prominent points such as the peak value of the axon length are different. The greatest deviation can be observed in the upper plot, where we varied the diffusion constant \(\textrm{d}\). In Graham et al. (2006), varying the diffusion constant did not change the peak value of the axon length nor the convergence speed. However, it changed the steady-state length. In our results, we can see that varying the diffusion constant changes both the convergence behavior and the peak values of the axon length. In particular, reducing \(\textrm{d}\) seems to lead to fast convergence and lower peak values. The steady-state axon length, however, does indeed match the one from literature in all three cases. The other two results are much closer to the ones from literature. To be specific, both the transient behavior and the steady-state axon length are identical.

Small growth

The last set of results for the small growth scenario is depicted in the right column of Fig. 7. Overall, the results are very similar to the ones from literature. However, it is noteworthy to state that the hyperbolization seems to reduce the system’s oscillation tendency. In particular, the axon length does not seem to overshoot as much before converging to its steady-state value, when compared to the results in Graham et al. (2006).

Discussion

Overall, our sensitivity results closely match the ones from literature. In particular, our steady-state solutions are identical, which is to be expected, as we have shown the hyperbolization to not affect them. The largest discrepancies can be observed in the first row in Fig. 7, where we have varied the diffusion constant \(\textrm{d}\). All other results match the reference results, which have been qualitatively justified in Graham et al. (2006) by experimental data. We would like to mention that the authors had no access to these measurements, which is why a direct comparison was not possible. In the following, we discuss the discrepancies appearing in the first row of Fig. 7 from a physical and biological perspective.

The physical perspective. As stated in Sect. 3.1, hyperbolizing the PDE using Cattaneo’s law essentially corresponds to adding a sort of inertia, which, in our context, limits the velocity at which tubulin particles can move within the axon. The original (parabolic) model allows the tubulin particles to move at infinite velocities, which is unphysical. Looking at the results of Sect. 3.5.1, we see that the relaxation constant \(T_{\textrm{r}}\) contributes to setting a maximal propagation velocity of tubulin, see the definition of \(\textrm{v}_{\textrm{max}}\). Thus, we argue that our model serves as a better approximation of the real tubulin diffusion process, which occurs at the maximal velocity \(\textrm{v}_{\textrm{max}}\).

The biological perspective. If we briefly assume the results of the parabolic model to be correct, then this implies that varying the diffusivity does not influence the peak axon length for a constant tubulin production rate. However, the diffusivity of tubulin is what effectively determines the amount of particles reaching the axon tip per unit time. Therefore, the old set of results implies that the same amount of microtubules can reach the tip of the axon in the same amount of time, even when the diffusivity is decreased. This statement is not reasonable, since a lower diffusivity should also decrease the amount of microtubules reaching the axon tip per unit time. Thus, a lower diffusivity should also lead to a lower peak axon length according to the growth law (10e), which is something that can be observed in the first row of Fig. 7. Therefore, we argue that our model is more consistent with the modeled biological phenomenon.

A question that remains to be answered is how the relaxation time constant \(T_{\textrm{r}}\), introduced by our hyperbolization approach, is to be chosen relative to the growth time constant \(T_{\textrm{a}}\). In this work, we have chosen the former as \(T_{\textrm{r}}=1\,\textrm{s}\), which is in the same order as \(T_{\textrm{a}}\). Decreasing the relaxation time constant leads to results that are more similar to the ones from literature, which, according to our discussions, do not fully reflect the actual biological scenario. To fit the emulation results to actual measurements, this degree of freedom must be chosen appropriately, since it is directly correlated with the maximal velocity of the diffusion process. As this work is mainly concerned with the electrical modeling and wave digital emulation of the considered PDE, all discussions related to this topic are omitted.

6 Conclusion

In this work, we have dealt with the solution of moving boundary problems based on wave digital principles. Here, we first described the problem of axon-growth from a biological as well as a mathematical standpoint. The latter is given by a one-dimensional partial differential equation with a time-varying solution domain. Then, we derived an electrical model (reference circuit) for the partial differential equation from which we obtained the associated wave digital model. To deal with the moving boundary, we introduced the concept of dynamic wave digital models, which can adapt their size according to the solution domain. This concept was then used to solve the considered partial differential equation. Here, we showed a close resemblance between our results and those from previous research, where the mathematical model originates from.

In the future, we aim to extend this method to solve two- and three-dimensional problems. This work will serve as a basis for deriving novel methods for dealing with higher dimensional moving boundary problems. Furthermore, we aim to integrate the linear multistep methods used in this work into the wave digital concept in order to obtain a unitary wave digital method for dealing with general moving boundary problems.

References

Alexiades, V. (1993). Mathematical modeling of melting and freezing processes (1st ed.). Routledge.

Balemarthy, K., & Bass, S. C. (1995). General, linear boundary conditions in MD wave digital simulations. In 1995 IEEE international symposium on circuits and systems (ISCAS), vol. 1, pp. 73–76.

Bernardini, A., Werner, K. J., Smith, J. O., & Sarti, A. (2019). Generalized wave digital filter realizations of arbitrary reciprocal connection networks. IEEE Transactions on Circuits and Systems I: Regular Papers, 66(2), 694–707. https://doi.org/10.1109/TCSI.2018.2867508

Bernardini, A., Maffezzoni, P., & Sarti, A. (2021). Vector wave digital filters and their application to circuits with two-port elements. IEEE Transactions on Circuits and Systems I: Regular Papers, 68(3), 1269–1282. https://doi.org/10.1109/TCSI.2020.3044002

Bilbao, S. (2004). 3. In Multidimensional wave digital networks. Wiley; pp. 53–113. Available from: https://onlinelibrary.wiley.com/doi/abs/10.1002/0470870192.ch3.

Bose, N. K., & Fettweis, A. (2004). Skew symmetry and orthogonality in the equivalent representation problem of a time-varying multiport inductor. IEEE Transactions on Circuits and Systems I: Regular Papers, 51(7), 1321–1329. https://doi.org/10.1109/TCSI.2004.830687

Bright, T. J., & Zhang, Z. M. (2009). Common misperceptions of the hyperbolic heat equation. Journal of Thermophysics and Heat Transfer, 23(3), 601–607. https://doi.org/10.2514/1.39301

Bullock, T. H., Bennett, M. V. L., Johnston, D., Josephson, R. K., Marder, E., & Fields, R. D. (2005). The neuron doctrine, redux. Science, 310, 791–793.

Cattaneo, C. (1958). Sur une forme de l’équation de la chaleur éliminant le paradoxe d’une propagation instantanée. Comptes Rendus Academie des Sciences Paris, 247(4), 431–433.

Chester, M. (1963). Second sound in solids. Physical Review, 131, 2013–2015. https://doi.org/10.1103/PhysRev.131.2013

Dahlquist, G. G. (1963). A special stability problem for linear multistep methods. BIT Numerical Mathematics, 3(1), 27–43. https://doi.org/10.1007/BF01963532

Erbar, M., & Horneber, E. H. (1995). Models for transmission lines with connecting transistors based on wave digital filters. International Journal of Circuit Theory and Applications, 23(4), 395–412. https://doi.org/10.1002/cta.4490230412

Fettweis, A. & Basu, S. (2015). Modelling of multidimensional (MD) heat diffusion via the Kirchhoff paradigm. In 2015 IEEE international symposium on circuits and systems (ISCAS); pp. 2373–2376.

Fettweis, A. (1991). The role of passivity and losslessness in multidimensional digital signal processing-new challenges. In 1991 IEEE international symposium on circuits and systems, vol. 1; pp. 112–115.

Fettweis, A. (1992). Multidimensional wave digital filters for discrete-time modelling of Maxwell’s equations. International Journal of Numerical Modelling: Electronic Networks, Devices and Fields, 5(3), 183–201. https://doi.org/10.1002/jnm.1660050307

Fettweis, A. (1994). Multidimensional wave-digital principles: From filtering to numerical integration. In Proceedings of ICASSP ’94 IEEE international conference on acoustics, speech and signal processing, vol. 6, vi:VI/173–VI/181.

Fettweis, A. (2002). Improved wave-digital approach to numerically integrating the PDES of fluid dynamics. In 2002 IEEE International symposium on circuits and systems. Proceedings (Cat. No.02CH37353). vol. 3; p. III.

Fettweis, A. (1986). Wave digital filters: Theory and practice. Proceedings of the IEEE, 74(2), 270–327.

Fettweis, A. (2006). Robust numerical integration using wave-digital concepts. Multidimensional Systems and Signal Processing, 17(1), 7–25. https://doi.org/10.1007/s11045-005-6236-3

Fettweis, A., & Basu, S. (2011). Multidimensional causality and passivity of linear and nonlinear systems arising from physics. Multidimensional Systems and Signal Processing, 22(1), 5–25. https://doi.org/10.1007/s11045-010-0135-y

Fettweis, A., & Nitsche, G. (1991). Numerical integration of partial differential equations using principles of multidimensional wave digital filters. Journal of VLSI Signal Processing Systems for Signal, Image and Video Technology, 3(1–2), 7–24.

Fettweis, A., & Nitsche, G. (1991). Transformation approach to numerically integrating PDEs by means of WDF principles. Multidimensional Systems and Signal Processing, 2(2), 127–159. https://doi.org/10.1007/BF01938221

Fränken, D., & Ochs, K. (2002). Improving wave digital simulation by extrapolation techniques. AEU - International Journal of Electronics and Communications, 56(5), 327–336.

Galbraith, J. A., Reese, T. S., Schlief, M. L., & Gallant, P. E. (1999). Slow transport of unpolymerized tubulin and polymerized neurofilament in the squid giant axon. Proceedings of the National Academy of Sciences, 96(20), 11589–11594.

Gómez, H., Colominas, I., Navarrina, F., & Casteleiro, M. (2007). A finite element formulation for a convection-diffusion equation based on Cattaneo’s law. Computer Methods in Applied Mechanics and Engineering, 196(9), 1757–1766. https://doi.org/10.1016/j.cma.2006.09.016

Gómez, H., Colominas, I., Navarrina, F., París, J., & Casteleiro, M. (2010). A hyperbolic theory for advection-diffusion problems: Mathematical foundations and numerical modeling. Archives of Computational Methods in Engineering, 17, 191–211.

Graham, B. P., Lauchlan, K., & McLean, D. R. (2006). Dynamics of outgrowth in a continuum model of neurite elongation. Journal of Computational Neuroscience, 20(1), 43.

Hairer, E., & Wanner, G. (1991). Stiff problems: One-step methods. In Solving ordinary differential equations II (pp. 1–254). Berlin, Heidelberg: Springer.

Hemetsberger, G., & Hellfajer, R. (1994). Approach to simulating acoustics in supersonic flow by means of multidimensional vector-WDFs. In 1994 IEEE International Symposium on Circuits and Systems (ISCAS), vol. 5; pp. 73–76.

Hetmanczyk, G., & Ochs, K. (2009). Initialization of linear multistep methods in multidimensional wave digital models. In 2009 52nd IEEE international midwest symposium on circuits and systems; pp. 786–789.

Hetmanczyk, G., & Ochs, K. (2011). A practical guide to multidimensional wave digital models using the example of fluid dynamics. International Journal of Numerical Modelling: Electronic Networks, Devices and Fields, 24, 154–174. https://doi.org/10.1002/jnm.768

Higuchi, T. (1963). Mechanism of sustained-action medication. Theoretical analysis of rate of release of solid drugs dispersed in solid matrices. Journal of Pharmaceutical Sciences, 52(12), 1145–1149. https://doi.org/10.1002/jps.2600521210

Jenderny, S., & Ochs, K. (2022). Wave digital emulation of a bio-inspired circuit for axon growth. In 2022 IEEE Biomedical Circuits and Systems Conference (BioCAS); pp. 260–264.

Lawson, S. S., & Guzman, J. G. (2001). On the modelling of the 2D wave equation using multidimensional wave digital filters. In ISCAS 2001. The 2001 IEEE international symposium on circuits and systems (Cat. No.01CH37196), vol. 2; pp. 377–380.

Lee, P. I. (2011). Modeling of drug release from matrix systems involving moving boundaries: Approximate analytical solutions. International Journal of Pharmaceutics, 418(1), 18–27. https://doi.org/10.1016/j.ijpharm.2011.01.019

Leuer, C., & Ochs, K. (2009). Systematic derivation of reference circuits for wave digital modeling of passive linear partial differential equations. In 2009 52nd IEEE international midwest symposium on circuits and systems; pp. 782–785.

Leuer, C., & Ochs, K. (2012). On systematic wave digital modeling of passive hyperbolic partial differential equations. International Journal of Circuit Theory and Applications, 40(7), 709–731. https://doi.org/10.1002/cta.752

Lindell, I. (2005). Electromagnetic wave equation in differential-form representation. Progress in Electromagnetics Research, 54, 321–333. https://doi.org/10.2528/PIER05021002

Luhmann, K., & Ochs, K. (2006). A novel interpretation of the hyperbolization method used to solve the parabolic neutron diffusion equations by means of the wave digital concept. International Journal of Numerical Modelling: Electronic Networks, Devices and Fields, 19, 345–364.

McLean, D. R., & Graham, B. P. (2004). Mathematical formulation and analysis of a continuum model for tubulin-driven neurite elongation. Proceedings of the Royal Society of London Series A, 460(2048), 2437–2456.

Meerkötter, K. (2018). On the passivity of wave digital networks. IEEE Circuits and Systems Magazine, 18(4), 40–57. https://doi.org/10.1109/MCAS.2018.2872664

Michaelis, D., Ochs, K., Beattie, B. A., & Jenderny, S. (2022). Towards a self-organizing neuronal network based on guided axon-growth. In 2022 IEEE 65th international midwest symposium on circuits and systems (MWSCAS); pp. 1–4.

Miller, K. E., & Samuels, D. C. (1997). The axon as a metabolic compartment: Protein degradation, transport, and maximum length of an axon. Journal of Theoretical Biology, 186(3), 373–379.

Morrison, E., Moncur, P., & Askham, J. (2002). EB1 identifies sites of microtubule extension during neurite formation. Brain Research. Molecular Brain Research, 98, 145–152.

Ochs, K. (2001). Passive integration methods: Fundamental theory. AEU - International Journal of Electronics and Communications, 55(3), 153–163. https://doi.org/10.1078/1434-8411-00024

Ochs, K. (2012). Theorie zeitvarianter linearer Übertragungssysteme. Aachen: Shaker Verlag.

Ochs, K., Michaelis, D., & Jenderny, S. (2021). Synthesis of an equivalent circuit for spike-timing-dependent axon growth: What fires together now really wires together. IEEE Transactions on Circuits and Systems I: Regular Papers, 68(9), 3656–3667. https://doi.org/10.1109/TCSI.2021.3093172

O’Connor, W. (2005). Wave and scattering methods for numerical simulation, Stefan Bilbao, Wiley, Chichester, UK, 2004, p. 380, £75 (Hardback), ISBN: 0-470-87017-6: Book reviews. International Journal of Numerical Modelling: Electronic Networks, Devices and Fields, 18(4), 325.

Olsen, M. J., Werner, K. J., & Germain, F. G. (2017). Network variable preserving step-size control in wave digital filters.

Paul, A., Laurila, T., Vuorinen, V., & Divinski, S. (2014). Fick’s laws of diffusion. In Thermodynamics, diffusion and the Kirkendall effect in solids (pp. 115–139). Springer.

Rubin, M. B. (1992). Hyperbolic heat conduction and the second law. International Journal of Engineering Science, 30(11), 1665–1676. https://doi.org/10.1016/0020-7225(92)90134-3

Sayas, C., Avila, J., & Wandosell, F. (2002). Regulation of neuronal cytoskeleton by lysophosphatidic acid: Role of GSK-3. Biochimica et Biophysica Acta, 1582, 144–153.

Singh Muralidhar, B. K., Ashkrizzadeh, R., Kohlstedt, H., Petraru, A., & Rieger, R. (2022). A pressure-sensitive oscillator for neuromorphic applications. In 2022 IEEE biomedical circuits and systems conference (BioCAS); pp. 345–348.

Stefan, J. (1889). Über einige Probleme der Theorie der Wärmeleitung. Sitzungsber. Wien. Akad. Mat. Natur., 98, 473–484.

Tao, L. C. (1967). Generalized numerical solutions of freezing a saturated liquid in cylinders and spheres. AIChE Journal, 13(1), 165–169. https://doi.org/10.1002/aic.690130130

Tao, L. N. (1986). A method for solving moving boundary problems. SIAM Journal on Applied Mathematics, 46(2), 254–264. https://doi.org/10.1137/0146018

Vaidya, N., Deshpande, A., & Pidurkar, S. (2021). Solution of heat equation (Partial Differential Equation) by various methods. Journal of Physics: Conference Series, 1913(1), 012144. https://doi.org/10.1088/1742-6596/1913/1/012144

Vollmer, M. (2004). An approach to automatic generation of wave digital structures from PDEs. In 2004 IEEE International symposium on circuits and systems (IEEE Cat. No. 04CH37512), vol. 3; pp. III–245.

Vollmer, M. (2005). Automatic generation of wave digital structures for numerically integrating linear symmetric hyperbolic PDEs. Multidimensional Systems and Signal Processing, 16(4), 369–396. https://doi.org/10.1007/s11045-005-4125-4

Wang, J., Yu, W., Baas, P. W., & Black, M. M. (1996). Microtubule assembly in growing dendrites. Journal of Neuroscience, 16(19), 6065–6078.

Werner, K. J., Nangia, V., Smith, J. O., & Abel, J. S. (2015). A general and explicit formulation for wave digital filters with multiple/multiport nonlinearities and complicated topologies. In 2015 IEEE workshop on applications of signal processing to audio and acoustics (WASPAA); pp. 1–5.

Zerroukat, M., & Chatwin, C. (1997). Computational moving boundary problem. Journal of Fluid Mechanics, 343, 407.

Zhou, H., Lu, T., Zhang, S., & Zhang, X. (2021). Lumped-circuits model of lossless transmission lines and its numerical characteristics. Frontiers in Energy Research, 9. https://doi.org/10.3389/fenrg.2021.809434

Acknowledgements

This work was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) - Project-ID 434434223 - SFB 1461.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

Bakr Al Beattie prepared all figures and wrote the manuscript. The second author supervised the writing process. The manuscript was reviewed by both authors.

Ethics declarations

Financial or non-financial interests

The authors have no relevant financial or non-financial interests to disclose.

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Al Beattie, B., Ochs, K. Solving a one-dimensional moving boundary problem based on wave digital principles. Multidim Syst Sign Process 34, 703–730 (2023). https://doi.org/10.1007/s11045-023-00881-z

DOI: https://doi.org/10.1007/s11045-023-00881-z