Abstract

Multilevel image thresholding using Expectation Maximization (EM) is an efficient method for image segmentation. However, it has two weaknesses: 1) EM is a greedy algorithm and cannot jump out of local optima. 2) it cannot guarantee the number of required classes while estimating the histogram by Gaussian Mixture Models (GMM). in this paper, to overcome these shortages, a novel thresholding approach by combining EM and Salp Swarm Algorithm (SSA) is developed. SSA suggests potential points to the EM algorithm to fly to a better position. Moreover, a new mechanism is considered to maintain the number of desired clusters. Twenty-four medical test images are selected and examined by standard metrics such as PSNR and FSIM. The proposed method is compared with the traditional EM algorithm, and an average improvement of 5.27% in PSNR values and 2.01% in FSIM values were recorded. Also, the proposed approach is compared with four existing segmentation techniques by using CT scan images that Qatar University has collected. Experimental results depict that the proposed method obtains the first rank in terms of PSNR and the second rank in terms of FSIM. It has been observed that the proposed technique performs better performance in the segmentation result compared to other considered state-of-the-art methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Image processing plays a critical role in digital applications like damage detection and visual recognition. Image segmentation is the first step of image analysis which separates an image into several regions with similar properties. Several segmentation approaches have been suggested in the literature, such as edge-based [15, 19, 26, 44, 47], region-based [12, 13, 22, 41, 46], deep-learning [21, 38], and histogram thresholding [8, 35].

Edge-based segmentation methods try to distinguish between areas according to boundary localization. In this method, edges are detected by rapid changes in the gray level in adjacent regions. Edge detection techniques are useful for images with high-contrast areas where regions are clearly separated. In other cases, this method generates ill-defined and discontinuous edges which do not lead to closed curves. Moreover, it is not immune to noise and produces worse results compared to other segmentation techniques. Several studies addressed the edge detection issue in literature [11, 17, 24, 27, 42]. Tchinda et al. proposed a novel segmentation of blood vessels based on edge detection techniques in retina images [31]. Baltierra et al. used the Ant Colony algorithm to identify edges in noisy images [5].

Region-based segmentation methods classify pixels with similar properties, such as intensity and neighborhood. There are two categories for region-based techniques: region growing and region splitting. In the region-growing method, a pixel is considered a seed point, and neighbor pixels with similar intensity join the seed point. In region splitting, pixels would be divided into two groups if they do not have sufficient segmentation properties. Region-based methods are noise-robust and have good results with homogeneous images. However, they are both expensive in the memory usage and execution time.

Moreover, the segmented image is not unique because the output depends on initial points, which are known as seed points. In recent years, several applicable methods have been reported for region-based segmentation such as document images [37], medical images [10, 25, 40, 43], and farm images [20]. Guo et al. suggested a region-growing framework based on a multi-information fusion network with good results on chest CT scans [14]. Chauhan and Goyal considered color burn images and introduced a region segmentation algorithm with 93.4% of accuracy [7].

Artificial Neural Network (ANN) simulates human-brain interactions and is known as deep learning. Recently, various type of deep-learning segmentation methods has been developed in the literature [34]. ANN learns and adjusts weights and can segment images by training. The sequence is simple, and the network works in parallel mode. However, the training procedure is lengthy, and initialization affects the output image.

Moreover, overfit may occur during the training set. Different methods have been developed for medical images to apply segmentation using the ANN method. Huang et al. introduced novel deep learning for medical data segmentation to reduce high dimensional complexity [18]. In another research, different models of deep-learning networks were compared on Colorectal cancer images [16].

Thresholding is a simple and fast segmentation method that does not need prior knowledge about images. Thresholding-based segmentation methods are used to extract objects from the background by assigning pixel intensities to various levels. Similar to other segmentation methods, thresholding techniques have disadvantages too. It does not yield acceptable results on images with flat histograms. Also, the thresholding method does not consider spatial details, and output regions will not be contiguous. Recently, various thresholding methods have been reported that use a meta-heuristic algorithm to find the best solutions, such as cuckoo search [6], whale optimization [1, 3], and Particle Swarm Optimization (PSO) [45].

This paper presents a novel segmentation method based on EM (Expectation Maximization) and GMM (Gaussian Mixture Model). The significant contributions of this study are summarized as follows:

-

A novel approach is proposed to correct image segmentation based on EM. Expectation Maximization tries to estimate a histogram by GMM. In this procedure, some gaussian distributions may be covered and have no chance of being selected as a threshold level. In this case, the number of classes is reduced and desired threshold levels cannot be obtained. To overcome this drawback, a mechanism is considered in this study to maintain the number of desired levels.

-

The EM algorithm is sensitive to initial points. The poor starting points results in trapping the algorithm in local optima, and premature convergence will occur. To mitigate this shortage, a nature-inspired algorithm, namely Salp Swarm Algorithm (SSA) is used to help EM jump out of local areas.

This study is organized as follows: in Section 2, the theory of multilevel thresholding and expectation Maximization based on the Gaussian Mixture Model is reviewed and Salp Swarm Algorithm is described briefly, and necessary formulas are given. Section 3 refers to the proposed algorithm that combines the EM algorithm with SSA to achieve better performance. Section 4 compares the proposed algorithm to the traditional EM algorithm and four state-of-art algorithms in image segmentation. The experimental results with evaluation criteria are presented in this section. Finally, discussions are concluded in Section 5, emphasizing the efficiency of the proposed algorithm for medical image segmentation.

2 Basic theory

In this section, the definition of the thresholding problem is clarified. Then, the necessary information on Expectation Maximization (EM) is discussed, and the concept of the Salp Swarm Algorithm is briefly explained.

2.1 Multilevel thresholding

Image thresholding is often considered one of the most challenging and intriguing segmentation techniques. Consider an image I of size m × n with L distinct gray levels. Consider an image I of size m × n with L distinct gray-levels. The major purpose of thresholding is to determine a threshold vector T = [t1, …, tK − 1] for an image I to separate its pixels into K groups. Each group can be defined by

where Gk is kth defined group and (x, y) indicates the location of the pixel in the image I with gray level intensity f(x, y) between 0 and L − 1. The threshold vector T can be determined by a thresholding criterion like Otsu between classes [30]. However, this is not a proper segmentation metric. In 2011, Xu et al. proved that Otsu threshold biases toward the group which has a larger variance value [39]. Another way to segment an image is EM algorithm based on GMM which is described as follows.

2.2 Expectation maximization

An Expectation-Maximization algorithm is an approach for performing maximum likelihood estimation in the presence of latent variables. It does this by first estimating the values for the latent variables, then optimizing the model, then repeating these two steps until convergence. It is an effective and general approach and is most commonly used for density estimation with missing data, such as clustering algorithms like the Gaussian Mixture Model. Assume there are N samples drawn independently from D dimensional space and denoted byX [4]

The glossary of terms and parameters with their definitions are presented in Table 1.

To decompose a histogram of an image, Gaussian Mixture Model is used which is formulated as

In this formula, N is Gaussian function and μk, Σk, πk indicate mean, variance and coefficient of N. The purpose is to estimate μk, Σk and πk which maximize the likelihood function:

where

To reduce the complexity of formulas, log-likelihood of Eq. (4) is used as

However, this problem cannot be solved. Because the labels of observation are not available. To reduce the complexity of the problem, we define a label variable as

All zn elements are 0 expect one element. zn shows the class of xn.

Probability of zn is calculated as

The log-likelihood function is formulated as

To solve the problem, γ is defined as the probability of assigning a sample to the specific cluster

derivation with respect to μ and Σ, leads to obtain optimum values

πk can be identifed by lagrange multiplier

However, we cannot find a closed-form answer for this problem. EM algorithm is used to estimate unknown parameters which its pseudo code is shown in Fig. 1.

1- initialize parameters μk, Σk and πk.

2- E-step: compute probability of assigning a data point to a cluster

3- M-step: update GMM parameters based on calculated γ(znk)

4- check the convergence. If the log-likelihood converges, the proceture will terminate

The process can be described as follows: First, we select some initial values for the means and mixing coefficients (μk, Σk and πk). Then, we alternate between the following two updates called the E (expectation) step and the M (maximization) step. In the expectation step, the current values for the model parameters are used to compute the posterior probabilities γ(znk). In the maximization step, the responsibilities are used to estimate the model parameters (e.g., means and mixing coefficients). Finally, the log-likelihood is computed and checked for convergence.

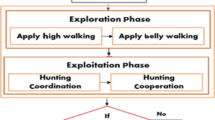

2.3 Salp swarm algorithm

Salp Swarm Algorithm (SSA) was suggested by Mirjalili et al. for solving complicated problems based on the behavior of living salps at sea [28]. Assume population G is randomly generated, and the good source is denoted as F. The group tries to find better food sources at the sea. The location of the leader is changed according to the following formula:

Where \({\boldsymbol{Gf}}_j^1\) is the leader of the group (first individual of the group), j is referred to j-th dimension of the space and Fj is denoted as the food location. q2 and q3 are drawn from the uniform distribution. q1adjusts the exploration ability by following equation:

Where iter means the current iteration and the maximum number of iterations is denoted as max _ iter. The location of follower members is changed based on the following equation

Where \({\boldsymbol{Gf}}_j^i\) indicates the position of i-th agent (i ≥ 2) in current iteration j. SSA has two advantages over other metaheuristic algorithms: high convergence and escaping from local areas. SSA performs a smooth balance between exploration and exploitation to track the global optimum as well as faster convergence. Also, following the leader and random walking helps the algorithm not to trap in the local points [2].

3 The proposed algorithm

EM algorithm based on GMM is a powerful and fast method in image segmentation. This algorithm is formulated according to Bayes’ theorem. EM estimates a histogram with a mixture of Gaussian functions. However, it cannot guarantee the number of pre-defined classes. For example, Fig. 2a traces a histogram of a grayscale image with Gaussian mixture functions, which has been estimated by the EM algorithm. EM decomposes the histogram into three Gaussian functions properly. However, Gaussian function two is covered by Gaussian function one and Gaussian function three. It means that the second class has no chance of winning in the segmentation competition. In this case, we have only two classes (Gaussian function one and Gaussian function two) instead of three classes. To overcome this shortage, a mechanism is considered in this paper. Assume that the desired number of segmentation regions is K and we want to segment the image into these K classes. In the process, M classes are covered and the image is segmented into K − M groups. To compensate for this reduction, M classes are created according to M classes which have been covered by other Gaussian functions. Fig. 2b shows a covered Gaussian function. In this situation, a new class is created with μi ± dist range of intensity where μi indicates the mean value of i-th covered gaussian function and dist is distance from μi which identifies the intensity limitation of the new class. It means that every pixel with intensity between μi ± dist is assigned to this class. This technique recovers missed classes and improves the quality of the segmented image.

The second drawback of the EM algorithm is the convergence problem. EM is a greedy algorithm and converges to the nearest optimum point. No mechanism is considered in this algorithm to jump out of the local areas. For example, Fig. 3-a shows the Log-Likelihood changes over a tuning parameter. This parameter can be a mean, variance, or Gaussian coefficient. EM algorithm tries to climb up the curve to reach the top of the local points (P1, P2 and P3) and no mechanism is considered in the algorithm to escape from local areas. Therefore, the Log-Likelihood always has a saturation curve. We addressed this shortage by a mechanism in this paper. The EM algorithm is equipped with a natural-inspired algorithm, namely Salp Swarm Algorithm (SSA). SSA helps the EM algorithm to jump out of local areas and find a better solution. For example, in every five iterations, SSA searches GMM parameters (means, variances, and coefficients) and introduces a suggestion point to EM. EM algorithm evaluates the suggested solution. If the suggested solution has a better Log-likelihood, the EM algorithm jumps to the suggested solution and continues the convergence from this point. SSA finds an optimum solution according to the RMSE fitness function, which is defined as follows:

Where L indicates the number of intensity levels, Fig. 3b shows a convergence process where SSA finds a better solution and introduces it to the EM algorithm (green point). In this example, EM considers the green point as the initial point and continues calculations from this point.

The pseudo-code and flowchart of the proposed method are shown in Fig. 4 and Fig. 5, respectively. The algorithm starts with GMM parameters initialization (μk, Σk and πk). In every iteration, new means, variances, and coefficients are calculated (\({\mu}_k^{new}\), \({\Sigma}_k^{new}\) and \({\pi}_k^{new}\)). If EM goes to the saturation area, the algorithm will be terminated. In every five iterations, SSA is handled and finds a local solution in the search space. First, μk, Σk and πk are initialized and SSA tries to update the location of search agancies by Eq. (19). If the termination criterion is satisfied then SSA finishes and the best soution which is known as \({\boldsymbol{Gf}}_j^1\) is fed into EM algorithm. If this point has a higher Log-Likelihood than the current point, EM considers the suggested solution as the initial point and continues the convergence process from this point. Else, the iteration increases, and the EM algorithm calculates new GMM parameters.

4 Results and discussions

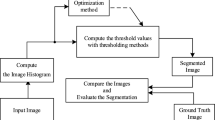

This section considers some practical experiments on sets of medical grayscale images. PSNR and FSIM, as two evaluating metrics, are employed in this paper to show the superiority of the proposed method. PSNR is used to measure the robustness of segmenting algorithms and is

Where MSE is expressed as

Where A and B indicate the original and segmented image, respectively, and M and N are the size of images. FSIM is Feature Similarity Index and is formulated as [23]

Where Ω is the entire image, SL(x) refers to similarity between the segmented image and the original image, and PCm(x) shows the phase consistency. The higher value of PSNR or FSIM means better segmentation quality. Similar to other optimization problems, setting the parameters is the first step to solve the problem. Table 2 indicates setting parameters to run EM algorithm and the proposed method. Each algorithm is implemented in MATLAB language environment with 2.7 GHz CPU and 8 GHz RAM memory.

A set of 16 medical lung images are utilized to measure the performance of the introduced algorithm and are shown in Fig. 6. These images are selected from the COVID-19 Radiography Database [36]. A team of researchers from Qatar University has created this database of chest X-ray images for COVID-19-positive cases, along with Normal and Viral Pneumonia images.

Fig. 7 shows the histograms of Fig. 6 images estimated by the EM algorithm and the introduced method. In this simulation, the aim is to decompose the histogram into ten classes. In other words, the algorithms look for GMM parameters that perfectly fit the histogram. An actual image has a discontinuous histogram which Gaussian Mixtures cannot cover with soft changes. So, the error of estimation is inevitable.

Table 3 lists the number of missed classes covered in the EM algorithm. k indicates the number of desired classes. For example, for image 1, we want to segment the histogram into 15 groups (k=15). However, 4 Gaussian functions are covered by the others, and we get only 11 classes. In the proposed algorithm, the image is segmented into 15 classes. Because the covered functions are detected, and a range of intensity is assigned to them. In this paper, dist=4 is considered for an intensity range (look at a covered Gaussian function presented in Fig. 2). Generally, the probability of missed classes is increased with an increasing number of segmentation classes.

Table 4 lists PSNR values of segmented test images by the EM algorithm and the proposed algorithm. Better results are presented in boldface. From obtained PSNR values mentioned in Table 4, we find out the proposed method has a better performance in 41 cases out of 48 cases, while the EM algorithm performs better in only 7 cases. If an image is segmented into 255 levels, the output image will be similar to the original image and PSNR will be infinitive. Generally, with an increasing number of segmentation parts, PSNR values will also be increased. Consequently, when some Gaussian functions are covered in the EM algorithm, and the number of segmentation parts reduces, the PSNR metric is also degraded.

Moreover, FSIM values are calculated for test image segmentation. The results are depicted in Table 5. The higher values are presented in boldface. It can be seen from this table that the proposed method scores better results in most cases. Generally, higher segmentation numbers mean less difference between the original and output images and a higher FSIM metric. According to Table 3, the EM algorithm yields the missed classes in most cases, which is the main reason the EM algorithm cannot score better FSIM values.

In order to visually compare the performance of the introduced method and the EM algorithm, segmented test images with k = 20 are shown in Fig. 8. As can be seen from the segmented images, it is clear that the proposed algorithm is superior to the EM algorithm and includes more details. This is because the higher level of segmentation yields smoother results and less difference between the input image and the segmented image. For example, in Test 6, the proposed algorithm’s segmented image has more details than the EM algorithm. In some cases, such as Test 2, the EM algorithm has rough results because of missed classes.

Fig. 9 presents convergence curves of test images to improve understanding of the studied algorithms’ segmentation performance. SSA equips the proposed algorithm to jump out of local optima, while the EM algorithm does not have this mechanism. As told in Section 3, in every five iterations, SSA starts and finds a candidate solution in the search space and introduces it to the EM algorithm. If the candidate solution has a higher Log-likelihood, the EM algorithm considers it the initial solution, jumps to this point, and continues the convergence process. In some cases, such as Test 1, Test 2, Test 10, and Test 13, the proposed solution has a better Log-likelihood value. Hence, the EM algorithm interrupts the previous solution and starts the process from these points. So-called test images have discontinuous convergence curves depicted with red circles in Fig. 9. With an increasing number of iterations, the probability of finding a better solution is reduced. Because the EM algorithm climbs up to reach the top of the local optimum points and in this case, the chance of finding a solution with an upper location compared to local optima will be reduced. Hence, in most cases, such as Test 1, Test 10, and Test 13, the break-point occurred at the beginning of the search process.

In order to understand the speed of the proposed algorithm, the execution time of studied methods is calculated and presented in Fig. 10 for different test images. As seen in this figure, the computational time of the proposed algorithm is higher than the EM algorithm, which is the disadvantage of the introduced method. Although SSA is a fast algorithm, its computational time is added to the total execution time. Two techniques are considered in the proposed method to optimize the running time:

-

a)

the fitness function is defined as Eq. (20) instead of Log-likelihood, a less complicated formula.

-

b)

SSA starts every five iterations instead of every iteration, saving the total time.

As discussed, most jumping points in convergence curves occur at the beginning of the process. The execution of SSA can be limited to the first 50 iterations of a run to save more computational time.

In order to compare the ability of the proposed algorithms, four recent segmenting algorithms namely ECSO [35], FCS [6], BOA [33], and EMA [32] are implemented on medical images shown in Fig. 11 to prove the superiority of the proposed method. This dataset contains 1000 CT scans of patients diagnosed with COVID-19 and segmentations of lung infections made by experts [29]. This dataset aims to encourage the research and development of effective and innovative methods to identify if COVID-19 infects a person through the analysis of his/her CT scans.

FSIM and PSNR are selected to compare the efficiency of different methods and the accuracy of the obtained solutions. In order to have an identical condition, the initial members of searching methods were selected from a uniform distribution between [0, 255]. The number of iterations was set to 100, and the population size was assigned to 200 for all algorithms to obtain a fair comparison between searching algorithms. Other adjusting parameters are set according to the reference papers as listed in Table 6. The goal is to segment the image into ten classes. The segmented images are shown in Fig. 12.

It is evident from these figures that the FCS algorithm gets blurred results, and ESCO gets better than the FCS algorithm. However, these two algorithms are less competitive than other algorithms. Also, the BOA algorithm yields over-segmentation results in some cases, such as in Image 5. These figures show that the EMA algorithm and the proposed method can better segment different images. However, the main limitation of these methods is their sensitivity to noise.

In addition, PSNR values of output images are computed to show a better perception of the segmentation quality, and the results are listed in Table 7. The highest values are marked in boldface. These results show that the proposed algorithm has better PSNR values than other compared algorithms because it obtains the best results in 4 out of 8 cases.

Table 8 shows the FSIM values extracted from test images. These results indicate that BOA obtains the first rank because it performs best in 3 out of 8 cases. The proposed method comes in the second rank with 2 cases, and other algorithms have only one.

For further analysis, the CPU time results for each algorithm are recorded and shown in Fig. 13. From this figure, the EMA algorithm achieves the best results. BOA obtains the second rank followed by the FCS algorithm; it is ranked third. In contrast, the proposed method is considered the slowest algorithm in the experiments.

Another experiment is done on studied algorithms to show the fair comparison between algorithms, which is the Wilcoxon test [9]. This statistical test is based on two hypotheses. The null hypothesis is described as: there is no significant difference between two algorithms where the alternative hypothesis proves a difference. p − values are used to reject the null hypothesis. If p − value<0.05, it indicates the significant difference between the two groups of the results. Table 9 lists the calculated p − values of the Wilcoxon signed-rank test. Values greater than 0.05 are shown in bold. According to obtained results, it could be seen that p-values are less than 0.05 for 30 out of 32 cases. In other words, the superiority of the proposed method is proven in the Wilcoxon rank test.

5 Conclusion

This work suggests an improved segmentation method based on the EM algorithm. In order to overcome shortages of the EM algorithm in image segmentation, two mechanisms are considered: First, covered Gaussian functions are detected, and a class is assigned to each function to compensate for missed classes. Second, SSA is applied to find new solutions. Perhaps these candidate solutions have better fitness functions. In this case, the EM algorithm terminates the previous answer and starts the process from these points. This helps the algorithm to jump out of local areas. Twenty-four medical images are selected to show the performance of the proposed algorithm. By comparing the EM algorithm and the proposed method, obtained results on segmentation metrics such as PSNR and FSIM clearly prove the higher accuracy of the proposed method. Also, convergence curve results demonstrate that the proposed algorithm can fly away from local optima with SSA suggestion points. The Wilcoxon rank test confirms the meaningful superiority of the proposed algorithm. The only disadvantage of the introduced method is the execution time which can be improved in future work by employing some shortcut techniques. The algorithms were sorted from good to bad in terms of running time; it was seen that they were sorted in the order of EMA < BOA < FCS < ESCO<proposed method. Based on FSIM values, the performance of studied algorithms can be sorted in the order: ESCO=FCS = EMA < proposed method < BOA. On the other hand, BOA has much better and more efficient performance in the FSIM metric. In comparison, the proposed algorithm achieved the second rank. When the algorithms were sorted from bad to good in terms of PSNR, it was seen that they were sorted in the order of ESCO=FCS=BOA = EMA < proposed method. Therefore, it is concluded that the proposed method performs better in the segmentation process. In contrast, the proposed method obtained the second rank.

In future work, to further improve the segmentation quality, work on the parameter sensitivity of SSA will be accomplished when applied to the EM algorithm. Also, the suggested methodology would be helpful for different applications like brain magnetic resonance image segmentation, breast cancer thermogram image segmentation, and other natural grey-scale or RGB image segmentations.

Data availability

The image data that support the findings of this study are available in “COVID-QU-Ex Dataset” with the identifier

https://www.kaggle.com/datasets/cf77495622971312010dd5934ee91f07ccbcfdea8e2f7778977ea8485c1914df [36].

References

Abdel-Basset M, Mohamed R, AbdelAziz NM, Abouhawwash M (2022) HWOA: a hybrid whale optimization algorithm with a novel local minima avoidance method for multi-level thresholding color image segmentation. Expert Syst Appl 190:116145. https://doi.org/10.1016/j.eswa.2021.116145

Abualigah L, Shehab M, Alshinwan M, Alabool H (2019) Salp swarm algorithm: a comprehensive survey. Neural Comput & Applic 32:11195–11215. https://doi.org/10.1007/s00521-019-04629-4

Agrawal S, Panda R, Choudhury P, Abraham A (2022) Dominant color component and adaptive whale optimization algorithm for multilevel thresholding of color images. Knowl-Based Syst 240:108172. https://doi.org/10.1016/j.knosys.2022.108172

Asheri H, Hosseini R, Araabi BN (2021) A new EM algorithm for flexibly tied GMMs with large number of components. Pattern Recogn 114:107836. https://doi.org/10.1016/j.patcog.2021.107836

Baltierra S, Valdebenito J, Mora M (2022) A proposal of edge detection in images with multiplicative noise using the ant Colony system algorithm. Eng Appl Artif Intell 110:104715. https://doi.org/10.1016/j.engappai.2022.104715

Chakraborty S, Mali K (2022) Biomedical image segmentation using fuzzy multilevel soft thresholding system coupled modified cuckoo search. Biomed Signal Proc Contr 72:103324. https://doi.org/10.1016/j.bspc.2021.103324

Chauhan J, Goyal P (2021) Convolution neural network for effective burn region segmentation of color images. Burns 47:854–862. https://doi.org/10.1016/j.burns.2020.08.016

Chen X, Huang H, Heidari AA, Sun C, Lv Y, Gui W, Liang G, Gu Z, Chen H, Li C, Chen P (2022) An efficient multilevel thresholding image segmentation method based on the slime mould algorithm with bee foraging mechanism: a real case with lupus nephritis images. Comput Biol Med 142:105179. https://doi.org/10.1016/j.compbiomed.2021.105179

Derrac J, García S, Molina D, Herrera F (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evolut Comput 1:3–18. https://doi.org/10.1016/j.swevo.2011.02.002

Feng C, Wang L, Xu P, Chu Z, Yao J, Sun W, Gong H, Zhang X, Li Z, Fan Y (2021) Microstructural and mechanical evaluations of region segmentation methods in classifications of osteonecrosis. J Biomech 119:110208. https://doi.org/10.1016/j.jbiomech.2020.110208

Gao P, Song Y, Minhui S, Qian P, Su Y (2021) Extract Nanoporous gold ligaments from SEM images by combining fully convolutional network and Sobel operator edge detection algorithm. SSRN J. https://doi.org/10.2139/ssrn.3979931

Guo J, Mu Y, Xue D, Li H, Chen J, Yan H, Xu H, Wang W (2021) Automatic analysis system of calcaneus radiograph: rotation-invariant landmark detection for calcaneal angle measurement, fracture identification and fracture region segmentation. Comput Methods Prog Biomed 206:106124. https://doi.org/10.1016/j.cmpb.2021.106124

Guo R, Lu S, Wu Y, Zhang MM, Wang F (2022) Robust and fast dual-wavelength phase unwrapping in quantitative phase imaging with region segmentation. Opt Commun 510:127965. https://doi.org/10.1016/j.optcom.2022.127965

Guo J, Fu R, Pan L, Zheng S, Huang L, Zheng B, He B (2022) Coarse-to-fine airway segmentation using multi information fusion network and CNN-based region growing. Comput Methods Prog Biomed 215:106610. https://doi.org/10.1016/j.cmpb.2021.106610

Hait SR, Mesiar R, Gupta P, Guha D, Chakraborty D (2022) The Bonferroni mean-type pre-aggregation operators construction and generalization: application to edge detection. Information Fusion 80:226–240. https://doi.org/10.1016/j.inffus.2021.11.002

Hosseinzadeh Kassani S, Hosseinzadeh Kassani P, Wesolowski MJ, Schneider KA, Deters R (2022) Deep transfer learning based model for colorectal cancer histopathology segmentation: a comparative study of deep pre-trained models. Int J Med Inform 159:104669. https://doi.org/10.1016/j.ijmedinf.2021.104669

Hu X, Wang Y (2022) Monitoring coastline variations in the Pearl River estuary from 1978 to 2018 by integrating canny edge detection and Otsu methods using long time series Landsat dataset. CATENA 209:105840. https://doi.org/10.1016/j.catena.2021.105840

Huang B, Wei Z, Tang X, Fujita H, Cai Q, Gao Y, Wu T, Zhou L (2021) Deep learning network for medical volume data segmentation based on multi axial plane fusion. Comput Methods Prog Biomed 212:106480. https://doi.org/10.1016/j.cmpb.2021.106480

Javed A, Lee H, Kim B, Han Y (2022) Vibration measurement of a rotating cylindrical structure using subpixel-based edge detection and edge tracking. Mech Syst Signal Process 166:108437. https://doi.org/10.1016/j.ymssp.2021.108437

Kim W-S, Lee D-H, Kim T, Kim G, Kim H, Sim T, Kim YJ (2021) One-shot classification-based tilled soil region segmentation for boundary guidance in autonomous tillage. Comput Electron Agric 189:106371. https://doi.org/10.1016/j.compag.2021.106371

Li X, Chen D (2022) A survey on deep learning-based panoptic segmentation. Digital Sig Proc 120:103283. https://doi.org/10.1016/j.dsp.2021.103283

Liang H, Cheng Z, Zhong H, Qu A, Chen L (2022) A region-based convolutional network for nuclei detection and segmentation in microscopy images. Biomed Signal Proc Contr 71:103276. https://doi.org/10.1016/j.bspc.2021.103276

Lin Z, Zhang L, Mou X, Zhang D (2011) FSIM: a feature similarity index for image quality assessment. IEEE Trans on Image Proc 20:2378–2386. https://doi.org/10.1109/TIP.2011.2109730

Liu H, Yang Z, Zhang H, Wang C (2022) Edge detection with attention: from global view to local focus. Pattern Recogn Lett 154:99–109. https://doi.org/10.1016/j.patrec.2022.01.006

Liu X, Yang L, Chen J, Yu S, Li K (2022) Region-to-boundary deep learning model with multi-scale feature fusion for medical image segmentation. Biomed Signal Proc Contr 71:103165. https://doi.org/10.1016/j.bspc.2021.103165

Lyu LF, Higgins GD, Zhu WD (2022) Operational modal analysis of a rotating structure using image-based tracking continuously scanning laser Doppler vibrometry via a novel edge detection method. J Sound Vib 525:116797. https://doi.org/10.1016/j.jsv.2022.116797

Maksimovic V, Petrovic M, Savic D, Jaksic B, Spalevic P (2021) New approach of estimating edge detection threshold and application of adaptive detector depending on image complexity. Optik 238:166476. https://doi.org/10.1016/j.ijleo.2021.166476

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002

Morozov S et al (2020) MosMedData: Chest CT Scans With COVID-19 Related Findings Dataset. arXiv preprint arXiv:2005.06465

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst, Man, Cybern 9:62–66. https://doi.org/10.1109/TSMC.1979.4310076

Saha Tchinda B, Tchiotsop D, Noubom M, Louis-Dorr V, Wolf D (2021) Retinal blood vessels segmentation using classical edge detection filters and the neural network. Inform Med Unloc 23:100521. https://doi.org/10.1016/j.imu.2021.100521

Sathya PD, Kalyani R, Sakthivel VP (2021) Color image segmentation using Kapur, Otsu and minimum cross entropy functions based on exchange market algorithm. Expert Syst Appl 172:114636. https://doi.org/10.1016/j.eswa.2021.114636

Sowjanya K, Injeti SK (2021) Investigation of butterfly optimization and gases Brownian motion optimization algorithms for optimal multilevel image thresholding. Expert Syst Appl 182:115286. https://doi.org/10.1016/j.eswa.2021.115286

Sun B, Wang Z, Lin J, Chen C, Zheng G, Yue S, Wang H, Kang X, Chen X, Hong W, Wang P (2022) Automatic quantitative analysis of metabolism inactivation concentration in single bacterium using stimulated Raman scattering microscopy with deep learning image segmentation. Med Novel Technol Dev 14:100114. https://doi.org/10.1016/j.medntd.2022.100114

Swain M, Tripathy TT, Panda R, Agrawal S, Abraham A (2022) Differential exponential entropy-based multilevel threshold selection methodology for colour satellite images using equilibrium-cuckoo search optimizer. Eng Appl Artif Intell 109:104599. https://doi.org/10.1016/j.engappai.2021.104599

Tahir AM, Chowdhury ME, Qiblawey Y, et al (n.d.) COVID-QU-Ex Dataset

Umer S, Mondal R, Pandey HM, Rout RK (2021) Deep features based convolutional neural network model for text and non-text region segmentation from document images. Appl Soft Comput 113:107917. https://doi.org/10.1016/j.asoc.2021.107917

Webb JM, Adusei SA, Wang Y, Samreen N, Adler K, Meixner DD, Fazzio RT, Fatemi M, Alizad A (2021) Comparing deep learning-based automatic segmentation of breast masses to expert interobserver variability in ultrasound imaging. Comput Biol Med 139:104966. https://doi.org/10.1016/j.compbiomed.2021.104966

Xu X, Xu S, Jin L, Song E (2011) Characteristic analysis of Otsu threshold and its applications. Pattern Recogn Lett 32:956–961. https://doi.org/10.1016/j.patrec.2011.01.021

Yan L, Wang Q, Huang L, Chen B, Lin Y (2022) Phase unwrapping of digital holographic microscopy using adaptive region segmentation and phase derivative calibration with respect to fringe density. Opt Lasers Eng 148:106780. https://doi.org/10.1016/j.optlaseng.2021.106780

Yang Z, Zhao Y, Liao M, di SH, Zeng YZ (2021) Semi-automatic liver tumor segmentation with adaptive region growing and graph cuts. Biomed Signal Proc Contr 68:102670. https://doi.org/10.1016/j.bspc.2021.102670

Yang C, Wang W, Feng X (2022) Joint image restoration and edge detection in cooperative game formulation. Signal Process 191:108363. https://doi.org/10.1016/j.sigpro.2021.108363

Yang Y, Hou X, Ren H (2022) Efficient active contour model for medical image segmentation and correction based on edge and region information. Expert Syst Appl 194:116436. https://doi.org/10.1016/j.eswa.2021.116436

Zhao H, Wu B, Guo Y, Chen G, Ye D (2021) SSWS: an edge detection algorithm with strong semantics and high detectability for spacecraft. Optik 247:168037. https://doi.org/10.1016/j.ijleo.2021.168037

Zhao Y, Yu X, Wu H, Zhou Y, Sun X, Yu S, Yu S, Liu H (2021) A fast 2-D Otsu lung tissue image segmentation algorithm based on improved PSO. Microprocess Microsyst 80:103527. https://doi.org/10.1016/j.micpro.2020.103527

Zhou Z, Dai M, Guo Y, Li X (2021) Global-to-local region-based indicator embedded in edge-based level set model for segmentation. Digital Sig Proc 114:103061. https://doi.org/10.1016/j.dsp.2021.103061

Zhu Z, Li M, Xie Y, Zhou F, Liu Y, Wang W (2022) The optimal projection intensities determination strategy for robust strip-edge detection in adaptive fringe pattern measurement. Optik 257:168771. https://doi.org/10.1016/j.ijleo.2022.168771

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ehsaeyan, E. An efficient image segmentation method based on expectation maximization and Salp swarm algorithm. Multimed Tools Appl 82, 40625–40655 (2023). https://doi.org/10.1007/s11042-023-15149-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-15149-8