Abstract

In order to create immersive experiences in virtual worlds, we need to explore different human senses (sight, hearing, smell, taste, and touch). Many devices have been developed by both industry and academia towards this aim. In this paper, we focus on the research area of thermal and wind devices that deliver sensations of heat and cold against people’s skin, and on their application to human-computer interaction (HCI). First, we present a review of the devices, and of their features, that were identified as relevant. Then, we discuss users’ experiences with thermal and wind devices, highlighting limitations either reported in the studies selected for this survey or inferred by the authors. From the current literature, we infer that, in wind- and temperature-based haptic systems, (i) users experience wind effects produced by fans that move air at room temperature, and (ii) thermal components have not been integrated into devices intended to produce either cold or hot airflows. Subsequently, we analyze why thermal wind devices have not yet been devised, highlighting the challenges of creating such devices.



1 Introduction

Multimedia is a commonplace component of the modern computing landscape. Sound, graphics, and animation are present in a coordinated way in almost all desktop and mobile applications [50]. However, such applications still predominantly engage just two human senses: vision and audition. Given the quest to develop more realistic digital environments, the transition from multimedia to mulsemedia (multiple sensory media) is quite natural. Mulsemedia enriches traditional multimedia content with new media objects that stimulate, among others, olfaction, gustation, touch (haptics), and thermoception [15]. It offers new opportunities for immersive technologies and opens new horizons for entertainment [11, 43, 65, 67, 74], education, training, and simulation [1, 10, 12, 13, 23, 54, 58, 59, 62, 64, 75], culture [8, 9], and marketing [25, 37, 57]. Yet these new sensory media require new technological solutions in both hardware and software [36, 49, 52, 61].

When new sensory media are integrated into traditional multimedia applications, new challenges come to the fore in human-computer interfaces [51]. Traditional media, such as audio and video, consist of components (e.g. video frames of a given resolution and pixel depth) that must be played back at a specific rate (e.g. frames per second) and that disappear once played. Olfactory, wind, and thermal media, however, are characterized by a lingering effect, whereby individual media components persist in the atmosphere, bringing new challenges to traditional multimedia applications. Moreover, a constant playback rate is not compulsory, as the effect can also be achieved by an initial impulse delivery of the respective media in the vicinity of the user. Sensory media are also much more dependent on the conditions of the environment in which the user is immersed (e.g. humidity and temperature), and their perception is relative (e.g. whether an effect feels hot or cold).

This paper aims to deepen the study of thermal wind-emitting devices, a particular case of haptic apparatus in which the sensory effect is obtained by generating an airflow that strikes the user’s skin. A systematic review carried out by [27] revealed that haptic devices alone account for 86.6% of all mulsemedia studies in virtual reality, making them the most common means of enhancing immersive experiences. In this paper, we are particularly interested in how such devices can be employed to generate air at a particular temperature. Whilst research has explored wind emission in the context of mulsemedia as a standalone effect, as well as the generation of thermal effects of varying temperatures, generating air at a particular temperature remains a challenge yet to be mastered. This is because producing two separate effects (blowing the air and heating or cooling it) and combining them to give the user hot or cold sensations is complex, as the blown air will likely displace or disperse the hot or cold air emitted separately.

Although this work is not a systematic review, in the sense of following a strict methodology for selecting and separating studies, it can be classified as an overview [16], and the articles highlighted herein were identified as relevant to the development of a haptic device that emits hot and cold airflows [46]. These studies were found in Google Scholar using the following search terms: “Mulsemedia”, “Human-Computer Interaction”, “Quality of Experience”, “Thermal Sensation”, “Multisensory”, “Immersive Environment”, and “Immersive Experience”.

Because of the plethora of devices identified and the variety of ways in which they work, the selected studies were separated into two main categories: wearable and non-wearable. In addition, the present paper gives a historical perspective on the use of haptic devices, together with indications of solutions for mulsemedia scenarios, and discusses the recent paradigm shift from wearable to non-wearable devices, which aligns with the more general trend of the Internet of Things (IoT).

The structure of this paper is as follows. Section 2 is divided into three subsections. The first two describe the non-wearable and wearable devices identified in this survey that can deliver thermal and wind sensory effects, together with the evaluations the authors of each study conducted to investigate how users perceived wind and thermal experiences. Section 2.1 is further subdivided into Sections 2.1.1 and 2.1.2, which explore wind devices and thermal devices, respectively. Section 2.3 then covers commercial products and devices, which are presented separately because their descriptions do not address usability evaluation or reproducibility. Section 3 discusses our findings, emphasising the insights that emerged from this study. Finally, Section 4 concludes the paper, underlining the need for further studies.

2 Thermal and wind effects in mulsemedia scenarios

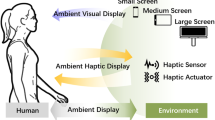

Thermal and wind devices for generating haptic effects can be classified into two categories: wearable and non-wearable. Wearable devices are electronic devices that are worn as accessories, embedded in clothing, implanted in the user’s body, or even tattooed onto the skin [53]. They detect, analyze, and transmit information, typically sensing, collecting, and uploading physiological data continuously in order to improve quality of life, user experience, or augmented reality, or to provide context sensitivity. Such devices can be made aware of the user’s surroundings and state, and context-sensitive applications can be developed to exploit the intimacy between human, computer, and environment [3]. Therefore, when used in mulsemedia applications, wearable devices can stimulate human senses such as smell, touch, and taste.

The second category, non-wearable devices, comprises devices that are neither in contact with nor near the user’s body. Although this is not always the case, non-wearable devices can be so subtle that users are not even aware of their presence (e.g. climatizers, air conditioners, or scent/fragrance devices): their effects can be felt, but the devices are not necessarily visible. Such concealed devices are uncommon in mulsemedia applications, and device visibility has been identified as a challenge because visible devices can break user immersion. One of the most frequently mentioned concerns is the user’s perception of the fan: if fans are placed in the user’s field of view, the user immediately notices that the wind is coming from the fans, which impairs their sense of reality. Matsukura et al. [26] observed this possible loss of realism when fans are visible to users. To avoid it, the authors suggested using indirect airflow from at least two devices, which allows the fans to be placed where users cannot see them.

Both categories were identified in this work. While fans predominate in simulating wind, thermal effects are produced in diverse ways, with some devices using light, vibration, and even smell to create the illusion of cold and heat.

Moreover, multisensory devices are so diverse that there is no single standard methodology for their evaluation. Most of the studies presented here that included an evaluation used an environment (virtual or not) in conjunction with the constructed device and applied user questionnaires and/or interviews to obtain user feedback and qualitative data [17].

2.1 Non-wearable devices

2.1.1 Wind effects and devices

Wind-induced sensory effects are usually delivered by fans placed directly or indirectly around the user. One or more fans can be used to improve user feedback with respect to the tactile sensation of wind, but how they work and for what purpose vary between systems.

In 2004, [28] presented a proposal for creating a surrounding wind effect in the user’s environment. The wind generator system, called WindCube, consisted of several small fans attached to a cubic structure inside which the user is physically placed. Twenty DC motors were controlled by custom-built hardware connected to a personal computer through a USB port. Each motor had a controller based on an ATMEL chip and motor driver circuitry that allowed up to 64 speed levels for the fan. The system was evaluated in an experiment to verify whether the proposed device could indeed enhance users’ sense of presence in a virtual reality system. A virtual city scene in a snowstorm was shown to a group of 15 individuals (12 men, 3 women, average age 24 years) under two conditions: (i) visual display only and (ii) both visual and wind display. This was followed by a questionnaire comprising two questions that asked users to rate, on a scale of 0 to 100, (i) the degree to which they felt as if they were in a place other than the experiment site (presence), and (ii) how realistic the feeling of the wind was (realism of the wind display). The analysis showed that the WindCube produced a significant improvement in terms of immersion.
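To make the 64-level speed control concrete, the following Python sketch maps a desired wind direction and strength onto per-fan speed levels. Only the 64-level resolution comes from the description above; the cosine weighting, the fan layout (unit vectors giving the direction in which each fan blows), and the function names are hypothetical assumptions for illustration, not the WindCube’s actual control scheme.

```python
LEVELS = 64  # speed resolution reported for each WindCube motor controller


def quantize(speed: float, levels: int = LEVELS) -> int:
    """Map a normalized speed in [0, 1] to an integer level in 0..levels-1."""
    speed = min(max(speed, 0.0), 1.0)  # clamp out-of-range requests
    return round(speed * (levels - 1))


def fan_levels(fan_dirs, wind_dir, strength):
    """Hypothetical cosine weighting of fans against a target wind direction.

    fan_dirs: list of unit 3-vectors, the direction in which each fan blows
    wind_dir: unit 3-vector, the direction in which the simulated wind moves
    strength: normalized wind strength in [0, 1]
    Fans blowing against the wind (negative cosine) are switched off.
    """
    levels = []
    for d in fan_dirs:
        cos_angle = sum(a * b for a, b in zip(d, wind_dir))
        levels.append(quantize(strength * max(0.0, cos_angle)))
    return levels
```

For example, with four fans blowing along +x, -x, +y, and -y, a full-strength wind along +x yields levels [63, 0, 0, 0], while a diagonal wind in the x-y plane drives the +x and +y fans at level 45 each.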

Rainer et al. [40] conducted a study in 2012 on the impact of sensory effects (light, vibration, and wind) on the quality of the web video experience. The study assessed subjective quality (SQAS: Safety and Quality Assessment for Sustainability) in order to investigate the variation in Quality of Experience (QoE) and to understand how users’ emotions are influenced by videos with and without sensory effects. The results showed that, in general, sensory effects improved QoE by 19% compared to traditional videos. As for the wind, all participants reported that they would like thermal effects to be added to their experience. Some of them reported that the wind effect felt unrealistic because they expected a change in temperature synchronized with the video content.

In related work, Chambel et al. [41] reported positive results in 2013 from their investigation of Windy Sight Surfers, an interactive mobile application designed to provide a more realistic immersive experience. Their results showed an increased sense of immersion resulting from stimulation with two fans while watching 360° videos. The authors evaluated the system mainly through observations, questionnaires, and interviews. Twenty-one users (8 female, 13 male, aged 18 to 57) participated in the study, which evaluated differences between two categories of features: (i) experience sensing and (ii) context awareness. The authors stated that, by evaluating presence, one can also draw conclusions about the immersion capabilities of the system. To this end, users completed two questionnaires about immersion and presence, the former before the experiment and the latter after it. The user evaluation showed that Windy Sight Surfers increased the sense of presence and immersion, and that users appreciated having both categories of features, finding all of them very useful, satisfactory, and easy to use.

The Haptic ChairIO system, proposed in 2015 by [14], combines vibration effects, produced by actuators installed on the floor, with wind, produced by 8 fans, to enhance the sense of realism. The wind subsystem consists of a group of fans installed on an octagonal structure around the user, controlled by two Arduinos. The system was not evaluated, but the authors proposed a plan to study the user’s spatial orientation using a triangle-completion task. In this task, there are three spheres in the scene. The user is asked to move from the first sphere to the second and then to the third, and then to return to the first without seeing it (blindfolded). The planned study follows a mixed between-/within-subjects design with three independent variables: the presence or absence of wind, floor vibration, and step sounds [14].
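A triangle-completion trial is typically scored by how far, and in which direction, the blind return misses the starting point. The sketch below computes both quantities in the ground plane; the 2D simplification, the choice of these two error measures, and the function name are hypothetical assumptions, since the planned analysis in [14] is not detailed here.

```python
import math


def triangle_completion_errors(p1, p3, endpoint):
    """Score a triangle-completion trial in 2D ground-plane coordinates.

    p1: position of the first sphere (the point the user must return to)
    p3: position of the third sphere (where the blindfolded return starts)
    endpoint: where the user actually stopped
    Returns (distance_error, signed_angle_error_deg); a positive angle means
    the user veered counterclockwise of the correct homing direction.
    """
    distance_error = math.dist(endpoint, p1)
    correct = math.atan2(p1[1] - p3[1], p1[0] - p3[0])
    actual = math.atan2(endpoint[1] - p3[1], endpoint[0] - p3[0])
    # wrap the heading difference into [-180, 180) degrees
    angle_error = (math.degrees(actual - correct) + 180.0) % 360.0 - 180.0
    return distance_error, angle_error
```

For instance, starting the return at (3, 4) with the origin at (0, 0), a user who stops at (3, 0) misses by 3 m with a heading error of about 36.9°.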

In 2019, [63] proposed WindyWall, a system of 90 fans arranged in three panels that can simulate wind over up to 270° of coverage. Each panel is curved to cover a 90° angle and holds 30 fans arranged in a matrix of 5 columns of 6 fans each. Each fan can be individually controlled to produce different wind effects, such as blowing air, an undulating breeze, sweeping air movement, or objects passing quickly by the user. For the evaluation, 16 participants (9 men and 7 women, aged 19 to 33) were recruited. To ensure better wind perception, participants were asked to close their eyes and listened to white noise to mask the fan noise. Each test session took no more than 15 minutes. Participants were free to give their ratings on any scale; the ratings were normalized to bring them onto a consistent scale across the entire sample and analyzed with ANOVA. The authors found a moderately positive correlation between participants’ perceived airflow magnitude and objective measurements for each simulation. However, the evaluation investigated whether participants could differentiate between single and multiple wind sources on the same patch of skin, rather than user immersion. The results revealed that individual variation in the assessment of wind effects is very high: horizontal winds seemed to be perceived more easily, but users’ sensitivity to changes in airflow magnitude was highly variable. It should also be noted that, in this study, wind was the only content provided, and the experiments did not analyze what happens when wind effects are synchronized with audiovisual content. This leads to the inference that wind alone is perceived differently and that cross-modal effects play a role in digital experiences enriched with wind.

2.1.2 Thermal effects and devices

Among the devices that deliver thermal and wind sensory effects are those that integrate both. These range from simple devices, such as improved humidifiers or hairdryers that deliver cold or heat sensations respectively, to complex combinations of air pumps, compressors, high-speed fans, or Peltier elements with wind devices that simulate different climatic effects, such as a storm, fog, or a sunny day.

In 2014, [20] presented a system that generates wind and radiates heat in a CAVE (Cave Automatic Virtual Environment) equipped with an infrared lamp and an axial fan. Hülsmann et al. [20] evaluated their CAVE system, enhanced with wind and heat, in a pilot study focused on user experience. Although the participants reported that their experiences in the CAVE were enriched, it should be noted that the airflow and the heat (transferred through thermal radiation) were generated by different devices. This may have influenced the users’ perception of both effects, as mentioned by the authors, who also emphasized the need to study the cross-modal influences between wind and heat in virtual environments in greater depth.

Introduced in 2018, Haptic Around [18] is a system of tactile stimuli integrated with a head-mounted display (HMD). It consists of a steerable haptic device rigged above the user’s head and a handheld device, also with haptic feedback, which together provide tactile sensations to users in a 2 m × 2 m space. It uses a fan, a thermal blower, a mist disperser, and a heating lamp. The system is divided into two parts, one producing the sensation of heat and the other of cold. The hot part consists of two modules: the heat module and the hot air module. The heat module is equipped with a 250 W lamp that can increase the temperature by 3 °C. The hot air module uses a 1300 W thermal blower, which not only provides an airflow but can also increase the temperature by up to 16 °C. The cold part consists of three modules: mist, wind, and rain. The mist module uses four ultrasonic transducers and a fan. The rain module is similar to the mist module, but uses three atomizers with micro-openings at the bottom of a 600 ml reservoir that spray water directly. Finally, the wind module is equipped with a fan that provides winds of up to 11.5 m/s. To evaluate the system, three virtual reality applications with haptic feedback were developed: (i) Entertainment, (ii) Education, and (iii) Weather Simulation. The authors demonstrated these applications at three separate exhibitions, with each participant experiencing each application for about 8 minutes. Early results showed that most participants enjoyed playing the applications with multiple tactile sensations.
Further results were explored in a user evaluation in which 16 participants (8 male and 8 female, aged 20 to 29) were recruited to validate the haptic feedback system under the following conditions: (i) Without Haptics: the system was experienced in the absence of any non-contact tactile sensations; (ii) Controller-based Haptics: the system was experienced with haptic feedback on the controller only; (iii) Ubiquitous-based Haptics: the system was experienced with environmental haptic feedback provided by the stationary device only; and (iv) Hybrid Haptics: the system was experienced with haptic feedback from both the controller and the stationary device. The data were analyzed with a repeated-measures ANOVA (RM-ANOVA) with post-hoc pairwise Tukey tests. Participants rated their experience as more enjoyable, realistic, and immersive in the hybrid-haptic feedback condition, and the results demonstrated that the system is a potential approach to improving the immersive experience. In addition, users enjoyed the feeling of humidity, and most participants (10/16) mentioned that the perception of fog in the environment was quite strong. Finally, scores for cold scenes were higher than those for hot scenes, with participants better perceiving the sensation of cold and humid environments, such as the river valley and the snowy mountain.
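In a repeated-measures design such as the one above, every participant experiences every condition, so between-participant differences can be removed from the error term. The sketch below shows a one-way RM-ANOVA that partitions total variability into condition, subject, and residual components and forms the F ratio. It is an illustrative sketch, not the authors’ analysis code: it omits sphericity corrections and the Tukey post-hoc tests, and the function name and sample data are hypothetical.

```python
def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA.

    scores: list of rows, one per participant, each holding that participant's
    score in every condition (same condition order in every row).
    Returns (F, df_conditions, df_error).
    """
    n = len(scores)        # participants
    k = len(scores[0])     # conditions
    grand_mean = sum(sum(row) for row in scores) / (n * k)

    condition_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subject_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_conditions = n * sum((m - grand_mean) ** 2 for m in condition_means)
    ss_subjects = k * sum((m - grand_mean) ** 2 for m in subject_means)
    # residual = condition x subject interaction, the error term for F
    ss_error = ss_total - ss_conditions - ss_subjects

    df_conditions = k - 1
    df_error = (n - 1) * (k - 1)
    f_value = (ss_conditions / df_conditions) / (ss_error / df_error)
    return f_value, df_conditions, df_error
```

With three participants scoring three conditions as [[1, 2, 3], [2, 4, 5], [3, 3, 7]], the function returns F = 8.4 with (2, 4) degrees of freedom; a p-value can then be read from the F distribution, for example with `scipy.stats.f.sf(F, df_conditions, df_error)`.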

Unlike most of the studies already mentioned, which focus mostly on entertainment, Shaw et al. [55] studied in 2019 the behavior of users evacuating simulated fires, and their feeling of realism, with heat and smell added to the simulated environment. The devices responsible for the effects are controlled by an Arduino, with aromas produced by a SensoryScent 200 and heat radiated by three 2 kW infrared devices. The system also has fins that allow better control of heat propagation. The user evaluation was divided into three rounds of formative tests (5 users in each), in which participants were exposed to a fire simulation in a virtual environment. The aim was to investigate the addition of heat and smell to the simulation of a fire in an office building. The first two rounds used a think-aloud protocol while users navigated the prototype, providing feedback to refine various elements of the user experience, such as the optimisation of movement. The third round did not use the think-aloud protocol; instead, participants were interviewed after using the system. In addition to further minor revisions to improve the user experience, this final round prompted the authors to introduce a pseudo-task, which provided a context that improved ecological validity and made it possible to investigate whether tasks were completed correctly. In the subsequent study, participants conducted a virtual fire evacuation using one of two VR configurations: visual and audio feedback only (AV condition; 23 participants, 13 male and 10 female, mean age 28), or visual and audio feedback with additional thermal and olfactory feedback (multisensory, MS condition; 20 participants, 11 male and 9 female, mean age 29).
The results suggest that participants in the MS condition felt a greater sense of urgency than those in the AV condition. The key difference lay in users’ attitudes towards the fire scenario: MS participants felt as though they were in a fire, whereas AV participants felt as though they were in a fire simulation, with a more detached mentality. The authors concluded that multisensory interfaces can increase user immersion in a virtual environment simulating a dangerous scenario.

Also in 2019, Embodied Weather [21] sought to provide users with an experience involving different climatic effects (cloudy weather, drizzle, rain, and storm). The work aimed to raise public awareness of the impact of anthropogenic activities on the planet, which cause extreme climatic conditions such as typhoons, floods, and heat and cold waves. The experiment reported by the authors simulates the experience of being in a typhoon in the city of Hong Kong. The subsystem that produces the wind effects is composed of an Arduino Mega with a relay module to control the components, a high-power variable-speed fan, and VR glasses that present the video simulating the typhoon. However, this is prototype work, and to date the authors have not performed a user evaluation.

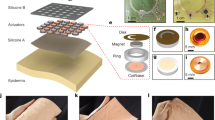

Related to [21], another device from the same authors was presented in 2020: the ThermalAirGlove [5], a pneumatic glove with five inflatable airbags on the fingers and the palm and two temperature chambers (one hot and one cold), complemented by a closed-loop pneumatic thermal control system. It provides thermal feedback to support the haptic experience of grasping objects of different temperatures and materials in virtual reality (VR). Multiple evaluations were carried out with this device. First, its performance in generating different levels of thermal feedback was measured in temperature-tracking experiments: the mean absolute error was 0.37 °C for the temperature-changing (proportional) stage and 0.31 °C for the stable stage, and the overall maximum measured error was 0.95 °C for both stages. Three further user evaluations were conducted with 12 participants each. The first used a within-subjects design with three independent variables: direction of change, intensity of change, and rate of change. The authors measured participants’ subjective ratings of thermal intensity and trial-completion time as the dependent variables. In each trial, the thermal stimulus presentation consisted of a 10 s stimulus followed by a 40 s deflation period, during which the skin temperature naturally returned to its neutral level. There were no visual or auditory cues during the stimulus: the monitor in front of the participant showed a countdown, and the tablet screen remained blank during the 10 s stimulus. After the stimulus, a slider was presented for the participant to rate their perceived intensity on a continuous scale from Very Cold (slider value 1.00) to Very Hot (slider value 5.00), with Neutral at 3.00.
Post-hoc pairwise comparisons revealed that cooling was rated as significantly more intense than warming. The authors suggest that one possible reason is that cold receptors outnumber warm receptors in the skin, and the differential threshold for cooling is usually lower than that for warming. The second user evaluation was similar to the first, but participants were asked to grasp a 10 cm sphere of a particular material with their dominant hand. For this, the authors developed a Unity3D-based experiment system with a Leap Motion device above the table. The device tracked the participant’s hand movements and moved a virtual hand accordingly; when the system detected a collision between the virtual hand and the virtual sphere, it activated the corresponding thermal cues. There was no significant difference in accuracy between real-object stimulation and ThermalAirGlove material stimulation. The third and last evaluation was conducted in virtual reality. Here, the authors developed a virtual reality application in Unity3D using an HTC Vive Pro HMD, a Leap Motion hand-tracking device, and the glove system. There were five virtual objects in the virtual reality game: three spheres of different materials and two cups of water. Object manipulation had three modes: 1) bare hands without ThermalAirGlove, 2) ThermalAirGlove inflated with room-temperature air only, without any temperature or material simulation, and 3) ThermalAirGlove with controlled pneumatic thermal feedback for temperature and material simulation. The results showed that the control mode significantly affected the perceived naturalness of the interaction, with the combined use of virtual reality and the ThermalAirGlove achieving higher scores.
General results showed that this device in immersive virtual reality could significantly improve users’ experience of presence compared to the current virtual reality settings in the commercial markets. The user studies also suggested the thermal feedback played a vital role in the improvement of virtual reality experience.
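The closed-loop thermal control and the temperature-tracking error reported for the ThermalAirGlove can be illustrated with a toy simulation. The sketch below assumes that air from the hot and cold chambers is blended through a mixing ratio, that the airbag temperature follows a first-order lag, and that the controller combines a feedforward term with proportional feedback. All parameter values (chamber temperatures, time constant, gain) and the function names are hypothetical and are not taken from [5]; only the mean-absolute-error metric mirrors the evaluation above.

```python
def simulate_tracking(target, t_hot=45.0, t_cold=15.0, t_start=32.0,
                      kp=0.05, tau=2.0, dt=0.1, steps=300):
    """Toy closed-loop control of an airbag's air temperature (deg C).

    The supply air is a blend of hot and cold chamber air; `mix` is the hot
    fraction. A feedforward term picks the blend that would hold the target,
    and proportional feedback (gain kp per deg C) corrects the remaining error.
    The airbag temperature follows a first-order lag with time constant tau (s).
    """
    temp = t_start
    history = []
    feedforward = (target - t_cold) / (t_hot - t_cold)
    for _ in range(steps):
        mix = feedforward + kp * (target - temp)
        mix = min(max(mix, 0.0), 1.0)              # a valve blend is 0-100 %
        supply = mix * t_hot + (1.0 - mix) * t_cold
        temp += (supply - temp) * dt / tau         # explicit Euler step of the lag
        history.append(temp)
    return history


def mean_absolute_error(history, target):
    """Mean absolute deviation of the simulated temperature from the target."""
    return sum(abs(t - target) for t in history) / len(history)
```

For a 40 °C target, the simulated supply saturates at the hot chamber temperature at first and then settles on the target, so `mean_absolute_error(history[-50:], 40.0)` approximates a stable-stage error while the early samples resemble the temperature-changing stage.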

Han et al. [17] presented the Hapmosphere system in 2019, which simulates the climatic conditions of an environment and can supply wind, hot wind, humidity, and heat simultaneously. The system consists of a steerable structure with actuator modules mounted on the ceiling, so that users can walk freely around the room wearing a head-mounted display. The heat module is equipped with a 175 W lamp that increases the air temperature from 22 to 25 °C. The hot wind module uses a 1300 W thermal blower that can raise the temperature to 38 °C. The wind module consists of a large fan, while the humidity module uses an ultrasonic unit with a water tank to produce fog and a spray nozzle with a water pump to produce rain; a small fan directs the flow of the mist. Combining the modules allowed seven types of climate to be generated: Beach, Snowy Mountain, Cave, Rainforest, Desert, Forest, and Temperate Desert. To evaluate the feasibility of the project, the authors conducted a user study to validate their multi-tactile prototype with the seven environments. Twenty-one participants (10 male and 11 female, aged 21 to 25) were recruited, and the data were analyzed with an RM-ANOVA with post-hoc pairwise Tukey tests. Twenty participants had VR experience, 12 of whom had experience developing VR applications (without haptic feedback); only 1 participant had no experience with VR HMDs. After completing each scene, each participant filled out a questionnaire (measuring perceived intensity and persistence) and answered a short interview. Additionally, participants rated immersion and the quality of the virtual scene on a 7-point Likert scale, and an overall interview was conducted at the end of each session. The results were mixed: although the system could not reproduce extreme weather, the results provided valid information for each weather condition, indicating a potential approach for enhancing the immersive experience in a room-scale space.
Other results showed that, even for small temperature variations (a reduction of 2 °C or an increase of 1 °C), users noticed the change even if it did not correspond to the expected temperature of the environment. This shows the importance of audiovisual content in the multisensory experience: users can be led to perceive some sensations of cold and heat when watching videos of snow or desert. However, despite the potential of these solutions, the authors highlighted that developing thermal systems that reproduce, for example, extreme climatic conditions is quite challenging. In fact, when the temperature varies rapidly in such multimedia experiences according to the video content, the thermal effects must be provided by different devices, so that the wind does not dissipate them.

Xu et al. [73] implemented in 2019 a prototype that controls the speed of the generated airflow to produce a cooling effect by removing heat from the skin. To generate cold air, the prototype uses a vortex tube, a small and simple device with no moving parts. The authors argue that, compared to a Peltier element, which needs more time to reach the desired temperature, the vortex tube provides a faster cooling response when fed with compressed air, in addition to being able to reach very low temperatures (around −20 °C). The prototype consists of a vortex tube, a 750 W compressor that supplies compressed air, and a solenoid valve that controls the speed of the cold airflow. Five participants (1 female and 4 male, aged 21 to 28) took part in experiments to evaluate the feasibility of the prototype. Participants wore headphones playing pink noise to avoid sound interference. An airflow velocity of 2.0 m/s served as the standard stimulus, and seven flow velocities (0.5, 1.0, 1.5, 2.0, 2.5, 3.0, and 3.5 m/s) served as comparison stimuli. To quantify discrimination performance, the JND (just-noticeable difference) was calculated; it can be defined as the amount by which a quantity must be changed for the difference to be noticeable. The mean and standard deviation of the JND across the 5 participants were 1.2818 m/s and 0.3304 m/s, respectively. The results revealed that the faster the flow, the colder the user feels, so that coolness can be experienced at various levels. The prototype thus proved to be a good alternative to Peltier elements, which provide thermal sensation at a much slower pace.
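The JND above comes from a design with one standard stimulus (2.0 m/s) and seven comparison velocities. One common estimator, sketched below under the assumption that it approximates the authors’ procedure, takes half the distance between the velocities at which the comparison is judged “stronger” than the standard on 25% and 75% of trials, using linear interpolation between tested velocities. The function names are hypothetical, and the response proportions in the example are invented for illustration rather than data from [73].

```python
def interpolate_threshold(stimuli, proportions, p):
    """Stimulus at which the response proportion reaches p (linear interpolation).

    Assumes proportions increase monotonically with the stimulus values.
    """
    points = list(zip(stimuli, proportions))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 <= p <= y1 and y1 != y0:
            return x0 + (p - y0) * (x1 - x0) / (y1 - y0)
    raise ValueError("proportion p is not bracketed by the data")


def jnd(stimuli, proportions):
    """Half the distance between the 25% and 75% points of the psychometric data."""
    x25 = interpolate_threshold(stimuli, proportions, 0.25)
    x75 = interpolate_threshold(stimuli, proportions, 0.75)
    return (x75 - x25) / 2.0
```

With the comparison velocities 0.5 to 3.5 m/s and hypothetical “stronger” proportions of 0, 0.1, 0.25, 0.5, 0.75, 0.9, and 1.0, the 25% and 75% points fall at 1.5 and 2.5 m/s, giving a JND of 0.5 m/s and a point of subjective equality at the 2.0 m/s standard.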

In 2020, [29] presented a system that enhances the cooling effect of an airflow mixed with vaporized water (mist) generated by ultrasonic transducers. The system is composed of an ultrasonic phased-array surface; ultrasonic transducers that generate mist; and a polyethylene tank that stores the mist. The tank is connected to a fan and a duct, and when the fan is activated, air is blown into the tank and the mist is discharged. The duct outlet is aligned with the phased-array surface, so part of the mist discharged from the duct is transported by the airflow generated by that surface, thus improving the cooling effect. The system was evaluated with the user placing a hand in front of the device. Measurements with a thermographic camera showed a relatively fast temperature drop, achieving a greater cooling effect than other thermal devices that rely on Peltier elements.

2.2 Wearable devices for thermal effects

In 2007, Cardin et al. [6] developed a system of eight speed-controlled fans distributed around a Head-Mounted Display (HMD) to improve pilots’ immersion when remotely operating aircraft such as Unmanned Aerial Vehicles (UAVs). To evaluate it, the authors built an application around a flight simulator in which wind direction and force are conveyed to the user through the fans. Five participants (three men and two women, aged 20 to 35) each used the environment for 20 min. In the simulation, the user’s main objective was to keep the aircraft facing the wind for as long as possible in order to maximize its relative speed. Flights followed circular paths, which disoriented users and forced them to locate the wind direction through the fans. The wind direction was changed randomly between attempts. To mask the sound of the fans, which could reveal where the wind was coming from and break immersion, a loudspeaker played the flight simulator’s sounds. User feedback indicated improved immersion in the simulation, and many participants found the device intuitive: “users find this device very natural to use and greatly improve the sense of presence. Although this feedback is mainly subjective to the users feeling” [6]. The error in identifying the wind direction had a standard deviation of 8.5° across users. One of the main issues to be addressed is system latency, caused by the inertia of the fans and the relatively low wind speed.
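The survey does not describe how [6] derives individual fan speeds from the simulated wind. The sketch below is one plausible approach, built on two assumptions: the eight fans are evenly spaced every 45° around the HMD, and each fan is weighted by the cosine of the angle between it and the incoming wind, measured relative to where the user is facing. The function name `fan_intensities` and the weighting rule are hypothetical.

```python
import math

NUM_FANS = 8  # assumption: fans evenly spaced every 45 degrees around the HMD


def fan_intensities(wind_from_deg, head_yaw_deg, wind_strength):
    """Return a duty cycle in [0, 1] for each fan.

    Angles are in degrees, measured clockwise, with fan 0 at the front of
    the HMD. wind_from_deg is the world direction the wind blows from;
    wind_strength is the simulated wind force normalised to [0, 1].
    """
    # Direction of the incoming wind relative to where the user is facing.
    relative_deg = (wind_from_deg - head_yaw_deg) % 360
    duties = []
    for i in range(NUM_FANS):
        fan_deg = i * 360 / NUM_FANS
        # Cosine weighting: the fan facing the wind blows hardest,
        # fans on the far side stay off.
        weight = max(0.0, math.cos(math.radians(relative_deg - fan_deg)))
        duties.append(min(1.0, wind_strength * weight))
    return duties


# Wind from the east while the user faces east: the front fan is strongest.
print([round(d, 2) for d in fan_intensities(90, 90, 1.0)])
```

Because the weights are computed relative to head yaw, turning the head changes which fans blow, which is what lets users search for the wind direction in the circular-flight task. Fan inertia would still delay each change.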

Also in 2007, [69] investigated the use of thermal sensations to deliver notifications in mobile applications. Two prototypes were developed to evaluate the proposal. The first, designed to test the ability to detect heat at the fingertips, used a resistor as a heat source and converted incoming notification information into one of three heating levels (32 °C, 37 °C and 42 °C). The second prototype, also based on a resistor, was used in a spatial navigation scenario in which the temperature increased gradually as users approached their destination. To evaluate the user experience and the viability of the prototypes, a series of user tests was carried out, all of which assessed the ability to recognize thermal changes. In the first test, users tried to identify the correct temperature level (low, medium or high) when placing a finger on the prototype. Across ten participants, each completing ten cycles, participants made an average of 2.7 errors; after discounting errors attributable to the test configuration, the final error rate was 25%. An extended test lasting ten days examined whether a learning effect improves the recognition of thermal changes. In this test, participants used a small device with a touch area that heated up, at random, to one of five temperature levels, and were then asked which level the device was at. The error rate, initially around 65% on average, decreased progressively and converged to around 25% by the end of the ten days. Finally, it was shown that, within a range of approximately 10 °C, a scale of up to five temperature levels can be created on which users can detect and distinguish one level from another.
User evaluation of Peltier elements used to generate heat and cold revealed excessive energy consumption as well as user discomfort. However, the experiment confirmed the authors’ assumption that human thermal perception responds even to subtle changes in a device’s temperature, which qualifies thermal output as a means of ambient information display. This finding is useful for mulsemedia applications and could be improved by integrating physiological data measurement. The high energy consumption of thermal devices is also reported in other studies [4, 6].
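The three levels used by [69] (32, 37 and 42 °C) are evenly spaced 5 °C apart across a 10 °C span, and the extended test suggests that up to five levels fit within a span of about 10 °C. A minimal sketch, assuming evenly spaced levels between 32 °C and 42 °C, plus a hypothetical mapping from distance-to-destination onto those levels for the navigation scenario (the original prototype raised the temperature gradually rather than in discrete steps):

```python
def temperature_levels(n_levels, low_c=32.0, high_c=42.0):
    """Evenly spaced setpoints between low_c and high_c (inclusive)."""
    if n_levels < 2:
        raise ValueError("need at least two levels")
    step = (high_c - low_c) / (n_levels - 1)
    return [low_c + i * step for i in range(n_levels)]


def setpoint_for_distance(distance_m, max_distance_m, n_levels=5):
    """HYPOTHETICAL navigation mapping: the closer the user is to the
    destination, the warmer the level."""
    closeness = 1.0 - min(max(distance_m / max_distance_m, 0.0), 1.0)
    index = round(closeness * (n_levels - 1))
    return temperature_levels(n_levels)[index]


print(temperature_levels(3))  # [32.0, 37.0, 42.0], the levels used by [69]
print(temperature_levels(5))  # [32.0, 34.5, 37.0, 39.5, 42.0]
```

With five levels the steps shrink to 2.5 °C, which is consistent with the learning curve reported above: finer steps take practice to tell apart.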

In 2009, Narumi et al. [32] proposed Thermotaxis, a system that provides users with location-dependent thermal information in existing open spaces. The system consists of several earmuff-like wearable devices and a unit responsible for tracking and controlling each of them. For tracking, it uses a ceiling-mounted IEEE 1394 camera with an infrared filter, together with infrared LEDs attached to the top of each wearable device. The control unit, implemented with an Arduino Nano, drives two Peltier plates placed on either side of the earmuff device. The Peltier plates can produce temperatures between 20 °C and 40 °C, which enables Thermotaxis to create thermal spots in an open space. The authors also ran an experiment to examine how the thermal characteristics of a space affect people’s behavior within it. Six thermal earmuffs were built, allowing six people to use the system at the same time, and the authors estimate that more than 400 people of various ages tried it. Participants were instructed to put on the earmuffs and walk through the space, which measured about 25 m × 16 m, to find comfortable areas, while the system recorded their positions to reconstruct their trajectories. The results showed that presenting thermal information affects people’s behavior, causing them to gather in warmer areas; the authors therefore argue that thermal information can create an implicit, invisible spatial structure that reconfigures an open space.
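The survey does not describe how Thermotaxis maps a tracked position to each earmuff’s temperature. One simple possibility, sketched below under stated assumptions, defines circular thermal spots in floor coordinates and blends linearly from a neutral setpoint at a spot’s edge to the spot’s temperature at its centre, clamped to the 20 to 40 °C range reported for the Peltier plates. The neutral value of 30 °C, the `ThermalSpot` type and the spot layout are all hypothetical.

```python
import math
from dataclasses import dataclass

NEUTRAL_C = 30.0           # assumption: setpoint outside every thermal spot
MIN_C, MAX_C = 20.0, 40.0  # Peltier range reported for Thermotaxis


@dataclass
class ThermalSpot:
    x_m: float
    y_m: float
    radius_m: float
    temperature_c: float


def earmuff_setpoint(x_m, y_m, spots):
    """Setpoint for an earmuff tracked at (x_m, y_m) on the floor plan."""
    setpoint, strongest = NEUTRAL_C, 0.0
    for spot in spots:
        distance = math.hypot(x_m - spot.x_m, y_m - spot.y_m)
        if distance >= spot.radius_m:
            continue
        # 1 at the centre of the spot, falling linearly to 0 at its edge.
        weight = 1.0 - distance / spot.radius_m
        if weight > strongest:  # the spot with the largest weight wins
            strongest = weight
            setpoint = NEUTRAL_C + weight * (spot.temperature_c - NEUTRAL_C)
    return min(max(setpoint, MIN_C), MAX_C)


# Hypothetical layout in a 25 m x 16 m space: one warm and one cool spot.
spots = [ThermalSpot(5.0, 5.0, 2.0, 40.0), ThermalSpot(18.0, 10.0, 3.0, 22.0)]
print(earmuff_setpoint(6.0, 5.0, spots))  # 35.0, halfway to the warm spot's edge
```

Since the camera tracks every earmuff independently, the same function can be evaluated per user on each tracking frame.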

In 2011, [71] presented two studies on the limits of users’ perception of thermal stimuli applied to the fingertips, the palm, the dorsal surface of the forearm and the dorsal surface of the upper arm. The studies used a microcontroller and two Peltier elements to produce the thermal sensations. Fourteen participants (9 male, 5 female), aged 21 to 57, took part. The goal was to identify which stimuli produce detectable sensations from a neutral base temperature. The results showed that (i) the palm is the most sensitive of the sites tested, (ii) cold sensations are more noticeable and more comfortable than warm ones, and (iii) more abrupt changes are more detectable but less comfortable. The authors also state that, although warm and cold stimuli are equally detectable, cold stimuli are detected faster, require a smaller change to be perceptible and are more comfortable because they feel less intense. These results were later confirmed by the findings of [2] and [35].

In 2017, [42] proposed Ambiotherm, a VR system that integrates thermal and wind stimuli into a head-mounted display. As part of the same project, [44] developed Seasonal Traveler, a 3D-printed VR system that adds olfactory stimuli to the wind and thermal ones. The system consists of a set of fans (wind), a set of Peltier plates (thermal) and air pumps (olfactory), all controlled by commands sent from the head-mounted display via Bluetooth. The thermal module is worn around the user’s neck and consists of two Peltier plates mounted on heat sinks to regulate their temperature. The wind module uses two fans and two servomotors, which rotate the fans up to 45° to the left or right of the center of the user’s face to make the airflow more realistic. To evaluate the system, the authors developed two VR environments: an arid desert and a snowy mountain. The user follows a virtual guide along a path, with a first-person view of the virtual environment, and hears auditory feedback representing the guide’s footsteps and the blowing wind. Participants could always detect the wind stimuli, but not the thermal effects. The researchers hypothesized that this is due to individual differences in thermal sensitivity. Although plausible, this explanation does not account for the consistent detection of wind, which shows the need for further studies of wind and thermal effects to understand users’ perception. Overall, although the conditions performed equally well in many respects, Ambiotherm outperformed the VR and Wind conditions in terms of thermal involvement.
Another observation is that VR with wind consistently outperformed VR with thermal stimuli in nearly all recorded aspects. The authors speculate that this may be due to differences in the raw impact of the two stimuli, a phenomenon also highlighted by other studies in evaluations of their devices.

Introduced in 2017 by [35], ThermoVR is a system that produces heat and cold stimuli directly on the user’s face using five Peltier-based thermal modules coupled with an HMD. These elements can raise or lower their temperature relatively quickly, at a rate of ±3 °C/s. Their temperature is controlled by an Arduino Nano 2, and they contact the user’s face at three points on the forehead and at one point below each eye. Two evaluations of ThermoVR were conducted with 15 participants (9 male, 6 female) with an average age of 28 years: a quantitative study of perception accuracy and a qualitative study of the thermal immersion experience. Participants reported that hot stimuli on the forehead were difficult to identify, whereas cold stimuli were identified with 96% accuracy, indicating that cold is perceived better than heat. Users also felt more immersed in the maximum configuration, in which all modules were activated with both hot and cold temperatures. For safety, the temperature was kept between 24 °C and 40 °C. The authors concluded that thermal stimulation significantly improved participants’ sense of immersion. One observation not reported in other studies, but worth noting, is that devices mounted on HMDs can be rather heavy and uncomfortable.
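With a slew rate of ±3 °C/s and a 24 to 40 °C safety window, a controller has to limit both where a module goes and how fast it gets there. A minimal sketch, assuming an idealized module whose surface follows its setpoint at exactly the reported rate (real elements and skin add thermal lag, which this ignores); the function names are hypothetical and this is not ThermoVR’s firmware:

```python
SAFE_MIN_C, SAFE_MAX_C = 24.0, 40.0  # safety range reported for ThermoVR
MAX_RATE_C_PER_S = 3.0               # reported rate of temperature change


def clamp_to_safe(target_c):
    """Keep any requested temperature inside the safety window."""
    return min(max(target_c, SAFE_MIN_C), SAFE_MAX_C)


def next_setpoint(current_c, target_c, dt_s):
    """Move toward the (clamped) target by at most MAX_RATE_C_PER_S * dt_s."""
    target_c = clamp_to_safe(target_c)
    max_step = MAX_RATE_C_PER_S * dt_s
    delta = target_c - current_c
    if abs(delta) <= max_step:
        return target_c
    return current_c + max_step if delta > 0 else current_c - max_step


def seconds_to_reach(current_c, target_c):
    """Idealised ramp time, ignoring thermal lag in the skin and housing."""
    return abs(clamp_to_safe(target_c) - current_c) / MAX_RATE_C_PER_S


print(seconds_to_reach(30.0, 40.0))
print(next_setpoint(30.0, 18.0, 10.0))  # request below the window is clamped
```

Under these assumptions, warming a module from 30 °C to its 40 °C ceiling takes about 3.3 s, and a request for 18 °C ends at 24 °C.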

In 2018, Kim et al. [22] presented a study exploring the relationship between voice and thermal sensation. Their approach uses a haptic prototype whose temperature varies to complement interaction with voice-based intelligent agents (VIAs) such as Alexa, Siri and Bixby. The prototype has two main parts: the VIA configuration and the thermal stimulation. The first was implemented using Amazon’s Alexa Voice Service (AVS) on a Raspberry Pi. For the second, two thermal stimulators were installed at the ends of the prototype and configured for five levels of thermal sensation (cooler, colder, neutral, hot and hotter) delivered to the user’s hands. An infrared heating wire generated heat, while a Peltier element coupled with a heat sink and cooler removed it; combining the two allowed effective temperature changes. Finally, two infrared temperature sensors were installed in the stimulators to measure the temperature in real time. Six participants (3 male, 3 female, aged 22 to 30) evaluated the prototype: they held it with both hands and interacted with Alexa at a room temperature of about 23 °C. Participants generally enjoyed the interaction and, notably, perceived Alexa with interactive thermal augmentation as friendlier and more lifelike than voice-only Alexa. The authors discuss whether touch interaction is necessary and argue that, in certain contexts, especially when voice commands leave users’ hands free, it may seem unnecessary. However, there are contexts in which touch occurs naturally, such as hugging, holding hands or caressing.
In such contexts, a warm and soft sensation can be represented on the skin by applying materials that give a realistic impression, such as silicone, to the touch region. In addition, the study of [22] suggests that thermal stimulation can support information awareness and immersive experiences, since thermal sensations can be associated with perceptions of right or wrong, true or false, and positive or negative.

Thermal Bracelet [34] is a related prototype developed in 2019 by the authors of ThermoVR [35]. It consists of a bracelet worn around the user’s wrist that produces vibration and heating/cooling stimuli. The authors again used Peltier elements, justifying the choice by their ability to change temperature rapidly and to provide either heating or cooling. The bracelet uses several tactile modules [30] to produce vibratory and thermal responses on the skin. These modules were composed of a miniaturized vibrator (Force Reactor AF) and four Peltier elements, two placed diagonally for heating and two for cooling. All modules were controlled by an Arduino Mega microcontroller. A laptop connects (i) to the Arduino Mega via a serial port to issue heating/cooling commands and (ii) to the vibration control driver through its audio interface. The device was evaluated by 12 participants (7 male, 5 female, aged 22 to 34) in three studies. The first explored the perception limits of thermal haptic feedback around the wrist with multiple actuators and selected a suitable configuration for an indoor environment (24 °C room temperature in summer-like weather conditions). The second investigated thermal haptic feedback around the wrist in a semi-realistic scenario, such as while walking. The third explored the possibility of extending haptic notifications around the wrist with spatio-temporal stimuli. The results indicate that perception accuracy was highest for cold feedback with four (99%) and six (95%) modules equally spaced around the wrist. In the later scenarios, the overall response rate for thermal feedback around the wrist (89%) was higher than for vibration (78%).
These data indicate the feasibility of providing thermal tactile feedback on the wrist. Finally, the bracelet’s ability to provide spatio-temporal feedback was investigated while users performed a distracting task, such as playing a game. The results indicate that thermal feedback may be suitable in such situations and that thermal stimuli performed better than vibrotactile stimuli.
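Spatio-temporal stimuli of the kind tested in the third study can be described as a schedule of module activations around the wrist. The sketch below is hypothetical: it assumes modules spaced evenly around the wrist and activated one after another at a fixed interval to produce a sweep, and it defaults to cold stimuli because these were perceived most accurately. It does not reproduce the patterns actually used in [34].

```python
from typing import List, NamedTuple


class Activation(NamedTuple):
    module: int       # index of the module around the wrist
    angle_deg: float  # module position; 0 = top of the wrist (assumed)
    onset_s: float    # when to switch the module on
    mode: str         # "cold" or "warm"


def sweep_pattern(n_modules: int, interval_s: float, mode: str = "cold",
                  clockwise: bool = True) -> List[Activation]:
    """Activate evenly spaced modules one after another to create a sweep.
    Increasing module index is assumed to run clockwise."""
    order = range(n_modules) if clockwise else reversed(range(n_modules))
    return [
        Activation(module, module * 360.0 / n_modules, step * interval_s, mode)
        for step, module in enumerate(order)
    ]


for activation in sweep_pattern(4, 0.5):
    print(activation)
```

The direction of the sweep (clockwise or counter-clockwise) is one way such a pattern could encode information, for example different notification types.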

In 2020, [70] proposed FaceHaptics, a system consisting of a custom robotic arm attached to a head-mounted display. A fan and an electrical resistor connected to the HMD allow the production of hot airflow, which can reach about 55 °C at 3 cm from the user’s skin. To provide sensations of humidity, a relay-activated spray nozzle was added beside the fan. The nozzle, connected through a flexible tube to a Philips Sonicare Airfloss device, can spray small amounts of an air-water mixture onto the user’s face. The small water container provides more than 50 sprays, with a 600 ms delay introduced by the Sonicare device. An Arduino Mega drives the system’s components, which were used to simulate a tropical forest scene created in Unity 3D. The system was evaluated in two studies with 16 participants (11 male, 5 female, aged 19 to 47). In both studies, users wore noise-cancelling headphones together with the head-mounted display and experienced simulated environments. Participants answered demographic and user experience questionnaires before and after the evaluation. The first study investigated how well participants could judge the direction of the airflow provided by the fan; results showed that users judged wind direction well. The second study investigated the effect of the different FaceHaptics elements, namely touch, wind, warmth and wetness, on presence and emotional response during a compelling immersive walkthrough. Presence was rated higher in the multisensory condition for most categories, and the multisensory experience also achieved higher emotional response ratings, measured with the Self-Assessment Manikin (SAM). It was also reported that the direction of tactile stimuli positively affected their association with visual cues.
Finally, it is important to note that the robotic arm compensates for head movement, making the experience more immersive. This hardware design sets FaceHaptics apart from other multisensory head-mounted display solutions.

ThermalWear [2] is a vest, also developed in 2020, to explore the relationship between thermal stimuli and the affective perception of voice messages. Two prototypes were created (a male and a female version), each based on a neoprene diving vest with an embedded Peltier element, chosen, according to the authors, for its ability to change temperature rapidly. The vests also include an ESP8266, a voltage regulator, a type K thermocouple, a MAX31850 for digital conversion, a DC motor driver (BTN8982TA) and a heat sink. The thermocouple measures the temperature in real time at 100 ms intervals, while the DC motor driver varies the voltage applied to the Peltier element, allowing different thermal stimuli. The vest was evaluated in two experiments. The first explored thermal perception across contact medium, thermal intensity and direction of change. In each session, participants put on the ThermalWear prototype and were given a demonstration in which they experienced the coolest and warmest stimuli to become familiar with the stimuli and the Android application. They were then presented with the unique combinations of experimental conditions: eight trials directly on the skin (No-Fabric condition) and eight through a silk patch (Fabric condition). The results of the first experiment support the earlier finding that cold stimuli are perceived more readily than warm ones. The second experiment explored how thermal stimuli on the upper chest influence the perceived emotion of neutrally spoken voice messages. Twelve participants (6 male, 6 female, aged 24 to 32) were recruited, and the procedure followed that of the first experiment.
Regarding valence ratings, the results showed that thermal stimulation does affect the valence of neutrally spoken voice messages, although the effect differs between cool and warm stimuli: warm stimuli increase the valence of positive messages but not of negative ones, whereas cool stimuli lower the valence of both positive and negative messages. The authors found that thermal stimulation of the chest can alter arousal and valence. They also concluded that thermal stimuli on the chest are perceived quickly (in approximately 5 s) and that the equipment does not cause the user discomfort.
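ThermalWear samples its thermocouple every 100 ms and uses a motor driver to vary the voltage applied to the Peltier element, but the survey does not say which control law links the two. The sketch below assumes a simple proportional controller producing a signed duty cycle (positive to warm, negative to cool, which presumes a driver able to reverse polarity) and tests it against a toy first-order plant. The gain and the plant constant are invented, and a real element with heat losses would likely also need integral action to remove steady-state error.

```python
SAMPLE_PERIOD_S = 0.1  # 100 ms thermocouple sampling, as reported for ThermalWear


def peltier_duty(target_c, measured_c, kp=0.5):
    """Proportional control: signed duty cycle in [-1, 1].

    Positive values drive the element to warm the skin side, negative values
    reverse polarity to cool it (assumes a driver that can reverse polarity).
    """
    error_c = target_c - measured_c
    return max(-1.0, min(1.0, kp * error_c))


def simulate(target_c, start_c, steps, gain_c_per_s=4.0):
    """Toy first-order plant: the contact temperature changes in proportion
    to the duty cycle, with no heat losses (an invented model)."""
    temperature_c = start_c
    for _ in range(steps):
        duty = peltier_duty(target_c, temperature_c)  # one thermocouple reading
        temperature_c += gain_c_per_s * duty * SAMPLE_PERIOD_S
    return temperature_c


print(round(simulate(34.0, 30.0, steps=100), 2))  # warm from 30 °C toward 34 °C
```

The duty saturates at ±1 for large errors, so the temperature first ramps at the plant’s maximum rate and then settles exponentially as the error shrinks.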

Much like Thermal Bracelet [34], THED, presented in 2020 by [56], is a wearable thermal display for perceiving thermal sensations in a virtual reality (VR) environment. The equipment consists of a wrist-worn thermal stimulation module (Peltier element and heat sink) and a control module that uses Bluetooth communication (Bluno, Arduino and BLE). The prototype was tested in a VR environment that presents the user with a bonfire in a snowy climate. The main objective of the study was to identify how humans perceive thermal sensations in their hands.

Also in 2020, [33] introduced ThermalBitDisplay, a haptic device that uses Peltier elements to provide different thermal sensations on the user’s skin. The authors chose the smallest available Peltier element so that it could be incorporated into portable devices, such as a ring or bracelet, and to reduce energy consumption. Twelve participants (8 male, 4 female; aged 20 to 50) took part in two studies. The authors tested the following hypotheses: (1) thermal feedback is perceived differently across body parts with different sensitivity; (2) when multiple different thermal stimuli are presented to thermally sensitive parts such as the lips, each stimulus can be perceived separately. The results demonstrated the feasibility of ThermalBitDisplay, mainly because the second study indicated that spatial summation of thermal perception and thermal referral did not occur on the lips: multiple stimuli were perceived separately. Therefore, ThermalBitDisplay, with three Peltier elements, can provide heat and pain stimulation to the lips.

2.3 Commercial products and devices

In addition to the devices mentioned so far, we include some commercial products that provide wind and/or thermal effects. These cover failed crowdfunding projects and discontinued products, as well as some projects that were recently released or are still experimental. They are not included in the previous sections because there is not enough information about their evaluation, results or construction.

Released in 2006, Philips’ amBX Gaming PC peripherals [38] formed the first commercial platform to generate different sensory effects (airflow produced by two fans, a vibration bar and RGB LEDs) associated with audiovisual content. Although since discontinued, amBX was the platform most used in studies that reproduced sensory effects until 2013 [66]. Waltl et al. [68] created a set of MPEG-V annotations that add wind, vibration and lighting effects to video clips. To test these annotations, they used the amBX system and, to increase immersion in one of the test configurations, combined two amBX kits. In 2019, [48] proposed a decoupled, distributed architecture for handling sensory effects and used the amBX platform to compare its performance with the monolithic architecture used by Waltl et al. [68].
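Waltl et al. [68] express their annotations in MPEG-V’s XML-based sensory effect metadata. The sketch below does not reproduce that schema; it is a deliberately simplified, hypothetical in-memory timeline that shows the underlying idea (effects anchored to media time) and how a player could decide which devices, such as the amBX fans or lights, to drive at a given playback instant.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SensoryEffect:
    kind: str          # e.g. "wind", "vibration", "light"
    start_s: float     # offset from the start of the video clip
    duration_s: float
    intensity: float   # normalised to [0, 1]


def active_effects(timeline: List[SensoryEffect], t_s: float) -> List[SensoryEffect]:
    """Effects that should be rendered at playback time t_s (end exclusive)."""
    return [e for e in timeline if e.start_s <= t_s < e.start_s + e.duration_s]


# Hypothetical annotations for one clip.
timeline = [
    SensoryEffect("wind", start_s=2.0, duration_s=3.0, intensity=0.8),
    SensoryEffect("light", start_s=4.0, duration_s=1.0, intensity=0.5),
]
print([e.kind for e in active_effects(timeline, 4.5)])  # ['wind', 'light']
```

Keeping the annotations separate from the device drivers is what allows the same clip to be rendered on one or two amBX kits, or, as in [48], by a distributed architecture.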

VirWind [24] is another platform designed to produce wind effects. It consists of four fan towers that can be distributed around the user to produce three-dimensional wind sensations. The towers are driven by a controller unit that connects to the content-generating device (PC or game console) via USB. A crowdfunding campaign for VirWind was launched in 2015 but was unsuccessful [24].

Birdly [45] was developed in 2014 to simulate the experience of a bird in flight. The user controls the simulator with their arms and hands, mimicking the use of wings. The visuals are presented through an HMD (Oculus Rift), in which the participant sees a virtual landscape in first person, as if flying as a bird. The simulator also provides sound, olfactory and wind stimuli. The olfactory stimulus depends on the location the bird is flying over, and the odors produced can range from the smell of a forest to smells specific to a desert. The commercial version of Birdly was launched in 2019 [47].

VORTX from WhirlWindfx is an environmental simulator for PC games, released in 2018, that creates wind and thermal effects synchronized in real time with the on-screen action. Using sensors that analyze audio and video for loud noises and warm colors such as red and yellow, VORTX triggers ambient-temperature or hot wind to simulate events such as explosions and high-speed motion. Despite being power-hungry (1000 W), the device has been well received by customers, scoring 4.3/5 across 219 reviews. Issues raised in some reviews include constant device noise, even when idle, and impracticalities such as the sensors triggering wind in response to any loud sound, such as music, or to events unrelated to wind or heat. Users also mentioned a lack of synchronization between events and the wind, even though the device is meant to work in real time. This is understandable, as synchronization is a major issue for multisensory devices, especially in real time.
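VORTX’s detection algorithm is proprietary and is not described in the sources surveyed here. The hypothetical heuristic below only illustrates the general idea of triggering on loud audio and warm on-screen colors; the thresholds and the warm-pixel rule are assumptions. It also shows why such a design misfires: any sufficiently loud frame, including music, produces wind.

```python
import math
from typing import Sequence, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0-255


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of an audio frame with samples in [-1, 1]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0


def is_warm(pixel: Pixel) -> bool:
    """Assumed rule of thumb for red/orange/yellow: strong red, weak blue."""
    r, g, b = pixel
    return r > 150 and b < 100 and r >= g


def decide_effect(audio_frame: Sequence[float], video_frame: Sequence[Pixel],
                  loud_threshold: float = 0.5, warm_share: float = 0.3) -> str:
    """Pick an effect for one audio/video frame pair (hypothetical thresholds)."""
    loud = rms(audio_frame) >= loud_threshold
    share = sum(is_warm(p) for p in video_frame) / len(video_frame) if video_frame else 0.0
    if loud and share >= warm_share:
        return "hot_wind"  # e.g. an explosion: loud and mostly orange
    if loud:
        return "wind"      # any loud frame, including music, triggers wind
    return "idle"


print(decide_effect([0.8, -0.8] * 10, [(255, 120, 0)] * 10))  # hot_wind
```

Because the decision uses only the rendered output, it cannot distinguish a soundtrack from an in-game event, and analyzing each frame before actuating adds to the synchronization lag users reported.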

3 Discussion

The first observation from the studies presented is the absence of a standard usability evaluation methodology. This is understandable, as the devices and the ways they work vary widely. Even so, some elements could be identified in almost all surveyed studies. The first was the concern that devices the user can see, hear or feel may break immersion. For example, to mask the sound created by their devices, [63, 73] and [20] asked participants to close their eyes and listen to white or pink noise. Loudspeakers were also used to mask the sound of fans [6], while noise-cancelling headphones were used in [70] and [31]. Yet, despite the headphones’ noise cancellation, [31] reported that the sound remained loud enough for participants to hear, and the authors believe it increased participants’ awareness of the real world and thus contributed to breaking immersion.

This concern is valid because these studies used tactile wind effects to increase spatial perception or improve immersion. Sounds generated by fans or other devices could cue or confuse users, biasing results, and can also disrupt immersion.

The second observation concerns the evaluations themselves: with the exception of [21], which did not perform any, all studies collected qualitative data through questionnaires [17, 22, 41, 70, 71], interviews [4, 31, 70] and/or think-aloud methods [55]. Ratings were collected on Likert scales or variants such as the ASHRAE scale, a Likert-type scale designed specifically to measure thermal sensation [2, 4, 17, 71], and were generally analysed with ANOVA [5, 17, 18, 31, 34, 35, 63, 70, 71].
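For readers unfamiliar with this analysis pipeline, the sketch below encodes responses on the ASHRAE seven-point thermal sensation scale (from −3, cold, to +3, hot) and computes the F statistic of a one-way, between-groups ANOVA. It is illustrative only: several surveyed studies used within-subject designs, which call for repeated-measures ANOVA, and obtaining a p-value requires the F distribution (for example via SciPy’s `scipy.stats.f_oneway`).

```python
# ASHRAE seven-point thermal sensation scale.
ASHRAE_SCALE = {
    "cold": -3, "cool": -2, "slightly cool": -1, "neutral": 0,
    "slightly warm": 1, "warm": 2, "hot": 3,
}


def encode(responses):
    """Convert verbal thermal sensation votes to ASHRAE scores."""
    return [ASHRAE_SCALE[r] for r in responses]


def one_way_anova_f(groups):
    """F statistic for a between-groups one-way ANOVA."""
    all_values = [v for g in groups for v in g]
    n, k = len(all_values), len(groups)
    grand_mean = sum(all_values) / n
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - sum(g) / len(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical votes from two conditions (not data from any surveyed study).
baseline = encode(["neutral", "slightly cool", "neutral", "slightly warm"])
with_fan = encode(["cool", "cool", "slightly cool", "cold"])
print(one_way_anova_f([baseline, with_fan]))
```

The F statistic compares variance between condition means to variance within conditions; a large value suggests the condition shifted thermal sensation votes.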

Regarding the perception of wind and thermal effects, these effects, as expected, improved the user experience in almost all instances. However, some conclusions shared by several authors are noteworthy. The first is that cold effects are more easily noticed and achieve higher accuracy scores than warm or hot effects in all studies that made this comparison [2, 5, 18, 34, 35, 71]. Hot effects were also harder to identify than cold effects even in the study that used olfactory stimuli to create an illusion of temperature variation [4]. This may be explained by the fact that our thermoreceptors (free nerve endings in the skin, liver, skeletal muscles and hypothalamus) are divided into warm and cold receptors, with cold receptors outnumbering warm receptors in human skin by a ratio of thirty to one [19].

A key issue in the field of the Internet of Things, under which most of the devices presented here can be classified, is synchronization and latency, yet only one study addresses it [6]. Synchronizing multisensory effects with the underlying mulsemedia content that generates them is crucial to developing immersive experiences. System latency due to fan inertia and low wind speed is one of the main issues that can compromise the user experience [6]. The problem is compounded for thermal effects, which are not delivered immediately but vary gradually from cold to hot or vice versa. When wind and thermal effects are mixed, the combined heat/cold sensation is even more complex, as one effect can disperse the other.
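Fan latency can be reasoned about with a simple model. Assuming that a fan spins up like a first-order system with time constant τ, it reaches a fraction f of its target speed after t = −τ ln(1 − f), so a player could send the command that much earlier (plus any transport delay) to align the effect with the on-screen event. Both the model and the parameter values below are assumptions; none of the surveyed studies reports them.

```python
import math


def fan_speed(t_s, target, tau_s, start=0.0):
    """Assumed first-order spin-up: speed approaches `target` exponentially."""
    return target + (start - target) * math.exp(-t_s / tau_s)


def time_to_fraction(fraction, tau_s):
    """Time for a first-order system to cover `fraction` of a step change."""
    return -tau_s * math.log(1.0 - fraction)


def command_time(event_time_s, transport_delay_s, tau_s, fraction=0.9):
    """When to send the fan command so that the effect is `fraction`
    developed at the on-screen event (delay and tau assumed known)."""
    return event_time_s - transport_delay_s - time_to_fraction(fraction, tau_s)


print(round(time_to_fraction(0.9, tau_s=0.5), 2))    # 1.15
print(round(command_time(10.0, 0.2, tau_s=0.5), 2))  # 8.65
```

With τ = 0.5 s the fan needs about 1.15 s to reach 90% of its target speed, so an event at 10 s with a 0.2 s transport delay would be commanded at roughly 8.65 s. This kind of look-ahead is only possible when events are known in advance, as with annotated media, not with reactive triggering.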

To deal with the slow, gradual thermal change of both Peltier elements and fans, some alternatives have been proposed. Vortex tubes, used by [73], can quickly generate very low temperatures when supplied with compressed air; however, this method can be expensive and power-hungry. Another alternative is mist guided by ultrasonic transducers [29]: besides delivering a stronger cooling effect at a faster rate than other devices, it maintains the low temperature for longer and delays the return to the initial temperature after the airflow stops.

Another important aspect that most studies have not considered is the power consumption of these devices. Only a few studies highlight it, such as [6, 69] and [4]. These authors state that energy supply is a major problem for thermal interfaces, as Peltier elements require a large amount of electrical power. The problem is compounded for wearable devices, which tend to be mobile, making power supply a significant constraint. This challenge was an important motivation for the device proposed by [4], which achieves temperature illusions with low-power electronics and thus enables the miniaturization of devices that deliver simple temperature sensations. It can therefore be considered an alternative to traditional thermoelectric elements.

The study conducted by [4] showed that olfactory stimuli can be far more energy-efficient (at least 20 times) at generating thermal sensations than conventional devices such as fans and Peltier elements. In another study exploring the impact of smell on thermal sensations, [31] reported that adding an olfactory stimulus effectively increased immersion, whereas adding a tactile stimulus, despite its positive impact, did not produce a statistically significant difference compared with the olfactory stimulus.

Some studies also reported challenges beyond power consumption and latency. For example, [35] stated that participants found the Peltier-based system heavy and uncomfortable after only 20 min of use. For their olfactory system, [4] observed that, although it achieved better thermal sensations than conventional devices, one participant found it “too intense” and preferred the thermal experience without olfactory stimulants. Intensity was also an issue in [71], where users perceived variations in intensity with both hot and cold stimuli, hot sensations being generally less comfortable and felt as more intense. Wettach et al. [69], however, stated that discomfort arose mostly at the low temperatures generated by the Peltier modules. To avoid skin damage or discomfort, it is worth noting, as [19] emphasises, that warm and cold receptors respond to different temperature ranges and rates of change. Warm thermoreceptors are active from 30 °C to 45 °C, while cold thermoreceptors respond to decreasing temperatures from 30 °C to 18 °C. Below 18 °C and above 45 °C, thermal sensation turns into pain. This information is relevant to the development of multisensory devices, as thermal variation should respect these thresholds.
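The thresholds from [19] can be turned into a guard that a device controller applies before any setpoint is sent. A minimal sketch, assuming the skin temperature under the actuator is known and adding a hypothetical 2 °C safety margin inside the pain thresholds. Treating exactly 30 °C as neutral is a simplification, and exposure time, which also matters for injury, is ignored.

```python
COLD_PAIN_BELOW_C = 18.0  # below this, cold is felt as pain [19]
WARM_PAIN_ABOVE_C = 45.0  # above this, heat is felt as pain [19]
NEUTRAL_C = 30.0          # boundary between the cold and warm ranges [19]


def receptor_response(skin_temp_c):
    """Coarse classification of a skin temperature using the ranges in [19]."""
    if skin_temp_c < COLD_PAIN_BELOW_C or skin_temp_c > WARM_PAIN_ABOVE_C:
        return "pain"
    if skin_temp_c < NEUTRAL_C:
        return "cold receptors"
    if skin_temp_c > NEUTRAL_C:
        return "warm receptors"
    return "neutral"  # simplification: exactly at the 30 °C boundary


def safe_setpoint(requested_c, margin_c=2.0):
    """Clamp a requested temperature inside the pain thresholds, keeping a
    hypothetical safety margin."""
    low = COLD_PAIN_BELOW_C + margin_c
    high = WARM_PAIN_ABOVE_C - margin_c
    return min(max(requested_c, low), high)


print(receptor_response(22.0), safe_setpoint(50.0))  # cold receptors 43.0
```

The 24 to 40 °C window used by ThermoVR [35] sits comfortably inside these bounds, which is consistent with its safety-first design.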

The studies cited so far depend on haptic stimuli applied to areas of the skin to deliver thermal sensations with fans, Peltier plates, or other devices. However, some studies take a different approach that can counter the aforementioned problems of power consumption and risk of skin lesions: simulating heat and cold with olfactory stimuli that act on the trigeminal nerve of the nose. These systems have the advantage of simulating thermal sensations with lower energy consumption, as they do not rely on thermal devices, which are inherently power-hungry [6, 69], and they sometimes achieve better results [4].

One such low-power device, which creates an illusion of temperature variation, was developed by [4]. The illusion depends on the properties of certain aromas (e.g. the freshness of mint or the taste of peppers). These odors stimulate not only the olfactory system but also the trigeminal nerve of the nose, which has receptors that respond to temperature. To exploit this, the authors designed a wearable device based on a micro pump (which produces short bursts of 70 ms) and an atomizer (vibrating mesh) that delivers up to three personalized “thermal” scents directly to the user’s nose. When breathing in these aromas, the user feels hot or cold. The authors conducted a series of user studies to identify the most effective trigeminal stimulants for achieving simple hot and cold sensations. Of six candidate scents, two stimulants, thymol and capsaicin, were perceived as warm, while the remaining four (peppermint essential oil, eucalyptol, linalool, and Everclear) were perceived as cooling. A second study examined how these stimulants can induce simple hot and cold sensations in virtual reality, collecting qualitative feedback with questionnaires. Several participants remarked that the cooling trigeminal scents increased their sense of immersion in the environment and that they felt as though the “air breathed in was cold”. The authors stated that their approach is 20 to 52 times more efficient than previously reported conventional thermal equipment. As in the previously mentioned study, the warming sensations were less pronounced.
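The stimulant findings of [4] lend themselves to a simple lookup table that a virtual environment could query when it needs a warm or cool cue. The Python sketch below is not the authors' implementation: the names `stimulants_for`, `schedule_bursts` and `Burst`, and the idea of spacing bursts with a configurable gap, are hypothetical. Only the warm/cool classification of the six stimulants and the 70 ms burst length come from the study.

```python
from __future__ import annotations

from dataclasses import dataclass

# Perceived thermal effect of each trigeminal stimulant, as reported by [4].
STIMULANT_EFFECT = {
    "thymol": "warm",
    "capsaicin": "warm",
    "peppermint essential oil": "cool",
    "eucalyptol": "cool",
    "linalool": "cool",
    "Everclear": "cool",
}

BURST_MS = 70  # duration of one micro-pump burst reported by [4]


@dataclass
class Burst:
    stimulant: str
    start_ms: int
    duration_ms: int = BURST_MS


def stimulants_for(effect: str) -> list[str]:
    """List the stimulants reported to produce the requested effect."""
    return [name for name, e in STIMULANT_EFFECT.items() if e == effect]


def schedule_bursts(stimulant: str, count: int, gap_ms: int) -> list[Burst]:
    """Hypothetical scheduler: `count` bursts separated by `gap_ms` of silence."""
    if stimulant not in STIMULANT_EFFECT:
        raise ValueError(f"unknown stimulant: {stimulant}")
    period = BURST_MS + gap_ms
    return [Burst(stimulant, i * period) for i in range(count)]
```

For example, `schedule_bursts("thymol", 3, 30)` plans bursts starting at 0, 100 and 200 ms. Given the participant who found the scents “too intense”, the burst count and gap are the natural knobs for modulating intensity, although [4] does not describe such a scheme.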

Immersion in virtual environments is an objective property of the technology being used. Therefore, given the pace of technological development, it is difficult to compare the results of these studies across the years [6, 31]. Another limitation of these studies is that they were performed in temperature-controlled environments rather than in “real” or open environments. How this might influence the results and the perception of thermal sensations therefore remains an open research question.

Figure 1 shows the timeline of the development of thermal and wind devices for multisensory human-computer interaction. The concentration of devices between 2018 and 2021 suggests a possible research trend. In addition, each device is color-coded: blue for devices that emit wind, red for devices that emit thermal effects, and purple for devices that use the olfactory sense to induce thermal sensations.

Table 1 compares key features of the related work discussed in this section and summarizes the main parameters considered in the user experience evaluation of the approaches and systems surveyed in this paper. The second and third columns identify the cited work and give the name of the prototype or commercial product, or a brief description of the system or device. The “Type of sensory effects implemented” column shows which types of sensory effects each device can provide to users. “Components for temperature control” lists the electronic components responsible for producing the thermal effects. The “Effect Presentation Mode” column indicates whether the approach uses wearable or non-wearable devices. The “Consump.” column gives the energy consumed to produce the wind and/or thermal effects for each approach: “Low” denotes up to 50 W, “Medium” denotes 50 W to 1 kW, and “High” denotes equipment operating above 1 kW. This three-way division of the power scale corresponds to portable, domestic, and industrial equipment, respectively. The “Assessment Approach” column is either “Subjective”, meaning that the assessment depends on each person’s judgment, or “Objective”, meaning that, unlike a subjective assessment, which allows interpretation, reflection, and individual speculation, it is direct and literal. The “Hypothesis Test” column indicates whether the experiment relied on equipment measurements or on user questionnaires. The acronyms UEQ and RUM in the table stand for User Experience Questionnaire and Real User Monitoring, respectively, and NIA stands for No Information Available. Finally, the last column shows the number of participants in each study.
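The consumption classes used in Table 1 amount to a three-way threshold function. The Python sketch below illustrates this mapping; the function name `power_class` is hypothetical, and treating exactly 50 W as “Low” and exactly 1 kW as “Medium” is an assumption, because the text gives the ranges without stating which class owns the boundary values.

```python
def power_class(watts: float) -> str:
    """Map a device's power draw to the consumption classes of Table 1.

    Low: up to 50 W (portable); Medium: 50 W to 1 kW (domestic);
    High: above 1 kW (industrial). Boundary handling is an assumption.
    """
    if watts < 0:
        raise ValueError("power must be non-negative")
    if watts <= 50:
        return "Low"
    if watts <= 1000:
        return "Medium"
    return "High"
```

Under this scheme a hypothetical 1.5 kW heater would be classed as “High”, while a few-watt wearable would fall under “Low”.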

Most devices deliver airflow at ambient temperature. Of the few that provide airflow with temperature control, most can heat the air and use Peltier plates to cool it. The devices with the highest energy consumption generally use heating lamps or thermal blowers. Finally, wearable devices tend to consume less power but are more complex to implement.

In addition to the technical aspects identified here, a historical trend in the design of these devices was also observed. Originally, haptic and thermal devices tended to be non-wearable. With the advancement of IoT technologies, the trend has increasingly shifted towards wearable devices. This shift can be explained by the ease of communication between “things” and devices, which facilitates the construction and evaluation of haptic devices with different functionalities. There is also a trend towards using physiological data capture devices (e.g. electroencephalography, heart rate, and respiration sensors) in conjunction with multisensory devices to better understand their effects and user feedback [7, 60].

Suhaimi et al. [60] further note that, despite growing interest in using physiological signals to measure the quality of the user experience, obstacles remain, such as a lack of studies on emotion classification in virtual reality or with other mulsemedia devices. Such studies matter because immersive experiences could evoke stronger emotional responses than traditional stimuli presented through computer monitors or speakers, since mulsemedia combines multiple senses with the immersive sensation of “being there”.

4 Conclusion

In this paper, we presented an overview of approaches for generating tactile effects through airflow and temperature variation. As shown, few platforms for generating sensory effects include devices capable of producing airflow at variable temperatures. In the reviewed studies, (i) users usually experience airflow produced by fans that move air at room temperature, and (ii) thermal components are rarely integrated into devices intended to produce airflow.

Note that in the solution of [44], which has fans and thermal actuators, the wind is neither heated nor cooled. The same holds for the system proposed by Hülsmann et al. [20], which focused only on generating heat: lamps were used to heat the room, and there was no cooling mechanism. Han et al. [17] also focused only on generating the sensation of heat, produced by a thermal blower that generates a flow of hot air. A common solution in the aforementioned studies was the use of humidifiers [21] or ultrasonic transducers [17, 18, 29] to vaporize water and direct the airflow towards the user. However, such solutions generate a cooling sensation without actually changing the temperature of the generated wind.

Solutions using vortex tubes and dry ice were described in the studies by Xu et al. [73] and Nakajima et al. [29], respectively. Although both prototypes worked well according to the results presented by the authors, both are complex to build and impractical. The first requires an air compressor, a large device that is not readily available, whilst the second has demanding storage requirements, since the polyethylene tank must be kept in a freezer at -78 °C. In addition to devices with heated or cooled wind effects, contact heating and cooling devices were evaluated in order to identify which components are most commonly used for temperature variation.

Our survey revealed that Peltier plates were the most commonly used cooling solution in the evaluated proposals [2, 22, 32–35, 44, 71], because they (i) provide relatively rapid changes in temperature, (ii) can generate heat (heating) or absorb heat (cooling) on their faces, and (iii) allow simple temperature control [33]. On the other hand, according to Xu et al. [73], the vortex tube, the element chosen by the authors to lower the temperature in their prototype, had a much faster response time than Peltier plates for cooling and was recommended for applications where this requirement is mandatory. This apparent contradiction warrants further investigation, but it may be attributable to the difficulty of using Peltier plates to cool or warm airflow. Brooks et al. [4] argued that Peltier plates have high energy consumption and proposed a pseudo-haptic solution that creates an illusion of temperature by stimulating the trigeminal nerve. However, the sensation of heat or cold stimulated by odors can be influenced by cultural aspects [72]. Pseudo-haptic systems have another limitation: it has yet to be studied how the brain reacts to ambiguous multisensory data [39], taking into account context, target, and parallel stimuli.
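To illustrate what “simple temperature control” of a Peltier plate can look like, the following Python sketch closes the loop with a proportional-integral (PI) controller. It is not taken from any of the surveyed works: the first-order thermal model and all of its numbers (25 °C ambient, 10 s time constant, 2 °C/s at full drive) and the controller gains are hypothetical values chosen only so that the example runs. It relies on the property noted above that a Peltier plate can heat or cool depending on the direction of the current, modelled here as a signed drive in [-1, 1].

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """PI controller with conditional-integration anti-windup.

    The output is a normalized drive in [-1, 1]: positive values heat the
    skin-facing side of the plate, negative values (reversed polarity) cool it.
    """
    kp: float
    ki: float
    integral: float = 0.0

    def step(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        candidate = self.integral + error * dt
        drive = self.kp * error + self.ki * candidate
        if -1.0 <= drive <= 1.0:
            # Only accumulate the integral while the actuator is not saturated.
            self.integral = candidate
        return max(-1.0, min(1.0, drive))


def simulate(setpoint: float, seconds: float, dt: float = 0.1) -> float:
    """Run the controller against a toy first-order plate model (hypothetical)."""
    ambient, tau, gain = 25.0, 10.0, 2.0  # deg C, s, deg C/s at full drive
    temp = ambient
    ctrl = PIController(kp=0.5, ki=0.1)
    for _ in range(round(seconds / dt)):
        drive = ctrl.step(setpoint, temp, dt)
        # Passive relaxation towards ambient plus active heating/cooling.
        temp += dt * (-(temp - ambient) / tau + gain * drive)
    return temp
```

With these assumed values, `simulate(40.0, 120.0)` settles close to 40 °C and `simulate(20.0, 120.0)` close to 20 °C. The anti-windup rule matters because the drive saturates for the first few seconds of any large step; without it, the integral term would overshoot the target, which is undesirable near the pain thresholds discussed earlier.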

More generally, the findings of this paper confirm the usual challenges of mulsemedia systems: control and delivery time (latency), spatial location, and the quality and quantity of effects during stimulus delivery, in addition to the complex integration of simultaneous effects (valence) from two or more senses to create a third, such as combining tactile stimuli with thermoreceptor stimulation to simulate environmental or spatial awareness, or blending smell and taste to simulate thermal effects.

Furthermore, the identified devices revealed a historical trend: devices for stimulating heat and cold were predominantly non-wearable and restricted to wind emission. However, from 2015 to 2021, thermal devices for emitting heat became more common and wearable devices became commonplace. Given the advent of the IoT, it is understandable that mulsemedia devices are following the trend towards wearables.

Our overview does not consider the joint effects of wind (usually without temperature control) and smell in mulsemedia systems. Thus, studies such as those conducted by [11] and [31] are outside the scope of this paper. The former reported QoE experiments with 360° videos whose content was synchronized with olfactory stimuli and with tactile stimuli delivered by airflow, produced by prototype devices. The effects were generated by a fan without temperature control, driven by an Arduino, and an odor emitter controlled by a DFRobot Bluno Nano. Participants watched videos with different degrees of dynamism, with and without annotated smell and airflow effects. The results of [11] showed that both the overall perceived quality and user satisfaction are significantly higher when sensory effects are used in the videos. In related work, [31] studied the effect of tactile and olfactory stimuli on participants’ sense of immersion while watching a 360° video using an HMD. For olfactory stimulation, a SensoryCo SmX-4D aroma system fed by compressed air was used: a scent is preloaded and subsequently released into the environment through SensoryCo’s proprietary nozzle. The main conclusion of this work was that adding an olfactory stimulus significantly increases immersion, whereas adding a tactile stimulus, despite a positive impact, did not produce a statistically significant effect, suggesting that olfactory stimuli should be prioritized over tactile ones because they are more effective.
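Both studies rely on sensory effects annotated against the video timeline. The Python sketch below shows one possible way a player could decide which actuators (for example, an airflow fan or an odor emitter) to drive at a given playback time. The `Effect` record, its field names and the half-open interval convention are hypothetical and are not taken from [11] or [31].

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Effect:
    kind: str          # e.g. "airflow" or "scent" (hypothetical labels)
    start_s: float     # start time on the video timeline, in seconds
    duration_s: float  # how long the effect should stay active, in seconds
    intensity: float = 1.0  # normalized 0..1 (hypothetical)

    def active_at(self, t: float) -> bool:
        # Half-open interval: active from start_s up to, not including, the end.
        return self.start_s <= t < self.start_s + self.duration_s


def active_effects(timeline: list[Effect], t: float) -> list[Effect]:
    """Return the annotated effects a renderer should be driving at time t."""
    return [e for e in timeline if e.active_at(t)]
```

A renderer polling this function at each video frame would still have to compensate for actuator latency, for instance by triggering a fan or scent slightly ahead of `start_s`, which is one of the mulsemedia challenges noted above.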

Integrating wind and thermal devices in a digital context is also challenging, as the resulting effects can cancel each other out (the wind dissipates the thermal effect), which can affect the user experience. Although this paper has presented some attempts to provide these effects separately, combining them might enhance certain types of experiences (for example, experiencing the climate in a virtual environment).
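The cancellation effect can be made concrete with standard convective heat-transfer relations. These are textbook results rather than findings of the surveyed works, and treating skin as a flat plate of length $L$ in laminar airflow is a strong simplification. The heat flux $q''$ from skin at temperature $T_s$ to air at temperature $T_\infty$ follows Newton's law of cooling, and for laminar forced convection the mean heat-transfer coefficient $\bar{h}$ grows with the square root of the air speed $v$ ($k$: thermal conductivity of air, $\nu$: kinematic viscosity, $\mathrm{Pr}$: Prandtl number):

```latex
q'' = \bar{h}\,(T_s - T_\infty), \qquad
\overline{\mathrm{Nu}}_L = \frac{\bar{h}\,L}{k} = 0.664\,\mathrm{Re}_L^{1/2}\,\mathrm{Pr}^{1/3}, \qquad
\mathrm{Re}_L = \frac{v\,L}{\nu}
\quad\Longrightarrow\quad \bar{h} \propto \sqrt{v}
```

Whenever the air is cooler than the skin ($T_\infty < T_s$), $q'' > 0$ and a faster airflow removes heat more quickly, counteracting any warm stimulus applied to the same area. Only air heated above skin temperature reverses the sign of $q''$ and delivers heat, which is why a room-temperature fan can produce a cooling sensation but never a warming one.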

Given the importance of multisensory stimuli for a greater sense of immersion in mulsemedia and VR, it is plausible that the ability to emit wind effects at specific temperatures has a significant impact on the user experience. Thus, it is imperative that future work targets both the development of thermal wind devices and user QoE studies, in order to gain a deeper understanding of how such effects can be incorporated in a multisensory context to maximize the user experience.

Notes

Manufacturer of semiconductors that was acquired by Microchip Technology in 2016.

References

Ademoye OA, Ghinea G (2013) Information recall task impact in olfaction-enhanced multimedia. ACM Trans Multimed Comput Commun Appl (TOMM) 9(3):1–16

Ali AE, Yang X, Ananthanarayan S, Röggla T, Jansen J, Hartcher-O’Brien J, Jansen K, Cesar P (2020) ThermalWear: exploring wearable on-chest thermal displays to augment voice messages with affect. In: Proceedings of the 2020 CHI conference on human factors in computing systems. ACM. https://doi.org/10.1145/3313831.3376682

Billinghurst M, Starner T (1999) Wearable devices: new ways to manage information. Computer 32(1):57–64. https://doi.org/10.1109/2.738305

Brooks J, Nagels S, Lopes P (2020) Trigeminal-based temperature illusions. In: Proceedings of the 2020 CHI conference on human factors in computing systems (CHI ’20). ACM, New York, NY, USA, pp 1–12. https://doi.org/10.1145/3313831.3376806

Cai S, Ke P, Narumi T, Zhu K (2020) ThermAirGlove: a pneumatic glove for thermal perception and material identification in virtual reality. In: 2020 IEEE conference on virtual reality and 3D user interfaces (VR), pp 248–257. https://doi.org/10.1109/VR46266.2020.00044

Cardin S, Thalmann D, Vexo F (2007) Head mounted wind. In: Proceedings of the 20th annual conference on computer animation and social agents (CASA 2007), pp 101–108