Abstract

This work proposes a dual image watermarking algorithm to protect medical data against copyright violations. A DICOM image serves as the host image, and two watermark images are embedded: the MNNIT logo and the personal data of the patient. The method combines the advantages of Schur decomposition, lifting wavelet transform (LWT), discrete cosine transform (DCT) and singular value decomposition (SVD). Because the scaling factor is a vital parameter of a watermarking technique, the firefly optimization technique is used to obtain an optimized scaling factor. Speeded-up robust features (SURF) are used to authenticate the watermarked image. Performance is evaluated using the peak signal-to-noise ratio (PSNR), normalized correlation coefficient (NCC), and structural similarity index measurement (SSIM). The method is tested against various attacks such as Salt and Pepper noise, Gaussian noise, Gaussian low pass filter, Average filter, Median filter, Histogram equalization, Sharpening, Rotation and Region of interest filtering. The results show a high level of robustness and imperceptibility, and applying the SURF technique shows that the features of the input host image and the watermarked image match correctly.

1 Introduction

With the rapid development of digital technologies, several pressing security concerns have arisen, including the unlawful copying and distortion of private information. Digital image watermarking is considered a viable solution to these concerns. In a watermarking algorithm, invisibility (imperceptibility) means that the watermarked image is similar enough to the cover image that the watermark cannot be noticed. Robustness means that the watermark can withstand common attacks. DICOM stands for Digital Imaging and Communications in Medicine; it is a global standard for storing, exchanging and transmitting medical images. The standard is used by imaging technologies such as X-ray, ultrasound, microscopy, MRI and CT. It provides several benefits: (1) both image and patient information are transferred in a single network session, (2) patient safety is improved, and (3) detailed acquisition and diagnostic protocol information is saved. The recent literature on image watermarking methods is summarized in Table 1.

The literature survey in Table 1 shows that image watermarking poses several risks and challenges. The first challenge is finding a balance between imperceptibility, robustness, and capacity, because enhancing one aspect has a detrimental impact on the others, yet an effective watermarking system should have all three traits at once. The amount of data conveyed is referred to as the payload size, and a larger payload reduces imperceptibility. A good watermarking strategy must balance all of these features. This work therefore proposes a highly robust and imperceptible dual image watermarking method. The scaling factor plays a vital role in the proposed scheme, so an optimization technique with a dedicated fitness function is used to obtain its optimum value. Authentication is the process of verifying that the features of the watermarked medical image remain valid and that the proposed watermarking scheme and subsequent attacks have had no adverse effect on them. In this work, SURF features are used for authentication.

The rest of this paper is organized as follows: Section 2 covers the preliminaries, Section 3 presents the proposed watermark embedding and extraction process, Section 4 gives the results and discussion, Section 5 covers SURF feature authentication, and Section 6 concludes the paper.

2 Preliminaries

The proposed watermarking technique uses LWT-DCT-SVD and Schur decomposition for embedding. The work proposed in this paper is different from [27], as in this work, Firefly optimization is used instead of Particle swarm optimization and the scheme is also a dual watermarking scheme. DWT-DCT-SVD with backpropagation neural network is used in [36] to get the watermarked image, but the proposed work uses LWT-DCT-SVD with Schur decomposition for embedding.

2.1 Lifting wavelet transform (LWT)

Sweldens [30] introduced the lifting wavelet transform in 1998. The lifting wavelet eases the problem of reversibility by working directly in the integer domain, which standard wavelet transforms do not do. These advantages of LWT over the typical wavelet transform aid in developing watermarking methods while also improving computing efficiency [33]. Splitting, prediction, and updating are the three essential steps of the lifting scheme [29]. The block diagram is shown in Fig. 1.

Split: Lazy wavelet transform is another name for splitting. In this step, the input signal \( {S}_j \) is divided into even and odd samples, \( {S}_{j,2k} \) and \( {S}_{j,2k+1} \) [33].

Predict: This stage can be viewed as a high-pass filtering procedure [33]. The odd samples \( {S}_{j,2k+1} \) are predicted from the even samples \( {S}_{j,2k} \), and the detail (difference) signal \( {d}_{j-1} \) is generated.

Update: This step can be viewed as a low-pass filtering operation [33]. The low-frequency component \( {S}_{j-1} \), obtained by applying an update operator, represents a coarse approximation to the original signal \( {S}_j \).
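The three lifting steps can be illustrated with a minimal sketch. It assumes the integer Haar wavelet, which is only one possible choice (the wavelet actually used by the authors is not stated here), and the function names `lwt_forward` and `lwt_inverse` are hypothetical.

```python
def lwt_forward(signal):
    """One level of integer Haar lifting on a list of ints: split, predict, update."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    even = signal[0::2]  # split: samples S_{j,2k}
    odd = signal[1::2]   # split: samples S_{j,2k+1}
    # Predict: the detail is the odd sample minus its prediction (the even neighbour).
    detail = [o - e for o, e in zip(odd, even)]
    # Update: the approximation is the floored average of each even/odd pair.
    approx = [e + (d >> 1) for e, d in zip(even, detail)]
    return approx, detail


def lwt_inverse(approx, detail):
    """Undo the update, then the predict step, then merge the two halves."""
    even = [a - (d >> 1) for a, d in zip(approx, detail)]
    odd = [d + e for d, e in zip(detail, even)]
    merged = []
    for e, o in zip(even, odd):
        merged.extend((e, o))
    return merged


samples = [12, 14, 200, 198, 7, 7, 90, 100]
approx, detail = lwt_forward(samples)
restored = lwt_inverse(approx, detail)
```

Because every step uses only integer additions, subtractions and shifts, the inverse recovers the input exactly, which is the reversibility property described above.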

2.2 Discrete cosine transform (DCT)

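Unlike the discrete Fourier transform, the discrete cosine transform produces only real-valued coefficients. The DCT can compact the pixel information of an image into a small number of DCT coefficients, consolidating the data into fewer values [7]. The 2D DCT of an N × N image f(x, y) is defined as shown in Eq. (1):

\( F\left(i,j\right)=P(i)P(j)\sum_{x=0}^{N-1}\sum_{y=0}^{N-1}f\left(x,y\right)\cos \left[\frac{\left(2x+1\right) i\pi}{2N}\right]\cos \left[\frac{\left(2y+1\right) j\pi}{2N}\right] \)  (1)

The inverse discrete cosine transform (IDCT) in two dimensions is defined as shown in Eq. (2) [7]:

\( f\left(x,y\right)=\sum_{i=0}^{N-1}\sum_{j=0}^{N-1}P(i)P(j)F\left(i,j\right)\cos \left[\frac{\left(2x+1\right) i\pi}{2N}\right]\cos \left[\frac{\left(2y+1\right) j\pi}{2N}\right] \)  (2)

where F(i, j) is the DCT coefficient, i, j, x, y = 0, 1, …, N − 1, and \( P(i)=\left\{\begin{array}{c}\sqrt{1/N},i=0\\ {}\sqrt{2/N},i\ne 0\end{array}\right. \) \( P(j)=\left\{\begin{array}{c}\sqrt{1/N},j=0\\ {}\sqrt{2/N},j\ne 0.\end{array}\right. \)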
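As a rough illustration of the orthonormal 2D DCT with the P(i), P(j) weights given above, the following standard-library sketch builds the transform as a matrix product. The helper names (`dct_matrix`, `dct2`, `idct2`) are hypothetical and the code is meant for small blocks only, not as the implementation used in the paper.

```python
import math


def dct_matrix(n):
    """Rows are the DCT basis vectors: C[i][x] = P(i) * cos((2x + 1) * i * pi / (2n))."""
    rows = []
    for i in range(n):
        p = math.sqrt(1.0 / n) if i == 0 else math.sqrt(2.0 / n)
        rows.append([p * math.cos((2 * x + 1) * i * math.pi / (2 * n)) for x in range(n)])
    return rows


def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b))) for c in range(len(b[0]))]
            for r in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def dct2(block):
    """Forward 2D DCT of a square block: F = C f C^T."""
    c = dct_matrix(len(block))
    return matmul(matmul(c, block), transpose(c))


def idct2(coeffs):
    """Inverse 2D DCT: f = C^T F C (C is orthogonal)."""
    c = dct_matrix(len(coeffs))
    return matmul(matmul(transpose(c), coeffs), c)


flat = [[10.0] * 4 for _ in range(4)]
coeffs = dct2(flat)  # all energy lands in coeffs[0][0]
```

For a constant block, only the top-left (DC) coefficient is non-zero, which is a small instance of the energy compaction described above.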

2.3 Schur decomposition (SD)

The Schur decomposition is a valuable technique in numerical linear algebra. For a real matrix H, it can be defined as shown in Eq. (3):

\( \left[U,D\right]=\operatorname{Schur}(H) \)  (3)

where U is a unitary matrix and D is an upper triangular matrix. The matrix H can be recovered as shown in Eq. (4):

\( H=U\times D\times {U}^{\prime } \)  (4)

where U′ is the transpose of matrix U.

2.4 Singular value decomposition (SVD)

The SVD of an N × M matrix C can be written as [7]:

\( C=L\Sigma {R}^T \)  (5)

where L and R are the unitary orthogonal matrices in Eq. (5), Σ = diag (σ1, σ2, ⋯, σr, 0, ⋯, 0) is the singular value matrix, σi = √λi (i = 1, 2, …, r) with λi the nonzero eigenvalues of CᵀC, and r is the rank of matrix C. The SVD is widely used in image processing, particularly in digital watermarking. The singular values of a matrix characterize its data distribution and are reasonably stable; a slight change in a singular value does not affect the visual quality of the image [25]. Furthermore, the SVD places no limit on the size of the image matrix.

2.5 Firefly optimization

The firefly algorithm for multimodal optimization was proposed by Yang [35]. It is built on the following three rules: (1) any firefly can be attracted to any other brighter firefly regardless of sex, (2) the brightness of a firefly is determined by the encoded objective function, and (3) attractiveness is directly proportional to brightness, and both decrease as distance increases. A firefly therefore moves towards a brighter one, and if there is no brighter one, it moves randomly. From elementary physics, the light intensity is inversely proportional to the square of the distance (r). The variation of attractiveness β can be defined as shown in Eq. (6):

\( \beta ={\beta}_o{e}^{-\gamma {r}^2} \)  (6)

where βo is the attractiveness at r = 0 and γ is the light absorption coefficient. If the firefly located at Xj is more attractive (brighter) than the firefly located at Xi, the firefly at Xi moves towards Xj. The position update of the firefly located at Xi can be defined as shown in Eq. (7):

\( {X}_i^{T+1}={X}_i^T+{\beta}_o{e}^{-\gamma {r}^2}\left({X}_j^T-{X}_i^T\right)+{\alpha}_T{\in}_i^T \)  (7)

where T is the iteration number, \( \left({X}_j^T-{X}_i^T\right) \) is the displacement between the two fireflies (r is its length), \( {\beta}_o{e}^{-\gamma {r}^2} \) is the attractiveness, αT is the parameter that controls randomness and lies between 0 and 1, and \( {\in}_i^T \) is a vector of random numbers. If βo = 0, the update becomes a simple random walk. If γ = 0, it reduces to a variant of particle swarm optimization (PSO). αT can be defined as shown in Eq. (8):

\( {\alpha}_T={\alpha}_o{\delta}^T \)  (8)

where αo is the initial randomness scaling factor, and δ is essentially a cooling factor. For most applications, δ = 0.95 to 0.97, βo = 1, and αo = 0.01L (L = average scale of the problem of interest). The parameter γ should also be related to the scale L; in general, we can set \( \gamma =\frac{1}{\sqrt{L}} \). The firefly algorithm has two inner loops over the population (n) and one outer loop over the iterations (T), so the complexity in the extreme case is O(n²T). If n is small and T is large, the computational cost is relatively low because the complexity is linear in T. Different cases of firefly optimization are shown in Fig. 2. The flow chart of firefly optimization is shown in Fig. 3.

2.5.1 Advantages of firefly optimization

- Firefly optimization is based on attraction, and attractiveness decreases with distance. As a result, the whole population can automatically subdivide into subgroups.
- The parameters can be tuned to control the randomness as iterations proceed, so that convergence can be sped up.
- It has a natural capability for dealing with multimodal optimization.
- It offers high ergodicity and diversity in the solutions.

2.5.2 Working of firefly optimization

- Parameter settings: population size = 5, number of iterations = 20, βo = 1, γ = 0.01, δ = 0.97, \( {\in}_i^T=\left(\mathit{\operatorname{rand}}-\frac{1}{2}\right)\times scale \), and dimension of the problem = 3.
- Fitness function: the fitness function is given by Eq. (9), in which NCC1 is the normalized correlation coefficient for the first watermark image, NCC2 is the normalized correlation coefficient for the second watermark image, a represents the number of attacks, and μ is the number of host images used.
- Randomly initialize the position (P) of the population using Eq. (10), in which rand is a random number between 0 and 1, the lower limit for NCC is 0.9, and the upper limit is 1.
- Compute the fitness value using Eq. (9) for every firefly.
- Compare the fitness value of the first firefly with that of the second. If the value of the first firefly is greater, retain its position; if the value of the second firefly is larger, use Eq. (7) to move the first firefly towards the second. Compare the first firefly with every other firefly in this way and update its position when needed. Then repeat the comparison for every firefly against all the others until the iterations are complete.
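The procedure above can be sketched as a short loop. This is a minimal illustration rather than the authors' implementation: the fitness of Eq. (9) is not reproduced, so any callable can be passed in (the quadratic below is a hypothetical stand-in); the initialisation `lower + rand * (upper - lower)` is an assumed form of Eq. (10); taking L as the search range and clipping positions to the bounds are further assumptions.

```python
import math
import random


def firefly_maximize(fitness, dim=3, n=5, iterations=20, lower=0.9, upper=1.0,
                     beta0=1.0, gamma=0.01, delta=0.97, seed=0):
    """Firefly search that maximises `fitness` over a box [lower, upper]^dim."""
    rng = random.Random(seed)
    scale = upper - lower
    alpha0 = 0.01 * scale  # alpha_o = 0.01 L, with L assumed to be the search range
    # Assumed initialisation: uniform random positions inside the bounds.
    pos = [[lower + rng.random() * scale for _ in range(dim)] for _ in range(n)]
    light = [fitness(p) for p in pos]
    for t in range(iterations):
        alpha = alpha0 * delta ** t  # randomness shrinks with the cooling factor
        for i in range(n):
            for j in range(n):
                if light[j] > light[i]:  # firefly i moves towards the brighter j
                    r2 = sum((a - b) ** 2 for a, b in zip(pos[i], pos[j]))
                    beta = beta0 * math.exp(-gamma * r2)  # attractiveness
                    pos[i] = [
                        min(upper, max(lower, xi + beta * (xj - xi)
                                       + alpha * (rng.random() - 0.5) * scale))
                        for xi, xj in zip(pos[i], pos[j])
                    ]  # attraction plus random step, clipped to the bounds
                    light[i] = fitness(pos[i])
    best = max(range(n), key=lambda k: light[k])
    return pos[best], light[best]


# Hypothetical stand-in fitness: peak at 0.95 in every dimension.
def toy_fitness(p):
    return -sum((x - 0.95) ** 2 for x in p)


best_position, best_score = firefly_maximize(toy_fitness)
```

In this sketch the brightest firefly of a sweep simply stays in place; a random move for it, as mentioned in the rules, could be added without changing the rest of the loop.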

3 Proposed watermarking algorithm

This section explains the watermark embedding and extraction procedures. For the embedding process, liver ultrasound images [size: 512 × 512] of three different patients are used as host images, together with two watermark logo images. The MNNIT logo [size 256 × 256] is the first watermark image, and the details of the patient [size 256 × 256] form the second. The block diagrams of the embedding and extraction algorithms are shown in Figs. 4 and 5, respectively.

3.1 Watermark embedding process

- Step 1: Decompose the input liver ultrasound image by applying a three-level lifting wavelet transform, and pick two sub-bands (HL3 and LH3).
- Step 2: Perform the Schur decomposition on the sub-bands to get the unitary and upper triangular matrices. In Eq. (11), U1 is a unitary matrix, and T1 is the upper triangular matrix for the HL3 sub-band. In Eq. (12), U2 is a unitary matrix, and T2 is the upper triangular matrix for the LH3 sub-band.
- Step 3: Apply singular value decomposition (SVD) to the upper triangular matrices obtained in Step 2 to get two dominant matrices. In Eq. (13), L1 is the left unitary orthogonal matrix, R1 is the right unitary orthogonal matrix, and ∑1 is the dominant matrix corresponding to T1. In Eq. (14), L2 is the left unitary orthogonal matrix, R2 is the right unitary orthogonal matrix, and ∑2 is the dominant matrix corresponding to T2.
- Step 4: Take the first watermark image: the MNNIT logo.
- Step 5: Perform a two-level lifting wavelet transform on the MNNIT logo and pick the HLMNNIT sub-band.
- Step 6: Apply DCT on the HLMNNIT sub-band to get HLMNNIT_DCT.
- Step 7: Apply singular value decomposition (SVD) to HLMNNIT_DCT to obtain its dominant matrix. In Eq. (15), \( {L}_1^W \) is the left unitary orthogonal matrix, \( {R}_1^W \) is the right unitary orthogonal matrix, and \( {\sum}_1^W \) is the dominant matrix corresponding to the first watermark image.
- Step 8: Take the second watermark image: Details of the patient.
- Step 9: Perform a two-level lifting wavelet transform on Details of the patient, and pick the LHDetails sub-band.
- Step 10: Apply DCT on the LHDetails sub-band to get LHDetails_DCT.
- Step 11: Apply singular value decomposition (SVD) to LHDetails_DCT to obtain its dominant matrix. In Eq. (16), \( {L}_2^W \) is the left unitary orthogonal matrix, \( {R}_2^W \) is the right unitary orthogonal matrix, and \( {\sum}_2^W \) is the dominant matrix corresponding to the second watermark image.
- Step 12: Add the dominant matrices of both watermark images to the dominant matrices of the host image. In Eq. (17), α is the scaling factor, and S1 is the modified dominant matrix. In Eq. (18), α is the scaling factor, and S2 is the modified dominant matrix.
- Step 13: Rebuild the sub-bands by applying inverse SVD and inverse Schur decomposition, then apply the three-level inverse LWT to get the watermarked image.
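The equations referenced in the embedding steps can plausibly be written as follows. This is a reconstruction from the symbol definitions given in the steps, not a verbatim copy of the published equations; in particular, the additive form of Eqs. (17) and (18) and the pairing of ∑1 with the first watermark and ∑2 with the second are assumptions.

```latex
\begin{align}
[U_1, T_1] &= \operatorname{Schur}(HL_3) \tag{11} \\
[U_2, T_2] &= \operatorname{Schur}(LH_3) \tag{12} \\
T_1 &= L_1 \Sigma_1 R_1^{T} \tag{13} \\
T_2 &= L_2 \Sigma_2 R_2^{T} \tag{14} \\
HL_{MNNIT\_DCT} &= L_1^{W} \Sigma_1^{W} \left(R_1^{W}\right)^{T} \tag{15} \\
LH_{Details\_DCT} &= L_2^{W} \Sigma_2^{W} \left(R_2^{W}\right)^{T} \tag{16} \\
S_1 &= \Sigma_1 + \alpha \, \Sigma_1^{W} \tag{17} \\
S_2 &= \Sigma_2 + \alpha \, \Sigma_2^{W} \tag{18}
\end{align}
```

The sizes are consistent with this reading: a three-level transform of a 512 × 512 host and a two-level transform of a 256 × 256 watermark both give 64 × 64 sub-bands, so the dominant matrices can be added element-wise.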

3.2 Watermark extraction process

- Step 1: Decompose the watermarked liver ultrasound image by applying a three-level lifting wavelet transform, and pick two sub-bands (HLW and LHW).
- Step 2: Apply Schur decomposition on both sub-bands and then apply SVD to get two dominant matrices.
- Step 3: Apply inverse embedding to both dominant matrices. In Eq. (19), \( {S}_1^W \) is the dominant matrix obtained after applying reverse embedding, and \( {\sum}_1^{W\prime } \) is the dominant matrix of the watermarked image. In Eq. (20), \( {S}_2^W \) is the dominant matrix obtained after applying reverse embedding, and \( {\sum}_2^{W\prime } \) is the dominant matrix of the watermarked image.
- Step 4: Apply inverse SVD and inverse DCT to the dominant matrices recovered in Step 3.
- Step 5: Apply the two-level inverse lifting wavelet transform to get the extracted watermark.
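The embedding and reverse-embedding steps can be sketched on the singular values alone. The sketch assumes an additive rule (host singular values plus α times the watermark singular values) and its algebraic inverse, which requires the host singular values at extraction time; both the rule and the function names are illustrative assumptions, and the numbers are made up.

```python
def embed_singular_values(host_sv, mark_sv, alpha):
    """Assumed additive embedding: S = Sigma_host + alpha * Sigma_watermark."""
    if len(host_sv) != len(mark_sv):
        raise ValueError("singular value lists must have the same length")
    return [h + alpha * w for h, w in zip(host_sv, mark_sv)]


def extract_singular_values(marked_sv, host_sv, alpha):
    """Inverse of the assumed rule: Sigma_watermark = (S - Sigma_host) / alpha."""
    if alpha == 0:
        raise ValueError("alpha must be non-zero")
    return [(s - h) / alpha for s, h in zip(marked_sv, host_sv)]


# Illustrative values only, not taken from the paper.
host = [120.0, 45.0, 8.0]
mark = [30.0, 12.0, 2.0]
alpha = 0.05

marked = embed_singular_values(host, mark, alpha)
recovered = extract_singular_values(marked, host, alpha)
```

A small α keeps the modified singular values close to the host values, which favours imperceptibility, while a larger α makes the watermark easier to recover after an attack. This is the trade-off that the optimized scaling factor is meant to balance.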

4 Results and discussion

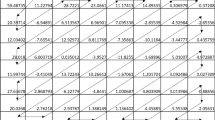

The proposed algorithm is implemented in MATLAB R2017a on a Lenovo system with an 11th generation Intel(R) Core(TM) i5-1135G7 processor @ 2.40 GHz - 2.42 GHz. The proposed watermarking method is tested on liver ultrasound images [size: 512 × 512] of three different patients taken from the cancer imaging archive [11], shown in Fig. 6, with the two watermark logo images shown in Fig. 7. The MNNIT logo [size 256 × 256] is the first watermark image, and the details of the patient [size 256 × 256] form the second. The watermarked image is shown in Fig. 8, and the extracted watermark logos are shown in Fig. 9. The performance of the proposed method is measured using the peak signal-to-noise ratio (PSNR), the structural similarity index measurement (SSIM), and the normalized correlation coefficient (NCC1 for the MNNIT logo and NCC2 for the Details of patient image). The PSNR and SSIM values measure the imperceptibility of the proposed method, and NCC measures its robustness. Table 2 shows the PSNR, SSIM, and NCC values for the different host images without attack. The PSNR value for patient 1 is 45.8168, patient 2 is 45.7966, and patient 3 is 45.8186, so the PSNR value is highest for patient 3. The SSIM value for patient 1 is 0.9969, patient 2 is 0.9951, and patient 3 is 0.9967, so the SSIM value is highest for patient 1. The performance parameters are shown in Eqs. (22), (23), (24), and (25).

4.1 Performance parameters

The proposed scheme uses the PSNR value to assess imperceptibility:

\( PSNR=10{\log}_{10}\left(\frac{Pixel^2}{MSE}\right) \)  (22)

where Pixel is the maximum pixel value and MSE is the mean square error, which can be defined as:

\( MSE=\frac{1}{M\times N}\sum_{i=1}^M\sum_{j=1}^N{\left[ HI\left(i,j\right)- WI\left(i,j\right)\right]}^2 \)  (23)

where M × N is the size of the host image, HI(i,j) is the host image, WI(i,j) is the watermarked image, and i, j index the pixels.

Structural similarity index measurement (SSIM) is also used to assess the imperceptibility of the proposed watermarking scheme and is defined as:

\( SSIM\left(x,y\right)=\frac{\left(2{\mu}_x{\mu}_y+{C}_1\right)\left(2{\sigma}_{xy}+{C}_2\right)}{\left({\mu}_x^2+{\mu}_y^2+{C}_1\right)\left({\sigma}_x^2+{\sigma}_y^2+{C}_2\right)} \)  (24)

where μx, μy, σx, σy, and σxy are the local means, standard deviations, and cross-covariance for images x and y, and C1 and C2 are the regularization constants for the luminance and contrast.

The normalized correlation coefficient (NCC) is used to assess the robustness of the proposed watermarking scheme. It is defined in Eq. (25), in which W(i,j) is the original watermark logo, and Wr(i,j) is the recovered watermark logo.
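A minimal sketch of the imperceptibility and robustness metrics is given below, using nested lists as images. The peak value of 255 assumes 8-bit images, and the NCC shown is one common normalization (cross-correlation divided by the product of the two signal norms); the exact form used in the paper may differ.

```python
import math


def mse(host, marked):
    """Mean square error between two equally sized images (nested lists)."""
    rows, cols = len(host), len(host[0])
    total = sum((host[i][j] - marked[i][j]) ** 2
                for i in range(rows) for j in range(cols))
    return total / (rows * cols)


def psnr(host, marked, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(host, marked)
    return math.inf if err == 0 else 10.0 * math.log10(peak ** 2 / err)


def ncc(original, recovered):
    """Assumed NCC form: sum(W * Wr) / (sqrt(sum(W^2)) * sqrt(sum(Wr^2)))."""
    pairs = [(a, b) for ro, rr in zip(original, recovered) for a, b in zip(ro, rr)]
    num = sum(a * b for a, b in pairs)
    den = math.sqrt(sum(a * a for a, _ in pairs)) * math.sqrt(sum(b * b for _, b in pairs))
    return num / den


host_img = [[100, 100], [100, 100]]
marked_img = [[101, 100], [100, 99]]
quality_db = psnr(host_img, marked_img)
similarity = ncc(host_img, host_img)
```

With this NCC form, a perfectly recovered watermark scores 1, which matches the way the robustness results are reported on a scale up to 1.0000.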

4.2 Imperceptibility analysis

The invisibility of the proposed scheme is determined by the PSNR. Table 3 shows the PSNR and SSIM values for the P1 host image under attacks. The PSNR value for Salt and Pepper noise (0.001) is 34.7385, Gaussian noise (0,0.001) is 30.8709, Speckle noise (0.02) is 26.2916, Gaussian low pass filter (3 × 3) is 45.1891, Average filter (3 × 3) is 38.9229, Median filter (3 × 3) is 43.0525, Sharpening is 31.7900, Wiener filter (3 × 3) is 42.5133, JPEG (90) is 43.9055, JPEG 2000 (10) is 45.1019, Motion blur is 35.8291, and Region of interest filtering is 44.7626. The highest PSNR value is for JPEG 2000 (10). The PSNR value for Rotation (2) is 12.5608, Histogram eq. is 11.7571, and Shearing is 12.2016. The lowest value of PSNR is for Histogram eq. The highest value of SSIM is for Gaussian low pass filter (0.9937), and the lowest value is for Histogram eq. (0.5618).

Table 4 shows the PSNR and SSIM values for the P2 host image under attacks. The PSNR value for Salt and Pepper noise (0.001) is 35.2279, Gaussian noise (0,0.001) is 30.4923, Speckle noise (0.02) is 21.9830, Gaussian low pass filter (3 × 3) is 42.3107, Average filter (3 × 3) is 33.9608, Median filter (3 × 3) is 41.7102, Sharpening is 35.9569, Wiener filter (3 × 3) is 43.9449, JPEG (90) is 44.3145, JPEG 2000 (10) is 45.2125, Motion blur is 32.8780, and Region of interest filtering is 45.1307. The highest PSNR value is for Region of interest filtering. The PSNR value for Rotation (2) is 15.8857, Histogram eq. is 20.9272, and Shearing is 11.5788. The lowest value of PSNR is for Shearing. The highest value of SSIM is for Region of interest filtering (0.9948), and the lowest value is for Shearing (0.4518).

Table 5 shows the PSNR and SSIM values for the P3 host image under attacks. The PSNR value for Salt and Pepper noise (0.001) is 34.5093, Gaussian noise (0,0.001) is 30.5798, Speckle noise (0.02) is 27.6779, Gaussian low pass filter (3 × 3) is 43.0262, Average filter (3 × 3) is 35.3777, Median filter (3 × 3) is 38.5289, Sharpening is 31.0042, Wiener filter (3 × 3) is 40.3403, JPEG (90) is 42.8298, JPEG 2000 (10) is 42.8298, Motion blur is 30.4943, and Region of interest filtering is 41.7626. The highest PSNR value is for JPEG 2000 (10). The PSNR value for Rotation (2) is 14.1548, Histogram eq. is 10.4261, and Shearing is 13.0938. The lowest value of PSNR is for Histogram eq. The highest value of SSIM is for JPEG 2000 (10) (0.9944), and the lowest value is for Shearing (0.4119). The comparison of PSNR values for P1, P2, and P3 is shown in Fig. 10. The comparison of SSIM values for P1, P2, and P3 is shown in Fig. 11, and the timing analysis is shown in Figs. 12 and 13.

4.3 Robustness analysis

The robustness of the proposed scheme is assessed with the normalized correlation coefficient: NCC1 is computed for the MNNIT logo, and NCC2 for the Details of patient image. Table 3 shows the NCC1 and NCC2 values for P1. Both values are highest for Region of interest filtering (1.0000). The NCC1 value is lowest for Sharpening (0.9730), and the NCC2 value is lowest for Shearing (0.9816). Table 4 shows the NCC1 and NCC2 values for P2. Both values are highest for Region of interest filtering (1.0000) and JPEG 2000 (1.0000). The NCC1 value is lowest for Rotation (0.9430), and the NCC2 value is also lowest for Rotation (0.9703). Table 5 shows the NCC1 and NCC2 values for P3. The NCC1 value is highest for Salt and Pepper noise (1.0000), JPEG (1.0000), and JPEG 2000 (1.0000). The NCC2 value is highest for JPEG (1.0000) and JPEG 2000 (1.0000). The NCC1 value is lowest for Histogram equalization (0.9493), and the NCC2 value is lowest for Shearing (0.9821). The extracted watermark logo images for P1, P2 and P3 are shown in Figs. 14, 15 and 16, respectively.

4.4 Time analysis

Profiling is a technique for determining how long the code takes to run and where MATLAB spends the most time. After determining which functions take the most time, they can be assessed for potential performance improvements. Figures 12 and 13 show the timing analysis of the algorithm. The Profiler reports the following fields:

- Function name: The function called by the profiled code.
- Calls: The number of times the profiled code called the function.
- Total Time: The overall time spent in the function, in seconds, including time spent in child functions. The Profiler itself takes some time, which is reflected in the final results. For files with insignificant run times, the total time can be zero.
- Self-Time: The time spent in a function, in seconds, excluding time spent in any child functions. Self-time includes some overhead incurred during profiling.

Dual image watermarking increases the security of the proposed technique. The technique is highly robust against various attacks, and its imperceptibility is also satisfactory. Firefly optimization is used to obtain the optimized scaling factor. The timing analysis indicates a low computational cost, so the scheme takes little time to run. The SURF features successfully match the regions of concern in the given medical image, and there is no significant distortion in these regions.

5 SURF Feature Extraction & Matching

SURF is an enhancement of SIFT: a scale-space-based operator for defining image key points. The features produced by the SURF operator resist noise, filtering, and rotation. The SURF technique uses the Hessian matrix to find extreme points. In feature extraction, it uses box filtering rather than Gaussian filtering, so the image filtering operation can be completed with simple additions and subtractions. In Fig. 17, the matched features between the original image and the rotated images are shown. The performance of SURF is comparable to that of SIFT, but its computational complexity is lower. The SIFT algorithm is strong in the majority of circumstances, but it is still slow. The SURF algorithm is similar to SIFT and also performs well (Table 6).

In Fig. 13, the ultrasound image of the liver is shown. In all the images, the black portion represents the presence of fluid, and the white portion shows the presence of fat. In every image, the black portion (fluid) is concentrated in some parts. These two kinds of region are therefore the main areas of concern in this medical image. With the help of SURF, the features of these two portions are extracted, even when one of the images is a rotated watermarked image. It is clear from the above images that the regions of concern in the DICOM medical image are not changed or distorted, because the SURF features match these portions and there is no error in matching.

6 Conclusion

This paper proposes a dual image watermarking technique that uses Schur decomposition together with LWT-DCT-SVD for embedding to improve robustness and imperceptibility, and firefly optimization to further improve the performance of the scheme. SURF features are used for authentication: they successfully match the region of interest of the given DICOM medical image, and there is no distortion or alteration of these portions. The use of dual image watermarking also increases the security of the scheme. The proposed watermarking method is robust against various attacks such as Salt and Pepper noise, Speckle noise, Gaussian noise, Gaussian low pass filter, Average filter, Median filter, Rotation, Histogram equalization, Motion blur, and Region of interest filtering. The PSNR values for the different input images are greater than 40 dB without attack. Under attack, the PSNR values for Salt and Pepper noise, Gaussian noise, Gaussian low pass filter, Average filter, Median filter, Sharpening, Wiener filter, JPEG, JPEG 2000, Motion blur and Region of interest filtering are greater than 30 dB; the exceptions are Rotation, Histogram equalization and Shearing. These PSNR values show that the imperceptibility of the proposed watermarking scheme is improved. The proposed technique can be further improved by using machine learning techniques for feature matching or authentication, and the security of the scheme for medical data can be improved by using encryption techniques.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Abdulrahman AK, Ozturk S (2019) A novel hybrid DCT and DWT based robust watermarking algorithm for color images. Multimed Tools Appl 78(12):17027–17049

Ahmaderaghi B, Kurugollu F, Del Rincon JM, Bouridane A (2018) Blind image watermark detection algorithm based on discrete shearlet transform using statistical decision theory. IEEE Trans Comput Imaging 4(1):46–59

Amirgholipour SK, Naghsh-Nilchi AR (2009) Robust digital image watermarking based on joint DWT-DCT. Int J Digit Content Technol Appl 3(2):42–54

Amrit P, Singh AK (2022) Survey on watermarking methods in the artificial intelligence domain and beyond. Comput Commun 188:52–65

Anand A, Singh AK (2022) Dual watermarking for security of COVID-19 patient record. IEEE Trans Dependable Secure Comput:1. https://doi.org/10.1109/TDSC.2022.3144657

Aslantas V (2008) A singular-value decomposition-based image watermarking using genetic algorithm. AEU-Int J Electron Commun 62(5):386–394

Awasthi D, Srivastava VK (2022) LWT-DCT-SVD and DWT-DCT-SVD based watermarking schemes with their performance enhancement using Jaya and Particle swarm optimization and comparison of results under various attacks. Multimed Tools Appl 81:25075–25099

Bao P, Ma X (2005) Image adaptive watermarking using wavelet domain singular value decomposition. IEEE Trans Circuits Syst Video Technol 15(1):96–102

Bhatnagar G, Raman B, Swaminathan K (2008, August) DWT-SVD based dual watermarking scheme. In: 2008 first international conference on the applications of digital information and web technologies (ICADIWT). IEEE. pp. 526-531

Chang TJ, Pan IH, Huang PS, Hu CH (2019) A robust DCT-2DLDA watermark for color images. Multimed Tools Appl 78(7):9169–9191

Clark K, Vendt B, Smith K, Freymann J, Kirby J, Koppel P, Moore S, Phillips S, Maffitt D, Pringle M, Tarbox L, Prior F (2013) The Cancer imaging archive (TCIA): maintaining and operating a public information repository. J Digit Imaging 26(6):1045–1057

Dowling J, Planitz BM, Maeder AJ, Du J, Pham B, Boyd C, ..., Crozier S (2007, December) A comparison of DCT and DWT block-based watermarking on medical image quality. In: International workshop on digital watermarking. Springer, Berlin, Heidelberg. pp. 454-466

Ernawan F, Kabir MN (2018) A robust image watermarking technique with an optimal DCT-psychovisual threshold. IEEE Access 6:20464–20480

Furqan A, Kumar M (2015, February) Study and analysis of robust DWT-SVD domain based digital image watermarking technique using MATLAB. In: 2015 IEEE international conference on Computational Intelligence & Communication Technology. IEEE. pp. 638-644

Hurrah N, Parah S, Loan N, Sheikh J, Elhoseny M, Muhammad K (2018) Dual watermarking framework for privacy protection and content authentication of multimedia. Futur Gener Comput Syst 94:654–673. https://doi.org/10.1016/j.future.2018.12.036

Jane O, Elbasi E, Ilk H (2014) Hybrid non-blind watermarking based on DWT and SVD. J Appl Res Technol 12:750–761

Kang XB, Zhao F, Lin GF, Chen YJ (2018) A novel hybrid of DCT and SVD in DWT domain for robust and invisible blind image watermarking with optimal embedding strength. Multimed Tools Appl 77(11):13197–13224

Khare P, Srivastava VK (2018, November) Image watermarking scheme using homomorphic transform in wavelet domain. In: 2018 5th IEEE Uttar Pradesh section international conference on electrical, electronics and computer engineering (UPCON). IEEE. pp. 1-6

Khare P, Srivastava VK (2018, February) Robust digital image watermarking scheme based on RDWT-DCT-SVD. In: 2018 5th international conference on signal processing and integrated networks (SPIN). IEEE. pp. 88-93

Khare P, Srivastava VK (2021) A novel dual image watermarking technique using homomorphic transform and DWT. J Intell Syst 30(1):297–311

Kumar A (2022) A cloud-based buyer-seller watermarking protocol (CB-BSWP) using semi-trusted third party for copy deterrence and privacy preserving. Multimed Tools Appl 81:21417–21448

Li J, Yu C, Gupta BB, Ren X (2018) Color image watermarking scheme based on quaternion Hadamard transform and Schur decomposition. Multimed Tools Appl 77(4):4545–4561

Liu XL, Lin CC, Yuan SM (2016) Blind dual watermarking for color images' authentication and copyright protection. IEEE Trans Circuits Syst Video Technol 28(5):1047–1055

Liu J, Huang J, Luo Y, Cao L, Yang S, Wei D, Zhou R (2019) An optimized image watermarking method based on HD and SVD in DWT domain. IEEE Access 7:80849–80860

Nandi S, Santhi V (2016) DWT–SVD-based watermarking scheme using optimization technique. In: Artificial intelligence and evolutionary computations in engineering systems. Springer, New Delhi, pp 69–77

Rao VSV, Shekhawat RS, Srivastava VK (2012, March) A reliable digital image watermarking scheme based on SVD and particle swarm optimization. In: 2012 students conference on engineering and systems. IEEE. pp. 1-6

Singh PK (2022) Robust and imperceptible image watermarking technique based on SVD, DCT, BEMD and PSO in wavelet domain. Multimed Tools Appl 81(16):22001–22026

Singh D, Singh SK (2017) DWT-SVD and DCT based robust and blind watermarking scheme for copyright protection. Multimed Tools Appl 76(11):13001–13024

Sweldens W (1996) The lifting scheme: a custom-design construction of biorthogonal wavelets. Appl Comput Harmon Anal 3(2):186–200

Sweldens W (1998) The lifting scheme: a construction of second-generation wavelets. SIAM J Math Anal 29(2):511–546

Thakkar FN, Srivastava VK (2017) A blind medical image watermarking: DWT-SVD based robust and secure approach for telemedicine applications. Multimed Tools Appl 76(3):3669–3697

Thakkar F, Srivastava VK (2017) A particle swarm optimization and block-SVD-based watermarking for digital images. Turk J Electr Eng Comput Sci 25(4):3273–3288

Verma VS, Jha RK, Ojha A (2015) Significant region based robust watermarking scheme in lifting wavelet transform domain. Expert Syst Appl 42(21):8184–8197

Wang Z, Sun X, Zhang D (2007, September) A novel watermarking scheme based on PSO algorithm. In: International conference on life system modeling and simulation. Springer, Berlin, Heidelberg. pp. 307-314

Yang XS (2009, October) Firefly algorithms for multimodal optimization. In: International symposium on stochastic algorithms. Springer, Berlin, Heidelberg. pp. 169-178

Zear A, Singh AK, Kumar P (2018) Multiple watermarking for healthcare applications. J Intell Syst 27(1):5–18

Ethics declarations

Competing interests

No funds, grants, or other support were received. The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Awasthi, D., Srivastava, V.K. Robust, imperceptible and optimized watermarking of DICOM image using Schur decomposition, LWT-DCT-SVD and its authentication using SURF. Multimed Tools Appl 82, 16555–16589 (2023). https://doi.org/10.1007/s11042-022-14002-8

DOI: https://doi.org/10.1007/s11042-022-14002-8