Abstract

To improve the efficiency of traditional face recognition techniques, this paper proposes a novel face recognition algorithm called Image Gradient Feature Compensation (IGFC). Based on the gradients along four directions in an image, a fusion algorithm and a compensation method are implemented to obtain features of the original image. In this study, gradient magnitude maps of a face image are calculated along four directions. Fusion gradients and differential fusion gradients are produced by fusing the four gradient magnitude maps of a face image in multiple ways, and they are used as compensation variables to compensate the appropriate coefficients on the original image and build IGFC feature maps of the original face image. Subsequently, IGFC feature maps are divided into several blocks to calculate the concatenated histogram over all blocks, which is in turn utilized as the feature descriptor for face recognition. Principal component analysis (PCA) is used to cut down the number of dimensions in high-dimensional features, which are recognized by the Support Vector Machine (SVM) classifier. Finally, the proposed IGFC method is superior to traditional methods as suggested by verification studies on YALE, ORL, CMU_PIE, and FERET face databases. When the LibSVM parameter was set to ‘-s 0 -t 2 -c 16 -g 0.0009765625’, the algorithm achieved 100% recognition on Yale and ORL data sets, 92.16% on CMU_PIE data sets, and 74.3% on FERET data sets. The average time for simultaneous completion of the data sets examined was 1.93 s, and the algorithm demonstrated a 70.71% higher running efficiency than the CLBP algorithm. Therefore, the proposed algorithm is highly efficient in feature recognition with excellent computational efficiency.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

As computer graphics and image processing capability continue to develop, face recognition has become a popular field of research recently. Face recognition technologies can be generally classified into two categories [2, 39], one of which is based on global features, and the other is based on local features. Over the years, many excellent algorithms have been proposed for face recognition, such as principal component analysis (PCA) [38], linear judgment analysis (LDA) [6], two-dimensional PCA (2DPCA) [41], two-dimensional LDA (2DLDA) [16, 43], ICA [3], enhanced ICA [28], multiple feature subspace analysis (MFSA) [13], as well as several other classical global-feature-based algorithms. However, problems of small sample sizes or high dimensional data are commonly seen in face classification and recognition tasks. Therefore, most global-feature-based recognition methods are not available for direct use as the within-class scatter matrix is always singular.

In recent years, as deep learning technologies emerge and advance, many scholars began to study their applications in face recognition [10, 12, 22, 32,33,34]. Generally speaking, deep learning technologies extract data-driven features and obtain deep and dataset-specific feature descriptions by learning a large number of samples. Convolutional Neural Network (CNN) and Tensor Face are two of the most recognized deep learning face recognition methods. Deep learning technologies are advantageous because they express data sets more efficiently and accurately, but their results are highly dependent on the sample set and the computing power.

Researchers have done a lot of explorations on local-feature-based methods, most of which divide the global image into several parts and analyze the features of each part independently. Therefore, local feature extraction methods can demonstrate better robustness, and some of these methods include Local Binary Mode (LBP) [1], Local Gradient Pattern (LGP) [24], Center-Symmetric Local Binary Patterns (CS-LBP) [20], SIFT [7], HOG [14], and wavelet Gabor [29]. Specifically, LBP is the most classical local feature extraction method, which has been extended extensively from many aspects [1, 9, 20, 21, 24, 31]. Moreover, two very popular methods extended from LBP, namely LGP [24] and CS-LBP [20], have been widely used for face feature extraction. Wang et al. [40] proposed a face recognition algorithm named complete local binary mode (CLBP) and a classification recognition method based on LBP and the local difference values and gray values of the center pixel. Yang et al. [23] proposed a single sample face recognition algorithm based on bidirectional gradient centrosymmetric local binary mode (BGCSBP). This algorithm works by obtaining the horizontal and vertical gradient information of images, encoding it with a CS-LBP operator, and integrating BGCSBP feature descriptions of the formed face images for classification and recognition. In Zhang et al.’s work [45], LBP coding was performed on the pixels in the face image, which was in turn divided into several sub-regions. In each sub-region, LBP histogram features were calculated to describe the overall face image. LBP operator [1, 9, 21, 31] has been used to calculate the relationships of the differences between the center pixel and its surrounding pixels in an n*n module to obtain a series of binary codes. However, as the number of neighboring pixels considered increases, remarkably high eigenvectors would be generated from the LBP operator. This problem could be solved by the centrosymmetric local binary model [20]. Besides, Jun and Kim proposed an LGP descriptor [24], which considers the intensity gradient distribution and realizes local changes near key points.

Additionally, Yang et al. [42] proposed an algorithm for face recognition based on the word bag model, which was established by integrating various features. By extracting local features in sample face images, a visual dictionary was trained offline and mapped to the corresponding high-dimensional and middle-level semantic space to extract descriptions of new face images. Ning et al. [30] proposed a BULPDA algorithm. They created a method to express similarity coefficients and proposed the vector space solution of singular value decomposition by integrating the concept of irrelevant space, which brought new insights on feature extraction. Fu et al. [8] proposed a face recognition algorithm based on the maximum value average, in which they used the template variance size to select different thresholds and extract more detailed information. Déniz et al. [17] proposed an approach to make robust use of HOG features for face recognition. Li et al. [26] used Gabor to transform images and extract features and realized some improvements in the face recognition rate. A. Bastanfard et al. [4, 5, 15] proposed some approaches to facial rejuvenation in adults image. It is helpful to solve the recognition rate of the condition of age change in face recognition process. In addition, there are other meaningful algorithms related to face recognition, such as literature [36], which have been deeply studied by Rituraj Sonil O et al.

Fast face recognition systems are face recognition systems that can recognize the identity of the person in the picture within a short time [10, 19, 25, 27, 33, 35, 37], which could significantly limit the time a recognized person stays in front of the camera and improve recognition efficiency. Specifically, Qu and Wei et al. [33] proposed a real-time face recognition method based on the convolution neural network with promising speed and accuracy. Cai and Lei et al. [10] proposed a fast and robust 3D face recognition approach with three component technologies: a fast 3D scan preprocessing, multiple data augmentation, and a deep learning technique based on facial component patches. Tang and Wu et al. [37] proposed a fast face recognition method based on the fractal theory that compresses the facial images to fractal codes and finishes face recognition with these codes. He Q and He B et al. [19] used the K-Mean clustering technology to improve the speed up robust features (SURFs), and their results were satisfactory.

Despite all of these advancements, a proper method for face recognition is still a challenge. Even though some reliable systems and advanced methods have been introduced with controlled conditions, their recognition rates, efficiencies, or speeds remain generally unfavorable. Many algorithms are very complex, which limits their recognition speed and efficiency significantly. To solve these problems, a novel concept called image gradient compensation (IGC) is proposed in this paper. Briefly, IGC uses a certain algorithm to strengthen the available salient features of the image and weaken the non-salient ones, thereby retrieving more valuable feature information during the feature extraction stage and improving the recognition rate. In this study, a fusion gradient and differential fusion gradient operator is used to generate fusion gradients and differential fusion gradients. An image gradient feature compensation (IGFC) algorithm is obtained by compensating the original image with appropriate compensation coefficients for fusion gradients and differential fusion gradients.

This study aims to improve the quality of extracted face image features, cut down the time and power spent on face recognition, and improve the recognition efficiency with the IGC strategy. Particularly, the IGC strategy is first used to enhance the available features of the image. Besides, the efficiencies of image feature extraction and face recognition are improved as a result of simplified algorithms. Practical experiments proved that the algorithm is of good practical prospects.

The author’s main contributions are as follows: 1) A novel image gradient algorithm is proposed to extract image features with greater image recognition rates; 2) Image feature extraction is simplified remarkably with the image gradient fusion technology, and the operation efficiency of image recognition is improved markedly; 3) The proposed device needs comparably lower power to operate thanks to the improvements in image recognition efficiency.

The proposed algorithm can fit the needs of face identification systems and face recognition intelligent hardware systems in hotels, workplaces, community entries, etc. Unlike AI face recognition, this algorithm is still a traditional face recognition method. The image feature extraction process relies on the local-feature-based algorithm design, which evades the requirements of large samples and high computational power for face recognition training. Experimental results show that the algorithm has a good recognition rate with significantly higher efficiency.

The remaining chapters of this paper are organized as follows. Section 2 introduces some concepts, important definitions, and the IGFC algorithm models. Section 3 gives the flow and implementation chart of the IGFC algorithm. Section 4 describes the experimental results on YALE, ORL, CMU_PIE face databases, and provides analyses to prove the superiority of our proposed algorithm. Finally, a conclusion will be drawn in Section 5.

2 Backgroup

2.1 Image gradient

The first derivative of a one-dimensional function is defined as:

A gray image is considered as a two-dimensional function f(x,y), and the derivative of either x or y can be calculated as follows:

Since an image is a discrete two-dimensional function by pixel, the smallest ϵ is 1 pixel. Therefore, eqs. 2 and 3 can be simplified to eqs. 4 and 5 (ϵ = 1):

Equations 4 and 5 stand for the horizontal gradient (x gradient) and vertical gradient (y gradient) of a point g(x, y) in the image, respectively. When ϵ = 1, the gradient of the image is equivalent to the difference between two adjacent pixels [46].

2.2 Related concepts

A pixel point in the image has two gradients in opposite horizontal directions, i.e. the left gradient and the right gradient. Let Grx be the right gradient and Glx be the left gradient, and they are calculated as follows:

Similarly, there are two gradients in the vertical direction, i.e. the upper gradient and the lower gradient. Let Guy be the upper gradient and Gdy be the lower gradient, and we have:

-

Definition 1:

Fusion gradient

The fusion gradient of image A is defined as the sum of left, right, upper, and lower gradients of image A, which is denoted as:

-

Definition 2:

Differential fusion gradient

The differential fusion gradient of image A is defined as the result of adding the subtracted values of the left and right gradients to the subtracted values of the upper and lower gradients, which is denoted as:

-

Definition 3:

Feature compensation

Feature compensation is defined as the operation of adding or subtracting the original image with the image’s feature operator. When a feature operator is added to the pixel value of the original image, positive compensation occurs. When a feature operator is subtracted from the pixel value of the original image, negative compensation occurs.

-

Definition 4:

Feature compensation coefficient

During image feature compensation, the feature operator can be added or subtracted several times on the original image. In this case, the number of times of such addition or subtraction is defined as the feature compensation coefficient. It is a real number for flexible adjustments of the feature compensation amplitude.

2.3 IGFC algorithm model

The feature information of the image was extracted with the following steps:

-

(1)

Convert the image into a grayscale image in img. Format;

-

(2)

Obtain the fusion gradient and differential fusion gradient of the image;

A fusion gradient image is a feature image with enlarged edge contours, and it can well display the rough facial contours. The fusion gradient image display of Lena head is shown in Fig. 1a. On the other hand, differential fusion gradient represents a more subtle and detailed description of edge contours (Fig. 1b).

-

(3)

Obtain image feature descriptions.

The calculation of pixel value A at row i and column j of image IMG is:

Where a0(i,j) is the value of the pixel at the ith row and jth column of the original image, fm is the compensation coefficient of fusion gradient, fn is the compensation coefficient of differential fusion gradient, gfusion(i,j) is the fusion matrix of pixel point a0(i,j), gsubfus(i,j) is the differential fusion matrix of pixel point a0(i,j).

Figure 2 illustrates the workflow to calculate the IGFC value centered on a0 (whose pixel value is 148) in image IMG, and the pixel values of its surrounding pixels concerned in the calculation are shown in Fig. 2a. The gradient amplitudes of the right, left, up, and low directions are calculated with Eqs. 6–9 (Fig. 2b). Therefore, gfusion = grx + glx + guy + gdy = 23 (Eq. 10), and gsubfus = (grx-glx) + (guy-gdy) = −7 (Eq. 11). Let m = 1, n = 7, and we have the eigenvalue of pixel a0 (Eq. 12):

a = a0 + gfusion- gsubfus*7 = 220 (Fig. 2c).

-

(4)

In the Lena head with a specification of 200*200, we took area A(101:107,101:107) with clear outlines at the corner of the eye to find out the pixel values of the original image and the transformed feature image (Fig. 3).

The image appearance of Lena head after IGFC feature conversion is shown in Fig. 4:

2.4 Histogram statistics

Once the IGFC feature image of the original image is obtained, it is subjected to a histogram to extract its detailed feature vectors. The histogram statistics is implemented for statistical analysis of the grayscale images with pixel values between 0 ~ 255 [14], and it returns a 256-dimensional array as the result, in which each value pair should be the statistic numbers of the corresponding pixel values in the image. The histogram statistics of Lena head is shown in Fig. 5.

In the field of face recognition, if a whole picture can be represented by histogram statistics, then the statistical results can represent the overall features of the whole image. However, different pictures may exhibit similar statistical results. To minimize the impact brought by such a situation, the whole image is divided into several subgraphs with a certain order, and the histograms of the subgraphs are calculated separately before being connected in a certain sequence to form the general feature vector of the overall image. Following that, a classifier is used to complete model training and vector testing and verify the image recognition and matching outcomes.

Even if two different images are judged statistically similar by their integral histograms, it is highly possible to overthrow such a conclusion when comparing the histograms of their segmented sub-images. The Lena head is partitioned into a 3*3 array, and the histograms of each partitioned sub-graph are shown in Fig. 6 [17]:

As shown in Figs. 5 and 6, histograms with or without separate calculations are significantly different. Specifically, Fig. 5 is the global statistical result of Lena head, and the C2 block in Img1 of Fig. 6 corresponds to the local statistical result block G2 in Img2. Each histogram in Img2 corresponds to the subgraph that is located in the respective locations of the original image (e.g. E1 - > A1, E2 - > A2, etc).

The corresponding relationship of each histogram in Fig. 6 is as follows:

-

a.

E1 is the statistical histogram of A1, E2 is the statistical histogram of A2, E3 is the statistical histogram of A3;

-

b.

F1 is the statistical histogram of B1, F2 is the statistical histogram of B2, F3 is the statistical histogram of B3;

-

c.

G1 is the statistical histogram of C1, G2 is the statistical histogram of C2, G3 is the statistical histogram of C3.

3 Proposed method: IGFC

3.1 Algorithm process

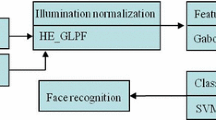

The IGFC algorithm is executed with the following steps (Fig. 7). Firstly, image features are extracted. Secondly, the image features are decomposed into multiple subgraphs following the 3 × 3 template [17]. Thirdly, each subgraph is subjected to histogram calculations to obtain the feature vector. Fourthly, PCA is used to reduce dimensionality. Finally, SVM classification is used to train and recognize image classifications.

3.2 Implementation of the algorithm

When implementing the algorithm, an image is loaded and converted into a grayscale one to obtain an m*n matrix A.

-

Step 1: Calculate the differences between pixel point a0(i,j) in matrix A and its up, down, left and right adjacent pixel points, take the absolute values of the differences (where 1 < i < m-1, 1 < j < n-1), and obtain the upper gradient guy, lower gradient gdy, right gradient grx, and left gradient glx.

-

Step 2: Calculate gfusion (Eq. 10);

-

Step 3: Calculate gsubfus (Eq. 11);

-

Step 4: Calculate the pixel value a(i,j) of the pixel point a0(i,j) (Eq. 12).

-

Step 5: Repeat Step1 ~ Step4 until all pixels in matrix A are converted into a feature matrix B of (m-2)*(n-2).

-

Step 6: Use adjacent value filling (fill null positions in the matrix with the mean of their immediate adjacent values) to transform matrix B into a new matrix B′ of m * n (identical to matrix A).

-

Step 7: Use a specific template to block matrix B [17], and calculate the histograms for each image block. Connect the histograms for each block in a specific order to obtain the feature vector of the image. In this paper, the specific template is 3 × 3.

higher dimension numbers of the image feature vector mean more time and storage resources needed for model training and recognition. Therefore, PCA, as one of the most commonly used dimensionality reduction methods, is used to reduce the number of dimensions of feature vectors [44]. After PCA processing, high-dimensional data are removed, and only the most important feature information remains.

3.3 Vector machine SVM

The support vector machine (SVM) method is a tool to discriminate and classify test samples by extracting limited sample information based on statistical theory. It can minimize the structural risks, evade the problems of overfitting and local minimization commonly seen in traditional methods, and provide strong and generalized results. The kernel function is adopted in SVM to map the high-dimensional space with low calculation complexity and bypass the Curse of Dimensionality. Therefore, SVM has been widely used in face recognition [16], and this paper adopts LIBSVM, a sub-model of SVM, developed by Professor Chih-Jen Lin and his colleagues [11]. Matlab 2016a was used for the classification experiments of face recognition.

4 Experiment and result analysis

4.1 Experimental environment

In this section, we will illustrate the effectiveness of our proposed operator. Specifically, we evaluated the performance of our proposed method on three public face image databases, which are Olivetti Research Laboratory (ORL), YALE face database, and CMU_PIE face database.

The tests were performed on a 2.4GHz CPC, 8GB memory (including 1G video memory), and a 64-bit Windows 7 operating system.

4.2 Parameter settings

4.2.1 Compensation coefficients fm、fn

As indicated by Eq. 12, compensation coefficients fm, fn will affect the accuracy of algorithm recognition. Therefore, these coefficients were analyzed to find out their impacts on test results and obtain the most reasonable values.

Since fm is the compensation coefficient of fusion gradient calculated by accumulating gradients in multiple directions, it is expected to be large. However, if fm is too large, too many pixel values will be equal to or greater than 255, which is not favored for image feature extraction. Therefore, fm is set to 1 in this algorithm, and we focused on the influences of different fn values on the algorithm recognition rates (Fig. 8).

For the ORL database set, most experimental results on the impact of different fn values reached 100% accuracy thanks to the 3 × 3 image separation method. To further understand the influence of fn on the recognition rates, the 1 × 2 template was used instead of 3 × 3 in another set of experiments, and the impacts of different fn values on the recognition rates became more significant (Fig. 8).

The experimental results show that greater fn does not mean better recognition. For ORL and CMU_PIE face libraries, when fm = 1, the recognition rates obtained when fn = 7, 8, and 9 are relatively stable and high compared to the other situations. Therefore, in the remaining parts of this paper, we chose fm = 1 and fn = 7.

4.2.2 Other parameters

The image block template adopted in this paper is 3 × 3, and the classifier used is the LibSVM classifier[[11] developed by Professor Chih-Jen Lin and his colleagues, and the corresponding parameters are set as’ -s 0 -t 2 -c 16 -g 0.0009765625′.

4.3 Experimental results

Our proposed algorithm was compared with five excellent algorithms (classic LBP [1], CLBP [40], WLCGP [18], LGP [24], and FNDC [37]) on four face databases (YALE, ORL, CMU_PIE, and FERET) for their recognition rates and operation efficiencies.

4.3.1 Recognition rates

For all the algorithms referenced, experimental parameters in each original document were used; if the experimental parameters were not provided in the original document, the parameter settings in this paper were used. The recognition rates generated by each algorithm were compared, and 30%–80% of all the images of a certain individual on the databases were used for training.

4.3.2 YALE database results

The Yale Face Dataset was created by Yale University. It consists of 11 images for each of the 15 different people, who were shot with different poses, expressions, and light conditions. Each image is 80 × 80 pixels in size, and a sample set of one person is shown in Fig. 9.

In this experiment, the first 3–8 images in each sample set were used for training, and the remaining images were tested. The test results are described in Table 1.

In Table 1, the proposed algorithm demonstrated better performance than classic LBP, LGP, and WLCGP algorithms when the number of training images was 3–8. On the other hand, compared to the proposed algorithm, CLBP exhibited higher recognition rates when the number of training images was 4–7, and FNDC revealed a greater recognition rate when the number of training images was 4.

4.3.3 ORL database results

ORL face database was created by Olivetti Research Laboratory, The University of Cambridge, UK. This dataset involves 400 92*112 pictures of 40 people (10 pictures each), and the facial expression changes, small gesture variations, and scale changes among the pictures of the same person are lower than 20%. The samples of one particular person used in this experiment are given in Fig. 10.

In this experiment, the first 3–8 images in each sample set were used for training, and the remaining images were tested. The test results are described in Table 2.

For the ORL face database, the recognition rates of CLBP, WLCGP, and the proposed algorithm are all satisfactory, but the proposed algorithm is the most superior in general. CLBP and FNDC algorithms both realize a 100% recognition rate with 7 and 8 training graphs and a 99.38% recognition rate with 6 training graphs, and all of these numbers are identical to those of the proposed algorithm. However, as the number of training graphs drops, the proposed algorithm demonstrates greater recognition rates than the other two algorithms. For classical LBP and LGP algorithms, their recognition rates against the ORL database are constantly lower than those of the proposed algorithm.

4.3.4 CMU_PIE database results

The CMU_PIE face database was created by researchers at Carnegie Mellon University. The original database contains pictures of 68 people taken under 13 poses, 43 light conditions, and 4 expressions, and the total number of photos is 40,000. In this paper, 1632 64 × 64 grayscale face images in the CMU_PIE database were tested, and 24 images of each person were included in one sample set. An example sample set is given in Fig. 11.

In this experiment, images No.10 to No.18 for each person in the database were taken for training, and the remaining images were tested. The test results are displayed in Table 3.

For the CMU_PIE face database, when the number of training is 10, the proposed algorithm demonstrates higher recognition rates compared to other reference algorithms except for the classical LBP. Besides, the recognition rate of the proposed algorithm rises steadily from 42.86% to 92.16% as the number of training increases from 10 to 18.

4.3.5 FERET database results

Face Recognition Technology (FERET) engineering was launched by the US Department of Defense’s Counterdrug Technology Transfer Program (CTTP). The resulting FERET database includes a general face library and universal test standards, in which variations in facial expressions, illuminations, gestures, and ages are all represented by 1400 images of 200 people (7 images each person). An example sample set is given in Fig. 12.

In this experiment, images No.3 to No.5 for each person in the database were taken for training, and the remaining images were tested. The test results are displayed in Table 4.

For the FERET face database, when the number of training is 3, the proposed algorithm demonstrates higher recognition rates compared to other reference algorithms except for the classical FNDC. Besides, the recognition rate of the proposed algorithm rises steadily from 41.34% to 74.3% as the number of training increases from 3 to 5. Generally speaking, the proposed algorithm demonstrates higher recognition rates than other algorithms when the number of training is 5.

To sum up, the algorithm described in this paper was compared with five algorithms (classic LBP, CLBP, WLCGP, LGP, and FNDC) on four databases (YALE, ORL, CMU_PIE, and FERET), and the comparison results suggest the proposed algorithm to offer greater recognition rates than the other algorithms in general.

4.3.6 Time complexity analysis

In order to compare the operation efficiencies of each algorithm in terms of image feature extraction and image recognition, the times spent by the proposed algorithm and five other algorithms (classical LBP, CLBP, WLCGP, LGP, and FNDC) to complete the image feature extraction and image recognition tasks were recorded (Tables 5 and 6).

For image recognition, we performed model training and image recognition as two independent experiment sessions. The times each algorithm spent from the beginning of image processing to the end of recognition were recorded (measured number of images in each image set: 3 for Yale, 2 for ORL, and 6 for CMU_PIE).

Judging from the tables, FNDC and CLBP algorithms are comparably more efficient for YALE, spending 1.68 s and 1.54 s to extract feature information from 165 80 × 80 images, respectively. However, the proposed algorithm exhibited much more superior performance (0.55 s) in that aspect.

For ORL, CLBP, LGP, WLCGP, and FNDC algorithms all demonstrate promising running efficiency, spending 3.71 s, 3.94 s, 3.96 s, and 2.26 s to extract feature information from 400 92*112 images. Nevertheless, these numbers are still longer than that of the proposed algorithm (1.97 s).

For the CMU_PIE database, CLBP, WLCGP, and FNDC algorithms exhibit good performance, using 14.54 s, 15.32 s, and 5.25 s to extract feature information from 1632 64 × 64 images, respectively. However, the total time spent by the proposed algorithm to finish the identical task is merely 3.26 s. On average, the time spent by the proposed algorithm to extract image features from the three databases remains obviously shorter than the other algorithms.

5 Conclusion

In this article, we propose a new face recognition algorithm (IGFC algorithm) capable to extract features from the original image by fusion and compensation. The algorithm calculates the gradient values in four directions for each pixel in the original image and uses the fusion gradient and differential fusion gradient to compensate for the original pixel value. Following the pixel value compensation, a feature graph that can accurately describe the original features can be obtained. Subsequently, the feature graph is divided into several blocks and each block is subjected to histogram calculations. The histograms obtained are connected in a given sequence to generate a feature vector that describes the image features, and the dimensionality of the feature vector is reduced by principal component analysis. Finally, the SVM classifier is used to classify and identify the original image. Experiments show that the IGFC algorithm can produce good recognition rates in YALE, ORL, CMU_PIE, and FERET databases with excellent operational efficiency.

The proposed algorithm mainly focuses on the image recognition effect in a specific environment, but the face recognition effect in the case of complex background is not very ideal. The face recognition effect in complex background is not very ideal, which will be the focus of the later work. The author will strengthen the research on this aspect of technology, and try to put forward an algorithm that can better solve the face recognition effect in complex background.

References

Ahonen T, Hadid A, Pietikainen M (2006) Face description with local binary patterns: application to face recognition. IEEE Trans Pattern Anal Mach Intell 28(12):2037–2041

Bao LN, Le D-N, Van Chung L, Nguyen GN (2016) Performance evaluation of video-based face recognition approaches for online video contextual advertisement user-oriented system. In: Satapathy SC, Mandal JK, Udgata SK, Bhateja V (eds) Information systems design and intelligent applications. Springer, New Delhi, pp 287–295

Bartlett MS, Movellan JR, Sejnowski TJ (2002) Face recognition by independent component analysis. IEEE Trans Neural Netw Publ IEEE Neural Netw Counc 13(6):1450–1464

Bastanfard A, Bastanfard O, Takahashi H, Nakajima M (2004) Toward anthropometrics simulation of face rejuvenation and skin cosmetic. Comp Anim Virtual Worlds 15:347–352

Bastanfard A, Takahashi H, Nakajima M (2004) Toward E-appearance of human face and hair by age, expression and rejuvenation. International Conference on Cyberworlds. IEEE

Belhumeur PN, Hespanha JP, Kriegman DJ (1997) Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

Bicego M, Lagorio A, Grosso E, et al. (2006) On the use of SIFT features for face authentication[C]. Proceedings of the 19th International Conference on Computer Vision and Pattern Recognition Workshop. Los Alamitos: IEEE Computer Society Press, Article No. 35

Bo F, Xu C, Xilin Z, Zheng X (2019) Face recognition LBP algorithm based on the most value average. Comput Appl Softw 36(9):209–213

Bui L, Tran D, Huang X, Chetty G (2011) Novel metrics for face recognition using local binary patterns. Knowl-Based Intell Inf Eng Syst 6881:436–445

Cai Y, Lei Y, Yang M, You Z, Shan S (2019) A fast and robust 3d face recognition approach based on deeply learned face representation. Neurocomputing 363:375–397

Chang CC, Lin CJ (2011) LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol 2(3):1–27

Chen Z, Dong R (2020) Research on fast recognition method of complex sorting images based on deep learning. Int J Pattern Recognit Artif Intell 8:80948–80963. https://doi.org/10.1142/S0218001421520054

Chu Y, Zhao L, Ahmad T (2019) Multiple feature subspaces analysis for single sample per person face recognition. Vis Comput 35(2):239–256

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection, proceedings of the IEEE computer society conference on computer vision and pattern recognition. IEEE Computer Society Press, LosAlamitos, pp 1886–1893

Dehshibi MM, Bastanfard A (2010) A new algorithm for age recognition from facial images. Signal Process 90(8):2431–2444

Deniz O, Castrillon M, Hernandez M (2003) Face recognition using independent component analysis and support vector machines. Pattern Recogn Lett 24:2153–2157

Déniz O, Bueno G, Salido J, De la Torre F (2011) Face recognition using histograms of oriented gradients. Pattern Recogn Lett 32(12):1598–1603

Fang S, Yang J, Liu N, Sun W, Zhao T (2018) Face recognition using weber local circle gradient pattern method. Multimed Tools Appl 77(2):2807–2822

He Q, He B, Zhang Y, Fang H (2019) Multimedia based fast face recognition algorithm of speed up robust features. Multimed Tools Appl 78(17):24035–24045

Heikkila M, Pietkainen M, Schmid C (2006) Description of interest regions with center-symmetric local binary patterns. Comput Vis Graph Image Process 4338:58–69

Huang D, Shan C, Ardabilian M (2011) Local binary patterns and its application to facial image analysis: a survey. IEEE Trans Syst Man Cybern Part C 41(6):765–781

Huh JH, Seo YS (2019) Understanding edge computing: engineering evolution with artificial intelligence. IEEE Access 7(2019):164229–164245

Huixian Y, Dilong H, Fan L, Yang L, Zhao L (2017) Face recognition based on bidirectional gradient center-symmetric local binary patterns. J Comput-Aided Des Comput Graph 29(1):130–136

Jun B, Kim D (2012) Robust face detection using local gradient patterns and evidence accumulation. Pattern Recogn 45(9):3304–3316

Kim J, Ra M, Kim WY (2020) A DCNN-based fast NIR face recognition system robust to reflected light from eyeglasses. IEEE Access 8:80948–80963. https://doi.org/10.1109/ACCESS.2020.2991255

Kuan L, Jianping Y, Yong L, Fayao L (2012) Local statistical analysis of gabor coefficients and adaptive feature extraction for face description and recognition. J Comput Res Dev 49(4):777–784

Lee P, Yoo J, Huh (2020) Face recognition at a distance for a stand-alone access control system. Sensors 20(3):785

Liu C (2004) Enhanced independent component analysis and its application to content based face image retrieval. IEEE Trans Sys Man Cybern Part B (Cybern) 34(2):1117–1127

Liu CJ, Wechsler H (2002) Gabor feature based classification using the enhanced fisher linear discriminant model for face recognition. IEEE Trans Image Process 11(4):467–476

Ning X, Li W, Li H, Liu W (2016) Uncorrelated locality preserving discriminant analysis based on bionics. J Comput Res Dev 53(11):2623–2629

Ojala T, Pietikainen M, Maeapaa T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 24(7):971–987

Park SW, Ko JS, Huh JH, Kim JC (2021) Review on generative adversarial networks: focusing on computer vision and its applications. Electronics 10(10):1216

Qu X, Wei T, Peng C, Du P (2018) A fast face recognition system based on deep learning. 2018 11th international symposium on computational intelligence and design (ISCID). IEEE. Vol. 1. IEEE, 2018

Ramaiah NP, Ijjina EP, Mohan CK (2015) Illumination invariant face recognition using convolutional neural networks, in: proceedings of the IEEE Interna-tional conference on signal processing. Inf Commun Energy Syst SPICES

Singh G, Chhabra I (2018) Effective and fast face recognition system using complementary OC-LBP and HOG feature descriptors with SVM classifier. J Inf Technol Res 11(1):91–110

Soni R, Kumar B, Chand S (2019) Optimal feature and classifier selection for text region classification in natural scene images using Weka tool. Multimed Tools Appl 78:31757–31791. https://doi.org/10.1007/s11042-019-07998-z

Tang Z, Wu X, Fu B, Chen W, Feng H (2018) Fast face recognition based on fractal theory. Appl Math Comput 321(2018):721–730

Turk M, Pentland A (1991) Eigenfaces for recognition. J Cogn Neurosci 3(1):71–86

Wan Y, Li HH, Wu KF, Tong H (2015) Fusion with layered features of LBP and HOG for face recognition. J Comput-Aided Des Comput Graph 27(4):640–650

Wang X, Zhang Y, Mu X, Zhang FS (2012) The face recognition algorithm based on improved LBP. Opto-Electron Eng 39(7):109–114

Yang J, Zhang D, Frangi AF, Yang J (2004) Two-dimensional pca: a new approach to appearance-based face representation and recognition. IEEE Trans Pattern Anal Mach Intell 26(1):131–137

Yang S, Chunxia Z, Fan L, Feng C (2017) A face recognition algorithm using fusion of multiple features. J Comput-Aided Des Comput Graph 29(9):1668–1672

Ye J, Janardan R, Li Q (2005) Two-dimensional linear discriminant analysis. Adv Neural Inf Proces Syst 17:1569–1576

Zhang B, Wang SF (2015) PCA face recognition algorithm based on robust MCD estimator. Comput Eng Des 36(3):778–782

Zhang J, Zhao H, Chen S (2014) Face recognition based on weighted local binary pattern with adaptive threshold. J Electron Inf Technol 36(6):1327–1333

Zhaoxia YANG, Feng LU, Yuesheng LI (2002) Computation of image gradient and divergence and their application to edge detection of Noisy images. Acta Sci Nat Univ Sunyatseni 41(6):6–9

Funding

This work was supported by Characteristic Innovation Research Fund for Universities of Guangdong Province(No.2019GKTSCX041) and Science and Technology Program of Shaoguan(No.2018SN041).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors declare they have no competing no financial interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Y., Yan, L. A fast face recognition based on image gradient compensation for feature description. Multimed Tools Appl 81, 26015–26034 (2022). https://doi.org/10.1007/s11042-022-12804-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-022-12804-4