Abstract

Transfer learning has attracted considerable interest over the last decade. A fundamental research challenge in representative transfer learning is selecting a representational framework for instances of different domains that minimizes the divergence between the source and target domains. Domain adaptation is designed to learn the more robust, higher-level features that transfer learning requires. This paper presents a novel transfer learning framework that employs a marginal probability-based domain adaptation methodology followed by a deep autoencoder. The proposed framework adapts the source and target domains by reducing the distribution deviation between the features of both domains. Further, we apply deep neural networks to transfer learning and propose a supervised learning algorithm based on an encoding and decoding layer architecture. We propose two variants of the transfer learning technique for classification: (i) Domain adapted transfer learning with deep autoencoder-1 (D-TLDA-1) using linear regression and (ii) Domain adapted transfer learning with deep autoencoder-2 (D-TLDA-2) using softmax regression. Simulations have been conducted on two popular real-world datasets: the ImageNet dataset for image classification and the 20_Newsgroups dataset for text classification. The resulting improvements in classification accuracy show the advantage of the proposed D-TLDA framework over prominent state-of-the-art machine learning and transfer learning approaches.


1 Introduction

In the modern era of automation, intelligent machine learning (ML) technologies are developing rapidly to support real-life applications such as regression, classification, and clustering. Such systems depend heavily on the learning techniques used to build a classifier. ML techniques are mainly categorized as supervised, unsupervised, and semi-supervised learning [31]. Supervised learning techniques train the machine using labeled input data (train/source data) to predict the desired output (labels) of test data. In unsupervised learning techniques, by contrast, the class labels are unknown and hidden patterns must be mined from the train data. Semi-supervised learning techniques are used when only a small amount of labeled train data is available, i.e., a large amount of unlabeled train data is used in conjunction with a few labeled instances to build the learning classifiers [57].

Several ML techniques assume that the learning of every new task starts from scratch, and that the training and test data originate from the same feature distribution and domain space [29]. Unsupervised and semi-supervised learning methods are relatively ineffective if sufficient information about the test and training data is not available. In unsupervised learning, the prediction model performs poorly when no information about the unlabeled data exists. To overcome this, a new approach to learning, called Transfer Learning (TL), has emerged as an attractive area of research [12, 14, 25, 28, 33, 38, 42, 49]. TL is inspired by human intelligence: humans do not need to learn every new task from scratch, because they can efficiently reuse knowledge already acquired from other tasks to solve a new task or to learn more rapidly. For example, experience of riding a bicycle helps in acquiring motorbike skills quickly. TL algorithms are usually designed to predict the test data using a mathematical model trained on a dataset that differs in domains, tasks, and distributions [57].

Domain adaptation (DA) is a representative form of TL, which utilizes both source and target data for learning even though their distributions are not the same [31,32,33, 48]. The goal of DA is to share knowledge between source and target data, but only for related tasks or domains. A notable issue within DA is to decrease the dissimilarity between the distributions of the source and target domains while maintaining the essential features of both. Although numerous research works on TL algorithms over the last decade have sought an appropriate distance measure for DA, this remains an ongoing and challenging subject. To that end, this work offers a novel DA method for determining the distance between domains. Specifically, the DA approach runs over the source domain to derive the representative features, which are then applied to the target data. The distance measure is defined to quantify the difference between the source and target domains: if the distance between domains is small, their distributions are considered similar; otherwise they are considered dissimilar. Hence, the weight vectors of the encoding and decoding layers trained on the source data can be applied to the target data for classification. Moreover, the feature representation model based on the proposed DA approach makes the source and target domain distributions similar, which yields a new framework for the domain-specific features.

In this paper, we propose a marginal probability-based domain adaptation methodology, which efficiently captures the similarity between the features of the source and target domains. It is a feature representation technique that reduces the dimensionality of the features within both domains for better representation learning. Further, we utilize one of the most powerful approaches in machine learning, the deep neural network [42]. The concept of a deep learning network originated from the artificial neural network with many hidden layers, which capture the intricate non-linear representation of data. The deep neural network is used as an intelligent feature extraction module that offers considerable flexibility in extracting high-level features in the proposed TL model. A deep autoencoder is a deep feed-forward neural network that contains more than one hidden layer and is trained to reconstruct its input. We have utilized a deep autoencoder-based supervised representation learning method for transfer learning called Transfer Learning with Deep Autoencoder (TLDA) [55]. In TLDA, there are encoding layers and decoding layers, and the weights are shared across source and target data. The first encoding layer is called the embedding layer. At this layer, the difference between the source and target data distributions is minimized through the K-L divergence of the embedded instances between domains. The second encoding layer is the label encoding layer, where the source label information is encoded using a softmax regression model. The TLDA framework discussed above is coupled with our proposed DA approach, as shown in Fig. 1.

Significant contributions of the work are summarized as follows:

i) We propose a new transfer learning framework that can handle related multi-domain problems in which the source and target domains exhibit substantially different data distributions (e.g., the source data contain images of passenger cars, whereas the target domain comprises ambulances).

ii) In the proposed framework, we exploit the marginal probability distribution between the source and target domains to efficiently select similar features and bridge the large differences across the two domains.

iii) Two different feature representation-based domain adaptation techniques in conjunction with a deep autoencoder are proposed. We term the newly developed techniques (1) Domain Adapted Transfer Learning with Deep Autoencoder-1 (D-TLDA-1) and (2) Domain Adapted Transfer Learning with Deep Autoencoder-2 (D-TLDA-2), the latter using softmax regression.

iv) To analyze the efficacy of D-TLDA-1 and D-TLDA-2 in terms of improvements in classification accuracy, simulations are conducted on two real-world datasets, namely the ImageNet dataset and the 20_Newsgroups dataset, and the impact of parameter sensitivity is examined.

v) To assess the advantage of the proposed D-TLDA-1 and D-TLDA-2, thorough comparisons are conducted with the following:

(a) Transfer learning methods: Transfer Component Analysis (TCA) [32], marginalized Stacked Denoising Autoencoder (mSDA) [8], Transfer Learning with Deep Autoencoder (TLDA) [55, 56].

(b) Machine learning tools: Linear Regression (LR) [16], Neural Network (NN) [3], Support Vector Machine (SVM) [45], and Extreme Learning Machine [50].

The rest of the paper is organized as follows. Section 2 contains a literature survey. Section 3 discusses the modelling framework of the objective function, which is to be optimized in the learning model. In Section 4, the proposed framework for domain adaptation in transfer learning with a deep autoencoder is explained. In Section 5, two real-world datasets are used to test the performance of our proposed techniques; this section also reports our experimental results and compares them with various machine learning and transfer learning techniques. Finally, we conclude the paper in Section 6 with a brief discussion of our contribution and future work.

2 Literature survey

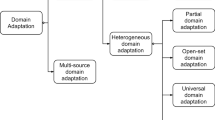

The study conducted by Pan and Yang [31] is a ground-breaking effort that categorizes TL and examines the research achievements made up to 2010. Homogeneous and heterogeneous TL techniques are introduced and summarized in the survey by Weiss et al. [49], and an in-depth look into heterogeneous TL is provided by Day and Khoshgoftaar in their study [14]. Several researchers have studied specialized topics, including activity recognition [12], computational intelligence [28], and deep learning [42]. A number of studies also concentrate on particular application situations, such as visual categorization [38], collaborative recommendation with auxiliary data [30], computer vision [48], and sentiment analysis [25]. A neural probabilistic language learning model proposed by Bengio et al. [4] learns a distributed representation for words and the probability function for every word sequence. A unique sharing method utilizing Bayesian methodology was reported by Bakker and Heskes [1], which is a general amalgamation of expert architecture incorporating gated dependencies on task characteristics. A heuristic approach, called positive examples and negative examples labeling heuristic, was proposed by Fung et al. [17], in which a classifier efficiently utilizes both positive and negative instances. Collobert and Weston [11] proposed a unified architecture for natural language processing for features relevant to the tasks. Blitzer et al. [5] reported the concept of pivot feature selection in domain adaptation (DA) for a target domain using both the source and target domains. A co-clustering algorithm was suggested by Dai et al. [13] to transfer label information across the source and target domains. Cao et al. [6] introduced an extension of the AdaBoost algorithm, the Inductive Transfer Learning AdaBoost (ITLAdaBoost) algorithm, for pedestrian detection. Tang et al. [43] developed a framework for distributed learning across heterogeneous networks to efficiently classify various types of social relationships. A multi-task transfer learning approach for small training samples was proposed by Saha and Gupta in [37]. The authors also developed an alternative method of multipliers to solve an optimization problem.

Rosenstein et al. [35] showed that when two tasks are entirely dissimilar, transferring knowledge, even with a brute-force approach, cannot prevent performance degradation. Therefore, the use of transformations to project instances of different domains into a common latent space is a widely studied TL technique. Silver et al. [40] proposed a context-sensitive neural network, in which a single-output neural network was used to learn different tasks. To construct a feature-based TL, Deng et al. [15] used a sparse autoencoder technique to recognize emotions in speech. Huang et al. [20] introduced the idea of cross-language knowledge transfer using a deep multilingual network with shared hidden layers. Long et al. [27] proposed an adaptation regularization based TL technique, which optimized the structural risk functional and adapted the joint distribution of the marginal and conditional distributions by exploring the maximum possible learning objectives. A hybrid heterogeneous TL framework was proposed by Zhou et al. [53], which utilized deep learning to learn the mapping between cross-domain complex features. Roy et al. [36] proposed a domain adaptation approach incorporating a stacked autoencoder-based deep neural network. Utkin et al. [44] proposed a TL approach using a robust deep learning algorithm for efficient classification in multi-robot systems. Huang et al. [21] used a deep neural network to design a TL approach for speaker adaptation in automatic speech recognition against speaker variability. Tahmoresnezhad and Hashemi [41] proposed a DA technique to contain domain shift problems when the data distribution varies too much.

Ganin and Lempitsky [18] proposed a back-propagation regularization technique for DA using deep learning models for unsupervised TL. The approach of [18] was further enhanced by Clinchant et al. [10], who incorporated more robust regularization for denoising autoencoders. A stacked denoising autoencoder (SDA) was proposed by Vincent et al. [47], which was later used by Glorot et al. [19] to learn robust features in data collected from different domains. Chen et al. [8] further improved the approach of [47] by proposing a marginalized SDA for transfer learning. Shao et al. [39] developed the deep adaptive exemplar autoencoder method of unsupervised DA using a bisection tree partition for source and target data. A TL model was described by Liu et al. [24], which transfers the knowledge learned from abundant, simple human actions to recognize complex human activities.

Recently, Zhang et al. [51] used a deep transfer based multi-task learning approach to obtain better feature representations for annotating gene expressions in biological images. Some surveys are available for readers who desire a thorough grasp of this area. In [29], Niu et al. presented the most representative works on TL in the decade from 2010 to 2020 and organized them into different categories. Zhang et al. [57] connect and systematize the existing TL research and summarize and interpret the mechanisms and strategies of TL in a comprehensive way, which provides a better understanding of the current research status and ideas on TL. Moreover, TL based mechanisms have been applied to a number of real-world applications such as object detection in remote sensing images [9], human activity recognition [7, 46], fruit freshness classification [22], sketch recognition [52], person re-identification [26], detection of malarial parasites [2], and prediction of COVID-19 infection through chest CT-scan images [23]. Unlike the earlier works, this paper proposes a novel supervised learning-based representation method called D-TLDA, which uses deep autoencoder networks to minimize the distance between the marginal probability distributions of the domains and to encode the label information of the source domain.

3 Modelling framework

In this section, we describe basic concepts of deep autoencoder, softmax regression, relative entropy, and regularization used in our proposed formulation. Table 1 presents the list of notations/symbols used in the proposed framework.

3.1 Deep autoencoder

An autoencoder is a mapping from an input (x) to hidden layers (y) such that the data can be reconstructed (\( \hat{x} \)) from y. It comprises two major processes: encoding and decoding. The encoder maps an input x into one or more hidden layers y using Eq. (1), whereas the decoder takes y and decodes it into the reconstructed output \( \hat{x} \) using Eq. (2).

\[ y=f\left( Wx+b\right) \qquad (1) \]

\[ \hat{x}=f\left({W}^Ty+{b}^T\right) \qquad (2) \]

Here, f represents a sigmoid function (a non-linear activation function), \( W\in {\mathbb{R}}^{k\times m} \) is the weight matrix, and \( b\in {\mathbb{R}}^{k\times 1} \) is the bias vector.

An autoencoder is essentially a feed-forward neural network [3]. The deep autoencoder is a particular neural network that contains more than one hidden layer and is trained to reconstruct its input values. Figure 2 shows a typical deep autoencoder diagram. In Fig. 2, \( {x}_1,{x}_2 \) and \( {x}_3 \) represent the inputs and \( {\hat{x}}_1,{\hat{x}}_2 \) and \( {\hat{x}}_3 \) are the outputs, with two central nodes, \( {z}_1 \) and \( {z}_2 \), arranged symmetrically in three hidden layers. The goal of the deep autoencoder is to minimize the deviation between the output \( \hat{x} \) and the input x.

To accomplish this objective, the deep autoencoder minimizes the reconstruction error function \( {\sum}_{i=1}^n{\left\Vert {\hat{x}}_i-{x}_i\right\Vert}^2 \) by learning the weight matrices \( {W}_1,{W}_1^T \) and bias vectors \( {b}_1,{b}_1^T \) [55, 56]. Hence, the aim of the deep autoencoder can be written as the following optimization problem.

\[ \underset{W_1,{b}_1,{W}_1^T,{b}_1^T}{\min}\ {\sum}_{i=1}^n{\left\Vert {\hat{x}}_i-{x}_i\right\Vert}^2 \qquad (3) \]

Suppose \( s=\left({x}_i^{(s)},{l}_i^{(s)}\right) \) represents the source domain, consisting of input data \( {x}_i^{(s)}\in {\mathbb{R}}^{m\times {n}_s} \) with labels \( {l}_i^{(s)}\in \left\{1,\dots, {n}_l\right\} \), and \( t={x}_i^{(t)} \) represents the target domain without labels. Then, for a given number of instances of the source domain (\( {n}_s \)) and target domain (\( {n}_t \)), the reconstruction error using Eq. (3) can be derived as follows:

\[ {f}_0={\sum}_{r\in \left\{s,t\right\}}{\sum}_{i=1}^{n_r}{\left\Vert {\hat{x}}_i^{(r)}-{x}_i^{(r)}\right\Vert}^2 \qquad (4) \]

Equation (4) can be understood with the help of Fig. 2, in which \( {y}_i^{(r)}=f\left({W}_1{x}_i^{(r)}+{b}_1\right) \) is the first hidden layer, called the embedding layer, with output \( y\in {\mathbb{R}}^{k\times 1} \) \( \left(k\le m\right) \), weight matrix \( {W}_1\in {\mathbb{R}}^{k\times m} \), and bias vector \( {b}_1\in {\mathbb{R}}^{k\times 1} \). The output of the embedding layer (y) is taken as the input of the second hidden layer. The second hidden layer (called the label layer) is calculated using Eq. (1) as \( {z}_i^{(r)}=f\left({W}_2{y}_i^{(r)}+{b}_2\right) \), where \( {W}_2\in {\mathbb{R}}^{n_l\times k} \) and \( {b}_2\in {\mathbb{R}}^{n_l\times 1} \) are the weight matrix and bias vector, respectively. The output \( z\in {\mathbb{R}}^{n_l\times 1} \) of the \( {n}_l \) nodes is used to predict the class labels of the target domain. Similarly, the third hidden layer \( \hat{y}\in {\mathbb{R}}^{k\times 1} \), known as the reconstruction of the embedding layer, with weight matrix \( {W}_2^T\in {\mathbb{R}}^{k\times {n}_l} \) and bias vector \( {b}_2^T\in {\mathbb{R}}^{k\times 1} \), is evaluated using Eq. (2) as \( {\hat{y}}_i^{(r)}=f\left({W}_2^T{z}_i^{(r)}+{b}_2^T\right) \). Finally, \( \hat{x} \) is reconstructed for input x by \( {\hat{x}}_i^{(r)}=f\left({W}_1^T{\hat{y}}_i^{(r)}+{b}_1^T\right) \), where \( {W}_1^T\in {\mathbb{R}}^{m\times k} \) and \( {b}_1^T\in {\mathbb{R}}^{m\times 1} \) are the corresponding weight matrix and bias vector, respectively.
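The four-layer pass described above can be sketched in a few lines of plain Python. This is only an illustrative sketch under stated assumptions: the helper names (`sigmoid`, `affine`, `forward`), the toy dimensions, and the weight values are hypothetical, and the decoder matrices are set to the transposes of the encoder matrices purely for illustration, whereas in the text they are separate decision variables.

```python
import math


def sigmoid(v):
    """Apply the logistic function f element-wise to a list of floats."""
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def affine(W, x, b):
    """Return W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def transpose(W):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*W)]


def forward(x, params):
    """Embedding, label, reconstructed-embedding and reconstructed-input layers."""
    W1, b1, W2, b2, W2T, b2T, W1T, b1T = params
    y = sigmoid(affine(W1, x, b1))            # embedding layer, length k
    z = sigmoid(affine(W2, y, b2))            # label layer, length n_l
    y_hat = sigmoid(affine(W2T, z, b2T))      # reconstruction of y, length k
    x_hat = sigmoid(affine(W1T, y_hat, b1T))  # reconstruction of x, length m
    return y, z, y_hat, x_hat


def squared_error(x, x_hat):
    """Squared reconstruction error for one instance."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


# Hypothetical toy sizes: m input features, k embedding nodes, n_l labels.
m, k, n_l = 4, 3, 2
W1 = [[0.1 * (i + j) for j in range(m)] for i in range(k)]    # k x m
W2 = [[0.2 * (i - j) for j in range(k)] for i in range(n_l)]  # n_l x k
params = (W1, [0.0] * k, W2, [0.0] * n_l,
          transpose(W2), [0.0] * k, transpose(W1), [0.0] * m)

x = [0.2, 0.4, 0.6, 0.8]
y, z, y_hat, x_hat = forward(x, params)
error = squared_error(x, x_hat)
```

Summing `squared_error` over all source and target instances gives the kind of reconstruction term that the text refers to as Eq. (4).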

3.2 Softmax or multinomial logistic regression

In classification problems, the softmax or multinomial logistic regression model is used when the class label l can take several values, i.e., l ∈ {1, 2, …, nl}, where nl is the number of labels and nl ≥ 2. It is a generalization of logistic regression [16]. To calculate the probability Pr(l = j|x), j ∈ {1, 2, …, nl}, for a test input x, the softmax model constructs the hypothesis function hθ(x). The function hθ(x) is estimated by calculating the probability of each of the nl possible class labels to which x may belong. Therefore, it can be computed as follows:

Here, \( {\theta}_1,{\theta}_2,\dots, {\theta}_{n_l} \) are the parameters of the model and \( 1/{\sum}_{j=1}^{n_l}{e}^{\theta_j^T{x}_i} \) normalizes the distribution. For a given source data set \( {\left\{{x}_i,{l}_i\right\}}_{i=1}^n,{l}_i\in \left\{1,2,\dots, {n}_l\right\} \), the softmax regression model can be obtained by solving the optimization model presented in Eq. (6).

where I represents an indicator function, which takes the value 0 or 1. Once the softmax regression model is trained, i.e., the parameters of the model are evaluated, the probability that x belongs to label j can be calculated using Eq. (5), and the class label assigned to x can be obtained using Eq. (7).

The softmax regression model incorporates the label information of the source domain \( s=\left({x}_i^{(s)},{l}_i^{(s)}\right) \) into the embedding layer \( {y}_i^{(s)} \) by evaluating Eq. (7). Then, the loss function of the softmax regression can be formalized as follows:

Here, \( {\theta}_j^T\left(j\in \left\{1,\dots, {n}_l\right\}\right) \) is the j−th row of W2.
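As a rough illustration of the softmax steps described in this subsection (class probabilities, label assignment by the largest probability, and a loss over labeled source data), consider the sketch below. It assumes the standard softmax form and a mean negative log-likelihood loss; the function names and the toy parameters are hypothetical and are not taken from the paper.

```python
import math


def softmax_probs(theta, x):
    """Return Pr(l = j | x) for j = 1..n_l; theta holds one parameter row per label."""
    scores = [sum(t * xi for t, xi in zip(row, x)) for row in theta]
    top = max(scores)                      # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)                      # normalizing constant
    return [e / total for e in exps]


def predict_label(theta, x):
    """Assign the label (numbered from 1) with the highest probability."""
    probs = softmax_probs(theta, x)
    return probs.index(max(probs)) + 1


def softmax_loss(theta, xs, labels):
    """Mean negative log-likelihood of the true labels (an assumed, common form)."""
    total = sum(math.log(softmax_probs(theta, x)[l - 1]) for x, l in zip(xs, labels))
    return -total / len(xs)


# Hypothetical toy problem with two features and two labels.
theta = [[1.0, 0.0], [0.0, 1.0]]
xs = [[2.0, 0.0], [0.0, 2.0]]
labels = [1, 2]

probs = softmax_probs(theta, xs[0])
predictions = [predict_label(theta, x) for x in xs]
loss = softmax_loss(theta, xs, labels)
```

With these toy parameters each instance receives most of the probability mass on its own label, so the loss stays below the value log 2 that a uniform guess over two labels would give.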

3.3 Relative entropy

Relative entropy, or Kullback-Leibler (KL) divergence, is a non-symmetric measure of divergence between two probability distributions [34]. If P and Q are two given discrete probability distributions, then the relative entropy D(P‖Q) is the loss of information when Q is used to approximate P. Similarly, D(Q‖P) gives the loss of information when P is used to approximate Q. Mathematically, D(P‖Q) and D(Q‖P) are calculated as follows:

\[ D\left(P\Vert Q\right)={\sum}_iP(i)\ln \frac{P(i)}{Q(i)},\qquad D\left(Q\Vert P\right)={\sum}_iQ(i)\ln \frac{Q(i)}{P(i)} \qquad (9) \]

Relative entropy measures the difference between the source and target data domains when they are embedded. In classification, two probability distributions are said to be dissimilar when the value of relative entropy is high. The total relative entropy between P and Q is given by D(P, Q) = D(P‖Q) + D(Q‖P). Then, the measure of relative entropy of embedded instances between the source and target domains may be written as:

\[ {f}_2=D\left({P}_s\Vert {P}_t\right)+D\left({P}_t\Vert {P}_s\right) \qquad (10) \]

Here, Ps and Pt are respectively the probability distributions of source and target at the embedded layer. They may be evaluated as follows:

As a result, when two different domains are embedded in the same embedding space, the relative entropy is used to determine their divergence. In the embedding space, the purpose of minimizing the relative entropy is to guarantee that the source and target domain distributions are comparable.
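The symmetric relative entropy between two embedded domains can be sketched as follows. The KL formula is the standard one; how the per-domain distributions are obtained is an assumption here (the embedded vectors of a domain are averaged and normalized to sum to one), and all function names and toy values are hypothetical.

```python
import math


def kl(p, q):
    """Standard KL divergence D(p || q) for discrete distributions.

    Assumes every q[i] is strictly positive, which holds for sigmoid outputs.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def symmetric_kl(p, q):
    """Total relative entropy D(p, q) = D(p || q) + D(q || p)."""
    return kl(p, q) + kl(q, p)


def embedded_distribution(ys):
    """Assumed construction: average the embedded vectors, then normalize to sum to 1."""
    n = len(ys)
    mean = [sum(col) / n for col in zip(*ys)]
    total = sum(mean)
    return [v / total for v in mean]


# Hypothetical embedded instances (rows) for a source and a target domain.
source_embedded = [[0.9, 0.1, 0.5], [0.7, 0.3, 0.5]]
target_embedded = [[0.2, 0.8, 0.5], [0.4, 0.6, 0.5]]

p_s = embedded_distribution(source_embedded)
p_t = embedded_distribution(target_embedded)
divergence = symmetric_kl(p_s, p_t)
```

The value is zero only when both distributions coincide and grows as the embedded domains drift apart, which is why it can serve as a term to be minimized.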

3.4 Regularization

Regularization is a common way of controlling and flexibly reducing over-fitting. It is accomplished by introducing a regularization term R(θ) in the cost function to manage the over-fitting of the parameters w. Mathematically, it is defined as \( R\left(\theta \right)=\delta {\left\Vert w\right\Vert}^2 \), where δ ≥ 0 is a constant multiplier.

4 Proposed transfer learning model for classification

This section explains our proposed transfer learning framework for classification. The detailed flow diagram of the learning model is presented in Fig. 3. It consists of several processes: domain adaptation, objective function formulation, the gradient descent algorithm, classifier construction, and accuracy measurement. All these processes are discussed in the following subsections.

4.1 Domain adaptation

Domain adaptation (DA) is a realistic illustration of TL because it uses both source and target data to train the model despite their distributional differences [31,32,33, 48]. DA aims to transfer knowledge between source and target data for related entities or tasks. A particular challenge for DA is to minimize the discrepancy between the distributions of the source and target domains while retaining the critical characteristics of both domains. Thus, we propose a distance measure for DA based on the marginal probability distribution in the present TL model. The proposed distance measure adapts the source and target domains by comparing the marginal distributions of both domains. Let \( {\mathit{\Pr}}_M^{(s)} \) and \( {\mathit{\Pr}}_M^{(t)} \) represent the discrete marginal probability distributions for each feature of the given source (\( {x}^{(s)} \)) and target (\( {x}^{(t)} \)) domains. Then, mathematically, \( {\mathit{\Pr}}_M^{(s)} \) and \( {\mathit{\Pr}}_M^{(t)} \) may be calculated using Eq. (12) as follows.

Now, let \( {d}_{ij} \) denote the difference between the marginal distribution probability of the i-th source feature and that of the j-th target feature, computed for each source feature against all target domain features. That is, the distance metric \( {d}_{ij} \) may be calculated as:

The adaptation works for the features having the same marginal distribution probability. In practice, however, the difference between the two probabilities is not exactly zero. Therefore, we use a pivot value (constant) u to decide whether the difference between the two domain distributions is small enough. Algorithm-1 demonstrates the complete procedure of the proposed domain adaptation (DA) technique. The outputs of Algorithm-1 are the newly generated source domain (sDA) and target domain (tDA). These adapted domains are then used in the deep autoencoder to form the neural network.
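The idea of comparing per-feature marginal probabilities against a pivot value can be illustrated with the sketch below. It is not a reproduction of Algorithm-1, whose details are not given in this text. The definition of the marginal probability used here (each feature's share of the total feature mass in a domain), the use of an absolute difference for \( {d}_{ij} \), and all function names and toy values are assumptions made only for illustration.

```python
def marginal_probabilities(X):
    """Assumed definition: each feature's share of the total mass in the domain.

    X is a list of instances (rows) with non-negative feature values and a
    non-zero overall sum.
    """
    column_sums = [sum(col) for col in zip(*X)]
    total = sum(column_sums)
    return [c / total for c in column_sums]


def distance_matrix(p_source, p_target):
    """d[i][j] = |p_source[i] - p_target[j]| for every source/target feature pair."""
    return [[abs(ps - pt) for pt in p_target] for ps in p_source]


def similar_pairs(d, u):
    """Feature pairs (i, j) whose distance does not exceed the pivot value u."""
    return [(i, j) for i, row in enumerate(d) for j, dij in enumerate(row) if dij <= u]


# Hypothetical toy domains: two instances with three features each.
X_source = [[1, 0, 3], [1, 2, 1]]
X_target = [[2, 1, 1], [2, 1, 1]]

p_s = marginal_probabilities(X_source)   # [0.25, 0.25, 0.5]
p_t = marginal_probabilities(X_target)   # [0.5, 0.25, 0.25]
d = distance_matrix(p_s, p_t)
pairs = similar_pairs(d, u=0.05)
```

A smaller pivot value keeps fewer feature pairs, so u acts as a trade-off between how strictly the two domains must agree and how many features survive the adaptation step.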

4.2 Objective function

Suppose \( s=\left({x}_i^{(s)},{l}_i^{(s)}\right) \) is a given labeled source domain with data \( {x}_i^{(s)}\in {\mathbb{R}}^{m\times {n}_s} \) and labels \( {l}_i^{(s)}\in \left\{1,\dots, {n}_l\right\} \), and \( t={x}_i^{(t)} \) is the target domain without labels. In our proposed framework, the objective is to minimize all the defined functions simultaneously: \( {f}_0 \) (in Eq. (4)), \( {f}_1 \) (in Eq. (8)), \( {f}_2 \) (in Eq. (10)) and \( {f}_3\left(W,b,{W}^T,{b}^T\right) \), as shown in Fig. 1. Therefore, using the weighted aggregation method, the total objective function [54, 56] can be formalized as follows.

Here α, β and γ are non-negative factors that scale the effect of the different components in the total objective function. In Eq. (14), the function \( {f}_3 \) is a regularization function on the parameters of the model, which is defined as follows:

Therefore, the objective of the proposed model is to minimize the total objective function, as expressed below.
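The weighted aggregation described in this subsection can be sketched as a single function. The pairing of weights with terms follows the description in Section 5.2 (α with the regression loss, β with the entropy, γ with the regularization), with the reconstruction error left unweighted. The form of the regularizer (a sum of squared norms over all weights and biases), the function names, and the numeric values are assumptions for illustration only.

```python
def squared_norm(a):
    """Sum of squares of a vector, or of a matrix given as a list of rows."""
    if a and isinstance(a[0], list):
        return sum(squared_norm(row) for row in a)
    return sum(v * v for v in a)


def regularizer(parameters):
    """Assumed form of f3: the sum of squared norms of all weights and biases."""
    return sum(squared_norm(p) for p in parameters)


def total_objective(f0, f1, f2, f3, alpha, beta, gamma):
    """Weighted aggregation: reconstruction + alpha*loss + beta*entropy + gamma*regularizer."""
    return f0 + alpha * f1 + beta * f2 + gamma * f3


# Hypothetical parameter values and component values.
W1 = [[1.0, 2.0], [0.0, 1.0]]
b1 = [0.5, 0.5]
f3 = regularizer([W1, b1])   # 1 + 4 + 0 + 1 + 0.25 + 0.25 = 6.5
J = total_objective(f0=1.0, f1=2.0, f2=3.0, f3=f3,
                    alpha=0.5, beta=0.1, gamma=0.00001)
```

Because the weights are non-negative, increasing any one of them shifts the minimizer toward solutions that make the corresponding term smaller, which is what the parameter sensitivity analysis in Section 5.2 probes.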

4.3 Transfer learning model

The unconstrained optimization problem formulated in Eq. (14) is a minimization problem with respect to the decision variables \( {W}_1,{b}_1,{W}_1^T,{b}_1^T \) and \( {W}_2,{b}_2,{W}_2^T,{b}_2^T \). To solve the optimization problem, we use the gradient descent algorithm [54, 56], an iterative process in which each variable is updated in turn so that the objective function decreases. Note that the optimization problem is not convex, so the procedure may get stuck in a local minimum. However, a good solution can still be obtained with a careful choice of step size. To solve the optimization problem with the gradient descent approach, we update the decision variables as follows:

Here, η is the step size, which determines the speed of convergence. Equations (16) and (17) require the partial derivatives (gradients) of the objective function with respect to \( {W}_1,{b}_1,{W}_1^T,{b}_1^T,{W}_2,{b}_2,{W}_2^T \) and \( {b}_2^T \). The derivatives are computed as follows:

Here, \( {W}_{2j} \) is the j-th row of \( {W}_2 \) and \( {n}_{sj} \) is the number of instances with label j in the source domain. Some intermediate variables are presented as follows:

The updated variables are used to train the layers of the autoencoder. Once the autoencoder layers are trained, the second autoencoder layer can be formed using the output of the previous autoencoder layers. This procedure may need to be repeated to build a deep autoencoder. This autoencoder is used as an initialization step in the transfer learning process. The details of transfer learning with the deep autoencoder are summarized in Algorithm-2. In our proposed learning model, we begin by running Algorithm-1 on the source and target data for the adaptation and then apply the outputs of Algorithm-1 in Algorithm-2.
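The update rule, in which every decision variable moves against its gradient by a step of size η, can be sketched on a small non-convex toy objective. This is a generic illustration rather than the paper's procedure: the analytic gradients of the paper are replaced here by central finite differences, and the objective, the function names, and the step size are hypothetical.

```python
def numerical_gradient(J, theta, eps=1e-6):
    """Central finite-difference approximation of the gradient of J at theta."""
    grad = []
    for i in range(len(theta)):
        up = list(theta)
        down = list(theta)
        up[i] += eps
        down[i] -= eps
        grad.append((J(up) - J(down)) / (2 * eps))
    return grad


def gradient_descent(J, theta0, eta, steps):
    """Repeat the update theta <- theta - eta * grad J(theta)."""
    theta = list(theta0)
    for _ in range(steps):
        grad = numerical_gradient(J, theta)
        theta = [t - eta * g for t, g in zip(theta, grad)]
    return theta


def toy_objective(theta):
    """Non-convex toy objective with two minima, at (1, 0) and (-1, 0)."""
    return (theta[0] ** 2 - 1.0) ** 2 + theta[1] ** 2


right = gradient_descent(toy_objective, [0.5, 1.0], eta=0.05, steps=500)
left = gradient_descent(toy_objective, [-0.5, 1.0], eta=0.05, steps=500)
```

The two runs differ only in their starting point yet end in different minima, which illustrates why a non-convex objective makes the result depend on initialization and step size.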

4.4 Construction of the classifiers

After training all the factors and variables of the learned autoencoder, we construct the classifier for labeling the target domain. Four different classifiers are built as follows.

(i) Directly use the source and target domains, without the proposed DA approach, to train the autoencoder using Algorithm-2. The output of the second hidden layer is used to calculate the probabilities of class membership, and the class with the maximum probability is predicted as the label. This classifier is termed transfer learning with deep autoencoder-1 (TLDA-1).

(ii) Directly use the source and target domains, without the proposed DA approach, and apply the softmax regression algorithm to train the classifier for the source domain in the embedding space. Then, predict the class label for the target domain using Algorithm-2. This classifier is called transfer learning with deep autoencoder-2 (TLDA-2).

(iii) First, apply our proposed DA procedure (Algorithm-1) to the source and target domains to select similar features. The adapted domains are then used to train the autoencoder using Algorithm-2. The class with the maximum probability of membership from the second hidden layer is predicted. We name this proposed classifier domain adapted transfer learning with deep autoencoder-1 (D-TLDA-1).

(iv) First, adapt the two domains using Algorithm-1 and then apply softmax regression to train the autoencoder using Algorithm-2 for classification. We name this proposed classifier domain adapted transfer learning with deep autoencoder-2 (D-TLDA-2).

5 Experimental simulations

The proposed D-TLDA frameworks are implemented to evaluate their effectiveness in classifying real-world datasets, namely the ImageNet dataset and the 20_Newsgroups dataset. This section consists of several subsections. The first subsection briefly summarizes the details of the datasets and their pre-processing according to the TL framework. The second subsection presents and discusses the accuracy obtained by the representative TL classification approaches. Finally, in the last subsection, we perform a thorough comparative study to demonstrate the efficacy of the proposed learning model over other transfer learning and traditional machine learning techniques. The proposed framework for classification has been implemented in MATLAB on a workstation with a Xeon® processor and 16 GB RAM running Windows 7.

5.1 Datasets

ImageNet Dataset

The ImageNet dataset is a collection of over 10 million images. Images from the categories ambulance, taxi, jeep, minivan, passenger car, and scooter are taken to build five different domains. The five domains contain the following images and categories: D1 (ambulance and scooter images), D2 (taxi and scooter images), D3 (jeep and scooter images), D4 (minivan and scooter images), and D5 (passenger car and scooter images). Hence, the images come from different domains and categories, e.g., ambulances come from D1 and taxis come from D2, which makes this an appropriate dataset for transfer learning.

20_Newsgroups Dataset

The 20_Newsgroups dataset is a collection of nearly 20,000 newsgroup documents, which are partitioned almost uniformly across 20 different groups based on their subject matter, with each group corresponding to a different topic. The dataset has become a popular collection for experimentally testing the ability of learning-based representation techniques in text classification. We have selected six categories from the 20_Newsgroups dataset, from which binary classification problems are formed based on the similarity of their subject matter. To construct our classification problem, we have built five different domains from these six categories: D1 (comp.os.ms-windows.misc + comp.graphics), D2 (comp.sys.ibm.pc.hardware + comp.graphics), D3 (comp.sys.mac.hardware + comp.graphics), D4 (comp.windows.x + comp.graphics), and D5 (rec.autos + comp.graphics).

To analyze the proposed D-TLDA frameworks, we select two of these five domains and construct a classifier by taking one as the source domain and the other as the target domain. Hence, the total number of possible classification problems is \( {}^5{P}_2=20 \) for each dataset. Tables 2 and 3 show the statistics of the real-world ImageNet dataset for image classification problems and the 20_Newsgroups dataset for text classification problems. The tables give the numbers of positive and negative instances for each domain to indicate the class labels, e.g., domain D1 of 20_Newsgroups contains data with class label 0 and class label 1, representing ‘comp.os.ms-windows.misc’ and ‘comp.graphics’, respectively. The number of features is 1000 for the ImageNet dataset and 61,188 for the 20_Newsgroups dataset.
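The count of 20 classification problems follows from choosing an ordered (source, target) pair out of five domains, which can be checked with a short sketch. The variable names are illustrative only.

```python
from itertools import permutations

domains = ["D1", "D2", "D3", "D4", "D5"]

# Every ordered pair (source, target) with source != target is one problem.
problems = list(permutations(domains, 2))
number_of_problems = len(problems)  # 5 * 4 = 20
```

Order matters here because (D1, D2) trains on D1 and predicts on D2, while (D2, D1) does the reverse, so both count as separate problems.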

5.2 Results and discussions

This section presents the empirical results of the proposed D-TLDA approaches for predicting the binary class labels of the target domains in the twenty classification problems of both the ImageNet and 20_Newsgroups datasets. Accuracy is widely used to estimate the performance of classifiers. Here, an absolute-difference-based accuracy measure is used to assess the correctness of the learning approaches. Accuracy is defined as the number of correctly identified sample labels divided by the total number of actual sample labels, and it is evaluated using Eq. (22).
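As a minimal sketch of the verbal definition above (correct labels divided by total labels), and not necessarily the exact form of Eq. (22), accuracy for binary labels could be computed in either of the two ways below. The function names are illustrative assumptions, as is the reading of "absolute difference based" as one minus the mean absolute difference between 0/1 labels.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def accuracy_abs_diff(y_true, y_pred):
    """Same quantity for 0/1 labels, written with absolute differences.

    Assumption: |t - p| is 1 for a misclassified sample and 0 otherwise,
    so the sum of absolute differences counts the errors.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    errors = sum(abs(t - p) for t, p in zip(y_true, y_pred))
    return (len(y_true) - errors) / len(y_true)


y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 0]
print(accuracy(y_true, y_pred))  # 0.8
```

For labels restricted to 0 and 1, the two functions agree; they differ only in how the errors are counted.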

The model with the highest accuracy is considered the most accurate in finding the unknown labels of the target domain. For each target domain, every other domain serves as a source domain, and a classification model (as discussed in Section 4.3) is trained for each source domain. The four TL classifiers are D-TLDA-1, D-TLDA-2, TLDA-1, and TLDA-2. Therefore, a total of 20 × 4 = 80 learning models were constructed for the target domains. The D-TLDA and TLDA classifiers differ in their implementation procedure: D-TLDA first applies the proposed DA approach (Algorithm-1) and then performs Algorithm-2, whereas TLDA is implemented directly using Algorithm-2.
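The model count and the difference between the two families of classifiers can be sketched as follows. This is only an enumeration under stated assumptions: the names `CLASSIFIERS` and `learning_models` are hypothetical, and "Algorithm-1" and "Algorithm-2" are used purely as labels for the steps described in the text.

```python
from itertools import permutations, product

DOMAINS = ["D1", "D2", "D3", "D4", "D5"]

# True means the domain adaptation step (Algorithm-1) runs before Algorithm-2.
CLASSIFIERS = {
    "D-TLDA-1": True,
    "D-TLDA-2": True,
    "TLDA-1": False,
    "TLDA-2": False,
}


def learning_models():
    """Yield one record per (source, target, classifier) combination."""
    tasks = permutations(DOMAINS, 2)
    for (source, target), (name, adapts) in product(tasks, CLASSIFIERS.items()):
        steps = ["Algorithm-1", "Algorithm-2"] if adapts else ["Algorithm-2"]
        yield {"source": source, "target": target,
               "classifier": name, "steps": steps}


models = list(learning_models())
print(len(models))  # 80
```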

The classifiers aim to minimize the objective function presented in Eq. (12) by obtaining the optimal encoding and decoding weight vectors W1, W2, \( {W}_1^T \), and \( {W}_2^T \). It is therefore essential to tune the parameters associated with the individual objectives: α, associated with the loss function of the regression model; β, associated with the entropy; and γ, associated with the regularization function. We have conducted an extensive parameter sensitivity analysis of the proposed D-TLDA-1 and D-TLDA-2 as well as TLDA-1 and TLDA-2. To investigate the influence of the parameters, α and β are sampled from the set {0.01, 0.05, 0.10, 0.50, 1.00, 5.00, 10.00, 50.00, 100.00}, the embedding-layer parameter k is selected from {10, 20, ..., 80}, and γ is fixed at 0.00001.
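The search space described above can be written out as a small sketch. The text does not state whether α and β were varied jointly or one at a time, so the full Cartesian product below is an assumption, as are the names `ALPHAS`, `BETAS`, `KS`, and `parameter_grid`.

```python
from itertools import product

# Candidate values as listed in the text.
ALPHAS = [0.01, 0.05, 0.10, 0.50, 1.00, 5.00, 10.00, 50.00, 100.00]
BETAS = list(ALPHAS)            # beta is sampled from the same set as alpha
KS = list(range(10, 81, 10))    # {10, 20, ..., 80}
GAMMA = 0.00001                 # held fixed


def parameter_grid():
    """Yield every (alpha, beta, k) setting with gamma held fixed.

    Assumption: a full Cartesian product; the original study may have
    explored only a subset of these combinations.
    """
    for alpha, beta, k in product(ALPHAS, BETAS, KS):
        yield {"alpha": alpha, "beta": beta, "gamma": GAMMA, "k": k}


grid = list(parameter_grid())
print(len(grid))  # 9 * 9 * 8 = 648
```

Under this assumption the grid has 648 settings per classifier, which suggests why only a few representative settings are usually plotted.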

Supervised domain adaptation experiments are performed over 20 runs, and the average accuracies of the 20 classification problems are reported for both datasets. The results are presented in the Appendix as Table 5 for the ImageNet dataset and Table 6 for the 20_Newsgroups datasets. In Tables 5 and 6, the highest accuracy values, representing the best learning model for the target domain, are shown in bold. Figure 4 shows the classification accuracy for the twenty adaptation tasks on the ImageNet dataset for the four methods with different parameters. In Fig. 4, the x-axis represents the index of the 20 target domain instances, and the y-axis represents the average percentage accuracy. After some preliminary experiments, we present eight parameter variations on the ImageNet dataset for the sensitivity analysis and depict the outcomes in Fig. 4a–h. The average accuracies of all the problems are sorted in increasing order for ease of comparison. From the figures, we observe that the performance of D-TLDA is relatively stable compared to TLDA with respect to the selection of α and β. Fig. 4 also shows that the proposed D-TLDA-2 outperforms TLDA-2 for all parameter choices. Similarly, D-TLDA-1 gives better results than TLDA-1 for most target domains.

The parameter values α = 5.00, β = 5.00 and α = 10.00, β = 10.00 achieve the highest accuracies for the ImageNet dataset, with the other parameters set to γ = 0.00001 and k = 10 (see Fig. 4e, f). Thus, we use these parameter values to perform classification on the 20_Newsgroups datasets. The accuracies of the four classifiers on the 20_Newsgroups datasets, averaged over twenty runs, are shown in Fig. 5, and the results are presented in Table 6 of the Appendix. As with the ImageNet dataset, we evaluated the results for 20 classification problems drawn from the 20_Newsgroup dataset. The figures demonstrate the efficacy of our proposed TL framework. D-TLDA-2 performs better than D-TLDA-1, indicating the superiority of the nonlinear sigmoid regression model for representative learning. D-TLDA-2 also outperforms TLDA-1 and TLDA-2, indicating the effectiveness of the domain adaptation task from the source domain. Notably, D-TLDA-1 is better than both TLDA-2 and TLDA-1, which shows the benefit of the marginal probability distribution-based distance measure in the source domain and the success of using deep learning for transfer learning with domain adaptation.

5.3 Comparative study

5.3.1 Comparison with well-known transfer learning techniques

We have compared the performance of our proposed method with three popular baseline transfer learning methods, viz. Transfer Component Analysis (TCA) [32], marginalized Stacked Denoising Autoencoders (mSDA) [8], and Transfer Learning with Deep Autoencoder (TLDA) [55, 56]. TCA is one of the first machine learning methods aimed at learning a low-dimensional representation for transfer learning. The number of latent dimensions was carefully tuned during the implementation of TCA: for the ImageNet dataset, the number is sampled from [10, 20, 30, …, 80], and the best results are reported. The mSDA is a transfer learning algorithm based on stacked autoencoders, and we adopt the default parameters as noted in Chen et al. [8]. TLDA-1 and TLDA-2 are evaluated on the classification problems with parameter values α = 10.00, β = 10.00, γ = 0.00001, u = 1 × 10^−5, and k = 10.

The average accuracies over the 20 classification problems are compared in Figs. 6 and 7 for the ImageNet dataset and the 20_Newsgroup dataset, respectively. In Figs. 6 and 7, column 1 shows the TCA classification results on the 20 classification problems, with an average accuracy of 75.5% for the ImageNet dataset and 68.3% for the 20_Newsgroup dataset. Column 2 shows that the mSDA classification accuracy is 83.3% and 73.32% for the respective datasets. The average classification accuracies of TLDA-1 and TLDA-2 are represented by columns 3 and 4 of Figs. 6 and 7, with values of 88.7% and 90.1% for the ImageNet dataset and 82.6% and 87.7% for the 20_Newsgroup dataset. Finally, columns 5 and 6 show the accuracies obtained by the proposed D-TLDA-1 and D-TLDA-2 approaches, with average values of 90.8% and 92.1% for the ImageNet dataset and 93.2% and 93.7% for the 20_Newsgroup dataset. Thus, the proposed D-TLDA-1 and D-TLDA-2 approaches achieve the highest prediction accuracy among the compared transfer learning methods.

Next, we compare the prediction accuracy on both classification datasets with that of well-known classical machine learning methods and analyze the results to assess the efficacy of the proposed transfer learning method.

5.3.2 Comparison with traditional machine learning techniques

We have compared the performance of our proposed method with traditional machine learning tools: Linear Regression [16], Neural Network [3], Support Vector Machine [45], and Extreme Learning Machine [50]. Neural Network (NN) and Logistic Regression (LR) are among the most traditional supervised machine learning algorithms. For the implementation of the LR and NN algorithms, we use the original code and adopt the default parameters, with the number of hidden layers set to 10. The SVM is a learning algorithm based on a support vector regression model for evaluating the predictive accuracy on the target domains. The number of hidden nodes used in ELM is chosen by trial and error and set to 100 for both datasets. Each machine learning tool is evaluated on the different target domains with varying combinations of source domains. The accuracies averaged over the 20 classification problems for the ImageNet dataset and the 20_Newsgroup dataset are shown in Figs. 8 and 9, respectively, for a comparative analysis of these machine learning approaches.

For the ImageNet dataset, column 1 of Fig. 8 shows the average accuracy of the LR method on the classification problems, which is 80.5%. Column 2 shows that the SVM classification accuracy is 84.4%, whereas column 3 represents the NN classifier, which obtains 80.56%. The ELM result is represented by column 4, with an average accuracy of 85.18%. Further, columns 5 and 6 show the accuracies obtained by the proposed D-TLDA-1 and D-TLDA-2 approaches, with average values of 90.8% and 92.1%. Similarly, for the 20_Newsgroup dataset, the average classification accuracy obtained by the LR approach is 77.1%, represented by column 1 of Fig. 9. Column 2 presents the average prediction accuracy of the SVM, which is 71.38%; column 3 shows the NN method with 65.8%; and column 4 shows the ELM approach with 72.91%. The average classification accuracies of D-TLDA-1 and D-TLDA-2 are represented by columns 5 and 6 of Fig. 9, with values of 93.2% and 93.7% for the 20_Newsgroup dataset. The proposed D-TLDA-1 and D-TLDA-2 thus provide better average accuracies than all the machine learning tools considered for comparison.

In summary, we observe that the proposed D-TLDA method performs better than all the compared algorithms on the image classification problems of the ImageNet dataset and the text classification problems of the 20_Newsgroup dataset. In general, D-TLDA-2 retains the larger margin of accuracy improvement on both datasets. Table 4 illustrates the margins of improvement in accuracy (in percentage) of D-TLDA-1 and D-TLDA-2 over the compared machine learning and transfer learning approaches. Relative to TCA, D-TLDA-2 improves the accuracy by 16.60% on the ImageNet dataset and by 25.40% on the 20_Newsgroup dataset, the largest margins among the compared transfer learning methods, demonstrating our model’s stronger transfer learning capability.
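The margins quoted above can be reproduced from the average accuracies reported in Sections 5.3.1 and 5.3.2. The sketch below assumes that a margin is the plain difference between two average accuracies, which matches the 16.60 and 25.40 figures; the names `REPORTED` and `margins` are illustrative.

```python
# Average accuracies (%) as reported in the text for each method and dataset.
REPORTED = {
    "ImageNet": {
        "TCA": 75.5, "mSDA": 83.3, "TLDA-1": 88.7, "TLDA-2": 90.1,
        "LR": 80.5, "SVM": 84.4, "NN": 80.56, "ELM": 85.18,
        "D-TLDA-1": 90.8, "D-TLDA-2": 92.1,
    },
    "20_Newsgroup": {
        "TCA": 68.3, "mSDA": 73.32, "TLDA-1": 82.6, "TLDA-2": 87.7,
        "LR": 77.1, "SVM": 71.38, "NN": 65.8, "ELM": 72.91,
        "D-TLDA-1": 93.2, "D-TLDA-2": 93.7,
    },
}


def margins(dataset, method):
    """Difference between `method` and every baseline, in accuracy points.

    Assumption: the margin is a simple subtraction of average accuracies.
    """
    scores = REPORTED[dataset]
    return {
        baseline: round(scores[method] - value, 2)
        for baseline, value in scores.items()
        if not baseline.startswith("D-TLDA")
    }


print(margins("ImageNet", "D-TLDA-2")["TCA"])      # 16.6
print(margins("20_Newsgroup", "D-TLDA-2")["TCA"])  # 25.4
```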

6 Conclusion and future work

In transfer learning, domain adaptation adapts the similar features of the source and target domains, and the deep learning approach extracts robust features for designing a powerful classifier. This paper utilized a deep learning approach to improve transfer learning through a marginal probability-based domain adaptation methodology. The new transfer learning framework can tackle multi-domain problems in which the source and target domains exhibit markedly different data distributions. The proposed framework exploits the marginal probability distributions of the source and target domains to efficiently select similar features that bridge the differences between the two domains. The adapted domains are then used to train a deep neural network in the form of a deep autoencoder. The classification model is termed domain adapted transfer learning with deep autoencoder (D-TLDA). Two versions of this feature representation technique in conjunction with a deep autoencoder are proposed: (1) Domain Adapted Transfer Learning with Deep Autoencoder-1 (D-TLDA-1), using linear regression, and (2) Domain Adapted Transfer Learning with Deep Autoencoder-2 (D-TLDA-2), using softmax regression. Extensive experiments have been performed on two real-world datasets, viz. the ImageNet dataset and the 20_Newsgroup dataset, for image and text classification problems, respectively. Through a thorough comparison, we have shown that the proposed transfer learning frameworks, D-TLDA-1 and D-TLDA-2, achieve higher accuracy than other well-known transfer learning methods, viz. Transfer Component Analysis (TCA), marginalized Stacked Denoising Autoencoder (mSDA), and Transfer Learning with Deep Autoencoder (TLDA-1 and TLDA-2). We have also shown that D-TLDA-1 and D-TLDA-2 outperform prominent machine learning algorithms such as Linear Regression (LR), Neural Network (NN), Support Vector Machine (SVM), and Extreme Learning Machine (ELM).

In the future, we will apply the proposed approach to large datasets such as the Galaxy Dataset and the Leaf Dataset to analyze its accuracy relative to other transfer learning approaches. We will also examine the performance of the proposed method with various distance metrics (e.g., normalized Euclidean distance and Hamming distance) for determining the divergence between the distributions of the source domain and the target domain.

References

Bakker B, Heskes T (2003) Task clustering and gating for Bayesian multitask learning. J Mach Learn Res 1:83–99. https://doi.org/10.1162/153244304322765658

Banerjee T, Jain A, Sethuraman SC, Satapathy SC, Karthikeyan S, Jubilson A (2021) Deep convolutional neural network (falcon) and transfer learning-based approach to detect malarial parasite. Multimed Tools Appl. https://doi.org/10.1007/s11042-021-10946-5

Bengio Y (2009) Learning deep architectures for AI. Found Trends Mach Learn 2:1–127. https://doi.org/10.1561/2200000006

Bengio Y, Ducharme R, Vincent P, Janvin C (2003) A neural probabilistic language model. J Mach Learn Res 3:1137–1155. https://doi.org/10.1162/153244303322533223

Blitzer J, Crammer K, Kulesza A et al (2007) Learning bounds for domain adaptation. Neural Inf Process Syst:129–136

Cao X, Wang Z, Yan P, Li X (2013) Transfer learning for pedestrian detection. Neurocomputing 100:51–57. https://doi.org/10.1016/j.neucom.2011.12.043

Chakraborty S, Mondal R, Singh PK, Sarkar R, Bhattacharjee D (2021) Transfer learning with fine tuning for human action recognition from still images. Multimed Tools Appl 80:20547–20578. https://doi.org/10.1007/s11042-021-10753-y

Chen M, Weinberger KQ, Xu Z(E), Sha F (2015) Marginalizing stacked linear denoising autoencoders. J Mach Learn Res 16:3849–3875

Chen J, Sun J, Li Y, Hou C (2021) Object detection in remote sensing images based on deep transfer learning. Multimed Tools Appl 41:1028001. https://doi.org/10.1007/s11042-021-10833-z

Clinchant S, Csurka G, Chidlovskii B (2016) A domain adaptation regularization for denoising autoencoders. 54th annu meet assoc comput linguist ACL 2016 - short Pap 26–31. https://doi.org/10.18653/v1/p16-2005

Collobert R, Weston J (2008) A unified architecture for natural language processing. In: Proceedings of the 25th international conference on machine learning - ICML ‘08. ACM Press, New York, pp. 160–167

Cook D, Feuz KD, Krishnan NC (2013) Transfer learning for activity recognition: a survey. Knowl Inf Syst 36:537–556. https://doi.org/10.1007/s10115-013-0665-3

Dai W, Xue G-R, Yang Q, Yu Y (2007) Co-clustering based classification for out-of-domain documents. In: Proceedings of the 13th ACM SIGKDD international conference on knowledge discovery and data mining - KDD ‘07. ACM Press, New York, p 210

Day O, Khoshgoftaar TM (2017) A survey on heterogeneous transfer learning. J Big Data 4:29. https://doi.org/10.1186/s40537-017-0089-0

Deng J, Zhang Z, Marchi E, Schuller B (2013) Sparse autoencoder-based feature transfer learning for speech emotion recognition. In: 2013 Humaine association conference on affective computing and intelligent interaction. IEEE, pp 511–516

Friedman J, Hastie T, Tibshirani R (2010) Regularization paths for generalized linear models via coordinate descent. J Stat Softw 33:1–22. https://doi.org/10.1359/JBMR.0301229

Gabriel Pui Cheong Fung YJX, Hongjun Lu YPS (2006) Text classification without negative examples revisit. IEEE Trans Knowl Data Eng 18:6–20. https://doi.org/10.1109/TKDE.2006.16

Ganin Y, Lempitsky V (2014) Unsupervised domain adaptation by backpropagation

Glorot X, Bordes A, Bengio Y (2011) Domain adaptation for large-scale sentiment classification: a deep learning approach. In: Proc 28th Int Conf Mach Learn, pp 513–520

Huang JT, Li J, Yu D et al (2013) Cross-language knowledge transfer using multilingual deep neural network with shared hidden layers. In: ICASSP, IEEE Int Conf Acoust Speech Signal Process - Proc, pp 7304–7308. https://doi.org/10.1109/ICASSP.2013.6639081

Huang Z, Marco S, Lee C (2016) A unified approach to transfer learning of deep neural networks with applications to speaker adaptation in automatic speech recognition. Neurocomputing 218:448–459. https://doi.org/10.1016/j.neucom.2016.09.018

Kang J, Gwak J (2021) Ensemble of multi-task deep convolutional neural networks using transfer learning for fruit freshness classification. Multimed Tools Appl. https://doi.org/10.1007/s11042-021-11282-4

Kundu R, Singh PK, Ferrara M, Ahmadian A, Sarkar R (2021) ET-NET: an ensemble of transfer learning models for prediction of COVID-19 infection through chest CT-scan images. Multimed Tools Appl 81:31–50. https://doi.org/10.1007/s11042-021-11319-8

Liu F, Xu X, Qiu S, Qing C, Tao D (2016) Simple to complex transfer learning for action recognition. IEEE Trans Image Process 25:949–960. https://doi.org/10.1109/TIP.2015.2512107

Liu R, Shi Y, Ji C, Jia M (2019) A survey of sentiment analysis based on transfer learning. IEEE Access 7:85401–85412. https://doi.org/10.1109/ACCESS.2019.2925059

Liu H, Guo F, Xia D (2021) Domain adaptation with structural knowledge transfer learning for person re-identification. Multimed Tools Appl 80:29321–29337. https://doi.org/10.1007/s11042-021-11139-w

Long M, Wang J, Ding G, Pan SJ, Yu PS (2014) Adaptation regularization: a general framework for transfer learning. IEEE Trans Knowl Data Eng 26:1076–1089. https://doi.org/10.1109/TKDE.2013.111

Lu J, Behbood V, Hao P, Zuo H, Xue S, Zhang G (2015) Transfer learning using computational intelligence: a survey. Knowledge-Based Syst 80:14–23. https://doi.org/10.1016/j.knosys.2015.01.010

Niu S, Liu Y, Wang J, Song H (2021) A decade survey of transfer learning (2010–2020). IEEE Trans Artif Intell 1:151–166. https://doi.org/10.1109/tai.2021.3054609

Pan W (2016) A survey of transfer learning for collaborative recommendation with auxiliary data. Neurocomputing 177:447–453. https://doi.org/10.1016/j.neucom.2015.11.059

Pan SJ, Yang Q (2010) A survey on transfer learning. IEEE Trans Knowl Data Eng 22:1345–1359. https://doi.org/10.1109/TKDE.2009.191

Pan SJ, Tsang IW, Kwok JT, Yang Q (2011) Domain adaptation via transfer component analysis. IEEE Trans Neural Netw 22:199–210. https://doi.org/10.1109/TNN.2010.2091281

Pan J, Hu X, Li P, Li H, He W, Zhang Y, Lin Y (2016) Domain adaptation via multi-layer transfer learning. Neurocomputing 190:10–24. https://doi.org/10.1016/j.neucom.2015.12.097

Pharmaceutics S (2000) Letters to the editor letters to the editor. J Am Vet Med Assoc 224:871–872. https://doi.org/10.1097/gme.0b013e3181967b88

Rosenstein MT, Marx Z, Kaelbling LP, et al (2005) To transfer or not to transfer. NIPS 2005 work Transf learn 898:1–4

Roy AG, Sheet D (2016) DASA: Domain Adaptation in Stacked Autoencoders using Systematic Dropout. arXiv Prep:1–5

Saha B, Gupta S (2016) Multiple task transfer learning with small sample sizes. Knowl Inf Syst 46:315–342. https://doi.org/10.1007/s10115-015-0821-z

Shao L, Zhu F, Li X (2015) Transfer learning for visual categorization: a survey. IEEE Trans Neural Networks Learn Syst 26:1019–1034. https://doi.org/10.1109/TNNLS.2014.2330900

Shao M, Ding Z, Zhao H, Fu Y (2016) Spectral bisection tree guided deep adaptive exemplar autoencoder for unsupervised domain adaptation. In: Thirtieth AAAI Conf Artif Intell, pp 2023–2029

Silver DL, Poirier R, Currie D (2008) Inductive transfer with context-sensitive neural networks. Mach Learn 73:1–24. https://doi.org/10.1007/s10994-008-5088-0

Tahmoresnezhad J, Hashemi S (2017) Visual domain adaptation via transfer feature learning. Knowl Inf Syst 50:585–605. https://doi.org/10.1007/s10115-016-0944-x

Tan C, Sun F, Kong T et al (2018) A survey on deep transfer learning. In: Artificial neural networks and machine learning – ICANN 2018. ICANN 2018. Springer, Cham, pp 270–279

Tang J, Lou T, Kleinberg J, Wu S (2016) Transfer learning to infer social ties across heterogeneous networks. ACM Trans Inf Syst 34:1–43. https://doi.org/10.1145/2746230

Utkin LV, Popov SG, Zhuk YA (2016) Robust transfer learning in multi-robot systems by using sparse autoencoder. In: 2016 XIX IEEE international conference on soft computing and measurements (SCM). IEEE, pp 224–227

Vapnik VN (2000) The nature of statistical learning theory. Springer New York, New York, NY

Varshney N, Bakariya B, Kushwaha AKS (2021) Human activity recognition using deep transfer learning of cross position sensor based on vertical distribution of data. Multimed Tools Appl. https://doi.org/10.1007/s11042-021-11131-4

Vincent P, Larochelle H, Lajoie I et al (2010) Stacked denoising autoencoders: learning useful representations in a deep network with a local Denoising criterion. J Mach Learn Res 11:3371–3408. https://doi.org/10.1111/1467-8535.00290

Wang M, Deng W (2018) Deep visual domain adaptation: a survey. Neurocomputing 312:135–153. https://doi.org/10.1016/j.neucom.2018.05.083

Weiss K, Khoshgoftaar TM, Wang D (2016) A survey of transfer learning. J Big Data 3:9. https://doi.org/10.1186/s40537-016-0043-6

Zhai J, Shao Q, Wang X (2016) Improvements for P-ELM1 and P-ELM2 pruning algorithms in extreme learning machines. Int J Uncertainty, Fuzziness Knowledge-Based Syst 24:327–345. https://doi.org/10.1142/S0218488516500161

Zhang W, Li R, Zeng T, Sun Q, Kumar S, Ye J, Ji S (2020) Deep model based transfer and multi-task learning for biological image analysis. IEEE Trans Big Data 6:322–333. https://doi.org/10.1109/TBDATA.2016.2573280

Zhao P, Liu Y, Lu Y, Xu B (2019) A sketch recognition method based on transfer deep learning with the fusion of multi-granular sketches. Multimed Tools Appl 78:35179–35193. https://doi.org/10.1007/s11042-019-08216-6

Zhou JT, Pan SJ, Tsang IW, Yan Y (2014) Hybrid heterogeneous transfer learning through deep learning. AAAI Conf Artif Intell:2213–2219

Zhuang F, Cheng X, Pan SJ et al (2014) Transfer learning with multiple sources via consensus regularized autoencoders. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), pp 417–431

Zhuang F, Cheng X, Luo P et al (2015) Supervised representation learning: transfer learning with deep autoencoders. Int Jt Conf Artif Intell:4119–4125

Zhuang F, Cheng X, Luo P, Pan SJ, He Q (2018) Supervised representation learning with double encoding-layer autoencoder for transfer learning. ACM Trans Intell Syst Technol 9:1–17. https://doi.org/10.1145/3108257

Zhuang F, Qi Z, Duan K, Xi D, Zhu Y, Zhu H, Xiong H, He Q (2021) A comprehensive survey on transfer learning. Proc IEEE 109:43–76. https://doi.org/10.1109/JPROC.2020.3004555

Acknowledgements

The authors express their gratitude for the constructive remarks and suggestions received from the reviewers and editor, which have helped to improve the final version of the work.

Ethics declarations

Conflict of interest

No author affiliated with this paper has disclosed any potential or pertinent conflicts that may be perceived to have impending conflict with this work.

Appendix

Cite this article

Dev, K., Ashraf, Z., Muhuri, P.K. et al. Deep autoencoder based domain adaptation for transfer learning. Multimed Tools Appl 81, 22379–22405 (2022). https://doi.org/10.1007/s11042-022-12226-2

DOI: https://doi.org/10.1007/s11042-022-12226-2