Abstract

In this paper planar STIT tessellations with weighted axis-parallel cutting directions are considered. They are known also as weighted planar Mondrian tessellations in the machine learning literature, where they are used in random forest learning and kernel methods. Various second-order properties of such random tessellations are derived, in particular, explicit formulas are obtained for suitably adapted versions of the pair- and cross-correlation functions of the length measure on the edge skeleton and the vertex point process. Also, explicit formulas and the asymptotic behaviour of variances are discussed in detail.


1 Introduction

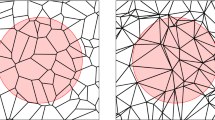

Let \(W\subset \mathbb {R}^2\) be a convex polygon, fix some time parameter \(t>0\), and let \(\Lambda\) be a locally finite and translation-invariant measure on the space of lines in the plane. A random STIT tessellation in the window W can be constructed by the following random process. We start by assigning to W a random lifetime, which is exponentially distributed with parameter \(\Lambda ([W])\), where [W] denotes the set of lines having non-empty intersection with W. When the lifetime of W has expired and is less than or equal to \(t\), a random line L that hits W is selected according to the distribution \(\Lambda (\,\cdot \, \cap [W])/\Lambda ([W])\) and splits W into two smaller polygons \(W\cap L^+\) and \(W\cap L^-\), where \(L^\pm\) are the two closed half-planes bounded by L. The construction is now repeated independently within \(W\cap L^+\) and \(W\cap L^-\) until the time threshold t is reached. The STIT tessellation of W, composed of cells with lifetime \(t>0\), is understood to be the union of the splitting edges constructed as above until time \(t>0\), and is denoted by \(Y_t(W)\) (see Fig. 1). These edges are referred to as maximal edges of \(Y_t(W)\). For a formal construction of STITs we refer the reader to Mecke et al. (2008) and Nagel and Weiss (2005). It has been shown in Nagel and Weiss (2005) that the construction just described can be consistently extended. This means that there exists a stationary random tessellation \(Y_t\) of \(\mathbb {R}^2\) with the property that its restriction to a polygon W has the same distribution as \(Y_t(W)\). For a STIT tessellation \(Y_t(W)\) we denote by \(\mathcal {E}_t\) the random edge length measure on \(Y_t(W)\) and by \(\mathcal {V}_t\) the related vertex process.

In the existing literature special attention has been paid to planar STIT tessellations in the so-called isotropic case, as well as to their higher-dimensional analogues. The isotropic case arises if the line measure \(\Lambda\) is taken to be a constant multiple of the unique Haar measure on the space of lines. The reason for this particular choice is that only in this case can one rely on classical integral geometry and obtain the most explicit formulas for second-order parameters related to such tessellations.

On the other hand, it has turned out that there is another particular class of STIT tessellations, called Mondrian tessellations, for which a second-order description is desirable, since such tessellations have found numerous applications in machine learning. Reminiscent of the famous paintings of the Dutch modernist painter Piet Mondrian, the eponymous tessellations are a version of STIT tessellations with only axis-parallel cutting directions. Originally established by Roy et al. (2008), Mondrian tessellations have been shown to have multiple applications in random forest learning (Lakshminarayanan et al. 2014, 2016) and kernel methods (Balog et al. 2016). Both random forest learners and random kernel approximations based on the Mondrian process have shown strong results, especially as they are substantially better suited to online learning than many of their tessellation-based counterparts. This is due to the self-similarity of Mondrian tessellations, which stems from their defining characteristic of being iteration stable (see Nagel and Weiss (2005)), and allows one to obtain explicit representations for many conditional distributions of Mondrian tessellations. This property allows a tessellation-based learner to be re-trained on new data without restarting the training process and is thus considerably more efficient on large data sets. These methods have recently been carried over to general STIT tessellations by Ge et al. (2019) and O’Reilly and Tran (2020).

Formally, by a weighted planar Mondrian tessellation we understand a planar STIT tessellation whose driving line measure \(\Lambda\) is concentrated on a set of lines having axis-parallel directions, one with weight \(p\in (0,1)\), the other with weight \((1-p)\) (see Fig. 2). Such tessellations are the focus of the present paper. While mean values for Mondrian and more general STIT tessellations can be derived by means of classical translative integral geometry, for second-order properties this is only the case for isotropic STIT tessellations, since in this case integral-geometric methods with respect to the full group of rigid motions become an essential tool in the analysis. The purpose of this paper is to close this gap partially and to study second-order properties of weighted planar Mondrian tessellations.
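When the window is an axis-parallel rectangle, the recursive construction described above can be written down almost line by line. The following Python sketch is only an illustration and not the authors' implementation: the function name `sample_mondrian` is hypothetical, and the convention that horizontal cutting lines (parallel to \(e_1\)) carry weight p while vertical ones carry weight \(1-p\) is an assumption made for the example; swapping the two weights gives the mirrored convention.

```python
import random

def sample_mondrian(x0, x1, y0, y1, t, p, rng, birth=0.0):
    """Return the maximal edges of one Mondrian sample in [x0, x1] x [y0, y1].

    Assumed convention (illustrative only): horizontal cutting lines have
    weight p, vertical cutting lines have weight 1 - p.
    """
    width, height = x1 - x0, y1 - y0
    # Measure of all lines hitting the cell: horizontal lines are indexed by
    # their height (a range of length `height`), vertical ones by `width`.
    rate = p * height + (1.0 - p) * width
    if rate <= 0.0:
        return []  # degenerate cell, nothing to split
    split_time = birth + rng.expovariate(rate)
    if split_time > t:
        return []  # lifetime exceeds the time threshold: the cell survives
    if rng.random() < p * height / rate:
        y = rng.uniform(y0, y1)  # horizontal cut at a uniform height
        edge = ((x0, y), (x1, y))
        children = [(x0, x1, y0, y), (x0, x1, y, y1)]
    else:
        x = rng.uniform(x0, x1)  # vertical cut at a uniform abscissa
        edge = ((x, y0), (x, y1))
        children = [(x0, x, y0, y1), (x, x1, y0, y1)]
    edges = [edge]
    # Repeat the construction independently in both sub-cells.
    for cx0, cx1, cy0, cy1 in children:
        edges += sample_mondrian(cx0, cx1, cy0, cy1, t, p, rng, split_time)
    return edges

edges = sample_mondrian(-1.0, 1.0, -1.0, 1.0, t=3.0, p=0.3, rng=random.Random(1))
```

Each returned edge is a pair of endpoints; the list as a whole plays the role of the set of maximal edges of \(Y_t(W)\) for the chosen rectangle.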

In the following Section 2 our main results are presented. The remaining sections of this paper are then dedicated to proving the main results, with Section 3 proving the variance formulas, Section 4 providing some auxiliary results, Section 5 and Section 7 deriving the Mondrian pair-correlation functions for \(\mathcal {E}_t\) and \(\mathcal {V}_t\), respectively, and Section 6 showing their (Mondrian) cross-correlation function.

2 Main Results

2.1 Notation

Let \(\mathbb {R}\) denote the real line and \(\mathbb {R}^2\) the Euclidean plane equipped with their respective Borel \(\sigma\)-fields, and write \(\ell _1(\cdot )\) and \(\ell _2(\cdot )\) for the corresponding Lebesgue measure on each space. Given a topological space \(\mathbb {X}\) and a measure \(\mu\) on \(\mathbb {X}\), we denote by \(\mu ^{\otimes k}\), \(k\in \mathbb {N}\), its k-fold product measure. Further, for a set \(A \subset \mathbb {X}\) we write \(\textbf{1}_A( \cdot )\) for its indicator function and \(\mathfrak {B}(\mathbb {X})\) the Borel \(\sigma\)-field on \(\mathbb {X}\). For a random element X in \((\mathbb {X}, \mathfrak {B}(\mathbb {X}))\) we write \(\mathbb {P}_X\) for its distribution, and denote by \(X \overset{d}{=} Y\) equality in distribution of two \(\mathbb {X}\)-valued random elements X, Y, i.e., \(\mathbb {P}_X=\mathbb {P}_Y\).

Let \([\mathbb {R}^2]\) be the space of lines in \(\mathbb {R}^2\). Equipped with the Fell topology, \([\mathbb {R}^2]\) carries a natural Borel \(\sigma\)-field \(\mathfrak {B}([\mathbb {R}^2])\), see (Last and Penrose 2017, Chapter A.3). Further, define \([\mathbb {R}^2]_0\) to be the space of all lines in \(\mathbb {R}^2\) passing through the origin. For a line \(L\in [\mathbb {R}^2]\), we write \(L^+\) and \(L^-\) for the positive and negative half-planes of L, respectively, and \(L^\perp\) for its orthogonal line passing through the origin. For a compact set \(A\subset \mathbb {R}^2\) define

$$[A] := \{L\in [\mathbb {R}^2] : L\cap A \ne \emptyset \}$$

to be the set of all lines in the plane that have non-empty intersection with A. We set \(\mathcal {S}(A)\) and \(\mathcal {S}(\mathbb {R}^2)\) to be the bounded line segments within A and \(\mathbb {R}^2\), respectively, and denote the line segment between two points \(x,y \in \mathbb {R}^2\) as \(\overline{x,y}\). Furthermore, for lines \(L, L_1, L_2 \in [\mathbb {R}^2]\) with \(\emptyset \ne L\cap L_1 \ne L\), \(\emptyset \ne L\cap L_2 \ne L\), and \(L_1 \ne L_2\), we define \(L(L_1, L_2) = \overline{L\cap L_1, L \cap L_2}\) to be the line segment connecting the two intersection points, otherwise we set \(L(L_1, L_2) = \emptyset\). For any locally finite, translation invariant measure \(\Lambda\) on \([\mathbb {R}^2]\) we have a unique measure \(\mathcal {R}\) on \([\mathbb {R}^2]_0\), called the directional measure, that allows the decomposition

$$\int _{[\mathbb {R}^2]} g(L)\, \Lambda (\mathrm{d}L) = \int _{[\mathbb {R}^2]_0} \int _{L_0^\perp } g(L_0+x)\, \mathrm{d}x\, \mathcal {R}(\mathrm{d}L_0)$$

for any non-negative measurable function \(g: [\mathbb {R}^2] \rightarrow \mathbb {R}\), see (Schneider and Weil 2008, Theorem 4.4.1). The normalization of \(\mathcal {R}\) is referred to as the directional distribution. Without loss of generality we assume that \(\mathcal {R}\) itself is already a probability measure.

2.2 Set-up

For a weight parameter \(p\in (0,1)\) we consider the measure \(\Lambda _p\) on the space \([\mathbb {R}^2]\) of lines of the following form:

where \(\{e_1,e_2\}\) is the standard orthonormal basis in \(\mathbb {R}^2\), \(E_i=\mathrm{span}(e_i)\) for \(i\in \{1,2\}\) and we integrate with respect to the Lebesgue measure. By a Mondrian tessellation of some convex polygon \(W \subset \mathbb {R}^2\) with weight \(p\in (0,1)\) and time parameter \(t>0\) we understand a planar STIT tessellation \(Y_t(W)\) with driving measure \(\Lambda _p\). Given a polygon \(W \subset \mathbb {R}^2\) and a Mondrian tessellation \(Y_t(W)\), \(t >0\), together with a bounded, measurable functional \(\phi\) of tessellation edges or line segments, respectively, we define the functionals \(\Sigma _\phi\) and \(A_\phi\) by

and

where \(Y_t(W) \cap L\) for \(L\in [W]\) is a one-dimensional tessellation of \(W\cap L\), whose cells are simply the associated segments (intervals) of the tessellation. In the focus of the present paper are the following special cases:

(i) taking \(\phi \equiv 1\), \(\Sigma _\phi (Y_t(W))\) reduces to the number of maximal edges of \(Y_t(W)\);

(ii) taking \(\phi (e)=\Lambda _p([e])\), \(\Sigma _\phi (Y_t(W))\) is the weighted total edge length of \(Y_t(W)\), where all maximal edges parallel to the \(e_1\) direction are weighted by the factor p and the maximal edges parallel to the \(e_2\) direction with a factor \(1-p\). In the following we write \(\Sigma _{\Lambda _p}\) and \(A_{\Lambda _p}\) instead of \(\Sigma _{\Lambda _p([\cdot ])}\) and \(A_{\Lambda _p([\cdot ])}\) for notational brevity.

We note that property (ii) follows from the observation that, for a measurable set \(A\subset \mathbb {R}^2\),

where for a line \(E\in [\mathbb {R}^2]\) we write \(\Pi _E\) for the orthogonal projection onto E. The motivation for considering weighted edge lengths (instead of non-weighted ones) is as follows. A general directional distribution can be considered as an even probability measure on the unit circle and is thus the surface area measure of a uniquely determined symmetric planar convex set, the so-called associated zonoid (see (Schneider and Weil 2008, Section 4.6)). The latter induces a norm on \(\mathbb {R}^2\), which is naturally adapted to the given directional distribution. In our case, the associated zonoid is a rectangle with edge lengths p and \(1-p\), and the weighted lengths we consider are just the lengths measured according to the norm induced by this rectangle. To be consistent with the existing literature and to allow a comparison of our results with those for the isotropic case, we consider the weighted version of the edge length in Section 2.3, whereas in Section 2.4 we concentrate on the non-weighted case.

2.3 Variances

We assume the same set-up as described in the previous section and recall the definition of \(\Sigma _\phi\) from (2.2). The expected values \(\mathbb {E}\Sigma _\phi\) for \(\phi \equiv 1\) and \(\phi (\cdot )=\Lambda _p([\,\cdot \,])\) are known from (Schreiber and Thäle 2010, Section 2.3) and given as follows.

Proposition 2.1

Consider a Mondrian tessellation \(Y_t(W)\) with weight \(p\in (0,1)\). Then

and

For rectangular windows \(W=[-a,a] \times [-b,b]\), \(a,b >0\), these expressions simplify to

and

Remark 2.2

For the non-weighted edge length one has that \(\mathbb {E}[\Sigma (Y_t(W))] = t\ell _2(W)\) as follows from (Nagel and Weiss 2005, Lemma 3) or (Schreiber and Thäle 2010, Eq. (8)), for example.
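The identity in Remark 2.2 lends itself to a quick Monte Carlo sanity check on a rectangle. The sketch below is illustrative only: the helper names are hypothetical, and the assignment of weight p to horizontal and \(1-p\) to vertical cutting lines is an assumption (the expected non-weighted length \(t\ell _2(W)\) should not depend on that choice, since the two weights sum to one).

```python
import random

def total_edge_length(width, height, t, p, rng):
    """Non-weighted total edge length of one Mondrian sample in a rectangle."""
    total = 0.0
    stack = [(width, height, 0.0)]  # cells stored as (width, height, birth time)
    while stack:
        w, h, birth = stack.pop()
        rate = p * h + (1.0 - p) * w  # measure of lines hitting the cell (assumed convention)
        if rate <= 0.0:
            continue
        split_time = birth + rng.expovariate(rate)
        if split_time > t:
            continue  # cell is not split before time t
        u = rng.random()  # relative position of the cut
        if rng.random() < p * h / rate:
            total += w  # horizontal cut: the new edge has length w
            stack += [(w, u * h, split_time), (w, (1.0 - u) * h, split_time)]
        else:
            total += h  # vertical cut: the new edge has length h
            stack += [(u * w, h, split_time), ((1.0 - u) * w, h, split_time)]
    return total

def mean_total_edge_length(width, height, t, p, n_samples, seed=0):
    """Average the total edge length over independent samples."""
    rng = random.Random(seed)
    draws = (total_edge_length(width, height, t, p, rng) for _ in range(n_samples))
    return sum(draws) / n_samples

# Window of area 4 and t = 1: the estimate should be close to t * area = 4.
estimate = mean_total_edge_length(2.0, 2.0, t=1.0, p=0.3, n_samples=4000, seed=1)
```

Only cell dimensions are tracked because the total length does not depend on where the cells sit inside the window.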

We now turn to the main result of this section, which provides a fully explicit second-order description of the functionals \(\Sigma _1\) and \(\Sigma _{\Lambda _p}\). We remark that for general STIT tessellations in the plane, abstract formulas have already been developed in (Schreiber and Thäle 2010, Theorem 1) and they have been used in the isotropic case. However, specializing them explicitly to Mondrian tessellations is a non-trivial task.

Theorem 2.3

Take \(W=[-a,a]\times [-b,b]\), \(a,b >0\), and let \(Y_t(W)\) be a Mondrian tessellation with weight \(p\in (0,1)\).

(i) The variance of the weighted total edge length \(\Sigma _{\Lambda _p}\) is given by

$$\begin{aligned} {\text {Var}}(\Sigma _{\Lambda _p}(Y_t(W)))=-8abp(1-p)\Big ((1-p) g_1(2at(1-p)) + p g_1(2btp) \Big ) \end{aligned}$$

with \(g_1(x)=\sum _{k=1}^\infty (-1)^k \frac{x^k}{k(k+1)!}\) non-positive and monotonically decreasing on \([0, \infty )\).

(ii) The variance of the number of maximal edges \(\Sigma _{1}\) of \(Y_t(W)\) in W is given by

$$\begin{aligned}{\text {Var}}(\Sigma _{1}(Y_t(W)))&=2tbp+2ta(1-p)+12abt^2p(1-p) \\&\quad - 16ab t^2p(1-p)\,\Big ((1-p) g_2(2a(1-p)t)+p g_2(2btp)\Big ) \end{aligned}$$

with \(g_2(x)= \sum _{k=1}^{\infty }(-1)^k \frac{x^k }{k(k+1)(k+2)!}\).

(iii) The covariance of the two functionals in (i) and (ii) is given by

$$\begin{aligned}& {\text {Cov}}(\Sigma _{\Lambda _p}(Y_t(W)),\Sigma _{1}(Y_t(W)))\\&= 8tab p(1-p) \Big (1 -\big [(1-p)g_3(2at(1-p))+pg_3(2btp)\big ]\Big ) \end{aligned}$$

with \(g_3(x)= \sum _{k=1}^{\infty }(-1)^k \frac{x^k }{k(k+1)(k+1)!}\).
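The three series and the formulas of Theorem 2.3 are straightforward to evaluate numerically. The following Python sketch is a direct transcription of the formulas as stated; the function names are hypothetical, and the plain floating-point summation is an assumption that is only adequate for moderate arguments (roughly \(x\le 30\)), since the alternating terms cancel heavily for large x.

```python
import math

def _alternating_series(x, denom):
    """Sum over k >= 1 of (-x)^k / (k! * denom(k)), in floating point."""
    total, r, k = 0.0, 1.0, 0
    while True:
        k += 1
        r *= -x / k  # r now equals (-x)^k / k!
        total += r / denom(k)
        if k > x and abs(r) < 1e-18:
            return total

def g1(x):
    # k * (k+1)! = k! * k * (k+1)
    return _alternating_series(x, lambda k: k * (k + 1))

def g2(x):
    # k * (k+1) * (k+2)! = k! * k * (k+1)^2 * (k+2)
    return _alternating_series(x, lambda k: k * (k + 1) ** 2 * (k + 2))

def g3(x):
    # k * (k+1) * (k+1)! = k! * k * (k+1)^2
    return _alternating_series(x, lambda k: k * (k + 1) ** 2)

def var_weighted_edge_length(a, b, t, p):
    """Formula of Theorem 2.3 (i) for W = [-a, a] x [-b, b]."""
    q = 1.0 - p
    return -8.0 * a * b * p * q * (q * g1(2 * a * t * q) + p * g1(2 * b * t * p))

def var_number_of_edges(a, b, t, p):
    """Formula of Theorem 2.3 (ii)."""
    q = 1.0 - p
    lead = 2 * t * b * p + 2 * t * a * q + 12 * a * b * t**2 * p * q
    return lead - 16 * a * b * t**2 * p * q * (q * g2(2 * a * q * t) + p * g2(2 * b * t * p))

def cov_length_and_number(a, b, t, p):
    """Formula of Theorem 2.3 (iii)."""
    q = 1.0 - p
    return 8 * t * a * b * p * q * (1.0 - (q * g3(2 * a * t * q) + p * g3(2 * b * t * p)))
```

For large windows or time parameters a higher-precision summation would be the safer choice.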

Next, we specialize Theorem 2.3 to the case where \(W=Q_r:=[-r,r]^2\) is a square with side length \(2r>0\) and consider the behaviour of the (co-)variances, as \(r\rightarrow \infty\). In what follows we write \(f(r)\asymp g(r)\) for two functions \(f,g:[0,\infty )\rightarrow \mathbb {R}\), whenever \(f(r)/g(r)\rightarrow 1\), as \(r\rightarrow \infty\).

Corollary 2.4

Let \(Q_r=[-r,r]^2\) be a square and consider a Mondrian tessellation \(Y_t(Q_r)\) with weight \(p\in (0,1)\). Then, as \(r\rightarrow \infty\),

and

Remark 2.5

It is instructive to compare this result to the corresponding asymptotic formulas for isotropic STIT tessellations in the plane and the rectangular Poisson line process. We therefore denote by \(\Lambda _\mathrm{iso }\) the isometry invariant measure on the space of lines in the plane normalized in such a way that \(\Lambda _\mathrm{iso}([[0,1]^2])={4\over \pi }\) (this is the same normalization as the one used in Schneider and Weil (2008)).

(i) For isotropic STIT tessellations it is known from (Schreiber and Thäle 2010, Section 3.4) in combination with (Schreiber and Thäle 2012, Theorem 3) that, for example,

$$\begin{aligned} {\text {Var}}(\Sigma _{\Lambda _\mathrm{iso }}(Y_t(B_r^2))) \asymp {16\over \pi }r^2\log (r)\quad \text {and}\quad {\text {Var}}(\Sigma _{1}(Y_t(B_r^2))) \asymp {16\over \pi }t^2r^2\log (r) \end{aligned}$$

for a disc \(B_r^2\) of radius \(r>0\), as \(r\rightarrow \infty\). In addition, it can be concluded from (Schreiber and Thäle 2010, Theorem 1) that

$$\begin{aligned} {\text {Cov}}(\Sigma _{\Lambda _\mathrm{iso}}(Y_t(B_r^2)), \Sigma _{1}(Y_t(B_r^2)))\asymp {16\over \pi }tr^2\log (r). \end{aligned}$$

(ii) For the rectangular Poisson line process we consider a stationary Poisson line process \(\eta _p\) in the plane with intensity measure \(t\Lambda _p\), \(t>0\). For the total weighted edge length \(\Sigma _{\Lambda _p}(Q_r)\) and the number of edges \(\Sigma _1(Q_r)\) in a square \(Q_r=[-r,r]^2\) with side length \(2r > 0\) one has

$${\text {Var}}(\Sigma _{\Lambda _p}(Q_r)) = 4tp(1-p)r^3$$

and

$$\begin{aligned}{\text {Var}}(\Sigma _1(Q_r)) =&\ t^3(2p(1-p)^2r^3+2p^2(1-p)r^3)\\&+2t^2p(1-p)r^2 \asymp 2t^3r^3p(1-p).\end{aligned}$$

2.4 Correlation Function

In Schreiber and Thäle (2010) an explicit description of the pair-correlation function of the vertex point process of an isotropic planar STIT tessellation has been derived, while such a description for the random edge length measure can be found in Schreiber and Thäle (2012). Also the so-called cross-correlation function between the vertex process and the random length measure was computed in Schreiber and Thäle (2010). In the present paper we develop similar results for planar Mondrian tessellations. To define the necessary concepts, we suitably adapt the notions used in the isotropic case. We let \(Y_t\) be a weighted Mondrian tessellation of \(\mathbb {R}^2\) with weight \(p\in (0,1)\) and time parameter \(t>0\), define \(R_p:=[0,1-p]\times [0,p]\) and let \(R_{r,\,p}:=rR_p\) be the rescaled rectangle with side lengths \(r(1-p)\) and rp. In the spirit of Ripley’s K-function widely used in spatial statistics Stoyan et al. (1995), we let \(t^2K_\mathcal{E}(r)\) be the total edge length of \(Y_t\) in \(R_{r,\,p}\) when \(Y_t\) is regarded under the Palm distribution with respect to the random edge length measure \(\mathcal {E}_t\) concentrated on the edge skeleton. On an intuitive level the latter means that we condition on the origin being a typical point of the edge skeleton, see (Stoyan et al. 1995, Section 4.5). The classic version of Ripley’s K-function considers a ball of radius \(r>0\), but since our driving measure is non-isotropic, we account for that by considering \(R_{r,\,p}\) instead (compare also with the discussion at the end of Section 2.2). Similarly, we let \(\lambda^2 K_\mathcal{V}(r)\) be the total number of vertices of \(Y_t\) in \(R_{r,\,p}\), where \(\lambda =t^2p(1-p)\) stands for the vertex intensity of \(Y_t\) and where we again condition on the origin being a typical vertex of the tessellation (in the sense of the Palm distribution with respect to the random vertex point process). 
While these functions still have a complicated form (which we will determine in the course of our arguments below), we consider their normalized derivatives – provided these derivatives are well defined as it is the case for us. In the isotropic case, these are known as the pair-correlation functions of the random edge length measure or the vertex point process, respectively. In our case, the following normalization turns out to be most suitable:

$$g_\mathcal {E}(r) := \frac{1}{2p(1-p)r}\,\frac{\mathrm{d}K_\mathcal {E}(r)}{\mathrm{d}r}\quad \text {and}\quad g_\mathcal {V}(r) := \frac{1}{2p(1-p)r}\,\frac{\mathrm{d}K_\mathcal {V}(r)}{\mathrm{d}r},$$

where we suppress the dependence of \(g_\mathcal {E}\) and \(g_\mathcal {V}\) on \(t>0\) for notational brevity. In contrast to the classical pair-correlation function we normalize by the factor \(2p(1-p)r\) instead of \(2\pi r\), as we use the adaptation of Ripley’s K-function based on \(R_{r,\,p}\) instead of a ball of radius r. The normalized derivatives above are what we refer to as the Mondrian pair-correlation function of \(\mathcal {E}_t\) and \(\mathcal {V}_t\), respectively. Similarly to the isotropic case, one can also define what is called a cross K-function \(K_{\mathcal {E},\,\mathcal {V}}(r)\) and the corresponding correlation function

$$g_{\mathcal {E},\,\mathcal {V}}(r) := \frac{1}{2p(1-p)r}\,\frac{\mathrm{d}K_{\mathcal {E},\,\mathcal {V}}(r)}{\mathrm{d}r},$$

again suppressing the dependence of \(g_{\mathcal {E},\,\mathcal {V}}\) on \(t>0\) and refer to Eq. (6.6) below for a formal description. Since it is also based on \(R_{r,\,p}\) instead of a ball of radius \(r>0\), we call it the Mondrian cross-correlation function of \(\mathcal {E}_t\) and \(\mathcal {V}_t\). We are now prepared for the presentation of the main results of this section. We start with the Mondrian edge pair-correlation function \(g_\mathcal {E}(r)\).

Theorem 2.6

Let \(Y_t\) be a Mondrian tessellation with weight \(p\in (0,1)\) and time parameter \(t>0\). Then

This result can be considered as the Mondrian counterpart to (Schreiber and Thäle 2012, Theorem 7.1), while the next theorem for the Mondrian cross-correlation function \(g_{\mathcal {E},\,\mathcal {V}}(r)\) is the analogue of (Schreiber and Thäle 2010, Corollary 4).

Theorem 2.7

Let \(Y_t\) be a Mondrian tessellation with weight \(p\in (0,1)\) and time parameter \(t>0\). Then

Finally, we deal with the Mondrian vertex pair-correlation function \(g_\mathcal {V}(r)\), which in the isotropic case has been determined in (Schreiber and Thäle 2010, Corollary 3). Plots for the functions \(g_\mathcal {E}(r), g_{\mathcal {E},\,\mathcal {V}}(r),\) and \(g_\mathcal {V}(r)\) for \(t=1\) and different weights \(p \in \{0.5, 0.75, 0.9\}\) are shown in Fig. 3.

Theorem 2.8

Let \(Y_t\) be a Mondrian tessellation with weight \(p\in (0,1)\) and time parameter \(t>0\). Then

Remark 2.9

Again, we compare our result with the correlation functions for isotropic STIT tessellations in the plane and the rectangular Poisson line process.

(i) In the isotropic case Schreiber and Thäle showed in Schreiber and Thäle (2013) that the pair-correlation function of the random edge length measure \(\mathcal {E}_{t}\) has the form

$$\begin{aligned} g_\mathcal {E}(r) = 1 + \frac{1}{2t^2 r^2}\Big (1-e^{-\frac{2}{\pi } tr}\Big ). \end{aligned}$$

In Schreiber and Thäle (2012) the same authors showed that the pair-correlation function of the vertex point process \(\mathcal {V}_t\) and the cross-correlation function of the random edge length measure and the vertex point process are given by

$$\begin{aligned} g_{\mathcal {E},\,\mathcal {V}}(r) = 1+ \frac{1}{t^2 r^2}-\frac{\pi }{4t^3r^3}- \frac{e^{-\frac{2}{\pi }tr}}{2t^2r^2}\Big (1-\frac{\pi }{2tr}\Big ) \end{aligned}$$

and

$$\begin{aligned} g_\mathcal {V}(r) = 1 + \frac{2}{t^2r^2} - \frac{\pi }{t^3r^3} + \frac{\pi ^2}{4t^4r^4} - \frac{e^{-\frac{2}{\pi }tr}}{2t^2r^2} \Big ( 1 - \frac{\pi }{tr} + \frac{\pi ^2}{2t^2r^2} \Big ). \end{aligned}$$

(ii) For the rectangular Poisson line process as given in Remark 2.5 one can use the theorem of Slivnyak-Mecke (see for example (Stoyan et al. 1995, Example 4.3)) to deduce that the corresponding analogues of the cross- and pair-correlation functions are given by

$$g_\mathcal {E}(r) = 1 + \frac{1}{tr},\quad g_{\mathcal {E},\,\mathcal {V}}(r) = 1 + \frac{1}{4trp(1-p)}\quad \text {and}\quad g_\mathcal {V}(r)=1 + \frac{1}{2tr p^2(1-p)^2 }.$$
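To get a feeling for the comparison formulas in Remark 2.9, they can be evaluated directly. The Python sketch below simply transcribes the isotropic and rectangular Poisson expressions stated above; all function names are hypothetical. The isotropic expressions suffer from numerical cancellation for very small \(tr\), so the sketch is meant for moderate and large arguments.

```python
import math

def iso_g_edge(r, t=1.0):
    """Isotropic STIT edge pair-correlation function from Remark 2.9 (i)."""
    u = t * r
    return 1.0 + (1.0 - math.exp(-2.0 * u / math.pi)) / (2.0 * u**2)

def iso_g_cross(r, t=1.0):
    """Isotropic STIT cross-correlation function from Remark 2.9 (i)."""
    u = t * r
    decay = math.exp(-2.0 * u / math.pi)
    return (1.0 + 1.0 / u**2 - math.pi / (4.0 * u**3)
            - decay / (2.0 * u**2) * (1.0 - math.pi / (2.0 * u)))

def iso_g_vertex(r, t=1.0):
    """Isotropic STIT vertex pair-correlation function from Remark 2.9 (i)."""
    u = t * r
    decay = math.exp(-2.0 * u / math.pi)
    return (1.0 + 2.0 / u**2 - math.pi / u**3 + math.pi**2 / (4.0 * u**4)
            - decay / (2.0 * u**2) * (1.0 - math.pi / u + math.pi**2 / (2.0 * u**2)))

def poisson_g_edge(r, t=1.0):
    """Rectangular Poisson line process analogue from Remark 2.9 (ii)."""
    return 1.0 + 1.0 / (t * r)

def poisson_g_cross(r, p, t=1.0):
    return 1.0 + 1.0 / (4.0 * t * r * p * (1.0 - p))

def poisson_g_vertex(r, p, t=1.0):
    return 1.0 + 1.0 / (2.0 * t * r * p**2 * (1.0 - p) ** 2)
```

All six functions tend to 1 as \(r\rightarrow \infty\); the isotropic STIT expressions approach 1 at rate \(r^{-2}\), whereas the Poisson analogues do so only at rate \(r^{-1}\).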

3 Variance Calculations for Mondrian Tessellations

3.1 The Point Intersection Measure and Expectations

First and second order properties of \(Y_t(W)\) are typically accessible to us via the functionals \(\Sigma _\phi\) and \(A_\phi\) as given in (2.2) and (2.3) for specific choices of \(\phi\) by means of martingale results from (Schreiber and Thäle 2010, Proposition 1). In what follows we concentrate on the two specific cases \(\phi \equiv 1\) and \(\phi (\,\cdot \,)=\Lambda _p([\,\cdot \,])\) and define the two measures that we need to formulate expectations and variances. The first is the so-called point intersection measure on \(\mathbb {R}^2\) given by

which is the main tool in the proof of Proposition 2.1.

Proof of Proposition 2.1

From the results in (Schreiber and Thäle 2010, Section 2.3) we conclude that

Since for measurable \(A \subset \mathbb {R}^2\) one has that

it follows from (3.1) that

and by also using (2.4)

The expression for \(W=[-a,a] \times [-b,b]\) with \(a,b >0\) is now straightforward. \(\square\)

3.2 The Segment Intersection Measure and Variances

In a next step, we introduce the segment intersection measure on the space of line segments \(\mathcal {S}(\mathbb {R}^2)\) by

where \(\delta _x\) denotes the Dirac measure at a point \(x\in \mathbb {R}^2\). For a measurable \(A \subset \mathbb {R}^2\) we obtain that \(\langle \!\langle (\Lambda _p\times \Lambda _p)\cap \Lambda _p\rangle \!\rangle (\mathcal {S}(A))\) equals

In order for \((E_1 + \sigma e_2) \cap L_i\) and \((E_2 + \sigma e_1) \cap L_i, \, i \in \{1,2\},\) to be non-empty, the lines L and \(L_i\) have to be perpendicular, i.e., for \(i\in \{1,2\}\) we either have

(i) \((E_1 + \sigma e_2) \cap L_i = (E_1 + \sigma e_2) \cap (E_2 + \tau _i e_1) = (\tau _i, \sigma )\) for some \(\tau _i \in \mathbb {R}\), or

(ii) \((E_2 + \sigma e_1) \cap L_i = (E_2 + \sigma e_1) \cap (E_1 + \tau _i e_2) = (\sigma , \tau _i)\) for some \(\tau _i \in \mathbb {R}\).

This leads to

Having the point and segment intersection measures at hand, we now proceed to prove Theorem 2.3.

Proof of Theorem 2.3

Applying (Schreiber and Thäle 2010, Theorem 1), we combine Eqs. (13) and (14) in Schreiber and Thäle (2010) to obtain that \({\text {Var}}(\Sigma _{\Lambda _p}(Y_t(W)))\) is the same as

by Fubini’s theorem. As outlined above, in order for \(\Lambda _{p}([L(L_1,L_2)])\) to be non-zero we need

for some \(\sigma \in \Pi _{E_{j}}(W)\) and \(\tau , \vartheta \in \Pi _{E_{i}}(W)\), \(i,j \in \{1,2\}\) with \(i \ne j\). In the integral above we then have

for \(L=E_1+\sigma e_2\), \(L_1=E_2+\tau e_1 \text { and } L_2=E_2+\vartheta e_1\) and

if \(L=E_2+\sigma e_1\), \(L_1=E_1+\tau e_2 \text { and } L_2=E_1+\vartheta e_2 .\) Hence,

by Fubini’s theorem. For the inner integrals we obtain

and

respectively. Furthermore,

and

Recalling the definition of \(g_1(x)\) from the statement of Theorem 2.3 (i) proves the result. We now turn to the variance of \(\Sigma _1(Y_t(W))\) in Theorem 2.3 (ii). Combining Proposition 1 and Eq. (12) in Schreiber and Thäle (2010) gives

Furthermore, Eq. (8) in Schreiber and Thäle (2010) shows that

Equations (2.4) and (3.3) now yield

For the last term we use Theorem 2.3 (i) to see that

Now,

and similarly

It follows that

proving the second claim. To deal with the covariance in Theorem 2.3 (iii), we use (Schreiber and Thäle 2010, Eq. (11)) in combination with (3.7) to see that

Proceeding as above, we get

which in combination with (3.3) yields the result. \(\square\)

Proof of Corollary 2.4

One can check that \(g_{1}(x)\asymp -\log (x)\), \(g_{2}(x) \asymp - \frac{1}{2} \log (x)\) and \(g_{3}(x) \asymp -\log (x)\), as \(x\rightarrow \infty\). The result then follows from Theorem 2.3. \(\square\)
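As a brief check of the first of these asymptotics (a sketch added for illustration, using only the series from Theorem 2.3 (i)), one can differentiate \(g_1\) termwise and resum the exponential series:

$$\begin{aligned} g_1'(x) = \sum _{k=1}^\infty (-1)^k \frac{x^{k-1}}{(k+1)!} = -\frac{1}{x^2}\sum _{m=2}^\infty \frac{(-x)^{m}}{m!} = -\frac{e^{-x}-1+x}{x^2}. \end{aligned}$$

Hence \(x\,g_1'(x)\rightarrow -1\) as \(x\rightarrow \infty\), and L'Hôpital's rule gives \(g_1(x)/\log (x)\rightarrow -1\). The same formula shows that \(g_1'\le 0\) on \((0,\infty )\), in line with the monotonicity stated in Theorem 2.3 (i). The functions \(g_2\) and \(g_3\) can be treated by the same differentiate-and-resum argument.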

4 Auxiliary Computations

In order to prove our results on the Mondrian pair- and cross-correlation functions, we will use results for a more general setting provided in (Schreiber and Thäle 2010, Section 3). The subsequent section gives the auxiliary computations necessary to adapt these results to our set-up of Mondrian tessellations with weighted axis-parallel cutting directions. We start with some general observations for rectangular STIT tessellations that we will use repeatedly throughout the proofs. For a segment e of a STIT tessellation \(Y_t\) on \(\mathbb {R}^2\) we introduce the measure

where we denote by Vertices(e) the set of all vertices in the relative interior of the maximal edge e. Furthermore, for \(e \in Y_t\) we define the measure

on \(\mathbb {R}^2\) (cf. (Schreiber and Thäle 2010, Section 3.2)). Also, for bounded measurable functions \(f :\mathbb {R}^2\rightarrow \mathbb {R}\) with bounded support and \(t>0\) we define the integrals

and

for integers \(n\ge 2\). We now look at the measures defined in (4.1) and (4.2) in our set-up. Since we are dealing with rectangular tessellations, the endpoints of any segment need to coincide in one coordinate, i.e., segments parallel to \(E_1\) have endpoints \((\tau , \sigma )\) and \((\omega , \sigma )\), while endpoints of segments parallel to \(E_2\) are of the form \((\sigma ,\tau )\) and \((\sigma , \omega )\). For ease of notation we will write \(\overline{(\tau \omega )}_\sigma\) for segments of the first and \({_\sigma }{\overline{(\tau \omega )} }\) for segments of the second kind. We now see that

and

We can now use (4.5) and (4.7) to simplify the expression in (Schreiber and Thäle 2010, Theorem 3). To do so, the lemma below gives simplified expressions for integrals over product measures where one or both of the measures is a Dirac measure or the 1-dimensional Lebesgue measure of the intersection of a line segment with a subset of \(\mathbb {R}^2\).

Lemma 4.1

Let \(A, B \in \mathfrak {B}(\mathbb {R}^2)\).

(i) For any \(q \in [0,1 ]\) and \(j \in \mathbb {N}\) we have

$$\begin{aligned}&\int _{\mathbb {R}} \int _{\mathbb {R}} \int _{\mathbb {R}}\big (\delta _{(\tau ,\,\sigma )}\otimes \ell _1(\,\cdot \cap \overline{(\tau \vartheta )}_\sigma )\big )(A \times B) \, \mathcal {I}^{j}\big (s^2 \exp (-s q|\tau -\vartheta |);\ t\big )\ \mathrm{d}\vartheta \, \mathrm{d}\tau \, \mathrm{d}\sigma \\&=\int _{\mathbb {R}^2} \delta _{w}(A)\int _{\mathbb {R}}\, \ell _1\big ((B-w)\cap \overline{(0 z)}_0\big )\, \mathcal {I}^{j}\big (s^2 \exp (-s q|z|);\ t\big ) \,\mathrm{d}z \, \mathrm{d}w \end{aligned}$$

and

$$\begin{aligned}&\int _{\mathbb {R}} \int _{\mathbb {R}} \int _{\mathbb {R}}\big (\delta _{(\sigma ,\, \tau )}\otimes \ell _1(\,\cdot \cap {_\sigma }{\overline{(\tau \vartheta )}})\big )(A \times B) \,\mathcal {I}^{j}\big (s^2 \exp (-s q|\tau -\vartheta |);\ t\big )\ \mathrm{d}\vartheta \, \mathrm{d}\tau \, \mathrm{d}\sigma \\&=\int _{\mathbb {R}^2} \delta _{w}(A)\int _{\mathbb {R}}\ell _1\big ((B-w)\cap {_0}{\overline{(0z)}}\big )\, \mathcal {I}^{j}\big (s^2 \exp (-s q|z |);\ t\big ) \, \mathrm{d}z \, \mathrm{d}w. \end{aligned}$$

(ii) For any \(q \in [0,1 ]\) and \(j \in \mathbb {N}\) we have

$$\begin{aligned}&\int _{\mathbb {R}} \int _{\mathbb {R}} \int _{\mathbb {R}}\big (\ell _1(\,\cdot \cap \overline{(\tau \vartheta )}_\sigma )\otimes \ell _1(\,\cdot \cap \overline{(\tau \vartheta )}_\sigma )\big )(A \times B)\, \mathcal {I}^j\big (s^2 \exp (-s q|\tau -\vartheta |);\ t\big )\ \mathrm{d}\vartheta \, \mathrm{d}\tau \, \mathrm{d}\sigma \\&= \int _{\mathbb {R}^2} \delta _{w}(A) \int _{\mathbb {R}} \Big (\int _{ \overline{(0z)}_0} \int _{ \overline{(0z)}_0} \delta _{y-x}(B-w)\, \mathrm{d}x \, \mathrm{d}y\Big )\, \mathcal {I}^j\big (s^2 \exp (-sq|z|);\ t\big )\, \mathrm{d}z \, \mathrm{d}w \end{aligned}$$

and

$$\begin{aligned}&\int _{\mathbb {R}} \int _{\mathbb {R}} \int _{\mathbb {R}}\big (\ell _1(\,\cdot \cap {_\sigma }{\overline{(\tau \vartheta )}}) \otimes \ell _1(\,\cdot \cap {_\sigma }{\overline{(\tau \vartheta )}})\big )(A \times B)\, \mathcal {I}^j\big (s^2 \exp (-s q|\tau -\vartheta |);\ t\big )\ \mathrm{d}\vartheta \, \mathrm{d}\tau \, \mathrm{d}\sigma \\&= \int _{\mathbb {R}^2} \delta _{w}(A) \int _{\mathbb {R}} \Big (\int _{ {_0}{\overline{(0z)}}} \int _{ {_0}{\overline{(0z)}}} \delta _{y-x}(B-w)\, \mathrm{d}x \, \mathrm{d}y\Big )\,\mathcal {I}^j\big (s^2 \exp (-sq|z|);\ t\big )\, \mathrm{d}z \, \mathrm{d}w. \end{aligned}$$

(iii) For any \(q \in [0,1]\) and \(j \in \mathbb {N}\) we have

$$\begin{aligned}&\int _{\mathbb {R}} \int _{\mathbb {R}} \int _{\mathbb {R}}(\delta _{(\tau ,\,\sigma )}\otimes \delta _{(\tau ,\,\sigma )})(A \times B) \, \mathcal {I}^j\big (s^2 \exp (-s q|\tau -\vartheta |);\ t\big )\ \mathrm{d}\vartheta \, \mathrm{d}\tau \, \mathrm{d}\sigma \\& = \int _{\mathbb {R}^2} \delta _{w}(A) \int _{\mathbb {R}} \delta _{\textbf{0}}(B-w)\,\mathcal {I}^j\big (s^2 \exp (-sq|z|);\ t\big )\, \mathrm{d}z \, \mathrm{d}w, \end{aligned}$$

where \(\textbf{0}:= (0,0)\). Furthermore,

$$\begin{aligned}&\int _{\mathbb {R}} \int _{\mathbb {R}} \int _{\mathbb {R}}(\delta _{(\tau ,\,\sigma )}\otimes \delta _{(\vartheta ,\,\sigma )})(A \times B) \, \mathcal {I}^j\big (s^2 \exp (-s q|\tau -\vartheta |);\ t\big )\ \mathrm{d}\vartheta \, \mathrm{d}\tau \, \mathrm{d}\sigma \\&= \int _{\mathbb {R}^2} \delta _{w}(A) \int _{\mathbb {R}} \delta _{(z,\,0)}(B-w)\,\mathcal {I}^j\big (s^2 \exp (-sq|z|);\ t\big )\, \mathrm{d}z \, \mathrm{d}w \end{aligned}$$

and

$$\begin{aligned}&\int _{\mathbb {R}} \int _{\mathbb {R}} \int _{\mathbb {R}}(\delta _{(\sigma ,\,\tau )}\otimes \delta _{(\sigma ,\,\vartheta )})(A \times B) \, \mathcal {I}^j\big (s^2 \exp (-s q|\tau -\vartheta |);\ t\big )\ \mathrm{d}\vartheta \, \mathrm{d}\tau \, \mathrm{d}\sigma \\&= \int _{\mathbb {R}^2} \delta _{w}(A) \int _{\mathbb {R}} \delta _{(0,\,z)}(B-w)\,\mathcal {I}^j\big (s^2 \exp (-sq|z|);\ t\big )\, \mathrm{d}z \, \mathrm{d}w. \end{aligned}$$

Proof

In parts (i) and (ii) both cases can be shown with similar arguments, so that we will only prove one of them at a time. In part (iii) this holds true for the second and third claim, so that we will prove the first and second equality here. To prove (i), note that \(\ell _1(B\cap \overline{(\tau \vartheta )}_\sigma )=\ell _1(B-(\tau ,\sigma )\cap \overline{(0 (\vartheta -\tau ))}_0)\) for any \(\tau , \vartheta , \sigma \in \mathbb {R}\), so that

Substituting \(z=\vartheta -\tau\) we obtain that the above is equal to

which shows (i) by putting \(\omega =(\tau ,\sigma )\). To prove part (ii), note that

where we substituted \(z=\vartheta -\tau\) and put \((\tau ,\sigma )=w\). Substituting \(\tilde{w}=w+x\) we end up with

which proves the first equality in (ii). We now turn to the proof of the first part of item (iii). Substituting \(z=\vartheta -\tau\) and setting \(w:= (\tau , \sigma )\) we obtain

Using the same substitution as above we get

for the second term in (iii). Since the third expression can be handled in an analogous way, this completes the proof. \(\square\)
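The substitution \(z=\vartheta -\tau\) used throughout this proof can be sanity-checked numerically. The following Python sketch compares midpoint Riemann sums for both sides of the second identity in (iii). It is only an illustration under stated assumptions: the kernel `h` is a stand-in for \(z \mapsto \mathcal {I}^j\big (s^2 \exp (-sq|z|);\ t\big )\), the rectangles \(A\) and \(B\) are chosen arbitrarily, and all helper names are hypothetical.

```python
import math

# Assumption: stand-in for the kernel z -> I^j(s^2 exp(-s q |z|); t).
def h(z):
    return math.exp(-abs(z))

# Hypothetical test sets A = [0,1] x [0,1] and B = [0.5,2] x [0.25,0.75].
def in_A(x1, x2):
    return 0.0 <= x1 <= 1.0 and 0.0 <= x2 <= 1.0

def in_B(x1, x2):
    return 0.5 <= x1 <= 2.0 and 0.25 <= x2 <= 0.75

def midpoint_sum(f, bounds, counts):
    """Midpoint Riemann sum of f over a three-dimensional box."""
    (a0, b0), (a1, b1), (a2, b2) = bounds
    n0, n1, n2 = counts
    h0, h1, h2 = (b0 - a0) / n0, (b1 - a1) / n1, (b2 - a2) / n2
    total = 0.0
    for i in range(n0):
        x = a0 + (i + 0.5) * h0
        for j in range(n1):
            y = a1 + (j + 0.5) * h1
            for k in range(n2):
                total += f(x, y, a2 + (k + 0.5) * h2)
    return total * h0 * h1 * h2

def lhs_integrand(tau, sigma, theta):
    # delta_{(tau,sigma)}(A) * delta_{(theta,sigma)}(B) * h(tau - theta)
    if in_A(tau, sigma) and in_B(theta, sigma):
        return h(tau - theta)
    return 0.0

def rhs_integrand(w1, w2, z):
    # delta_w(A) * delta_{(z,0)}(B - w) * h(z), i.e. w in A and w + (z,0) in B
    if in_A(w1, w2) and in_B(w1 + z, w2):
        return h(z)
    return 0.0

# Integration boxes are large enough to contain the supports of both integrands.
lhs = midpoint_sum(lhs_integrand, [(0, 2), (0, 2), (0, 2)], (40, 40, 40))
rhs = midpoint_sum(rhs_integrand, [(0, 2), (0, 2), (-2, 2)], (40, 40, 401))
print(lhs, rhs)
```

For this particular choice of \(A\), \(B\) and `h` the common value can be computed by hand and is roughly 0.384; the two sums agree with it up to discretisation error. The check says nothing about the actual functions \(\mathcal {I}^j\), only about the change of variables.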

Recall that in order to deduce pair- and cross-correlation functions of the vertex and the random edge length process we will observe a weighted planar Mondrian tessellation \(Y_t(W)\) in a growing window \(rR_p\), where \(R_p=[0,1-p] \times [0,p]\). It is then straightforward to see that, for \(z > 0\), we have

Furthermore, it holds that for \(z \le 0\) we have \(\ell _1(rR_p \cap \overline{(0z)}_0)=\ell _1(rR_p \cap \overline{{_0}{(0z)}})=0\) and

as well as

Lemma 4.2

Take \(R_{r,\,p}=[0,u_{1}(r,p)]\times [0, u_2(r,p)]\) with non-negative functions \(u_1,u_2:(0,\infty )\times [0,1]\rightarrow (0,\infty )\). It then holds that

and

Proof

We start by proving the second claim. Note that for \(x,y \in \overline{{_0}{(0z)}}\)

Assume \(z\ge 0\); the case \(z<0\) follows by an analogous argument. For \(z \ge u_2(r,p)\) we have

and for \(z \in [0,u_2(r,p))\) we get

The proof of the first claim works in an analogous way. \(\square\)
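The case distinction between \(z \ge u_2(r,p)\) and \(z \in [0,u_2(r,p))\) can be illustrated with a small Python sketch. It rests on an assumption about the quantity being computed: that for the vertical segment \({_0}{\overline{(0z)}}\) with \(z \ge 0\) the double integral of \(\delta _{y-x}(R_{r,\,p})\) over \(x,y\) reduces to the area of \(\{(x,y)\in [0,z]^2: 0\le y-x\le u_2\}\). Under that reading elementary geometry gives \(z^2/2\) for \(z<u_2\) and \(u_2z-u_2^2/2\) for \(z\ge u_2\). The function names are hypothetical and the closed form below is this elementary computation, not a quotation of the lemma.

```python
def pair_measure_numeric(z, u, n=400):
    """Midpoint-rule estimate of the area of {(x, y) in [0, z]^2 : 0 <= y - x <= u}."""
    step = z / n
    hits = 0
    for i in range(n):
        x = (i + 0.5) * step
        for j in range(n):
            y = (j + 0.5) * step
            if 0.0 <= y - x <= u:
                hits += 1
    return hits * step * step

def pair_measure_closed_form(z, u):
    """Assumed closed form for z >= 0 (elementary area computation)."""
    if z < u:
        return 0.5 * z * z          # the whole upper triangle satisfies y - x <= u
    return u * z - 0.5 * u * u      # upper triangle minus the corner where y - x > u

# One value of z on each side of the threshold u = 1.
for z in (0.5, 2.0):
    print(z, pair_measure_numeric(z, 1.0), pair_measure_closed_form(z, 1.0))
```

The grid estimate carries an error of order \(z^2/n\) because cells on the lines \(y=x\) and \(y-x=u\) are counted in full, so agreement is only expected up to that order.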

To deal with the Mondrian pair- and cross-correlation functions we will need to differentiate terms of the form

and

with respect to r for a differentiable function \(u: \mathbb {R}\times [0,1] \rightarrow \mathbb {R}^+\) and \(k \in \mathbb {N}\). Note that for \(k \ge 1\) we have

and

where \(u'\) denotes the partial derivative of u with respect to the r-coordinate. Combining Lemma 4.2 with Eqs. (4.11) and (4.12) we obtain

Proceeding analogously with the first integral in Lemma 4.2 we obtain

When calculating pair- and cross-correlation functions, we will come across several integrals of functions \(f(z) = z^i \mathcal {I}^j\big (s^2 \exp (-sz);\ t\big )\) for \(i,j \in \{1,2,3\}\). We collect their exact values in the following lemma for easy reference.

Lemma 4.3

Let \(q \in [0,1]\), \(t\in [0, \infty )\), \(s \in [0,t]\), \(j \in \{1,2,3\}\), and let \(\mathcal {I}^j\) be as defined in (4.3) and (4.4). Then it holds that

and, for \(y \ge 0\),

5 Surface Covariance Measure and Edge Pair Correlations

This section aims to show Theorem 2.6. For a weighted planar Mondrian tessellation \(Y_t\) on \(\mathbb {R}^2\) with driving measure \(\Lambda _p\) the covariance measure \({\text {Cov}}(\mathcal {E}_{t})\) of its random edge length measure \(\mathcal {E}_{t}\) is defined as the measure on \(\mathbb {R}^2 \times \mathbb {R}^2\) given by the relation

for bounded and measurable \(f,g:\mathbb {R}^2 \rightarrow \mathbb {R}\) with bounded support, where \(J^f\) is the edge functional

Slightly adapting the calculations in (Schreiber and Thäle 2010, Section 3.3) and using the martingale arguments from (Schreiber and Thäle 2010, Eq. (3)) to calculate expectations for the functional \(A_{J^f J^g}\) as in (2.3), we get

By the definition of \(J^f\), \(J^g\) and the segment intersection measure from (3.4), we obtain the following form for the covariance measure:

Having established the covariance measure of the edge process \(\mathcal {E}_{t}\), we now aim at giving the corresponding pair-correlation function \(g_\mathcal {E}(r)\). In a first step towards this we need to establish the reduced covariance measure \(\widehat{{\text {Cov}}}(\mathcal {E}_{t})\) defined by the relation

for a measurable product \(A\times B\subset \mathbb {R}^2\times \mathbb {R}^2\) (cf. (Daley and Vere-Jones 2003, Corollary 8.1.III)). We now examine the first of the two integral summands in (5.1). Using Lemma 4.1(ii) we see that

Proceeding analogously with the second summand in (5.1) and using the diagonal shift argument from (Daley and Vere-Jones 2003, Corollary 8.1.III), we get the reduced covariance measure \(\widehat{{\text {Cov}}}(\mathcal {E}_{t})\) on \(\mathbb {R}^2\):

Noting that the intensity of the random measure \(\mathcal {E}_{t}\) is just t, see (Schreiber and Thäle 2010, Eq. (8)), we apply Eq. (8.1.6) in Daley and Vere-Jones (2003) to see that the corresponding reduced second moment measure \(\widehat{\mathcal {K}}(\mathcal {E}_{t})\) is

While the classical Ripley’s K-function would be \(t^{-2}\) times the \(\widehat{\mathcal {K}}(\mathcal {E}_{t})\)-measure of a disc of radius \(r>0\), we define our Mondrian analogue as

where \(R_{r,\,p}:=rR_p\) with \(R_p:=[0,1-p] \times [0, p]\) as before. Calculating \(K_{\mathcal {E}}(r)\) explicitly via Lemma 4.2 yields

With (4.13) and (4.14) this gives

The definition of the function \(g_\mathcal {E}\) as given in Section 2.4 together with the integral calculations from Lemma 4.3 then gives the function in the statement of Theorem 2.6. \(\square\)

6 Edge-Vertex Correlations for Mondrian Tessellations

We now turn to the proof of Theorem 2.7 by deriving the concrete cross-covariance measure of the vertex process \(\mathcal {V}_{t}\) and the random edge length measure \(\mathcal {E}_{t}\) of a weighted Mondrian tessellation \(Y_t\) on \(\mathbb {R}^2\) with driving measure \(\Lambda _p\). Using the general formula for this cross-covariance measure from (Schreiber and Thäle 2010, Theorem 3) for the driving measure \(\Lambda _p\) together with the definition of the segment intersection measure in (3.4) yields

The definition of the measure \(\Lambda _p\) given in (2.1), together with Eqs. (3.5), (4.5) and (4.7), yields that

Let \(A, B \in \mathcal {B}(\mathbb {R}^2)\). We can now use Lemma 4.1(i) to deal with the first summand in both terms, applied to the product set \(A\times B\), to obtain

and

The second summand in each of the terms in (6.2) can be dealt with using Lemma 4.1(ii), see also Eq. (5.2). As in the previous section, we want to proceed by giving the reduced covariance measure via the diagonal-shift argument in the sense of (Daley and Vere-Jones 2003, Corollary 8.1.III). Plugging the terms we just deduced into (6.2), we end up with the covariance measure

where the reduced cross-covariance measure is given by

Thus, the reduced second cross moment measure has the form

The Mondrian cross K-function is now defined as

where \(\lambda _\mathcal {V}=t^2p(1-p)\) is the intensity of \(\mathcal {V}_{t}\), \(\lambda _{\mathcal {E}}=t\) that of \(\mathcal {E}_{t}\) and \(R_{r,\,p}=rR_p=[0,r(1-p)]\times [0,rp]\). Using Eq. (6.5) we see that

Using Eqs. (4.9) and (4.10) we can simplify the term \(T_1\) in (6.7), and then use (4.11) and (4.12) to conclude that

For the second and third term we can invoke Eqs. (4.13) and (4.14) to see that

and

By plugging in the expressions for the respective integrals given in Lemma 4.3, recalling Eq. (6.6) we finally obtain the formula for \(g_{\mathcal {V},\,\mathcal {E}}(r)\) after simplification of the resulting expression. \(\square\)

7 Vertex Covariance Measure and Vertex Pair-Correlations

In this section we derive Theorem 2.8. As before we denote by \(\mathcal {V}_{t}\) the vertex point process of a weighted Mondrian tessellation \(Y_t\) with driving measure \(\Lambda _p\). As in the previous sections, (Schreiber and Thäle 2010, Theorem 2) provides us with a general formula for its covariance measure. Specialization to \(\Lambda _p\) gives

Starting with the first of these three summands, we can use the definition of the segment intersection measure as given in (3.4) with (4.5) and (4.7) to see that

We now aim at giving the corresponding Mondrian analogue of the pair-correlation function of the vertex point process. As in the previous sections, we do so by giving the reduced covariance measure via a diagonal-shift argument in the sense of (Daley and Vere-Jones 2003, Corollary 8.1.III). Consider the first integral term in (7.2) without its coefficient for the Borel set \(A\times B \subset \mathbb {R}^2\times \mathbb {R}^2\). After multiplying the Dirac measures, we only consider the first two summands that integrate over \(\delta _{(\tau ,\,\sigma )}(A)\delta _{(\tau ,\,\sigma )}(B)\) and \(\delta _{(\tau ,\,\sigma )}(A)\delta _{(\vartheta ,\, \sigma )}(B)\), respectively, as the other two can be handled in the same fashion. Using Lemma 4.1(iii) yields

and

We get the same terms from the third and fourth summand within the first integral expression, yielding

as the overall value of that term. The same argument, with an appropriate swap of the \(x\)- and \(y\)-coordinates, shows that the second integral in (7.2) (again without its coefficient) is equal to

For the second and third term in (7.1) we see that, due to (4.1) and (4.5), these can be handled with the help of Lemma 4.1(i) and (ii), respectively, see also the handling of such terms as carried out in Eqs. (5.2), (6.3) and (6.4). We again define a function in the spirit of Ripley’s K-function via the reduced second moment measure \(\widehat{\mathcal {K}}( \mathcal {V}_{t})(R_{r,\,p})\) of \(R_{r,\,p}\), \(r>0\), and the corresponding normalized derivative as

Combining the considerations above with the diagonal shift argument from (Daley and Vere-Jones 2003, Corollary 8.1.III) we obtain that the reduced covariance measure \(\widehat{{\text {Cov}}}( \mathcal {V}_{t})\) with

is given by

The relationship (Daley and Vere-Jones 2003, Eq. (8.1.6)) now yields the reduced second moment measure \(\widehat{\mathcal {K}}( \mathcal {V}_{t})\)

We now use (4.8), (4.9), (4.10), and Lemma 4.2 in straightforward calculations to obtain

With the calculations from (4.11), (4.12), (4.13) and (4.14), we get the derivatives

and

The other three terms can be handled in the same way as the terms \(T_1\), \(T_2\) and \(T_3\) in Section 6. More precisely, \(S_3\) can be dealt with in the same way as \(T_1\), \(S_4\) as \(T_2\) and \(S_5\) as \(T_3\). Finally, recalling \(K_{\mathcal {V}}(r)\) and \(g_{\mathcal {V}}(r)\) from (7.2), and using the concrete integral calculations from Lemma 4.3, we conclude the proof of Theorem 2.8. \(\square\)

Data availability

Not applicable.

References

Balog M, Lakshminarayanan B, Ghahramani Z, Roy DM, Teh YW (2016) The Mondrian kernel. In: 32nd Conference on Uncertainty in Artificial Intelligence. pp 32–41

Daley DJ, Vere-Jones D (2003) An introduction to the theory of point processes: volume I: elementary theory and methods. Springer

Ge S, Wang S, Teh YW, Wang L, Elliott L (2019) Random tessellation forests. In: Advances in Neural Information Processing Systems, vol 32. Curran Associates, Inc

Lakshminarayanan B, Roy DM, Teh YW (2014) Mondrian forests: efficient online random forests. Adv Neural Inf Process Syst 27:3140–3148

Lakshminarayanan B, Roy DM, Teh YW (2016) Mondrian forests for large-scale regression when uncertainty matters. In: Artificial Intelligence and Statistics. PMLR, pp 1478–1487

Last G, Penrose M (2017) Lectures on the Poisson process, vol 7. Cambridge University Press

Mecke J, Nagel W, Weiss V (2008) A global construction of homogeneous random planar tessellations that are stable under iteration. Stochastics 80(1):51–67

Nagel W, Weiss V (2005) Crack STIT tessellations: characterization of stationary random tessellations stable with respect to iteration. Adv Appl Probab 37(4):859–883

O’Reilly E, Tran N (2020) Stochastic geometry to generalize the Mondrian process. To appear in SIAM Journal on Mathematics of Data Science

Roy DM, Teh YW et al (2008) The Mondrian process. In: NIPS. pp 1377–1384

Schneider R, Weil W (2008) Stochastic and integral geometry. Springer Science & Business Media

Schreiber T, Thäle C (2010) Second-order properties and central limit theory for the vertex process of iteration infinitely divisible and iteration stable random tessellations in the plane. Adv Appl Probab 42(4):913–935

Schreiber T, Thäle C (2012) Second-order theory for iteration stable tessellations. Probab Math Stat 32:281–300

Schreiber T, Thäle C (2013) Geometry of iteration stable tessellations: connection with Poisson hyperplanes. Bernoulli 19(5A):1637–1654

Stoyan D, Kendall WS, Mecke J (1995) Stochastic geometry and its applications. Wiley

Acknowledgements

The authors would like to thank Claudia Redenbach for providing the helpful images of planar Mondrian tessellations with different weights in Fig. 2.

Funding

Open Access funding enabled and organized by Projekt DEAL. CB and CT were supported by the DFG priority program SPP 2265 Random Geometric Systems.

Author information

Contributions

All authors of this work have contributed to equal parts.

Ethics declarations

Conflict of interest

There were no competing interests to declare which arose during the preparation or publication process of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Betken, C., Kaufmann, T., Meier, K. et al. Second-Order Properties for Planar Mondrian Tessellations. Methodol Comput Appl Probab 25, 47 (2023). https://doi.org/10.1007/s11009-023-10017-2

DOI: https://doi.org/10.1007/s11009-023-10017-2

Keywords

- Cross-correlation function

- Mondrian tessellation

- Pair-correlation function

- STIT tessellation

- Stochastic geometry

- Variance asymptotic