Abstract

In this paper we show existence of all exponential moments for the total edge length in a unit disk for a family of planar tessellations based on stationary point processes. Apart from classical tessellations such as the Poisson–Voronoi, Poisson–Delaunay and Poisson line tessellation, we also treat the Johnson–Mehl tessellation, Manhattan grids, nested versions and Palm versions. As part of our proofs, for some planar tessellations, we also derive existence of exponential moments for the number of cells and the number of edges intersecting the unit disk.

1 Setting and Main Results

Random tessellations are a classical subject of stochastic geometry with a very wide range of applications, for example in the modeling of telecommunication systems, topological optimization of materials and numerical solutions to PDEs. In this paper we focus on random planar tessellations \(S\subset {\mathbb {R}}^2\) which are derived deterministically from a stationary point process \(X=\{X_i\}_{i\in I}\). The most famous example here is the planar Poisson–Voronoi tessellation.

For several decades, research has been performed to understand statistical properties of various characteristics of S such as the degree distribution of its nodes, the distribution of the area or the perimeter of its cells, etc. For the classical examples, where the underlying point process is given by a Poisson point process (PPP), it is usually possible to derive first and second moments for these characteristics as a function of the intensity \(\lambda \), see [16, Table 5.1.1] and for example [12,13,14]. However, to derive complete and tractable descriptions of the whole distribution of these characteristics is often difficult.

In this paper we contribute to this line of research by proving existence of all exponential moments for the distribution of the total edge length in a unit disk. More precisely, let \(B_r\subset {\mathbb {R}}^2\) denote the closed centered disk with radius \(r>0\) and let \(|S \cap A|=\nu _1(S \cap A)\) denote the random total edge length of the tessellation \(S\subset {\mathbb {R}}^2\) in the Lebesgue measurable volume \(A\subset {\mathbb {R}}^2\), where \(\nu _1\) denotes the one-dimensional Hausdorff measure. We show for a large class of tessellations that for all \(\alpha \in {\mathbb {R}}\) we have that

\[{\mathbb {E}}\big [\exp (\alpha |S\cap B_1|)\big ]<\infty . \tag{1}\]

As a motivation, let us mention that the information on the tail behavior of the distribution of \(|S \cap B_1|\) provided by (1) is an important ingredient for example in the large deviations analysis of random tessellations. If additionally the tessellation has sufficiently strong mixing properties, namely that there exists \(b>0\) such that \(|S\cap A|\) and \(|S\cap B|\) are stochastically independent for measurable sets \(A,B\subset {\mathbb {R}}^2\) with \(\mathrm{dist}(A,B)=\inf \{|x-y|:x\in A, y\in B\}>b\), then the cumulant-generating function

exists, see [10, Lemma 6.1]. This can be used for example to establish the limiting behavior of the percolation probability for the Boolean model with large radii based on Cox point processes where the intensity measure is given by \(|S\cap \mathrm dx|\), see [10]. Moreover, existence of exponential moments plays a role in establishing percolation in an SINR graph based on Cox point processes in the case of an unbounded integrable path-loss function, see [17] for details.

1.1 Tessellations

Let \(\partial A={\bar{A}}\setminus A^o\) denote the boundary of a set \(A\subset {\mathbb {R}}^2\) and write \(x=(x_1,x_2)\) for \(x\in {\mathbb {R}}^2\). Apart from the classical Voronoi tessellation (VT), where

and its dual, the Delaunay tessellation (DT), where

we also consider the line tessellation (LT), where

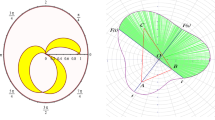

See Fig. 1 for realizations of the VT and the DT and their intersections with \(B_1\) in case X is a homogeneous PPP.

The extension of the VT known as the Johnson–Mehl tessellation (JMT) is covered by our results, see for example [1]. For this consider the i.i.d. marked stationary point process \({\widetilde{X}}=\{(X_i,T_i)\}_{i\in I}\) on \({\mathbb {R}}^2\times [0,\infty )\) with mark measure \(\mu (\mathrm dt)\). We define the Johnson–Mehl metric by

where we use the same notation \(|\cdot |\) for the Euclidean norm on \({\mathbb {R}}^2\) and \([0,\infty )\). Then, the JMT is given by

We also consider the Manhattan grid (MG), see for example [9]. For this let \(Y=(Y_{\mathrm{v}},Y_{\mathrm{h}})\) be the tuple where \(Y_{\mathrm{v}}=\{Y_{i,\mathrm v}\}_{i\in I_{\mathrm{v}}}\) and \(Y_\mathrm{h}=\{Y_{i,\mathrm h}\}_{i\in I_{\mathrm{h}}}\) are two independent simple stationary point processes on \({\mathbb {R}}\). Then the MG is defined as

Note that \(S_{\mathrm{M}}\) is stationary, similarly to all previously defined tessellations, however, unlike them, it is not isotropic. One can make \(S_{\mathrm{M}}\) isotropic by choosing a uniform random angle in \([0,2\pi )\), independent of Y, and rotating \(S_{\mathrm{M}}\) by this angle. Our results for the MG will be easily seen to hold for both the isotropic and anisotropic version of the MG.
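As a small illustration of this definition, the total edge length of a Manhattan grid inside \(B_1\) is a sum of chord lengths: a line at distance \(|y|<1\) from the origin contributes \(2\sqrt{1-y^2}\). The following Python sketch assumes that the grid consists of the vertical lines through the points of \(Y_{\mathrm v}\) and the horizontal lines through the points of \(Y_{\mathrm h}\). The function names and the Poisson sampler are hypothetical helpers introduced here for illustration only.

```python
import math
import random


def mg_length_in_unit_disk(y_v, y_h):
    """Total length of the grid lines inside the closed unit disk B_1.

    y_v: positions of vertical lines (points of Y_v on the horizontal axis)
    y_h: positions of horizontal lines (points of Y_h on the vertical axis)
    """
    total = 0.0
    for y in list(y_v) + list(y_h):
        if abs(y) < 1.0:
            # A line at distance |y| from the origin cuts a chord of length 2*sqrt(1 - y^2).
            total += 2.0 * math.sqrt(1.0 - y * y)
    return total


def sample_poisson_points(rate, lo, hi, rng):
    """Sample a homogeneous Poisson process on [lo, hi] via exponential gaps (illustrative)."""
    points, t = [], lo + rng.expovariate(rate)
    while t <= hi:
        points.append(t)
        t += rng.expovariate(rate)
    return points


rng = random.Random(0)
y_v = sample_poisson_points(2.0, -1.0, 1.0, rng)
y_h = sample_poisson_points(2.0, -1.0, 1.0, rng)
length = mg_length_in_unit_disk(y_v, y_h)
# Each line contributes at most the diameter 2, so the length is at most
# twice the number of lines hitting the disk.
assert length <= 2.0 * (len(y_v) + len(y_h))
```

The final assertion reflects the elementary bound that makes the MG tractable: the edge length in \(B_1\) is controlled by the number of points of \(Y_{\mathrm v}\) and \(Y_{\mathrm h}\) in \([-1,1]\).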

Next, let us denote by \((C_i)_{i\in J}\) the collection of cells in the tessellation S, where \(J=J(S)\). Formally, a cell \(C_i\) of S is defined as an open subset of \({\mathbb {R}}^2\) such that \(C_i\cap S=\emptyset \) and \(\partial C_i\subset S\). In view of applications, see for example [9] or [15], it is sometimes desirable to consider nested tessellations (NT), which we can partially treat with our techniques. For this, let \(S_o\) be one of the tessellation processes introduced above, defined via the point process \(X^{(o)}\), with cells \((C_i)_{i\in J}\), which now serves as a first-layer process. For every \(i\in J\), let \(S_i\) be an independent copy of one of the above tessellation processes, maybe of the same type as \(S_o\) with potentially different intensity or maybe of a different type, but all \(S_i\) should be of the same type and have the same intensity. Let \(X^{(i)}\) denote the underlying independent point process of \(S_i\). Then the associated NT is defined as

Here, \(\bigcup _{i\in J}(S_i\cap C_i)\) will be called the second-layer tessellation. This definition of a NT originates from [19, Section 3.4.4], where this class of tessellations was defined as a special case of iterated tessellations.

Note that all kinds of tessellations S defined in this section are stationary, i.e., S equals \(S+x\) in distribution for any given \(x \in {\mathbb {R}}^2\). However, a stationary planar tessellation need not be based on a stationary point process. For example, let Y be a homogeneous Poisson process in \({\mathbb {R}}\), and let \(X=\{ (X_i,0) :X_i \in Y \}\). Then X is not a stationary point process in \({\mathbb {R}}^2\), however, the associated process \(\{ (X_i,t) :X_i\in Y, t \in {\mathbb {R}}\}\) of infinite vertical lines is a stationary tessellation.

Finally note that all subgraphs of tessellations having the property (1) inherit this property by monotonicity. In particular, our results cover the cases of the Gabriel graph, the relative neighborhood graph, and the Euclidean minimum spanning tree, since they are subgraphs of the DT, presented in decreasing order with respect to inclusion.

1.2 Assumptions

Unless noted otherwise, throughout the manuscript \(X=\{X_i\}_{i\in I}\) denotes a stationary point process on \({\mathbb {R}}^2\) with intensity \(0<\lambda <\infty \). Our results will use the following assumptions on exponential moments for the number of points and void probabilities for the underlying stationary point process. First, for the VT, we assume that

for all \(\beta > 0\). Second, we assume that

We provide the easy proof that these conditions hold for the homogeneous PPP in Sect. 1.4. They can also be verified for example for some b-dependent Cox point processes and some Gibbsian point processes, see also Sect. 1.4.

For the JMT, we generally assume that the mark distribution \(\mu (\mathrm dt)\) is absolutely continuous with respect to the Lebesgue measure. Further, let \(B^\mathrm{J}_r\) denote the centered ball in the JM metric as defined in (2). Then, in analogy to the above, we assume that

for all \(\beta > 0\). Second, we assume that

Again, these conditions hold if \((X_i)_{i \in I}\) is a homogeneous PPP and \(\mu \) is for example the Lebesgue measure, see Sect. 1.4.

For the LT, we will assume that there exists \(\beta _\star \le \infty \) such that the random variable \(\# (X \cap ([-1,1] \times [0,2\pi ]))\) has exponential moments up to \(\beta _\star \), i.e.,

for all \(\beta < \beta _\star \). This condition holds for example for the homogeneous PPP with \(\beta _\star =\infty \).

For the MG, we assume that there exist \(\beta _{\mathrm{v}},\beta _\mathrm{h}\le \infty \) such that the random variables \(\#(Y_\mathrm{v}\cap [0,1])\) and \(\#(Y_{\mathrm{h}}\cap [0,1])\) have all exponential moments up to \(\beta _{\mathrm{v}},\beta _{\mathrm{h}}\), i.e.,

for all \(\beta <\beta _{\mathrm{v}}\), respectively \(\beta <\beta _{\mathrm{h}}\). This condition is satisfied with \(\beta _{\mathrm{v}}=\infty \) if \(Y_\mathrm{v}\) is a homogeneous Poisson process, and analogously for \(\beta _{\mathrm{h}}\).

1.3 Results

Having defined the types of tessellations we consider, we can now state our main theorem. Its proof and all other proofs are presented in Sect. 2.

Theorem 1.1

We have that (1) holds for all \(\alpha \in {\mathbb {R}}\) if S is a

1. Voronoi tessellation, in case (3) and (4) hold for all \(\beta >0\),

2. Johnson–Mehl tessellation, in case (5) and (6) hold for all \(\beta >0\),

3. Delaunay tessellation, in case the underlying point process is a homogeneous Poisson point process.

For the line tessellation, in case (7) holds for all \(\beta <\beta _\star \), then (1) holds for all \(\alpha <\beta _\star \). For the Manhattan grid, in case (8) holds for all \(\beta <\beta _{\mathrm{v}}\), respectively \(\beta <\beta _{\mathrm{h}} \), then (1) holds for all \(\alpha <\min \{\beta _{\mathrm{v}},\beta _{\mathrm{h}}\}\).

Note that, using Hölder’s inequality and stationarity, the statement of Theorem 1.1 and all subsequent results remain true if \(B_1\) is replaced by any bounded measurable subset of \({\mathbb {R}}^2\).
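One way to spell out this remark, as a sketch: assume the bounded set \(A\) is covered by \(m\) unit disks \(B_1(x_1),\dots ,B_1(x_m)\), where \(m\) and the centers \(x_j\) are notation introduced here. Subadditivity of \(\nu _1\), the generalized Hölder inequality with \(m\) equal exponents, and stationarity then give, for \(\alpha >0\),

```latex
\begin{align*}
{\mathbb E}\big[\exp(\alpha |S\cap A|)\big]
&\le {\mathbb E}\Big[\prod_{j=1}^m \exp\big(\alpha |S\cap B_1(x_j)|\big)\Big] \\
&\le \prod_{j=1}^m {\mathbb E}\big[\exp\big(m\alpha |S\cap B_1(x_j)|\big)\big]^{1/m}
 = {\mathbb E}\big[\exp\big(m\alpha |S\cap B_1|\big)\big].
\end{align*}
```

In particular, if (1) holds for all \(\alpha \), then the left-hand side is finite for all \(\alpha \). When (1) is only known on a bounded range of \(\alpha \), this particular sketch only yields a correspondingly smaller range.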

Let us briefly comment on the proof of Theorem 1.1. The proof of the parts for the LT and MG is rather straightforward. As will become clear from the proof, in case of the MG, an application of Hölder’s inequality would give the same result without the independence assumption on the point processes \(Y_{\mathrm v},Y_{\mathrm h}\), but with the loss of some of the exponential moments. The cases for the VT, JMT and DT are more involved. However, the statements follow easily if exponential moments for the corresponding number of edges intersecting \(B_1\) can be established. More precisely, let \((E_i)_{i\in K}\) denote the collection of edges in the tessellation S, where \(K=K(S)\), and

\[W=\#\{i\in K :E_i\cap B_1\ne \emptyset \} \tag{9}\]

the number of edges intersecting \(B_1\). In the case of tessellations consisting of infinite lines, such as the LT and the MG, one has to be careful with the definition of W. Indeed, in this case an edge is to be understood as a maximal linear portion of a cell boundary. Hence, each infinite line of the tessellation contains an infinite number of collinear edges, and W is bounded from below by the number \(W_\infty \) of infinite lines having a non-empty intersection with \(B_1\).

Then, for S being a VT or a DT, the edges of S are straight line segments and hence the intersection of each edge with \(B_1\) has length at most 2. Similarly, edges of the JMT are either hyperbolic arcs or straight line segments, see [16, Property AW2, page 126]. By convexity, the intersection of any Johnson–Mehl edge with \(B_1\) has length at most \(|\partial B_1|=2\pi \). In all three cases we thus have \(|S\cap B_1|\le 2\pi W\). Hence, for the VT, DT or JMT, if

\[{\mathbb {E}}[\exp (\alpha W)]<\infty \tag{10}\]

holds for all \(\alpha >0\), then so does (1) for all \(\alpha >0\). If (10) holds for some \(\alpha >0\), then so does (1) for some \(\alpha >0\). The following result establishes exponential moments for W and also the simple consequence that

\[{\mathbb {E}}[\exp (\alpha V)]<\infty \tag{11}\]

for some \(\alpha >0\), where

\[V=\#\{i\in J :C_i\cap B_1\ne \emptyset \}\]

is the number of cells intersecting \(B_1\).

Proposition 1.2

(i) For Voronoi or Johnson–Mehl tessellations based on a stationary point process that satisfies (3) and (4), respectively (5) and (6), for all \(\beta >0\), (10) holds for all \(\alpha \in {\mathbb {R}}\). For Delaunay tessellations based on a homogeneous Poisson point process, (10) holds for some \(\alpha >0\).

(ii) For Voronoi or Johnson–Mehl tessellations based on a stationary point process that satisfies (3) and (4), respectively (5) and (6), for all \(\beta >0\), (11) holds for all \(\alpha \in {\mathbb {R}}\). For Delaunay tessellations based on a homogeneous Poisson point process, (11) holds for some \(\alpha >0\).

As mentioned above, Theorem 1.1 parts (i) and (ii) are immediate consequences of Proposition 1.2 part (i) for the corresponding tessellations. However, for the case of the DT, as in part (iii) of Theorem 1.1, we cannot use Proposition 1.2 since we do not have a statement for all \(\alpha >0\). In order to overcome this difficulty, we first estimate small exponential moments of the total number of edges intersecting with \(B_a\) for different values of \(a>0\) and then use an additional scaling argument to conclude (1) for all \(\alpha >0\). Let us also emphasize that for the DT, we establish the above results only in the case in which the underlying point process is a PPP. It is unclear if exponential moments for the number of edges W and number of cells V intersecting with the unit disk exist for the LT and we make no statements about them.

For the NT, existence of exponential moments for V for the first-layer tessellation can be used to verify (1) for \(S_{\mathrm{N}}\). More precisely, we have the following result.

Corollary 1.3

Consider the nested tessellation.

(i) If for the first-layer tessellation (11) holds for all \(\alpha \in {\mathbb {R}}\) and for the second-layer tessellation (1) holds for all \(\alpha \in {\mathbb {R}}\), then also \(S_{\mathrm{N}}\) satisfies (1) for all \(\alpha \in {\mathbb {R}}\).

(ii) If for the first-layer tessellation (11) holds for some \(\alpha >0\) and for the second-layer tessellation (1) holds for some \(\alpha >0\), then also \(S_{\mathrm{N}}\) satisfies (1) for some \(\alpha >0\).

As we will explain in Sect. 1.5, the statement of Proposition 1.2 is false for the MG based on independent homogeneous Poisson processes on the axes, despite the fact that (1) holds in this case according to Theorem 1.1. However, in the special case where the NT is composed of MGs in both layers and the second-layer MG is based on independent homogeneous Poisson processes, for this \(S_{\mathrm{N}}\), we still obtain (1) for all \(\alpha \in {\mathbb {R}}\). This is the content of the following result.

Proposition 1.4

Consider the nested tessellation and assume that the second-layer tessellation is given by Manhattan grids based on two independent homogeneous Poisson processes and the first-layer tessellation is also a Manhattan grid satisfying (1) for all \(\alpha \in {\mathbb {R}}\). Then, (1) holds for the nested Manhattan grid also for all \(\alpha \in {\mathbb {R}}\).

Let us mention that for the tessellations studied in Theorem 1.1, considering Palm versions of the underlying point process, at least in the case where it is a homogeneous PPP, does not change existence of all exponential moments. We want to be precise here since there are multiple different possibilities to define Palm measures in this context. For the Poisson–VT, Poisson–JMT and Poisson–DT, we denote by \(X^*\) the Palm version of the underlying unmarked PPP and denote by \(S^*=S(X^*)\) its associated tessellations. For the Poisson–LT we denote by \(X^*\) the Palm version of the underlying PPP only with respect to the first coordinate, i.e., \(X^* = X \cup \{ (0,\varPhi ) \}\), where \(\varPhi \) is a uniform random angle in \([0,\pi )\) that is independent of X. Roughly speaking, this corresponds to \(S^*_\mathrm{L}=S_{\mathrm{L}}(X^*)\) being distributed as \(S_{\mathrm{L}}\) when conditioned to have a line crossing the origin o of \({\mathbb {R}}^2\) with no fixed angle. The Palm version of the MG is given by

where U is an independent uniformly distributed random variable on [0, 1] and \(Y_\mathrm v^*\) and \(Y_\mathrm h^*\) denote the Palm versions of \(Y_\mathrm v\) and \(Y_\mathrm h\), see [9, Section III.B]. We will recall the notion of the Palm version of a general stationary point process in Sect. 2.3. Palm distributions of NTs can be defined correspondingly, see for example [9, 19].

Corollary 1.5

Consider all the tessellations S appearing in Theorem 1.1. If the underlying point processes are homogeneous Poisson point processes, we also have for all \(\alpha \in {\mathbb {R}}\) that

To end this section with a short discussion, let us mention that it is a simple consequence of the works [2, 8, 20] that for all \(\alpha \in {\mathbb {R}}\)

where \(N^*\) denotes the number of Poisson–Delaunay edges originating from the origin under the Palm distribution for the underlying PPP. The assertion (15) seems similar to the one (14) for the Poisson–DT, however, \(S^* \cap B_1\) can contain segments from many edges that are not adjacent to the origin, in particular also from edges both endpoints of which are situated outside \(B_1\). It is an interesting open question whether it is possible to provide a simpler proof of the assertion (14) for all \(\alpha \in {\mathbb {R}}\) or the assertion (1) for all \(\alpha \in {\mathbb {R}}\) for the Poisson–DT based on the fact that (15) holds for all \(\alpha \in {\mathbb {R}}\).

For Corollary 1.5, we provide a case-by-case proof. Let us mention that, for the reverse implication with \(S^*=S(X^*)\) for a homogeneous PPP X with intensity \(\lambda \), using the inversion formula of Palm calculus [11, Section 9.4] and Hölder’s inequality, we can derive the following criterion,

where \({\mathcal {C}}_o\) is the Voronoi cell of the origin in the VT \(S_{\mathrm{V}}(X^*)\).

Finally, let us comment on possible generalizations of our results to higher dimensions. In at least three dimensions, it is still true that VTs, DTs and JMTs are exponentially stabilizing, i.e., the probability that a point of the underlying point process outside the ball \(B_k\) influences the realization of the tessellation inside \(B_1\) decays exponentially fast, which is an important argument in our proofs. However, in the planar case, given that the points inside \(B_k\) determine the tessellation inside \(B_1\), the total number of edges intersecting with \(B_1\) can be bounded by a constant times the number of points in the region \(B_k\) (see e.g. Sect. 2.1.1 for details). This is in general not true in higher dimensions, which yields the main obstacle for generalizing our results. On the other hand, some of our results extend easily to higher dimensions. For example, defining a higher-dimensional analogue of a Manhattan grid using independent stationary point processes on all coordinate axes and connecting all these points by edges, an analogue of Theorem 1.1 can easily be derived using arguments similar to the ones of Sect. 2.1.5.

1.4 Examples: Poisson–, Cox– and Gibbs–Voronoi Tessellations

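It is easy to check that the assumptions listed in Sect. 1.2 are satisfied if the underlying point process is a stationary PPP. Indeed, for (3) note that by the Laplace transform, for any measurable \(B\subset {\mathbb {R}}^2\)

\[{\mathbb {E}}\big [\exp \big (\beta \,\#(X\cap B)\big )\big ]=\exp \big (\lambda |B|(\mathrm e^{\beta }-1)\big ).\]

Further, for (4) note that the void probability for the PPP is given by

\[{\mathbb {P}}(X\cap B=\emptyset )=\exp (-\lambda |B|).\]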

As for the assumptions (5) and (6), the same arguments can be applied.
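The Poisson computations behind these checks can be verified numerically. The following Python sketch compares a truncated series for \({\mathbb {E}}[\exp (\beta N)]\), with \(N\) Poisson distributed with mean \(\lambda |B|\), against the closed form \(\exp (\lambda |B|(\mathrm e^\beta -1))\). The function names, the truncation level and the parameter values are illustrative assumptions, not taken from the paper.

```python
import math


def poisson_exp_moment_series(mu, beta, terms=400):
    """Approximate E[exp(beta * N)] for N ~ Poisson(mu) by summing the series in log-space."""
    total = 0.0
    for n in range(terms):
        # log of exp(beta*n) * exp(-mu) * mu^n / n!
        log_term = beta * n - mu + n * math.log(mu) - math.lgamma(n + 1)
        total += math.exp(log_term)
    return total


def poisson_exp_moment_closed_form(mu, beta):
    """Closed form exp(mu * (e^beta - 1)) from the Laplace functional of the PPP."""
    return math.exp(mu * (math.exp(beta) - 1.0))


lam, beta = 1.5, 0.7
mu = lam * math.pi  # mean number of points in the unit disk, lambda * |B_1|
series = poisson_exp_moment_series(mu, beta)
closed = poisson_exp_moment_closed_form(mu, beta)
assert math.isclose(series, closed, rel_tol=1e-9)

# Void probability of the unit disk: P(X ∩ B_1 = ∅) = exp(-lambda * |B_1|)
void_probability = math.exp(-mu)
```

Both quantities depend on \(B\) only through its area, which is why the exponential moment grows, and the void probability decays, exponentially in the area of the region.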

It is natural to ask under what conditions existence of exponential moments for the total edge length in the unit disk can be guaranteed for tessellations S(X) where X is not a PPP but some different stationary planar point process. As a starting point for future studies, in this section we present examples for the VT based on a stationary Cox point process (CPP) and a stationary Gibbsian point process (GPP) X where our results guarantee the existence of exponential moments.

1.4.1 Cox–Voronoi Tessellations

A Cox point process is a PPP with random intensity measure \(\varLambda (\mathrm dx)\), see for example [6] for details. We have the following proposition.

Proposition 1.6

Consider \(S_{\mathrm{V}}(X)\) where X is a stationary Cox point process with intensity measure \(\varLambda \) satisfying

for all \(\beta >0\). Then, for \(S=S_{\mathrm{V}}(X)\), (1) holds for all \(\alpha \in {\mathbb {R}}\).

Proof of Proposition 1.6

It suffices to verify the assumptions (3) for all \(\beta >0\) and (4). For assumption (4), note that for any measurable \(B\subset {\mathbb {R}}^2\)

and thus (16) is precisely what we need. The same argument can be applied for assumption (3). \(\square \)

The conditions (16) and (17) hold if \(\varLambda (Q_1)\) has all exponential moments and \(\varLambda \) is b-dependent, where we call \(\varLambda \) b-dependent if for any two measurable sets \(A,B\subset {\mathbb {R}}^2\) such that \(\mathrm{dist}(A,B)=\inf _{x \in A,y\in B} |x-y|>b\), the restrictions \(\varLambda |_A\) and \(\varLambda |_B\) of \(\varLambda \) to A respectively B are independent. Indeed, by stationarity of \(\varLambda \), it suffices to verify (16) and (17) with \(B_k\) replaced by \(Q_k=[-k/2,k/2]^2\) (both for \(k=n\) and \(k=n+4\)) in the limit \({\mathbb {N}}\ni k \rightarrow \infty \). Let us assume that \(\varLambda \) is b-dependent. Then, there exists \(b'=b'(b) \in {\mathbb {N}}\) such that for any \(k \in {\mathbb {N}}\), \(Q_k\) can be partitioned into at most \(b'\) disjoint subsets such that each of these subsets consists of (apart from the boundaries) disjoint copies of \(Q_1\) such that the restrictions of \(\varLambda \) to these copies are mutually independent. Using this independence and the existence of all exponential moments of \(\varLambda (Q_1)\), further applying Hölder’s inequality for the collection of partition sets, (16) and (17) follow. A relevant example for a b-dependent and even bounded intensity measure is the modulated Poisson point process where \(\varLambda (\mathrm dx) = \mathrm dx(\lambda _1 \mathbb {1} \{ x \in \varXi \} + \lambda _2 \mathbb {1} \{ x \in \varXi ^{\mathrm c} \} )\), with \(\varXi \) being a Poisson–Boolean model with bounded radii, and \(\lambda _1,\lambda _2 \ge 0\), see [5, Section 5.2.2]. Another example for which conditions (16) and (17) hold, and which is unbounded, is the shot-noise field, see [5, Section 5.6], where \(\varLambda (\mathrm dx)=\mathrm dx\sum _{i\in I}\kappa (x-Y_i)\) for some integrable kernel \(\kappa :{\mathbb {R}}^2\rightarrow [0,\infty )\) with compact support and \(\{Y_i\}_{i\in I}\) a stationary PPP.

1.4.2 Gibbs–Voronoi Tessellations

A Gibbs point process on \({\mathbb {R}}^2\) is defined via its conditional probabilities in bounded measurable volumes \(B\subset {\mathbb {R}}^2\). They take the form of a Boltzmann weight

where \({{\mathcal {P}}}_B\) is a PPP on B with intensity \(\lambda >0\), \(\gamma \in {\mathbb {R}}\) is a system parameter and H is the Hamiltonian, which assigns some real-valued energy to the configuration \(X_BX_{B^\mathrm{c}}=X_B\cup X_{B^\mathrm{c}}\), where \(X_{B^\mathrm{c}}\) is a boundary configuration in \(B^\mathrm{c}={\mathbb {R}}^2\setminus B\). For details see for instance [7]. As an example, we consider the Widom–Rowlinson model where \(H(X)=|\bigcup _{X_i\in X}B_r(X_i)|\), with \(B_r(x)\) the ball of radius \(r>0\), centered at \(x\in {\mathbb {R}}^2\). Existence of associated point processes on \({\mathbb {R}}^2\) that are stationary can be guaranteed, see for example [4]. We have the following result.

Proposition 1.7

Consider \(S_{\mathrm{V}}(X)\) where X is the Widom–Rowlinson model. Then, for \(S=S_{\mathrm{V}}(X)\), (1) holds for all \(\alpha \in {\mathbb {R}}\).

Proof of Proposition 1.7

It suffices to verify the assumptions (3) for all \(\beta >0\) and (4). For assumption (4), note that by consistency for all bounded measurable \(B\subset {\mathbb {R}}^2\),

for all \(r,\lambda ,\gamma >0\). For assumption (3), note that for all bounded measurable sets \(B\subset {\mathbb {R}}^2\),

for all \(r,\lambda ,\gamma ,\beta >0\), which proves the desired result. \(\square \)

1.5 Absence of Exponential Moments for the Number of Edges and Cells

In Proposition 1.2, we provide statements about existence of exponential moments for V, the number of cells intersecting \(B_1\), and W, the number of edges intersecting \(B_1\). In this section we want to exhibit one example in our family of tessellations for which exponential moments for V do not exist. Indeed, take the MG where the underlying stationary point processes are PPPs \(Y_{\mathrm{v}}\) and \(Y_{\mathrm{h}}\) with intensity \(\lambda \). By translation invariance, we can also consider the random variable \(V'\), the number of cells intersecting \(Q_1\). In order to simplify the notation, let us write \(X_\mathrm v=\#(Y_{\mathrm{v}} \cap [-1/2,1/2])\) and \(X_\mathrm h=\#(Y_{\mathrm{h}} \cap [-1/2,1/2])\). These random variables are independent and Poisson distributed with parameter \(\lambda \). Then we have that

Since, for the MG based on PPPs, V and the number \(W_\infty \) of infinite lines intersecting with \(Q_1\) are of the same order and, further, \(W_\infty \le W\), it follows that \({\mathbb {E}}[\exp (\alpha W)]=\infty \).
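To see numerically why the exponential moments of the cell count blow up, one can look at individual terms of the defining series. The Python sketch below assumes that the number of cells hitting \(Q_1\) equals \((X_\mathrm v+1)(X_\mathrm h+1)\) almost surely, which is what the grid geometry suggests, and evaluates the logarithm of the diagonal term \(\exp (\alpha (n+1)^2)\,{\mathbb {P}}(X_\mathrm v=n)\,{\mathbb {P}}(X_\mathrm h=n)\). The function name and parameter values are illustrative.

```python
import math


def log_diagonal_term(alpha, lam, n):
    """log of exp(alpha*(n+1)^2) * P(X_v = n) * P(X_h = n) for independent Poisson(lam) counts."""
    log_poisson_pmf = -lam + n * math.log(lam) - math.lgamma(n + 1)
    return alpha * (n + 1) ** 2 + 2.0 * log_poisson_pmf


alpha, lam = 0.1, 1.0
for n in (10, 50, 100, 200, 400):
    # The quadratic term alpha*(n+1)^2 eventually dominates log(n!)^2 ~ 2 n log n.
    print(n, round(log_diagonal_term(alpha, lam, n), 1))
```

Since \(\alpha (n+1)^2\) grows quadratically in \(n\) while \(\log n!\) grows only like \(n\log n\), the terms are unbounded for every \(\alpha >0\), so the series cannot converge.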

2 Proofs

For our results, it obviously suffices to consider \(\alpha >0\) instead of \(\alpha \in {\mathbb {R}}\).

2.1 Total Edge Length, Number of Edges and Cells: Proof of Theorem 1.1 and Proposition 1.2

The proof of Theorem 1.1 and Proposition 1.2 is organized as follows. As already discussed, for the VT and the JMT it suffices to show Proposition 1.2 part (i) for all \(\alpha >0\) in order to conclude the corresponding part of Theorem 1.1. In Sect. 2.1.1 we carry out the proof of Proposition 1.2 part (i) for all \(\alpha >0\) for the VT and in Sect. 2.1.2 for the JMT. Section 2.1.3 is devoted to the case of the Poisson–DT. Here we first verify an extended version of Proposition 1.2 part (i) for small \(\alpha >0\), and using this we verify (1) for all \(\alpha >0\). The direct and short proofs of (1) for all \(\alpha >0\) for the LT and the MG can be found in Sects. 2.1.4 and 2.1.5, respectively. Given these results, we prove Proposition 1.2 part (ii) in Sect. 2.1.6.

2.1.1 Voronoi Tessellations: Proof of Proposition 1.2 Part (i)

It suffices to verify (10) for all \(\alpha >0\). For this we extend arguments first presented in [17, Theorem 2.6], where \({\mathbb {E}}[\exp (\alpha |S_{\mathrm{V}} \cap [-1/2,1/2]^2|)]<\infty \) was verified for all sufficiently small \(\alpha >0\) in the case where the underlying point process is a PPP. Let us extend the notion of W defined in (9) to balls of different radii via

\[W_a=\#\{i\in K :E_i\cap B_a\ne \emptyset \},\qquad a>0, \tag{18}\]

where we recall that \((E_i)_{i \in K}\) is the collection of edges of \(S_{\mathrm{V}}\). The following lemma states that unless we have a void space, numbers of edges can be bounded from above by numbers of points in bounded regions.

Lemma 2.1

Let \(b\ge a > 0\). If \(X\cap B_b \ne \emptyset \), then we have

\[W_a\le 3\,\#(X\cap B_{b+3a}). \tag{19}\]

Proof

Let us assume existence of \(X_i\in X\cap B_b\). We first claim that for any edge of \(S_{\mathrm{V}}\) intersecting with \(B_a\), the corresponding edge in the dual DT connects two points in \(B_{b+3a}\). Indeed, assume otherwise, then there exists \(v \in B_a\) and \(X_j \in X \cap B_{b+3a}^{\mathrm c}\) such that \(|v-X_j|=\min \{ |v-X_l| :l \in I \}\) and

On the other hand,

which is a contradiction. Thus, for any Voronoi edge intersecting with \(B_a \subseteq B_b\), the corresponding Delaunay edge has both endpoints in \(X \cap B_{b+3a}\). But since the subgraph of the Delaunay graph spanned by the vertex set \(X \cap B_{b+3a}\) is simple and planar, Euler’s formula (see e.g. [12, Remark 2.1.4]) implies that the number of such edges is bounded by 3 times the number of vertices in this subgraph. This implies (19). \(\square \)
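The counting step at the end of this proof relies only on Euler's formula: a simple planar graph on \(n\ge 3\) vertices has at most \(3n-6\) edges. As an illustrative check, the following Python sketch builds the Delaunay edges of a small point cloud by brute force, using the empty-circumdisk criterion, and compares the edge count with this bound. The helper names and the numerical tolerance are assumptions made here; the brute-force construction is only meant for small inputs in general position and is not the method of the paper.

```python
import itertools
import random


def circumcircle(a, b, c):
    """Return (center_x, center_y, radius_squared) of the circle through a, b, c, or None if collinear."""
    (ax, ay), (bx, by), (cx, cy) = a, b, c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return ux, uy, (ax - ux) ** 2 + (ay - uy) ** 2


def delaunay_edges(points):
    """Brute-force Delaunay edges: keep triangles whose open circumdisk contains no other point."""
    edges = set()
    for i, j, k in itertools.combinations(range(len(points)), 3):
        circle = circumcircle(points[i], points[j], points[k])
        if circle is None:
            continue
        ux, uy, r2 = circle
        empty = all(
            (px - ux) ** 2 + (py - uy) ** 2 >= r2 - 1e-12
            for m, (px, py) in enumerate(points)
            if m not in (i, j, k)
        )
        if empty:
            # i < j < k, so each edge is stored once as a sorted index pair.
            edges.update({(i, j), (i, k), (j, k)})
    return edges


rng = random.Random(1)
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(20)]
edges = delaunay_edges(points)
# Euler's formula: a simple planar graph on n >= 3 vertices has at most 3n - 6 edges.
assert len(edges) <= 3 * len(points) - 6
```

Since every Voronoi edge corresponds to exactly one Delaunay edge, a bound on Delaunay edges among the points in \(B_{b+3a}\) translates directly into a bound on the Voronoi edges hitting \(B_a\).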

Note that Lemma 2.1 holds for any point cloud X. The proof of (10) for the VT now rests on the assumption that it is exponentially unlikely to have large void spaces of order \(k^2\) and existence of exponential moments for numbers of points in annuli of order k. Let

\[R=\inf \{|X_i| :i\in I\}\]

denote the distance of the closest point in X to the origin.

Proof of Proposition 1.2 part (i)

In the event \(\{ R \le 1 \}\) we have that \(B_1 \cap X \ne \emptyset \), and therefore by Lemma 2.1 applied for \(a=b=1\), we obtain

On the other hand, in the event \(\{ R \ge 1 \}\), we can apply Lemma 2.1 with \(a=1\) and \(b=R\) in order to obtain that, almost surely,

where we also used that by stationarity, on \(\partial B_R\) there is precisely one point, almost surely. By assumption (4) we have \({\mathbb {E}}[R]<\infty \) and hence \({\mathbb {P}}(R<\infty )=1\). We can thus estimate for all \(\alpha >0\),

where we used Hölder’s inequality in the last line. Now, by the assumptions (4) and (3), there exist \(c_1,c_2>0\) such that for sufficiently large k, we have

and hence summability of the right-hand side of (21) is guaranteed. This concludes the proof of Proposition 1.2 for the number of edges and thus of Theorem 1.1 part (i). \(\square \)

2.1.2 Johnson–Mehl Tessellations: Proof of Proposition 1.2 Part (i)

As explained in Sect. 1, Theorem 1.1 (ii) follows once we verify (10) for the JMT for all \(\alpha >0\), which is the first part of Proposition 1.2 for the JMT. The arguments are very similar to the ones used in Sect. 2.1.1 for the VT. To start with, we have the following lemma, which is an analogue of Lemma 2.1 in the Johnson–Mehl case. Recall that for \((x,s) \in {\mathbb {R}}^2 \times [0,\infty )\) and \(r>0\) we write \(B_r^\mathrm{J}(x,s)\) for the closed ball of radius r around (x, s) in the Johnson–Mehl metric, see (2).

Lemma 2.2

Let \(b \ge a>0\). If \( {\widetilde{X}} \cap B^\mathrm{J}_b \ne \emptyset \), then \(S_{\mathrm{J}} \cap B_a\) is determined by \({\widetilde{X}} \cap B_{b+3a}^{\mathrm J}\). That is, for any \(x\in S_{\mathrm{J}} \cap B_a\), if \(j \in I\) is such that \(d_\mathrm{J}((X_j,T_j),(x,0))=\inf _{k\in I} d_{\mathrm{J}} ((X_k,T_k),(x,0))\), then \((X_j,T_j) \in B_{b+3a}^{\mathrm J}\).

Proof

Assume that there exists \(i \in I\) such that \((X_i,T_i) \in B^{\mathrm{J}}_b\) and that \(S_{\mathrm{J}}\) exhibits an edge having a non-empty intersection with \(B_a\), and let \(x \in B_a\) be a point of such an edge. Then, using the triangle inequality, since

and for any \(j \in I\) with \((X_j,T_j) \notin B^\mathrm{J}_{b+3a}\), we have

and the result follows. \(\square \)

Proof of (10) for the JMT for all \(\alpha >0\)

We start with two preliminary observations. First, let \({\mathcal {E}}\) denote the set of (closed) edges of \(S_{\mathrm{J}}\). By construction of a JMT, almost surely, any \(E \in {\mathcal {E}}\) has the property that there exist precisely two points \((X_i,T_i),(X_j,T_j)\) (depending on E) such that for all \(z \in E\)

In this case, we will write \(E=\big ((X_i,T_i);(X_j,T_j)\big )\). We claim that for any finite subset \(I_0\) of I,

holds. Indeed, the set on the left-hand side of (22) is in one-to-one correspondence with \({\mathcal {D}}(I_0) \) where

since \((i,j) \in {\mathcal {D}}(I_0)\) if and only if \(X_i\) and \(X_j\) are connected by an edge in the dual of the Johnson–Mehl graph. Note that since the JMT is a planar graph, so is its dual, and thus \(\mathcal D(I_0)\) has cardinality at most \(3 \# I_0\) thanks to the Euler formula for planar graphs.

Now, let us define the distance of the closest point to the (space-time) origin in the Johnson–Mehl metric

Now, in the event \(\{ R' \le 1 \}\), we have \(B_{1}^\mathrm{J}\cap {\widetilde{X}} \ne \emptyset \), and thus an application of Lemma 2.2 for \(a=b=1\) gives

Thanks to (22), the right-hand side is at most \( \# ({\widetilde{X}} \cap B_4^\mathrm{J})\). On the other hand, in the event \(\{ R' > 1\}\), we can apply Lemma 2.2 for \(a=1\) and \(b=R'\), which together with the convexity yields

Again, by stationarity of X and absolute continuity of \(\mu \), almost surely, we can further bound the right-hand side of (24) from above, which yields

By assumption (6) we have \({\mathbb {E}}[R']<\infty \) and hence \({\mathbb {P}}(R'<\infty )=1\). We can thus estimate for all \(\alpha >0\) using Hölder’s inequality,

As above, the assumptions (5) and (6) now guarantee summability. This proves Proposition 1.2 for the number of edges and thus Theorem 1.1 part (ii). \(\square \)

2.1.3 Poisson–Delaunay Tessellations: Proof of Theorem 1.1 Part (iii) and Proposition 1.2 part (i)

The case of the DT is the most difficult one to handle, essentially since in this case, existence of points close to the origin does not automatically eliminate the influence of other distant points. To keep the argument simple, we thus only treat the case here where the underlying point process is a homogeneous PPP. Recall the definition of \(W_a\) from (18). Our first step towards the proof of Theorem 1.1 (iii) is to verify that there exists a fixed \(\alpha >0\) such that \( {\mathbb {E}}[\exp (\alpha W_a)]<\infty \) holds for any \(a>0\). Let us write \(X^\lambda \) to indicate the intensity \(\lambda \) in the underlying PPP and write \(S^\lambda _{\mathrm{D}}=S_{\mathrm{D}}(X^\lambda )\) and \(W^\lambda _a\) for the number of edges of \(S^\lambda _{\mathrm{D}}\) intersecting with \(B_a\).

Proposition 2.3

Let \(a>0\) and \(\lambda >0\). Then, \({\mathbb {E}}[\exp (\alpha W^\lambda _a)]<\infty \) holds for all \(\alpha <\frac{1}{6} \log \big ( 1+ \frac{1}{72} \big )\).

In particular, choosing \(a=\lambda =1\), (10) follows from this proposition for the Poisson–DT for small \(\alpha >0\), which proves Proposition 1.2 part (i) for the Poisson–DT. The proof rests on a comparison on the exponential scale.

Proof

For \(x \in {\mathbb {R}}^2\), let \(Q_r(x)\) denote the box of side length r centered at x. We define

the finest discretization of \({\mathbb {R}}^2\) into boxes such that every box in the 2-annulus contains points. Note that R is almost surely finite. For \(k \in {\mathbb {N}}\) such that \(k > \lceil 2a \rceil \),

Note that once \(k>\lceil 2a \rceil \), the right-hand side of (27) does not depend on a. Since these terms are summable from \(k=1\) to \(\infty \), \({\mathbb {P}}(R=\lceil 2a \rceil )\) tends to one and thus \({\mathbb {E}}[R]\) tends to infinity as \(a \rightarrow \infty \).

In the event \(\{ R=k \}\) for some \(k\ge 2a\), the points of \(\partial Q_{3k}(o)\) are within a distance at most \(\sqrt{2} k\) from the centroid of their Voronoi cell. Among these Voronoi cells, the neighboring ones are separated by a Voronoi edge and hence their cell centroids are Delaunay neighbors. The Delaunay edges connecting the centroids of the successive cells yield a closed path in the Delaunay graph surrounding \(B_a\). This path defines a bounded region in which both endpoints of any Delaunay edge intersecting \(B_a\) are located. Further, this region is fully contained in \(Q_{3k}(o) \oplus B_{\sqrt{2}k} \subset Q_{3k}(o) \oplus Q_{2\sqrt{2}k}(o) \subset Q_{6k}(o)\). Hence, since the restriction of the Delaunay triangulation is a planar graph, using Euler’s formula we arrive at

Now we can use Hölder’s inequality, the Laplace transform of a Poisson random variable and (27) to estimate

But the right-hand side is finite for \(\alpha <\frac{1}{6} \log \big ( 1+\frac{1}{72} \big )\) for all \(a>0\) and \(\lambda >0\), as asserted. \(\square \)
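Two elementary ingredients of the estimate above can be made explicit. The following is only a sketch under the standing assumption that \(X^\lambda \) is a homogeneous PPP with intensity \(\lambda \); the symbols \(N_k\) and \(\theta \) are introduced here for illustration and are not part of the original argument.

```latex
% (a) The Minkowski sum of two centered boxes is the centered box whose
%     side length is the sum of the two side lengths:
\[
  Q_{3k}(o) \oplus Q_{2\sqrt{2}k}(o) = Q_{(3+2\sqrt{2})k}(o) \subset Q_{6k}(o),
  \qquad 3 + 2\sqrt{2} \approx 5.83 < 6 .
\]
% (b) N_k := #(X^\lambda \cap Q_{6k}(o)) is Poisson distributed with mean
%     36 \lambda k^2, hence for every real \theta
\[
  \mathbb{E}\big[\exp(\theta N_k)\big]
  = \exp\big( 36 \lambda k^2 (\mathrm{e}^{\theta} - 1) \big) .
\]
```

Since the expression in (b) grows like \(\exp (ck^2)\) in \(k\), summing over \(k\) requires the decay of \({\mathbb {P}}(R=k)\) from (27) to dominate, which is consistent with the restriction to sufficiently small \(\alpha \).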

We have the following corollary of Proposition 2.3 for the total edge length.

Corollary 2.4

Let \(a>0\) and \(\lambda >0\). Then for all \(\alpha < \frac{1}{12a} \log \big ( 1+\frac{1}{72} \big )\), \({\mathbb {E}}[\exp (\alpha |S^\lambda _{\mathrm{D}} \cap B_a|)]<\infty \).

Proof

Since the edges of the Poisson–DT are straight line segments, any edge contributes to \(|S^\lambda _{\mathrm{D}} \cap B_a|\) by at most 2a. Hence,

Now, by Proposition 2.3 applied with \(2a\alpha \) in place of \(\alpha \), we have \({\mathbb {E}}[\exp ((2a\alpha )W^\lambda _a)]<\infty \) as soon as \(2a\alpha <\frac{1}{6} \log \big ( 1+\frac{1}{72} \big )\), that is, \( \alpha < \frac{1}{12a} \log \big ( 1+\frac{1}{72} \big )\), as claimed. \(\square \)

Further, we have the following scaling relation for \(\lambda ,r>0\):

Indeed, since \(X^\lambda \), \(X^{\lambda /r^2}\) are homogeneous PPPs with intensities \(\lambda \), \(\lambda /r^2\), respectively, we have that \(X^{\lambda /r^2} \cap B_r\) equals \(r(X^\lambda \cap B_1)\) in distribution. Thus, \(S_\mathrm{D}^{\lambda /r^2} \cap B_r\) is equal to a rescaled version of \(S_\mathrm{D}^\lambda \cap B_1\) in distribution where the length of each edge is multiplied by r. This implies the statement (28).
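The scaling argument can be spelled out as follows. This is a sketch which assumes, as the paragraph above indicates, that (28) states equality in distribution of the two total edge lengths; the dilation \(x \mapsto rx\) is the only ingredient.

```latex
% Sketch: the dilation x -> r x maps a PPP of intensity \lambda to a PPP of
% intensity \lambda / r^2, commutes with the Delaunay construction, maps
% B_1 onto B_r, and multiplies all lengths by r. Hence
\[
  \big| S_{\mathrm D}^{\lambda/r^2} \cap B_r \big|
  \overset{d}{=} r\, \big| S_{\mathrm D}^{\lambda} \cap B_1 \big| ,
\]
% and consequently, for every real \alpha,
\[
  \mathbb{E}\Big[\exp\big(\alpha\, | S_{\mathrm D}^{\lambda} \cap B_1 |\big)\Big]
  = \mathbb{E}\Big[\exp\big(\tfrac{\alpha}{r}\, | S_{\mathrm D}^{\lambda/r^2} \cap B_r |\big)\Big] .
\]
```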

Proof of Theorem 1.1 part (iii)

Let us fix \(\alpha \). Using (28), it suffices to show that

for some \(a>0\). Thus, we only have to lift Corollary 2.4 from sufficiently small \(\alpha \) to all \(\alpha \). For \(a>0\) let us define

Then, thanks to Corollary 2.4, \({\mathbb {E}}[\exp (\alpha |S_{\mathrm{D}} \cap B_1|)]<\infty \) for all \(\alpha \in (0,\alpha _{\mathrm{c}}(1))\). Let \(r>0\) be sufficiently large such that \( \alpha /r<\alpha _{\mathrm c}(1)\). Note that \(\alpha _{\mathrm{c}}(a) = \frac{1}{a} \alpha _{\mathrm{c}}(1)\). Further, observe that the value \(\alpha _{\mathrm c}(1)\) is independent of the intensity parameter of the underlying PPP. These observations imply that for any \(\lambda '>0\), we have

Choosing \(\lambda =1/r^2\) implies (29) with \(a=r\) everywhere. This concludes the proof. \(\square \)

2.1.4 Line Tessellations: Proof of Theorem 1.1 Part (iv)

Proof

We use the notation of Sect. 1. Since for any line \(l_i\) of \(S_{\mathrm{L}}\) we have \(|l_i \cap B_1| \le 2\), it suffices to show that under the assumption (7) the number of lines of \(S_{\mathrm{L}}\) intersecting with \(B_1\) has exponential moments up to \(\beta _\star \). Now, a line \(l_i\) in \({\mathbb {R}}^2\) intersects with \(B_1\) if and only if its distance parameter \(X_{i,1}\) is at most one in absolute value, independently of its angle parameter \(X_{i,2} \in [0,\pi )\). By the assumption (7), the number of such lines has exponential moments up to \(\beta _\star \). \(\square \)

2.1.5 Manhattan Grids: Proof of Theorem 1.1 for the MG

Proof

Since \(B_1\) is a subset of \(Q_1=Q_1(o)\), it suffices to verify the statement for \(Q_1\) instead of \(B_1\). Note that for any edge E in \(S_{\mathrm{M}}\), either \(E \cap Q_1 = \emptyset \) or \(| E \cap Q_1|=1\). Since \(Y_{\mathrm{v}}\) and \(Y_{\mathrm{h}}\) are independent, it follows that for all \(\alpha >0\), we have

By assumption, \(\#(Y_{\mathrm v} \cap [-1/2,1/2])\) and \(\#(Y_{\mathrm h} \cap [-1/2,1/2])\) have exponential moments for \(\alpha <\beta _{\mathrm{v}}\), respectively \(\alpha <\beta _{\mathrm{h}}\), which implies the existence of exponential moments of \(|S_{\mathrm{M}} \cap Q_1|\) for \(\alpha <\min \{\beta _{\mathrm{v}},\beta _{\mathrm{h}}\}\). \(\square \)
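The factorization behind this argument can be sketched as follows. It assumes, as in the text, that every edge of \(S_{\mathrm{M}}\) meeting \(Q_1\) contributes length exactly 1, and that these edges are indexed by the points of \(Y_{\mathrm{v}}\) and \(Y_{\mathrm{h}}\) in \([-1/2,1/2]\).

```latex
% Sketch: total edge length in Q_1 as a sum of two independent counts.
\[
  |S_{\mathrm M} \cap Q_1|
  = \#\big(Y_{\mathrm v} \cap [-1/2,1/2]\big)
  + \#\big(Y_{\mathrm h} \cap [-1/2,1/2]\big)
  \quad \text{almost surely,}
\]
% so that, by independence of Y_v and Y_h,
\[
  \mathbb{E}\big[\exp(\alpha |S_{\mathrm M} \cap Q_1|)\big]
  = \mathbb{E}\big[\exp\big(\alpha\, \#(Y_{\mathrm v} \cap [-1/2,1/2])\big)\big]\,
    \mathbb{E}\big[\exp\big(\alpha\, \#(Y_{\mathrm h} \cap [-1/2,1/2])\big)\big] .
\]
```

The product is finite exactly when both factors are finite, which explains the threshold \(\min \{\beta _{\mathrm{v}},\beta _{\mathrm{h}}\}\).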

2.1.6 Number of Cells: Proof of Proposition 1.2 Part (ii)

Proof of Proposition 1.2 part (ii)

Note that any edge of the VT, DT or JMT that intersects with \(B_1\) is adjacent to precisely two cells intersecting with \(B_1\), whereas if \(W=0\), then \(V=1\), and thus we have the trivial bound \( V \le 2 W+1\). Thus, for any given \(\alpha >0\), the assertion (11) with \(\alpha /2\) in place of \(\alpha \) follows from the assertion (10) for \(\alpha \). \(\square \)
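The passage from the number of edges to the number of cells amounts to a one-line computation, sketched here for \(\alpha >0\) with \(V\) and \(W\) as above:

```latex
% Uses only V <= 2W + 1 and monotonicity of the exponential function.
\[
  \mathbb{E}\big[\exp\big(\tfrac{\alpha}{2} V\big)\big]
  \le \mathbb{E}\big[\exp\big(\tfrac{\alpha}{2}(2W+1)\big)\big]
  = \mathrm{e}^{\alpha/2}\, \mathbb{E}\big[\exp(\alpha W)\big] .
\]
```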

2.2 Nested Tessellations: Proof of Corollary 1.3 and Proposition 1.4

Proof of Corollary 1.3

We write \(S'\) for a fixed tessellation process that equals \(S_i\), \(i \in J\), in distribution, and we define V according to (12) for the first-layer tessellation \(S_o\), so with the index set J being such that \(S_o\) has cells \((C_i)_{i \in J}\). For \(\alpha ,\beta >0\), let us write

where \(M_\alpha , N_\beta \) are defined as elements of \([0,\infty ]\). Then, we need to show (i) that if \(M_\alpha <\infty \) and \(N_\beta <\infty \) for all \(\alpha ,\beta >0\), then \({\mathbb {E}}[\exp (\gamma |S_\mathrm N \cap B_1|)]<\infty \) holds for all \(\gamma >0\), and (ii) that if there exist \(\alpha ,\beta >0\) such that \(M_\alpha <\infty \) and \(N_\beta <\infty \), then there exists \(\gamma >0\) such that \({\mathbb {E}}[\exp (\gamma |S_\mathrm N \cap B_1|)]<\infty \). First, using Hölder’s inequality, we can separate the first from the second layer process,

For the first factor on the right-hand side, note that we can bound

as an inequality in \([0,\infty ]\). From this, (i) follows immediately. As for (ii), let us assume that \(M_\alpha <\infty \) holds for some \(\alpha >0\) and \(N_\beta <\infty \) holds for some \(\beta >0\). Then, the moment generating function \({\mathbb {R}}\rightarrow [0,\infty ]\), \(\beta \mapsto N_\beta \) is continuous (in fact, infinitely many times differentiable) in an open neighborhood of 0, which implies that \(\lim _{\beta \rightarrow 0} N_{\beta }=N_0=1\). Analogous arguments imply that \(\lim _{\alpha \rightarrow 0} \log M_{\alpha }=0\). Hence, there exists \(\alpha >0\) such that \(N_{\log M_\alpha }<\infty \), which implies (ii). \(\square \)
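The notation \(N_{\log M_\alpha }\) can be motivated by the following conditioning step. It is a sketch under assumptions that are not visible in the text above: that \(M_\alpha ={\mathbb {E}}[\exp (\alpha |S' \cap B_1|)]\), that \(N_\beta ={\mathbb {E}}[\exp (\beta V)]\), and that the second-layer tessellations \((S_i)_{i \in J}\) are i.i.d. copies of \(S'\), independent of \(S_o\). The index set \(J_1\) is introduced only for this illustration.

```latex
% Sketch; J_1 := { i in J : C_i \cap B_1 \neq \emptyset }, so that # J_1 = V.
% Conditionally on the first layer, the second-layer contributions are
% independent, and |S_i \cap C_i \cap B_1| <= |S_i \cap B_1|:
\[
  \mathbb{E}\Big[\exp\Big(\alpha \sum_{i \in J_1} |S_i \cap C_i \cap B_1|\Big)
  \,\Big|\, S_o\Big]
  \le \prod_{i \in J_1} \mathbb{E}\big[\exp(\alpha |S' \cap B_1|)\big]
  = M_\alpha^{V} .
\]
% Taking expectations over the first layer:
\[
  \mathbb{E}\big[M_\alpha^{V}\big]
  = \mathbb{E}\big[\exp(V \log M_\alpha)\big]
  = N_{\log M_\alpha} .
\]
```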

Proof of Proposition 1.4

We verify the statement with \(B_1\) replaced by \(Q_1\) in (1), which suffices thanks to the fact that \(B_1 \subset Q_1\). According to the assumptions of the proposition, let the first-layer tessellation \(S_o\) be a MG satisfying (1) for all \(\alpha >0\), and let us write \(Y^o=(Y_\mathrm v^o, Y_\mathrm h^o)\) for the corresponding pair of point processes on \({\mathbb {R}}\). We can enumerate the points of \(Y_{\mathrm{v}}^o \cap [-1/2,1/2]\) in increasing order as \(Y_{\mathrm{v}}^o \cap [-1/2,1/2]=(P^i)_{i=1}^{N_{\mathrm{v}}}\). Similarly, we can enumerate the points of \(Y_{\mathrm{h}}^o \cap [-1/2,1/2]\) in increasing order as \(Y_{\mathrm{h}}^o \cap [-1/2,1/2]=(Q^j)_{j=1}^{N_{\mathrm{h}}}\). We further write \(P^0=Q^0=-1/2\) and \(P^{N_{\mathrm{v}}+1}=Q^{N_{\mathrm{h}}+1}=1/2\). Note that \(\sum _{i=1}^{N_{\mathrm v}+1} (P^i-P^{i-1}) = \sum _{j=1}^{N_{\mathrm h}+1} (Q^j-Q^{j-1}) = 1\).

Now, the collection of cells of \(S_o\) intersecting \(Q_1\) is given as

where \(C_{i,j}\) is the open rectangle \((P^{i-1},P^{i}) \times (Q^{j-1},Q^{j})\). We write \(S_{i,j}\) for the second-layer tessellation corresponding to \(S_{\mathrm{N}}\) in the cell \(C_{i,j}\) and \(Y^{i,j}=(Y^{i,j}_{\mathrm{v}},Y^{i,j}_{\mathrm{h}})\) for the associated pair of Poisson processes on \({\mathbb {R}}\). Here, there exist \(\lambda _\mathrm{v},\lambda _{\mathrm{h}}>0\) such that for all \(i \in \{ 1,\ldots ,N_\mathrm{v}+1 \}\) and for all \(j\in \{1,\ldots ,N_{\mathrm{h}}+1 \}\), \(Y^{i,j}_\mathrm{v}\) has intensity \(\lambda _{\mathrm{v}}\) and \(Y^{i,j}_{\mathrm{h}}\) has intensity \(\lambda _{\mathrm{h}}\). Now note that for all \(i \in \{ 1,\ldots ,N_{\mathrm{v}}+1 \}\) and for all \(j\in \{1,\ldots ,N_{\mathrm{h}}+1 \}\), all vertical edges of \(S_{i,j}\) intersect \(C_{i,j}\) in a segment of length \(P^i-P^{i-1}\) and all horizontal edges of \(S_{i,j}\) intersect \(C_{i,j}\) in a segment of length \(Q^j-Q^{j-1}\). Thus, we obtain that

By Hölder’s inequality, it suffices to verify the existence of all exponential moments for each of the three terms on the right-hand side separately. The first term has all exponential moments thanks to the assumption of Proposition 1.4. Further, by symmetry between the second and the third term, it suffices to show existence of all exponential moments for one of them; we will consider the second term.

Since for fixed \(i \in \{1,\ldots ,N_{\mathrm v} + 1 \}\), \(\big (\# (Y_{\mathrm v}^{i,j} \cap (Q^{j-1},Q^j))\big )_{j=1,\ldots ,N_{\mathrm h}+1}\) are independent Poisson random variables with parameters summing up to \(\lambda _{\mathrm{v}}\), it follows that their sum \(N_i = \sum _{j=1}^{N_{\mathrm h}+1} \# (Y_{\mathrm v}^{i,j} \cap (Q^{j-1},Q^j))\) is a Poisson random variable with parameter \(\lambda _{\mathrm{v}}\). Further, conditional on \((P^i)_{i=1}^{N_{\mathrm v}}\), \((N_i)_{i=1}^{N_{\mathrm v}+1}\) are independent.

Now, fix \(\alpha >0\), and let \(K_\alpha >0\) be such that for all \(x \in [0,\alpha ]\) we have \(\exp (x)-1 \le K_\alpha x\). Using that \(P^i-P^{i-1} \le 1\) for all i and \(\sum _{i=1}^{N_{\mathrm{v}}+1} (P^i-P^{i-1})=1\), we estimate

which is further equal to

Since the right-hand side is finite, we conclude the proof of the proposition. \(\square \)
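The shape of the final estimate can be sketched as follows. This is a reconstruction rather than the original display: it assumes that, conditionally on the first layer \(Y^o\), the \(N_i\) are independent Poisson random variables with parameter \(\lambda _{\mathrm{v}}\), and it uses the shorthand \(\ell _i = P^i-P^{i-1}\), which is introduced only here.

```latex
% Sketch: \ell_i := P^i - P^{i-1} \in [0,1], with \sum_i \ell_i = 1.
% Poisson Laplace transform, then e^x - 1 <= K_\alpha x with x = \alpha \ell_i:
\[
  \mathbb{E}\Big[\exp\Big(\alpha \sum_{i=1}^{N_{\mathrm v}+1} \ell_i N_i\Big)
  \,\Big|\, Y^o\Big]
  = \prod_{i=1}^{N_{\mathrm v}+1}
    \exp\big(\lambda_{\mathrm v}(\mathrm{e}^{\alpha \ell_i} - 1)\big)
  \le \exp\Big(\lambda_{\mathrm v} K_\alpha \alpha
    \sum_{i=1}^{N_{\mathrm v}+1} \ell_i\Big)
  = \exp(\alpha K_\alpha \lambda_{\mathrm v}) .
\]
% One admissible choice is K_\alpha = (\mathrm{e}^{\alpha} - 1)/\alpha,
% by convexity of the exponential function on [0, \alpha].
```

The bound does not depend on the first layer, so it survives taking expectations.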

2.3 Palm Versions of Tessellations: Proof of Corollary 1.5

We handle each case separately.

Proof of Corollary 1.5 for the Poisson–VT

Corollary 1.5 follows directly from Lemma 2.1 and the Slivnyak–Mecke theorem (see e.g. [11, Section 9.2]). Indeed, since Lemma 2.1 uses no information about the distribution of \(X\) but only the definition of a Voronoi tessellation, it remains true after replacing \(S\) by \(S^*\). Next, the Palm version \(X^*\) of the underlying PPP equals \(X \cup \{ o \}\) in distribution by the Slivnyak–Mecke theorem; in particular, it contains \(o\) almost surely. Thus, using the aforementioned version of Lemma 2.1 (for \(a=b=1\)), we deduce that \(|S^*_{\mathrm{V}} \cap B_1|\) is stochastically dominated by \( 2\pi (\# (X \cap B_4)+1)\). This random variable has all exponential moments, hence the corollary. \(\square \)
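The last step can be made explicit. The following sketch assumes that \(X\) is a homogeneous PPP with intensity \(\lambda >0\); the symbol \(N\) is introduced only for this illustration.

```latex
% N := #(X \cap B_4) is Poisson with mean \lambda |B_4| = 16 \pi \lambda.
\[
  \mathbb{E}\big[\exp\big(2\pi\alpha (N+1)\big)\big]
  = \exp\big( 2\pi\alpha + 16\pi\lambda\,(\mathrm{e}^{2\pi\alpha} - 1) \big)
  < \infty
  \qquad \text{for all } \alpha \in \mathbb{R}.
\]
```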

Proof of Corollary 1.5 for the Poisson–JMT

This is analogous to the proof for the Poisson–VT, where instead of Lemma 2.1 we use Lemma 2.2. \(\square \)

Proof of Corollary 1.5 for the Poisson–DT

Note that the random radius R defined in (26) is invariant under changing X to \(X \cup \{ o \}\) in its definition. Hence, using the Slivnyak–Mecke theorem, one can first verify Proposition 2.3 with \(W_a\) replaced by the number of edges of \(S_{\mathrm{D}}^*\) intersecting with \(B_a\), then one can prove that Corollary 2.4 holds with \(S_{\mathrm{D}}\) replaced by \(S_{\mathrm{D}}^*\) and (28) holds with \(S_{\mathrm{D}}^\lambda \) replaced by its Palm version \((S_\mathrm{D}^\lambda )^*\) for all \(\lambda >0\), and then one can complete the proof of Corollary 1.5 for the Poisson–DT analogously to the final part of the proof of Theorem 1.1 (iii). \(\square \)

Proof of Corollary 1.5 for the Poisson–LT

As already mentioned in Sect. 1, \(S^*\) equals \(S_{\mathrm{L}}(X^{*})\) where \(X^{*} = X \cup \{ (0,\varPhi ) \}\), with \(\varPhi \) being a uniform random angle in \([0,\pi )\) that is independent of X. Thus, \(S^* = S \cup \{ l \}\), where \( l=\{ x \in {\mathbb {R}}^2 :x_1 \cos \varPhi + x_2 \sin \varPhi = 0 \}. \) Since the intersection of l with \(B_1\) has length 2, the corollary in the case of a Poisson–LT follows directly from Theorem 1.1 part (iv). \(\square \)
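In formulas, the reduction reads as follows. This is a sketch which assumes that, almost surely, the added line \(l\) does not coincide with a line of \(S\).

```latex
% The extra line through the origin adds exactly its chord length 2 in B_1.
\[
  |S^* \cap B_1| = |S \cap B_1| + 2 \quad \text{almost surely,}
  \qquad
  \mathbb{E}\big[\exp(\alpha |S^* \cap B_1|)\big]
  = \mathrm{e}^{2\alpha}\, \mathbb{E}\big[\exp(\alpha |S \cap B_1|)\big] .
\]
```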

Proof of Corollary 1.5 for the MG

We verify the statement with \(B_1\) replaced by its superset \(Q_2\). First, let us write \(Y_\mathrm v^*\) and \(Y_\mathrm h^*\) for the Palm versions of \(Y_\mathrm v\) and \(Y_\mathrm h\). Here, \(Y_{\mathrm v}^*\) is defined via the property [10, Section 2.2] that

for any measurable function f from the space of \(\sigma \)-finite counting measures on \({\mathbb {R}}\) to \([0,\infty )\). Then the Palm version \(S_{\mathrm{M}}^*\) is given according to (13). It suffices to verify that \(\#(Y_{\mathrm v}^* \cap [-1,1])\) and \(\#(Y_{\mathrm h}^* \cap [-1,1])\) have all exponential moments. Indeed, using this and the mutual independence of \(Y_{\mathrm v}\), \(Y_{\mathrm h}\), and U, the proof of Corollary 1.5 for the MG can be completed analogously to the proof of Theorem 1.1 part (v) in Sect. 2.1.5. We only consider \(Y_{\mathrm v}^*\); the proof for \(Y_{\mathrm h}^*\) is analogous. For \(\alpha >0\) we have

where in the first inequality of the last line we used Hölder’s inequality. \(\square \)

As above, note that weaker assumptions on the exponential moments of \(Y_{\mathrm v},Y_{\mathrm h}\) only yield exponential moments of \(|S^*_{\mathrm{M}} \cap B_1|\) up to a correspondingly smaller threshold.

Change history

07 April 2021

A Correction to this paper has been published: https://doi.org/10.1007/s10955-021-02743-z

References

1. Bollobás, B., Riordan, O.: Percolation on random Johnson-Mehl tessellations and related models. Probab. Theory Relat. Fields 140(3–4), 319–343 (2008)

2. Calka, P.: An explicit expression for the distribution of the number of sides of the typical Poisson-Voronoi cell. Adv. Appl. Probab. 35(4), 863–870 (2003)

3. Calka, P.: Tessellations. In: Kendall, W.S., Molchanov, I. (eds.) New Perspectives in Stochastic Geometry, pp. 145–169. Oxford University Press, Oxford (2010)

4. Chayes, J.T., Chayes, L., Kotecký, R.: The analysis of the Widom-Rowlinson model by stochastic geometric methods. Commun. Math. Phys. 172(3), 551–569 (1995)

5. Chiu, S., Stoyan, D., Kendall, W., Mecke, J.: Stochastic Geometry and Its Applications. Wiley, New York (2013)

6. Daley, D., Vere-Jones, D.: An Introduction to the Theory of Point Processes. General Theory and Structure, vol. II. Springer, New York (2008)

7. Dereudre, D.: Introduction to the theory of Gibbs point processes. In: Coupier, D. (ed.) Stochastic Geometry, pp. 181–229. Springer, New York (2019)

8. Hilhorst, H.: The perimeter of large planar Voronoi cells: a double-stranded random walk. J. Stat. Mech. 2, L02003 (2005)

9. Hinsen, A., Hirsch, C., Jahnel, B., Cali, E.: The typical cell in anisotropic tessellations. Accepted for publication in IEEE 16th International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks (WiOpt), see also arXiv:1811.09221 (2020)

10. Hirsch, C., Jahnel, B., Cali, E.: Continuum percolation for Cox point processes. Stoch. Process. Appl. 129, 3941–3966 (2019)

11. Last, G., Penrose, M.: Lectures on the Poisson Process. Cambridge University Press, Cambridge (2017)

12. Møller, J.: Lectures on Random Voronoi Tessellations. Lecture Notes in Statistics, vol. 87. Springer, New York (1994)

13. Møller, J.: Random tessellations in \(\mathbb{R}^d\). Adv. Appl. Probab. 21(1), 37–73 (1989)

14. Møller, J., Stoyan, D.: Stochastic geometry and random tessellations. Tech. rep., Department of Mathematical Sciences, Aalborg University (2007)

15. Neuhäuser, D., Hirsch, C., Gloaguen, C., Schmidt, V.: Ratio limits and simulation algorithms for the Palm version of stationary iterated tessellations. J. Stat. Comput. Simul. 84(7), 1486–1504 (2014)

16. Okabe, A., Boots, B., Sugihara, K., Sung, S.: Spatial Tessellations: Concepts and Applications of Voronoi Diagrams. Wiley, New York (2009)

17. Tóbiás, A.: Signal to interference ratio percolation for Cox point processes. ALEA Lat. Am. J. Probab. Math. Stat., to appear, see also arXiv:1808.09857 (2020)

18. van Lieshout, M.: An introduction to planar random tessellation models. Spat. Stat. 1(1), 40–49 (2012)

19. Voss, F.: Spatial Stochastic Network Models – Scaling Limits and Monte–Carlo Methods. PhD thesis, Universität Ulm (2009)

20. Zuyev, S.: Estimates for distributions of the Voronoi polygon’s geometric characteristics. Random Struct. Algorithms 3, 149–162 (1992)

Acknowledgements

The authors thank A. Hinsen, C. Hirsch and W. König for interesting discussions and comments. Moreover, we thank an anonymous reviewer for suggestions regarding simplifications of the proof of Theorem 1.1 (i)-(iii) as well as possible generalizations, which helped to substantially improve the manuscript. Further, we thank two more anonymous reviewers for insightful suggestions that led to further improvements. BJ was supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy – MATH+: The Berlin Mathematics Research Center, EXC-2046/1 – project ID: 390685689. AT was supported by a Phase II scholarship from the Berlin Mathematical School.

Additional information

Communicated by Bruno Nachtergaele.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: with the author(s)’ decision to opt for Open Choice, the copyright of the article changed to © The Author(s) 2021.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jahnel, B., Tóbiás, A. Exponential Moments for Planar Tessellations. J Stat Phys 179, 90–109 (2020). https://doi.org/10.1007/s10955-020-02521-3

DOI: https://doi.org/10.1007/s10955-020-02521-3

Keywords

- Stationary point process

- Poisson point process

- Total edge length

- Number of cells

- Number of edges

- Iterated tessellation