Abstract

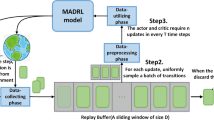

Multi-agent reinforcement learning (MARL) algorithms have made promising progress in recent years by leveraging the centralized training and decentralized execution (CTDE) paradigm. However, existing MARL algorithms still suffer from sample inefficiency. In this paper, we propose a simple yet effective approach, called state-based episodic memory (SEM), to improve sample efficiency in MARL. SEM adopts episodic memory (EM) to supervise the centralized training procedure of CTDE in MARL. To the best of our knowledge, SEM is the first work to introduce EM into MARL. When used for MARL, SEM has lower space and time complexity than state-and-action-based EM (SAEM), which was originally proposed for single-agent reinforcement learning. Experimental results on two synthetic environments and one real environment show that introducing episodic memory into MARL can improve sample efficiency, and that SEM reduces storage and time costs compared with SAEM.
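To make the storage difference between SEM and SAEM concrete, the following is a minimal sketch, not the implementation used in the paper. It assumes an exact-match hash table and the common episodic-control rule of keeping the highest discounted return seen for each key; the names `StateMemory`, `StateActionMemory`, and `discounted_returns` are hypothetical and introduced only for illustration.

```python
from typing import Dict, Hashable, List, Tuple

State = Hashable
JointAction = Tuple[int, ...]
Step = Tuple[State, JointAction, float]  # (global state, joint action, reward)


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Return the discounted return from each time step to the episode end."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


class StateMemory:
    """Hypothetical SEM-style table: one entry per global state."""

    def __init__(self) -> None:
        self.table: Dict[State, float] = {}

    def update(self, episode: List[Step], gamma: float) -> None:
        rets = discounted_returns([r for _, _, r in episode], gamma)
        for (state, _, _), ret in zip(episode, rets):
            # Assumed rule: keep the best return observed from this state.
            self.table[state] = max(self.table.get(state, float("-inf")), ret)


class StateActionMemory:
    """Hypothetical SAEM-style table: one entry per (state, joint action)."""

    def __init__(self) -> None:
        self.table: Dict[Tuple[State, JointAction], float] = {}

    def update(self, episode: List[Step], gamma: float) -> None:
        rets = discounted_returns([r for _, _, r in episode], gamma)
        for (state, action, _), ret in zip(episode, rets):
            key = (state, action)
            self.table[key] = max(self.table.get(key, float("-inf")), ret)


# Two toy episodes that visit the same states with different joint actions.
episode_a: List[Step] = [("s0", (0, 0), 0.0), ("s1", (1, 0), 1.0)]
episode_b: List[Step] = [("s0", (0, 1), 0.0), ("s1", (1, 1), 3.0)]

sem = StateMemory()
saem = StateActionMemory()
for episode in (episode_a, episode_b):
    sem.update(episode, gamma=1.0)
    saem.update(episode, gamma=1.0)

print(len(sem.table), len(saem.table))
```

In this toy run the state-keyed table holds one entry per visited state, while the state-and-action-keyed table holds one entry per visited (state, joint action) pair. Because the number of joint actions grows exponentially with the number of agents, the second table can grow much faster in MARL, which is the cost the abstract refers to.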

Data availability

Not applicable.

Code availability

Not applicable.

Notes

EMC (Zheng et al., 2021) also adopts EM in MARL. However, the arXiv version of SEM (Ma and Li, 2021) came out earlier than EMC. Hence, the idea of adopting EM in MARL was proposed independently by SEM and EMC. Furthermore, EMC does not discuss the different possible ways of applying EM to MARL. In contrast, SEM gives a deep analysis of different ways of applying EM to MARL, which provides insight for designing a suitable EM mechanism for MARL.

V-learning (Jin et al., 2021) also adopts the method of restricting the state and joint action space to the state space to avoid the dependence on the number of joint actions. V-learning is based on decentralized training, but our work is based on centralized training. Furthermore, there is no EM mechanism in V-learning. Our work introduces the EM mechanism and restricts the state and joint action space to the state space when introducing the EM mechanism. In addition, the arXiv version of our SEM (Ma and Li, 2021) came out earlier than V-learning.

SEM is based on global states and is independent of the type of observation function. Hence, our method remains useful regardless of whether the observation function is probabilistic or deterministic.

We use SC2.4.6.2.69232 (the same version as in Samvelyan et al., 2019) instead of the newer version SC2.4.10.

The weighting function is the centrally-weighted function with a hyper-parameter \(\alpha\), which is set to 0.1 on the grid-world game and the two-step matrix game. On SMAC, \(\alpha\) is set to 0.75, following Rashid et al. (2020).

References

Amarjyoti, S. (2017). Deep reinforcement learning for robotic manipulation—the state of the art. arXiv:1701.08878.

Andersen, P., Morris, R., Amaral, D., et al. (2006). The hippocampus book. Oxford: Oxford University Press.

Badia, A. P., Piot, B., Kapturowski, S., et al. (2020). Agent57: Outperforming the Atari human benchmark. In ICML.

Bentley, J. L. (1975). Multidimensional binary search trees used for associative searching. Communications of the ACM, 18(9), 509–517.

Berner, C., Brockman, G., Chan, B., et al. (2019). Dota 2 with large scale deep reinforcement learning. arXiv:1912.06680.

Blundell, C., Uria, B., Pritzel, A., et al. (2016). Model-free episodic control. arXiv:1606.04460.

Cao, Y., Yu, W., Ren, W., et al. (2012). An overview of recent progress in the study of distributed multi-agent coordination. IEEE Transactions on Industrial Informatics, 9(1), 427–438.

Duan, Y., Chen, X., Houthooft, R., et al. (2016). Benchmarking deep reinforcement learning for continuous control. In ICML.

Foerster, J. N., Farquhar, G., Afouras, T., et al. (2018). Counterfactual multi-agent policy gradients. In AAAI.

Hardt, O., Nader, K., & Nadel, L. (2013). Decay happens: The role of active forgetting in memory. Trends in Cognitive Sciences, 17(3), 111–120.

Hernandez-Leal, P., Kartal, B., & Taylor, M. E. (2019). A survey and critique of multiagent deep reinforcement learning. Autonomous Agents and Multi-Agent Systems, 33(6), 750–797.

Jaakkola, T. S., Jordan, M. I., & Singh, S. P. (1994). On the convergence of stochastic iterative dynamic programming algorithms. Neural Computation, 6(6), 1185–1201.

Jin, C., Liu, Q., Wang, Y., et al. (2021). V-learning: A simple, efficient, decentralized algorithm for multiagent RL. arXiv:2110.14555.

Johnson, W. B., & Lindenstrauss, J. (1984). Extensions of Lipschitz mappings into a Hilbert space. Contemporary Mathematics, 26, 189–206.

Kober, J., Bagnell, J. A., & Peters, J. (2013). Reinforcement learning in robotics: A survey. The International Journal of Robotics Research, 32(11), 1238–1274.

Kononenko, I., & Kukar, M. (2007). Machine learning and data mining. Horwood Publishing.

Lample, G., & Chaplot, D. S. (2017). Playing FPS games with deep reinforcement learning. In AAAI.

Lengyel, M., & Dayan, P. (2007). Hippocampal contributions to control: The third way. In NeurIPS.

Lillicrap, T. P., Hunt, J. J., Pritzel, A., et al. (2016). Continuous control with deep reinforcement learning. In ICLR.

Lin, Z., Zhao, T., Yang, G., et al. (2018). Episodic memory deep q-networks. In IJCAI.

Lowe, R., Wu, Y., Tamar, A., et al. (2017). Multi-agent actor-critic for mixed cooperative-competitive environments. In NeurIPS.

Ma, X., & Li, W. (2021). State-based episodic memory for multi-agent reinforcement learning. arXiv:2110.09817.

Melo, F. S. (2001). Convergence of q-learning: A simple proof. Institute of Systems and Robotics, Technical Report, pp. 1–4.

Mnih, V., Kavukcuoglu, K., Silver, D., et al. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529–533.

Oliehoek, F. A., & Amato, C. (2016). A concise introduction to decentralized POMDPs. Berlin: Springer.

Oliehoek, F. A., Spaan, M. T. J., & Vlassis, N. A. (2008). Optimal and approximate q-value functions for decentralized POMDPs. Journal of Artificial Intelligence Research, 32, 289–353.

Powers, R., Shoham, Y., & Vu, T. (2007). A general criterion and an algorithmic framework for learning in multi-agent systems. Machine Learning, 67(1–2), 45–76.

Pritzel, A., Uria, B., Srinivasan, S., et al. (2017). Neural episodic control. In ICML.

Rashid, T., Samvelyan, M., de Witt, C. S., et al. (2018). QMIX: Monotonic value function factorisation for deep multi-agent reinforcement learning. In ICML.

Rashid, T., Farquhar, G., Peng, B., et al. (2020). Weighted QMIX: Expanding monotonic value function factorisation for deep multi-agent reinforcement learning. In NeurIPS.

Samvelyan, M., Rashid, T., de Witt, C. S., et al. (2019). The starcraft multi-agent challenge. In AAMAS.

Shalev-Shwartz, S., Shammah, S., & Shashua, A. (2016). Safe, multi-agent, reinforcement learning for autonomous driving. arXiv:1610.03295.

Silver, D., Huang, A., Maddison, C. J., et al. (2016). Mastering the game of go with deep neural networks and tree search. Nature, 529(7587), 484–489.

Son, K., Kim, D., Kang, W. J., et al. (2019). QTRAN: Learning to factorize with transformation for cooperative multi-agent reinforcement learning. In ICML.

Squire, L. R. (2004). Memory systems of the brain: A brief history and current perspective. Neurobiology of Learning and Memory, 82(3), 171–177.

Sunehag, P., Lever, G., Gruslys, A., et al. (2018). Value-decomposition networks for cooperative multi-agent learning based on team reward. In AAMAS.

Sutton, R. S., & Barto, A. G. (1998). Reinforcement learning: An introduction. MIT Press.

Tan, M. (1993). Multi-agent reinforcement learning: Independent vs. cooperative agents. In ICML.

Vinyals, O., Ewalds, T., Bartunov, S., et al. (2017). Starcraft II: A new challenge for reinforcement learning. arXiv:1708.04782.

Wang, J., Ren, Z., Liu, T., et al. (2020). QPLEX: Duplex dueling multi-agent q-learning. arXiv:2008.01062.

Watkins, C. J. C. H., & Dayan, P. (1992). Technical note: Q-learning. Machine Learning, 8, 279–292.

Wiering, M. A. (2000). Multi-agent reinforcement learning for traffic light control. In ICML.

Yang, Y., Hao, J., Liao, B., et al. (2020). Qatten: A general framework for cooperative multiagent reinforcement learning. arXiv:2002.03939.

Zheng, L., Chen, J., Wang, J., et al. (2021). Episodic multi-agent reinforcement learning with curiosity-driven exploration. In NeurIPS.

Zhu, G., Lin, Z., Yang, G., et al. (2020). Episodic reinforcement learning with associative memory. In ICLR.

Funding

This work is supported by the National Key R&D Program of China (No. 2020YFA0713900), the NSFC Project (No. 61921006, No. 62192783), and the Fundamental Research Funds for the Central Universities (No. 020214380108).

Author information

Contributions

Xiao Ma and Wu-Jun Li conceived of the presented idea. Xiao Ma developed the theory and Wu-Jun Li verified the theory. Xiao Ma and Wu-Jun Li conceived and planned the experiments. Xiao Ma carried out the experiments. Xiao Ma wrote the manuscript in consultation with Wu-Jun Li, and Wu-Jun Li modified the manuscript.

Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Additional information

Editor: Tong Zhang.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ma, X., Li, WJ. State-based episodic memory for multi-agent reinforcement learning. Mach Learn 112, 5163–5190 (2023). https://doi.org/10.1007/s10994-023-06365-2

DOI: https://doi.org/10.1007/s10994-023-06365-2