Abstract

Time series subject to regime shifts have attracted much interest in domains such as econometrics, finance or meteorology. For discrete-valued regimes, models such as the popular Hidden Markov Chain (HMC) describe time series whose state process is unknown at all time-steps. Sometimes, time series are annotated. Thus, another category of models handles the case with regimes observed at all time-steps. We present a novel model which addresses the intermediate case: (i) state processes associated with such time series are modelled by Partially Hidden Markov Chains (PHMCs); (ii) a multivariate linear autoregressive (MLAR) model drives the dynamics of the time series, within each regime. We describe a variant of the expectation maximization (EM) algorithm devoted to PHMC-MLAR model learning. We propose a hidden state inference procedure and a forecasting function adapted to the semi-supervised framework. We first assess inference and prediction performances, and analyze EM convergence times for PHMC-MLAR, using simulated data. We show the benefits of using partially observed states as well as a fully labelled scheme with unreliable labels, to decrease EM convergence times. We highlight the robustness of PHMC-MLAR to labelling errors in inference and prediction tasks. Finally, using turbofan engine data from a NASA repository, we show that PHMC-MLAR outperforms or largely outperforms other models: PHMC and MSAR (Markov Switching AutoRegressive model) for the feature prediction task, PHMC and five out of six recent state-of-the-art methods for the prediction of machine remaining useful life.

1 Introduction

Time series are widely present in many domains such as industry, energy, meteorology, e-commerce, social networks or health. They represent the temporal evolution of systems and help us to understand their temporal dynamics and perform short, medium or long-term predictions. A major research line has been dedicated to time series analysis. In this line, exponential smoothing models (Gardner & Everette, 2006; Bergmeir et al., 2016), Box and Jenkins models (Box et al., 2015) and nonlinear autoregressive neural networks (Yu et al., 2014; Wang et al., 2019; Noman et al., 2020) are essentially devoted to forecasting. In addition to the forecasting goal, Regime-Switching AutoRegressive models (Ubilava & Helmers, 2013; Hamilton, 1990) also make it possible to discover hidden behaviors of such systems.

When the studied system is stationary, that is, when its behavior is time-independent, the Linear AutoRegressive (LAR) model is a framework widely used to capture the autoregressive dynamics of the corresponding time series (Wold, 1954; Degtyarev and Gankevich, 2019). The LAR model is a simple linear regression model in which predictors are lagged values of the current value in the time series. However, many real-life systems are subject to changes in behaviors: for instance in econometrics, we distinguish between recession and expansionary phases; in meteorology, anticyclonic conditions alternate with low pressure conditions. These systems are commonly referred to as regime-switching systems, where each regime corresponds to a specific behavior. Each time-step is associated with some state, amongst those allowed for the system. Regime-switching system modelling is achieved in two steps: (i) the state process modelling, which captures how states are generated, and (ii) the modelling of the autoregressive dynamics of the time series within each regime. In the latter step, a simple autoregressive framework such as the LAR model can be used. Generally, in step (i), the state process is modelled by a discrete-valued Markov process. In the current state-of-the-art literature, two categories of models can be distinguished.

In Hidden Regime-Switching AutoRegressive (HRSAR) models, the state process is hidden and is modelled by a Hidden Markov Process (HMP). This category of models was introduced by Hamilton (1989) in the context of the analysis of the United States Gross National Product time series. Several variants and extensions were subsequently designed.

In Observed Regime-Switching AutoRegressive (ORSAR) models, the state process is either observed or derived a priori. In the latter case, a clustering algorithm is used before fitting the model, to extract the regimes. The clustering may either rely on endogenous variables (i.e., the variables whose dynamics is observed through the time series) or on exogenous variables supposed to drive regime-switching. The works of Flecher et al. (2010) on the one hand, and of Bessac et al. (2016) on the other hand, illustrate the application of these models to meteorological time series.

When the state process is partially observed, which means that the system state is known at some random time-steps and unknown for the remaining time-steps, ORSAR models cannot be directly applied while HRSAR models are suboptimal in the sense that the observed states cannot be included.

Industry is a major potential supplier of such data. Many machines are monitored continuously, through multiple sensors. In parallel, technical monitoring may be carried out episodically, by humans, during expert or technician visits; these visits result in partial annotations on the state of the machine. Modelling adapted to this type of partially annotated multivariate time series is a prerequisite for predicting the evolution of the extent of wear of a machine and anticipating maintenance operations, or even avoiding accidents. The same needs have been identified for machines used in transport (trains, planes, ships). Monitoring the ageing of engineering structures (bridges, railways) can also combine the continuous collection of data from sensors and episodic assessments of the state of the structures. In a different register, manual annotation of time series data (e.g., video sequences, audio sequences) is a time-consuming task. It is very often the case that only a partial annotation is available. Automating the annotation of latent states, seeking to leverage the partial annotation, is therefore appealing. Thus, one can increase the amount of fully labelled data, upstream of a fully supervised machine learning task such as automated speech recognition, human gesture analysis, human activity recognition, segmentation of time series. Again, the same situation can be found in software reliability modelling. For instance, time intervals between bug occurrences can be governed by a Markov chain (Bharathi & Selvarani, 2020). The latter may be considered as partially hidden, since the debugging state is an observable state. Partial annotation also corresponds to a frequently encountered situation in biology research. For instance, in de novo detection of biologically functional signals in proteins, wet-lab experiments are expected to provide guidance for annotating regions of proteins as potentially harboring such functional signals. However, experimental limitations may prevent full annotation into “signal” and “no signal” states. In this case, to avoid additional costly and time-consuming experiments, a model allowing partial annotation would be appropriate.

To overcome the ORSAR and HRSAR limitations, in this work, we propose a novel Regime-Switching AutoRegressive model that capitalizes on the observed states while the hidden states are inferred. We consider a special case of Markov process henceforth named Markov Chain. Our model is referred to as the Partially Hidden Markov Chain Multivariate Linear AutoRegressive (PHMC-MLAR) model. The innovative contributions brought by this model are threefold. First, the PHMC-MLAR model is a flexible parametric model that supplies a unification of HRSAR and ORSAR models when the state process is a Markov Chain. Thus, when the state process is fully observed, PHMC-MLAR is reduced to ORSAR. Conversely, when the state process is fully hidden, PHMC-MLAR instantiates as HRSAR. Second and third, our model can be seen as both an extension of the seminal work of Scheffer and Wrobel (2001) around the Partially Hidden Markov Chain (PHMC) and an extension of the seminal work of Hamilton (1989) around the Markov-switching autoregressive (MSAR) model. On the one hand, the PHMC-MLAR model locally adds autoregressive features to a (global) PHMC framework; this innovation clearly extends the PHMC proposal to the domain of time series modelling. On the other hand, PHMC-MLAR adds a PHMC feature to the MSAR framework, to switch to a semi-supervised global framework. Finally, beyond the unification aspect, we contribute to the machine learning literature through designing the underlying algorithmic machinery dedicated to effective and efficient PHMC-MLAR model training. We consider multivariate time series.

The main contributions of this paper are as follows:

1. We propose a new Regime-Switching AutoRegressive model that integrates the states observed at some random time-steps. This model, referred to as PHMC-MLAR, provides a unification of HRSAR and ORSAR models when the state process is modelled by a Markov Chain (MC).

2. The PHMC-MLAR proposal extends two existing models of the literature, the PHMC and MSAR models. On the one hand, PHMC-MLAR incorporates local MSAR models into a global PHMC framework, to model time series. On the other hand, PHMC-MLAR replaces the Markov chain used as the global switching mechanism of the MSAR model, by a semi-supervised global framework (PHMC).

3. We propose a variant of the Expectation-Maximization (EM) algorithm that allows to learn the parameters of our model.

4. Inference on hidden states is carried out by a variant of the Viterbi algorithm, adapted to take into account the observed states.

5. Regarding the time series forecasting task, a prediction function is proposed. We distinguish between the case where the system state is known at forecast horizons from the case where it is latent.

The Baum-Welch algorithm is the instantiation of the EM algorithm tailored for hidden Markov models (HMMs) (Baum et al., 1970). It relies on the popular forward-backward procedure, to calculate the statistics of the Expectation step. Scheffer and Wrobel (2001) adapted this procedure to their PHMC proposal and derived a backward-forward-backward procedure. However, these authors deal with observations that are independent and categorical. Instead, we extended this PHMC framework to handle observations that are continuous time series; we therefore thoroughly revisited the backward-forward-backward procedure to incorporate the autoregressive feature.

In addition, we derived an estimation procedure to infer the unknown states in the state sequence of a discrete-valued Markov process: the introduction of partial knowledge on states, and that of the autoregressive feature, compelled us to customize the well-known Viterbi algorithm (Forney, 1973).

The ability of our model to infer the hidden states and to make accurate predictions on time series, even when the observed states are unreliable, was investigated through experiments performed on synthetic data. Our work underlines the benefits of using partially observed states to decrease EM convergence times. This performance is obtained with no or practically no impact on the quality of hidden state inference, as from labelling percentages around 20–30\(\%\); the prediction accuracy is also preserved above such percentage thresholds. For instance, for a training set of 100 sequences, with \(70\%\) labelled states, the EM algorithm converges after 22 iterations on average against 62 on average for the unsupervised case. Moreover, performing fully supervised training with a proportion of ill-labelled states is also beneficial for EM convergence. For example, given a training set of size 100 annotated with a \(70\%\)-reliable labelling function, the EM algorithm converges after a single iteration against 67 iterations for the unsupervised case. This offers promising prospects to enhance model selection for the PHMC-MLAR model. Further experiments also show the ability of our variant of the Viterbi algorithm to infer hidden states in partially-labelled sequences. In addition, while assessing the impact on predictions generated by incorporating labelled states in the training sequences, we also compared the situations where all states are unknown at forecast horizons to the situations where all states are known. Prediction errors are subdued at all horizons in the latter case (by \(44\%\) on average), but contrasted horizons are still evidenced with low (respectively high) scores as in the former case. The contrast is kept constant whatever the percentage of observed states in the training set. Besides, we also point out the robustness of our model to labelling errors in the inference task, when large training datasets and moderate labelling error rates are considered. Finally, the latter experiment highlights the remarkable robustness to labelling errors in the prediction task, over the whole range of error rates.

Finally, the performance of the PHMC-MLAR model was evaluated in the context of a practical application to machine health prognostics. For this purpose, we conducted experiments on turbofan engine data from a NASA repository. Considering short, medium and long-term feature forecasting, we first show that PHMC-MLAR and MSAR models obtain comparable accuracies at the short-term horizon (\(h=5\)), whereas PHMC-MLAR presents higher forecast accuracies than MSAR at medium and long-term forecast horizons (\(h = 10, 20, 30\)). In comparison with the PHMC model, our model achieves much better performance (whatever the horizon). These results show the relevance of including an autoregressive model within each regime, as suggested in this work. Second, we evaluated the performance of PHMC-MLAR in predicting the remaining useful life (RUL) of machines. Our results show that our proposal outperforms PHMC and five of six recent state-of-the-art RUL prediction methods, including four artificial intelligence-based methods.

This paper is organized as follows. Related work is reviewed in Sect. 2. Section 3 describes the PHMC-MLAR model. Then a learning algorithm is derived in Sect. 4, to estimate the model parameters. Inference of the hidden states is addressed in Sect. 5. Section 6 presents the time series forecasting procedure. Section 7 depicts the experimental protocol that drove our experimentations on synthetic data, and discusses the results obtained. Section 8 focuses on a practical application to machine health prognostics. Therein, we depict the experimental protocol applied to realistic datasets composed of turbofan engine degradation trajectories, and we discuss the results observed. Section 9 concludes this paper and opens up future directions of research.

2 Related work

This section first highlights the links between our proposal, PHMC-MLAR, and the most closely related contributions of the literature. The PHMC-MLAR combines a variant of the Hidden Markov Model (HMM), namely the Partially Hidden Markov Chain (PHMC), with the Linear AutoRegressive (LAR) model. The rest of this section reviews the two main models that compose the hybrid model proposed.

As mentioned in the introduction, the PHMC-MLAR model unifies the HRSAR and ORSAR frameworks. However, the common thread between these latter frameworks is the implication of dependencies that drive the local dynamics within each regime. Therefore, the contributions of the literature most closely related to PHMC-MLAR are also characterized by various local dynamics.

Several models closely related to HRSAR were proposed in the literature. The MSAR model (Markov-switching AutoRegressive model) designed by Hamilton (1989) combines ARIMA (AutoRegressive Integrated Moving Average) models with an HMM, to characterize changes in the parameters of an autoregressive process. The targeted application motivating the MSAR model was economic analysis: the switch between fast growth and slow growth is governed by the outcome of the Markov process.

Further, Filardo (1994) incorporated time-varying transition probabilities between regimes in the MSAR model. For instance, the resulting model was subsequently used to reproduce the cyclic patterns existing in climatic variables (Cardenas-Gallo et al., 2016). In parallel, Hamilton’s MSAR model was also extended into a general dynamic linear model combined with Markov-switching (Kim, 1994). Finally, the work of Michalek and co-authors focused on an HRSAR model that integrates HMM with Moving Average (MA) models (Michalek et al., 2000). In the same work, the parameter estimation approximation thus derived was generalized to deal with AutoRegressive Moving Average (ARMA) hybridized with HMM. Simulations of electrophysiological recordings showed that the derived estimators recover the true dynamics where the standard HMM fails. The model generalized by Michalek and collaborators, to integrate HMM with ARMA, was also applied to model human activity as time signals for early activity recognition (Li & Fu, 2012).

More recently, a nonhomogeneous HRSAR model was developed to model wind time series (Ailliot et al., 2015). The aim was to acknowledge that the probability of switching from cyclonic conditions to anticyclonic conditions between time-steps t and \(t+1\) depends on the wind conditions at time-step t at some given location off the French Brittany coast. A nonhomogeneous MSAR (NHMSAR) model was thus designed for this purpose.

To our knowledge, the investigations around ORSAR models are limited to the recent work of Bessac et al. (2016) which was applied to wind time series. Therein, observed regimes are derived by running a clustering procedure on the variables under study or on extra variables. This identifies the states, all distinct from one another, in which the data are homogeneous. Besides comparing the ORSAR models derived from various clustering procedures, Bessac and collaborators also compare the respective merits of HRSAR and ORSAR models on real-world and simulated data.

2.1 Partially Hidden Markov Chain—PHMC(K)

Hidden Markov models (HMMs) have been successfully used in such domains as natural language processing (Morwal et al., 2012), handwriting recognition (Mouhcine et al., 2018), speech emotion recognition (Schuller et al., 2003), human action recognition (Berg et al., 2018) or renewable power prediction (Ghasvarian Jahromi et al., 2020), to name but a few.

HMM(K) is a flexible probabilistic framework able to model complex hidden-regime-switching systems. It exactly possesses K states where each state drives the specific behavior of an observed variable. This variable is itself modelled through a usual probability law such as a Gaussian law, for example. The system state process, which specifies the ongoing behavior of the latter observed variable at each time-step, is fully latent. Therefore, state inference is the main purpose of HMM models: the goal is to learn about the latent sequence of states from the observed behavior. This task is generally driven by Maximum A Posteriori (MAP) estimation implemented through the Viterbi algorithm (Forney, 1973). Importantly, the HMM framework satisfies the Markov property, which stipulates that the conditional probability distribution of the hidden state at time-step t, given the hidden states at previous time-steps \(t' < t\), only depends on the hidden state at time-step \(t-1\). Besides, the observed behavior at time-step t solely depends on the hidden variable at time-step t.

When dealing with systems in which the state process is partially observed or known, applying HMM would result in an important information loss in the sense that the observed states are ignored. To overcome this limitation, Scheffer and Wrobel (2001) have introduced the Partially Hidden Markov Chain (PHMC), which integrates partially observed states in the modelling process. The authors have proposed an active learning algorithm in which the user is asked to label difficult observations identified during model learning. More recently, Ramasso and Denoeux (2013) have proposed a model that makes use of partial knowledge on HMM states. These authors have modelled the partial knowledge by a belief function that specifies the probability of each state at each time-step. The works carried out by Ramasso and Denoeux (2013) have shown that the use of partial knowledge on states accelerates HMM model learning.

2.2 Linear AutoRegressive model—LAR(p)

An observed time series is considered to be one realization of a stochastic process. Time series analysis and forecasting thus require that the underlying stochastic process be modelled. The linear autoregressive (LAR) model is a stochastic model widely used for this purpose. A LAR model of order p is a linear model in which the regressors are the p past values of the variable, hence the term autoregression. Although the LAR model is conceptually simple and easy to learn, it can only be applied to stationary time series. When this condition is violated, model misspecification issues arise. Nonetheless, it is well known that a LAR(p) process with autoregressive coefficients \(\phi _1, \dots , \phi _p\) is (causally) stationary if and only if all the roots of its characteristic polynomial \(1 - \phi _1 z - \dots - \phi _p z^p\) lie outside the unit circle; for a LAR(1) process, this amounts to the autoregressive coefficient being less than one in modulus. The presence of a unit root is assessed through unit root tests (Phillips & Perron, 1988; Dickey & Fuller, 1979; Kwiatkowski et al., 1992).
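The stationarity condition can be checked numerically. The sketch below is illustrative only and is not taken from the paper: it assumes a univariate LAR(p) and uses the standard equivalence between the characteristic roots lying outside the unit circle and the eigenvalues of the companion matrix lying strictly inside it. The function name `is_stationary` is hypothetical.

```python
import numpy as np

def is_stationary(phi):
    """Check stationarity of a univariate LAR(p) with coefficients phi = (phi_1, ..., phi_p).

    Illustrative helper (not from the paper): the process is stationary when all
    eigenvalues of the companion matrix have modulus strictly below one.
    """
    p = len(phi)
    companion = np.zeros((p, p))
    companion[0, :] = phi                 # first row holds the autoregressive coefficients
    companion[1:, :-1] = np.eye(p - 1)    # sub-diagonal shifts the window of lagged values
    return bool(np.max(np.abs(np.linalg.eigvals(companion))) < 1.0)
```

For instance, `is_stationary([0.6, -0.3])` holds, whereas `is_stationary([1.2, -0.1])` does not, since one companion eigenvalue exceeds one in modulus.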

In the LAR(p) model, the hyper-parameter p denotes the number of past observations to include in the prediction at time-step t. Two alternative methods are generally used to fix the value of p. The first one relies on a well-known property of the partial autocorrelation function of the LAR(p) model: the partial autocorrelation becomes null from lag \(p+1\). The second method, more general, tests a range of candidate values for p, then selects the value that minimizes a model selection criterion such as the Bayesian information criterion (BIC) or the Akaike’s information criterion (AIC).
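The second method can be sketched as follows for a univariate series. This is a minimal illustration under stated assumptions, not the procedure used in the paper: the LAR(p) is fitted by ordinary least squares, the noise is taken to be Gaussian, all candidate orders are fitted on the same effective sample, and the names `fit_ar_ols`, `bic` and `select_order` are hypothetical.

```python
import numpy as np

def fit_ar_ols(x, p, start):
    """Fit a univariate LAR(p) by least squares on x[start:], with start >= p."""
    x = np.asarray(x, dtype=float)
    n = len(x) - start
    # Column i holds the lag-i values aligned with the targets x[start:].
    lags = [x[start - i: len(x) - i] for i in range(1, p + 1)]
    design = np.column_stack([np.ones(n)] + lags)
    target = x[start:]
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    resid = target - design @ coef
    sigma2 = float(resid @ resid) / n      # Gaussian MLE of the noise variance
    return coef, sigma2, n

def bic(sigma2, n, p):
    """BIC of a Gaussian LAR(p): intercept, p coefficients and one variance."""
    loglik = -0.5 * n * (np.log(2.0 * np.pi * sigma2) + 1.0)
    return -2.0 * loglik + (p + 2) * np.log(n)

def select_order(x, max_p):
    """Return the order in 1..max_p with the smallest BIC, plus all scores."""
    scores = {}
    for p in range(1, max_p + 1):
        _, sigma2, n = fit_ar_ols(x, p, start=max_p)  # same sample for every p
        scores[p] = bic(sigma2, n, p)
    return min(scores, key=scores.get), scores
```

Fitting every candidate on the same sample (the first `max_p` values are only used as lags) keeps the criteria comparable across orders; replacing the `log(n)` penalty by 2 would give the AIC instead.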

3 The PHMC-MLAR model

In this section, we explain how we have created a new regime-switching model called PHMC-MLAR, based on the PHMC and LAR models. The section first introduces some notations. Then Sect. 3.2 describes our proposal to model the state process by a PHMC model. Section 3.3 details how, within each regime, the dynamics of the observed variable is governed by a LAR model. Thus, the bivariate process follows a PHMC-MLAR model. A final subsection briefly discusses hyper-parameter selection in Markov-switching models.

To note, the fundamental difference between our model and the two other approaches identified in the same line (Scheffer & Wrobel, 2001; Ramasso & Denoeux, 2013) is the autoregressive dynamics of our model (see Fig. 1).

Fig. 1 The conditional independence graphs of the Partially Hidden Markov Chain (PHMC) model and of the Partially Hidden Markov Chain Multivariate Linear AutoRegressive (PHMC-MLAR) model, when the LAR order p is equal to 2. (a) PHMC model. (b) PHMC-MLAR model. Observed states are shown in dark shade whereas hidden states are shown in light shade. When a state is observed, \(\sigma _t\) is reduced to a singleton (Color figure online)

3.1 Notations

- Symbol \(:=\) stands for the definition symbol.

- \(\varvec{1}_A: \Omega \rightarrow \{0,1\}\) denotes the indicator function that indicates membership of an element in a subset A of \(\Omega\). As from now, \(\varvec{1}_A\) will be noted \(\varvec{1}_{\{ x \in A \}}\).

- \(\{X_t\}_{t \in {\mathbb {Z}}}\) denotes a multidimensional stochastic process with \(X_t \in {\mathbb {R}}^d\). By convention, \(X_{1-p}^0\) denotes the p initial values of the time series \(\{X_t\}\). For each \(t \ge 1\), \(X_{t-p}^{t-1}\) stands for the subseries \(\{ X_{t-p}, X_{t-p+1}, \dots , X_{t-1} \}\).

- \(\mathbf {x} = x_{1}^T\) represents an observed multivariate time series with \(\mathbf {x}_0=x_{1-p}^0\) the corresponding initial values.

- \(\{ S_t \}_{t \in {\mathbb {N}}^*}\) denotes a state process depicting the temporal evolution of a regime-switching system where the set of states is \(\mathbf {K} = \{1, 2, \dots , K\}\). In this paper, states are instantaneous, whereas a regime is a succession of identical states. We denote \(\sigma _t\) (\(\subseteq \mathbf {K}\)) the set of possible states at time-step t with \(\sigma _t = \mathbf {K}\) when \(S_t\) is latent, and \(\sigma _t = \{k\}\) when \(S_t = k\), that is, when the \(k^{\mathrm{th}}\) state is observed at time-step t.

- \({\mathcal {M}}_p({\mathbb {R}})\) is the set of square matrices of order p with real coefficients.

- Symbols in bold represent nonscalar variables (e.g., vectors).

3.2 Modelling the state process

Let \(\{ (S_t, \sigma _t) \}\) be the state process, which is supposed to be partially observed. Recall that if \(S_t=k\), i.e. the \(k^{\mathrm{th}}\) state has been observed at time-step t, then \(\sigma _t = \{k\}\). At the other extreme, \(\sigma _t = \mathbf {K}\) for a (fully) latent state \(S_t\). We draw the reader’s attention to the flexibility of the model: an intermediate case between observed (\(\{k\}\)) and latent (\(\mathbf {K}\)) would be specified by \(\sigma _t \subset \mathbf {K}\).

Let \({\mathcal {R}} = \{ k \in \mathbf {K} \,|\, \exists \, t \in {\mathbb {N}}^*, \sigma _t = \{k\} \}\) be the set of states that have been observed at least once. We have \(|{\mathcal {R}}| \le K\) where K is the total number of states. Thus, \(K - |{\mathcal {R}}|\) states are undetermined and depict the hidden dynamics of the system under study. It has to be underlined that it is difficult (if not sometimes impossible) to associate a physical interpretation with the hidden dynamics. Such an interpretation requires strong knowledge of the studied system.

In the PHMC-MLAR model, \(\{(S_t, \sigma _t)\}\) is modelled by a K-state PHMC, parametrized by transition probabilities

\[a_{i,j} = P(S_t = j \,|\, S_{t-1} = i), \quad a_{i,j} \in [0, 1], \quad \sum _{j=1}^K a_{i,j} = 1,\]

and stationary law \(\pi _i = P(S_1 = i), \quad \pi _i \in [0, 1], \, \sum _{i=1}^K \pi _i = 1\).

Let \(\varvec{\theta }^{(S)} = ( (\pi _i)_{i=1,...,K}, (a_{i,j})_{i,j=1,...,K})\) denote the set of parameters associated with the PHMC.
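To make the role of the sets \(\sigma _t\) concrete, the sketch below decodes the most probable state path compatible with them. It is only an illustration and not the inference procedure of the paper: it assumes states indexed from 0, a first-order chain with parameters \(\pi\) and \(a_{i,j}\), and per-step log-scores `log_emis` supplied by the caller (a placeholder for the regime-specific observation model). The name `constrained_viterbi` is hypothetical.

```python
import math

NEG_INF = float("-inf")

def safe_log(p):
    """log(p), with log(0) mapped to -inf instead of raising."""
    return math.log(p) if p > 0 else NEG_INF

def constrained_viterbi(pi, A, log_emis, sigma):
    """Most probable state path restricted to the allowed sets sigma[t].

    pi[i]          : P(S_1 = i)
    A[i][j]        : P(S_t = j | S_{t-1} = i)
    log_emis[t][k] : log-score of the observation at step t under state k
    sigma[t]       : allowed states at step t ({k} if observed, all states if latent)
    """
    T, K = len(log_emis), len(pi)
    delta = [[NEG_INF] * K for _ in range(T)]   # best log-score of a path ending in k
    back = [[0] * K for _ in range(T)]          # argmax predecessor for backtracking
    for k in sigma[0]:
        delta[0][k] = safe_log(pi[k]) + log_emis[0][k]
    for t in range(1, T):
        for j in sigma[t]:                      # states outside sigma[t] stay at -inf
            best_i = max(range(K), key=lambda i: delta[t - 1][i] + safe_log(A[i][j]))
            delta[t][j] = delta[t - 1][best_i] + safe_log(A[best_i][j]) + log_emis[t][j]
            back[t][j] = best_i
    last = max(range(K), key=lambda k: delta[T - 1][k])
    path = [last]
    for t in range(T - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path
```

With `sigma[t]` equal to the full state set everywhere, this reduces to the usual Viterbi recursion; with singletons everywhere, it simply returns the observed path.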

3.3 Modelling the dynamics under each state

For each state \(k \in \mathbf {K}\), \(\{X_t \in {\mathbb {R}}^d\}\) is supposed to be stationary and modelled by a p-order LAR process defined as follows:

\[X_t \,|\, \{S_t = k\} \; := \; \phi _{0,k} + \sum _{i=1}^p \phi _{i,k} \, X_{t-i} + \epsilon _{t,k}, \qquad (1)\]

where \(p \ge 1\) is the number of past values of \(X_t\) to be used in modelling, k is the state at time-step t, \(\phi _{0,k} \in {\mathbb {R}}^d\) and \((\phi _{i,k} \in {\mathcal {M}}_d({\mathbb {R}}))_{i=1, \dots , p}\) are respectively the vector of intercepts and the matrices of autoregressive coefficients associated with the \(k^{\mathrm{th}}\) state. The error terms \(\epsilon _{t,k} \in {\mathbb {R}}^d\) are independent and identically distributed with zero mean and covariance matrix \(h_k \in {\mathcal {M}}_d({\mathbb {R}})\).

Equation 1 defines the relationships between each dimension of \(X_t\) (a univariate time series) and both the p lagged values for the \(d - 1\) other dimensions and its own p past values. The example below illustrates this relationship in the case where \(d=3\) and \(p=2\).
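As an illustration, and assuming that Eq. 1 takes the usual vector autoregressive form \(X_t = \phi _{0,k} + \sum _{i=1}^p \phi _{i,k} X_{t-i} + \epsilon _{t,k}\) under state k (which agrees with the conditional mean given later in this section), the first dimension can be expanded as follows for \(d=3\) and \(p=2\). The component notations \(X_t^{(u)}\), \(\phi _{0,k}^{(u)}\), \(\phi _{i,k}^{(u,v)}\) and \(\epsilon _{t,k}^{(u)}\) are introduced here for illustration only.

```latex
\begin{aligned}
X_t^{(1)} = {} & \phi_{0,k}^{(1)} \\
  & + \phi_{1,k}^{(1,1)} X_{t-1}^{(1)} + \phi_{1,k}^{(1,2)} X_{t-1}^{(2)} + \phi_{1,k}^{(1,3)} X_{t-1}^{(3)} \\
  & + \phi_{2,k}^{(1,1)} X_{t-2}^{(1)} + \phi_{2,k}^{(1,2)} X_{t-2}^{(2)} + \phi_{2,k}^{(1,3)} X_{t-2}^{(3)} \\
  & + \epsilon_{t,k}^{(1)}
\end{aligned}
```

The second and third dimensions are obtained in the same way from rows 2 and 3 of \(\phi _{1,k}\) and \(\phi _{2,k}\). Each dimension thus depends on six lagged values, and the mean of each state involves \(3 + 2 \times 9 = 21\) parameters.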

It is important to underline that Eq. 1 is not defined for the p initial values denoted by \(X_{1-p}^0\). These initial values are modelled by the initial law \(g_0(x_{1-p}^0; \varvec{\psi })\) parametrized by \(\varvec{\psi }\). For instance, \(g_0\) can be a multivariate normal distribution \({\mathcal {N}}_{d \times p}(\mathbf {m}, \mathbf {V})\) where \(\mathbf {m} \in {\mathbb {R}}^{d \times p}\) is the mean and \(\mathbf {V} \in {\mathcal {M}}_{d \times p}({\mathbb {R}})\) is the variance-covariance matrix.

Note that the law of \(\{\epsilon _{t,k}\}\) and the conditional distribution \(P(X_t|X_{t-p}^{t-1}\), \(S_t=k; \, \phi _{0,k}, \, \phi _{1,k}\), \(..., \phi _{p,k}, h_k)\) belong to the same family. Usually, Gaussian white noises are used. In this case, the conditional distribution is Gaussian too, with mean and covariance matrix respectively equal to \(\phi _{0,k} + \sum _{i=1}^p \phi _{i,k} X_{t-i}\) and \(h_k\).
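In the Gaussian case, the conditional log-density of \(X_t\) given its p lagged values and the state k can be evaluated as sketched below. This is an illustrative helper rather than the authors' implementation; the function name and argument layout (`lags[i-1]` holding \(X_{t-i}\), `phis[i-1]` holding \(\phi _{i,k}\)) are assumptions.

```python
import numpy as np

def mlar_conditional_logpdf(x_t, lags, phi0, phis, h):
    """Gaussian log-density of x_t given its lags under one state.

    x_t  : (d,) current value
    lags : sequence of p arrays of shape (d,), lags[i-1] = X_{t-i}
    phi0 : (d,) intercept vector of the state
    phis : sequence of p arrays of shape (d, d), phis[i-1] = phi_{i,k}
    h    : (d, d) covariance matrix of the error term of the state
    """
    mean = phi0 + sum(phi @ lag for phi, lag in zip(phis, lags))
    diff = x_t - mean
    d = x_t.shape[0]
    _, logdet = np.linalg.slogdet(h)           # stable log-determinant of h
    quad = diff @ np.linalg.solve(h, diff)     # diff^T h^{-1} diff without inverting h
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + quad)
```

Evaluating this function for every state k at a given time-step yields the per-state scores that an E step or a decoding procedure would combine with the transition probabilities.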

Let \(\varvec{\theta }^{(X,k)} = (\phi _{0,k}, \, \phi _{1,k}, ..., \phi _{p,k}, h_{k})\) be the parameters of the LAR(p) process associated with the \(k^{\mathrm{th}}\) state. The law of \(\{X_t\}\) is fully parametrized by \(\varvec{\theta }^{(X)} = (\varvec{\theta }^{(X,k)})_{k=1, ...,K}\) and \(\varvec{\psi }\).

To note, as in (Scheffer & Wrobel, 2001) and (Ramasso & Denoeux, 2013), the PHMC-MLAR model assumes that the same order p is shared by all \(| \mathbf {K} |\) LAR processes associated with the states in \(\mathbf {K}\).

It should also be highlighted that the state \(S_t = k\) conditioning a LAR process of order p on \(X_t\) does not require that the p lagged values \(X_{t-p}^{t-1}\) be observed under the same state k. That is, the PHMC-MLAR model may perfectly well switch from regime to regime, and even from state to state, while keeping memory of values determined by previous regimes or states.
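This memory across regimes is easy to see in a simulation. The sketch below is a hedged illustration, not the data generator used in the paper: it assumes Gaussian errors, states indexed from 0, and hypothetical helper names (`simulate_switching_lar`, `partial_labels`). The lagged values are read from the series regardless of which state produced them, and a random subset of states is then revealed to build the sets \(\sigma _t\).

```python
import numpy as np

def simulate_switching_lar(T, pi, A, phi0, phi, h, x_init, rng):
    """Simulate T steps of a Markov-switching LAR(p) with Gaussian errors.

    pi: (K,), A: (K, K), phi0: (K, d), phi: (K, p, d, d), h: (K, d, d),
    x_init: (p, d) initial values ordered from oldest to most recent.
    """
    K = len(pi)
    p, d = x_init.shape
    x = np.vstack([x_init, np.zeros((T, d))])
    s = np.empty(T, dtype=int)
    for t in range(T):
        s[t] = rng.choice(K, p=pi if t == 0 else A[s[t - 1]])
        k = s[t]
        # Lags come from the series itself, whatever state generated them.
        mean = phi0[k] + sum(phi[k][i] @ x[p + t - 1 - i] for i in range(p))
        x[p + t] = rng.multivariate_normal(mean, h[k])
    return s, x[p:]

def partial_labels(states, K, labelled_fraction, rng):
    """Reveal each state with probability labelled_fraction; return the sets sigma_t."""
    keep = rng.random(len(states)) < labelled_fraction
    return [{int(k)} if obs else set(range(K)) for k, obs in zip(states, keep)]
```

Setting `labelled_fraction` to 0 or 1 gives the fully hidden and fully observed extremes between which the model is meant to interpolate.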

4 Learning PHMC-MLAR models

This section first presents an instance of the Expectation-Maximization (EM) algorithm dedicated to PHMC-MLAR parameter learning. Then, we briefly discuss hyper-parameter selection in Markov-switching models.

4.1 Estimation of the PHMC-MLAR parameters

This subsection is dedicated to the presentation of an instance of the EM algorithm, to estimate the PHMC-MLAR parameters. As seen in previous subsections, the PHMC component and the LAR components of our model are respectively parametrized by \(\varvec{\theta }^{(S)}\) and \((\varvec{\theta }^{(X)}, \varvec{\psi })\). Then, the whole PHMC-MLAR model is parametrized by \((\varvec{\theta }, \varvec{\psi })\) where \(\varvec{\theta } = (\varvec{\theta }^{(S)}, \varvec{\theta }^{(X)})\). Thus, PHMC-MLAR learning consists in estimating \((\varvec{\theta }, \varvec{\psi })\) from a training dataset.

Thanks to good statistical properties such as asymptotic efficiency, a maximum likelihood estimator (MLE) is considered. However, for models with hidden variables like ours, MLE computation results in an intractable problem. To address this issue, the Expectation-Maximization (EM) algorithm is generally used, in order to approximate a set of parameters that locally maximizes the likelihood function. EM was introduced by Baum et al. (1970) to cope with Hidden Markov Model learning. This version was further extended by Dempster et al. (1977) into the versatile EM algorithm, to handle parameter estimation in a more general framework. EM has also been applied to autoregressive Markov-switching models (Hamilton, 1990) and PHMC models (Scheffer & Wrobel, 2001; Ramasso & Denoeux, 2013).

We propose to learn the PHMC-MLAR model through a dedicated instance of the EM algorithm. To fix ideas, in Sect. 4.1.1, we first consider the case where the model is trained in a single training time series context, that is considering a unique pair of data (\(\mathbf {x} = x_{t=1-p}^T, \, \Sigma =\sigma _{t=1}^T\)), with \(\mathbf {x}\) a realization of \(\{ X_t \}\) and \(\sigma _t\) the set of possible states at time-step t. Then, in Sect. 4.1.2, we briefly outline the EM algorithm in the case where N (independent) training time series \((\mathbf {x}^{(1)},\Sigma ^{(1)})\), \(\dots\), \((\mathbf {x}^{(N)},\Sigma ^{(N)})\) are used.

4.1.1 Single training time series

Let \(\mathbf {x} = x_{1-p}^T\) be the observed time series with \(x_{1-p}^0\) the initial values of the autoregressive process. Let \(\Sigma = \sigma _{t=1}^T\), further simplified into \(\sigma _{1}^T\), where \(\sigma _t\) stands for the set of possible states at time-step t. Let \((S_1^T, \Sigma )\) be the (partially observed) state process of \(\mathbf {x}\) with \(\sigma _t = \mathbf {K}\) if \(S_t\) is hidden, \(\sigma _t = \{k\}\) if state k is observed at time-step t, and \(\sigma _t \subset \mathbf {K}\) in the intermediate case.

MLE is implemented by maximizing the expectation (with respect to the latent variables) of the complete data likelihood. Complete data likelihood is further referred to as \({\mathcal {L}}^c\). \({\mathcal {L}}^c\) denotes the evidence/likelihood of the training data when latent/hidden variables are supposed to be known. \({\mathcal {L}}^c\) writes as follows:

with \({\mathcal {L}}_c^c\) the conditional complete data likelihood and \(g_0\) the initial law of \(X_t\).

When the expectation of \({\mathcal {L}}^c\) with respect to the partially hidden states is calculated, term \(g_0(x_{1-p}^0; \, \varvec{\psi })\) in Eq. 2 can be taken out of the expectation since it does not depend on the states:

where \(P(S_1^T \,| \,X_{1-p}^{T}=x_{1-p}^{T}, \Sigma ; \, \varvec{\varvec{\theta }})\) is the posterior probability of partially hidden states \((S_1^T, \Sigma ).\)

Then, by considering the logarithmic scale, Eq. 3 can be separately maximized with respect to \(\varvec{\theta }\) and \(\varvec{\psi }\):

It has to be noted that Eq. 4 is a simple probability observation problem. In contrast, because of the hidden states, maximization with respect to \(\varvec{\theta }\) (Eq. 5) is carried out by an instance of the EM algorithm.

EM is an iterative algorithm that alternates between E(xpectation) step and M(aximization) step. At iteration n, we obtain:

with \(P(S_1^T \,| \,X_{1-p}^{T}=x_{1-p}^{T}, \Sigma ; \, \hat{\varvec{\varvec{\kern-2pt\theta }}}_{n-1})\) the posterior probability of partially hidden states \((S_1^T, \Sigma )\) at iteration \(n-1\).

The rest of this subsection details the two EM steps.

Step E of EM

In this step, the quantity \(Q(\varvec{\theta }, \hat{\varvec{\kern-2pt\theta }}_{n-1})\) (Eq. 6) is computed. Following the conditional independence graph of the PHMC-MLAR model (see Fig. 1b), the conditional complete data likelihood writes:

with \(\varvec{\theta }^{(X,k)}\) the parameters of the LAR process associated with \(k^{\mathrm{th}}\) state and \(P(X_t=x_t \, | \, X_{t-p}^{t-1}, S_t =k; \, \varvec{\theta }^{(X,k)})\) the conditional law of \(X_t\) within k.

Notice that the terms in Eq. 8 depend on either a single state \(S_t\) or two consecutive states \(S_t, S_{t-1}\). In this same equation, products are replaced by sums when moving to the logarithmic scale. Then \(\ln {\mathcal {L}}_c^c (\varvec{\varvec{\theta }})\) is substituted in Eq. 6 and the expectation with respect to the posterior probability of the state process is developed. After some calculations, we find that \(Q(\varvec{\theta }, \hat{\varvec{\theta }}_{n-1})\) only depends on the following probabilities:

The \(\xi _t(k, \ell )\) quantities are used to compute the \(\gamma _t(\ell )\) probabilities (\(\gamma _t(\ell ) = \sum _{j=1}^K \xi _t(j,\ell ), \text {for}\ t=2, \dots , T\), \(\gamma _1(\ell ) =\sum _{j=1}^K \xi _2(\ell ,j)\)). Therefore, the E-step is reduced to computing these probabilities. To this end, we have derived a backward-forward-backward procedure as an extension of the forward-backward algorithm, one of the ingredients of the Baum-Welch algorithm (Baum et al., 1970). The backward-forward-backward algorithm was initially proposed by Scheffer and Wrobel (2001) for the purpose of PHMC model learning.

The difference with respect to the classical unsupervised framework of the MSAR model lies in that the computation of the probabilities \(\gamma _t(\ell )\) is ruled by the \(\Sigma\) annotation. In the MSAR fully unsupervised framework, probabilities \(\gamma _t(\ell )\) always have to be computed. In the semi-supervised PHMC-MLAR framework, probability \(\gamma _t(\ell )\) reaches the minimum 0 if annotation \(\Sigma\) specifies that \(\sigma _t = \{s\}\) with \(s \ne \ell\), since \(S_t = s\) is then observed. In this configuration, probability \(\gamma _t(s)\) reaches the maximum 1. Thus, no calculation of probabilities \(\gamma _t(...)\) is required for the known states.
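The relation between the \(\xi\) and \(\gamma\) probabilities, and the effect of an observed state, can be illustrated with a minimal Python sketch. The function name `gammas_from_xis`, the array layout and the 0-based state indices are assumptions made for this example only; the probabilities \(\xi _t\) are supposed to have been produced beforehand by the E-step.

```python
import numpy as np

def gammas_from_xis(xi):
    """Marginalize pairwise posteriors into single-state posteriors.

    xi[t-2, k, l] stands for xi_t(k, l), the posterior probability of
    (S_{t-1} = k, S_t = l), for t = 2, ..., T (hypothetical layout).
    Returns gamma of shape (T, K), with gamma[t-1, l] = gamma_t(l).
    """
    xi = np.asarray(xi, dtype=float)
    T, K = xi.shape[0] + 1, xi.shape[1]
    gamma = np.empty((T, K))
    gamma[0] = xi[0].sum(axis=1)   # gamma_1(l) = sum_j xi_2(l, j)
    gamma[1:] = xi.sum(axis=1)     # gamma_t(l) = sum_j xi_t(j, l), t >= 2
    return gamma

# Toy case with K = 2 and T = 3, where state 1 is observed at t = 2:
# all the mass of xi_2 lies in column 1, so gamma_2 is one-hot.
xi_demo = np.array([[[0.0, 0.3], [0.0, 0.7]],
                    [[0.0, 0.0], [0.4, 0.6]]])
gamma_demo = gammas_from_xis(xi_demo)
```

In this toy case, the second row of `gamma_demo` is (0, 1), which reflects the fact that nothing needs to be estimated at a time-step whose state is observed.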

Besides, we have adapted the EM algorithm to PHMC-MLAR models by taking into consideration the autoregressive dynamics. The details about the adapted backward-forward-backward algorithm are given in Appendix A. Note that in this appendix, we have carefully indicated the conditions required to calculate the various statistics involved, in relation to the \(\Sigma\) annotation: these statistics may not be defined or they do not need to be calculated.

Step M of EM

At iteration n, this step consists in maximizing \(Q(\varvec{\theta }, \hat{\varvec{\theta }}_{n-1})\) with respect to parameters \(\varvec{\theta } = (\varvec{\theta }^{(S)}, \varvec{\theta }^{(X)})\). It is straightforward to show that \(Q(\varvec{\theta }, \hat{\varvec{\theta }}_{n-1})\) can be decomposed as follows:

where \(\varvec{\theta }^{(X,k)}\) is the set of parameters specific to regime k. Functions \(Q_S\) and \(Q_X^{(k)}\) write:

We call the reader’s attention to the fact that \(Q_S\) (respectively \(Q_X^{(k)}\)) only depends on parameters \(\varvec{\theta }^{(S)}\) (respectively \(\varvec{\theta }^{(X,k)}\)). Therefore, \(Q_S\) and \({Q_X^{(k)}}_{k=1, \dots , K}\) can be maximized separately:

Eq. 13 is an optimization problem under equality constraints which can be solved by the method of Lagrange multipliers. Thus, the re-estimation formulas of \(\varvec{\theta }^{(S)}\) write:

In contrast, it is generally difficult to derive the analytical expression for \(\hat{\varvec{\kern-2pt\theta }}_n^{(X,k)}\). That is why \(Q_X^{(k)}(\varvec{\theta }^{(X,k)}, \hat{\varvec{\kern-2pt\theta }}_{n-1})\) is maximized relying on a numerical optimization method (e.g., the quasi-Newton method).

We point out that the M-step of our algorithm is very similar to that of the unsupervised framework MSAR. The only difference relies on the fact that in our algorithm, probabilities \(\gamma _t\)’s and \(\xi _t\)’s depend on the partial annotation of states.
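As an illustration of the state-related part of the M-step, the following Python sketch applies the classical normalized-count updates that the method of Lagrange multipliers yields for a Markov chain. It is an assumption that these updates coincide with the re-estimation formulas referred to above; the function name, the array layout and the uniform fallback for never-visited states are choices made for this sketch only.

```python
import numpy as np

def reestimate_state_params(xi, gamma1):
    """Update initial probabilities and transition matrix.

    xi[t-2, k, l] stands for xi_t(k, l), t = 2, ..., T (hypothetical layout);
    gamma1[k] stands for gamma_1(k).
    """
    xi = np.asarray(xi, dtype=float)
    gamma1 = np.asarray(gamma1, dtype=float)
    K = xi.shape[1]
    counts = xi.sum(axis=0)                       # expected transition counts
    row_tot = counts.sum(axis=1, keepdims=True)   # expected visits of each state
    safe_tot = np.where(row_tot > 0, row_tot, 1.0)
    # States that are never visited keep a uniform row (assumption of this sketch).
    A = np.where(row_tot > 0, counts / safe_tot, 1.0 / K)
    pi = gamma1 / gamma1.sum()
    return pi, A
```

With several training sequences, the same idea would presumably apply after summing the expected counts over the sequences before normalizing.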

Finally, dealing with the multivariate case does not pose any fundamental problem with respect to the univariate case: in the M step, the number of parameters estimated per regime is simply multiplied by \(d^2\) where d is the dimension of the time series.

4.1.2 Sketch of EM algorithm: several training time series

We now consider the general case in which PHMC-MLAR model is learnt from N (independent) partially annotated time series \((\mathbf {x}^{(1)},\Sigma ^{(1)})\), \(\dots\), \((\mathbf {x}^{(N)},\Sigma ^{(N)})\), with \(\mathbf {x}_0^{(1)}, \dots , \mathbf {x}_0^{(N)}\) the associated initial values and \(\mathbf {S}^{(1)}, \dots , \mathbf {S}^{(N)}\) the corresponding state processes with partial annotations \(\Sigma ^{(1)}, \dots , \Sigma ^{(N)}\). It has to be noted that time series \(\mathbf {x}^{(i)}\)’s can have different lengths while their respective initial vectors have a common size (\(\mathbf {x}_0^{(i)} \in {\mathbb {R}}^{d \times p}\), with p the autoregressive order). The lengths of the N time series are denoted \(T_1, T_2, \dots , T_N\), respectively.

The extension from the single-training time series to the multi-training time series case does not fundamentally change the parameter estimation algorithm. Thus, at each iteration, the E-step is separately run on each training time series, which results in quantities \((\xi _t^{(i)})_{t=1, \dots , T_i}\) for \(i = 1, \dots , N\). Then in the M-step, these probabilities are used to update model parameters \(\varvec{\theta }\). Note that when \(N=1\), the single-training time series case is recovered.

Algorithm 1 sums up the instance of EM proposed for PHMC-MLAR parameter learning.

It is well known that the EM algorithm is sensitive to the choice of the starting point \(\hat{\varvec{\theta }}^{(0)}\), which conditions the risk of converging to a local maximum. In practice, several initial values are tested and the model that provides the highest likelihood is chosen. In this work, the initialization procedure presented in Algorithm 2 is used.

4.2 Hyper-parameter selection

An important step prior to the learning of Markov-switching models is hyper-parameter selection (or model selection). Hyper-parameter selection is the problem of picking a particular structure amongst several alternatives. In the case of Markov-switching, the model structure encompasses the number of hidden states, the form of the state transition matrix and output probabilities. There exist three main frameworks to address hyper-parameter selection.

Cross-validation iteratively splits the training set in a novel training set and a validation set, to assess how the model structure under consideration generalizes to the validation set. The computational burden of this approach is prohibitive for large hyper-parameter grids.

Regularization adds a penalty term to the likelihood objective function, to favour parsimonious models. In this category, several criteria are very often used for HMMs [see the recent review by Pohle et al. (2017)]. The Bayesian Information Criterion (BIC) (Schwarz, 1978) is defined as follows:

with L the log-likelihood, \(\hat{\theta }\) the maximum likelihood estimator, \(n_{par}\) the number of parameters of the model and C a regularization term that depends on the data used to train the model. For N independent multivariate time series of dimension d and respective lengths \(T_1, T_2, \cdots , T_N\), the C penalty is \(d \times \sum _i T_i\).

Experiments conducted on synthetic data have shown that the BIC criterion is relevant for the selection of the number of states in MSAR models (Psaradakis & Spagnolo, 2003), and for the joint determination of the number of states and autoregressive order in Markov-switching models (Psaradakis & Spagnolo, 2006). Furthermore, works on real-world data have shown that the BIC criterion allows the selection of models that are parsimonious and relevant, i.e., that fit the data well (Ailliot & Monbet, 2012; Kuck & Schweikert, 2017).

It should be noted that the consistency of the BIC criterion, i.e., its ability to always choose the right number of states when an infinite sample size is used, has been established for independent mixture models (Durand, 2003). However, in the case of HMM and MSAR models, the theoretical study of the behaviour of the BIC criterion remains an open problem.

On the other hand, the Akaike Information Criterion (AIC) (Akaike, 1974) is defined as

The AIC is an asymptotically unbiased estimator of a scoring function used to rank candidate models. It is a variant of the Kullback-Leibler (KL) divergence between the true model (i.e., the process that generated the data) and the approximate candidate model. Some works about KL divergence-based selection focused on Markov-switching models have been reported in the literature (Smith et al., 2006). However, the BIC score is more documented than the AIC score as regards Markov-switching models.
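To make the use of these two criteria concrete, here is a minimal Python sketch. It assumes the standard forms \(-2L + n_{par} \ln C\) for the BIC and \(-2L + 2 n_{par}\) for the AIC, with \(C = d \times \sum _i T_i\) as stated above; whether the article uses exactly these forms is an assumption. The function names and the dictionary of candidate models are hypothetical.

```python
import math

def bic(loglik, n_par, lengths, d):
    """Standard-form BIC, with C = d * (total number of time-steps)."""
    c = d * sum(lengths)
    return -2.0 * loglik + n_par * math.log(c)

def aic(loglik, n_par):
    """Standard-form AIC."""
    return -2.0 * loglik + 2.0 * n_par

def select_by_bic(candidates, lengths, d):
    """candidates maps a pair (p, K) to (maximized log-likelihood, n_par).

    Returns the pair (p, K) with the smallest BIC.
    """
    return min(candidates, key=lambda pk: bic(*candidates[pk], lengths, d))
```

For instance, `select_by_bic({(1, 2): (-100.0, 5), (2, 4): (-99.0, 30)}, [100], 1)` retains `(1, 2)`: the slight gain in likelihood of the larger model does not compensate for its 25 additional parameters.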

In the context of probabilistic modelling, it is often enlightening to think of regularizers as expressing a prior over the parameters, and thus view the regularized maximum likelihood fitting procedure as the search for maximum a posteriori (MAP) parameters under such a prior. Dirichlet distributions are commonly used as priors for the parameter distributions in the case of variables with categorical distributions or multinomial distributions in the models. Dirichlet, normal, gamma and inverse-gamma priors are used in the case of MSARs (Pinto & Spezia, 2015; Lhuissier, 2019).

In regularized model selection for autoregressive Markov-switching models, a grid of (p, K) values is tested and the pair obtaining the minimum value for the criterion considered is retained. However, estimating such models using a grid of hyper-parameters may be computationally expensive. A Bayesian approach treats all unknown quantities as random variables, assigning priors to these quantities to infer posterior distributions. A step further, in the case of HMMs for example, when the model structure, i.e. the number of states, is part of the unknown quantities, model structures can nonetheless be compared provided one knows how to integrate over both parameters and hidden states. In practice, Bayesian integration requires approximating integrals, for example through Monte-Carlo methods, Laplace approximation or the variational Bayesian method (Ghahramani, 2001).

In a similar vein, the sticky infinite hidden Markov-switching modelling framework proposed by Fox et al. (2011) short-circuits this computation: it assumes a Markov chain with a potentially infinite number of states, thus encompassing any finite number of them. Instead, the number of states is determined during the estimation of the model, which avoids the need to fix this number using a criterion such as BIC. For instance, Bauwens et al. (2017) applied this framework to autoregressive moving average Markov-switching models. A panorama of Bayesian nonparametric methods for learning Markov-switching processes is provided in (Fox et al., 2010).

In Sect. 8.3.1, we mention that the computational resources available to us allowed us to test multiple values for the hyper-parameters, using the BIC score.

5 Hidden state inference

In HMM modelling, after a model is learnt, inference consists in finding the state sequence that maximizes the likelihood of a given observed sequence. This is equivalent to solving a Maximum A Posteriori (MAP) problem. An exhaustive search that enumerates all combinations of states requires \({\mathcal {O}}(K^T)\) operations, where K is the number of states and T is the sequence length. The Viterbi algorithm (Forney, 1973) computes the optimal state sequence in \({\mathcal {O}}(TK^2)\) operations.

In this section, we propose a variant of the Viterbi algorithm that takes into account the observed states of the PHMC-MLAR model. Thus, the hidden states are inferred given the observed states and the given observation sequence.

Let \(\hat{\varvec{\theta }}\) be the MLE parameter estimates of the PHMC-MLAR model trained on a given dataset. Let \(\mathbf {x} = x_{1}^T\) be an observed time series and \(\mathbf {x}_0=x_{1-p}^0\) the corresponding initial values. Let \(\Sigma = \sigma _{t=1}^T\) be the possible states at each time-step, with \(\sigma _t = \{k\}\) if the \(k^{\mathrm{th}}\) regime is observed at time-step t, \(\sigma _t = \mathbf {K}\) if the state process is latent at that time-step, and \(\sigma _t \subset \mathbf {K}\) in the intermediate case. Let \((\mathbf {S}, \Sigma )\) be the partially hidden state process associated with this time series.

We search the optimal state sequence \(\mathbf {z}^* = (z_1^*, \dots , z_T^*)\) that maximizes the posterior probability \(P(\mathbf {S}=\mathbf {z} \,|\, \mathbf {X}=\mathbf {x}, \mathbf {X}_0=\mathbf {x}_0, \Sigma ; \, \hat{\varvec{\kern-2pt\theta }})\). Thanks to Bayes’ rule, maximizing this posterior probability is equivalent to maximizing the joint probability \(P(\mathbf {S}=\mathbf {z}, \mathbf {X}=\mathbf {x} \,|\, \mathbf {X}_0=\mathbf {x}_0, \Sigma ; \, \hat{\varvec{\kern-2pt\theta }})\):

where \(\mathbf {K} = \{1, 2, \dots , K\}\) is the set of possible states.

Note that the probability of a given state sequence is zero if there is at least one time-step t such that \(z_t \notin \sigma _t\), that is, if state \(z_t\) is not allowed at time-step t. A consequence is that \(\mathbf {z}^*\) must coincide with the observed states if there are any. This constraint entails a decrease in calculation cost, as we will see later.

Following the dynamic programming paradigm, the Viterbi algorithm makes it possible to retrieve \(\mathbf {z}^*\) by splitting the initial problem into subproblems and solving this set of smaller problems. Let \(\delta _t(\ell ; \, \hat{\varvec{\kern-2pt\theta }})\) be the maximal probability of subsequence \((z_1, \dots , z_t = \ell )\) that ends within regime \(\ell\):

The information on the known states is taken into account in the \(\delta _t(\ell ; \, \hat{\varvec{\kern-2pt\theta }})\) quantities, through the \(\sigma _1^t\) terms.

The probabilities involved in these quantities are iteratively computed as follows:

At first time-step,

where

For \(t=2, \dots , T\) we have

with

Since the maximal probability of the complete state sequence, that is the maximum for the probability expressed in Eq. 16, also writes:

the optimal sequence \(\mathbf {z}^*\), defined in Eq. 17, is retrieved by backtracking as follows:

The original Viterbi algorithm runs in \({\mathcal {O}}(TK^2)\). Our variant runs in \({\mathcal {O}}((T-T_{obs})K^2 + (T_{obs}+T)K)\) where \(T_{obs}\) denotes the number of observed states and \(T-T_{obs}\) is the number of undetermined states to be inferred. Note that \({\mathcal {O}}(K^2)\) (resp. \({\mathcal {O}}(K)\)) is the computational cost of the Viterbi variables \(\delta _t\)’s when the state at time-step t is undetermined (resp. observed), and \({\mathcal {O}}(TK)\) represents the backtracking computational cost. Thus, when all states are undetermined (i.e. \(T_{obs} = 0\)), our algorithm has the same complexity as the original Viterbi algorithm. Moreover, the computational cost of our algorithm decreases linearly with the number of observed states \(T_{obs}\).
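The constrained dynamic programming described in this section can be sketched in Python as follows. This is a log-domain illustration written for this text, not the authors' implementation: the function name and argument layout are hypothetical, states are 0-indexed, and the conditional log-densities of each \(x_t\) given its p past values and each state are assumed to have been computed beforehand and stored in `log_b`.

```python
import numpy as np

def constrained_viterbi(log_pi, log_A, log_b, sigma):
    """Most probable state sequence compatible with the annotation.

    log_pi: (K,) log initial probabilities.
    log_A:  (K, K), log_A[i, j] = log P(S_t = j | S_{t-1} = i).
    log_b:  (T, K), log density of x_t given its past values and S_t = k.
    sigma:  list of T sets of allowed states.
    """
    T, K = log_b.shape
    delta = np.full((T, K), -np.inf)     # disallowed states keep -inf
    back = np.zeros((T, K), dtype=int)
    for k in sigma[0]:
        delta[0, k] = log_pi[k] + log_b[0, k]
    for t in range(1, T):
        prev = sorted(sigma[t - 1])      # only allowed predecessors are scanned
        for l in sigma[t]:               # only allowed states are scored
            scores = delta[t - 1, prev] + log_A[prev, l]
            j = int(np.argmax(scores))
            back[t, l] = prev[j]
            delta[t, l] = scores[j] + log_b[t, l]
    z = np.empty(T, dtype=int)
    z[-1] = int(np.argmax(delta[-1]))
    for t in range(T - 1, 0, -1):        # backtracking
        z[t - 1] = back[t, z[t]]
    return z, float(delta[-1, z[-1]])
```

When \(\sigma _t\) is a singleton, the inner loop over `sigma[t]` reduces to a single state, which is where the saving with respect to the unconstrained algorithm comes from.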

6 Forecasting

Forecasting for a time series consists in predicting future values based on past values. Let us consider a PHMC-MLAR model trained on a sequence observed up to time-step T, and \(\hat{\varvec{\kern-2pt\theta }}\) the corresponding parameters. Let \(\sigma _{1}, \dots , \sigma _{T+h}\) be the sets of possible states from time-step 1 to time-step \(T+h\).

The optimal prediction of \(X_{T+h}\) (with respect to mean squared error) is the conditional mean \({\mathbb {E}}\left[ X_{T+h} \, | \, X_{1-p}^{T}=x_{1-p}^{T}, \, \sigma _{1}^{T+h}; \, \hat{\varvec{\kern-2pt\theta }}\right]\), which writes as follows:

with \(\mathbf {y}_{T+h} = (1, x_{T+h-1}, \dots , x_{T+h-p})\), \(\hat{\varvec{\mu }}_k = (\phi _{0,k}, \phi _{1,k}, \dots , \phi _{p,k})\) the intercept and autoregressive parameters associated with \(k^{\mathrm{th}}\) state, and \('\) denoting matrix transposition.

Equation 23 depends on smoothed probabilities \(\bar{\gamma }(i, s) = P(S_{T+i}=s \,|\, X_{1-p}^{T}=x_{1-p}^{T}, \, \sigma _{1}^{T+i}; \, \hat{\varvec{\kern-2pt\theta }})\), which are recursively computed as follows:

for \(i=1, \dots , h\), \(s \in \mathbf {K}\) and \(\gamma _{T}(\ell )\) defined in Eq. 10.

From Eqs. 23 and 24, we can notice that if state s is observed at time-step \(T+h\) (i.e. \(\sigma _{T+h} = \{s\}\)), then prediction \(\hat{X}_{T+h}\) equals the conditional mean of the LAR process associated with this state (since \(\bar{\gamma }(h, k) = 0\) for \(k \notin \sigma _{T+h}\)). In contrast, if state process is latent at time-step \(T+h\) (i.e., \(\sigma _{T+h} = \mathbf {K}\)), \(\hat{X}_{T+h}\) is computed as the weighted sum of the conditional means of all states, with probabilities \(\bar{\gamma }(h, k)\) as weights.

Note that for \(h=1\), the past values of the time series required in Eq. 23 are known. In contrast, for \(h > 1\), the intermediate predictions \(\hat{X}_{T+1}, \dots , \hat{X}_{T+h-1}\) are used in order to feed the autoregressive dynamics of the PHMC-MLAR framework.
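The forecasting scheme can be sketched in Python for the univariate case. The sketch relies on two assumptions that should be checked against Eqs. 23 and 24: state probabilities are propagated through the transition matrix and then restricted to the allowed states \(\sigma _{T+i}\) and renormalized, and the point forecast is the probability-weighted sum of the per-regime LAR means. The function name and the argument layout are hypothetical.

```python
import numpy as np

def forecast(x_hist, gamma_T, A, phi, sigma_future):
    """Iterated point forecasts at horizons 1, ..., h (univariate sketch).

    x_hist:       past values, most recent last (at least p values).
    gamma_T:      (K,) state probabilities at time-step T.
    A:            (K, K) transition matrix.
    phi:          (K, p + 1), row k = (phi_0k, phi_1k, ..., phi_pk).
    sigma_future: list of h sets of allowed states at T+1, ..., T+h.
    """
    phi = np.asarray(phi, dtype=float)
    K, p = phi.shape[0], phi.shape[1] - 1
    past = [float(v) for v in x_hist[-p:]]
    probs = np.asarray(gamma_T, dtype=float)
    preds = []
    for allowed in sigma_future:
        probs = probs @ A                     # one-step propagation
        mask = np.zeros(K)
        mask[sorted(allowed)] = 1.0
        probs = probs * mask                  # discard disallowed states
        probs = probs / probs.sum()           # assumes an allowed state is reachable
        y = np.concatenate(([1.0], past[::-1]))   # (1, x_{t-1}, ..., x_{t-p})
        x_hat = float(probs @ (phi @ y))      # weighted sum of regime means
        preds.append(x_hat)
        past = past[1:] + [x_hat]             # feed the forecast back as input
    return np.array(preds)
```

If a single state is allowed at a given horizon, the weights collapse to that state and the forecast equals the conditional mean of the corresponding LAR process.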

It is important to underline that the whole distribution of \(X_{T+h} | X_{1-p}^T, \, \sigma _{1}^{T+h}\) is computed as a mixture of conditional densities \(P(X_{T+h} | X_{T+h-p}^{T+h-1}, S_{T+h}; \hat{\varvec{\kern-2pt\theta }})\) (Eq. 1) weighted by probabilities \(\bar{\gamma }\)’s (Eq. 24). Thus, the point forecast in Eq. 23 is the mean of this distribution, which is called the predictive density. In practice, this predictive density can be sampled in order to build a confidence interval for the predicted values, instead of a single-point forecast.

7 Experiments

The aim of this section is two-fold: (i) assess the ability of PHMC-MLAR model to infer the hidden states, (ii) evaluate prediction accuracy. These evaluations were achieved on simulated data, following two experimental settings. On the one hand, we varied the percentage of observed states in training set, to evaluate its influence on hidden state recovery and prediction accuracy. On the other hand, we simulated unreliable observed states in training set, and evaluated the influence of uncertain labelling on hidden state inference and prediction accuracy.

This section starts with the description of the protocol used to simulate data in both experimental settings. Then, the section focuses on implementation aspects. We next present and discuss the results obtained in both experimental settings.

7.1 Simulated datasets

This subsection first focuses on the model used to generate data. Then we describe the precursor sets used to further generate the test-set and the training datasets.

7.1.1 Generative model

These experiments were achieved on simulated data from a univariate (\(d=1\)) 4-state PHMC-MLAR(2) model whose transition matrix and initial probabilities are:

Within each state \(k \in \{1, 2, 3, 4\}\), the autoregressive dynamics is a LAR(2) process defined by parameters \(\varvec{\theta }^{(X,k)} = (\phi _{0,k}, \, \phi _{1,k}, \,\phi _{2,k}, \, h_k^{\frac{1}{2}})\):

In the LAR(2) process associated with state k, stationarity is guaranteed by setting the following constraints: \(\phi _{i,k} < 1,\ i \in \{1,2\}\).

Finally, the initial law \(g_0\) is a bivariate Gaussian distribution

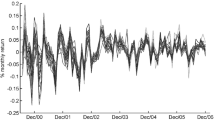

Figure 2 shows an example of state process (Fig. 2a) and corresponding time series (Fig. 2b) that were simulated from the previously defined PHMC-MLAR(2).
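A simulation of this kind of process can be sketched in Python as follows. The function below is a generic Markov-switching LAR(p) simulator written for illustration; its name and signature are hypothetical, Gaussian innovations with per-state standard deviation \(h_k^{\frac{1}{2}}\) are assumed, and any numerical values passed to it are placeholders rather than the parameter values of Eqs. 25 to 27.

```python
import numpy as np

def simulate_ms_lar(T, A, pi, phi, sd, x_init, rng):
    """Simulate T time-steps of a univariate Markov-switching LAR(p) process.

    A:      (K, K) transition matrix; pi: (K,) initial state probabilities.
    phi:    (K, p + 1), row k = (phi_0k, phi_1k, ..., phi_pk).
    sd:     (K,) innovation standard deviations (assumed Gaussian noise).
    x_init: the p initial values x_{1-p}, ..., x_0.
    rng:    a numpy.random.Generator.
    """
    phi = np.asarray(phi, dtype=float)
    K, p = phi.shape[0], phi.shape[1] - 1
    states = np.empty(T, dtype=int)
    x = [float(v) for v in x_init]
    for t in range(T):
        probs = pi if t == 0 else A[states[t - 1]]
        states[t] = rng.choice(K, p=probs)
        k = states[t]
        lags = x[-1:-p - 1:-1]                 # x_{t-1}, ..., x_{t-p}
        mean = phi[k, 0] + float(np.dot(phi[k, 1:], lags))
        x.append(mean + sd[k] * rng.standard_normal())
    return states, np.array(x[p:])
```

A call such as `simulate_ms_lar(100, A, pi, phi, sd, x_init, np.random.default_rng(0))` returns a fully labelled sequence of length 100, from which partially labelled training sets can then be derived.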

7.1.2 Precursor sets for the test-set and training datasets

The training and test sets are common to both experimental settings (influence of the percentage of observed labels, influence of labelling error).

Inference The precursor set \({\mathcal {P}}_{infer\_test}\) of the test-set is composed of \(M=100\) fully labelled observation sequences of length \(\ell = 1000\). These sequences were generated from the PHMC-MLAR(2) model described in Eqs. 25–27. A protocol repeated for each \(N \in \{1, 10, 100\}\) produced a precursor set \({\mathcal {P}}_{N\_infer\_train}\) consisting of N fully labelled observation sequences of length \(T=100\). The generative model in Eqs. 25–27 was used for this purpose.

Forecasting In this case, training sets are each reduced to a single sequence. In each such sequence, the sequence’s prefix of size \(T=100\) is used for model training, whereas the subsequence \(T+1, \cdots , T+10\) is used for testing prediction accuracy. The sequences of the unique precursor set denoted \({\mathcal {P}}_{N=1\_forecast\_train\_test}\) are generated using Eqs. 25–27.

7.2 Implementation

Our experiments required intensive computing resources from a Tier 2 data centre (Intel 2630v4, 2\(\,\times\,\)10 cores 2.2 GHz, 20\(\,\times\,\)6 GB). We exploited data-driven parallelization to replicate our experiments on various training sets. In addition, code parallelization allowed us to process multiple sequences simultaneously in the E-step of the EM algorithm. The software programs dedicated to model training, hidden state inference and forecasting were written in Python 3.6.9. We used the NumPy and SciPy Python libraries.

The models were learnt through the EM algorithm with precision \(\kappa = 10^{-6}\) and initialization procedure parameters \((L, N_{iter}) = (10, 5)\).

7.3 Influence of the percentage of observed states

To analyze the impact of observed states, we varied the percentage P of labelled observations (equivalently the percentage of observed states) in the training sets. P was varied from \(0\%\) (fully unsupervised case) to \(100\%\) (fully supervised case), with steps of \(10\%\). The aim is to evaluate the performance of intermediate cases for different sizes of the training datasets.

7.3.1 Hidden state inference

The test-set \({\mathcal {S}}_{infer\_test}\) was generated by unlabelling all states from the precursor set \({\mathcal {P}}_{infer\_test}\) described in Sect. 7.1.2 (\(M=100\) fully observed sequences of length \(\ell =1000\)).

To generate the training sets, the following protocol was repeated for each \(N \in \{1, 10, 100\}\) and for each percentage P: (i) considering the appropriate precursor set \({\mathcal {P}}_{N\_infer\_train}\) (N fully observed sequences of length \(T=100\)) depicted in Sect. 7.1.2, only a proportion of P observations was kept labelled while the rest was unlabelled; (ii) this process was repeated 15 times, each time varying which observations are kept labelled. Thus were produced 15 training datasets \({\mathcal {S}}_{N\_P\_infer\_train\_1}, \cdots , {\mathcal {S}}_{N\_P\_infer\_train\_15}\).
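The unlabelling step of this protocol can be sketched in Python as follows. The function name, the uniform random choice of the retained positions and the representation of the annotation as a list of sets are assumptions of this sketch; the text does not specify how the labelled positions were drawn.

```python
import numpy as np

def partially_unlabel(states, P, K, rng):
    """Keep a proportion P (between 0 and 1) of the labels of one sequence.

    Returns the annotation sigma as a list of sets: {k} where the label is
    kept, and the full state set {0, ..., K-1} where the state is hidden.
    """
    T = len(states)
    n_keep = int(round(P * T))
    # Positions of retained labels, drawn uniformly without replacement.
    keep = set(rng.choice(T, size=n_keep, replace=False).tolist())
    full = set(range(K))
    return [{int(states[t])} if t in keep else set(full) for t in range(T)]
```

Calling this function 15 times with differently seeded generators on the same fully labelled precursor set would yield replicates that differ only by which observations remain labelled.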

The PHMC-MLAR(2) model with 4 states was trained on each training set \({\mathcal {S}}_{N,P,infer\_train\_i}\), \(i=1, \cdots , 15\). For each trained model, state inference was achieved for the M fully hidden sequences of test-set \({\mathcal {S}}_{infer\_test}\), which yielded M sequences of predicted labels. Inference performance was evaluated by comparing the true state sequences with the inferred ones, using the Mean Percentage Error (MPE) score defined as follows:

where \(s_j\)’s and \(\hat{s}_j\)’s are respectively observed and inferred states. The MPE score varies between 0 and 1. The lower the value of the MPE score, the higher the inference performance.
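As a hedged illustration, the score can be computed as the overall proportion of time-steps at which the inferred state differs from the true one, which is consistent with a value between 0 and 1. Whether the article averages over all time-steps at once or per sequence is not stated here; both coincide when all sequences have the same length. The function name is hypothetical.

```python
def mean_percentage_error(true_seqs, inferred_seqs):
    """Proportion of time-steps where the inferred state is wrong.

    true_seqs, inferred_seqs: lists of state sequences of matching lengths.
    """
    errors = 0
    total = 0
    for s, s_hat in zip(true_seqs, inferred_seqs):
        if len(s) != len(s_hat):
            raise ValueError("true and inferred sequences must have equal length")
        errors += sum(a != b for a, b in zip(s, s_hat))
        total += len(s)
    return errors / total
```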

Figure 3 displays the \(95\%\) confidence interval for the MPE score as a function of P. As expected, the results show that inference ability increases with the number of training sequences denoted by N. Note that when the proportion of labelled observations is less than some threshold (\(P=30\%\) for \(N=1, 10\) and \(P=20\%\) for \(N=100\)), inference performance is greatly impacted by the distribution of observed states, since we obtain very large confidence intervals for the MPE score.

Fig. 3 \(95 \%\) confidence interval for mean percentage error (MPE) of hidden state inference, as a function of the percentage P of labelled observations. Models were trained on datasets of N sequences of length 100, for each of 15 replicates differing by the \(P \%\) labelled observations. For each model, inference was performed for a test-set of 100 unlabelled sequences of size 1000. The \(95 \%\) confidence interval of the MPE score was computed from the 15 replicates. The dash (red) line indicates the MPE score obtained for the unsupervised learning case (\(P = 0\%\)). Mind the differences in scales between the three subfigures (Color figure online)

For \(N = 1\), the use of labelled observations makes it possible to outperform the fully unsupervised case (\(P = 0\%\)), that is, to obtain smaller MPE scores, when at least \(30\%\) of observations are labelled (see Fig. 3a). In contrast, for \(N=10, 100\), from some threshold value of P (respectively \(30\%\) and \(20\%\)), the use of larger proportions of labelled observations sustains inference performances equal to that of the fully unsupervised case (see Fig. 3b and c). Importantly, the results show that using large proportions of labelled observations considerably speeds up model training by decreasing the number of iterations of the EM algorithm (see Fig. 4), and allows a better characterization of the training data (which is reflected by a greater likelihood, see Fig. 5). Ramasso and Denoeux (2013) had already underlined the beneficial impact of partial knowledge integration on EM convergence in PHMCs. Our work confirms this advantage in the PHMC-MLAR model, with a good preservation of inference performance. Besides, the decrease in convergence time offers promising prospects to enhance model selection for the PHMC-MLAR model, by allowing examination of larger grids of hyper-parameter values.

Fig. 4 Number of EM iterations before convergence as a function of the percentage P of labelled observations. For the description of the experimental protocol, see caption of Fig. 3. The distribution of the number of EM iterations is studied across 15 replicates. Dash (red) line and dot (green) line indicate the number of iterations for unsupervised and supervised learning cases respectively. Mind the differences in scales between the three subfigures (Color figure online)

Fig. 5 Log-likelihood as a function of the percentage P of labelled observations. For the description of the experimental protocol, see caption of Fig. 3. The distribution of the log-likelihood is studied across 15 replicates. Dash (red) line and dot (green) line indicate the log-likelihoods for unsupervised and supervised learning cases respectively. Mind the differences in scales between the three subfigures (Color figure online)

In order to evaluate the influence of observed states in the recognition phase, we considered the case \(P=10 \%\) which previously obtained the lowest inference performance. This time, we also kept labelled a proportion Q of observations within the test-set \({\mathcal {S}}_{infer\_test}\). We assessed the inference performances for the models trained on \({\mathcal {S}}_{N,P=10\%,infer\_train\_i}\), \(i=1, \cdots , 15\). Figure 6 presents MPEs as a function of Q for \(N = 1, 10\) and 100. We observe that inference performances are improved by the presence of observed states. More precisely, for Q taking its values in \(25 \%\), \(50 \%\) and \(75 \%\), respectively, MPE decreases by: (i) \(19\%\), \(42\%\) and \(69\%\) for \(N=1\) (Fig. 6a); (ii) \(27\%\), \(52\%\) and \(77\%\) for \(N=10\) (Fig. 6b); and (iii) \(27\%\), \(53\%\) and \(77\%\) for \(N=100\) (Fig. 6c). These results show the ability of our variant of the Viterbi algorithm to infer partially-labelled sequences.

Fig. 6 \(95 \%\) confidence interval for mean percentage error (MPE) of hidden state inference, as a function of the percentage Q of labelled observations within test-set, with \(P = 10\%\) labelled observations in the training sets. Models were trained on datasets of N sequences of length 100 in which \(P=10\%\) of observations have been labelled. Fifteen replicates differing by the \(P=10 \%\) labelled observations were considered. For each model, inference was performed for a test-set of 100 partially labelled (\(Q\%\)) sequences of size 1000. The \(95 \%\) confidence interval of the MPE score was computed from the 15 replicates. Mind the differences in scales between the three subfigures (Color figure online)

7.3.2 Forecasting

In this experiment, we consider models trained on a single sequence. This case corresponds to many real-world situations in which a unique time series is available (e.g., the evolution of air pollution at some geographical location). Using the precursor set \({\mathcal {P}}_{N=1\_forecast\_train\_test}\) described in Sect. 7.1.2, we generated datasets \({\mathcal {S}}_{N=1\_forecast\_train\_test\_i}\), \(i=1, \cdots , 15\) each composed of a single sequence of size 110. Again, the 15 replicates differed by the \(P\%\) labelled observations. In these sets, the sequence prefixes of length \(T=100\) were used to train the models. Out-of-sample forecasting was carried out at horizons \(T+h\), \(h=1, \dots , 10\), which means that prediction accuracy was assessed using subsequences \(T+1, \cdots , T+h\). Note that the \(P\%\) labelled observations were distributed in the sequence prefixes of length T.

Two experimental schemes were considered. First, the states at forecast horizons were supposed to be latent; that is, all states were unlabelled from \(T+1\) to \(T+h\), \(h=1, \cdots , 10\). Then, we performed the prediction evaluation when states are observed at forecast horizons. The latter situation corresponds to performing the prediction conditional on some assumption on the regime. For instance, in econometrics, assuming we know which phase will be on (growth phase versus recession) might improve the forecasting performance of the Gross National Product (GNP). In this case, all states were kept labelled from \(T+1\) to \(T+h\), \(h=1, \cdots , 10\).

Prediction performance is estimated by the Root Mean Square Error (RMSE) defined as follows:

where h is the forecast horizon and \(N_{rep}=15\) is the number of replicates. Accurate predictions are characterized by low RMSEs.
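The per-horizon score can be sketched in a few lines. This is a minimal illustration, assuming scalar observations and assuming that squared errors are averaged over the \(N_{rep}\) replicates at each horizon h before the square root is taken. The function names and the nested-list data layout are hypothetical and are not taken from the original implementation.

```python
import math
import statistics


def rmse_per_horizon(actual, predicted):
    """Return one RMSE per forecast horizon.

    actual[i][h - 1] and predicted[i][h - 1] hold the observed and forecast
    values of replicate i at horizon h (assumed layout, scalar observations).
    """
    n_rep = len(actual)
    n_horizons = len(actual[0])
    scores = []
    for h in range(n_horizons):
        # Squared errors at this horizon, one per replicate.
        squared = [(actual[i][h] - predicted[i][h]) ** 2 for i in range(n_rep)]
        scores.append(math.sqrt(sum(squared) / n_rep))
    return scores


def summarize(scores):
    """Mean, median and maximum of the RMSEs computed over all horizons."""
    return {
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "max": max(scores),
    }
```

With 15 replicates and 10 horizons, `rmse_per_horizon` would return 10 values, and `summarize` would give the kind of per-P statistics reported over all horizons.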

Table 1 presents the RMSEs obtained when the states at forecast horizons are assumed to be latent. Figure 7a presents the mean, median and maximum of RMSEs, computed over all forecast horizons, as a function of P, the percentage of labelled observations in the training sets. Table 1 and Fig. 7a show that beyond a low threshold of P (\(10\%\) or \(20\%\)), the prediction performance remains nearly constant across proportions.

Mean, median and maximum root mean square error (RMSE) of prediction at horizon h as a function of P, the percentage of labelled observations in the training datasets. The 95% confidence intervals are shown for the mean. States at forecast time-steps \(T+h\), \(h=1, \cdots , 10\), are (a) hidden, (b) known. Models were trained on a single sequence, for each of 15 replicates differing by the \(P \%\) labelled observations. Model training was performed on subsequences of length 100, whereas prediction was achieved for the 10 subsequent time-steps. For each value of P, the statistics provided were computed across the 15 replicates and all horizons (Color figure online)

Table 1 also highlights that the ability to predict depends on the forecast horizon under consideration. At any given labelling percentage P, high RMSE scores (around 7) alternate with low scores (around 1) across horizons. Such a nonmonotonic error trend across horizons was also observed empirically for MSAR models and threshold autoregressive models applied to US GNP time series (Clements & Krolzig, 1998).

Finally, our experiments show that the PHMC-MLAR model’s ability to better characterize the training data in the presence of large proportions of labelled observations (reflected in a greater likelihood, see Fig. 5a) does not translate into improved forecast performance.

When states are known at forecast horizons, RMSEs (presented in Table 2) are reduced by \(44\%\) on average. Moreover, Fig. 7b shows that above \(P = 30 \%\), prediction performance is slightly better than in the unsupervised case (\(P=0 \%\)). Note that, as in the case where the states are unknown at forecast horizons, the prediction ability depends on the forecast horizon. Again, for a given P, the RMSE score does not systematically increase with forecast horizon h, although previously predicted values are used as inputs when predicting at subsequent horizons.

7.4 Influence of labelling error

In this experiment, the influence of labelling error is evaluated. To simulate unreliable labels, we proceeded as follows.

At each time-step t, an error probability \(p_t\) was drawn randomly from a beta distribution with mean \(\rho\) and variance 0.2. With probability \(p_t\), the observed state \(s_t\) was replaced by a random state uniformly chosen from \(\{1, 2, 3, 4\} \setminus \{s_t\}\). So, the unreliable labels \(\tilde{s}_t\) were defined as follows:

\[ \tilde{s}_t = \begin{cases} s_t & \text{with probability } 1 - p_t, \\ u_t \sim {\mathcal {U}}\left( \{1, 2, 3, 4\} \setminus \{s_t\}\right) & \text{with probability } p_t, \end{cases} \]

where \({\mathcal {U}}\) is the discrete-valued uniform distribution. Thus, on average a proportion \(\rho\) of observations is assigned wrong labels.
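The corruption procedure can be sketched as below, using only the Python standard library. The function names are hypothetical. A beta distribution with mean \(\rho\) must have a variance strictly below \(\rho (1-\rho )\), so this sketch takes the variance as a parameter and rejects infeasible pairs. Any variance value used with it (0.01 in the usage note) is purely illustrative and is an assumption, not the setting of the experiments.

```python
import random


def beta_params(mean, var):
    """Convert a (mean, variance) pair into Beta(a, b) shape parameters."""
    if not 0.0 < mean < 1.0:
        raise ValueError("mean must lie strictly between 0 and 1")
    if not 0.0 < var < mean * (1.0 - mean):
        raise ValueError("variance must be below mean * (1 - mean)")
    nu = mean * (1.0 - mean) / var - 1.0
    return mean * nu, (1.0 - mean) * nu


def corrupt_labels(states, rho, var, n_states=4, rng=None):
    """Replace each label, with probability p_t, by a different random state.

    p_t is redrawn at every time-step from a beta distribution with mean rho.
    States are assumed to be coded 1..n_states.
    """
    rng = rng or random.Random()
    a, b = beta_params(rho, var)
    noisy = []
    for s in states:
        p_t = rng.betavariate(a, b)
        if rng.random() < p_t:
            # Uniform draw among the states other than the true one.
            others = [k for k in range(1, n_states + 1) if k != s]
            noisy.append(rng.choice(others))
        else:
            noisy.append(s)
    return noisy
```

For example, `corrupt_labels(labels, rho=0.3, var=0.01)` would flip about 30% of the labels on average, since the expected value of \(p_t\) is \(\rho\).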

7.4.1 Inference of hidden states

To assess inference performance in the presence of labelling errors, we relied on the test-set \({\mathcal {S}}_{infer\_test}\) described in Sect. 7.3 (\(M=100\) fully hidden sequences of length \(\ell =1000\)) corresponding to the fully labelled dataset \({\mathcal {P}}_{infer\_test}\).

To generate the training sets, for each N \(\in \{1,\ 10,\ 100\}\), we considered the appropriate precursor set \({\mathcal {P}}_{N\_infer\_train}\) (N fully observed sequences of length \(T=100\)) described in Sect. 7.1.2.

We varied the mean labelling error probability \(\rho\) in \(\{0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95 \}\). For \(N \in \{1,\ 10,\ 100\}\), and each value of \(\rho\), we generated 15 replicates from dataset \({\mathcal {P}}_{N\_infer\_train}\), each time varying the distribution of the wrong labels amongst the observations. The PHMC-MLAR(2) model with 4 states was trained on each of the training sets \({\mathcal {S}}_{N\_\rho \_infer\_train\_1}, \cdots , {\mathcal {S}}_{N\_\rho \_infer\_train\_15}\) thus obtained.

For each trained model, state inference was achieved, which yielded \(M=100\) sequences of predicted labels of length 1000, to be compared with the label sequences within \({\mathcal {P}}_{infer\_test}\) (see Sect. 7.1.1).
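The comparison between inferred and reference label sequences can be sketched as follows. The MPE is defined earlier in the paper. This sketch assumes it is the percentage of time-steps whose inferred state differs from the reference state, averaged over the sequences of the test-set. The function name and the input layout are hypothetical.

```python
def mean_percentage_error(true_seqs, inferred_seqs):
    """Average, over sequences, of the percentage of mislabelled time-steps."""
    if len(true_seqs) != len(inferred_seqs):
        raise ValueError("both collections must hold the same number of sequences")
    per_sequence = []
    for truth, guess in zip(true_seqs, inferred_seqs):
        if len(truth) != len(guess):
            raise ValueError("paired sequences must have the same length")
        # Count the time-steps where the inferred state is wrong.
        wrong = sum(1 for a, b in zip(truth, guess) if a != b)
        per_sequence.append(100.0 * wrong / len(truth))
    return sum(per_sequence) / len(per_sequence)
```

In the setting described above, `true_seqs` would hold the \(M=100\) reference label sequences of length 1000 and `inferred_seqs` the sequences returned by state inference for one trained model.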

Figure 8 presents \(95\%\) confidence intervals for the MPE score as a function of \(\rho\). Note that for all sizes \(N \in \{1,\ 10,\ 100\}\) of training data, the average MPE gradually increases as \(\rho\) tends to 1. Moreover, confidence intervals become tighter as larger training datasets are considered. We also observe that up to \(\rho = 0.7\), the robustness to labelling errors, reflected in a small average MPE and low dispersion, increases with N. However, for \(\rho \ge 0.8\), this trend is reversed and inference performance slightly decreases as N grows.

On the other hand, we underline that the fully unsupervised case outperforms supervised cases in the presence of labelling errors. Nevertheless, up to relatively high labelling error rates (\(\rho =70\%\)), the trade-off between training time and inference performance becomes beneficial for large training datasets. For instance, for \(N=100\), with a \(70\%\)-reliable labelling function (i.e. \(\rho = 0.3\)), the EM algorithm converges after a single iteration against 67 iterations for the unsupervised case; and the resulting model has good inference abilities with an MPE score equal to \(35\%\) on average (see Fig. 8c) against \(5\%\) on average in the unsupervised case. Thus, when analyzing real-world data for which the number of states K and auto-regressive order p are unknown, model selection strategies can capitalize on such labelling functions in order to explore larger grids of values for the hyper-parameters K and p.

\(95 \%\) confidence interval for mean percentage error (MPE) of hidden state inference, as a function of the mean labelling error probability \(\rho\). Models were trained on N sequences, for each of 15 replicates differing by the \(\rho \%\) ill-labelled observations. The average MPE was computed from the 15 replicates. The dashed (red) line indicates the MPE score obtained for the unsupervised learning case. Mind the differences in scales between the three subfigures (Color figure online)

7.4.2 Forecasting

As in Sect. 7.3.2, we considered models trained on a single sequence (\(N = 1\)). Again, for each value of the mean labelling error probability \(\rho\), we used precursor set \({\mathcal {P}}_{N=1\_forecast\_train\_test}\) described in Sect. 7.1.2, and we varied the distribution of wrong labels: 15 replicates (i.e., 15 sequences of length \(T=100\)) were thus generated. Out-of-sample forecasting was carried out at horizons \(T+h\), \(h = 1,\cdots ,10\).

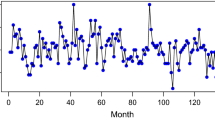

Table 3 presents RMSE scores for different values of mean labelling error \(\rho\) when states are unknown at forecast horizons \(h = 1, \dots , 10\). The results show that at forecast horizons \(h = 1, 2, 5, 6\), the best prediction accuracies are reached when \(\rho = 0\), whereas at the remaining horizons, the highest accuracies are obtained when \(\rho = 0.8\) or 0.9. Figure 9 presents the mean, median and maximum of the prediction errors computed over all forecast horizons as a function of \(\rho\). We observe that the mean and median increase very slightly with \(\rho\), whereas labelling errors exert a greater impact on the maximum values of RMSEs. Therefore, this second experiment also highlights the remarkable robustness to labelling errors in the prediction task, over the whole range of error rates.

Descriptive statistics for the distribution of the root mean square error (RMSE) of prediction, as a function of \(\rho\): \(\rho\) denotes the mean labelling error probability. The forecast horizons are time-steps \(T+1\) to \(T+h\), \(T=100\). The statistics are computed over all horizons. The 95% confidence intervals are shown for the mean (Color figure online)

8 Application to machine health prognostics

In this section, we report experiments on realistic machine condition data available on NASA’s CMAPSS (Commercial Modular Aero-Propulsion System Simulation) repository (https://ti.arc.nasa.gov/tech/dash/groups/pcoe/prognostic-data-repository/#turbofan). The application of interest is data-driven machine health prognostics. This task consists in predicting the Remaining Useful Life (RUL) of a machine: the RUL is the time period beyond which equipment will likely require repair or replacement. The aim of these experiments is two-fold: (i) assess the benefit of adding autoregressive dynamics to the PHMC model as we propose in this work; (ii) compare our model to state-of-the-art methods in the context of machine health prognostics.

The remainder of this section is organized as follows. NASA’s CMAPSS datasets are described in Sect. 8.1. Section 8.2 explains how we predicted RUL using PHMC models, with or without autoregressive dynamics. Finally, Sect. 8.3 assesses the performance of our model on two tasks: h-step ahead feature prediction and RUL prediction. Feature prediction performances are compared for PHMC-MLAR, PHMC and MSAR. RUL prediction performances are compared for PHMC-MLAR, PHMC, and six recent state-of-the-art RUL prediction methods.

8.1 Data description

NASA’s CMAPSS datasets are composed of realistic degradation trajectories of turbofan engines. In our experiments, we used datasets FD001 and FD003. Each dataset is divided into training and testing subsets of 100 trajectories each. FD003 is a more complex case study than FD001 because it includes two fault modes, against a single fault mode for FD001. In fact, it is known that fault occurrences are directly related to the degradation of engine operating conditions so that the number of fault modes increases the diversity of degradation trajectories.

The degradation pattern of each trajectory is represented by 21 features (time series) recorded from 21 sensors. Moreover, for each trajectory, the engine operational state is healthy in the early stage and degrades over time until a failure occurs. At a given time-step, the RUL indicates the time period left before failure. Since the training datasets contain the whole degradation patterns, the RUL value at the last time-step of each training trajectory equals 0. In contrast, for each testing trajectory, only an incomplete (i.e. “partial”) degradation pattern and the RUL (different from 0) associated with the last time-step are available. In Fig. 10, each testing trajectory is represented by a point whose coordinates are its length and its RUL. Note that the difficult cases are characterized by large RUL values and short partial degradation patterns (see the top left-hand side of Fig. 10).

The data-driven machine health prognostics task consists in predicting the RUL of a device, knowing its partial degradation pattern. To build such a predictive method, models are trained on training trajectories and evaluated on testing trajectories.

8.2 Machine health prognostics using PHMC-MLAR models

In the literature on data-driven machine health prognostics, three main approaches are distinguished: case-based reasoning approaches (Wang et al., 2008; Ramasso, 2014), artificial intelligence approaches (Wu et al., 2018; Zhao et al., 2019) and statistical model-based approaches (Javed et al., 2015). The method proposed in this work belongs to the family of statistical model-based approaches. Our method is summarized in Fig. 11. It consists of two complementary modules: the model training module and the RUL prediction module. In the model training module, CMAPSS training datasets were annotated with operational states which were used to feed PHMC[-MLAR] (that is PHMC or PHMC-MLAR models) with partial annotations during model training (see Sect. 8.2.1). Then in the RUL prediction module, the RULs of the testing trajectories were predicted following a three-step procedure (see Sect. 8.2.2).

RUL prediction using PHMC or PHMC-MLAR models: overview of the proposed method. RUL: remaining useful life. In the training phase, we first had to assign partial state annotation to each multivariate training trajectory. We designed a synthetic health indicator, constraining it to roughly decay from 1 (healthy state) to 0 (failure state) over time, for each trajectory. By regressing HI against the features for each time-step of each training trajectory, we obtained one estimated \(\hat{HI}\) time series per trajectory [subfigure (a)]. The segmentation of this time series yielded one sequence of states per trajectory [subfigure (b)]. The four possible states are healthy (1), intermediate (2), faulty (3) and failure (4). The partial annotation \(\Sigma\) of each trajectory was obtained by setting \(\sigma _t\) to \(\{i, j\}\) in each time-step t of windows (of size 11) centered on the switch from state i to state j. Otherwise, \(\sigma _t\) was set to the state obtained through the segmentation. Only the 8 features that are sufficiently informative were retained from the 21 initial features, in the rest of the experiment. To predict a RUL for each testing trajectory, a three-step process was implemented. In step 1, the degradation pattern of each testing trajectory, known from time-step 1 to the trajectory’s length T, was completed from \(T+1\) to \(T+H\) [see subfigure (c)]. H was set at 145, the maximum RUL value observed in the testing dataset. The completion was achieved by sampling from the feature forecasting function described in Eq. 23. Iterating this sampling procedure \(R=100\) times produced R completed degradation patterns per testing trajectory. In step 2, the R patterns of each testing trajectory were each segmented into healthy, intermediate, faulty and failure states using our variant of the Viterbi algorithm. When existing, the switch from faulty to failure state allowed us to estimate the trajectory’s RUL. Otherwise, the RUL estimate was set to the maximum H. In step 3, for each testing trajectory, a final RUL estimate was aggregated from the R estimates previously obtained [see subfigure (d)] (Color figure online)
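The partial annotation step described in the caption above can be sketched as follows. This is an illustration under stated assumptions rather than the original implementation: the window is assumed to be centered on the first time-step spent in the new state j, windows are truncated at the sequence boundaries, and overlapping windows are merged by set union. The function name is hypothetical.

```python
def partial_annotation(states, window=11):
    """Turn a segmented state sequence into sets of admissible states.

    Around each switch from state i to state j, sigma_t is set to {i, j}
    for every time-step t of a window of the given (odd) size; elsewhere
    sigma_t holds the single state obtained through the segmentation.
    """
    half = window // 2
    sigma = [{s} for s in states]
    for t in range(1, len(states)):
        i, j = states[t - 1], states[t]
        if i != j:
            # Assumed centering: t is the first time-step in state j.
            start = max(0, t - half)
            stop = min(len(states), t + half + 1)
            for u in range(start, stop):
                sigma[u] = sigma[u] | {i, j}
    return sigma
```

For a sequence that stays in state 1 for ten time-steps and then in state 2 for ten more, the eleven time-steps around the switch are annotated with the ambiguous set, and all other time-steps keep a single state.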

8.2.1 Engine operational states—model training