Abstract

This paper introduces the hhsmm R package, which provides functions for initializing, fitting, and predicting with hidden hybrid Markov/semi-Markov models (HHSMMs). These flexible models combine Markovian and semi-Markovian states and are suited to situations where the model involves absorbing states or macro-states. Left-to-right models and models with series/parallel networks of states are two examples of models with both Markovian and semi-Markovian states. The hhsmm package also includes the Markov/semi-Markov switching regression model, the auto-regressive HHSMM, nonparametric estimation of the emission distribution using penalized B-splines, and, through the predict function, prediction of future states and estimation of the residual useful lifetime. The commercial modular aero-propulsion system simulation (C-MAPSS) data set is included in the package and is used to illustrate its features. An application of the hhsmm package to the analysis and prediction of Spain's energy demand is also presented.

References

Adam T, Langrock R, Weiß CH (2019) Penalized estimation of flexible hidden Markov models for time series of counts. Metron 77(2):87–104

Azimi M (2004) Data transmission schemes for a new generation of interactive digital television (Doctoral dissertation, University of British Columbia)

Bulla J, Bulla I, Nenadic O (2010) hsmm: an R package for analyzing hidden semi-Markov models. Comput Stat Data Anal 54(3):611–619

Cartella F, Lemeire J, Dimiccoli L, Sahli H (2015) Hidden semi-Markov models for predictive maintenance. Math Probl Eng

Cook AE, Russell MJ (1986) Improved duration modeling in hidden Markov models using series-parallel configurations of states. Proc Inst Acoust 8:299–306

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B (Methodol) 39(1):1–22

Durbin R, Eddy SR, Krogh A, Mitchison G (1998) Biological sequence analysis: probabilistic models of proteins and nucleic acids. Cambridge University Press, Cambridge

Fontdecaba S, Muñoz MP, Sànchez JA (2009) Estimating Markovian switching regression models in R: an application to model energy price in Spain. In: The R User Conference, France

Guédon Y (2005) Hidden hybrid Markov/semi-Markov chains. Comput Stat Data Anal 49(3):663–688

Harte D (2006) Mathematical background notes for package “HiddenMarkov”. Statistics Re.

Jackson C (2007) Multi-state modelling with R: the msm package. Cambridge, UK, pp 1–53

Kim CJ, Piger J, Startz R (2008) Estimation of Markov regime-switching regression models with endogenous switching. J Econom 143(2):263–273

Langrock R, Kneib T, Sohn A, DeRuiter SL (2015) Nonparametric inference in hidden Markov models using P-splines. Biometrics 71(2):520–528

Langrock R, Adam T, Leos-Barajas V, Mews S, Miller DL, Papastamatiou YP (2018) Spline-based nonparametric inference in general state-switching models. Statistica Neerlandica 72(3):179–200

Li J, Li X, He D (2019) A directed acyclic graph network combined with CNN and LSTM for remaining useful life prediction. IEEE Access 7:75464–75475

Lloyd S (1982) Least squares quantization in PCM. IEEE Trans Inf Theory 28(2):129–137

O'Connell J, Højsgaard S (2011) Hidden semi Markov models for multiple observation sequences: the mhsmm package for R. J Stat Softw 39(4):1–22

R Development Core Team (2010) R: a Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria

Schellhase C, Kauermann G (2012) Density estimation and comparison with a penalized mixture approach. Comput Stat 27:757–777

Van Buuren S, Groothuis-Oudshoorn K (2011) mice: multivariate imputation by chained equations in R. J Stat Softw 45:1–67

Visser I, Speekenbrink M (2010) depmixS4: an R package for hidden Markov models. J Stat Softw 36(7):1–21

Viterbi A (1967) Error bounds for convolutional codes and an asymptotically optimum decoding algorithm. IEEE Trans Inf Theory 13(2):260–269

Acknowledgements

The authors would like to thank the two anonymous referees and the associate editor for their useful comments and suggestions, which improved an earlier version of the hhsmm package and this paper.

Appendix

1.1 Forward-backward algorithm for the HHSMM model

Denote the sequence \(\{Y_{t},\ldots ,Y_{s}\}\) by \(Y_{t:s}\) and suppose that the observation sequence \(X_{0:\tau -1}\), with the corresponding hidden state sequence \(S_{0:\tau -1}\), is observed. The forward-backward algorithm computes the probabilities

\[L_j(t) = P(S_t = j \mid X_{0:\tau -1} = x_{0:\tau -1})\]

within the E-step of the EM algorithm. These probabilities are obtained in the backward recursion of the forward-backward algorithm.

For a semi-Markovian state j, and \(t=0,\ldots ,\tau -2\), the forward recursion computes the following probabilities (Guédon 2005),

and

where the normalizing factor \(N_t\) is computed as follows

For a Markovian state j, and for \(t = 0\),

and for \(t = 1,\ldots ,\tau -1\)

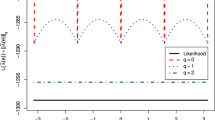
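For the special case in which every state is Markovian (a plain hidden Markov model), the scaled forward recursion can be sketched in a few lines of Python. This is only an illustration and not the hhsmm implementation: the function name `scaled_forward`, the argument layout, and the toy numbers are assumptions, and the emission densities `B[t][j]` are taken as already evaluated. In this special case, summing \(\log N_t\) over \(t\) gives the log-likelihood of the observations.

```python
import math

def scaled_forward(pi, P, B):
    """Scaled forward recursion for a plain HMM (all states Markovian).

    pi[j]   : initial probability of state j
    P[i][j] : transition probability from state i to state j
    B[t][j] : emission density of observation t under state j (precomputed)
    Returns F[t][j] = P(S_t = j | x_0, ..., x_t) and the normalizing factors N[t].
    """
    J = len(pi)
    F, N = [], []
    for t, b in enumerate(B):
        if t == 0:
            unnorm = [b[j] * pi[j] for j in range(J)]
        else:
            prev = F[-1]
            unnorm = [b[j] * sum(P[i][j] * prev[i] for i in range(J))
                      for j in range(J)]
        n_t = sum(unnorm)          # normalizing factor N_t
        N.append(n_t)
        F.append([u / n_t for u in unnorm])
    return F, N

# Toy example with two states and three observations (hypothetical numbers).
pi = [0.6, 0.4]
P = [[0.7, 0.3], [0.2, 0.8]]
B = [[0.9, 0.2], [0.1, 0.7], [0.8, 0.3]]
F, N = scaled_forward(pi, P, B)
loglik = sum(math.log(n) for n in N)
```

Normalizing at every step keeps the forward quantities on the probability scale, which avoids numerical underflow on long sequences.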

The log-likelihood of the model is then

\[\log P(X_{0:\tau -1} = x_{0:\tau -1}) = \sum _{t=0}^{\tau -1} \log N_t,\]

which is used as a criterion for convergence of the EM algorithm and to evaluate the quality of the model. The backward recursion is initialized by

For a semi-Markovian state j, we have

and for \(t = \tau -2,\ldots ,0\),

where

and

with

For a Markovian state j and for \(t = \tau -2,\ldots ,0\)

Furthermore, if a mixture of multivariate normal distributions with probability density function

is considered as the emission distribution, then the following probabilities of the mixture components are computed in the E-step of the \((s+1)\)th iteration of the EM algorithm

where \(\lambda _{kj}^{(s)}\), \(\mu _{kj}^{(s)}\) and \(\Sigma _{kj}^{(s)}\) are the sth updates of the emission parameters in the M-step of the sth iteration of the EM algorithm.
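To make the role of the mixture-component probabilities concrete, the following Python sketch computes the posterior probability of each component given a single observation, within one state. It is a simplified illustration under stated assumptions: the components are univariate normals rather than multivariate ones, the names `normal_pdf` and `component_probs` are hypothetical, and any additional weighting by state probabilities used in the package is not shown.

```python
import math

def normal_pdf(x, mu, sigma2):
    """Density of a univariate normal with mean mu and variance sigma2."""
    return math.exp(-0.5 * (x - mu) ** 2 / sigma2) / math.sqrt(2.0 * math.pi * sigma2)

def component_probs(x, weights, means, variances):
    """Posterior probability of each mixture component given x, within one state.

    weights, means, variances play the roles of the current updates of
    lambda_kj, mu_kj and Sigma_kj for a fixed state j (univariate case).
    """
    dens = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
    total = sum(dens)
    return [d / total for d in dens]

# Two equally weighted components centred at -1 and 1 (hypothetical numbers).
gamma = component_probs(0.0, [0.5, 0.5], [-1.0, 1.0], [1.0, 1.0])
gamma_right = component_probs(0.9, [0.5, 0.5], [-1.0, 1.0], [1.0, 1.0])
```

An observation halfway between the two means is assigned equally to both components, while an observation near the second mean is assigned mostly to the second component.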

1.2 The M-step of the EM algorithm

In the M-step of the EM algorithm, the initial probabilities are updated as follows

For a semi-Markovian state i, the transition probabilities are updated as follows

and for a Markovian state i

If we consider the mixture of multivariate normals, with the probability density function (A.27), as the emission distribution, then its parameters are updated as follows

Also, the parameters of the sojourn time distribution are updated by maximization of the following quasi-log-likelihood function

where

and \(u_{\tau } = \min (u,\tau )\).
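The mixture update in this subsection can be illustrated by a small Python sketch, assuming the usual weighted-average form of the EM updates for a normal mixture. It covers only the univariate case for a single state; the function name `update_mixture` and the weight array `resp` (hypothetically, the E-step weight of component k at time t) are assumptions for illustration, not the package's interface.

```python
def update_mixture(xs, resp):
    """Weighted M-step update of a univariate normal mixture for one state.

    xs      : observations x_0, ..., x_{tau-1}
    resp[t] : list of nonnegative weights, one per mixture component, at time t
    Returns updated mixing weights, means and variances.
    """
    K = len(resp[0])
    totals = [sum(r[k] for r in resp) for k in range(K)]
    grand = sum(totals)
    weights = [tot / grand for tot in totals]
    means = [sum(r[k] * x for r, x in zip(resp, xs)) / totals[k]
             for k in range(K)]
    variances = [sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, xs)) / totals[k]
                 for k in range(K)]
    return weights, means, variances

# Hard assignments make the result easy to check by hand (hypothetical data).
xs = [0.0, 2.0, 10.0, 12.0]
resp = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
weights, means, variances = update_mixture(xs, resp)
```

With these hard assignments the update reduces to per-group proportions, sample means, and (biased) sample variances.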

1.3 Viterbi algorithm and smoothing for the HHSMM model

The Viterbi algorithm (Viterbi 1967) is an algorithm to obtain the most likely state sequence, given the observations and the estimated parameters of the model.
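As a point of reference, the classical Viterbi recursion for a model whose states are all Markovian can be sketched in Python as follows. This is a minimal illustration, not the hhsmm implementation: the name `viterbi` and the toy numbers are assumptions, the emission densities `B[t][j]` are taken as precomputed, and all probabilities are assumed strictly positive so that logarithms are defined.

```python
import math

def viterbi(pi, P, B):
    """Most likely state path of a plain HMM, computed in the log domain.

    pi[j], P[i][j], B[t][j] are initial, transition and emission values,
    all assumed strictly positive. Returns (path, log-probability of path).
    """
    J = len(pi)
    delta = [math.log(pi[j]) + math.log(B[0][j]) for j in range(J)]
    back = []                                  # back-pointers for t = 1, 2, ...
    for b in B[1:]:
        step, new_delta = [], []
        for j in range(J):
            best_i = max(range(J), key=lambda i: delta[i] + math.log(P[i][j]))
            step.append(best_i)
            new_delta.append(delta[best_i] + math.log(P[best_i][j]) + math.log(b[j]))
        back.append(step)
        delta = new_delta
    state = max(range(J), key=lambda j: delta[j])
    path = [state]
    for step in reversed(back):                # trace the back-pointers
        state = step[state]
        path.append(state)
    path.reverse()
    return path, max(delta)

# Toy example with two states and three observations (hypothetical numbers).
pi = [0.6, 0.4]
P = [[0.7, 0.3], [0.2, 0.8]]
B = [[0.9, 0.2], [0.1, 0.7], [0.8, 0.3]]
path, logp = viterbi(pi, P, B)
```

Working with sums of logarithms instead of products of probabilities avoids underflow for long sequences.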

For a semi-Markovian state j, and for \(t=0,\ldots ,\tau -2\), the probability of the most probable state sequence is obtained by the Viterbi recursion as follows

and

For a Markovian state j, the Viterbi recursion is initialized by

and for \(t = 1,\ldots ,\tau -1\),

After obtaining the probability of the most probable state sequence, the current most likely state is obtained as \({\hat{s}}_t^* =\arg \max _{1\le j\le J} \alpha _j(t)\).

Another approach for obtaining the state sequence is the smoothing method, which uses the backward probabilities \(L_j(t)\) instead of \(\alpha _j(t)\).
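The smoothing approach can be illustrated for the special case of a plain hidden Markov model, in which all states are Markovian. The Python sketch below runs a scaled forward pass and then a backward pass that yields the smoothed probabilities \(L_j(t)\); the state at each time is then chosen as the one with the largest smoothed probability. The function name `smooth` and the toy numbers are assumptions, and the sketch does not reproduce the semi-Markovian recursions of the package.

```python
def smooth(pi, P, B):
    """Smoothed state probabilities L[t][j] = P(S_t = j | all observations)
    for a plain HMM with precomputed emission densities B[t][j]."""
    J, T = len(pi), len(B)
    # Forward pass: filtered probabilities F[t][j] = P(S_t = j | x_0..x_t).
    F = []
    for t in range(T):
        if t == 0:
            u = [B[0][j] * pi[j] for j in range(J)]
        else:
            u = [B[t][j] * sum(P[i][j] * F[-1][i] for i in range(J))
                 for j in range(J)]
        n = sum(u)
        F.append([v / n for v in u])
    # Backward pass, started from the last filtered probabilities.
    L = [None] * T
    L[T - 1] = F[T - 1][:]
    for t in range(T - 2, -1, -1):
        # G[k] = P(S_{t+1} = k | x_0..x_t), the one-step-ahead prediction.
        G = [sum(P[i][k] * F[t][i] for i in range(J)) for k in range(J)]
        L[t] = [F[t][j] * sum(P[j][k] * L[t + 1][k] / G[k] for k in range(J))
                for j in range(J)]
    return L

# Toy example with two states and three observations (hypothetical numbers).
pi = [0.6, 0.4]
P = [[0.7, 0.3], [0.2, 0.8]]
B = [[0.9, 0.2], [0.1, 0.7], [0.8, 0.3]]
L = smooth(pi, P, B)
smoothed_states = [max(range(len(pi)), key=lambda j: row[j]) for row in L]
```

Unlike the Viterbi path, the smoothed states maximize each marginal probability separately, so the resulting sequence is not guaranteed to be the jointly most probable one.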

Cite this article

Amini, M., Bayat, A. & Salehian, R. hhsmm: an R package for hidden hybrid Markov/semi-Markov models. Comput Stat 38, 1283–1335 (2023). https://doi.org/10.1007/s00180-022-01248-x

DOI: https://doi.org/10.1007/s00180-022-01248-x