Abstract

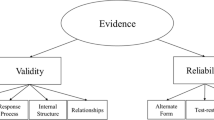

We present the validation of a questionnaire for compulsory secondary education (ESO) students (seventh to tenth grade), designated “Educational learning environments for ESO pupils” (CEApA_ESO), for evaluating learning environments. Although many instruments have been developed in this area, our work attempts to cover comprehensively some of the factors that most influence learning environments from the students’ perspective. We therefore included physical, learning, teaching and motivational elements, adapting different previously validated scales to our overall approach and to the Spanish context. We conducted a pilot study with 207 students from four grades (two classes per grade). We performed descriptive analyses and factor analyses with maximum likelihood extraction and varimax rotation to identify the factors underlying each scale. The factors extracted from each scale were then used to evaluate model fit through structural equation analysis in AMOS v.18, taking as reference the criteria set by Byrne (Structural equation modeling with AMOS: Basic concepts, applications, and programming, Taylor & Francis Group, 2010) and Kline (Principles and practice of structural equation modeling, The Guilford Press, 2010) (CMIN/DF between 2 and 5, CFI and IFI > 0.9, RMSEA < 0.06 and HOELTER > 200). Finally, we present the factorial validity of the complete scale and analyse the internal consistency of the scale and its subscales using Cronbach’s alpha coefficient. With adequate psychometric properties, this instrument offers educators and researchers a valid tool for assessing the learning environments of their schools.


Introduction

School improvement is one of the greatest political and social concerns of all time (Cabrera, 2020; Feldhoff et al., 2016). Responding to this challenge requires an educational model in which inclusion becomes a benchmark objective (UNESCO, 2021). To achieve this, among other things, we need to review learning environments. These environments are vital to students’ holistic development during their time at school; for schools, they are a source of motivation and a factor that influences students’ learning strategies and levels of engagement (Cayubit, 2022).

At the same time, the literature points out that low commitment to studies, lack of identification with them, inequality of opportunity and complex peer relationships are recurring factors that, in many cases, end up pushing students towards dropping out, school failure, vulnerability or marginalisation (Anderson & Beach, 2022; González-Falcón et al., 2016; González-Losada et al., 2015; Méndez & Cerezo, 2018; Tarabini & Curran, 2015). The aim is to address the lack of action against the structural inequalities and situations of disadvantage that hinder progress towards Education for All. The socio-economic conditions of the neighbourhood, the size of the school, the instability of teachers’ jobs and a lack of participation in growth projects and initiatives, among other factors, mean that each school’s starting point differs depending on its context (UNESCO, 2021).

The importance of students’ perceptions of their environments as predictors of their school performance is corroborated by publications such as those by Cai et al. (2022), Fraser (2012), Harinarayanan and Pazhanivelu (2018) and Sinclair and Fraser (2002), and by the Report on the State System of Education Indicators of the Spanish Ministry of Education and Vocational Training (MEYFP, 2021a), which uses data on schooling and the educational environment as essential categories for diagnosing the state of the education system, based on OECD budgets and with reference to the European Education Area (2021–2030).

The malleable nature attributed to learning environments in the literature makes it necessary to understand and improve them. Doing so on the basis of learners’ perceptions (through either qualitative or quantitative procedures) is one of the strategies that researchers such as Gençoglu et al. (2022), Goulet and Morizot (2022) and Korpershoek et al. (2020) have found helpful for assessing the different dimensions that make up learning environments. Drawing on the evaluation of physical aspects, the quality of teachers’ teaching, students’ relationships with the environment as they construct new learning, and self-determination theory, our work focused on validating an instrument, for use by the scientific community, with sound psychometric properties across the dimensions attributed to learning environments. As Gençoglu et al. (2022) state, our work is one more snapshot in this field of study, adapted to the Spanish context in which it took place. Our work also reports the opinions of secondary school students on overcoming barriers related to socio-emotional issues, interpersonal relationships and educational inclusion, and on developing critical thinking about the physical conditions in which they learn. All of this must lead to an approach that clearly recognises the learning environment as a key factor in determining how students are taught and learn, and whether these processes can be considered high quality (optimal relationships with teachers, a sense of belonging to the school, a high level of commitment, etc.) (Fatou & Kubiszewski, 2018).

In our context, it is worrying that a high percentage of young people have only basic education (14.8% in the OECD; 12.3% in the EU22) and that the Secondary Education graduation rate in Spain is 74.7% (MEYFP, 2021b). Furthermore, we must not forget that entry into ESO coincides with a stage of life marked by psychological, biological and emotional transformation, in which characteristic adolescent needs emerge, including the need to belong to a group, to be accepted and to see oneself reflected in a peer group (Sullivan et al., 2005, cited by Méndez & Cerezo, 2018).

Thus, the ultimate purpose of this research was to provide a valid tool to assess students’ perceptions of learning environments. Its application is likely to provide relevant information for decision making in secondary schools, contributing to the improvement of teaching quality and the educational response to diversity (inclusion and learning), which can lead to improvements in indicators of school success, continuation of studies and better school performance.

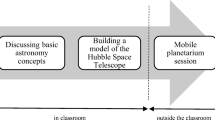

Learning environments

Work on learning environments dates back to Lewin (1936), who acknowledged that both the environment and its interaction with the individual’s personal characteristics play an important role in determining behaviour. Building on this work, the literature has contributed to defining the concept, using the terms educational or learning environments or spaces interchangeably. Multiple definitions have been compiled by Chinujinim and Amaechi (2019), Johnson and McClure (2004) and Sarioğlan (2021), among others. These authors identify different elements that help in understanding the dimensions of the concept and present it as a multidimensional space or place (classrooms, library, laboratories, recreation areas, etc.) with its own characteristics (equipment, facilities, etc.), in which students learn to interact and communicate (developing attitudes, values, skills, motivations, etc.) through different strategies (methodologies, procedures, resources, etc.) with the other agents who share this space (peer groups, teachers, management team, specialists, etc.), while contributing to the formative and socialisation process.

This multifactorial character has been analysed in research studies focused on designing and validating instruments that measure or evaluate its impact through student or teacher perceptions (Skordi & Fraser, 2019). The pioneering work of Moos (1974) established the first scales related to social factors that affect the classroom, although other instruments have also had a high impact on educational research, as summarised by Fraser (2012). In his review, Fraser not only compiles questionnaires such as the Learning Environment Inventory (LEI), Classroom Environment Scale (CES), Individualised Classroom Environment Questionnaire (ICEQ), College and University Classroom Environment Inventory (CUCEI), My Class Inventory (MCI), Questionnaire on Teacher Interaction (QTI), Science Laboratory Environment Inventory (SLEI), Constructivist Learning Environment Survey (CLES) and What Is Happening In this Class? (WIHIC) questionnaire, reviewing their validity and use, but also analyses past lines of research on learning environments and reviews comparative studies between countries.

Among the research focused on secondary education are works such as those by Aldridge et al. (2006), who used scales from several of the most frequently used instruments (WIHIC, ICEQ and CLES) to create the Outcomes-Based Learning Environment Questionnaire (OBLEQ), whose factors (participation, research, cooperation, equity, differentiation, personal relevance, and responsibility for one’s own learning) are essential in evaluating the environment in which secondary students learn. Gilman et al. (2021) offered an adaptation of the 5 Essentials Survey developed by Chicago Public Schools (CPS), currently used in 22 US states and structured around five factors (supportive environment, ambitious instruction, effective leaders, collaborative teachers and involved families), from which seven factors emerged (safety, confidence/trust, support, personalisation of teaching, clarity, English teaching and mathematics instruction). In turn, Bradshaw et al. (2014) designed an instrument based on a theoretical model in which school climate comprises three factors with 13 sub-domains: security (perceived safety, intimidation and aggression, and drug use); participation (engagement with teachers, student connectedness, academic participation, connection with the school, equity and parent involvement); and environment (rules and consequences, comfort and physical support, and untidiness). Along the same lines, Zullig et al. (2015) contributed to the validation of the School Climate Measure (SCM) by adding two more recently developed domains (family involvement and opportunities for student involvement) to the original eight (positive student–teacher relationships, school connectedness, academic support, order and discipline, physical environment, social environment, perceived exclusion, and academic satisfaction). Bear et al. (2011) provide the Delaware School Climate Survey–Student (DSCS-S) to assess school climate through five factors: teacher–student relationships, student–student relationships, fairness of rules, school liking and school safety.

Núñez et al. (2012) carried out a translation and adaptation of the Learning Climate Questionnaire (LCQ) (Williams & Deci, 1996), emphasising the importance of support for autonomy in the educational context as a key element in the teaching process in university studies. This was also approached by León et al. (2017) through the design and validation of a scale to evaluate the quality of teacher training as perceived by secondary school students with nine factors: teaching for relevance, acknowledging negative feelings, encouraging participation, controlling language, optimal challenge, process focus, classroom structure, positive feedback and support.

Another factor highlighted in the literature is motivation. Suarez et al. (2019) applied the Homework Survey, which includes intrinsic motivation, perceived usefulness and attitude towards homework as student motivational variables, to secondary students and found that intrinsic motivation is a precursor of students’ behavioural engagement, which is in turn related to school performance. Along the same lines, Mikami et al. (2017) provided an in-depth analysis of peer relationships in secondary school as a predictor of both students’ behavioural engagement and academic performance. To this end, they applied two instruments: one measuring peer relationships and sense of belonging (4 items), and the other measuring engagement and behavioural disaffection (5 items).

In the university context, there are also several notable studies. Peng (2016) adapted an instrument designed by Pielstick (1988) to identify the physical, teaching, learning and motivational components of the environment. This structure was used as the reference for this study because, on the one hand, it is consistent with the variables analysed in the reference project and meets the defining characteristics of the concept mentioned previously (Chinujinim & Amaechi, 2019; Johnson & McClure, 2004; Sarioğlan, 2021). On the other hand, its dimensions are general ones that bring together most of the factors identified in other instruments designed for the secondary education stage. Finally, researchers such as Postareff et al. (2015) note how students’ perceptions of the teaching–learning environment influence their ways of learning. Barrett et al. (2013), Kweon et al. (2017) and Yerdelen and Sungur (2018) reported that the physical environment of the school can shape the ways students relate to teachers and peers, teaching methodology and communication, as well as pupils’ perceptions of safety at school (Bradshaw et al., 2015). Poondej and Lerdpornkulrat (2016) also related learning environments to students’ personal characteristics and the way they approach their learning. Yang et al. (2013), Castro-Pérez and Morales-Ramirez (2015) and Besançon et al. (2015) also emphasised the importance of motivational factors as determinants of students’ learning processes.

Based on the review of the literature on evaluation instruments developed entirely in English-speaking contexts, and by adopting scales associated with some of them, we aimed to provide another tool that allows, on the one hand, assessment of secondary school students’ perceptions of the learning processes that take place across the different dimensions that define learning environments and, on the other, reflection on decision making to meet the current challenges of the educational system. Studying the learning environment through learners’ perceptions allows us to discern their own views (they are arguably the most accurate judges of this reality, given the hours they spend in it) and to identify information that outside observers might consider insignificant or overlook (Fraser, 2012).

Our questionnaire is based on the ecological classroom model proposed by Doyle (2006, cited in Peng, 2016). This model considers three paradigms (Doyle, 1977, cited in Peng, 2016): the process–product paradigm (which emphasises teacher variables as determinants of student outcomes); the mediating process paradigm (which emphasises student response variables as determinants of learning outcomes); and the ecological classroom paradigm (which emphasises the mutual relationships between environmental demands and responses in natural classroom settings). On this basis, we selected four scales for our research: the physical, learning, teaching and motivational environment scales proposed by Peng (2016). For the physical environment scale, previous studies (mentioned above) show the importance and influence of the physical environment. Malaguzzi (1995), for example, points out the influence of space on learning processes and describes it as the third teacher.

For the teaching and learning scales, we used some scales from the WIHIC questionnaire of Aldridge et al. (1999). This instrument is oriented towards outcomes-based education, focusing on what students are expected to learn and how to make those outcomes measurable through assessment. Advantages of the WIHIC are that it provides us with meaningful information and feedback for teaching practice, allowing us to identify classroom characteristics in order to improve learning environments (Khine, 2002). This instrument has been widely used in cross-national comparative studies, and other scales have been added to it depending on the purposes of the study and contexts, such as equality and constructivism (Fraser, 2012). This versatility also led us to choose to use the WIHIC.

Previous studies (Aldridge et al., 1999) identified useful information about learning environments and dimensions that could be modified to improve learning outcomes, but they did not identify causal factors associated with learning environments. This is why we included the motivation scale of Jang et al. (2012), based on the theory of self-determination (Deci & Ryan, 1985). According to the theory, motivation presents different levels and orientations that do not act as watertight compartments, but form a continuum from amotivation to intrinsic motivation (Deci & Ryan, 1985). The different types of motivation identified, from the lowest to the highest degree of self-determination, are: amotivation, four forms of extrinsic motivation (external, introjected, identified and integrated) and intrinsic motivation (IM). According to Ryan and Deci (2000), IM is defined as engagement in an activity fully and freely, while extrinsic motivation (EM) consists of varying degrees of involvement in an activity in order to receive an external incentive. Building on this framework, previous studies highlight the importance of designing environments that promote IM and explore the relationship between learning environments and motivation (Müller & Louw, 2004) in order to improve learning outcomes. Likewise, this scale allows us to study students’ academic satisfaction, which depends to a great extent on the motivational climate of the classroom and on how students’ basic psychological needs (competence, autonomy and social relations) are met by their teachers (Tomás & Gutiérrez, 2019), who are the primary drivers of their learning (Schumacher et al., 2013).

Objectives

The general aim of this study was to design and validate an instrument to assess learning environments in secondary education, while analysing students’ perceptions of four key factors: physical, learning, teaching and motivational environment.

Method

We used a quantitative, cross-sectional research design with the survey method to gather information and apply deductive approaches. The theoretical background reviewed in this paper was used for the initial structure of the questionnaire and subsequent identification of the factors underlying each of the proposed scales.

Population and sample

Because we are presenting the results of the pilot study, our reference population consisted of the ESO students of a secondary school in the city of Huelva (N = 429), from which participants were randomly selected using SPSS. The sample size calculation (95% confidence level and 5% margin of error) indicated a required sample of 204. The school’s ESO pupils were distributed across four grades, each with four class groups. We administered the surveys in person to two randomly selected groups from each grade, arranging appointments in advance during tutoring hours. The final sample consisted of 207 students (51.2% male; 48.8% female), distributed by grade as follows: first year (24.8%); second year (24.3%); third year (29.1%); and fourth year (21.8%).
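The text does not state which formula produced the required sample size. As a hedged illustration, the sketch below applies the common finite-population formula for estimating a proportion, assuming maximum variability (p = 0.5) and z = 1.96 for 95% confidence; the function name and defaults are our own. With N = 429 and a 5% margin of error it gives 203, close to the 204 reported in the text, so the authors may have used a slightly different formula or rounding rule.

```python
import math


def finite_population_sample_size(N: int, z: float = 1.96, p: float = 0.5, e: float = 0.05) -> int:
    """Minimum sample size for estimating a proportion in a finite population.

    Assumed formula: n = N * z^2 * p * q / (e^2 * (N - 1) + z^2 * p * q), with q = 1 - p.
    The result is rounded up to the next whole respondent.
    """
    q = 1.0 - p
    numerator = N * z**2 * p * q
    denominator = e**2 * (N - 1) + z**2 * p * q
    return math.ceil(numerator / denominator)


if __name__ == "__main__":
    # N = 429 ESO students, 95% confidence (z = 1.96), 5% margin of error
    print(finite_population_sample_size(429))  # 203
```

For a very large population the same function approaches the familiar infinite-population value of 385.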

Instrument

To evaluate the learning environments, a questionnaire was created consisting of four scales (physical environment, learning, teaching and motivational environment) with a total of 63 items arranged in Likert format with a range from 1 to 5 (1 = never; 5 = almost always).

Two validated instruments were used to construct the first scale, on the physical environment: the Physical Environment subscale of the School Climate Survey by Zullig et al. (2015) (Items 1 to 7), to assess perceptions of the school’s physical facilities; and the School Liking subscale of the Delaware School Climate Survey–Student (DSCS-S) by Bear et al. (2011) (Items 8 to 12), to assess appreciation of the school. This scale assesses whether the facilities and resources are sufficient and adequate and whether students feel safe at and proud of their school. It is linked to Sustainable Development Goal 4, which sets targets for providing the resources needed to ensure high-quality, inclusive and holistic education and equitable access to education (UNESCO, 2021).

For the learning scale, the WIHIC (Aldridge et al., 1999), a validated and widely used instrument, was taken as a reference. Specifically, Items 13 to 31 were selected to study relationships within the environment and how students construct new learning (student cohesion, task orientation and cooperation). For the teaching scale, the WIHIC (Aldridge et al., 1999) was again used to select Items 32 to 42 from two dimensions (support and equality), and the instrument designed by León et al. (2017) was used to select Items 45 to 51, relating to the structuring of classes. Finally, the motivation scale was based on the work of Jang et al. (2012), who developed an instrument that has recently been translated and validated in Spanish by Núñez and León (2019). Its 12 items (52 to 63) assess agentic, behavioural, emotional and cognitive engagement.

The English-language scales were translated by a professional native translator, and any discrepancies found by the authors were discussed until consensus was reached. The resulting instrument was then subjected to content assessment by a panel of experts consisting of five university professors specialising in quantitative methodology and questionnaire validation and two secondary school teachers. The experts were given the definition of each construct and asked to rate the relevance of each item to its construct on a Likert scale (1 = not relevant at all; 4 = highly relevant). The experts’ suggestions were taken into consideration.

After this procedure, Cronbach’s alpha reliability for the 63 items was found to be 0.95.
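For reference, Cronbach’s alpha for k items is α = k/(k − 1) · (1 − Σσᵢ²/σ²ₓ), where σᵢ² is the variance of item i and σ²ₓ is the variance of the total score. The minimal sketch below computes it from item columns in plain Python; the toy scores are hypothetical, and this is not the SPSS routine the authors used. Population variances are used in both the numerator and the denominator, so the n versus n − 1 choice cancels out.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for a list of item columns.

    Each inner list holds one item's scores for the same respondents, in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    # total score of each respondent across all items
    totals = [sum(col[i] for col in items) for i in range(n)]
    sum_item_var = sum(pvariance(col) for col in items)
    return (k / (k - 1)) * (1 - sum_item_var / pvariance(totals))


# Hypothetical 1-5 Likert responses: 3 respondents x 2 items
toy = [[1, 2, 3], [1, 3, 2]]
print(round(cronbach_alpha(toy), 4))  # 0.6667
```

When every item is identical the function returns 1, the upper bound for perfectly consistent items.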

Data gathering and analysis procedures

Prior contact was established with the school to ensure that the instrument was administered within one week. The researchers attended the selected classrooms individually at the agreed times and had 20 minutes to administer the questionnaire. We then analysed the participating students’ perceptions in terms of how frequently they considered that a series of indicators occurred in relation to the different environments studied. To this end, responses to each item were examined through descriptive analysis: the mean of each item was obtained as a measure of central tendency and, as measures of dispersion, the standard deviation and the minimum and maximum values. The homogeneity of each item was also studied through its corrected item-total correlation, which allowed the least consistent items in each scale to be identified.
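The item-level statistics described above can be sketched as follows. The corrected item-total correlation correlates each item with the sum of the *other* items, so that an item is not correlated with itself. The function names and toy data are hypothetical; the standard deviation uses the n − 1 denominator, as in SPSS’s descriptive output.

```python
from statistics import mean, stdev


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; returns NaN if either variable is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / (sxx * syy) ** 0.5


def item_descriptives(items: list[list[float]]) -> list[dict]:
    """Per item: mean, SD (n - 1), min, max and corrected item-total correlation."""
    n = len(items[0])
    totals = [sum(col[i] for col in items) for i in range(n)]
    rows = []
    for col in items:
        rest = [t - x for t, x in zip(totals, col)]  # total score without this item
        rows.append({
            "mean": mean(col),
            "sd": stdev(col),
            "min": min(col),
            "max": max(col),
            "r_it_corrected": pearson(col, rest),
        })
    return rows


# Hypothetical scores (5 respondents x 4 items); the fourth item runs against the others
toy = [[1, 2, 3, 4, 5], [2, 2, 3, 5, 5], [1, 3, 3, 4, 4], [5, 4, 2, 2, 1]]
for j, row in enumerate(item_descriptives(toy), start=1):
    print(j, {k: round(v, 2) for k, v in row.items()})
```

A negative value in the last column flags an item that measures in the opposite direction to the rest of its scale.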

To study the psychometric properties of the instrument created, the following statistical techniques were applied:

- We submitted each scale to a factor analysis with maximum likelihood extraction and varimax rotation in order to identify the factors underlying each scale.

- Using the extracted factors, we assessed model fit through structural equation modelling in AMOS v.18, taking as reference the criteria established by Byrne (2010) and Kline (2010): CMIN/DF between 2 and 5, CFI and IFI > 0.9, RMSEA < 0.06 and HOELTER > 200.

- We examined the factorial validity of the complete scale, together with the internal consistency of the whole scale and its subscales, using Cronbach’s alpha coefficient.

All other analyses were performed with SPSS v25.
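As a compact restatement, the fit thresholds listed above can be encoded as a checklist. This is only a sketch: the function name is hypothetical, and treating the CMIN/DF bounds as inclusive is our assumption, since the text does not say.

```python
def check_fit(cmin_df: float, cfi: float, ifi: float, rmsea: float, hoelter: float) -> dict[str, bool]:
    """Compare fit indices with the cut-offs listed in the text (CMIN/DF bounds assumed inclusive)."""
    return {
        "CMIN/DF between 2 and 5": 2 <= cmin_df <= 5,
        "CFI > 0.9": cfi > 0.9,
        "IFI > 0.9": ifi > 0.9,
        "RMSEA < 0.06": rmsea < 0.06,
        "HOELTER > 200": hoelter > 200,
    }


# Hypothetical indices for illustration only
result = check_fit(cmin_df=2.4, cfi=0.95, ifi=0.95, rmsea=0.05, hoelter=250)
print(all(result.values()))  # True
print([name for name, ok in check_fit(2.4, 0.95, 0.95, 0.05, 176).items() if not ok])  # ['HOELTER > 200']
```

Returning a per-criterion dictionary, rather than a single pass/fail flag, makes it easy to report which index falls short.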

Results

Students’ perceptions of the proposed environments: physical, learning, teaching and motivational

For all items and scales described below, the observed minimum was 1 and the observed maximum was 5, with sufficient variability to contribute to a high reliability coefficient.

Descriptive analysis of physical environment scale

As can be seen in Table 1, the highest scores were for “The sports facilities are sufficient” (4.18) and “I feel safe in my school” (3.85): pupils perceived the sports facilities as sufficient and generally feel safe. Moreover, scores on these items (SD = 0.90) were fairly homogeneous around the mean. The lowest scores were for “I would rather have gone to another school” (1.98), “In summer, the air conditioning works properly” (1.90) and “In winter, the heating system works properly” (2.14). The mean for the first of these indicates that students would never or rarely have preferred to go to another school; in general, therefore, they have a positive perception of their school. By contrast, they do not perceive the heating and air conditioning as working properly. However, the standard deviations for these items show considerable variation (i.e. very different perceptions of these statements). The remaining items obtained neutral scores, although with a wide dispersion of responses, which indicates differing perceptions.

In the item-total correlations, we identified two items with negative correlations (Items 9 and 12), suggesting that these items do not measure in the same direction as the rest of the scale.

Descriptive analysis of environmental aspects of learning scale

For the environmental aspects of learning, high scores were concentrated in the following items: “I make friends with my classmates” (mean = 4.34; SD = 0.91); “I get on well with my classmates” (mean = 4.34; SD = 0.80); “I work well with my classmates” (mean = 4.07; SD = 0.92); “It is important to keep my tasks up-to-date” (mean = 4.33; SD = 0.99); “I am punctual” (mean = 4.06; SD = 1.17); “I try to understand the work I have to do” (mean = 4.30; SD = 0.85); and “I know how much work I have to do” (mean = 4.19; SD = 0.93). All of these items had means above 4. Thus, most pupils reported that these statements generally apply to them, and perceptions were fairly uniform around the mean, except for punctuality, on which students seem to differ (SD = 1.17). The remaining items all had means above 3, with relatively homogeneous dispersion around the mean.

The item-total correlations were all positive, ranging from 0.32 to 0.64, indicating that all items contribute to measuring the environmental aspects of learning (Table 2).

Descriptive analysis of the teaching scale

Students had a rather even-handed perception of the teaching environment. For most statements, mean scores were around option 3 (i.e. students sometimes perceive the teaching environment positively), with standard deviations greater than 1, indicating considerable variation in perceptions. The lowest means were for the items “My teachers are interested in my problems” (mean = 2.77; SD = 1.26) and “My teachers take my feelings into account” (mean = 2.91; SD = 1.16). This suggests that pupils perceive their teachers as lacking empathy, although these perceptions are not unanimous.

The highest means were found for the items “I have the same opportunities to participate” (mean = 4.04; SD = 1.09), “I feel included in my class” (mean = 4.05; SD = 1.12) and “They know a lot about the subjects” (mean = 4.30; SD = 0.89). The mean scores for the first two statements imply positive perceptions of student participation and inclusion in the classroom, although their standard deviations show considerable variation among students.

For the third statement that “They know a lot about the subjects”, we found a tendency among students to choose option 4, with a fairly homogeneous distribution. In other words, the majority of students feel that teachers have a lot of knowledge about their subjects.

As for the item-total correlations, they were all positive and greater than 0.5 (Table 3).

Descriptive analysis of the motivation scale

In the motivation area, the highest mean was for “I like learning new things” (mean = 4.01; SD = 0.99), suggesting that students are motivated to make further progress in their learning. The lowest score was for the item “I tell the teachers what I am interested in” (mean = 2.73; SD = 1.29); in this case, students tended not to express their interests, although responses to this item varied. The remaining items had means above 3, although all showed considerable variation in scores (i.e. students have different perceptions). The item-total correlations were all positive, with values between 0.48 and 0.75 (Table 4).

Analysis of psychometric properties of instruments

For the factor analysis, several recommendations from the scientific literature were followed. First, items whose corrected item-total correlations (Cr-IT) were negative or weak (< 0.3) were discarded. Before excluding these items from further analysis, they were reverse-scored to check whether the internal consistency of the scale (Physical Environment) would improve. The result was not positive: the alpha coefficient showed that eliminating these items substantially improved internal consistency (alpha = 0.59 for 12 items; alpha = 0.80 for 10 items). Second, factor loadings below 0.30 were suppressed to highlight the most important loadings (Nunnally & Bernstein, 1995). Third, the factor analysis presented here is the result of an iterative process in which, at each step, items with weak factor loadings or with loadings on more than one factor were discarded. Following this criterion, the items eliminated in the different factor analyses were as follows: for the physical environment scale, Items 2, 3, 8 and 9; for the environmental aspects of learning scale, Items 14, 18, 26, 28, 29, 30 and 31; for the teaching environment scale, Items 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 46, 47, 48 and 50; and for the motivational environment scale, Items 53, 54, 57, 58 and 62.
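The two checks described above, reverse-scoring a negatively correlated item and recomputing alpha with an item removed, can be sketched as follows for a 1 to 5 response scale, where reversing maps a score x to 6 − x. The data and function names are hypothetical; the authors’ computations were done in SPSS.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for item columns (same respondents, same order)."""
    k = len(items)
    totals = [sum(col) for col in zip(*items)]  # each respondent's total score
    return (k / (k - 1)) * (1 - sum(pvariance(c) for c in items) / pvariance(totals))


def reverse_score(col: list[int], low: int = 1, high: int = 5) -> list[int]:
    """Reverse a Likert item, e.g. 1 <-> 5 and 2 <-> 4 on a 1-5 scale."""
    return [low + high - x for x in col]


def alpha_if_deleted(items: list[list[float]]) -> list[float]:
    """Alpha of the scale recomputed with each item left out in turn."""
    return [cronbach_alpha(items[:j] + items[j + 1:]) for j in range(len(items))]


# Hypothetical scores: the third item is worded in the opposite direction
scale = [[1, 2, 3, 4, 5], [1, 3, 3, 4, 5], [5, 4, 2, 2, 1]]
print(round(cronbach_alpha(scale), 2))  # negative: item 3 pulls against the rest
print([round(a, 2) for a in alpha_if_deleted(scale)])
reversed_scale = scale[:2] + [reverse_score(scale[2])]
print(round(cronbach_alpha(reversed_scale), 2))
```

Comparing the alpha after reversal with the alpha after deletion mirrors the decision described in the text: keep the reversed item only if it helps as much as dropping it.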

Factor analysis, parameter estimation and fit assessment

Factor analysis was carried out with the maximum likelihood extraction method and varimax rotation for all scales in order to identify factors underlying each scale and simplify their interpretation. The factor structures of the scales after eliminating the items identified above are presented below, together with estimation of parameters and evaluation of the fit through structural equation models.
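Maximum likelihood extraction itself is beyond a short example, but the varimax step can be sketched. Given an unrotated p × k loading matrix from any extraction method, varimax looks for an orthogonal rotation that maximises the variance of the squared loadings within each factor, pushing each item towards loading mainly on one factor. The sketch below uses the standard SVD-based iteration with NumPy. Unlike SPSS’s default (“Varimax with Kaiser Normalization”), it does not first normalise rows by their communalities, and the tolerance and iteration limit are arbitrary choices.

```python
import numpy as np


def varimax(loadings, gamma: float = 1.0, max_iter: int = 500, tol: float = 1e-10):
    """Orthogonally rotate a p x k loading matrix; returns (rotated_loadings, rotation)."""
    L = np.asarray(loadings, dtype=float)
    p, k = L.shape
    R = np.eye(k)
    d = 0.0
    for _ in range(max_iter):
        LR = L @ R
        # gradient-like target of the orthomax criterion (gamma = 1 gives varimax)
        target = LR**3 - (gamma / p) * LR @ np.diag(np.sum(LR**2, axis=0))
        u, s, vt = np.linalg.svd(L.T @ target)
        R = u @ vt  # nearest orthogonal matrix to the target direction
        d_old, d = d, np.sum(s)
        if d_old != 0 and d < d_old * (1 + tol):
            break
    return L @ R, R


# Hypothetical check: a clean two-factor structure hidden by a 0.5 rad rotation
simple = np.array([[0.8, 0.0], [0.7, 0.0], [0.0, 0.8], [0.0, 0.7]])
theta = 0.5
T = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
rotated, R = varimax(simple @ T)
print(np.round(np.abs(rotated), 2))  # each row should load mainly on one factor
```

Because the rotation is orthogonal, each item’s communality (its row sum of squared loadings) is unchanged; only its distribution across factors changes.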

Physical environment scale

Two factors explained 50.64% of the total variance (Table 5). The first factor, which refers to school facilities and/or equipment, is made up of five items (Items 4, 6, 5, 1 and 7) and explains 37.13% of the variance. A second factor, consisting of two items (Items 11 and 10) that refer to the feeling of pride and satisfaction with the school, explains 13.50% of the variance.
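For an orthogonal solution, the share of total variance attributed to a factor is its sum of squared loadings divided by the number of items, and an orthogonal rotation redistributes this variance between factors without changing the total. The sketch below shows the arithmetic on hypothetical loadings; the text does not say whether the reported percentages come from the initial eigenvalues, the extraction sums or the rotation sums of squared loadings, so it is not meant to reproduce Table 5. As a simple check on the reported figures, 37.13% + 13.50% = 50.63%, which agrees with the stated 50.64% up to rounding.

```python
def percent_variance(loadings: list[list[float]]) -> list[float]:
    """Percentage of total variance per factor: sum of squared loadings / number of items * 100."""
    p = len(loadings)  # number of items (rows)
    k = len(loadings[0])  # number of factors (columns)
    return [100 * sum(row[j] ** 2 for row in loadings) / p for j in range(k)]


# Hypothetical loadings for 4 items on 2 factors
toy = [[0.8, 0.1], [0.6, 0.2], [0.1, 0.7], [0.2, 0.5]]
per_factor = percent_variance(toy)
print([round(v, 2) for v in per_factor], round(sum(per_factor), 2))
```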

Confirmatory factor analysis was performed to test the adequacy of a two-factor model. All standardised regression weights and covariances in the model were significant (p < 0.01), and the fit indices were adequate.

All estimated factor loadings were higher than 0.4 and, on average, above 0.6, which indicates that all items contributed adequately to their respective dimensions.

As shown in Fig. 1, the two factors, F1: School Facilities and/or Equipment and F2: Feeling of Satisfaction among Pupils, were strongly correlated (β = 0.64; p < 0.001). The model indices showed a good fit according to the criteria established by Byrne (2010) and Kline (2010): CMIN/df = 0.92, CFI = 1, IFI = 1, RMSEA = 0.00 and HOELTER = 382.
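Because RMSEA is computed from the same chi-square statistic as CMIN/DF, the two indices are directly related: RMSEA = √(max(χ² − df, 0) / (df·(N − 1))) = √(max(CMIN/DF − 1, 0) / (N − 1)). Whenever CMIN/DF is below 1, as for this scale (0.92), the numerator is truncated at zero and RMSEA is exactly 0, consistent with the reported RMSEA = 0.00. The Python sketch below assumes a single-group model and that all 207 pilot students entered the model (N = 207); the function name is hypothetical.

```python
import math


def rmsea_from_ratio(cmin_df, n):
    """RMSEA from CMIN/DF (chi-square divided by df) and sample size n.

    RMSEA = sqrt(max(chi2 - df, 0) / (df * (n - 1)))
          = sqrt(max(CMIN/DF - 1, 0) / (n - 1))
    """
    return math.sqrt(max(cmin_df - 1.0, 0.0) / (n - 1))


N = 207  # assumption: every pilot student entered the model
print(rmsea_from_ratio(0.92, N))            # ratio below 1, so RMSEA is 0
print(round(rmsea_from_ratio(3.40, N), 3))  # motivational scale's ratio
```

Under the same assumption, the motivational scale's CMIN/DF of 3.40 gives an RMSEA of about 0.108, in line with the value of 0.1 reported for that scale.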

Environmental aspects of learning

Two factors were extracted that explained 49.93% of the total variance. The first factor refers to items associated with ‘individual academic responsibility’ and explains 35.07% of the variance. The second factor refers to ‘relationships with peers’ and explains 14.86% of the variance (Table 6).

As shown in Fig. 2, this scale has satisfactory fit and regression indices.

The goodness-of-fit indicators were within acceptable ranges: CMIN/df = 1.57, CFI = 0.95, IFI = 0.95, RMSEA = 0.05 and HOELTER = 176. HOELTER is the only indicator below the established criterion (> 200). However, because this indicator is sensitive to sample size, the remaining indicators must also be considered, and these, such as the RMSEA, point to a good explanatory level of the model. Therefore, the two-factor model was accepted for this dimension, although the HOELTER value indicates that it would hold only in samples of up to about 176 participants. The covariance between the two extracted factors was high and significant (β = 0.50; p < 0.001), and the regression weights were all above 0.50 or very close to it, indicating that all items made an adequate contribution to this dimension.
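Hoelter's critical N is the largest sample size at which the model's chi-square test would still not reject the model at the .05 level. Since χ² = (N − 1)·F for a fixed minimum fit-function value F, the critical N is CN = (N − 1)·χ²₀.₉₅(df)/χ² + 1, so it grows with the sample size and shrinks as χ² rises. The Python sketch below assumes that formula and approximates the chi-square critical value with the Wilson-Hilferty transformation to stay within the standard library; an exact quantile (for example from `scipy.stats.chi2.ppf`) would be used in practice, and AMOS's own computation may differ in detail. The degrees of freedom and chi-square in the example are hypothetical.

```python
import math
from statistics import NormalDist


def chi2_critical(df, alpha=0.05):
    """Upper-alpha chi-square quantile, Wilson-Hilferty approximation."""
    z = NormalDist().inv_cdf(1 - alpha)
    a = 2.0 / (9.0 * df)
    return df * (1 - a + z * math.sqrt(a)) ** 3


def hoelter_cn(chi2, df, n, alpha=0.05):
    """Hoelter's critical N: largest sample size at which a chi-square
    test at level alpha would not reject the model."""
    return (n - 1) * chi2_critical(df, alpha) / chi2 + 1


# Hypothetical model: df = 20, chi-square = 30, N = 207
print(math.floor(hoelter_cn(30.0, 20, 207)))
```

Assuming N = 207, CN exceeds 200 only if χ² < (206/199)·χ²₀.₉₅(df), that is, when the chi-square is at most about 3.5% above its .05 critical value, which makes the HOELTER > 200 criterion demanding for a sample of this size.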

Teaching environment scale

For this scale (Table 7), two factors explained 68.42% of the total variance. The first factor (47.68% of the variance) groups items related to ‘attention to diversity’ (having the same opportunities, feeling included in class, receiving the same treatment), and the second factor (20.74% of the variance) groups items related to ‘class preparation’ strategies (encouraging student participation, entertaining and interesting strategies, well-organised classes, etc.) (Fig. 3).

The goodness-of-fit indicators were within acceptable ranges: CMIN/df = 1.47, CFI = 0.98, IFI = 0.99, RMSEA = 0.04 and HOELTER = 271. All factor loadings were above 0.50. The covariance between the two factors was high and significant (β = 0.50; p < 0.001), indicating that the two extracted factors, F5: Attention to Diversity and F6: Class Preparation, belong to the same dimension, in this case the teaching environment.

Motivational environment scale

For the motivational environment scale (Table 8), two factors explained 62.98% of the total variance (Fig. 4). The first factor (44.37% of the total variance) grouped items related to academic effort (“I make a lot of effort to do things properly”; “I make as much effort as I can”; “I pay attention”). The second factor (18.60% of the total variance) included items associated with showing interest (“I tell the teachers what I am interested in”; “I express my preferences and opinions”; “I like learning new things”; “I ask questions that help me understand”).

The fit indices for this scale were acceptable overall: CMIN/df = 3.40, CFI = 0.92, IFI = 0.92, RMSEA = 0.1 and HOELTER = 104. The RMSEA and HOELTER indices did not meet the established criteria (RMSEA < 0.06 and HOELTER > 200). However, the remaining goodness-of-fit indices were satisfactory. In addition, the high regression coefficients between the items and their reference dimension, together with the high covariance between the two factors (β = 0.51; p < 0.001), reflect a higher-order dimension that brings both factors together; taken as a whole, these results support accepting the model.
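The cut-offs used in this section can be applied mechanically to the indices reported for the four scale models. The Python sketch below encodes the CFI, IFI, RMSEA and HOELTER criteria stated in the text (CMIN/DF is left out for brevity) together with the reported values, and lists which criteria each model misses; it simply restates the results above in a reusable form, and the names are hypothetical.

```python
# Cut-offs stated in the text: CFI and IFI > 0.9, RMSEA < 0.06, HOELTER > 200
CRITERIA = {
    "CFI": lambda v: v > 0.9,
    "IFI": lambda v: v > 0.9,
    "RMSEA": lambda v: v < 0.06,
    "HOELTER": lambda v: v > 200,
}

# Indices reported for the four two-factor scale models
REPORTED = {
    "Physical environment": {"CFI": 1.0, "IFI": 1.0, "RMSEA": 0.00, "HOELTER": 382},
    "Environmental aspects of learning": {"CFI": 0.95, "IFI": 0.95, "RMSEA": 0.05, "HOELTER": 176},
    "Teaching environment": {"CFI": 0.98, "IFI": 0.99, "RMSEA": 0.04, "HOELTER": 271},
    "Motivational environment": {"CFI": 0.92, "IFI": 0.92, "RMSEA": 0.10, "HOELTER": 104},
}


def unmet_criteria(indices):
    """Names of the fit indices that miss their cut-off."""
    return [name for name, ok in CRITERIA.items() if not ok(indices[name])]


for scale, indices in REPORTED.items():
    print(f"{scale}: {unmet_criteria(indices) or 'all criteria met'}")
```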

Full scale factor confirmation

Following the results of the exploratory analysis, confirmatory factor analysis was performed to check the adequacy of a hierarchical model with eight first-order factors (F1: School facilities and/or equipment; F2: Feeling of satisfaction; F3: Individual academic responsibility; F4: Relations with classmates; F5: Attention to diversity; F6: Class preparation; F7: Academic effort and F8: Showing interest) grouped under four second-order factors (physical, learning, teaching and motivational environments). As shown in Fig. 5, the regression weights were all above or very close to 0.50, which indicates a strong relationship between the items and their respective latent variables. Moreover, the high proportions of explained variance of all observed variables, as well as of the latent variables, indicate the high explanatory power of the model for all variables. The standardised regression weights of the variables and the covariances were all significant (p < 0.001), and all covariances were very high.

The model yielded the following goodness-of-fit indices: CMIN/df = 1.75, CFI = 0.86, IFI = 0.86, RMSEA = 0.06 and HOELTER = 131. These indices were taken to denote an appropriate fit of the scales that make up the questionnaire, although CFI and IFI fall below 0.9. In addition, we allowed the error terms of several pairs of items that appear to share measurement error to correlate. Items 43 and 44 (“My work receives the same praise as everyone else’s” and “I feel included in my class”) correlate negatively, although they refer to the same information: not receiving the same praise as others makes students feel excluded from the class. In factor 7, Items 52 and 56 (“I make a lot of effort to do things properly” and “I make as much effort as I can”) are correlated; they share the same information, which leads us to believe that they refer to the same variable. The same happened with Items 63 and 61 (“I express my preferences and opinions” and “I like learning new things”).
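The full-scale structure, with items loading on the eight factors F1-F8, those factors grouped under the four environments, and the three error covariances described above, can be written compactly in lavaan-style model syntax (`=~` for "is measured by", `~~` for a covariance). The Python sketch below builds that specification from the final item assignment; the column names of the form `item4` and the second-order factor names are hypothetical labels, and since the authors fitted their model in AMOS, this only illustrates the specification rather than reproducing their input.

```python
# Item-to-factor assignment of the final 32-item version
FACTORS = {
    "F1": [4, 6, 5, 1, 7],               # School facilities and/or equipment
    "F2": [11, 10],                      # Feeling of satisfaction
    "F3": [23, 20, 24, 25, 19, 21, 22],  # Individual academic responsibility
    "F4": [16, 13, 17, 15, 27],          # Relations with classmates
    "F5": [42, 43, 44],                  # Attention to diversity
    "F6": [49, 51, 45],                  # Class preparation
    "F7": [52, 56, 60],                  # Academic effort
    "F8": [59, 63, 61, 55],              # Showing interest
}
# Higher-order grouping of the eight factors (hypothetical names)
SCALES = {
    "Physical": ["F1", "F2"],
    "Learning": ["F3", "F4"],
    "Teaching": ["F5", "F6"],
    "Motivational": ["F7", "F8"],
}
# Item pairs whose error terms were allowed to correlate
ERROR_COVARIANCES = [(43, 44), (52, 56), (63, 61)]


def model_syntax(factors, scales, error_pairs, prefix="item"):
    """Build lavaan-style syntax: loadings (=~) and error covariances (~~)."""
    lines = [f"{f} =~ " + " + ".join(f"{prefix}{i}" for i in items)
             for f, items in factors.items()]
    lines += [f"{s} =~ " + " + ".join(fs) for s, fs in scales.items()]
    lines += [f"{prefix}{a} ~~ {prefix}{b}" for a, b in error_pairs]
    return "\n".join(lines)


print(model_syntax(FACTORS, SCALES, ERROR_COVARIANCES))
```

The resulting string could then be passed to an SEM package that accepts this syntax, such as lavaan in R or semopy in Python, together with a data set whose columns carry the same names.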

After establishing the scales in the model, the reliability of each of the four scales was calculated using Cronbach’s alpha, which yielded moderate to high values of internal consistency both for the total scale (0.91 for 32 items) and for each dimension: physical (0.75), learning (0.81), teaching (0.78) and motivational (0.78). In addition, the alpha coefficient of each extracted factor was also acceptable: F1: 0.68; F2: 0.78; F3: 0.78; F4: 0.76; F5: 0.75; F6: 0.78; F7: 0.80; F8: 0.71.
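Cronbach's alpha compares the sum of the item variances with the variance of the total score: α = k/(k − 1) · (1 − Σσᵢ²/σ²_X), where k is the number of items and X the total score. The Python sketch below computes it, together with the "alpha if item deleted" values used when screening items; the three-item data set and the function names are hypothetical, with the third item deliberately unrelated to the other two.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; items is one list of respondent scores per item."""
    k = len(items)
    totals = [sum(resp) for resp in zip(*items)]
    item_variance = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def alpha_if_deleted(items):
    """Alpha recomputed with each item left out in turn."""
    return [cronbach_alpha(items[:i] + items[i + 1:]) for i in range(len(items))]


# Hypothetical scores of five respondents on three items
items = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 5, 5],
    [5, 1, 4, 2, 3],  # unrelated to the other two items
]
print(round(cronbach_alpha(items), 2))
print([round(a, 2) for a in alpha_if_deleted(items)])
```

Dropping the unrelated item raises alpha sharply. Other things being equal, alpha also increases with the number of items, which is worth bearing in mind when comparing the 32-item total (0.91) with the two- to seven-item factors.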

Individual Academic Responsibility and motivation are closely related constructs, as evidenced by the high covariance between the two groups of items (learning scale and motivation scale; Fig. 5). They measure the same phenomenon, but from different theoretical standpoints: the Individual Academic Responsibility items focus on task orientation, whereas the items of the motivation block, based on self-determination theory, examine engagement in four forms: agentic, behavioural, emotional and cognitive. In general, the results indicate that the final model, which is the outcome of all the analyses of the psychometric properties, adequately describes the data.

Discussion

The purpose of this study was to validate, for the Spanish context, an instrument for assessing learning environments from students’ perspective. The analyses yielded a 32-item instrument distributed across four scales and eight subscales:

- Physical environment, consisting of two factors: School Facilities and/or Equipment, with five items (Items 4, 6, 5, 1 and 7), and Feeling of Pride and Satisfaction with the School, with two items (Items 11 and 10).
- Environmental aspects of learning, consisting of two factors: Individual Academic Responsibility, with seven items (Items 23, 20, 24, 25, 19, 21 and 22), and Relationships with Classmates, with five items (Items 16, 13, 17, 15 and 27).
- Teaching environment, consisting of two factors: Attention to Diversity (Items 42, 43 and 44) and Class Preparation (Items 49, 51 and 45).
- Motivational environment, consisting of two factors: Academic Effort (Items 52, 56 and 60) and Showing Interest (Items 59, 63, 61 and 55).

As Peng (2016) observed, the physical, teaching, learning and motivational sub-ecosystems are interdependent and interact with one another. To create a favourable learning environment for learners, we should broaden our understanding of the classroom environment from an ecological perspective and highlight the interaction between the teacher, students and classroom settings within the classroom ecosystem as a whole. In light of the confirmatory factor analysis, we consider it worthwhile to retain the F3 and F7 factors even though they are conceptually quite similar. According to Núñez and León (2019), self-determination theory (SDT) holds that social conditions that support individual experiences of autonomy, competence and social relatedness promote higher-quality forms of engagement in activities. Analysing engagement can help us understand how to improve students’ intrinsic motivation (Peng, 2016).

The results of the analysis of the instrument’s psychometric properties point to a good configuration of the underlying dimensions and sub-dimensions, thus supporting the reference works that validated the selected scales, while sharing content with other scales designed in studies such as those of Aldridge et al. (1999, 2006), Aldridge and Fraser (2000), Bear et al. (2011), Bradshaw et al. (2014), Sarioğlan (2021), Zullig et al. (2015) and Jang et al. (2012).

In view of the above, this questionnaire is likely to be useful and easy to apply for evaluating and further investigating learning environments at the secondary education stage, as has been done in other contexts where instruments containing some of the factors in this study were used to assess students’ perceptions of their learning environments. Examples include research in Indonesia (Widiastuti et al., 2020), Turkey (Kahya, 2019), the United Kingdom (Barrett et al., 2015), Iran (Mokhtarmanesh & Ghomeishi, 2019), the Netherlands (Könings et al., 2014), Trinidad and Tobago (Maharaj-Sharma, 2021), the United Arab Emirates (Khalil & Aldridge, 2019), Georgia (Slovinsky et al., 2021), Spain and Mexico (Alonso Tapia & Fernández Heredia, 2008) and Slovenia (Radovan & Makovec, 2015). These examples suggest that applying instruments such as the one validated here can support the construction of optimal learning environments in secondary schools, especially from the student’s point of view, concerning:

(a) Relationships, coexistence in the classroom and learning management. This is likely to help teaching teams and specialists make decisions about direct intervention in the design of curricular itineraries or in attention to personal development.
(b) Personal and professional competences of teachers. It will be possible to identify teacher profiles and propose training actions to help improve their work in the teaching process.
(c) Physical conditions in which the teaching and learning process takes place. This should make it easier for management teams and teachers themselves to make decisions about the needs expressed by students, as well as to detect levels of apprehension towards the school.
(d) Possible situations of school dropout or failure among pupils, or even educational needs that call for psycho-pedagogical interventions.

As a diagnostic tool, this instrument should have strategic value for discovering what happens in each of these learning environments and, as the literature has shown, which factors the constructs studied share. Therefore, incorporating aspects such as class structure, behavioural disaffection or academic support, among others (León et al., 2017; Mikami et al., 2017; Zullig et al., 2015), will make the instrument more versatile and allow it to examine in depth those environments in which a special interest is detected.

On the other hand, considering the means and standard deviations for the four scales, some of the environmental conditions that have been identified as conditioning elements of learning should be improved (Barrett et al., 2013; Kweon et al., 2017). Relationships in the school appear to be good, and such relationships have been identified as determinants of behavioural engagement and performance (Mikami et al., 2017). In teaching and learning, as stated by León et al. (2017) and Cayubit (2022), teacher support must be improved, and this is how students saw it in our study. In general, pupils feel safe and at ease in their school; according to Bear et al. (2011), this might indicate that teachers’ responses, demands and expectations of their students could be helping to promote this sense of security. Regarding motivation, defined as active involvement in the learning activity, students seem to need to express their interests more in order to improve their agentic engagement. In Núñez and León’s (2019) terms, all this would contribute to improving instruction, with teachers understanding their role as facilitators of motivation. Notwithstanding these results, it is important to highlight the variability of perceptions found in the students’ responses. For this reason, we believe it is necessary to study these perceptions more broadly and in depth in the intensive study that we are carrying out.

The limitations of our work include those of the measuring instrument itself (social desirability of the responses) and others related to its application (test–retest reliability). Our future work will involve applying the instrument extensively to a representative sample of students in the community of Andalusia, which has the worst academic results and the highest rates of school failure and dropout among Spanish communities (MEYFP, 2021c). We will use the revised School Level Environment Questionnaire (SLEQ; Johnson et al., 2007) to identify teachers’ perceptions of school climate. In addition, we would like to investigate the impact of other variables linked to the sample’s characteristics (gender, repeated years, type of classroom attended, average marks or grades, type of school, etc.), because the literature highlights the influence of these variables on the relationship between learning environments and academic performance. In doing so, we intend to obtain a detailed picture of the situation and, together with the schools, propose plans to improve their learning environments. In a second phase, and along the lines of Aldridge et al. (2020), we will complete our study with a qualitative component using student voice methodology (Messiou, 2014).

References

Aldridge, J. M., & Fraser, B. J. (2000). A cross-cultural study of classroom learning environments in Australia and Taiwan. Learning Environments Research, 3, 101–134. https://doi.org/10.1023/A:1026599727439

Aldridge, J., Fraser, B. J., & Huang, T. I. (1999). Investigating classroom environments in Taiwan and Australia with multiple research methods. The Journal of Educational Research, 93(1), 48–62. https://doi.org/10.1080/00220679909597628

Aldridge, J., Laugksch, R. C., Seopa, M. A., & Fraser, B. J. (2006). Development and validation of an instrument to monitor the implementation of outcomes-based learning environments in science classrooms in South Africa. International Journal of Science Education, 28(1), 45–70. https://doi.org/10.1080/09500690500239987

Aldridge, J., Rijken, P. E., & Fraser, B. J. (2020). Improving learning environments through whole-school collaborative action research. Learning Environments Research, 24, 183–205. https://doi.org/10.1007/s10984-020-09318-x

Alonso Tapia, J., & Fernández Heredia, B. (2008). Development and initial validation of the Classroom Motivational Climate Questionnaire (CMCQ). Psicothema, 20(4), 883–889.

Alonso Tapia, J., & Fernández Heredia, B. (2009). A model for analysing classroom motivational climate: Cross-cultural validity and educational implications. Journal for the Study of Education and Development, 32(4), 598–612. https://doi.org/10.1174/021037009789610368

Anderson, R. C., & Beach, P. (2022). Measure of opportunity: Assessing equitable conditions to learn twenty-first century thinking skills. Learning Environments Research, 25, 741–774. https://doi.org/10.1007/s10984-021-09388-5

Barrett, P., Zhang, Y., Moffat, J., & Kobbacy, K. (2013). A holistic, multi-level analysis identifying the impact of classroom design on pupils’ learning. Building and Environment, 59, 678–689. https://doi.org/10.1016/j.buildenv.2012.09.016

Barrett, P., Davies, F., Zhang, Y., & Barrett, L. (2015). The impact of classroom design on pupils' learning: Final results of a holistic, multi-level analysis. Building and Environment, 89, 118–133. https://doi.org/10.1016/j.buildenv.2015.02.013

Bear, G. G., Gaskins, C., Blank, J., & Chen, F. F. (2011). Delaware School Climate Survey—Student: Its factor structure, concurrent validity, and reliability. Journal of School Psychology, 49(2), 157–174. https://doi.org/10.1016/j.jsp.2011.01.001

Besançon, M., Fenouillet, F., & Shankland, R. (2015). Influence of school environment on adolescents’ creative potential, motivation and well-being. Learning and Individual Differences, 43, 178–184. https://doi.org/10.1016/j.lindif.2015.08.029

Bradshaw, C. P., Milam, A. J., Furr-Holden, C. D. M., & Lindstrom Johnson, S. (2015). The school assessment for environmental typology (SAfETy): An observational measure of the school environment. American Journal of Community Psychology, 56, 280–292. https://doi.org/10.1007/s10464-015-9743-x

Bradshaw, C. P., Waasdorp, T. E., Debnam, K. J., & Johnson, S. L. (2014). Measuring school climate in high schools: A focus on safety, engagement, and the environment. Journal of School Health, 84(9), 593–604. https://doi.org/10.1111/josh.12186

Byrne, B. (2010). Structural equation modeling with AMOS: Basic concepts, applications, and programming. Taylor & Francis Group.

Cabrera, L. (2020). Is education a personal and/or social concern in Spain? Revista De Educación, 388, 193–228. https://doi.org/10.4438/1988-592X-RE-2020-388-452

Cai, J., Wen, Q., Lombaerts, K., Jaime, I., & Cai, I. (2022). Assessing students’ perceptions about classroom learning environments: The New What Is Happening In this Class (NWIHIC) instrument. Learning Environments Research, 25, 601–618. https://doi.org/10.1007/s10984-021-09383-w

Castro-Pérez, M., & Morales-Ramírez, M. E. (2015). Los ambientes de aula que promueven el aprendizaje, desde la perspectiva de los niños y niñas escolares. Revista Electrónica Educare, 19(3), 1–32. https://doi.org/10.15359/ree.19-3.11

Cayubit, R. F. O. (2022). Why learning environment matters? An analysis on how the learning environment influences the academic motivation, learning strategies and engagement of college students. Learning Environments Research, 25, 581–599. https://doi.org/10.1007/s10984-021-09382-x

Chinujinim, T., & Amaechi, J. (2019). Influence of school environment on students’ academic performance in technical Colleges in Rivers State. International Journal of New Technology and Research, 5(3), 40–48.

Deci, E. L., & Ryan, R. M. (1985). Intrinsic motivation and self-determination in human behaviour. Plenum.

Doyle, W. (1977). Paradigms for research on teacher effectiveness. Review of Research in Education, 5, 163–198. https://doi.org/10.3102/0091732X005001163

Fatou, N., & Kubiszewski, V. (2018). Are perceived school climate dimensions predictive of students’ engagement? Social Psychology of Education: An International Journal, 21(2), 427–446. https://doi.org/10.1007/s11218-017-9422-x

Feldhoff, T., Radisch, F., & Bischof, L. M. (2016). Designs and methods in school improvement research: A systematic review. Journal of Educational Administration, 54(2), 209–240. https://doi.org/10.1108/JEA-07-2014-0083

Fraser, B. J. (2012). Classroom learning environments: Retrospect, context and prospect. In B. J. Fraser, K. G. Tobin, & C. J. McRobbie (Eds.), Second international handbook of science education (pp. 1191–1239). Springer.

Gençoglu, B., Helms-Lorenz, M., Maulana, R., & Jansen, E. (2022). Examining students’ perceptions of teaching behavior: Linking effective teaching framework and self-determination theory. Abstract from Quality in Nordic Teaching (QUINT) Conference, Reykjavik, Iceland.

Gilman, L. J., Zhang, B., & Jones, C. J. (2021). Measuring perceptions of school learning environments for younger students. Learning Environments Research, 24, 169–181. https://doi.org/10.1007/s10984-020-09333-y

González-Falcón, I., Coronel, J. M., & Correa, R. I. (2016). School counsellors and cultural diversity management in Spanish secondary schools: Role of relations with other educators and intervention model used in care of immigrant students. Intercultural Education, 27(6), 615–628. https://doi.org/10.1080/14675986.2016.1259462

González-Losada, S., García-Rodríguez, M. P., Ruíz, F., & Muñoz, J. M. (2015). Factores de riesgo de abandono escolar desde la perspectiva del profesorado de Educación Secundaria Obligatoria en Andalucía (España). Profesorado Revista De Curriculum Y Formación Del Profesorado, 19(3), 226–245.

Goulet, J., & Morizot, J. (2022). Socio-educational environment questionnaire: Factor validity and measurement invariance in a longitudinal study of high school students. Learning Environments Research. https://doi.org/10.1007/s10984-022-09441-x

Harinarayanan, S., & Pazhanivelu, G. (2018). Impact of school environment on academic achievement of secondary school students at Vellore Educational District. Shanlax International Journal of Education, 7(1), 13–19. https://doi.org/10.5281/zenodo.2529402

Jang, H., Kim, E. J., & Reeve, J. (2012). Longitudinal test of self-determination theory’s motivation mediation model in a naturally occurring classroom context. Journal of Educational Psychology, 104(4), 1175–1188. https://doi.org/10.1037/a0028089

Johnson, B., & McClure, R. (2004). Validity and reliability of a shortened, revised version of the constructivist learning environment survey (CLES). Learning Environments Research, 7(1), 65–80. https://doi.org/10.1023/B:LERI.0000022279.89075.9f

Johnson, B., Stevens, J. J., & Zvoch, K. (2007). Teachers’ perceptions of school climate: A validity study of scores from the revised school level environment questionnaire. Educational and Psychological Measurement, 67(5), 833–844. https://doi.org/10.1177/0013164406299102

Kahya, E. (2019). Mismatch between classroom furniture and anthropometric measures of university students. International Journal of Industrial Ergonomics, 74, 102864. https://doi.org/10.1016/j.ergon.2019.102864

Khalil, N., & Aldridge, J. (2019). Assessing students’ perceptions of their learning environment in science classes in the United Arab Emirates. Learning Environments Research, 22(3), 365–386. https://doi.org/10.1007/s10984-019-09279-w

Khine, M. S. (2002). Using what is happening in this class (WIHIC) to measure the learning environment. Teaching and Learning, 22(2), 54–61.

Kline, R. (2010). Principles and practice of structural equation modeling. The Guilford Press.

Könings, K. D., Seidel, T., & van Merriënboer, J. J. G. (2014). Participatory design of learning environments: Integrating perspectives of students, teachers, and designers. Instructional Science, 42, 1–9. https://doi.org/10.1007/s11251-013-9305-2

Korpershoek, H., Canrinus, E. T., Fokkens-Bruinsma, M., & De Boer, H. (2020). The relationships between school belonging and students’ motivational, social-emotional, behavioural, and academic outcomes in secondary education: A meta-analytic review. Research Papers in Education, 35(6), 641–680. https://doi.org/10.1080/02671522.2019.1615116

Kweon, B., Ellis, C. D., Lee, J., & Jacobs, K. (2017). The link between school environments and student academic performance. Urban Forestry & Urban Greening, 23, 35–43. https://doi.org/10.1016/j.ufug.2017.02.002

León, J., Medina-Garrido, E., & Núñez, J. L. (2017). Teaching quality in math class: The development of a scale and the analysis of its relationship with engagement and achievement. Frontiers in Psychology, 8, 895. https://doi.org/10.3389/fpsyg.2017.00895

Lewin, K. (1936). Principles of topological psychology. McGraw-Hill.

Ministerio de Educación y Formación Profesional de España (MEYFP). (2021a). Sistema estatal de indicadores de la educación 2021. Secretaría General Técnica, Subdirección General de Atención al Ciudadano, Documentación y Publicaciones. https://www.educacionyfp.gob.es/dam/jcr:98edb864-c713-4d48-a842-f87464dc8aee/seie-2021a.pdf

Ministerio de Educación y Formación Profesional de España (MEYFP). (2021b). Panorama de la educación. Indicadores de la OCDE 2021. Informe español. Secretaría General Técnica. https://www.educacionyfp.gob.es/inee/indicadores/indicadores-internacionales/ocde.html

Ministerio de Educación y Formación Profesional de España (MEYFP). (2021c). Datos de la encuesta de población activa. https://www.lamoncloa.gob.es/serviciosdeprensa/notasprensa/educacion/Paginas/2021c/290121-abandono.aspx

Maharaj-Sharma, R. (2021). A WIHIC exploration of secondary school science classrooms–A case study from Trinidad and Tobago. Research in Pedagogy, 11(1), 165–179. https://doi.org/10.5937/IstrPed2101165M

Malaguzzi, L. (1995). I cento linguaggi dei bambini. Edizioni Junior.

Méndez, I., & Cerezo, F. (2018). La repetición escolar en educación secundaria y factores de riesgo asociados. Educación XX1, 21(1), 41–62. https://doi.org/10.5944/educXX1.13717

Messiou, K. (2014). Responding to diversity by engaging with students’ voices: A strategy for teacher development. Accounts of practice. European Union.

Mikami, A. Y., Ruzek, E. A., Hafen, C. A., Gregory, A., & Allen, J. P. (2017). Perceptions of relatedness with classroom peers promote adolescents’ behavioral engagement and achievement in secondary school. Journal of Youth and Adolescence, 46(11), 2341–2354. https://doi.org/10.1007/s10964-017-0724-2

Mokhtarmanesh, S., & Ghomeishi, M. (2019). Participatory design for a sustainable environment: Integrating school design using students’ preferences. Sustainable Cities and Society, 51, 101762. https://doi.org/10.1016/j.scs.2019.101762

Moos, R. H. (1974). The social climate scales: An overview. Consulting Psychologists Press.

Müller, F. H., & Louw, J. (2004). Learning environment, motivation and interest: Perspectives on self-determination theory. South African Journal of Psychology, 34(2), 169–190. https://doi.org/10.1177/008124630403400201

Núñez, J. L., & León, J. (2019). Determinants of classroom engagement: A prospective test based on self-determination theory. Teachers and Teaching, 25(2), 147–159. https://doi.org/10.1080/13540602.2018.1542297

Núñez, J. L., León, J., Grijalvo, F., & Albo, J. M. (2012). Measuring autonomy support in university students: The Spanish version of the learning climate questionnaire. The Spanish Journal of Psychology, 15(3), 1466–1472. https://doi.org/10.5209/rev_SJOP.2012.v15.n3.39430

Nunnally, J. C., & Bernstein, I. H. (1995). Factor analysis I: The general model and variance condensation. McGraw-Hill.

Peng, H. (2016). Learner perceptions of Chinese EFL college classroom environments. English Language Teaching, 9(1), 22–32. https://doi.org/10.5539/elt.v9n1p22

Pielstick, N. L. (1988). Assessing the learning environment. School Psychology International, 9(2), 111–122. https://doi.org/10.1177/0143034388092005

Poondej, C., & Lerdpornkulrat, T. (2016). Relationship between motivational goal orientations, perceptions of general education classroom learning environment, and deep approaches to learning. Kasetsart Journal of Social Sciences, 37, 100–103. https://doi.org/10.1016/j.kjss.2015.01.001

Postareff, L., Parpala, A., & Lindblom-Ylänne, S. (2015). Factors contributing to changes in a deep approach to learning in different learning environments. Learning Environments Research, 18, 315–333. https://doi.org/10.1007/s10984-015-9186-1

Radovan, M., & Makovec, D. (2015). Relations between students’ motivation, and perceptions of the learning environment. Center for Educational Policy Studies Journal, 5(2), 115–138. https://doi.org/10.26529/cepsj.145

Ryan, R. M., & Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist, 55(1), 68–78.

Sarioğlan, A. B. (2021). Development of inquiry-based learning environment scale: A validity and reliability study. MOJES: Malaysian Online Journal of Educational Sciences, 9(4), 27–40.

Schumacher, D. J., Englander, R., & Carraccio, C. (2013). Developing the master learner: Applying learning theory to the learner, the teacher, and the learning environment. Academic Medicine, 88(11), 1635–1645. https://doi.org/10.1097/ACM.0b013e3182a6e8f8. PMID: 24072107.

Sinclair, B. B., & Fraser, B. J. (2002). Changing classroom environments in urban middle schools. Learning Environments Research, 5, 301–328. https://doi.org/10.1023/A:1021976307020

Skordi, P., & Fraser, B. J. (2019). Validity and use of the what is happening in this class? (WIHIC) questionnaire in university business statistics classrooms. Learning Environments Research, 22(2), 275–295. https://doi.org/10.1007/s10984-018-09277-4

Slovinsky, E., Kapanadze, M., & Bolte, C. (2021). The effect of a socio-scientific context-based science teaching program on motivational aspects of the learning environment. EURASIA Journal of Mathematics, Science and Technology Education, 17(8), em1992. https://doi.org/10.29333/ejmste/11070

Sullivan, K., Cleary, M., & Sullivan, G. (2005). Bullying en la enseñanza secundaria. El acoso escolar: cómo se presenta y cómo afrontarlo. CEAC.

Suárez, N., Regueiro, B., Estévez, I., Del Mar Ferradás, M., Guisande, M. A., & Rodríguez, S. (2019). Individual precursors of student homework behavioral engagement: The role of intrinsic motivation, perceived homework utility and homework attitude. Frontiers in Psychology, 10, 941. https://doi.org/10.3389/fpsyg.2019.00941

Tarabini, A., & Curran, M. (2015). El efecto de la clase social en las decisiones educativas: Un análisis de las oportunidades, creencias y deseos educativos de los jóvenes. Revista De Investigación En Educación, 13(1), 7–26.

Tomás, J.-M., & Gutiérrez, M. (2019). Aportaciones de la teoría de la autodeterminación a la predicción de la satisfacción académica en estudiantes universitarios. Revista De Investigación Educativa, 37(2), 471–485. https://doi.org/10.6018/rie.37.2.328191

UNESCO. (2021). Liderar el ODS 4. Educación 2030. https://es.unesco.org/themes/liderar-ods-4-educacion-2030

Widiastuti, K., Susilo, M. J., & Nurfinaputri, H. S. (2020). How classroom design impacts for student learning comfort: Architect perspective on designing classrooms. International Journal of Evaluation and Research in Education, 9(3), 469–477. https://doi.org/10.11591/ijere.v9i3.20566

Williams, G. C., & Deci, E. L. (1996). Internalization of biopsychosocial values by medical students: A test of self-determination theory. Journal of Personality and Social Psychology, 70, 767–779. https://doi.org/10.1037//0022-3514.70.4.767

Yang, Z., Becerik-Gerber, B., & Mino, L. (2013). A study on student perceptions of higher education classrooms: Impact of classroom attributes on student satisfaction and performance. Building and Environment, 70, 171–188. https://doi.org/10.1016/j.buildenv.2013.08.030

Yerdelen, S., & Sungur, S. (2018). Multilevel investigation of students’ self-regulation processes in learning science: Classroom learning environment and teacher effectiveness. International Journal of Science and Mathematics Education, 17(1), 89–110. https://doi.org/10.1007/s10763-018-9921-z

Zullig, K. J., Collins, R., Ghani, N., Hunter, A. A., Patton, J. M., Huebner, E. S., & Zhang, J. (2015). Preliminary development of a revised version of the school climate measure. Psychological Assessment, 27(3), 1072–1081. https://doi.org/10.1037/pas0000070

Funding

Funding for open access publishing: Universidad de Huelva/CBUA.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The data collected are part of the project entitled "Analysis of learning environments in secondary schools in Andalusia. Quantitative study." (Ref: UHU-1256187), funded under the call for ERDF projects financed by the European Union, the Junta de Andalucía and the Universidad de Huelva. The project is led by Dr. María del Pilar García Rodríguez and Dr. José Manuel Coronel Llamas, both professors in the Department of Pedagogy of the University of Huelva (Faculty of Education Sciences, Psychology and Sports Sciences).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

García-Rodríguez, M., Conde-Velez, S., Delgado-García, M. et al. Learning environments in compulsory secondary education (ESO): validation of the physical, learning, teaching and motivational scales. Learning Environ Res 27, 53–75 (2024). https://doi.org/10.1007/s10984-023-09464-y

DOI: https://doi.org/10.1007/s10984-023-09464-y