Abstract

The review summarizes the current state, outlook and development of the field of thermal analysis, emphasizing the study of thermal effects as the basis of all its other methodologies. The understanding of heat is intertwined with the entire history of civilization, from the Greek philosophers through the Middle Ages to today's advanced technological era. The foundations of thermal analysis, in which heat acts as an agent in its own right, date back to the nineteenth century, with the calorimetric evaluation of heat fluxes as their basis. The review examines the processes of calibration and rectification, specifies the isothermal and non-isothermal degrees of transformation, and explains the role of the equilibrium background, which is especially necessary in kinetics. It introduces a new concept of thermodynamics based on the constancy of first derivatives and discusses the role of standard temperature and its non-equilibrium variant, tempericity. It describes the constrained states of glasses and assesses the role of dimensions in materials science. Last but not least, it deals with the influence of thermoanalytical journals and their role in presenting unusual results, and discusses the role of dissident science. It also describes the level and influence of relevant books and finally presents discussions and perspectives, i.e. where to look for better interpretation and what the influence of current over-sophisticated instruments is.

Introduction

The unifying perception of fire/light/heat (and, more recently, energy) is generally recognized as a fundamental integrating element in the way matter and society are organized [1,2,3,4,5]. Throughout human history, fire has been omnipresent in both human life and human thought. Fire/heat has been intellectually at work in the development of civilization as mythology, as religion, as natural philosophy and as modern science. Fire, as its first embodiment, has an extremely long history, which has passed through several unequal stages of the development of civilization [4,5,6,7,8]. With respect to its interaction with society, we can distinguish roughly four periods. Probably the longest was the period without fire, when the first people feared fire as much as wild animals, but gradually they became accustomed to mastering their inner struggle with the sensations of heat and cold. Another long era was accompanied by a steady growth of experience with the use of fire, which helped to distinguish humans from animals, especially the use of fire as a weapon, as a deliberate source of heat, or as an essential aid in cooking that facilitates digestion. A definite advance came with the recent but brief period of fire making, both by striking flint and by twirling a stick in a wooden hole, leading to the current domestication of fire as a tool for working with heat-treated materials. From the point of view of thermal analysis, the most important aspect is the use of heat as a reagent for analysing the behaviour of materials on heating.

Foundations of philosophical concepts of fire/heat

Fire is reflected as a philosophical archetype that around 450 BC became part of the ancient Greek doctrine that everything is made up of four elements, fire together with earth, water and air, bound by ether or, better, forma; the doctrine was later strengthened and supplemented by the renowned Aristotle [7]. The entangled fire was a traditional part of the limitless “apeira” of the sacred and self-referential “apeiron” (the boundless), the primordial immaterial existence. Fire “pyr” (flamma) provides light (eyesight), which is transmitted (like hearing) by air “aer” (flatus), reflected (like appetite) by water “hydor” (fluctus) and absorbed (to touch) by earth “ge” (moles). It could be said that the Greeks already understood that there is a kind of natural force that can overcome all other forces (in the energetic sense) and that is subsequently able to create unity but is also ready to destroy. They also knew how to distinguish and name the basic building blocks as certain patterns or, better, roots (“rhizomata”), which thus form the basis of all existence (or, better, being). Followers of Aristotle dealt with the general forms and causes of being and discussed the concepts of potentiality (“dynamis”) and actuality (“entelecheia”); the elements determined not only the degree of heat and moisture but also the tendency to move up or down according to the dominance of air (buoyancy) or earth (gravity). The so-called fifth Platonic existence, “quinta essentia”, was proposed to ensure the principle of quality (“arche”) and conceptualized the ether (“aither”) as something heavenly and indestructible. Aristotle also distinguished temperature from a heat-like quantity, although the same word (“thermon”) was applied to both.
Substances were divided into shape or form (“morphe” or “forma”) and matter (“hyle”), and the derived philosophy, often referred to as “hylomorphism”, argued that matter cannot exist without form and, conversely, that form cannot exist independently either. In today's terminology, this idea survives in the expression “in-forma-tion”.

Views on heat in the Middle Ages

As early as the 17th century, the Bohemian educational reformer and Czech scholar Comenius distinguished three degrees of heat (calor, fervor and ardor) and two of cold (frigus, algor), together with an unnamed degree corresponding to normal temperature (tepor). The highest degree of heat, “ardor”, was associated with the internal decomposition of matter into something like “atoms”. Comenius was also one of the first to propose the term caloric, and he observed the non-equilibrium nature of heat treatment [8]. He noted that fire can melt ice into water and heat it quickly, whereas there is no way to turn hot water into ice equally fast, even when it is exposed to very intense frost, thereby intuitively recording the phenomena of latent heat and undercooling. In the same period [5,6,7,8,9,10,11,12,13,14,15,16,17], the greatest physicists, Newton and Leibniz, were still devoted alchemists. The idea of a “fiery fluid” (a kind of spherical particle that readily sticks only to flammable objects) persisted for the next two hundred years, with the assumption that when a substance burned, an insubstantial phlogiston (“terra pinguis”) escaped. The conservation laws, however, needed a more precise definition, because the older traditional notions of living and dead forces (vis viva and vis mortua) were still in use, while conservation was assumed to be something general, holding both between and within systems. The principle was probably first formulated by the seldom-cited Czech scholar Marcus Marci, while Descartes played the most important role, although he at first believed that the universe was filled with matter in three forms: fire, light and dark matter. Until the work of the Scot Joseph Black [8], the terms heat and temperature (nature/temperament, first used by Avicenna in the eleventh century) were not distinguished.
However, it took two centuries for the fluid theory of heat (via thermogen and caloricum) to be replaced by the vibrational view (the motion of inherent particles), which was supported by Lavoisier's theory of combustion, although he at first combined oxygen with the outdated phlogiston. Sadi Carnot presented the theory of an idealized heat engine operating in a four-stroke cycle, thus providing the basis of modern thermodynamics. Surprisingly, even his rather obsolete caloric theory can be used to reformulate today's thermodynamics in intriguing ways, as shown in our earlier studies [18, 19].

Groundwork of thermal analysis

The science of heat has been built on a number of different foundations [8,9,10,11,12,13,14,15,16,17, 20,21,22,23]. In the methodology considered here, heat, as an agent in its own right, is an instrumental means for the modern analysis of materials, generally called thermal analysis. G. Tammann [24] was the first to introduce this term when working with the so-called heating and cooling curves. Without the discovery of the thermocouple and its widespread use as a measuring sensor, thermal analysis would not have matured into such a wide range of applications. Significant for the early development was Osmond's investigation of the heating and cooling behaviour of iron and steel, which elucidated the effects of carbon and effectively introduced thermal analysis into metallurgy, then an important branch. Roberts-Austen was one of the first to construct a device providing a continuous record of the output of a thermocouple, which he called a “thermoelectric pyrometer”. Although the sample holder of the time had a design reminiscent of modern equipment, its capacity was extremely large, which reduced sensitivity but provided a good degree of reproducibility. Stansfield came up with the idea of differential thermal analysis (DTA) when he kept a “cold” junction at a constant temperature, allowing the difference between two high sample temperatures to be measured. It is important to emphasize that from the very beginning the temperature of the reference sample, which followed the controlled temperature of the heater (thermostat, furnace), was taken as the temperature of the process in the reacting sample. This simplification has been carried over, without much criticism, into today's common practice.
Another simplification was the assumption that heat propagates instantaneously, so that its thermal retardation need not be taken into account, although this contradicts Newton's earlier well-known findings on the time delay in heat transfer [25].

In the course of development, it has been shown that DTA methods provide relatively quick information about the characteristic thermal behaviour of materials during heating and thus readily reveal the identity of the compounds in the examined sample. Indispensable impulses for measurement and identification came from clay characterization studies [26,27,28], which also brought the first quantitative evaluation based on the description of heat flows between samples. After the Second World War, DTA was already used explicitly for quantitative measurements [28,29,30]. As a result of such rapid development, the journal Analytical Chemistry became the centre of news on the prospects of thermal analysis, publishing for 22 years the biennial reviews written by Connie B. Murphy [31, 32], the first three of which were devoted specifically to DTA [31]; the series was supplemented by critiques [33] and perspectives [34]. However, the reviews of that time focused on instrument design, applications and the interconnection of qualitative measurements and paid too little attention to the actual thermal phenomenon resulting from heat transfer, which is inherent in all thermal measurements and was neglected even though it had been well described as early as 1949 [29].

Theoretical basis summarizing heat transfer

The field of thermal analysis has a long history built around conferences, which formed the core of the field, at that time still in the politically divided world of East and West. One can recall the Russian thermographic conferences starting in Kazan in 1953 or the Czech thermographic discussions in Prague from 1955. In the West, there was the “Thermal Analysis Symposium” held at the Northern Polytechnic in London in 1965. The first books published on both sides [35,36,37,38,39,40,41,42] became the basis for the growth of a newly emerging field that can be characterized at four different, progressively more demanding levels: identity (fingerprints [43]), quality (such as characteristic temperatures [44]), quantity (peak areas) and kinetics (curve shapes).

The quantitative description was based on the DTA setup of two concurrent crucibles (cells with samples), relying on the determination of the mutual heat flows between and from both samples. This procedure is well known from the early theories of calorimetry [45,46,47] and has been variously emphasized and labelled in subsequent books [30,31,32,33,34,35,36,37,38,39,40,41,42, 48, 49]. The calculation is based on the historically original procedure published by Vold [29] in the following form:

$$\Delta H\,\frac{\mathrm{d}\alpha}{\mathrm{d}t} = C_S\,\frac{\mathrm{d}\Delta T}{\mathrm{d}t} + K_{DTA}\left(\Delta T - \Delta_0 T\right) \tag{1}$$

where dα/dt is the reaction rate based on the measured degree of conversion, Δ0T is the level of the signal background, C_S is the heat capacity of the sample, ΔT is the measured temperature difference between the samples, ΔH is the enthalpy change and K_DTA is the DTA instrumental constant. The term dΔT/dt, which expresses the change of the observed temperature difference with time, caused unprecedented problems of interpretation from the very beginning. It is often regarded as a heating-rate imbalance, while its real meaning relates to the thermal inertia that follows from the original Newtonian derivations, which is habitually overlooked. The question remained how far it interferes with quantitative evaluation and when it can be neglected. This became the subject of analysis as early as the late 1950s [29, 30], unfortunately mistakenly [50]; the error was discussed in detail in [51], but this again was little read and acknowledged. Consequently, the problem keeps reappearing through unacceptable re-quoting [52, 53], even though the validity of Newtonian time-dependent heat transport has long been established and recognized [28, 29, 54,55,56,57,58].
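A minimal numerical sketch of the Vold relation, assuming it in the form ΔH·dα/dt = C_S·dΔT/dt + K_DTA·(ΔT − Δ0T), shows what the inertia term does to an evaluation. The peak shape, C_S, K_DTA and the helper names `vold_rate` and `cumulative` are hypothetical illustrations, not the author's procedure. The sketch computes α(t) with and without the C_S·dΔT/dt term: because that term integrates to C_S·[ΔT(end) − ΔT(start)]/ΔH, it leaves the final α unchanged once the signal returns to the background, but it redistributes the partial areas and therefore alters every intermediate α.

```python
import math

def vold_rate(t, dT, C_S, K_DTA, dH, baseline=0.0, include_inertia=True):
    """Reaction rate from DTA data: dα/dt = [C_S*dΔT/dt + K_DTA*(ΔT - Δ0T)] / ΔH."""
    n = len(t)
    rates = []
    for i in range(n):
        # Numerical dΔT/dt: one-sided difference at the ends, central difference inside.
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        slope = (dT[hi] - dT[lo]) / (t[hi] - t[lo])
        inertia = C_S * slope if include_inertia else 0.0   # heat-inertia term
        rates.append((inertia + K_DTA * (dT[i] - baseline)) / dH)
    return rates

def cumulative(t, y):
    """Cumulative trapezoidal integral of y(t), starting at zero."""
    out = [0.0]
    for i in range(1, len(t)):
        out.append(out[-1] + 0.5 * (y[i] + y[i - 1]) * (t[i] - t[i - 1]))
    return out

# Hypothetical Gaussian DTA peak: 2 K high, centred at 50 s, sampled every 0.5 s.
t = [0.5 * i for i in range(201)]
dT = [2.0 * math.exp(-((ti - 50.0) / 8.0) ** 2) for ti in t]
C_S, K_DTA = 0.5, 0.05                   # J/K and W/K, illustrative values only
dH = K_DTA * cumulative(t, dT)[-1]       # scaled so that alpha ends at 1

alpha_simple = cumulative(t, vold_rate(t, dT, C_S, K_DTA, dH, include_inertia=False))
alpha_inertia = cumulative(t, vold_rate(t, dT, C_S, K_DTA, dH))
i40 = 80                                 # index of t = 40 s
print(f"alpha(40 s): {alpha_simple[i40]:.3f} without, {alpha_inertia[i40]:.3f} with inertia term")
print(f"final alpha: {alpha_simple[-1]:.3f} without, {alpha_inertia[-1]:.3f} with inertia term")
```

On the rising flank, where dΔT/dt > 0, the inertia-corrected α runs ahead of the simplified one, which is exactly the region that conventional kinetic evaluation relies on.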

In the mid-1960s a new approach appeared, based on reheating the samples with attached micro-heaters so that both sample temperatures were kept equal and the heat needed for reheating became the measured variable [59, 60]. In principle, this method differed from classical DTA because the temperature difference was not a measured quantity but was kept at a minimum and used merely for regulation. For this innovative measurement, called differential scanning calorimetry (DSC), the solution of heat flows was simpler because the heat-inertia term was absent. However, the new term DSC became fashionable, and almost all equipment manufacturers started renaming their DTA instruments as DSC on the basis of an additional calibration in which the renormalized heat flux, instead of the classical temperature difference, was taken as the measured quantity. In such a case, it would be correct to use the name heat-flow DSC. It is important to keep in mind, however, that the whole principle of DTA is preserved and, with it, the necessity of accounting for the effect of thermal inertia.

Calibration, deconvolution, rectification and heat pulse testing

In its early stages, thermal analysis research focused on the design and construction of instruments, often built in home laboratories from the parts available at the time [61], which was a frequent subject of early books [34,35,36,37,38,39,40,41,42]. Instrument applications focused on fingerprinting [43] and the identification of characteristic temperatures [31,32,33,34, 44], for which the instrument had to be calibrated using the thermal behaviour of well-known standards [62, 63]. Calibration became necessary in order to obtain data that match what another instrument would obtain [64,65,66], an effort in which a number of researchers from various countries were once involved under the banner of the multinational ICTAC [67, 68]. With the increasing sophistication of advanced equipment, however, such calibration has become part of the manufacturers' setups and thus unnecessary for the user. Another special calibration nevertheless remained necessary when converting DTA to heat-flow DSC via the standardization relation Δ(dq/dt) = K_DTA·ΔT.
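The standardization relation Δ(dq/dt) = K_DTA·ΔT can be illustrated by a single-point calibration: integrating it over the melting peak of a standard gives m·Δh = K_DTA·∫ΔT dt, so K_DTA follows from the peak area. This is a minimal sketch assuming a temperature-independent K_DTA (in practice it varies with temperature and needs several standards); the triangular peak, the 10 mg mass and the function names are hypothetical, and the enthalpy of fusion of indium is taken as about 28.6 J/g, a commonly quoted value.

```python
def peak_area(t, dT, baseline=0.0):
    """Trapezoidal area of (ΔT - baseline) over time, in K·s."""
    return sum(0.5 * ((dT[i] - baseline) + (dT[i - 1] - baseline)) * (t[i] - t[i - 1])
               for i in range(1, len(t)))

def calibrate_K_DTA(mass_g, dh_J_per_g, t, dT, baseline=0.0):
    """K_DTA in W/K chosen so that K_DTA * area reproduces the known heat m*Δh of a standard."""
    return mass_g * dh_J_per_g / peak_area(t, dT, baseline)

# Hypothetical melting peak of an indium standard: triangle of 0.5 K height, 20 s wide.
t = [float(i) for i in range(21)]
dT = [0.5 * (1.0 - abs(i - 10) / 10.0) for i in range(21)]
K = calibrate_K_DTA(0.010, 28.6, t, dT)   # 10 mg sample, Δh_fus(In) taken as about 28.6 J/g
heat_flow = [K * x for x in dT]            # Δ(dq/dt) = K_DTA·ΔT, in W
print(f"K_DTA = {K:.4f} W/K, integrated heat = {peak_area(t, heat_flow):.4f} J")
```

Because the peak area is insensitive to the inertia term (it integrates to zero between baselines), this kind of calibration alone cannot reveal whether the shape of the converted heat-flow curve is distorted.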

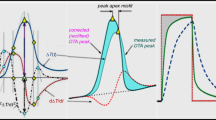

It should be noted that there remains a method of improving the resolution of experimental data by deconvolution, a mathematical algorithm that removes measurement noise and artefacts and also allows overlapping effects to be resolved [69,70,71,72,73]. However, deconvolution is often confused with rectification (refinement), which according to the dictionary means improving accuracy, sensitivity or anything that represents exactness. Rectification is thus appropriate only for converting the as-measured DTA curve into a form that takes into account the inseparable process of heat transfer causing time delays. Such an improvement is, however, unpopular, because it brings a significant mathematical complication compared with the traditional (simplified) procedures introduced in the early theory of thermal analysis, where heat transfer is treated as instantaneous, even though this contradicts Newton's old law [74,75,76]. With some exaggeration, it can be said that the old-new scientific approach proposed by Newton will not prevail over the established procedures commonly used during the last three hundred years. Its modern acceptance may come only when the generation of current practitioners dies out and a new generation begins to regard it as acceptable. Historically significant is that the validity of this approach can be verified by evaluating an artificially introduced temperature pulse with the heat-flow equation [55, 56], cf. Equation 1. It must be emphasized that instead of the traditional linear background of the DTA peak, a real S-shaped background appears, because it is affected by heat transfer. Such distortion of the embedded rectangular pulses, shown in Fig. 1, is not only common in the current literature [78,79,80] but was known much earlier, when it was presented by Tian in 1933 [47]: so nothing groundbreaking, just a traditional omission of historical facts.

Illustrative set of four published experimental curves [47, 77,78,79,80,81] obtained as responses to an artificially inserted rectangular thermal pulse introduced into the sample, and their subsequent processing by rectification to confirm the effect of thermal inertia. The first from the left reproduces Tian's original experiment from 1933 [46, 47] without corrections. The second results from electric heating by a resistance wire placed inside the sample [78], while the last shows a heat pulse applied to the crucible from outside [80]. In the third, the thermal pulse is introduced by external laser irradiation of the sample surface [79]. All curves are normalized (vertical axis temperature, horizontal axis time) so that their shapes are comparable. The schematic illustration shows that the corrections for the various types of measurement agree and correspond to thermal-inertia corrections: the lowest-lying, as-measured curve is raised by rectification so that its shape approaches that of the input pulse. The small area in the upper left between the rectified peaks and the injected pulse is due to temperature gradients not included in the calculation.
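The rectification illustrated in Fig. 1 can be sketched with the simplest Newtonian model, assuming a single time constant τ = C_S/K_DTA so that Eq. (1) reduces to τ·dy/dt + y = u (y the as-measured signal, u the inserted pulse). This is a hypothetical illustration, not a reproduction of the cited experiments: the pulse, τ and the function names are assumptions, and because only one lumped time constant is modelled, the residual caused by temperature gradients is absent. Rectification is then simply u ≈ y + τ·dy/dt.

```python
import math

def first_order_response(t, u, tau):
    """As-measured signal of a first-order (Newtonian) sample: tau*dy/dt + y = u,
    integrated exactly with u held constant over each time step."""
    y = [0.0]
    for k in range(len(t) - 1):
        q = math.exp(-(t[k + 1] - t[k]) / tau)
        y.append(u[k] + (y[k] - u[k]) * q)
    return y

def rectify(t, y, tau):
    """Estimate the input as u = y + tau*dy/dt (forward difference; drops the last sample)."""
    return [y[k] + tau * (y[k + 1] - y[k]) / (t[k + 1] - t[k]) for k in range(len(t) - 1)]

h, tau = 0.1, 5.0                                          # time step and time constant in s (hypothetical)
t = [k * h for k in range(1001)]                           # 0 ... 100 s
u = [1.0 if 200 <= k < 400 else 0.0 for k in range(1001)]  # rectangular pulse from 20 s to 40 s
y = first_order_response(t, u, tau)                        # smeared peak with a long tail
r = rectify(t, y, tau)                                     # close to the inserted pulse again

err_measured = max(abs(a - b) for a, b in zip(y, u))
err_rectified = max(abs(a - b) for a, b in zip(r, u))
print(f"max deviation from the pulse: measured {err_measured:.2f}, rectified {err_rectified:.3f}")
```

The as-measured curve misses the pulse edges by almost the full pulse height, while the rectified curve stays within about one per cent of it for this step size.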

Degree of conversion, equilibrium background and related kinetics

In thermal analysis, it is important to determine the degree of reaction, α, properly; this is habitually done by simple proportioning of the areas of the thermal effect, especially the peak [81,82,83,84]. As follows from Fig. 1, the values of α obtained depend on whether we take the as-measured peak or its rectified form. In the traditional kinetic approach to non-isothermal measurements, the former is conventionally favoured because of its historical roots [30,31,32,33,34,35,36,37,38,39,40,41,42], and the question arises how far the kinetic outputs would change if inertia were taken into account and whether this would invalidate previously published data. Yet another issue is the evaluation of overlapping peaks, which is used to identify interconnected or otherwise associated processes: should rectification be carried out on the as-measured peaks, as in the traditional approach, or only after the overlapping peaks have been separated [76]?
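How strongly the choice between the as-measured and the rectified peak affects α can be checked with a small numerical experiment. This sketch assumes a Gaussian "true" heat-release rate, a single Newtonian time constant τ and α defined as the partial peak area divided by the total area; all values and function names are hypothetical.

```python
import math

def lagged(t, u, tau):
    """As-measured signal of a first-order (Newtonian) sensor: tau*dy/dt + y = u."""
    y = [0.0]
    for k in range(len(t) - 1):
        q = math.exp(-(t[k + 1] - t[k]) / tau)
        y.append(u[k] + (y[k] - u[k]) * q)
    return y

def degree_of_conversion(t, y):
    """alpha(t): partial peak area divided by total peak area (trapezoid rule)."""
    cum = [0.0]
    for i in range(1, len(t)):
        cum.append(cum[-1] + 0.5 * (y[i] + y[i - 1]) * (t[i] - t[i - 1]))
    return [c / cum[-1] for c in cum]

h, tau = 0.1, 5.0                                            # s; illustrative only
t = [k * h for k in range(2001)]                             # 0 ... 200 s
u = [math.exp(-((tk - 60.0) / 10.0) ** 2) for tk in t]       # assumed "true" heat release
y = lagged(t, u, tau)                                        # as-measured peak
r = [y[k] + tau * (y[k + 1] - y[k]) / h for k in range(len(t) - 1)] + [y[-1]]  # rectified

k60 = 600                                                    # index of t = 60 s
for name, sig in (("true", u), ("as-measured", y), ("rectified", r)):
    print(f"alpha({t[k60]:.0f} s) from {name} peak: {degree_of_conversion(t, sig)[k60]:.3f}")
```

At the apex of the true process, the as-measured peak reports a markedly lower α than the rectified one, which is the discrepancy that would propagate into any kinetic evaluation based on partial areas.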

In the thermoanalytical mode of increasing temperature, the course of a phase transformation depends not only on the rate of change but also, and especially, on the situation behind the phase equilibrium, the so-called equilibrium background [82,83,84,85,86]. Its extent is determined by the type of phase transformation and can be characterized by the equilibrium advancement of the process, λeq. The classical degree of reaction, α, is then defined as the proportion λeq/λ, where λ is the true non-isothermal degree of reaction [83, 84]. Its derivation was called the Holba-Šesták equation in the literature [85], in honour of my late colleague, who was responsible for a number of the clarifications mentioned in this review [84].

Both of the criteria mentioned above indicate a new basis for non-isothermal kinetics, even though they have not yet been widely adopted in the literature [82, 86]. Current thermoanalytical kinetics still depends on the style of publications from the turn of the sixties [87,88,89] (well reviewed in [90]), which were in fact converted from the traditional isothermal version [86]. In addition, such publications mainly deal with the geometrical description of possible elementary routes taking place at a simple phase interface between reactant and product [90,91,92] and say little about the thermal phenomena that naturally accompany these processes [93]. This is not a criticism of the current level of kinetic papers, because they represent a legitimate approach to the problem of kinetics under certain simplifying assumptions, since reaching full reality is often neither possible nor evaluable. They are usually excellent essays full of mathematical wisdom and insight that deserve full appreciation and respect, e.g. [91, 92]. However, variants involving specific aspects of a truer non-isothermal regime remain open and will certainly become available in the future, although heat transfer was properly included in kinetics earlier [93].

The situation is unclear in the field of isoconversional methods [94,95,96], including the popular Kissinger method based on the shift of the peak apex with increasing heating rate [97,98,99,100,101], because it seems that some of the inertia peculiarities that make the non-isothermal description controversial may compensate for each other [52]. Likewise, the theoretical basis of kinetics regarding the use of the popular Arrhenius equation [102,103,104] is not sufficiently clarified, owing to the interconnection of the pre-exponential factor with the exponent (activation energy) [105, 106], nor has their true meaning been identified [104, 105].
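For reference, the Kissinger method rests on the relation ln(β/Tp²) = const − E/(R·Tp), so the activation energy follows from the slope of ln(β/Tp²) against 1/Tp. The sketch below generates peak temperatures for an ideal first-order reaction (for which the relation is exact) from hypothetical values E = 150 kJ/mol and A = 10¹² s⁻¹, and then recovers E by least squares. It deliberately contains no thermal inertia, so it shows only how the method works, not whether inertia effects compensate; the function names are illustrative.

```python
import math

R = 8.314462618  # gas constant, J/(mol K)

def kissinger_peak_temperature(beta, E, A, lo=300.0, hi=2000.0):
    """Peak temperature of an ideal first-order reaction at heating rate beta (K/s).

    Solves beta*E/(R*Tp**2) = A*exp(-E/(R*Tp)) by bisection; the left side falls and
    the right side rises with T, so the root in [lo, hi] is unique.
    """
    def f(T):
        return beta * E / (R * T * T) - A * math.exp(-E / (R * T))
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid   # still below the peak temperature
        else:
            hi = mid
    return 0.5 * (lo + hi)

def kissinger_activation_energy(betas, peak_temps):
    """Least-squares slope of ln(beta/Tp^2) versus 1/Tp; the slope equals -E/R."""
    xs = [1.0 / T for T in peak_temps]
    ys = [math.log(b / (T * T)) for b, T in zip(betas, peak_temps)]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return -R * sxy / sxx

# Synthetic, inertia-free data; E and A are hypothetical values.
E_true, A_true = 150e3, 1e12
betas = [b / 60.0 for b in (2.0, 5.0, 10.0, 20.0)]   # 2 ... 20 K/min expressed in K/s
peaks = [kissinger_peak_temperature(b, E_true, A_true) for b in betas]
E_fit = kissinger_activation_energy(betas, peaks)
print("peak temperatures / K:", [round(T, 1) for T in peaks])
print(f"recovered E = {E_fit / 1e3:.2f} kJ/mol")
```

For other reaction models the same plot is only an approximation, and any systematic, rate-dependent lag of the measured apex shifts the points, which is where the inertia question raised above enters.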

Extreme experimental conditions and temperature value

Continuous instrument sophistication concerned not only structural improvements but also innovations in controlled heating, in which constant heating was modulated [107,108,109,110,111,112,113] by superimposing short-term temperature changes of plus or minus a few degrees. Such periodic temperature changes above and below the base heating line made it possible to capture even minor changes caused by frozen-in undercooling or by the off-equilibrium imbalance due to delays in the formation of metastable phases. As has historically been the case, the system is characterized by a single temperature value, which averages over the temperature gradients. As shown in [81, 86], these inverted U-shaped gradients regularly overturn, and it remains unclear how far they affect the measurements themselves and whether thermal inertia plays a role. This is just one of the possible challenges of the future.
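Whether a modulated program actually drives the sample below the base heating line can be judged from the instantaneous rate. Assuming the common sinusoidal form T(t) = T0 + β·t + A·sin(ωt), the rate is β + A·ω·cos(ωt), so periodic cooling occurs whenever A·ω > β. The amplitudes, period and function names below are hypothetical choices for illustration.

```python
import math

def modulated_program(t, T0, beta, amplitude, period):
    """Temperature and instantaneous rate for T(t) = T0 + beta*t + A*sin(2*pi*t/P)."""
    omega = 2.0 * math.pi / period
    T = T0 + beta * t + amplitude * math.sin(omega * t)
    rate = beta + amplitude * omega * math.cos(omega * t)
    return T, rate

def heat_cool(beta, amplitude, period):
    """True when the modulation drives the rate negative (A*omega > beta), i.e. the
    sample is periodically cooled; False for a 'heat-only' program."""
    return amplitude * 2.0 * math.pi / period > beta

beta = 2.0 / 60.0                     # underlying rate: 2 K/min in K/s
for A in (0.2, 0.5):                  # modulation amplitudes in K (hypothetical), period 60 s
    rates = [modulated_program(t, 300.0, beta, A, 60.0)[1] for t in range(0, 61)]
    print(f"A = {A} K: min rate {min(rates) * 60:.2f} K/min, heat-cool: {heat_cool(beta, A, 60.0)}")
```

With a 60 s period, an amplitude of 0.2 K keeps the sample heating throughout, whereas 0.5 K already produces alternating heating and cooling, i.e. the regime in which the sign of the internal gradients overturns in every cycle.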

Significant advances in semiconductor sensor chips [114] allowed instrument design to move away from traditional crucibles, i.e. cells mounted on thermocouple junctions, towards placing micro-discs, or even the samples themselves, directly onto flat microchips. Ultra-high sensitivity then made it possible to use extreme rates instead of traditional heating rates of degrees per minute [115,116,117], especially on cooling, thus reaching an unimaginable 10³ K/s. Despite some logical concerns, this method has become very useful and widespread, also among instrument manufacturers, and the results are usually well extrapolable to classical measurements at standard heating rates. Despite the rather tolerable analysis of the thermal gradients involved [118, 119], concern about the veracity of such results persists, mainly because of the definition of temperature, which in thermodynamics is defined exclusively on the basis of equilibrium and can be appropriately extrapolated only to heating regimes satisfying the constancy of the first derivatives [81, 82]. In such cases of extreme changes very far from equilibrium, the temperature term may be redefined by a new expression that better corresponds to a deep non-equilibrium state, for example the as yet unusual tempericity [120,121,122]. The appropriate terminology for the new task of experimentally detecting off-equilibrium temperature is again a subject for future clarification [126].
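A rough feel for why extreme rates demand extremely small sensors follows from the Newtonian model: a sample with time constant τ, heated along T = T0 + β·t from equilibrium, lags by β·τ·(1 − e^(−t/τ)), which becomes the constant β·τ once t ≫ τ (the condition of constant first derivatives). The time constants below, about 10 s for a conventional crucible and 1 ms for a chip, are hypothetical round numbers, not measured values.

```python
import math

def lag(beta, tau, t):
    """Lag of a first-order (Newtonian) sample behind the program T = T0 + beta*t,
    starting from equilibrium: beta*tau*(1 - exp(-t/tau)), which tends to beta*tau."""
    return beta * tau * (1.0 - math.exp(-t / tau))

# Hypothetical time constants: ~10 s for a conventional crucible, ~1 ms for a chip sensor.
cases = {
    "crucible, 10 K/min": (10.0 / 60.0, 10.0),   # (heating rate in K/s, tau in s)
    "chip, 1000 K/s": (1000.0, 1e-3),
}
for name, (beta, tau) in cases.items():
    scan = 100.0 / beta                            # time needed to sweep 100 K
    print(f"{name}: steady lag {beta * tau:.2f} K, tau/scan = {tau / scan:.3f}, "
          f"lag after 100 K sweep {lag(beta, tau, scan):.2f} K")
```

Comparable lags of about one kelvin at 10³ K/s require a time constant roughly four orders of magnitude smaller than in a crucible, which is what chip calorimetry provides.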

Furthermore, regarding the feasibility of such non-equilibrium temperature measurement, a comparison with the classic Heisenberg uncertainty principle [123, 124] is offered, which states that the position, x, and the velocity, dx/dt (strictly, the momentum), of an object cannot both be measured exactly at the same time. The complete rule stipulates that the product of the uncertainties is proportional to the Planck constant, h. A similar interdependence principle can thus be assumed for temperature, T, and the rate of its change (i.e. heating/cooling, dT/dt), which apparently cannot both be measured simultaneously with sufficient accuracy either [122]. This view should be correlated with the state of propagation, mobility and momentum of the micro-particles (molecules) in the measured system, with the correlating constant possibly corresponding to its heat capacity, Cp.

The determining role of the first derivatives, related thermodynamics and variant thermotics

The field of thermal analysis is specific in its dynamism and obviously cannot be described by the classical approach of textbook thermodynamics, the so-called thermostatics [125], which admits only small deviations from equilibrium. Even the theory of non-equilibrium thermodynamics [126, 127] of irreversible processes [128, 129] is not the anticipated solution, because it relies on dividing the system into small regions, each in local equilibrium, and does not describe the behaviour of the entire system as such, which is necessary in thermoanalytical studies. Therefore, a modified thermodynamics was proposed [82, 130], in which the studied system is maintained under conditions of constant first derivatives, as occurs under linear heating. In this case, the validity of classical thermodynamic laws and data is preserved [130]. We thus depart from Carnot's classical approach [19], which assumes dissipation-less work, and turn to workless dissipation dynamics respecting the laws of Fourier, Fick and Ohm [131], as well as the Onsager reciprocal relations, which express the equality of certain ratios between flows and forces in thermodynamic systems out of equilibrium [132]. It is important to underline that the notion of temperature applies here without any problems.
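For orientation, the linear flux–force laws named above can be written in their standard textbook form (the symbols are chosen here for illustration: κ thermal conductivity, D diffusion coefficient, σ_el electrical conductivity, φ electric potential). The general linear relation between fluxes J_i and conjugate forces X_j (e.g. ∇(1/T) for heat flow), with the Onsager reciprocity valid in the absence of magnetic fields and rotation, and the non-negative entropy production read:

$$\mathbf{q} = -\kappa\,\nabla T, \qquad \mathbf{J} = -D\,\nabla c, \qquad \mathbf{j} = \sigma_{el}\,\mathbf{E} = -\sigma_{el}\,\nabla\varphi$$

$$J_i = \sum_j L_{ij}\,X_j, \qquad L_{ij} = L_{ji}, \qquad \sum_i J_i\,X_i \ge 0$$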

Of course, in the experimental practice of thermal analysis, we depart from the above scheme during actual measurements of thermally affected processes, but we still accept that temperature remains relevant and applicable. For these conditions, the domain term thermotics (by analogy with mathematics) proposed by Tykodi [133] should be used, as previously elaborated and recalled in [121]. However, in the case of rapid temperature changes [115, 116], a completely different situation arises, which may not preserve the validity of classical thermodynamic values. In addition, temperature ceases to be a classical quantity and must therefore be redefined and replaced by a novel non-equilibrium tempericity [120]. This is a theoretical area that deserves further study and thus remains the sought-after thermoanalytical music of the future [130].

The design of temperature measurement itself is undergoing a gradual transition from classic thermocouples to microchips, i.e. from classic single-point measurement to measurement over the entire sample surface. Comparing single-point and whole-surface values leads to surprising findings, showing that the two cases yield incomparable and often differently applicable figures [122]. This calls for re-evaluating and including the influence of temperature gradients, which were already considered important in the past [122, 134] and need to be restored in future, more accurate analyses.
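The difference between a single-point and a sample-averaged reading can be estimated for the simplest case, a one-dimensional slab of half-thickness L heated at a constant rate β on both faces. Once the quasi-stationary regime is reached, the heat equation gives T(x) = T_s − β·(L² − x²)/(2a), with a the thermal diffusivity, so the centre lags the surface by βL²/(2a) while the thickness average lags by only βL²/(3a). This sketch assumes constant properties and no reaction heat; the numbers and function names are hypothetical. On heating the profile is U-shaped with the coldest point at the centre; on cooling it inverts.

```python
def slab_temperature(x, T_surface, beta, L, a):
    """Quasi-stationary temperature in a slab of half-thickness L heated on both faces
    at constant rate beta: T(x) = T_s - beta*(L**2 - x**2)/(2*a), x from the mid-plane."""
    return T_surface - beta * (L * L - x * x) / (2.0 * a)

def centre_vs_mean(T_surface, beta, L, a, n=2001):
    """Return (single-point centre value, thickness-averaged value) via the trapezoid rule."""
    h = 2.0 * L / (n - 1)
    Ts = [slab_temperature(-L + i * h, T_surface, beta, L, a) for i in range(n)]
    mean = (sum(Ts) - 0.5 * (Ts[0] + Ts[-1])) * h / (2.0 * L)
    return slab_temperature(0.0, T_surface, beta, L, a), mean

# Hypothetical values: 10 K/min, 1 mm half-thickness, diffusivity 1e-7 m^2/s.
beta, L, a = 10.0 / 60.0, 1e-3, 1e-7
centre, mean = centre_vs_mean(500.0, beta, L, a)
print(f"centre lags surface by {500.0 - centre:.3f} K (theory beta*L^2/(2a))")
print(f"mean lags surface by   {500.0 - mean:.3f} K (theory beta*L^2/(3a))")
```

Even in this idealized case the two readings differ by a third of the centre lag, so a single number cannot stand for "the" sample temperature without specifying where and how it was taken.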

Another problem arises from the tendency to make samples as small as possible. Below a certain size, the sample surface becomes comparable with the volume, and the sought bulk properties become unrepresentative. In addition, the grain size in often heterogeneous samples may also become decisive, and its gradual reduction brings another dimensional variable, the surface of the particles, into the standard thermodynamic description. Therefore, most nano-systems possess an extra degree of freedom [135,136,137]. Temperature values are then linked to the size (diameter) of the participating particles, as known from the history of colloid studies, especially the dependence of Tcurve/Tflat on the ratio of surface tension to particle radius [137]. Surprisingly, this particle effect can manifest itself in the same way as the effect of cooling rate during quench preparation of materials, which opens a new area of real phase diagrams reflecting actual technological conditions, the so-called kinetic phase diagrams [138].
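One common continuum form of the size dependence of melting is the Gibbs–Thomson relation for a sphere of radius r, T(r) = T_bulk·[1 − 2·σ_sl·V_m/(ΔH_m·r)], in which the relative depression 1 − T(r)/T_bulk is proportional to the interfacial energy σ_sl and inversely proportional to r. The parameter set below is hypothetical and metal-like, not data for any particular substance, and the relation loses validity when r approaches atomic dimensions.

```python
def gibbs_thomson_melting(T_bulk, sigma_sl, V_m, dH_m, r):
    """Melting temperature of a spherical particle of radius r (m):
    T(r) = T_bulk * (1 - 2*sigma_sl*V_m / (dH_m * r)),
    sigma_sl: solid-liquid interfacial energy (J/m^2), V_m: molar volume of the solid (m^3/mol),
    dH_m: molar enthalpy of fusion (J/mol)."""
    return T_bulk * (1.0 - 2.0 * sigma_sl * V_m / (dH_m * r))

# Hypothetical, metal-like parameter set (not data for any particular substance).
for r_nm in (2, 5, 20, 100):
    T = gibbs_thomson_melting(600.0, 0.1, 1e-5, 5000.0, r_nm * 1e-9)
    print(f"r = {r_nm:>3} nm: T_m = {T:.1f} K")
```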

This is a new area of research in which we encounter a critical limit where the structure loses its order. In melt quenching this is the glassy state, while in the wet casting of polysialates it is amorphousness. The same breakthrough can be found when modelling a cluster of particles, for example assembled from spheres in a cubic arrangement. The first internal particle appears in a cluster of 27 spheres (one sphere enclosed by 26), and the cube motif with 8 internal particles appears at a cluster of 64. The limit at which there are as many particles on the surface as inside occurs at about 350 in total, which is also confirmed by structural studies. These considerations emphasize the way ultra-small systems are described; such systems are also beginning to be studied by specialized thermal methods and represent the ultimate challenge of the future [137,138,139], see below.

Constrained states of glasses and the role of dimensions

Following on from the previous paragraph, it is worth mentioning that thermal research has found new directions and dimensions following the development of materials science and its thermodynamic understanding. Surprisingly, research on glasses, i.e. on materials produced under strongly non-equilibrium conditions, is more recent than the discovery of radioactivity, and their characterization and identification are made possible by the methods of thermal analysis. In this context, one of the greatest achievements of DTA is its irreplaceable role in detecting the glass transition, which characterizes precisely the emergence and disappearance of the non-equilibrium glassy state [140,141,142,143]. In materials research, this has extended to metallic glasses [144, 145], amorphous geopolymers [146,147,148,149], molecular glasses [150,151,152] and bioglasses [153, 154], all conveniently studied by DTA.

New directions of materials science reaching very small dimensions deserve special attention. This is the home field of nanomaterials and the related nano-thermodynamics [135, 154,155,156], which distinguishes between the cohesive energy of the core (bulk) and that of the shell (surface); this difference thus becomes decisive for behaviour and description. This binding energy model rationalizes the following:

(i) how surface dangling bonds reduce the melting point and the entropy and enthalpy of melting;

(ii) how the order–disorder transition of nanoparticles depends on particle size and on the particles' stability in the given matrix;

(iii) the predicted existence of face-centred cubic structures at small sizes;

(iv) the formation at the nanoscale of alloys whose components are immiscible in the bulk, allowing prediction of their critical size and of the shape dependence of a number of physical properties such as the melting temperature, entropy of melting, enthalpy of melting, ordering temperature, Gibbs free energy and heat of formation.
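A minimal core–shell sketch of the idea behind items (i) and (iv) is given below in Python. It assumes that the surface atoms are those in an outer shell one atomic diameter d thick, so that their fraction in a sphere of diameter D is f_s = 1 − (1 − 2d/D)^3; that each surface atom keeps only a fraction k of the bulk cohesive energy; and that the melting temperature scales with the mean cohesive energy. The value k = 0.5 and the atomic diameter are hypothetical, and this is an illustration of the core–shell reasoning, not the specific formulation of [135, 154,155,156].

```python
def surface_fraction(diameter: float, atom_d: float) -> float:
    """Fraction of atoms in an outer shell one atomic diameter thick."""
    if diameter <= 2 * atom_d:
        return 1.0  # the particle is essentially all surface
    return 1.0 - (1.0 - 2.0 * atom_d / diameter) ** 3


def relative_melting_point(diameter: float, atom_d: float, k_surface: float = 0.5) -> float:
    """Tm(D)/Tm(bulk), assuming Tm is proportional to the mean cohesive energy.

    k_surface: hypothetical ratio of surface-atom to bulk-atom cohesive energy.
    """
    f_s = surface_fraction(diameter, atom_d)
    # Mean cohesive energy relative to bulk: (1 - f_s) * 1 + f_s * k_surface
    return 1.0 - f_s * (1.0 - k_surface)


ATOM_D = 0.3e-9  # m, hypothetical atomic diameter
for d_nm in (2.0, 5.0, 10.0, 50.0):
    d = d_nm * 1e-9
    print(f"D = {d_nm:5.1f} nm  f_s = {surface_fraction(d, ATOM_D):.3f}  "
          f"Tm/Tm_bulk = {relative_melting_point(d, ATOM_D):.3f}")
```

For D much larger than d, f_s ≈ 6d/D, so this bond-counting picture also yields a melting-point depression that scales with 1/D, in line with the colloid relationship quoted earlier.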

Furthermore, such modelling predicts, for example, the thermal stability of metal particles on graphene, the superheating of embedded nanoparticles, the order–disorder transition of nano-alloys, the size–temperature kinetic phase diagram [137, 138] of low-dimensional solids and their alloying ability at the nanoscale. This specialty has also developed its own thermoanalytical focus [157,158,159].

The importance of small dimensions also brought about a new investigative technique in which the surface nanostructure is examined directly (mechanically) by scanning it with a suitably chosen probe, a needle whose tip is almost one atom wide [160]. Surprisingly, this was carried over into the new technique of microthermal analysis, which uses adapted scanning thermal microscopy and in effect performs a localized mapping of the distribution of various gradients [160,161,162,163].

The exceptional field of quantum phenomena [164,165,166,167] is not far from the study of low dimensions and needs to be mentioned, too. In general, new constraints are being discovered that govern spontaneous thermodynamic processes, in particular the fundamental limits on extracting work from non-equilibrium states, owing both to the finite-size effects present at the nanoscale and to quantum coherence. This means that thermodynamic transitions are generally irreversible at the nanoscale; to what extent this holds, and what the condition for reversibility is, have become the subject of ongoing discussion [167], particularly at regular conferences [168].

Quantum effects are also reflected in everyday measurements of curious diffusion phenomena, which control recurrent processes of self-organization in which Planck's constant appears as the factor binding distance (or wavelength), mass and propagation speed, even though the diffusion proceeds macroscopically [168,169,170,171,172]. Specific deterministic problems arise not only in low dimensions but also on the macroscale of the cosmos and in the relativistic concept of, for example, temperature [173, 174], which, like quantum phenomena, falls outside the scope of this review.

Journals and books on thermal analysis

Thermal analysis established itself as both an emerging and an important field [14, 15], which enabled the creation of dedicated journals such as the Journal of Thermal Analysis (J. Simon, since 1969) and Thermochimica Acta (W. W. Wendlandt, since 1970); over the years, both have achieved wide use and substantial impact factors. Of course, thermoanalytical studies were published earlier; in addition to the already mentioned Anal Chem [31, 32], the Czech journal Silikáty [175] is worth mentioning. Today, other (so-called predatory) journals have been created that require a financial contribution from the author, such as the modern periodicals Thermo (ISSN 2510-2702) or Chemical Thermodynamics and Thermal Analysis (ISSN 2667-3126). However, it must be remembered that such an often-condemned policy of author-paid open access is no worse than the standard model of paying to read an article published in a subscription journal, which is often available only in funded libraries. Obviously, nothing is free when it comes to obtaining published data, and this also applies to the field of thermal analysis [175, 176, 177], which needs some explanation that is not commonly available.

Unfortunately, it is worth noting that the forced publication boom is a product of the growing tendency to present results at any cost, as captured by the well-known slogan “Publish or perish”. Behind all this is the related need to find the funding necessary for scientists' research survival [175,176,177,178,179,180,181]. In fact, the once admired joy of knowledge and the pleasure of discovering new things are gradually being replaced by the pursuit of financial well-being. On the other hand, the pursuit of funds also shows in journals' tendency to reject manuscripts already at submission because of the excess of submitted texts. This is habitually done by short-term editors, often recruited from recent graduates, who may reject a manuscript based only on their (in)experience and feelings. Unfortunately, this applies to journals with a high impact factor. A related problem is the level of peer review, where professionalism is sometimes mixed with personal distaste for some areas of (often competing) research, sometimes bordering on politics. Another problem is that originality and thematic innovation are sometimes mistaken for foolishness unsuitable for publication, even though they may be recognized as novel in the future. Computerized text comparison has largely eliminated plagiarism as a problem, but in some cases its application almost turns into harassment and torment, especially for review papers and books, which cannot avoid some self-repetition.

The content of a number of publications need not coincide with the mainstream scientific approach, and some papers deviate from established customs into the so-called dissident area [177,178,179,180,181], a term otherwise used mainly in politics. It is known from history (most famously Galileo or Einstein) that whenever a power structure is threatened by novel ideas, an authoritative attempt to suppress those ideas can be anticipated. Thus, authors who dare to publish an article in a journal labelled dissident often lose the opportunity to return to a regular periodical, although the differences between the standard and predatory approaches are gradually fading. Dissent is nevertheless crucial for the advancement of science, because disagreement is at the heart of rigorous peer review and is important for uncovering unjustified assumptions and problematic reasoning. Enabling and encouraging dissent also helps to generate alternative hypotheses, models and explanations. A typical example is the journal Apeiron, which became a forum for unorthodox (thus dissident) opinions not often accepted by conventional journals, mostly based on highly novel ideas bordering on extraordinary speculation, and which thus attracted notable contributions from authors engaged in early work on, e.g., quantum mechanics, relativity and even heat transfer. Even in our native field of thermal research, we meet concepts that are not often welcomed by ordinary users, typically thermal inertia or tempericity. It is commendable that some journals, such as the Journal of Thermal Analysis, dared to publish unconventional articles whose content fell between the avant-garde and dissident areas [74, 120, 148, 182] and thus demonstrated a welcome overlap of content [183].

It is pleasing to see that the Journal of Thermal Analysis and Calorimetry is cited in almost all innovative contributions and, as such, provides a representative overview of thermoanalytical publishing [183], which mostly concerns solids and materials. This calls for theoretical foundations of solids, represented first by the historical book by Swalin [184] and today by compendia [185,186,187,188]. However, it should be added that this book does not provide an entirely adequate view of the inventive issues of solids; it still relies on textbooks derived from traditional liquid–vapour relations and does not emphasize solid-state specialties such as non-crystalline constrained states or solid-state reactivity and stoichiometry, already revealed in our early books [189, 190]. I am glad to have helped advance materials science by publishing a triptych of books with Springer [191,192,193], with contributions from outstanding co-authors renowned for innovative solid-state work (e.g. [151, 158]); each of the three books has scored over ten thousand downloads. This was supplemented by the author's book [195], a second and extended edition of [190] with completely revised content building on the previously published monographs [189, 190].

The content of all four books [191,192,193,194] includes, in addition to the theoretical foundations of thermal analysis conceived in the traditional framework of heat flows and their inertia, specialized chapters on materials. These cover sought-after topics such as glass formation, structural relaxation, crystallization and nucleation, kinetics of solid-state reactions, non-equilibrium phase diagrams, non-stoichiometry, periodic processes, geopolymers and nanomaterials, supplemented by an unusual thermodynamics of social relations and a thermoanalytical climate scheme. We believe that this opens the desired path towards a more modern analysis of the thermodynamic connections [130] of thermal analysis.

An important remark in this respect concerns the still problematic and unsettled state of the theory of real solids and of the related applications and theories of thermal analysis. This controversy is well illustrated by the two most recently published books [196, 197], which are worth closer analysis. While they offer an easy-to-understand introduction to thermoanalytical principles, along with coverage of a wide range of techniques currently used in a wide variety of industries (see history [33,34,35,36,37,38]), they do not clearly distinguish between the heat-flux and power-compensation DSC principles. They do not go deeply into the description of heat flows and the related calorimetric equations, so a discussion of competing theories is unfortunately missing. In contrast to previous modernized approaches [190,191,192,193,194,195], both books [196, 197] are primarily useful as practical tools for operating instruments and processing measured outputs in various applications, thus complementing instrument manuals well. Unfortunately, they do not allow the reader to go deeper into the basics of thermal analysis, which today's world should take for granted. Furthermore, the book [197] offers a broad overview of thermodynamics but presents a traditional textbook thermostatic view, suitable for the analysis of steady-state measurements and not adapted to the dynamic nature of thermal analysis, and thus lags behind the books [194, 195]. This is not a boast but, unfortunately, a fact of the contemporary literature.

Discussions and perspectives—where to look for better interpretation

The term thermal analysis should be re-evaluated and understood as the analysis of heat/temperature settings by determining heat fluxes relative to the environment, not just as the standard examination of the reaction properties of the sample itself. The current state of thermal analysis focuses primarily on detecting and analysing outputs, such as measured physical quantities, simplifying their interpretation and prescribing specified (and thus controlled) measurement conditions. Interestingly, analyses of thermal effects in the parallel domain of structures translate much more reliably and efficiently into studies of the thermal properties of real materials, such as buildings [198, 199] or even textiles [200, 201], where the broader perception and sensation of heat and cold often need to be determined, see Fig. 2. Here, the temperature situation is conceived differently than in the dynamic area of thermal analysis, where it expresses the thermal change potential Cp·d(ΔT)/dt [74,75,76], with Cp the heat capacity and ΔT the ongoing thermal change. In the analogous approach, however, the thermal inertia of materials has a static character [197,198,199,200,201], i.e. √(Cp·λ·ρ), where Cp is now taken per unit mass, λ is the thermal conductivity and ρ is the density; this quantity, also known as thermal effusivity, is understood as a measure of the receptiveness of a material to temperature differences. It is often referred to as a kind of volume-specific heat capacity, describing the ability of a substance to accumulate internal energy in depth, or the slowness with which the temperature of a body approaches that of its surroundings. Surprisingly, even the above suggests a number of application ideas not yet used in the theory of thermal analysis.
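The static inertia quantity √(Cp·λ·ρ) lends itself to a quick numerical illustration. A standard heat-transfer result for two semi-infinite bodies at uniform initial temperatures is that their interface settles at the effusivity-weighted mean temperature, which is why a metal feels colder to the touch than wood at the same temperature. The Python sketch below assumes this idealized contact model; the property values are rounded room-temperature approximations, and the skin effusivity is a hypothetical order-of-magnitude figure.

```python
import math


def effusivity(conductivity: float, density: float, specific_heat: float) -> float:
    """Thermal effusivity sqrt(lambda * rho * c_p) in W s^0.5 m^-2 K^-1."""
    return math.sqrt(conductivity * density * specific_heat)


def contact_temperature(t1: float, e1: float, t2: float, e2: float) -> float:
    """Interface temperature of two semi-infinite bodies brought into contact."""
    return (e1 * t1 + e2 * t2) / (e1 + e2)


# Rounded property values: (lambda in W/(m K), rho in kg/m^3, c_p in J/(kg K)).
MATERIALS = {
    "copper": (400.0, 8960.0, 385.0),
    "water": (0.6, 1000.0, 4186.0),
    "wood (approx.)": (0.15, 600.0, 1700.0),
}

SKIN_E = 1000.0  # hypothetical effusivity of skin, order of magnitude only
for name, props in MATERIALS.items():
    e = effusivity(*props)
    # Skin at 33 degC touching a body initially at 20 degC.
    tc = contact_temperature(33.0, SKIN_E, 20.0, e)
    print(f"{name:15s} e = {e:8.0f}  contact T = {tc:5.1f} degC")
```

The body with the larger effusivity pulls the interface temperature towards its own value, which is the static counterpart of the dynamic inertia term Cp·d(ΔT)/dt.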

A thermoanalytical sample should be viewed with the same insight and logic as the conditions of well-being in a temperature-controlled room (acting as a measuring head). We have to deal with heat transfer and the inertia of the materials involved, not only with the internal state of the heated body itself, be it a person seeking comfort or an examined sample.

The thermal inertia of an existing heating system is thus considered a critical factor affecting the operation of the entire system [202,203,204]. However, from the point of view of resource flexibility, the thermal inertia of the system effectively increases operational flexibility, reduces energy consumption and improves the ability to balance energy supply and demand. From this point of view, the importance and analysis of thermal inertia in thermoanalytical measurements seem to be underestimated and unjustifiably overlooked [74,75,76], and clearly need more attention than they receive in non-isothermal kinetics alone [53, 57, 58, 194, 195].
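The effect of thermal inertia on a measured signal can be illustrated with the simplest Tian-type balance for a heat-flux cell, q = K·ΔT + Cp·d(ΔT)/dt. The Python/NumPy sketch below generates a synthetic ΔT from an assumed Gaussian heat effect and shows that reading the heat flow as K·ΔT alone delays and flattens the peak, whereas adding back the inertia term Cp·d(ΔT)/dt recovers it. The single time constant, the values of K and Cp and the synthetic peak are hypothetical; real instruments generally need more than one time constant and calibrated parameters.

```python
import numpy as np

# Hypothetical single-time-constant (Tian-type) cell model:
#   q(t) = K * dT(t) + CP * d(dT)/dt
# q  : heat-production rate of the sample, W
# dT : measured temperature difference sample - reference, K
K = 0.01   # W/K, hypothetical heat-transfer coefficient
CP = 0.5   # J/K, hypothetical heat capacity of sample + holder (tau = CP/K = 50 s)

t = np.linspace(0.0, 600.0, 6001)                   # time grid, s (step 0.1 s)
dt = t[1] - t[0]
q_true = 1e-3 * np.exp(-((t - 200.0) / 20.0) ** 2)  # synthetic Gaussian heat effect, W

# Forward model: integrate CP * d(dT)/dt = q - K * dT with explicit Euler steps.
delta_t = np.zeros_like(t)
for i in range(len(t) - 1):
    delta_t[i + 1] = delta_t[i] + dt * (q_true[i] - K * delta_t[i]) / CP

# Naive reading ignores inertia; the corrected one adds back CP * d(dT)/dt.
q_naive = K * delta_t
q_corrected = K * delta_t + CP * np.gradient(delta_t, t)

print("true peak at      ", t[np.argmax(q_true)], "s")
print("naive peak at     ", t[np.argmax(q_naive)], "s")
print("corrected peak at ", t[np.argmax(q_corrected)], "s")
```

Because the derivative amplifies noise, a practical desmearing of real curves would also require smoothing; the noise-free synthetic signal here sidesteps that issue deliberately.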

Heat dissipation and thermal management are central challenges in various areas of science and technology and are critical issues for most nano-electronic devices, e.g. heat production in integrated circuits, semiconductor lasers or thermophotovoltaic generation. Advances in thermal characterization and so-called phonon engineering have increased the understanding of heat transport and demonstrated efficient ways to control heat propagation in nanomaterials. In confined spaces, the diffusive regime of standard heat transfer is replaced by a free-molecule (ballistic) regime that also shows tunnelling, so that classical laws become invalid and new approaches are anticipated [204,205,206,207,208]; future thermal analysis applications will have to take this into account.
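Whether heat conduction is diffusive or free-molecule (ballistic) is usually judged by the Knudsen number Kn = Λ/L, the ratio of the carrier mean free path Λ to the characteristic size L. The Python sketch below uses the gray kinetic-theory estimate Λ = 3k/(C·v) (k thermal conductivity, C volumetric heat capacity, v sound speed) and the conventional, approximate regime boundaries Kn < 0.1 and Kn > 10. The silicon values are rounded, and the gray model is known to underestimate the effective phonon mean free path, so the output only indicates orders of magnitude.

```python
def phonon_mfp(conductivity: float, vol_heat_capacity: float, sound_speed: float) -> float:
    """Gray kinetic-theory estimate of the phonon mean free path, 3k / (C v), in m."""
    return 3.0 * conductivity / (vol_heat_capacity * sound_speed)


def transport_regime(mfp: float, length: float) -> str:
    """Classify heat conduction by the Knudsen number Kn = mfp / length.

    The thresholds 0.1 and 10 are conventional rough boundaries, not sharp limits.
    """
    kn = mfp / length
    if kn < 0.1:
        return "diffusive (Fourier law applicable)"
    if kn > 10.0:
        return "ballistic"
    return "transitional"


# Rounded room-temperature values for silicon: k (W/m K), C (J/m^3 K), v (m/s).
MFP_SI = phonon_mfp(150.0, 1.66e6, 6400.0)   # roughly 4e-8 m
for length in (1e-3, 1e-6, 1e-7, 1e-9):
    print(f"L = {length:.0e} m: Kn = {MFP_SI / length:.2e}, {transport_regime(MFP_SI, length)}")
```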

As shown above, thermodynamics has expanded unprecedentedly from macroscopic descriptions [15, 20, 125, 127, 174] to microscopic ones [169,170,171] (nanomaterials and down to the quantum world). Measurement techniques have reached an unprecedentedly sophisticated level thanks to computerization and the sensitivity of detectors. Unfortunately, thermoanalytical thermodynamics still falters in its approach towards an off-equilibrium description of measurements under heating. I hope that followers of our ideas [82, 130] will be found who will confirm the validity of our theory of first-derivative-based thermodynamics, which so far falls outside the scope of standard thermodynamics, see paragraph 9.

In general, the methods and instruments of thermal analysis have become an integral part of all laboratories and are especially necessary wherever the behaviour of the solid phase is studied. During more than half a century of intensive development, TA devices have reached such a high level that, unfortunately, users can rarely fully understand how their own instruments operate. We can recall the old days of home-made instruments, when both the construction of devices [61] and their subsequent experimental use were fully in the hands of the user, who thus saw all the strengths and weaknesses of his design. If he distrusted a result, he could intervene in the construction and introduce the desired improvement. Today's instruments, however, have become almost a kind of “black box”, which we are unable to influence and for which, even for the smallest changes, we are forced to call in a service technician from the manufacturer. This is actually a global trend in which design details are beyond the user's reach; cars are a good example, where the former possibility of self-repair has been reduced to merely opening and closing the engine cover. Similarly, we encounter computational algorithms that are sometimes so clever that they can inadvertently "improve" the result the user receives as printed data and other processed outputs. This becomes a problem especially for graduates, who then merely collect data, trying to obtain as many parallel measurements as possible without trying to understand their purpose. In this respect, the future is difficult to predict, because the ongoing automation and robotization of devices, together with the influence of computers, will make the situation even more sophisticated.

We can even envisage that the classic technique of constant-rate heating will be replaced by fully computerized analysis of arbitrary heating modes, especially exponential (spontaneous) heating and cooling. Likewise, calculation programs will become more and more complex, able to take into account even those phenomena that we neglect today, such as heat transfer. This will make it possible to replace existing simplifying practices, such as using the temperature of the inert reference as the reaction temperature of the sample, or the simplified treatment of thermal inertia.

In this regard, the field of thermoanalytical kinetics, which is experiencing a boom across several application sectors, needs to be re-examined [51,52,53,54,55,56,57,58, 86,87,88,89,90, 210]. The most widespread approach is the traditional physical-geometrical description of the processes taking place at the reaction interface [211], although this may not fully correspond to a reality influenced by heat flows [212]. Even in this well-researched area, however, there is room for further development of descriptive models. It is clear that in the near future computer-literate experts will become interested in the thermal processes that actually initiate the reaction itself; this is nothing revolutionary, because it was already considered in the past [93, 213, 214]. In particular, it is necessary to clarify the extent of thermal inertia and its influence on kinetic evaluation; for isoconversional methods its influence can be mutually compensated, even eliminated [53, 215, 216]. Unconventional descriptions of the kinetics of solid-state processes will certainly expand [94,95,96], including the non-equilibrium region of glass crystallization [96,97,98,99,100,101]. We can look forward to novel procedures in the reaction kinetics of solids [217,218,219,220,221], including the identification of the limits of non-isothermal experiments [222,223,224]. Analyses of constrained states and their relaxation phenomena [141, 143, 225,226,227,228] will become more sophisticated, and the still little-explored reaction states bordering on quantum phenomena (diffusion [169,170,171]) will certainly undergo sensitive refinements.
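The isoconversional idea referred to above can be illustrated with the Friedman differential method, which rests on ln(dα/dt) = ln[A·f(α)] − E/(RT) evaluated at a fixed conversion α across several heating rates. The Python/NumPy sketch below generates synthetic, noise-free data for an assumed first-order reaction with hypothetical E and A and then recovers E at several conversions. It deliberately ignores thermal inertia and temperature gradients, i.e. exactly the effects discussed in the text, so it shows the baseline method rather than a corrected one.

```python
import numpy as np

R = 8.314  # J/(mol K)

# Hypothetical "true" kinetics: first-order reaction, f(alpha) = 1 - alpha.
E_TRUE = 150e3   # J/mol
A_TRUE = 1e12    # 1/s


def simulate(beta_k_per_min: float, temps: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return alpha(T) and d(alpha)/dt for linear heating at rate beta."""
    beta = beta_k_per_min / 60.0                     # K/s
    k = A_TRUE * np.exp(-E_TRUE / (R * temps))
    # Cumulative trapezoidal integral of k dT; for first order,
    # alpha = 1 - exp(-(1/beta) * integral).
    integral = np.concatenate(([0.0], np.cumsum(0.5 * (k[1:] + k[:-1]) * np.diff(temps))))
    alpha = 1.0 - np.exp(-integral / beta)
    time = (temps - temps[0]) / beta                 # s
    rate = np.gradient(alpha, time)                  # numerical d(alpha)/dt, as from data
    return alpha, rate


def friedman(curves, alphas):
    """Friedman estimate of E (J/mol) at each conversion level.

    At fixed alpha, ln(d alpha/dt) = ln[A f(alpha)] - E/(R T), so E follows
    from the slope of ln(rate) against 1/T across different heating rates.
    """
    energies = []
    for a in alphas:
        inv_t = [1.0 / np.interp(a, alpha, temps) for temps, alpha, _ in curves]
        ln_rate = [np.log(np.interp(a, alpha, rate)) for _, alpha, rate in curves]
        slope, _ = np.polyfit(inv_t, ln_rate, 1)
        energies.append(-slope * R)
    return np.array(energies)


temps = np.linspace(400.0, 700.0, 30001)
curves = [(temps, *simulate(beta, temps)) for beta in (2.0, 5.0, 10.0, 20.0)]
levels = [0.2, 0.4, 0.6, 0.8]
for a, e in zip(levels, friedman(curves, levels)):
    print(f"alpha = {a:.1f}: E = {e / 1e3:.1f} kJ/mol")
```

With real DTA/DSC data, the temperature used for 1/T would be subject to exactly the inertia-related lag discussed above, which is one reason why isoconversional results need careful interpretation.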

It can be argued that not enough attention has been paid here to the other methods of thermoanalytical instrumentation, especially thermogravimetry, one of the basic methods of identification. As with some of the temperature studies discussed above, there is an additional problem of describing the supply and removal of gaseous reactants, which can, and usually do, become the main impediment to reaction progress, in addition to the dependence on changes in partial pressures. This phenomenon has long been known from the works of Gray [93], who made the entire reaction course dependent solely on mass transport. Individual problems are, moreover, well explained in the works of Mianowski [214, 229], Koga [92, 219, 230] and in our own contribution initiated by Czarnecki [195, 213, 231]. However, the treatment of reactions interacting with the surrounding atmosphere needs to be supplemented and has not yet been sufficiently explained even in the recent ICTAC recommendation [232], although it is also important for the study of non-stoichiometry [194, 195]. Therefore, many justifications and improvements are needed [231, 232], preferably building on the previously proposed equilibrium background of the process [83, 84], see paragraph 7.
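How the surrounding atmosphere enters the kinetics can be sketched with a commonly used modification of the rate equation for a reversible decomposition, dα/dt = k(T)·f(α)·(1 − p/p_eq(T)), in which the last factor expresses the distance from the equilibrium background. The Python sketch below takes p_eq(T) from a van 't Hoff-type expression with hypothetical ΔH and ΔS (and hypothetical Arrhenius parameters) and shows how the lowest temperature at which the forward reaction can proceed shifts with the partial pressure p of the evolved gas. It is an illustration under these assumptions, not the specific treatment of [83, 84] or of the ICTAC recommendation [232].

```python
import math

R = 8.314  # J/(mol K)

# Hypothetical parameters for a reversible decomposition: solid A <-> solid B + gas.
DH = 170e3      # J/mol, reaction enthalpy (assumed)
DS = 150.0      # J/(mol K), reaction entropy (assumed, standard pressure 1 bar)
E = 200e3       # J/mol, activation energy of the forward step (assumed)
A = 1e8         # 1/s, pre-exponential factor (assumed)


def p_equilibrium(temp: float) -> float:
    """Equilibrium gas pressure in bar from ln p_eq = DS/R - DH/(R T)."""
    return math.exp(DS / R - DH / (R * temp))


def rate(alpha: float, temp: float, p_gas: float) -> float:
    """d(alpha)/dt for first-order f(alpha) with the factor (1 - p/p_eq).

    Negative values would correspond to the reverse reaction; in this sketch
    they are clipped to zero, i.e. no forward progress below equilibrium.
    """
    driving = 1.0 - p_gas / p_equilibrium(temp)
    return max(A * math.exp(-E / (R * temp)) * (1.0 - alpha) * driving, 0.0)


for p in (0.01, 0.1, 1.0):
    t_onset = DH / (DS - R * math.log(p))   # temperature at which p_eq(T) = p
    print(f"p = {p:5.2f} bar: forward reaction possible above {t_onset:6.1f} K")
```

Lowering the partial pressure of the evolved gas shifts the onset to lower temperatures, which is one way the dependence of thermogravimetric curves on the atmosphere shows up.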

Obviously, it is not possible to capture all the nuances of the individual TA methods; it should be emphasized that temperature measurement is the essence that blends into all other methods of measurement [195]. In conclusion, other and perhaps unexpected applications will certainly appear in the field of thermal materials science [235,236,237,238], and thus the concept of the future trend of thermal analysis [190,191,192,193,194,195] will be expanded and upgraded, for which I wish all users good luck. I believe that the new management of ICTAC will also take care of this, as documented by the successful program of the ESTAC’13 conference, see Fig. 3, and that we will not lose the necessary sense of humour [239] while striving for the best result.

References

Pyne S. Fire in the mind: changing understandings of fire in Western civilization. Phil Trans R Soc B. 2016;371:20150166.

Lunn AC. The measurement of heat and the scope of Carnot’s principle. Phys Rev. 1919;14:1.

Fox R. The caloric theory of gases: from Lavoisier to Regnault. Oxford: Clarendon Press; 1971.

Brush SG. The kind of motion we call heat, vols. I and II. Amsterdam: North Holland; 1976.

Winder B. Positive aspects of fire: fire in ritual and religion. Irish J Psychol. 2012;30:5–19.

Hume D. The art of fire. Texas: Cornerstone; 2017.

Mackenzie RC. History of thermal analysis. Special issue of Thermochim Acta. 1984;73:247.

Mackenzie RC, Proks I. Comenius and Black as progenitors of thermal analysis. Thermochim Acta. 1985;92:3–12.

Šesták J, Mackenzie RC. The fire/heat concept and its journey from prehistoric time into the third millennium. J Therm Anal Calor. 2001;64:129–47.

Izatt RM, Brown PR, Oscarson JI. The history of the calorimetry conferences 1946–1995. J Chem Thermodyn. 1995;27:449.

Cardillo P. A history of thermochemistry through the tribulations of its development. J Therm Anal Calor. 2002;72:7.

Robens E, Amarasiri S, Jayaweera A. Some aspects on the history of thermal analysis. Ann Univ Mariae Curie-Sklodowska, Sect AA. 2012;67:1–29.

Šestak J, Mareš JJ. From caloric to statmograph and polarography. J Therm Anal Calor. 2007;88:763–71.

Šestak J, Hubik P, Mareš JJ. Historical roots and development of thermal analysis and calorimetry. In: Šestak J, Mareš JJ, Hubik P, editors. Glassy, amorphous and nano-crystalline materials. Berlin: Springer; 2011. p. 347–70.

Šestak J. Thermal science and analysis: history, terminology, development and the role of personalities. J Therm Anal Calor. 2013;113:1049–54.

Šulcová P, Šesták J, Menyhárd A, Liptay G. Some historical aspects of thermal analysis on the mid-European territory. J Therm Anal Calorim. 2015;120:239–54.

Proks I. Evaluation of the knowledge of phase equilibria. In: Chvoj Z, Šesták J, Triska A, editors. Kinetic phase diagrams; non-equilibrium phase transitions. Amsterdam: Elsevier; 1991. p. 1–50.

Mareš JJ, Hubik P, Šestak J, Špička V, Stávek J. Phenomenological approach to the caloric theory of heat. Thermochim Acta. 2008;474:16–24.

Mareš JJ, Šestak J, Hubik P, Proks I. Contribution by Lazare and Sadi Carnot to the caloric theory of heat and its inspirative role in alternative thermodynamics. J Therm Anal Calor. 2009;97:679–83.

Bejan A. Evolution in thermodynamics. Appl Phys Rev. 2017;4:011305.

Saslow WM. A history of thermodynamics: the missing manual. Entropy. 2020;22:77–86.

Bejan A, Tsatsaronis G. Purpose of thermodynamics. Energies. 2021;14:408–33.

Mitrovic J. Some ideas of James Watt in contemporary energy conversion thermodynamics. J Modern Phys. 2022;13:385–409.

Tammann G. Über die Anwendung der thermischen Analyse in abnormen Fällen. Z Anorg Chem. 1905;45:24–30.

Newton I. Scala graduum caloris. Calorum descriptiones & signa. Phil Trans R Soc. 1701;22:824–9.

Kallauner C, Matějka J. Beitrag zu der rationellen Analyse. Sprechsaal. 1914;47:423.

Norton FH. Critical study of the differential thermal methods for the identification of the clay minerals. J Amer Ceram Soc. 1939;22:54–61.

Speil S, Berkelhamer LH, Pask JA, Davies B. Differential thermal analysis, theory and its application to clays and other aluminous minerals. US Bur Mines Tech Paper. 1945; pp. 664–745.

Vold MJ. Differential thermal analysis. Anal Chem. 1949;21:683–8.

Boersma SL. A theory of DTA and new methods of measurement and interpretation. J Amer Ceram Soc. 1955;38:281–4.

Murphy CB. Differential thermal analysis. Anal Chem. 1958;30:867–72; 1960;32:168–71; 1962;34:298R–301R.

Murphy CB. Thermal analysis. Anal Chem. 1968;40:380R, and five further reviews up to 1980;52:106R–112R.

Garn PD. Thermal analysis—a critique. Anal Chem. 1961;33:1247–55.

Wendlandt WW. Thermal analysis. Anal Chem. 1982;54:97R-105R.

Berg LG, Nikolaev AV, Rode EY. Thermography. Moscow: Izd. AN SSSR; 1944.

Mackenzie RC, editor. The differential thermal investigation of clays. London: Mineralogical Society; 1957.

Smothers WJ, Chiang Y. Differential thermal analysis: theory and practice. New York: Chemical Publishing; 1958.

Piloyan GO. Introduction in theory of thermal analysis. Moskva: Izd. Nauka; 1964.

Garn PD. Thermoanalytical methods of investigation. New York: Academic; 1964.

Wendlandt WW. Thermal methods of analysis. New York: Wiley; 1964.

Schultze D. Differentialthermoanalyse. Berlin: VEB; 1969.

Mackenzie RC, editor. Differential thermal analysis, vols. I and II. London: Academic; 1970 and 1972.

Liptay G. Atlas of thermoanalytical curves, vols. I–IV. Budapest: Akadémiai Kiadó; London: Heyden; 1971–1977.

Kozmidis-Petrovic A, Šestak J. Forty years of the Hrubý glass-forming coefficient via DTA temperatures in relation to the glass stability and vitrification ability. J Therm Anal Calor. 2012;110:997–1004.

Holman SW. Calorimetry: methods of cooling correction. Proc Am Acad Arts Sci. 1895;31:245–54.

Tian A. Recherches sur les thermostats: contribution à l'étude du réglage; thermostats à enceintes multiples. J Chim Phys. 1923;132–66.

Tian A. Recherches sur la calorimétrie. Généralisation de la méthode de compensation électrique: microcalorimétrie. J Chim Phys. 1933;30:665–708.

Boerio-Goates J, Callanan JE. Differential thermal methods. In: Rossiter BW, Baetzold RC, editors. Determination of thermodynamic properties. New York: Wiley; 1992. p. 621–718.

Haines PJ, Reading M, Wilburn FW. Differential thermal analysis and differential scanning calorimetry. In: Brown ME, Gallagher PK, editors. Handbook of thermal analysis and calorimetry, vol. 1. Amsterdam: Elsevier; 2008. p. 279–361.

Borchardt HJ, Daniels F. The application of DTA to the study of reaction kinetics. J Amer Chem Soc. 1957;79:41–6.

Šesták J, Holba P. Heat inertia and temperature gradient in the treatment of DTA peaks. J Therm Anal Calorim. 2013;113:1633–43.

Kirsh Y. On the kinetic analysis of DTA curves. Thermochim Acta. 1988;135:97–101.

Vyazovkin S. How much is the accuracy of activation energy affected by ignoring thermal inertia? Int J Chem Kin. 2020;52:23–8.

Faktor MM, Hanks R. Quantitative application of dynamic differential calorimetry. Part 1.—Theoretical and experimental evaluation. Trans Faraday Soc. 1967;63:1122–9.

Šestak J, Holba P, Lombardi G. Quantitative evaluation of thermal effects: theory and practice. Ann Chim. 1977;67:73–87.

Holba P, Nevřiva M, Šesták J. Analysis of DTA curve and related calculation of kinetic data using computer technique. Thermochim Acta. 1978;23:223–31.

Holba P, Šesták J. Heat inertia and its role in thermal analysis. J Therm Anal Calorim. 2015;121:303–7.

Šesták J. Ignoring heat inertia impairs accuracy of determination of activation energy in thermal analysis. Int J Chem Kinet. 2019;51:74–80.

O’Neill MJ. Differential scanning calorimeter for quantitative DTA. Anal Chem. 1964;36:1238.

Watson ES, O’Neill MJ, Justin J, Brenner N. A DSC for quantitative differential thermal analysis. Anal Chem. 1964;36:1233–9.

Wendlandt WW. The development of thermal analysis instrumentation 1955–1985. Thermochim Acta. 1986;100:1–22.

Sabbah R, Xu-wu A, Chickos JS, Roux MV, Torres LA. Reference materials for calorimetry and differential thermal analysis. Thermochim Acta. 1999;331:93–204.

Kostyrko M, Skoczylas M, Klee A. Certified reference materials for thermal analysis. J Therm Anal. 1988;33:351–7.

Yasser A, Essam A, Ibrahim M, Mostafa M. Measurement uncertainty in the DTA temperature calibration. Int J Pure Appl Phys. 2010;6:429–37.

Kempen ATW, Sommer F, Mittemeijer EJ. Calibration and desmearing of a DTA measurement signal upon heating and cooling. Thermochim Acta. 2002;383:21–30.

Kossoy A. A short guide to calibration of DTA instruments. CISP Newsletter, St. Petersburg; 2022. DOI: https://doi.org/10.13140/RG.2.2.12029.64480

Wunderlich B, Bopp RC. A study of transition temperatures standards for DTA. J Therm Anal. 1974;6:335–43.

Della Gatta G, Richardson MJ, Sarge SM, Stolen S. Standards, calibration and guidelines in microcalorimetry. Pure Appl Chem. 2006;78:1455–76.

Benin A, Kossoy A, Belokhvostov V. Data deconvolution in study of chemical reaction kinetics by DSC. J Phys Chem. 1987;61:1121–205.

Speyer RF. Deconvolution of superimposed DTA/DSC peaks using the simplex algorithm. J Mater Res. 1993;8:675–9.

Sbirrazzuoli N, Brunel LD, Elégant L. Neural network, signal filtering and deconvolution in thermal analysis. J Therm Anal. 1997;49:1553–64.

Spink CH. The deconvolution of differential scanning calorimetry unfolding transitions. Methods. 2015;76:78–86.

Ziegeler NJ, Nolte PW, Schweizer S. Optimization-based network identification for thermal transient measurements. Energies. 2021;14:7648.

Šesták J. Are nonisothermal kinetics fearing historical Newton’s cooling law, or are just afraid of inbuilt complications due to undesirable thermal inertia? J Therm Anal Calorim. 2018;134:1385–93.

Kočí V, Šesták J, Černý R. Thermal inertia and evaluation of reaction kinetics: a critical review. Measurement. 2022;198:111354.

Šesták J. Dynamic character of thermal analysis where thermal inertia is a real and not negligible effect influencing the evaluation of nonisothermal kinetics: a review. Thermo. 2021;2:220–31.

Calvet E. Recent progress in microcalorimetry. Oxford: Pergamon; 1963.

Svoboda H, Šesták J. A new approach to DTA calibration by predetermined amount of Joule heat. In: Buzás I, editor. Thermal analysis: proceedings of the 4th ICTA. Budapest: Akadémiai Kiadó; 1974. p. 726–31.

Kaisersberger E, Moukhina E. Temperature dependence of the time constants for deconvolution of heat flow curves. Thermochim Acta. 2009;492:101–9.

Barale S, Vincent L, Sauder G, Sbirrazzuoli N. Deconvolution of calorimeter response from electrical signals for extracting kinetic data. Thermochim Acta. 2015;615:30–7.

Holba P, Šesták J, Sedmidubský D. Heat transfer and phase transition at DTA experiments. In: Šesták J, Šimon P, editors. Thermal analysis of micro-, nano- and non-crystalline materials. Berlin: Springer; 2013. p. 99–134.

Šesták J. Thermodynamic basis for the theoretical description and correct interpretation of thermoanalytical experiments. Thermochim Acta. 1979;28:197–227.

Holba P, Šesták J. Kinetics with regards to the equilibrium of processes studied at increasing temperatures. Z Phys Chem NF. 1972;180:1–20.

Holba P. Ehrenfest equations for calorimetry and dilatometry. J Therm Anal Calorim. 2015;120:175–81.

Mianowski A. Consequence of Holba-Šesták equation. J Therm Anal Calorim. 2009;96:507–12.

Koga N, Šesták J, Šimon P. Some fundamental and historical aspects of phenomenological kinetics in the solid state studied by thermal analysis. In: Šesták J, Šimon P, editors. Thermal analysis of micro, nano- and non-crystalline materials: transformation, crystallization, kinetics and thermodynamics. Dordrecht: Springer Netherlands; 2013. p. 1–28.

Freeman ES, Carroll B. The application of thermoanalytical techniques to reaction kinetics. J Phys Chem. 1958;62:394–7.

Doyle CD. Kinetic analysis of thermogravimetric data. J Appl Polym Sci. 1961;5:285–92.

Friedman HL. New method for evaluating kinetic parameters from thermal analysis. J Polym Sci. 1963;3:183–8.

Flynn JH, Wall LA. General treatment of the thermogravimetry of polymers. J Res Nat Bur Stand Part A. 1966;70:487–99.

Vyazovkin S, Koga N, Schick C, editors. Handbook of thermal analysis and calorimetry. Vol. 6: recent advances, techniques and applications. Amsterdam: Elsevier; 2018.

Koga N, Sakai Y, Fukuda M, Hara D, Tanaka N. Universal kinetics of the thermal decomposition of synthetic Smithsonite over different atmospheric conditions. J Phys Chem C. 2021;125:1384–402.

Gray AP. A simple generalized theory for analysis of dynamic thermal measurements. In: Porter RS, Johnson JF, editors. Analytical calorimetry. New York: Plenum Press; 1968. p. 209–18.

Šimon P. Isoconversional methods. J Therm Anal Calorim. 2004;76:123–9.

Mianowski A, Urbanczyk W. Isoconversional methods in thermodynamic principle. J Therm Anal Calorim. 2018;122:6819–28.

Granado L, Sbirrazzuoli N. Isoconversional computations for nonisothermal kinetic predictions. Thermochim Acta. 2021;697:178859.

Kissinger HE. Reaction kinetics in differential thermal analysis. Anal Chem. 1957;29:1702–6.

Roura P, Farjas J. Analytical solution for the Kissinger equation. J Mater Res. 2009;24:3095–8.

Holba P, Šesták J. Imperfections of Kissinger evaluation method and crystallization kinetics. Glass Phys Chem. 2014;40:486–549.

Šesták J. Doubts about the popular Kissinger method of kinetic evaluation and its applicability for crystallization of cooling melts requiring equilibrium temperatures. J Therm Anal Calorim. 2020;142:2095–8.

Vyazovkin S. Kissinger method in kinetics of materials: things to beware and be aware of. Molecules. 2020;25:2813.

Galwey AK, Brown ME. Application of the Arrhenius equation to solid-state kinetics: can this be justified? Thermochim Acta. 2002;386:91–8.

Šimon P. Single-step kinetics approximation employing non-Arrhenian temperature function. J Therm Anal Calorim. 2005;79:703–9.

Šimon P, Thomas P, Dubaj T, Cibulková Z. Equivalence of the Arrhenius and non-Arrhenian temperature functions in the temperature range of measurement. J Therm Anal Calorim. 2015;120:231–8.

Koga N. A review of the mutual dependence of Arrhenius parameters evaluated by the thermoanalytical study of solid-state reactions: the kinetic compensation effect. Thermochim Acta. 1994;244:1–20.

Koga N, Šesták J. Kinetic compensation effect as a mathematical consequence of the exponential rate constant. Thermochim Acta. 1991;176:201–7.

Reading M, Elliot D, Hill VL. Modulated differential scanning calorimetry: a new way forward in materials characterization. Trends Polym Sci. 1993;1:248–53.

Gill PS, Sauerbrunn SR, Reading M. Modulated differential scanning calorimetry. J Therm Anal. 1993;40:931–9.

Ozawa T. Temperature modulated differential scanning calorimetry: applicability and limitation. Pure Appl Chem. 1997;69:2315–20.

Gmelin E. Classical temperature-modulated calorimetry: a review. Thermochim Acta. 1997;304:1–26.

Schawe JEK. Principles for the interpretation of temperature-modulated DSC measurements: a thermodynamic approach. Thermochim Acta. 1997;304:111–9.

Simon SL. Temperature-modulated differential scanning calorimetry: theory and application. Thermochim Acta. 2001;374:55–71.

Jiang Z, Imrie CT, Hutchinson JM. An introduction to temperature modulated differential scanning calorimetry: a relatively non-mathematical approach. Thermochim Acta. 2002;387:75–93.

Lerchner JA, Wolf G, Wolf J. Recent developments in integrated circuit calorimetry. J Therm Anal Calorim. 1999;57:241–9.

Adamovsky A, Minakov AA, Schick C. Scanning microcalorimetry at high cooling rate. Thermochim Acta. 2003;403:55–63.

Adamovsky A, Schick C. Ultra-fast isothermal calorimetry using thin film sensors. Thermochim Acta. 2004;154:1–7.

Minakov A, Schick C. Ultrafast thermal processing and nanocalorimetry at heating and cooling rates up to 1 MK/s. Rev Sci Instrum. 2007;78:073902.

Minakov A, Morikawa J, Hashimoto T, Huth H, Schick C. Temperature distribution in a thin-film chip utilized for advanced nano-calorimetry. Meas Sci Technol. 2006;17:199–207.

Minakov A, Morikawa J, Zhuravlev E, Schick C. High-speed dynamics of temperature distribution in ultrafast (up to 10⁸ K/s) chip-nano-calorimeters. J Appl Phys. 2019;125:054501.

Šesták J. Measuring “hotness”, should the sensor’s readings for rapid temperature changes be named “tempericity”? J Therm Anal Calorim. 2016;125:991–9.

Holba P. The Šesták’s proposal of term “tempericity” for non-equilibrium temperature and modified Tykodi’s thermal science classification with regards to the methods of thermal analysis. J Therm Anal Calorim. 2017;127:2553–9.

Šesták J. Do we really know what temperature is: from Newton’s cooling law to an improved understanding of thermal analysis. J Therm Anal Calorim. 2020;142:913–26.

Hilgevoord J. The uncertainty principle for energy and time. Am J Phys. 1996;64:1451.

Chiara MLD. Uncertainties. Sci Eng Ethics. 2010;16:479–87.

Tribus M. Thermostatics and thermodynamics: an introduction to energy, information and states of matter. New York: Van Nostrand; 1961.

Puglisi A, Sarracino A, Vulpiani A. Temperature in and out of equilibrium: a review of concepts, tools and attempts. Phys Rep. 2017;709:1–110.

Muschik W. Why so many “schools” of thermodynamics? Forsch Ingenieurwes. 2007;71:149–61.

Prigogine I. Introduction to the thermodynamics of irreversible processes. Springfield: C.C. Thomas Publications; 1955.

Prigogine I, Mayné F. Microscopic theory of irreversible processes. Proc Nat Acad Sci USA. 1977;74:4152–6.

Šesták J, Černý R. Thermotics as an alternative nonequilibrium thermodynamic approach suitable for real thermoanalytical measurements: a short review. J Non-Equil Thermod. 2022;47:233–40.

Won YY, Ramkrishna D. Revised formulation of Fick’s, Fourier’s and Newton’s laws for spatially varying linear transport. ACS Omega. 2019;4:11215–22.

Doi M. Onsager principle as a tool for approximation. Chin Phys B. 2015;24:020505.

Tykodi RJ. Correspondence: thermodynamics—thermotics as the name of the game. Ind Eng Chem. 1968;60:22.

Smyth HT. Temperature distribution during mineral inversion and its significance in DTA. J Am Ceram Soc. 1951;34:221–4.

Yang CC, Mai YW. Thermodynamics at the nanoscale: a new approach to the investigation of unique physicochemical properties of nanomaterials. Mater Sci Eng R. 2014;79:1–40.

Guisbiers G. Advances in thermodynamic modelling of nanoparticles. J Adv Phys. 2019;4:968–88.

Šesták J. Thermal physics of nanostructured materials: thermodynamic (top-down) and quantum (bottom-up) issues. Mater Today Proc. 2021;37:28–34.

Šesták J. Kinetic phase diagrams as consequence of radical changing temperature or particle size. J Therm Anal Calorim. 2015;120:129–37.

Ünlü H, Horing NJM, editors. Progress in nanoscale and low-dimensional materials and devices: properties, synthesis, characterization, modelling and applications. Berlin: Springer; 2022.

Šesták J. Use of phenomenological kinetics and the enthalpy versus temperature diagram (and its derivative - DTA) for a better understanding of transition processes in glasses. Thermochim Acta. 1996;280:175–90.

Šesták J. Miracle of reinforced states of matter. Glasses: ancient and innovative materials for the third millennium. J Therm Anal Calorim. 2000;61:305–23.

Queiroz CA, Šesták J. Aspects of the non-crystalline state. Phys Chem Glasses-Eur J Glass Sci Technol Part B. 2010;51:165–72.

Langer JS. Theories of glass formation and the glass transition. Rep Prog Phys. 2014;77:042501.

Kruzic JJ. Bulk metallic glasses as structural materials: a review. Adv Eng Mater. 2016;18:1308–31.

Illeková E, Šesták J. Crystallization of metallic micro-, nano-, and non-crystalline alloys. In: Šesták J, Šimon P, editors. Thermal analysis of micro, nano- and non-crystalline materials: transformation, crystallization, kinetics and thermodynamics. Dordrecht: Springer Netherlands; 2013. p. 257–89.

Šesták J, Koga N, Šimon P, Foller B. Amorphous inorganic polysialates: geopolymeric composites and the bioactivity of hydroxyl groups. In: Šesták J, Šimon P, editors. Thermal analysis of micro, nano- and non-crystalline materials: transformation, crystallization, kinetics and thermodynamics. Dordrecht: Springer Netherlands; 2013. p. 441–60.

Drabold DA. Topics in the theory of amorphous materials. Eur Phys J B. 2009;68:1–21.

Davidovits J. Geopolymers and geopolymeric materials. J Therm Anal. 1989;35:429–41.

Šesták J, Kočí V, Černý R, Kovařík T. Thirty years after J. Davidovits introduced geopolymers considered now as hypo-crystalline materials within the mers-framework and the effect of oxygen binding: a review. J Therm Anal Calorim. Submitted 2023.

Suga H, Seki S. Thermodynamic investigation on glassy states. J Non-Cryst Solids. 1974;16:171–94.

Suga H, Seki S. Frozen-in states of orientational and positional disorder in molecular solids. Faraday Discuss. 1980;69:221–40.

Suga H. Prospects of material science: from crystalline to amorphous solids. J Therm Anal Calorim. 2000;60:957.

Hirsh AG. Vitrification in plants as a natural form of cryoprotection. Cryobiology. 1987;24:214–28.

Šesták J, Zámečník J. Can clustering of liquid water and thermal analysis be of assistance for better understanding of biological germplasm glasses exposed to ultra-low temperatures. J Therm Anal Calorim. 2007;88:411–6.

Hill TL. Perspective: nanothermodynamics. Nano Lett. 2001;1:111–2.

Chamberlin RV. The big world of nano-thermodynamics. Entropy. 2015;17:52–73.

Navrotsky A. Calorimetry of nanoparticles, surfaces, interfaces, thin films, and multilayers. J Chem Thermodyn. 2007;39:1–9.

Wunderlich B. Calorimetry of nanophases of macromolecules. Int J Thermophys. 2007;28:958–67.

Wunderlich B. Thermodynamics and thermal properties of nanophases. Thermochim Acta. 2009;492:2–15.

Heath GR, Kots E, Khelashvili G, Scheuring S. Localization atomic force microscopy. Nature. 2021;594:385–90.

Hammiche A, Reading M, Pollock HM, Song M, Hourston DJ. Localized thermal analysis using a miniaturized resistive probe. Rev Sci Instrum. 1996;67:4268–75.

Price DM, Reading M, Hammiche A, Pollock HM. Micro-thermal analysis: scanning thermal microscopy and localized thermal analysis. Int J Pharm. 1999;192:85–96.

Reading M, Price DM, Grandy D, Smith RM, Conroy M, Pollock HM. Microthermal analysis of polymers: current capabilities and future prospects. Macromol Symp. 2001;167:45–55.

Mareš JJ, Šesták J. An attempt at quantum thermal physics. J Therm Anal Calorim. 2005;82:681–6.

Jaeger G. What in the quantum world is macroscopic? Am J Phys. 2014;82:896–991.

von Spakovsky MR, Gemmer J. Some trends in quantum thermodynamics. Entropy. 2014;16:3434–70.

Merali Z. The new quantum thermodynamics: how quantum physics is bending the rules. Nature. 2017;551:20–2.

Conferences on frontiers of quantum and mesoscopic thermodynamics in Prague, biannually from the 1st (2004) to 9th (2022)

Kalva Z, Šesták J. Transdisciplinary aspects of diffusion and magneto-caloric effect. J Therm Anal Calorim. 2004;76:67–74.

Mareš JJ, Stávek J, Šesták J. Quantum aspects of self-organized periodic chemical reaction. J Chem Phys. 2004;121:1499–503.

Mareš JJ, Stávek J, Šesták J, Hubík P. Do periodic chemical reactions reveal Fürth’s quantum diffusion limit? Physica E. 2005;29:145–9.

Stávek J. Diffusion action of chemical waves. Apeiron. 2003;3:183–93.

Mareš JJ, Hubík P, Šesták J, Špička V, Krištofik J, Stávek J. Relativistic transformation of temperature and Mosengeil–Ott’s antinomy. Physica E Low-dimens Syst Nanostructures. 2010;42:484–7.

Mareš JJ, Hubík P, Špička V. On relativistic transformation of temperature. Prog Phys. 2017;65:1700018.

Holba P, Šesták J. Czechoslovak footprints in the development of methods of thermometry, calorimetry and thermal analysis. Ceram-Silik. 2012;56:159–67.

Fiala J, Mareš JJ, Šesták J. Reflections on how to evaluate the professional value of scientific papers and their corresponding citations. Scientometrics. 2017;112:697–709.

Šesták J, Fiala J, Gavrichev SK. Evaluation of the professional worth of scientific papers, their citation responding and the publication authority. J Therm Anal Calorim. 2018;131:463–71.

Horrobin DF. Philosophical basis of peer review and the suppression of innovation. J Amer Med Assoc. 1990;263:1438.

Martin B. Suppression of dissent in science. Res Soc Probl Public Policy. 1990;1:105–35.

de Melo-Martín I, Intemann K. Scientific dissent and public policy. EMBO Rep. 2013;14:231–5.

Lee DE, Bond ML, Hanson C. When scientists are attacked: strategies for dissident scientists and whistleblowers. In: DellaSala DA, editor. Conservation science and advocacy for a planet in Peril. Chennai: Elsevier; 2021. p. 27–40.

Mimkes J. Binary alloys as a model for the multicultural society. J Therm Anal. 1995;43:521.

Šesták J. Citation records and some forgotten anniversaries in the field of thermal analysis. J Therm Anal Calorim. 2012;108:511–8.