Abstract

Developing optimal flame retardant polymer compositions that meet all the requirements of a given application is energy- and cost-intensive. To reduce the number of measurements needed, we developed an artificial neural network-based system to predict the flammability of polymers from small-scale test data and structural properties. The system can predict the time to ignition, peak heat release rate, total heat release, and mass residue after burning of reference and flame retarded epoxy resins. Total heat release was predicted most accurately, followed by the peak heat release rate. We ranked the input parameters according to their impact on the output parameters using a sensitivity analysis. This ranking allowed us to relate the input and output parameters to each other on the basis of their physical meaning.

Introduction

Epoxy resins and their composites are widely used in several industrial sectors (e.g. the aerospace and automotive industries) where proper flame retardancy is crucial. Developing novel materials with excellent fire performance is often material- and cost-intensive, as it requires the preparation of many material compositions and destructive tests. The development process would be substantially simplified if large-scale combustion test results could be predicted from the polymer composition and small-scale test results.

Several studies have tried to find correlations between numerical flammability parameters in the past. Johnson [1, 2] suggested that the limiting oxygen index (LOI) of the most common polymers can be reasonably predicted from their specific heat of combustion (SHC) expressed in J g−1:

This expression is valid if the C-to-O and C-to-N ratios are less than 6 and the C-to-H ratio is greater than 1.5.

Van Krevelen [3] established a relationship between the LOI and pyrolysis char residue (CR) expressed in mass% at 850 °C for the combustion of halogen-free polymers:

Since the heat of combustion and the char residue can be estimated from the elemental composition of the polymer, Van Krevelen and Hoftyzer [2] established a direct link between the LOI and elemental composition:

where H/C, F/C, and Cl/C are the ratios of corresponding atoms in the polymer and CP is the composition parameter. For polymers with CP values greater than 1, the LOI is approximately 0.175 (17.5 volume%), while for CP values smaller than 1, the LOI can be calculated with the following equation:

Lyon et al. [4] found the following correlation between the LOI of polymers and their heat release capacity (the maximum value of the specific heat release rate measured during the pyrolysis combustion flow calorimetry (PCFC) divided by the heating rate):

where LOI is the limiting oxygen index expressed in volume% and HRC is the heat release capacity expressed in kJ (gK)−1.
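As an illustration of the heat release capacity definition given above, the following Python sketch divides the maximum specific heat release rate of a PCFC curve by the heating rate. The curve values, the 1 K s−1 heating rate, and the function name `heat_release_capacity` are hypothetical. The result is in J g−1 K−1, so it would have to be divided by 1000 before use in a correlation that expects kJ g−1 K−1.

```python
def heat_release_capacity(specific_hrr_w_per_g, heating_rate_k_per_s):
    """Heat release capacity in J g^-1 K^-1 (sketch of the definition in the text).

    specific_hrr_w_per_g: specific heat release rate samples, W g^-1 (= J g^-1 s^-1)
    heating_rate_k_per_s: linear heating rate of the PCFC run, K s^-1
    """
    if heating_rate_k_per_s <= 0:
        raise ValueError("heating rate must be positive")
    peak = max(specific_hrr_w_per_g)       # maximum specific heat release rate
    return peak / heating_rate_k_per_s     # (J g^-1 s^-1) / (K s^-1) = J g^-1 K^-1


# Hypothetical PCFC curve recorded at 1 K/s (60 K/min).
curve = [0.0, 35.0, 180.0, 420.0, 510.0, 260.0, 40.0]
hrc = heat_release_capacity(curve, heating_rate_k_per_s=1.0)
print(f"HRC = {hrc:.0f} J/(g K)")
```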

Early studies on predicting flammability parameters focused on determining the heat of combustion of individual atoms without considering the interactions between them. These attempts were followed by the group contribution method, where the polymer molecules are divided into characteristic structural units or groups, and their contribution to a given flammability parameter is determined. If these values are weighted according to the mass fraction of each group in the molecule and added together, the flammability parameter of the molecule is obtained. The root of these approaches is Van Krevelen’s method [3] for the estimation of pyrolysis char residue from the group contributions to the char forming tendency (CFT), which he defined as the amount of char per structural unit divided by 12 (relative atomic mass of carbon). This value was found to be additive, and so the total pyrolysis char residue could be calculated with the following equation:

where CFT is the char-forming tendency of the group and M, expressed in g mol−1, is the molecular mass of the structural unit. He also found that aliphatic groups attached to aromatic groups have a negative char-forming tendency.
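Because CFT is defined as the char per structural unit divided by 12, the additive rule can be written out explicitly: the char formed per mole of structural unit is 12·ΣCFT g, so the char residue in mass% is 100 · 12 · ΣCFT / M = 1200 · ΣCFT / M. The sketch below implements this relation as derived from that definition; the group names, their contributions, and the molar mass are hypothetical placeholders, not tabulated values.

```python
def char_residue_percent(group_cft, molar_mass_g_per_mol):
    """Estimated pyrolysis char residue (mass%) of one structural unit.

    group_cft: CFT contributions of the groups in the unit (may be negative,
               e.g. for aliphatic groups attached to aromatic rings)
    molar_mass_g_per_mol: molar mass M of the structural unit
    """
    char_g_per_mol = 12.0 * sum(group_cft)  # CFT = char per unit divided by 12
    return 100.0 * char_g_per_mol / molar_mass_g_per_mol


# Hypothetical structural unit: two aromatic groups and one aliphatic group
# attached to an aromatic ring (negative contribution); values are made up.
cft = {"aromatic_a": 1.0, "aromatic_b": 1.5, "aliphatic_on_aromatic": -0.5}
cr = char_residue_percent(cft.values(), molar_mass_g_per_mol=200.0)
print(f"CR = {cr:.1f} mass%")
```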

Subsequently, Walters and Lyon [5] extended this method and determined the molar group contributions to the heat release capacity (HRC) for over 40 different groups, and Walters [6] determined the molar group contributions to the heat of combustion of 37 structural groups. Lyon et al. [7] established a molecular basis for polymer flammability by determining the molar contributions to char yield, the heat of combustion, and heat release capacity.

Sonnier et al. [8] improved and extended the method Van Krevelen and Lyon developed. They correctly predicted the heat release capacity and total heat release measured by pyrolysis combustion flow calorimetry using only 31 chemical groups. They also set up guidelines for selecting groups; nevertheless, they also emphasised that arbitrary decisions were made to split the molecules in many cases.

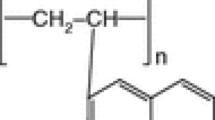

Later on, Sonnier et al. [9] tested thermoset polymers, including polycyanurates, polybenzoxazines, epoxy, and phthalonitrile resins and determined the molar contributions to total heat release, heat release capacity, and char content of 14 new chemical groups. Their results indicate that the main parameters that seem to contribute to the flammability of the polymer are the aromaticity, the presence of oxygen or nitrogen atom(s) in the structure, and the number of bonds between this structure and neighbouring chemical groups. Finally, Sonnier et al. [10] determined the molar contributions of two pendant groups containing phosphorus to flammability: 9,10-dihydro-9-oxa-10-phosphaphenanthrene-10-oxide (DOPO) and PO3, which are common components of flame retardants.

Although the molar group contribution method can predict the flammability parameters of many common polymers well, the arbitrary assignment of chemical groups and, in the case of new molecules or additives, the use of chemical groups whose molar contribution has not yet been determined limits the applicability of the method.

Publicly available data on polymer properties, combined with machine learning algorithms, enable the prediction of new polymer properties, including fire performance, for optimisation purposes [11, 12].

Parandekar et al. [13] developed genetic function algorithms to predict heat release capacity, total heat release, and the amount of char residue. They correlated the chemical structure of the polymer to its flammability using the quantitative structure–property relationships methodology.

Asante-Okyere et al. [14] predicted microscale combustion calorimetry results of polymethyl methacrylate, such as total heat release, heat release capacity, peak heat release rate, and the associated temperature, from the sample mass and heating rate using a feed-forward back-propagation neural network and a generalised regression neural network, both based on supervised learning. Their sensitivity analysis indicated that the heating rate had the greatest effect on the output parameters. Similarly to Asante-Okyere et al., Mensah et al. [15] predicted the microscale combustion calorimetry results of extruded polystyrene from the sample mass and heating rate using the group method of data handling and feed-forward back-propagation neural networks. The group method of data handling provided more accurate predictions.

In this study, we developed an artificial neural network (ANN)-based system to predict the time to ignition, peak heat release rate, total heat release, and mass residue of reference and flame retarded epoxy resins burnt in a mass loss type cone calorimeter, using small-scale thermal and flammability test results and structural properties as inputs.

Materials and methods

Materials

For the validation samples, we selected a trifunctional, glycerol-based epoxy resin (GER; IPOX MR3012, IPOX Chemicals Ltd., Budapest, Hungary; main component: triglycidyl ether of glycerol, viscosity: 0.16–0.2 Pa·s at 25 °C, density: 1.22 g cm−3 at 25 °C, epoxy equivalent: 140–150 g eq−1) and a tetrafunctional, pentaerythritol-based epoxy resin (PER; IPOX MR 3016, IPOX Chemicals Ltd., Budapest, Hungary; main component: tetraglycidyl ether of pentaerythritol, viscosity: 0.9–1.2 Pa·s at 25 °C, density: 1.24 g cm−3 at 25 °C, epoxy equivalent: 156–170 g eq−1). A cycloaliphatic amine (IPOX MH 3122, IPOX Chemicals Ltd., Budapest, Hungary; main component: 3,3’-dimethyl-4,4’-diaminodicyclohexylmethane, viscosity: 80–120 mPa·s at 25 °C, density: 0.944 g cm−3 at 25 °C, amine hydrogen equivalent: 60 g eq−1) was used as the hardener.

As flame retardants, we applied ammonium polyphosphate (APP) (supplier: Nordmann Rassmann (Hamburg, Germany), trade name: NORD-MIN JLS APP, P content: 31–32%, average particle size: 15 µm) and resorcinol bis(diphenyl phosphate) (RDP) (supplier: ICL Industrial Products (Beer Sheva, Israel), trade name: Fyrolflex RDP, P content: 10.7%).

Methods

Sample preparation

During the preparation of the validation specimens, the mass ratio of the epoxy (EP) component to the hardener was 100:40 for both GER and PER. GER- and PER-based EP samples containing 4 mass% P, introduced with either APP or RDP, were prepared. In addition to these samples containing only one flame retardant (FR), mixed formulations containing 2 mass% P from APP and 2 mass% P from RDP were also prepared. The P content of the samples in mass% was related to the total mass of the matrix (epoxy resin + hardener + flame retardant). First, the FRs (APP, RDP, or both) were added to the EP component. Then the hardener was added, and the components were mixed in a crystallising dish at room temperature until the mixture became homogeneous. The specimens were crosslinked in silicone moulds of the appropriate size (100 × 100 × 4 mm). The curing cycle, determined by differential scanning calorimetry (DSC), consisted of two isothermal steps: 1 h at 80 °C followed by 1 h at 100 °C.
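Since the P content is related to the total matrix mass, including the flame retardant itself, the required additive mass is not simply proportional to the target P content. The following sketch, our own illustration rather than the authors' procedure, solves m_i·p_i = t_i·(m_EP + m_H + Σm_j) for each flame retardant i, where t_i is the target P fraction and p_i the P fraction of the additive. The 0.315 P fraction for APP is an assumed midpoint of the 31–32% range; the 0.107 value for RDP comes from the Materials section. The function name `fr_loadings` is hypothetical.

```python
def fr_loadings(targets, p_fraction, resin=100.0, hardener=40.0):
    """Mass of each flame retardant needed for the requested P contents.

    targets: {fr_name: desired P content as a mass fraction of the whole matrix}
    p_fraction: {fr_name: P mass fraction of the flame retardant itself}
    Returns (fr_masses, total_mass) in the same unit as resin and hardener.
    """
    # m_i * p_i = t_i * total and total = resin + hardener + sum(m_i)
    # => total = (resin + hardener) / (1 - sum(t_i / p_i))
    denom = 1.0 - sum(t / p_fraction[name] for name, t in targets.items())
    if denom <= 0:
        raise ValueError("target P content is not reachable with these additives")
    total = (resin + hardener) / denom
    masses = {name: t * total / p_fraction[name] for name, t in targets.items()}
    return masses, total


P_CONTENT = {"APP": 0.315, "RDP": 0.107}  # APP value: assumed midpoint of 31-32%

for recipe in ({"APP": 0.04}, {"RDP": 0.04}, {"APP": 0.02, "RDP": 0.02}):
    masses, total = fr_loadings(recipe, P_CONTENT)
    shown = ", ".join(f"{k}: {v:.1f} g" for k, v in masses.items())
    print(f"{recipe} -> {shown} per 100 g EP + 40 g hardener (total {total:.1f} g)")
```

Solving for the total mass first keeps the mixed APP + RDP case as simple as the single-additive one.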

Characterisation of fire behaviour

Mass loss type cone calorimetry (MLC) tests were performed with an instrument made by FTT Inc. (East Grinstead, UK) according to the ISO 13927 standard. Specimens of 100 × 100 × 4 mm were exposed to a constant heat flux of 50 kW m−2 and ignited. The heat release rate and the mass loss were recorded during burning.
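The output parameters used later (pHRR, THR, and mass residue) can be extracted from the recorded curves. The sketch below, with a hypothetical function name and a hypothetical record for a 48 g specimen, takes pHRR as the maximum of the heat release rate curve, obtains THR by trapezoidal integration of the heat release rate over time, and computes the residue as the final mass relative to the initial mass. Time to ignition is not computed here, since it is often determined by observing sustained flaming rather than from these series.

```python
def summarise_mlc(time_s, hrr_kw_m2, mass_g):
    """Return pHRR (kW m^-2), THR (MJ m^-2) and mass residue (%) of one test."""
    if not (len(time_s) == len(hrr_kw_m2) == len(mass_g)):
        raise ValueError("series must have equal length")
    phrr = max(hrr_kw_m2)
    # Trapezoidal integral of HRR over time: kW m^-2 * s = kJ m^-2.
    thr_kj_m2 = sum(
        0.5 * (hrr_kw_m2[i] + hrr_kw_m2[i + 1]) * (time_s[i + 1] - time_s[i])
        for i in range(len(time_s) - 1)
    )
    residue_pct = 100.0 * mass_g[-1] / mass_g[0]
    return phrr, thr_kj_m2 / 1000.0, residue_pct


# Hypothetical record sampled every 30 s.
t = [0, 30, 60, 90, 120, 150, 180]
hrr = [0, 0, 250, 600, 400, 100, 0]
m = [48.0, 47.9, 44.0, 32.0, 22.0, 18.5, 18.0]
phrr, thr, residue = summarise_mlc(t, hrr, m)
print(f"pHRR = {phrr} kW/m2, THR = {thr:.1f} MJ/m2, residue = {residue:.1f} %")
```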

Artificial neural network

Artificial neural networks (ANNs) can be used to solve complex problems, which fall into two main types: classification (i.e. separation) and function approximation (i.e. regression). Classification often arises, for example, in image processing, where the ANN sorts images into different groups so that their content can be identified with high accuracy. The solution to a regression problem is a predicted function or a specific value [16]. Artificial neural networks are usually applied to problems that are difficult to solve with conventional algorithms, where the available data are incomplete or imprecise, or where the relationship between the studied parameters is nonlinear.

At its core, machine learning consists of a large number of simple algebraic operations. To illustrate the construction and structure of an artificial neural network, we describe the machine learning process using the problem studied here.

At the beginning of the development of neural networks, single-layer perceptron networks were developed. The inputs and the output of a perceptron can only take the values 0 or 1. The output is 1 if the weighted sum of the inputs exceeds a certain threshold, and 0 otherwise. A simple perceptron network is illustrated in Fig. 1.
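The binary decision rule of a perceptron fits in a few lines. In this minimal Python sketch, the weights, threshold, and inputs are arbitrary illustrative values, and the function name is ours; the threshold plays the same role as the bias b of the sigmoid neurons introduced below.

```python
def perceptron(inputs, weights, threshold):
    """Binary perceptron: 1 if the weighted input sum exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


# Hypothetical example with three binary inputs.
weights = [0.6, 0.3, 0.4]
print(perceptron([1, 0, 1], weights, threshold=0.8))  # 0.6 + 0.4 = 1.0 > 0.8
print(perceptron([0, 1, 1], weights, threshold=0.8))  # 0.3 + 0.4 = 0.7 <= 0.8
```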

Let us suppose that we have a perceptron network whose inputs are the structural and flammability properties of reference and flame retarded thermoset polymers: the type of the matrix, the hardener, and the flame retardant, the ratio of carbon, hydrogen, and oxygen atoms, etc. We want the network to find the optimal values of the weights and biases so that it gives a good estimate of the most important flammability properties: peak heat release rate (pHRR), time to ignition (TTI), total heat release (THR), or mass residue.

During the learning process, the network compares its outputs with the expected (measured) outputs and then adjusts the weights and biases based on this comparison. For this adjustment to work, small changes in the weights and biases must cause only small changes in the output, which is why so-called sigmoid neurons are used instead of perceptrons. Like perceptrons, sigmoid neurons have inputs, but their output can be any number between 0 and 1 instead of only the two fixed values of a perceptron. The sigmoid function is defined as:

\[ \sigma(z) = \frac{1}{1 + e^{-z}} \]

where \(z = \sum_{n} w_{n} x_{n} - b\). Thus the output of the sigmoid neuron is:

\[ \frac{1}{1 + \exp\left(-\sum_{n} w_{n} x_{n} + b\right)} \]

where \(x_{n}\) denotes the inputs, \(w_{n}\) the weights, and \(b\) the bias. The sigmoid function is one of the most common activation functions. Choosing the optimal activation function is part of optimising the hyperparameters of the neural network, which is one of the stages of its development.
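The following sketch evaluates a single sigmoid neuron with \(z = \sum_n w_n x_n - b\), using arbitrary illustrative weights, inputs, and bias. It shows the property that motivates sigmoid neurons: nudging one weight slightly changes the output only slightly, instead of flipping it between 0 and 1. The function names are ours.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_neuron(inputs, weights, bias):
    """Output of one sigmoid neuron with z = sum_n w_n x_n - b."""
    z = sum(w * x for w, x in zip(weights, inputs)) - bias
    return sigmoid(z)


# Hypothetical values: the first weight is nudged from 0.60 to 0.61.
out = sigmoid_neuron([1, 0, 1], [0.6, 0.3, 0.4], bias=0.8)
out_nudged = sigmoid_neuron([1, 0, 1], [0.61, 0.3, 0.4], bias=0.8)
print(round(out, 4), round(out_nudged, 4))
```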

The transfer of activity from one layer to the next can be described as follows. Consider the relationship between the input layer and the first neuron of the first hidden layer (Fig. 2). This can be described mathematically as:

\[ a_{0}^{(1)} = \sigma\left( \sum_{n} w_{0,n}\, a_{n}^{(0)} - b_{0} \right) \]

where \(a_{0}^{(1)}\) is the first neuron of the first hidden layer, \(a_{n}^{(0)}\) are the neurons of the input layer, σ is the sigmoid function (Eq. 8), \(w_{0,n}\) are the weights associated with the first neuron of the first hidden layer, and \(b_{0}\) is the bias of this neuron. By analogy, we obtain the total activity of the first hidden layer:

\[ \mathbf{a}^{(1)} = \sigma\left( \mathbf{W} \mathbf{a}^{(0)} - \mathbf{b} \right) \]

The notation is analogous to that used for the first neuron, except that here each input is assigned a weight for every neuron, so that a given row of the weight matrix belongs to a given neuron. This means that each neuron is a function of all the neurons in the previous layer, and its value is a number between 0 and 1.
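In code, the matrix form amounts to applying the single-neuron formula once per row of the weight matrix. The minimal Python sketch below keeps the sign convention \(z = \sum_n w_n x_n - b\) used in the text; the weights, biases, inputs, and the function name `layer_forward` are hypothetical, and plain lists are used instead of a numerical library so that the example stays self-contained.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(a_prev, W, b):
    """a^(l) = sigma(W a^(l-1) - b), where row j of W holds the weights of neuron j."""
    return [
        sigmoid(sum(w_jn * a_n for w_jn, a_n in zip(row, a_prev)) - b_j)
        for row, b_j in zip(W, b)
    ]


a0 = [0.2, 0.9, 0.5]             # hypothetical normalised inputs
W = [[0.5, -1.0, 0.3],           # weights of neuron 0 of the hidden layer
     [1.2, 0.4, -0.7]]           # weights of neuron 1 of the hidden layer
b = [0.1, -0.2]                  # one bias per neuron
a1 = layer_forward(a0, W, b)
print(a1)
```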

Regarding the structure of the neural network, the leftmost layer is called the input layer, and the neurons in this layer are called input neurons. The rightmost layer is the output layer, and its neurons are output neurons. The layers in between are called hidden layers. Figure 3 shows a neural network with 15 input neurons, 2 hidden layers, and one output layer; it illustrates the network we used. The lines connecting the neurons represent the weights, with red representing positive and blue negative weights. The absolute magnitude of the weights is indicated by the transparency of the lines: fainter lines represent smaller values. The design of the input and output layers is usually self-evident once the problem and the desired outcome are identified. However, the number of hidden layers and the number of neurons in them are often determined heuristically. Determining the network structure, i.e. the optimal number of layers and neurons, is also part of the neural network design. Based on how the individual neurons are interconnected, neural networks can be classified into two main groups: feed-forward networks (FNN) and recurrent networks (RNN). In the latter, the graph representing the network contains a loop. We used a feed-forward neural network.
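The structure described above (15 inputs, two hidden layers, one output) can be sketched by chaining fully connected layers. The hidden layer sizes of 8 and 4 neurons and the random initial weights are hypothetical; the authors built their network in TensorFlow, whereas this plain-Python version only illustrates the feed-forward data flow. Because the sigmoid is also used in the output neuron here, the predicted quantity would have to be scaled to the 0–1 range, which is an assumption of this sketch; a linear output neuron is a common alternative for regression.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(layer_sizes, seed=0):
    """Random weights and zero biases for a fully connected feed-forward network."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
             for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((W, b))
    return layers


def forward(x, layers):
    """Propagate one input vector layer by layer; no connection loops back."""
    a = x
    for W, b in layers:
        a = [sigmoid(sum(w * ai for w, ai in zip(row, a)) - bj)
             for row, bj in zip(W, b)]
    return a


net = init_network([15, 8, 4, 1])  # 15 inputs, hidden layers of 8 and 4 (hypothetical), 1 output
y = forward([0.5] * 15, net)       # one hypothetical normalised input vector
print(y)
```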

The dataset consists of two parts: a training dataset and a validation dataset. During the learning process, the training dataset is further divided into two parts: a teaching set and a test set. The aim of the learning process is to find the weights and biases for which the outputs of the network best approximate the expected outputs. To quantify the extent to which this goal has been achieved, we can define a so-called loss function:

where n is the number of training inputs, a is the vector of network outputs, and y(x) is the expected output for the input x. The value of the loss function depends on all the weights and biases of the network. The loss function was chosen iteratively; in this example, it is the mean squared deviation, but in our network development, we used the mean absolute deviation. The aim is to find the weights and biases that make the value of the loss function as small as possible. The algorithm that usually performs this task is called gradient descent. The gradient of the loss function indicates the direction and magnitude of the change in the weights and biases that increases the loss most rapidly, so stepping in the opposite direction reduces the loss function most effectively. The magnitude of each gradient component shows how sensitive the loss function is to a given weight or bias. In multilayer feed-forward networks, these gradients are computed with the error back-propagation algorithm, which propagates the output error backwards through the layers so that the weights and biases can be readjusted [17].
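To make the loss functions and gradient descent concrete, the sketch below computes the mean squared deviation and the mean absolute deviation and trains a single sigmoid neuron by plain gradient descent on the mean squared deviation, with the gradients written out by the chain rule. It is a minimal illustration, not the authors' training code: the data, learning rate, and number of epochs are hypothetical, and a single neuron needs no back-propagation through hidden layers, which TensorFlow would handle automatically for a multilayer network.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mse(pred, target):
    """Mean squared deviation."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def mae(pred, target):
    """Mean absolute deviation."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


def predict(xs, w, b):
    return [sigmoid(sum(wi * xi for wi, xi in zip(w, x)) - b) for x in xs]


def train_neuron(xs, ys, lr=0.5, epochs=500):
    """Gradient descent on the MSE of one sigmoid neuron with z = w.x - b."""
    w, b, n = [0.0] * len(xs[0]), 0.0, len(xs)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            a = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) - b)
            delta = 2.0 * (a - y) * a * (1.0 - a) / n  # dL/dz by the chain rule
            grad_w = [g + delta * xi for g, xi in zip(grad_w, x)]
            grad_b -= delta                            # dz/db = -1
        w = [wi - lr * g for wi, g in zip(w, grad_w)]  # step against the gradient
        b -= lr * grad_b
    return w, b


xs = [[0.0, 0.1], [0.3, 0.4], [0.6, 0.5], [0.9, 1.0]]  # hypothetical normalised inputs
ys = [0.8, 0.6, 0.4, 0.2]                              # hypothetical normalised targets
loss_before = mse(predict(xs, [0.0, 0.0], 0.0), ys)
w, b = train_neuron(xs, ys)
loss_after = mse(predict(xs, w, b), ys)
print(loss_before, loss_after, mae(predict(xs, w, b), ys))
```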

The steps required to create a neural network capable of prediction are illustrated in Fig. 4. The main stages are explained in the following section and subsections.

Development of the neural network

Creating a database

We developed the neural network with TensorFlow, an open-source Python library developed by Google. It is specifically designed for numerical computation, which makes machine learning easier and faster. The database consisted of measured data collected from our previous publications [18, 19]. The complete database contained 39 elements (samples), 15 input parameters, and 4 output parameters. For each predicted property, the first 15 columns form the matrix of input parameters, while the last column contains the output parameter to be predicted. The input parameters were: type of matrix, hardener, and flame retardant; mass% of C, H, O, N, and P atoms; mass% of aliphatic, cycloaliphatic, and aromatic structures; LOI and UL-94 test results; and the heat flux used during the mass loss type cone calorimetry tests. The output parameters were: peak heat release rate (pHRR), time to ignition (TTI), total heat release (THR), and char residue. Table 1 illustrates the initial structure of the dataset for pHRR prediction, with different combinations of epoxy matrix, hardener, and flame retardant.

First, we scanned the database and deleted any rows with missing items, because the training algorithm can only process complete rows of numerical values. For the same reason, we converted the categorical properties (type of matrix, hardener, and flame retardant) into numbers so that the training functions could process them. We encoded the UL-94 classifications manually: since the UL-94 rating correlates with flammability, it was appropriate to assign values that reflect this order. As a result, the HB rating was assigned a value of 1, while the V-0 rating was assigned a value of 4. We split the entire database into two parts: a training dataset and a validation dataset. The validation dataset consisted of the last eight elements of the dataset (No. 31–38). The training dataset was then split into a teaching set and a test set in the ratio of 0.8:0.2. Since the different properties have different magnitudes, the values had to be normalised so that the algorithm would weight all input parameters equally. Normalisation was performed by linearly scaling the input data to values between 0 and 1.
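The preprocessing steps listed above can be sketched as follows. The column names, the 12 synthetic samples, and the intermediate UL-94 codes (V-2 = 2, V-1 = 3) are assumptions; only HB = 1 and V-0 = 4 come from the text. Categorical codes are assigned in order of appearance, and the min-max ranges are taken from the teaching set only so that no information leaks from the test and validation data. This is a design choice of the sketch, and it means that test or validation values may fall slightly outside 0–1.

```python
import random

# HB = 1 and V-0 = 4 follow the text; the V-2 and V-1 codes are assumed.
UL94_CODES = {"HB": 1, "V-2": 2, "V-1": 3, "V-0": 4}


def preprocess(rows, categorical, n_validation=8, test_fraction=0.2, seed=0):
    """Return (teach, test, validation) lists of numeric, scaled sample dicts.

    rows: one dict per sample; the hypothetical key "target" holds the output.
    """
    # 1) Drop samples with any missing value.
    rows = [dict(r) for r in rows if all(v is not None for v in r.values())]

    # 2) Numeric codes: categorical columns by order of appearance, UL-94 as ordinal.
    for col in categorical:
        codes = {}
        for r in rows:
            r[col] = codes.setdefault(r[col], len(codes) + 1)
    for r in rows:
        r["UL94"] = UL94_CODES[r["UL94"]]

    # 3) Last samples -> validation; shuffle the rest and split it 0.8:0.2.
    training, validation = rows[:-n_validation], rows[-n_validation:]
    random.Random(seed).shuffle(training)
    n_test = round(test_fraction * len(training))
    test, teach = training[:n_test], training[n_test:]

    # 4) Min-max scaling of the inputs with ranges from the teaching set.
    inputs = [c for c in rows[0] if c != "target"]
    for c in inputs:
        lo = min(r[c] for r in teach)
        hi = max(r[c] for r in teach)
        span = (hi - lo) or 1.0  # constant column: avoid division by zero
        for r in teach + test + validation:
            r[c] = (r[c] - lo) / span
    return teach, test, validation


# Hypothetical samples; one has a missing value and is dropped.
gen = random.Random(1)
samples = [
    {
        "matrix": gen.choice(["GER", "PER"]),
        "P_mass_pct": gen.choice([0.0, 2.0, 4.0]),
        "UL94": gen.choice(["HB", "V-0"]),
        "target": gen.uniform(200.0, 900.0),
    }
    for _ in range(12)
]
samples[3]["P_mass_pct"] = None
teach, test, val = preprocess(samples, categorical=["matrix"], n_validation=3)
print(len(teach), len(test), len(val))
```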

Optimisation of hyperparameters

In machine learning, the optimisation or tuning of hyperparameters plays an important role. A hyperparameter is a parameter used to control the learning process. Hyperparameters are usually optimised manually, while the algorithm itself determines the values of other parameters. For different datasets, different hyperparameters are suitable, and consequently, for each predicted property (pHRR, THR, TTI, char residue), a new optimisation had to be performed. Different techniques have been developed to choose these parameters since, for complex datasets, it is a tedious and time-consuming task to set the appropriate values. Hyperparameter optimisation is still an intensively researched area.

Table 2 shows the most important hyperparameters and the intervals we examined. The learning rate (or learning factor) essentially determines the rate of convergence, i.e. the size of the steps taken towards the minimum. The activation function is another hyperparameter; an example is the sigmoid function mentioned earlier (Eq. 8). In addition to the sigmoid function, there are other common types, such as the ELU (exponential linear unit). A typical example of an optimiser is the gradient descent algorithm presented above. Nowadays, more advanced versions of this algorithm, such as adaptive moment estimation (Adam), are more commonly used. By specifying the batch size, the training dataset can be divided into smaller units, which speeds up the learning process; this hyperparameter is particularly important for large databases. The number of epochs determines how many times the algorithm runs through the training dataset, in simple terms, how many times it ‘sees’ the elements of the training set before it determines the predicted values.

The biggest challenge in the optimisation process is the vast size of the search space. The hyperparameter space usually has 2–7 dimensions, depending on which hyperparameters the designer considers relevant. An experimental design can be used to reduce the optimisation time, but random trials have been shown to be more efficient because not all hyperparameters are equally important; with experimental designs, too much computational time is sometimes spent on testing insignificant hyperparameters [19]. Consequently, we first identified the most important hyperparameters by varying them one by one and then searched for their optimal values. In each case, the most important hyperparameters were the number of hidden layers and the number of neurons in them, so we first determined the structure of the neural network and then chose the learning rate, the activation function, and the optimisation algorithm.
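The one-at-a-time procedure described above (network structure first, then learning rate, activation function, and optimiser) can be sketched as a simple coordinate-wise search. Here `toy_validation_error` is a hypothetical stand-in for training the network and returning its validation error, and the candidate values are illustrative, not the intervals of Table 2.

```python
import math


def sequential_search(evaluate, space, order, start):
    """Tune one hyperparameter at a time, keeping each best value before moving on."""
    best = dict(start)
    best_err = evaluate(best)
    for name in order:
        for value in space[name]:
            candidate = {**best, name: value}
            err = evaluate(candidate)
            if err < best_err:
                best, best_err = candidate, err
    return best, best_err


def toy_validation_error(hp):
    """Hypothetical stand-in for 'train the network and return the validation MAE'."""
    err = 0.3 * abs(len(hp["hidden"]) - 2) + 0.01 * abs(sum(hp["hidden"]) - 24)
    err += 0.1 * abs(math.log10(hp["learning_rate"]) + 2)
    err += {"sigmoid": 0.05, "elu": 0.0}[hp["activation"]]
    err += {"sgd": 0.04, "adam": 0.0}[hp["optimizer"]]
    return err


space = {
    "hidden": [(8,), (16,), (16, 8), (32, 16)],  # neurons per hidden layer
    "learning_rate": [1e-1, 1e-2, 1e-3],
    "activation": ["sigmoid", "elu"],
    "optimizer": ["sgd", "adam"],
}
start = {"hidden": (8,), "learning_rate": 1e-1, "activation": "sigmoid", "optimizer": "sgd"}
# Structure first, then learning rate, activation function and optimiser.
best, best_err = sequential_search(
    toy_validation_error, space, ["hidden", "learning_rate", "activation", "optimizer"], start
)
print(best, round(best_err, 3))
```

Fixing the structure before the other hyperparameters keeps the number of trainings proportional to the sum, rather than the product, of the candidate counts, at the price of possibly missing interactions between hyperparameters.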

The problem of underfitting and overfitting had to be kept in mind when creating an algorithm capable of prediction. In the case of underfitting, the model predicts with a significant error in both testing and validation. In the case of overfitting, by contrast, the model produces accurate results in testing but becomes inaccurate on new data, so the validation error is much higher. To avoid these problems, we plotted the training and validation errors in each case, and the relative positions of the two curves allowed us to infer the presence of underfitting or overfitting. For example, during the training and validation of the pHRR model, we observed a small degree of overfitting, which was still within an acceptable range. Expanding the dataset can reduce both underfitting and overfitting errors. Furthermore, the optimal learning rate can be determined from the convergence of the errors. If the learning rate is too high, the error curve reaches its minimum early but may also diverge; if it is too low, the curve does not reach its minimum within a given number of iterations. In addition to monitoring the error values, we tried to obtain a more general picture of the prediction accuracy by choosing validation data that covered several ranges.
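The effect of the learning rate described above can be seen on the simplest possible loss, f(x) = x², whose gradient is 2x. Each gradient descent step multiplies x by (1 − 2·lr), so in this toy case a very small rate barely moves, a moderate rate converges, and any rate above 1 diverges. The function and the values are purely illustrative.

```python
def descend(lr, x0=1.0, steps=20):
    """Gradient descent on f(x) = x**2 (gradient 2x); returns the final |x|."""
    x = x0
    for _ in range(steps):
        x -= lr * 2.0 * x
    return abs(x)


for lr in (0.001, 0.1, 0.4, 1.1):
    print(f"lr = {lr}: |x| after 20 steps = {descend(lr):.3g}")
```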

Evaluation of the results

For validation, we used two epoxy matrices with different functionality: the glycerol-based epoxy resin (GER) is a trifunctional, while the pentaerythritol-based epoxy resin (PER) is a tetrafunctional resin. Besides the reference resins, the flammability of flame retarded compositions was also predicted and validated. As the same flame retardants were used in the same proportions, the effectiveness of each type of flame retardant in a given case and their effects on each other can be compared. The experimentally measured data, i.e. the validation results, are compared to the predicted data in Tables 3 and 4.

We characterised the prediction error for each composition with the mean absolute deviation and with the mean absolute percentage deviation. For the char residue, no mean absolute percentage deviation could be calculated because of division by zero (the char residue of the reference GER and PER epoxy resins was 0% because they burnt completely during the mass loss type cone calorimetry tests). The results of Tables 3 and 4 are also illustrated in Figs. 5 and 6.
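The two error measures can be sketched as follows, including the case that makes the percentage deviation undefined for char residue. The function names and the measured and predicted values are hypothetical; returning `None` when a measured value is zero is our choice for signalling the division by zero.

```python
def mean_absolute_deviation(measured, predicted):
    return sum(abs(p - m) for m, p in zip(measured, predicted)) / len(measured)


def mean_absolute_percentage_deviation(measured, predicted):
    """Mean of |p - m| / |m| * 100; None if any measured value is zero."""
    if any(m == 0 for m in measured):
        return None  # e.g. the 0% char residue of fully burnt reference resins
    return 100.0 * sum(abs(p - m) / abs(m) for m, p in zip(measured, predicted)) / len(measured)


# Hypothetical pHRR values (kW m^-2) and char residues (%).
phrr_meas, phrr_pred = [400.0, 250.0, 300.0], [420.0, 240.0, 330.0]
char_meas, char_pred = [0.0, 12.0], [1.0, 10.0]
print(mean_absolute_deviation(phrr_meas, phrr_pred),
      mean_absolute_percentage_deviation(phrr_meas, phrr_pred))
print(mean_absolute_deviation(char_meas, char_pred),
      mean_absolute_percentage_deviation(char_meas, char_pred))
```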

Validation was based on actual measurement data derived from mass loss type cone calorimetry (MLC) tests. Due to the complex combustion mechanism, the typical standard deviations of the parameters determined by this test method range from 5 to 10%.

For pHRR, the model predicted the PER matrix samples with high accuracy, as 36% of the training dataset consisted of PER matrix samples, whereas no GER matrix samples were included. For TTI, the largest error occurred for the PER reference sample, because two values for this composition, 30 and 37 s, were included in the training dataset; this caused an overestimation. The smallest deviation occurred in the estimation of THR: 7.5%. Overall, the magnitude of the average error is often increased by one or two outliers, which might be measurement errors. In 65% of the cases, the average absolute deviation was below 10%, which is promising for later applications, although the model needs further development and a more extensive training dataset.

Sensitivity analysis of output parameters

Artificial intelligence is often compared to a ‘black box’. The name might be misleading, as it implies that we do not know how it works. Rather, the term means that the model does not establish an explicit physical link between the input and output parameters; the neural network finds the connections through the computations detailed earlier. The relationship between the input and output variables can be explored, for example, by sensitivity analysis. We therefore used this method to obtain information on the effect of each input parameter and thus to reduce the number of input parameters.

There are several types of sensitivity analysis methods (e.g. the ‘Partial Derivatives (PaD)’, ‘Weights’, ‘Perturb’, ‘Profile’, ‘Classical stepwise’, and ‘Improved stepwise’ methods [20]). We performed the sensitivity analysis by removing the variables one by one (‘Classical stepwise’ method). The idea is to generate as many models as there are input parameters: each variable is removed in turn, and the resulting error of the output is recorded. The variable whose removal generated the smallest error is the least significant in the system, so it can be removed. After removing this variable, we repeat the procedure on the neural network with the reduced number of input parameters, and again the least significant parameter is removed. This is continued until only one variable remains. The last remaining input parameter is considered the most significant for the given output parameter [20]. Figure 6 shows an example of a case where a better prediction is obtained after removing the least significant input parameter.

To quantify whether removing a given input variable gives a better or worse prediction, we introduced a dimensionless error factor (R/-; Eq. 12), which expresses the ratio of the error after removing a given parameter to the error obtained using all input variables:

\[ R_{i} = \frac{\mathrm{Error}_{i}}{\mathrm{Error}} \]

If the value of R is large, the removed input parameter has a significant effect on the output variable. If R is below 1, removing that input parameter gives a better prediction.

In Eq. 12, the errors are mean absolute deviations: \(\mathrm{Error}_{i}\) is the error after removing the ith parameter, while \(\mathrm{Error}\) is the error obtained using all parameters. If \(\mathrm{Error}_{i}\) is smaller than \(\mathrm{Error}\), R is below 1, which indicates that the prediction improved after removing the ith parameter. If R is above 1, \(\mathrm{Error}_{i}\) is higher than \(\mathrm{Error}\), meaning that the prediction became less precise.
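The classical stepwise procedure and the R factor can be sketched as below. `error_of` stands for retraining the network on a given subset of inputs and returning its mean absolute deviation; in the example it is replaced by a hypothetical `toy_error` function with made-up importances, so the resulting ranking says nothing about the real data. In each round, the reference error is that of the current (already reduced) input set, which corresponds to repeating the procedure on the reduced network.

```python
def stepwise_ranking(features, error_of):
    """Classical stepwise elimination.

    error_of(subset) is assumed to retrain the model on `subset` and return its
    error (assumed non-zero). Returns the features ordered from least to most
    significant, plus the R factors R_i = Error_i / Error recorded in each round.
    """
    remaining = list(features)
    removal_order, history = [], []
    while len(remaining) > 1:
        base_error = error_of(remaining)
        r_factors = {
            f: error_of([g for g in remaining if g != f]) / base_error
            for f in remaining
        }
        least = min(r_factors, key=r_factors.get)  # smallest error when removed
        history.append(r_factors)
        removal_order.append(least)
        remaining.remove(least)
    removal_order.append(remaining[0])  # the last survivor is the most significant
    return removal_order, history


# Hypothetical importances; each retained input also adds a small noise penalty.
IMPORTANCE = {"C": 0.0, "H": 1.0, "hardener": 3.0, "P": 5.0}


def toy_error(subset):
    missing = [f for f in IMPORTANCE if f not in subset]
    return 0.1 + sum(IMPORTANCE[f] for f in missing) + 0.05 * len(subset)


order, history = stepwise_ranking(list(IMPORTANCE), toy_error)
print(order)
print({f: round(r, 3) for f, r in history[0].items()})
```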

To illustrate the procedure, we present the sensitivity analysis for pHRR. First, we removed the input parameters one by one from the full set of fifteen parameters. The largest error occurred when the type of hardener was removed, while the smallest error occurred when the ratio of carbon atoms was removed; consequently, the latter was removed from the system. The order of parameters obtained in this step is illustrated in Fig. 7. We then repeated the one-by-one removal for the remaining 14 parameters. In this second step, the aliphatic ratio proved to be the least significant parameter. Continuing in this way, we found that the ratio of phosphorus atoms was ultimately the most significant parameter, which is not surprising given that the flame retarded resins contained phosphorus-based flame retardants. The higher the ratio of P atoms, the more flame retardant additive the resin system contains, and thus the lower the pHRR.

In the sensitivity study, the input matrix used to predict pHRR gave the best results with the following four parameters: matrix type, ratio of hydrogen atoms, ratio of phosphorus atoms, and aromatic ratio. The same method was also applied to the prediction of TTI, THR, and char residue. For these three flammability parameters, we could not reduce the number of input parameters based on the sensitivity analysis; nevertheless, we were able to rank the input parameters according to their effect on the given output parameter. The order of the effect of the input parameters on the output parameters is presented in Table 5.

The most significant parameter affecting the pHRR and char residue was the ratio of phosphorus atoms, i.e. essentially the amount of flame retardant in the resin system, which is physically reasonable. For the char residue, the ratio of carbon atoms also plays an important role. The type of hardener appeared to be the most significant parameter affecting the time to ignition, which may be caused by the different decomposition temperatures of the crosslinks in the thermoset resin. In addition, some hardeners (e.g. TEDAP [18]) function as both a hardener and a flame retardant, so the neural network may have evaluated TEDAP as a hardener rather than as a flame retardant when ranking the input parameters. Furthermore, the compositions containing TEDAP as a flame retardant showed the highest increase in TTI among the flame retarded resins in the database. For THR, the heat flux used in the MLC test was found to be the most influential parameter; it is certainly significant, but in reality it is not the most decisive one.

Overall, the most important factors determining the pHRR, the TTI, and the char residue can be linked to actual physical parameters. It should also be noted that the input parameters of the neural network are often interdependent, so removing them one by one can change the apparent effect of other parameters. In addition, according to the sensitivity analysis, the flammability test results (LOI, UL-94 classification) did not significantly influence the results, so their inclusion in the database should be reconsidered in the future. This foreshadows the possibility of creating an extensive database containing only structural properties.

Conclusions

In this study, we developed an artificial neural network (ANN) model that can predict the most important flammability parameters (peak heat release rate, time to ignition, total heat release, and char residue) using structural properties (ratio of different atoms and of aliphatic, cycloaliphatic, and aromatic structures in the polymer) and small-scale flammability results (limiting oxygen index, UL-94 test). We developed the algorithm using the TensorFlow Python library and validated the system with mass loss type cone calorimetry results of reference and flame retarded epoxy resins. The prediction accuracy of the ANN-based model decreased in the following order: total heat release > peak heat release rate > time to ignition > char residue. The relatively larger error in predicting time to ignition and mass residue is due to the inherently larger standard deviation of the test methods. Outliers in the input database often cause a significant difference between predicted and measured data, but in 65% of cases, the average absolute deviation is below 10%. We used the sensitivity analysis of the output parameters to rank the input parameters according to their impact on the output parameters. The resulting ranking helped establish a relationship between the input and output parameters based on their physical meaning. We consider this work primarily a proof of concept that it is possible to predict large-scale flammability test results from structural parameters and small-scale test results using ANN models. By developing the algorithms further and extending the dataset, the accuracy of the prediction can be significantly improved in the future. We also intend to extend this method to predict the flammability of fibre reinforced composites.

References

Johnson PR. A general correlation of the flammability of natural and synthetic polymers. J Appl Polym Sci. 1974;18:491–504. https://doi.org/10.1002/app.1974.070180215.

Van Krevelen DW, Te Nijenhuis K. Product properties (II). In: Van Krevelen DW, editor. Properties of polymers. Amsterdam: Elsevier; 2009. p. 847–73. https://doi.org/10.1016/B978-0-08-054819-7.00026-1.

Lyon RE, Walters RN, Stoliarov SI. Thermal analysis of flammability. J Therm Anal Calorim. 2007;89(2):441–8. https://doi.org/10.1007/s10973-006-8257-z.

Van Krevelen DW. Some basic aspects of flame resistance of polymeric materials. Polymer. 1975;16(8):615–20. https://doi.org/10.1016/0032-3861(75)90157-3.

Walters RN, Lyon RE. Molar group contributions to polymer flammability. J Appl Polym Sci. 2002;87(3):548–63. https://doi.org/10.1002/app.11466.

Walters RN. Molar group contributions to the heat of combustion. Fire Mater. 2002;26(3):131–45. https://doi.org/10.1002/fam.802.

Lyon RE, Takemori MT, Safronava N, Stoliarov SI, Walters RN. A molecular basis for polymer flammability. Polymer. 2009;50(12):2608–17. https://doi.org/10.1016/j.polymer.2009.03.047.

Sonnier R, Otazaghine B, Iftene F, Negrell C, David G, Howell BA. Predicting the flammability of polymers from their chemical structure: an improved model based on group contributions. Polymer. 2016;86:42–55. https://doi.org/10.1016/j.polymer.2016.01.046.

Sonnier R, Otazaghine B, Dumazert L, Ménard R, Viretto A, Dumas L, Bonnaud L, Dubois P, Safronava N, Walters R, Lyon R. Prediction of thermosets flammability using a model based on group contributions. Polymer. 2017;127:203–13. https://doi.org/10.1016/j.polymer.2017.09.012.

Sonnier R, Otazaghine B, Vagner C, Bier F, Six J-L, Durand A, Vahabi H. Exploring the contribution of two phosphorus-based groups to polymer flammability via pyrolysis-combustion flow calorimetry. Materials. 2019;12(18):2961. https://doi.org/10.3390/ma12182961.

Ye S, Li B, Li Q, Zhao H-P, Feng X-Q. Deep neural network method for predicting the mechanical properties of composites. Appl Phys Lett. 2019;115(16):161901. https://doi.org/10.1063/1.5124529.

Vahabi H, Naser MZ, Saeb MR. Fire protection and materials flammability control by artificial intelligence. Fire Technol. 2022;58:1071–3. https://doi.org/10.1007/s10694-021-01200-3.

Parandekar PV, Browning AR, Prakash O. Modeling the flammability characteristics of polymers using quantitative structure-property relationships (QSPR). Polym Eng Sci. 2015;55(7):1553–9. https://doi.org/10.1002/pen.24093.

Asante-Okyere S, Xu Q, Mensah RA, Jin C, Ziggah YY. Generalised regression and feed forward back propagation neural networks in modeling flammability characteristics of polymethyl methacrylate (PMMA). Thermochim Acta. 2018;667:79–92. https://doi.org/10.1016/j.tca.2018.07.008.

Mensah RA, Jiang L, Asante-Okyere S, Xu Q, Jin C. Comparative evaluation of the predictability of neural network methods on the flammability characteristics of extruded polystyrene from microscale combustion calorimetry. J Therm Anal Calorim. 2019;138(5):3055–64. https://doi.org/10.1007/s10973-019-08335-0.

Fazekas I. Neural networks. Debrecen: University of Debrecen, Faculty of Informatics; 2003.

Nielsen MA. Neural networks and deep learning. Determination Press; 2015. Available online: http://neuralnetworksanddeeplearning.com/.

Toldy A. Development of environmentally friendly epoxy resin composites. Doctoral thesis, Hungarian Academy of Sciences; 2017. ISBN 978-963-313-262-3. Available online: http://www.pt.bme.hu/publikaciok/977_open_Toldy%20DSc%20thesis.pdf.

Bergstra J, Bengio Y. Random search for hyper-parameter optimization. J Mach Learn Res. 2012;13:281–305. Available online: https://jmlr.org/papers/volume13/bergstra12a/bergstra12a.pdf.

Gevrey M, Dimopoulos I, Lek S. Review and comparison of methods to study the contribution of variables in artificial neural network models. Ecol Model. 2003;160(3):249–64. https://doi.org/10.1016/s0304-3800(02)00257-0.

Acknowledgements

The research reported in this paper is part of project no. BME-NVA-02, implemented with the support provided by the Ministry of Innovation and Technology of Hungary from the National Research, Development and Innovation Fund, financed under the TKP2021 funding scheme. This work was also supported by the National Research, Development and Innovation Office (2018-1.3.1-VKE-2018-00011). The authors thank Lilla Kisbenedek for her help in developing the artificial neural network model.

Funding

Open access funding provided by Budapest University of Technology and Economics.

Author information

Contributions

The research concept was developed by Ákos Pomázi and Andrea Toldy. Both authors were responsible for writing, revising and editing the original draft. Andrea Toldy was responsible for the supervision and administration of the project.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pomázi, Á., Toldy, A. Predicting the flammability of epoxy resins from their structure and small-scale test results using an artificial neural network model. J Therm Anal Calorim 148, 243–256 (2023). https://doi.org/10.1007/s10973-022-11638-4

DOI: https://doi.org/10.1007/s10973-022-11638-4