Abstract

We consider rough differential equations whose coefficients contain path-dependent bounded variation terms and prove the existence and a priori estimate of solutions. These equations include classical path-dependent stochastic differential equations containing running maximum processes and normal reflection terms. We apply these results to determine the topological support of the solution processes.


1 Introduction

In the framework of Itô’s calculus, path-dependent stochastic differential equations (SDEs) are naturally formulated, and existence and uniqueness hold under standard assumptions on the coefficients. Typical examples are reflected SDEs and SDEs containing running maximum and minimum processes. In the one-dimensional case, very simple SDEs containing the maximum and minimum processes and a reflection term have been studied in detail. In this paper, we consider rough differential equations (RDEs) whose coefficients contain path-dependent bounded variation terms and prove the existence of solutions together with an a priori estimate. This class of equations includes the classical path-dependent SDEs mentioned above. Although the solutions are not unique in general, uniqueness holds for smooth rough paths in many cases. Under the uniqueness assumption, we prove a continuity property of the solution mapping at smooth rough paths, which is useful for determining the topological support of the solution processes.

The structure of this paper is as follows. In Sect. 2, we introduce a class of RDEs containing bounded variation terms:

$$\begin{aligned} Z_t=\xi +\int _0^t\sigma (Z_s,A(Z)_s)d\textbf{X}_s,\quad 0\le t\le T, \end{aligned}$$ (1.1)

where \(\textbf{X}_t\) is a \(1/\beta \) rough path (\(1/3<\beta \le 1/2\)) and \(A(Z)_t\) is a continuous bounded variation path which depends on the past path \((Z_s)_{s\le t}\). After that, we state our main theorem (Theorem 2.7) which proves the existence and a priori estimate of solutions under \(\sigma \in \textrm{Lip}^{\gamma -1}\) (\(\gamma >1/\beta \)) and suitable assumptions on A. Note that the regularity assumption on \(\sigma \) for the existence of solutions is standard in the case of usual RDEs which corresponds to \(A\equiv 0\). The solution \(Z_t\) is a controlled path of the driving rough path \(\textbf{X}\). Actually, we solve this equation in product Banach spaces consisting of Z and \(\Psi =A(Z)\) by applying Schauder’s fixed point theorem.

To this end, we introduce Hölder continuous path spaces \(\mathcal {C}^{\theta }\) and Banach spaces \(\mathcal {C}^{q\text {-}var, \theta }\) consisting of \(\Psi \) based on the control function \(\omega \) of \(\textbf{X}\). The latter is a set of paths whose q-variation norms \((q\ge 1)\) are finite and which satisfy a certain Hölder continuity defined by \(\omega \). We also study basic properties of the functional A. We briefly explain examples there and discuss the details in Sect. 5.

In Sect. 3, we prove our main theorem. The uniqueness does not hold in general. See Remark 2.8 (6).

In Sect. 4, we consider a usual \(\beta \)-Hölder rough path \(\textbf{X}\) with the control function \(\omega (s,t)=|t-s|\). In Proposition 4.2, using the a priori estimate of solutions, we show that the (generally multivalued) solution mapping is continuous at any rough path for which the solution is unique. We use this result to prove support theorems in Sect. 6.

In Sect. 5, we give examples. In Sect. 5.1, we consider reflected rough differential equations on a domain D in \({\mathbb R}^n\):

where \(\Phi _t\) is the reflection term which forces \(Y_t\in \bar{D}\). This equation looks different from the equation studied in the main theorem. However, it is well known that reflected Itô (Stratonovich) SDEs can be transformed into certain path-dependent Itô (Stratonovich) SDEs without a reflection term. This is used to prove a Freidlin-Wentzell type large deviation principle ([5]) and the support theorem ([14]) for reflected diffusions on domains with smooth boundary. We prove the existence theorem (Theorem 5.6) under standard assumptions (A) and (B) on D and \(\sigma \in \textrm{Lip}^{\gamma -1}\) by transforming Eq. (1.2) into the corresponding path-dependent RDE (1.1). This extends the result in [2], in which we proved the existence of solutions of (1.2) under the stronger assumptions that D satisfies the condition (H1) and \(\sigma \in C^3_b\).

In the 1-dimensional case, perturbed SDEs and perturbed reflected SDEs have been studied by many authors. See e.g. [7, 8, 10, 11, 13, 31, 36]. In Sect. 5.2, we give a short review of these subjects.

In Sect. 5.3, we consider multidimensional and rough path versions of 1-dimensional perturbed SDEs and perturbed reflected SDEs. In the study of the latter one, we need to consider an implicit Skorohod equation as in [2]. As for perturbed reflected SDE whose driving process is the standard Brownian motion, we can extend the existence and uniqueness result of the solution due to Doney and Zhang [13] by using our approach. See Remark 5.22.

The path-dependent functional \(A(x)_t\) with which we are mainly concerned in this paper is a kind of generalization of the maximum process \(\max _{0\le s\le t}|x_s|\) and the local time term \(L(x)_t\). The maximum process \(\max _{0\le s\le t}|x_s|\) is obtained as the limit of \(\Vert x\Vert _{L^p([0,t])}\) as \(p\rightarrow \infty \). Hence it is natural to study the case where \(A(x)_t=\Vert x\Vert _{L^p([0,t])}\). In Sect. 5.4, we study such examples.

In Sect. 6, we prove support theorems for solution processes by using Proposition 4.2 and Wong–Zakai theorems. In this section, except Theorem 6.4, we consider the Brownian rough path \(\textbf{W}\) which implies that we consider the usual Stratonovich SDEs driven by the standard Brownian motion.

Section 7 is an appendix. The solution \(Y_t\) studied in Sect. 5 is the sum of a controlled path \(Z_t\) and a continuous bounded variation path \(\Phi _t\). For a given controlled path Z, the Gubinelli derivative \(Z'\) is uniquely determined if the first level path X of \(\textbf{X}\) is truly rough in the sense of [20]. In our case, \(\Phi \) is of bounded variation but does not have good regularity in Hölder norm. Hence it is natural to ask whether \(Z'\) is unique or not for Y in our setting. We study this problem in Sect. 7.1 by using a certain roughness property of the path X. In Sect. 7.2, we make a remark on path-dependent rough differential equations with drift. This consideration is necessary for the study of reflected diffusions with drift terms.

2 Preliminary and Main Theorem

Let us fix a positive number T. Let \(\omega (s,t)\) \((0\le s\le t\le T)\) be a control function. That is, \((s,t)\mapsto \omega (s,t)\in {\mathbb R}^{+}\) is a continuous function and \(\omega (s,u)+\omega (u,t)\le \omega (s,t)\) \((0\le s\le u\le t\le T)\) holds. We introduce a mixed norm by using \(\omega \) and p-variation norm. We refer the readers to [21] for the related studies. Let E be a finite dimensional normed linear space. For a continuous path \((x_t)\) \((0\le t\le T)\) on E, we define for \([s,t]\subset [0,T]\),

where \(\mathcal{P}=\{s=t_0<\cdots <t_N=t\}\) is a partition of the interval [s, t] and \(x_{u,v}=x_v-x_u\). When \([s,t]=[0,T]\), we may omit denoting [0, T]. For \(0<\theta \le 1, q\ge 1\), \(0\le s\le t\le T\) and a continuous path x, we define

We use the convention that \(\inf \emptyset =+\infty \). When \(\omega (s,t)=|t-s|\), the condition \(\Vert x\Vert _{\theta , [s,t]}<\infty \) is equivalent to \(x_u\) \((s\le u\le t)\) being a Hölder continuous path with exponent \(\theta \) in the usual sense. Hence we may say that x is an \(\omega \)-Hölder continuous path with exponent \(\theta \) (an \((\omega ,\theta )\)-Hölder continuous path for short). For a two-parameter function \(F_{s,t}\) \((0\le s\le t\le T)\), we define \(\Vert F\Vert _{\theta ,[s,t]}\) similarly.

We denote by \(\mathcal {C}^{\theta }([0,T], E)\) the set of \(\omega \)-Hölder continuous paths x with values in E satisfying \(\Vert x\Vert _{\theta }=\Vert x\Vert _{\theta ,[0,T]}<\infty \). We may denote the function space by \((\mathcal {C}^{\theta }([0,T], E),\omega )\) to specify the control function. \(\mathcal {C}^{\theta }([0,T], E)\) is a Banach space with the norm \(|x_0|+\Vert x\Vert _{\theta }\). We may just write \(\mathcal {C}^{\theta }(E)\) if there is no confusion. Let \(\mathcal {C}^{q\text {-}var,\theta }(E)\) denote the set of E-valued continuous paths of finite q-variation defined on [0, T] satisfying \(\Vert x\Vert _{q\text {-}var,\theta }:=\Vert x\Vert _{q\text {-}var,\theta ,[0,T]}<\infty \). Note that \(\mathcal {C}^{q\text {-}var,\theta }(E)\) is a Banach space with the norm \(|x_0|+\Vert x\Vert _{q\text {-}var,\theta }\). Obviously, any path \(x\in \mathcal {C}^{q\text {-}var,\theta }(E)\) satisfies \(|x_{s,t}|\le \Vert x\Vert _{q\text {-}var,\theta }\omega (s,t)^{\theta }\). We may write \(\mathcal {C}^{\theta }, \mathcal {C}^{q\text {-}var,\theta }\) for simplicity.

We next introduce the notation for mappings between normed linear spaces. Let E, F be finite dimensional normed linear spaces. For \(\gamma =n+\theta \) \((n\in {\mathbb N}\cup \{0\}, 0<\theta \le 1)\), \(\textrm{Lip}^{\gamma }(E,F)\) denotes the set of bounded functions f on E with values in F which are n-times continuously differentiable and whose derivatives up to n-th order are bounded and \(D^nf\) is a Hölder continuous function with the exponent \(\theta \) in usual sense.

We use the following lemma. The compact embedding in (2) is necessary for the application of the Schauder fixed point theorem.

Lemma 2.1

(1) Let \(1\le q'\le q\). For a continuous path x, we have

$$\begin{aligned} \Vert x\Vert _{q\text {-}var,[s,t]}\le \Vert x\Vert _{q'\text {-}var,[s,t]}^{q'/q} \Vert x\Vert _{\infty \text {-}var,[s,t]}^{(q-q')/q} \le \Vert x\Vert _{q'\text {-}var,[s,t]}. \end{aligned}$$ (2.6)

(2) Let \(1\le q'\le q\). Let \(0<\theta , \theta '\le 1\) be positive numbers such that \(q\theta \le q'\theta '\). Then for any \(x\in \mathcal {C}^{q'\text {-}var,\theta '}\), we have

$$\begin{aligned} \Vert x\Vert _{q\text {-}var,\theta }\le \omega (0,T)^{(q'\theta '-q\theta )/q} \Vert x\Vert _{q'\text {-}var,\theta '}^{q'/q} \Vert x\Vert _{\infty \text {-}var}^{(q-q')/q}. \end{aligned}$$ (2.7)

Further, if \(q'<q\) holds, then the inclusion \(\mathcal {C}^{q'\text {-}var,\theta '}\subset \mathcal {C}^{q\text {-}var,\theta }\) is compact.

(3) If \(\Vert x\Vert _{q\text {-}var,[s,t]}<\infty \) for some q, then \(\lim _{q\rightarrow \infty }\Vert x\Vert _{q\text {-}var,[s,t]}=\Vert x\Vert _{\infty \text {-}var,[s,t]}\).

Proof

(1) We have

The second inequality follows from the trivial bound \(\Vert x\Vert _{\infty \text {-}var,[s,t]}\le \Vert x\Vert _{q'\text {-}var,[s,t]}\).

(2) By (1), we have

This implies (2.7). If \(\sup _n\left( |(x_n)_0|+\Vert x_n\Vert _{q'\text {-}var,\theta '}\right) <\infty \), then the sequence \((x_n)\) is uniformly bounded and equicontinuous, so there exists a subsequence \(x_{n_k}\) which converges to a certain function \(x_{\infty }\) in the uniform norm. By (2.7), we can conclude that the convergence takes place with respect to the norm on \(\mathcal {C}^{q\text {-}var,\theta }\).

(3) We need only to prove \(\limsup _{q\rightarrow \infty }\Vert x\Vert _{q\text {-}var,[s,t]}\le \Vert x\Vert _{\infty \text {-}var,[s,t]}\). Suppose \(\Vert x\Vert _{q_0\text {-}var,[s,t]}<\infty \). Then for \(q>q_0\),

Taking the limit \(q\rightarrow \infty \), we obtain the desired estimate. \(\square \)
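
The interpolation inequality (2.6) can be checked numerically on a sampled path. The following is a minimal sketch, not part of the argument above. It assumes the standard definitions (the displayed definitions are not reproduced here): the q-variation norm is the supremum over partitions of \((\sum _i|x_{t_{i-1},t_i}|^q)^{1/q}\), and the \(\infty \)-variation norm is \(\sup _{u\le v}|x_{u,v}|\). The supremum is restricted to partitions of the sample points, and the helper names `q_variation`, `sup_variation`, `interpolation_bound` and `random_walk` are hypothetical.

```python
import random


def q_variation(x, q):
    """q-variation norm of a sampled scalar path (sup over partitions of the sample points)."""
    # best[j]: largest sum of |increment|^q over partitions of x[0..j] ending at index j
    best = [0.0] * len(x)
    for j in range(1, len(x)):
        best[j] = max(best[i] + abs(x[j] - x[i]) ** q for i in range(j))
    return best[-1] ** (1.0 / q)


def sup_variation(x):
    """Largest increment |x_v - x_u|; assumed here to be the infinity-variation norm."""
    return max(x) - min(x)


def interpolation_bound(x, q_small, q_big):
    """Middle term of (2.6) with q' = q_small and q = q_big."""
    return (q_variation(x, q_small) ** (q_small / q_big)
            * sup_variation(x) ** ((q_big - q_small) / q_big))


def random_walk(n, seed):
    """Hypothetical test path: n steps drawn uniformly from [-1, 1]."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n):
        x.append(x[-1] + rng.uniform(-1.0, 1.0))
    return x


if __name__ == "__main__":
    x = random_walk(100, seed=0)
    # The three printed numbers should be non-decreasing, as in (2.6) with q' = 2, q = 3.
    print(q_variation(x, 3.0), interpolation_bound(x, 2.0, 3.0), q_variation(x, 2.0))
```

The dynamic programme costs \(O(n^2)\) for n sample points, which is adequate for a sanity check but not for long paths.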

Throughout this paper, \(\beta \) is a positive number satisfying \(1/3<\beta \le 1/2\) unless stated otherwise. Let \(\omega \) be a control function and let \(\textbf{X}_{s,t}=(X_{s,t},{\mathbb X}_{s,t})\) \((0\le s\le t\le T)\) be an \((\omega ,\beta )\)-Hölder rough path on \({\mathbb R}^d\). That is, \(\textbf{X}\) satisfies Chen’s relation and the path regularity conditions,

where \(\Vert X\Vert _{\beta }(<\infty )\) and \(\Vert {\mathbb X}\Vert _{2\beta }(<\infty )\) denote the \(\omega \)-Hölder norms. We denote by \(\mathscr {C}^{\beta }({\mathbb R}^d)\) the set of all \((\omega ,\beta )\)-Hölder rough paths, where \(\omega \) ranges over the set of all control functions. When \(\omega (s,t)=|t-s|\), \(\textbf{X}_{s,t}\) is a usual \(\beta \)-Hölder rough path. If \(\textbf{X}_{s,t}\) is a rough path with finite \(1/\beta \)-variation, then, setting \(\omega (s,t)=\Vert X\Vert _{1/\beta \text {-}var, [s,t]}^{1/\beta }+ \Vert {\mathbb X}\Vert _{1/(2\beta )\text {-}var, [s,t]}^{1/(2\beta )}\), we have \(\Vert X\Vert _{\beta }\le 1\) and \(\Vert {\mathbb X}\Vert _{2\beta }\le 1\). We refer the reader to [6, 20, 22, 28, 29] for background on rough paths.
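
Chen's relation can be illustrated on a sampled path. The sketch below assumes the standard form \({\mathbb X}_{s,t}={\mathbb X}_{s,u}+{\mathbb X}_{u,t}+X_{s,u}\otimes X_{u,t}\) (the displayed relation is not reproduced here) and uses left-point Riemann sums on the sampling grid as a discrete stand-in for the second level path. For such sums the relation holds exactly up to rounding. All function names are hypothetical.

```python
import random


def first_level(path, s, t):
    """Increment X_{s,t} = x_t - x_s of a sampled path in R^d (indices s <= t)."""
    return [b - a for a, b in zip(path[s], path[t])]


def second_level(path, s, t):
    """Left-point Riemann sum approximating the iterated integral of X_{s,r} (x) dX_r on [s, t]."""
    d = len(path[0])
    area = [[0.0] * d for _ in range(d)]
    for i in range(s, t):
        so_far = first_level(path, s, i)      # X_{s, t_i}
        step = first_level(path, i, i + 1)    # X_{t_i, t_{i+1}}
        for a in range(d):
            for b in range(d):
                area[a][b] += so_far[a] * step[b]
    return area


def chen_defect(path, s, u, t):
    """Largest entry of XX_{s,t} - XX_{s,u} - XX_{u,t} - X_{s,u} (x) X_{u,t}."""
    d = len(path[0])
    whole = second_level(path, s, t)
    left = second_level(path, s, u)
    right = second_level(path, u, t)
    x_su = first_level(path, s, u)
    x_ut = first_level(path, u, t)
    return max(abs(whole[a][b] - left[a][b] - right[a][b] - x_su[a] * x_ut[b])
               for a in range(d) for b in range(d))


def random_path(n, d, seed):
    """Hypothetical test path in R^d with n uniform steps per coordinate."""
    rng = random.Random(seed)
    path = [[0.0] * d]
    for _ in range(n):
        path.append([c + rng.uniform(-1.0, 1.0) for c in path[-1]])
    return path


if __name__ == "__main__":
    print(chen_defect(random_path(50, 2, seed=0), 5, 20, 45))
```

Left-point sums correspond to an Itô-type second level. Using the piecewise linear interpolation instead would add the symmetric term \(\frac{1}{2}\sum _i X_{t_i,t_{i+1}}\otimes X_{t_i,t_{i+1}}\), and the same algebraic identity would still hold.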

We use the following quantity,

We introduce a set of controlled paths \({\mathscr {D}}^{2\theta }_X({\mathbb R}^n)\) of \(\textbf{X}_{s,t}\), where \(1/3<\theta \le \beta \) following [20, 24]. A pair of \(\omega \)-Hölder continuous paths \((Z,Z')\in \mathcal {C}^{\theta }([0,T],{\mathbb R}^n)\times \mathcal {C}^{\theta }([0,T], \mathcal{L}({\mathbb R}^d,{\mathbb R}^n))\) with the exponent \(\theta \) is called a controlled path of X, if the remainder term \(R^Z_{s,t}=Z_t-Z_s-Z'_sX_{s,t}\) satisfies \(\Vert R^Z\Vert _{2\theta }<\infty \). The set of controlled paths \({\mathscr {D}}^{2\theta }_X({\mathbb R}^n)\) is a Banach space with the norm

The rough differential equations which we will study contain a path-dependent bounded variation term \(A(x)_t\). We consider the following condition on A. Note that the function space \(\mathcal {C}^{\beta }\) in the following statement depends on the control function \(\omega \).

Assumption 2.2

Let \(\xi \in {\mathbb R}^n\). Let \(\omega \) be a control function. Let A be a mapping from \(\mathcal {C}^{\beta }([0,T], {\mathbb R}^n~|~x_0=\xi )\) to \(C([0,T], {\mathbb R}^n)\) satisfying the following.

(1) \((\text {Adaptedness})\) \(\left( A(x)_s\right) _{0\le s\le t}\) depends only on \((x_s)_{0\le s\le t}\) for all \(0\le t\le T\).

(2) \((\text {Continuity})\) There exists \(1/3<\beta _0<\beta \) such that A can be extended to a continuous mapping from \(\mathcal {C}^{\beta _0}([0,T],{\mathbb R}^n~|~x_0=\xi )\) to \((C([0,T], {\mathbb R}^n), \Vert ~\Vert _{\infty ,[0,T]})\). We use the same notation A to denote the extended mapping on \(\mathcal {C}^{\beta _0}\).

(3) There exists a non-decreasing positive continuous function F on \([0,\infty )\) such that for all \(x\in \mathcal {C}^{\beta _0}([0,T],{\mathbb R}^n~|~x_0=\xi )\),

$$\begin{aligned} \Vert A(x)\Vert _{1\text {-}var,[s,t]}\le F(\Vert x\Vert _{(1/\beta _0)\text {-}var,[s,t]}) \Vert x\Vert _{\infty \text {-}var,[s,t]}, \quad 0\le s\le t\le T \end{aligned}$$ (2.14)

holds.

Remark 2.3

The conditions (1) and (2) are natural. In many cases, A is defined on continuous path spaces and is continuous with respect to the uniform norm. The condition (3) is a strong assumption. It implies that the total variation of A(x) on [s, t] can be estimated by the norm of the path \((x_u-x_s)\) on \(s\le u\le t\). Note that this does not exclude the case where \(A(x)_u\) \((s\le u\le t)\) depends on \(x_v\) \((v\le s)\).

We have the following simple result.

Lemma 2.4

Let \(\omega \) be a control function and let \(\mathcal {C}^{\beta }([0,T],{\mathbb R}^n)\) be the corresponding Hölder space.

(1) Suppose \(A: \mathcal {C}^{\beta }([0,T], {\mathbb R}^n~|~x_0=\xi )\rightarrow C([0,T], {\mathbb R}^n)\) satisfies Assumption 2.2 (1), (2). Then the initial value \(A(x)_0\) is independent of \(x\in \mathcal {C}^{\beta }([0,T], {\mathbb R}^n~|~x_0=\xi )\).

(2) Let \(0<T'<T\) and set \(\omega _{T'}(s,t)=\omega (T'+s,T'+t)\) \((0\le s\le t\le T-T')\). Then \(\omega _{T'}\) is a control function.

(3) Let \(\mathcal {C}^{\beta }_{T'}([0,T-T'],{\mathbb R}^n)\) be the \((\omega _{T'},\beta )\)-Hölder space. Let \(y\in \mathcal {C}^{\beta }([0,T'],{\mathbb R}^n)\) and \(x\in \mathcal {C}^{\beta }_{T'}([0,T-T'],{\mathbb R}^n)\) and suppose \(y_{T'}=x_0\). Set

$$\begin{aligned} \tilde{x}_t= {\left\{ \begin{array}{ll} y_t &{} t\le T',\\ x_{t-T'} &{}T'\le t\le T. \end{array}\right. } \end{aligned}$$

Then \(\tilde{x}\in \mathcal {C}^{\beta }([0,T],{\mathbb R}^n)\). Let

$$\begin{aligned} \tilde{A}_{y,T'}(x)_t=A(\tilde{x})_{T'+t},\quad 0\le t\le T-T',\quad x\in \mathcal {C}^{\beta }_{T'}([0,T-T'],{\mathbb R}^n~|~x_0=y_{T'}). \end{aligned}$$

Then \(\tilde{A}_{y,T'}\) satisfies Assumption 2.2 with \(\omega \) and T replaced by \(\omega _{T'}\) and \(T-T'\). In particular, (2.14) holds for the same function F.

Proof

(1) For \(x\in C([0,T],{\mathbb R}^n)\), let \(x^t_u=x_{t\wedge u}\). Then by Assumption 2.2 (1), \(A(x)_u=A(x^t)_u\) \((0\le u\le t)\) holds. By a simple calculation, for any \(x,y\in C([0,T],{\mathbb R}^n)\), we have

Since \((y^0)^t=y^0\), this implies \(\lim _{t\rightarrow +0}\Vert x^t-y^0\Vert _{\mathcal {C}^{\beta _0}}=0\). Hence

(2) and (3) are easy to check. \(\square \)

Actually, the condition (3) automatically implies the following stronger estimate. By this result, we may assume that the growth rate of F(u) is at most of order \(u^{1/\beta _0}\), that is, of polynomial order.

Lemma 2.5

Assume the mapping \(A: \mathcal {C}^{\beta }([0,T], {\mathbb R}^n~|~x_0=\xi ) \rightarrow C([0,T], {\mathbb R}^n)\) satisfies the condition (3) in Assumption 2.2.

(1) There exists \(C>0\) such that

$$\begin{aligned} \Vert A(x)\Vert _{1\text {-}var,[s,t]}\le C\left( \Vert x\Vert _{(1/\beta _0)\text {-}var, [s,t]}^{1/\beta _0}+1\right) \Vert x\Vert _{\infty \text {-}var,[s,t]} \quad 0\le s\le t\le T. \end{aligned}$$ (2.15)

(2) Let us choose positive numbers \(\tilde{\alpha }\) and q such that \(\tilde{\alpha }\le \beta \) and \(1\le q\le \beta /\tilde{\alpha }\). Then for any \(x,x'\in \mathcal {C}^{\beta }\), we have

$$\begin{aligned}&\Vert A(x)-A(x')\Vert _{q\text {-}var, \tilde{\alpha }}\nonumber \\&\le \omega (0,T)^{\frac{\beta }{q}-\tilde{\alpha }} \left( F(\Vert x\Vert _{\beta _0}\omega (0,T)^{\beta _0}) \Vert x\Vert _{\beta }+ F(\Vert x'\Vert _{\beta _0}\omega (0,T)^{\beta _0}) \Vert x'\Vert _{\beta } \right) ^{1/q}\nonumber \\&\qquad \qquad \times \Vert A(x)-A(x')\Vert _{\infty \text {-}var}^{1-(1/q)}. \end{aligned}$$ (2.16)

Proof

(1) Let \( \omega _{1/\beta _0}(s,t)=\Vert x\Vert _{1/\beta _0\text {-}var, [s,t]}^{1/\beta _0}. \) For \(\varepsilon >0\), we choose the points \(s=t_0<t_1<\cdots <t_N=t\) such that \(\omega _{1/\beta _0}(t_{i-1},t_i)=\varepsilon \) \((1\le i\le N-1)\) and \(\omega _{1/\beta _0}(t_{N-1},t_N)\le \varepsilon \). By the superadditivity of \(\omega _{1/\beta _0}\), we have \( (N-1)\varepsilon \le \sum _{i=1}^N\omega _{1/\beta _0}(t_{i-1},t_i)\le \omega _{1/\beta _0}(s,t) \) and hence \(N\le \omega _{1/\beta _0}(s,t)/\varepsilon +1\). By the additivity property of the bounded variation norm, we have

which implies the desired estimate.

(2) Applying Lemma 2.1 (2) in the case where \(q'=1, \theta '=\beta , \theta =\tilde{\alpha }\), we have

Note that

By the assumption on A, we have

This completes the proof. \(\square \)

Remark 2.6

Of course, we may optimize the estimate (2.15) as follows:

where \(\tilde{F}(u)=\inf _{\varepsilon >0}F(\varepsilon )\left\{ \left( \frac{u}{\varepsilon }\right) ^{1/\beta _0}+1\right\} \).

We now introduce our RDEs and state our main theorem.

Theorem 2.7

Let \(\gamma >1/\beta \). Let \(\textbf{X}\) be a \((\omega ,\beta )\)-Hölder rough path. Let \(\sigma \in \textrm{Lip}^{\gamma -1}({\mathbb R}^n\times {\mathbb R}^n, \mathcal{L}({\mathbb R}^d,{\mathbb R}^n))\) and \(\xi \in {\mathbb R}^n\). Assume that the mapping \(A: \mathcal {C}^{\beta }([0,T], {\mathbb R}^n~|~x_0=\xi ) \rightarrow C([0,T], {\mathbb R}^n)\) satisfies the condition in Assumption 2.2. Then the following hold.

-

(1)

There exists a controlled path \((Z,Z')\in {\mathscr {D}}^{2\beta }_X({\mathbb R}^n)\) such that

$$\begin{aligned} Z_t&=\xi +\int _0^t\sigma (Z_s,A(Z)_s)d\textbf{X}_s,\quad Z'_t=\sigma (Z_t,A(Z)_t),\quad 0\le t\le T. \end{aligned}$$(2.20) -

(2)

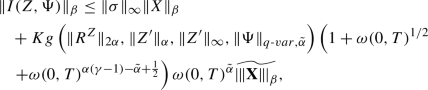

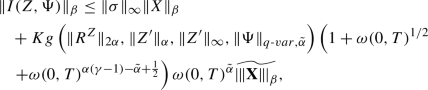

All solutions \((Z,Z')\) of (2.20) satisfy the following estimate: there exist positive constants K and \(\kappa _1,\kappa _2,\kappa _3\) which depend only on \(\sigma , \beta , \gamma \), F such that

(2.21)

(2.21)

We first make some remarks on this theorem and then explain some examples.

Remark 2.8

(1) From now on, we always set \(\gamma >1/\beta \) for \(1/3<\beta \le 1/2\) if there is no further comment.

(2) Let \((Z,Z')\in {\mathscr {D}}^{2\theta }_X({\mathbb R}^n)\) \((1/3<\theta \le \beta )\). Let \(\{\Psi _t\}_{0\le t\le T}\) be a continuous bounded variation path on \({\mathbb R}^n\). Then we can define the integral \(\int _0^t\sigma (Z_s, \Psi _s)d\textbf{X}_s\) in a similar way to the usual rough integral. We denote the derivative of \(\sigma =\sigma (\xi ,\eta )\) \((\xi \in {\mathbb R}^n, \eta \in {\mathbb R}^n)\) with respect to \(\xi \) by \(D_1\sigma \) and \(\eta \) by \(D_2\sigma \). Let

and \(\tilde{\Xi }^{\Psi }_{s,t}=\Xi ^{\Psi }_{s,t} -(D_2\sigma )(Z_s,\Psi _s)\int _s^t\Psi _{s,r}\otimes dX_r\). Let \(\mathcal{P}=\{s=t_0<\cdots <t_N=t\}\) and write \(|\mathcal{P}|=\max _i|t_{i+1}-t_i|\). Then it is easy to check that \(\lim _{|\mathcal{P}|\rightarrow 0}\sum _{i=1}^N \Xi ^{\Psi }_{t_{i-1},t_i}\) converges by the Sewing lemma using (3.8). Actually \(\lim _{|\mathcal{P}|\rightarrow 0}\sum _{i=1}^{N} \tilde{\Xi }^{\Psi }_{t_{i-1},t_i}\) also converges to the same limit value. We denote the limit by \(\int _s^t\sigma (Z_u,\Psi _u)d\textbf{X}_u\). Hence the sum of the term \(\int _s^t\Psi _{s,r}\otimes dX_r\) does not have any effect on the integral. However, we need to consider \(\Xi ^{\Psi }\) instead of \(\tilde{\Xi }^{\Psi }\) to obtain estimates of the integral in Lemma 3.2 which is necessary for the proof of the main theorem.

(3) Let us consider the case \(\sigma (\xi ,\eta )=\tilde{\sigma }(\xi +\eta )\), where \(\tilde{\sigma }\in \textrm{Lip}^{\gamma -1}({\mathbb R}^n,\mathcal {L}({\mathbb R}^d,{\mathbb R}^n))\). Let Y be a continuous path on \({\mathbb R}^n\). Suppose that there exist \((Z, Z')\in {\mathscr {D}}^{2\theta }_X({\mathbb R}^n)\) and a continuous bounded variation path \((\Psi _t)_{0\le t\le T}\) such that \(Y_t=Z_t+\Psi _t\) \((0\le t\le T)\). Clearly, the decomposition of Y into a controlled path part Z and a bounded variation part \(\Psi \) is not unique. We should note that our definition of \(\int _0^t\tilde{\sigma }(Y_s)d\textbf{X}_s\) depends on \(Z'\) and Y. However, under a natural assumption, the Gubinelli derivative \(Z'_t\) is uniquely defined for Y and the integral does not depend on the decomposition \((Z,\Psi )\). We discuss this problem in the Appendix.

(4) Theorem 2.7 implies that the solution \(Z_t\) satisfies the following estimate:

Here G is a certain polynomial function which depends on \(\sigma ,\beta ,\gamma ,F\). Also \(\theta (>1)\) is a positive constant which depends on \(\beta \) and \(\gamma \) (When \(\gamma =3\), \(\theta =3\beta \) holds). Clearly, a path \(Z_t\) which satisfies (2.22) is a solution of (2.20).

(5) Let \(\tilde{\omega }\) be a control function and \(C_i\) be positive constants. Actually, under the assumption that for all \(0\le s\le t\le T\),

we can prove similar results to Theorem 2.7 for \(\beta \)-Hölder rough paths \(\textbf{X}\) with \(\omega (s,t)=|t-s|\) by a proof similar to that of the main theorem. This extension is necessary to treat Example 2.9 (3) and (4). However, we need to change the upper bound function in (2.21). The reason is as follows. The \(\beta \)-Hölder rough path \(\textbf{X}\) can be regarded as a \((\bar{\omega },\beta )\)-Hölder rough path, where \(\bar{\omega }(s,t)=\tilde{\omega }(s,t)+|t-s|\). The proof of the main theorem can be carried out in this setting. The control function \(\omega \) in (2.21) should be changed to this \(\bar{\omega }\), and the quantities in (2.21) defined through \(\omega \) should be changed to the corresponding quantities defined through \(\bar{\omega }\).

(6) If \(A\equiv 0\), uniqueness of solutions holds under the assumption \(\sigma \in \textrm{Lip}^{\gamma }\). However, even if \(A\equiv 0\), uniqueness does not hold in general under \(\sigma \in \textrm{Lip}^{\gamma -1}\). See Davie [9]. Gassiat [23] gave an example showing that uniqueness does not hold for reflected RDEs even if the coefficient is smooth and the domain is just a half space. In contrast, in the one-dimensional case (note that the driving noise is multidimensional), the uniqueness of solutions of reflected RDEs was proved by Deya-Gubinelli-Hofmanová-Tindel in [12]. It may be an interesting problem to find a natural class of solutions for which uniqueness holds, and a non-trivial class of reflected RDEs or, more generally, path-dependent RDEs for which uniqueness holds in an appropriate sense. See also Sect. 5.4 for some examples for which uniqueness holds.

The situation is different if \(\beta >1/2\). Ferrante and Rovira [19] proved the existence of solutions of reflected (Young) ODEs on a half space driven by fractional Brownian motion with Hurst parameter \(H>1/2\). Falkowski and Słomiński [18] proved the Lipschitz continuity of the Skorohod mapping on a half space in the Hölder space and proved uniqueness in that case.

We briefly explain examples. We refer the reader to Sect. 5 for the details.

Example 2.9

(1) Let D be a domain in \({\mathbb R}^n\) satisfying conditions (A) and (B). Consider the Skorohod equation \(y_t=x_t+\phi _t\), where x is a continuous path whose starting point is in \(\bar{D}\). Also \(y_t \in \bar{D}\) \((0\le t\le T)\) and \(\phi _t\) is the bounded variation term. The mapping \(L: x\mapsto \phi \) satisfies Assumption 2.2. Using this result, we can apply the main theorem to reflected rough differential equations.

(2) Let \(f_i\) \((1\le i\le n)\) be Lipschitz functions on \({\mathbb R}^n\) and define

This satisfies Assumption 2.2. Actually this satisfies the stronger conditions \(\mathrm{(Lip)}_{\rho }\) and \(\mathrm{(BV)}_{\rho }\) for certain \(\rho \) in Definition 5.12. See Proposition 5.13 for the proof. Note that even if we replace each \(\max _{0\le s\le t}f_i(x_s)\) by finite products of maximum functions and minimum functions of \(f(x_s)\), Assumption 2.2 holds.

(3) Let \(c_1,\ldots ,c_n\) be \(\beta \)-Hölder continuous paths on \({\mathbb R}^n\) in usual sense. Let f be a Lipschitz map from \({\mathbb R}^n\) to \({\mathbb R}^n\). Let us consider a variant of the example (2) as follows:

This does not satisfy Assumption 2.2 (3). However it holds that

for some positive constant C.

(4) We consider the case \(\omega (s,t)=|t-s|\), that is, usual \(\beta \)-Hölder rough path. Path-dependent functional \(A(x)_t\) which we are mainly concerned with in this paper is a kind of generalization of the maximum process \(\max _{0\le s\le t}x_s\) and the local time term \(L(x)_t\). The maximum process \(\max _{0\le s\le t}|x_s|\) is obtained as the limit of \(\Vert x\Vert _{L^p([0,t])}\) as \(p\rightarrow \infty \). Hence it may be natural to study the case where \(A(x)_t=\Vert x\Vert _{L^p([0,t])}\). Theorem 2.7 cannot be applied to this directly. We will study this example in Sect. 5.4.

(5) Let \(W_t\) be the 1-dimensional standard Brownian motion starting at 0. Let us consider the following equations,

Here a denotes a real number.

Equation (2.25) contains the local time term \(\Phi _t\) at 0. These processes have been studied e.g. in [7, 8, 10, 11, 13, 31, 36]. In Sect. 5.2, we see that a multidimensional version of these equations can be transformed to an equation of the form (2.20). We also give a brief review of the 1-dimensional case there.
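
The bound (2.14) can be illustrated for the running maximum functional of Example 2.9 (2). The following sketch treats only a scalar sampled path and a single Lipschitz function f, which is a simplifying assumption. In that case \(A(x)_t=\max _{0\le r\le t}f(x_r)\) is non-decreasing, so its total variation on [s, t] equals \(A(x)_t-A(x)_s\), and one can check that this is at most \(\textrm{Lip}(f)\sup _{s\le u\le v\le t}|x_{u,v}|\), that is, (2.14) with a constant F. The function names are hypothetical, and the code tests the bound numerically rather than proving it.

```python
import math
import random
from itertools import accumulate


def running_max(values):
    """Discrete version of A(x)_t = max over r <= t of values[r]."""
    return list(accumulate(values, max))


def total_variation(y, s, t):
    """1-variation of the sampled path y on the index window [s, t]."""
    return sum(abs(y[i + 1] - y[i]) for i in range(s, t))


def sup_increment(x, s, t):
    """Largest increment |x_v - x_u| for s <= u <= v <= t (scalar path)."""
    window = x[s:t + 1]
    return max(window) - min(window)


def worst_ratio(x, f, lipschitz):
    """Max over s < t of the 1-variation of A(x) on [s, t] divided by lipschitz * sup increment of x."""
    a = running_max([f(v) for v in x])
    worst = 0.0
    for s in range(len(x)):
        for t in range(s + 1, len(x)):
            bound = lipschitz * sup_increment(x, s, t)
            if bound > 0.0:  # if x is constant on the window, A(x) is constant there too
                worst = max(worst, total_variation(a, s, t) / bound)
    return worst


def random_walk(n, seed):
    """Hypothetical test path: n steps drawn uniformly from [-1, 1]."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n):
        x.append(x[-1] + rng.uniform(-1.0, 1.0))
    return x


if __name__ == "__main__":
    x = random_walk(60, seed=0)
    # f(v) = sin(2v) has Lipschitz constant 2; the printed ratio should not exceed 1.
    print(worst_ratio(x, lambda v: math.sin(2.0 * v), 2.0))
```

Note that the window [s, t] only sees increments of x on [s, t], while \(A(x)_s\) still depends on the path before s, in line with Remark 2.3.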

3 Proof of Theorem 2.7

In the calculation below, we assume \(\gamma \le 3\) as well as \(\gamma >1/\beta \).

If we write \(A(Z)_t=\Psi _t\), then the Eq. (2.20) reads

We solve this equation by using Schauder’s fixed point theorem. First, we give an estimate of the integral \(\int _s^t\Psi _{s,r}\otimes dx_r\) \((0\le s<t\le T)\), where \(x\in \mathcal {C}^{\theta }\), \(\Psi \in \mathcal {C}^{q\text {-}var, \theta '}\) and \(\otimes \) denotes the tensor product. To this end, we introduce some notations. Let \(0\le s\le t\le T\) and consider a mapping F defined on \(\{(u,v)~|~s\le u\le v\le t\}\) with values in a certain vector space. Let \(\mathcal{P}=\{s=t_0<\cdots <t_{N}\le t\}\) be a partition of [s, t]. We write

We use the following estimate.

Lemma 3.1

Let \(x\in \mathcal {C}^{\theta }({\mathbb R}^n)\). Let p be a positive number such that \(\theta p>1\). Let q be a positive number such that \(1/p+1/q\ge 1\) and \(\Psi \in \mathcal {C}^{q\text {-}var, \theta '}({\mathbb R}^n)\). For any \(0\le s<t\le T\), the integral \(\int _s^t\Psi _{s,r}\otimes dx_r\) converges in the sense of Young integral and it holds that

where \(C_{\theta ,q}=2^{\theta +\frac{1}{q}}\zeta \left( \theta +\frac{1}{q}\right) \).

Proof

The assumption implies that x has finite \(1/\theta \)-variation. Moreover, \(\theta +1/q>1\) holds. Hence the Young integral \(\int _s^t\Psi _{s,r}\otimes dx_r\) converges and the following estimate holds:

which completes the proof. \(\square \)

By using this lemma, we will give estimates for the integral \(\int _s^t\sigma (Z_u,\Psi _u)d\textbf{X}_u\). As we mentioned, we denote the derivative of \(\sigma =\sigma (\xi ,\eta )\) \((\xi \in {\mathbb R}^n, \eta \in {\mathbb R}^n)\) with respect to \(\xi \) by \(D_1\sigma \) and \(\eta \) by \(D_2\sigma \). Also we write \(D\sigma (\xi ,\eta )(u,v)=D_1\sigma (\xi ,\eta )u+D_2\sigma (\xi ,\eta )v\). We write \(Y_t=(Z_t,\Psi _t)\in {\mathbb R}^n\times {\mathbb R}^n\). Let \((Z,Z')\in {\mathscr {D}}^{2\alpha }_X({\mathbb R}^n)\) and \(\Psi \in \mathcal {C}^{q\text {-}var,\tilde{\alpha }}({\mathbb R}^n)\).

Until the end of this section, we choose and fix \(p>0\) such that \(1/\beta<p<\gamma \). For this p, we assume \(q,\alpha ,\tilde{\alpha }\) satisfy the following condition.

As we explained, we consider

By a simple calculation, we have for \(s<u<t\),

Thus, under the assumption on \(Z, \Psi \), applying Lemma 3.1 to the case \(\theta =\beta \), \(\theta '=\tilde{\alpha }\) and \((a+b+c)^{\gamma -2}\le 3^{\gamma -2}(a^{\gamma -2}+b^{\gamma -2}+c^{\gamma -2})\), we obtain

where \(C=3^{\gamma -2}(2+C_{\beta ,q})\). Therefore, there exists a positive constant C which depends only on \(\gamma ,\beta ,p\) such that

where

Let \(\mathcal{P}=\{t_k\}_{k=0}^N\) be a partition of [s, t]. Since \(\beta +(\gamma -1)\alpha>\beta +p\alpha -\alpha \ge p\alpha >1\), by the Sewing lemma (see e.g. [20, 22, 29]), the following limit exists,

We may denote \(I\left( (Z,Z'),\Psi \right) \) by

simply if there is no confusion. This integral satisfies the additivity property

The pair \(\left( I(Z,\Psi ), \sigma (Y_t)\right) \) is actually a controlled path of X. In fact, we have the following estimates.

Lemma 3.2

Assume \((Z,Z')\in {\mathscr {D}}^{2\alpha }_X({\mathbb R}^n)\) and \(\Psi \in \mathcal {C}^{q\text {-}var,\tilde{\alpha }}({\mathbb R}^n)\) and \(q, \alpha , \tilde{\alpha }\) satisfy (3.4). For any \(0\le s\le t\le T\), we have the following estimates. The constant K below depends only on \(\Vert \sigma \Vert _{\infty }\), \(\Vert D\sigma \Vert _{\infty }, \Vert D\sigma \Vert _{\gamma -2}, \alpha ,\beta , p,\gamma \) and may change line by line.

-

(1)

$$\begin{aligned} |\Xi _{s,t}|&\le \Bigl \{\Vert \sigma \Vert _{\infty }\Vert X\Vert _{\beta } +\Vert D\sigma \Vert _{\infty }\Vert Z'\Vert _{\infty } \Vert {\mathbb X}\Vert _{2\beta }\omega (s,t)^{\beta }\nonumber \\&\qquad +C_{\beta ,q} \Vert D\sigma \Vert _{\infty } \Vert \Psi \Vert _{q\text {-}var,\tilde{\alpha }} \Vert X\Vert _{\beta }\omega (s,t)^{\tilde{\alpha }}\Bigr \}\omega (s,t)^{\beta }. \end{aligned}$$(3.14)

-

(2)

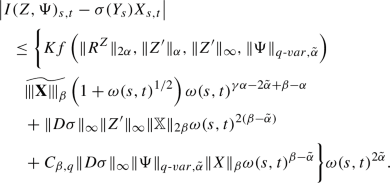

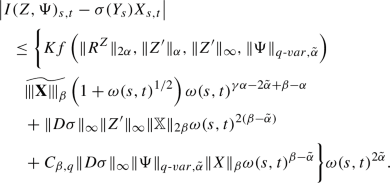

(3.15)

(3.15)and

(3.16)

(3.16)where

$$\begin{aligned} f(a,b,c,d)&=a+b+(a^{\gamma -2}+c^{\gamma -2}+d^{\gamma -2})(c+d), \end{aligned}$$(3.17)$$\begin{aligned} g(a,b,c,d)&= f(a,b,c,d)+ c+d. \end{aligned}$$(3.18) -

(3)

(3.19)

(3.19) -

(4)

$$\begin{aligned}&{|\sigma (Y_t)-\sigma (Y_s)|}\nonumber \\&\le \Vert D\sigma \Vert _{\infty } \left\{ \Vert Z'\Vert _{\infty } \Vert X\Vert _{\beta } \omega (s,t)^{\beta -\tilde{\alpha }}+ \Vert R^Z\Vert _{2\alpha }\omega (s,t)^{2\alpha -\tilde{\alpha }} \right. \nonumber \\&\quad \left. +\Vert \Psi \Vert _{q\text {-}var,\tilde{\alpha }}\right\} \omega (s,t)^{\tilde{\alpha }}. \end{aligned}$$(3.20)

-

(5)

\((I(Z,\Psi ),\sigma (Z,\Psi ))\in {\mathscr {D}}^{2\tilde{\alpha }}_X\) holds.

Remark 3.3

(1) Under the condition (3.4), \((\gamma -1)\alpha +\beta >1\) holds as we noted.

(2) If \(\Psi \in \mathcal{C}^{1\text {-}var,\beta }\), then \(I(Z,\Psi )\in {\mathscr {D}}^{2\beta }_X\).

(3) We give estimates of paths on [0, T] in Lemma 3.2. However, similar estimates hold on a small interval \([0,\tau ]\) \((0<\tau <T)\) by replacing the norms and \(\omega (0,T)\) in Lemma 3.2 by the norms on \([0,\tau ]\) and \(\omega (0,\tau )\).

(4) Let \(1/3<\tilde{\beta }<\beta \). Then \(\textbf{X}\) can be regarded as a \(1/\tilde{\beta }\)-rough path. It is easy to check that Lemma 3.2 still holds under the condition (3.4) with \(\beta \) replaced by \(\tilde{\beta }\). Suppose \(\omega (0,T)\le 1\). Then \(\Vert X\Vert _{\tilde{\beta }}\le \Vert X\Vert _{\beta }\) and \(\Vert {\mathbb X}\Vert _{2\tilde{\beta }}\le \Vert {\mathbb X}\Vert _{2\beta }\) hold. We use these results to prove the a priori estimate in Theorem 2.7.
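The comparison of norms in Remark 3.3 (4) can be checked numerically in the simplest setting. The following is a minimal sketch, assuming the control \(\omega (s,t)=t-s\) on [0, 1] (so that \(\omega (0,T)\le 1\)) and a sampled scalar path. The helper `holder_seminorm`, the sample path and the exponents are illustrative assumptions and not part of the paper.

```python
import math
import random


def holder_seminorm(path, times, theta):
    """Discrete theta-Hoelder seminorm: max |x_t - x_s| / (t - s)**theta over grid pairs."""
    best = 0.0
    n = len(path)
    for i in range(n):
        for j in range(i + 1, n):
            ratio = abs(path[j] - path[i]) / (times[j] - times[i]) ** theta
            best = max(best, ratio)
    return best


# Hypothetical sample path: a scaled random walk on [0, 1], so omega(0, T) = 1.
random.seed(0)
n_steps = 200
times = [k / n_steps for k in range(n_steps + 1)]
path = [0.0]
for _ in range(n_steps):
    path.append(path[-1] + random.gauss(0.0, math.sqrt(1.0 / n_steps)))

beta, beta_tilde = 0.45, 0.35  # assumed exponents with 1/3 < beta_tilde < beta <= 1/2
norm_beta = holder_seminorm(path, times, beta)
norm_beta_tilde = holder_seminorm(path, times, beta_tilde)
# Since t - s <= 1, (t - s)**beta <= (t - s)**beta_tilde for every pair of grid points.
print(norm_beta_tilde <= norm_beta)
```

The comparison relies only on \(t-s\le 1\); on an interval with \(\omega (0,T)>1\) the inequality can fail, which is why the remark restricts to \(\omega (0,T)\le 1\).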

Proof

(1) This follows from the explicit form of (3.5) and Lemma 3.1.

(2) This follows from (3.8) and the Sewing lemma.

(3) This follows from (2) and Lemma 3.1.

(4) This follows from the definition of \(Y_t\).

(5) This follows from (3) and (4) and \(2\alpha \ge \tilde{\alpha }\). \(\square \)
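The limit of sums of \(\Xi \) along refining partitions, which the sewing lemma provides in the construction of \(I(Z,\Psi )\), can be illustrated in a smooth scalar toy case. The sketch below rests on several assumptions that are not from the paper: the driver is \(x_t=\sin t\), the integrand is \(\cos \), and the germ is only the first-order term \(\Xi _{s,t}=\cos (x_s)(x_t-x_s)\) without second-level or \(\Psi \) terms, so the limit is the classical integral \(\sin (\sin t)-\sin (\sin s)\).

```python
import math


def germ(s, t):
    """Toy germ Xi_{s,t} = f(x_s) * (x_t - x_s) with x = sin and f = cos (assumed example)."""
    return math.cos(math.sin(s)) * (math.sin(t) - math.sin(s))


def dyadic_sum(s, t, level):
    """Sum of the germ over the dyadic partition t_k = s + k (t - s) / 2**level."""
    n = 2 ** level
    h = (t - s) / n
    return sum(germ(s + k * h, s + (k + 1) * h) for k in range(n))


def exact(s, t):
    """Classical value of the integral of cos(x_u) dx_u over [s, t]."""
    return math.sin(math.sin(t)) - math.sin(math.sin(s))


# Errors of the dyadic sums on [0, 1] for increasing refinement levels.
errors = [abs(dyadic_sum(0.0, 1.0, level) - exact(0.0, 1.0)) for level in range(2, 12)]

# Additivity at a fixed mesh: the two half-interval sums use the same grid points
# as the full sum, so the gap is rounding error only.
fine = 14
additivity_gap = abs(
    dyadic_sum(0.0, 1.0, fine) - dyadic_sum(0.0, 0.5, fine - 1) - dyadic_sum(0.5, 1.0, fine - 1)
)
print(errors[-1], additivity_gap)
```

In this smooth case the convergence is elementary. The point of the sewing lemma is that the same limit exists for rough drivers once \(\delta \Xi \) is controlled by a power of \(\omega \) larger than 1.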

We consider the product Banach space \( {\mathscr {D}}^{2\theta _1}_{X}\times \mathcal {C}^{q\text {-}var,\theta _2}, \) where \(1/3<\theta _1\le 1/2\) and \(0<\theta _2\le 1\). The norm is defined by

Let \(\xi \) be the starting point of Z and let \(\eta =A(x)_0\in {\mathbb R}^n\). Note that \(\eta \) is independent of x. Let

A solution of the RDE is obtained as a fixed point of the mapping,

We prove a continuity property of \(\mathcal{M}\).

Lemma 3.4

(Continuity) Assume

where \(\beta _0\) is the number in Assumption 2.2. Then \(\mathcal{M}\) is continuous.

We already proved the compactness of the inclusion \(\mathcal {C}^{q'\text {-}var,\theta '}\subset \mathcal {C}^{q\text {-}var,\theta }\), where \(1\le q'<q,\, q\theta \le q'\theta '\). We need the following compactness result also.

Lemma 3.5

Let \(\frac{1}{3}<\theta <\theta '\le \frac{1}{2}\). Then \({\mathscr {D}}_X^{2\theta '}\subset {\mathscr {D}}^{2\theta }_X\) and the inclusion is compact.

Proof of Lemma 3.5

Suppose

This implies \(\{Z(n)'\}\) is bounded and equicontinuous. Since \(Z(n)_t-Z(n)_s=Z(n)'_sX_{s,t}+R^{Z(n)}_{s,t}\), \(\{Z(n)\}\) is also bounded and equicontinuous. Hence, by the Arzelà-Ascoli theorem, a certain subsequence \(\{Z(n_k), Z(n_k)'\}\) converges uniformly, and so does \(\{R^{Z(n_k)}\}\). Combined with the uniform bound in \({\mathscr {D}}^{2\theta '}_X\) and \(\theta <\theta '\), this implies \(\{(Z(n_k)',R^{Z(n_k)})\}\) converges in \({\mathscr {D}}^{2\theta }_X\). \(\square \)

Proof of Lemma 3.4

First note that

\( (\xi +I(Z,\Psi ),\sigma (Y))\in \mathscr {D}^{2\alpha }_X \) follows from Lemma 3.2. By Assumption 2.2, we have

which shows

Thus we have proved (3.26). We estimate \(\Vert I(Z,\Psi )'-I(\tilde{Z},\tilde{\Psi })'\Vert _{\alpha }\). We have

Since \(\beta>\tilde{\alpha }>\alpha \), this shows the continuity of the mapping \(((Z,Z'),\Psi )\mapsto I(Z,\Psi )'\).

We next estimate \(\Vert R^{I(Z,\Psi )}-R^{I(\tilde{Z},\tilde{\Psi })}\Vert _{2\alpha }\).

We argue in a similar way to the sewing lemma for the estimate of the first term. Let \(\mathcal{P}_N=\{t^N_k=s+\frac{k(t-s)}{2^N}\}\) be a usual dyadic partition of [s, t]. We have

By (3.15),

Hence this term is small in the \(\omega \)-Hölder space \(\mathcal {C}^{2\alpha }\) on a bounded set of \(\mathcal {W}_{T,\alpha ,\tilde{\alpha },q,\xi ,\eta }\) if N is large. We fix a partition so that this term is small. Although the number of partition points may be large,

is a finite sum, and by the explicit form of \(\delta \Xi \) as in (3.6), we see that this difference is small in \(\mathcal {C}^{2\alpha }\) if \(((Z,Z'), \Psi )\) and \(((\tilde{Z},\tilde{Z}'), \tilde{\Psi })\) are sufficiently close in \(\mathcal {W}_{T,\alpha ,\tilde{\alpha },q,\xi ,\eta }\). The estimates of the second and third terms are similar to the above, and we obtain the continuity of the mapping

We next prove the continuity of the mapping

Since we choose \(\beta _0\le \alpha \), it suffices to apply Lemma 2.5 (2) to the case where \(x=\xi +I(Z,\Psi )\) and \(x'=\xi +I(\tilde{Z},\tilde{\Psi })\) because of Lemma 3.2 (2) and the continuity (3.33). \(\square \)

By using the above lemmas, we prove the existence of solutions on a small interval \([0,T']\). Since the interval can be chosen independently of the initial condition, we obtain the global existence of solutions and the estimate for solutions. We consider balls with radius 1 centered at \(\left( (\xi +\sigma (\xi ,\eta )X_{t}, \sigma (\xi ,\eta )), \eta \right) \) \((0\le t\le T')\),

Lemma 3.6

(Invariance and compactness) Assume (3.24) and let \(\alpha<\underline{\alpha }<\tilde{\alpha }<\overline{\alpha }<\beta \). Also we choose \(q'>1\) such that \(\displaystyle {\frac{\tilde{\alpha }}{\overline{\alpha }}q<q'<q}\).

-

(1)

For sufficiently small \(T'\), we have

$$\begin{aligned} \mathcal{M}(\mathcal {B}_{T',\alpha ,\tilde{\alpha },q})\subset \mathcal {B}_{T',\underline{\alpha },\overline{\alpha },q'}\subset \mathcal {B}_{T',\alpha ,\tilde{\alpha },q}. \end{aligned}$$(3.36)

Moreover, \(T'\) does not depend on \(\xi \).

-

(2)

\(\mathcal {B}_{T',\underline{\alpha },\overline{\alpha },q'}\) is a compact subset of \(\mathcal {B}_{T',\alpha ,\tilde{\alpha },q}\).

Proof

(1) The second inclusion is immediate from \(\omega (0,T')\le 1\) and the definition of the norms. We prove the first inclusion. Let \(((Z,Z'),\Psi )\in \mathcal {B}_{T',\alpha ,\tilde{\alpha },q}\). Recall that \(I(Z,\Psi )'_t=\sigma (Z_t,\Psi _t)\) and note that \(\Vert Z'\Vert _{\infty ,[0,T']}\le \Vert \sigma \Vert _{\infty } +\Vert Z'\Vert _{\alpha }\omega (0,T')^{\alpha }\). From Lemma 3.2 (4), we have

We next estimate \(R^{I(Z,\Psi )}\). Let \(0<s<t<T'\). By Lemma 3.2 (3), we have

We turn to the estimate of \(A(\xi +I(Z,\Psi ))\). By (3.27) and Lemma 3.2 (2), we have

Thus, noting Lemma 2.5 (1), there exist a positive number \(K'\) which depends on K, \(\Vert \sigma \Vert _{\infty }\), \(\Vert D\sigma \Vert _{\infty }\), f, g and a positive number \(\kappa _0\) which depends on \(\beta -\underline{\alpha }\) and \(\tilde{\alpha }-\underline{\alpha }\) such that if  , then \( \mathcal{M}(\mathcal {B}_{T',\alpha ,\tilde{\alpha },q})\subset \mathcal {B}_{T',\underline{\alpha },\overline{\alpha },q'} \) holds. This completes the proof.

(2) This follows from Lemma 2.1 (2) and Lemma 3.5. \(\square \)

We are in a position to prove our main theorem.

Proof of Theorem 2.7

(1) Let us take \(\alpha , \tilde{\alpha }, p, q, \underline{\alpha }, \overline{\alpha }\) as in Lemma 3.6. By Lemma 3.4 and Lemma 3.6, applying Schauder’s fixed point theorem, we obtain a fixed point on a small interval \([0,T']\) if  , where K is a certain positive constant. That is, there exists a solution on \([0,T']\). We now consider the equation on \([T',T]\). We can rewrite the equation as

Let \(\omega _{T'}(s,t)=\omega (T'+s,T'+t)\) for \(0\le s<t\le T-T'\). We see that \(\tilde{Z}_t:=Z_{T'+t}\) and \(\tilde{\Psi }_{t}:=\Psi _{T'+t}\) \((0\le t\le T-T')\) is a solution to

where

Note that we already defined \( \tilde{A}_{y,T'}(x)_t\) \((0\le t\le T-T')\) \((x\in \mathcal {C}^{\beta }([0,T-T'],{\mathbb R}^n ~|~ x_0=Z_{T'}, \omega _{T'}))\) in Lemma 2.4 (4).

Thanks to Lemma 2.4, we can repeat the argument used on \([0,T']\) on the next small interval. By iterating this procedure finitely many times, say N times, we obtain a controlled path \((Z_t,Z_t')\) \((0\le t\le T)\). This is a solution to (2.20). Clearly,

We need to show \((Z,\Psi )\in \mathcal {W}_{T,\beta ,\beta ,1,\xi ,\eta }\) and its estimate with respect to the norm \(\Vert \cdot \Vert _{\beta }\). We give the estimate of the solution on \([0,T']\). The solution \((Z,Z')\) which we obtained satisfies

Let \(0\le u\le v\le T'\). From (3.42), (3.16) and (3.1), we have

Second, by (2.14) and (3.43), we have

Therefore Z and A(Z) are \((\omega ,\beta )\)-Hölder continuous paths. Hence, we have

Moreover, we can apply Lemma 3.2 to Z and \(\Phi =A(Z)\) in the case where \(\alpha =\tilde{\alpha }=\beta \) and \(q=1\). Thus, by substituting the estimates (3.43) and (3.44) into (3.19), we obtain for \(0\le u\le v\le T'\),

These local estimates hold on other small intervals. By the estimate (3.41), we obtain the desired estimate.


(2) Let \((Z,Z')\in \mathscr {D}^{2\beta }_X({\mathbb R}^n)\) be a solution of (2.20). Let \(\beta _0<\tilde{\beta }<\beta \). The constants \(K, \kappa _1,\kappa _2,\kappa _3\) which will appear in the calculation below depend only on \(\sigma \) and F and may change from line to line. As we already noted in Remark 3.3 (4), Lemma 3.2 still holds with \(\beta \) replaced by \(\tilde{\beta }\). We take \(0<\tau \le T\) so that \(\omega (0,\tau )\le 1\). Using \(\Vert X\Vert _{\tilde{\beta },[0,\tau ]}\le \Vert X\Vert _{\beta ,[0,\tau ]}\) and \(\Vert {\mathbb X}\Vert _{\tilde{\beta },[0,\tau ]}\le \Vert {\mathbb X}\Vert _{\beta ,[0,\tau ]}\), which follow from \(\omega (0,\tau )\le 1\), we have

By Lemma 2.5 (1), we have

which implies

By (3.45) and (3.47), we obtain

We apply Lemma 3.2 (3) to the estimate of \(R^Z\) in the case where \(\Psi =A(Z)\), \(q=1\) and \(\alpha =\tilde{\alpha }=\tilde{\beta }\). By combining the estimates obtained above, we see that there exist \(\kappa _1>0, \kappa _2>1,\kappa _3>0\) and \(K>0\) which can be taken independent of \(\tilde{\beta }\) such that

Let \( z_{\tilde{\beta },\tau }=\Vert Z'\Vert _{\tilde{\beta },[0,\tau ]}+\Vert R^Z\Vert _{2\tilde{\beta },[0,\tau ]}. \) Then using (3.48) and (3.49), we see that there exist (possibly different) \(\kappa _1\ge 1, \kappa _2>1, \kappa _3>0, K>0\) which can be taken independent of \(\tilde{\beta }\) such that

Since \(\tilde{\beta }<\beta \), the function \(\tau \mapsto z_{\tilde{\beta },\tau }\) \((0\le \tau \le 1)\) is an increasing continuous function and \(\lim _{\tau \rightarrow +0}z_{\tilde{\beta }, \tau }=0\). If  , then by the definition, \(Z_t=\xi \) for all \(0\le t\le T\) and \(\Vert Z'\Vert _{\beta }=\Vert R^Z\Vert _{2\beta }=0\) hold. The desired estimate holds. So we assume  . We now define

There are two cases \(\tau _{1}=T\) and \(\tau _{1}<T\). Suppose \(\tau _{1}=T\). Then  holds. If this is not the case,  holds. Hence by the inequality (3.50), we get

After establishing this estimate, we proceed in a similar way to the argument in the proof of (1), replacing \(T'\) by \(\tau _1\). In this way, we obtain an increasing time sequence \( 0=\tau _0<\tau _1<\cdots<\tau _{N-1}<\tau _N=T \) \((N\ge 2)\) such that the estimate (3.51) holds for \(\omega (\tau _{i-1},\tau _i)\) \((1\le i\le N-1)\). Also we have

By using \(\sum _{i=1}^{N-1}\omega (\tau _{i-1},\tau _i)\le \omega (0,T)\), we get the estimate of N as follows.

Using (3.52), (3.53), (3.54) and simple estimates

we obtain

Since \(\tilde{\beta }<\beta \) and \(\Vert Z'\Vert _{\beta , [0,T]}+\Vert R^Z\Vert _{2\beta , [0,T]}<\infty \), taking the limit \(\tilde{\beta }\uparrow \beta \), this estimate holds for the norms \(\Vert \cdot \Vert _{\beta }\) and \(\Vert \cdot \Vert _{2\beta }\) as well. The estimates of Z and A(Z) follow from this estimate and the estimates similar to (3.45) and (3.47). This completes the proof. \(\square \)

4 A Continuity Property of the Solution Mapping

In this section, we consider the case where \(\omega (s,t)=|t-s|\). That is, we consider usual Hölder rough paths. Also let us denote the set of \(\beta \)-Hölder geometric rough paths (\(1/3<\beta \le 1/2\)) by \(\mathscr {C}^{\beta }_g({\mathbb R}^d)\) which is the closure of the set of smooth rough paths in the topology of \(\mathscr {C}^{\beta }({\mathbb R}^d)\). In this paper, smooth rough path means the rough path \(\textbf{h}\) defined by a Lipschitz path \(h\in \mathcal {C}^1\) and its iterated integral \(\bar{h}^2_{s,t}= \int _s^t(h_u-h_s)\otimes dh_u\). We identify \(\textbf{h}\) and the Lipschitz path h. Also we denote the set of smooth rough paths by \(\mathscr {C}_{\textrm{Lip}}({\mathbb R}^d)\).
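The second level \(\bar{h}^2_{s,t}=\int _s^t(h_u-h_s)\otimes dh_u\) of a smooth rough path can be computed by hand for a simple driver. The following is a minimal sketch under an assumption not made in the paper: the path is piecewise linear (hence Lipschitz, though not \(\mathcal {C}^1\)) and given by a hypothetical list `nodes`, so the iterated integral is exact segment by segment. It checks Chen's relation \(\bar{h}^2_{s,t}=\bar{h}^2_{s,u}+\bar{h}^2_{u,t}+h_{s,u}\otimes h_{u,t}\), which every rough path satisfies.

```python
def increment(path, i, j):
    """First level h_{s,t} = h_t - h_s between node indices i <= j."""
    return [b - a for a, b in zip(path[i], path[j])]


def second_level(path, i, j):
    """Integral of (h_u - h_s) (x) dh_u over [t_i, t_j] for a piecewise-linear path.

    On a linear segment with increment d the contribution is
    (h_start - h_s) (x) d + (1/2) d (x) d, which is exact for linear interpolation.
    """
    dim = len(path[0])
    total = [[0.0] * dim for _ in range(dim)]
    for k in range(i, j):
        d = increment(path, k, k + 1)
        base = increment(path, i, k)
        for a in range(dim):
            for b in range(dim):
                total[a][b] += base[a] * d[b] + 0.5 * d[a] * d[b]
    return total


def chen_defect(path, i, m, j):
    """Largest entry of h2_{s,t} - h2_{s,u} - h2_{u,t} - h_{s,u} (x) h_{u,t}."""
    full = second_level(path, i, j)
    left = second_level(path, i, m)
    right = second_level(path, m, j)
    x_su = increment(path, i, m)
    x_ut = increment(path, m, j)
    dim = len(path[0])
    return max(
        abs(full[a][b] - left[a][b] - right[a][b] - x_su[a] * x_ut[b])
        for a in range(dim)
        for b in range(dim)
    )


# Hypothetical two-dimensional Lipschitz path given by its nodes.
nodes = [[0.0, 0.0], [1.0, 0.5], [1.5, -0.5], [0.5, 1.0], [2.0, 2.0]]
print(chen_defect(nodes, 0, 2, 4))
```

For such a path the symmetric part of `second_level` equals half of the tensor square of the increment, which is the geometric property inherited by limits of smooth rough paths.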

Let \(Z(\textbf{h})\) be a solution to (2.20) for \(\textbf{X}=\textbf{h}\). Then \(Z(\textbf{h})\) is a solution to the usual integral equation

As already explained, we cannot expect the uniqueness of the solution of the RDEs in our setting driven by a general rough path \(\textbf{X}\). However, uniqueness holds in many cases when the driving rough path is a smooth rough path and \(\sigma \) is sufficiently smooth. If the solution to the ODE (4.1) is unique, then \(Z(\textbf{h})\) is uniquely defined and \((Z(\textbf{h}),R^{Z(\textbf{h})},A(Z(\textbf{h})))\) satisfies the same estimate as in Theorem 2.7. We use the notation \(Z(h)_t\) instead of \(Z(\textbf{h})_t\) in this case.

We denote the set of solutions \((Z,Z')\) of our RDE (2.20) by \(Sol(\textbf{X})\). We prove a certain continuity property of the multivalued mapping \(\textbf{X}\mapsto Z(\textbf{X})\in Sol(\textbf{X})\) at a rough path \(\textbf{X}\) for which the solution is unique. Thus, this multivalued map is continuous in such a sense at any smooth rough path if the uniqueness holds on the set of smooth rough paths.

We write \(\mathcal {C}^{\theta -}=\cap _{0<\varepsilon<\theta }\mathcal {C}^{\theta -\varepsilon }, \mathcal {C}^{1+\text {-}var,\theta -}=\cap _{q>1,0<\varepsilon <\theta }\mathcal {C}^{q\text {-}var,\theta -\varepsilon }\). Clearly, these spaces are Fréchet spaces with the naturally defined semi-norms. Also note that \(Z(\textbf{X})\in \mathcal {C}^{\beta -}([0,T],{\mathbb R}^n)\).

Lemma 4.1

We consider Eq. (2.20) under the same assumptions on A and \(\sigma \) as in Theorem 2.7. Let \(\textbf{X}\in \mathscr {C}^{\beta }({\mathbb R}^d)\). Let \(\{\textbf{X}_N\}\subset \mathscr {C}^{\beta }({\mathbb R}^d) \) be a sequence such that  . Let us choose solutions \(Z(\textbf{X}_N)\in Sol(\textbf{X}_N)\) \((N=1,2,\ldots )\). Then there exists a subsequence \(N_k\uparrow \infty \) such that the limit \(Z=\lim _{k\rightarrow \infty }Z(\textbf{X}_{N_k})\) exists in \(\mathcal {C}^{\beta -}([0,T], {\mathbb R}^n)\). Further, for such Z, \((Z,\sigma (Z,A(Z)))\in Sol(\textbf{X})\) and \( \lim _{k\rightarrow \infty }\left\| R^{Z(\textbf{X}_{N_k})}-R^{Z}\right\| _{2\beta -} =0 \) hold.

Proof

By the estimate in Theorem 2.7 (2), we can choose \(\{N_k\}\) such that \(Z(\textbf{X}_{N_k}) \) and \( A(Z(\textbf{X}_{N_k}))\) converge in \(\mathcal {C}^{\beta -}\) and \(\mathcal {C}^{1+\text {-}var, \beta -}\) respectively. This implies \(\lim _{k\rightarrow \infty }\int _s^tA(Z(\textbf{X}_{N_k}))_{s,r} dX_{N_k}(r) =\int _s^tA(Z(\textbf{X}))_{s,r}dX_r\) which shows the limit Z satisfies the inequality (2.22).

This proves \((Z,\sigma (Z,A(Z)))\in Sol(\textbf{X})\). We have

Hence \(\lim _{k\rightarrow \infty }R^{Z(\textbf{X}_{N_k})}_{s,t}=R^{Z(\textbf{X})}_{s,t}\) for all (s, t). Combining this with the uniform \((\omega ,2\beta )\)-Hölder estimates of these remainders completes the proof. \(\square \)

The following proposition follows from the above lemma.

Proposition 4.2

We consider Eq. (2.20) under the same assumptions on A and \(\sigma \) as in Theorem 2.7. Assume the solution of (2.20) is unique for the rough path \(\textbf{X}_0\in \mathscr {C}^{\beta }({\mathbb R}^d)\). Then the multivalued mapping \(\textbf{X} (\in {\mathscr {C}}^{\beta }({\mathbb R}^d))\rightarrow Sol(\textbf{X})\) is continuous at \(\textbf{X}_0\) in the following sense. For any \(\varepsilon >0\), there exists \(\delta >0\) such that for any \(\textbf{X}\) satisfying  and any \(Z(\textbf{X})\in Sol(\textbf{X})\), it holds that

Remark 4.3

Let \(\textbf{X}\in \mathscr {C}^{\beta }_g({\mathbb R}^d)\). For any sequence \(\{\textbf{h}_N\}\subset \mathscr {C}_{\textrm{Lip}}({\mathbb R}^d)\) satisfying  , any accumulation point of \(\{Z(h_{N})\}\) belongs to \(Sol(\textbf{X})\). The set \(Sol_{\infty }(\textbf{X})\) consisting of all such accumulation points is a subset of \(Sol(\textbf{X})\) and may be a natural class of solutions. However, \(Sol_{\infty }(\textbf{X})=Sol(\textbf{X})\) may hold.

By a similar argument to the proof of Theorem 4.9 in [2], we can prove the existence of a universally measurable selection mapping of solutions as follows.

Proposition 4.4

We consider the Eqs. (3.1) and (3.2) and assume the same assumption on A and \(\sigma \) in Theorem 2.7. Then there exists a universally measurable mapping

which satisfies the following.

-

(1)

\(\left( Z(\textbf{X}),\sigma (Y(\textbf{X}))\right) \in {\mathscr {D}}^{2\beta }_X({\mathbb R}^n)\) and \(\Bigl ( \left( Z(\textbf{X}),\sigma (Y(\textbf{X}))\right) , \Psi (\textbf{X}) \Bigr )\) is a solution in Theorem 2.7 and satisfies the estimate in (5.13).

-

(2)

There exists a sequence of Lipschitz paths \(h_N\) such that

and \(\mathcal{I}(\textbf{h}_N)\) converges to \(\mathcal{I}(\textbf{X})\) in \(\mathcal {C}^{\beta -}({\mathbb R}^n)\times \mathcal {C}^{\beta -}(\mathcal{L}({\mathbb R}^d,{\mathbb R}^n))\times \mathcal {C}^{1\text {-}var+,\beta -}({\mathbb R}^d)\).


Proof

Below, we omit writing \(\xi \). We consider the product space,

and its subset

Let \(\bar{E}_0\) be the closure of \(E_0\) in E. Then \(\bar{E}_0\) is a separable closed subset of E. The separability follows from the continuity of the mapping \(h\mapsto \left( (Z(h), \sigma (Y(h))), \Psi (h)\right) \). Note that \(Sol_{\infty }(\textbf{X})\) coincides with the projection of the subset of \(\bar{E}_0\) whose first component is \(\textbf{X}\). Hence by the measurable selection theorem (see Theorem 13.2.7 in [15]), there exists a universally measurable mapping \(\mathcal{I}: {\mathscr {C}}^{\beta }_g({\mathbb R}^d)\rightarrow E \) such that \(\mathcal{I}(\textbf{X})\in \left\{ \textbf{X}\right\} \times Sol_{\infty }(\textbf{X})\). This mapping satisfies the required properties in (1) and (2). \(\square \)

Remark 4.5

It is not clear whether we can obtain an adapted measurable solution mapping \(\mathcal {I}\).

5 Examples

5.1 Reflected Rough Differential Equations

Let D be a connected domain in \({\mathbb R}^n\). As in [27, 34], we consider the following conditions (A), (B) on the boundary. See also [35].

Definition 5.1

We write \(B(z,r)=\{y\in {\mathbb R}^n\,|\, |y-z|<r\}\), where \(z\in {\mathbb R}^n\), \(r>0\). The set \(\mathcal{N}_x\) of inward unit normal vectors at the boundary point \(x\in \partial D\) is defined by

-

(A)

There exists a constant \(r_0>0\) such that

$$\begin{aligned} \mathcal{N}_x=\mathcal{N}_{x,r_0}\ne \emptyset \quad \text{ for } \text{ any }~x\in \partial D. \end{aligned}$$

-

(B)

There exist constants \(\delta >0\) and \(0<\delta '\le 1\) satisfying: for any \(x\in \partial D\) there exists a unit vector \(l_x\) such that

$$\begin{aligned} (l_x,{\varvec{n}})\ge \delta ' \qquad \text{ for } \text{ any }~{\varvec{n}}\in \cup _{y\in B(x,\delta )\cap \partial D}\mathcal{N}_y. \end{aligned}$$

Let us recall the Skorohod equation. The Skorohod equation associated with a continuous path \(x\in C([0,\infty ), {\mathbb R}^n)\) with \(x_0\in \bar{D}\) is given by

Under the assumptions (A) and (B) on D, the Skorohod equation is uniquely solved. This is due to Saisho [34]. We write \(\Gamma (x)_t=y_t\) and \(L(x)_t=\phi _t\). By the uniqueness, we have the following flow property.

Lemma 5.2

Assume (A) and (B). For any continuous path x on \({\mathbb R}^n\) with \(x_0\in {\bar{D}}\), we have for all \(\tau , s\ge 0\)

where \((\theta _sx)_{\tau }=x_{\tau +s}-x_s\).
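In one dimension the Skorohod map is explicit, which makes the flow property easy to test on sampled paths. The sketch below is an illustration under stated assumptions, none of which come from the paper: the domain is the half-line \(D=(0,\infty )\), the path is sampled on a grid, \(L(x)_t=\max (0,\max _{s\le t}(-x_s))\) is the classical formula for this domain, and the flow property is taken in the form \(\Gamma (x)_{\tau +s}=\Gamma (\Gamma (x)_s+\theta _sx)_{\tau }\), \(L(x)_{\tau +s}=L(x)_s+L(\Gamma (x)_s+\theta _sx)_{\tau }\). The function names and the sample path are hypothetical.

```python
def skorohod_half_line(x):
    """Discrete Skorohod map on D = (0, infinity) for a sampled path x with x[0] >= 0.

    Returns (y, phi) with y = x + phi, y >= 0 and phi nondecreasing,
    using phi_t = max(0, max_{s <= t} (-x_s)).
    """
    y, phi = [], []
    running = 0.0
    for value in x:
        running = max(running, -value)
        phi.append(running)
        y.append(value + running)
    return y, phi


def shifted_path(x, y_s, s):
    """Path tau -> y_s + (theta_s x)_tau = y_s + x_{tau + s} - x_s on the grid."""
    return [y_s + (x[k] - x[s]) for k in range(s, len(x))]


# Hypothetical sampled driving path starting in the closure of D.
x = [0.5, 0.1, -0.4, -0.2, -0.9, 0.3, -1.2, -1.0, 0.4]
y, phi = skorohod_half_line(x)

# Restart the Skorohod problem at grid index s from the reflected position y[s].
s = 3
y_restart, phi_restart = skorohod_half_line(shifted_path(x, y[s], s))
flow_gap_y = max(abs(y[s + k] - y_restart[k]) for k in range(len(y_restart)))
flow_gap_phi = max(abs(phi[s + k] - phi[s] - phi_restart[k]) for k in range(len(phi_restart)))
print(flow_gap_y, flow_gap_phi)
```

Both gaps vanish up to rounding, which reflects that the restarted problem only needs the current reflected position and the future increments of x.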

We obtain the following estimate of L(x).

Lemma 5.3

Assume conditions (A) and (B) hold. Let \(x_t\) be a continuous path of finite q-variation \((q\ge 1)\). Then we have the following estimate.

where C is a positive constant which depends on the constants \(\delta , \delta ', r_0\) in conditions (A) and (B).

Proof

We proved the following estimate in [2, 4] following the argument in [34].

where

By combining this and Lemma 2.5, we complete the proof. \(\square \)

Lemma 5.4

Assume (A) and (B). Consider two Skorohod equations \(y=x+\phi \), \(y'=x'+\phi '\). Then

The estimate (5.10) can be found in Remark 4.1 (i) in [34]. Lemma 5.3 shows that if x is a \((\omega ,\theta )\)-Hölder continuous path, \(L(x)\in \mathcal {C}^{1\text {-}var,\theta }\) holds true. Actually, \(\Vert L(x)\Vert _{1\text {-}var, [s,t]}\) can be estimated by the modulus of continuity of x and \(\Vert x\Vert _{\infty \text {-}var,[s,t]}\). For example, see [34] and the proof of Lemma 2.3 in [4]. Hence, we see that L is a 1/2-Hölder continuous map on \(C([0,\infty ), {\mathbb R}^n)\). Note that \(\Gamma \) is Lipschitz continuous if D is a convex polyhedron ([16]).
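The Lipschitz continuity of \(\Gamma \) for polyhedral domains can be observed directly in the simplest polyhedral case. The following sketch assumes the half-line \(D=(0,\infty )\) with the classical explicit formula for L (an assumption, not the general setting of the paper) and checks on sampled paths that \(\sup _t|L(x)_t-L(x')_t|\le \sup _t|x_t-x'_t|\) and \(\sup _t|\Gamma (x)_t-\Gamma (x')_t|\le 2\sup _t|x_t-x'_t|\). The paths, the perturbation size and the helper names are hypothetical.

```python
import random


def skorohod_half_line(x):
    """Discrete Skorohod map on the half-line: returns (y, phi) with y = x + phi >= 0."""
    y, phi = [], []
    running = 0.0
    for value in x:
        running = max(running, -value)
        phi.append(running)
        y.append(value + running)
    return y, phi


def sup_distance(u, v):
    """Supremum distance between two sampled paths on the same grid."""
    return max(abs(a - b) for a, b in zip(u, v))


random.seed(1)
x = [1.0]
for _ in range(500):
    x.append(x[-1] + random.uniform(-0.2, 0.2))
# Perturbed path with the same starting point (hypothetical perturbation size 0.05).
x_prime = [x[0]] + [v + random.uniform(-0.05, 0.05) for v in x[1:]]

y, phi = skorohod_half_line(x)
y_prime, phi_prime = skorohod_half_line(x_prime)
d_x = sup_distance(x, x_prime)
print(sup_distance(phi, phi_prime) <= d_x, sup_distance(y, y_prime) <= 2.0 * d_x + 1e-12)
```

For general domains satisfying only (A) and (B) no such Lipschitz bound is claimed here; the text above gives 1/2-Hölder continuity of L in that case.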

Let \(\textbf{X}\in \mathscr {C}^{\beta }({\mathbb R}^d)\). We assume D satisfies the conditions (A) and (B). We now consider the reflected RDE:

We need to make clear the definition of the solution \((Y_t)\) of (5.11).

Definition 5.5

We call \(Y_t\) a solution of (5.11) if and only if the following hold:

-

(i)

There exist a \(Z\in \mathscr {D}^{2\beta }([0,T],{\mathbb R}^n)\) and a continuous bounded variation path \(\Phi _t\) such that \(Y_t=Z_t+\Phi _t\) \((0\le t\le T)\).

-

(ii)

\(\Phi _t=L(Z)_t\) \((0\le t\le T)\).

-

(iii)

Z satisfies

$$\begin{aligned} Z_t&=\xi +\int _0^t\sigma (Z_s+L(Z)_s)d\textbf{X}_s,\quad Z_t'=\sigma (Z_t+L(Z)_t) \qquad 0\le t\le T. \end{aligned}$$(5.12)

Note that if Y is a solution in the above sense, Z is uniquely determined by Y and \(\textbf{X}\) since \(Z_t=\xi +\int _0^t\sigma (Y_s)d\textbf{X}_s\) and \(Z'_t=\sigma (Y_t)\) hold. See also Remark 5.7 (1).

By applying Theorem 2.7, we obtain the following result.

Theorem 5.6

Let \(\textbf{X}\in \mathscr {C}^{\beta }({\mathbb R}^d)\). Assume D satisfies conditions (A) and (B).

Let \(\sigma \in \textrm{Lip}^{\gamma -1}({\mathbb R}^n, \mathcal{L}({\mathbb R}^d,{\mathbb R}^n))\) and \(\xi \in \bar{D}\). Then there exist \((Z,Z')\in {\mathscr {D}}^{2\beta }_X({\mathbb R}^n)\) and \(\Phi \in \mathcal {C}^{1\text {-}var,\beta }({\mathbb R}^n)\) with \(\Phi _0=0\) such that \(Y_t=Z_t+\Phi _t\) is a solution of (5.11). Moreover the following estimate holds,

where \(K, \kappa _i\) are constants which depend only on \(\sigma ,\beta ,\gamma , \delta , \delta ', r_0\).

Proof

By applying Theorem 2.7, we have at least one solution Z and the estimate of (5.12). Let \(Y_t=Z_t+L(Z)_t\) and \(\Phi _t=L(Z)_t\). Then this pair is a solution to the original equation. \(\square \)

Remark 5.7

(1) Let \((Y_t,\Phi _t)\) be a solution of (5.11). Then there exists \(\theta >1\) such that

Conversely, suppose

-

(i)

\((Y_t,\Phi _t)\) is a pair of continuous paths satisfying (5.14) and \((\Phi _t)\) is a bounded variation path satisfying \(\Vert \Phi \Vert _{1\text {-}var,[s,t]}\le C\omega (s,t)^{\beta }\) \((0\le s\le t\le T)\).

-

(ii)

\(Y_t\in \bar{D}\) \((0\le t\le T)\).

-

(iii)

\((Y_t,\Phi _t)\) satisfies

$$\begin{aligned} \Phi _t=\int _0^t1_{\partial D}(Y_s){\varvec{n}}(s)d\Vert \Phi \Vert _{1\text {-}var,[0,s]} \quad \quad 0\le t\le T,\quad ({\varvec{n}}(s)\in \mathcal {N}_{Y_s}\quad \text {if }Y_s\in \partial D). \end{aligned}$$

Let \(\Xi _{s,t}=\sigma (Y_s)X_{s,t}+(D\sigma )(Y_s)[\sigma (Y_s)]\mathbb {X}_{s,t}+ (D\sigma )(Y_s)\left( \int _s^t\Phi _{s,u}\otimes dX_u\right) \). Then \(|(\delta \Xi )_{s,u,t}|\le C\omega (s,t)^\theta \) \((0\le s\le u\le t\le T)\) holds and \(Z_{0,t}\in \mathcal {C}^{\beta }([0,T],{\mathbb R}^n; x_0=0)\) exists such that \(|(Z_{0,t}-Z_{0,s})-\Xi _{s,t}|\le C\omega (s,t)^\theta \). Further, by the assumption on \(\Phi \), \((Z_{0,t})\in \mathscr {D}^{2\beta }_X({\mathbb R}^n)\) with \(Z_{0,t}'=\sigma (Y_t)\) and \(Y_t=Y_0+Z_{0,t}+\Phi _t\) holds. Clearly, \(Z_{0,t}=\int _0^t\sigma (Y_s)d\textbf{X}_s\). By the definition of L, we have \(L(Y_0+Z_{0,\cdot })_t=\Phi _t\). Hence, \((Y_t,\Phi _t)\) is a solution of (5.11).

(2) In [2], we consider the following condition (H1) on D:

-

(i)

The condition (A) holds,

-

(ii)

There exists a positive constant C such that for any x, it holds that

$$\begin{aligned} \Vert L(x)\Vert _{1\text {-}var, [s,t]}&\le C\Vert x\Vert _{\infty \text {-}var,[s,t]}. \end{aligned}$$

This condition holds if D is convex and there exists a unit vector \(l\in {\mathbb R}^n\) such that

Under (H1) and \(\sigma \in C^3_b\), we proved the existence of solutions of reflected RDEs driven by \(1/\beta \) rough paths and gave estimates for the solutions in Theorem 4.5 in [2]. We used Euler approximation of the solution modifying Davie’s proof in [9]. In the proof, we need to solve the following implicit Skorohod equation in each step,

where \(0<T'<T\), \(y_t\in \bar{D}\) \((0\le t\le T')\), \(M\in \mathcal {L}({\mathbb R}^n\otimes {\mathbb R}^d,{\mathbb R}^n)\) and \(\Phi _t\) is a continuous bounded variation path. Also \(\eta _t\), \(x_t\) are finite \(1/\beta \)-variation paths which are defined by X and \({\mathbb X}\). If we replace \(\int _0^t\Phi _r\otimes dx_r\) in (5.15) and (5.16) by \(\int _0^tf(\Phi _r)\otimes dx_r\), where f is a bounded Lipschitz map on \({\mathbb R}^n\), then we can solve the equation under the general conditions (A) and (B). To avoid the explosion problem, that is, to handle the linear growth term of \(\Phi _t\), we imposed the stronger assumption (H1)(ii) on D in [2]. Also, we used Lyons’ continuity theorem for rough integrals in the proof, and so we needed to assume \(\sigma \in C^3_b\). In this paper, we adopt a different approach to the problem and obtain an extension of the previous result in the sense that the assumptions on \(\sigma \) and D can be relaxed.

In Sect. 4, we prove a continuity property of solution mappings at Lipschitz paths under the uniqueness of the solutions. For reflected RDEs, we can give a more explicit estimate of the continuity of the solution mapping Y at the Lipschitz paths. As before we consider a domain \(D\subset {\mathbb R}^n\) which satisfies the conditions (A) and (B). Let h be a Lipschitz path on \({\mathbb R}^d\) starting at 0. If \(\sigma \) is Lipschitz continuous, there exists a unique solution \((Y(h,\xi )_t,\Phi (h,\xi )_t)\) to the reflected ODE in the usual sense (see Proposition 4.1 in [4] for example),

We may omit denoting \(h,\xi \). Moreover, \(Z(h)_t=\xi +\int _0^t\sigma (Y_s(h))dh_s\), \(Z_t(h)'=\sigma (Y_t(h))\) and \(\Phi (h)_t\) form the unique solution to the equation in Theorem 5.6 for the smooth rough path \(\textbf{h}_{s,t}=(h_{s,t},\bar{h}^2_{s,t})\) defined by h. Hence the solution \((Z(h),R^{Z(h)},\Phi (h))\) satisfies the estimate (5.13) with the same constants \(C_1, C_2\).
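For a Lipschitz driver the decomposition \(Y=Z+\Phi \) with \(\Phi =L(Z)\) can be made concrete by a simple discretization. The sketch below is a toy illustration and not the method of the paper: it assumes the half-line domain \([0,\infty )\), a scalar driver \(h_t=\sin (6t)-3t\), the coefficient \(\sigma (y)=1+\frac{1}{2}\sin y\), and an explicit projected Euler scheme. All of these choices and the name `reflected_euler` are hypothetical.

```python
import math


def reflected_euler(sigma, h, xi):
    """Projected Euler scheme on the half-line [0, infinity) (an assumed toy domain).

    Returns (Y, Z, Phi) with Y = Z + Phi, where Z accumulates sigma(Y) dh
    and Phi is the nondecreasing push that keeps Y nonnegative.
    """
    y, z, phi = [xi], [xi], [0.0]
    for k in range(len(h) - 1):
        step = sigma(y[-1]) * (h[k + 1] - h[k])
        z_next = z[-1] + step
        # Smallest push, never decreasing, such that z_next + phi_next >= 0.
        phi_next = max(phi[-1], -z_next)
        y.append(z_next + phi_next)
        z.append(z_next)
        phi.append(phi_next)
    return y, z, phi


def sigma(y):
    """Hypothetical bounded Lipschitz coefficient with values in [0.5, 1.5]."""
    return 1.0 + 0.5 * math.sin(y)


n = 1000
grid = [k / n for k in range(n + 1)]
h = [math.sin(6.0 * t) - 3.0 * t for t in grid]  # Lipschitz driver with h_0 = 0
y_path, z_path, phi_path = reflected_euler(sigma, h, xi=0.2)
print(min(y_path), phi_path[-1])
```

By construction `phi_path` equals the one-dimensional Skorohod term of `z_path` on the grid, which mirrors condition (ii) of Definition 5.5 in this toy setting.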

From now on, we will give an explicit estimate for \(Y_t(\xi ,\textbf{X})-Y_t(\eta ,h)\). Let \(\textbf{X}\) be a general (not necessarily geometric) \(\beta \)-Hölder rough path. Let \(\textbf{X}^{-h}_{s,t}\) be the translated rough path of \(\textbf{X}\) by h. That is, the 1st level path and the second level path are given by,

Hence

These imply that if  , then

By the definition of controlled paths, we immediately obtain the following.

Lemma 5.8

Let \(\textbf{X}\in \mathscr {C}^{\beta }_g({\mathbb R}^d)\). Let h be a Lipschitz path. If \((Z,Z')\in \mathscr {D}_X^{2\beta }\), then \((Z,Z')\in \mathscr {D}_{X-h}^{2\beta }\). In fact,

Let \((Z,Z')\in {\mathscr {D}}^{2\alpha }_X({\mathbb R}^n)\) and \(\Phi \in \mathcal {C}^{q\text {-}var,\tilde{\alpha }}({\mathbb R}^n)\) with \(\Phi _0=0\) and \(q, \alpha , \tilde{\alpha }\) satisfy the assumptions in Lemma 3.1. By the above lemma, we can define the integral \(\int _s^t\sigma (Y_u)d\textbf{X}^{-h}_u\) and the estimates in Lemma 3.2 hold for this integral. Here \(Y_u=Z_u+\Phi _u\). Moreover, \(\Xi _{s,t}\) in (3.5) which is defined by \(\textbf{X}^{-h}_{s,t}\) reads

where

Since \(|\tilde{\Xi }_{s,t}|\le C(t-s)^{1+\tilde{\alpha }}\), the sum of these terms converges to 0. Thus we obtain

We now consider the following condition on the boundary.

Definition 5.9

(Condition (C)) There exists a \(Lip^{\gamma }\) function f on \({\mathbb R}^n\) and a positive constant k such that for any \(x\in \partial D\), \(y\in \bar{D}\), \({\textbf{n}}\in \mathcal{N}_x\) it holds that

Usually, the function f is assumed to be \(C^2_b\) in the condition (C). See [27, 34]. Here, we assume \(f\in Lip^{\gamma }\) to make use of the estimates in Lemma 3.2.

Under additional condition (C), we can prove the following explicit modulus of continuity.

Lemma 5.10

Let \(\textbf{X}\in \mathscr {C}^{\beta }_g({\mathbb R}^d)\). Assume that D satisfies the conditions (A), (B), (C) and \(\sigma \in \textrm{Lip}^{\gamma -1}\). Let \(Y_t(\textbf{X},\xi ), Z_t(\textbf{X},\xi ), \Phi _t(\textbf{X},\xi ), Y_t(h,\zeta ), \Phi _t(h,\zeta )\) be a solution as in Lemma 4.1. Assume  . Then there exists a positive constant C which depends only on \(\sigma , r_0, \delta , \delta ', f, k\) such that

Proof

We write \(Y_t=Y_t(\textbf{X},\xi )\), \(\Phi (\textbf{X},\xi )_t=\Phi _t\) and \(\tilde{Y}_t=Y(h,\zeta )_t\), \(\tilde{\Phi }_t=\Phi (h,\zeta )_t\) for simplicity. Let \( Z_t=e^{-\frac{2}{k}\left( f(Y_t)+f(\tilde{Y}_t)\right) } |Y_t-\tilde{Y}_t|^2. \) We have

Condition (C) implies that the fourth integral on the right-hand side of the Eq. (5.30) is always negative. By the estimates of the solution \(Y, \tilde{Y}, \Phi , \tilde{\Phi }\) in Theorem 5.6 and the estimates in Lemma 3.2 and the Gronwall inequality, we obtain the desired estimate. \(\square \)

5.2 Perturbed Reflected SDEs: A Short Review

Let us recall basic results for the following equation driven by a continuous path \(x_t\) on \({\mathbb R}\),

When \(x_t\) is a sample path of a standard Brownian motion, the solutions to (5.31) and (5.32) are called (doubly) perturbed Brownian motion and perturbed reflected Brownian motion respectively.

First we consider Eq. (5.31). Clearly, if either \(a\ge 1\) or \(b\ge 1\), then there are no solutions to this equation for certain x. So we consider the case where \(a<1\) and \(b<1\). Suppose \(b=0\). Then we have explicitly \(Y_t=x_t+\frac{a}{1-a}\sup _{0\le s\le t}x_s\). By [7], when \(|\frac{ab}{(1-a)(1-b)}|<1\), a fixed point argument works and the unique existence holds for any continuous path \(x_t\) with \(x_0=0\). The unique existence extends to \(|\frac{ab}{(1-a)(1-b)}|=1\) by [10]. Consider the case where \(x_t\) is a sample path of a 1-dimensional Brownian motion \(W_t\) with \(W_0=0\). For any \(0\le a<1\), \(0\le b<1\), it is proved in [31] that the pathwise uniqueness holds and the solution is adapted to the Brownian filtration. Finally, for any \(a<1, b<1\), the same result is proved in [8].

We now consider Eq. (5.32). By a fixed point argument, the unique existence is proved in [25] in the cases (1) \(a<1/2\) and (2) \(a<1\) with \(x_0>0\). Next, the pathwise uniqueness is proved in [8] for \(a<1\) when \(x_t\) is the Brownian path \(W_t\) with \(W_0=0\). The unique existence for \(a<1\) is extended in [13] to any continuous path \(x_t\).
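The explicit formula for \(b=0\) can be checked numerically. The following is a minimal sketch, assuming that (5.31) with \(b=0\) reads \(Y_t=x_t+a\max _{0\le s\le t}Y_s\) (this form is inferred from the explicit solution quoted above) and replacing the continuous path by a discretized random walk. The helper names `running_max`, `explicit_solution` and `residual` are illustrative and not taken from the paper.

```python
import random
from itertools import accumulate


def running_max(path):
    """Return the running maximum max(path[0..k]) at each grid index k."""
    return list(accumulate(path, max))


def explicit_solution(x, a):
    """Candidate solution Y = x + a/(1-a) * running_max(x), for a < 1."""
    if a >= 1:
        raise ValueError("the formula requires a < 1")
    c = a / (1.0 - a)
    return [xk + c * mk for xk, mk in zip(x, running_max(x))]


def residual(x, y, a):
    """Largest violation of Y_k = x_k + a * max_{j<=k} Y_j on the grid."""
    return max(abs(yk - xk - a * mk)
               for xk, yk, mk in zip(x, y, running_max(y)))


# Discretized driving path with x_0 = 0 (a simple random walk, for illustration).
rng = random.Random(0)
x = [0.0]
for _ in range(1000):
    x.append(x[-1] + rng.gauss(0.0, 0.05))

for a in (-2.0, -0.5, 0.3, 0.9):
    y = explicit_solution(x, a)
    print(a, residual(x, y, a))  # residuals are at floating-point rounding level
```

The check works for every \(a<1\), including \(a\le -1\), because the running maximum of \(x+\frac{a}{1-a}\max x\) equals \(\frac{1}{1-a}\max x\) whenever \(\frac{1}{1-a}>0\).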

We next explain results for the variable coefficient version driven by a standard 1-dimensional Brownian motion \(W_t\),

where \(\sigma \) is a Lipschitz continuous function on \({\mathbb R}\) and the integral is the Itô integral. The unique existence of the solution to (5.33) is proved for \(a<1\) by [13]. The same authors prove the unique existence of the solution to (5.34) for two cases where (1) \(a<1\) and \(\xi >0\) and (2) \(0\le a<1/2\) and \(\xi =0\). Under the same assumption on a, the absolute continuity of the law of \(Y_t\) with respect to the Lebesgue measure was studied in [36].

5.3 Perturbed Reflected Rough Differential Equations

We consider the multidimensional versions of (5.33) and (5.34) driven by rough paths. Our objectives are the following two equations.

where \(e_n={}^t(0,\ldots ,0,1)\) and \(\sigma \in \textrm{Lip}^{\gamma -1}({\mathbb R}^n,\mathcal {L}({\mathbb R}^d,{\mathbb R}^n))\). We assume that C is a mapping from \(C([0,T],{\mathbb R}^n)\) to the subspace of continuous and bounded variation paths on \({\mathbb R}^n\) and \(\{C(x)_s\}_{0\le s\le t}\) is measurable with respect to \(\sigma (\{x_s\}_{0\le s\le t})\) for all \(0\le t\le T\). The first equation (5.35) is a perturbed rough differential equation and the second equation (5.36) is a perturbed reflected rough differential equation on \(\bar{D}=\{(x_1,\ldots ,x_n)~|~x_n\ge 0\}\). \(\Phi _te_n\) is the reflection term, and \(Y_t\) and \(\Phi _t\) should satisfy

In both equations, \(Y_0\ne \xi \) in general. Consider the case \(t=0\). Then we have \( Y_0=\xi +C(Y)_0. \) Since C(Y) is adapted, \(C(Y)_0\) is a function of \(Y_0\) and we may write \(C(Y)_0=C_0(Y_0)\). Hence \(Y_0\) should satisfy \(Y_0=\xi +C_0(Y_0)\) and we need to assume \(Y_0\in \bar{D}\). If we consider the case where \(Y_t\in {\mathbb R}\) and \(C(Y)_t=a\max _{0\le s\le t}Y_s\) \((a<1)\), \(Y_0=\frac{1}{1-a}\xi \) holds. In this case, \(Y_0\ge 0\) and \(\xi \ge 0\) are equivalent and so \(Y_t\) starts from \([0,\infty )\) when \(\xi \ge 0\). Under the assumption that \(Y_0=\xi +C_0(Y_0)\in \bar{D}\), by the explicit solution of the Skorohod problem, we have

where \(a\vee b=\max (a,b)\).
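To illustrate the explicit solution of the Skorohod problem on the half-line, here is a minimal sketch on a discretized scalar path. It assumes the classical formula \(\phi _t=\max _{0\le s\le t}\{(-w_s)\vee 0\}\), where \(w\) plays the role of the n-th coordinate of \(Z+C(Y)\); the function name `skorokhod_map` and the test path are hypothetical.

```python
import random
from itertools import accumulate


def skorokhod_map(w):
    """One-dimensional Skorohod map on [0, infinity) for a discretized path w.

    Returns (y, phi) with y = w + phi >= 0, phi nondecreasing, phi[0] = 0
    (which requires w[0] >= 0), and phi increasing only where y = 0.
    """
    if w[0] < 0:
        raise ValueError("the path must start in the closed half-line")
    # phi_k = max_{j<=k} max(-w_j, 0): the classical explicit formula.
    phi = list(accumulate((max(-wk, 0.0) for wk in w), max))
    y = [wk + pk for wk, pk in zip(w, phi)]
    return y, phi


# Illustrative input: a random walk started at 0.2 > 0.
rng = random.Random(1)
w = [0.2]
for _ in range(500):
    w.append(w[-1] + rng.gauss(0.0, 0.1))

y, phi = skorokhod_map(w)
print(min(y), phi[-1])
```

The requirement `w[0] >= 0` mirrors the assumption \(Y_0=\xi +C_0(Y_0)\in \bar{D}\) made in the text: without it the reflection term could not start from 0.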

We give the definition of the solution of (5.35) and (5.36).

Definition 5.11

(1) \(Y_t\) is a solution of (5.35) if the following hold.

- (i) There exists a \(Z\in \mathscr {D}^{2\beta }_X({\mathbb R}^n)\) such that \(Y_t=Z_t+C(Y)_t\) and \(Z'_t=\sigma (Y_t)\) \((0\le t\le T)\) hold.

- (ii) \(Z_t=\xi +\int _0^t\sigma (Z_s+C(Y)_s)d\textbf{X}_s\) \((0\le t\le T)\) holds.

(2) \((Y_t,\Phi _t)\) is a solution of (5.36) if the following hold.

- (i)

- (ii) There exists a \(Z\in \mathscr {D}^{2\beta }_X({\mathbb R}^n)\) such that \(Y_t=Z_t+C(Y)_t+\Phi _te_n\) and \(Z'_t=\sigma (Y_t)\) \((0\le t\le T)\) hold.

- (iii) \(Z_t=\xi +\int _0^t\sigma (Z_s+C(Y)_s+\Phi _se_n)d\textbf{X}_s\) \((0\le t\le T)\) holds.

We solve these equations by transforming them to the equations in Theorem 2.7. To this end, we introduce the following conditions.

Definition 5.12

For a mapping \(C: C([0,T], {\mathbb R}^n)\rightarrow C([0,T], {\mathbb R}^m)\), we consider the following conditions, where \(\rho \) denotes a positive number.

- \(\mathrm{(Lip)}_{\rho }\): \(\Vert C(x)-C(y)\Vert _{\infty ,[0,t]}\le \rho \Vert x-y\Vert _{\infty ,[0,t]}\) for all \(x,y\in C([0,T], {\mathbb R}^n)\) and \(0\le t\le T\).

- \(\mathrm{(BV)}_{\rho }\): \(\Vert C(x)\Vert _{1\text {-}var, [s,t]}\le \rho \Vert x\Vert _{\infty \text {-}var, [s,t]}\) for all \(0\le s\le t\le T\).

We may write \(C\in (\textrm{Lip})_{\rho }\) simply when C satisfies the condition \((\textrm{Lip})_{\rho }\), etc. Also we denote by \(\Vert C\Vert _{\textrm{Lip}}\) the smallest nonnegative number \(\rho \) for which \((\textrm{Lip})_{\rho }\) holds.

Clearly the conditions \(\mathrm{(Lip)}_{\rho }\) and \(\mathrm{(BV)}_{\rho }\) are stronger than the conditions in Assumption 2.2. Also the conditions \(\mathrm{(Lip)}_{\rho }\) and \(\mathrm{(BV)}_{\rho }\) imply the conditions (A1), (A2) and (A3) in [1].

As we noted, C which is defined in Example 2.9 (2) satisfies the above conditions.

Proposition 5.13

Let \(\rho >0\). Let \(f: {\mathbb R}^n\rightarrow {\mathbb R}\) be a Lipschitz function satisfying \((\textrm{Lip})_{\rho }\). Let \(C(x)_t=\max _{0\le s\le t}f(x_s)\) for \(x\in C([0,T],{\mathbb R}^n)\). Then we have \(C\in \mathrm{(Lip)}_{\rho }\cap \mathrm{(BV)}_{\rho }\).

Proof

We consider the simplest case \(C(x)_t=\max _{0\le s\le t}x_s\), where x is a continuous path on \({\mathbb R}\). Let \(0\le s<t\). We take values \(0\le s_{*}\le s, 0\le t_{*}\le t\) such that \(C(x)_{s}=x_{s_{*}}\) and \(C(x)_{t}=x_{t_{*}}\). Suppose \(t_{*}\le s\), then \(C(x)_u=C(x)_{t_{*}}\) \((s\le u\le t)\) holds. Hence \(\Vert C(x)\Vert _{1\text {-}var, [s,t]}=0\). Suppose \(s<t_{*}\le t\). Then using \(x_s\le x_{s_{*}}\), we have

which implies the validity of \((\textrm{BV})_1\). We next show \((\textrm{Lip})_1\). Let \(x, x'\) be continuous paths on \({\mathbb R}\). Similarly, let \(t'_{*}\) denote a time at which \(x'\) attains its maximum over \([0,t]\). We have \(C(x)_t-C(x')_t=x_{t_{*}}-x'_{t'_{*}}\). If \(x_{t_{*}}-x'_{t'_{*}}=0\), there is nothing to prove. Suppose \(x_{t_{*}}>x'_{t'_{*}}\). Then, by \(x'_{t'_{*}}\ge x'_{t_{*}}\), we have

This proves that \((\textrm{Lip})_{1}\) holds for \(C(x)_t=\max _{0\le s\le t}x_s\). General cases follow from this simplest case. \(\square \)
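The two properties of the running maximum established in this proof can be sanity-checked on discretized paths. The sketch below assumes that \(\Vert x\Vert _{\infty \text {-}var,[s,t]}\) denotes the oscillation \(\sup _{s\le u\le v\le t}|x_v-x_u|\) (an assumption about notation defined earlier in the paper); all function names are illustrative.

```python
import random
from itertools import accumulate


def running_max(path):
    """C(x)_k = max_{j<=k} x_j on the grid."""
    return list(accumulate(path, max))


def sup_norm(u, v, t):
    """Sup-norm distance between u and v on grid indices 0..t."""
    return max(abs(a - b) for a, b in zip(u[:t + 1], v[:t + 1]))


def one_var(u, s, t):
    """1-variation of the discretized path u on grid indices s..t."""
    return sum(abs(u[k + 1] - u[k]) for k in range(s, t))


def oscillation(u, s, t):
    """sup_{s<=i<=j<=t} |u_j - u_i|, used here as the infinity-variation norm."""
    seg = u[s:t + 1]
    return max(seg) - min(seg)


def random_path(rng, n):
    """Random walk with a random starting point (illustrative test input)."""
    p = [rng.gauss(0.0, 1.0)]
    for _ in range(n):
        p.append(p[-1] + rng.gauss(0.0, 0.1))
    return p


rng = random.Random(2)
n = 200
x, xp = random_path(rng, n), random_path(rng, n)
cx, cxp = running_max(x), running_max(xp)

# (Lip)_1: sup-distance of running maxima is dominated by that of the paths.
lip_ok = all(sup_norm(cx, cxp, t) <= sup_norm(x, xp, t) + 1e-12
             for t in range(n + 1))
# (BV)_1: 1-variation of the running maximum is dominated by the oscillation.
bv_ok = all(one_var(cx, s, t) <= oscillation(x, s, t) + 1e-12
            for s in range(0, n, 10) for t in range(s, n + 1, 10))
print(lip_ok, bv_ok)
```

A numerical check of this kind does not replace the proof; it only confirms the inequalities on sampled grid paths.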

We consider (5.35). To this end, we consider the following condition on C.

\((\textbf{Condition}~ \tilde{\textbf{C}})\) (i) For any \(x\in C([0,T],{\mathbb R}^n)\), there exists a unique \(y\in C([0,T],{\mathbb R}^n)\) such that \(y=x+C(y)\). Define \(\tilde{C}(x)=y-x\).

(ii) \(\tilde{C}\) satisfies \(\mathrm{(Lip)}_{\rho '}\) for certain \(\rho '\).

Regarding this property, we have the following. The proof is straightforward and is omitted.

Proposition 5.14

Assume C satisfies \((\textrm{Condition}~ \tilde{\textrm{C}})\) \(\mathrm{(i)}\). Then for any \(0\le t\le T\) and \(x\in C([0,t],{\mathbb R}^n)\), there exists a unique \(y\in C([0,t],{\mathbb R}^n)\) such that \(y=x+C(y)\) on [0, t]. For these x and y, we define \(\tilde{C}_t(x)=y-x\in C([0,t],{\mathbb R}^n)\). Then for any \(z\in C([0,T],{\mathbb R}^n)\) satisfying \(z_s=x_s\) \((0\le s\le t)\), \(\tilde{C}(z)_s=\tilde{C}_t(x)_s\) \((0\le s\le t)\) holds.

By this result, given \(\xi \in {\mathbb R}^n\), the solution \(\eta \in {\mathbb R}^n\) of \(\eta =\xi +C_0(\eta )\) is unique if C satisfies \((\textrm{Condition}~ \tilde{\textrm{C}})\) \(\mathrm{(i)}\). We have the following result for (5.35).

Theorem 5.15

Let C be a continuous mapping from \(C([0,T], {\mathbb R}^n)\) to itself. Suppose C satisfies \((\textrm{Condition}~ \tilde{\textrm{C}})\) and \(\tilde{C}\) satisfies \((\textrm{BV})_{\rho ''}\) for certain \(\rho ''\). Let \(\textbf{X}\in \mathscr {C}^{\beta }({\mathbb R}^d)\).

- (1) There exists a controlled path \(Z\in {\mathscr {D}}^{2\beta }_X({\mathbb R}^n)\) satisfying the equation

$$\begin{aligned} Z_t=\xi +\int _0^t\sigma \left( Z_s+\tilde{C}(Z)_s\right) d\textbf{X}_s, \quad Z_t'=\sigma (Z_t+\tilde{C}(Z)_t), \end{aligned}$$ (5.40)

and Z satisfies an estimate similar to that in Theorem 2.7. Moreover, \(Y_t=Z_t+\tilde{C}(Z)_t\) is a solution to (5.35).

- (2) Let \(Y_t\) be a solution to (5.35) defined by \(Z\in \mathscr {D}^{2\beta }_X({\mathbb R}^n)\). Then Z is a solution to (5.40). Moreover, such a Z is uniquely determined by Y.

- (3) The transformations defined in (1) and (2) are inverse to each other, and the uniqueness of solutions of (5.35) is equivalent to that of (5.40).

Proof

(1) The existence and the estimate of the solution follow from Theorem 2.7. By \(Y_t=Z_t+\tilde{C}(Z)_t\) and by the definition of \(\tilde{C}\), we have \(\tilde{C}(Z)=C(Y)\). Hence \(Z'_t=\sigma (Z_t+C(Y)_t)\) and \(Y_t\) is a solution to (5.35).

(2) By the definition of \(\tilde{C}\), \(\tilde{C}(Z)_t=C(Y)_t\) holds. Hence Z is a solution to (5.40). Also the uniqueness follows from the assumption on C.

(3) These follow from the assumption on C. \(\square \)

We give sufficient conditions on C under which C satisfies \((\textrm{Condition}~ \tilde{\textrm{C}})\).

Lemma 5.16

Let C be a continuous mapping from \(C([0,T], {\mathbb R}^n)\) to itself.

- (1) Assume C satisfies \(\mathrm{(Lip)}_{\rho _1}\) with \(\rho _1<1\). Then for every \(x\in C([0,T], {\mathbb R}^n)\) there exists a unique \(y\in C([0,T], {\mathbb R}^n)\) satisfying \( y=x+C(y), \) and \(\tilde{C}\) satisfies \(\mathrm{(Lip)}_{\rho _1/(1-\rho _1)}\).

- (2) Suppose that C satisfies \(\mathrm{(Lip)}_{\rho _1}\) with \(\rho _1<1\) and \(\mathrm{(BV)}_{\rho _2}\) with \(\rho _2<1\). Then \(\tilde{C}\) satisfies \(\mathrm{(BV)}_{\rho _2/(1-\rho _2)}\).

- (3) Suppose that C satisfies \(\mathrm{(Lip)}_{\rho }\) and \(\mathrm{(BV)}_{\rho }\) with \(\rho <1/2\). Then \(\tilde{C}\) satisfies \(\mathrm{(Lip)}_{\rho '}\) and \(\mathrm{(BV)}_{\rho '}\) with \(\rho '=\frac{\rho }{1-\rho }<1\).

Proof

(1) The existence and uniqueness of y follow from the fact that the mapping \(y\mapsto x+C(y)\) is a contraction. We have \(\tilde{C}(x)=C(y)=C(x+\tilde{C}(x))\). Therefore,

which implies \(\Vert \tilde{C}(x)-\tilde{C}(x')\Vert _{\infty , [0,t]}\le \frac{\rho _1}{1-\rho _1}\Vert x-x'\Vert _{\infty , [0,t]}\).
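The step behind this bound can be spelled out as follows. This is a sketch that uses only the identity \(\tilde{C}(x)=C(x+\tilde{C}(x))\) and the condition \(\mathrm{(Lip)}_{\rho _1}\); all norms are \(\Vert \cdot \Vert _{\infty ,[0,t]}\).

$$\begin{aligned} \Vert \tilde{C}(x)-\tilde{C}(x')\Vert&=\Vert C(x+\tilde{C}(x))-C(x'+\tilde{C}(x'))\Vert \\&\le \rho _1\Vert x-x'\Vert +\rho _1\Vert \tilde{C}(x)-\tilde{C}(x')\Vert . \end{aligned}$$

Moving the last term to the left-hand side and dividing by \(1-\rho _1>0\) gives the constant \(\rho _1/(1-\rho _1)\).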

(2) We have

which implies the desired estimate.
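In more detail, one possible route to this estimate is the following. It is a sketch under the assumption that \(\Vert \cdot \Vert _{\infty \text {-}var,[s,t]}\) is a seminorm dominated by \(\Vert \cdot \Vert _{1\text {-}var,[s,t]}\), as is the case for the oscillation \(\sup _{s\le u\le v\le t}|x_v-x_u|\). With \(y=x+\tilde{C}(x)\) and \(\tilde{C}(x)=C(y)\),

$$\begin{aligned} \Vert \tilde{C}(x)\Vert _{1\text {-}var,[s,t]}&=\Vert C(y)\Vert _{1\text {-}var,[s,t]}\le \rho _2\Vert y\Vert _{\infty \text {-}var,[s,t]}\\&\le \rho _2\Vert x\Vert _{\infty \text {-}var,[s,t]}+\rho _2\Vert \tilde{C}(x)\Vert _{1\text {-}var,[s,t]}. \end{aligned}$$

Since \(\Vert \tilde{C}(x)\Vert _{1\text {-}var,[s,t]}\) is finite by the first inequality, rearranging and dividing by \(1-\rho _2>0\) yields \(\mathrm{(BV)}_{\rho _2/(1-\rho _2)}\).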

(3) This follows from (1) and (2). \(\square \)
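The contraction argument in part (1) also suggests a simple way to approximate \(\tilde{C}\) numerically. The following minimal sketch iterates \(y\mapsto x+C(y)\) on a grid for \(C(x)_t=\max _{0\le s\le t}f(x_s)\), with the hypothetical choice \(f(u)=\rho \sin u\), \(\rho =0.4\), and then compares the observed Lipschitz ratio of \(\tilde{C}\) on \([0,T]\) with the bound \(\rho /(1-\rho )\). The constant `RHO`, the function `f` and the test paths are assumptions made for illustration only.

```python
import math
import random
from itertools import accumulate

RHO = 0.4  # Lipschitz constant of f; must be < 1 for the contraction argument


def f(u):
    """A hypothetical scalar Lipschitz function with constant RHO."""
    return RHO * math.sin(u)


def C(path):
    """C(x)_k = max_{j<=k} f(x_j) on the grid."""
    return list(accumulate((f(u) for u in path), max))


def C_tilde(x, tol=1e-13, max_iter=200):
    """Approximate C~(x) = y - x, where y = x + C(y), by Picard iteration."""
    y = list(x)
    for _ in range(max_iter):
        y_new = [xk + ck for xk, ck in zip(x, C(y))]
        gap = max(abs(a - b) for a, b in zip(y, y_new))
        y = y_new
        if gap < tol:
            break
    return [yk - xk for yk, xk in zip(y, x)]


def sup_dist(u, v):
    """Sup-norm distance between two grid paths of equal length."""
    return max(abs(a - b) for a, b in zip(u, v))


rng = random.Random(3)
x = [0.0]
for _ in range(300):
    x.append(x[-1] + rng.gauss(0.0, 0.1))
# A nearby path: x plus a small deterministic perturbation.
xp = [xk + 0.05 * math.sin(k / 10.0) for k, xk in enumerate(x)]

ct_x, ct_xp = C_tilde(x), C_tilde(xp)
lhs = sup_dist(ct_x, ct_xp)
rhs = RHO / (1.0 - RHO) * sup_dist(x, xp)
print(lhs, rhs, lhs <= rhs + 1e-9)
```

Picard iteration is used here because it is exactly the fixed point scheme behind the contraction argument; the error after k steps decays like \(\rho ^k\), so a few dozen iterations suffice for \(\rho =0.4\).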

Example 5.17

(1) We consider the following C:

where \(Y^j_t\) and \(C^i(Y)_t\) are the j-th coordinate of \(Y_t\) and the i-th coordinate of \(C(Y)_t\), respectively. By Proposition 5.13 and Lemma 5.16, we see that this C satisfies the assumption in Theorem 5.15 for sufficiently small \(a^i_j, b^i_j\). In this paper, we do not consider the subtle cases discussed in the previous subsection, e.g., \(|ab/(1-a)(1-b)|\le 1\) or \(a<1, b<1\), etc. We just mention the following simple result.

Let \(a_i<1\) \((1\le i\le n)\) and consider C defined by \( C^i(x)_t=a_i\max _{0\le s\le t}x^i_s, \) where \(x_t=(x^i_t)\). If \(a\le -1\), the mapping \(C: x=(x_t)\in C([0,T],{\mathbb R})\mapsto (a\max _{0\le s\le t}x_s)\in C([0,T],{\mathbb R})\) is not a strict contraction, but \(y=x+C(y)\) \((y\in C([0,T],{\mathbb R}))\) is still uniquely solved by \(y_t=x_t+\frac{a}{1-a}\max _{0\le s\le t}x_s\). Therefore, we have explicitly

Hence, this example satisfies the assumption in Theorem 5.15.

(2) Let \(f_i: {\mathbb R}^n\rightarrow {\mathbb R}\) \((1\le i\le n)\) be Lipschitz functions satisfying \((\textrm{Lip})_{\rho _i}\). For \(x\in C([0,T],{\mathbb R}^n)\), we define C by \( C^i(x)_t=\max _{0\le s\le t}f_i(x_s). \) Then C satisfies \(\mathrm{(Lip)}_{\sqrt{\sum _i\rho _i^2}}\) and \(\mathrm{(BV)}_{\sum _{i}\rho _i}\). Hence, if \(\sum _i\rho _i<1\), then the assumption in Theorem 5.15 holds. This follows from Proposition 5.13 and Lemma 5.16.

We now consider (5.36) on \(\bar{D}=\{(x_1,\ldots ,x_n)~|~x_n\ge 0\}\). For the moment, we suppose C satisfies \((\textrm{Condition}~ \tilde{\textrm{C}})\) and \(\xi \) is chosen so that the solution \(\eta \) of \(\eta =\xi +C_0(\eta )\) satisfies \(\eta \in \bar{D}\) as we noted before. Let \(Y_t\) be a solution of (5.36) and suppose \(Y_t=Z_t+C(Y)_t+\Phi _te_n\) as in Definition 5.11 (2) (ii). Let \(\tilde{Z}_t=Y_t-C(Y)_t\). Using \(\tilde{C}\), we have \(Y_t=\tilde{Z}_t+\tilde{C}(\tilde{Z})_t\). Then

By (5.39), we get an equation for \(\Phi _t\),

where \(Z^n_s\) and \(\tilde{C}^n\) are the n-th coordinates of \(Z_s\) and \(\tilde{C}\), respectively. This is a nonlinear implicit Skorohod equation. This kind of equation appeared in the study of the Euler approximation of the solutions for reflected RDEs in [2].

Fix \(x\in C([0,T], {\mathbb R}^n; x_0=\xi )\) and consider a mapping on \(C([0,T],{\mathbb R}; \phi _0=0)\):