Abstract

We show that, for \(\beta \ge 1\), the semigroups of \(\beta \)-Laguerre and \(\beta \)-Jacobi processes of different dimensions are intertwined in analogy to a similar result for \(\beta \)-Dyson Brownian motion recently obtained in Ramanan and Shkolnikov (Intertwinings of \(\beta \)-Dyson Brownian motions of different dimensions, 2016. arXiv:1608.01597). These intertwining relations generalize to arbitrary \(\beta \ge 1\) the ones obtained for \(\beta =2\) in Assiotis et al. (Interlacing diffusions, 2016. arXiv:1607.07182) between h-transformed Karlin–McGregor semigroups. Moreover, they form the key step toward constructing a multilevel process in a Gelfand–Tsetlin pattern leaving certain Gibbs measures invariant. Finally, as a by-product, we obtain a relation between general \(\beta \)-Jacobi ensembles of different dimensions.


1 Introduction

The aim of this short note is to establish intertwining relations between the semigroups of general \(\beta \)-Laguerre and \(\beta \)-Jacobi processes, in analogy to the ones obtained for general \(\beta \)-Dyson Brownian motion in [20] (see also [13]). These also generalize the relations obtained for \(\beta =2\) in [3], where the transition kernels of these semigroups are given explicitly in terms of h-transforms of Karlin–McGregor determinants.

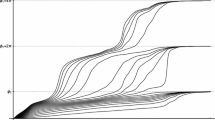

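We begin by introducing the stochastic processes we will be dealing with. Consider the unique strong solution, with values in \([0,\infty )^n\), to the following system of SDEs, for \(i=1,\ldots ,n\):

$$\begin{aligned} \mathrm{d}X_i^{(n)}(t)=2\sqrt{X_i^{(n)}(t)}\,\mathrm{d}B_i^{(n)}(t)+\beta \left( \frac{d}{2}+\sum _{j\ne i}\frac{2X_i^{(n)}(t)}{X_i^{(n)}(t)-X_j^{(n)}(t)}\right) \mathrm{d}t, \end{aligned}\tag{1}$$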

where the \(B_i^{(n)}\), \(i=1,\ldots , n,\) are independent standard Brownian motions. This process was introduced and studied by Demni [7] in relation to Dunkl processes (see, for example, [22]), where it is referred to as the \(\beta \)-Laguerre process, since its distribution at time 1, if started from the origin, is given by the \(\beta \)-Laguerre ensemble (see Sect. 5 of [7]). We could equally well have called it the \(\beta \)-squared Bessel process, since for \(\beta =2\) it consists exactly of n BESQ(d) diffusion processes conditioned never to collide, as first proven in [15]; we nevertheless stick to the terminology of [7]. Similarly, consider the unique strong solution, with values in \([0,1]^n\), to the following system of SDEs, for \(i=1,\ldots ,n\):

$$\begin{aligned} \mathrm{d}X_i^{(n)}(t)=2\sqrt{X_i^{(n)}(t)\left( 1-X_i^{(n)}(t)\right) }\,\mathrm{d}B_i^{(n)}(t)+\beta \left( a-(a+b)X_i^{(n)}(t)+\sum _{j\ne i}\frac{2X_i^{(n)}(t)\left( 1-X_i^{(n)}(t)\right) }{X_i^{(n)}(t)-X_j^{(n)}(t)}\right) \mathrm{d}t, \end{aligned}\tag{2}$$

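where, again, the \(B_i^{(n)}\), \(i=1,\ldots , n,\) are independent standard Brownian motions. We call this solution the \(\beta \)-Jacobi process. It was first introduced and studied in [6] as a generalization of the eigenvalue evolution of matrix Jacobi processes, and its stationary distribution is given by the \(\beta \)-Jacobi ensemble (see Sect. 4 of [6]):

$$\begin{aligned} {\mathcal {M}}^{Jac,n}_{a,b,\beta }(\mathrm{d}x)=C_{n,a,b,\beta }\prod _{i=1}^{n}x_i^{\frac{\beta a}{2}-1}(1-x_i)^{\frac{\beta b}{2}-1}\prod _{1\le i<j\le n}\left| x_j-x_i\right| ^{\beta }\,\mathrm{d}x, \end{aligned}$$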

for some normalization constant \(C_{n,a,b,\beta }\).

We now give sufficient conditions that guarantee the well-posedness of the SDEs above. For \(\beta \ge 1\), \(d\ge 0\) and \(a,b\ge 0\), (1) and (2) have a unique strong solution with no collisions and no explosions, and with instant diffraction if started from a degenerate point, i.e., one at which some of the coordinates coincide (see Corollaries 6.5 and 6.7, respectively, of [14]). In particular, the coordinates of \(X^{(n)}\) stay ordered. Thus, if

$$\begin{aligned} X^{(n)}_1(0)\le X^{(n)}_2(0)\le \cdots \le X^{(n)}_n(0), \end{aligned}$$

then with probability one,

$$\begin{aligned} X^{(n)}_1(t)<X^{(n)}_2(t)<\cdots <X^{(n)}_n(t),\quad \forall t>0. \end{aligned}$$
From now on, we restrict to those parameter values.

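It will be convenient to define \(\theta =\frac{\beta }{2}\). We write \(P^{(n)}_{d,\theta }(t)\) for the Markov semigroup associated with the solution of (1). Similarly, write \(Q^{(n)}_{a,b,\theta }(t)\) for the Markov semigroup associated with the solution of (2). Furthermore, denote by \({\mathcal {L}}^{(n)}_{d,\theta }\) and \({\mathcal {A}}^{(n)}_{a,b,\theta }\) the formal infinitesimal generators of (1) and (2), respectively, given by

$$\begin{aligned} {\mathcal {L}}^{(n)}_{d,\theta }&=\sum _{i=1}^{n}2x_i\frac{\partial ^2}{\partial x_i^2}+\sum _{i=1}^{n}\left( \theta d+\sum _{j\ne i}\frac{4\theta x_i}{x_i-x_j}\right) \frac{\partial }{\partial x_i},\\ {\mathcal {A}}^{(n)}_{a,b,\theta }&=\sum _{i=1}^{n}2x_i(1-x_i)\frac{\partial ^2}{\partial x_i^2}+\sum _{i=1}^{n}\left( 2\theta \left( a-(a+b)x_i\right) +\sum _{j\ne i}\frac{4\theta x_i(1-x_i)}{x_i-x_j}\right) \frac{\partial }{\partial x_i}. \end{aligned}$$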

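With I denoting either \([0,\infty )\) or [0, 1], define the chamber

$$\begin{aligned} W^{n}(I)=\left\{ x=(x_1,\ldots ,x_n)\in I^n:x_1\le x_2\le \cdots \le x_n\right\} . \end{aligned}$$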

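Moreover, for \(x\in W^{n+1}\), define the set of \(y \in W^{n}\) that interlace with x by

$$\begin{aligned} W^{n,n+1}(x)=\left\{ y=(y_1,\ldots ,y_n)\in W^{n}:x_1\le y_1\le x_2\le y_2\le \cdots \le x_n\le y_n\le x_{n+1}\right\} . \end{aligned}$$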

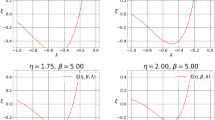

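For \(x\in W^{n+1}\) and \(y\in W^{n,n+1}(x)\), define the Dixon–Anderson conditional probability density on \(W^{n,n+1}(x)\) (originally introduced by Dixon at the beginning of the last century in [9] and independently rediscovered by Anderson in his study of the Selberg integral in [1]) by

$$\begin{aligned} \lambda ^{\theta }_{n,n+1}(x,y)=\frac{\Gamma (\theta (n+1))}{\Gamma (\theta )^{n+1}}\prod _{1\le i<j\le n}(y_j-y_i)\prod _{1\le i<j\le n+1}(x_j-x_i)^{1-2\theta }\prod _{i=1}^{n}\prod _{j=1}^{n+1}\left| y_i-x_j\right| ^{\theta -1}. \end{aligned}$$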

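Denote by \(\Lambda ^{\theta }_{n,n+1}\) the integral operator with kernel \(\lambda ^{\theta }_{n,n+1}\), i.e.,

$$\begin{aligned} \left( \Lambda ^{\theta }_{n,n+1}f\right) (x)=\int _{W^{n,n+1}(x)}\lambda ^{\theta }_{n,n+1}(x,y)f(y)\,\mathrm{d}y. \end{aligned}$$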
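To make the Markov kernel \(\Lambda ^{\theta }_{n,n+1}\) concrete, the Python sketch below draws y given x. It takes as given a classical fact behind the Dixon–Anderson integral (cf. [1]), which is not used elsewhere in this note: if \(w=(w_1,\ldots ,w_{n+1})\) is Dirichlet\((\theta ,\ldots ,\theta )\), the n zeros of \(t\mapsto \sum _j w_j/(t-x_j)\) have density \(\lambda ^{\theta }_{n,n+1}(x,y)=\frac{\Gamma (\theta (n+1))}{\Gamma (\theta )^{n+1}}\prod _{i<j}(y_j-y_i)\prod _{i<j}(x_j-x_i)^{1-2\theta }\prod _{i,j}|y_i-x_j|^{\theta -1}\). The function names are hypothetical. The sketch also checks numerically, for n = 1, that this kernel with the stated constant integrates to one. There, \(\lambda ^{\theta }_{1,2}(x,\cdot )\) is simply a Beta\((\theta ,\theta )\) law rescaled to \([x_1,x_2]\).

```python
import math
import random


def dixon_anderson_density(x, y, theta):
    """lambda^theta_{n,n+1}(x, y) for strictly increasing x (length n+1) and y interlacing x."""
    n = len(y)
    value = math.gamma(theta * (n + 1)) / math.gamma(theta) ** (n + 1)
    for i in range(n):
        for j in range(i + 1, n):
            value *= y[j] - y[i]
    for i in range(n + 1):
        for j in range(i + 1, n + 1):
            value *= (x[j] - x[i]) ** (1 - 2 * theta)
    for yi in y:
        for xj in x:
            value *= abs(yi - xj) ** (theta - 1)
    return value


def sample_dixon_anderson(x, theta, rng):
    """Return (w, y): Dirichlet(theta, ..., theta) weights w and the zeros y of t -> sum_j w_j / (t - x_j)."""
    gammas = [rng.gammavariate(theta, 1.0) for _ in x]
    total = sum(gammas)
    w = [g / total for g in gammas]

    def f(t):
        return sum(wj / (t - xj) for wj, xj in zip(w, x))

    y = []
    for k in range(len(x) - 1):
        # On (x_k, x_{k+1}), f decreases strictly from +inf to -inf, so bisection finds its unique zero.
        lo, hi = x[k], x[k + 1]
        for _ in range(100):
            mid = 0.5 * (lo + hi)
            if f(mid) > 0:
                lo = mid
            else:
                hi = mid
        y.append(0.5 * (lo + hi))
    return w, y


def mass_n1(x1, x2, theta, grid=4000):
    """Midpoint-rule approximation of the integral of lambda^theta_{1,2}((x1, x2), .) over [x1, x2]."""
    h = (x2 - x1) / grid
    return h * sum(dixon_anderson_density((x1, x2), (x1 + (k + 0.5) * h,), theta)
                   for k in range(grid))


rng = random.Random(0)
w, y = sample_dixon_anderson([0.1, 0.4, 0.5, 0.9], 1.5, rng)
print(y)                        # three points interlacing with x
print(mass_n1(0.2, 0.7, 1.5))   # approximately 1
```

Root finding by bisection is chosen only for transparency: the zeros are automatically separated by the poles \(x_j\), which is exactly the interlacing \(y\in W^{n,n+1}(x)\).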

Our goal is to prove the following theorem, which should be viewed as a generalization of the result of [20] for the Gaussian ensemble to the other two classical \(\beta \)-ensembles, the Laguerre and the Jacobi.

Theorem 1.1

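Let \(\beta \ge 1\), \(d\ge 2\) and \(a,b \ge 1\). Then, with \(\theta =\frac{\beta }{2}\), we have the following equalities of Markov kernels, \(\forall t \ge 0\):

$$\begin{aligned} P^{(n+1)}_{d-2,\theta }(t)\Lambda ^{\theta }_{n,n+1}=\Lambda ^{\theta }_{n,n+1}P^{(n)}_{d,\theta }(t), \end{aligned}\tag{7}$$

$$\begin{aligned} Q^{(n+1)}_{a-1,b-1,\theta }(t)\Lambda ^{\theta }_{n,n+1}=\Lambda ^{\theta }_{n,n+1}Q^{(n)}_{a,b,\theta }(t). \end{aligned}\tag{8}$$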

Remark 1.2

For \(\beta =2\), this result was already obtained in [3]; see in particular Sects. 3.7 and 3.8 therein for (7) and (8), respectively.

Remark 1.3

The general theory of intertwining diffusions (see [19]) suggests that it should be possible to realize these intertwining relations by coupling the n- and \((n+1)\)-particle processes so that they interlace. In the Laguerre case (the Jacobi case is analogous), the resulting process \(Z=(X,Y)\), with Y evolving according to \(P^{(n)}_{d,\theta }(t)\) and X, in its own filtration, according to \(P^{(n+1)}_{d-2,\theta }(t)\), should (conjecturally) have generator given by,

with reflecting boundary conditions for the X components on the Y particles (should they collide). For a rigorous construction of the analogous coupled process for Dyson Brownian motions with \(\beta >2\), see Sect. 4 of [13]. In fact, for certain values of the parameters, the construction of the process with the generator above can be reduced to the results of [13]; a more detailed account will appear as part of the author’s Ph.D. thesis [2].

As just mentioned, such a coupling was constructed for Dyson Brownian motion with \(\beta > 2\) in [13], and in [3] (see also [23]) for copies of general one-dimensional diffusion processes (including, in particular, the squared Bessel and Jacobi cases for \(\beta =2\), the former corresponding to the Laguerre process of this note), when the interaction between the two levels consists entirely of local hard reflection and the transition kernels are explicit. Given such two-level couplings, one can then iterate to construct a multilevel process in a Gelfand–Tsetlin pattern, as in [25], which initiated this program (see also [3, 13, 19]). For a different type of coupling for \(\beta =2\) Dyson Brownian motion, which preceded [15] and is related to the Robinson–Schensted correspondence, see [16, 17] and the related work [5].

The measure \({\mathcal {M}}^{Jac,n}_{a,b,\beta }\) is the unique stationary measure of (2). This follows from the smoothness and positivity of the transition density \(p^{n,\beta ,a,b}_t(x,y)\) of \(Q^{(n)}_{a,b,\theta }(t)\) with respect to Lebesgue measure (see Proposition 4.1 of [6]; for this to apply, we further need to restrict to \(a,b > \frac{1}{\beta }\)), together with the fact that two distinct ergodic measures must be mutually singular (see [24]). Combining this with Theorem 1.1, we immediately get:

Corollary 1.4

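For \(\beta \ge 1\) and \(a,b > 1\), and with \(\theta =\frac{\beta }{2}\),

$$\begin{aligned} {\mathcal {M}}^{Jac,n+1}_{a-1,b-1,\beta }\Lambda ^{\theta }_{n,n+1}={\mathcal {M}}^{Jac,n}_{a,b,\beta }. \end{aligned}$$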

Proof

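From (8) and the stationarity of \({\mathcal {M}}^{Jac,n+1}_{a-1,b-1,\beta }\) for \(Q^{(n+1)}_{a-1,b-1,\theta }(t)\), we obtain \({\mathcal {M}}^{Jac,n+1}_{a-1,b-1,\beta }\Lambda ^{\theta }_{n,n+1}Q^{(n)}_{a,b,\theta }(t)={\mathcal {M}}^{Jac,n+1}_{a-1,b-1,\beta }Q^{(n+1)}_{a-1,b-1,\theta }(t)\Lambda ^{\theta }_{n,n+1}={\mathcal {M}}^{Jac,n+1}_{a-1,b-1,\beta }\Lambda ^{\theta }_{n,n+1}\). Hence, the probability measure \({\mathcal {M}}^{Jac,n+1}_{a-1,b-1,\beta }\Lambda ^{\theta }_{n,n+1}\) is stationary for \(Q^{(n)}_{a,b,\theta }(t)\), and by uniqueness it coincides with \({\mathcal {M}}^{Jac,n}_{a,b,\beta }\).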

\(\square \)
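For n = 1, the corollary can be probed numerically. The Python sketch below assumes the \(\beta \)-Jacobi density \(\propto \prod _i x_i^{\frac{\beta a}{2}-1}(1-x_i)^{\frac{\beta b}{2}-1}\prod _{i<j}|x_j-x_i|^{\beta }\) and the kernel \(\lambda ^{\theta }_{1,2}(x,y)=\frac{\Gamma (2\theta )}{\Gamma (\theta )^2}(x_2-x_1)^{1-2\theta }(y-x_1)^{\theta -1}(x_2-y)^{\theta -1}\). It integrates \(x\sim {\mathcal {M}}^{Jac,2}_{a-1,b-1,\beta }\) against the kernel by a crude midpoint rule. It then compares the resulting unnormalized density of y with \(y^{\theta a-1}(1-y)^{\theta b-1}\), the one-particle ensemble \({\mathcal {M}}^{Jac,1}_{a,b,\beta }\). The comparison uses a ratio at two points, so all normalization constants cancel. The function names, evaluation points and parameter values are illustrative choices.

```python
import math


def jacobi_weight(x, a, b, theta):
    """Unnormalized beta-Jacobi density (beta = 2 theta) at an ordered point x in (0, 1)^n."""
    value = 1.0
    for xi in x:
        value *= xi ** (theta * a - 1) * (1 - xi) ** (theta * b - 1)
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            value *= abs(x[j] - x[i]) ** (2 * theta)
    return value


def kernel_n1(x1, x2, y, theta):
    """Dixon-Anderson density lambda^theta_{1,2}((x1, x2), y) for x1 < y < x2."""
    const = math.gamma(2 * theta) / math.gamma(theta) ** 2
    return const * (x2 - x1) ** (1 - 2 * theta) * ((y - x1) * (x2 - y)) ** (theta - 1)


def pushed_density(y, a, b, theta, grid=200):
    """Unnormalized density at y of M^{Jac,2}_{a-1,b-1,beta} Lambda_{1,2}, by a midpoint rule."""
    h1, h2 = y / grid, (1 - y) / grid
    total = 0.0
    for k in range(grid):
        x1 = (k + 0.5) * h1              # x1 ranges over (0, y)
        for m in range(grid):
            x2 = y + (m + 0.5) * h2      # x2 ranges over (y, 1)
            total += jacobi_weight((x1, x2), a - 1, b - 1, theta) * kernel_n1(x1, x2, y, theta)
    return total * h1 * h2


def ratio_error(a, b, theta, y_left=0.3, y_right=0.5):
    """Relative gap between density ratios of the pushed-forward measure and of M^{Jac,1}_{a,b,beta}."""
    def target(y):
        return y ** (theta * a - 1) * (1 - y) ** (theta * b - 1)

    numeric = pushed_density(y_left, a, b, theta) / pushed_density(y_right, a, b, theta)
    return abs(numeric / (target(y_left) / target(y_right)) - 1)


print(ratio_error(3.0, 3.0, 1.5))   # expected to be small (quadrature error only)
```

Only \(\theta \ge 1\) is used here, because for \(\theta <1\) the kernel is singular at the endpoints and the midpoint rule converges too slowly for a meaningful check.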

Before closing this introduction, we remark that, to establish Theorem 1.1, we follow the strategy of [20]: we rely on the explicit action of the generators and of the integral operator \(\Lambda ^{\theta }_{n,n+1}\) on Jack polynomials. Together with an exponential moment estimate, this allows us to apply the method of moments. We note that, although the \(\beta \)-Laguerre and \(\beta \)-Jacobi diffusions look more complicated than \(\beta \)-Dyson Brownian motion, the main computation, performed in Step 1 of the proof below, is actually simpler than the one in [20].

2 Preliminaries on Jack Polynomials

We collect some facts about the Jack polynomials \(J_{\lambda }(z;\theta )\), which, as already mentioned, play a key role in obtaining these intertwining relations. We mainly follow [20], which in turn follows [4] (note that there is a misprint in [20]: a factor of \(\frac{1}{2}\) is missing from Eq. (2.7) therein; cf. Eq. (2.13d) in [4]). The \(J_{\lambda }(z;\theta )\) are defined to be the (unique up to normalization) symmetric polynomial eigenfunctions in n variables of the differential operator \({\mathcal {D}}^{(n),\theta }\),

$$\begin{aligned} {\mathcal {D}}^{(n),\theta }=\sum _{i=1}^{n}z_i^2\frac{\partial ^2}{\partial z_i^2}+2\theta \sum _{i\ne j}\frac{z_i^2}{z_i-z_j}\frac{\partial }{\partial z_i}, \end{aligned}$$

indexed by partitions \(\lambda =(\lambda _1 \ge \lambda _2\ge \cdots )\) of length l with eigenvalue \(\hbox {eval}(\lambda ,n,\theta )=2B(\lambda ')-2\theta B(\lambda )+2\theta (n-1)|\lambda |\) where \(B(\lambda )=\sum (i-1)\lambda _i=\sum \left( {\begin{array}{c}\lambda '_i\\ 2\end{array}}\right) \) and \(\lambda '\) is the conjugate partition. With \(1_n\) denoting a row vector of n 1s, we have the normalization,

Define the following differential operators,

Then, the action of these operators on the \(J_{\lambda }(z;\theta )\)’s is given explicitly by (see [4] Eqs. (2.13a), (2.13d) and (2.13b), respectively),

where \(\lambda _{(i)}\) is the sequence given by \(\lambda _{(i)}=(\lambda _1,\ldots ,\lambda _{i-1},\lambda _i-1,\lambda _{i+1},\ldots )\) (in case \(i=l\) and \(\lambda _i=1\), we drop \(\lambda _l\) from \(\lambda \)) and the combinatorial coefficients \(\left( {\begin{array}{c}\lambda \\ \rho \end{array}}\right) _{\theta }\) are defined by the following expansion (we set \(\left( {\begin{array}{c}\lambda \\ \lambda _{(i)}\end{array}}\right) _{\theta }=0\) in case \(\lambda _{(i)}\) is no longer a non-increasing positive sequence),

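but whose exact values will not be required in what follows. Finally, we need the following fact about the action of \(\Lambda ^{\theta }_{n,n+1}\) on \(J_{\lambda }(\cdot ;\theta )\) (see [18] Sect. 6):

$$\begin{aligned} \Lambda ^{\theta }_{n,n+1}J_{\lambda }(\cdot ;\theta )=c(\lambda ,n,\theta )J_{\lambda }(\cdot ;\theta ), \end{aligned}\tag{17}$$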

where

3 Proof

We split the proof into four steps, following the strategy laid out in [20].

Proof of Theorem 1.1

First, note that we can write the operators \({\mathcal {L}}^{(n)}_{d,\theta }\) and \({\mathcal {A}}^{(n)}_{a,b,\theta }\) as follows,

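Step 1 The aim of this step is to show the intertwining relation at the level of the infinitesimal generators acting on Jack polynomials, namely, that

$$\begin{aligned} {\mathcal {L}}^{(n+1)}_{d-2,\theta }\Lambda ^{\theta }_{n,n+1}J_{\lambda }(\cdot ;\theta )=\Lambda ^{\theta }_{n,n+1}{\mathcal {L}}^{(n)}_{d,\theta }J_{\lambda }(\cdot ;\theta ), \end{aligned}\tag{21}$$

$$\begin{aligned} {\mathcal {A}}^{(n+1)}_{a-1,b-1,\theta }\Lambda ^{\theta }_{n,n+1}J_{\lambda }(\cdot ;\theta )=\Lambda ^{\theta }_{n,n+1}{\mathcal {A}}^{(n)}_{a,b,\theta }J_{\lambda }(\cdot ;\theta ). \end{aligned}\tag{22}$$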

We will show relation (22) for the Jacobi case and at the end of Step 1 indicate how to obtain (21).

(RHS): We start by computing \({\mathcal {A}}^{(n)}_{a,b,\theta }J_{\lambda }(y;\theta )\).

Now, apply \(\Lambda ^{\theta }_{n,n+1}\) to obtain that

Now, to check that \((\hbox {LHS})=(\hbox {RHS})\), we verify that the coefficients of \(J_{\lambda }\) and of \(J_{\lambda _{(i)}}\), for all i, coincide on both sides.

-

First, the coefficients of \(J_{\lambda }(x;\theta )\):

(LHS): \(-\,2c(\lambda ,n,\theta )\hbox {eval}(\lambda ,n+1,\theta )-c(\lambda ,n,\theta )|\lambda |2 \theta (a+b-2)\).

(RHS): \(-\,2c(\lambda ,n,\theta )\hbox {eval}(\lambda ,n,\theta )-c(\lambda ,n,\theta )|\lambda |2 \theta (a+b)\).

These are equal iff:

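$$\begin{aligned} \frac{-\,2\hbox {eval}(\lambda ,n,\theta )+2\hbox {eval}(\lambda ,n+1,\theta )}{4\theta |\lambda |}=1, \end{aligned}$$which is easily checked from the explicit expression of \(\hbox {eval}(\lambda ,n,\theta )\): only the term \(2\theta (n-1)|\lambda |\) depends on n, so that \(\hbox {eval}(\lambda ,n+1,\theta )-\hbox {eval}(\lambda ,n,\theta )=2\theta |\lambda |\).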

-

Now, for the coefficients of \(J_{\lambda _{(i)}}(x;\theta )\):

(LHS):

(RHS):

These are equal iff:

We first claim that

This immediately follows from

Hence, we need to check that the following is true,

which is obvious.
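The check of the \(J_{\lambda }\) coefficients in the first item above reduces to \(\hbox {eval}(\lambda ,n+1,\theta )-\hbox {eval}(\lambda ,n,\theta )=2\theta |\lambda |\). The Python sketch below (helper names are ours) verifies this in exact rational arithmetic for all partitions of size at most 6. It also verifies the identity \(B(\lambda )=\sum _i\left( {\begin{array}{c}\lambda '_i\\ 2\end{array}}\right) \) and the equality of the two \(J_{\lambda }\) coefficients. In that comparison, the common factor \(c(\lambda ,n,\theta )\) is divided out, which assumes it is nonzero. The values of \(\theta \), a and b are arbitrary.

```python
from fractions import Fraction


def partitions(total, largest=None):
    """Yield the partitions of `total` as non-increasing tuples."""
    if largest is None:
        largest = total
    if total == 0:
        yield ()
        return
    for first in range(min(total, largest), 0, -1):
        for rest in partitions(total - first, first):
            yield (first,) + rest


def conjugate(lam):
    """Conjugate partition lambda'."""
    return tuple(sum(1 for part in lam if part > i) for i in range(lam[0])) if lam else ()


def B(lam):
    """B(lambda) = sum_i (i - 1) lambda_i, with 1-based index i."""
    return sum(i * part for i, part in enumerate(lam))


def eigenvalue(lam, n, theta):
    """eval(lambda, n, theta) = 2 B(lambda') - 2 theta B(lambda) + 2 theta (n - 1) |lambda|."""
    return 2 * B(conjugate(lam)) - 2 * theta * B(lam) + 2 * theta * (n - 1) * sum(lam)


def check_jack_coefficients(max_size, max_n, theta, a, b):
    """Return the number of (lambda, n) pairs checked; an AssertionError signals a failure."""
    checked = 0
    for size in range(1, max_size + 1):
        for lam in partitions(size):
            assert B(lam) == sum(col * (col - 1) // 2 for col in conjugate(lam))
            for n in range(len(lam), max_n + 1):
                gap = eigenvalue(lam, n + 1, theta) - eigenvalue(lam, n, theta)
                assert gap == 2 * theta * size
                lhs = -2 * eigenvalue(lam, n + 1, theta) - size * 2 * theta * (a + b - 2)
                rhs = -2 * eigenvalue(lam, n, theta) - size * 2 * theta * (a + b)
                assert lhs == rhs
                checked += 1
    return checked


print(check_jack_coefficients(6, 5, Fraction(3, 4), Fraction(5, 2), Fraction(7, 3)))
```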

To obtain (21), we only need to consider the coefficients of the \(J_{\lambda _{(i)}}\)’s (since the operators \({\mathcal {D}}^{(n),\theta }\) and \({\mathcal {B}}_3^{(n)}\), which produce the \(J_{\lambda }\) terms, are absent from \({\mathcal {L}}^{(n)}_{d,\theta }\)) and replace a by \(\frac{d}{2}\).
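The origin of the two kinds of coefficients can be seen by regrouping the formal generators of (1) and (2) as below. This is offered as an illustration only. The operator \({\mathcal {R}}^{(n),\theta }_{a}\) is a label introduced here and need not coincide term by term with the operators \({\mathcal {B}}\) used in the proof. We also assume the Jack operator to be normalized as \({\mathcal {D}}^{(n),\theta }=\sum _i x_i^2\partial ^2_{x_i}+2\theta \sum _{i\ne j}\frac{x_i^2}{x_i-x_j}\partial _{x_i}\), consistent with the eigenvalue \(\hbox {eval}(\lambda ,n,\theta )\) of Sect. 2.

$$\begin{aligned} {\mathcal {A}}^{(n)}_{a,b,\theta }&=-2{\mathcal {D}}^{(n),\theta }-2\theta (a+b)\sum _{i=1}^{n}x_i\frac{\partial }{\partial x_i}+{\mathcal {R}}^{(n),\theta }_{a},\qquad {\mathcal {L}}^{(n)}_{d,\theta }={\mathcal {R}}^{(n),\theta }_{d/2},\\ {\mathcal {R}}^{(n),\theta }_{a}&=\sum _{i=1}^{n}2x_i\frac{\partial ^2}{\partial x_i^2}+4\theta \sum _{i\ne j}\frac{x_i}{x_i-x_j}\frac{\partial }{\partial x_i}+2\theta a\sum _{i=1}^{n}\frac{\partial }{\partial x_i}. \end{aligned}$$

The operator \({\mathcal {D}}^{(n),\theta }\) acts on \(J_{\lambda }\) by the scalar \(\hbox {eval}(\lambda ,n,\theta )\). The Euler operator \(\sum _i x_i\partial _{x_i}\) acts on the homogeneous polynomial \(J_{\lambda }\) by \(|\lambda |\). Every term of \({\mathcal {R}}^{(n),\theta }_{a}\) lowers the degree of a homogeneous polynomial by one, so only this part can produce the \(J_{\lambda _{(i)}}\) terms. This matches the coefficients \(-2\,\hbox {eval}(\lambda ,n,\theta )-2\theta (a+b)|\lambda |\) of \(J_{\lambda }\) compared above. Since \({\mathcal {L}}^{(n)}_{d,\theta }\) consists of the degree-lowering part alone, with a replaced by d/2, it is also consistent with the reduction of (21) to the \(J_{\lambda _{(i)}}\) coefficients.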

To prove the analogous result for \(\beta \)-Dyson Brownian motions, one needs to observe, as done in [20], that the generator of n particle \(\beta \)-Dyson Brownian motion \(L^{(n)}_{\theta }\) can be written as a commutator, namely \(L^{(n)}_{\theta }=[{\mathcal {B}}_1^{(n)},{\mathcal {B}}_2^{(n),\theta }]={\mathcal {B}}_1^{(n)}{\mathcal {B}}_2^{(n),\theta }-{\mathcal {B}}_2^{(n),\theta }{\mathcal {B}}_1^{(n)}\).

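Step 2 We obtain an exponential moment estimate for \({\mathbb {E}}_{x}[\hbox {e}^{\epsilon \Vert X^{(n)}(t)\Vert }]\). In the Jacobi case, this quantity is obviously finite by compactness of \([0,1]^n\). In the Laguerre case, we proceed as follows. Writing \(X^{(n)}\) for the solution to (1), letting \(\Vert \cdot \Vert \) denote the \(l_1\) norm and recalling that all entries of \(X^{(n)}\) are nonnegative, we obtain

$$\begin{aligned} \mathrm{d}\Vert X^{(n)}(t)\Vert =\sum _{i=1}^{n}2\sqrt{X_i^{(n)}(t)}\,\mathrm{d}B_i^{(n)}(t)+\beta \sum _{i=1}^{n}\left( \frac{d}{2}+\sum _{j\ne i}\frac{2X_i^{(n)}(t)}{X_i^{(n)}(t)-X_j^{(n)}(t)}\right) \mathrm{d}t. \end{aligned}$$

Note that, pairing the terms indexed by (i, j) and (j, i),

$$\begin{aligned} \sum _{i=1}^{n}\sum _{j\ne i}\frac{X_i^{(n)}(t)}{X_i^{(n)}(t)-X_j^{(n)}(t)}=\sum _{1\le i<j\le n}\frac{X_i^{(n)}(t)-X_j^{(n)}(t)}{X_i^{(n)}(t)-X_j^{(n)}(t)}=\left( {\begin{array}{c}n\\ 2\end{array}}\right) , \end{aligned}$$

and that, by Lévy’s characterization, the local martingale \((M(t),t\ge 0)\) defined by

$$\begin{aligned} M(t)=\sum _{i=1}^{n}\int _0^t\sqrt{\frac{X_i^{(n)}(s)}{\Vert X^{(n)}(s)\Vert }}\,\mathrm{d}B_i^{(n)}(s) \end{aligned}$$

is a standard Brownian motion \((W(t),t\ge 0)\), since its quadratic variation is \(\int _0^t\sum _{i}X_i^{(n)}(s)/\Vert X^{(n)}(s)\Vert \,\mathrm{d}s=t\). We thus obtain

$$\begin{aligned} \mathrm{d}\Vert X^{(n)}(t)\Vert =2\sqrt{\Vert X^{(n)}(t)\Vert }\,\mathrm{d}W(t)+\beta \left( \frac{d}{2}n+2\left( {\begin{array}{c}n\\ 2\end{array}}\right) \right) \mathrm{d}t. \end{aligned}$$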
Thus, \(\Vert X^{(n)}(t)\Vert \) is a squared Bessel process of dimension \(\hbox {dim}_{\beta ,n,d}=\beta \left( \frac{d}{2}n+2\left( {\begin{array}{c}n\\ 2\end{array}}\right) \right) \). Hence, it follows from standard estimates that, for \(\epsilon >0\) small enough, \({\mathbb {E}}_{x}[\hbox {e}^{\epsilon \Vert X^{(n)}(t)\Vert }]<\infty \) (see [21] Chapter IX.1 or Proposition 2.1 of [10]). In case \(\hbox {dim}_{\beta ,n,d}\) is an integer, the result is an immediate consequence of Fernique’s theorem [11], since \(\Vert X^{(n)}(t)\Vert \) is then distributed as the squared Euclidean norm of a Gaussian vector.
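The two facts used in Step 2 can be sanity-checked numerically. The Python sketch below (function names are ours) evaluates the drift coefficients of (1) at a point with distinct coordinates and compares their sum with \(\hbox {dim}_{\beta ,n,d}\). This is the cancellation \(\sum _i\sum _{j\ne i}\frac{x_i}{x_i-x_j}=\left( {\begin{array}{c}n\\ 2\end{array}}\right) \) obtained by pairing terms. The sketch also encodes the standard Laplace transform of a squared Bessel process Z of dimension \(\delta \) (see, e.g., [21]), \({\mathbb {E}}_{x}[\hbox {e}^{-sZ_t}]=(1+2st)^{-\delta /2}\hbox {e}^{-sx/(1+2st)}\), continued to \(s=-\epsilon \). This makes the condition on \(\epsilon \) explicit: the exponential moment at time t is finite exactly when \(2\epsilon t<1\).

```python
import math


def laguerre_drifts(x, beta, d):
    """Drift coefficients of (1) at a point x with distinct coordinates."""
    n = len(x)
    return [
        beta * (d / 2 + sum(2 * x[i] / (x[i] - x[j]) for j in range(n) if j != i))
        for i in range(n)
    ]


def besq_dimension(beta, n, d):
    """dim_{beta,n,d} = beta * (d n / 2 + 2 * C(n, 2))."""
    return beta * (d * n / 2 + 2 * math.comb(n, 2))


def besq_exponential_moment(x0, t, eps, dim):
    """E_{x0}[exp(eps Z_t)] for a squared Bessel process Z of dimension dim (infinite if 2 eps t >= 1)."""
    if 2 * eps * t >= 1:
        return math.inf
    return (1 - 2 * eps * t) ** (-dim / 2) * math.exp(eps * x0 / (1 - 2 * eps * t))


x = [0.3, 1.1, 2.0, 4.5]
print(sum(laguerre_drifts(x, 1.5, 2.5)), besq_dimension(1.5, 4, 2.5))  # agree up to rounding
```

For \(\beta =2\) and n = 1 the dimension reduces to d, as it should for a single BESQ(d) process.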

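Step 3 We now lift the intertwining relation to the semigroups acting on the Jack polynomials, namely,

$$\begin{aligned} P^{(n+1)}_{d-2,\theta }(t)\Lambda ^{\theta }_{n,n+1}J_{\lambda }(\cdot ;\theta )&=\Lambda ^{\theta }_{n,n+1}P^{(n)}_{d,\theta }(t)J_{\lambda }(\cdot ;\theta ),\\ Q^{(n+1)}_{a-1,b-1,\theta }(t)\Lambda ^{\theta }_{n,n+1}J_{\lambda }(\cdot ;\theta )&=\Lambda ^{\theta }_{n,n+1}Q^{(n)}_{a,b,\theta }(t)J_{\lambda }(\cdot ;\theta ). \end{aligned}$$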

The proof follows almost word for word the elegant argument given in [20]. We reproduce it here for the convenience of the reader, elaborating a bit on some parts and, for concreteness, considering only the Laguerre case. Applying Itô’s formula to \(J_{\lambda }(X^{(n)}(t);\theta )\) and taking expectations, we obtain the identity below. Here the stochastic integral term is a true martingale, since its expected quadratic variation is finite by the exponential moment estimate of Step 2.

$$\begin{aligned} P^{(n)}_{d,\theta }(t)J_{\lambda }(\cdot ;\theta )(x)=J_{\lambda }(x;\theta )+\int _0^t P^{(n)}_{d,\theta }(s)\left( {\mathcal {L}}^{(n)}_{d,\theta }J_{\lambda }(\cdot ;\theta )\right) (x)\,\mathrm{d}s. \end{aligned}\tag{24}$$

By (23), \({\mathcal {L}}^{(n)}_{d,\theta }J_{\lambda }(\cdot ;\theta )\) is a linear combination of Jack polynomials \(J_{\kappa }(\cdot ;\theta )\) indexed by partitions \(\kappa \) with \(\kappa _i\le \lambda _i\) for all \(i \le l\); we write \(\kappa \le \lambda \) if this holds. The finite-dimensional vector space spanned by the Jack polynomials \(J_{\kappa }(\cdot ;\theta )\) with \(\kappa \le \lambda \) is therefore invariant under \({\mathcal {L}}^{(n)}_{d,\theta }\), since \(\kappa '\le \kappa \) and \(\kappa \le \lambda \) imply \(\kappa '\le \lambda \). We denote by \(M_2\) the matrix of the action of \({\mathcal {L}}^{(n)}_{d,\theta }\) on this space.

Moreover, each \(J_{\kappa }(\cdot ;\theta )\) for \(\kappa \le \lambda \) obeys (24), and thus, we obtain the following system of integral equations, with \(f_{\kappa }(t)=P^{(n)}_{d,\theta }(t)J_{\kappa }(\cdot ;\theta )\),

whose unique solution is given by the matrix exponential,

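Now, observe that, by (17), the Markov kernel \(\Lambda ^{\theta }_{n,n+1}\) also acts on the aforementioned finite-dimensional vector space of Jack polynomials as a matrix, which we denote by \(M_1\). We also denote by \(M_3\) the matrix of the action of \({\mathcal {L}}^{(n+1)}_{d-2,\theta }\). The intertwining relation (21) can then be written in terms of matrices as \(M_3M_1=M_1M_2\). We make use of the following elementary fact about finite-dimensional square matrices, which follows from \(M_3^kM_1=M_1M_2^k\) for all \(k\ge 0\) (by induction on k):

$$\begin{aligned} M_3M_1=M_1M_2\ \Longrightarrow \ \hbox {e}^{tM_3}M_1=M_1\hbox {e}^{tM_2},\quad \forall t\ge 0. \end{aligned}$$

Using this fact and display (25), along with its analog with \(M_2\) replaced by \(M_3\), we get that

$$\begin{aligned} P^{(n+1)}_{d-2,\theta }(t)\Lambda ^{\theta }_{n,n+1}J_{\lambda }(\cdot ;\theta )=\Lambda ^{\theta }_{n,n+1}P^{(n)}_{d,\theta }(t)J_{\lambda }(\cdot ;\theta ). \end{aligned}$$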
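The elementary matrix fact used in Step 3 can be checked exactly on a toy example. Because \(M_3^kM_1=M_1M_2^k\) for every k, even the truncated exponential series agree term by term. The Python sketch below therefore compares partial sums in exact rational arithmetic. The 2 x 2 upper-triangular matrices are hypothetical, not the Jack-basis matrices of the text. Their triangular shape only loosely mimics the fact that the generators send \(J_{\kappa }\) to combinations of \(J_{\kappa '}\) with \(\kappa '\le \kappa \).

```python
from fractions import Fraction
from math import factorial


def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def truncated_exp(M, t, terms):
    """Partial sum of (t M)^k / k! over k < terms, computed exactly with Fractions."""
    size = len(M)
    identity = [[Fraction(int(i == j)) for j in range(size)] for i in range(size)]
    result = [row[:] for row in identity]
    power = [row[:] for row in identity]
    for k in range(1, terms):
        power = matmul(power, M)
        coeff = Fraction(t) ** k / factorial(k)
        result = [[result[i][j] + coeff * power[i][j] for j in range(size)] for i in range(size)]
    return result


# Hypothetical toy matrices; M3 is built so that M3 M1 = M1 M2 holds by construction.
M1 = [[1, 1], [0, 1]]
M1_inv = [[1, -1], [0, 1]]
M2 = [[1, 2], [0, 3]]
M3 = matmul(matmul(M1, M2), M1_inv)

t = Fraction(1, 2)
left = matmul(truncated_exp(M3, t, 15), M1)
right = matmul(M1, truncated_exp(M2, t, 15))
print(matmul(M3, M1) == matmul(M1, M2), left == right)   # True True
```

Exact rationals are used so that the term-by-term identity is visible as literal equality rather than agreement up to rounding.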

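Step 4 We again follow [20]. Recall (see [20] and the references therein) that any symmetric polynomial p in n variables can be written as a finite linear combination of Jack polynomials in n variables. Hence, for any such p,

$$\begin{aligned} P^{(n+1)}_{d-2,\theta }(t)\Lambda ^{\theta }_{n,n+1}p=\Lambda ^{\theta }_{n,n+1}P^{(n)}_{d,\theta }(t)p,\qquad Q^{(n+1)}_{a-1,b-1,\theta }(t)\Lambda ^{\theta }_{n,n+1}p=\Lambda ^{\theta }_{n,n+1}Q^{(n)}_{a,b,\theta }(t)p. \end{aligned}\tag{26}$$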

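Now, any probability measure \(\mu \) on \(W^n(I)\) gives rise to a symmetrized probability measure \(\mu ^\mathrm{symm}\) on \(I^n\) as follows,

$$\begin{aligned} \mu ^\mathrm{symm}(\mathrm{d}z_1,\ldots ,\mathrm{d}z_n)=\frac{1}{n!}\mu (\mathrm{d}z_{(1)},\ldots ,\mathrm{d}z_{(n)}), \end{aligned}$$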
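where \(z_{(1)}\le z_{(2)}\le \cdots \le z_{(n)}\) are the order statistics of \((z_1,z_2,\ldots ,z_n)\). Moreover, for every (not necessarily symmetric) polynomial q in n variables, with \(S_n\) denoting the symmetric group on n symbols, we have

$$\begin{aligned} \int _{I^n}q(z)\,\mu ^\mathrm{symm}(\mathrm{d}z)=\int _{W^n(I)}\frac{1}{n!}\sum _{\sigma \in S_n}q(z_{\sigma (1)},\ldots ,z_{\sigma (n)})\,\mu (\mathrm{d}z). \end{aligned}\tag{27}$$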
Note that now \(p(z)=\frac{1}{n!}\sum _{\sigma \in S_n}^{}q(z_{\sigma (1)},\ldots ,z_{\sigma (n)})\) is a symmetric polynomial in n variables. Thus, from (26) and (27), all moments of the symmetrized versions of both sides of (7) and (8) coincide. We now invoke Theorem 1.3 of [8] (and the discussion following it), together with our exponential moment estimate from Step 2 and the fact that \((\Lambda ^{\theta }_{n,n+1}f)(z)\le \hbox {e}^{\epsilon \Vert z\Vert _1}\) for \(f(y)=\hbox {e}^{\epsilon \Vert y\Vert _1}\). The latter holds since all coordinates are nonnegative and interlacing gives \(\Vert y\Vert _1\le \Vert z\Vert _1\). We obtain that the symmetrized versions of both sides of (7) and (8) coincide. Here, for each \(x\in W^{n+1}\) and \(t\ge 0\), we view \(P^{(n+1)}_{d-2,\theta }(t)\Lambda ^{\theta }_{n,n+1}\) and \(\Lambda ^{\theta }_{n,n+1}P^{(n)}_{d,\theta }(t)\) (and their Jacobi analogues) as probability measures on \(W^n\). In fact, by the discussion after Theorem 1.3 of [8], since we work in \([0,\infty )^n\) and not the full space \({\mathbb {R}}^n\), we do not need the symmetrized versions of these measures to have exponential moments; it suffices that they integrate \(\hbox {e}^{\epsilon \sqrt{\Vert z\Vert }}\). Finally, a probability measure on \(W^n\) is the image of its symmetrized version under the map to order statistics, so the measures themselves coincide. The theorem is now proven. \(\square \)
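For a finitely supported measure (a toy assumption, far from the measures of the theorem), the symmetrization and the identity (27) can be written out exactly. The Python sketch below (names are ours) spreads each atom of \(\mu \) uniformly over the permutations of its coordinates. It checks that integrating a non-symmetric polynomial q against \(\mu ^\mathrm{symm}\) equals integrating its symmetrization p against \(\mu \). It also checks that \(\mu \) is recovered from \(\mu ^\mathrm{symm}\) by passing to order statistics, which is the last step of the argument above. An atom with coinciding coordinates is included to show that no mass is lost.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def symmetrize_measure(mu):
    """Spread each atom of mu (a dict {ordered point: weight} on W^n) over all coordinate permutations."""
    out = {}
    for point, weight in mu.items():
        n = len(point)
        for sigma in permutations(range(n)):
            image = tuple(point[s] for s in sigma)
            out[image] = out.get(image, Fraction(0)) + Fraction(weight) / factorial(n)
    return out


def symmetrize_polynomial(q, n):
    """p(z) = (1/n!) * sum over sigma in S_n of q(z_sigma(1), ..., z_sigma(n))."""
    def p(z):
        total = sum(Fraction(q(*(z[s] for s in sigma))) for sigma in permutations(range(n)))
        return total / factorial(n)
    return p


def integrate(f, measure):
    """Integral of f against a finitely supported measure."""
    return sum(weight * f(point) for point, weight in measure.items())


def order_statistics_image(measure):
    """Image of a measure on I^n under z -> (z_(1), ..., z_(n))."""
    out = {}
    for point, weight in measure.items():
        key = tuple(sorted(point))
        out[key] = out.get(key, Fraction(0)) + weight
    return out


def q(z1, z2):
    return z1 ** 2 * z2 ** 3 + z1      # a non-symmetric polynomial in two variables


mu = {(1, 2): Fraction(1, 3), (0, 3): Fraction(1, 2), (2, 2): Fraction(1, 6)}
mu_symm = symmetrize_measure(mu)
print(integrate(lambda z: q(*z), mu_symm) == integrate(symmetrize_polynomial(q, 2), mu))  # True
print(order_statistics_image(mu_symm) == mu)                                               # True
```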

References

[1] Anderson, G.W.: A short proof of Selberg’s generalized beta formula. Forum Math. 3, 415–417 (1991)

[2] Assiotis, T.: PhD thesis, University of Warwick, in preparation (2017)

[3] Assiotis, T., O’Connell, N., Warren, J.: Interlacing diffusions. arXiv:1607.07182 (2016)

[4] Baker, T.H., Forrester, P.J.: The Calogero–Sutherland model and generalized classical polynomials. Commun. Math. Phys. 188, 175–216 (1997)

[5] Bougerol, P., Jeulin, T.: Paths in Weyl chambers and random matrices. Probab. Theory Relat. Fields 124(4), 517–543 (2002)

[6] Demni, N.: \(\beta \)-Jacobi processes. Adv. Pure Appl. Math. 1, 325–344 (2010)

[7] Demni, N.: Radial Dunkl processes: existence and uniqueness, hitting time, beta processes and random matrices. arXiv:0707.0367 (2007)

[8] de Jeu, M.: Determinate multidimensional measures, the extended Carleman theorem and quasi-analytic weights. Ann. Probab. 31(3), 1205–1227 (2003)

[9] Dixon, A.L.: Generalizations of Legendre’s formula \(KE^{\prime }-(K-E)K^{\prime }=\frac{1}{2}\pi \). Proc. Lond. Math. Soc. 3, 206–224 (1905)

[10] Donati-Martin, C., Rouault, A., Yor, M., Zani, M.: Large deviations for squares of Bessel and Ornstein–Uhlenbeck processes. Probab. Theory Relat. Fields 129, 261–289 (2004)

[11] Fernique, X.: Intégrabilité des vecteurs gaussiens. Comptes Rendus de l’Académie des Sciences Paris A-B, A1698–A1699 (1970)

[12] Forrester, P.J.: Log-gases and Random Matrices. Princeton University Press, Princeton (2010)

[13] Gorin, V., Shkolnikov, M.: Multilevel Dyson Brownian motions via Jack polynomials. Probab. Theory Relat. Fields 163, 413–463 (2015)

[14] Graczyk, P., Małecki, J.: Strong solutions of non-colliding particle systems. Electron. J. Probab. 19, 1–21 (2014)

[15] König, W., O’Connell, N.: Eigenvalues of the Laguerre process as non-colliding squared Bessel processes. Electron. Commun. Probab. 6, 107–114 (2001)

[16] O’Connell, N.: A path-transformation for random walks and the Robinson–Schensted correspondence. Trans. Am. Math. Soc. 355, 3669–3697 (2003)

[17] O’Connell, N., Yor, M.: A representation for non-colliding random walks. Electron. Commun. Probab. 7, 1–12 (2002)

[18] Okounkov, A., Olshanski, G.: Shifted Jack polynomials, binomial formula, and applications. Math. Res. Lett. 4, 69–78 (1997)

[19] Pal, S., Shkolnikov, M.: Intertwining diffusions and wave equations. arXiv:1306.0857 (2015)

[20] Ramanan, K., Shkolnikov, M.: Intertwinings of \(\beta \)-Dyson Brownian motions of different dimensions. arXiv:1608.01597 (2016)

[21] Revuz, D., Yor, M.: Continuous Martingales and Brownian Motion. A Series of Comprehensive Studies in Mathematics, vol. 293, 3rd edn. Springer, New York (1999)

[22] Rösler, M., Voit, M.: Markov processes related with Dunkl operators. Adv. Appl. Math. 21, 575–643 (1998)

[23] Sun, Y.: Laguerre and Jacobi analogues of the Warren process. arXiv:1610.01635 (2016)

[24] Walters, P.: An Introduction to Ergodic Theory. Graduate Texts in Mathematics, vol. 79. Springer, New York (1982)

[25] Warren, J.: Dyson’s Brownian motions, intertwining and interlacing. Electron. J. Probab. 12, 573–590 (2007)

Acknowledgements

I would like to thank Jon Warren for several useful comments on an earlier draft of this note and also Neil O’Connell and Nizar Demni for some historical remarks. Finally, I would like to thank an anonymous referee for detailed comments and suggestions that led to an improvement of the exposition. Financial support through the MASDOC DTC Grant No. EP/H023364/1 is gratefully acknowledged.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Assiotis, T. Intertwinings for General \(\beta \)-Laguerre and \(\beta \)-Jacobi Processes. J Theor Probab 32, 1880–1891 (2019). https://doi.org/10.1007/s10959-018-0842-0
