Abstract

In this paper, we analyze a speed restarting scheme for the inertial dynamics with Hessian-driven damping, introduced by Attouch et al. (J Differ Equ 261(10):5734–5783, 2016). We establish a linear convergence rate for the function values along the restarted trajectories. Numerical experiments suggest that the Hessian-driven damping and the restarting scheme together improve the performance of the dynamics and corresponding iterative algorithms in practice.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In convex optimization, first-order methods are iterative algorithms that use function values and (generalized) derivatives to build minimizing sequences. Perhaps the oldest and simplest of them is the gradient method [13], which can be interpreted as a finite-difference discretization of the differential equation

describing the steepest descent dynamics. The gradient method is applicable to smooth functions, but there are more contemporary variations that can deal with nonsmooth ones, and even exploit the functions’ structure to enhance the algorithm’s per iteration complexity, or overall performance. A keynote example (see [9] for further insight) is the proximal-gradient (or forward–backward) method [18, 28], (see also [22, 30]), which is in close relationship with a nonsmooth version of (1). In any case, the analysis of related differential equations or inclusions is a valuable source of insight into the dynamic behavior of these iterative algorithms.

In [25, 29], the authors introduced inertial substeps in the iterations of the gradient method, in order to accelerate its convergence. This variation improves the worst-case convergence rate from \(\mathcal O(k^{-1})\) to \(\mathcal O(k^{-2})\). In the strongly convex case, the constants that describe the linear convergence rate are also improved. This method was extended to the proximal-gradient case in [10], and to fixed point iterations [16, 21] (see, for example [3, 4, 14, 19, 20], among others). Su et al. [31] showed that Nesterov’s inertial gradient algorithm—and, analogously, the proximal variant—can be interpreted as a discretization of the ordinary differential equation with Asymptotically Vanishing Damping

where \(\alpha >0\). The function values vanish along the trajectories [6], and they do so at a rate of \(\mathcal {O}(t^{-2})\) for \(\alpha \ge 3\) [31], and \(o(t^{-2})\) for \(\alpha > 3\) [23], in the worst-case scenario.

Despite its faster convergence rate guarantees, trajectories satisfying (AVD)—as well as sequences generated by inertial first order methods—exhibit a somewhat chaotic behavior, especially if the objective function is ill-conditioned. In particular, the function values tend not to decrease monotonically, but to present an oscillatory character, instead.

Example 1.1

We consider the quadratic function \(\phi :{\mathbb R}^3\rightarrow {\mathbb R}\), defined by

whose condition number is \(\max \{\rho ^2,\rho ^{-2}\}\). Figure 1 shows the behavior of the solution to (AVD), with \(x(1)=(1,1,1)\) and \(\dot{x}(1)=-\nabla \phi \big (x(1)\big )\) (the direction of maximum descent).

Depiction of the function values according to Example 1.1, on the interval [1, 35], for \(\alpha =3.1\), and \(\rho =10\) (left) and \(\rho =100\) (right)

In order to avoid this undesirable behavior, and partly inspired by a continuous version of Newton’s method [2], Attouch et al. [5] proposed a Dynamic Inertial Newton system with Asymptotically Vanishing Damping, given by

where \(\alpha ,\beta >0\). In principle, this expression only makes sense when \(\phi \) is twice differentiable, but the authors show that it can be transformed into an equivalent first-order equation in time and space, which can be extended to a differential inclusion that is well posed whenever \(\phi \) is closed and convex. The authors presented (DIN-AVD) as a continuous-time model for the design of new algorithms, a line of research already outlined in [5], and continued in [7]. Back to (DIN-AVD), the function values vanish along the solutions, with the same rates as for (AVD). Nevertheless, in contrast with the solutions of (AVD), the oscillations are tame.

Example 1.2

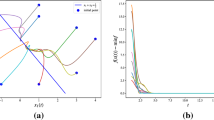

In the context of Example 1.1, Fig. 2 shows the behavior of the solution to (AVD) in comparison with that of (DIN-AVD), both with \(x(1)=(1,1,1)\) and \(\dot{x}(1)=-\nabla \phi \big (x(1)\big )\).

Depiction of the function values according to Example 1.2, on the interval [1, 35], for \(\alpha =3.1\), \(\beta =1\), and \(\rho =10\) (left) and \(\rho =100\) (right)

An alternative way to avoid–or at least moderate—the oscillations exemplified in Fig. 1 for the solutions of (AVD) is to stop the evolution and restart it with zero initial velocity, from time to time. The simplest option is to do so periodically, at fixed intervals. This idea is used in [26] for the accelerated gradient method, where the number of iterations between restarts that depends on the parameter of strong convexity of the function. See also [1, 8, 24], where the problem of estimating the appropriate restart times is addressed. An adaptive policy for the restarting of Nesterov’s Method was proposed by O’Donoghue and Candès [27], where the algorithm is restarted at the first iteration k such that \(\phi (x_{k+1})>\phi (x_{k})\), which prevents the function values to increase locally. This kind of restarting criteria shows a remarkable performance, although convergence rate guarantees have not been established, although some partial steps in this direction have been made in [15, 17]. Moreover, the authors of [27] observe that this heuristic displays an erratic behavior when the difference \(\phi (x_{k})-\phi (x_{k+1})\) is small, due to the prevalence of cancellation errors. Therefore, this method must be handled with care if high accuracy is desired. A different restarting scheme, based on the speed of the trajectories, is proposed for (AVD) in [31], where rates of convergence are established. The improvement can be remarkable, as shown in Fig. 3.

In [31], the authors also perform numerical tests using Nesterov’s inertial gradient method, with this restarting scheme as a heuristic, and observe a faster convergence to the optimal value.

The aim of this work is to analyze the impact that the speed restarting scheme has on the solutions of (DIN-AVD), in order to set the theoretical foundations to further accelerate Hessian-driven inertial algorithms—like the ones in [7]—by means of a restarting policy. This approach combines two oscillation mitigation principles that result in a monotonic and fast convergence of the function values. We provide linear convergence rates for functions with quadratic growth and observe a noticeable improvement in the behavior of the trajectories in terms of stability and convergence speed, both in comparison with the non-restarted trajectories, and with the restarted solutions of (AVD). As a byproduct, we generalize and improve some of the results in [31]. It is worth noticing that the convergence rate result holds for all values of \(\alpha >0\) and \(\beta \ge 0\), in contrast with those in [5,6,7].

The paper is organized as follows: In Sect. 2, we describe the speed restart scheme and state the convergence rate of the corresponding trajectories, which is the main theoretical result of this paper. Section 3 contains the technical auxiliary results—especially some estimations on the restarting time—leading to the proof of our main result, which is carried out in Sect. 4. Finally, we present a few simple numerical examples in Sect. 5, in order to illustrate the improvements, in terms of convergence speed, of the restarted trajectories.

2 Restarted Trajectories for (DIN-AVD)

Throughout this paper, \(\phi :{\mathbb R}^n\rightarrow {\mathbb R}\) is a twice continuously differentiable convex function, which attains its minimum value \(\phi ^*\), and whose gradient \(\nabla \phi \) is Lipschitz-continuous with constant \(L>0\). Consider the ordinary differential equation (DIN-AVD), with initial conditions \(x(0)=x_0\), \(\dot{x}(0)=0\), and parameters \(\alpha > 0\) and \(\beta \ge 0\). A solution is a function in \(\mathcal {C}^2\left( (0,+\infty ); {\mathbb R}^n \right) \cap \mathcal {C}^1\left( [0,+\infty ); {\mathbb R}^n \right) \), such that \(x(0)=x_0\), \(\dot{x}(0)=0\) and (DIN-AVD) holds for every \(t>0\). Existence and uniqueness of such a solution is not straightforward due to the singularity at \(t=0\), but can be established by a limiting procedure. As shown in Appendix 1, we have the following:

Theorem 2.1

For every \(x_0 \in {\mathbb R}^n\), the differential equation (DIN-AVD), with initial conditions \(x(0)=x_0\) and \(\dot{x}(0)=0\), has a unique solution.

We are concerned with the design and analysis of a restart scheme to accelerate the convergence of the solutions of (DIN-AVD) to minimizers of \(\phi \), based on the method proposed in [31].

2.1 A Speed Restarting Scheme and the Main Theoretical Result

Since the damping coefficient \(\alpha /t\) goes to 0 as \(t\rightarrow \infty \), large values of t result in a smaller stabilization of the trajectory. The idea is thus to restart the dynamics at the point where the speed ceases to increase.

Given \(z\in {\mathbb R}^n\), let \(y_z\) be the solution of (DIN-AVD), with initial conditions \(y_z(0)=z\) and \(\dot{y}_z(0)=0\). Set

Remark 2.1

Take \(z\notin {{\,\textrm{argmin}\,}}(\phi )\), and define \(y_z\) as above. For \(t\in \big (0,T(z)\big )\), we have

But \(\langle \nabla ^2 \phi (y_{z}(t))\dot{y}_{z}(t), \dot{y}_{z}(t) \rangle \ge 0\) by convexity, and \(\langle \ddot{y}_{z}(t), \dot{y}_{z}(t) \rangle \ge 0\) by the definition of T(z). Therefore,

In particular, \(t\mapsto \phi \big (y_z(t)\big )\) decreases in [0, T(z)].

If \(z\notin {{\,\textrm{argmin}\,}}(\phi )\), then T(z) cannot be 0. In fact, we shall prove (see Corollaries 3.2 and 3.3) that

Definition 2.1

Given \(x_0\in {\mathbb R}^n\), the restarted trajectory \(\chi _{x_0}:[0,\infty )\rightarrow {\mathbb R}^n\) is defined inductively:

-

1.

First, compute \(y_{x_0}\), \(T_1=T(x_0)\) and \(S_1=T_1\), and define \(\chi _{x_0}(t)=y_{x_0}(t)\) for \(t\in [0,S_1]\).

-

2.

For \(i\ge 1\), having defined \(\chi _{x_0}(t)\) for \(t\in [0,S_i]\), set \(x_i=\chi _{x_0}(S_i)\), and compute \(y_{x_i}\). Then, set \(T_{i+1}=T(x_i)\) and \(S_{i+1}=S_{i}+T_{i+1}\), and define \(\chi _{x_0}(t)=y_{x_i}(t-S_{i})\) for \(t\in (S_i,S_{i+1}]\).

In view of (5), \(S_i\) is defined for all \(i\ge 1\), \(\inf _{i\ge 1}(S_{i+1}-S_i)>0\) and \(\lim _{i\rightarrow \infty }S_i=\infty \). Moreover, in view of Remark 2.1, we have

Proposition 2.1

The function \(t\mapsto \phi \big (\chi _{x_0}(t)\big )\) is nonincreasing on \([0,\infty )\).

Our main theoretical result establishes that \(\phi \big (\chi _{x_0}(t)\big )\) converges linearly to \(\phi ^*\), provided there exists \(\mu >0\) such that

for all \(z\in {\mathbb R}^n\). The Łojasiewicz inequality (6) is equivalent to quadratic growth and is implied by strong convexity (see [11]). More precisely, we have the following:

Theorem 2.2

Let \(\phi :{\mathbb R}^n\rightarrow {\mathbb R}\) be convex and twice continuously differentiable. Assume \(\nabla \phi \) is Lipschitz-continuous with constant \(L>0\), there exists \(\mu >0\) such that (6) holds, and that the minimum value \(\phi ^*\) of \(\phi \) is attained. Given \(\alpha >0\) and \(\beta \ge 0\), let \(\chi _{x_0}\) be the restarted trajectory defined by (DIN-AVD) from an initial point \(x_0 \in {\mathbb R}^n\). Then, there exist constants \(C,K>0\) such that

for all \(t>0\).

The rather technical proof is split into several parts and presented in the next subsections.

3 Technicalities

Throughout this section, we fix \(z\notin {{\,\textrm{argmin}\,}}(\phi )\) and, in order to simplify the notation, we denote by x (instead of \(y_{z}\)) the solution of (DIN-AVD) with initial condition \(x(0)=z\) and \(\dot{x}(0)=0\).

3.1 A Few Useful Artifacts

We begin by defining some useful auxiliary functions and point out the main relationships between them.

To this end, we first rewrite Eq. (DIN-AVD) as

Integrating (7) over [0, t], we get

In order to obtain an upper bound for the speed \(\dot{x}\), the integrals

will be majorized using the function

which is positive, nondecreasing and continuous.

Lemma 3.1

For every \(t>0\), we have

Proof

For the first estimation, we use the Lipschitz-continuity of \(\nabla \phi \) and the fact that \(M_z\) in nondecreasing, to obtain

which results in

Then, from the definition of \(I_z(t)\) we deduce that

For the second inequality, we proceed analogously to get

which yields

Then,

as claimed. \(\square \)

The dependence of \(M_z\) on the initial condition z may be greatly simplified. To this end, set

The function H is concave, quadratic, does not depend on z, and has exactly one positive zero, given by

In particular, H decreases strictly from 1 to 0 on \([0,\tau _1]\).

Lemma 3.2

For every \(t \in (0,\tau _1)\),

Proof

If \(0< u\le t\), using (8) and (10), along with Lemma 3.1, we obtain

Since the right-hand side is nondecreasing in t, we take the supremum for \(u\in [0,t]\) to deduce that

Rearranging the terms, and using the definition of H, given in (14), we see that

We conclude by observing that H is positive on \((0,\tau _1)\). \(\square \)

By combining Lemmas 3.1 and 3.2, and inequalities (12) and (13), we obtain:

Corollary 3.1

For every \(t \in (0,\tau _1)\), we have

We highlight the fact that the bound above depends on z only via the factor \(\left\| \nabla \phi (z)\right\| \).

3.2 Estimates for the Restarting Time

We begin by finding a lower bound for the restarting time, depending on the parameters \(\alpha \), \(\beta \) and L, but not on the initial condition z.

Lemma 3.3

Let \(z\notin {{\,\textrm{argmin}\,}}(\phi )\), and let x be the solution of (DIN-AVD) with initial conditions \(x(0)=z\) and \(\dot{x}(0)=0\). For every \(t\in (0,\tau _1)\), we have

Proof

From (8) and (10), we know that

On the other hand,

Then,

where

and

With this notation, we have

For the first term, we do as follows:

where we have used the Cauchy–Schwarz inequality and Corollary 3.1. For the second term, we first use (18) and observe that

and

by Corollary 3.1. We conclude that

as stated. \(\square \)

The function G, defined by

does not depend on the initial condition z. Its unique positive zero is

In view of the definition of the restarting time, an immediate consequence of Lemma 3.3 is

Corollary 3.2

Let \(T_*=\inf \big \{T(z):z\notin {{\,\textrm{argmin}\,}}(\phi )\big \}\). Then, \(\tau _3\le T_*\).

Remark 3.1

If \(\beta =0\), then

The case \(\alpha =3\) and \(\beta =0\) was studied in [31], and the authors provided \(\frac{4}{5\sqrt{L}}\) as a lower bound for the restart. The arguments presented here yield a higher bound, since

Recall that the function H given in (14) decreases from 1 to 0 on \([0,\tau _1]\). Therefore, \(H(t)>\frac{1}{2}\) for all \(t\in [0,\tau _2)\), where

Evaluating the right-hand side of (19), we see that

whence

These facts will be useful to provide an upper bound for the restarting time.

Proposition 3.1

Let \(z\notin {{\,\textrm{argmin}\,}}(\phi )\), and let x be the solution of (DIN-AVD) with initial conditions \(x(0)=z\) and \(\dot{x}(0)=0\). Let \(\phi \) satisfy (6) with \(\mu >0\). For each \(\tau \in (0,\tau _2)\cap (0,T(z)]\), we have

Proof

In view of (8) and (10), we can use Corollary 3.1 to obtain

From the (reverse) triangle inequality and the definition of H, it ensues that

which is positive, because \(\tau \in (0,\tau _2)\). Now, take \(t \in [\tau ,T(z)]\). Since \(\left\| \dot{x}(t)\right\| ^2\) increases in \(\big [0,T(z)\big ]\), Remark 2.1 gives

Integrating over \([\tau ,T(z)]\), we get

It follows that

in view of (6). It suffices to rearrange the terms to conclude. \(\square \)

Corollary 3.3

Let \(\phi \) satisfy (6) with \(\mu >0\), and let \(\tau _*\in (0,\tau _2)\cap (0,T_*]\). Then,

4 Function Value Decrease and Proof of Theorem 2.2

The next result provides the ratio at which the function values have been reduced by the time the trajectory is restarted.

Proposition 4.1

Let \(z\notin {{\,\textrm{argmin}\,}}(\phi )\), and let x be the solution of (DIN-AVD) with initial conditions \(x(0)=z\) and \(\dot{x}(0)=0\). Let \(\phi \) satisfy (6) with \(\mu >0\). For each \(\tau \in (0,\tau _2)\cap (0,T(z)]\), we have

for every \(t\in [\tau ,T(z)]\).

Proof

Take \(s\in (0,\tau )\). By combining Remark 2.1 with (23), we obtain

because H decreases in \((0,\tau _1)\), which contains \((0,\tau )\). Integrating on \((0,\tau )\) and using (6), we obtain

To conclude, it suffices to observe that \(\phi \big (x(t)\big )\le \phi \big (x(\tau )\big )\) in view of Remark 2.1. \(\square \)

Remark 4.1

Since \(\varPsi \) is decreasing in \([0,\tau _2)\), we have \(\varPsi (t)\ge \varPsi (\tau _*)>0\), whenever \(0\le t\le \tau _* < \tau _2\). Moreover, in view of (22) and Corollary 3.2, we can take \(\tau _*=\tau _3\) to obtain a lower bound. If \(\beta =0\), we obtain

which is independent of L. As a consequence, the inequality in Proposition 4.1 becomes

For \(\alpha =3\), this gives

For this particular case, a similar result is obtained in [31] for strongly convex functions, namely

Our constant is approximately 66.37% larger than the one from [31], which implies a greater reduction in the function values each time the trajectory is restarted. On the other hand, if \(\beta >0\), we can still obtain a slightly smaller lower bound, namely \(\varPsi (\tau _3)>\left( \dfrac{2\alpha +1}{2\alpha +2}\right) ^2\), independent from \(\beta \) and L. The proof is technical and will be omitted.

Proof of Theorem 2.2

Adopt the notation in Definition 2.1, take any \(\tau _*\in (0,\tau _2)\cap (0,T_*]\), and set

In view of Corollaries 3.2 and 3.3, we have

for all \(i\ge 0\) (we assume \(x_i\notin {{\,\textrm{argmin}\,}}(\phi )\) since the result is trivial otherwise). Given \(t>0\), let m be the largest positive integer such that \(m\tau ^*\le t\). By time t, the trajectory will have been restarted at least m times. By Proposition 2.1, we know that

We may now apply Proposition 4.1 repeatedly to deduce that

By definition, \((m+1)\tau ^*>t\), which entails \(m>\frac{t}{\tau ^*}-1\). Since \(Q\in (0,1)\), we have

and the result is established, with \(C=Q^{-1}\) and \(K=-\frac{1}{\tau ^*}\ln (Q)\). The proof is finished due to the fact that \(\phi (u)\le \phi ^*+\frac{L}{2}\Vert u-u^*\Vert ^2\) for every \(u^*\in {{\,\textrm{argmin}\,}}(\phi )\).\(\square \)

The convergence rate given in Theorem 2.2 holds for C and K of the form

and

for any \(\tau _*\in (0,\tau _2)\cap (0,T_*]\). In view of (22) and Corollary 3.2, \(\tau _*=\tau _3\) is a valid choice. On the other hand, the function \(K(\cdot )\) vanishes at \(\tau \in \{0,\tau _2\}\) and is positive on \((0,\tau _2)\). By continuity, it attains its maximum at some \(\hat{\tau }_*\in (0,\tau _2)\cap (0,T_*]\). Therefore, \(K(\hat{\tau }_*)\) yields the fastest convergence rate prediction in this framework.

Remark 4.2

It is possible to implement a fixed restart scheme. To this end, we modify Definition 2.1 by setting \(T_i\equiv \tau \), with any \(\tau \in (0,\tau _2)\cap (0,T_*]\), such as \(\hat{\tau }_*\) or \(\tau _3\), for example. In theory, \(\hat{\tau }_*\) gives the same convergence rate as the original restart scheme presented throughout this work. From a practical perspective, though, restarting the dynamics too soon may result in a poorer performance. Therefore, finding larger values of \(\hat{\tau }_*\) and \(\tau _3\) is crucial to implement a fixed restart (see Remarks 3.1 and 4.1).

5 Numerical Illustration

In this section, we provide a very simple numerical example to illustrate how the convergence is improved by the restarting scheme. A more thorough numerical analysis will be carried out in a forthcoming paper, where implementable optimization algorithms will be analyzed.

5.1 Example 1.2 Revisited

We consider the quadratic function \(\phi :{\mathbb R}^3\rightarrow {\mathbb R}\), defined in Example 1.1 by (2), with \(\rho = 10\). We set \(\alpha =3.1\) and \(\beta =0.25\) and compute the solutions of (AVD) and (DIN-AVD), starting from \(x(1)=x_1=(1,1,1)\) and zero initial velocity, with and without restarting, using the Python tool odeint from the scipy package. Figure 4 shows a comparison of the values along the trajectory with and without restarting, first for (AVD), and then, for (DIN-AVD). We observe the monotonic behavior established in Proposition 2.1, as well as a faster linear convergence rate. We shall provide more quantitative insight in a moment.

However, one can do better. As mentioned earlier, restarting schemes based on function values are effective from a practical perspective, but show an erratic behavior as the trajectory approaches a minimizer. It seems natural as a heuristic to use the first (or n-th) function-value restart point as a warm start, and then apply speed restarts, for which we have obtained convergence rate guarantees. Although the velocity must be set to zero after each restart, there are no constraints on the initial velocity used to compute the warm starting point. The results are shown in Fig. 5, with initial velocities \(\dot{x}(1)=0\) and \(\dot{x}(1)=-\beta \nabla \phi (x_1)\), respectively.

A linear regression after the first restart provides estimations for the linear convergence rate of the function values along the corresponding trajectories, when modeled as \(\phi \big (\chi (t)\big )\sim Ae^{-Bt}\), with \(A,B>0\). The results are displayed in Table 1. The absolute value of the exponent B in the linear convergence rate is increased by 34.67% in the case \(\dot{x}(1)=0\), and by 39.86% in the case \(\dot{x}(1)=-\beta \nabla \phi (x_1)\). Also, the minimum values for the methods presented in Fig. 5 can be analyzed. The last and best function values on [1, 25] are displayed in Table 2. In all cases, the best value without restart is approximately \(10^4\) times larger than the one obtained with our policy. We also observe similar final values for the restarted trajectories despite the different initial velocities.

5.2 A First Exploration of the Algorithmic Consequences

Different discretizations of (DIN-AVD) can be used to design implementable algorithms and generate minimizing sequences for \(\phi \), which hopefully will share the stable behavior we observe in the solutions of (DIN-AVD). Three such algorithms were first proposed in [7], for which we implemented a speed restart scheme, analogue to the one we have used for the solutions of (DIN-AVD). Since we obtained very similar results and the numerical analysis of algorithms is not the focus of this paper, we describe only the simplest one in detail and present the numerical results for that one. As in [31], a parameter \(k_{\min }\) is introduced, to avoid two consecutive restarts to be too close.

Example 5.1

We begin by applying Algorithm 1, as well as the variation with the warm start, to the function \(\phi :{\mathbb R}^3 \mapsto {\mathbb R}\) in Examples 1.1 and 1.2, with the parameters \(k_{\min }=10\), \(\beta =h=1/\sqrt{L}\) and \(\alpha =3.1\). Figure 6 shows the evolution of the function values along the iterations. The coefficients in the approximation \(\phi (x_k) \sim Ae^{-Bt}\), with \(A,B >0\), obtained for each algorithm, are detailed in Table 3. As one would expect, the value of B is similar and that of A is significantly lower. Also, Table 4 shows the values obtained along 1000 iterations. The best value without restart is \(10^5\) times larger than the one obtained with our policy.

Example 5.2

Given a positive definite symmetric matrix A of size \(n\times n\), and a vector \(b \in {\mathbb R}^n\), define \(\phi :{\mathbb R}^n \mapsto {\mathbb R}\) by

For the experiment, \(n=500\), A is randomly generated with eigenvalues in (0, 1), and b is also chosen at random. We first compute L, and set \(k_{\min }=10\), \(h=1/\sqrt{L}\), \(\alpha =3.1\) and \(\beta =h\). The initial points \(x_0=x_1\) are generated randomly as well. Figure 7 shows the comparison for Algorithm 1 and a variation of it giving a warm start as the one described in the continuous setting. That is, to restart the first time when the function increases instead of decrease, and then, performing the speed restart detailed on Algorithm 1. It can be seen that the restart scheme stabilizes and accelerates the convergence in both cases. The coefficients obtained for each algorithm in the approximation \(\phi (x_k) \sim Ae^{-Bt}\), with \(A,B >0\), are presented in Table 5. Also, Table 6 shows the value gaps obtained along 1800 iterations. The best value without restart is more than \(10^4\) times larger than the one obtained with restart.

6 Conclusions

We have proposed and analyzed a speed restarting scheme for the inertial dynamics with Hessian-driven damping (DIN-AVD), introduced in [5]. We have established a linear convergence rate for the function values, which decrease monotonically to the minimum along the restarted trajectories, when the function \(\phi \) has quadratic growth, for every value of \(\alpha >0\) and \(\beta \ge 0\). As a byproduct, we improve and extend the results of Su, Boyd and Candès [31], obtained in the strongly convex case for \(\alpha =3\) and \(\beta =0\).

Our numerical experiments suggest that the Hessian-driven damping and the restarting scheme produce a better improvement in the performance of the dynamics and related iterative algorithms—for the purpose of approximating the minimum of \(\phi \)—when used together, compared to using either technique separately.

References

Alamo, T., Limon, D., Krupa, P.: Restart FISTA with global linear convergence. In: 2019 18th European Control Conference (ECC), pp. 1969–1974. IEEE (2019)

Alvarez, F., Pérez, J.: A dynamical system associated with Newton’s method for parametric approximations of convex minimization problems. Appl. Math. Optim. 38(2), 193–217 (1998)

Attouch, H., Cabot, A.: Convergence of a relaxed inertial forward–backward algorithm for structured monotone inclusions. Appl. Math. Optim. 80(3), 547–598 (2019)

Attouch, H., Peypouquet, J.: Convergence of inertial dynamics and proximal algorithms governed by maximally monotone operators. Math. Program. 174, 391–432 (2019)

Attouch, H., Peypouquet, J., Redont, P.: Fast convex optimization via inertial dynamics with Hessian driven damping. J. Differ. Equ. 261(10), 5734–5783 (2016)

Attouch, H., Chbani, Z., Peypouquet, J., Redont, P.: Fast convergence of inertial dynamics and algorithms with asymptotic vanishing viscosity. Math. Program. 168, 123–175 (2018)

Attouch, H., Chbani, Z., Fadili, J., Riahi, H.: First-order optimization algorithms via inertial systems with Hessian driven damping. Math. Program. 1–43 (2020)

Aujol, J.-F., Dossal, C.H., Labarrière, H., Rondepierre, A.: FISTA restart using an automatic estimation of the growth parameter. Working Paper or Preprint (2022)

Beck, A.: First-Order Methods in Optimization. Society for Industrial and Applied Mathematics, Philadelphia, PA (2017)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Bolte, J., Nguyen, T.P., Peypouquet, J., Suter, B.W.: From error bounds to the complexity of first-order descent methods for convex functions. Math. Program. 165(2), 471–507 (2017)

Brézis, H.: Functional Analysis. Sobolev Spaces and Partial Differential Equations, Springer, New York (2011)

Cauchy, A., et al.: Méthode générale pour la résolution des systemes d’équations simultanées. Comp. Rend. Sci. Paris 25(1847), 536–538 (1847)

Fierro, I., Maulén, J.J., Peypouquet, J.: Inertial Krasnoselskii-Mann iterations. arXiv preprint arXiv:2210.03791 (2022)

Giselsson, P., Boyd, S.: Monotonicity and restart in fast gradient methods. In: 53rd IEEE Conference on Decision and Control, pp. 5058–5063. IEEE (2014)

Krasnosel’skii, M.A.: Two comments on the method of successive approximations. Usp. Math. Nauk 10, 123–127 (1955)

Lin, Q., Xiao, L.: An adaptive accelerated proximal gradient method and its homotopy continuation for sparse optimization. Comput. Optim. Appl. 60(3), 633–674 (2015)

Lions, P.L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16(6), 964–979 (1979)

Lorenz, D.A., Pock, T.: An inertial forward–backward algorithm for monotone inclusions. J. Math. Imaging Vis. 51, 311–325 (2015)

Maingé, P.-E.: Convergence theorems for inertial KM-type algorithms. J. Comput. Appl. Math. 219(1), 223–236 (2008)

Mann, W.R.: Mean value methods in iteration. Proc. Am. Math. Soc. 4(3), 506–510 (1953)

Martinet, B.: Regularisation, d’inéquations variationelles par approximations succesives. Revue Française d’informatique et de Recherche operationelle (1970)

May, R.: Asymptotic for a second-order evolution equation with convex potential andvanishing damping term. Turk. J. Math. 41(3), 681–685 (2017)

Necoara, I., Nesterov, Y., Glineur, F.: Linear convergence of first order methods for non-strongly convex optimization. Math. Program. 175(1), 69–107 (2019)

Nesterov, Y.: A method for solving the convex programming problem with convergence rate \(\cal{O} (1/k^2)\). Proc. USSR Acad. Sci. 269, 543–547 (1983)

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. 140(1), 125–161 (2013)

O’Donoghue, B., Candès, E.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. 15(3), 715–732 (2015)

Passty, G.B.: Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 72(2), 383–390 (1979)

Polyak, B.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4, 1–17 (1964)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877–898 (1976)

Su, W., Boyd, S., Candès, E.J.: A differential equation for modeling Nesterov’s accelerated gradient method: theory and insights. J. Mach. Learn. Res. 17(153), 1–43 (2016)

Acknowledgements

This work is partially funded by the Faculty of Physical and Mathematical Sciences, University of Chile and at the Bernoulli Institute, University of Groningen. It was financially supported by the Chilean government through the National Agency for Research and Development (ANID)/Scholarship Program/BECA DOCTORADO NACIONAL/2021-21210993 and Centro de Modelamiento Matemático (CMM) BASAL fund FB210005 for center of excellence from ANID-Chile.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Radu Ioan Boţ.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Proof of Theorem 2.1

Appendix A: Proof of Theorem 2.1

Consider the differential equation

We assume that \(\gamma \) is continuous and positive, with \(\lim _{t\rightarrow 0}\gamma (t)=+\infty \), and that \(\mathcal F\) and \(\mathcal G\) are (continuous and) sufficiently regular so that, for each \(\delta >0\), the differential equation (25)—with initial conditions \(x(\delta )\) and \(\dot{x}(\delta )\) given—has a unique solution defined on \([\delta ,T_\infty )\), for some \(T_\infty \in (0,\infty ]\). Let

We have the following:

Theorem A.1

Assume there is \(T>0\) such that

Then, the differential equation (25), with initial condition \(x(0)=x_0\) and \(\dot{x}(0)=v_0\), has a solution.

Proof

For \(\delta \in (0,T)\), define \(x_\delta :[0,T]\rightarrow {\mathbb R}^n\) as follows: for \(t\in [0,\delta ]\), \(x_\delta (t)=x_0+tv_0\); and for \(t>\delta \), \(x_\delta \) is the solution of (25) with initial condition \(x(\delta )=x_0+\delta v_0\) and \(\dot{x}(\delta )=v_0\). Notice that \(x_{\delta }\) is a continuous function such that matches a solution of (25) on \([\delta ,T]\). From the hypotheses, there exist \(c,K>0\) and such that \(\gamma (t)\ge c\) and \(M(\delta ,t)\le K\) for all \(0<\delta \le t\le T\). Therefore,

whenever \(0<\delta \le s\le t\le T\), so that

for all \(s\in [0,T]\). As a consequence,

on [0, T]. It follows that \((x_\delta )\) is bounded in \(H^1(0,T;{\mathbb R}^n)\).

By weak sequential compactness and the Rellich–Kondrachov Theorem (see, for instance [12, Theorem 9.16]), there is a sequence \((\delta _\nu )\) converging to zero, such that \(x_{\delta _\nu }\) converges uniformly to a continuous function \(x^*\), while \(\dot{x}_{\delta _\nu }\) converges weakly in \(L^2(0,T;{\mathbb R}^n)\) to some \(y^*\). Clearly, \(x^*(0)=x_0\). In turn, for \(t\in (0,T]\), we have

Now, write \(\zeta _\nu (t)=x_{\delta _\nu }(t)-tv_0\). By the Mean Value Theorem, there is \(t_\nu \in (0,t)\) (we may assume that \(t_\nu \in (\delta _\nu ,t)\), actually), such that

in view of (28). Dividing by t and using (29), we obtain

The right-hand side tends to zero as \(t\rightarrow 0\). It remains to prove that \(x^*\) satisfies (25). To this end, take any \(t_0\in (0,T)\) and observe that \(\delta _\nu <t_0\) for all sufficiently large \(\nu \). Therefore, \(x_{\delta _\nu }\) satisfies (25) on \([t_0,T)\) for all such \(\nu \). Multiplying by

we deduce that

By taking yet another subsequence if necessary, we may assume that \(\dot{x}_{\delta _\nu }(t_0)\) converges to some \(v^*\). From the uniform convergence of \(x_{\delta _\nu }\) to \(x^*\) on [0, T], and the weak convergence of \(\dot{x}_{\delta _\nu }\) to \(y^*\) in \(L^2(0,T;{\mathbb R}^n)\), it ensues that

for all \(t\in (t_0,T)\). As a consequence, \(x^*\) is continuously differentiable, \(\dot{x}^*=y\), and \(x^*\) satisfies (25). \(\square \)

Corollary A.1

Equation (DIN-AVD) has at least one solution.

Proof

According to Theorem A.1, for the existence, it suffices to show that the expression \(M(\delta ,t)\), defined in (26), is bounded for \(0<\delta \le t\le T\), for some \(T>0\). Mimicking the proof of Lemma 3.2, we show that

The only positive zero of H is \(\tau _1\), given by (15), and H is decreasing on \((0,\tau _1)\). Hence, if \(T<\tau _1\), then

as claimed. \(\square \)

Proposition A.1

Equation (DIN-AVD), with initial condition \(x(0)=x_0\) and \(\dot{x}(0)=0\), has at most one solution in a neighborhood of \(t=0\).

Proof

Let x and y satisfy (DIN-AVD) with the same initial state and null initial velocity. We define

and proceed as in the proof of Lemma 3.1, to obtain

As x and y satisfy (DIN-AVD), we integrate by parts to obtain

Using (30), and the fact that \(\tilde{M}(t)\) is increasing, we get

Then,

whenever \(0<t\le T\). Taking supremum, we conclude that

for all \(T>0\). Since \(Q(T)>0\) in a neighborhood of 0, it follows that \({\tilde{M}}\) must vanish there, whence x and y must coincide. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Maulén, J.J., Peypouquet, J. A Speed Restart Scheme for a Dynamics with Hessian-Driven Damping. J Optim Theory Appl 199, 831–855 (2023). https://doi.org/10.1007/s10957-023-02290-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-023-02290-5