Abstract

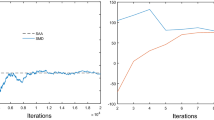

Stochastic alternating algorithms for bi-objective optimization are considered when optimizing two conflicting functions for which optimization steps have to be applied separately for each function. Such algorithms consist of applying a certain number of steps of gradient or subgradient descent on each single objective at each iteration. In this paper, we show that stochastic alternating algorithms achieve a sublinear convergence rate of \({\mathcal {O}}(1/T)\), under strong convexity, for the determination of a minimizer of a weighted sum of the two functions, parameterized by the number of steps applied on each of them. An extension to the convex case is presented, for which the rate weakens to \({\mathcal {O}}(1/\sqrt{T})\). These rates also hold in the non-smooth case. Importantly, by varying the proportion of steps applied to each function, one can determine an approximation to the Pareto front.
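To make the alternating scheme concrete, the following is a minimal sketch on a toy problem, not the implementation analyzed in the paper. It assumes two scalar strongly convex quadratics \(f_1(x) = (x-a)^2/2\) and \(f_2(x) = (x-b)^2/2\), Gaussian gradient noise, and a diminishing step size \(\alpha _t = 1/((n_1+n_2)(t+1))\); the names `sa2gd`, `run`, `weighted_sum_minimizer`, and all constants are hypothetical. For this toy problem, the weighted sum with weight \(n_1/(n_1+n_2)\) on \(f_1\) has minimizer \(\lambda a + (1-\lambda ) b\), which the iterates should approach.

```python
import random


def sa2gd(grad1, grad2, x0, n1, n2, num_iters, step_size):
    """Alternate n1 stochastic gradient steps on f1 with n2 steps on f2."""
    x = x0
    for t in range(num_iters):
        alpha = step_size(t)  # same step size for all steps of iteration t
        for _ in range(n1):
            x = x - alpha * grad1(x)
        for _ in range(n2):
            x = x - alpha * grad2(x)
    return x


# Toy data (assumption): f1(x) = (x - a)^2 / 2, f2(x) = (x - b)^2 / 2.
rng = random.Random(0)
a, b, sigma = -1.0, 2.0, 0.1


def grad1(x):
    """Noisy gradient of f1."""
    return (x - a) + rng.gauss(0.0, sigma)


def grad2(x):
    """Noisy gradient of f2."""
    return (x - b) + rng.gauss(0.0, sigma)


def run(n1, n2, num_iters=5000):
    """Run the alternating scheme with a diminishing step size (assumption)."""
    def step(t):
        return 1.0 / ((n1 + n2) * (t + 1))
    return sa2gd(grad1, grad2, 0.0, n1, n2, num_iters, step)


def weighted_sum_minimizer(n1, n2):
    """Minimizer of lam * f1 + (1 - lam) * f2 with lam = n1 / (n1 + n2)."""
    lam = n1 / (n1 + n2)
    return lam * a + (1 - lam) * b


# Sweep the proportion of steps to trace out points between a and b.
front = [(n1, 4 - n1, run(n1, 4 - n1)) for n1 in range(0, 5)]
for n1, n2, x in front:
    print(n1, n2, round(x, 3), weighted_sum_minimizer(n1, n2))
```

Sweeping `n1` from 0 to 4 with `n1 + n2 = 4` moves the computed point from the minimizer of \(f_2\) to the minimizer of \(f_1\), which in this one-dimensional toy setting traces the set of Pareto minimizers \([a, b]\).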

Acknowledgements

We would like to thank a Referee for many insightful comments on the assumptions, statements, and proofs of this paper. One comment we did not follow was to use Young’s inequality in (8) in order to avoid an assumption on the boundedness of the iterates such as Assumption 3.1. By doing so, we would obtain a first term proportional to \(\alpha _t^2 \Vert x_t - x_*\Vert ^2\) and a second term of the order of \(\alpha _t^2\). The first term could be absorbed by the negative term of the order of \(\alpha _t \Vert x_t - x_*\Vert ^2\) by choosing a suitable step size. The reason why we chose not to make this change is that the rates would only hold for sufficiently small \(\alpha _t\) (involving unknown constants in the statement of “sufficiently small” such as Lipschitz constants or noise bounds).

Communicated by Edouard Pauwels.

Support for this author was partially provided by the Centre for Mathematics of the University of Coimbra under grant FCT/MCTES UIDB/MAT/00324/2020.

A Proposition using Intermediate Value Theorem

Based on the intermediate value theorem, we derive the following proposition for the purpose of convergence rate analysis of the SA2GD algorithm.

Proposition A.1

Given a continuous real function \(\phi : {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) and a set of points \(\{x_j\}_{j =1}^m\), there exists \(w \in {\mathbb {R}}^n\) such that

\[\sum _{j = 1}^m \phi (x_j) = m \, \phi (w),\]

where \(w = \sum _{j = 1}^m \mu _j x_j, \text{ with } \sum _{j = 1}^m \mu _j = 1, \mu _j \ge 0\), \(j = 1, \ldots , m\), is a convex linear combination of \(\{x_j\}_{j =1}^m\).

Proof

The proposition is obtained by consecutively applying the intermediate value theorem to \(\phi (x)\). First, for the pair of points \(x_1\) and \(x_2\), there exists a point \(w_{12} = \mu _{12} x_1 + (1-\mu _{12}) x_2, \mu _{12} \in [0, 1]\), such that \(\phi (w_{12}) = (\phi (x_1) + \phi (x_2))/2\) according to the intermediate value theorem, which implies that \(\sum _{j = 1}^m \phi (x_j) = 2\phi (w_{12}) + \sum _{j = 3}^m \phi (x_j)\). Then, there exists \(w_{13} = \mu _{13} w_{12} + (1-\mu _{13}) x_3, \mu _{13} \in [0, 1]\), such that \(\phi (w_{13}) = (2\phi (w_{12}) + \phi (x_3))/3\), given that the average function value \((2\phi (w_{12}) + \phi (x_3))/3\) lies between \(\phi (w_{12})\) and \(\phi (x_3)\). Notice that \(w_{13}\) can also be written as a convex linear combination of \(\{x_1, x_2, x_3\}\). The proof is concluded by continuing this process until \(x_m\) is reached. \(\square \)
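The constructive argument in the proof can be checked numerically. The sketch below is an illustration only, under the assumption that bisection is used to locate the point on each segment that the intermediate value theorem guarantees; the helper names `segment_point`, `match_on_segment`, and `mean_value_point`, the test function, and the sample points are all hypothetical.

```python
import math


def segment_point(u, v, mu):
    """Return mu * u + (1 - mu) * v for vectors given as lists."""
    return [mu * ui + (1 - mu) * vi for ui, vi in zip(u, v)]


def match_on_segment(phi, u, v, target, iters=100):
    """Bisection for mu in [0, 1] with phi(mu*u + (1-mu)*v) = target.

    Assumes target lies between phi(u) and phi(v), as in the proof.
    """
    def g(mu):
        return phi(segment_point(u, v, mu)) - target

    lo, hi = 0.0, 1.0
    g_lo = g(lo)
    if g_lo == 0.0:
        return lo
    if g(hi) == 0.0:
        return hi
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        g_mid = g(mid)
        if g_mid == 0.0:
            return mid
        if (g_mid > 0) == (g_lo > 0):
            lo, g_lo = mid, g_mid  # sign change is in [mid, hi]
        else:
            hi = mid               # sign change is in [lo, mid]
    return 0.5 * (lo + hi)


def mean_value_point(phi, points):
    """Build w and convex weights mu_j with m * phi(w) = sum_j phi(x_j)."""
    w = list(points[0])
    weights = [1.0]
    avg = phi(w)  # running average of phi over the points processed so far
    for k, x in enumerate(points[1:], start=1):
        target = (k * avg + phi(x)) / (k + 1)
        mu = match_on_segment(phi, w, x, target)
        w = segment_point(w, x, mu)
        # Existing weights shrink by mu; the new point gets weight 1 - mu.
        weights = [mu * c for c in weights] + [1.0 - mu]
        avg = target
    return w, weights


# Hypothetical continuous test function and sample points in R^2.
phi = lambda x: x[0] ** 2 + math.sin(x[1])
points = [[0.0, 0.0], [1.0, 2.0], [-2.0, 1.0], [3.0, -1.0]]
w, weights = mean_value_point(phi, points)
print(w, weights, len(points) * phi(w), sum(phi(p) for p in points))
```

The returned `weights` play the role of the coefficients \(\mu _j\) in the proposition: they are nonnegative, sum to one, and reproduce `w` as a convex combination of the input points.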

About this article

Cite this article

Liu, S., Vicente, L.N. Convergence Rates of the Stochastic Alternating Algorithm for Bi-Objective Optimization. J Optim Theory Appl 198, 165–186 (2023). https://doi.org/10.1007/s10957-023-02253-w

DOI: https://doi.org/10.1007/s10957-023-02253-w