Abstract

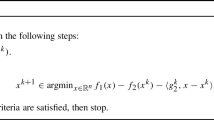

We consider a class of structured nonsmooth difference-of-convex minimization problems. We allow nonsmoothness in both the convex and concave components of the objective function, with a finite max structure in the concave part. Our focus is on algorithms that compute a (weak or standard) d(irectional)-stationary point, as advocated in a recent work of Pang et al. (Math Oper Res 42:95–118, 2017). Our linear convergence results are based on direct generalizations of the assumptions of error bounds and separation of isocost surfaces proposed in the seminal work of Luo and Tseng (Ann Oper Res 46–47:157–178, 1993), as well as one additional assumption of locally linear regularity regarding the intersection of certain stationary sets and dominance regions. As an interesting by-product, we present a sharper characterization of the limit set of the basic algorithm proposed by Pang et al., which fits between d-stationarity and global optimality. We also discuss sufficient conditions under which these assumptions hold. Finally, we provide several realistic and nontrivial statistical learning models where all assumptions hold.
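To make the finite max structure concrete, the following is a minimal sketch on a toy problem chosen here for illustration; it is not taken from the paper. It assumes the one-dimensional objective \(f(x) = x^2 - \max \{x, -x\}\), so the convex part is \(x^2\) and the concave part is the negative of a maximum of two linear pieces. The function names, the proximal weight `c`, and the tolerance `eps` are hypothetical. Each step, written in the spirit of the \(\varepsilon \)-active-set scheme of Pang et al., linearizes every nearly active piece, solves the resulting proximal convex subproblem in closed form, and keeps the best candidate.

```python
def f(x):
    """Toy DC objective: convex part x^2 minus the max of two linear pieces."""
    return x * x - max(x, -x)


def dca_max_step(x, eps=0.1, c=1.0):
    """One step over the eps-active pieces of max{x, -x} (illustrative only).

    For a piece with slope s, the proximal subproblem
        min_y  y^2 - s*y + (c/2)*(y - x)^2
    has the closed-form solution y = (s + c*x) / (2 + c).
    """
    slopes = (1.0, -1.0)               # psi_1(y) = y, psi_2(y) = -y
    values = [s * x for s in slopes]
    top = max(values)
    best_score, best_point = None, None
    for s, v in zip(slopes, values):
        if v < top - eps:              # piece is not eps-active at x
            continue
        cand = (s + c * x) / (2.0 + c)
        score = f(cand) + 0.5 * c * (cand - x) ** 2
        if best_score is None or score < best_score:
            best_score, best_point = score, cand
    return best_point


def run(x0, iters=60, eps=0.1, c=1.0):
    """Iterate the step a fixed number of times and return the last point."""
    x = x0
    for _ in range(iters):
        x = dca_max_step(x, eps=eps, c=c)
    return x


if __name__ == "__main__":
    for start in (0.0, -0.2, 3.0):
        end = run(start)
        print(f"start={start:+.2f}  end={end:+.6f}  f(end)={f(end):+.6f}")
```

On this toy problem the point \(x = 0\) is critical in the classical DCA sense (linearizing with the subgradient 0 of \(|x|\) leaves the iterate at 0), but it is not d-stationary, since \(f'(0; d) = -|d| < 0\) for every \(d \ne 0\). Because both pieces are active at 0, the step above moves away from it. Once a single piece stays active, the distance to the limit \(\pm 1/2\) contracts by the factor \(c/(2+c)\) per step, which is a simple instance of linear convergence.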

Notes

The first version of this paper with complete results appeared in 2018 on Optimization Online http://www.optimization-online.org/DB_HTML/2018/08/6766.html.

Note the discrepancy between \(\varepsilon \) and \(\varepsilon '\). This is consistent with the observation in [30] that if we take \(\varepsilon =0\) in Algorithm 2, a limit point is not necessarily d-stationary.

References

Ahn, M., Pang, J.S., Xin, J.: Difference-of-convex learning: directional stationarity, optimality, and sparsity. SIAM J. Optim. 27, 1637–1665 (2017)

Attouch, H., Bolte, J.: On the convergence of the proximal algorithm for nonsmooth functions involving analytic features. Math. Program. 116, 5–16 (2009)

Bačák, M., Borwein, J.: On difference convexity of locally Lipschitz functions. Optimization 60, 961–978 (2011)

Bauschke, H.H., Borwein, J.M., Li, W.: Strong conical hull intersection property, bounded linear regularity, Jameson’s property (G), and error bounds in convex optimization. Math. Program. Ser. A 86, 135–160 (1999)

Bolte, J., Daniilidis, A., Lewis, A.: The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 17, 1205–1223 (2007)

Cui, Y., Pang, J.S., Sen, B.: Composite difference-max programs for modern statistical estimation problems. SIAM J. Optim. 28, 3344–3374 (2018)

Drusvyatskiy, D., Lewis, A.S.: Error bounds, quadratic growth, and linear convergence of proximal methods. Math. Oper. Res. 43, 919–948 (2018)

Eckstein, J., Bertsekas, D.P.: On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55, 293–318 (1992)

Gong, P., Zhang, C., Lu, Z., Huang, J.Z., Ye, J.: A general iterative shrinkage and thresholding algorithm for non-convex regularized optimization problems. Proc. Int. Conf. Mach. Learn. 28, 37–45 (2013)

Gotoh, J.Y., Takeda, A., Tono, K.: DC formulations and algorithms for sparse optimization problems. Math. Program. 169, 141–176 (2018)

Harker, P.T., Pang, J.S.: Finite-dimensional variational inequality and nonlinear complementarity problems: a survey of theory, algorithms and applications. Math. Program. 48, 161–220 (1990)

Hesse, R., Luke, D.R.: Nonconvex notions of regularity and convergence of fundamental algorithms for feasibility problems. SIAM J. Optim. 23, 2397–2419 (2013)

Hiriart-Urruty, J.B.: From convex optimization to nonconvex optimization. Necessary and sufficient conditions for global optimality. In: Clarke, F.H., Dem’yanov, V.F., Giannessi, F. (eds.) Nonsmooth Optimization and Related Topics, vol. 43, pp. 219–239. Springer, Boston (1989)

Hiriart-Urruty, J.B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms. II. Grundlehren der Mathematischen Wissenschaften (Fundamental Principles of Mathematical Sciences), vol. 306. Springer, Berlin (1993)

Le Thi, H.A., Pham Dinh, T.: The DC (difference of convex functions) programming and DCA revisited with DC models of real world nonconvex optimization problems. Ann. Oper. Res. 133, 23–46 (2005)

Le Thi, H.A., Pham Dinh, T.: DC programming and DCA: thirty years of developments. Math. Program. Ser. B 169, 5–68 (2018)

Le Thi, H.A., Huynh, V.N., Pham Dinh, T.: Convergence analysis of difference-of-convex algorithm with subanalytic data. J. Optim. Theory Appl. 179, 103–126 (2018)

Li, G.Y., Pong, T.K.: Calculus of the exponent of Kurdyka–Łojasiewicz inequality and its applications to linear convergence of first-order methods. Found. Comput. Math. 18, 1199–1232 (2018)

Liu, T.X., Pong, T.K., Takeda, A.: A refined convergence analysis of pDCA\(_{e}\) with applications to simultaneous sparse recovery and outlier detection. Comput. Optim. Appl. 73, 69–100 (2019)

Lu, Z.S., Zhou, Z.R., Sun, Z.: Enhanced proximal DC algorithms with extrapolation for a class of structured nonsmooth DC minimization. Math. Program. 176, 369–401 (2018)

Luo, Z.Q., Pang, J.S.: Error bounds for analytic systems and their application. Math. Program. 67, 1–28 (1994)

Luo, Z.Q., Sturm, J.F.: Error bounds for quadratic systems. In: Frenk, H., Roos, K., Terlaky, T., Zhang, S. (eds.) High Performance Optimization. Applied Optimization, vol. 33. Springer, Boston (1985)

Luo, Z.Q., Tseng, P.: Error bound and convergence analysis of matrix splitting algorithms for the affine variational inequality problem. SIAM J. Optim. 2, 43–54 (1992a)

Luo, Z.Q., Tseng, P.: On linear convergence of descent methods for convex essentially smooth minimization. SIAM J. Control Optim. 30, 408–425 (1992b)

Luo, Z.Q., Tseng, P.: Error bounds and convergence analysis of feasible descent methods: a general approach. Ann. Oper. Res. 46–47, 157–178 (1993)

Nouiehed, M., Pang, J.S., Razaviyayn, M.: On the pervasiveness of difference-convexity in optimization and statistics. Math. Program. 174, 195–222 (2019)

Pang, J.S.: Error bounds in mathematical programming. Math. Program. 79, 299–332 (1997)

Pang, J.S., Scutari, G.: Nonconvex games with side constraints. SIAM J. Optim. 21, 1491–1522 (2010)

Pang, J.S., Tao, M.: Decomposition methods for computing directional stationary solutions of a class of non-smooth non-convex optimization problems. SIAM J. Optim. 28, 1640–1669 (2018)

Pang, J.S., Razaviyayn, M., Alvarado, A.: Computing B-stationary points of nonsmooth DC programs. Math. Oper. Res. 42, 95–118 (2017)

Pham Dinh, T., Le Thi, H.A.: Convex analysis approach to DC programming: theory, algorithms and applications. Acta Math. Vietnam. 22, 289–355 (1997)

Pham Dinh, T., Souad, E.B.: Duality in D.C. (difference of convex functions) optimization. Subgradient methods. In: Hoffmann, K.H., Zowe, J., Hiriart-Urruty, J.B., Lemarechal, C. (eds.) Trends in Mathematical Optimization. International Series of Numerical Mathematics, vol. 84. Birkhäuser, Basel (1988)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. Ser. B 117, 387–423 (2009)

Wen, B., Chen, X., Pong, T.K.: Linear convergence of proximal gradient algorithm with extrapolation for a class of nonconvex nonsmooth minimization problems. SIAM J. Optim. 27, 124–145 (2017)

Wen, B., Chen, X.J., Pong, T.K.: A proximal difference-of-convex algorithm with extrapolation. Comput. Optim. Appl. 69, 297–324 (2018)

Zhang, C.H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38, 894–942 (2010)

Zhang, T., Bach, F.: Analysis of multi-stage convex relaxation for sparse regularization. J. Mach. Learn. Res. 11, 1081–1107 (2010)

Zhou, Z., So, A.M.C.: A unified approach to error bounds for structured convex optimization problems. Math. Program. Ser. A 165, 689–728 (2017)

Acknowledgements

Both authors would like to thank Prof. Jong-Shi Pang for numerous inspiring discussions on related topics during their individual visits to the University of Southern California in 2016. Prof. Pang also proposed the main question studied in this paper. Part of this work was completed during the second author’s visit to Prof. Kung-Fu Ng at the Chinese University of Hong Kong. Min Tao was partially supported by the Chinese National Natural Science Foundation Grant (No. 11971228), the JiangSu Provincial National Natural Science Foundation of China (No. BK20181257), and the National Key Research and Development Program of China (No. 2018AAA0101100). Both authors are grateful to the anonymous referees and the associate editor for their valuable comments and suggestions, which have helped to improve the presentation of this paper.

Additional information

Communicated by Alexandre Cabot.

About this article

Cite this article

Dong, H., Tao, M. On the Linear Convergence to Weak/Standard d-Stationary Points of DCA-Based Algorithms for Structured Nonsmooth DC Programming. J Optim Theory Appl 189, 190–220 (2021). https://doi.org/10.1007/s10957-021-01827-w

DOI: https://doi.org/10.1007/s10957-021-01827-w

Keywords

- Difference-of-convex programming

- Nonsmooth

- Difference-of-convex algorithm

- Linear convergence

- Error bound