Abstract

In this note, some questions concerning the strong convergence of subgradients of convex functions along a given direction are recalled and posed. It is shown that some open problems in the literature are linked to the existence of limits of subgradients from subdifferentials along a given segment.


1 Introduction

There are several open problems concerning convexity. Some of them have a long history; others are more recent; see, for example, [1,2,3,4,5]. The aim of this note is to show that, when convex problems are examined more carefully, we encounter the problem of the directional convergence of subgradients.

To the best of the Author’s knowledge, the problem of directional convergence was first posed explicitly (and it is still open) by F. Giannessi; see [2]. In fact, F. Giannessi posed several questions, in both finite- and infinite-dimensional settings, concerning the directional convergence of gradients of convex functions. In the finite-dimensional case, our knowledge of when the convergence can and cannot be ensured is at an early stage. At present, we know that there are convex functions for which the convergence fails in some directions; examples of such functions were provided in [6,7,8]. Moreover, when subgradients are taken instead of gradients, we know that the set of such directions is negligible: the Lebesgue measure of the set of directions where the convergence fails is zero; see [9]. The infinite-dimensional case is not well understood, and there are more questions than answers; for some recent information, we refer to [9]. We do not know how large the set of good directions is. However, we know that if this set is at least dense, then we can handle some open problems concerning the farthest distance function on a Hilbert space (Klee envelope); see, for example, [3, 10]. That is, if the set of directions along which the convergence is ensured is dense in the space for some minimizer of a farthest distance function, then the subdifferential at this minimizer contains at least two different unit subgradients (that is, subgradients from the unit sphere). This would allow us to answer the two celebrated Klee problems; see, for example, [3,4,5, 11,12,13], where the problems of the convexity of Chebyshev sets and of the unique farthest point property are presented.
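The claim about a minimizer of a farthest distance function can be checked in the simplest toy case. The following Python sketch is only an illustration under our own assumptions: a finite set Q in the plane (not the infinite-dimensional setting of the Klee problems), with hypothetical helper names `farthest_distance` and `unit_subgradients`. For a finite Q, f(x) = max over q in Q of ‖x − q‖ is a maximum of finitely many convex functions, so its subdifferential at x is the convex hull of the unit vectors (x − q)/‖x − q‖ taken over the farthest points q. For Q = {(−1, 0), (1, 0)}, the origin minimizes f and carries two different unit subgradients, while along a fixed direction the subgradients converge to one of them.

```python
import math


def farthest_distance(x, Q):
    """Farthest distance function f(x) = max_{q in Q} ||x - q|| for a finite set Q."""
    return max(math.dist(x, q) for q in Q)


def unit_subgradients(x, Q, tol=1e-12):
    """Unit vectors (x - q)/||x - q|| over the farthest points q of Q from x.

    For a finite Q with at least two distinct points the farthest distance is
    positive, so every active piece ||. - q|| is differentiable at x and the
    subdifferential of f at x is the convex hull of the returned vectors."""
    fx = farthest_distance(x, Q)
    out = []
    for q in Q:
        d = math.dist(x, q)
        if d >= fx - tol:  # q is (numerically) a farthest point of Q from x
            out.append(tuple((xi - qi) / d for xi, qi in zip(x, q)))
    return out


# Hypothetical toy set in R^2; the origin minimizes f.
Q = [(-1.0, 0.0), (1.0, 0.0)]
print(unit_subgradients((0.0, 0.0), Q))  # [(1.0, 0.0), (-1.0, 0.0)]

# Along the direction (1, 0.3), the single subgradient tends to (1, 0).
direction = (1.0, 0.3)
for t in (1e-1, 1e-3, 1e-6):
    print(t, unit_subgradients((t * direction[0], t * direction[1]), Q))
```

Replacing `direction` by (−1, 0.3) makes the subgradients converge to the other unit subgradient (−1, 0).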

It also turns out that problems on the directional convergence of subgradients are involved in the theory of second-order differentiability of convex functions. For example, questions concerning the directional convergence of subgradients arise when one seeks a version of the Alexandrov Theorem in infinite dimensions; see [1] for comments and questions.

At present, it seems that, to tackle the questions posed by F. Giannessi in the infinite-dimensional setting, we should first find conditions ensuring that the set of “good” directions is at least dense in some cone of directions suited to the problem under consideration. The larger this set is, the better the tool we have at hand.

2 Perspectives and Open Problems

Let us recall Giannessi’s “first-order” questions; see [2], and see also [6,7,8] for examples of convex functions on two-dimensional spaces for which the limit in (1) does not exist. Let \(f:\mathbb {R}^n \longrightarrow \mathbb {R}\), with \(n\ge 2\), be a convex function, and set \(x(t):=(t, 0 ,\ldots , 0) \in \mathbb {R}^n\), with \(t \in \mathbb {R}\). Assume that \( \nabla f(x(t))\) exists for every \(t > 0\), and consider the following limit:

\[\lim _{t\downarrow 0}\nabla f(x(t)). \qquad (1)\]

We conjecture that the above limit may not exist. However, the question is still open. The above question can be generalized in several ways. For instance, x(t) may represent a curve having the origin as an endpoint instead of a ray, and \(\mathbb {R}^n\) may be replaced with an infinite-dimensional space.

F. Giannessi also asked several “second-order” questions. Namely, assuming additionally that the Hessian Hf(x(t)) of f at x(t) exists for all \(t>0\), he asked whether the limit

\[\lim _{t\downarrow 0} Hf(x(t))\]

might not exist. Infinite-dimensional, higher-order, and other variants of this question can also be considered. The Author would like to thank Prof. Franco Giannessi for permission to include his questions in the paper. Moreover, several discussions with Prof. F. Giannessi on his “second-order” questions have revealed how little is known about the higher-order analysis of convex functions. We do not even know whether there exists a convex function f, say on a Hilbert space, that is twice differentiable around a given point but whose Hessians have no limit at this point. An investigation might start by looking for an answer to the following question: does there exist a convex function f from \(\mathbb {R}^2\) to \(\mathbb {R}\), twice differentiable around the origin, for which the limit

\[\lim _{x\rightarrow 0} Hf(x)\]

does not exist?

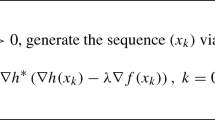

Looking at (1), several natural ideas come to mind. First, subgradients can be used instead of gradients. Second, the limit in (1) concerns the scalar case; the same questions, and many others, can be asked for convex vector-valued functions. Third, are the questions following (1) of a second-order nature? Some aspects of the third observation are discussed when the role of the following conditions in the directional convergence of subgradients,

or

is explained; see the comments following (4) and (5). Let us point out that the existence of the second-order derivative is sufficient for the existence of the limit in (1), but we know little about the existence of second-order derivatives of convex functions, even in separable Hilbert spaces (in infinite dimensions); see the last open question in this section. Perhaps investigations of the directional convergence of subdifferentials in infinite dimensions that involve second-order theory should start with the construction of an example of a convex function that has a second-order Gâteaux derivative but no second-order Gâteaux expansion at a point.

The directional aspects of the questions are essential. Let us rewrite the above questions so as to expose the role of directions in the convergence and to include subdifferentials in the investigation; in the sequel, \(\partial \) stands for the subdifferential in the sense of convex analysis. Since convergence along arcs is not considered below, the questions are presented in a form convenient for directional convergence; see (3).

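Let \((X,\Vert \cdot \Vert )\) be a normed space, let \(f:X \longrightarrow \mathbb {R} \cup \{+\infty \}\) be a convex function, let \(x\in X\) and \(w\in X\setminus \{0\}\) be fixed, and let U be an open convex set such that \(x\in U\) and \(f_{|U}\) is continuous (the restriction of f to U is a continuous function); this assumption is imposed only to make the presentation clearer and can be dropped. Consider the following limit:

\[\lim _{t\downarrow 0}\partial f(x+tw) \qquad (3)\]

when it exists; the limit exists whenever the upper and lower limits coincide, where

\[\limsup _{t\downarrow 0}\partial f(x+tw):=\{x^*\in X^*: \liminf _{t\downarrow 0} d_{\partial f(x+tw)}(x^*)=0\},\qquad \liminf _{t\downarrow 0}\partial f(x+tw):=\{x^*\in X^*: \lim _{t\downarrow 0} d_{\partial f(x+tw)}(x^*)=0\},\]

and \(d_{\partial f(x+tw)}(x^*)\) stands for the distance of \(x^*\) from the subdifferential of f at \(x+tw\), that is, the distance from the set

\[\partial f(x+tw):=\{y^*\in X^*: f(z)\ge f(x+tw)+\langle y^*, z-(x+tw)\rangle \ \text{for all } z\in X\}.\]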

Let us ask some basic questions (see also the questions following (1)): when does the limit in (3) exist? Is there any direction for which the limit exists? Is the set of “good” directions, for which the convergence holds, dense in the space? Can we obtain positive answers to the questions on the limit in (3) by slightly changing the function under consideration? In other words, does a small perturbation preserve the directional convergence of subgradients along a dense subset of directions? In fact, to stay in the spirit of Giannessi’s questions, we should also add the following question: for which directions is the limit in (3) a singleton? This question is perhaps the most demanding one and will require a lot of work to be elaborated properly.
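The distance-based upper and lower limits behind (3) can be illustrated in one dimension, where the subdifferential is a closed interval. The Python sketch below assumes a hypothetical piecewise-linear function f(x) = max(−x, 2x) (our choice, not from the text), with x = 0 and w = 1, and replaces t ↓ 0 by the finite sequence t = 2^(−k); it only approximates the liminf and lim of the distances by inspecting this sequence. On the real line the limit in (3) always exists, since the one-sided derivatives of a convex function are monotone, so this illustrates the definitions rather than the open questions.

```python
def subdifferential(x, pieces, tol=1e-12):
    """Return (lo, hi), the subdifferential at x of f(x) = max_i (a_i*x + b_i) on R.

    It is the convex hull of the slopes a_i of the affine pieces active at x."""
    values = [a * x + b for a, b in pieces]
    fx = max(values)
    slopes = [a for (a, _), v in zip(pieces, values) if v >= fx - tol]
    return (min(slopes), max(slopes))


def dist_to_interval(s, interval):
    """Distance from the real number s to the closed interval [lo, hi]."""
    lo, hi = interval
    return max(lo - s, 0.0, s - hi)


# Hypothetical data: f(x) = max(-x, 2x), whose subdifferential at 0 is [-1, 2].
pieces = [(-1.0, 0.0), (2.0, 0.0)]
x0, w0 = 0.0, 1.0
ts = [2.0 ** -k for k in range(1, 30)]  # finite stand-in for t -> 0+


def distances(s):
    """Distances from s to the subdifferential of f at x0 + t*w0, for t in ts."""
    return [dist_to_interval(s, subdifferential(x0 + t * w0, pieces)) for t in ts]


print(subdifferential(x0, pieces))  # (-1.0, 2.0)
print(max(distances(2.0)))          # 0.0: s = 2 lies in the lower (hence upper) limit
print(min(distances(0.0)))          # 2.0: s = 0 lies in neither limit
```

Here the limit is {2}, the right derivative of f at 0, which is a proper subset of the subdifferential [−1, 2] at the origin.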

To understand the meaning of these questions, let us consider a specific class of functions. Let \({\mathbb {H}}\) be a real Hilbert space and \(f:{\mathbb {H}} \longrightarrow \mathbb {R}\) be a continuous convex function such that \(\partial f(x)\cap S_{{\mathbb {H}}}[0,1]\not = \emptyset \) for all \(x\in {\mathbb {H}}\), where \(S_{{\mathbb {H}}}[0,1]\) stands for the unit sphere; we refer to [14, Corollary 4.4] for more information on such functions. Suppose that, for a given \({\bar{x}}\in {\mathbb {H}}\), the set \(\partial f({\bar{x}})\cap S_{{\mathbb {H}}}[0,1]\) is a singleton, say \(\{{\bar{x}}^*\}= \partial f({\bar{x}})\cap S_{{\mathbb {H}}}[0,1]\). Does this imply that the Gâteaux derivative \(D_Gf({\bar{x}})\) exists? Suppose that the set of “good” directions is dense in the space. Then, for all h from a dense set, we have

and consequently, by continuity, we get

which implies Gâteaux differentiability. In fact, in some important cases, to get differentiability it is enough to have the directional convergence near \({\bar{h}}\) (on a dense subset of a neighborhood of \({\bar{h}}\)), where \(\langle {\bar{x}}^*, {\bar{h}}\rangle =-1\). We omit the presentation of this fact, since it is too complicated. A good example where the directional convergence of subgradients would work effectively is the following problem of V. Klee, which is still open (see [4]): suppose that Q is a subset of a Hilbert space \({\mathbb {H}}\) such that each point of \(\mathbb H\) admits a unique farthest point in Q. Must Q consist of a single point? To see the connection, let f be the farthest distance function; see [10]. Applying the above reasoning, we get that f is Gâteaux differentiable; thus, using Theorem 5 in [10] and Theorem 4.2 in [15], we infer that either f is Fréchet differentiable at all points of \(\mathbb H\) or Q is a singleton. The Fréchet differentiability of f is excluded once Theorem 4.4 in [15] and Theorem 5 in [10] are taken into account. Thus, if we had the directional convergence of subgradients, we would obtain a solution of the farthest point problem. Similar reasoning can be carried out for the so-called Chebyshev sets. We omit the details, since they require several notions and results that allow one to transform the problem of the convexity of Chebyshev sets into the problem of the directional convergence of subgradients of a function related to the distance function; see, for example, Ficken’s method in [13]. Of course, the reasoning can also be reversed. For example, if there is a subset Q with the unique farthest point property that is not a singleton, then, for the farthest distance function generated by this set, there is a point at which the set of wrong directions has nonempty interior, that is, the directional convergence of subgradients fails on a set with nonempty interior.
This relation illustrates the potential role of the directional convergence of subgradients in the investigation of problems of convex analysis. To the best of the Author’s knowledge, the directional convergence of subgradients of convex functions has been little explored and has rarely been applied as a tool for obtaining new results.
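The step from directional convergence to Gâteaux differentiability used above can be spelled out with the subgradient inequality alone. The derivation below is a sketch under our own assumption that, for every h in a dense set \(D\subset {\mathbb {H}}\), there are \(x_t^*\in \partial f({\bar{x}}+th)\) converging strongly to \({\bar{x}}^*\) as \(t\downarrow 0\); it is not claimed to reproduce the paper's own displays.

```latex
% Assumption (ours): h \in D, x_t^* \in \partial f(\bar x + th), x_t^* \to \bar x^* strongly as t \downarrow 0.
\begin{align*}
f(\bar x+th)-f(\bar x) &\ge \langle \bar x^*, th\rangle
  && \text{since } \bar x^*\in\partial f(\bar x),\\
f(\bar x)-f(\bar x+th) &\ge \langle x_t^*, -th\rangle
  && \text{since } x_t^*\in\partial f(\bar x+th),\\
\Longrightarrow\quad \langle \bar x^*, h\rangle \le \frac{f(\bar x+th)-f(\bar x)}{t} &\le \langle x_t^*, h\rangle
  && (t>0),\\
\Longrightarrow\quad f'(\bar x;h) &= \langle \bar x^*, h\rangle
  && \text{for all } h\in D \quad (\text{let } t\downarrow 0).
\end{align*}
```

Since f is continuous and convex on \({\mathbb {H}}\), it is Lipschitz near \({\bar{x}}\), so \(h\mapsto f'({\bar{x}};h)\) is Lipschitz. Agreeing with the continuous linear functional \(\langle {\bar{x}}^*,\cdot \rangle \) on the dense set D, it agrees with it everywhere, that is, \(D_Gf({\bar{x}})={\bar{x}}^*\).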

In the finite-dimensional setting, due to the Rademacher Theorem, we know that the set of directions for which the limits in (3) exist is a set of full measure. In the infinite-dimensional setting, results of this form can hardly be expected unless additional assumptions are imposed on the function under consideration. Moreover, one can construct a simple example of a function for which the set of wrong directions (those without convergence) is large in the topological sense, that is, it contains a dense \(G_\delta \) subset. This indicates that, in general, it is impossible to find a sector (drop, cone) with nonempty interior such that the subgradients converge along all directions from the sector. This may seem discouraging for future investigations. However, a result showing that the set of good directions is dense should be sought. This is the most that can be expected in a general setting, and it is enough to answer the problems mentioned above. Of course, by adding some assumptions on the function, we can enlarge the set of good directions. For example, sometimes we are interested in convergence within some cone of directions, that is, we expect the limits in (3) to exist for all directions from some cone. For this purpose, we can use some uniform upper semicontinuity of the directional derivatives or of second (higher)-order difference quotients; see, for example, [1] for some comments on the role of higher-order theory and problems in variational analysis. Assumptions of the form

or

ensure that the set of directions for which the limit in (3) exists is at least a dense \(G_\delta \) subset of the ball \(B_{X}[w_0,\beta _0]\), where \((X, \Vert \cdot \Vert )\) is an Asplund space and \(w_0\in X\), \(\beta _0>0\) are given. The convergence of subdifferentials along these directions can be established by using Theorem 3.1 [9]. We also expect that more sophisticated second-order conditions can be used to specify good directions of convergence. Of course, if the function involved in (5) has a second-order expansion at x, then (5) is fulfilled. At first sight, condition (5) does not seem to hide many difficulties. However, even in a Hilbert space setting, we can stumble over unresolved problems of convex analysis whenever we want to ensure (5) by means of a second-order derivative. Let us first recall that there are convex continuous functions on Hilbert spaces that are nowhere second-order differentiable; see, for example, Section 5.1 [1]. However, in separable Hilbert spaces, we do not know whether such functions exist. Thus, we should ask: is it possible to construct a convex continuous function f on a separable Hilbert space such that, for every point x at which the Gâteaux derivative \(D_Gf(x)\) exists, the set of directions for which the directional convergence of subgradients holds is not dense in the space?

An example of such a function should not be sought among antidistance functions or Asplund functions (see [9] for the definitions of these functions), because Gâteaux differentiability is known to entail Fréchet differentiability for these functions, and then, due to Corollary 2 [16] (see also Corollary 4.2 [14]), we have the directional convergence of subdifferentials for all directions.

If we were able to construct such a function, then (5) would not be satisfied, so the second derivative would not exist at such a point; consequently, we would obtain a negative answer to the following question from [1]: Does every continuous convex function on a separable Hilbert space admit a second-order Gâteaux expansion at at least one point (or perhaps on a dense set of points)? Jonathan M. Borwein wrote that this was, to him, the most intriguing open question about convex functions; see the section “Alexandrov Theorem in Infinite Dimensions” in [1].

3 The Density of the Set of “Good” Directions for the Directional Convergence of Subdifferentials

In this section, we present an example of a function on an infinite-dimensional Hilbert space for which the set of directions along which the limits in (3) exist is dense. First, we present a result revealing the role of the upper and lower limits in the existence of the limit in (3). Namely, the following lemma ensures, on a dense subset of directions, only the existence of \(\limsup _{t\downarrow 0}\partial f(x+tw)\) as a nonempty set; we do not know whether the limit itself exists.

Assume that \({\mathbb {H}}\) is a Hilbert space, \(\{x_1^*, x_2^*, \ldots \}\subset {\mathbb {H}}\) is a bounded subset, \(\beta _1\ge 0, \beta _2\ge 0,\ldots \), and \(\mathrm{ker}\, x^*_i:=\{z\in {\mathbb {H}}: \langle x^*_i,z\rangle =0\}\). Let us define the following convex function \(f:{\mathbb {H}} \longrightarrow \mathbb {R}\):

\[f(x):=\sup _{i\in \mathbb {N}}\big (\langle x_i^*, x\rangle -\beta _i\big ). \qquad (6)\]

Lemma 3.1

Let \({\mathbb {H}}\) be an infinite-dimensional Hilbert space,

be finite-dimensional subspaces, \(\{x_1^*, x_2^*, \ldots \}\subset {\mathbb {H}}\) be a bounded subset and \(\beta _1\ge 0, \beta _2\ge 0,\ldots \) be such that \(\liminf _{i\rightarrow \infty }\beta _i=0\), and \(t_1>t_2>\ldots >0\) be such that \(\lim _{j\rightarrow \infty }t_j=0\). Assume that for f defined in (6) the following conditions are satisfied:

and for some dense subset \(Y\subset \bigcup _{j\in \mathbb {N}}Y_j\) we have

Then, \(f(0)=0\) and for all \(y\in Y\), \(h\in \bigcap _{i\in \mathbb {N}}\mathrm{ker}\, x^*_i\) the limits

exist and are nonempty sets; and consequently the set of all directions \(w\in {\mathbb {H}}\) such that the limits

exist and are nonempty sets, is dense in \({\mathbb {H}}\).

Proof

It is easy to see that \(f(0)=0\). Suppose that \(\mathrm {span}\,\{x_1^*,x_2^*,\dots \}\) is an infinite-dimensional subspace; otherwise we are done, that is, the limits in (10) exist for all \(w\in {\mathbb {H}}\), since in this case the set \( \{x_1^*,x_2^*,\dots \}\) is relatively compact. Let us fix \(y\in Y\) and \(h\in \bigcap _{i\in \mathbb {N}}\mathrm{ker}\, x^*_i\). Choose \(k\in \mathbb {N}\) such that (see (8))

For every \(j\in \mathbb {N}\), take \(a^*_j\in \partial f(t_jy)\) such that \(\lim _{j\rightarrow \infty } d_{Y_k}(a^*_j)=0\). Owing to the local compactness of \(Y_k\), we can choose a convergent subsequence \(\{a^*_{j_m}\}_{m\in \mathbb {N}}\); thus

Thus both limits in (9) exist and are nonempty sets.

Take \(\{y_1^*,y_2^*,\ldots \}\subset S_{{\mathbb {H}}} \) such that \(\langle y^*_i, y_j^* \rangle =0\), whenever \(i\not =j\) and

Let us fix any \(w_0\in {\mathbb {H}}\). For every \(n\in \mathbb {N}\) let us define

Obviously

For all \(n\in \mathbb {N}\) take \(y_n\in Y\) such that

and observe that for all \(t>0\) we have

and

Applying (11) with \(y_n\) in place of y, we are done. \(\square \)

Condition (8) can be met in several ways. For example, suppose that \(\{x_1^*, x_2^*, \ldots \}\subset {\mathbb {H}}\) is a bounded subset of a Hilbert space, \(\beta _1>0, \beta _2>0,\ldots \) are such that \(\liminf _{i\rightarrow \infty }\beta _i=0\), and \(t_1>t_2>\ldots >0\) are such that \(\lim _{i\rightarrow \infty }t_i=0\). Assume that, for all \(k\in \mathbb {N}\), with \(I_k:= \{i\in \mathbb {N}: x_i^*\not \in Y_k\}\), the following condition is satisfied:

whenever \(I_k\) is infinite. Then (8) is also valid, provided that (7) is satisfied. We leave this without proof, since it is a rather simple observation. Let us also point out that for \({\mathbb {H}}:=l_2\) and \(x_i^*:=e_i\), where \(e_{i}=(e_{i1},e_{i2},\dots )\) and

\[e_{ij}:=\begin{cases}1, & i=j,\\ 0, & i\not =j,\end{cases}\]

the conditions in (12) are satisfied for any \(\beta _1>0, \beta _2>0,\ldots \) and

Example 3.1

Let us put \({\mathbb {H}}:=l_2\) and \(f(x):=\sup _{i\in \mathbb {N}}(x_i - i^{-1})\), that is, f is given by (6) with \(x_i^*:=e_i\) and \(\beta _i:=i^{-1}\). Of course, \(f(0)=0\) and \(\partial f(0)=\{0\}\), so f is Gâteaux differentiable at the origin. It follows from Lemma 3.1 that the limits \(\limsup _{t\downarrow 0}\partial f(tw)\) and \(\limsup _{i\rightarrow \infty }\partial f(t_iw)\) exist for all w from a dense subset of \({\mathbb {H}}\); see (9). Moreover, it follows from Lemma 3.1 [9] that

Hence, for all w from the dense subset of \({\mathbb {H}}\) we get

However, for every direction \(h\in l_2\setminus \{0\}\), the directional convergence of subgradients can be spoiled by directions arbitrarily close to h. Indeed, take integers \(i_k\in \mathbb {N}\) such that \(i_1<i_2< \dots \), \(i_{k+1}>i_{k}^2\) for all \(k\in \mathbb {N}\), and \(\sum _{k\in \mathbb {N}}i_k^{-\frac{1}{2}}<\infty \). Let us take \(h:=(h_1,h_2,\ldots )\in l_2\), \(\epsilon >0\), and \(i_k\) such that

Observe that for

we have \(e_{i_{j}}\in \partial f(i_{j}^{-\frac{1}{4}}h_\epsilon )\) for all \(j\in \mathbb {N}\) large enough. Of course, the sequence \(\{e_{i_{j}}\}_{j\in \mathbb {N}}\) is not strongly convergent to any point of \(l_2\) (it converges weakly to the origin). Thus, strong convergence fails on a dense subset of directions. To see that the set of wrong directions contains a dense \(G_\delta \) subset, let us put

for all \(k\in \mathbb {N}\). It is not difficult to verify that W is a dense \(G_{\delta }\) subset of \(l_2\) and that, for \(w\in W\), the limit in (3) does not exist. \(\square \)
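The mechanism behind Example 3.1, subgradients \(e_i\) whose index escapes to infinity, can be observed on a finite truncation. The Python sketch below assumes the direction \(w_i=i^{-0.6}\) (our illustrative choice; it is not claimed to belong to the set W above) and, for a few values of t, finds the affine piece of \(f(x)=\sup _{i}(x_i-i^{-1})\) that is active at tw. When this maximizer \(i(t)\) is unique and its value exceeds the tail bound checked in the code, \(\partial f(tw)=\{e_{i(t)}\}\).

```python
def w_coord(i):
    """Hypothetical direction w_i = i**(-0.6); it lies in l_2 because 2*0.6 > 1."""
    return i ** -0.6


def active_index(t, N):
    """Maximize t*w_i - 1/i over 1 <= i <= N.

    These are the affine pieces x_i - 1/i of f(x) = sup_i (x_i - 1/i) at x = t*w;
    returns the first maximizing index and the maximal value."""
    best_i, best_v = 1, t * w_coord(1) - 1.0
    for i in range(2, N + 1):
        v = t * w_coord(i) - 1.0 / i
        if v > best_v:
            best_i, best_v = i, v
    return best_i, best_v


N = 20_000
results = []
for t in (1.0, 0.5, 0.25, 0.125):
    i_t, v_t = active_index(t, N)
    # For i > N the pieces lie below t*w_N (w is decreasing), so the truncation
    # does not change the maximizer as long as v_t exceeds this tail bound.
    tail_ok = v_t > t * w_coord(N)
    results.append((t, i_t, tail_ok))
    print(f"t = {t}: active index i(t) = {i_t}, tail bound respected: {tail_ok}")
```

Setting the derivative of \(t\,i^{-0.6}-i^{-1}\) with respect to i to zero gives \(i(t)\approx (0.6t)^{-5/2}\), so \(i(t)\rightarrow \infty \) as \(t\downarrow 0\). Since \(\Vert e_i-e_j\Vert =\sqrt{2}\) for \(i\not =j\), the subgradients \(e_{i(t)}\) have no strongly convergent subsequence, although they converge weakly to the origin.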

At present, it is unknown whether one can provide an example of a convex continuous function on a Hilbert space for which the limits in (10) fail to exist for all directions from a dense \(G_\delta \) subset; this is an open question. The example above shows only that the limit in (3) does not exist for all directions from a dense \(G_\delta \) subset. Our knowledge of conditions ensuring the existence of the limits in (3) is limited. In this section, functions f generated by a sequence of functionals are considered. (This is not a restrictive simplification in a separable Hilbert space setting.) The key role in the presentation is played by condition (8) (of course, several substitutes for (8) can be found, but this is not the aim of this note). In the next example, we consider a case where (8) may fail. In this case, however, we reduce the problem of the existence of limits to the investigation of the limit in (14). It is also of interest whether the existence of the limits can be preserved by passing to a function close to f. For example, fix a direction \(w_0\) and \(\epsilon >0\) as small as needed. It would be valuable to know whether there exist a direction w with \(\Vert w_0-w\Vert <\epsilon \) and a convex continuous function, say \(g_{\epsilon }\), such that

and the limit

exists; we refer to [14, 17,18,19] for some information on how to preserve the inequality in (13).

Example 3.2

Let us suppose that f is defined as in (6) with \(\beta _1>0, \beta _2>0,\ldots \) such that \(\liminf _{i\rightarrow \infty }\beta _i=0\) and

are finite-dimensional subspaces such that both conditions in (7) are fulfilled and

Let us fix \(\epsilon >0\) and choose integers \(i_1<i_2< \cdots \) such that

Let \(P_{Y_k}\) stand for the metric projection onto \(Y_k\). For all \(k\in \mathbb {N}\) and \(i\in \{i_k,\ldots , i_{k+1}-1\}\), put

and

Simple algebra shows that \(f(0)=g_{\epsilon }(0)\), that \(g_{\epsilon }(y)\ge 0\) for all \(y\in {\mathbb {H}}\), that (13) is fulfilled for \(x=0\), and that (12) holds. Thus, the limit in (14) exists for \(g_{\epsilon }\) defined in (15). \(\square \)
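Example 3.2, and the proof of Lemma 3.1 through \(d_{Y_k}\), rely on the metric projection onto a finite-dimensional subspace. In a Hilbert space, the metric projection onto a closed subspace is the orthogonal projection, so for a subspace given by finitely many spanning vectors it can be computed by Gram-Schmidt orthonormalization. The sketch below does this in \(\mathbb {R}^n\), a finite-dimensional stand-in (our assumption) for the subspaces \(Y_k\); the data and the helper names `orthonormal_basis` and `project` are hypothetical.

```python
import math


def orthonormal_basis(vectors, tol=1e-12):
    """Gram-Schmidt: an orthonormal basis of span(vectors); dependent vectors are dropped."""
    basis = []
    for v in vectors:
        u = list(v)
        for e in basis:
            c = sum(ui * ei for ui, ei in zip(u, e))
            u = [ui - c * ei for ui, ei in zip(u, e)]
        norm = math.sqrt(sum(ui * ui for ui in u))
        if norm > tol:
            basis.append([ui / norm for ui in u])
    return basis


def project(x, vectors):
    """Metric (= orthogonal) projection of x onto the subspace spanned by `vectors`."""
    p = [0.0] * len(x)
    for e in orthonormal_basis(vectors):
        c = sum(xi * ei for xi, ei in zip(x, e))
        p = [pi + c * ei for pi, ei in zip(p, e)]
    return p


# Hypothetical Y = span{(1,0,0), (1,1,0)} in R^3 and a point to project.
Y = [(1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
point = (1.0, 2.0, 3.0)
p = project(point, Y)
print(p)                     # [1.0, 2.0, 0.0]
print(math.dist(point, p))   # 3.0, the distance d_Y(point)
```

The residual `point - p` is orthogonal to every spanning vector of Y, which characterizes the metric projection onto a subspace of a Hilbert space.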

Finally, let us pose the following question (see the questions following (3)): is there a convex continuous function on a Hilbert space such that, for some x, the limit in (3) fails to exist for all directions from some nonempty open set? If the answer to this question is negative, then, for example, the problems posed by V. Klee can be solved using the directional convergence of subgradients, as discussed above.

4 Conclusions

1. Open problems on the directional convergence of subgradients, subdifferentials and Hessians are recalled and posed.

2. Some relations with open problems known in the literature are pointed out, namely with Klee’s problems (the convexity of Chebyshev sets and the farthest point conjecture) and with Borwein’s question on the Alexandrov Theorem in infinite dimensions.

3. It should be stressed that it would be valuable to discover, in the infinite-dimensional setting, a result ensuring the density of the set of directions along which the convergence holds.

References

[1] Borwein, J.M.: Future challenges for variational analysis. In: Burachik, R.S., Yao, J.-C. (eds.) Variational Analysis and Generalized Differentiation in Optimization and Control. Springer Optimization and Its Applications, vol. 47, pp. 95–105. Springer, Berlin (2010)

[2] Giannessi, F.: A problem on convex functions. J. Optim. Theory Appl. 59, 525 (1988)

[3] Hiriart-Urruty, J.-B.: Potpourri of conjectures and open questions in nonlinear analysis and optimization. SIAM Rev. 49, 255–273 (2007)

[4] Klee, V.L., reproduced with comments by Grünbaum, B.: Unsolved Problems in Intuitive Geometry, 1960/2010. https://alliance-primo.hosted.exlibrisgroup.com/primoexplore/fulldisplay?docid=CP71177826060001451&context=L&vid=UW&lang=en_US&search_scope=all&adaptor=Local%20Search%20Engine&tab=default_tab&query=any,contains,UNSOLVED%20PROBLEMS%20IN%20INTUITIVE%20GEOMETRY&sortby=rank

[5] Ricceri, B.: A conjecture implying the existence of non-convex Chebyshev sets in infinite-dimensional Hilbert spaces. In: Le Matematiche, vol. LXV, Fasc. II, pp. 193–199 (2010)

[6] Pontini, C.: Solving in the affirmative a conjecture about a limit of gradients. J. Optim. Theory Appl. 70, 623–629 (1991)

[7] Rockafellar, R.T.: On a special class of convex functions. J. Optim. Theory Appl. 70, 619–621 (1991)

[8] Zagrodny, D.: An example of bad convex function. J. Optim. Theory Appl. 70, 631–638 (1991)

[9] Zagrodny, D.: On the strong convergence of subgradients of convex functions. J. Optim. Theory Appl. https://doi.org/10.1007/s10957-018-1276-7

[10] Jourani, A., Thibault, L., Zagrodny, D.: The NSLUC property and Klee envelope. Math. Ann. 365(3), 923–967 (2016)

[11] Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert spaces. Springer, Berlin (2011)

[12] Deutsch, F.: Best Approximation in Inner Product Spaces. Canadian Mathematical Society. Springer, New York (2001)

[13] Klee, V.L.: Convexity of Chebyshev sets. Math. Ann. 142, 292–304 (1961)

[14] Jourani, A., Thibault, L., Zagrodny, D.: \(C^{1,\omega (\cdot )}\)-regularity and Lipschitz-like properties of subdifferential. Proc. Lond. Math. Soc. 105, 189–223 (2012)

[15] Jourani, A., Thibault, L., Zagrodny, D.: Differential properties of the Moreau envelope. J. Funct. Anal. 266, 1185–1237 (2014)

[16] Asplund, E., Rockafellar, R.T.: Gradients of convex functions. Trans. Am. Math. Soc. 139, 443–467 (1969)

[17] Thibault, L., Zagrodny, D.: Integration of subdifferentials of nonconvex functions. J. Math. Anal. Appl. 189, 33–58 (1995)

[18] Thibault, L., Zagrodny, D.: Enlarged inclusion of subdifferentials. Can. Math. Bull. 48, 283–301 (2005)

[19] Thibault, L., Zagrodny, D.: Subdifferential determination of essentially directionally smooth functions in Banach space. SIAM J. Optim. 20, 2300–2326 (2010)


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zagrodny, D. The Strong Convergence of Subgradients of Convex Functions Along Directions: Perspectives and Open Problems. J Optim Theory Appl 178, 660–671 (2018). https://doi.org/10.1007/s10957-018-1323-4
