Abstract

We consider the Lorentz gas in a distribution of scatterers which microscopically converges to a periodic distribution, and prove that the Lorentz gas in the low density limit satisfies a linear Boltzmann equation. This is in contrast with the periodic Lorentz gas, which does not satisfy the Boltzmann equation in the limit.


1 Introduction

The Lorentz gas was introduced in [14] to give a new understanding of phenomena such as electric resistivity and the Hall effect. Lorentz introduced many simplifications to admit “rigorously exact solutions” to some questions, which has made the model very attractive in the mathematical community.

The Lorentz gas can be described as follows: Let \({\mathcal X}\) be a point set in \(\mathbb {R}^2\) (actually most of what is said in this paper could equally well have been set in \(\mathbb {R}^n\) with \(n\ge 2\)). The point set can be expressed as a locally finite counting measure,

\[ {\mathcal X}(A) = \#\left( {\mathcal X}\cap A\right) , \tag{1} \]

i.e. the number of points in the set A, or the empirical measure

\[ {\mathcal X} = \sum _{x\in {\mathcal X}} \delta _{x} . \tag{2} \]

Examples of interest are the periodic set \({\mathcal X}=\mathbb {Z}^2\), or random point processes such as a Poisson distribution. The Lorentz process is the motion of a point particle with constant speed in the plane, colliding elastically with the obstacles, which are circular of a fixed radius r and situated at each point \(X\in {\mathcal X}\). Given an initial position and velocity of the point particle, \((x_0,v_0)\in \mathbb {R}^2\times S^1\), its position at time t is given by

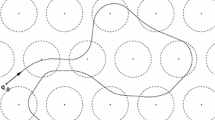

where \(t_0=0\), \(\{t_1,\ldots ,t_M\}\) is the set of times in [0, t] at which the trajectory of the point particle hits an obstacle, and \(v_j\) is the new velocity that results from a specular reflection on the obstacle. We also set \(x_j=x(t_j)\). The notation is clarified in Fig. 1. For a general point set \({\mathcal X}\) it may happen that obstacles touch or overlap; a particle could then be trapped, or at least experience an infinite number of collisions in a finite time interval, and (3) may fail to be valid. By adding further constraints on the obstacle configurations one can ensure that (3) is always valid, or fails only for a set of initial conditions of measure zero. This is true, for example, if the point set is a Delone set, and hence satisfies bounds on the minimal distance between the points as well as on the density of points.

If \({\mathcal X}\) is deterministic, or if one considers a fixed realization of a random point process, this is a deterministic motion. Then \(T^t_{{\mathcal X},r}\) forms a group of maps, and \((x,v)\mapsto T^t_{{\mathcal X},r}(x,v)\) is continuous in the interior of \(\mathcal {M}:= \left( \mathbb {R}^2{\setminus } \cup _{{ x}\in {\mathcal X}} \bar{B}_{r}({ x})\right) \times S^1\), where \(B_{r}( x) \) is the open disk of radius r and center at x, except at points (x, v, t) such that \(T^t_{{\mathcal X},r}({ x},v)\) belongs to the boundary of \(\mathcal {M}\). On this boundary, where the collisions take place, v jumps, and it is natural to identify ingoing and outgoing velocities, i.e. points (y, v) and \(({ y},v')\) such that \( |{ y}-{ x}| =r\) and \(({ y}-{ x})\cdot (v + v')=0\) for some \(x\in {\mathcal X}\); one may then choose to represent this point by the outgoing velocity, which makes \(T^t_{{\mathcal X},r}(x,v)\) well-defined for all x, v and t.
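As a quick numerical check of the collision rule just described (a minimal sketch, not taken from the paper; the helper names `reflect` and `collision_normal` are our own), the specular reflection \(v' = v - 2(\omega \cdot v)\,\omega \), with \(\omega \) the unit normal at the collision point y, produces an outgoing velocity of unit length for which \((y-x)\cdot (v+v')=0\):

```python
import math


def reflect(v, omega):
    """Specular reflection v' = v - 2 (omega . v) omega, omega a unit vector."""
    dot = v[0] * omega[0] + v[1] * omega[1]
    return (v[0] - 2 * dot * omega[0], v[1] - 2 * dot * omega[1])


def collision_normal(x, y):
    """Outward unit normal at the point y on the boundary of the obstacle centred at x."""
    d = (y[0] - x[0], y[1] - x[1])
    n = math.hypot(d[0], d[1])
    return (d[0] / n, d[1] / n)


# Obstacle of radius r at the origin; the particle moves along +e1 on the line y = b.
x, r, b = (0.0, 0.0), 1.0, 0.3
y = (-math.sqrt(r * r - b * b), b)   # first point where the line meets the circle
v = (1.0, 0.0)
v_out = reflect(v, collision_normal(x, y))

# The identification used in the text: (y - x) . (v + v') = 0, and |v'| = |v| = 1.
tangency = (y[0] - x[0]) * (v[0] + v_out[0]) + (y[1] - x[1]) * (v[1] + v_out[1])
speed = math.hypot(v_out[0], v_out[1])
print(tangency, speed)
```

Both printed quantities should agree with 0 and 1 up to rounding; any other offset b in (-1, 1) can be tried in the same way.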

For a given point set \({\mathcal X}\) we also consider the rescaled set \({\mathcal X}_{\epsilon }=\sqrt{\epsilon } {\mathcal X}\), so that for any set \(A\subset \mathbb {R}^2\)

\[ {\mathcal X}_{\epsilon }(A) = {\mathcal X}\left( \epsilon ^{-1/2}A\right) . \tag{4} \]

We think of \({\mathcal X}\) as describing the domain of the Lorentz gas at a microscopic scale, and \({\mathcal X}_{\epsilon }\) as describing it at the macroscopic scale. Expressed in the macroscopic scale, we assume that for any open set \(A\subset \mathbb {R}^2\)

\[ \lim _{\epsilon \rightarrow 0} \epsilon \, {\mathcal X}_{\epsilon }(A) = c\, m(A), \tag{5} \]

where m(A) is the Lebesgue measure of the set A and c is a positive constant.

For the rest of the paper the obstacle radius is fixed to be equal to \(\epsilon \) in the macroscopic scaling, and therefore \(\sqrt{\epsilon }\) in the microscopic scaling.

In the macroscopic scale, the time \(t_1\) of the first encounter with an obstacle for a typical trajectory \(T_{{\mathcal X}_{\epsilon }, \epsilon }^t(x_0,v_0)\) satisfies \(t_1 =\mathcal {O}(1/c)\), i.e. the mean free path-length of a typical trajectory is of the order 1/c. This is known as the low density limit, or the Boltzmann-Grad limit.
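The order of magnitude of \(t_1\) can be illustrated numerically. The sketch below rests on an assumption not made in the text: the obstacle centers form a Poisson process of intensity \(c/\epsilon \), the simplest point set with the macroscopic density assumed above. A particle leaves the origin along \(e_1\); only centers within distance \(\epsilon \) of its line can be hit, and their projections on the line form a one-dimensional Poisson process of rate \((c/\epsilon )\cdot 2\epsilon = 2c\), independent of \(\epsilon \). The empirical mean of \(t_1\) should therefore be close to \(1/(2c)\). Obstacles that already contain the starting point are ignored, in the spirit of Remark 2.2; the function name is hypothetical.

```python
import math
import random


def first_collision_time(c, eps, rng):
    """Free path of a particle leaving the origin along +e1, among obstacles of
    radius eps whose centres form a Poisson process of intensity c/eps (assumption).

    Only centres with |y| < eps can be hit; their x-coordinates form a 1D Poisson
    process of rate (c/eps) * 2 eps = 2c. Obstacles that already contain the
    starting point are ignored."""
    rate = (c / eps) * (2 * eps)
    a, best = 0.0, math.inf
    while True:
        a += rng.expovariate(rate)            # x-coordinate of the next centre in the strip
        if a - eps > best:                    # no later centre can be reached earlier
            return best
        b = rng.uniform(-eps, eps)            # transverse offset of that centre
        entry = a - math.sqrt(max(0.0, eps * eps - b * b))
        if entry >= 0:
            best = min(best, entry)


rng = random.Random(1)
c = 1.0
means = {}
for eps in (1e-2, 1e-4):
    samples = [first_collision_time(c, eps, rng) for _ in range(20000)]
    means[eps] = sum(samples) / len(samples)
    print(eps, means[eps], 1 / (2 * c))
```

The mean free path stays near \(1/(2c)\) as \(\epsilon \) decreases, which is the Boltzmann-Grad scaling in its simplest form.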

Consider next an initial density of point particles, i.e. a non-negative function \(f_0\in L^1(\mathbb {R}^2\times S^1)\), and its evolution under the Lorentz process. For a fixed point set \({\mathcal X}\) the density at a later time is given by \(f_{\epsilon ,t}=f_{\epsilon }(x,v,t) = f_0(T^{-t}_{{\mathcal X}_{\epsilon },\epsilon }(x,v))\), which is well-defined when (3) holds for almost all \((x_0,v_0)\), because the map \(T^{-t}_{{\mathcal X}_{\epsilon },\epsilon }\) is both invertible and measure preserving. One may now study the limit of \(f_0(T^{-t}_{{\mathcal X}_{\epsilon },\epsilon }(x,v))\) for a given realization of \({\mathcal X}\); this is known as the quenched limit, as opposed to the annealed limit (see [1]), where the object of study is the expectation over all realizations of \({\mathcal X}\), \(f_{\epsilon }(x,v,t) = \mathbb {E}[f_0(T^{-t}_{{\mathcal X}_{\epsilon },\epsilon }(x,v))]\) (see [15]). Here the expectation is taken with respect to the probability distribution of the point set \({\mathcal X}\). Of course there is no difference when \({\mathcal X}\) is deterministic.

Equivalently the evolution \(f_{\epsilon ,t}\) in the annealed setting is defined as the function that satisfies, for each \(g\in C_0(\mathbb {R}^2\times S^1)\)

Gallavotti [8, 9] proved that when \({\mathcal X}\) is a Poisson process with unit intensity, and \(\epsilon \) converges to zero, then \(f_{\epsilon ,t}\) converges to a density \(f_t\) which satisfies the linear Boltzmann equation:

Here \(S^1_- =S^1_-(v) = \{\omega \in S^1\;\vert \; v\cdot \omega <0 \}\) and \(v'= v-2(\omega ,v)\omega \). Spohn has proven a related, and more general, result in [27]. Both the results by Gallavotti and Spohn concern the annealed setting. Boldrighini, Bunimovich and Sinai [1] proved the same result in the quenched setting, i.e. taking the limit in (6) for a typical realization of \({\mathcal X}\), and not for the expectation over \({\mathcal X}\).

On the other hand, it is also known that when \({\mathcal X}=\mathbb {Z}^2\) (or, for that matter, many other regular point sets, such as quasicrystals), the Lorentz process is not Markovian in the limit and therefore (6) does not hold, see [2, 11]. For a periodic distribution of scatterers, in dimension two and higher, Marklof and Strömbergsson have proven that there is a limiting kinetic equation in an enlarged phase space [16, 17]. Caglioti and Golse [5] obtained similar results, in dimension two only, using different methods.

Marklof and Strömbergsson have studied this problem in several papers [18,19,20,21]. Working in the quenched setting, they present in [22] a very general theorem concerning the Boltzmann-Grad limit of the Lorentz process, giving a concise set of conditions on a point set \({\mathcal X}\) under which the Lorentz process \(T^t_{{\mathcal X}_{\epsilon },\epsilon }(x,v)\) converges to a random flight process. Their theorem and its relation to the results of this paper are discussed in more detail in Sect. 2 below.

The problem studied in this paper is the following: Let \({\mathcal X}\) be the periodic point set that has one point at the center of each cell of the Euclidean lattice, and let \({\mathcal Y}_{\epsilon }\) be a random point set that also has one point in each lattice cell, but in a random position. We assume that \({\mathcal Y}_{\epsilon }\) converges to \({\mathcal X}\) in the sense that all points of \({\mathcal Y}_{\epsilon }\) converge to the corresponding points of \({\mathcal X}\) when \(\epsilon \rightarrow 0\), uniformly over \({\mathcal X}\). This convergence is thus assumed to take place at the microscopic level. To study this in the Boltzmann-Grad limit, we set

We are then interested in comparing the limits of the corresponding Lorentz processes, \(T_{{\mathcal Y}_{\epsilon ,\epsilon }}^t\) and \(T_{{\mathcal X}_{\epsilon }}^t\), assuming that the obstacle radius is \(\epsilon \). The main result of the paper is the construction of a family of point sets \({\mathcal Y}_{\epsilon }\) that converges to the periodic distribution, and yet the Lorentz process \(T_{{\mathcal Y}_{\epsilon ,\epsilon }}^t(x,v)\) converges to the free flight process generated by the linear Boltzmann equation (6), contrary to the limit of \(T_{{\mathcal X}_{\epsilon }}^t(x,v)\). It is in a sense a non-stability result for the periodic Lorentz gas, and while not proven in this paper it seems very likely that if \({\mathcal X}\) is a Poisson process with constant intensity, (almost) any approximation \({\mathcal Y}_{\epsilon }\) would result in convergence of the two Lorentz processes to the same limit. The proof follows quite closely the construction in [6, 26], and consists in constructing a third process, \({\widetilde{T}}_{{\mathcal Y}_{\epsilon ,\epsilon }}^t\) which can be proven to be path-wise close to the free flight process, and also to the Lorentz process. The full statement of the result, together with the main steps of the proof are given in Sect. 2. Section 3 gives the somewhat technical proof that \({\widetilde{T}}_{{\mathcal Y}_{\epsilon ,\epsilon }}^t\) converges to the Boltzmann process, and Sect. 4 contains a proof that the probability that an orbit of \(T_{{\mathcal Y}_{\epsilon ,\epsilon }}^t\) crosses itself near an obstacle is negligible in the limit as \(\epsilon \rightarrow 0\), which is then used to prove that \({\widetilde{T}}_{{\mathcal Y}_{\epsilon ,\epsilon }}^t\) and \(T_{{\mathcal Y}_{\epsilon ,\epsilon }}^t\) with large probability are path-wise close.

The scaling studied here for the Lorentz process is not the only one studied in the literature. A more challenging problem is the long time limit, where the process is studied over a time interval \([0,t_\epsilon [\), with \(t_\epsilon \rightarrow \infty \) when \(\epsilon \rightarrow 0\). Recent results of this kind have been obtained in [15]. It would be interesting to try to adapt the techniques in [15] to the present situation, but we leave that to a future study. Marklof and Tóth [23] prove a superdiffusive central limit theorem for the displacement of a particle at a finite time t.

In a different direction, there are many results concerning the ergodic properties of the Lorentz gas with a fixed configuration of scatterers, as opposed to the small scatterer limit that is the setting of the present work. Early results are due to Bunimovich and Sinai [3, 4], and a recent example is [25]. Typically these works deal with periodic configurations of scatterers, but there are also results on non-periodic configurations, for example by Lenci and coworkers, see [12, 13].

2 The Main Result and the Principal Steps of Its Proof

Let \({\mathcal X}=\mathbb {Z}^2\) and define \({\mathcal Y}_{\epsilon }\) as a perturbation of \({\mathcal X}\) in the following way: Let \(\phi \) be a rotationally symmetric probability density supported in \(\vert x\vert < 1\), fix \(\nu \in ]1/2,1[\), set

and let

Thus each obstacle has its center in a disk of radius \(\epsilon ^{1-\nu }\) centered at an integer coordinate, (j, k). This disk will be called the obstacle patch below. The obstacle itself reaches at most a distance \(\epsilon ^{1-\nu } + \epsilon ^{1/2}\) from the same integer coordinate; this larger disk will be called an obstacle range below. The ratio of the obstacle range and the support of the center distribution is thus \(1+\epsilon ^{\nu -1/2}\), which converges to 1 when \(\epsilon \rightarrow 0\), and to simplify some notation the radius of the obstacle patch will be used instead of the radius of the obstacle range, and the difference will be accounted for with a constant in the estimates. In order to avoid cumbersome notation, the parameter \(\nu \) is not indicated in the symbol \({\mathcal Y}_{\epsilon }\), but it is important to remember that the obstacle distribution also depends on \(\nu \).
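A minimal sketch of how such a configuration might be sampled in microscopic coordinates. Since the defining formulas are not reproduced here, it assumes that the center in cell (j, k) is \((j,k)+\epsilon ^{1-\nu }\xi _{j,k}\) with independent \(\xi _{j,k}\) of density \(\phi \); for illustration \(\phi \) is taken uniform on the unit disk, which is rotationally symmetric as required. The function names are hypothetical. The second function evaluates the ratio of the obstacle range to the obstacle patch, \((\epsilon ^{1-\nu }+\epsilon ^{1/2})/\epsilon ^{1-\nu } = 1+\epsilon ^{\nu -1/2}\).

```python
import math
import random


def sample_perturbed_lattice(eps, nu, n, rng):
    """Hypothetical sampler for the cells 0 <= j, k < n (microscopic coordinates):
    centre = (j, k) + eps**(1 - nu) * xi, with xi uniform on the unit disk,
    one example of a rotationally symmetric density phi."""
    patch = eps ** (1 - nu)                     # radius of the obstacle patch
    centres = {}
    for j in range(n):
        for k in range(n):
            rho = math.sqrt(rng.random())       # sqrt(U) gives a uniform point in the disk
            ang = 2 * math.pi * rng.random()
            centres[(j, k)] = (j + patch * rho * math.cos(ang),
                               k + patch * rho * math.sin(ang))
    return centres


def range_to_patch_ratio(eps, nu):
    """Radius of the obstacle range divided by the radius of the obstacle patch."""
    return (eps ** (1 - nu) + math.sqrt(eps)) / eps ** (1 - nu)


rng = random.Random(0)
eps, nu = 1e-8, 0.75
centres = sample_perturbed_lattice(eps, nu, 20, rng)
max_disp = max(math.hypot(px - j, py - k) for (j, k), (px, py) in centres.items())
print(max_disp, eps ** (1 - nu))   # every centre lies inside its patch

for e in (1e-4, 1e-8, 1e-12):
    print(e, range_to_patch_ratio(e, nu), 1 + e ** (nu - 0.5))   # both columns agree
```

For \(\nu =3/4\) the ratio approaches 1 like \(\epsilon ^{1/4}\), which is why the patch radius can be used in place of the range radius up to a constant.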

Clearly \({\mathcal Y}_{\epsilon }\) converges in law to the periodic distribution in \(\mathbb {R}^2\). Nevertheless we have the following theorem:

Theorem 2.1

Fix \(\nu \in ]1/2,1[\) and let \(T_{{\mathcal Y}_{\epsilon ,\epsilon }}^t(x,v)\) be the Lorentz process obtained by placing a circular obstacle of radius \(\epsilon \) at each point of \({\mathcal Y}_{\epsilon ,\epsilon }\). Let \(\bar{t}>0\), and let \(f_0(x,v)\) be a probability density in \(\mathbb {R}^2\times S^1\). Define \(f_{\epsilon }(x,v,t)\) as the function such that for all \(t\in [0, \bar{t}]\) and all bounded functions \(g\in C(\mathbb {R}^2\times S^1)\),

where \(T_{{\mathcal Y}_{\epsilon ,\epsilon }}^t(x,v)\) is defined in Eq. (3). Then there is a density \(f(x,v,t)\in C\left( [0,\bar{t}],L^1(\mathbb {R}^2\times S^1)\right) \) such that for all \(t\le \bar{t}\), \(f_\epsilon (x,v,t)\rightarrow f(x,v,t)\) in \(L^1(\mathbb {R}^2\times S^1)\) when \(\epsilon \rightarrow 0\), and such that f(x, v, t) satisfies the linear Boltzmann equation

The constant \(\kappa \) depends on \(\phi \), and \(S^1_-\) and \(v'\) are defined as in Eq. (7).

Remark 2.2

To simplify notation, point particles are allowed to start inside obstacles, and to cross the obstacle boundary from the inside without any change of velocity.

Both the statement in this theorem and the proof are very similar to the main results of [6, 26], but the distribution of scatterers is quite different, and this leads to new questions on the relation between limits of the scatterer distribution and limits of the Lorentz process. In those papers the point processes \({\mathcal Y}_{\epsilon }\) are constructed as the thinning of a periodic point set:

Here it is clear that this \({\mathcal Y}_{\epsilon }\) converges in law to the Poisson process with intensity one, and therefore it is perhaps not surprising that the limit of the Lorentz process is the same as for the Lorentz process generated by a Poisson distribution of the obstacles. Theorem 2.1 in the present paper states that, in some cases, the limit of the Lorentz process is the free flight process of the Boltzmann equation even if the limiting obstacle density is periodic, and raises the question of which point processes \({\mathcal X}\) are stable under perturbation when it comes to the low density limit of the corresponding Lorentz processes.

Some understanding of this result can be drawn from the very heuristic argument used to derive the scaling of the Boltzmann–Grad limit: if a point particle is to move a distance L without hitting an obstacle of size \(\epsilon \), a cylinder of radius \(\epsilon \) and length \(L=\mathcal {O}(1)\) around the particle path must be free from obstacle centers. Equivalently, for any direction v, each obstacle is the origin of a cylinder of forbidden initial points \(x_0\) for trajectories starting at \(x_0\) in the direction of v with a free path of at least L. In the two-dimensional case the cylinders are simply strips of width \(2\epsilon \), and one finds that in order to have a mean free path of order one, one needs a density of obstacles of order \(\epsilon ^{-1}\). If the obstacle centers are distributed one per lattice cell, as in our setting, then the lattice cell must be of order \(\epsilon ^{1/2}\), which motivates the scaling in (10). However, the heuristic argument indicates that one needs to consider the point process at different scales along the path of a point particle and orthogonal to the path. This is expressed in a very precise form in [22] as the condition \([{\mathrm P2}]\) on the particle distribution \({\mathcal X}\). In a simplified form, restricted to our two-dimensional case, it says the following: Writing points \({x} \in {\mathcal X}\) as row vectors, let R(v) be the orthogonal matrix that rotates the coordinate system so that \(v\in S^1\) is in the direction of the first coordinate. Let \(D_{\epsilon }\) be the diagonal matrix with entries \((\epsilon ^{1/2},\epsilon ^{-1/2})\). Let \(\lambda (v)\) be a probability density on \(S^1\), and let v be a random vector with distribution \(\lambda \). For any fixed \({ x }\in {\mathcal X}\) (possibly excluding a small fraction of points of \({\mathcal X}\)) consider the point set

This is a random point set, and the assumption \([{\mathrm P2}]\) is that \(\Xi _{\epsilon }\) converges in distribution to a point process \(\Xi \), independent of x and \(\lambda \). Intuitively this says that, starting from any point in \({\mathcal X}\), the point set looks the same if it is expanded by a factor \(\epsilon ^{-1/2}\) in the direction orthogonal to v, to make the obstacle size equal to \(\mathcal {O}(1)\) in that direction, and compressed by a factor \(\epsilon ^{1/2}\) in the direction along v. If \({\mathcal X}\) is a Poisson process with intensity one, then so is \(\Xi _{\epsilon }\) for all \(\epsilon >0\), and although this is not proven here, it seems likely that if \(\Xi _{\epsilon }\) is defined starting with \({\mathcal Y}_{\epsilon }\) as defined in (8), then \(\Xi \) will also be a Poisson process. This is due to the fact that when \(\nu >1/2\), the points are randomly spread out over a distance \(\epsilon ^{1-\nu }\), which is much larger than the width of the strip. Figure 2 shows an example of this: to the left there is a part of the perturbed periodic point set, with \(\epsilon ^{1-\nu }\sim 10^{-4}\), so that by eye it is difficult to distinguish from a periodic distribution. The middle figure shows a realization of \(\Xi _{\epsilon }\) for a random choice of v and with \(\epsilon \sim 10^{-8}\), to be compared with the image to the right, which is the same \(\Xi _{\epsilon }\) but starting from a periodic \({\mathcal X}\).

Fig. 2 Left: A perturbed periodic distribution. Middle: The corresponding \(\Xi _{\epsilon }\) as defined by Eq. (14). Right: \(\Xi _{\epsilon }\) computed from a periodic distribution. In all cases, the obstacle radius \(\sqrt{\epsilon }\) is \(10^{-4}\) and the diameter of the obstacle patch is \(\mathcal {O}(10^{-2})\). The direction v is drawn from the uniform distribution on \(S^1\).
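Since the defining formula (14) is not reproduced here, the following sketch assumes that \(\Xi _{\epsilon }\) is the image of the point set under \(y\mapsto (y-x)R(v)D_{\epsilon }\), in the row-vector convention above, with R(v) the rotation taking v to the first coordinate direction. Because R(v) is a rotation and \(\det D_{\epsilon }=1\), this map preserves area, so a Poisson process of intensity one is mapped to a Poisson process of intensity one. The function name `xi_transform` is hypothetical.

```python
import math


def xi_transform(points, x, v, eps):
    """Assumed form of the rescaling: y -> (y - x) R(v) D_eps, with row vectors,
    R(v) the rotation sending v to e1 and D_eps = diag(eps**0.5, eps**-0.5)."""
    c, s = v
    out = []
    for y1, y2 in points:
        d1, d2 = y1 - x[0], y2 - x[1]
        along = d1 * c + d2 * s        # component along v
        across = -d1 * s + d2 * c      # component orthogonal to v
        out.append((math.sqrt(eps) * along, across / math.sqrt(eps)))
    return out


eps = 1e-4
beta = 0.3
v = (math.cos(beta), math.sin(beta))
v_perp = (-math.sin(beta), math.cos(beta))
x = (2.0, 5.0)
images = xi_transform([(x[0] + v[0], x[1] + v[1]),
                       (x[0] + v_perp[0], x[1] + v_perp[1])], x, v, eps)
print(images)   # approximately [(0.01, 0.0), (0.0, 100.0)]
```

A unit step along v shrinks to length \(\epsilon ^{1/2}\), while a unit step across v grows to \(\epsilon ^{-1/2}\); applied to a window of a lattice or of a perturbed lattice, the same function produces pictures of the kind shown in Fig. 2.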

If \(\nu <1/2\), the points in \({\mathcal Y}_{\epsilon }\) are spread out over a patch that remains small also after the rescaling with \(D_{\epsilon }\), and therefore one would expect exactly the same behaviour in the limit as for the strictly periodic distribution \({\mathcal X}\). When \(\nu =1/2\) the centers of the scatterers are distributed in a ball essentially of the same size as the obstacle. In Fig. 2 one would then see an image like the one to the right, but with each point spread over a narrow ellipse, of extent of order one in the vertical direction and of order \(\epsilon \) in the horizontal direction. As proven in [24], the distribution of free path lengths is then asymptotically the same as in the periodic case, and therefore the particle density \(f_{\epsilon }\) would not converge to the solution of a linear Boltzmann equation. However, the distribution of scattering angles could be quite different from the periodic case, and it could be of interest to study this in more detail, in particular in the long time limit as in [15].

The general results from [22] state that when \([\hbox {D2}]\) and some other conditions are satisfied, the Lorentz process converges to a free flight process, which in general is not Markovian, except in a larger phase space. Only when the distribution of free path lengths is exponential can one derive a linear Boltzmann equation like Eq. (12).

Before presenting the proof of Theorem 2.1, two more remarks are relevant. First, the main theorem in [22] is very general, and it seems likely that the results presented here, as well as the results from [6, 26], could be deduced from it. However, the results in [22] are proven under the hypothesis \([{\mathrm P2}]\) described above, stating that the \(\Xi _{\epsilon }\) from Eq. (14) converge for a fixed \({\mathcal X}\); here we have a family of distributions \({\mathcal Y}_{\epsilon }\) depending on \(\epsilon \). The proof in [22] seems to be robust enough to cover also this case, but this would need to be checked. The second remark concerns the distinction between the quenched and annealed limits. In [1, 22], quenched means that one fixes, once and for all, a realization of the point process \({\mathcal X}\), and the limits are obtained from a rescaling of this realization. In this paper the distribution of scatterers depends on \(\epsilon \) also at a microscopic level, and so one cannot simply take one fixed realization of \({\mathcal Y}_{\epsilon }\) and rescale. With \({\mathcal Y}_{\epsilon }\) defined as in (9), a natural way of viewing a quenched limit would be to take one fixed realization of the \(\xi _{i,j}\), but with \({\mathcal Y}_{\epsilon }\) defined as in (13) there seems to be no unique way of defining a quenched limit. One could possibly define the \(\eta _{i,j}\) as (independent) \(\{0,1\}\)-valued random processes \(\epsilon \mapsto \eta _{i,j,\epsilon }\) with \(\epsilon \) as a decreasing parameter, and with a transition rate \(1\rightarrow 0\) chosen so as to obtain the correct density of \({\mathcal Y}_{\epsilon }\) for all values of \(\epsilon \). One could then define the quenched limit as the one obtained from one fixed realization of this process. The results in this paper concern the annealed limit, and then the difference is not important.

In the proof of Theorem 2.1 we consider three processes: the Lorentz process \(T_{{\mathcal Y}_{\epsilon ,\epsilon }}^t(x,v)\), the free flight process \(T_B^t(x,v)\) generated by the Boltzmann equation (12), and an auxiliary Markovian Lorentz process, \({\widetilde{T}}_{{\mathcal Y}_{\epsilon ,\epsilon }}^t(x,v)\).

The Boltzmann process is the random flight process \((x(t),v(t))=T^t_B(x,v) \) generated by Eq. (12). Let \(0=t_0<t_1<\cdots <t_n<t\) be a sequence of times generated by independent, exponentially distributed increments \(t_j-t_{j-1}\) with intensity 2, let \(v_0=v\) and define \(v_j=v_{j-1}-2 (\omega _j\cdot v_{j-1})\,\omega _j\), where the \(\omega _j\in S^1\) are independent and uniformly distributed. Finally set

\[ T^t_B(x,v) = \Big( x+\sum _{j=1}^{n}(t_j-t_{j-1})\,v_{j-1}+(t-t_n)\,v_n ,\; v_n\Big) . \tag{15} \]

Of course the number n in the sum is then Poisson distributed, with mean 2t. The solution of Eq. (12) may be defined weakly by

The function g(x, v, t) can then be expanded as a sum of terms, each representing the paths with a fixed number of jumps:

with
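A direct Monte Carlo sketch of the random flight described above (an illustration, not code from [6, 26]; the function name `boltzmann_path` is hypothetical): waiting times are exponential with intensity 2, and at each jump the velocity is reflected in a uniformly distributed \(\omega \in S^1\). Since the reflection is unchanged under \(\omega \mapsto -\omega \), sampling \(\omega \) on all of \(S^1\) gives the same outgoing velocities as sampling on \(S^1_-\). Two elementary consequences are checked: the number of jumps in [0, t] is Poisson with mean 2t, and the probability of no jump is \(e^{-2t}\). Assuming the weak formulation uses \(g(x,v,t)=\mathbb {E}[g(T^t_B(x,v))]\), the zero-jump term of the expansion is then \(e^{-2t}g(x+tv,v)\).

```python
import math
import random


def boltzmann_path(x, v, t, rng, rate=2.0):
    """Sample T_B^t(x, v) by direct simulation.

    Waiting times are exponential with the given intensity; at each jump the
    velocity becomes v - 2 (omega . v) omega with omega uniform on S^1.
    Returns (position, velocity, number of jumps in [0, t])."""
    px, py = x
    vx, vy = v
    s, n = 0.0, 0
    while True:
        dt = rng.expovariate(rate)
        if s + dt > t:                                  # no further jump before t
            return (px + (t - s) * vx, py + (t - s) * vy), (vx, vy), n
        px, py, s = px + dt * vx, py + dt * vy, s + dt  # free flight up to t_j
        ang = 2 * math.pi * rng.random()
        ox, oy = math.cos(ang), math.sin(ang)
        dot = vx * ox + vy * oy
        vx, vy = vx - 2 * dot * ox, vy - 2 * dot * oy   # reflection keeps |v| = 1
        n += 1


rng = random.Random(2)
t = 0.5
runs = [boltzmann_path((0.0, 0.0), (1.0, 0.0), t, rng) for _ in range(20000)]
frac_no_jump = sum(1 for _, _, n in runs if n == 0) / len(runs)
mean_jumps = sum(n for _, _, n in runs) / len(runs)
print(frac_no_jump, math.exp(-2 * t))   # weight of the zero-jump term
print(mean_jumps, 2 * t)                # Poisson mean of the number of jumps
```

Grouping the sampled runs by their number of jumps gives Monte Carlo estimates of the individual terms in the expansion by number of jumps.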

The Markovian Lorentz process here is similar to the corresponding process in [6, 26], in that re-collisions, i.e. events where a particle trajectory meets the same obstacle a second time, are eliminated. The construction is explained in Fig. 3. The thin red circles mark the support of the distribution of the obstacle center in each cell, and the blue circle indicates the reach of an obstacle, the obstacle range. Each cell contains an obstacle, fixed from the start in the Lorentz model but changing in the Markovian Lorentz model. Note that both the obstacle radius and the obstacle support are very small, and decrease to zero with \(\epsilon \), but they are drawn large here for clarity. The path meets the same obstacle patch twice in the boxed cell. In the Lorentz case, the orbit simply traverses the cell the second time, because it misses the obstacle. In the Markovian case the obstacle is at a new, random position, drawn in blue, the second time the orbit enters the support, and there is a positive probability that the path collides with the obstacle, as shown with the blue trajectory. In both cases the trajectory is well defined, but the probabilities of realizing a given orbit are different.

The process can be defined as in Eq. (16), with the function g(x, v, t) replaced by

where \({\tilde{g}}_{\epsilon ,n}(x,v,t)\) is the contribution of trajectories having exactly n velocity jumps in the interval [0, t]. All these terms can be computed rather explicitly by counting the number of times a trajectory crosses the obstacle range of one cell.

The operators \(V^t\) and \(\tilde{V}^t_{\epsilon }\) are both well-defined operators \(C(\mathbb {R}^2\times S^1)\rightarrow C(\mathbb {R}^2\times S^1)\), and contractions in \(L^{\infty }\), because for the Boltzmann process as well as for the Markovian Lorentz process there is, with probability one, a finite number of velocity jumps in a finite time interval, and for each n the terms in Eqs. (18) and (20) are continuous. Because \(v\in S^1\), i.e. the particles move with unit speed, both \(V^t\) and \(\tilde{V}^t_{\epsilon }\) are maps \(C_0(\mathbb {R}^2\times S^1) \rightarrow C_0(\mathbb {R}^2\times S^1) \), i.e. functions of compact support are mapped to functions of compact support, but this latter property is not needed here.

To simplify notation, these three processes are hereafter denoted \(z_{\epsilon }(t)\), z(t) and \(\tilde{z}_{\epsilon }(t)\). As described in [6, 26], all these processes belong with probability one to the Skorokhod space \(D_{[0,\bar{t}]}(\mathbb {R}^2\times S^1)\) of càdlàg functions from \([0,\bar{t}]\) to \(\mathbb {R}^2\times S^1\), equipped with the distance

where

Any process z with sample paths in \(D_{[0,\bar{t}]}(\mathbb {R}^2\times S^1)\) induces a measure \(\mu \) on \(D_{[0,\bar{t}]}(\mathbb {R}^2\times S^1)\), which is first defined on cylindrical continuous functions F, i.e. functions \(F\in C(D_{[0,\bar{t}]}(\mathbb {R}^2\times S^1))\) of the form \(F(z) = F_n(z(t_1),z(t_2),\ldots ,z(t_n))\) where \(F_n\in C( (\mathbb {R}^2\times S^1)^n)\) and \(0\le t_1<t_2<\cdots <t_n\le \bar{t}\). For such functions \(\mu \) is defined by

where \(P_{t_n,\ldots ,t_1,0}(z_1,z_2,\ldots ,z_n\vert z_0)\) is the joint probability density of \(z(t_1),z(t_2),\ldots ,z(t_n)\) given the starting point \(z_0\). For a Markov process, such as the Boltzmann process, this is

where \(P_{t_j,t_{j-1}}(z_j \vert z_{j-1})\) is the transition probability of going from state \(z_{j-1}\) to state \(z_j\) in the time interval from \(t_{j-1}\) to \(t_j\). Then by a density argument the measure \(\mu \) is defined for all \(F\in C(D_{[0,\bar{t}]}(\mathbb {R}^2\times S^1))\).

Proof of Theorem 2.1

Let \(\mu \), \(\mu _{\epsilon }\) and \(\tilde{\mu }_{\epsilon }\) be the measures induced by z(t), \(z_{\epsilon }(t)\) and \(\tilde{z}_{\epsilon }(t)\). Just like in [26], one may prove that for every F

The argument uses a theorem from Gikhman and Skorokhod (1974) [10], and relies on an equicontinuity condition and on the convergence of the marginal distributions of \(\tilde{z}_{\epsilon }(t)\). Both are consequences of Proposition 3.5, which shows that the number of jumps of \(\tilde{z}_{\epsilon }\) in small time intervals is not too large, and of Theorem 3.1, which states that the one-dimensional marginals of \(\tilde{z}_{\epsilon }(t)\) converge to the marginals of z(t). \(\square \)

The next step is to prove that \((\mu _{\epsilon }-\tilde{\mu }_{\epsilon } )\rightharpoonup 0\) when \(\epsilon \rightarrow 0\), i.e.

when \(\epsilon \rightarrow 0\), and this is done by a coupling argument. Given that \(z_{\epsilon }(0)=\tilde{z}_{\epsilon }(0)\), the two processes can be coupled so that they coincide up to the first time \(t_*\) at which the common path \(z_{\epsilon }(t)=\tilde{z}_{\epsilon }(t)\) returns to an obstacle range it has already visited. At that time, for the process \(z_{\epsilon }(t)\) the position of the obstacle within its support is already fixed, whereas for the process \(\tilde{z}_{\epsilon }(t)\) a new random position of the obstacle is chosen when the trajectory arrives. Hence

where \(K_{\epsilon }\subset D_{[0,\bar{t}]}(\mathbb {R}^2\times S^1)\) is the set of trajectories that contain at least one such re-encounter for \(t\le \bar{t}\). Proposition 4.1 states that the right-hand side of (27) converges to zero when \(\epsilon \rightarrow 0\). This, together with (25), implies that

weakly when \(\epsilon \rightarrow 0\), and this concludes the proof of Theorem 2.1. \(\square \)
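The event \(K_{\epsilon }\) used in the coupling argument is purely geometric, and can be tested on a given polygonal path. The following sketch (hypothetical helper names; microscopic coordinates with obstacle patches centered at integer points, and a the radius of the obstacle range) lists, in order, the obstacle ranges visited by the path and reports the first range that is entered a second time. A collision inside a range does not end the visit: consecutive segments meeting the same range at a vertex inside it are counted as one visit.

```python
import math


def sites_near_segment(p, q, a):
    """Integer points (j, k) within distance a of the segment p -> q, ordered along it."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    length2 = dx * dx + dy * dy
    hits = []
    for j in range(math.floor(min(p[0], q[0]) - a), math.ceil(max(p[0], q[0]) + a) + 1):
        for k in range(math.floor(min(p[1], q[1]) - a), math.ceil(max(p[1], q[1]) + a) + 1):
            # parameter of the point of the segment closest to (j, k)
            s = 0.0 if length2 == 0 else ((j - p[0]) * dx + (k - p[1]) * dy) / length2
            s = max(0.0, min(1.0, s))
            if math.hypot(j - (p[0] + s * dx), k - (p[1] + s * dy)) < a:
                hits.append((s, (j, k)))
    return [site for _, site in sorted(hits)]


def first_reencounter(path, a):
    """First obstacle range (labelled by its lattice site) entered a second time
    along the polygonal path, or None. A visit continues across a vertex (a
    collision) when that vertex lies inside the same range."""
    visits = []
    for p, q in zip(path, path[1:]):
        for site in sites_near_segment(p, q, a):
            same_visit = (bool(visits) and visits[-1] == site
                          and math.hypot(p[0] - site[0], p[1] - site[1]) < a)
            if not same_visit:
                visits.append(site)
    seen = set()
    for site in visits:
        if site in seen:
            return site
        seen.add(site)
    return None


a = 0.1
print(first_reencounter([(-0.5, 0.0), (2.5, 0.0)], a))               # straight path
print(first_reencounter([(-0.5, 0.0), (0.95, 0.0), (0.95, 1.5)], a))  # collision inside a range
print(first_reencounter([(-0.5, 0.0), (1.5, 0.0), (-0.5, 0.05)], a))  # returns to the range at (1, 0)
```

The vertices in these toy inputs need not be genuine collisions. In the last example the path leaves the range around (1, 0) and comes back to it, which is exactly the kind of trajectory on which the Lorentz process and the Markovian Lorentz process may differ.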

We end the section with a comment on the propagation of chaos for these processes. For the Boltzmann process z(t) and for the process \(\tilde{z}_{\epsilon }(t)\) it is clear that if a pair of initial conditions \((z^1(0),z^2(0))\) is chosen with joint density \(f_0^1(x^1,v^1) f_0^2(x^2,v^2)\), then the joint densities of \((z^1(t),z^2(t))\) and \((\tilde{z}_{\epsilon }^1(t),\tilde{z}_{\epsilon }^2(t))\) also factorize: a chaotic initial state is propagated by the flow, because the two paths are independent and never interact. The same is not true for \((z_{\epsilon }^1(t),z_{\epsilon }^2(t))\), because there is a positive probability that the two paths meet in the obstacle patch of the same obstacle, which creates correlations. However, the same kind of estimates as those used to prove that the probability of re-encounters for one trajectory becomes negligible when \(\epsilon \rightarrow 0\) can be used to prove that the probability that two different trajectories meet inside an obstacle range also becomes small, and such estimates could be performed for any finite number of trajectories. The calculations are carried out in some detail in Sect. 4, and formulated as Theorem 4.2.

3 The Markovian Lorentz Process

The Markovian process may be described using the underlying periodic structure. Over a time interval [0, t], the particle traverses \(\mathcal {O}\left( \epsilon ^{-1/2}\right) \) lattice cells, and when the path is sufficiently close to the cell center to cross the obstacle range, there is a positive probability that it is reflected by an obstacle. Because the obstacle position is chosen independently each time the trajectory enters a cell, this may all be computed rather explicitly.
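The orders of magnitude behind this description can be summarized in a short heuristic count (a back-of-the-envelope sketch in microscopic units; constants and the dependence on \(\phi \) are ignored). A path of macroscopic length t has microscopic length \(t\epsilon ^{-1/2}\). It crosses obstacle ranges of radius about \(\epsilon ^{1-\nu }\), and in each crossing it hits the obstacle, of radius \(\epsilon ^{1/2}\), with a probability of order \(\epsilon ^{1/2}/\epsilon ^{1-\nu }\):

```latex
\begin{aligned}
\ell_\epsilon &\sim t\,\epsilon^{-1/2}
  && \text{(microscopic path length, i.e. number of cells traversed)}\\
N_\epsilon &\sim \epsilon^{1-\nu}\,\ell_\epsilon = t\,\epsilon^{1/2-\nu} \to \infty
  && \text{(number of obstacle ranges crossed)}\\
p_\epsilon &\sim \epsilon^{1/2}/\epsilon^{1-\nu} = \epsilon^{\nu-1/2} \to 0
  && \text{(probability of a collision in one crossing)}\\
N_\epsilon\, p_\epsilon &\sim t
  && \text{(expected number of collisions in } [0,t])
\end{aligned}
```

Since \(\nu >1/2\), the number of crossings tends to infinity while the collision probability per crossing tends to zero, with a product of order t. In the Markovian process each crossing is an independent trial, and in this regime the number of collisions is approximately Poisson distributed, consistent with the exponential free paths of the Boltzmann process.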

We write

This is the test function evaluated along the path of a point particle. It defines a semigroup \(\widetilde{V}_{\epsilon }^t\) acting on the test function, and the terms in the sum express the contribution to this semigroup from paths with exactly n velocity jumps. Obviously these terms are not semigroups in their own right.

Theorem 3.1

Take \(\nu \in ]1/2,1[\) and let \({\widetilde{T}}_{{\mathcal Y}_{\epsilon ,\epsilon }}^t(x,v)\) be the corresponding Markovian Lorentz process as defined above. Fix \(\bar{t}>0\). For any density \(f_0(x,v)\) in \(\mathbb {R}^2\times S^1\), let \(\tilde{f}_{\epsilon }(x,v,t)\) be the unique function such that

for all \(t\in [0,\bar{t}]\), and any bounded \(g(x,v)\in C(\mathbb {R}^2\times S^1)\). The expectation in (30) is taken with respect to the distribution of \({\mathcal Y}_{\epsilon }\). Then there is a function f(x, v, t) such that for all t

and f(x, v, t) satisfies the linear Boltzmann equation, Eq. (7).

Proof

Take any bounded function \(g\in C_0(\mathbb {R}^2\times S^1)\), and \(t<\bar{t}\). We must prove that

or, equivalently,

The expression in (33) is bounded by

and choosing \(\lambda \) and M large enough, depending on \(f_0\), the first term can be made arbitrarily small, smaller than \(\varepsilon _0/2\), say, where \(\varepsilon _0\) is taken arbitrarily small. It then remains to show that the second term can be made smaller than \(\varepsilon _0/2\) by choosing \(\epsilon \) small enough. Both \(V^t\) and \({\widetilde{V}}_{\epsilon }^t\) are bounded semigroups, and therefore, after dividing the interval [0, t] into N equal sub-intervals, \([j t/N,(j+1) t/N]\) one gets

As noted in [6], the underlying Hamiltonian structure implies that the two semigroups are contractions in \(L^1\cap L^{\infty }\), and therefore it is enough to prove that, for \(N=N_{\epsilon }\) appropriately chosen,

when \(\epsilon \rightarrow 0\). Setting \(N_{\epsilon }=t/{\tau _{\epsilon }}\) for a suitable \({\tau _{\epsilon }}\), we find

Denote the four terms in the right-hand side by \(R_{I}, R_{II}, R_{III}\), and \(R_{IV}\), and set \(r_{\epsilon }=\epsilon ^{(2\nu -1)/2}\left( 1+\log (t/\sqrt{\epsilon })\right) \). From Proposition 3.4 it follows that the first term, accounting for paths with no jump in the given interval, satisfies

which converges to zero with \(\epsilon \) if \(r_{\epsilon }^{1/2}/\tau _{\epsilon }\) does. The second term accounts for paths with exactly one jump, and according to Proposition 3.6,

Here \(\omega (\tau _{\epsilon },g)\) is the modulus of continuity for g. For this term it is enough that \(r_{\epsilon }/\tau _{\epsilon }\) converges to zero, but the rate of convergence may depend on the modulus of continuity of the test function g.

We also have that if \(\tau _{\epsilon } > r_{\epsilon }^{1/2} \)

This follows because for the Boltzmann process, the probability of having two or more jumps in an interval of length \(\tau _{\epsilon }\) is of the order \(\tau _{\epsilon }^2\), and Proposition 3.5 states that the same is true for the Markovian Lorentz process. Therefore the convergence stated in Eq. (32) holds, with a rate that depends on \(f_0\) (but can be controlled by its entropy and moments) and on the modulus of continuity of the test function g. \(\square \)
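The \(\tau _{\epsilon }^2\) bound for the Boltzmann process can be made concrete. Assuming, as the limit \(p_0(x,v,t)\rightarrow e^{-2t}\) in Proposition 3.4 suggests, that the velocity jumps of the limiting process form a Poisson process of rate 2, the probability of two or more jumps in an interval of length \(\tau \) is \(1-e^{-2\tau }(1+2\tau )\le 2\tau ^2\). The helper name below is illustrative only.

```python
import math

RATE = 2.0  # assumed jump rate of the limiting process, read off from p0(x, v, t) -> exp(-2t)

def prob_two_or_more_jumps(tau, rate=RATE):
    """P(N >= 2) for a Poisson process with the given rate on an interval of length tau."""
    x = rate * tau
    return 1.0 - math.exp(-x) * (1.0 + x)

for tau in (1e-1, 1e-2, 1e-3):
    p = prob_two_or_more_jumps(tau)
    # p <= (rate * tau)**2 / 2 = 2 tau^2, and p / tau^2 -> 2 as tau -> 0
    print(f"tau = {tau:g}: P(N >= 2) = {p:.3e}, 2 tau^2 = {2 * tau**2:.3e}, ratio = {p / tau**2:.4f}")
```

The inequality follows from \(h(x)=x^2/2-1+e^{-x}(1+x)\), which vanishes at 0 and has \(h'(x)=x(1-e^{-x})\ge 0\).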

The expressions (35) and (36) imply that it is enough to study the processes in short intervals, and Proposition 3.5 below shows that it is then enough to consider two cases: a particle path starting at \((x,v)\in \mathbb {R}^2\times S^1\), i.e. at position \(x\in \mathbb {R}^2\) with direction v, either moves without changing velocity during the whole interval, or hits an obstacle at some point \(x'\) and continues from there in the new direction \(v'\), but suffers no further collisions. The three propositions used in the proof of Theorem 3.1 all depend on Lemma 3.2 below, where the underlying periodic structure is used to analyze the particle paths in detail up to and just after the first collision with an obstacle. To set the notation for the following results, we consider a path starting from a point x in the direction v. It is sometimes convenient to denote the velocity \(v\in S^1\) by an angle \(\beta \in [0,2\pi [\), and both notations are used below without further comment. When a collision takes place, the new velocity \(v'\) depends on v and on the impact parameter r as shown in Fig. 5, where \(r\in [-1,1]\) when scaled with respect to the obstacle radius. In this scaling, \(dr = \cos (\beta '/2)\,d\beta '/2\), when \(\beta '\) is given as the change of direction.
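The reduction to short intervals rests on the telescoping identity \(A^N-B^N=\sum _{j=0}^{N-1}A^{N-1-j}(A-B)B^j\): when A and B are contractions it gives \(\Vert A^N-B^N\Vert \le N\Vert A-B\Vert \), so N steps of length \(\tau _{\epsilon }=t/N\) cost at most \(t/\tau _{\epsilon }\) times the one-step error. The sketch below checks this for two column-stochastic matrices, which are contractions in \(\ell ^1\); using them as finite-dimensional stand-ins for one step of \(V^t\) and \({\widetilde{V}}_{\epsilon }^t\) is an assumption made only for illustration, and all helper names are hypothetical.

```python
import random

def matmul(a, b):
    """Product of two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def matpow(a, p):
    """a**p by repeated multiplication (p >= 0)."""
    n = len(a)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(p):
        result = matmul(result, a)
    return result

def sub(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def l1_operator_norm(a):
    """Induced l^1 operator norm: the largest absolute column sum."""
    n = len(a)
    return max(sum(abs(a[i][j]) for i in range(n)) for j in range(n))

def random_stochastic(n, rng):
    """Random column-stochastic matrix: nonnegative entries, each column sums to one."""
    cols = []
    for _ in range(n):
        w = [rng.random() for _ in range(n)]
        s = sum(w)
        cols.append([x / s for x in w])
    return [[cols[j][i] for j in range(n)] for i in range(n)]

rng = random.Random(0)
n, N, delta = 5, 20, 0.01
A = random_stochastic(n, rng)   # stand-in for one step of the limit semigroup
P = random_stochastic(n, rng)
# stand-in for one step of the perturbed semigroup; still column-stochastic
B = [[(1 - delta) * A[i][j] + delta * P[i][j] for j in range(n)] for i in range(n)]

lhs = l1_operator_norm(sub(matpow(A, N), matpow(B, N)))
rhs = N * l1_operator_norm(sub(A, B))
print(f"||A^N - B^N|| = {lhs:.3e} <= N ||A - B|| = {rhs:.3e}")
```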

Without loss of generality we may assume that the path is in the upward direction with an angle \(\beta \in [0,\pi /4] \) to the vertical axis as in Fig. 4, because all other cases can be reduced to this one by a finite number of rotations and reflections of the physical domain. Let \(y_1\) be the point where the path first enters a lattice cell through its lower boundary, and let \(y_j= y_1+(j-1) \tan (\beta ) \mod 1 \) be the consecutive points of entry to the lattice cells. Setting \(y_0=-\tan (\beta )/2\), the signed distance between the particle path through the cell and the cell center is \(\rho _j=(y_j-y_0)\cos \beta \). The probability that the path is reflected at the j-th passage depends only on \(\rho _j\), and, given the sequence \((\rho _j)\), the scattering events in the successive cell passages are independent.
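The entry points and signed distances are easy to tabulate. This sketch assumes the microscopic cell \([-1/2,1/2[^2\) centered at the origin and a path with direction \((\sin \beta ,\cos \beta )\); it checks \(\rho _j=(y_j-y_0)\cos \beta \) against the signed distance from the cell center to the line through \((y_j,-1/2)\). The function names are illustrative.

```python
import math

def entry_points(y1, beta, n):
    """Entry points y_1..y_n on the lower cell edge, wrapped to [-1/2, 1/2)."""
    return [((y1 + (j - 1) * math.tan(beta) + 0.5) % 1.0) - 0.5 for j in range(1, n + 1)]

def signed_distances(ys, beta):
    """rho_j = (y_j - y_0) cos(beta) with y_0 = -tan(beta)/2."""
    y0 = -math.tan(beta) / 2
    return [(y - y0) * math.cos(beta) for y in ys]

def line_distance_to_center(y, beta):
    """Signed distance from the origin to the line through (y, -1/2) with direction (sin b, cos b)."""
    px, py = y, -0.5
    dx, dy = math.sin(beta), math.cos(beta)
    # 2D cross product p x d; d is a unit vector, so this is the signed distance
    return px * dy - py * dx

beta, y1 = 0.3, -0.2
ys = entry_points(y1, beta, 6)
rhos = signed_distances(ys, beta)
for y, rho in zip(ys, rhos):
    print(f"y = {y:+.4f}, rho = {rho:+.4f}, check = {line_distance_to_center(y, beta):+.4f}")
```

Only passages with \(\vert \rho _j\vert <\epsilon ^{1-\nu }+\epsilon ^{1/2}\) can lead to a collision.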

Define

The probability that a scattering event takes place in the j-th passage of a lattice cell is a function of the distance \(\rho _j\) from the path to the center of the cell, and is zero unless \(\vert \rho _j\vert <\epsilon ^{1-\nu } + \epsilon ^{1/2}\). This probability will be denoted \(p_j=p_j(x,v)\), and depends only on the initial position x and the direction v. Given that a scattering event takes place, the outcome of this event depends on the scattering parameter, i.e. the distance between the path and the (random) center of the obstacle. Let \(A_{x,v,t}\) be any event that depends on the position x and direction v of a path segment of length t. Then the probability that \(A=A_{x',v',t'}\) is realized after the first collision can be computed as

Here \(\mathbb {P}[ A_{x',v',t'} \; \vert \; j\;]\) denotes the conditional probability of the event \(A_{x',v',t'}\) given that the collision takes place when the particle crosses cell number j along the path; this also depends on x and v. The time when the path enters cell number j is denoted \(t_{j-}\), and the terms in the sum contain a factor \(p_0(x,v,t_{j-})\) for the probability that no collision has taken place earlier. Similarly \(t_{j+}\) denotes the time when the trajectory leaves the cell; this is a random number, but we always have \(0< t_{j+}-t_{j-} < 2\sqrt{\epsilon }\).

In (42), \((x',v',t')\) are random, so \(\mathbb {P}[A]\) also involves an integral over these variables, and the notation \(\mathbb {P}[ A_{x',v',t'} \; \vert \; j]\) is intended to include this integration. The expectation \(\mathbb {E}[\psi ]\) of a function \(\psi \) is computed in the same way, with \(\mathbb {P}[ A_{x',v',t'} \; \vert \; j]\) replaced by \(\mathbb {E}[ \psi _{x',v',t'} \; \vert \; j]\).

Given the density \(\phi \) for the position of the obstacle define

where \((x_1,x_2)\) is an arbitrary coordinate system in \(\mathbb {R}^2\). Because \(\phi \) is assumed to be rotationally symmetric, the resulting function \(\varphi _0\) is independent of the orientation of this coordinate system. It is a smooth probability density with support in \([-1,1]\), and then \(\varphi _{\epsilon }(r) \equiv \epsilon ^{\nu -1} \varphi _0( \epsilon ^{\nu -1}r)\) is also a smooth probability density with support in \(\vert r\vert \le \epsilon ^{1-\nu }\).
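A small numerical illustration, assuming that Eq. (43) defines \(\varphi _0\) as the one-dimensional marginal \(\varphi _0(x_1)=\int \phi _0(x_1,x_2)\,dx_2\) of the rotationally symmetric density \(\phi _0\). The bump \(\phi _0(x)\propto \exp (-1/(1-\vert x\vert ^2))\) is a hypothetical smooth choice supported in the unit ball, not taken from the paper. The sketch checks that \(\varphi _0\) is a probability density and that the rescaled \(\varphi _{\epsilon }\), supported in \(\vert r\vert \le \epsilon ^{1-\nu }\), keeps unit mass.

```python
import math

def bump(s2):
    """Unnormalized radial bump exp(-1/(1 - |x|^2)) on the unit disk, as a function of |x|^2."""
    return math.exp(-1.0 / (1.0 - s2)) if s2 < 1.0 else 0.0

def midpoint(f, a, b, n):
    """Composite midpoint rule for the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))

# normalization constant: integral over the unit disk in polar coordinates
Z = 2 * math.pi * midpoint(lambda s: bump(s * s) * s, 0.0, 1.0, 2000)

def phi0(x1, x2):
    """Hypothetical rotationally symmetric probability density supported in the unit ball."""
    return bump(x1 * x1 + x2 * x2) / Z

def varphi0(u, n=400):
    """Marginal density: integrate phi0 over the second coordinate."""
    if abs(u) >= 1.0:
        return 0.0
    h = math.sqrt(1.0 - u * u)
    return midpoint(lambda w: phi0(u, w), -h, h, n)

def varphi_eps(r, eps, nu):
    """Rescaled marginal eps^(nu-1) varphi0(eps^(nu-1) r), supported in |r| <= eps^(1-nu)."""
    scale = eps ** (nu - 1.0)
    return scale * varphi0(scale * r)

eps, nu = 1e-4, 0.75
radius = eps ** (1.0 - nu)
mass0 = midpoint(varphi0, -1.0, 1.0, 400)
mass_eps = midpoint(lambda r: varphi_eps(r, eps, nu), -radius, radius, 400)
print(f"mass of varphi0 = {mass0:.6f}, mass of varphi_eps = {mass_eps:.6f}")
```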

For each k the obstacle center is chosen randomly inside a circle of radius \(\epsilon ^{1-\nu }\) around the cell center, and one can then compute the probability that the path is reflected at step k.

Lemma 3.2

Consider a lattice cell in the microscopic scale, so that the cell side has length one, and assume that there is an obstacle of radius \(\epsilon ^{1/2}\) with center distributed with a density of the form \(\phi (x)=\epsilon ^{2\nu -2} \phi _0( \epsilon ^{\nu -1}x )\), where \(\phi _0\in C^{\infty }(\mathbb {R}^2) \) has support in the unit ball of \(\mathbb {R}^2\). Let \(\varphi _{\epsilon }\) be defined as in Eq. (43) and rescaled with \(\epsilon \). Let \(\psi _0\in L^1(\mathbb {R})\) with \(\psi _0(x)=0\) for \(\vert x\vert >1\), and set \(\psi _{\epsilon }(x) = \psi _0(x/\sqrt{\epsilon })\). Consider a particle path traversing the cell n times with an angle \(\beta \in [0,\pi /4]\) to the vertical cell sides, and let \(y_1,\ldots ,y_n\) be the consecutive entry points on the lower edge of the cell. We have \(y_1\in [-1/2,1/2[\) and \(y_k=-1/2 + ( y_1 + 1/2 + (k-1)\tan (\beta )) \mod \; 1 \). Then

satisfies

The remainder term \(R_a\) also depends on n and \(\epsilon \). It is bounded by a function \(\bar{R}_A(\beta )\), which depends on \(\phi \), n and \(\epsilon \) but not on \(\psi \), and which satisfies

Proof

The result is a small extension of the corresponding proposition in [26], and the proof closely follows that paper. For any fixed \(\beta \), the expression \(p[\psi _{\epsilon }](y,\beta )\) has support in an interval of length \(2( \epsilon ^{1-\nu } + \epsilon ^{1/2})/\cos (\beta )\). Extend this function to a one-periodic function of y. For simplicity we assume that n is odd, and set \(n=2m+1\). This is no real restriction: when n is large and even, the single extra term in the sum makes only a very small contribution. Then

In this expression \({\hat{p}}_k\) is the k-th Fourier coefficient of the periodic function \(p[\psi _{\epsilon }](\cdot ,\beta )\), and the sum to the right can be evaluated as the Dirichlet kernel of order m with argument \(k\tan (\beta )\):

Therefore

where

The Fourier coefficients \({\hat{p}}_k\) can be computed as the Fourier transform of \(p[\psi _{\epsilon }](\cdot ,\beta )\) evaluated at the integer points k,

Here \(\widehat{\varphi }_0\) and \(\widehat{\psi }_0\) are the Fourier transforms of \(\varphi _0\) and \(\psi _0\). Note the factor \(\sqrt{\epsilon }\), which arises because \(\psi _{\epsilon }\) is not rescaled to preserve the \(L^1\)-norm. We have

and because \(\psi _0\in L^1\) and \(\varphi _0\) is smooth, the coefficients \({\hat{p}}_k\) decay rapidly: for any \(a>0\) there is a constant \(c_a\) depending on \(\varphi _0\) such that

Because \(\beta \in [0,\pi /4]\), so that \(\cos \beta \) is bounded away from zero, the dependence on \(\beta \) can be absorbed into the constant \(c_a\). Let

The remainder term \(R_a=R_a(n,\epsilon , y_1,\beta )\) is therefore bounded by

Using a standard estimate of the \(L^1\)-norm of the Dirichlet kernel,

Therefore these integrals are independent of k, and so

because the sum is bounded by \(C\int _{0}^{\infty } (1+ (\epsilon ^{1-\nu }x)^a)^{-1}\,dx\), and replacing \(\log (m)\) by \(\log (n)\) only modifies the constant. This concludes the proof. \(\square \)
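The two facts about the Dirichlet kernel used in this proof can be checked numerically: the identity \(\sum _{j=-m}^{m}e^{2\pi i j x}=D_m(x)=\sin ((2m+1)\pi x)/\sin (\pi x)\), applied with \(x=k\tan \beta \), and the logarithmic growth of \(\Vert D_m\Vert _{L^1(0,1)}\) (the Lebesgue constants, asymptotically \((4/\pi ^2)\log m\)). A minimal sketch with illustrative helper names:

```python
import cmath
import math

def dirichlet_sum(x, m):
    """Direct evaluation of sum_{j=-m}^{m} exp(2 pi i j x)."""
    return sum(cmath.exp(2j * math.pi * k * x) for k in range(-m, m + 1))

def dirichlet_kernel(x, m):
    """Closed form D_m(x) = sin((2m+1) pi x) / sin(pi x), equal to 2m+1 at integers."""
    s = math.sin(math.pi * x)
    if abs(s) < 1e-14:
        return float(2 * m + 1)
    return math.sin((2 * m + 1) * math.pi * x) / s

def dirichlet_l1_norm(m, points_per_period=200):
    """Midpoint-rule approximation of the integral of |D_m| over one period [0, 1]."""
    n = points_per_period * (2 * m + 1)
    h = 1.0 / n
    return h * sum(abs(dirichlet_kernel((k + 0.5) * h, m)) for k in range(n))

x0, m0 = 0.3093, 7   # e.g. x0 = k tan(beta) for some k and beta
print(abs(dirichlet_sum(x0, m0) - dirichlet_kernel(x0, m0)))  # agreement up to rounding

norms = {m: dirichlet_l1_norm(m) for m in (10, 100, 1000)}
for m, value in norms.items():
    print(f"m = {m:4d}: ||D_m||_L1 = {value:.4f}, (4/pi^2) log m = {4 / math.pi**2 * math.log(m):.4f}")
```

The growth is only logarithmic in m, which is why the bound on \(R_a\) carries a factor \(1+\log n\).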

Remark 3.3

The assumption that \(\psi _0\in L^1\) is actually stronger than needed. The proof works equally well as long as the Fourier transform of \(\psi _0\) does not grow too fast; a Dirac mass with support in \(]-1,1[\), for example, would give the same kind of error estimate.

Proposition 3.4

The terms \((V^t)_0 g (x,v)\) and \(({\widetilde{V}}^t_{\epsilon })_0 g(x,v)\) are given by

where \(p_0(x,v,t)\) is the probability that a trajectory starting at \(x\in \mathbb {R}^2\) in direction v does not hit an obstacle in the interval [0, t]; this can be computed explicitly. The function \(p_0(x,v,t)\) satisfies \(p_0(x,v,t)-e^{-2t} = R_b(x,v,t)\) with

for a function \(\bar{R}_B(\beta )\) that satisfies

and consequently, for any bounded \(g\in C\left( \mathbb {R}^2\times S^1\right) \) with support in \(\vert x\vert \le M\),

Proof

Consider a path starting at \(x\in \mathbb {R}^2\) in the direction of v. There is no restriction in assuming that v is rotated clockwise by an angle \(\beta \) from the vertical, as illustrated in Fig. 4. The figure shows one lattice cell: the obstacle patch is indicated with a red circle, a blue circle indicates the maximal range for an obstacle, and the red solid disk shows one possible position of the obstacle. In the macroscopic scale, the lattice size is \(\epsilon ^{1/2}\), the obstacle has radius \(\epsilon \), and the obstacle patch has radius \(\epsilon ^{3/2-\nu }\). These values are indicated within parentheses, and the microscopic scale, in which the lattice size is 1, is indicated to the left. When \(\epsilon \rightarrow 0\) the obstacle patch in the microscopic scale shrinks to a point, but it is drawn large in the figure for clarity. The path under consideration enters a new lattice cell for the first time at \(y_1\), and enters the second lattice cell at \(y_2\), drawn in the same figure. A path of length t in the macroscopic scale traverses a number n of lattice cells in the vertical direction. We set \(m =\lfloor \frac{t \cos \beta }{2\epsilon ^{1/2}}\rfloor \). Then

where \(\zeta \in ]-1,1]\), and, depending on where the path starts and ends, the path may touch one additional cell. The error due to the exact position of the start and end points in a lattice cell can be taken into account by allowing \(\zeta \in [-2,2]\). Then

where \(p(y,\beta )\) is the probability that a trajectory entering a lattice cell at y with angle \(\beta \) along the lower cell boundary meets an obstacle before leaving the cell on the top. Because \(p(y_j,\beta )< c\epsilon ^{\nu -1/2}\rightarrow 0\) when \(\epsilon \rightarrow 0\) we may assume that \(p(y_j,\beta )<1/2\), and therefore

which provides an asymptotic expression for \(p_0(x,v,t)\) once the sum \(\sum _{j=1}^n p(y_j,\beta )\) has been evaluated. That \((V^t)_0 g(x,v)\) has the form (59) is evident, and hence it remains to prove the estimate (63). From (66) we get

Referring to the notation in Lemma 3.2 the probability \(p(y_j,\beta )\) can be computed as

that is, \(\psi _0\) is set to one in the interval \([-1,1]\) and zero outside this interval. The same lemma then gives

where \(R_a\) satisfies the estimate (47), and \(\vert {\widehat{R}}_a-R_a\vert \le 2 \sqrt{\epsilon }\). Hence \(\vert {\hat{R}}_a(n,\epsilon ,y_1,\beta )\vert \le C \bar{R}_A(\beta )\) for some constant C, and Markov’s inequality says that for any \(\lambda >0\), the inequality \(m( \{v\in S^1 \,\vert \, \bar{R}_A>\lambda \}) \le \frac{C}{\lambda } \epsilon ^{\nu -1/2}(1+\log (t/\sqrt{\epsilon })) \) holds. Therefore

where the norms inside the integrals are taken with respect to the variable x. Then (63) follows after integrating the expression over \(\mathbb {R}^2\) because

when \(g=0\) for \(\vert x\vert >M\). \(\square \)
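Proposition 3.4 can be illustrated numerically under simplifying assumptions that are not made in the paper: the obstacle center is uniform in the disk of radius \(\epsilon ^{1-\nu }\) (not a smooth density), \(\beta \) is fixed, and collisions are counted per cell passage as in Lemma 3.2. Given \(\rho _j\), the collision probability in a passage is \(p_j=\mathbb {P}(\vert \rho _j-c\vert <\epsilon ^{1/2})\), where c is one coordinate of the obstacle center; the no-collision probability \(\prod _j(1-p_j)\), averaged over the entry point \(y_1\), should be close to \(e^{-2t}\) with \(t=n\epsilon ^{1/2}/\cos \beta \), and the average of \(\sum _j p_j\) equals 2t. The sketch also includes the elementary bound \(0\le -\log (1-p)-p\le p^2\) for \(p\le 1/2\) used to pass from the product to the exponential. The parameter values and helper names are hypothetical.

```python
import math

def disk_marginal_cdf(u, R):
    """CDF of one coordinate of a point uniform in a disk of radius R (assumed obstacle-center law)."""
    if u <= -R:
        return 0.0
    if u >= R:
        return 1.0
    return 0.5 + (u * math.sqrt(R * R - u * u) + R * R * math.asin(u / R)) / (math.pi * R * R)

def passage_hit_prob(rho, eps, nu):
    """P(|rho - c| < eps^(1/2)): a path at signed distance rho hits an obstacle of radius eps^(1/2)."""
    R, a = eps ** (1.0 - nu), math.sqrt(eps)
    return disk_marginal_cdf(rho + a, R) - disk_marginal_cdf(rho - a, R)

def no_collision_prob(y1, beta, n, eps, nu):
    """Return (prod_j (1 - p_j), sum_j p_j) over n passages with independent obstacle draws."""
    tb, cb, y0 = math.tan(beta), math.cos(beta), -math.tan(beta) / 2
    prod, total = 1.0, 0.0
    for j in range(n):
        y = ((y1 + j * tb + 0.5) % 1.0) - 0.5          # entry point y_{j+1}
        p = passage_hit_prob((y - y0) * cb, eps, nu)  # depends on rho_{j+1} only
        prod *= 1.0 - p
        total += p
    return prod, total

def log_product_gap(p):
    """-log(1 - p) - p, which lies in [0, p^2] for 0 <= p <= 1/2."""
    return -math.log1p(-p) - p

eps, nu, beta, n = 1e-6, 0.75, 0.3, 478
t = n * math.sqrt(eps) / math.cos(beta)   # macroscopic length of the path
grid = 1000                               # average over the entry point y_1
runs = [no_collision_prob(-0.5 + (k + 0.5) / grid, beta, n, eps, nu) for k in range(grid)]
p0 = sum(r[0] for r in runs) / grid
mean_sum = sum(r[1] for r in runs) / grid
print(f"t = {t:.4f}: averaged p0 = {p0:.4f}, exp(-2t) = {math.exp(-2 * t):.4f}, mean sum p_j = {mean_sum:.4f}")
```

The remaining gap between the averaged product and \(e^{-2t}\) comes from \(\sum _j p_j^2\) and from the fluctuations of \(\sum _j p_j\) in \(y_1\); both shrink as \(\epsilon \rightarrow 0\).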

Proposition 3.5

Let \(t>\epsilon ^a\) with \(a<(2\nu -1)/4\). Then if \(g=0\) for \(\vert x\vert >M\),

Proof

Let \(B_M\) be the ball of radius M in \(\mathbb {R}^2\), consider a path starting at \((x,v)\in B_M \times S^1\), and let \(J_k=J_k(x,v,t)\) be the event that this path has exactly k velocity jumps in the time interval [0, t]. Similarly, let \(J_{k+}\) denote the event that it has at least k jumps. Then

that is, the conditional probability that a path has at least two velocity jumps given the initial position and velocity (x, v) is integrated over x and v. Considering \(J_{2+}(x,v,t)\) for one octant of \(S^1\) at a time, we may represent v by an angle \(\beta \in [0,\pi /4]\) as in the proof of Lemma 3.2. A path in the direction of \(\beta \) starting at x will traverse \(n = \lfloor t \cos (\beta )/\sqrt{\epsilon }\rfloor \pm 1\) lattice cells if it is not reflected on an obstacle along the way, and so

that is, as a sum of terms conditioned on the event that the first jump takes place at the j-th passage of a lattice cell. Clearly

and the terms \( p_j \mathbb {P}[ J_{1+}(x',v',t)\; \vert \; (j,x',v') ] \) can be expressed as \(p[\psi _{\epsilon }](y,\beta )\) with

in Lemma 3.2; \(p_0(x',v'(r/\epsilon ),t)\) is the probability that there is no collision in a path of length t starting at \(x'\) in the direction of \(v'\), and this direction is given as the outcome of a collision with an obstacle with impact parameter \(r/\epsilon \). Using Proposition 3.4 we find that \(\left| p_0(x',v'(r/\epsilon ),t)- e^{-2t}\right| \le \bar{R}_B(\beta '(r/\epsilon ))\), where \(\beta '\) is the angular direction corresponding to \(v'\). Rescaling \(\psi _{\epsilon }\) gives

where \(\beta '\) is the scattering angle of \(v'\) with respect to the velocity before scattering, v. It follows that

Therefore, using Lemma 3.2, the right-hand side of Eq. (74) is bounded by

and integrating with respect to v over \(S^1\) and then x over \(B_M\), gives

as a bound for the right-hand side of Eq. (73). Here \(r_{\epsilon }= \epsilon ^{(2\nu -1)/2} \left(1+\log (t/\sqrt{\epsilon })\right)\), and t is assumed to be small. The proof is concluded by comparing the terms when \(t>\epsilon ^{a}\) with \(a< (2\nu -1)/4\). \(\square \)

The following proposition concerns the terms \({\left( V^t\right) }_1g(x,v)\) and \({\left( {\widetilde{V}}_{\epsilon }^t\right) }_1g(x,v)\) defined by

and

where, as before, \(J_1(x,v,t)\) is the event that there is exactly one jump on the trajectory starting at x in the direction of v. Here \(\tilde{\tau }\in ]0,t[\) is the random jump time and \(v'\in S^1\) the random velocity of the particle after the jump.

Proposition 3.6

Let \(\omega (\delta ,g)\) be the modulus of continuity for g, i.e. a function such that \(\vert g(x,v)-g(x_1,v_1)\vert \le \omega (\vert x-x_1\vert +\vert v-v_1\vert ,g)\). Then

where the support of g is contained in \(\{ (x,v)\,\vert \,\vert x\vert <M\} \) and \(r_{\epsilon } =\epsilon ^{\nu -1/2}(1+\log n)\).

Proof

First, because g is assumed to have compact support, the modulus of continuity exists, and \(\omega (\delta ,g)\rightarrow 0\) when \(\delta \rightarrow 0\). Define \(g_x(v)=g(x,v)\), i.e. a function depending only on v but with x regarded as a parameter. Because \(\vert v\vert =\vert v'\vert =1\), we then have \(\vert g_x(v')-g(x+\tau v + (t-\tau )v',v')\vert \le \omega (t,g)\), and therefore

where the factor t multiplying \(\omega (t,g)\) comes from the integral in the definition of \(V^t_1\). Similarly

where \(p_0\) and \(\bar{R}_A\) are defined as above. It remains to compare

and

The operators \({\left( V^t\right) }_1\) and \({\left( {\widetilde{V}}^t_{\epsilon }\right) }_1\) act only on the variable v of \(g_x\), x being regarded as a parameter, so Lemma 3.2 can be used. By conditioning on the event that a velocity jump takes place in the j-th passage of a cell,

where \(t_{j-}\) and \(t_{j+}\) denote the time points when the trajectory enters and leaves cell number j along the path, \(x_j\) is the (random) point of reflection of the trajectory inside cell j, \(v'\) the random new velocity, and finally, \(\mathbb {E}[ f \vert j ]\) is shorthand for the expectation of f subject to the event that the jump takes place in cell number j along the path. Then

Using Lemma 3.2 with \(\psi _0(r)= g_x(v'(r))\mathbbm {1}_{\vert r\vert \le 1}\) one finds that the first term is

with \(R_a\) bounded by \(\Vert g\Vert _{L^{\infty }}\bar{R}_A(\beta )\) and \(\int _{S^1} \bar{R}_A(\beta ) \,dv'\le C \epsilon ^{\nu -1/2} (1+\log (t/\sqrt{\epsilon }))\). The second term is bounded by

where, in the same way as before, \(\left| R_b\right| \le \Vert \bar{R}_B(\beta (\cdot )) \Vert _{L^1} \bar{R}_A(\beta )\). Similarly the third term is bounded by

and the last term by

Adding the error estimates from (84), (85), (90), (91), (92), and (93) and integrating over \(\beta \) gives

again writing \(r_{\epsilon }\) for the expression \(\epsilon ^{\nu -1/2} (1+\log n)\). This concludes the proof, because the integration over the support of g in x is trivial. \(\square \)

4 Equivalence of Processes and Propagation of Chaos

The Lorentz process and the Markovian Lorentz process are equivalent on the set of trajectories that do not return to the same obstacle patch, and the purpose of this section is to prove that the probability that a path in the Markovian process returns to the same patch vanishes in the limit \(\epsilon \rightarrow 0\). The argument is geometric and consists in estimating the measure of the set \(B_{\epsilon }\subset \mathbb {R}^2\times S^1\) of initial values that result in a path returning to the same obstacle patch. This subset depends on the realization of the obstacle positions, but the estimates of its measure \(m(B_{\epsilon })\) do not, and \(m(B_{\epsilon })\rightarrow 0\) when \(\epsilon \rightarrow 0\). A very similar calculation then proves that the probability that a pair of trajectories in the Markovian process meet in an obstacle range also converges to zero with \(\epsilon \). The same calculation could be repeated for any number of simultaneous trajectories, and from this one concludes that propagation of chaos holds for this system.

Consider then a path passing through an obstacle range and later returning to the same patch. All such paths can be enumerated by the relative (integer) coordinates of the lattice cells where the path changes direction; that is, \(\xi _j\in \mathbb {Z}^2\) denotes the difference of the integer coordinates of the end point and starting point of a straight line segment of the path. This is illustrated in Fig. 6, where the starting point p of the loop is indicated with a black dot in cell nr. 0; in this case p is a point where the path is reflected, but in most cases it would not be. The point p is in general not the starting point of the particle path, which may have a long history before entering this particular loop. For a loop with n collisions, we therefore have

For a given time interval \(t\in [0,\bar{t}[\), we must have

because the size of a lattice cell is \(\sqrt{\epsilon }\) in the scaling we are considering here. The notation is indicated in Fig. 6, where the first few segments and the last segment of a loop are drawn with solid lines and the segments joining the lattice centers with dashed lines; the angles \(\beta _j\) of the path segments and the integer coordinates are also marked.

When \(\epsilon \) is small, each path segment is almost parallel to the corresponding segment joining the lattice cell centers, and the two have almost the same length. In the worst case, with a segment joining two neighboring cells, the relative error is at most of order \(\mathcal {O}(\epsilon ^{1-\nu })\). In the following computation, all lengths are expressed in terms of the integer coordinates, and the error coming from this approximation is taken into account by a constant denoted c.

A loop returning to the same obstacle range is not uniquely determined by the integer sequence \(\xi _1,\ldots ,\xi _{n+1}\), because there is some freedom in setting the initial position and angle of the path. The last angle \(\beta _{n+1}\) must belong to an interval of width \(\Delta \beta _{n+1}\) that satisfies

where the numerator is the diameter of the obstacle range, and \(\sqrt{\epsilon } \vert \xi _{n+1}\vert \) is the length of the last segment. An elementary argument then shows that the angle of the n-th segment must belong to an interval of width smaller than

Following the path backwards gives

The history of the path leading up to the point p has length at most \(\bar{t}\), and hence the phase space volume spanned by the possible histories leading to a loop indexed by a sequence \((\xi _1,\ldots ,\xi _n)\) is bounded by \( \bar{t}c \epsilon ^{3/2-\nu } \Delta \beta _1 \), the length of the path times the diameter of the obstacle range times the angular interval. The constant c accounts for the difference between the diameters of the obstacle range and the obstacle patch, and can be taken as close to 1 as one wishes. Of course the history is not likely to be one straight line segment, and different histories leading to a particular loop may be very different, with no reflections or many. However, it is well known that the so-called billiard map is measure preserving. Let \(\partial \Omega =\bigcup _{z \in \sqrt{\epsilon } \mathbb {Z}^2 } \{ x\in \mathbb {R}^2\;\vert \; \vert x-z\vert = \epsilon ^{3/2-\nu }\}\), i.e. the union of all obstacle boundaries. If \((x,v)\in \partial \Omega \times S^1_+\), a point on the boundary of an obstacle with velocity pointing out from the obstacle, then the billiard map is the map \((x,v) \mapsto (x_1,v_1)\), where \((x_1,v_1)\) are the position and outgoing velocity after the trajectory hits the next obstacle. This map preserves the measure \( \vert n \cdot v\vert dx_{\parallel }dv\), where n is the outward normal of the obstacle at x and \(d x_{\parallel }\) is the length measure of the obstacle boundary, see e.g. [7]. This means that even if the set of histories leading to the loop indexed by \((\xi _1,\ldots ,\xi _n)\) splits into a complicated form, the phase space volume in the full space still satisfies the same bound.
Because the loop is indexed with coordinates relative to the starting point, all periodic translates of a point of the history map into a loop with the same index sequence, and the fraction of points \((x,v)\in B\times S^1\), where B is any one lattice cell, that lead to a loop with the given index is bounded by

To compute an estimate of the fraction of \((x,v)\in B\times S^1\) that leads to any loop with \(n+1\) segments it is enough to sum this expression over the set of \((\xi _1,\ldots ,\xi _n,\xi _{n+1})\) satisfying

Using \(\vert \xi _1\vert ^{-1}\vert \xi _{n+1}\vert ^{-1}\le \frac{1}{2} \left( \vert \xi _1\vert ^{-2}+\vert \xi _{n+1}\vert ^{-2}\right) \), we find that the sum is bounded by

The last line has been obtained by changing to polar coordinates in \(\mathbb {R}^2\), and taking the simplex in the inner integral to span over the full length \(T/\sqrt{\epsilon }\). Therefore, summing the estimate in (100) first over the loops of length \(n+1\) and then over \(n=1,\ldots ,\infty \) gives the following estimate for the fraction of initial points in phase space that result in a loop along the path:

This calculation may be summarized as a proposition:

Proposition 4.1

Let \(T_{\mathcal {Y}_{\epsilon ,\epsilon }}^t\) be the Lorentz process as in Theorem 2.1. Denote the event that there is a loop along the path of length \(\bar{t}\) starting at (x, v) by \(\mathcal {L}(x,v)\). Then

when \(\epsilon \rightarrow 0\).

Proof

It is enough to see that the integral is bounded by

where \(B_M\) is the ball of radius M in \(\mathbb {R}^2\), and assumed to contain the support of g. This is bounded by

This expression can be made arbitrarily small by first choosing \(\lambda \) large enough to make the first term as small as one wishes, and then making the second term equally small by choosing \(\epsilon \) sufficiently small. All this is uniform in the random positions of the obstacles. \(\square \)
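The polar-coordinate step in the sum over loops replaces a lattice sum over \(\xi \in \mathbb {Z}^2\) by an integral in \(\mathbb {R}^2\). On the simplest sum of this type, \(\sum _{0<\vert \xi \vert \le L}\vert \xi \vert ^{-2}\), the comparison predicts an increase of \(\int _{L_1}^{L_2} r^{-2}\,2\pi r\,dr=2\pi \log (L_2/L_1)\) between radii \(L_1\) and \(L_2\). A brute-force check, with a hypothetical helper name:

```python
import math

def inverse_square_lattice_sum(L):
    """Sum of |xi|^-2 over the nonzero xi in Z^2 with |xi| <= L."""
    total, L2 = 0.0, L * L
    for a in range(-L, L + 1):
        for b in range(-L, L + 1):
            r2 = a * a + b * b
            if 0 < r2 <= L2:
                total += 1.0 / r2
    return total

s100 = inverse_square_lattice_sum(100)
s400 = inverse_square_lattice_sum(400)
# In polar coordinates, the integral of r^-2 over 100 < r <= 400 is 2 pi log 4.
print(f"S(400) - S(100) = {s400 - s100:.4f}, 2 pi log 4 = {2 * math.pi * math.log(4):.4f}")
```

The discrepancy is governed by the lattice-point counting error in annuli, which is small compared with the area term.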

The proof of propagation of chaos is similar in many ways. Consider two paths of the Markovian Lorentz model with independent initial conditions (x, v) and \((x',v')\). Because there is no interaction between the two particles, the particles remain independent until they meet the same obstacle range, if ever. As in the previous calculation, take a fixed realization of the random configuration of obstacles in each obstacle range. Let A(x, z) be the set of angles \(v\in S^1\) such that there is a possible path from the point \(x\in \mathbb {R}^2\) to the obstacle range with center at \(z\in \sqrt{\epsilon }\mathbb {Z}^2\). The measure of the set of angles such that the path reaches the patch before hitting an obstacle is bounded by \(\epsilon ^{3/2-\nu } /\vert x-z\vert \), and the measure of the set of angles v such that the path meets the obstacle patch after at least one collision with an obstacle can be computed as above. The result is that

and the measure of the set of angles v and \(v'\) such that both paths \(T^t(x,v)\) and \(T^t(x',v')\) meet the same obstacle range is bounded by

This expression should now be summed over all possible \(z\in \sqrt{\epsilon } \mathbb {Z}^2\), and because the speed of a particle is equal to one, these z belong to a ball of radius \(\bar{t}\) around x. As above we find, after rescaling,

and

The dominating term when summing the expression in (107) comes from the part that does not depend on z. We get

which again decreases to zero when \(\epsilon \) does. The maps \(T_{{\mathcal Y}_{\epsilon }}^t\) and \({\widetilde{T}}_{{\mathcal Y}_{\epsilon }}^t\) extend to pairs of particles in a natural way, so that

denote the position in phase space of a pair of particles evolving with the Lorentz process and the Markovian Lorentz process, respectively. In the latter case the obstacle position inside an obstacle patch is determined independently for each of the two particles and every time a path meets an obstacle range, and therefore

In the Lorentz evolution this breaks down as soon as there is a loop for one of the particle paths, or when the two particle paths meet in one and the same obstacle range. However, the computation above leads to the following theorem:

Theorem 4.2

Let \(T_{\mathcal {Y}_{\epsilon ,\epsilon }}^t\) be the Lorentz process as in Theorem 2.1. Then for \(g_1, g_2\in C(B_M\times S^1)\)

This says that the evolutions of two particles in the Lorentz gas become independent in the limit \(\epsilon \rightarrow 0\).

Proof

Let \(\mathcal {L}_2(x,v,x',v')\) denote the event that the two particle paths starting at (x, v) and \((x',v')\) and evolving in the same random obstacle configuration meet in an obstacle range. Then

Therefore the integral in (113) is bounded by

Fix \(\varepsilon >0\) arbitrary, and take \(M>0\) and \(\lambda >0\) so large that

We find that (113) is bounded by

which can be made smaller than \(2\varepsilon \) by choosing \(\epsilon \) sufficiently small. Here \(C(\bar{t})\) is a constant depending on \(\bar{t}\), coming from the expression (110). This concludes the proof because \(\varepsilon \) was arbitrary. \(\square \)

Theorem 4.2 could have been proven for tensor products of any order with exactly the same kind of computations, and therefore propagation of chaos holds. The rate of convergence in the theorem depends on the density \(f_0\), and also on the time interval. The dependence on \(f_0\) could be replaced by bounds on its moments and entropy. The dependence on the time interval is much more difficult to overcome, but could perhaps be addressed as in [15], which deals with the Lorentz gas in a Poisson setting.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Notes

I am grateful to an anonymous referee for pointing out this range of cases.

References

Boldrighini, C., Bunimovich, L.A., Sinaĭ, Y.G.: On the Boltzmann equation for the Lorentz gas. J. Stat. Phys. 32(3), 477–501 (1983)

Bourgain, J., Golse, F., Wennberg, B.: On the distribution of free path lengths for the periodic Lorentz gas. Commun. Math. Phys. 190(3), 491–508 (1998)

Bunimovich, L.A., Sinaĭ, Y.G.: Markov partitions for dispersed billiards. Commun. Math. Phys. 78(2), 247–280 (1980/1981)

Bunimovich, L.A., Sinaĭ, Y.G.: Statistical properties of Lorentz gas with periodic configuration of scatterers. Commun. Math. Phys. 78(4), 479–497 (1980/81)

Caglioti, E., Golse, F.: On the Boltzmann–Grad limit for the two dimensional periodic Lorentz gas. J. Stat. Phys. 141(2), 264–317 (2010)

Caglioti, E., Pulvirenti, M., Ricci, V.: Derivation of a linear Boltzmann equation for a lattice gas. Markov Process. Relat. Fields 6(3), 265–285 (2000)

Chernov, N., Markarian, R.: Chaotic Billiards. Mathematical Surveys and Monographs, vol. 127. American Mathematical Society, Providence (2006)

Gallavotti, G.: Rigorous theory of the Boltzmann equation in the Lorentz gas. Nota Interna no. 358, Istituto di Fisica, Università di Roma (1972)

Gallavotti, G.: Statistical Mechanics. Texts and Monographs in Physics. Springer, Berlin (1999)

Gikhman, I.I., Skorokhod, A.V.: The Theory of Stochastic Processes. I. Classics in Mathematics. Springer, Berlin (2004). Translated from the Russian by S. Kotz, Reprint of the 1974 edition

Golse, F.: On the periodic Lorentz gas and the Lorentz kinetic equation. Ann. Fac. Sci. Toulouse Math. (6) 17(4), 735–749 (2008)

Lenci, M.: Aperiodic Lorentz gas: recurrence and ergodicity. Ergodic Theory Dyn. Syst. 23(3), 869–883 (2003)

Lenci, M., Troubetzkoy, S.: Infinite-horizon Lorentz tubes and gases: recurrence and ergodic properties. Physica D 240(19), 1510–1515 (2011)

Lorentz, H.: Le mouvement des électrons dans les métaux. Arch. Néerl. 10, 336–371 (1905)

Lutsko, C., Tóth, B.: Invariance principle for the random Lorentz gas—beyond the Boltzmann–Grad limit. Commun. Math. Phys. 379(2), 589–632 (2020)

Marklof, J., Strömbergsson, A.: Kinetic transport in the two-dimensional periodic Lorentz gas. Nonlinearity 21(7), 1413–1422 (2008)

Marklof, J., Strömbergsson, A.: The distribution of free path lengths in the periodic Lorentz gas and related lattice point problems. Ann. Math. (2) 172(3), 1949–2033 (2010)

Marklof, J., Strömbergsson, A.: The Boltzmann-Grad limit of the periodic Lorentz gas. Ann. Math. (2) 174(1), 225–298 (2011)

Marklof, J., Strömbergsson, A.: The periodic Lorentz gas in the Boltzmann–Grad limit: asymptotic estimates. Geom. Funct. Anal. 21(3), 560–647 (2011)

Marklof, J., Strömbergsson, A.: Free path lengths in quasicrystals. Commun. Math. Phys. 330(2), 723–755 (2014)

Marklof, J., Strömbergsson, A.: Generalized linear Boltzmann equations for particle transport in polycrystals. Appl. Math. Res. Express. AMRX 2, 274–295 (2015)

Marklof, J., Strömbergsson, A.: Kinetic theory for the low-density Lorentz gas. (2019). arXiv:1910.04982. To appear in Memoirs of the AMS

Marklof, J., Tóth, B.: Superdiffusion in the periodic Lorentz gas. Commun. Math. Phys. 347(3), 933–981 (2016)

Marklof, J., Vinogradov, I.: Spherical averages in the space of marked lattices. Geom. Dedicata. 186, 75–102 (2017)

Pène, F., Terhesiu, D.: Sharp error term in local limit theorems and mixing for Lorentz gases with infinite horizon. Commun. Math. Phys. 382(3), 1625–1689 (2021)

Ricci, V., Wennberg, B.: On the derivation of a linear Boltzmann equation from a periodic lattice gas. Stoch. Process. Appl. 111(2), 281–315 (2004)

Spohn, H.: The Lorentz process converges to a random flight process. Commun. Math. Phys. 60(3), 277–290 (1978)

Funding

Open access funding provided by Chalmers University of Technology. The research was carried out as part of my employment at Chalmers University of Technology; no other funding was received.

Ethics declarations

Competing Interests

The author has no relevant financial or non-financial interests to disclose.

Additional information

Communicated by Herbert Spohn.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wennberg, B. The Lorentz Gas with a Nearly Periodic Distribution of Scatterers. J Stat Phys 190, 123 (2023). https://doi.org/10.1007/s10955-023-03134-2

DOI: https://doi.org/10.1007/s10955-023-03134-2