Abstract

Network epidemic models are ubiquitous: they can represent different phenomena and find applications in various domains. Among their various characteristics, a fundamental question concerns the time at which an epidemic stops propagating. We investigate this characteristic for an SIS epidemic induced by agents that move according to independent continuous time random walks on a finite graph: agents can be either infected (I) or susceptible (S), and infection occurs when two agents with different epidemic states meet in a node. After a random recovery time, an infected agent returns to state S and can be infected again. The end of epidemic (EoE) denotes the first time at which all agents are in state S, since after this moment no further infections can occur and the epidemic stops. For the case of two agents on edge-transitive graphs, we characterize EoE as a function of the network structure by relating the Laplace transform of EoE to the Laplace transform of the meeting time of two random walks. Interestingly, this analysis shows a separation between the effect of network structure and epidemic dynamics. We then study the asymptotic behavior of EoE (asymptotically in the size of the graph) under different parameter scalings, identifying regimes where EoE converges in distribution to a proper random variable or to infinity. We also highlight the impact of different graph structures on EoE, characterizing it on complete graphs, complete bipartite graphs, and rings.



1 Introduction

Network epidemic models are a ubiquitous and powerful abstraction that can represent different phenomena in various domains, such as physics, biology, and social sciences. The classic model assumes that network nodes correspond to individuals and edges indicate the possibility of direct influence. In this model, nodes have an epidemic state that changes over time according to some function of the epidemic states of their neighbors. The most elementary epidemic state is a single binary digit, and thus every node is in one of two possible states, frequently denoted susceptible (S) and infected (I) [1,2,3].

A fundamental problem concerning network epidemics is understanding the final (or time-averaged) epidemic state of the nodes as time unfolds. Intuitively, the network structure plays a key role, as illustrated by the celebrated work of Pastor-Satorras and Vespignani showing that the epidemic threshold vanishes on networks whose degree distribution is sufficiently heavy-tailed [4]. Indeed, the role of network structure in specific epidemic models has been broadly investigated and different dichotomies have been identified (e.g., very long versus very short epidemic duration) [1, 2, 5,6,7]. Moreover, recent efforts have focused on understanding the impact of dynamic network structure (i.e., when the edge set changes over time) [8,9,10].

Another class of network epidemic models considers agents that move on the network. In this model, network nodes represent locations where agents can reside and edges indicate the possibility of direct movement between locations. In addition, the epidemic state is now associated with the agents (and not the nodes) and changes over time when agents with different states meet in a node. Note that this model embodies two different dynamics, namely agent mobility and epidemic diffusion. While epidemic diffusion clearly depends on agent mobility, agent mobility may be independent of epidemic diffusion. Even so, the coupling of the two dynamics adds significant complexity, making a rigorous theoretical analysis much more challenging. Indeed, most theoretical results on this model are fairly recent compared to those on the classic network epidemic model [11,12,13,14,15,16,17]. However, this model has been considered and analyzed through numerical simulations for at least 45 years [18], since it also finds applications in various domains.

Arguably, the simplest agent mobility model is the random walk, where each agent moves to a neighbor chosen uniformly at random, independently of the other agents. Indeed, this is the preferred choice in theoretical works that tackle this model. Moreover, the simplest kind of epidemic is the SI model, where every agent has a binary state (S or I) and can only transition from state S to I. For example, Draief and Ganesh [13] consider an SI epidemic with two random walks and characterize the infection probability over time as a function of the network, illustrating again the importance of the network structure for the epidemic. In a more recent work, Nagatani et al. analyze the SIS model (where agents alternate between S and I states) with many independent walkers (metapopulation model) on different networks to show that infection risk and epidemic threshold are functions of the network structure [17].

In the SIS epidemic, an agent in state I (infected) returns to state S (susceptible) after some time, known as the recovery time, and can become infected again. However, the epidemic stops when all agents are found in state S, as no agent can become infected any further. Let the end of the epidemic (EoE) denote the first time instant at which all agents are found in state S. Under mild conditions (finite graph, finite number of walkers, recovery time with finite moments), EoE is finite almost surely. However, its value strongly depends on model parameters and network structure. Thus, EoE is a crucial quantity of SIS dynamics as it reveals a fundamental property of the epidemic, namely, when it ends. This metric has been investigated in different models, as discussed in Sect. 2.

The main contribution of this work is a characterization of EoE as a function of the network structure. We consider edge-transitive graphs and two independent random walks with exponentially distributed step times and recovery times. Under these assumptions, we provide the exact Laplace transform of EoE as a function of the Laplace transform of the meeting time (Theorem 1). Interestingly, the graph structure influences only the latter, which does not depend on the epidemic dynamics. On an intuitive level, our main result separates the effect of the network structure from that of the epidemic dynamics.

Our second contribution is the characterization of EoE on graph sequences of increasing size. In particular, we identify scaling regimes for which EoE converges to a distribution (with finite moments) or diverges to infinity (Theorems 2 and 3). Interestingly, while on fixed graphs EoE is finite, graph sequences with a proper scaling allow the EoE to grow with the graph size. Moreover, the scaling regimes necessary for EoE to diverge strongly depend on the graph, again illustrating the importance of network structure. We illustrate this behavior by considering complete graphs, complete bipartite graphs, and rings.

The remainder of this paper is organized as follows. A summary of related work and results is presented in Sect. 2. Section 3 presents the notation and some preliminary definitions. The main result is given in Sect. 4 providing the Laplace transform for the EoE for any edge-transitive graph. The main result is later applied in Sect. 5 to derive limit results and scaling regimes for different graphs, along with auxiliary theorems to characterize their behavior. Last, a brief discussion and outlook concerning this problem is presented in Sect. 6.

2 Related Work

In what follows, the two different network epidemic models are presented more formally, along with some important results on the characterization of the epidemic.

2.1 Epidemics on Nodes

In this class of network epidemics nodes correspond to individuals in a given population and edges encode the possible interactions among the population. The various epidemic states such as S (susceptible), I (infected), and R (recovered), are associated with the nodes, and change over time according to the epidemic state of neighboring nodes. In such models, epidemic dynamics is strongly driven by network structure with node degree playing a fundamental role.

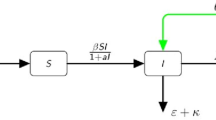

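One of the most famous models in this class is an SIS epidemic model usually referred to as the contact process, defined as follows. Let \(G=(V,E)\) denote an undirected graph with node set V and edge set E, and let \(\lambda \) and \(\delta \) be two parameters called the contagion rate and the recovery rate, respectively (usually \(\delta =1\)). Denote by \(\xi _i(t)\in \{0,1\}\) the state of node i at time t (1 for infected, 0 for susceptible) and by \(\xi (t)\) the vector of all node states. In this model the state of node i evolves as follows:

\(\xi _i: 0 \rightarrow 1\) at rate \(\lambda \sum _{j:\{i,j\}\in E} \xi _j(t)\),

\(\xi _i: 1 \rightarrow 0\) at rate \(\delta \).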

The model was introduced on an infinite lattice by Harris [19] 45 years ago, and it has been broadly explored. There are several surveys on this topic providing many details and generalizations of this classic model [20, 21]. Note that besides the network structure, \(\lambda \) plays a fundamental role. Intuitively, if \(\lambda \) is much larger than \(\delta \), infections occur much faster than recovery and the epidemic may spread very quickly. Below we provide a few important results concerning the survival of the epidemic in different scenarios.

On infinite lattices. When \(G = {\mathbb {Z}}^d\), there exists an epidemic threshold \(\lambda _c:=\inf \{\lambda : {\mathbb {P}}(\xi (t) \ne 0\, \forall t>0)>0\}\) such that

\(\lambda <\lambda _c\): epidemic dies out a.s., that is \({\mathbb {P}}(\exists t_0: \xi (t)= 0\, \forall t>t_0)=1\), \(\forall \xi (0)\)

\(\lambda > \lambda _c\): epidemic survives with positive probability (at any node), that is

$$\begin{aligned} {\mathbb {P}}(\xi (t) \ne 0 ,\forall t>0)>0 \text { and } \;\forall i \quad {\mathbb {P}}(\forall T \exists t>T: \xi _i(t)=1 )>0\;, \end{aligned}$$

for all initial conditions with infinitely many infected nodes.

Regular infinite trees. In this case, two epidemic thresholds \(\lambda _1<\lambda _2\) have been identified. For \(\lambda <\lambda _1\) and for \(\lambda >\lambda _2\) the behavior is the same as on \({\mathbb {Z}}^d\). Moreover,

\(\lambda \in (\lambda _1, \lambda _2)\): epidemic survives with positive probability, but every node recovers eventually a.s., that is

$$\begin{aligned} {\mathbb {P}}(\xi (t) \ne 0, \forall t>0)>0 \text { and } \forall i \quad {\mathbb {P}}(\exists T: \xi _i(t) =0 \forall t>T)=1 \end{aligned}$$

where the initial condition has a single infected node, called the root [22].

On arbitrary finite graphs. In this case it is known that the epidemic eventually dies out with probability one, regardless of the network structure and infection rate. However, there is still a phase transition in the time at which the epidemic ends, which can be either very early or very late. Let \(G=([n],E)\) be an undirected, finite, and connected graph on n vertices, A its adjacency matrix, and \(\rho (A)\) its spectral radius. Let \(\tau \) denote the time at which the epidemic ends, defined as

$$\begin{aligned} \tau := \inf \{t\ge 0: \xi (t) = 0\}\;. \end{aligned}$$

Then the following holds:

\(\lambda \rho (A) < 1 \implies {\mathbb {E}}(\tau )\le \frac{\log n +1}{1-\lambda \rho (A)}\) for every \(\xi (0)\);

\(\lambda \eta (G)>1 \implies \) there exists \(C>0\) such that \({\mathbb {E}}(\tau )\ge e^{Cn}\) for every \(\xi (0)\ne 0\), where \(\eta (G)= \inf _{S\subset [n]: |S|\le \lfloor n/2\rfloor } \frac{E(S, S^c)}{|S|}\) is the isoperimetric constant and \(E(S,S^c)\) denotes the number of edges between S and \(S^c\).

Thus, the expected time for the epidemic to end can be logarithmic or exponential in the size of the network, depending on its structure and infection rate [5, 6].

2.2 Epidemics on Agents

In this class of network epidemic models, nodes correspond to locations and the edges encode the possibility of direct movement between locations. Unlike in the previous model, network nodes have no epidemic state; instead, the epidemic state is associated with agents that move around on the network. The epidemic state of agents can change when agents with different states meet in a network node. Moreover, it is often assumed that agents perform independent random walks in continuous time. Note that in this model the network structure influences agent mobility, which in turn influences the epidemic dynamics, adding an extra layer of complexity with respect to the previous model.

SI epidemic on finite graphs with two agents. Consider two agents performing independent continuous time random walks \(X_t\), \(Y_t\) according to a rate transition matrix Q (assumed to be reversible). Start with one agent infected and the other susceptible, with no recovery. Assume the susceptible agent becomes infected at rate \(\beta \) while the two agents share a node, so that infection depends on the total time they have spent together. Let \(\mathbb {I}(t)\) indicate whether the susceptible agent has been infected by time t, and let p(t) be its expectation. Then

\({\mathbb {E}}(\tau (t))=\sum _{i \in V} \pi _i^2 t\)

\(p(t)\le 1- e^{-\beta t \sum _{i \in V} \pi _i^2}\)

where \(\tau (t)\) is the coincidence time up to time t (the total time the two agents have spent together up to time t), and \(\pi \) is the invariant distribution of the random walk on G; the identity for \({\mathbb {E}}(\tau (t))\) holds when both walkers start from \(\pi \). Note that graphs with different degree distributions (e.g., regular graphs versus power-law degree distributions) have very different scalings for these quantities [2].
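To see how different these scalings can be, the sketch below computes \(\sum _{i} \pi _i^2\) for a cycle and a star. It assumes the simple random walk of Sect. 3 (common holding rate, uniformly chosen neighbor), whose reversible invariant distribution is \(\pi _i = d(i)/(2|E|)\); the general reversible Q above need not have this form, and the helper names are hypothetical.

```python
from fractions import Fraction

def sum_pi_squared(edges):
    """Return sum_i pi_i^2 for the simple random walk on an undirected graph.

    Assumption: pi_i = d(i) / (2|E|), the reversible stationary law of a walk
    that leaves every vertex at a common rate and jumps to a uniform neighbour.
    """
    degree = {}
    for u, v in edges:
        degree[u] = degree.get(u, 0) + 1
        degree[v] = degree.get(v, 0) + 1
    two_m = 2 * len(edges)
    return sum(Fraction(d, two_m) ** 2 for d in degree.values())

def cycle_edges(n):
    """Edges of the cycle C_n on vertices 0..n-1 (a regular graph)."""
    return [(i, (i + 1) % n) for i in range(n)]

def star_edges(n):
    """Edges of the star S_n: centre 0 joined to leaves 1..n-1."""
    return [(0, i) for i in range(1, n)]

for n in (10, 100, 1000):
    # cycle: exactly 1/n; star: 1/4 + 1/(4(n-1)), bounded away from zero
    print(n, float(sum_pi_squared(cycle_edges(n))), float(sum_pi_squared(star_edges(n))))
```

On the cycle the sum equals exactly 1/n, whereas on the star it equals \(1/4 + 1/(4(n-1))\) and never drops below 1/4, so for stationary walkers the expected coincidence time up to t is t/n in the first case but at least t/4 in the second.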

SI epidemic on infinite lattices. Let \(G = {\mathbb {Z}}^d\) and consider two types of agents, all performing independent continuous time random walks: A-particles (susceptible) step with rate \(D_A\) and B-particles (infected) step with rate \(D_B\). Assume there is no recovery and infection occurs immediately when an A-particle meets a B-particle in a node (the A-particle then becomes a B-particle).

Let \(N_A(x,0_-)\sim Poi(\lambda )\) denote the number of A-particles at \(x\in {\mathbb {Z}}^d\) at time \(0_-\). Moreover, let \(N_B\) denote a fixed number of B-particles placed in the lattice (not at random) at time 0. Define the two sets:

Then, for any \(D_A, D_B\ge 0\) there exists a constant \(C_1<\infty \) (independent of \(N_B\) and initial positions of B-particles) such that for all sufficiently large t

\(\implies B(t)\subset {\mathcal {C}}(2C_1 t)\) eventually almost surely [16].

Moreover, if \(D_A = D_B\) then there exists a constant \(C_2>0\) such that for each constant \(K>0\) and for sufficiently large t

\(\implies {\mathcal {C}}(C_2t)\subset B(t)\) eventually almost surely [16].

These two theorems suggest that a “shape theorem” may hold: \(t^{-1}B(t)\) converges to a non-random set \(B_0\), which implies that B(t) grows linearly in t. Note that when \(D_A=0\), i.e., susceptible particles do not move, the model reduces to what is known as the frog model [23]. In this case a full shape theorem holds: there exists a non-random set \(B_0\) such that for all \(0<\varepsilon <1\)

This means that \({\mathbb {P}}(\forall \varepsilon \exists t_0(\varepsilon ): \forall t>t_0\,\; (1-\varepsilon )B_0 \subset \frac{B(t)}{t}\subset (1+\varepsilon )B_0) =1.\) A similar shape theorem has been proved for a more general setting in [24].

SI epidemic on finite graphs. The frog model has also been recently studied on finite graphs [25]. Let \(G=(V,E)\), \(N_A(x, 0_-)\sim Poi(\lambda )\), and \(N_B=1\), i.e., a single infected particle placed at a given node. In this model, A-particles do not move (frog model) and B-particles perform independent discrete time random walks. However, B-particles have a lifetime \(\tau \) (not random) after which they are removed from the system. The process stops when there are no more B-particles present. Let \(R_\tau \) denote the set of nodes visited by B-particles (with lifetime \(\tau \)) before the process stops. Let \(S(G) = \inf \{t>0: R_t = V\}\) denote the smallest lifetime required by B-particles to visit every node of G. A recent work has characterized the asymptotic behavior of \(S({\mathbb {T}}_d(n))\) as a function of the walking rate, where \({\mathbb {T}}_d(n)\) are regular trees with n nodes [26].

SIS epidemic on finite graphs with two agents. This is the scenario tackled in this paper, with a focus on edge-transitive graphs; it is described in the following sections. To the best of our knowledge, this is the first rigorous work characterizing the end time of SIS epidemics on finite graphs with mobile agents.

SIS epidemic on infinite lattices. The SIS epidemic where agents are driven by the frog model has also been investigated on infinite graphs. In this model, an A-particle becomes a B-particle when a B-particle moves into its node (an S to I transition), while a B-particle becomes an A-particle after an exponential amount of time with rate \(\lambda \). Moreover, only B-particles move, according to independent random walks. The time at which the epidemic ends (i.e., all agents are found in state S) has been characterized, showing a phase transition between very short and very long epidemics [12, 27]. In particular, a phase transition in the density of the agents has recently been shown for infinite lattices \({\mathbb {Z}}^d\) of any fixed dimension \(d>0\) [27]. A shape theorem has been proved in [28].

3 Notation and Preliminaries

Let \(G=(V,E)\) be an undirected, finite, connected graph with \(|V|=n\) vertices, and let d(i) denote the degree of vertex \(i \in V\). Throughout the paper we assume that G is edge-transitive, i.e., given any two edges \(e_1, e_2\in E\), there exists an automorphism of G that maps \(e_1\) to \(e_2\). Informally speaking, edge transitivity ensures that every edge “sees” the same graph structure. Notable examples are the complete graph (\(K_n\)), the complete bipartite graph (\(K_{n_1,n_2}\)), the cycle graph (\(C_n\)), the star graph (\(S_n\)), and the d-dimensional hypercube (\(Q_d\)), \(d>0\), which has \(n=2^d\) vertices.
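Edge transitivity can be checked by brute force on small graphs. The following minimal sketch (the function name is hypothetical, and enumerating all n! permutations restricts it to roughly n ≤ 8) collects the automorphisms of G and tests whether the orbit of one edge covers E.

```python
from itertools import permutations

def is_edge_transitive(n, edges):
    """Brute-force edge-transitivity test for a graph on vertices 0..n-1.

    Enumerates all n! vertex permutations, keeps those that map the edge set
    onto itself (the automorphisms) and checks whether the images of one
    fixed reference edge under them cover every edge of the graph.
    """
    edge_set = {frozenset(e) for e in edges}
    pairs = [tuple(e) for e in edge_set]
    a, b = pairs[0]                      # reference edge e_1
    orbit = set()
    for p in permutations(range(n)):
        if {frozenset((p[x], p[y])) for x, y in pairs} == edge_set:
            orbit.add(frozenset((p[a], p[b])))   # image of e_1 under automorphism p
    return orbit == edge_set

cycle5 = [(i, (i + 1) % 5) for i in range(5)]
k4 = [(i, j) for i in range(4) for j in range(i + 1, 4)]
star5 = [(0, i) for i in range(1, 5)]
path4 = [(0, 1), (1, 2), (2, 3)]
print([is_edge_transitive(5, cycle5), is_edge_transitive(4, k4),
       is_edge_transitive(5, star5), is_edge_transitive(4, path4)])
# [True, True, True, False]
```

A path on four vertices fails the test, since no automorphism maps an end edge to the middle edge, while the star passes even though it is not vertex-transitive.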

Consider two agents moving on the graph G according to independent continuous time random walks, denoted by \(\{W_1(t)\}_{t\ge 0}\) and \(\{W_2(t)\}_{t\ge 0}\), with \(W_k(t)\in V\), \(k=1,2\), for any time t. For each walker, the holding time in every vertex is exponentially distributed with parameter \(\lambda \) (walking rate), independently from the other walker. Thus, an agent in vertex i moves to vertex j with rate \(\lambda /d(i)\) if \(\{i,j\} \in E\), and 0 otherwise.

We assume agents are either susceptible (S) or infected (I), with states denoted by \(\{S_k(t)\}_{t\ge 0}\), \(S_k(t) \in \{S,I\}\), for \(k=1,2\). We consider an SIS epidemic. When an infected agent meets the susceptible agent in a vertex, an infection immediately occurs. Note that this event takes place when the S agent walks into the vertex where the I agent resides, or when the I agent walks into the vertex where the S agent resides. Once infected, an agent recovers by transitioning to the S state after some time. The recovery time is assumed to be exponentially distributed with parameter \(\gamma \) (recovery rate), and is independent of other events. Once in the S state, the agent becomes infected again when it meets the I agent.

The system dynamics can be fully described by the joint state of both agents, \((S_k(t), W_k(t))\) for \(k=1,2\). We assume both agents are infected and located in the same vertex at time zero, and thus \(S_1(0)=S_2(0)=I\) and \(W_1(0)=W_2(0)=i\), for some \(i\in V\).
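The model above is straightforward to simulate event by event, which gives a way to sanity-check analytical results on small graphs. The following is a minimal Gillespie-style sketch (function and parameter names are hypothetical) returning one sample of the end of epidemic time T of Eq. (3). It assumes, consistently with the renewal argument in the proof of Theorem 1, that an agent recovering while it shares a vertex with an infected agent is reinfected immediately; the parameter values in the usage lines are purely illustrative.

```python
import random

def simulate_eoe(adj, start, lam, gam, rng):
    """Draw one sample of the end-of-epidemic time T (Gillespie algorithm).

    adj   : dict mapping each vertex to the list of its neighbours
    start : common initial vertex; both agents start infected there
    lam   : walking rate (each agent jumps at rate lam to a uniform neighbour)
    gam   : recovery rate of each infected agent
    Assumption: an agent that recovers while sharing a vertex with an
    infected agent is reinfected immediately.
    """
    pos = [start, start]
    infected = [True, True]
    t = 0.0
    while any(infected):
        total = 2 * lam + gam * sum(infected)    # total event rate
        t += rng.expovariate(total)
        if rng.random() < 2 * lam / total:
            k = rng.randrange(2)                 # one of the two agents jumps
            pos[k] = rng.choice(adj[pos[k]])
        else:
            k = rng.choice([i for i in (0, 1) if infected[i]])
            infected[k] = False                  # one infected agent recovers
        if pos[0] == pos[1] and any(infected):
            infected = [True, True]              # contact: the S agent is infected
    return t

# Usage: Monte Carlo estimate of E(T) on the cycle C_6 (illustrative parameters).
n = 6
cycle = {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}
rng = random.Random(12345)
samples = [simulate_eoe(cycle, 0, lam=1.0, gam=50.0, rng=rng) for _ in range(2000)]
print(sum(samples) / len(samples))
```

Because every sample path must first wait for the two co-located agents to separate, an event of rate \(2\lambda \), the sample mean is at least about \(1/(2\lambda )\); with \(\gamma \gg \lambda \) the remaining time after separation is short.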

The following are important quantities related to this model:

Meeting time of the two walkers. Let \(i,j \in V\) and define

$$\begin{aligned} O^{i,j}:= \inf \{ t\ge 0: W_1(t) =W_2(t) \mid W_1(0)=i \text { and } W_2(0)=j \}\;. \end{aligned}$$(1)

A worst-case polynomial upper bound (in n) on the meeting time of two walkers on any graph is shown in [29]. An upper bound on the expected meeting time for a fixed G, given in terms of the hitting time of a single walker, is also known [30]. The Laplace transform of this meeting time has also been established in closed form for some specific graphs, including a scenario with more than two walkers [31].

A notion related to the meeting time above is the time for two walkers to meet when they start at distance one from each other. This is a fundamental quantity for the analysis of our model, as discussed below.

Meeting time from distance one of the two walkers. In this case, we restrict \(i,j \in V\) such that \((i, j) \in E\), and thus,

$$\begin{aligned} M^{i,j}:= \inf \{ t\ge 0: W_1(t) =W_2(t) \mid W_1(0)=i \text { and } W_2(0)=j \text { and } (i, j) \in E \}\;. \end{aligned}$$(2)

Note that since G is edge-transitive, the distribution of \(M^{i,j}\) does not depend on the specific edge \((i, j) \in E\). Henceforth, we drop the edge from the notation and denote by M a random variable with this common distribution.
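Since each jump of the pair of walkers takes an \(Exp(2\lambda )\) time, \({\mathbb {E}}(M)\) equals the expected number of jumps of the pair until the walkers meet, divided by \(2\lambda \). That expected number can be computed exactly on small graphs by solving a linear system on ordered pairs of distinct vertices. The sketch below (hypothetical function name, exact rational arithmetic, practical only for small n) does this.

```python
from fractions import Fraction

def expected_meeting_steps(adj, i, j):
    """Expected number of jumps of the pair (W1, W2) until the walkers meet.

    adj: dict mapping each vertex to the list of its neighbours.
    At each jump of the joint process one walker (probability 1/2 each) moves
    to a uniformly chosen neighbour. We solve (I - P) h = 1 on ordered pairs of
    distinct vertices, with h = 0 on the diagonal, by exact Gauss-Jordan
    elimination over the rationals.
    """
    states = [(a, b) for a in adj for b in adj if a != b]
    index = {st: k for k, st in enumerate(states)}
    size = len(states)
    rows = []
    for a, b in states:
        row = [Fraction(0)] * (size + 1)
        row[index[(a, b)]] += 1
        row[size] = Fraction(1)                      # right-hand side
        moves = [((x, b), Fraction(1, 2 * len(adj[a]))) for x in adj[a]]
        moves += [((a, y), Fraction(1, 2 * len(adj[b]))) for y in adj[b]]
        for (x, y), p in moves:
            if x != y:                               # meeting states contribute h = 0
                row[index[(x, y)]] -= p
        rows.append(row)
    for col in range(size):
        piv = next(r for r in range(col, size) if rows[r][col] != 0)
        rows[col], rows[piv] = rows[piv], rows[col]
        pv = rows[col][col]
        rows[col] = [v / pv for v in rows[col]]
        for r in range(size):
            if r != col and rows[r][col] != 0:
                f = rows[r][col]
                rows[r] = [vr - f * vc for vr, vc in zip(rows[r], rows[col])]
    return rows[index[(i, j)]][size]

n = 6
cycle = {v: [(v - 1) % n, (v + 1) % n] for v in range(n)}
print(expected_meeting_steps(cycle, 0, 1))   # gambler's ruin predicts n - 1
```

On the cycle \(C_n\) the distance between the walkers performs a simple symmetric random walk on \(\{0,\dots ,n\}\) started at 1, so the gambler's ruin formula gives \(n-1\) expected jumps and hence \({\mathbb {E}}(M)=(n-1)/(2\lambda )\).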

The previous quantities depend only on the graph structure and the walking rate \(\lambda \), but not on the epidemic dynamics. In sharp contrast, the following quantity is the time at which the epidemic ends, which occurs when both walkers are susceptible.

End of epidemic time is defined as

$$\begin{aligned} T:= \inf \{t\ge 0: S_1(t)=S_2(t)=S\}\;, \end{aligned}$$(3)

for a given initial condition \(W_k(0) = i\) and \(S_k(0) = I\), for \(k=1,2\) and \(i \in V\).

Note that both agents stay in the susceptible state forever after time T, moving on the graph but never becoming infected again. Moreover, T depends on the graph structure G, the walking rate \(\lambda \) and the recovery rate \(\gamma \), while M does not depend on the recovery rate \(\gamma \). In what follows we provide a characterization of T as a function of G, \(\lambda \), and \(\gamma \).

4 End of Epidemic Time

We now state our main theorem relating the End of Epidemic time T to the meeting time from distance one M.

Theorem 1

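Let \({{\mathcal {L}}}_T(s) = {{\mathbb {E}}}(e^{-sT})\) denote the Laplace transform of T and \({{\mathcal {L}}}_{M}(s) = {{\mathbb {E}}}(e^{-sM})\) denote the Laplace transform of M. Then, for any \(s > 0\),

$$\begin{aligned} {{\mathcal {L}}}_T(s) = \frac{2\gamma \left[ \frac{1-{{\mathcal {L}}}_M(s+\gamma )}{s+\gamma } - \frac{1-{{\mathcal {L}}}_M(s+2\gamma )}{s+2\gamma }\right] }{1+\frac{s}{2\lambda } - 2{{\mathcal {L}}}_M(s+\gamma ) + {{\mathcal {L}}}_M(s+2\gamma )}\;. \end{aligned}$$(4)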

Proof of Theorem 1

Denote by \(R_k \sim Exp(\gamma )\) the time to recovery of agent k and define \(R_* \triangleq \min (R_1,R_2) \sim Exp(2 \gamma )\), the shortest time to recovery. Denote by \(J_k \sim Exp(\lambda )\) the time to the next jump of walker k and define \(J_* \triangleq \min (J_1,J_2) \sim Exp(2 \lambda )\), the shortest time to the next jump. We can write

$$\begin{aligned} T = {\left\{ \begin{array}{ll} R_* + T' & \text {if } R_* < J_*\;,\\ J_* + T_1 & \text {if } J_* < R_*\;, \end{array}\right. } \end{aligned}$$(5)

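where \(T'\) is a random variable with the same distribution as T and independent of \(R_*\) and \(J_*\) (a recovery while the agents share a vertex is followed by immediate reinfection, so the process restarts), whereas \(T_1\) is the end of epidemic time when the two walkers start at distance one from each other and are both in the infected state. Note that \(T_1\) is independent of \(R_*\) and \(J_*\). From Equation (5), simple calculations imply that

$$\begin{aligned} {{\mathcal {L}}}_T(s) = \frac{2\lambda }{s+2\lambda }\, {{\mathcal {L}}}_{T_1}(s)\;, \end{aligned}$$(6)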

where \({{\mathcal {L}}}_{T_1}(s) = {{\mathbb {E}}}(e^{-sT_1})\) is the Laplace transform of \(T_1\).

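Similarly, if we define \(R^*\triangleq \max (R_1,R_2)\), we can express \(T_1\) as

$$\begin{aligned} T_1 = {\left\{ \begin{array}{ll} R^* & \text {if } R^* < M\;,\\ M + T'' & \text {if } M \le R^*\;, \end{array}\right. } \end{aligned}$$(7)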

where \(T''\) is a random variable with the same distribution as T and independent of \(R^*\) and M. Using the independence of the two recovery times, the distribution of \(R^*\) is simple to obtain: for every \(t\ge 0\), we have \({{\mathbb {P}}}(R^* < t) = (1-e^{-\gamma t})^2\), while the density is

$$\begin{aligned} f_{R^*}(t) = 2\gamma e^{-\gamma t}\left( 1-e^{-\gamma t}\right) \;, \quad t\ge 0\;. \end{aligned}$$

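Using Eq. (7) in the Laplace transform of \(T_1\), together with the independence of \(T''\) from M and \(R^*\), we obtain

$$\begin{aligned} {{\mathcal {L}}}_{T_1}(s) = {{\mathbb {E}}}\left( e^{-sR^*}\mathbf {1}_{\{R^*<M\}}\right) + {{\mathbb {E}}}\left( e^{-sM}\mathbf {1}_{\{M\le R^*\}}\right) {{\mathcal {L}}}_T(s)\;. \end{aligned}$$(8)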

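Using Eq. (6) to rewrite \({{\mathcal {L}}}_{T_1}(s)\) on the LHS of the previous equation and solving for \({{\mathcal {L}}}_T(s)\), we obtain

$$\begin{aligned} {{\mathcal {L}}}_T(s) = \frac{{{\mathbb {E}}}\left( e^{-sR^*}\mathbf {1}_{\{R^*<M\}}\right) }{1+\frac{s}{2\lambda } - {{\mathbb {E}}}\left( e^{-sM}\mathbf {1}_{\{M\le R^*\}}\right) }\;. \end{aligned}$$(9)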

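Let us now deal with the two expectations in Eq. (9). First, conditioning on \(R^*\) and using \(\int _0^\infty e^{-at}\,{\mathbb {P}}(M>t)\,dt = (1-{{\mathcal {L}}}_M(a))/a\) for every \(a>0\),

$$\begin{aligned} {{\mathbb {E}}}\left( e^{-sR^*}\mathbf {1}_{\{R^*<M\}}\right) = \int _0^\infty e^{-st}\left( 1-F_M(t)\right) f_{R^*}(t)\,dt = 2\gamma \left[ \frac{1-{{\mathcal {L}}}_M(s+\gamma )}{s+\gamma } - \frac{1-{{\mathcal {L}}}_M(s+2\gamma )}{s+2\gamma }\right] \;, \end{aligned}$$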

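where \(F_M\) is the distribution function of M. Second, conditioning on M and using \({{\mathbb {P}}}(R^*\ge t) = 1-(1-e^{-\gamma t})^2\),

$$\begin{aligned} {{\mathbb {E}}}\left( e^{-sM}\mathbf {1}_{\{M\le R^*\}}\right) = {{\mathbb {E}}}\left( e^{-sM}\left( 2e^{-\gamma M} - e^{-2\gamma M}\right) \right) = 2{{\mathcal {L}}}_M(s+\gamma ) - {{\mathcal {L}}}_M(s+2\gamma )\;. \end{aligned}$$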

Combining everything into Eq. (9) we obtain the claim. \(\square \)

Theorem 1 provides an expression for the Laplace transform of the end of epidemic time T that is a function of the Laplace transform of the meeting time from distance one \({{\mathcal {L}}}_M(s)\), the walking rate \(\lambda \) and the recovery rate \(\gamma \). This expression determines how the underlying graph structure (encoded in \({{\mathcal {L}}}_M(s)\)) influences the distribution of T.
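Theorem 1 can be evaluated numerically once \({{\mathcal {L}}}_M\) is available. The sketch below codes the right-hand side of Eq. (4) in the form \({{\mathcal {L}}}_T(s)=2\gamma \big [\frac{1-{{\mathcal {L}}}_M(s+\gamma )}{s+\gamma }-\frac{1-{{\mathcal {L}}}_M(s+2\gamma )}{s+2\gamma }\big ]\big /\big (1+\frac{s}{2\lambda }-2{{\mathcal {L}}}_M(s+\gamma )+{{\mathcal {L}}}_M(s+2\gamma )\big )\), obtained by inserting the two expectations computed in the proof into Eq. (9); treat this restatement as an assumption of the sketch. As an illustrative input it uses the complete-graph transform \({{\mathcal {L}}}_M(x)=2\lambda /(2\lambda +nx)\) of Sect. 5.1; the function name and parameter values are hypothetical.

```python
def laplace_T(s, lam, gam, laplace_M):
    """Evaluate L_T(s) from L_M through the expression of Theorem 1 (sketch).

    laplace_M : callable x -> E[exp(-x M)] for the meeting time from distance one
    The closed form below is restated here as an assumption of this sketch.
    """
    a, b = s + gam, s + 2 * gam
    num = 2 * gam * ((1 - laplace_M(a)) / a - (1 - laplace_M(b)) / b)
    den = 1 + s / (2 * lam) - 2 * laplace_M(a) + laplace_M(b)
    return num / den

# Illustration with the complete-graph transform of Sect. 5.1 (illustrative values).
n, lam, gam = 10, 1.0, 1.0
L_M = lambda x: 2 * lam / (2 * lam + n * x)
for s in (1e-6, 0.5, 1.0, 5.0):
    print(s, laplace_T(s, lam, gam, L_M))

# (1 - L_T(h)) / h is a forward-difference estimate of E(T) for small h.
h = 1e-6
print("E(T) approx.", (1 - laplace_T(h, lam, gam, L_M)) / h)
```

Two quick sanity checks: \({{\mathcal {L}}}_T(s)\rightarrow 1\) as \(s\rightarrow 0\), as it must for an almost surely finite T, and the values decrease in s, as any Laplace transform of a nonnegative random variable does.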

The random variable M is related to a discrete time random variable that counts the number of jumps needed for two random walks to meet. More precisely, if N denotes the number of jumps required for the two walkers to meet when they start at distance one, it holds that

$$\begin{aligned} M = \sum _{k=1}^{N} E_k\;, \end{aligned}$$

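where \(E_k\) are independent \(Exp(2 \lambda )\) random variables, also independent of N. Note that N depends only on the graph G and depends on neither \(\lambda \) nor \(\gamma \). We can then express the Laplace transform of M as

$$\begin{aligned} {{\mathcal {L}}}_M(s) = {{\mathbb {E}}}\left[ \left( \frac{2\lambda }{2\lambda +s}\right) ^{N}\right] = {{\mathcal {L}}}_N\left( \log \left( 1+\frac{s}{2\lambda }\right) \right) \;, \end{aligned}$$

where \({{\mathcal {L}}}_N(s) = {{\mathbb {E}}}(e^{-sN})\).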

Combining the above equation with Theorem 1, we obtain the following Corollary relating the Laplace transform of T to the distribution of N.

Corollary 1

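Let \({{\mathcal {L}}}_T(s) = {{\mathbb {E}}}(e^{-sT})\) denote the Laplace transform of T, let N be the number of steps needed for two random walks starting at distance one to meet, and let \({{\mathcal {L}}}_N(s) = {{\mathbb {E}}}(e^{-sN})\). Then, for any \(s>0\),

$$\begin{aligned} {{\mathcal {L}}}_T(s) = \frac{2\gamma \left[ \frac{1-{{\mathcal {L}}}_N(\ell (s+\gamma ))}{s+\gamma } - \frac{1-{{\mathcal {L}}}_N(\ell (s+2\gamma ))}{s+2\gamma }\right] }{1+\frac{s}{2\lambda } - 2{{\mathcal {L}}}_N(\ell (s+\gamma )) + {{\mathcal {L}}}_N(\ell (s+2\gamma ))}\;, \quad \text {where } \ell (x) := \log \left( 1+\frac{x}{2\lambda }\right) \;. \end{aligned}$$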

The results of this section allow us to completely decouple the effects of the network structure (and of the random walks on it) from those of the epidemic.
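The change of variables \({{\mathcal {L}}}_M(s) = {{\mathcal {L}}}_N(\log (1+s/(2\lambda )))\) behind Corollary 1 is easy to check numerically. The sketch below (hypothetical helper names) assumes, as in the text, that M is a sum of N i.i.d. \(Exp(2\lambda )\) times independent of N, and verifies the identity for \(N\sim Geom(1/n)\), the complete-graph case of Sect. 5.1, where it must reproduce \(2\lambda /(2\lambda +nx)\).

```python
import math

def laplace_M_from_N(laplace_N, lam):
    """Return x -> L_M(x) = E[(2 lam / (2 lam + x))^N] = L_N(log(1 + x / (2 lam))).

    Assumes M is a sum of N i.i.d. Exp(2 lam) times, independent of N.
    """
    return lambda x: laplace_N(math.log1p(x / (2 * lam)))

def laplace_geometric(p):
    """L_N(s) = E[exp(-s N)] for N ~ Geom(p) supported on {1, 2, ...}."""
    return lambda s: p * math.exp(-s) / (1 - (1 - p) * math.exp(-s))

# Complete-graph example of Sect. 5.1: N ~ Geom(1/n) should reproduce
# L_M(x) = 2 lam / (2 lam + n x).
n, lam = 10, 1.5
L_M = laplace_M_from_N(laplace_geometric(1 / n), lam)
for x in (0.1, 0.7, 5.0):
    print(x, L_M(x), 2 * lam / (2 * lam + n * x))
```

Working with \(\log (1+x/(2\lambda ))\) via `math.log1p` keeps the argument of \({{\mathcal {L}}}_N\) accurate when \(x/(2\lambda )\) is small, which is exactly the regime studied in Sect. 5.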

5 General Limit Theorems

In this section we turn our attention to how EoE behaves when the size of the underlying graph grows to infinity. To motivate this asymptotic analysis, let us note two aspects of our model on fixed finite graphs:

- (i)

The distribution of T may be difficult to explicitly compute;

- (ii)

The epidemic eventually dies out, i.e., \({\mathbb {P}}(T<+ \, \infty )=1\) for every value of \(\lambda \) and \(\gamma \).

In contrast, asymptotically in the size of the graph, we show that there exist scaling regimes for \(\lambda \) and \(\gamma \) in which the distribution of T can be computed explicitly. Moreover, we also show the existence of regimes in which the end of epidemic time grows without bound with the size of the graph.

Recall that the random variables T, M and N depend on the underlying graph G and, in particular, on its size. With a slight abuse of notation, henceforth we write \(T_n\), \(M_n\) and \(N_n\) to stress the dependence on the graph size n, for some fixed class of graphs (e.g., \(K_n\), the complete graph).

The main objective of this section is to study the limit behavior of \(T_n\) when n goes to infinity and to investigate the role of the underlying graph structure. To this purpose we consider a walking rate \(\lambda _n\) and a recovery rate \(\gamma _n\) that depend on n, with \(\gamma _n\rightarrow +\infty \) and \(\lambda _n\rightarrow +\infty \) as n grows.

This section is organized as follows. In Sect. 5.1 we consider the complete graph and, by applying Theorem 1, we identify the limiting behavior of the end of epidemic time for all possible scalings of the parameters. Even though Theorem 1 in principle allows one to deal with any scaling regime and any graph, applying it directly turns out in many cases to be extremely cumbersome. We therefore focus on scalings where the walking dynamics is much faster than the recovery dynamics, so that it is possible that \(T_n \rightarrow \infty \). We prove two general limit theorems that follow from Theorem 1 and present their corollaries for specific graphs. Section 5.2 contains the first limit theorem, followed by its application in Sect. 5.3 to complete bipartite graphs of certain structures. In Sect. 5.3 we also identify other bipartite graphs where the first limit theorem is not applicable. In Sect. 5.4 we prove another limit theorem and discuss its corollaries for bipartite graphs. In Sect. 5.5 we consider the ring graph, show that neither limit theorem can be applied to it, and study the end of epidemic time directly in some regimes.

5.1 Complete Graph

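Let us consider the specific case of a complete graph on n vertices, \(G=K_n\). In this case, it is not difficult to see that \(N_n\sim Geom(n^{-1})\), \(M_n\sim Exp\left( \frac{2\lambda _n}{n}\right) \) and therefore \({{\mathcal {L}}}_{M_n}(s)= \frac{2\lambda _n}{2\lambda _n + n s}\). Using the latter in Theorem 1 we obtain that

$$\begin{aligned} {{\mathcal {L}}}_{T_n}(s) = \frac{\dfrac{2n^2\gamma _n^2}{\left( 2\lambda _n+n(s+\gamma _n)\right) \left( 2\lambda _n+n(s+2\gamma _n)\right) }}{1+\dfrac{s}{2\lambda _n}-\dfrac{4\lambda _n}{2\lambda _n+n(s+\gamma _n)}+\dfrac{2\lambda _n}{2\lambda _n+n(s+2\gamma _n)}}\;. \end{aligned}$$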

Let us consider three different regimes:

- (i)

\(\lambda _n = \omega (n\gamma _n)\) (i.e., \(\frac{\lambda _n}{n \gamma _n}\rightarrow +\infty \))

- (ii)

\(\lambda _n = o(\gamma _n)\) (i.e., \(\frac{\lambda _n}{\gamma _n}\rightarrow 0\))

- (iii)

\(\lambda _n = o(n\gamma _n)\) and \(\lambda _n = \omega (\gamma _n)\) (i.e., \(\frac{\lambda _n}{\gamma _n}\rightarrow + \infty \) but \(\frac{\lambda _n}{n \gamma _n}\rightarrow 0\))

For regime (i), let us denote \(b_n=\frac{n \gamma _n^2}{\lambda _n}\) and compute \({{\mathcal {L}}}_{T_n}(b_n s)\):

$$\begin{aligned} {{\mathcal {L}}}_{T_n}(b_n s)= \frac{1 }{ 1+ s + o (1) }\; \underset{n\,\uparrow \, \infty }{\longrightarrow }\;\frac{1}{1+s} \end{aligned}$$

Thus, \( b_n T_n \overset{D}{\longrightarrow }Exp(1)\) as n goes to infinity.

Remark 1

Note that within regime (i) two different subregimes are possible:

- (1)

\(b_n \rightarrow +\infty \) which implies \(T_n \rightarrow 0\) in probability,

- (2)

\(b_n \rightarrow 0\) which implies \(T_n \rightarrow + \infty \) in probability.

Subregime (2) is of particular interest, as the end of epidemic time grows without bound, despite being finite almost surely for every n.

Note that \(b_n \rightarrow 0\) is equivalent to \(\lambda _n = \omega (n\gamma _n^2)\); thus the walking rate must grow faster than n times the square of the recovery rate. Interestingly, this rate is large enough for the end of epidemic time to grow without bound.

For regime (ii), let us compute \({{\mathcal {L}}}_{T_n}(2\lambda _n s)\):

$$\begin{aligned} {{\mathcal {L}}}_{T_n}(2\lambda _n s)=\frac{1 }{ 1+ s + o (1) }\; \underset{n\,\uparrow \, \infty }{\longrightarrow }\;\frac{1}{1+s} \end{aligned}$$

Thus, \( 2\lambda _n T_n \overset{D}{\longrightarrow }Exp(1)\) as n goes to infinity.

For regime (iii), let us compute \({{\mathcal {L}}}_{T_n}(\gamma _n s)\):

$$\begin{aligned} {{\mathcal {L}}}_{T_n}(\gamma _n s)= \frac{1}{ ( s + 1)(s/2 +1) + o(1) } \underset{n\uparrow \infty }{\longrightarrow }\frac{2}{(s+1)(s +2)}\;. \end{aligned}$$

Thus, \( \gamma _n T_n \overset{D}{\longrightarrow } X + Y\) as n goes to infinity, where \(X\sim Exp(1)\) and \(Y\sim Exp(2)\) are independent.
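The three limits above can be checked numerically on a large but finite complete graph. The sketch uses exact rational arithmetic (Python's `fractions` module) to avoid the severe cancellation in the denominator of Theorem 1 when \(\lambda _n\) is huge. It restates the expression of Theorem 1 and the complete-graph transform \({{\mathcal {L}}}_{M_n}(x)=2\lambda _n/(2\lambda _n+nx)\) in code as assumptions of the sketch; the specific values of n, \(\lambda _n\), \(\gamma _n\) are illustrative stand-ins for the sequences, and the helper names are hypothetical.

```python
from fractions import Fraction

def laplace_T(s, lam, gam, laplace_M):
    """L_T(s) from L_M via the expression of Theorem 1 (restated as an assumption).

    Exact when all inputs are Fractions or integers.
    """
    a, b = s + gam, s + 2 * gam
    num = 2 * gam * ((1 - laplace_M(a)) / a - (1 - laplace_M(b)) / b)
    den = 1 + s / (2 * lam) - 2 * laplace_M(a) + laplace_M(b)
    return num / den

def complete_graph_T(n, lam, gam):
    """s -> L_{T_n}(s) on K_n, using L_M(x) = 2 lam / (2 lam + n x) from Sect. 5.1."""
    L_M = lambda x: Fraction(2 * lam) / (2 * lam + n * x)
    return lambda s: laplace_T(s, lam, gam, L_M)

s = Fraction(1)
# Regime (i): lam/(n gam) large; rescale by b_n = n gam^2 / lam.  Limit 1/(1+s).
n, lam, gam = 1000, 10**10, 10
r1 = complete_graph_T(n, lam, gam)(Fraction(n * gam**2, lam) * s)
# Regime (ii): lam/gam small; rescale by 2 lam.  Limit 1/(1+s).
n, lam, gam = 1000, 10, 10**7
r2 = complete_graph_T(n, lam, gam)(2 * lam * s)
# Regime (iii): lam/gam large but lam/(n gam) small; rescale by gam.  Limit 2/((s+1)(s+2)).
n, lam, gam = 10**8, 10**5, 10
r3 = complete_graph_T(n, lam, gam)(gam * s)
print(float(r1), float(r2), float(r3))
```

With s = 1 the three printed values should be close to 1/2, 1/2 and 1/3, the values of the limiting transforms \(1/(1+s)\), \(1/(1+s)\) and \(2/((s+1)(s+2))\) at that point.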

Remark 2

In both regimes (ii) and (iii) we have that \(T_n\rightarrow 0\) in probability.

5.2 General Limit Theorem I

For a complete graph, we have shown how Theorem 1 can be applied to identify the behaviour of \(T_n\) in different regimes. This same approach can be applied to other classes of graphs. However, instead of carrying out a similar analysis for other graphs, we provide below (and also in Sect. 5.4) auxiliary results which allow us to characterize the asymptotic behaviour of \(T_n\) in terms of the asymptotic behaviour of \(N_n\). These results can then be directly applied to other classes of graphs.

Theorem 2

Assume there exists a sequence \(\{a_n\}_{n \in {\mathbb {N}}}\) converging to zero such that \(a_n N_n \overset{D}{\longrightarrow }X\) as n tends to infinity, where X is a random variable with finite first two moments. Denote \(c_1 = {{\mathbb {E}}}(X)\) and \(c_2 = {{\mathbb {E}}}(X^2)\). Then, for \(\gamma _n = o(\lambda _n a_n)\),

$$\begin{aligned} \frac{c_2 \gamma _n^2}{2c_1\lambda _n a_n}\, T_n \overset{D}{\longrightarrow } Exp(1) \quad \text {as } n\rightarrow \infty \;. \end{aligned}$$

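The above theorem easily captures regime (i) for the complete graph. Indeed, for the complete graph, \(N_n \sim Geom \big (\frac{1}{n}\big )\) and \({{\mathcal {L}}}_{N_n}(s)= \frac{e^{-s}}{n - (n-1)e^{-s}}\). Thus,

$$\begin{aligned} {{\mathcal {L}}}_{N_n}\left( \frac{s}{n}\right) = \frac{e^{-s/n}}{n-(n-1)e^{-s/n}} = \frac{1+O(n^{-1})}{1+s+O(n^{-1})}\; \underset{n\,\uparrow \, \infty }{\longrightarrow }\;\frac{1}{1+s}\;. \end{aligned}$$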

Therefore, \(n^{-1}N_n\overset{D}{\longrightarrow }Exp(1)\) as n tends to infinity. In Theorem 2 this corresponds to \(X\sim Exp(1)\), so that \(c_1=1\), \(c_2=2\), and \(a_n = n^{-1}\). In the regime \(\frac{\lambda _n}{n \gamma _n}\rightarrow +\infty \), the above theorem guarantees that \( \frac{n \gamma _n^2}{\lambda _n} T_n \overset{D}{\longrightarrow }Exp(1)\) as n grows, as previously shown by direct computation.
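The convergence \({{\mathcal {L}}}_{N_n}(s/n)\rightarrow 1/(1+s)\) and the time scale of Theorem 2 can be checked with a few lines of arithmetic. In the sketch below (hypothetical helper names), the second function evaluates \(b_n=c_2\gamma _n^2/(2c_1\lambda _n a_n)\) from the proof of Theorem 2, which reduces to \(n\gamma _n^2/\lambda _n\) for \(X\sim Exp(1)\) and \(a_n=n^{-1}\); the numerical values are illustrative.

```python
import math

def geometric_laplace(n, s):
    """E[exp(-s N)] for N ~ Geom(1/n) on {1, 2, ...}: e^{-s} / (n - (n - 1) e^{-s})."""
    return math.exp(-s) / (n - (n - 1) * math.exp(-s))

def theorem2_scale(c1, c2, lam, gam, a):
    """Time scale b_n = c2 * gam^2 / (2 * c1 * lam * a) used in the proof of Theorem 2."""
    return c2 * gam**2 / (2 * c1 * lam * a)

s = 0.5
for n in (10, 1000, 100000):
    # L_{N_n}(s/n) approaches 1/(1+s), the Laplace transform of Exp(1)
    print(n, geometric_laplace(n, s / n), 1 / (1 + s))

# With X ~ Exp(1) (c1 = 1, c2 = 2) and a_n = 1/n, b_n reduces to n * gam^2 / lam.
n, lam, gam = 100, 1e6, 2.0
print(theorem2_scale(1, 2, lam, gam, 1 / n), n * gam**2 / lam)
```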

Remark 3

Let us give an intuitive explanation of why the regime \(\gamma _n = o(\lambda _n a_n)\) is interesting. Due to the assumption \(a_n N_n \overset{D}{\longrightarrow }X\), it takes on average order \(1/a_n\) steps for the two random walks to meet as soon as they separate. Each step takes an average time of order \(1/\lambda _n\), so it will take on average time of order \(1/(\lambda _n a_n)\) for the two walkers to meet. On the other hand, it will take an average time of order \(1/\gamma _n\) for them both to recover. Therefore, assuming \(\gamma _n = o(\lambda _n a_n)\), we make sure that the time for the two walkers to recover is much larger than the time it takes them to meet again, thus giving the epidemic a chance to survive.

Proof of Theorem 2

To simplify the notation, let us denote \(b_n=\dfrac{c_2 \gamma _n^2}{2c_1\lambda _n a_n}\). To prove the claim it suffices to show that \({{\mathcal {L}}}_{T_n}(b_n s)\underset{n \uparrow \infty }{\longrightarrow }\frac{1}{1+s}\), for every \(s>0\). The assumption \(a_n N_n \overset{D}{\longrightarrow }X\) ensures that \({{\mathcal {L}}}_{N_n}(a_n s) \underset{n \uparrow \infty }{\longrightarrow } {{\mathcal {L}}}_X(s)\). From the latter we obtain a limit theorem for \(M_n\), i.e.,

where we used that \(a_n\) goes to zero and the continuity of \({{\mathcal {L}}}_X\).
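The passage from \(N_n\) to \(M_n\) relies on a relation (Eq. (10)) that is not reproduced in this excerpt. The sketch below assumes one natural reading of it: \(M_n\) is the sum of \(N_n\) independent holding times, each exponential with rate \(2\lambda _n\) (two walkers jumping independently at rate \(\lambda _n\) each). Under that assumption, conditioning on \(N_n\) gives \({{\mathcal {L}}}_{M_n}(s)={{\mathcal {L}}}_{N_n}\big (\log (1+\frac{s}{2\lambda _n})\big )\), and the code compares this identity with a Monte Carlo estimate for a geometric \(N_n\). As a side check, a geometric number of exponential holding times is itself exponential, here with rate \(2\lambda _n p\). All names and parameter values are hypothetical.

```python
import math
import random


def laplace_geom(s: float, p: float) -> float:
    """E[exp(-s N)] for N ~ Geom(p) on {1, 2, ...}."""
    return p * math.exp(-s) / (1.0 - (1.0 - p) * math.exp(-s))


def laplace_meeting_time(s: float, p: float, lam: float) -> float:
    """E[exp(-s M)] when M is a sum of N ~ Geom(p) i.i.d. Exp(2*lam) times (assumed model).

    Conditioning on N gives E[(2 lam / (2 lam + s))^N] = L_N(log(1 + s / (2 lam))).
    """
    return laplace_geom(math.log1p(s / (2.0 * lam)), p)


def mc_laplace_meeting_time(s: float, p: float, lam: float, trials: int, seed: int = 1) -> float:
    """Monte Carlo estimate of E[exp(-s M)] under the same assumed model."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        t = rng.expovariate(2.0 * lam)      # holding time before the first step
        while rng.random() >= p:            # the step does not produce a meeting
            t += rng.expovariate(2.0 * lam)
        total += math.exp(-s * t)
    return total / trials


if __name__ == "__main__":
    s, p, lam = 0.8, 0.2, 1.5
    print(laplace_meeting_time(s, p, lam), mc_laplace_meeting_time(s, p, lam, 20_000))
```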

Since X has finite first and second moments, for s sufficiently close to zero we can write \({{\mathcal {L}}}_X(s)=1 - c_1 s + \frac{c_2}{2} s^2 + o(s^2)\). Thus, for any sequence \(x_n \rightarrow 0\) it holds that \({{\mathcal {L}}}_X(x_ n s) = 1-c_1x_n s + \frac{c_2}{2}(x_n s)^2 + o ((x_n s)^2)\).

We use Corollary 1 to compute \({{\mathcal {L}}}_{T_n}(b_n s)\) from \({{\mathcal {L}}}_{N_n}\). We begin by computing \({{\mathcal {L}}}_{M_n}\left( b_n s+\gamma _n\right) \).

Using that \(\frac{\gamma _n}{\lambda _n a_n}\rightarrow 0\) (and hence also \(\frac{b_n}{\lambda _n a_n}\rightarrow 0\)), we have that

So we obtain \({{\mathcal {L}}}_{M_n}\left( b_n s+\gamma _n\right) = 1 - c_1 \left( \frac{b_n s + \gamma _n}{2\lambda _n a_n } \right) + \frac{c_2}{2} \left( \frac{b_n s + \gamma _n}{2\lambda _n a_n } \right) ^2 + o\left( \left( \frac{\gamma _n}{\lambda _n a_n} \right) ^2\right) \). Similarly, \({{\mathcal {L}}}_{M_n}\left( b_n s+2\gamma _n\right) = 1 - c_1 \left( \frac{b_n s +2\gamma _n}{2\lambda _n a_n } \right) + \frac{c_2}{2} \left( \frac{b_n s+2\gamma _n}{2\lambda _n a_n } \right) ^2 + o\left( \left( \frac{\gamma _n }{\lambda _n a_n} \right) ^2\right) \). Therefore, the numerator in Eq. (4) can be written as

Let us now look at the denominator:

Overall, recalling that \(b_n= \frac{c_2\gamma _n^2}{2c_1\lambda _n a_n}\), we have

\(\square \)

We have already seen how the above theorem applies to the complete graph in the regime \(\gamma _n = o (\lambda _n/n)\). Another example to which Theorem 2 applies is the complete bipartite graph \(K_{m,n-m}\), presented below.

5.3 Complete Bipartite Graph

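For the complete bipartite graph \(G=K_{m,n-m}\) on n vertices with one partition having m elements and the other \(n-m\) elements, we shall consider several different scenarios: i) \(m=\alpha n\), with \(\alpha \in (0,1)\), ii) \(m=n^\beta \), with \(\beta \in (0,1)\), and iii) \(m=\log n\). It is not difficult to see that, in this case, the random variable \(N_n\) satisfies

$$\begin{aligned} N_n \overset{D}{=} {\left\{ \begin{array}{ll} 1 & \text {with probability } p_n,\\ 2 + N_n' & \text {with probability } 1-p_n, \end{array}\right. } \qquad p_n = \frac{1}{2}\left( \frac{1}{m}+\frac{1}{n-m}\right) = \frac{1}{2}\left( \frac{n}{m(n-m)}\right) , \end{aligned}$$

where \(N_n'\) is distributed as \(N_n\). Indeed, two walkers at distance one lie in different partitions; the walker that moves lands on the other one with probability \(1/(n-m)\) or \(1/m\), and otherwise the two walkers end up in the same partition, from which the next step necessarily brings them back to distance one.

Therefore the Laplace transform of \(N_n\) satisfies the following recursion

$$\begin{aligned} {{\mathcal {L}}}_{N_n}(s) = p_n e^{-s} + (1-p_n)\, e^{-2s}\, {{\mathcal {L}}}_{N_n}(s)\;, \end{aligned}$$

and hence

$$\begin{aligned} {{\mathcal {L}}}_{N_n}(s) = \frac{p_n e^{-s}}{1-e^{-2s}+p_n e^{-2s}}\;. \end{aligned}$$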
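To make the bipartite case concrete, the sketch below simulates the jump chain of the two walkers on \(K_{m,n-m}\) started at distance one and compares the empirical mean of \(N_n\) with the mean implied by \({{\mathcal {L}}}_{N_n}(s)=\frac{p_n e^{-s}}{1-(1-p_n)e^{-2s}}\), \(p_n=\frac{n}{2m(n-m)}\) (the general-m expression used below), namely \({\mathbb {E}}(N_n)=1+2(1-p_n)/p_n\). It assumes that at each step one of the two walkers, chosen with probability 1/2, jumps to a uniformly chosen vertex of the opposite partition; the function names and the choice \(m=3\), \(n=12\) are illustrative.

```python
import random


def simulate_meeting_steps(m: int, n: int, rng: random.Random) -> int:
    """Steps until two walkers on K_{m,n-m}, started at distance one, occupy the same vertex.

    Assumed stepping rule: at each step one walker, chosen with probability 1/2,
    jumps to a uniformly random vertex of the opposite side.
    Vertices 0..m-1 form side A, vertices m..n-1 form side B.
    """
    pos = [0, m]  # walker 0 on side A, walker 1 on side B: distance one
    steps = 0
    while True:
        steps += 1
        w = rng.randrange(2)
        if pos[w] < m:                  # on side A: jump to side B
            pos[w] = rng.randrange(m, n)
        else:                           # on side B: jump to side A
            pos[w] = rng.randrange(m)
        if pos[0] == pos[1]:
            return steps


def mean_from_laplace(m: int, n: int) -> float:
    """E(N_n) = 1 + 2 (1 - p) / p for L(s) = p e^{-s} / (1 - (1 - p) e^{-2s})."""
    p = n / (2.0 * m * (n - m))
    return 1.0 + 2.0 * (1.0 - p) / p


if __name__ == "__main__":
    rng = random.Random(2020)
    m, n, trials = 3, 12, 20_000
    emp = sum(simulate_meeting_steps(m, n, rng) for _ in range(trials)) / trials
    print(f"empirical {emp:.3f} vs predicted {mean_from_laplace(m, n):.3f}")
```

With \(m=3\), \(n=12\) we have \(p_n=2/9\) and a predicted mean of 8 steps.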

If \(m=\alpha n\) we have

$$\begin{aligned} {{\mathcal {L}}}_{N_n}(s)= \frac{\frac{1}{2\alpha (1-\alpha )}e^{-s}}{n(1-e^{-2s}) + \frac{1}{2\alpha (1-\alpha )}e^{-2s}}\;, \end{aligned}$$and therefore,

$$\begin{aligned} {{\mathcal {L}}}_{N_n}(n^{-1}s)&= \frac{\frac{1}{2\alpha (1-\alpha )}e^{-\frac{s}{n}}}{n(1-e^{-\frac{2s}{n}}) + \frac{1}{2\alpha (1-\alpha )}e^{-\frac{2s}{n}}}\\&= \frac{\frac{1}{2\alpha (1-\alpha )}(1 - \frac{s}{n} + o(\frac{1}{n}))}{n(1-(1 - 2\frac{s}{n} + o(\frac{1}{n}))) + \frac{1}{2\alpha (1-\alpha )}(1 - 2\frac{s}{n} + o(\frac{1}{n}))}\\&= \frac{\frac{1}{2\alpha (1-\alpha )} (1 + o(1))}{ 2 s + \frac{1}{2\alpha (1-\alpha )} + o(1)} \underset{n\uparrow \infty }{\longrightarrow } \frac{(4\alpha (1-\alpha ))^{-1}}{(4\alpha (1-\alpha ))^{-1} + s}\;, \end{aligned}$$that is, \(n^{-1}N_n \overset{D}{\longrightarrow }Exp ((4\alpha (1-\alpha ))^{-1})\). Thus, with \(a_n=n^{-1}\), \(c_1=4\alpha (1-\alpha )\) and \(c_2=32\alpha ^2(1-\alpha )^2\), Theorem 2 guarantees that, for \(\gamma _n = o(\lambda _n/n)\), \(\frac{c_2}{2c_1}\frac{ \gamma _n^2 }{\lambda _n a_n}T_n = 4\alpha (1-\alpha )\frac{n\gamma _n^2}{\lambda _n}T_n \overset{D}{\longrightarrow } \text {Exp}(1)\).
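The limit for \(m=\alpha n\) can be checked numerically. The sketch evaluates the general closed form \({{\mathcal {L}}}_{N_n}(s)=\frac{p e^{-s}}{1-e^{-2s}+pe^{-2s}}\), \(p=\frac{n}{2m(n-m)}\), at \(s/n\) and compares it with the Laplace transform of \(Exp((4\alpha (1-\alpha ))^{-1})\). The value \(\alpha =0.3\), the test point s and the function names are arbitrary illustrative choices.

```python
import math


def laplace_bipartite(s: float, n: int, m: int) -> float:
    """L_{N_n}(s) = p e^{-s} / (1 - e^{-2s} + p e^{-2s}) with p = n / (2 m (n - m))."""
    p = n / (2.0 * m * (n - m))
    # -expm1(-2s) = 1 - e^{-2s}, computed without cancellation for small s.
    return p * math.exp(-s) / (-math.expm1(-2.0 * s) + p * math.exp(-2.0 * s))


def exp_laplace(s: float, rate: float) -> float:
    """Laplace transform rate / (rate + s) of an Exp(rate) random variable."""
    return rate / (rate + s)


if __name__ == "__main__":
    alpha, s = 0.3, 1.2
    rate = 1.0 / (4.0 * alpha * (1.0 - alpha))
    for n in (100, 10_000, 1_000_000):
        m = round(alpha * n)
        # L_{N_n}(s/n) versus the limiting Exp((4 alpha (1 - alpha))^{-1}) transform.
        print(n, laplace_bipartite(s / n, n, m), exp_laplace(s, rate))
```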

If \(m=m(n)\uparrow + \infty \) and \(m=o(n)\), then

$$\begin{aligned} {{\mathcal {L}}}_{N_n}(s)= \frac{\frac{1}{2} \left( \frac{n}{m(n-m)} \right) e^{-s}}{ 1- e^{-2s} + \frac{1}{2}\left( \frac{n}{m(n-m)} \right) e^{-2s}}=\frac{ \left( \frac{1}{1-o(1)} \right) e^{-s}}{ 2m(1- e^{-2s}) + \left( \frac{1}{1-o(1)} \right) e^{-2s}} \end{aligned}$$and therefore,

$$\begin{aligned} {{\mathcal {L}}}_{N_n}(m^{-1}s)&= \frac{\left( \frac{1}{1-o(1)} \right) \left( 1 - \frac{s}{m} + o(\frac{1}{m})\right) }{2m \left( 2\frac{s}{m} + o(\frac{1}{m})\right) + \left( \frac{1}{1-o(1)} \right) \left( 1 - \frac{2s}{m} + o(\frac{1}{m})\right) } \underset{n\uparrow \infty }{\longrightarrow } \frac{4^{-1}}{4^{-1} + s}\;, \end{aligned}$$that is, \(m^{-1}N_n \overset{D}{\longrightarrow }Exp (4^{-1})\). Theorem 2, applied with \(a_n=m^{-1}\), \(c_1=4\) and \(c_2=32\), then implies that, for \(\gamma _n = o(\lambda _n/m)\), \(\frac{c_2}{2c_1}\frac{ \gamma _n^2 }{\lambda _n m^{-1}}T_n = \frac{4m\gamma _n^2}{\lambda _n}T_n \overset{D}{\longrightarrow } \text {Exp}(1)\).

Two notable examples of this scenario are:

- (i) power law growth: \(m(n)=n^\beta \), with \(\beta \in (0,1)\);

- (ii) polylogarithmic growth: \(m(n)=\log ^\beta n\), with \(\beta >0\).
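For these two growth profiles, the same closed form can be evaluated at \(s/m\) to watch \({{\mathcal {L}}}_{N_n}(m^{-1}s)\) approach \(\frac{4^{-1}}{4^{-1}+s}\). The sketch below takes \(\beta =1/2\) for the power law and \(\beta =2\) for the polylogarithmic case, with m rounded to an integer; these exponents and the function name are illustrative assumptions. Convergence is visibly slower in the polylogarithmic case, since the error terms are of order \(1/m\).

```python
import math


def laplace_bipartite(s: float, n: int, m: int) -> float:
    """L_{N_n}(s) = p e^{-s} / (1 - e^{-2s} + p e^{-2s}) with p = n / (2 m (n - m))."""
    p = n / (2.0 * m * (n - m))
    return p * math.exp(-s) / (-math.expm1(-2.0 * s) + p * math.exp(-2.0 * s))


if __name__ == "__main__":
    s = 0.9
    target = 0.25 / (0.25 + s)  # Laplace transform of Exp(1/4) at s
    for n in (10**4, 10**8, 10**12):
        m_pow = round(n ** 0.5)          # power-law growth with beta = 1/2 (illustrative)
        m_log = round(math.log(n) ** 2)  # polylogarithmic growth with beta = 2 (illustrative)
        print(n, laplace_bipartite(s / m_pow, n, m_pow),
              laplace_bipartite(s / m_log, n, m_log), target)
```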

There are situations in which Theorem 2 cannot be applied. Specifically, given a sequence \(a_n\) going to zero, \(a_n N_n\) need not converge in distribution to a nonzero random variable. Consider, for example, the complete bipartite graph \(K_{m,n-m}\) with m constant. In this case, the Laplace transform is given by

$$\begin{aligned} {{\mathcal {L}}}_{N_n}(s)= \frac{\frac{1}{2} \left( \frac{n}{m(n-m)} \right) e^{-s}}{ 1- e^{-2s} + \frac{1}{2}\left( \frac{n}{m(n-m)} \right) e^{-2s}}\;, \end{aligned}$$

and, as m is a constant, we have that \( {{\mathcal {L}}}_{N_n}(s) \underset{n\uparrow \infty }{\longrightarrow } \frac{\frac{1}{2m} e^{-s}}{ 1- \frac{1}{2}\left( 2 - \frac{1}{m}\right) e^{-2s}} \). Hence \(N_n\) converges in distribution to a random variable with finite first and second moments, and consequently \(a_n N_n \rightarrow 0\) in probability for any sequence \(a_n \rightarrow 0\). In the next section we provide a general result that characterizes the limit behaviour of \(T_n\) in situations of this type.

5.4 General Limit Theorem II

We now present a limit theorem for a regime different from that of Theorem 2. It covers the complete bipartite graphs with constant m identified in the previous section, to which Theorem 2 does not apply.

Theorem 3

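Assume \(N_n \overset{D}{\longrightarrow }X\) as n tends to infinity, with X a random variable with finite first and second moments. Denote \(c_1 = {{\mathbb {E}}}(X)\) and \(c_2 = {{\mathbb {E}}}(X^2)\). Then, for \(\gamma _n = o(\lambda _n)\),

$$\begin{aligned} \frac{c_1+c_2}{2(1+c_1)}\frac{\gamma _n^2}{\lambda _n}T_n \overset{D}{\longrightarrow } \text {Exp}(1) \quad \text {as } n\rightarrow \infty . \end{aligned}$$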

Note that, for the complete bipartite graph with fixed m, \({{\mathcal {L}}}_{N_n}(s)\) converges to \(\frac{\frac{1}{2m} e^{-s}}{1-\frac{1}{2}\left( 2-\frac{1}{m}\right) e^{-2s}}\). Thus, \(N_n\) converges in distribution to a random variable X with \(c_1={{\mathbb {E}}}(X)=-\frac{d}{ds}{{\mathcal {L}}}_X(s)\vert _{s=0}=4m-1 \) and \(c_2={{\mathbb {E}}}(X^2)=\frac{d^2}{ds^2}{{\mathcal {L}}}_X(s)\vert _{s=0}= 16m(2m-1)+1\). Since \(c_1+c_2=4m(8m-3)\) and \(1+c_1=4m\), we have \(\frac{c_1+c_2}{2(1+c_1)}=\frac{8m-3}{2}\), so the above theorem guarantees that, in the regime \(\gamma _n = o(\lambda _n)\), the random variable \(\frac{8m -3}{2}\frac{ \gamma _n^2 }{\lambda _n}T_n \) converges in distribution to an Exp(1) random variable. In the particular case \(m=1\), which corresponds to the star graph, \(\frac{5}{2}\frac{ \gamma _n^2 }{\lambda _n}T_n \) converges in distribution to an Exp(1) random variable.
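The moments above can be double-checked from the law behind the limiting transform: \(\frac{\frac{1}{2m}e^{-s}}{1-(1-\frac{1}{2m})e^{-2s}}\) is the Laplace transform of \(X=1+2G\), where G is geometric on \(\{0,1,2,\ldots \}\) with success probability \(1/(2m)\). The sketch sums the series for \({\mathbb {E}}(X)\) and \({\mathbb {E}}(X^2)\) and evaluates the constant \(\frac{c_1+c_2}{2(1+c_1)}\) from the proof of Theorem 3; the truncation level and function names are illustrative.

```python
from typing import Tuple


def moments_by_series(m: int, kmax: int = 20_000) -> Tuple[float, float]:
    """E(X), E(X^2) for X = 1 + 2G with P(G = k) = p q^k, p = 1/(2m), q = 1 - p.

    X has Laplace transform (1/(2m)) e^{-s} / (1 - (1 - 1/(2m)) e^{-2s}).
    The series is truncated after kmax terms; the tail is negligible for small m.
    """
    p = 1.0 / (2 * m)
    q = 1.0 - p
    c1 = c2 = 0.0
    prob = p
    for k in range(kmax):
        x = 1 + 2 * k
        c1 += prob * x
        c2 += prob * x * x
        prob *= q
    return c1, c2


def theorem3_constant(c1: float, c2: float) -> float:
    """(c1 + c2) / (2 (1 + c1)), the constant in front of gamma_n^2 / lambda_n * T_n."""
    return (c1 + c2) / (2.0 * (1.0 + c1))


if __name__ == "__main__":
    for m in range(1, 6):
        c1, c2 = moments_by_series(m)
        print(m, c1, 4 * m - 1, c2, 16 * m * (2 * m - 1) + 1,
              theorem3_constant(c1, c2), (8 * m - 3) / 2)
```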

Proof of Theorem 3

The proof follows the same approach as the proof of Theorem 2 and is provided for completeness. To simplify the notation, let us define \(b_n=\frac{(c_1 + c_2)}{2(1+c_1)}\frac{ \gamma _n^2 }{\lambda _n}\). To prove the claim it suffices to show that \({{\mathcal {L}}}_{T_n}(b_n s)\underset{n \uparrow \infty }{\longrightarrow }\frac{1}{1+s}\), for every \(s>0\). The assumption \(N_n \overset{D}{\longrightarrow }X\) ensures that \({{\mathcal {L}}}_{N_n}(s) \underset{n \uparrow \infty }{\longrightarrow } {{\mathcal {L}}}_X(s)\), where, since X has finite first and second moments, \({{\mathcal {L}}}_X(s)=1 - c_1 s + \frac{c_2}{2} s^2 + o(s^2)\) as \(s\rightarrow 0\). From the latter, using Eq. (10), we obtain a limit theorem for \(M_n\), i.e.,

Using the Taylor expansions for \({{\mathcal {L}}}_X\) and for \(\log \), we can write

Thus,

Similarly, \({{\mathcal {L}}}_{M_n}\left( b_n s+2\gamma _n\right) = 1 - c_1 \left( \frac{b_n s +2\gamma _n}{2\lambda _n } \right) + \frac{c_1+c_2}{2} \left( \frac{b_n s+2\gamma _n}{2\lambda _n } \right) ^2 + o\left( \left( \frac{\gamma _n }{\lambda _n } \right) ^2\right) \). Therefore, the numerator in Eq. (4) can be written as

Let us now look at the denominator:

Overall, recalling that \(b_n= \frac{(c_1+c_2)\gamma _n^2}{2(1+c_1)\lambda _n }\), we have

\(\square \)

5.5 Ring

In this section, we study the behaviour of \(T_n\) on the ring \(C_n\) on n vertices. In the sequel, we shall assume that n is even; this is not crucial, and the case of odd n, albeit slightly different, can be handled similarly.

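Following the approach used in the previous sections, we first need to understand the number of steps two walkers need to meet when they start at distance one. To this end, for \(i=1, \ldots , \frac{n}{2}\), let \(N_{n,i}\) be the number of steps two random walks need to meet starting at distance i on the ring on n vertices; we set \(N_{n,0}=0\). At each step the distance between the walkers increases or decreases by one with equal probability, except at the maximal distance \(\frac{n}{2}\), from which it can only decrease. Hence the following recursion holds

$$\begin{aligned} N_{n,i} \overset{D}{=} 1 + {\left\{ \begin{array}{ll} N_{n,i-1} & \text {with probability } \frac{1}{2},\\ N_{n,i+1} & \text {with probability } \frac{1}{2}, \end{array}\right. } \quad 1\le i\le \frac{n}{2}-1, \qquad N_{n,\frac{n}{2}} \overset{D}{=} 1 + N_{n,\frac{n}{2}-1}. \end{aligned}\qquad (12)$$

To simplify the notation let us set \({{\mathcal {L}}}_i(s)={{\mathcal {L}}}_{N_{n,i}}(s)\) to denote the Laplace transform of \(N_{n,i}\), with \({{\mathcal {L}}}_0(s)=1\), and \(\alpha =e^{-s}/2\). Using Eq. (12) we obtain the following recursion for \({{\mathcal {L}}}_i\)

$$\begin{aligned} {{\mathcal {L}}}_i(s) = \alpha \big ( {{\mathcal {L}}}_{i-1}(s) + {{\mathcal {L}}}_{i+1}(s)\big ), \quad 1\le i\le \frac{n}{2}-1, \qquad {{\mathcal {L}}}_{\frac{n}{2}}(s) = 2\alpha \, {{\mathcal {L}}}_{\frac{n}{2}-1}(s). \end{aligned}$$

Recall that we are interested in \({{\mathcal {L}}}_1(s)\), i.e., the Laplace transform of the number of steps two walkers need to meet starting at distance one. Solving the latter recursion (see Appendix for details) we obtain

$$\begin{aligned} {{\mathcal {L}}}_1(s) = \alpha \, \frac{x_1^{\frac{n}{2}-1} + x_2^{\frac{n}{2}-1}}{x_1^{\frac{n}{2}} + x_2^{\frac{n}{2}}}\;, \end{aligned}\qquad (13)$$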

where \(x_1= \frac{1 + \sqrt{1-4\alpha ^2}}{2}\) and \(x_2= \frac{1 - \sqrt{1-4\alpha ^2}}{2}\).
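The closed form can be cross-checked against the recursion it solves. The sketch below assumes that the ratios \(C_i={{\mathcal {L}}}_i(s)/{{\mathcal {L}}}_{i-1}(s)\) used in the Appendix satisfy \(C_{\frac{n}{2}}=2\alpha \) and \(C_i=\alpha /(1-\alpha C_{i+1})\); this reproduces the values \(C_{\frac{n}{2}}=2\alpha \) and \(C_{\frac{n}{2}-1}=\alpha /(1-2\alpha ^2)\) quoted there. It then compares \(C_1\) with \(\alpha \,\frac{x_1^{n/2-1}+x_2^{n/2-1}}{x_1^{n/2}+x_2^{n/2}}\), our reading of Eq. (13). Function names are hypothetical.

```python
import math


def laplace_ring_recursion(s: float, n: int) -> float:
    """L_1(s) on the ring C_n (n even) from C_{n/2} = 2 alpha, C_i = alpha / (1 - alpha C_{i+1})."""
    alpha = math.exp(-s) / 2.0
    c = 2.0 * alpha                  # C_{n/2}
    for _ in range(n // 2 - 1):      # successively C_{n/2-1}, ..., C_1
        c = alpha / (1.0 - alpha * c)
    return c                         # L_1(s) = C_1 because L_0(s) = 1


def laplace_ring_closed_form(s: float, n: int) -> float:
    """alpha (x1^{n/2-1} + x2^{n/2-1}) / (x1^{n/2} + x2^{n/2}), x_{1,2} = (1 +/- sqrt(1 - 4 alpha^2)) / 2."""
    alpha = math.exp(-s) / 2.0
    root = math.sqrt(-math.expm1(-2.0 * s))  # 1 - 4 alpha^2 = 1 - e^{-2s}
    x1, x2 = (1.0 + root) / 2.0, (1.0 - root) / 2.0
    half = n // 2
    return alpha * (x1 ** (half - 1) + x2 ** (half - 1)) / (x1 ** half + x2 ** half)


if __name__ == "__main__":
    for n in (2, 4, 10, 50):
        for s in (0.01, 0.5, 2.0):
            print(n, s, laplace_ring_recursion(s, n), laplace_ring_closed_form(s, n))
```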

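From Eq. (13), two observations can be made. Since \(x_1>x_2\) for \(s>0\), letting n tend to infinity in Eq. (13) gives \({{\mathcal {L}}}_1(s)\rightarrow \frac{\alpha }{x_1}=\frac{1-\sqrt{1-e^{-2s}}}{e^{-s}}\), and therefore:

- (i) \(a_n N_{n,1} \overset{{\mathbb {P}}}{\longrightarrow } 0\) for every sequence \(a_n \rightarrow 0\), which implies that Theorem 2 cannot be applied;

- (ii) \(N_{n,1}\overset{D}{\longrightarrow }X\) with \({\mathbb {E}}(X)=+\infty \), which implies that Theorem 3 cannot be applied.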

Remark 4

The second observation is expected: as n grows, \(N_{n,1}\) should converge to the hitting time of zero by a simple symmetric random walk on the integers started at 1, which is well known to have infinite expectation.
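Remark 4 can be illustrated numerically. The sketch compares \({{\mathcal {L}}}_1(s)\) on a large ring, computed from \(\alpha \,\frac{x_1^{n/2-1}+x_2^{n/2-1}}{x_1^{n/2}+x_2^{n/2}}\) (our reading of Eq. (13)), with \(\frac{1-\sqrt{1-e^{-2s}}}{e^{-s}}\), the Laplace transform of the hitting time of 0 by a simple symmetric random walk on the integers started at 1. It also computes \({\mathbb {E}}(N_{n,1})\) by first-step analysis of the distance chain on \(\{0,\ldots ,\frac{n}{2}\}\), which gives \(n-1\): finite for each n, but unbounded as n grows. Function names are hypothetical.

```python
import math


def laplace_ring(s: float, n: int) -> float:
    """L_1(s) on C_n (n even): alpha (x1^{n/2-1} + x2^{n/2-1}) / (x1^{n/2} + x2^{n/2})."""
    alpha = math.exp(-s) / 2.0
    root = math.sqrt(-math.expm1(-2.0 * s))  # sqrt(1 - 4 alpha^2)
    x1, x2 = (1.0 + root) / 2.0, (1.0 - root) / 2.0
    half = n // 2
    return alpha * (x1 ** (half - 1) + x2 ** (half - 1)) / (x1 ** half + x2 ** half)


def laplace_srw_first_passage(s: float) -> float:
    """E[exp(-s tau)], tau = first time a simple symmetric random walk on Z started at 1 hits 0."""
    z = math.exp(-s)
    return (1.0 - math.sqrt(1.0 - z * z)) / z


def mean_steps_ring(n: int) -> int:
    """E(N_{n,1}) by first-step analysis of the distance chain on {0, ..., n/2}.

    Writing d_i = h_i - h_{i-1} for the expected hitting times h_i of 0:
    the reflection at n/2 gives d_{n/2} = 1, and the interior equations
    h_i = 1 + (h_{i-1} + h_{i+1}) / 2 give d_i = d_{i+1} + 2. Then h_1 = d_1.
    """
    d = 1
    for _ in range(n // 2 - 1):
        d += 2
    return d


if __name__ == "__main__":
    for n in (10, 100, 400):
        print(n, laplace_ring(0.5, n), laplace_srw_first_passage(0.5), mean_steps_ring(n))
```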

In order to study the asymptotics of \(T_n\) on the ring, we therefore resort to Theorem 1 and obtain

Let us restrict to the regime \(\gamma _n = o(\lambda _n)\) and compute \({{\mathcal {L}}}_{T_n}(\gamma _n s)\). First we observe that

Thus,

The latter tells us that \(\gamma _n T_n \overset{D}{\longrightarrow } X\), where X is a random variable such that \({{\mathcal {L}}}_X(s)= \frac{2(\sqrt{2+s} - \sqrt{1+s})}{\sqrt{1+s} \sqrt{2+s}(2\sqrt{1+s} - \sqrt{2+s})}\).
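A quick numerical look at this limit: the sketch checks that \({{\mathcal {L}}}_X(0)=1\) (so X has no mass at infinity) and that \({{\mathcal {L}}}_X\) decreases on a grid of points, and it estimates \({\mathbb {E}}(X)=-{{\mathcal {L}}}_X'(0)\) by a central difference. The transform is smooth for \(s>-2/3\), where \(2\sqrt{1+s}>\sqrt{2+s}\), so evaluating it at \(-h\) is legitimate. The step size, grid and function names are illustrative choices.

```python
import math


def laplace_ring_limit(s: float) -> float:
    """L_X(s) = 2 (sqrt(2+s) - sqrt(1+s)) / (sqrt(1+s) sqrt(2+s) (2 sqrt(1+s) - sqrt(2+s)))."""
    a, b = math.sqrt(1.0 + s), math.sqrt(2.0 + s)
    return 2.0 * (b - a) / (a * b * (2.0 * a - b))


def mean_by_central_difference(f, h: float = 1e-5) -> float:
    """Estimate E(X) = -L_X'(0) with a central difference around s = 0."""
    return (f(-h) - f(h)) / (2.0 * h)


if __name__ == "__main__":
    grid = [0.0, 0.1, 0.5, 1.0, 5.0, 50.0]
    values = [laplace_ring_limit(s) for s in grid]
    print(values)  # starts at 1 and decreases along the grid
    print(mean_by_central_difference(laplace_ring_limit))
```

Differentiating the logarithm of the denominator by hand gives \({\mathbb {E}}(X)=\frac{3}{2}+\frac{\sqrt{2}}{2}\approx 2.207\), which the numerical estimate should reproduce; this value is our own computation from the displayed transform, not a statement from the paper.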

6 Final Remarks

Epidemics on networks driven by mobile agents serve as a fundamental model for a variety of contagious processes, with applications in various domains. In the SIS epidemic model, agents alternate between being susceptible and infected, and a susceptible agent becomes infected when it meets an infected agent at a network node. A fundamental statistic is the duration of the epidemic (on a finite graph, all agents are eventually susceptible at the same time, after which no further infection can occur). This model is challenging to analyze due to the dependence between the epidemic process and agent mobility. When agent mobility is agnostic to the epidemic process (e.g., agents perform independent random walks, the scenario tackled in this work), the theoretical analysis is more manageable.

Indeed, by considering edge-transitive graphs and two agents, this work establishes a strong result that separates the epidemic process from the meeting process. In particular, Theorem 1 determines the Laplace transform of the end of epidemic (EoE) time as a function of the Laplace transform of the meeting times. Note that the latter depends only on the network structure. The second contribution is the characterization of the EoE for graph sequences of increasing size (Theorems 2 and 3). While the EoE is finite on every finite graph, under a proper scaling of the model parameters it can be arbitrarily long (and even diverge as the graph size grows). Interestingly, the scaling that produces this phenomenon strongly depends on the graph structure. This finding highlights a possible phase transition between very short and very long (expected) EoE, a phenomenon that has been studied rigorously in a related model [12, 27].

While this work focused on two agents, a natural next step is the characterization of EoE as a function of the number of agents. Indeed, recent works on a related model have shown that the density of agents (in infinite lattices of fixed dimension) plays a fundamental role in the EoE [12, 27]. Although the approach taken in this work does not extend trivially to three agents, a mean-field approach could be developed for finite graphs with a sufficiently large number of agents. In fact, we conjecture that with a large enough number of agents, the EoE will be similar to the EoE in the classic network epidemic model, where network nodes have epidemic states. Such a result would establish an important relationship between apparently different models, furthering our understanding of network epidemics.

References

[1] Pastor-Satorras, R., Castellano, C., Van Mieghem, P., Vespignani, A.: Epidemic processes in complex networks. Rev. Mod. Phys. 87(3), 925 (2015)

[2] Draief, M., Massoulié, L.: Epidemics and Rumours in Complex Networks. Cambridge University Press, Cambridge (2010)

[3] Barabási, A.L.: Network Science. Cambridge University Press, Cambridge (2016)

[4] Pastor-Satorras, R., Vespignani, A.: Epidemic spreading in scale-free networks. Phys. Rev. Lett. 86(14), 3200 (2001)

[5] Ganesh, A., Massoulié, L., Towsley, D.: The effect of network topology on the spread of epidemics. In: Proceedings IEEE 24th Annual Joint Conference of the IEEE Computer and Communications Societies, vol. 2 (IEEE, 2005), pp. 1455–1466

[6] Moez, D.: Epidemic processes on complex networks: the effect of topology on the spread of epidemics. Physica A 363(1), 120 (2006)

[7] Newman, M.E.: Spread of epidemic disease on networks. Phys. Rev. E 66(1), 016128 (2002)

[8] Masuda, N., Holme, P.: Introduction to temporal network epidemiology. In: Temporal Network Epidemiology. Springer, New York, pp. 1–16 (2017)

[9] Tunc, I., Shkarayev, M.S., Shaw, L.B.: Epidemics in adaptive social networks with temporary link deactivation. J. Stat. Phys. 151(1–2), 355 (2013)

[10] Valdano, E., Ferreri, L., Poletto, C., Colizza, V.: Analytical computation of the epidemic threshold on temporal networks. Phys. Rev. X 5(2), 021005 (2015)

[11] Datta, N., Dorlas, T.C.: Random walks on a complete graph: a model for infection. J. Appl. Probab. 41(4), 1008 (2004)

[12] Dickman, R., Rolla, L.T., Sidoravicius, V.: Activated random walkers: facts, conjectures and challenges. J. Stat. Phys. 138(1–3), 126 (2010)

[13] Draief, M., Ganesh, A.: A random walk model for infection on graphs: spread of epidemics & rumours with mobile agents. Discret. Event Dyn. Syst. 21(1), 41 (2011)

[14] Abdullah, M., Cooper, C., Draief, M.: Viral processes by random walks on random regular graphs. In: Approximation, Randomization, and Combinatorial Optimization. Springer, New York, pp. 351–364 (2011)

[15] Benjamini, I., Fontes, L.R., Hermon, J., Machado, F.P.: On an epidemic model on finite graphs. arXiv preprint arXiv:1610.04301 (2016)

[16] Kesten, H., Sidoravicius, V.: The spread of a rumor or infection in a moving population. Ann. Probab. 2402–2462 (2005)

[17] Nagatani, T., Ichinose, G., Tainaka, K.-i.: Epidemics of random walkers in metapopulation model for complete, cycle, and star graphs. J. Theor. Biol. 450, 66 (2018)

[18] Kelker, D.: A random walk epidemic simulation. J. Am. Stat. Assoc. 68(344), 821 (1973)

[19] Harris, T.E.: Contact interactions on a lattice. Ann. Probab. 969–988 (1974)

[20] Durrett, R.: Ten lectures on particle systems. In: Lectures on Probability Theory, Springer, New York, pp. 97–201 (1995)

[21] Liggett, T.M.: Stochastic Interacting Systems: Contact, Voter and Exclusion Processes, vol. 324. Springer, New York (2013)

[22] Pemantle, R.: The contact process on trees. Ann. Probab. 2089–2116 (1992)

[23] Alves, O., Machado, F., Popov, S.: Phase transition for the frog model. Electron. J. Probab. 7 (2002)

[24] Kesten, H., Sidoravicius, V.: A shape theorem for the spread of an infection. Ann. Math. 701–766 (2008)

[25] Benjamini, I., Fontes, L.R., Hermon, J., Machado, F.P.: On an epidemic model on finite graphs. arXiv preprint arXiv:1610.04301 (2018)

[26] Hermon, J.: Frogs on trees? Electron. J. Probab. 23 (2018)

[27] Sidoravicius, V., Teixeira, A.: Absorbing-state transition for stochastic sandpiles and activated random walks. Electron. J. Probab. 22 (2017)

[28] Kesten, H., Sidoravicius, V., et al.: A phase transition in a model for the spread of an infection. Ill. J. Math. 50(1–4), 547 (2006)

[29] Coppersmith, D., Tetali, P., Winkler, P.: Collisions among random walks on a graph. SIAM J. Discret. Math. 6(3), 363 (1993)

[30] Aldous, D., Fill, J.: Reversible Markov chains and random walks on graphs. In: Reversible Markov Chains and Random Walks on Graphs (2002)

[31] Ohwa, T.: Exact computation for meeting times and infection times of random walks on graphs. Pac. J. Math. Ind. 7(1), 5 (2015)


Additional information

Communicated by Eric A. Carlen.


Appendix

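Here we solve the recursion

$$\begin{aligned} {{\mathcal {L}}}_i(s) = \alpha \big ( {{\mathcal {L}}}_{i-1}(s) + {{\mathcal {L}}}_{i+1}(s)\big ), \quad 1\le i\le \frac{n}{2}-1, \qquad {{\mathcal {L}}}_{\frac{n}{2}}(s) = 2\alpha \, {{\mathcal {L}}}_{\frac{n}{2}-1}(s), \qquad {{\mathcal {L}}}_0(s)=1. \end{aligned}$$

The latter can be rewritten in the following form

$$\begin{aligned} {{\mathcal {L}}}_i(s) = C_i \, {{\mathcal {L}}}_{i-1}(s), \quad 1\le i\le \frac{n}{2}, \end{aligned}$$

where, for instance, \(C_{\frac{n}{2}}=2\alpha \) and \(C_{\frac{n}{2}-1}= \frac{\alpha }{1-2\alpha ^2}\). Note that, given that \({{\mathcal {L}}}_0(s)=1\), we have \({{\mathcal {L}}}_1(s)=C_1\). Thus, the problem of finding the Laplace transform of \(N_{n,1}\) reduces to computing \(C_1\). Substituting \({{\mathcal {L}}}_{i+1}(s)=C_{i+1}{{\mathcal {L}}}_i(s)\) into the recursion shows that the coefficients \(C_i\) satisfy

$$\begin{aligned} C_{\frac{n}{2}} = 2\alpha , \qquad C_i = \frac{\alpha }{1-\alpha C_{i+1}}, \quad 1\le i\le \frac{n}{2}-1. \end{aligned}\qquad (14)$$

In order to simplify the analysis, for every \(j=0, \ldots , \frac{n}{2}-1\), we write

$$\begin{aligned} C_{\frac{n}{2}-j} = \alpha \, \frac{P_j(\alpha )}{Q_j(\alpha )}, \end{aligned}$$

where \(P_j(\alpha )\) and \(Q_j(\alpha )\) are polynomials in \(\alpha \). Using the fact that \(C_{\frac{n}{2}}=2\alpha \), we find that \(P_0(\alpha )=2\) and \(Q_0(\alpha )=1\), while using Eq. (14) we find that for every \(j=1, \ldots , \frac{n}{2}-1\)

$$\begin{aligned} P_j(\alpha ) = Q_{j-1}(\alpha ), \qquad Q_j(\alpha ) = Q_{j-1}(\alpha ) - \alpha ^2 P_{j-1}(\alpha ), \end{aligned}$$

which gives the following second order recurrence relation for \(Q_j(\alpha )\)

$$\begin{aligned} Q_j(\alpha ) = Q_{j-1}(\alpha ) - \alpha ^2 Q_{j-2}(\alpha ), \quad 2\le j\le \frac{n}{2}-1. \end{aligned}$$

Consider the characteristic equation of the second order recurrence relation for \(Q_j\), i.e.,

$$\begin{aligned} x^2 - x + \alpha ^2 = 0, \end{aligned}$$

whose solutions are \(x_1= \frac{1 + \sqrt{1-4\alpha ^2}}{2}\) and \(x_2= \frac{1 - \sqrt{1-4\alpha ^2}}{2}\). Since \(x_1\ne x_2\) for \(s>0\), \(Q_j(\alpha )\) satisfies

$$\begin{aligned} Q_j(\alpha ) = A\, x_1^j + B\, x_2^j, \end{aligned}$$

where A and B can be computed using the initial conditions \(Q_0(\alpha )=1\) and \(Q_1(\alpha )=1-2\alpha ^2\). Specifically, since \(x_1+x_2=1\) and \(x_1^2+x_2^2=(x_1+x_2)^2-2x_1x_2=1-2\alpha ^2\), we obtain \(A=x_1\) and \(B=x_2\). Overall, we have that for every \(j=0, \ldots , \frac{n}{2}-1\)

$$\begin{aligned} Q_j(\alpha ) = x_1^{j+1} + x_2^{j+1}, \end{aligned}$$

and

$$\begin{aligned} {{\mathcal {L}}}_1(s) = C_1 = \alpha \, \frac{P_{\frac{n}{2}-1}(\alpha )}{Q_{\frac{n}{2}-1}(\alpha )} = \alpha \, \frac{x_1^{\frac{n}{2}-1} + x_2^{\frac{n}{2}-1}}{x_1^{\frac{n}{2}} + x_2^{\frac{n}{2}}}, \end{aligned}$$

where we used \(P_{\frac{n}{2}-1}=Q_{\frac{n}{2}-2}\) for \(n\ge 4\) (for \(n=2\), \(P_0=2=x_1^0+x_2^0\)). This is Eq. (13).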
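The closed form for \(Q_j\) can be checked against the second-order recursion. The sketch assumes the recursion \(Q_0=1\), \(Q_1=1-2\alpha ^2\), \(Q_j=Q_{j-1}-\alpha ^2Q_{j-2}\) for \(j\ge 2\), whose characteristic equation \(x^2-x+\alpha ^2=0\) has exactly the roots \(x_1,x_2\) given in the text, and compares it with \(x_1^{j+1}+x_2^{j+1}\) for a few values of \(\alpha \in (0,\frac{1}{2}]\). At \(\alpha =\frac{1}{2}\) (i.e., \(s=0\)) the two roots coincide and both sides reduce to \(2^{-j}\). Function names are hypothetical.

```python
import math
from typing import List


def q_by_recurrence(alpha: float, jmax: int) -> List[float]:
    """Q_0 = 1, Q_1 = 1 - 2 alpha^2, Q_j = Q_{j-1} - alpha^2 Q_{j-2} for j >= 2."""
    q = [1.0, 1.0 - 2.0 * alpha ** 2]
    for _ in range(2, jmax + 1):
        q.append(q[-1] - alpha ** 2 * q[-2])
    return q[: jmax + 1]


def q_closed_form(alpha: float, j: int) -> float:
    """Q_j = x1^{j+1} + x2^{j+1}, with x_{1,2} = (1 +/- sqrt(1 - 4 alpha^2)) / 2."""
    root = math.sqrt(1.0 - 4.0 * alpha ** 2)
    x1, x2 = (1.0 + root) / 2.0, (1.0 - root) / 2.0
    return x1 ** (j + 1) + x2 ** (j + 1)


if __name__ == "__main__":
    for alpha in (0.1, 0.3, 0.45, 0.5):
        rec = q_by_recurrence(alpha, 10)
        print(alpha, [round(rec[j] - q_closed_form(alpha, j), 15) for j in range(11)])
```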

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Figueiredo, D., Iacobelli, G. & Shneer, S. The End Time of SIS Epidemics Driven by Random Walks on Edge-Transitive Graphs. J Stat Phys 179, 651–671 (2020). https://doi.org/10.1007/s10955-020-02547-7


DOI: https://doi.org/10.1007/s10955-020-02547-7