Abstract

We consider a single-server GI / GI / 1 queueing system with feedback. We assume the service time distribution to be (intermediate) regularly varying. We find the tail asymptotics for a customer’s sojourn time in two cases: the customer arrives in an empty system, and the customer arrives in the system in the stationary regime. In particular, in the case of Poisson input we obtain more explicit formulae than those in the general case. As auxiliary results, we find the tail asymptotics for the busy period distribution in a single-server queue with an intermediate varying service times distribution and establish the principle-of-a-single-big-jump equivalences that characterise the asymptotics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In queueing theory, the sojourn time U of a customer in a queueing system is one of important characteristics, this is the time from its arrival instant to departure instant. In general, the distribution of U is hard to find analytically, and research interest is directed to the asymptotics of the tail probability, \(\mathbb {P}(U > x)\), as \(x \rightarrow \infty \) under various stochastic assumptions. Among them, the following assumption is typically used.

-

(1)

Delaying arrivals leads to increase of the characteristic.

The assumption (1) is known as a monotonicity property, it plays an important role in the asymptotic analysis of various characteristics. Another important factor for the tail asymptotic problem is the heaviness of service time distributions. A nonnegative random variable X is said to have a heavy tail distribution if \(\mathbb {E} \exp (sX) = \infty \) for all \(s > 0\), and a light tail distribution, otherwise. If service time distributions have heavy tails (or light tails), we talk about a heavy (or light) tail regime of the system.

Under the heavy tail regime, the sojourn time problem is relatively well studied for the system satisfying the monotonicity assumption (1) (see e.g. [2, 4, 5, 11] and references therein). However, if a customer separately takes multiple services, then the monotonicity is violated, in general. This is a common phenomenon in queueing networks. Clearly, non-monotone characteristics are also important, but we are unaware of any asymptotic results for them in the presence of heavy tails. So we challenge the tail asymptotics problem of a non-monotone characteristic under the heavy tail regime, using a relatively simple model as one of the first attempts.

We consider the following single server system. Exogenous customers arrive at the system subject to i.i.d. inter-arrival times \(\{t_n\}\) with finite mean a, join at the end of a queue, and are served in the first-in first-out order by a single server with i.i.d. service times \(\{\sigma _n\}\). When a customer completes service, it returns to the end of the queue for another service, with probability \(p\in (0,1)\), or leaves the system, with probability \(q=1-p\). Both transitions are independent of everything else. Note that if a customer requires more than one service, its sojourn time is the sum of several periods of waiting in queue with following service. One can see that the sojourn time U does not have natural monotonicity properties with respect to inter-arrival times. We refer this system as a GI / GI / 1 feedback queue. If \(p=0\) (no customer returns), then it is reduced to a standard GI / GI / 1 queue (e.g., see [1]).

Because of independent feedback of customers completing service, the ith arriving customer, customer i for short, requires a geometric number of services, say \(K_i\), and their total duration is \(\sum _{j=1}^{K_i} \sigma _i^{(j)}\) where \(\{\sigma _i^{(j)}\}\) are i.i.d. with finite mean b and do not depend on \(K_{i}\). Throughout the paper, we assume that the system is stable, that is, \(\rho \equiv \lambda b/q < 1\), where \(\lambda = 1/a\), and the service-time distribution is heavy-tailed. More precisely, we assume the distribution to be intermediate regularly varying—see the list of heavy-tailed distributions at the end of this section and in Appendix A.

For this GI / GI / 1 feedback queue, we derive the asymptotics of the probability \(\mathbb {P}(U>x)\) for the sojourn time U of customer 1 as \(x\rightarrow \infty \) under the heavy tail regime. This U depends on the state of the system just before the arrival instant of customer 1. We consider two scenarios for the system state found by customer 1. The first one is that customer 1 enters an empty queue. It is the most difficult because coefficients appearing in the asymptotics are sensitive not only to the first moments, but to the whole distribution of inter-arrival and service times, in general. The second one is that customer 1 enters a stationary queue. In both cases, we also obtain simpler formulae in the case when the input is Poisson. In particular, the first moment of the sojourn time can be obtained in a closed form in this special case. A part of this information will be used for the general renewal arrival case.

Our model may be considered as a particular case of a two-server generalised Jackson network where server \(i=1,2\) has service times \(\{\sigma _n^{(i)}\}\). Each family of service times is i.i.d. and they are mutually independent. Customers arrive in a renewal input to server 1 and join the queue there. After service completion at server 1, a customer either leaves the network, with probability \(p_{10}\), or joins the queue to the second server, with probability \(p_{12}=1-p_{10}\). Similarly, after service completion at server 2, a customer either leaves the network, with probability \(p_{20}\), or joins the queue to server 1, with probability \(p_{21}=1-p_{20}\). Customers are server in the order of their (external and internal) arrival to the servers.

If we let \(\sigma _n^{(2)}\equiv 0\) and \(p_{12}p_{21}=p\), we obtain our model as a particular case indeed. So the study of our model is not only of interest itself, but also opens a window to analysing a broad class of more general models.

In the GI / GI / 1 feedback queue, one may change the service order in such a way that each customer continuously gets service without interruption when it completes service and returns to the queue. Then such a system is nothing else than the standard GI / GI / 1 queue with “new” i.i.d. service times \(\sum _{j=1}^{K_i} \sigma _i^{(j)}\), and the sojourn time is again the sum of the waiting time and of the (new) service time. Although the busy period, which is the time from the moment when the system becomes non-empty to the moment when it is again empty, is unchanged by this modification, the sojourn time does change. We will use this modified system to study the tail asymptotic of the busy period.

Thus, our analysis is connected to the standard GI / GI / 1 queue. In this case, there is no feedback, and the monotonicity is satisfied. Hence, the waiting time of a tagged customer is a key characteristic because the sojourn time U is the sum of the waiting and service times, which are independent. In particular, the stationary waiting time is a major target for the tail asymptotic analysis. Let \(u_{0}\) be the unfinished work found by the “initial” customer 1 that arrives at the system at time 0, and let \(W_{n}\) be the waiting times of the nth arriving customer. Then, \(W_{1} = u_{0}\) and we have the Lindley recursion:

We assume both inter-arrival and service times to have finite means, \(a=\mathbb {E}t_n\) and \(b=\mathbb {E}\sigma _n\). Here \(W_n\) forms a Markov chain which is stable (i.e. converges in distribution to the limiting/stationary random variable \(W=W_{\infty }\)) if the traffic intensity \(\rho :=b/a \) is less than 1. It is well-known (see e.g. [1]) that if \(u_0=0\), then W coincides in distribution with the supremum \(M=\sup _{n\ge 0} \sum _{i=1}^n (\sigma _n-t_n)\) of a random walk with increments \(\sigma _n-t_n\).

The tail asymptotics for \(\mathbb {P}(M>x)\) as \(x\rightarrow \infty \) is known under the light-tail and heavy-tail regimes. In the case of light tails, there are three types of the tail asymptotics, depending on properties of the moment generating function \(\varphi (s) = \mathbb {E} \exp (s\sigma )\) – see e.g. [6] and references therein. In the case of heavy tails, the tail asymptotics are known in the class of so-called subexponential distributions and are based on the principle of a single big jump (PSBJ): M takes a large value if one of the service times is large. This PSBJ has been used for the asymptotic analysis in several other (relatively simple) stable queueing models, for a number of characteristics (waiting time, sojourn time, queue length, busy cycle/period, maximal data, etc.) that possess the monotonicity property (1) (see e.g. [2]).

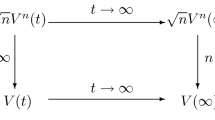

Our proofs rely on the tail asymptotics for the first and stationary busy periods of the system. We establish the PSBJ for the busy period first. This allows us to establish the principle for the sojourn time since the tail distribution asymptotics of the busy period is of the same order with that of the sojourn time. Then insensitivity properties of the intermediate varying distributions (see Appendix A again) allow us to compute the exact tail asymptotics for the sojourn time. The main result from [7] is a key tool in our analysis.

The paper is organised as follows. Section 2 formally introduces the model and presents main results. Section 3 states the tail asymptotic of the busy period and the PSBJ. All theorems from Sects. 2 and 3 are proved in Sect. 4. The Appendix consists of three parts. Part A contains an overview on basic properties of heavy-tailed distributions and part B the proof of Corollary 3.1. In part C, we propose an alternative approach to the proof of Corollary 3.2.

Throughout the paper, we use the following notation: \(1(\cdot )\) is the indicator function of the event “\(\cdot \)”. For two positive functions f and g, we write \(f(x)\sim g(x)\) if \(f(x)/g(x)\rightarrow 1\) as \(x\rightarrow \infty \), \(f(x) \gtrsim g(x)\) if \(\liminf _{x\rightarrow \infty } f(x)/g(x) \ge 1\) and \(f(x)\lesssim g(x)\) if \(\limsup _{x\rightarrow \infty } f(x)/g(x) \le 1\). For a distribution function F, its tail \(\overline{F}\) is defined as

For random variables X, Y with distributions F, G, respectively, \(X =_{st} Y\) if \(F = G\), and \(X \le _{st} Y\) if \(\overline{F}(x) \le \overline{G}(x)\) for all real x. Two families of events \(A_x\) and \(B_x\) of non-zero probabilities are equivalent, \(A_x \simeq B_x\), if

where \(A_x\Delta B_x= (A_x\setminus B_x)\cup (B_x\setminus A_x)\) is the symmetric difference of \(A_x\) and \(B_x\). Note that equivalence \(A_x\simeq B_x\) is symmetric, since

Note also that \(A_x\simeq B_x\) is stronger than equivalence \(\mathbb {P}(A_{x}) \sim \mathbb {P}(B_{x})\).

We complete the Introduction by a short

Summary of main classes of heavy tail distributions

In this paper, we are concerned with several classes of heavy tail distributions. We list their definitions below. Their basic properties are discussed in Appendix A.

In all definitions below, we assume that \(\overline{F}(x)>0\) for all x.

-

1.

Distribution F on the real line belongs to the class \(\mathcal{L}\) of long-tailed distributions if, for some \(y>0\) and as \(x\rightarrow \infty \),

$$\begin{aligned} \frac{\overline{F}(x+y)}{\overline{F}(x)} \rightarrow 1 \end{aligned}$$(1.3)(we may write equivalently \(\overline{F}(x+y) \sim \overline{F}(x)\)).

-

2.

Distribution F on the positive half-line belongs to the class \(\mathcal{S}\) of subexponential distributions if

$$\begin{aligned} \int _0^x F(dt) \overline{F}(x-t) \sim \overline{F}(x) \quad \text{ as } \quad x\rightarrow \infty , \end{aligned}$$which is equivalent to \(\int _{x}^{\infty } F(dt) F(x-t) \sim 2 \overline{F}(x)\). Distribution F of a real-valued random variable \(\xi \) is subexponential if distribution \(F^+(x) = F(x) 1(x\ge 0)\) of random variable \(\xi ^+ =\max (0,\xi )\) is subexponential.

-

3.

Distribution F on the real line belongs to the class \(\mathcal{S}^{*}\) of strong subexponential distributions if \(m^+(F) \equiv \int _{0}^{\infty } \overline{F}(x) dx\) is finite and

$$\begin{aligned} \int _0^x \overline{F}(y) \overline{F}(x-y) dy \sim 2m^+(F) \overline{F}(x) \quad \text{ as } \quad x\rightarrow \infty . \end{aligned}$$ -

4.

Distribution F on the real line belongs to the class \(\mathcal{D}\) of dominantly varying distributions if there exists \(\alpha >1\) (or, equivalently, for all \(\alpha >1\)) such that

$$\begin{aligned} \liminf _{x\rightarrow \infty } \frac{\overline{F}(\alpha x)}{\overline{F}(x)} > 0 \end{aligned}$$(1.4) -

5.

Distribution F on the real line belongs to the class \(\mathcal{IRV}\) of intermediate regularly varying distributions if

$$\begin{aligned} \lim _{\alpha \downarrow 1} \liminf _{x\rightarrow \infty } \frac{\overline{F}(\alpha x)}{ \overline{F}(x)} = 1. \end{aligned}$$(1.5) -

6.

Distribution F on the real line belongs to the class \(\mathcal{RV}\) of regularly varying distributions if, for some \(\beta >0\),

$$\begin{aligned} \overline{F}(x) = x^{-\beta } L(x), \end{aligned}$$(1.6)where L(x) is a slowly varying function, i.e. \(L(cx)\sim L(x)\) as \(x\rightarrow \infty \), for any \(c>0\).

The following relations between the classes introduced above may be found, say, in the books [3] or [9]: in the class of distributions F with finite \(m^+(F)\),

2 The Modelling Assumptions and Main Results

In this section, we describe the dynamics of the sojourn time of a tagged customer (customer 1), and present main results on the tail asymptotics of its sojourn time. In Sect. 2.1, we formally introduce the GI / GI / 1 feedback queue and then, in Sect. 2.2, a particular M / GI / 1 feedback queue, which has Poisson arrivals. Then the main results are presented in Sect. 2.3.

2.1 GI / GI / 1 Feedback Queue

Let K be the number of services of the tagged customer until its departure. By the feedback assumption, K is geometrically distributed with parameter p, that is,

and independent of everything else. Throughout the paper, we make the following assumptions:

-

(i)

The exogenous arrival process is a renewal process with a finite mean interarrival time \(a > 0\).

-

(ii)

All the service times that start after time 0 are i.i.d. with finite mean \(b > 0\), they are jointly independent of the arrival process.

-

(iii)

The system is stable, that is,

$$\begin{aligned} \rho \equiv \lambda b/q < 1, \end{aligned}$$(2.2)where \(\lambda = 1/a\).

We denote the counting process of the exogenous arrivals by \(N^{e}(\cdot ) \equiv \{N^{e}(t); t \ge 0\}\). We use the notation G for the service time distribution, and use \(\sigma \) for a random variable subject to G.

Let \((X_{0},R^{s}_{0})\) be the pair of the number of earlier customers and the remaining service time of a customer being served at time 0, where \(R^{s}_{0} = 0\) if there is no customer in the system. Let \(u_0\) be the waiting time of the tagged customer before the start of its first service, Then

where \(\sigma _{0,i}\)’s for \(i \ge 2\) are i.i.d. random variables each of which has the same distribution as \(\sigma \). There are two typical scenarios for the initial distribution, that is, the distribution of \((X_{0},R^{s}_{0})\).

-

(2a)

A tagged arriving customer finds the system empty. That is, \((X_{0},R^{s}_{0}) = (0,0)\).

-

(2b)

A tagged arriving customer finds \(X_{0}\) customers and the remaining service time \(R^{s}_{0}\) of the customer being served. Thus, the initial state \((X_{0},R^{s}_{0}) \ne (0,0)\).

In this paper, we assume that the service time distribution is heavy tailed, and mainly consider the tail asymptotic of the sojourn time distribution of the GI / GI / 1 feedback queue under the scenario (2a). The case (2b) when \(X_{0}\) and \(R_0^s\) are bounded by a constant may be studied very similarly to the case (2a), therefore we do not analyse it. We consider the case (2b) when \((X_{0},R^{s}_{0})\) is subject to the stationary distribution embedded at the arrival instants.

For given \((X_{0},R^{s}_{0})\), we have defined \(u_{0}\). Let \(X_{k}\) be the queue length behind the tagged customer when it finished its kth service for \(k \ge 1\) when the tagged customer gets service at least k times. Similarly, let \(U_{k}\) be the sojourn time of the tagged customer measured from its \((k-1)\)th service completion to its kth service completion, and let \(T_{k}\) be the sojourn time of the tagged customer just after its kth service completion.

We now formally define random variables \(X_{k}\), \(U_{k}\) and \(T_{k}\) by induction. Let \(T_0=0\). Denote the kth service time of the tagged customer by \(\sigma _{k,0}\), while \(\sigma _{k,0}, i=1,\ldots , X_{k-1}\) are the service times of the customers waiting before the tagged one on its kth return. Note that \(\sigma _{k,i}\)’s for \(k \ge 1, i \ge 0\) are i.i.d. random variables subject to the same distribution as \(\sigma \). Then, \(X_{k}\), \(U_{k}\) and \(T_{k}\) for \(k \ge 1\) are defined as

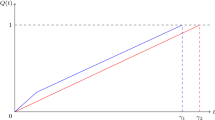

where \(u_0\) is given by (2.3), and \(N^{B}_{k}(n)\)’s are i.i.d. random variables each of which is subject to the Binomial distribution with parameters n, p. The dynamics of the sojourn time is depicted above when \(X_{0} = 0\), that is, a tagged customer finds the system empty (Fig. 1).

To make clear the dependence of \(X_{k}, U_{k}, T_{k}\), we introduce a filtration \(\{\mathcal{F}_{t}; t \ge 0\}\) as

where \(N^{s}(t)\) and \(N^{r}(t)\) are the numbers of customers who completed service and who return to the queue, respectively, up to time t. Clearly, \(T_{k}\) is a \(\mathcal{F}_{t}\)-stopping time, and \(X_{k}\) and \(U_{k}\) are \(\mathcal{F}_{T_{k}}\)-measurable. Furthermore, \(\sigma _{k,0}\) and \(\sigma _{k,i}\) for \(i \ge 1\) are independent of \(\mathcal{F}_{T_{k-1}}\). Then U, the sojourn time of the tagged customer, may be represented as

For \(k\ge 0\), let \(Y_{k} = \sum _{\ell = 0}^{k} X_{\ell }\) for \(k \ge 0\), which is the total number of external and internal arrivals to the queue up to time \(T_k\) plus the number of customers in system at time 0. Then

Hence, under scenario (2a), we have \(u_{0}= X_{0} = 0\), so

while, under scenario (2b),

where \(\sigma _{i}\)’s are i.i.d. random variables each of which has the same distribution as \(\sigma \). Note that \(K+Y_{K-1}\) is \(\mathcal{F}_{T_{K-1}}\)-measurable that depends, in general, on all \(\sigma _i\)’s of customers who arrive before \(T_{K-1}\). This causes considerable difficulty in the asymptotic analysis of U.

Thus, we need to consider dependence structure in the representation of U. Furthermore, \(\{(U_{k}, X_{k}); k \ge 0\}\) is generally not a Markov chain for a general renewal process.

On the other hand, if the arrival process \(N^{e}(\cdot )\) is Poisson, then not only \(\{(U_{k},X_{k}); k \ge 0\}\) but also \(\{X_{k}; k \ge 0\}\) is a Markov chain with respect to the filtration \(\{\mathcal{F}_{T_{k}}; k \ge 0\}\). In this case, we may obtain exact expressions for \(\mathbb {E}X_k\) and then an explicit form for the tail asymptotics.

2.2 M / GI / 1 Feedback Queue and Branching Process

In this subsection, we assume that the exogenous arrival process is Poisson with rate \(\lambda > 0\). This model is analytically studied using Laplace transforms in [12], but no asymptotic results are given there. Note that we may consider \(\{X_{k}; k\ge 0\}\) as a branching process and directly compute \(\mathbb {E}(X_{k})\), which then will be used for the general renewal input case.

Since the Poisson process \(N^{e}(\cdot )\) has independent increments, (2.6) is simplified to

using independent Poisson processes \(N^{e}_{k}\) and independent Binomial random variables \(N^{B}_{k}(n)\). Furthermore, (2.11) can be written as

where \(N_{k,i}^{e}(\cdot )\)’s are independent Poisson processes with rate \(\lambda \). Hence, \(\{X_{k}; k \ge 1\}\) is a branching process with immigration.

Due to the branching structure, we can compute the moments of \(X_{k}\) explicitly. We are particularly interested in their means. From (2.12), we have

where \(r = \lambda b + p\). By the stability condition (2.2), \(r < 1\), and we have

Hence, we have a uniform bound:

Furthermore, we have

Under the scenario (2a), \(\mathbb {E}(X_{k})\) of the M / GI / 1 feedback queue will be used for the tail asymptotic of the sojourn time in the GI / GI / 1 feedback queue. Thus, we introduce notations for them. Let \(X_{k}^{(0)}(M/GI/1)\) be the \(X_{k}\) of the M / GI / 1 feedback queue for \(X_{0} = 0\), then define \(m^{(0)}_{k}\) as

From (2.13), we have

We will use \(m^{(0)}_{K-1}\) and \(m^{(0)}_{K}\) in main results the next section. It should be noticed that they are random variables obtained by substituting \(K-1\) and K into k of \(m^{(0)}_{k}\).

2.3 Main Results

We are ready to present the main results of this paper. They are proved in Sect. 4.

Theorem 2.1

For the stable GI / GI / 1 feedback queue, assume that its service time distribution is intermediate regularly varying (\(\mathcal{IRV}\)). If the tagged customer finds the system empty, then

where \(X^{(0)}_{k}\) is \(X_{k}\) for \(X_{0} = 0\), \(m^{(0)}_{k} = \frac{1-r^{k}}{1-r} \lambda b\) for \(r = p + \lambda b\) by (2.16). Here the random variable K does not depend on \(\{X_k^{(0)}\}\) and \(\sigma \).

Remark 2.1

In the case of a Poisson input, \(\mathbb {E}\big (1+X^{(0)}_{K-1}\big ) =\frac{q(1+p)}{1-pr}\). In general, it is hard to evaluate \(\mathbb {E}\big (1+X^{(0)}_{K-1}\big )\) because this requires computing \(\mathbb {E}(N^{e}(T_{k}))\).

We prove this theorem in Sect. 4.3 using the PSBJ that is established in Theorem 3.2.

Corollary 2.1

Under the assumptions of Theorem 2.1, for each \(k \ge 1\),

This corollary is easily obtained from arguments used in the proof of Theorem 2.1. On the other hand, if we take the geometrically weighted sum of (2.18) and if the interchange of this sum and the asymptotic limit are allowed, then we have (2.17). This interchange of the limits is legitimated by Theorem 3.2. However, Corollary 2.1 itself can be directly proved. We provide such a proof for a slightly extended version of Corollary 2.1 in Appendix C.

Corollary 2.2

Assume that the assumptions of Theorem 2.1 hold.

-

(a)

If the distribution of service times is \(\mathcal{RV}\), \({\overline{G}}(x) = L(x)/x^{\alpha +1}\) with \(\alpha >0\) and slowly varying function L(x), then

$$\begin{aligned} \mathbb {P}\left( \big (1+ m^{(0)}_{K-1}\big ) \sigma > x \right) \sim \frac{qL(x)}{x^{\alpha +1}} \sum _{k=0}^{\infty } p^k \left( \frac{1-(p+ r^{k}\lambda b)}{1-r} \right) ^{\alpha +1}, \end{aligned}$$where \(r= p+\lambda b\).

-

(b)

Under the assumption in (a), if the input stream is Poisson with parameter \(\lambda \), then

$$\begin{aligned} \mathbb {P} (U>x) \sim CL(x)x^{-(\alpha +1)} \end{aligned}$$where

$$\begin{aligned} C= \frac{1+p}{(1-r)^{\alpha +1}(1-r p)} 1 + q \sum _{k=0}^{\infty } p^k (1-(p+r^k\lambda b))^{\alpha +1}. \end{aligned}$$

We next present the tail asymptotic for a tagged customer that arrives in the stationary system. By “stationary” we mean stationary in discrete time, i.e. at embedded arrival epochs, this is detailed in Sect. 4.4.

Theorem 2.2

Let \(U^0\) be the sojourn time of a typical customer in the stationary GI / GI / 1 feedback queue with \(\mathcal{IRV}\) distribution G of service times with mean b, i.i.d. inter-arrival times with mean a and probability of feedback \(p=1-q \in (0,1)\). Let \(\sigma _{I}\) be a random variable having the distribution function \(G_{I}(x) \equiv 1 - \min \left( 1,\int _{x}^{\infty } \overline{G}(u) du\right) \).

-

(a)

Then, as \(x\rightarrow \infty \),

$$\begin{aligned} \mathbb {P}(U^0>x)&\sim \frac{\lambda }{1 - \lambda b} \left( \mathbb {P}\left( m^{(0)}_{K} \sigma _{I}> x\right) + \frac{(1-q) \rho }{1 - \rho } \mathbb {P}\left( \left( \lambda b\, m^{(0)}_{K}\right) \sigma _{I} > x\right) \right) , \end{aligned}$$(2.19)where \(m^{(0)}_{k}\) is defined by (2.16), and \(\sigma _{I}\) is independent of K.

-

(b)

In particular, if G is an \(\mathcal{RV}\) distribution, \(\overline{G}(x)=L(x)/x^{\alpha +1}\) with \(\alpha >0\), where L(x) is a slowly varying function, then

$$\begin{aligned} \mathbb {P}(U^0>x)&\sim \frac{L(x)}{x^{\alpha }} \cdot \frac{q(1-r)^{{-\alpha }}}{\alpha (a-b)}\left( 1+\frac{b(1-q)}{aq-b} \left( \frac{b}{a}\right) ^{\alpha }\right) \sum _{k=1}^{\infty } p^{k-1} (1-r^k)^{{\alpha }}. \end{aligned}$$(2.20)

Remark 2.2

-

(a)

The tail function \(\overline{G_{I}}(x) \equiv 1 - G_{I}(x)\) is called the “integrated tail” of G. Instead of \(G_{I}\), we may use the stationary excess distribution \(G_{e}(x) \equiv \frac{1}{b} \int _{0}^{x} \overline{G}(u) du\) since \(\overline{G}_{I}(x) = b \, \overline{G_{e}}(x)\) for sufficiently large x. In this case, let \(\sigma _{e}\) be a random variable subject to \(G_{e}\), then we can replace \(\sigma _{I}\) by \(\sigma _{e}\) in (2.19), multiplying its right-hand side by b.

-

(b)

It is notable that no \(X^{(0)}_{K-1}\) for the renewal arrivals is involved in (2.19). This is different from (2.17), and may come from averaging in the steady state.

-

(c)

It may be interesting to compare the asymptotics in (2.19) with those without feedback, which is well known (e.g., see [2]). Namely, let the stationary sojourn time \(\widetilde{U}^0\) in the standard GI / GI / 1 queue with inter-arrival times \(\{t_n\}\) and with service times \(\{\sigma _n^{H}\}\). where \(\sigma _n^{H}\) has the same distribution as \(\sum _{i=1}^{K} \sigma _{i}\). If \(\sigma _{I}\) has a subexponential distribution, then

$$\begin{aligned} \mathbb {P}(\widetilde{U}^0>x) \sim \frac{\lambda }{1-\rho } \int _{x}^{\infty } \mathbb {P}\left( \sum _{i=1}^{K} \sigma _{i}> u\right) du \sim \frac{\lambda }{q(1-\rho )} \mathbb {P}(\sigma _{I} > x), \end{aligned}$$(2.21)where the second asymptotic equivalence follows from Lhópital’s theorem and (A.4). Thus, one can see that (2.19) is asymptotically compatible with (2.21) as \(q \uparrow 1\), because \(\rho \rightarrow \lambda b\) and \(m^{(0)}_{K} \rightarrow 1\) almost surely as \(q \uparrow 1\).

3 Busy Period and the Principle of a Single Big Jump

In this section, we present the Principle of a Single Big Jump (PSJB) in Theorem 3.2 below, which will be used for a proof Theorem 2.1. For that, we first provide an auxiliary result on the tail asymptotics of the busy period in the GI / GI / 1 queue without feedback. Denote its service time distribution by H and let \(\sigma ^{H}_{i}\) be the ith service time. It is assumed that the arrivals are subject to the renewal process \(N^{e}\) with interarrival times \(t_i\) with mean a, and H has a finite and positive mean \(b_{H} > 0\). Denote the traffic intensity by \(\rho \equiv b_{H}/a < 1\). Let B be the (duration of the) first busy period in this GI / GI / 1 queue, which is the time from the instant when the system becomes non-empty to the instant when it again becomes empty. We here omit the subscript \(_{H}\) for \(\rho , B\), because they will be unchanged for the GI / GI / 1 feedback queue. We finally let \(\tau ^{H}\) be the number of customers served in the first busy period.

We let \(\xi ^{H}_i=\sigma ^{H}_{i}-t_{i}\), and let \(S^{H}_{n} = \sum _1^n \xi ^{H}_i\). Then \(\tau ^{H} = \min \{n\ge 1 : S^{H}_{n} \le 0\}\). Recall the definitions of classes of heavy-tailed distributions \(\mathcal{L}, \mathcal{S^*}, \mathcal{IRV}\) and \(\mathcal{RV}\) at the end of Sect. 1. The following theorem is proved in Sect. 4.1.

Theorem 3.1

Consider a stable GI / GI / 1 queue, \(\rho <1\), with the service time distribution H.

If \(H\in \mathcal{L}\), then

If, in addition, \(H \in \mathcal{S}^{*}\), then, for any \(0<c<1\),

Finally, if \(H \in \mathcal{IRV}\), then, as \(x\rightarrow \infty \),

Remark 3.1

For the class of regularly varying tails, the equivalence (3.3) was proved by Zwart in [13]. We provide a different proof which is shorter and works for a broader class of distributions. Our proof is based on probabilistic intuition related to the principle of a single big jump. A similar result holds for another class of distributions that overlaps with the \(\mathcal{IRV}\) class but does not contain it, see e.g. [11].

Recall the equivalence \(A_{x} \simeq B_{x}\) for two families of events \(A_{x}\) and \(B_{x}\) with variable x. We have the following corollary, which is proved in Appendix B.

Corollary 3.1

Consider the same GI / GI / 1 queue as in Theorem 3.1. Let \(\sigma ^{H}_{n}\) be the service time of the nth arriving customer. If \(H\in \mathcal{IRV}\), then, for the busy period B, as \(x \rightarrow \infty \),

and, for any \(\varepsilon > 0\), one can choose \(N = N^{e}(\varepsilon ) \ge 1\) such that, as \(x \rightarrow \infty \),

Furthermore, the following PSBJ holds:

We now return to the GI / GI / 1 feedback queue with the service time distribution G. Assume that the first customer arrives at the system at time instant \(T_0=0\) and finds it empty. Recall that \(K_i\) is the number of services ith customer has in the system, \(K_{i}\)’s are independent of everything else and i.i.d with the same geometric distribution as K [see (2.1)]. For convenience, we let \(K=K_1\). Denote by \(\sigma _{i}^{(j)}\) the jth service time of the ith customer in the GI / GI / 1 feedback queue. Recall that \(\sigma _{i}^{(j)}\) has the same distribution G.

Consider the GI / GI / 1 queue without feedback and with service times \(\sigma ^{H}_i\) where

and denote its distribution by H. Since the length of the busy period, B, does not depend on the order of services, we may allow the server to proceed with services of lengths \(\sigma _i^{j}\), like in the queue with feedback, and conclude that the (the lengths of) the busy periods are the same in both queues. Similarly, the traffic intensity \(\rho \) in the new queue without feedback coincides with that in the GI / GI / 1 queue with feedback. Furthermore, let \(\tau \) be the number of service times in the first busy period of this feedback queue. Then, \(\tau = \sum _{i=1}^{\tau ^{H}} K_{i}\), and therefore we have

We now consider the GI / GI / 1 feedback queue introduced in Sect. 2.1. We establish the PSBJ, i.e. show that, for large x, the rare event \(\{U>x\}\) occurs mostly due to a big value of one of the service times. Our proof of Theorem 3.2 is based on Theorem 3.1 and is given in Sect. 4.2.

Theorem 3.2

Consider a stable single-server queue GI / GI / 1 with feedback. Assume that the service times distribution is intermediate regularly varying. Denote by U be the sojourn time of the first customer, and let

If there exists a collection of positive functions \(\{g_{k,\ell ,i,j}(x)\}\) such that, as \(x \rightarrow \infty \),

and constants \(C_{k,\ell ,i,j}\) such that, for any \(k \ge 1,\ell \ge 0, j \ge 0, 0 \le i \le j, x \ge 0\),

then

4 Proofs of the Theorems

4.1 Proof of Theorem 3.1

We will prove Theorem 3.1 for the tail asymptotics of the busy period B only. The proof for \(\tau ^{H}\), the number of arriving customers in the busy period, is similar.

It is enough to prove the lower and upper bounds in (3.1) and (3.2). Then the equivalences in (3.3) follow by letting c tend to 1 and using the property of \(\mathcal{IRV}\) distributions.

Lower bound Since \(\xi ^{H}_{1} = \sigma ^{H}_{1} - t_{1}\), \(d_0 \equiv b^{H}/(a-b^{H})\) is the solution to the equation

We put \(\widehat{\psi }^{H}_n = \sigma ^{H}_n + d_{1} \xi ^{H}_n\) for any positive number \(d_{1} < d_{0}\). Then \(\{\widehat{\psi }^{H}_n\}\) are i.i.d. random variables with common mean \(\mathbb {E} \widehat{\psi }^{H}_1 >0\). Recall that \(\tau ^{H} = \min \{ n \ge 1 : S^{H}_n \le 0\}\). For any fixed real \(C>0\), \(R>0\), integer \(N\ge 1\), and for \(x\ge 0\), define events \(D_{i}\) and \(A_{i}\) for \(i \ge 1\) as

Then, we have

Here, the first inequality in (4.1) holds since \(S^{H}_{\tau ^{H}}\) is non-positive, and the second inequality comes from the following facts. Events \(D_i\) are disjoint and, given the event \(D_i\), we have \(\sum _{j=1}^i \widehat{\psi }^{H}_j >x+R\). Then, given the event \(D_i\cap A_i\), we have \(\sum _{j=1}^k \widehat{\psi }^{H}_j \ge x\) for all \(k\ge i\) and, in particular, \(\sum _{j=1}^{\tau ^{H}} \widehat{\psi }^{H}_j>x\). Thus, (4.1) holds.

The events \(\{A_i\}\) form a stationary sequence. Due to the SLLN, for any \(\varepsilon >0\), one can choose R so large that \(\mathbb {P}(A_i) \ge 1-\varepsilon \). For this \(\varepsilon \) and any \(N \ge 1\), we can choose sufficiently large C such that

Hence, (4.1) implies that, as \(x\rightarrow \infty \),

and therefore the long-tailedness of distribution H and (iii) of Remark A.1 yield

Letting first N to infinity and then \(\varepsilon \) to zero completes the proof of the first inequality of (3.1).

Upper bound Take \(L>0\) and put \(\tilde{t}_n = \min (t_n,L)\), \(\tilde{\xi }^{H}_n = \sigma _n-\tilde{t}_n\), \(\tilde{S}^{H}_n = \sum _1^n \tilde{\xi }^{H}_i\), \(\tilde{\tau }^{H} = \min \{ n \ge 1: \tilde{S}^{H}_n \le 0\}\). Put also \(\psi ^{H}_n = \sigma ^{H}_n + d_{2}\tilde{\xi }^{H}_n\) where \(d_{2}>d_0\) is any number. Note that \(\tilde{\xi }^{H}_1\) converges to \(\xi ^{H}_1\) in distribution and in mean, as \(L\rightarrow \infty \). We may choose L so large that both \(\mathbb {E} \tilde{\xi }^{H}_1\) and \(\mathbb {E} \psi ^{H}_1\) are negative. Then \(\tilde{\tau }^{H}\) is finite and \(\tilde{S}^{H}_{\tilde{\tau }^{H}} \in (-L,0]\) a.s. Further, as L grows, \(\tilde{\tau }^{H}\) converges to \(\tau \) in distribution and in mean and, for any \(\varepsilon >0\), we may choose L so large that \(\mathbb {E} \tau ^{H} \le \mathbb {E}\tilde{\tau }^{H} \le (1+\varepsilon ) \mathbb {E} \tau ^{H}\). By Remark A.1, the distribution of \(\psi ^{H}_1\) also belongs to the class \(\mathcal{S}^*\). We have

where the equivalence follows from Theorem A.1. Further,

where the first equivalence follows from the long-tailedness of the distribution of \(\psi ^{H}_1\) and the second from Remark A.1. Letting \(\varepsilon \) tend to zero, we have

for any \(d_{2} > d_0\). Let \(c = (d_0+1)/(d_{2}+1) <1\). This proves the first inequality in (3.2).

4.2 Proof of Theorem 3.2

Recall that we consider the scenario where the initial customer 1 arrives at the empty system. Clearly, \(\sigma ^{H}_1 \le U \le B\) a.s. where \(\sigma ^{H}_1\) is the total service time of customer 1, and B is the duration of the first busy period.

Equivalence relation (A.4) and Remark A.1 from the Appendix imply that, given \(\sigma \) has an intermediate regularly varying distribution, random variables \(\sigma ^{H}_i\) have a common intermediate varying distribution too. Since any intermediate varying distribution is dominantly varying [see Property (1.7)], we get from Theorem 3.1 that

Relations (4.2) and (A.4) lead then to the logarithmic asymptotics:

Lemma 4.1

Assume that the distribution of the service time \(\sigma _{1}^{(1)}\) belongs to the class \(\mathcal{IRV}\). Then, as \(x\rightarrow \infty \),

Further, by Corollary 3.1, the PSBJ for B holds:

Here \(\tau \) is the number of customers served within the first busy period.

Combining (4.4) and (A.4), we arrive at the following result:

Lemma 4.2

Consider a stable single-server queue GI / GI / 1 with feedback. Let B be the duration of the first busy period and U the sojourn time of the first customer. Assume that the service times distribution is intermediate regularly varying. Then

To derive the exact asymptotics for \(\mathbb {P} (U>x)\), we recall that, for \(1\le k <K \equiv K_{1}\), \(X_{k} \ge 0\) is the total number of services of other customers between the kth and the \((k+1)\)st services of customer 1, and let \(\sigma _{k,i}\) be the service time of the i service there, \(1\le i \le X_{k}\). Further, under the scenario (2a), \(X_0=0\). Then let \(\nu \ge 0\) be the total number of services of other customers after the departure of the first customer within the busy period, and let \(\sigma _{i}^{*}\) be the ith service time there, \(1\le i \le \nu \). Then random variables \(\sigma _{k,.i}\) and \(\sigma _{i}^{*}\) are i.i.d. with the same distribution as \(\sigma \) and U is given by (2.9). From (4.5), we get,

On the other hand, we have

where \(C=\sup _x \overline{G}(x(1-\rho ))/\overline{G}(x)<\infty \) is a constant, and recall that \(\tau \) is the total number of services within the busy cycle. The last line follows since \(\mathbb {E} \tau = \mathbb {E} \tau ^{H} /q\) is finite. Therefore, we have

Lemma 4.3

In the conditions of Lemma 4.2, we have

Moreover, the following result holds:

Lemma 4.4

Assume the conditions of Lemma 4.2 hold. Then, for any \(\varepsilon >0\), one can find N such that

where

Proof

Indeed, the term in the right-hand side of (4.6) is bigger than \(\mathbb {P} (D_{N}(x))\) and smaller than the sum \(\mathbb {P} (D_{N}(x)) + \mathbb {P} (U>x, K_1 >N)\), where

Consider again the auxiliary GI / GI / 1 queue with service times \(\sigma ^{H}_i =\sum _{j=1}^{K_i}\sigma _{i}^{(j)}\) and the first-come-first-served service discipline. Consider the following majorant: assume that at the beginning of the first cycle, in addition to customer 1, an extra \(K-1\) new customers arrive, so there are K arrivals in total. Here K is a geometric random variable with parameter p that does not depend on service times. Then the first busy period in this queue has the same distribution as \(\sum _{i=1}^K B_i\) where \(B_i\) are i.i.d. random variables that have the same distribution as B and do not depend on K. By monotonicity,

Due to (A.4), the latter probability is equivalent, as \(x\rightarrow \infty \), to

where \(C_0\) is from (4.2). Now choose N such that \(C_0 \mathbb {E} (K{1} (K>N))\mathbb {E} K \le \varepsilon \). Since \(\mathbb {P}(U>x) \ge \overline{G}(x)\), (4.7) follows. \(\square \)

We can go further and obtain the following result.

Lemma 4.5

Assume the conditions of Lemma 4.2 hold, and let

Then, for any \(\varepsilon >0\), one can choose a positive integer R such that

where the event \(D_{N}(x)\) was defined in (4.8). Further,

Proof

Indeed,

where the term \(\mathbb {E} ((\tau +1){1} (\tau >R))\) may be made as small as possible by taking a sufficiently large R. Then (4.12) follows since the probability of a union of events is always smaller than the sum of their probabilities, and is bigger than the sum of probabilities of events minus the sum of probabilities of pairwise intersections of events. Each probability of intersection of two independent events is smaller than

therefore their finite sum is \(o(\overline{G}(x))\) and (4.12) follows.

We are now in a final step of the proof of Theorem 3.2. For \(k \ge 1,\ell , j \ge 0\), define \(D_{k,\ell ,j}\) as

where the second equality holds because K is geometrically distributed. Then, Lemma 4.5 implies (3.11) for \(g_{k,\ell ,i,j}(x) = P_{k,\ell ,i,j}(x)\) since, for any \(k,\ell ,j\ge i\),

and

where, recall, \(\tau \) is the total number of customers served in the first busy period. Clearly, (3.11) is also valid for a general \(\{g_{k,\ell ,i,j}(x)\}\) because of the conditions (3.9) and (3.10). This completes the proof of Theorem 3.2. \(\square \)

4.3 Proof of Theorem 2.1

We first recall the notation: \(U_{1}, U_{2},\ldots \) and \(X_{0}, X_{1}, \ldots \) are the service cycles and the number of customers other than the tagged customer served in the cycles, respectively. Here \(u_{0} = X_{0} = 0\). In general, the sojourn time is a randomly stopped sum of i.i.d. positive random variables, and both the summands and the counting random variable have heavy-tailed distributions. It is known that it is hard to study the tail asymptotics for general heavy-tailed distributions (see, e.g., [10]) in this case. We proceed under the assumption that the service time distribution is intermediate regularly varying.

Recall that \(\sigma _{k,0}\) is the kth service time of the tagged customer and, for \(i=1,\ldots , X_k\), \(\sigma _{k,i}\) is the ith service time in the queue \(X_k\). Further, \(T_{k}=\sum _{\ell =1}^k U_{\ell }\) be the time instant when the kth service of the tagged customer is completed, where \(U_{1} = \sigma _{1,0}\). Introduce the notation

which is the remaining time the tagged customer spends in the system after the completion of the kth service, and let \(v_{k}\) be the residual inter-arrival time of the input when the kth service of the tagged customer ends.

In what follows, we will say that an event involving some constants and functions/sequences occurs “with high probability” if, for any \(\varepsilon >0\), there exists constants and functions/sequences (that depend on \(\varepsilon \)) with the desired properties such that the event occurs with probability at least \(1-\varepsilon \).

For example, let \(S^{\sigma }_n = \sum _1^n \sigma _i\) be the sum of i.i.d. random variables with finite mean b. Then the phrase “with high probability (WHP), for all \(n=1,2,\ldots \),

with \(C>0\) and \(\delta _n\downarrow 0\)” means that “for any \(\varepsilon >0\), there exist a constant \(C\equiv C_{\varepsilon }>0\) and a sequence \(\delta _n\equiv \delta _n(\varepsilon ) \downarrow 0\) such that the probability of the event

is at least \(1-\varepsilon \)”. We can say equivalently that “WHP, for all \(n=1,2,\ldots \), \( S^{\sigma }_n \in (na - o(n), na+ o(n)) \) ”, or, simply, “WHP, \(S^{\sigma }_n \sim an\)”, and this means that “for any \(\varepsilon >0\), there exists a positive function \(h(n)=h_{\varepsilon }(n)\) which is an o(n)-function (it may tend to infinity, but slower than n) and is such that the probability of the event

is at least \(1-\varepsilon \).”

Now we show (3.8) for

Namely, we show that, for all \(k \ge 1, \ell \ge 0, 0\le i \le j\),

We prove (4.16) by induction on \(\ell \ge 0\), for each fixed \(k\ge 1, 0 \le i \le j\).

Lower bound \(\ell =0.\)

Since \(\sigma _{k,i} > x\) implies that \(U > x\) and \(\sigma _{k,i} > (1-\rho )x\), the lower bound for the LHS of (4.16) is

because \(m^{(0)}_{0} = 0\).

Upper bound \(\ell =0\).

There is a constant \(w > 0\) such that \(T_{k-1}\le w\) and \(\sum _{0\le i' \le j, i' \ne i} \sigma _{k,i'} \le w\) WHP. Then \(U\le 2w+\sigma _{k,i}\), so the upper bound for the LHS of (4.16) is

Letting \(\varepsilon \) tend to zero in this upper bounds yields that the lower and upper bounds are asymptotically identical. Since \(m^{(0)}_{0} = 0\), they are further identical to \(g_{k,0,i,j}(x)\) of (4.15). Thus, (4.16) is verified.

Turn to the case \(\ell =1.\)

Lower bound \(\ell =1.\)

Like in the case \(\ell =0\), replace all other service times \(\sigma _{k,i'}, i'\ne i\) by zero. Assume that all j customers from the group \(X_{k-1}\) leave the system after their service completions. WHP, \(v_{k-1}\le w\). Given \(y=\sigma _{k,i}\) is large and much bigger than w, we have that at least \(N^{e}(y-w)\) customers arrive during time \(U_{k} \ge \sigma _{k,i} = y\). Again WHP,

and, again WHP, their total service time is within the time interval \((\lambda b y - o(y), \lambda b y + o(y))\). Therefore,

and the RHS is bigger than x if \(y>x/(1+\lambda b) + o(x)\). Therefore, the lower bound for the LHS of (4.16) is

Upper bound \(\ell =1.\)

WHP, \(T_{k-1}\le w\), \(v_k\le w\) and \(\sum _{0 \le i' \le j, i'\ne i} \sigma _{k,i'} \le w\). Let \(\sigma _{k,i}=y \gg 1\). Then, WHP, \(U_{k}\le y+2w\) and the number of external arrivals within \(U_{k}\) is bounded above by \(1+N^{e}(y+2w) = \lambda y + o(y)\), again WHP. Assume that all \(X_{k-1}=j\) customers stay in the system after their services. Then again \(j+1+N^{e}(y+w) = \lambda y +o(y)\), WHP. Therefore, \(U_{k+1} = b \lambda y + o(y).\) Then we arrive at the upper bound that meets the lower bound.

Thus, (4.16) is verified for \(g_{k,2,i,j}(x)\) because \(m^{(0)}_{1} = \lambda b \) by (2.16).

Induction step

We can provide induction for any finite number of steps. Here is the induction base. Assume that \(\sigma _{k,i}=y \gg 1\) and that, after \(\ell \ge 1\) steps, \(T_{k+\ell '} \sim (1+m^{(0)}_{\ell '}) y\) for \(0 \le \ell ' \le \ell \), and there are \(X_{k+\ell -1}\) customers in the queue and that \(X_{k+\ell -1}=wy + o(y)\), WHP, where \(w>0\). Then, combining upper and lower bounds, we may conclude that, again WHP, \(U_{k+\ell } = b wy + o(y)\) and then

where we recall that \(r = p + \lambda b\). By the induction hypothesis, (4.17) implies that

which, with (2.16), yields that

Hence, by (4.18),

This completes the induction step for \(\ell +1\).

We finally check the conditions (3.9) and (3.10). Since an intermediate regularly varying distribution is dominantly varying, it follows from (4.15) that

for some \(c > 0\). Hence, letting

(3.9) is verified, while (3.10) follows from (4.14). Thus, by Theorem 3.2,

which implies (2.17), and Theorem 2.1 is proved.

4.4 PSBJ for the Stationary Queue

We now consider the case where customer 1 arrives to the stationary queue and denote by \(U^0\) its sojourn time. In this section, we frequently use the following notation: for a distribution F having a finite mean, \(\overline{F_{I}}(x) = \min (1, \int _x^{\infty } \overline{F}(y) dy)\) is its integrated tail distribution (see (a) of Remark 2.2).

By “stationarity” we mean stationarity in discrete time, i.e. at embedded arrival epochs. So we assume that the system has started from time \(-\infty \) and that customer 1 arrives at time \(\widetilde{t}_{1} \equiv 0\), customers with indices \(k \le 0\) enter the system at time instants \(\widetilde{t}_k=-\sum _{j=k}^0 t_j\) and customers with indices \(k \ge 2\) at time instants \(\widetilde{t}_k=\sum _{j=1}^{k-1} t_j\). For \(k \le \ell \), let \(S^{H}_{k,\ell } = \sum _{j=k}^{\ell }\xi ^{H}_j\), where \(\xi ^{H}_{j} = \sigma ^{H}_{j} - t_{j}\). Then the stationary busy cycle covering 0 starts at \(\widetilde{t}_k\), \(k \le 0\) if

So, if \(B^0\) is the remaining duration of the busy period viewed at time 0, then

where \(B_k\) is the duration of the period that starts at time \(\widetilde{t}_k\) given that customer k arrives in the empty system (then, in particular, \(B=B_0\)). See Fig. 2.

Let

which is the number of customers arriving at or after time 0 in the busy period when it starts at time \(\widetilde{t}_{k}\), and let

then \(A^{H}_{k} \cap \{\tau ^{H}_{k} \ge \ell \}\)’s for \(k \le 0, \ell \ge -k+1\) are disjoint sets.

Thus, (4.19) may be written as

and therefore, applying the PSBJ of Corollary 3.1 to each busy period \(B_{-k}\),

where \(\sigma ^{H}_{-k+i}\), \(i\ge 0\) is the service time of the ith customer arriving in the busy period that starts at time \(\widetilde{t}_{-k}\).

Hence, letting

we have

We first consider the event \(A^{0}_{+}(x)\), which is a contribution of big jumps at or after time 0, and show that its probability is negligible with respect to \(\overline{H_I}(x)\), as \(x\rightarrow \infty \). Clearly, for any positive function h(x) and for any \(\varepsilon \in (0,a)\),

Then

if one takes, say, \(h(x) = x^c\) for some \(c<1\). Here the second inequality follows since \(\left\{ \tau ^{H}_{-k+i} \ge i\right\} = \left\{ \tau ^{H}_{-k+i} \le i-1\right\} ^{c}\) is independent of \(\sigma ^{H}_{-k+i}\), the third inequality from Chernoff’s inequality, for a small \(\alpha >0\), and the final conclusion from property (A.7) in the Appendix.

Thus, we only need to evaluate the contribution of big jumps that occur before time 0. Namely, we analyse \(A^{0}_{-}(x)\). Note that, for any \(k_0>0\), the probability of the event

is of order \(O(\overline{G}(x(1-\rho )))\) which is negligible with respect to \(\overline{G_I}(x(1-\rho ))\). Therefore, one can choose an integer-valued \(h(x)\rightarrow \infty \) such that \(\mathbb {P}\left( A_-^{(0,h(x))}(x)\right) =o(\overline{G_I}(x(1-\rho )))\). So we may apply again the SLLN, \(\widetilde{t}_{-k} \sim ak\) for sufficiently large k, to get

On the other hand, for \(h(x)\uparrow \infty \) sufficiently slowly and for an appropriate sequence \(\varepsilon _{\ell }\downarrow 0\) (that comes from the SLLN), we have

where

Since \(B^0>x\) on the event E(x), we arrive at the following PSBJ for the stationary busy period.

Lemma 4.6

If the GI / GI / 1 feedback queue is stable and its service time distribution has an \(\mathcal{IRV}\) distribution with a finite mean, then

The lemma implies that

since the sum of the probabilities of pairwise intersections is of order \(O(\overline{G_{I}}^2(x))=o(\overline{G_{I}}(x))\).

Then we may conclude that the principle of a single big jump can be applied to the stationary sojourn time too:

where the second equivalence is valid for any integer-valued function \(h(x)\uparrow \infty \), \(h(x)=o(x)\) and follows from (4.19) and from the properties of \(\mathcal{IRV}\) and integrated tail distributions, see Appendix A.

4.5 Proof of Theorem 2.2

First, we comment that it is easy to obtain the logarithmic asymptotics for the stationary sojourn time. Since the sojourn time of the customer entering the stationary queue at time 0 is not bigger than the stationary busy period and is not smaller than the stationary sojourn time in the auxiliary queue without feedback, and since both bounds have tail distributions that are proportional to the integrated tail distribution of a single service time (see the Appendix for definitions), we immediately get the logarithmic tail asymptotics:

Now we provide highlights for obtaining the exact tail asymptotics for the stationary sojourn time distribution and give the final answer. For this, we use the following simplifications, which are made rigorous in the “WHP” terminology and due to the o(x)-insensitivity of the service-time distribution.

-

(1)

We observe that the order of services prior to time 0 is not important for the customer that enters the stationary queue at time 0: the joint distribution of the residual service time and of the queue length at time 0 stays the same for all reasonable service disciplines (that do not allow processor sharing). So we may assume that, up to time 0, all arriving customers are served in order of their external arrival: the system serves the “oldest” customer a geometric number of times and then turns to the service of the next customer.

-

(2)

We simplify the model by assuming that all inter-arrival times are deterministic and equal to \(a=\lambda ^{-1}\).

-

(3)

We further assume that all service times of all customers but one are equal to b, so every customer but one has a geometric number of services of length b. The “exceptional” customer may be any customer \(-n\le 0\), it has a geometric number of services, one of those is random and large and all others equal to b. So the total service time of the “exceptional” customer has the tail distribution equivalent to

$$\begin{aligned} \mathbb {E}K \cdot \overline{G}(x) = \frac{1}{q}\overline{G}(x). \end{aligned}$$ -

(4)

We assume that the “exceptional” customer arrives at an empty queue, that is, the workload found by this customer is negligible compared with his exceptional service time.

Due to the arguments explained above, we can show that the tail asymptotics of the sojourn time of customer 1 in the original and in the auxiliary system are equivalent. We start by repeating our calculations from the proof of Theorem 2.1, but in two slightly different settings.

Assume all service times but the very first one are equal to b for the exceptional customer arriving at or before time 0. Assume that, if customer 1 arriving at time 0 is not exceptional, then it finds \(X_0=N\) customers in the queue, and otherwise it finds a negligible number of customers compared with N while its first service time is Nb. Assume customer 1 leaves the system after \(K=k\) services. Denote, as before, by \(U_i\) the time between its \((i-1)\)st and ith services and by \(X_i\) the queue behind customer 1 after its ith service completion. How large should N be for the sojourn time of customer 1 to be bigger than x where x is large?

-

(A)

Assume that the (residual) service time, z, of the very first customer in the queue is not bigger than b (so we may neglect it). When N is large, we get that \(U_{1} \sim Nb\). Then we have

$$\begin{aligned} X_{i} \sim X_{i-1} p + \lambda U_{i}, \qquad U_{i} \sim X_{i-1} b, \qquad i =1,2,\ldots ,k. \end{aligned}$$Hence, \(X_i \sim X_{i-1}p + \lambda U_{i} \sim N r^i\) and \(U_{i} \sim Nbr^{i-1}\) where \(r=p+\lambda b<1\). Then \(U_1+\cdots +U_{k}\sim Nb(1-r^k)/(1-r)\). Thus, we may conclude that

$$\begin{aligned} \mathbb {P}(U>x, K=k) \sim qp^{k-1}\mathbb {P}(Nb> x_k), \end{aligned}$$(4.26)where \(x_k = x(1-r)/(1-r^k)\).

-

(B)

Assume now that both \(X_0=N\) and z are large. Then \(U_{1} \sim z+Nb\) and \(X_1 \sim Np+\lambda (z+Nb)\) and, further, \(X_{i}\sim X_{i-1}r \sim X_1 r^{i-1}\) and \(U_{i+1} \sim X_ib \sim X_1b r^{i-1} \sim Nbr^i + zr^i\). Thus, \(U_1+\cdots +U_{k}\sim Nb(1-r^k)/(1-r)+ z(1-r^k)/(1-r)\) and

$$\begin{aligned} \mathbb {P}(U>x, K=k) \sim qp^{k-1}\mathbb {P}(Nb+z> x(1-r)/(1-r^k)). \end{aligned}$$(4.27)Let W(t) be the total work in the system at time t. We illustrate W(t) below to see how the cases (A) and (B) occur.

We will see now that if \(K=k\) and if there is a big service time of the \((-n)\)th “exceptional” customer, then the case (A) occurs if \(n>x_k/b\) and the case (B) if \(n<x_k/b\) (Fig. 3).

Let the big service time take value \(y \gg 1\). Recall from (4.24) that it is enough to consider values of \(n\ge h(x)\) only, where \(h(x)\uparrow \infty \), \(h(x)=o(x)\).

For any \(k\ge 1\), assume \(K=k\) and \(y \le na\), then the exceptional service is completed before or at time 0, and the situation (A) occurs. Hence, \(X_{0} \equiv N = n - j\) for some nonnegative \(j \le n\), and \(y+jb/q \approx na\) because approximately j further customers leave the system prior to time 0. Then \(U \sim Nb (1-r^{k})(1-r)\), and \(U > x\) is asymptotically equivalent to \(Nb (1-r^{k})(1-r) > x\), where the last inequality is identical with \((n-j)b > x_{k}\). This together with \(j \approx (na-y) q/b\) implies that

Since \(na \ge y\), this further implies that \(n \gtrsim x_{k}/b\).

We next assume \(K=k\) and \(n<x_k/b\). Then, the contraposition of the above implication implies that \(y > na\), and the situation (B) occurs. Therefore, we should take \(y=z+na\), and \(U_{0} = nb + z\), where \(N=n\). Since \(U \sim (nb+z) (1-r^{k})/(1-r)\), \(U > x\) is equivalent to \(nb + z \gtrsim x_{k}\), and therefore

Combining together both cases, we obtain the following result:

Clearly, the second sum in the parentheses is equivalent to

while the first sum in the parentheses is

Hence, we have

where we recall that \(x_k=\frac{x(1-r)}{1-r^k}\), for \(k\ge 1\). Since \(m^{(0)}_{K} = \mathbb {E}\left( \frac{1-r^{K}}{1-r}\right) \), we arrive at (2.19) and (2.20).

References

Asmussen, S.: Applied Probability and Queues, 2nd edn. Springer, Berlin (2003)

Baccelli, F., Foss, S.: Moments and tails in monotone-separable stochastic networks. Ann. Appl. Probab. 14, 612–650 (2004)

Embrechts, P., Klüppelberg, C., Mikosch, T.: Modelling Extremal Events. Springer, Berlin (1997)

Foss, S., Korshunov, D.: On large delays in multi-server queues with heavy tails. Math. Oper. Res. 37, 201–218 (2012)

Foss, S., Miyazawa, M.: Two-node fluid network with a heavy-tailed random input: the strong stability case. J. Appl. Probab. 51A, 249–265 (2014)

Foss, S., Puhalskii, A.: On the limit law of a random walk conditioned to reach a high level. Stoch. Process. Appl. 121, 288–313 (2011)

Foss, S., Zachary, S.: The maximum on a random time interval of a random walk with a long-tailed increments and negative drift. Ann. Appl. Probab. 13, 37–53 (2003)

Foss, S., Palmowski, Z., Zachary, S.: The probability of exceeding a high boundary on a random time interval for a heavy-tailed random walk. Ann. Appl. Probab. 15, 1936–1957 (2005)

Foss, S., Korshunov, D., Zachary, S.: An Introduction to Heavy-Tailed and Subexponential Distributions, 2nd edn. Springer, Berlin (2013)

Greenwood, P.: Asymptotics of randomly stopped sequences with independent increments. Ann. Probab. 1, 317–321 (1973)

Jelenkovic, P., Momcilovic, P., Zwart, B.: Reduced load equivalence under subexponentiality. Queueing Syst. 46, 97–112 (2004)

Takacs, L.: A single-server queue with feedback. Bell Syst. Tech. J. 42, 505–519 (1963)

Zwart, A.P.: Queueing systems with heavy tails. PhD Thesis, Technische Universiteit Eindhoven, Eindhoven (2001)

Acknowledgements

The authors are grateful to the reviewers for helpful comments and suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Research of S. Foss is supported by RSF research Grant No. 17-11-01173. He also thanks EPFL, Lausanne for their hospitality. Research of M. Miyazawa is supported in part by JSPS KAKENHI Grant No. JP16H02786.

Appendices

Appendix

A Properties of Heavy-Tailed Distributions

We revise basic properties of several classes of heavy-tailed distributions listed at the end of Sect. 1 (see [9] for the modern theory of heavy tailed distribution and other books [1, 3] for more detail), and formulate a part of the main result from [7] that plays an important role in our analysis.

Let \(\{ \xi _n\}_{-\infty }^{\infty }\) be i.i.d. r.v.’s with finite mean \(\mathbb {E} \xi _1\) and with \(\mathbb {P} (\xi _1>0)>0\) and \(\mathbb {P} (\xi _1<0)>0\). Let \(F(x) = \mathbb {P} (\xi _1 \le x)\) be their common distribution and \(\overline{F}(x) = 1-F(x) = \mathbb {P} (\xi _1>x)\) its tail. Let \(m^+ \equiv m^+(F) = \mathbb {E} \max (0,\xi _1) = \int _0^{\infty } \overline{F}(t) dt\). Let \(S_0 =0\), \(S_n = \sum _1^n \xi _i\), and \(\tau = \min \{ n \ge 1 ~:~ S_n \le 0\}\).

If \(F \in \mathcal{L}\) (long tailed), that is, (1.3) holds for some \(y>0\), then it holds for all y and, moreover, uniformly in \(|y|\le C\), for any fixed C. Therefore, if \(F\in \mathcal{L}\), then there exists a positive function \(h(x)\rightarrow \infty \) such that \(\overline{F}(x-h(x))\sim \overline{F}(x) \sim \overline{F}(x+h(x))\). In this case we say that the tail distribution \(\overline{F}\) is h-insensitive.

In what follows, we make use of the following characteristic result (see Theorem 2.47 in [9]):

In particular, if F is h-insensitive, then F is \(h_c\)-insensitive for any \(c>0\), where \(h_c(x)=h(cx).\)

We also use another characteristic result which is a straightforward minor extension of Theorem 2.48 from [9]:

for any sequence of non-negative random variables \(V_n\) with corresponding means \(v_n=\mathbb {E} V_n\) satisfying

Here is another good property of \(\mathcal{IRV}\) distributions. Let random variables X and Y have arbitrary joint distribution, with the distribution of X being \(\mathcal{IRV}\) and \(\mathbb {P} (|Y|>x) =o(\mathbb {P}(X>x))\). Then

If F is an \(\mathcal{IRV}\) distribution with finite mean, then the distribution with the integrated tail \(\overline{F_I}(x)=\min (1,\int _x^{\infty }\overline{F}(y) dy)\) is also \(\mathcal{IRV}\) and \(\overline{F}(x) = o(\overline{F_I}(x))\) and, moreover, \(\int _x^{x+h(x)}\overline{F}(y) dy = o(\overline{F_I}(x))\) if \(F_I\) is h-insensitive.

We use the following well-known result: if \(\{\sigma _{1,j}\}\) is an i.i.d. sequence of random variables with common subexponential distribution F and if the counting random variable K does not depend on the sequence and has a light-tailed distribution, then

Here is the principle of a single big jump again: the sum is large when one of the summands is large.

Let \(M =\sup _{n\ge 0} \sum _{i=1}^n \xi _i\) where \(\{\xi _i\}\) are i.i.d. r.v.’s with negative mean \(-m\) and with common distribution function F such that \(F_I\) is subexponential. Then

Further, if \(F_I\) is subexponential, then, for any sequence \(m_n\rightarrow m>0\) and any function \(h(x)=o(x)\),

and, for any sequence \(c_n\rightarrow 0\),

Remark A.1

Let \(\mathcal{K}\) be any of the classes \(\mathcal{L},\mathcal{RV}, \mathcal{IRV}, \mathcal{D}, \mathcal{S}, \mathcal{S}^*\). The property of belonging to class \(\mathcal{K}\) is a tail property: if \(F\in \mathcal{K}\) and if \(\overline{G}(x) \sim C\overline{F}(x)\) where C is a positive constant, then \(G\in \mathcal{K}\). In particular,

-

(i)

if \(F\in \mathcal{K}\), then \(F_+\in \mathcal{K}\);

-

(ii)

if the random variable \(\xi \) has distribution \(F\in \mathcal{K}\) and \(c_1 > 0\) and \(c_2\) are any constants, then the distribution of the random variable \(\eta = c_1\xi + c_2\) also belongs to \(\mathcal{K}\);

-

(iii)

if the random variable \(\xi \) may be represented as \(\xi = \sigma - t\) where \(\sigma \) and t are mutually independent random variables and t is non-negative (or, slightly more generally, bounded from below), and if the distribution of \(\sigma \) belongs to class \(\mathcal{K}\), then \(\mathbb {P} (\xi>x) \sim \mathbb {P} (\sigma > x)\), so the distribution of \(\xi \) belongs to \(\mathcal{K}\) too.

The following result is a part of Theorem 1 in [7], see also [8] for a more general statement.

Theorem A.1

Let \(S_n=\sum _1^n \xi \), \(S_0=0\) be a random walk with i.i.d. increments with distribution function F and finite negative mean

Assume \(F\in \mathcal{S}^*\). Let \(T \le \infty \) be any stopping time (with respect to \(\{ \xi _n\}\)). Let \(M_{T} = \max _{0\le n\le T} S_n\). Then

B Proof of Corollary 3.1

We first note that the event \(\{\tau ^{H} \ge n \}\) is independent of \(\sigma ^{H}_{n}\) and \(\xi ^{H}_{n}\) because \(\{\tau ^{H} \ge n \} = \{\tau ^{H} \le n-1 \}^{c}\) is \(\sigma (\{\xi ^{H}_{\ell }; 1 \le \ell \le n-1\})\)-measurable. Hence,

and therefore the equivalence (3.4) is immediate from (3.3) of Theorem 3.1, while (3.5) easily follows from (3.4).

Thus, it remains to prove (3.6). For this, we introduce some notation. Let \(S^{H}_{n} = \sum _{i=1}^n \xi ^{H}_{i}\) and let \(S^{\sigma ^{H}}_n = \sum _{i=1}^n \sigma ^{H}_i\). Define a sequence of events \(E_{n}\), \(n=0,1,\ldots \), as

which is stationary in n (here, by convention, \(S^{H}_0=S^{\sigma ^{H}}_0=0\)). Due to the SLLN, there exists a sequence \(\delta _{\ell } \downarrow 0\) such that

as \(n\rightarrow \infty \). Therefore, for any \(\varepsilon >0\), there exists \(C=C_{\varepsilon }>0\), for which \(E_{n}\) is denoted by \(E_{n,\varepsilon }\), such that

Introduce a function \(h_{\varepsilon }(x)\) by

where [x / a] is the integer part of the ratio x / a. Then, for this \(\varepsilon \) and \(n \ge 1\), define \(J_{n,\varepsilon }(x)\) as

Then, on the event \(J_{n,\varepsilon }(x) \cap E_{n,\varepsilon }\), we have \(S^{H}_{n-1} > 0\), \(S^{H}_{n}> \xi ^{H}_{n} > x(1-\rho ) + h_{\varepsilon }(x)\), and therefore

Hence, letting \(\ell _{0} = [x/a]\), we have, on the same event,

For any integer \(N \ge 1\), let

then we have

Let \(F^{H}\) be the distribution of \(\xi ^{H}\), and recall that H is the distribution of \(\sigma ^{H}\). Both of them are intermediate regularly varying, they are tail-equivalent and h-insensitive [see Remark A.1 and (A.1)]. Since \(L_{N,\varepsilon }(x)\) is a union of N disjoint events and \(\{\tau ^{H} \ge n, \max _{i<n} \xi ^{H}_i \le x(1-\rho ) \}\) is independent of \(\xi ^{H}_{n}\) and \(E_{n,\varepsilon }\), (B.10) yields, as \(x\rightarrow \infty \),

Let \(L_{\varepsilon }(x) = \lim _{N \rightarrow \infty } L_{N,\varepsilon }(x)\), then \(L_{\varepsilon }(x) \subset \{B>x\}\) by (B.11), and, for any N, by (3.3) of Theorem 3.1,

Choosing N such that \(\sum _{n=N+1}^{\infty } \mathbb {P} (\tau ^{H} >n) \le \varepsilon \mathbb {E} \tau ^{H}\), we get

For \(x > 0\), define events J(x) and \(\overline{J}_{\varepsilon }(x)\) as

Since \(L_{\varepsilon }(x) = \cup _{n=1}^{\infty } \{\max _{i<n} \xi ^{H}_i \le x(1-\rho )\} \cap J_{n,\varepsilon }(x) \cap E_{n,\varepsilon }\), we have

Further, \(\overline{J}_{\varepsilon }(x) \subset J(x)\) and

Combining those with (B.13), we obtain that

Since \(\varepsilon >0\) may be taken arbitrarily small, we arrive at (3.6).

C Alternative Proof of Corollary 2.1

In this section, we give an alternative proof of Corollary 2.1, which is based on the result from [7], not using PSBJ. Instead of it, our basic tools are Theorem A.1 and the law of large numbers. We also slightly generalise Corollary 2.1.

Theorem C.1

For the stable GI / GI / 1 feedback queue, assume that its service time distribution is intermediate regularly varying and has a finite mean. If the first customer arriving at the empty system has an exceptional first service time \(\eta \) instead of \(\sigma _{1,0}\), that is, \(U_{1} = \eta \), such that

-

(I)

\(\eta \) has an intermediate regularly varying distribution with \(\mathbb {E}(\eta ) < \infty \);

-

(II)

\(\displaystyle \frac{\mathbb {P}(\eta> x)}{\mathbb {P}(\sigma > x)}\) converges to a constant from \([0,\infty ]\), as \(x \rightarrow \infty \);

-

(III)

\(\mathbb {E}(X^{(0)}_{k-1}) < \infty \) for \(k \ge 1\);

then, for each \(k \ge 1\), as \(x \rightarrow \infty \),

Remark C.1

If \(\eta = \sigma _{1,0}\), then the conditions (I)–(III) are satisfied, and this theorem is just Corollary 2.1.

Proof

Recall the definitions of \(X_{\ell -1}, U_{\ell }\) under \(\sigma _{1,0} = \eta \). We have \(X_{0} = u_{0}= 0\), \(U_{1} = \eta \), and, for \(\ell \ge 2\),

where \(X_{j-1}\) and \(\sigma _{j, i}\) are independent. Since (C.14) is an identity for \(k=1\), we assume that \(k \ge 2\). We partition the event \(\{T_{k} > x\}\) into the following k disjoint sets for each \({\varvec{y}} \equiv ( y_{1}, y_{2}, \ldots , y_{k-1}) > {\varvec{0}}\).

We prove that

as \(x \rightarrow \infty \) then \(y_{1}, \ldots , y_{k-1} \rightarrow \infty \). By the assumptions (I)–(III), these asymptotics yield (C.14), and therefore the theorem is obtained.

We prove (C.18) deriving upper and lower bounds. We first consider the case that \(\ell = 1\). Since \(U_{j} \le y_{j}\) for \(1 \le j \le k-1\), we have that \(T_{j} \le \sum _{j'=1}^{j} y_{j'}\) for \(1 \le j \le k-1\), and

This inductively shows that \(X^{(0)}_{k-1}\) has light tail on \(I_{k}^{(\ell )}({\varvec{y}},x)\), and therefore (C.17) implies that

The corresponding lower bound is obvious. That is,

Hence, letting \(y_{j} \rightarrow \infty \) for \(j=1,2,\ldots ,k\), we obtain (C.18) for \(\ell =1\).

We next consider the case \(\ell =2\). Let \(c_{1}\) be a positive constant, which will be appropriately determined for each sufficiently small \(\varepsilon > 0\).

where

Since \(U_{k-1} = \sum _{i=1}^{X^{(0)}_{k-2}} \sigma _{k-1,i}\), the term \(A^{(2)}_{+}(y_{1},\ldots ,y_{k-2},x)\) has the same asymptotics as \(I^{(1)}_{k-1}({\varvec{y}},c_{1}x)\). Hence, it follows from the asymptotics of \(I^{(1)}_{k}({\varvec{y}},x)\) that

Thus, if we show that \(B^{(2)}_{+}(y_{1},\ldots ,y_{k-2},x)\) is asymptotically negligible, then the right-hand side of (C.18) is obtained as an upper bound for \(\ell =2\). To see this, we consider \(X^{(0)}_{k-1}\) on the event

on which we have

Hence, letting

and applying Theorem A.1, we have

where the last probability term decays super-exponentially fast, so it is negligible. Thus, if we choose \(c_{1} > 0\) such that \(c_{1}(1+(b+\varepsilon )(\lambda +\varepsilon )) < 1\), then \(B^{(2)}_{+}(y_{1},\ldots ,y_{k-2},x)\) is asymptotically negligible because

Consequently, we choose \(c_{1} = \frac{1 - \varepsilon }{1 + (b+\varepsilon )(\lambda +\varepsilon )}\), which converges to \((1 + \lambda b)^{-1}\) as \(\varepsilon \downarrow 0\). Thus, we have proved that the right-hand side of (C.18) is an upper bound for \(\ell =2\).

For the lower bound for \(\ell =2\), we take another decomposition. Let \(d_{1} = \frac{1+\varepsilon }{1+\lambda b} < 1\) for a sufficiently small \(\varepsilon > 0\), then, for \(d_{1} x > y_{k-1}\),

Similar to (C.19), we have

On the other hand, by the law of large numbers,

Hence, this term is asymptotically negligible, and therefore we have the asymptotic lower bound for \(I_{k}^{(2)}({\varvec{y}},x)\), which agrees with the upper bound, by letting \(\varepsilon \downarrow 0\). Thus, we have proved (C.18) for \(\ell =2\). For \(\ell = 3,\ldots ,k\), (C.18) is similarly proved (we omit the details). Then the proof of the corollary is completed. \(\square \)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Foss, S., Miyazawa, M. Customer Sojourn Time in GI / GI / 1 Feedback Queue in the Presence of Heavy Tails. J Stat Phys 173, 1195–1226 (2018). https://doi.org/10.1007/s10955-018-2079-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10955-018-2079-9