Abstract

We study the occurrence of large queue lengths in the GI / GI / d queue with heavy-tailed Weibull-type service times. Our analysis hinges on a recently developed sample path large-deviations principle for Lévy processes and random walks, following a continuous mapping approach. Also, we identify and solve a key variational problem which provides physical insight into the way a large queue length occurs. In contrast to the regularly varying case, we observe several subtle features such as a non-trivial trade-off between the number of big jobs and their sizes and a surprising asymmetric structure in asymptotic job sizes leading to congestion.

Similar content being viewed by others

1 Introduction

The queue with multiple servers, known as the GI / GI / d queue, is a fundamental model in queueing theory. Its use in everyday applications such as call centers and supermarkets is well documented, and, despite being significantly studied over decades, it continues to pose interesting research challenges. Early work [1, 2] focused on exact analysis of the invariant waiting-time distribution, but finding tractable solutions has turned out to be challenging. This has led to lines of research that focus on approximations, either considering heavily loaded systems [3, 4] or investigating the frequency of rare events, for example the probability of a long waiting time or large queue length. For light-tailed service times, such problems have been considered in [5, 6].

The focus on this paper is on rare event analysis of the queue length in the case of heavy-tailed service times, a topic that is more recent. For a single server, the literature on this topic is extensive, as there is an explicit connection between waiting times and first passage times of random walks; a textbook treatment can be found in [7]. Tail asymptotics for the steady-state queue length have been treated in [8].

The earliest paper on heavy tails in the setting of a queue with multiple servers that we are aware of is [9], which stated a conjecture regarding the form of the tail of the waiting time distribution in steady state, assuming that the service-time distribution is sub-exponential. This has led to follow-up work on necessary and sufficient conditions for finite moments of the waiting-time distribution in steady state [10], and on tail asymptotics [11, 12]. Most of the results in the latter two papers focus on the case of regularly varying service times. An insight is that if the system load \(\rho \) is not an integer, a large waiting time occurs due to the arrival of \(\lceil d-\rho \rceil \) big jobs. The case of other heavy-tailed service times is poorly understood.

In the present paper, we assume that the service-time distribution has a tail of the form \(e^{-L(x)x^{\alpha }},\) where \( \alpha \in (0,1),\) and L is a slowly varying function (a more comprehensive definition is given later on). Tail distributions of this form are also known as semi-exponential. Their analysis poses challenges, as this category of tails falls in between the Pareto (very heavy tailed) case and the classical light-tailed case. In particular, in the case of \(d=2\) and \(\rho <1\), the results in [11] imply that two big jobs are necessary to cause a large waiting time when service times have a Weibull distribution. The arguments in [11] cannot be extended to the case \(\rho >1\). In the 2009 Erlang centennial conference, Sergey Foss posed the question “how many big service times are needed to cause a large waiting time to occur, if the system is in steady state?”. He noted that even a physical or heuristic treatment has been absent.

This has motivated us to investigate a strongly related question; namely, we analyze the event that the queue length \(Q(\gamma n)\) at a large time \(\gamma n\) exceeds a value n. A key result that we utilize in our analysis is a powerful upper bound of Gamarnik and Goldberg, see [13], for \({\mathbf {P}}(Q(t) > x)\). This upper bound can be combined with a recently developed large-deviations principle for random walks with heavy-tailed Weibull-type increments, which is another key result that we use. Consequently, we can estimate the probability of a large queue length of the GI / GI / d queue with heavy-tailed Weibull-type service times and obtain physical insights into “the most likely way”in which a large queue length builds up.

The main result of this paper, given in Theorem 3.1, states the following: If \(Q\left( t\right) \) is the queue length at time t (assuming an empty system at time zero) and \(\gamma \in (0,\infty )\), then

with \(c^{*}\) the value of the optimization problem

where \(\lambda \) is the arrival rate and service times are normalized to have unit mean. Note that this problem is equivalent to an \(L^{\alpha }\)-norm minimization problem with \(\alpha \in (0,1)\). Such problems also appear in applications such as compressed sensing and are strongly NP-hard in general; see [14] and references therein. In our particular case, we can analyze this problem exactly, and if \(\gamma \ge 1/(\lambda -\left\lfloor \lambda \right\rfloor )\), the solution takes the simple form

This simple minimization problem has at most two optimal solutions, which represent the most likely number of big jumps that are responsible for a large queue length to occur, and the most likely buildup of the queue length is through a linear path. For smaller values of \(\gamma \), asymmetric solutions can occur, leading to a piecewise linear buildup of the queue length; we refer to Sect. 3 for more details.

Note that the intuition that the solution to (1.2) yields is qualitatively different from the case in which service times have a power law. In the latter case, the optimal number of big jobs equals the minimum number of servers that need to be removed to make the system unstable. In the Weibull-type case, there is a non-trivial trade-off between the number of big jobs and their size, and this trade-off is captured by (1.2) and (1.3).

Although we do not make these claims rigorous for \(\gamma =\infty \) (which requires an interchange of limits argument beyond the scope of the paper), it makes a clear suggestion of what the tail behavior of the steady-state queue length should be. This can then be related to the steady-state waiting-time distribution, and the original question posed by Foss, using distributional Little’s law.

As mentioned before, we obtain (1.1) by utilizing a tail bound for Q(t), which is derived in [13]. This tail bound is given in terms of functionals of superpositions of renewal processes. We show that these functionals are (almost) continuous in the \(M_{1}^{\prime }\) topology (in the sense of being amenable to the use of the extended contraction principle). The \(M_{1}^{\prime }\) introduced in [15] is precisely the topology used in the development of a recently produced large-deviations principle for random walks with Weibull-type increments; see [16]. So, our approach here makes the new large-deviations principle directly applicable.

The paper is organized as follows: Section 2 provides a model description and some useful tools used in our proofs. Section 3 provides our main result and some mathematical insights associated with it. Lastly, Sect. 4 contains the lemmas and proofs needed to construct the main result of this paper, Theorem 3.1.

2 Model description and preliminary results

We consider the FCFS GI / GI / d queuing model with d servers in which inter-arrival times are independent and identically distributed (i.i.d.) random variables (r.v.’s) and service times are i.i.d. r.v.’s independent of the arrival process. Let \(A \ge 0\) and \(S \ge 0\) be a pair of generic inter-arrival time and service time, respectively. We introduce the following assumptions:

Assumption 2.1

There exists \( \theta _+>0\) such that \({\mathbf {E}}(e^{\theta A})< \infty \) for every \(\theta \le \theta _+\).

Assumption 2.2

\({\mathbf {P}}(S \ge x)=e^{-L(x)x^{\alpha }}\), \(\alpha \in (0,1),\) where \(L(\cdot )\) is a slowly varying function at infinity and \(L(x)x^{\alpha -1}\) is eventually non-increasing.

Let Q(t) denote the queue length process at time t in the FCFS GI / GI / d queuing system with inter-arrival times being i.i.d. copies of A and service times being i.i.d. copies of S. We assume that \(Q(0)=0\). Our goal is to identify the limiting behavior of \({\mathbf {P}}(Q(\gamma n)> n)\) as \(n \rightarrow \infty \) in terms of the distributions of A and S. To simplify the notation, let \(\lambda =1/ {\mathbf {E}}[A]\) and assume without loss of generality that \({\mathbf {E}}[S]=1\). To ensure stability, let \(\lambda < d\). Let M be the renewal process associated with A. That is,

and \(A(t) \triangleq A_1 + A_2 + \cdots + A_{\lfloor t \rfloor }\), where \(A_1,A_2,\ldots \) are i.i.d. copies of A, and \(A(0)=0\). Similarly, for each \(i=1,\ldots , d\), let \(S^{(i)}(t) \triangleq S_1^{(i)} + S_2^{(i)} + \cdots + S_{\lfloor t \rfloor }^{(i)}\), where \(S_1^{(i)}, S_1^{(i)}, \ldots \) are i.i.d. copies of S, and \(N^{(i)}\) be the renewal process associated with S. Let \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\) be scaled processes of M and \(N^{(i)}\). More precisely, \({{\bar{M}}}_n(t) = M(nt)/n\) and \({{\bar{N}}}_n^{(i)}(t) = N^{(i)}(nt)/n\) for \(t\ge 0\). Our analysis hinges on Corollary 1 of [13], which for the GI / GI / d queue states

Result 2.1

For all \(x>0\) and \(t\ge 0\),

Now, from (2.1) we conclude that, for each \(\gamma \in (0,\infty )\),

Though this is only an upper bound, our main result implies that (2.2) is an asymptotically tight upper bound as \(n\rightarrow \infty \). We establish this later on by deriving a lower bound with the same asymptotic behavior.

In view of the above, a natural way to proceed is to establish large-deviations principles for \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\), \(i=1,\ldots , d\). Before we continue, we start with some general background on large-deviations theory, based on [17, 18]. Let \(({\mathbb {X}}, d)\) be a metric space and \({\mathcal {T}}\) denote the topology induced by the metric d. Let \(X_n\) be a sequence of \({\mathbb {X}}\)-valued random variables. Let I be a nonnegative lower semi-continuous function on \({\mathbb {X}}\) and \(a_n\) be a sequence of positive real numbers that tends to infinity as \(n\rightarrow \infty \). We say that \(X_n\) satisfies a large-deviations principle (LDP) in \(({\mathbb {X}}, {\mathcal {T}})\) with speed \(a_n\) and rate function I if

for any measurable set A. Here, \(A^\circ \) and \(A^-\) are, respectively, the interior and the closure of the set A. If the level sets \(\{y: I(y) \le a\}\) are compact for each \(a \in {\mathbb {R}}_+\), we say that I is a good rate function. By deriving an LDP, one can have an estimation of the magnitude of probabilities of rare events on an exponential scale: if the upper and lower bounds of the LDP match, then \(P(X_n \in G) \approx e^{-a_n \inf _{x \in G}I(x)}\). The optimizers of the infimum typically provide insight into the most likely way a rare event occurs (i.e., the conditional distribution given the rare event of interest). For more background, we refer to [18] and [19].

An important factor in establishing an LDP on function spaces is the topology of the space under consideration. Let \({\mathbb {D}}[0,T]\) denote the Skorokhod space (i.e., the space of càdlàg functions from [0, T] to \({\mathbb {R}}\)). We shall use \({\mathcal {T}}_{M_1'}\) to denote the \(M_1'\) Skorokhod topology on \({\mathbb {D}}[0,T]\), which is generated by a metric \(d_{M_1'}\) defined in terms of the graphs induced by the elements of \({\mathbb {D}}[0,T]\). The precise definitions of the graph and the metric are as follows:

Definition 2.1

For \(\xi \in {\mathbb {D}}[0,T]\), define the extended completed graph \(\varGamma '(\xi )\) of \(\xi \) as

where \(\xi (0-) \triangleq 0\). Define an order on the graph \(\varGamma '(\xi )\) by setting \((u_1,t_1) < (u_2,t_2)\), for every \((u_1,t_1), (u_2,t_2) \in \varGamma '(\xi )\), if either

\(t_1 < t_2\); or

\(t_1 = t_2\) and \(|\xi (t_1-)-u_1| < |\xi (t_2-) - u_2|\).

We call a continuous non-decreasing function \((u,t) =\big ((u(s), t(s)),\,s \in [0,T]\big )\) from [0, T] to \({\mathbb {R}}\times [0,T]\) an \(M_1'\) parametrization of \(\varGamma '(\xi )\) if \(\varGamma '(\xi ) = \{(u(s), t(s)): s\in [0,T]\}\). We also just call it a parametrization of \(\xi \).

Definition 2.2

Define the \(M_1'\) metric on \({\mathbb {D}}[0,T]\) as follows:

where \(\varPi _{M_1'}(\xi )\) is the set of all \(M_1'\) parametrizations of \(\varGamma '(\xi )\).

Note that we can alternatively define the \(M_1'\) metric in such a way that the infimum in Definition 2.2 is over the parametrizations that are strictly increasing (rather than merely non-decreasing) without changing the resulting distance. An immediate implication of such an alternative definition is that a sequence of functions \(\{\xi _n\}_{n \ge 1}\) converges to \(\xi \) in \(({\mathbb {D}}[0,T], {\mathcal {T}}_{M_1'})\) if and only if there exist parametrizations \((u,t)\in \varPi _{M_1'}(\xi )\) and \((u_n,t_n) \in \varPi _{M_{1}'}(\xi _n)\) for each \(n\ge 1\) such that

as \(n \rightarrow \infty \).

The continuity of certain maps w.r.t. the \(M_1'\) topology is a key component in our whole argument. Therefore, we note some important related properties used in our proofs. We refer to Lemma B.2 in the appendix for proofs of these results.

- 1.

The functional \(S:{\mathbb {D}}[0,T]\rightarrow {\mathbb {R}}\), where \(S(\xi )=\sup _{t\in [0,T]}\xi (t)\), is continuous w.r.t. the \(M_1'\) topology at \(\xi \in {\mathbb {D}}[0,T]\) such that \(\xi (0) \ge 0\);

- 2.

the functional \(E:{\mathbb {D}}[0,T] \rightarrow {\mathbb {R}}\), where \(E(\xi )=\xi (T)\), is continuous w.r.t. the \(M_1'\) topology on \({\mathbb {D}}[0,T]\);

- 3.

the addition map \((\xi , \zeta ) \mapsto \xi + \zeta \) is a continuous map w.r.t. the \(M_1'\) topology if the functions \(\xi \) and \(\zeta \) do not have jumps of the opposite sign at the same jump times.

Now, we describe a recent result derived in [16] on sample path large deviations for random walks with heavy-tailed Weibull increments which constitutes an important cornerstone of our whole argument. We say that \(\xi \in {\mathbb {D}}[0,T]\) is a pure jump function if \(\xi = \sum _{i=1}^\infty x_i\mathbb {1}_{[u_i,T]}\) for some \(x_i\) and \(u_i\) such that \(x_i\in {\mathbb {R}}\) and \(u_i\in [0,T]\) for each i, and \(u_i\) are all distinct. Let \({\mathbb {D}}^{\uparrow }_p[0,T]\) be the subspace of \({\mathbb {D}}[0,T]\) consisting of non-decreasing pure jump functions that assume nonnegative values at the origin.

Result 2.2

Let \(S_n, n\ge 1\), be a mean-zero random walk such that \({\mathbf {E}}(e^{-\epsilon S_1})< \infty \) for some \(\epsilon >0\), \({\mathbf {P}}(S_1 \ge x) =e^{-L(x)x^{\alpha }}\) for some \(\alpha \in (0,1)\), and that \(L(x)x^{\alpha -1}\) is eventually non-increasing. Then, \({{\bar{S}}}_n\) satisfies the LDP in \(({\mathbb {D}}[0,T],{\mathcal {T}}_{M_1'})\) with speed \( L(n) n^{\alpha }\) and good rate function \(I_{M_1'}:{\mathbb {D}}[0,T] \rightarrow [0,\infty ]\) given by

Note that \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\)’s depend on a random number of \(A_j\)’s and \(S^{(i)}_j\)’s and hence may depend on an arbitrarily large number of \(A_j\)’s and \(S^{(i)}_j\)’s. This does not exactly correspond to the large-deviations framework presented in Result 2.2. To accommodate such a context, we introduce the following maps: Fix \(\gamma >0\). For any path \(\xi \), let \(\varPsi (\xi )(t)\) denote the running supremum of \(\xi \) up to time t:

For each \(\mu \), define a map \(\varPhi _\mu :{\mathbb {D}}[0,\gamma /\mu ]\rightarrow {\mathbb {D}}[0,\gamma ]\) by

where

Here, we denote \(\max \{x, 0\}\) by \([x]_+\). In words, between the origin and the supremum of \(\xi \), \(\varPhi _\mu (\xi )(s)\) is the first passage time of \(\xi \) crossing the level s; from there to the final point \(\gamma \), \(\varPhi _\mu (\xi )\) increases linearly from \(\gamma /\mu \) at rate \(1/\mu \) (instead of jumping to \(\infty \) and staying there). Define \({{\bar{A}}}_n \in {\mathbb {D}}[0,\gamma /{\mathbf {E}}A]\) as \({{\bar{A}}}_n(t) \triangleq A(nt)/n\) for \(t\in [0,\gamma /{\mathbf {E}}A]\) and \({\bar{S}}_n^{(i)}\in {\mathbb {D}}[0,\gamma ]\) as \({{\bar{S}}}_n^{(i)}(t) \triangleq S^{(i)}(nt)/n = \frac{1}{n}\sum _{j=1}^{\lfloor nt \rfloor } S_j^{(i)}\) for \(t\in [0,\gamma ]\). In deriving LDPs for \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\), we use the fact that \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) is a function of \(\{{{\bar{A}}}_n(t): t\in [0,\gamma /{\mathbf {E}}A]\}\) (and, hence, the LDP associated with it can be derived from the LDP we have for \({{\bar{A}}}_n\)) as well as the fact that \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) is close enough to \({{\bar{M}}}_n\) that they satisfy the same LDP. Similarly, we derive the LDP for \({{\bar{N}}}_n^{(i)}\) from the LDP for \({{\bar{S}}}_n^{(i)}\) using the fact that \(\varPhi _{1}({{\bar{S}}}_n^{(i)})\) is close enough to \({{\bar{N}}}_n^{(i)}\) for our purpose.

We now turn to the main result of this paper and discuss its implications.

3 Main result

Recall that Q(t) denotes the queue length of the GI / GI / d queue at time t.

Theorem 3.1

For each \(\gamma \in (0,\infty )\), it holds that

where \(c^*\) is defined as follows: for \(\gamma \ge 1 / \lambda \), \(c^*\) is equal to

while for \(\gamma < {1}/{\lambda }\), \(c^*=\infty \).

Theorem 3.1 is stated under the assumption that \({\mathbf {E}}S = 1\) for the sake of simplicity. Following a completely analogous argument with slightly more involved notation, one can obtain the following expression for \(c^*\) for the general case where \(\sigma = 1/{\mathbf {E}}S \ne 1\):

The proof of Theorem 3.1 is provided in Sect. 4 by implementing the following strategy:

- 1.

We first prove that \({{\bar{A}}}_n\) and \({{\bar{S}}}_n^{(i)}, i=1,\ldots , d\), satisfy certain LDPs in Proposition 4.1. The LDPs for the \({{\bar{S}}}_n^{(i)}\) are a consequence of Result 2.2, while the LDP for \({{\bar{A}}}_n\) is deduced by the sample path LDP in [6].

- 2.

We prove that \(\varPhi _{{\mathbf {E}}A}(\cdot )\) and \(\varPhi _{1}(\cdot )\) are essentially continuous maps—see Proposition B.1 for the more precise statement—and, hence, \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\) satisfy the LDPs deduced by the extended contraction principle (cf. Appendix A).

- 3.

We show that \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\) are equivalent to \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\), respectively, in terms of their large deviations (Proposition 4.2); so \({{\bar{M}}}_n\) and \({{\bar{N}}}^{(i)}_n\) satisfy the same LDPs (Proposition 4.3).

- 4.

By applying the contraction principle to the \({{\bar{N}}}^{(i)}\) with the continuous maps in Appendix B, we infer the (logarithmic) asymptotic upper bound of \({\mathbf {P}}(Q(\gamma n) > n)\), which can be characterized by the solution of a (non-standard) variational problem. On the other hand, the lower bound is derived by keeping track of the optimal solution associated with the LDP upper bound. The complete argument is presented in Proposition 4.4.

- 5.

We solve the variational problem in Proposition 4.5 to explicitly compute its optimal solution. The optimal solution of the variational problem provides the limiting exponent and information on the trajectory leading to a large queue length.

In the remainder of this section, we further investigate properties of the solution of the optimization problem that defines \(c^*\). In large-deviations theory, solutions of such problems are known to provide insights into the most likely way a specific rare event occurs. Such insights are physical, and more technical work is typically needed to make such insights rigorous; we refer to Lemma 4.2 of [18] for more background. The latter lemma can be applied in a relatively straightforward manner to derive a rigorous statement for the most likely way that the functional in the Gamarnik and Goldberg upper bound (cf. Result 2.1) becomes large. The computations below are mainly intended to provide physical insight and highlight differences from the well-studied case where the job sizes follow a regularly varying distribution.

We consider two different cases based on the value of \(\gamma \). If \(\gamma <1/\lambda \), no finite number of large jobs suffice, and we conjecture that the large-deviations behavior is driven by a combination of light-tailed and heavy-tailed phenomena in which the light-tailed dynamics involve pushing the arrival rate by exponential tilting to the critical value \( 1/\gamma \), followed by the heavy-tailed contribution evaluated as we explain in the following development. If \(\gamma > 1/\lambda \), we observe the following features that come in contrast to the case of regularly varying service-time tails:

- 1.

The large-deviations behavior may not be driven by the smallest number of jumps which drives the queueing system to instability (i.e., \(\left\lceil d-\lambda \right\rceil \)). In other words, in the Weibull setting, it might be more efficient to block more servers.

- 2.

It is not necessary that the servers are blocked by the same amount, i.e., asymmetry in job sizes may be the most probable scenario in certain cases.

To illustrate the first point, assume \(\gamma >1/\left( \lambda -\left\lfloor \lambda \right\rfloor \right) \), in which case \( \left\lfloor \lambda \right\rfloor \le \left\lfloor \lambda -1/\gamma \right\rfloor . \) In that particular case, the first infimum in (3.2) is over an empty set and we interpret it as \(\infty \). So the optimal solution of \(c^*\) reduces to

Let \(l^{*}\) denote the index associated with the optimal value of the expression above. Intuitively, \(d-l^{*}\) represents the optimal number of blocked servers so that the queue gets congested. Observe that \(d-\left\lfloor \lambda \right\rfloor =\left\lceil d-\lambda \right\rceil \) corresponds to the number of servers blocked in the regularly varying case. Note that if we examine

for \(t\in [0,\left\lfloor \lambda \right\rfloor ]\), then the derivative \({\dot{f}}\left( \cdot \right) \) is equal to \( {\dot{f}}\left( t\right) =\alpha \left( d-t\right) \left( \lambda -t\right) ^{-\alpha -1}-\left( \lambda -t\right) ^{-\alpha }. \) Hence,

and

with \({\dot{f}}\left( t\right) =0\) if and only if \(t=\left( \lambda -\alpha d\right) /\left( 1-\alpha \right) \). This observation allows us to conclude that whenever \(\gamma >1/\left( \lambda -\left\lfloor \lambda \right\rfloor \right) \) we can distinguish two cases. The first one occurs if

in which case \(l^{*}=\left\lfloor \lambda \right\rfloor \). This case is qualitatively consistent with the way in which large deviations occur in the regularly varying case. On the other hand, if \( \left\lfloor \lambda \right\rfloor >\frac{\left( \lambda -\alpha d\right) }{ \left( 1-\alpha \right) }, \) then we must have that

This case is the one highlighted in Feature 1 in which we may obtain \(d-l^{*}>\left\lceil d-\lambda \right\rceil \) and thus more servers are blocked compared to the large-deviations behavior observed in the regularly varying case. However, the blocked servers are symmetric in the sense that they are treated in exactly the same way.

In contrast, the second feature indicates that the typical trajectory leading to congestion may be obtained by blocking not only a specific amount to drive the system to instability, but also by blocking the corresponding servers by different loads in the large-deviations scaling. To appreciate this, we must assume that

In this case, the contribution of the infimum in (3.2) becomes relevant. To illustrate that we can obtain solutions with the second feature, consider the case \(d=2\), \(1<\lambda <2\), and

Choose \(\gamma =1/(\lambda -1)-\delta \) and \(\lambda =2-\delta ^{3} \) for \(\delta >0\) sufficiently small. We derive

concluding that

More explicitly, consider the case \(d=2\), \(\lambda =1.49\), \(\alpha =0.1\) and \(\gamma = \frac{1}{\lambda -1}-0.1\). For these values, \(\gamma _1 ^{\alpha }+\left( 1-\gamma _1 \left( \lambda -1\right) \right) ^{\alpha }<2\left( \frac{1}{\lambda }\right) ^{\alpha }\), and the most likely scenario leading to a large queue length is two big jobs arriving at the beginning and blocking both servers with different loads. On the other hand, if \(\gamma = \frac{1}{\lambda -1}\), the most likely scenario is a single big job blocking one server. These two scenarios are illustrated in Fig. 1.

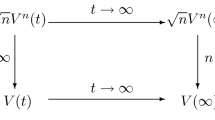

We conclude this section by presenting a future research direction. We provide asymptotics only for the transient model of the queue length process Q. For a queue in steady state, more work is needed to overcome the technicalities arising with the large-deviations framework. Specifically, one has to prove that the interchange of limits as \(\gamma \ \text {and} \ n\) tend to infinity,

is valid. We conjecture that the optimal value, similar to (3.2), of the variational problem associated with the steady-state model will consist solely of the term \(\min _{l=0}^{\left\lfloor \lambda \right\rfloor }\left\{ \left( d-l\right) \left( \frac{1}{\lambda -l }\right) ^{\alpha }\right\} \), obtained by taking \(\gamma =\infty \) in (1.2).

4 Proof of Theorem 3.1

We follow the general strategy outlined in the previous section. The first step consists of deriving the LDPs for \({\bar{A}}_n, {\bar{S}}_n^{(i)}\) which subsequently provide us with the LDPs for \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\). Let \(\mathbb D_p^\uparrow [0,\gamma /\mu ]\) be the subspace of \(\mathbb D[0,\gamma /\mu ]\) consisting of non-decreasing pure jump functions that assume nonnegative values at the origin, and define \(\zeta _\mu \in {\mathbb {D}}[0,\gamma /\mu ]\) by \(\zeta _\mu (t) \triangleq \mu t\). Let \({\mathbb {D}}^{\mu }[0,\gamma /\mu ] \triangleq \zeta _\mu +{\mathbb {D}}_p^\uparrow [0,\gamma /\mu ]\) be the subspace of non-decreasing piecewise linear functions that have slope \(\mu \) and assume nonnegative values at the origin.

4.1 Sample path large deviations for the components of the queue length upper bound

Recall that \({{\bar{A}}}_n(t) = \frac{1}{n}\sum _{j=1}^{\lfloor nt\rfloor } A_j\) and \({\bar{S}}_n^{(i)}(t) = \frac{1}{n}\sum _{j=1}^{\lfloor nt\rfloor } S_j^{(i)}\).

Proposition 4.1

\({{\bar{A}}}_n\) satisfies the LDP on \(\big ({\mathbb {D}}[0,\gamma /{\mathbf {E}}A],\, d_{M_1'}\big )\) with speed \(L(n)n^\alpha \) and good rate function

and \({{\bar{S}}}^{(i)}_n\) satisfies the LDP on \(\big (\mathbb D[0,\gamma ],\, d_{M_1'}\big )\) with speed \(L(n)n^\alpha \) and good rate function

Proof

In view of Lemma 3.2 of [6], it is easy to deduce that \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }\left( A_j-{\mathbf {E}}A\right) \) satisfies the LDP on \(({\mathbb {D}}[0,\gamma /{\mathbf {E}}A], d_{M_1'})\) with speed \(L(n)n^{\alpha }\) and with good rate function

On the other hand, due to Result 2.2, \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }\big (S_j^{(i)}-1\big )\) satisfies the LDP on \(({\mathbb {D}}[0,\gamma ], d_{M_1'})\) with good rate function

Clearly, \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }A_j - t\cdot {\mathbf {E}}A\) and \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }S_j^{(i)}- t\) are exponentially equivalent to \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }(A_j - {\mathbf {E}}A)\) and \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }\big (S_j^{(i)}-1\big )\), respectively. (For the definition of exponential equivalence, we refer to Definition A.1 in Appendix A.) Therefore, \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }A_j - t\cdot {\mathbf {E}}A\) and \(\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }S_j^{(i)}- t\) satisfy the LDPs with the good rate functions \(I_A\) and \(I_{S^{(i)}}\), respectively.

Now, consider the map \(\Upsilon _\mu :\big ({\mathbb {D}}[0,\gamma /\mu ], {\mathcal {T}}_{M_1'}\big )\rightarrow \big ({\mathbb {D}}[0,\gamma /\mu ], {\mathcal {T}}_{M_1'}\big )\), where \(\Upsilon _{\mu }(\xi ) \triangleq \xi + \zeta _\mu \). Let \(I_0(\zeta )\triangleq \inf \{I_A(\xi ): \xi \in {\mathbb {D}}[0,\gamma /{\mathbf {E}}A],\, \zeta =\Upsilon _{{\mathbf {E}}A}(\xi )\}\). From the form of \(I_A\), it is easy to see that \(I_0\) coincides with the right-hand-side of (4.1). Since this map is continuous (Lemma B.1), the contraction principle (Result A.1) applies showing that \({\bar{A}}_n = \Upsilon _{{\mathbf {E}}A}\big (\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }A_j - t\cdot {\mathbf {E}}A\big )\) satisfies the desired LDP with the good rate function \(I_0\). We next consider \({{\bar{S}}}_n^{(i)}\). Let \(I_i(\zeta )\triangleq \inf \{I_{S^{(i)}}(\xi ): \xi \in {\mathbb {D}}[0,\gamma ], \zeta =\Upsilon _{1}(\xi )\}\). Note that \(I_{S^{(i)}}(\xi )=\infty \) whenever \(\xi \notin {\mathbb {D}}_p^{\uparrow }\), and \(\xi \in {\mathbb {D}}_p^{\uparrow }\) if and only if \(\zeta =\Upsilon _{1}(\xi )\) belongs to \({\mathbb {D}}^{1}[0,\gamma ]\). Again, it is easy to check that \(I_i\) coincides with the right-hand-side of (4.2). We apply the contraction principle once more to conclude that \({{\bar{S}}}_n^{(i)} = \Upsilon _{1}\big (\frac{1}{n} \sum _{j=1}^{\lfloor nt \rfloor }S^{(i)}_j - t\big )\) satisfies the desired LDP with good rate function \(I_i\). \(\square \)

To carry out the second step of our approach, we next prove that \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \(\varPhi _{1}({{\bar{S}}}_n^{(i)})\) satisfy the same LDPs as \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\) for each \(i=1,\ldots ,d\), respectively. To show this, we next prove that \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \(\varPhi _{1}({{\bar{S}}}_n^{(i)})\) are exponentially equivalent to \({{\bar{M}}}_n\) and \({{\bar{N}}}_n^{(i)}\) for each \(i=1,\ldots ,d\), respectively.

Proposition 4.2

\({{\bar{M}}}_n\) and \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) are exponentially equivalent in \(\big ({\mathbb {D}}[0,\gamma ],\,{\mathcal {T}}_{M_1'}\big )\). \(\bar{N}_n^{(i)}\) and \(\varPhi _{1}({{\bar{S}}}_n^{(i)})\) are exponentially equivalent in \(\big ({\mathbb {D}}[0,\gamma ],\,{\mathcal {T}}_{M_1'}\big )\) for each \(i=1,\ldots , d\).

Proof

We first claim that \(d_{M_1'}({{\bar{N}}}^{(i)}_n, \varPhi _{1}(\bar{S}^{(i)}_n)) \ge \epsilon \) implies either

To see this, suppose otherwise. That is,

By the construction of \({{\bar{S}}}^{(i)}_n\) and \({{\bar{N}}}^{(i)}_n\), we see that \({{\bar{N}}}^{(i)}_n(\cdot )\) is non-decreasing and \({\bar{N}}^{(i)}_n(t) \ge \gamma \) for \(t\ge \varPsi ({{\bar{S}}}^{(i)}_n)(\gamma )\). Therefore, the second condition of (4.3) implies

On the other hand, since the slope of \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\) is 1 on \([\varPsi ({{\bar{S}}}^{(i)}_n)(\gamma ), \gamma ]\), the first condition of (4.3) implies that

and hence,

Note also that, by the construction of \(\varPhi _{1}\), \(\bar{N}^{(i)}_n(\cdot )\) and \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)(\cdot )\) coincide on \([0,\varPsi ({{\bar{S}}}^{(i)}_n)(\gamma ))\). From this, along with (4.4), we see that

which implies that \(d_{M_1'}(\varPhi _{1}({{\bar{S}}}^{(i)}_n), \bar{N}^{(i)}_n) < \epsilon \). The claim is proved. Therefore,

and we are done for the exponential equivalence between \(\bar{N}^{(i)}_n\) and \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\) if we prove that

and

For (4.5), note that \(\varPsi (\bar{S}^{(i)}_n)(\gamma ) \le \gamma - \epsilon /2\) implies that \(\bar{S}^{(i)}_n(\gamma )\le \gamma - \epsilon /2 \), and hence,

where the second inequality is due to the LDP upper bound for \(\bar{S}^{(i)}_n\) in Proposition 4.1 and the continuity of the map \(\xi \mapsto \xi (\gamma )\) as a functional from \(({\mathbb {D}}[0,\gamma ], \,d_{M_1'})\) to \({\mathbb {R}}\). For (4.6), note that \(\bar{N}^{(i)}_n(\gamma ) -\gamma \ge \epsilon /2\) implies \({{\bar{S}}}^{(i)}_n (\gamma + \epsilon /2) \le \gamma \). Considering the LDP for \({\bar{S}}_n^{(i)}\) on \({\mathbb {D}}[0,\gamma + \epsilon /2]\), we arrive at the same conclusion. This concludes the proof of the exponential equivalence between \({{\bar{M}}}_n\) and \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\). The exponential equivalence between \({{\bar{M}}}_n\) and \(\varPhi _{{\mathbf {E}}A}({\bar{A}}_n)\) is essentially identical and, hence omitted. \(\square \)

Due to the continuity of \(\varPhi _{\mu }\) over the effective domain of the rate functions \(I_i, i=1,\ldots , d\)—see Proposition B.1—we can appeal to the extended contraction principle—see Remark 1—to establish LDPs for \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \(\varPhi _{1}({{\bar{S}}}_n^{(i)})\) for each \(i=1,\ldots ,d\). Our next proposition, which constitutes the third step of our strategy, characterizes the LDPs satisfied by \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \(\varPhi _{1}({{\bar{S}}}_n^{(i)})\)—and, hence, by \({\bar{M}}_n\) and \({{\bar{N}}}^{(i)}_n\) as well. Define \( \check{\mathbb C}^{\mu }[0,\gamma ] \triangleq \{\zeta \in {\mathbb {C}}[0,\gamma ]: \zeta =\varphi _{\mu }(\xi ) \text { for some } \xi \in \mathbb D^{\mu }[0,\gamma /\mu ]\}\), where \({\mathbb {C}}[0,\gamma ]\) is the subspace of \({\mathbb {D}}[0,\gamma ]\) consisting of continuous paths, and \(\tau _s(\xi ) = \max \Big \{0,\, \sup \{t\in [0,\gamma ]: \xi (t) = s\} - \inf \{t\in [0,\gamma ]: \xi (t) = s\}\Big \}\).

Proposition 4.3

\(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \({{\bar{M}}}_n\) satisfy the LDP with speed \(L(n)n^\alpha \) and good rate function

and, for \(i=1,\ldots ,d\), \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\) and \({{\bar{N}}}_n^{(i)}\) satisfy the LDP with speed \(L(n)n^\alpha \) and good rate function

Proof

Let \({\hat{I}}'_0(\zeta )\triangleq \inf \{I_0(\xi ): \xi \in {\mathbb {D}}[0,\gamma /{\mathbf {E}}A],\,\zeta =\varPhi _{{\mathbf {E}}A}(\xi ) \}\) and \({\hat{I}}'_i(\zeta ) \triangleq \inf \{I_i(\xi ):\xi \in {\mathbb {D}}[0,\gamma ],\,\zeta =\varPhi _{1}(\xi ) \} \) for \(i=1,\ldots ,d\). Recall that in Proposition 4.1 we established the LDP for \({{\bar{A}}}_n\) and \({{\bar{S}}}_n^{(i)}\) for each \(i=1,\ldots ,d\). Note that if \(\xi \in {\mathcal {D}}_{\varPhi _{{\mathbf {E}}A}} \triangleq \{\xi \in {\mathbb {D}}[0,\gamma /{\mathbf {E}}A]: \varPhi _{{\mathbf {E}}A}(\xi )(\gamma ) - \varPhi _{{\mathbf {E}}A}(\xi )(\gamma -) > 0\}\), then there has to be s, t such that \(0\le s<t<\gamma /{\mathbf {E}}A\) and \(\varPsi (\xi )(s) = \gamma \). For such \(\xi \), \(I_0(\xi ) = \infty \). From this, along with Proposition B.1, we see that \(\varPhi _{{\mathbf {E}}A}\) is continuous on the effective domain of \(I_0\). Therefore, the extended contraction principle (see Remark 1 after Result A.1) applies, establishing the LDP for \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) with rate function \({{\hat{I}}}_0'\). The LDP for \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\) with rate function \({{\hat{I}}}_i'\) follows from the same argument. Due to the exponential equivalence derived in Proposition 4.2, \({{\bar{M}}}_n \) and \({{\bar{N}}}_n^{(i)}\) satisfy the same LDP as \(\varPhi _{{\mathbf {E}}A}({{\bar{A}}}_n)\) and \(\varPhi _{1}({{\bar{S}}}^{(i)}_n)\). Therefore, we are done once we prove that the rate functions \({{\hat{I}}}_i'\) deduced from the extended contraction principle satisfy \(I_i' = {{\hat{I}}}_i'\) for \(i=0,\ldots , d\).

Starting with \(i=0\), note that \(I_0(\xi ) = \infty \) if \(\xi \ne \zeta _{{\mathbf {E}}A}\), and hence,

where it is straightforward to check that \(\varPhi _{{\mathbf {E}}A} (\zeta _{{\mathbf {E}}A}) = \zeta _{1/{\mathbf {E}}A}\). Therefore, \(I'_0 = {{\hat{I}}}'_0\).

Turning to \(i=1,\ldots ,d\), note first that since \(I_i(\xi )=\infty \) for any \(\xi \notin {\mathbb {D}}^{1}[0,\gamma ]\),

Note also that \(\varPhi _{1}\) can be simplified on \(\mathbb D^{1}[0,\gamma ]\): it is easy to check that if \(\xi \in \mathbb D^{1}[0,\gamma ]\), \(\psi _{1}(\xi )(t) = \gamma \) and \(\varphi _{1}(\xi )(t) \le \gamma \) for \(t\in [0,\gamma ]\). Therefore, \(\varPhi _{1}(\xi ) = \varphi _{1}(\xi )\), and hence,

Now, if we define \(\varrho _{1}:{\mathbb {D}}[0,\gamma ]\rightarrow \mathbb D[0,\gamma ]\) by

then it is straightforward to check that \(I_i(\xi ) \ge I_i(\varrho _{1}(\xi ))\) and \(\varphi _{1}(\xi ) = \varphi _{1}(\varrho _{1}(\xi ))\) whenever \(\xi \in \mathbb D^{1}[0,\gamma ]\). Moreover, \(\varrho _{1}(\mathbb D^{1}[0,\gamma ])\subseteq {\mathbb {D}}^{1}[0,\gamma ]\). From these observations, we see that

Note that \(\xi \in \varrho _{1}({\mathbb {D}}^{1}[0,\gamma ])\) and \(\zeta = \varphi _{1}(\xi )\) implies that \(\zeta \in \check{\mathbb C}^{1}[0,\gamma ]\). Therefore, in the case \(\zeta \not \in \check{{\mathbb {C}}}^{1}[0,\gamma ]\), no \(\xi \in {\mathbb {D}}[0,\gamma ]\) satisfies the two conditions simultaneously, and hence,

Now we prove that \({{\hat{I}}}'_i(\zeta ) = I'_i(\zeta )\) for \(\zeta \in \check{{\mathbb {C}}}^{1}[0,\gamma ]\). We claim that, if \(\xi \in \varrho _{1}({\mathbb {D}}^{1}[0,\gamma ])\),

for all \(s\in [0,\gamma ]\). The proof of this claim is provided at the end of the proof of the current proposition. Using this claim,

Note also that \(\zeta \in \check{{\mathbb {C}}}^{1}[0,\gamma ]\) implies the existence of \(\xi \) such that \(\zeta = \varphi _{1}(\xi )\) and \(\xi \in \varrho _{1}({\mathbb {D}}^{1}[0,\gamma ])\). To see why, note that there exists \(\xi '\in {\mathbb {D}}^{1}[0,\gamma ]\) such that \(\zeta = \varphi _{1}(\xi ')\) due to the definition of \(\check{{\mathbb {C}}}^{1}[0,\gamma ]\). Let \(\xi \triangleq \varrho _{1}(\xi ')\). Then, \(\zeta = \varphi _{1}(\xi )\) and \(\xi \in \varrho _{1}(\mathbb D^{1}[0,\gamma ])\). From this observation, we see that

and hence,

for \(\zeta \in \check{{\mathbb {C}}}^{1}[0,\gamma ]\). From (4.9) and (4.10), we conclude that \(I'_i = {{\hat{I}}}_i\) for \(i=1,\ldots ,d\).

All that remains is to prove that \( \tau _s(\varphi _{1}(\xi )) = \xi (s)-\xi (s-) \) for all \(s\in [0,\gamma ]\). We consider the cases \(s> \varphi _{1} (\xi ) (\gamma )\) and \(s\le \varphi _{1} (\xi ) (\gamma )\) separately. First, suppose that \(s> \varphi _{1} (\xi ) (\gamma )\). Since \(\varphi _{1}(\xi )\) is non-decreasing, this means that \(\varphi _{1}(\xi )(t) < s\) for all \(t\in [0,\gamma ]\), and hence \(\{t\in [0,\gamma ]: \varphi _{1} (t) = s\}=\emptyset \). Therefore,

On the other hand, since \(\xi \) is continuous on \([\varphi _{1}(\xi )(\gamma ),\gamma ]\) by its construction,

Therefore,

for \(s> \varphi _{1} (\xi ) (\gamma )\).

Now we turn to the case \(s \le \varphi _{1}(\xi )(\gamma )\). Since \(\varphi _{1}(\xi )\) is continuous, this implies that there exists \(u\in [0,\gamma ]\) such that \(\varphi _{1}(\xi )(u) = s\). From the definition of \(\varphi _{1}(\xi )(u)\), it is straightforward to check that

Note that \([\xi (s-),\xi (s)] \subseteq [0,\gamma ]\) for \(s \le \varphi _{1}(\xi )(\gamma )\) due to the construction of \(\xi \). Therefore, the above equivalence (4.11) implies that \([\xi (s-),\xi (s)] = \{u\in [0,\gamma ]: \varphi _{1}(\xi )(u) = s\}\), which in turn implies that \(\xi (s-)= \inf \{u\in [0,\gamma ]: \varphi _{1}(\xi )(u) = s\}\) and \(\xi (s) = \sup \{u\in [0,\gamma ]: \varphi _{1}(\xi )(u) = s\}\). We conclude that

for \(s \le \varphi _{1}(\xi )(\gamma )\). \(\square \)

4.2 Large deviations for the queue length

Now we are ready to follow step 4) of our outlined strategy and characterize the log asymptotics of \({\mathbf {P}}\big (Q(\gamma n) > n \big )\). Recall that \(\tau _s(\xi ) \triangleq \max \Big \{0,\, \sup \{t\in [0,\gamma ]: \xi (t) = s\} - \inf \{t\in [0,\gamma ]: \xi (t) = s\}\Big \}\).

Proposition 4.4

where \(c^*\) is the solution of the following variational problem:

Proof

From Corollary 1 of [13], for any \(\epsilon >0\),

By the LDP for \({\bar{M}}_n\) (Proposition 4.3), it is straightforward to deduce that

and

Therefore, by the principle of the maximum term,

To bound the limit supremum in the equality above, we derive an LDP for

Due to Proposition 4.3 and Theorem 4.14 of [18], \(({{\bar{N}}}_n^{(1)},\ldots , {\bar{N}}_n^{(d)})\) satisfy the LDP in \(\prod _{i=1}^d {\mathbb {D}}[0,\gamma ]\) (w.r.t. the d-fold product topology of \({\mathcal {T}}_{M_1'}\)) with speed \(L(n)n^\alpha \) and rate function

Let \({\mathbb {D}}^\uparrow [0,\gamma ]\) denote the subspace of \({\mathbb {D}}[0,\gamma ]\) consisting of non-decreasing functions. Since \({\bar{N}}_n^{(i)}\in {\mathbb {D}}^\uparrow [0,\gamma ]\) with probability 1 for each \(i=1,\ldots ,d\), we can apply Lemma 4.1.5 (b) of [17] to deduce the same LDP for \((\bar{N}_n^{(i)},\ldots , {{\bar{N}}}_n^{(d)})\) in \(\prod _{i=1}^d \mathbb D^\uparrow [0,\gamma ]\). We define \(f_1:\prod _{i=1}^d \mathbb D^\uparrow [0,\gamma ]\rightarrow {\mathbb {D}}[0,\gamma ]\) as

Note that \(f_1\) is continuous since all the jumps are in one direction in its domain. Since the supremum functional \(f_2:\xi \mapsto \sup _{0\le s \le \gamma } \xi (s)\) is continuous in the range of \(f_1\)—see Lemma (B.2)—\(f_2\circ f_1\) is a continuous map as well. The functional \(f_3:\xi \mapsto \xi (\gamma )\) is also continuous w.r.t. the \(M_1'\) topology on \({\mathbb {D}}[0,\gamma ]\) due to Lemma B.2. Therefore, the continuous map \(f:\prod _{i=1}^d {\mathbb {D}}^\uparrow [0,\gamma ]\rightarrow {\mathbb {R}}\), where

is continuous and, hence, we can apply the contraction principle with f to establish the LDP for \(f({{\bar{N}}}_n^{(1)},\ldots ,\bar{N}_n^{(d)}) = \frac{\gamma }{{\mathbf {E}}A} - \sum _{i=1}^d \bar{N}^{(i)}_n(\gamma ) + \sup _{0\le s\le \gamma } \Big (\sum _{i=1}^d {{\bar{N}}}^{(i)}_n(s) -\frac{s}{{\mathbf {E}}A}\Big )\). The LDP is controlled by the good rate function

Note that since \(I'(\xi ) = \infty \) for \(\xi \notin \check{\mathbb C}^{1}[0,\gamma ]\), and \(\xi (\cdot ) \in \check{\mathbb C}^{1}[0,\gamma ]\) if and only if \(\xi (\gamma ) - \xi (\gamma - \cdot ) \in \check{{\mathbb {C}}}^{1}[0,\gamma ]\),

Therefore,

Taking \(\epsilon \rightarrow 0\), we see that \(-c^*\) is the upper bound for the left-hand side.

We move on to the matching lower bound in the case \(\gamma > 1/\lambda \). Considering the obvious coupling between Q and \((M,N^{(1)},\cdots ,N^{(d)})\), one can see that \(M(s) - \sum _{i=1}^d N^{(i)}(s)\) can be interpreted as (a lower bound of) the length of an imaginary queue at time s where the servers can start working on the jobs that have not arrived yet. Therefore, \({\mathbf {P}}(Q((a+s)n)> n) \ge {\mathbf {P}}(Q((a+s) n)> n|Q(a) = 0) \ge {\mathbf {P}}({{\bar{M}}}_n(s) - \sum _{i=1}^d {{\bar{N}}}_n^{(i)}(s) > 1)\) for any \(a \ge 0\). Let \(s^*\) be the level crossing time of the optimal solution of (4.12). Then, for any \(\epsilon >0\),

Due to Proposition 4.3,

and hence, due to (4.13), it is straightforward to deduce that

where \(A = \{(\xi _1,\ldots ,\xi _d): s^*/{\mathbf {E}}A - \sum _{i=1}^d \xi _{i}(s^*) > 1 + \epsilon \}\). Note that the optimizer \((\xi _1^*,\ldots , \xi _d^*)\) of (4.12) satisfies \(s^*/{\mathbf {E}}A - \sum _{i=1}^d \xi _i^*(s^*) \ge 1\). Consider \((\xi _1',\ldots ,\xi _d')\) obtained by increasing one of the job sizes of \((\xi _1^*,\ldots , \xi _d^*)\) by \(\delta >0\). One can always find a small enough such \(\delta \) since \(\gamma > 1/\lambda \). Note that there exists \(\epsilon >0\) such that \(s'/{\mathbf {E}}A - \sum _{i=1}^d \xi '(s') > 1+\epsilon \). Therefore,

where the second inequality is from the subadditivity of \(x\mapsto x^\alpha \). Since \(\delta \) can be chosen arbitrarily small, letting \(\delta \rightarrow 0\), we arrive at the matching lower bound. \(\square \)

4.3 Explicit solution of the variational problem associated with the queue length

We now simplify the expression of \(c^*\) given in Proposition 4.4.

Proposition 4.5

If \(\gamma <1/\lambda \), \(c^{*}=\infty \). If \(\gamma \ge 1/\lambda \), \( c^{*}\) can be computed via

which in turn equals

Proof

Recall that \({\mathbb {D}}^{1}[0,\gamma ]\) is the subspace of the Skorokhod space and consists of non-decreasing piecewise linear functions with slope 1 almost everywhere over the time horizon \([0,\gamma ]\) and nonnegative values at the origin. Recall \(\varphi _{1}(\cdot )\) defined in (2.5) as well. From these definitions, it is easy to see that Proposition 4.4 implies that the constant \(c^{*}\) is equal to

Note that this is an infinite-dimensional (functional) optimization problem. We reduce this optimization problem to a more standard problem in two main steps:

- 1.

We first show that it suffices to optimize over \(\xi _i\) of the form \(\xi _i(t) = t + x_0\) for some \(x_0 \ge 0\).

- 2.

Next, we reduce the infinite-dimensional problem over the previously mentioned set into a finite-dimensional optimization problem where the aim is to minimize a concave function over a compact polyhedral set. This allows us to invoke Corollary 32.3.1 of [20], which enables us to calculate the optimal solution by finding the extreme points of the feasible region.

Step 1 Suppose that \(\left( \zeta _{1},\ldots ,\zeta _{d}\right) \) is an optimal solution associated with (4.16) and recall that \(\zeta _i=\varphi _1(\xi _i)\). We now claim that the corresponding functions \(\xi _{1},\ldots ,\xi _{d}\) have at most one jump. We prove this by contradiction. Assume that at least one of the \(\xi _i\) exhibits two jumps at times \(u_0\) and \(u_1\) of size \(x_0\) and \(x_1\), respectively, with \(0\le u_{0}<u_{1}\le \gamma \). Let

Intuitively, we constructed a new path, \({\bar{\xi }}_{i}\left( \cdot \right) \) by merging the two jumps into a big jump at time \(u_0\). Since \(x_{0},x_{1}\) are nonnegative, then we have that

Figure 2 illustrates this.

Now, let \({\bar{\zeta }}_{i}=\varphi _{1}\left( {\bar{\xi }} _{i}\right) \). From the definition of \(\varphi _1\), we obviously have that

Therefore, due to (4.17), \((\zeta _{1},\ldots ,{\zeta }_{i-1},{\bar{\zeta }}_{i},{\zeta }_{i+1},\ldots ,\zeta _{d})\) is also a feasible solution for (4.16). Moreover, by the following observation:

along with the fact that \( \left( x_{0}+x_{1}\right) ^{\alpha }<x_{0}^{\alpha }+x_{1}^{\alpha }, \) we deduce that \((\zeta _{1},\ldots ,{\zeta }_{i-1},{\bar{\zeta }}_{i},{\zeta }_{i+1},\ldots ,\zeta _{d})\) strictly improves the value of the objective function in (4.16). That is, \((\zeta _{1},\ldots ,\zeta _{d})\) cannot be an optimal solution. The argument can be iterated when \(\xi _i\) exhibits more than two jumps.

In conclusion, we proceed assuming that every \(\xi _{i}\left( \cdot \right) \) has a single jump of size \(x_{i}>0\) at some time \(u_{i} \in [0,\gamma ]\), and hence we can use the following representation (Fig. 3):

The pictures above depict the graph of a function \(\xi _i\) in \({\mathbb {D}}^1[0,\gamma ]\) and the graph of the function \(\zeta _i=\varphi _1(\xi _i)\). The function \(\xi _i\) has one jump of size \(x_i\) and this translates to a flat line under the transformation \(\varphi _1\). In conclusion, we infer that \(\zeta _i\) has the representation \(\zeta _i(s) =\min \left( s,u_{i}\right) +\left( s-x_{i}-u_{i}\right) ^{+}\)

To complete the first step of our construction, we show that, without loss of generality, jumps can be assumed to occur at time 0. Suppose that \(u_i>0\) for some \(i\in \{1,\ldots ,d\}\). Define

We constructed a new path \(\xi '\) by moving a jump time to 0. Again, it is easy to verify that \(\xi '(s) \ge \xi (s)\) for all \(s\in [0,\gamma ]\), and if we let \({\zeta }'_{i}=\varphi _{1 }\left( \xi '_{i}\right) \), then \( \zeta '_{i}\left( s\right) \le \zeta _{i}\left( s\right) \) for all \(s\in [0,\gamma ]\). Consequently, we preserve feasibility without increasing the value of the objective function in (4.16). Therefore, w.l.o.g. we can assume that \(\xi _i\) that correspond to the optimal solution of (4.16) are those paths that have at most one discontinuity at time zero and then they linearly increase with slope 1. That is, the solution \((\zeta _1,\ldots ,\zeta _d)\) takes the following form: for each \(i=1,\ldots , d\),

Step 2 Thanks to the reduction in (4.19), we see that for each \(i=1,\ldots ,d\) we have that \(\tau _0(\zeta _i)=x_i\), while \(\tau _s(\zeta _i)=0\) for every \(s>0\). Thus, we see that (4.12) takes the form

We continue simplifying the optimization problem in (4.20), reducing it to a polyhedral optimization problem. Let \(x=\left( x_{1},\ldots ,x_{d}\right) \) be an optimal solution so that its coordinates are sorted in increasing order: \(0\le x_{1}\le \cdots \le x_{d} \le \gamma \). Note that the supremum of \(l(s;x) \triangleq \lambda s-\sum _{i=1}^{d}(s-x_{i})^{+}\) over \(s\in [0,\gamma ]\) cannot be obtained strictly before \(x_d\), since in such a case, a sufficiently small perturbation of \(x_d\) to its left leads to a strictly smaller value of the objective function without changing the supremum of l(s; x), which is a contradiction to the assumption that x is an optimal solution. On the other hand, from the stability assumption \(\lambda < d\), the slope of l(s; x) is negative after \(x_d\), and hence its supremum cannot be obtained strictly after \(x_d\). Therefore, the supremum of l(s; x) has to be attained at \(s=x_d\). Now, set \(a_1 = x_1\) and \(a_{i}=x_{i}-x_{i-1}\) for \(i=2,\ldots , d\). Then, \(x_i = a_1+\ldots +a_i\) for \(i=1,\ldots , d\), and \(l(x_d; x) =\lambda \left( a_{1}+\cdots +a_{d}\right) -\sum _{i=1}^{d}(a_{1}+\cdots +a_{d}-\sum _{j=1}^{i}a_{j})\), and hence (4.20) is equivalent to

and by simplifying the constraints we arrive at

Recall \(0<\lambda <d\), and let m be any of the integers in the set \( \{1,\ldots ,d-1\}\). If \(\left( \lambda -m\right) <0\), we deduce that \(a_{m+1}=0\). If this was not the case, we could construct a feasible solution which reduces the value of the objective function and also satisfies the previously mentioned conditions. That is, the variational problem has an even simpler representation than the one above:

Recall that \(c^{*}=\infty \) if \(\gamma < 1/\lambda \). Assuming \(\gamma >1/\lambda \), we recover the optimal solution by evaluating the extreme points associated with the polyhedron described by the constraints (4.22), (4.23), and (4.24). The objective function in (4.21) is concave and lower bounded inside the feasible region. In addition, the feasible region is a compact polyhedron. Therefore, the optimizer is achieved at some extreme point in the feasible region (see Corollary 32.3.1 [20]).

Depending on the value of \(\gamma \), we indicate how to compute the basic feasible solutions related to (4.21). Firstly, we treat the case \(\gamma > 1/(\lambda -\left\lfloor \lambda \right\rfloor )\), where \(\lambda \) is not an integer. After that, we treat the general case \(\gamma >1/\lambda \). Given that \(\lambda > \left\lfloor \lambda \right\rfloor \), observe that if \(\gamma \ge 1/(\lambda -\left\lfloor \lambda \right\rfloor )\), then any solution satisfying (4.22) and (4.24) automatically satisfies (4.23). That is, we can ignore the constraint (4.23) by assuming that \(\gamma \ge 1/(\lambda -\left\lfloor \lambda \right\rfloor )\). Consequently, we only need to characterize the extreme points of (4.22), (4.24). Let \({\check{a}}_i=1/(\lambda -i+1)\) for \(i=1,\ldots ,\lfloor \lambda \rfloor +1\). Let \({\check{x}}_i\) denote the vector of the ith extreme point. That is, \({\check{x}}_i=(0,\ldots ,{\check{a}}_i,\ldots ,0)\). Calculating the value of the objective function over all extreme points, assuming that \(\gamma \ge 1/\left( \lambda -\left\lfloor \lambda \right\rfloor \right) \), we get

Next, we consider the general case \( \gamma > 1/\lambda \). We show that additional extreme points arise by considering the inclusion of (4.23) and this might potentially give rise to solutions in which large service requirements are not equal across all the servers. Note that if \(\lambda =\left\lfloor \lambda \right\rfloor \), we must have that \(a_{\left\lfloor \lambda \right\rfloor +1}=0\). To see this, suppose that is not the case. Then, a feasible solution would be of the form \(v=(a_1,\ldots ,a_i,\ldots ,a_{\left\lfloor \lambda \right\rfloor +1})\). By setting \(a_{\left\lfloor \lambda \right\rfloor +1}=0\), we construct another solution, \(v'=(a_1,\ldots ,a_i,\ldots ,a_{\left\lfloor \lambda \right\rfloor },0)\). Observe that \(v'\) is a feasible solution and it reduces the value of the objective function (4.21) in comparison with v. Our subsequent analysis also includes the case \(\lambda =\left\lfloor \lambda \right\rfloor \).

We identify the extreme points of (4.22), (4.23), (4.24). For that, we introduce the slack variable \(a_{0}\ge 0\).

From elementary results in polyhedral combinatorics, we know that extreme points correspond to basic feasible solutions. By choosing \(a_{i+1}=1/(\lambda -i)\) and \( a_{0}=\gamma -a_{i+1}\), we recover basic solutions which correspond to the extreme points identified by the equations above. Recall if \(\lambda =\left\lfloor \lambda \right\rfloor \) we must have that \(a_{\left\lfloor \lambda \right\rfloor +1}=0\). That is, we can safely assume that \(\lambda -i>0\). We observe that \(\gamma \ge 1/(\lambda -i)\) implies that \(a_{i+1}=1/(\lambda -i)\) and \(a_{j}=0\) for \(j\ne i+1\) which is a basic feasible solution for (4.26). Additional basic solutions are obtained by solving

Suppose that \(0\le l<k<\lambda \). This system of equations always has a unique solution because the equations are linearly independent, and hence

Therefore, the solution \(\left( {\bar{a}}_{k+1},{\bar{a}}_{l+1}\right) \) is given by

If we want \(\left( {\bar{a}}_{k+1},{\bar{a}}_{l+1}\right) \) to be both basic and feasible, we must have that \( 1/\left( \lambda -l\right) \le \gamma \le 1/\left( \lambda -k\right) \). Now, we calculate the value of the objective function for \(a_{k+1}={\bar{a}}_{k+1}\), \(a_{l+1}={\bar{a}}_{l+1}\) , and \(a_{i+1}=0\) for \(i\notin \{k,l\}\). That is,

Recall \(1/\left( \lambda -l\right) \le \gamma \le 1/\left( \lambda -k\right) \). As we mentioned before, if \(\gamma =1/\left( \lambda -k\right) \), then we have that \( a_{k+1}=1/\left( \lambda -k\right) \) and \(a_{i}=0\) for \(i\ne k+1\) which is a feasible extreme point. Furthermore, we see that under this particular solution the objective function has a smaller value than the solution involving \({\bar{a}}_{k+1}\) and \({\bar{a}}_{l+1}\). To illustrate this, observe that

Therefore, \(({\bar{a}}_{k+1}\) and \({\bar{a}}_{l+1})\) would be an optimal solution under the condition \(1/\left( \lambda -l\right) \le \gamma <1/\left( \lambda -k\right) \). Due to (4.25) and (4.29), we conclude that the optimal value of the variational problem (4.16) is given by

By simplifying the expression above, we arrive at (4.15). \(\square \)

List of symbols

- \({\mathbb {D}}[0,T]\) :

-

The Skorokhod space–space of càdlàg functions—over the domain [0, T]

- \({\mathbb {D}}^{\uparrow }[0,T]\) :

-

The subspace of \({\mathbb {D}}[0,T]\) consisting of non-decreasing functions that assume nonnegative values at the origin

- \({\mathbb {D}}_p^{\uparrow }[0,T]\) :

-

The subspace of \({\mathbb {D}}[0,T]\) consisting of non-decreasing pure jump functions that assume nonnegative values at the origin

- \({\mathbb {D}}^{\mu }[0,T]\) :

-

The subspace of \({\mathbb {D}}[0,T]\) consisting of non-decreasing piecewise linear functions with slope \(\mu \) almost everywhere and nonnegative values at the origin.

- \(\check{\mathbb {C}}^{\mu }[0,T]\) :

-

The subspace of \({\mathbb {D}}[0,T]\) consisting of continuous functions which are piecewise linear with slope 0 or \(1/\mu \)

- \({\mathcal {T}}_{M_1'}\) :

-

The \(M_1'\) topology

- \(d_{M_1'}\) :

-

The \(M_1'\) metric

- Q(t):

-

The queue length at time t

- d :

-

The number of servers of the multiple-server queue

- \(\lambda \) :

-

The arrival rate associated with the multiple-server queue

References

Pollaczek, Félix: Théorie analytique des problèmes stochastiques relatifs à un groupe de lignes téléphoniques avec dispositif d’attente (1961)

Kiefer, J., Wolfowitz, J.: On the theory of queues with many servers. Trans. Am. Math. Soc. 78, 1–18 (1955)

Iglehart, D.L., Whitt, W.: Multiple channel queues in heavy traffic. I. Adv. Appl. Probab. 2, 150–177 (1970)

Pang, G., Talreja, R., Whitt, W.: Martingale proofs of many-server heavy-traffic limits for Markovian queues. Probab. Surv. 4, 193–267 (2007)

Sadowsky, J.S.: The probability of large queue lengths and waiting times in a heterogeneous multiserver queue. II. Positive recurrence and logarithmic limits. Adv. Appl. Probab. 27(2), 567–583 (1995)

Puhalskii, A.: Large deviation analysis of the single server queue. Queueing Syst. 21(1), 5–66 (1995)

Foss, S., Korshunov, D., Zachary, S.: An Introduction to Heavy-Tailed and Subexponential Distributions. Springer Series in Operations Research and Financial Engineering, 2nd edn. Springer, New York (2013)

Foss, S., Korshunov, D.: Sampling at a random time with a heavy-tailed distribution. Markov Process. Relat. Fields 6(4), 543–568 (2000)

Whitt, W.: The impact of a heavy-tailed service-time distribution upon the \(M/GI/s\) waiting-time distribution. Queue. Syst. Theory Appl. 36(1–3), 71–87 (2000)

Scheller-Wolf, A., Vesilo, R.: Sink or swim together: necessary and sufficient conditions for finite moments of workload components in FIFO multiserver queues. Queue. Syst. 67(1), 47–61 (2011)

Foss, S., Korshunov, D.: Heavy tails in multi-server queue. Queue. Syst. Theory Appl. 52(1), 31–48 (2006)

Foss, S., Korshunov, D.: On large delays in multi-server queues with heavy tails. Math. Oper. Res. 37(2), 201–218 (2012)

Gamarnik, D., Goldberg, D.A.: Steady-state \(GI/G/n \) queue in the Halfin-Whitt regime. Ann. Appl. Probab. 23(6), 2382–2419 (2013)

Ge, D., Jiang, X., Ye, Y.: A note on the complexity of \(L_p\) minimization. Math. Program. 129(2, Ser. B), 285–299 (2011)

Puhalskii, A.A, Whitt, W.: Functional large deviation principles for first-passage-time processes. Ann. Appl. Probab. 362–381 (1997)

Bazhba, M., Blanchet, J., Rhee, C.-H., Zwart, B.: Sample-path large deviations for Lévy processes and random walks with Weibull increments. arXiv:1710.04013 (2017)

Dembo, A., Zeitouni, O.: Large Deviations Techniques and Applications. Springer, Berlin (2010)

Ganesh, A.J., O’Connell, N., Wischik, D.J.: Big Queues. Springer, Berlin (2004)

Feng, J., Kurtz, T.G: Large deviations for stochastic processes. Number 131. American Mathematical Soc. (2006)

Rockafellar, T.: Convex Analysis. Princeton Mathematical Series. Princeton University Press, Princeton (1970)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A: Some large-deviations theory results

In this appendix, we include some important results and concepts widely used in the field of large deviations as well as in this paper. We have already mentioned the conditions under which a stochastic process Y satisfies a large-deviations principle. Let f be a map between two topological spaces. The following result, Theorem 4.2.1 in [17], formulates the conditions so that the transformation f(Y) satisfies an LDP also.

Result A.1

(Contraction principle) Let \({\mathcal {X}}\) and \({\mathcal {Y}}\) be Hausdorff topological spaces and \(f:{\mathcal {X}} \rightarrow {\mathcal {Y}}\) a continuous function. Consider a good rate function \(I:{\mathcal {X}} \rightarrow [0,\infty ].\)

- (a)

For each \(y \in {\mathcal {Y}}\), define

$$\begin{aligned}I'(y) \triangleq \inf \{I(x):x \in {\mathcal {X}}, y=f(x)\}.\end{aligned}$$Then, \(I'\) is a good rate function on \({\mathcal {Y}}\), where as usual the infimum over the empty set is taken as \(\infty \).

- (b)

If I controls the LDP associated with a family of probability measures \(\mu _{\epsilon }\) on \({\mathcal {X}}\), then \(I'\) controls the LDP for the family of probability measures \(\{ \mu _{\epsilon }\circ f^{-1} \}\) on \({\mathcal {Y}}\).

Remark 1

The theorem above holds under the weaker condition that f is continuous over the effective domain of the rate function I—i.e., on \(\{x\in {\mathcal {X}}: I(x) < \infty \}\). This particular extension of the contraction principle is called the extended contraction principle (p.367 of [15]; Theorem 2.1 of [6]).

Now, we review the notion of exponential equivalence. We start with the definition.

Definition A.1

Let \(({\mathcal {Y}},d)\) be a metric space. The probability measures \(\{ \mu _{\epsilon } \}\) and \(\{ {\tilde{\mu }}_{\epsilon } \}\) are called exponentially equivalent if there exist probability measures \(\{(\varOmega , {\mathcal {B}}_{\epsilon }, P_{\epsilon })\}\) and two families of \({\mathcal {Y}}\)-valued random variables \(\{ Z_{\epsilon } \}\) and \(\{{\tilde{Z}}_{\epsilon }\}\) with joint laws \(P_{\epsilon }\) and marginals \(\{\mu _{\epsilon }\}\) and \(\{{\tilde{\mu }}_{\epsilon }\}\), respectively, such that the following condition is satisfied:

For each \(\delta >0\), the set \(\{\omega : d({\tilde{Z}}_{\epsilon },{Z}_{\epsilon }) > \delta \}\) is \({\mathcal {B}}_{\epsilon }\) measurable, and

Intuitively, two random variables are exponentially equivalent if their distance is asymptotically negligible.

B: Continuity of some useful functionals in the \(M_1'\) topology

In the next proposition, we prove that the map \(\varPhi _{\mu }\) is sufficiently continuous for the application of extended contraction principle. Define \({\mathcal {D}}_{\varPhi _\mu } \triangleq \{\xi \in {\mathbb {D}}[0,\gamma /\mu ]: \varPhi _\mu (\xi )(\gamma ) - \varPhi _\mu (\xi )(\gamma -) > 0 \ \mathrm {and} \ \xi (0) \ge 0\}\).

Proposition B.1

For each \(\mu \in {\mathbb {R}}\), \(\varPhi _{\mu }: {\mathbb {D}} [0, \gamma /\mu ] \rightarrow {\mathbb {D}}[0,\gamma ]\) is continuous on \(\mathcal D_{\varPhi _\mu }^{\mathsf {c}}\) w.r.t. the \(M_1'\) topology.

Proof

Note that \(\varPhi _\mu = \varPhi _\mu \circ \varPsi \) and \(\varPsi \) is continuous, so we only need to check the continuity of \(\varPhi _\mu \) over the range of \(\varPsi \), in particular non-decreasing functions. Let \(\xi \) be a non-decreasing function in \({\mathbb {D}}[0,\gamma /\mu ]\). We consider two cases separately: \(\varPhi _\mu (\xi )(\gamma ) > \gamma /\mu \) and \(\varPhi _\mu (\xi )(\gamma ) \le \gamma /\mu \).

We start with the case \(\varPhi _\mu (\xi )(\gamma ) > \gamma /\mu \). Pick \(\epsilon >0\) such that \(\varPhi _\mu (\xi )(\gamma )> \gamma /\mu + 2\epsilon \) and \(\xi (\gamma /\mu ) + 2\epsilon < \gamma \). For such an \(\epsilon \), it is straightforward to check that \(d_{M_1'}(\zeta , \xi ) < \epsilon \) implies \(\varPhi _\mu (\zeta )(\gamma ) > \gamma /\mu \) and \(\zeta \) never exceeds \(\gamma \) on \([0,\gamma /\mu ]\). Therefore, the parametrizations of \(\varPhi _\mu (\xi )\) and \(\varPhi _\mu (\zeta )\) consist of the parametrizations—with the roles of space and time interchanged—of the original \(\xi \) and \(\zeta \) concatenated with the linear part coming from \(\psi _\mu \). More specifically, suppose that \((x,t)\in \varGamma (\xi )\) and \((y,r)\in \varGamma (\zeta )\) are parametrizations of \(\xi \) and \(\zeta \). Since \(\xi \) is non-decreasing, if we define on \(s\in [0,T]\)

then \((x',t')\in \varGamma (\varPhi _\mu (\xi ))\), \((y',r')\in \varGamma (\varPhi _\mu (\zeta ))\). Noting that

and taking the infimum over all possible parametrizations, we conclude that \(d_{M_1'}(\varPhi _\mu (\xi ), \varPhi _\mu (\zeta )) \le (1+\mu ^{-1})d_{M_1'}(\xi , \zeta ) \le (1+\mu ^{-1})\epsilon \), and hence \(\varPhi _\mu \) is continuous at \(\xi \).

Turning to the case \(\varPhi _\mu (\xi )(\gamma ) \le \gamma /\mu \), let \(\epsilon >0\) be given. Due to the assumption that \(\varPhi _\mu (\xi )\) is continuous at \(\gamma \), there has to be a \(\delta >0\) such that \(\varphi _\mu (\xi )(\gamma ) + \epsilon < \varphi _\mu (\xi )(\gamma - \delta ) \le \varphi _\mu (\xi )(\gamma + \delta ) \le \varphi _\mu (\xi )(\gamma ) + \epsilon \). We prove that if \(d_{M_1'}(\xi , \zeta ) < \delta \wedge \epsilon \), then \(d_{M_1'}(\varPhi _\mu (\xi ), \varPhi _\mu (\zeta )) \le 8\epsilon \). Since the case where \(\varPhi _\mu (\zeta )(\gamma ) \ge \gamma /\mu \) is similar to the above argument, we focus on the case \(\varPhi _\mu (\zeta )(\gamma ) < \gamma /\mu \); that is, \(\zeta \) also crosses level \(\gamma \) before \(\gamma /\mu \). Let \((x,t)\in \varGamma (\xi )\) and \((y,r)\in \varGamma (\zeta )\) be such that \(\Vert x-y\Vert _\infty + \Vert t - r\Vert _\infty < \delta \). Let \(s_x \triangleq \inf \{s\ge 0: x(s) > \gamma \}\) and \(s_y \triangleq \inf \{s\ge 0: y(s) > \gamma \}\). Then, it is straightforward to check \(t(s_x) = \varphi _\mu (\xi )(\gamma )\) and \(r(s_y) = \varphi _\mu (\zeta )(\gamma )\). Of course, \(x(s_x) = \gamma \) and \(y(s_y) = \gamma \). If we set \(x'(s) \triangleq t(s\wedge s_x)\), \(t'(s) \triangleq x(s\wedge s_x)\), and \(y'(s) \triangleq r(s\wedge s_y)\), \(r'(s) \triangleq y(s\wedge s_y)\), then

Now we argue that \(t(s_x) - \epsilon \le t(s_y) \le t(s_x) + \epsilon \). To see this, note first that \(x(s_y) < x(s_x) + \delta = \gamma + \delta \), and hence,

On the other hand,

where the last inequality is from \(\xi (t(s_y)) \ge x(s_y) > x(s_x) - \delta = \gamma - \delta \) and the definition of \(\varphi _\mu \). Therefore, \(\Vert x'-y'\Vert _\infty \le 2 \delta + 2\epsilon < 4\epsilon \). Now we are left with showing that \(\Vert t'-r'\Vert _\infty \) can be bounded in terms of \(\epsilon \).

Therefore, \(d_{M_1'}\big (\varPhi _\mu (\xi ), \varPhi _\mu (\zeta )\big ) \le \Vert x'-y'\Vert _\infty + \Vert t'-r'\Vert _\infty < 8\epsilon \). \(\square \)

Lemma B.1

The map \(\Upsilon _{\mu }: {\mathbb {D}}[0,\gamma /\mu ] \rightarrow {\mathbb {D}}[0,\gamma /\mu ]\), where \(\Upsilon _{\mu }(\xi ) \triangleq \xi + \zeta _{\mu }\), is continuous w.r.t. the \(M_1'\) topology on \({\mathbb {D}}[0,\gamma /\mu ]\).

Proof

Suppose that \(\xi _n\rightarrow \xi \) in \({\mathbb {D}}[0,\gamma /\mu ]\) w.r.t. the \(M_1'\) topology. As a result, there exist parametrizations \((u_n(s),t_n(s))\) of \(\xi _n\) and (u(s), t(s)) of \(\xi \) such that

This implies that \( \max \{\sup _{s\le {\gamma }/\mu }|u_n(s)-u(s)|,\sup _{s\le {\gamma }/\mu }|t_n(s)-t(s)|\}\rightarrow 0\,\,\text {as}\,\,n\rightarrow \infty . \) Observe that if (u(s), t(s)) is a parametrization for \(\xi \), then \((u(s)+\mu \cdot t(s),t(s))\) is a parametrization for \(\Upsilon _{\mu }(\xi )\). Consequently,

Thus, \(\Upsilon _{\mu }(\xi _n)\rightarrow \Upsilon _{\mu }(\xi )\) in the \(M_1'\) topology, proving that the map is continuous.\(\square \)

The next lemma provides the continuity of two functionals used in our large-deviation analysis.

Lemma B.2

For any \(T>0\),

- (i)

The functional \(E:{\mathbb {D}}[0,T] \rightarrow {\mathbb {R}}\), where \(E(\xi )=\xi (T)\), is continuous w.r.t. the \(M_1'\) topology on \({\mathbb {D}}[0,T]\).

- (ii)

The functional \(S:{\mathbb {D}}[0,T] \rightarrow {\mathbb {R}}\), where \(S(\xi )=\sup _{t \in [0,T]}\xi (t)\), is continuous w.r.t. the \(M_1'\) topology on \(\xi \in {\mathbb {D}}[0,T]\) such that \(\xi (0) \ge 0\).

Proof

Consider a sequence \(\xi _n\) such that \(d_{M_1'}(\xi _n, \xi ) \rightarrow 0\). From (2.3), there exists a parametrization (u(s), t(s)) of the completed graph of \(\xi \) and a parametrization \((u_n(s),t_n(s))\) of the completed graph of \(\xi _n\) such that

For i), note that \(|u_n(T) - u(T)| \le \sup _{s\in [0,T]}|u_n(s)-u(s)|\rightarrow 0\), while \(\xi _n(T)=u_n(T)\) and \(\xi (T)=u(T)\). Therefore, \(|E(\xi ) - E(\xi _n)| = |\xi _n(T)-\xi (T)| \rightarrow 0\) as \(n \rightarrow \infty \). Therefore, E is a continuous functional. For ii), suppose that \(\xi (0)\ge 0\). For any \(\epsilon >0\), there exists N such that \(\xi _n(0) \ge -\epsilon \) for \(n>N\). Now, from the definition of parametrization and the nonnegativity of \(\xi (0)\), we see that \(\sup _{s \in [0,T]}u(s)=\sup _{s \in [0,T]}\xi (s)\). Similarly, we can show that \(|\sup _{s \in [0,T]}u_n(s) - \sup _{s \in [0,T]}\xi _n(s)| < \epsilon \). Therefore,

Since \(\epsilon \) was arbitrary, this proves the continuity of S at \(\xi \).

\(\square \)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bazhba, M., Blanchet, J., Rhee, CH. et al. Queue length asymptotics for the multiple-server queue with heavy-tailed Weibull service times. Queueing Syst 93, 195–226 (2019). https://doi.org/10.1007/s11134-019-09640-z

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11134-019-09640-z