Abstract

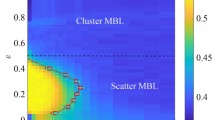

We consider a highly anisotropic \(d=2\) Ising spin model whose precise definition can be found at the beginning of Sect. 2. In this model the spins on a same horizontal line (layer) interact via a \(d=1\) Kac potential while the vertical interaction is between nearest neighbors, both interactions being ferromagnetic. The temperature is set equal to 1 which is the mean field critical value, so that the mean field limit for the Kac potential alone does not have a spontaneous magnetization. We compute the phase diagram of the full system in the Lebowitz–Penrose limit showing that due to the vertical interaction it has a spontaneous magnetization. The result is not covered by the Lebowitz–Penrose theory because our Kac potential has support on regions of positive codimension.


References

Fontes, R.L., Marchetti, D., Merola, I., Presutti, E., Vares, M.E.: Phase transitions in layered systems. J. Stat. Phys. 157, 407–421 (2014)

Fontes, R.L., Marchetti, D., Merola, I., Presutti, E., Vares, M.E.: Layered systems at the mean field critical temperature. J. Stat. Phys. 161, 91–122 (2015)

Kotecký, R., Preiss, D.: Cluster expansion for abstract polymer models. Commun. Math. Phys. 103, 491–498 (1986)

Lebowitz, J.L., Penrose, O.: Rigorous treatment of the Van der Waals–Maxwell theory of the liquid vapour transition. J. Math. Phys. 7, 98–113 (1966)

Merola, I.: Asymptotic expansion of the pressure in the inverse interaction range. J. Stat. Phys. 95, 745–758 (1999). ISSN: 0022-4715

Presutti, E.: Scaling Limits in Statistical Mechanics and Microstructures in Continuum Mechanics. Theoretical and Mathematical Physics. Springer, Berlin (2009)

Zhang, Y., Tang, T.-T., Girit, C., Hao, Z., Martin, M.C., Zettl, A., Crommie, M.F., Shen, Y.R., Wang, F.: Direct observation of a widely tunable bandgap in bilayer graphene. Nature 459, 820–823 (2009)

Rutter, G., Jung, S., Klimov, N., Newell, D., Zhitenev, N., Stroscio, J.: Microscopic polarization in bilayer graphene. Nat. Phys. 7, 649–655 (2011)

LeRoy, B.J., Yankowitz, M.: Emergent complex states in bilayer graphene. Science 345, 31–32 (2014)

Schwierz, F.: Graphene transistors. Nat. Nanotechnol. 5, 487–496 (2010)

Shahil, K.M.F., Balandin, A.A.: Graphene-multilayer graphene nanocomposites as highly efficient thermal interface materials. Nano Lett. 12, 861–867 (2012)

Yankowitz, M., Wang, J.I.-J., Birdwell, A.G., Chen, Yu-An, Watanabe, K., Taniguchi, T., Jacquod, P., San-Jose, P., Jarillo-Herrero, P., LeRoy, B.J.: Electric field control of soliton motion and stacking in trilayer graphene. Nat. Mater. 13, 786–789 (2014)

Acknowledgments

We are indebted to the referees of JSP for many helpful comments. In particular following the suggestion of a referee we have modified our original definition of polymers greatly simplifying some of the computations.

Appendices

Appendix 1: Proof of Theorem 2

We preliminarily observe that for any \(h_\mathrm{ext}>0\) there is m such that \(h_\mathrm{ext} +m = f'_{\lambda }(m)\): in fact \(h_\mathrm{ext} +m - f'_{\lambda }(m)\) is positive at \(m=0\) and negative as \(m\rightarrow 1\), with \(f'_{\lambda }(m)\) continuous. If there are several m for which the equality holds, we arbitrarily fix one of them and denote it by \(m_{h_\mathrm{ext}}\); we shall see a posteriori that the solution is unique. To compute the left hand side of (3.2) we introduce an interpolating hamiltonian. For \(t\in [0,1]\) we set:

Denote by \(Z^0_L\) the partition function with hamiltonian \(H^0_L\), by \(P_{t,{ \gamma },L}\) the Gibbs measure with hamiltonian \(H_{t,{ \gamma },L}\) and by \(E_{t,{ \gamma },L}\) its expectation, then

The thermodynamic limit of \(\log Z^0_L/|{\Lambda }|\) is the pressure of the \(d=1\) Ising model with only vertical interactions and magnetic field \(h_\mathrm{ext} +m_{h_\mathrm{ext}}\), thus, by the choice of \(m_{h_\mathrm{ext}}\):

To compute the left hand side of (3.2) we need to control the expectation on the right hand side of (6.2), which we will do by exploiting the assumptions on \(h_\mathrm{ext}\): as we are going to show, they imply the validity of the Dobrushin uniqueness criterion. The criterion involves the Vaserstein distance of the conditional probabilities \(P_{t,{ \gamma },L}[ {\sigma }(x,i)\;|\; \{{\sigma }(y,j)\}]\) of a spin \({\sigma }(x,i)\) under different values of the conditioning spins \(\{{\sigma }(y,j), (y,j)\ne (x,i)\}\). In the case of Ising spins such Vaserstein distance is simply equal to the absolute value of the difference of the conditional expectations and the criterion requires that for any pair of spin configurations outside (x, i)

Since

(\(J_{{ \gamma },L}(x,y)\) is the kernel \(J_{ \gamma }(x,y)\) with periodic boundary conditions in \({\Lambda }\)) one can easily check that (6.4) is satisfied with r as in (3.1) and \(r(x,i;y,j)=r_{{ \gamma },L}(x,i;y,j)\) with

By the Dobrushin uniqueness theorem there is a unique DLR measure \(P_{t,{ \gamma }}\) which is the weak limit of \(P_{t,{ \gamma },L}\) as \(L\rightarrow \infty \). We denote by \(m_{t,{ \gamma },L}\) and \(m_{t,{ \gamma }}\) the average of a spin under \(P_{t,{ \gamma },L}\) and \(P_{t,{ \gamma }}\). We call \(\nu ^0_L\) and \(\nu ^0\) the measures \(P_{t,{ \gamma },L}\) and \(P_{t,{ \gamma }}\) when \(t=0\), thus \(\nu ^0_L\) is the Gibbs measure for the Ising system in \({\Lambda }\) with hamiltonian \(H^\mathrm{vert}\) and magnetic field \(h_\mathrm{ext}+m_{h_\mathrm{ext}}\), \(\nu ^0\) denoting its thermodynamic limit. We then have

It also follows from the Dobrushin theory that under \(P_{t,{ \gamma },L}\) the spins are weakly correlated: let \(z\ne x\) then

where the \(*\)sum means that all the pairs \((y_k,j_k), k=1,\ldots ,n\) must differ from (z, i). Thus there is a constant c so that

and also (after using Chebyshev)

We can also use the Dobrushin technique to estimate the Vaserstein distance between \(P_{t,{ \gamma },L}\) and \(\nu ^0_L\). The key bound is again the Vaserstein distance between single spin conditional expectations. We have

thus, calling \(A:= \cosh ^{-2}( h_\mathrm{ext}-1-2{\lambda })\), we can bound the absolute value of the left hand side of (6.10) by:

After adding and subtracting \(m_{t,{ \gamma },L}\) to each \({\sigma }(y,i)\) and recalling that \(\sum _y J_{{ \gamma },L}(x,y)=1\), we use the Dobrushin analysis to claim that there exists a joint representation \(\mathcal P_{t,{ \gamma },L}\) of \(P_{t,{ \gamma },L}\) and \(\nu ^0_L\) such that

Since \(\sum _y J_{ \gamma }(x,y) ({\sigma }(y,i)-m_{t,{ \gamma },L})\) does not depend on \({\sigma }'\) we can replace the \(\mathcal E_{t,{ \gamma },L}\) expectation by the \( E_{t,{ \gamma },L}\) expectation and after using (6.9) we get by iteration

with r as in (3.1). Since \(|m_{t,{ \gamma },L}-m_{0,{ \gamma },L}|\le \mathcal E_{t,{ \gamma },L}[| {\sigma }(x,i)- {\sigma }'(x,i)|]\), (6.12) yields

By (3.1) \(\frac{At}{1-r} \le \frac{r}{1-r} < \frac{1}{3}\), so that

Thus \(m_{t,{ \gamma },L} \rightarrow m_{h_\mathrm{ext}}\) as first \(L\rightarrow \infty \) and then \({ \gamma }\rightarrow 0\). This holds for all t and in particular for \(t=1\) hence properties (i) and (ii) are proved. Moreover, since \(m_{ \gamma }\equiv m_{1,{ \gamma }}\) converges as \({ \gamma }\rightarrow 0\) to \(m_{h_\mathrm{ext}}\) the latter is uniquely determined, as a consequence the equation \( h_\mathrm{ext} +m= f'_{\lambda }(m)\) has a unique solution \(m_{h_\mathrm{ext}}\) which is the limit of \(m_{ \gamma }\) as \({ \gamma }\rightarrow 0\). To prove (iii) we go back to (6.2) and observe that

Therefore

(6.2) and (6.3) then yield (3.2) because \(m_{t,{ \gamma },L} \rightarrow m_{h_\mathrm{ext}}\) as \(L\rightarrow \infty \) and then \({ \gamma }\rightarrow 0\). This is the same as taking the inf over all m because we have already seen that \( h_\mathrm{ext} +m= f'_{\lambda }(m)\) has a unique solution.
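The proof above uses the fact that, for Ising spins, the Vaserstein distance between two single-spin laws equals the absolute difference of their expectations. The following is a minimal numerical sketch of that fact, assuming the cost \(|{\sigma }-{\sigma }'|\) on \(\{-1,+1\}\); the function names and the grid search over couplings are illustrative choices and are not part of the proof.

```python
def mean_of(p_plus):
    """Mean of a spin in {-1, +1} that equals +1 with probability p_plus."""
    return 2.0 * p_plus - 1.0


def vaserstein_ising(p, q, steps=1000):
    """Minimize E|sigma - sigma'| over couplings of two laws on {-1, +1}.

    p and q are the probabilities of +1 under the two laws. A coupling is
    fixed by a = P(sigma = +1, sigma' = +1); the cost |sigma - sigma'| is 2
    on the two off-diagonal events and 0 on the diagonal.
    """
    lo, hi = max(0.0, p + q - 1.0), min(p, q)  # admissible range of a
    best = float("inf")
    for k in range(steps + 1):
        a = lo + (hi - lo) * k / steps
        cost = 2.0 * ((p - a) + (q - a))  # 2 * P(sigma != sigma')
        best = min(best, cost)
    return best
```

For any pair `p`, `q` the value returned by `vaserstein_ising(p, q)` should agree, up to rounding, with `abs(mean_of(p) - mean_of(q))`, since the optimal coupling puts as much mass as possible on the diagonal.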

Appendix 2: Proof of Theorem 3

Following Lebowitz and Penrose we do coarse graining on a scale \(\ell \), \(\ell \) the integer part of \({ \gamma }^{-1/2}\). Without loss of generality we restrict L in (2.4) to be an integer multiple of \(\ell \). We then split each horizontal line in \({\Lambda }\) into \(L/\ell \) consecutive intervals of length \(\ell \) and call \(\mathcal I\) the collection of all such intervals in \({\Lambda }\). Thus

is the set of all possible values of the empirical spin magnetization in an interval \(I\in \mathcal I\). We denote by \(\underline{M}\) the set of all functions \(\underline{m}=\{m(x,i), (x,i)\in {\Lambda }\}\) on \({\Lambda }\) with values in \(\mathcal M_\ell \) which are constant on each one of the intervals I of \(\mathcal I\). Due to the smoothness assumption on the Kac potential there is c so that for all \({\sigma }\), \({ \gamma }\) and L

where, denoting by \(I_{x,i}\) the interval in \(\mathcal I\) which contains (x, i),

Thus \(m(x,i|{\sigma })\) does not change when (x, i) varies in an interval of \(\mathcal I\) and therefore \(\underline{m}=\{m(x,i|{\sigma }),(x,i)\in {\Lambda }\}\in \underline{M}\). Then the partition function

has the same asymptotics as \(Z_{{ \gamma }, h_\mathrm{ext},L}^\mathrm{per}\) in the sense that

We next change the vertical interaction \(H^{\mathrm{vert}}_L({\sigma })\) by replacing

and call \(H^{\mathrm{vert}}_\ell ({\sigma })\) the new vertical energy. We then split each vertical column into intervals of length \(\ell \), calling \(I'\) such intervals and \(\Delta \) the squares \(I\times I'\). Let \(\Delta =I\times I'\), \(m_\Delta \) the restriction of \(\underline{m}\) to \(\Delta \), so that \(m_\Delta (x,i)\), \(x\in I, i\in I'\) is only a function of i with values in \(\mathcal M_\ell \). Recalling the definition (4.3) of \(\phi _\ell (m_\Delta )\) we have that \(Z_{{ \gamma }, L}\) has the same asymptotics as

where \(\Delta _{x,i}\) denotes the square \(\Delta \) which contains (x, i).
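The coarse-graining step at the beginning of this appendix can be illustrated on a single horizontal line. This is a sketch under the assumption that \(m(x,i|{\sigma })\) is the plain average of the spins in the interval of length \(\ell \) containing x; the helper names `block_magnetization` and `allowed_values` are hypothetical.

```python
def block_magnetization(sigma, ell):
    """Return the profile x -> average of sigma over the block of length ell
    containing x, so the profile is constant on each block."""
    if len(sigma) % ell != 0:
        raise ValueError("the line length L must be a multiple of ell")
    profile = []
    for start in range(0, len(sigma), ell):
        block = sigma[start:start + ell]
        m = sum(block) / ell
        profile.extend([m] * ell)
    return profile


def allowed_values(ell):
    """Possible averages of ell spins in {-1, +1}: -1, -1 + 2/ell, ..., 1."""
    return [-1.0 + 2.0 * k / ell for k in range(ell + 1)]
```

For example, with \(\ell =4\) the configuration `[1, 1, -1, 1, -1, -1, -1, 1]` is mapped to a profile equal to 0.5 on the first block and -0.5 on the second.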

The cardinality of \(\underline{M}\) is \(\displaystyle {\ell ^{|{\Lambda }|/\ell }}\), hence \(Z_{{ \gamma }, L,\ell }\) has the same asymptotics as

Recalling the definition (4.5) of \( Z^\mathrm{max}_{\Delta }\),

we are going to show that

To prove (7.7) we write

and use that \(\sum _y J_{{ \gamma },L}(x,y)=1\). In this way the exponent in the right hand side of (7.6) becomes a sum over all the squares \(\Delta \) of terms which depend on \(m_\Delta \) plus an interaction given by

Due to the minus sign the maximizer is obtained when all \(m_\Delta \) are equal to each other and to the maximizer in (4.5). To complete the proof of (7.7) we still need to prove the bound on the magnetization:

Proposition 2

There are \({\lambda }_0>0\) and \(m_+< 1\) so that for any \({\lambda }\le {\lambda }_0\) the maximum in (7.6) is achieved on configurations \(m_\Delta \) such that for all \((x,i)\in \Delta \), \(|m_\Delta (x,i)| \le m_+\).

Proof

Given \(h>0\) let S(m) be the entropy defined in (2.7) and let \(m_h\) be such that

Call \(m^*\) the value of \(m_h\) at \(h^*\), \(h^*\) as in (4.1) and choose \(m_+> m^*\). Fix any horizontal line i in \(\Delta \), take a magnetization \(m_i\) such that \(m_i\ge m_+\), it is then sufficient to prove that for all \({\sigma }(x,i+1)+{\sigma }(x,i-1) = : h_i(x)\),

where \(\displaystyle {U(m) = - \frac{m^2}{2}- h_\mathrm{ext} m}\). Since \(|h_i| \le 2\), this is implied (for \(\ell \) large enough) by

Since \(m_i>m^*\) and \(h_\mathrm{ext} \le h^*\), (7.10) is implied by

The function \(m^2+S(m) + h^*m\) is strictly concave in a neighborhood of \(m^*\) where it reaches its maximum, hence (recalling that \(m_i\ge m_+>m^*\))

is strictly positive and (7.9) follows for \({\lambda }\) small enough. \(\square \)

Appendix 3: Cluster Expansion

In this appendix we will study the partition function \(Z^*_{\ell ,\underline{h} }\) defined in (4.8) using the basic theory of cluster expansion, as the optimization of the estimates will not be an issue in the following.

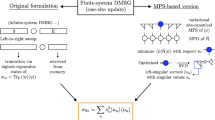

Appendix 3.1: Reduction to a Gas of Polymers

We shall first prove in Proposition 3 below that \(Z^*_{\ell ,\underline{h} }\) can be written as the partition function of a gas of polymers \({ \Gamma }\). The definition of polymers and the main notation of this section are given below.

- A polymer \({ \Gamma }\) is a collection of pairs of consecutive points in the torus \([1,\ell ]\), which is then represented by an interval \([x_1,x_2]\) in the torus \([1,\ell ]\). Notice however that \([x_1,x_2]\) is not the same as \([x_2,x_1]\) and that [1, 1] is the polymer with all possible pairs of consecutive points.

- \({ \Gamma }\) and \({ \Gamma }'\) are compatible, \({ \Gamma }\sim { \Gamma }'\), if their intersection is empty.

- The weights \(w({ \Gamma })\) of the polymers \({ \Gamma }\) are defined as follows:

  $$\begin{aligned} w([1,1]) = \tanh ( {\lambda }) ^{\ell } \end{aligned}$$(8.1)

  while if \({ \Gamma }=[x_1,x_2]\), \(x_1\ne x_2\) then

  $$\begin{aligned} w({ \Gamma }) = \tanh ( {\lambda }) ^{|{ \Gamma }|-1} u_{x_1}u_{x_2}, \quad u_{x} = \tanh (h_x) \end{aligned}$$(8.2)

  where \(|{ \Gamma }|\) is the number of points in \({ \Gamma }\).

Proposition 3

Let \({ \Gamma }\) and \(w({ \Gamma })\) be as above, then

where the sum is over all collections \(\underline{{ \Gamma }}={ \Gamma }_1,\ldots ,{ \Gamma }_n\) of mutually compatible polymers.

Proof

We use the identity \(e^{{\lambda }{\sigma }_i {\sigma }_{i+1}} = \cosh ({\lambda })[1+ \tanh ({\lambda }) {\sigma }_i {\sigma }_{i+1}]\) to write

By expanding the last product we get a sum of terms, each one being characterized by the pairs \((i,i+1)\) with \({\sigma }_i {\sigma }_{i+1}\). We fix one of these terms: its maximal connected sets of pairs with \(\tanh ({\lambda }) {\sigma }_i {\sigma }_{i+1}\) identify the polymers. We then perform the sum over \({\sigma }\) observing that it factorizes over the polymers so that

and we then get (8.3). \(\square \)
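The polymer representation can be checked by brute force on a small torus. The sketch below assumes that, up to the prefactor \(\cosh ({\lambda })^\ell \prod _i 2\cosh (h_i)\), the partition function in question is \(\sum _{\sigma } \exp \{{\lambda }\sum _i {\sigma }_i{\sigma }_{i+1} + \sum _i h_i{\sigma }_i\}\) on the torus; the definition (4.8) of \(Z^*_{\ell ,\underline{h} }\) is not reproduced here, so this normalization is an assumption. Sites are labelled \(0,\ldots ,\ell -1\) and all function names are hypothetical.

```python
import itertools
import math


def ising_partition_function(lam, h):
    """Brute-force sum over spin configurations on the torus {0, ..., ell-1}."""
    ell = len(h)
    total = 0.0
    for sigma in itertools.product((-1, 1), repeat=ell):
        energy = sum(lam * sigma[i] * sigma[(i + 1) % ell] for i in range(ell))
        energy += sum(h[i] * sigma[i] for i in range(ell))
        total += math.exp(energy)
    return total


def polymer_weights(bonds, lam, h):
    """Weights of the polymers (maximal runs of bonds) in a set of bonds.

    Bond b joins sites b and (b + 1) % ell.
    """
    ell = len(h)
    t = math.tanh(lam)
    if len(bonds) == ell:  # the polymer [1, 1] of (8.1)
        return [t ** ell]
    weights = []
    for b in bonds:
        if (b - 1) % ell in bonds:
            continue  # b is not the first bond of its run
        k = b
        while k % ell in bonds:
            k += 1
        x1, x2, n_bonds = b, k % ell, k - b  # n_bonds = |Gamma| - 1, as in (8.2)
        weights.append(t ** n_bonds * math.tanh(h[x1]) * math.tanh(h[x2]))
    return weights


def polymer_gas_sum(lam, h):
    """Sum over all collections of mutually compatible polymers.

    Each subset of bonds corresponds to exactly one such collection.
    """
    ell = len(h)
    total = 0.0
    for r in range(ell + 1):
        for bonds in itertools.combinations(range(ell), r):
            total += math.prod(polymer_weights(set(bonds), lam, h))
    return total
```

Under the stated assumption, `ising_partition_function(lam, h)` should equal `math.cosh(lam) ** ell * math.prod(2 * math.cosh(x) for x in h) * polymer_gas_sum(lam, h)` up to rounding, for any torus with at least three sites.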

We shall also consider the partition function

where \(w_1({ \Gamma })\) is obtained from \(w({ \Gamma })\) by putting \(u_i\equiv 1\).

Appendix 3.2: The K–P Condition

The Kotecký–Preiss condition for cluster expansion, [3], (hereafter called the K–P condition) requires that after introducing a weight \(|{ \Gamma }|\) then for any \({ \Gamma }\)

Proposition 4

For \({\lambda }\) small enough we have that

Proof

We are first going to prove that for \({\lambda }\) small enough

The left hand side of (8.6) is bounded by

which vanishes when \({\lambda }\rightarrow 0\), because by (8.5) \({\lambda }e^{2b}\) vanishes as \({\lambda }\rightarrow 0\). Hence (8.6) holds for \({\lambda }\) small enough.

To prove (8.5) we first write

and then use (8.6) to get

\(\square \)

Appendix 3.3: The Basic Theorem of Cluster Expansion

The theory of cluster expansion states that if the K–P condition is satisfied then the log of the partition function can be written as an absolutely convergent series over “clusters” of polymers. To define the clusters it is convenient to regard the space \(\{{ \Gamma }\}\) of all polymers as a graph where two polymers are connected if they are incompatible, as defined in Appendix 3.1. Then a cluster is a connected set in \(\{{ \Gamma }\}\) whose elements may also have multiplicity larger than 1. We thus introduce functions \(I: \{{ \Gamma }\} \rightarrow \mathbb N\) such that \(\{{ \Gamma }:I({ \Gamma })>0\}\) is a non empty connected set which is the cluster defined above, \(I({ \Gamma })\) being the multiplicity of appearance of \({ \Gamma }\) in the cluster. With such notation the theory says that

where the sums in (8.8)–(8.9) are absolutely convergent. The coefficients \(a_I\) are combinatorial (signed) factors, in particular \(a_I=1\) if I is supported by a single \({ \Gamma }\). We will not need the explicit expression of the \(a_I\) and only use the bound provided by Theorem 12 below. We use the notation:

Theorem 12

(Cluster expansion) Let \({\lambda }\) be so small that the K–P condition (8.5) holds. Let \({ \Gamma }\) be a polymer and \(\mathcal I\) a subset in \(\{I\}\) such that \(I({ \Gamma })\ge 1\) for all \(I\in \mathcal I\) (\(\mathcal I\) could be the whole \(\{I\}\)). Then

Observe that the absolute convergence of the sum in (8.8)–(8.9) is implied by (8.11) with \(\mathcal I=\{I: I({ \Gamma })\ge 1\}\) as it becomes

because \(\inf _{I\in \mathcal I} e^{-b|I|} = e^{-b|{ \Gamma }|}\) as the inf is realized by \(I^*\) which has \(I^*({ \Gamma })=1\) and \(I^*({ \Gamma }')=0\) for all \({ \Gamma }'\ne { \Gamma }\). (8.12) proves that the sum in (8.9) and hence the sum in (8.8) are both absolutely convergent.
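As a toy illustration of the structure of the expansion (not of the polymer gas studied here), consider a gas with a single polymer of weight w, so that the partition function is \(1+w\). The clusters are then n copies of that polymer, \(n\ge 1\), and \(\log (1+w)=\sum _{n\ge 1} (-1)^{n+1} w^n/n\), which converges absolutely for \(|w|<1\); the coefficient equals 1 for \(n=1\). The sketch below, with the hypothetical helper `single_polymer_log_series`, compares partial sums with the exact logarithm.

```python
import math


def single_polymer_log_series(w, n_terms):
    """Partial sum of log(1 + w) = sum_{n >= 1} (-1)**(n + 1) * w**n / n."""
    return sum((-1) ** (n + 1) * w ** n / n for n in range(1, n_terms + 1))
```

For small w the partial sums approach `math.log1p(w)` geometrically fast, which is the one-polymer analogue of the absolute convergence stated above.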

Appendix 4: Proof of Theorem 4

In this section we will prove Theorem 4 as a direct consequence of Theorem 12.

Appendix 4.1: Proof of (4.9)

We start from (8.8) and observe that

\(u_{ \Gamma }=u_{x_1}u_{x_2}\), \({ \Gamma }=[x_1,x_2]\). The last factor is equal to \(u^{N(\cdot )}\) (see (4.10)) where \(N(\cdot )\) is determined by I:

hence (4.9). \(|N(\cdot )|\) (as defined in (4.11)) is even because each \({ \Gamma }\) contributes with a factor 2, its two endpoints.

Appendix 4.2: The Term with \(|N(\cdot )|=0\)

The term with \(|N(\cdot )|=0\) is a constant \(A_{0}\) (i.e. it does not depend on u) and it does not play any role in the sequel. Its value is

which is due to the polymer \({ \Gamma }=[1,1]\).

Appendix 4.3: Proof of (4.14)

The terms with \(|N(\cdot )|=2\) arise only when I has support on a single \({ \Gamma }\) and \(I({ \Gamma })= 1\). More specifically

because given \(i\ne j\) there are two intervals in the torus \([1,\ell ]\) with i and j as the endpoints. Thus

with \(|i-j|\) the distance of i from j in the torus \([1,\ell ]\).

Appendix 4.4: Proof of (4.12)

Given \(N(\cdot )\) let \(I\in \mathcal I\) be such that (9.1) holds for all x. Then

Thus

so that the left hand side of (4.12) is bounded by:

having used (8.11). (4.12) then follows from (8.6).

Appendix 5: A Priori Bounds

We will extensively use the bounds in this section which are corollaries of Theorem 4.

Corollary 1

There are constants \(c_k\), \(k\ge 0\), so that for any \(i\in \{1,\ldots ,\ell \}\), \(k\ge 0\) and \( M\ge 4\),

Proof

It follows from Theorem 4, see (4.12). \(\square \)

Corollary 2

There are constants \(c'_k\), \(k\ge 1\), so that for any \(\ell \) and \(i\in [1,\ell ]\)

for any \({\lambda }\) as small as required in Theorem 4. Moreover

Proof

We write \( \log Z^*_{\ell ,\underline{h}}= K_1+K_2\) where \(K_1\) is obtained by restricting the sum on the right hand side of (4.9) to \(|N(\cdot ) | \le 2\), \(K_2\) is the sum of the remaining terms. By (4.13)–(4.14) we easily check that \(K_1\) satisfies the bound in (10.2). We bound

by

(10.2) then follows from (10.1). (10.3) follows directly from the definition of \(\Psi _i(u)\). \(\square \)

Corollary 3

Recalling (4.13) and writing \(\alpha = \sum _{j>i}\alpha _{j-i}\),

Appendix 6: Proof of Theorems 5 and 6

We write \(\Vert v\Vert \) for the sup norm of the vector v: \(\Vert v\Vert := \max _{i=1,\ldots ,\ell }|v_i|\).

Appendix 6.1: Proof of Theorem 5

Existence. By (10.2) we can use the implicit function theorem to claim existence of a small enough time \(T>0\) such that the equation

has a solution \(u(t), t\in [0,T]\), such that: \(u(0)=m\), u(t) is differentiable and \(\Vert u(t)\Vert <1\), recall that \(\Vert m\Vert <1\).

If \({\lambda }\) is small enough (10.2) with \(k=1\) yields

so that the matrix \(1+ t \nabla \Psi (u(t))\), \((\nabla \Psi )_{i,j} =\frac{\partial }{\partial u_j}\Psi _i \), is invertible for \(t \le \min \{T,1\}\) and therefore for \(t \le \min \{T,1\}\)

By (11.2)–(10.2) f(u, t) is bounded and differentiable for \(t\le 1\) and \(\Vert u\Vert \le 1\), thus we can extend u(t) till \(\min \{1,\tau \}\) where \(\tau \) is the largest time \( \le 1\) such that \(\Vert u(t)\Vert \le 1\) for \(t\le \tau \). Thus for \(t\le \tau \) (11.1) has a solution u(t) which we claim to satisfy \(\Vert u(t)\Vert <1\). To prove the claim we suppose by contradiction that there is a time \(t\le \tau \) and i so that \(|u_i(t)|=1\). By (11.1), \(m_i=u_i + t\Psi _i(u)= u_i\) (having used (10.3)). We have thus reached a contradiction because \(\Vert m\Vert <1\). Thus the claim is proved and as a consequence \(\tau =1\) and therefore we have a solution of (11.1) for all \(t\le 1\) with

Uniqueness Suppose there are two solutions u and v. Then

Define \(u(s) = su +(1-s)v\), \(s\in [0,1]\), then

Since \(\Vert u(s)\Vert <1\) by (11.2) \(\Vert \nabla \Psi (u(s)) (u-v)\Vert \le r\Vert u-v\Vert \), so that \(\Vert u-v\Vert \le r \Vert u-v\Vert \) and therefore \(u=v\).
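The contraction estimate used in the uniqueness argument also suggests a simple numerical way to solve \(m=u+t\Psi (u)\). The sketch below uses plain fixed-point iteration, not the continuation in t of the existence proof, and replaces \(\Psi \) by a hypothetical stand-in `toy_psi` that is of order \({\lambda }\) and vanishes at \(|u_i|=1\), the property (10.3) used above; the actual \(\Psi \) is not reproduced here.

```python
def toy_psi(u, lam):
    """Hypothetical stand-in for Psi: of order lam, with Psi_i = 0 when |u_i| = 1."""
    ell = len(u)
    return [-lam * (1.0 - u[i] ** 2) * (u[(i - 1) % ell] + u[(i + 1) % ell])
            for i in range(ell)]


def solve_u(m, lam, t=1.0, tol=1e-14, max_iter=1000):
    """Solve m = u + t * Psi(u) by iterating u <- m - t * Psi(u).

    The iteration contracts in the sup norm when the row sums of
    |d Psi_i / d u_j| are bounded by some r < 1, as in (11.2).
    """
    u = list(m)
    for _ in range(max_iter):
        psi = toy_psi(u, lam)
        new_u = [m[i] - t * psi[i] for i in range(len(m))]
        if max(abs(a - b) for a, b in zip(new_u, u)) < tol:
            return new_u
        u = new_u
    raise RuntimeError("fixed-point iteration did not converge")
```

For the toy choice the row sums of the Jacobian are at most \(6{\lambda }\), so for instance \({\lambda }=0.05\) gives a contraction and a unique solution with \(\Vert u\Vert <1\) whenever \(\Vert m\Vert \le 0.8\).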

Boundedness Calling \(u=u(t)\) when \(t=1\), by (11.1) and (10.2)

so that if \(\Vert m\Vert \le m_+\) then for \({\lambda }\) small enough \(\Vert u\Vert <1\) and therefore there exists \(h_+\) such that \(\Vert \underline{h}\Vert \le h_+\).

Appendix 6.2: Proof of Theorem 6

Since

we have for free

and we are thus left with the proof of a lower bound for \(-\phi _\ell (\underline{m}) \).

Call \(I_i = \{(x,i): x \le \ell - \ell ^a\}\), let \(a' \in (\frac{1}{2}, a)\) and

Let \(\mu \) be the Gibbs probability for the system with vertical interactions and magnetic fields \(\underline{h}\). We look for a lower bound for

By the central limit theorem

because the spins in \(I_i\) are i.i.d. with mean \(m_i\). Moreover

because, given \(\displaystyle {\{ \bigcap _i \mathcal B_i\}}\), there is at least one configuration in the complement of \(I_i\) on each horizontal line. Thus

hence

which together with (11.6) proves (4.17).

Appendix 7: Proof of Lemma 1

We first write

We have \(\log (e^{h_i}+e^{-h_i}) = h_iu_i +S(u_i)\), the entropy S(u) being defined in (2.7)–(2.8). Thus

The term with \(h_\mathrm{ext}\Psi _i\) in (12.2) becomes

which can be written as

After an analogous procedure for the term with \((h_i-u_i)\Psi _i\) we get (4.26).
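The identity \(\log (e^{h_i}+e^{-h_i}) = h_iu_i +S(u_i)\) used at the start of this appendix can be verified numerically. The sketch assumes that S is the usual binary entropy of a spin with mean u, \(S(u)=-\frac{1+u}{2}\log \frac{1+u}{2}-\frac{1-u}{2}\log \frac{1-u}{2}\); the definition (2.7)–(2.8) is not reproduced here, so this form is an assumption, and the function names are hypothetical.

```python
import math


def entropy(u):
    """Binary entropy of a spin with mean u (assumed form of S)."""
    total = 0.0
    for p in ((1.0 + u) / 2.0, (1.0 - u) / 2.0):
        if p > 0.0:
            total -= p * math.log(p)
    return total


def identity_gap(h):
    """log(e^h + e^-h) - (h * u + S(u)) with u = tanh(h); zero if the identity holds."""
    u = math.tanh(h)
    return math.log(math.exp(h) + math.exp(-h)) - (h * u + entropy(u))
```

With \(u=\tanh (h)\) one has \(\frac{1\pm u}{2}=e^{\pm h}/(2\cosh h)\), which is why `identity_gap` vanishes up to rounding for every h.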

Appendix 8: Proof of Theorem 8

We say that a function \(F(\underline{u})\) is “sum of one body and gradients squared terms” if

for some functions f(u) and \(b_{i,j}(\underline{u})\). Thus (4.28) claims that \(H^{(1)}_{\ell ,\underline{h} }\) is “sum of one body and gradients squared terms”. We say in short that the “gradients squared terms are bounded as desired” if

Hence (4.29) will follow by showing that the gradients squared terms of \(H^{(1)}_{\ell ,\underline{h} }\) are bounded as desired.

We will examine separately the various terms which contribute to \(H^{(1)}\) and prove that each one of them is sum of one body and gradients squared terms and that the latter are bounded as desired.

Appendix 8.1: The \(\Theta \) Term

By (4.22)

Call \(\Theta ^{(2)}\) the above expression when we restrict the sum to \(N(\cdot ): |N(\cdot )|=2\) and call \(\Theta ^{(>2)}=\Theta -\Theta ^{(2)}\). Thus \(\Theta ^{(>2)}\) is equal to the sum of \(A_{N(\cdot )}\) over \(N(\cdot ): |N(\cdot )|>2\), i.e. \(|N(\cdot )|\ge 4\); recall in fact from Theorem 4 that \(A_{N(\cdot )}=0\) if \(|N(\cdot )|\) is odd. We start from \(\Theta ^{(2)}\) which, recalling (10.4), is equal to

Thus \(-\Theta ^{(2)}\) is sum of one body and gradients squared terms, the latter non negative, hence \(-\Theta ^{(2)}\) is bounded as desired.

We rewrite \(\Theta ^{(>2)}\) using (5.1) for each one of the factors \(u^{N(\cdot )}\). Thus given \(N(\cdot )\) we call \(i_1<i_2<\cdots <i_k\) the sites where \(N(\cdot )>0\) and call \(\underline{n}=(N(i_1),\ldots ,N(i_k))\). We then apply (5.1) with \(u_1 = u_{i_1}, \dots , u_k = u_{i_k}\) so that \(p_i\) and \(d_{i,j}\) in (5.1) become functions of \(\underline{u}\) and \(N(\cdot )\). We then get

which is sum of one body and gradients squared terms. To get the desired bound on the latter we use the inequality

and (5.2) to get

Since both \( N(i)>0\), \(N(j)>0\) then \(j-i\le R(N(\cdot ))\) and given \(R(N(\cdot ))\ge k-i\) there are at most \(R(N(\cdot ))\) possible values of j. Therefore the above expression is bounded by

We upper bound the above if we extend the sum over \(N(\cdot )\) such that

We then apply (10.1) with \(k=5\) to get

The curly bracket is bounded by

Thus also \(\Theta ^{(>2)}\) is bounded as desired.

Appendix 8.2: The Term \(h_\mathrm{ext} \sum _i \Phi _i\)

By (4.23)

where \(e_i(j)=0\) if \(j\ne i\) and \(=1\) if \(j=i\).

Call \(g_i:=(1-u_i^2) (\alpha _{1}-{\lambda })\) then the first term contributes to \(\sum _i \Phi _i\) by

which is sum of one body and gradients squared terms. By (4.14) the coefficients of the gradients squared are bounded in absolute value by \(2c {\lambda }e^{-2b}\) which is the desired bound because \(\frac{2}{3}\le \frac{5}{6}\).

By an analogous argument and writing \(g'_i:=(1-u_i^2)\), the contribution of the second term in (13.4) is

which is sum of one body and gradients squared terms. We bound the latter using (13.3) and the second inequality in (4.14) to get

which is the desired bound because the curly bracket is bounded by \(c' {\lambda }^2\).

To write the contribution to \(\sum _i \Phi _i\) of the last term in (13.4) we introduce the following notation. Given \(N(\cdot ): N(i)>0\) we call \(N'(\cdot )= N(\cdot )-e_i\) and \(N''(\cdot )=N(\cdot )+e_i\). Let then \(i_1<i_2<\cdots <i_k\) be the sites j where \(N'(j) >0\), \(\underline{n}=(N'(i_1),\ldots ,N'(i_k))\) and denote by \(p^{-}_j\), \(d^-_{j,j'}\) the corresponding coefficients in (5.1). Similarly let \(i'_1<i'_2<\cdots <i'_k\) be the sites j where \(N''(j) >0\), \(\underline{n}=(N''(i'_1),\ldots ,N''(i'_k))\) and denote by \(p^{+}_j\), \(d^+_{j,j'}\) the corresponding coefficients in (5.1). Then the contribution to \(\sum _i \Phi _i\) of the last term in (13.4) can be written as

which is sum of one body and gradients squared terms. To bound the latter we examine the terms with \(d^-\), those with \(d^+\) are analogous and their analysis is omitted. For the \(d^-\) terms we get the bound:

which has a structure analogous to that of the gradient term in (13.2). Its analysis is similar and thus omitted. We have thus proved that \(h_\mathrm{ext} \sum _i \Phi _i\) has the desired structure.

Appendix 8.3: The Term \(\sum _i\Psi _i^2\)

We introduce the following notation: given \(i, N(\cdot ),N'(\cdot ),{\sigma },{\sigma }'\), \({\sigma }\in \{-1,1\}\), \({\sigma }'\in \{-1,1\}\), \(N(i)>0\), \(N'(i)>0\), we call

Then \(\sum _i\Psi _i^2\) is equal to

which is sum of one body and gradient squared terms. Let

then the gradient squared terms are bounded by \(\sum _{j<j'}C_{j,j'}(u_{j'}-u_j)^2\). We have

because 4 is the cardinality of \(({\sigma },{\sigma }')\). Moreover

By the symmetry between \(N(\cdot )\) and \(N'(\cdot )\) we get with an extra factor 2:

Moreover either \(R(N(\cdot )) \ge (j'-j)/2\), or \(R(N'(\cdot )) \ge (j'-j)/2\) or both, hence

By (10.1)

Using again (10.1)

Hence

The last sum is bounded proportionally to \(e^{-4b}\) (details are omitted) which gives the desired bound.

Appendix 8.4: The Term \( \sum _i \xi _i \Phi _i\)

Recalling (4.27) and (4.23) the contribution to \(H^{(1)}_{\ell ,\underline{h} }\) due to \(\sum _i \xi _i \Phi _i\) is

We have

with \(|\kappa _k| <1\); since \(|u| \le u_+ < 1\) the series converges exponentially. We start from the terms with \(\alpha _{j-i}\):

where \((p_i,p_j)\) is the probability vector introduced in Theorem 11 and d the corresponding coefficient. They depend on the pair \((2k+1,1)\) and \(|d| \le c k^{6}u_+^{2k}\). This is sum of one body and squared gradients terms and we are left with bounding the latter. We have the bound

which satisfies the desired bound as proved in Appendix 8.1.

We next study the last term on the right hand side of (13.7). Proceeding as before we check that it is sum of one body and gradients squared terms and next prove that the gradients are bounded as desired. We first bound them by

We have \((2k+|N(\cdot )|)^3 \le (2k)^3 |N(\cdot )|^3\) so that we get the bound

with

We can perform the sum over i to get

We are thus reduced to the case considered in Appendix 8.1; we omit the details.

Appendix 9: Proof of Proposition 1

Recalling that \(\xi (u):=(h(u)-u)(1-u^2)\), we have, supposing \(u'>u\),

with \(\displaystyle {a = \max _{|u| < 1}\frac{d\xi }{du}}\). Thus \(\theta _i(\underline{u}) \le a\) and by (13.8)

having retained only the term with \(k=1\).
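The constant \(a = \max _{|u| < 1}\frac{d\xi }{du}\) can be estimated numerically. The sketch assumes \(h(u)=\mathrm{arctanh}(u)\), consistent with \(u_x=\tanh (h_x)\) in (8.2), so that \(\frac{d\xi }{du}=3u^2-2u\,\mathrm{arctanh}(u)\); the function names and the grid search are illustrative only.

```python
import math


def xi(u):
    """xi(u) = (h(u) - u) * (1 - u**2), assuming h(u) = atanh(u)."""
    return (math.atanh(u) - u) * (1.0 - u * u)


def dxi(u):
    """Derivative of xi under the same assumption: 3u^2 - 2u * atanh(u)."""
    return 3.0 * u * u - 2.0 * u * math.atanh(u)


def max_dxi(n=100000):
    """Grid estimate of a = max over |u| < 1 of d(xi)/du (endpoints excluded)."""
    return max(dxi(-1.0 + 2.0 * k / n) for k in range(1, n))
```

Since \(\frac{d\xi }{du}\) vanishes at \(u=0\), is positive for small \(|u|\) and tends to \(-\infty \) as \(|u|\rightarrow 1\), the maximum is attained in the interior and `max_dxi()` returns a finite positive number.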

Appendix 10: Proof of Theorem 10

We shall use in the proof that in \(H^\mathrm{eff}_{\ell ,\underline{h} }\) all terms but \(\left( T(u) - h_\mathrm{ext} u\right) \), cf. (12.2), are proportional to \(\lambda \).

Calling \(\tilde{u}\) the minimizer of \(\left( T(u) - h_\mathrm{ext} u\right) \) :

- It will follow from Lemma 3 that the minimizer \(\underline{u}^*\) of \(H^\mathrm{eff}_{\ell ,\underline{h} }\) has components \(u^*_i\) such that \(|u^*_i-\tilde{u}| < {\lambda }^{1/4}\) (for all \({\lambda }\) small enough), and that the minimizer v of f(u), f(u) the one body term defined in (4.28), is such that \(|v-\tilde{u}|< {\lambda }^{1/4}\);

- Since the gradient of \(H^\mathrm{eff}_{\ell ,\underline{h} }\) vanishes at \(\underline{v}=(v_i=v,\;i=1,\ldots ,\ell )\), cf. (4.28), \(\underline{v}\) is a critical point of \(H^\mathrm{eff}_{\ell ,\underline{h} }\);

- T(u) is a convex function and its second derivative \(T''(u)\) is a strictly increasing, positive function of \(u \in (0,1)\) which diverges as \(u\rightarrow 1\), as it follows from (4.21). Then the matrix \(\frac{\partial ^2}{\partial u_i\partial u_j}H^\mathrm{eff}_{\ell ,\underline{h} }\) is positive definite in the ball \(\underline{u}: |u_i-\tilde{u}| < {\lambda }^{1/4}\), cf. Proposition 5.

As a consequence, the minimizer of \(H^\mathrm{eff}_{\ell ,\underline{h} }\) in the ball coincides with \(\underline{v}\) and since \(\underline{u}^*\) is in the ball it coincides with \(\underline{v}\), thus proving that all the components of \(\underline{u}^*\) are equal to each other. We are thus left with the proof of Lemma 3 and Proposition 5. We need a preliminary lemma.

Lemma 2

For any \(h_\mathrm{ext} \in [h_0,h^*]\) there is a unique \(\tilde{u} \) such that

and there is \(c_{h_0}>0\) so that

Proof

The proof follows from the fact that the second derivative of T(u) is positive away from 0 and in (0, 1) increases to \(\infty \) as \(u\rightarrow 1\). \(\square \)

Fix all \(u_j, j\ne i\) and call \(F(u_i)\) the energy \(H^\mathrm{eff}_{\ell ,\underline{h} }(\underline{u})\) as a function of \(u_i\). Then

Lemma 3

There is \(c'_{h_0}>0\) so that for all \({\lambda }\) small enough the following holds. Let \(h_\mathrm{ext} \in [h_0,h^*]\) and \(\tilde{u}\) as in Lemma 2 then

Proof

By (15.2)

We are going to show that the variations of all the other terms in (12.2) are bounded proportionally to \({\lambda }\), which will complete the proof of the lemma. We have

(the first inequality by (13.8), the last inequality by (10.2)).

Call \(G(u_i)\) the value of \(\log Z^*_{\ell ,\underline{h}}\) when \(\tanh (h_i)= u_i\) and the other \(h_j\) are fixed, then

where, to derive the last inequality, we have used Theorem 4. \(\square \)

As a corollary of the above lemmas

Lemma 4

For \({\lambda }\) small enough the inf of \(H^\mathrm{eff}_{\ell ,\underline{h} }\) is achieved in the ball \(\{\underline{u}: \max _{i=1,\ldots ,\ell } |u_i-\tilde{u} | \le {\lambda }^{1/4}\}\).

Proposition 5

For \({\lambda }\) small enough the matrix \(\frac{\partial ^2}{\partial u_i\partial u_j} H^\mathrm{eff}_{\ell ,\underline{h} }\) is strictly positive in the ball \(\{\underline{u}: \max _{i=1,\ldots ,\ell } |u_i-u_{h_\mathrm{ext} }| \le {\lambda }^{1/4}\}\).

Proof

From Lemma 2 and Corollary 2 one obtains

For any i,

Cite this article

Cassandro, M., Colangeli, M. & Presutti, E. Highly Anisotropic Scaling Limits. J Stat Phys 162, 997–1030 (2016). https://doi.org/10.1007/s10955-015-1437-0
