Abstract

The physical properties of a quantum many-body system can, in principle, be determined by diagonalizing the respective Hamiltonian, but the dimensions of its matrix representation scale exponentially with the number of degrees of freedom. Hence, only small systems that are described through simple models can be tackled via exact diagonalization. To overcome this limitation, numerical methods based on the renormalization group paradigm that restrict the quantum many-body problem to a manageable subspace of the exponentially large full Hilbert space have been put forth. A striking example is the density-matrix renormalization group (DMRG), which has become the reference numerical method to obtain the low-energy properties of one-dimensional quantum systems with short-range interactions. Here, we provide a pedagogical introduction to DMRG, presenting both its original formulation and its modern tensor-network-based version. This colloquium sets itself apart from previous contributions in two ways. First, didactic code implementations are provided to bridge the gap between conceptual and practical understanding. Second, a concise and self-contained introduction to the tensor-network methods employed in the modern version of DMRG is given, thus allowing the reader to effortlessly cross the deep chasm between the two formulations of DMRG without having to explore the broad literature on tensor networks. We expect this pedagogical review to find wide readership among students and researchers who are taking their first steps in numerical simulations via DMRG.

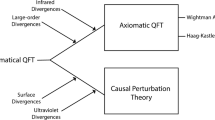

Graphic abstract


1 Introduction

Understanding the properties of quantum matter is one of the key challenges of the modern era [1]. The difficulties encountered are typically twofold. On the one hand, there is the challenge of modeling all the interactions of a complex quantum system. On the other hand, even when an accurate model is known, solving it is generally not an easy task. In what follows, we will overlook the first challenge and consider only quantum systems for which we can write a model Hamiltonian. Whether such a model is a good description of the physical system or not is thus beyond the scope of this colloquium.

Quantum problems can be divided into two classes: single-body and many-body. In the single-body case, the model Hamiltonian does not include interactions between different quantum particles. In other words, the quantum system can be described as if there were only one quantum particle subject to some potential. Single-body problems are easy to solve by numerical means, as the dimension of the corresponding Hamiltonian matrix scales linearly with the number of degrees of freedom. For instance, if we consider one electron in \(N_{\text {o}}\) spin-degenerate molecular orbitals, we have \(2N_{\text {o}}\) possible configurations, as the electron can have either spin-\(\uparrow \) or spin-\(\downarrow \) in each of the molecular orbitals. In contrast to the single-body case, quantum many-body problems entail interactions between the different quantum particles that compose the system. In that case, the Hamiltonian matrix must take all particles into account, which leads to an exponential growth of its dimension with the number of degrees of freedom. Using the previous example, the basis of the most general many-body Hamiltonian should have \(4^{N_{\text {o}}}\) states, since every molecular orbital can be empty, doubly-occupied, or occupied by one electron with either spin-up or spin-down. Even if we fix the filling level \(N_{\text {e}}/N_{\text {o}}\) (where \(N_{\text {e}}\) denotes the number of electrons), we obtain \(\binom{2N_{\text {o}}}{N_{\text {e}}}\) configurations, which still scales exponentially with \(N_{\text {o}}\).Footnote 1 Hence, the exact diagonalization of quantum many-body problems is limited to small systems described by simple models. This is known as the exponential wall problem [2].
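The counting above can be checked with a few lines of Python. This is only an illustrative sketch: the function names are ours, and half filling (\(N_{\text {e}} = N_{\text {o}}\)) is an assumed example.

```python
from math import comb


def single_body_dim(n_orbitals: int) -> int:
    """One electron in n spin-degenerate orbitals: 2 * n configurations."""
    return 2 * n_orbitals


def many_body_dim(n_orbitals: int) -> int:
    """Each orbital is empty, spin-up, spin-down, or doubly occupied: 4 ** n."""
    return 4 ** n_orbitals


def fixed_filling_dim(n_orbitals: int, n_electrons: int) -> int:
    """Ways to distribute n_electrons over 2 * n_orbitals spin-orbitals."""
    return comb(2 * n_orbitals, n_electrons)


# Compare the three dimensions at half filling for growing system sizes.
for n_o in (2, 4, 8, 16):
    print(n_o, single_body_dim(n_o), many_body_dim(n_o), fixed_filling_dim(n_o, n_o))
```

Summing `fixed_filling_dim` over all electron numbers recovers `many_body_dim`, which is a quick consistency check of the two expressions.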

To circumvent the exponential wall in quantum mechanics, several numerical methods, each involving a different set of approximations, have been devised. Notable examples are the mean-field approximation, perturbation theory, the configuration interaction method [3], density-functional theory [4,5,6], quantum Monte Carlo [7], and quantum simulation [8,9,10], each of which has its own limitations. Additionally, there is the density-matrix renormalization group (DMRG), introduced in 1992 by Steven R. White [11, 12]. This approach, founded on the variational principle, rapidly established itself as the reference numerical method to obtain the low-energy properties of one-dimensional (1D) quantum systems with short-range interactions [13]. Importantly, a few years after its discovery, DMRG was reformulated in the language of tensor networks [14,15,16], which allowed for more efficient code implementations [17, 18]. The connection between the original formulation of DMRG and its tensor-network version is by no means straightforward, as the latter involves a variational optimization of a wave function represented by a matrix product state (MPS), making no direct reference to any type of renormalization technique.

The goal of this colloquium is to present a pedagogical introduction to DMRG in both the original and the MPS-based formulations. Our contribution should, therefore, add to the vast set of DMRG reviews in the literature [13, 16, 19,20,21,22]. By following a low-level approach and focusing on learning purposes, we aim to provide a comprehensive introduction for beginners in the field. Bearing in mind that a thorough conceptual knowledge should be accompanied by a notion of practical implementation, we provide as supporting materials simplified and digestible code implementations in the form of documented Jupyter notebooks [23] to put both levels of understanding on firm footing.

The rest of this work is organized as follows. In Sect. 2, we introduce the truncated iterative diagonalization (TID). Although this renormalization technique has been successfully applied to quantum impurity models through Wilson’s numerical renormalization group [24, 25], we illustrate why it is not suitable for the majority of quantum problems. Section 3 contains the original formulation of DMRG, as invented by Steven R. White [11, 12]. We first describe the infinite-system DMRG, which essentially differs from the TID by the type of truncation employed. The truncation used in DMRG is then shown to be optimal, in the sense that it minimizes the difference between the exact and the truncated wave functions. Importantly, we also clarify the reason that renders this truncation efficient when applied to the low-energy states of 1D quantum systems with short-range interactions. This section ends with the introduction of the finite-system DMRG. In Sect. 4, we give a brief overview of tensor networks, addressing the minimal fundamental concepts that are required to understand how these are used in the context of DMRG. Section 5 shows how, in the framework of tensor networks, the finite-system DMRG can be seen as an optimization routine that, provided a representation of the Hamiltonian in terms of a matrix product operator (MPO), minimizes the energy of a variational MPS wave function. Finally, in Sect. 6, we present our concluding remarks, mentioning relevant topics that are beyond the scope of this review.

In Supplementary Information, we make available a transparent (though not optimized) Python [26] code that, for a given 1D spin model, implements the following algorithms: (i) iterative exact diagonalization, which suffers from the exponential wall problem; (ii) TID; (iii) infinite-system DMRG, within the original formulation. For pedagogical purposes, this code shares the same main structure for the three methods, differing only in a few lines of code that correspond to the implementation of the truncations associated with each method. Following the same didactic approach, we also provide a practical implementation of the finite-system DMRG algorithm in the language of tensor networks.

2 Truncated iterative diagonalization

Schematic description of the truncated iterative diagonalization method. At every iteration, the system size is increased while maintaining the dimension of the Hamiltonian matrix manageable for numerical diagonalization. This is achieved by projecting the basis of the enlarged system onto a truncated basis spanned by its lowest-energy eigenstates. The underlying assumption of this renormalization technique is that the low-energy states of the full system can be accurately described by the low-energy states of smaller blocks

The roots of DMRG can be traced back to a decimation procedure, to which we refer as TID. Given a large, numerically intractable quantum system, the key idea of this approach is to divide it into smaller blocks that can be solved by exact diagonalization. Combining these smaller blocks together, one at a time, and integrating out the high-energy degrees of freedom, this renormalization technique arrives at a description of the full system in terms of a truncated Hamiltonian that can be diagonalized numerically. The underlying assumption of this method is that the low-energy states of the full system can be accurately described by the low-energy states of smaller blocks. The TID routine is one of the main steps in Wilson’s numerical renormalization group [24, 25], which has had notable success in solving quantum impurity problems, such as the Kondo [27] and the Anderson [28] models. As we shall point out below, TID was found to perform poorly for most quantum problems, working only for those where there is an intrinsic energy scale separation, such as quantum impurity models.

We now elaborate on the details of a TID implementation. For that matter, let us consider TID as schematically described in Fig. 1. In the first step, we consider a small system A, with Hamiltonian \({\mathcal {H}}_\text {A}\), the dimension of which, \(N_\text {A}\), is assumed to be manageable by numerical means. In the next step, we increase the system size, forming what we denote by system AB, the Hamiltonian of which, \({\mathcal {H}}_\text {AB}\), has dimension \(N_\text {A} N_\text {B}\) and is also assumed to be numerically tractable. The Hamiltonian \({\mathcal {H}}_\text {AB}\) includes the Hamiltonians of the two individual blocks A and B, as well as their mutual interactions \({\mathcal {V}}_\text {AB}\). Importantly, if we iterated the procedure at this step, it would be equivalent to doing exact diagonalization, in which case we would rapidly arrive at the situation where the dimension of the Hamiltonian matrix would increase to values that are too large to handle. Instead, in the third step, we diagonalize \({\mathcal {H}}_\text {AB}\) and keep only its \(N_\text {A}\) lowest-energy eigenstates.Footnote 2 These are used to form a rectangular matrix O, which can be employed to project the Hilbert space of the system AB onto a truncated basis spanned by its \(N_\text {A}\) lowest-energy eigenstates, thereby integrating out the remaining higher energy degrees of freedom. As a consequence, it is possible to find an effective truncated version of any relevant operator defined in the system AB. In particular, we can truncate \({\mathcal {H}}_\text {AB}\), obtaining an effective Hamiltonian \(\tilde{{\mathcal {H}}}_\text {AB}\) with reduced dimension \(N_\text {A}\), which can be used as the input for the first step of the next iteration. This procedure is then iterated until the desired system size is reached. 
As a final remark, we note that the matrices O should be saved in memory at every iteration, as they are required to obtain the terms \({\mathcal {V}}_\text {AB}\), which we usually only know how to write in the original basis, as well as to compute expectation values of observables.
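The steps above can be condensed into a short sketch. It is not the code of the Supplementary Information: for brevity we assume an open spin-1/2 Heisenberg chain, take block B to be a single site, and store the kept eigenstates as the columns of `o` (so the projector of the text corresponds to its transpose). The names `tid_ground_energy` and `n_keep` are illustrative.

```python
import numpy as np

# Spin-1/2 operators of a single site (the added block B).
sz = np.array([[0.5, 0.0], [0.0, -0.5]])
sp = np.array([[0.0, 1.0], [0.0, 0.0]])   # raising operator S^+
sm = sp.T                                  # lowering operator S^-


def tid_ground_energy(n_sites, n_keep):
    """Ground-state energy estimate of an open Heisenberg chain via TID."""
    if n_sites < 2:
        raise ValueError("n_sites must be at least 2")
    # Block A starts as one site; we track H_A and the operators of its edge spin.
    h_a = np.zeros((2, 2))
    sz_edge, sp_edge = sz, sp
    for _ in range(n_sites - 1):
        dim_a = h_a.shape[0]
        # V_AB couples the edge spin of A to the new site B.
        v_ab = (np.kron(sz_edge, sz)
                + 0.5 * (np.kron(sp_edge, sm) + np.kron(sp_edge.T, sp)))
        h_ab = np.kron(h_a, np.eye(2)) + v_ab
        energies, states = np.linalg.eigh(h_ab)
        # Keep the n_keep lowest-energy eigenstates (all of them if fewer exist).
        o = states[:, :min(n_keep, h_ab.shape[0])]
        # Project H_AB and the new edge operators onto the truncated basis.
        h_a = o.T @ h_ab @ o
        sz_edge = o.T @ np.kron(np.eye(dim_a), sz) @ o
        sp_edge = o.T @ np.kron(np.eye(dim_a), sp) @ o
    return energies[0]


print(tid_ground_energy(4, 16))   # no truncation is triggered for 4 sites
print(tid_ground_energy(10, 8))   # truncated estimate for 10 sites
```

When `n_keep` is at least as large as the full Hilbert-space dimension, no state is discarded and the routine reduces to iterative exact diagonalization.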

Illustration of the failure of the truncated iterative diagonalization method for the problem of a quantum particle in a box. The dashed blue (red) lines represent the two lowest-energy wave functions in the box A (B). The solid black line represents the lowest-energy wave function in the box AB. It is apparent that the lowest-energy state of the larger box cannot be obtained as a linear combination of a few low-energy states of the smaller boxes, thus leading to the breakdown of the principle that underpins the TID approach

Despite its rather intuitive formulation, TID turned out to yield poor results for most quantum many-body problems [12]. In fact, White and Noack realized [29] that this renormalization approach could not even be straightforwardly applied to one of the simplest (single-body) problems in quantum mechanics: the particle-in-a-box model (Fig. 2). Even though White and Noack managed to fix this issue by considering various combinations of boundary conditions, this observation was a clear drawback to the aspirations of TID, which motivated the search for a different method. This culminated in the invention of DMRG, which is the focus of the next section.
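The particle-in-a-box argument can be made quantitative with a small numerical experiment. The sketch below assumes boxes A = [0, 1] and B = [1, 2] with hard walls, and measures how much of the ground state of the box AB = [0, 2] lies in the span of the lowest levels of A and B. The function name and grid size are illustrative choices.

```python
import numpy as np


def captured_weight(n_states, n_grid=20000):
    """Weight of the AB ground state captured by n_states levels of A and of B."""
    dx = 1.0 / n_grid
    x = (np.arange(n_grid) + 0.5) * dx           # midpoint grid on box A = [0, 1]
    psi_ab = np.sin(np.pi * x / 2.0)             # normalized ground state of [0, 2]
    weight = 0.0
    for n in range(1, n_states + 1):
        phi_n = np.sqrt(2.0) * np.sin(n * np.pi * x)   # n-th level of box A
        overlap = np.sum(psi_ab * phi_n) * dx          # midpoint-rule integral
        weight += 2.0 * overlap ** 2             # box B contributes equally by symmetry
    return weight


for n_states in (1, 2, 5, 20):
    print(n_states, captured_weight(n_states))
```

With a single level per box, the captured weight is about 0.72, and the missing weight decays only slowly as more levels are included, because every state of A and B vanishes at the junction where the ground state of AB is largest.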

3 Original formulation of DMRG

3.1 Infinite-system algorithm

In 1992, Steven R. White realized that the eigenstates of the density matrix are more appropriate to describe a quantum system than the eigenstates of its Hamiltonian [11]. This is the working principle of DMRG. In this subsection, we consider the so-called infinite-system DMRG algorithm. Even though it is possible to further improve this implementation scheme,Footnote 3 it is an instructive starting point as it already contains the core ideas of DMRG. Below, we introduce it in four steps. First, we describe how to apply it, providing no motivation for its structure. Second, we show, on the basis of the variational principle, that the truncation protocol prescribed by this method is optimal. Third, we address its efficiency—i.e., how numerically affordable the truncation required for an accurate description of a large system is—clarifying the models for which it is most suitable. Fourth, we provide a pedagogical code implementation and discuss the results obtained.

3.1.1 Description

Schematic description of the infinite-system DMRG algorithm (see also Fig. 18 of Appendix D for the corresponding pseudocode). Similarly to the TID approach, the system size is increased at every iteration while preventing an exponential growth of the dimension of its Hamiltonian matrix. The truncation employed involves the diagonalization of reduced density matrices, as their eigenvectors with highest eigenvalues are used to obtain an effective description of the enlarged system in a reduced basis. As shown in Sect. 3.1.2, this truncation protocol is optimal and can, in principle, be applied to obtain the best approximation of any state \(\vert \psi \rangle \) of an arbitrary quantum model. In practice, however, this method is mostly useful to probe the low-energy states of 1D quantum problems with short-range interactions (see Sect. 3.1.3)

The infinite-system DMRG algorithm is schematically described in Fig. 3. In the first step, we consider two blocks, denoted as S (system) and E (environment). As we shall see, both blocks are part of the full system under study, so their designation is arbitrary. Then, we increase the system size by adding two physical sites, one to each block, forming what we denote by blocks L (left) and R (right). We proceed by building the block SB (superblock), which amounts to bundling the blocks L and R. The block SB is the representation of the full system that we intend to describe at every iteration. It should be noted that all block aggregations imply that we account for the individual Hamiltonians of each block, plus their mutual interactions. Finally, we move on to the truncations. As a side remark, we point out that, if we truncated the blocks L and R using the corresponding low-energy states, forming new blocks S and E to use in the first step of the next iteration, this algorithm would be essentially equivalent to TID. Alternatively, we diagonalize the block SB, and use one of its eigenstates \(\vert \psi \rangle \) to build the density matrix \(\rho = \vert \psi \rangle \langle \psi \vert \).Footnote 4 Then, we compute the reduced density matrices in the subspaces of the blocks L and R, \(\sigma _\text {L/R} = \text {Tr}_\text {R/L} \rho \), diagonalize them, and keep their eigenvectors with highest eigenvalues. These are used to truncate the blocks L and R, forming new blocks S and E that are taken as inputs of the first step in the next iteration. For clarity, we note that, in the first few iterations, we may skip the truncation protocol (or, equivalently, keep all the eigenvectors of \(\sigma _\text {L/R}\)); in that case, the algorithm is equivalent to exact diagonalization, since the block SB is defined in the full Hilbert space. 
Due to the exponential wall problem, truncations will be required at some iteration; from then on, the Hamiltonian of the block SB is no longer exact, as it is represented in a truncated basis set.
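A compact sketch of this loop is given below. It is not the notebook of the Supplementary Information: we assume an open spin-1/2 Heisenberg chain with an even number of sites, target the ground state, and exploit the mirror symmetry between the blocks so that a single set of matrices describes both S and E. The names `bond`, `infinite_dmrg`, and `d_keep` are illustrative.

```python
import numpy as np

sz = np.array([[0.5, 0.0], [0.0, -0.5]])   # spin-1/2 S^z
sp = np.array([[0.0, 1.0], [0.0, 0.0]])    # spin-1/2 S^+


def bond(sz_a, sp_a, sz_b, sp_b):
    """Heisenberg coupling S_a . S_b between operators acting on two blocks."""
    return np.kron(sz_a, sz_b) + 0.5 * (np.kron(sp_a, sp_b.T) + np.kron(sp_a.T, sp_b))


def infinite_dmrg(n_sites, d_keep):
    """Return (ground-state energy, last truncation error) for n_sites >= 4, even."""
    # Blocks S and E are mirror images, so one set of matrices describes both.
    h_blk, sz_edge, sp_edge = np.zeros((2, 2)), sz, sp
    size, error = 1, 0.0
    while True:
        dim = h_blk.shape[0]
        # Block L = S + one site (block R is its mirror image).
        h_l = np.kron(h_blk, np.eye(2)) + bond(sz_edge, sp_edge, sz, sp)
        sz_l = np.kron(np.eye(dim), sz)
        sp_l = np.kron(np.eye(dim), sp)
        size += 1
        # Superblock SB = L + R, coupled through the two newly added sites.
        eye_l = np.eye(h_l.shape[0])
        h_sb = np.kron(h_l, eye_l) + np.kron(eye_l, h_l) + bond(sz_l, sp_l, sz_l, sp_l)
        energies, states = np.linalg.eigh(h_sb)
        if 2 * size >= n_sites:
            return energies[0], error
        # Reduced density matrix of L for the superblock ground state.
        psi = states[:, 0].reshape(h_l.shape[0], h_l.shape[0])
        sigma_l = psi @ psi.T
        weights, vectors = np.linalg.eigh(sigma_l)   # ascending eigenvalues
        keep = min(d_keep, len(weights))
        o = vectors[:, -keep:]                       # eigenvectors with highest eigenvalues
        error = 1.0 - np.sum(weights[-keep:])
        # Truncate L to obtain the new block S (and, by symmetry, E).
        h_blk, sz_edge, sp_edge = o.T @ h_l @ o, o.T @ sz_l @ o, o.T @ sp_l @ o


energy, error = infinite_dmrg(12, 8)
print(energy / 12, error)
```

Replacing the density-matrix eigenvectors in `o` by the lowest-energy eigenvectors of `h_l` would turn this sketch into a TID-like scheme, which is the only structural difference between the two methods.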

3.1.2 Argument for truncation

Here, we justify the truncation strategy prescribed above. For that matter, let us consider an exact wave function of the block SB, written as

\[ \vert \psi \rangle = \sum _{i_\text {L}=1}^{N_\text {L}} \sum _{i_\text {R}=1}^{N_\text {R}} \psi _{i_\text {L},i_\text {R}} \vert i_\text {L} \rangle \otimes \vert i_\text {R} \rangle , \tag{1} \]

where \(\vert i_\text {L} \rangle \) (\(\vert i_\text {R} \rangle \)) denotes a complete basis of the block L (R), with dimension \(N_\text {L}\) (\(N_\text {R}\)). We now propose a variational wave function of the form

\[ \vert {\tilde{\psi }} \rangle = \sum _{\alpha _\text {L}=1}^{D_\text {L}} \sum _{i_\text {R}=1}^{N_\text {R}} c_{\alpha _\text {L},i_\text {R}} \vert \alpha _\text {L} \rangle \otimes \vert i_\text {R} \rangle , \tag{2} \]

where \(\vert \alpha _\text {L} \rangle \) denotes a truncated basis of the block L, with reduced dimension \(D_\text {L} < N_\text {L}\). The goal is to find the states \(\vert \alpha _\text {L} \rangle \) and the variational coefficients \(c_{\alpha _\text {L},i_\text {R}}\) that provide the best approximation of the truncated wave function \(\vert {\tilde{\psi }} \rangle \) to the exact wave function \(\vert \psi \rangle \), for a given \(D_\text {L}\). This can be achieved by minimizing \(\Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2\).

The exact wave function is normalized, i.e., \(\langle \psi \vert \psi \rangle = 1\). Using this property, we obtain

\[ \Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2 = 1 - \sum _{\alpha _\text {L}, i_\text {L}, i_\text {R}} \left( \psi ^*_{i_\text {L},i_\text {R}} \, c_{\alpha _\text {L},i_\text {R}} \langle i_\text {L} \vert \alpha _\text {L} \rangle + \text {c.c.} \right) + \sum _{\alpha _\text {L}, i_\text {R}} \vert c_{\alpha _\text {L},i_\text {R}} \vert ^2 , \tag{3} \]

where we have also used the orthonormal properties of the basis states, e.g., \(\langle i_\text {R} \vert i'_\text {R} \rangle = \delta _{i_\text {R},i'_\text {R}}\). To minimize the previous expression, we impose that its derivative with respect to the variational coefficients \(c_{\alpha _\text {L},i_\text {R}}\) (or \(c^*_{\alpha _\text {L},i_\text {R}}\)) must be zero. This leads to

\[ c_{\alpha _\text {L},i_\text {R}} = \sum _{i_\text {L}} \psi _{i_\text {L},i_\text {R}} \langle \alpha _\text {L} \vert i_\text {L} \rangle , \qquad c^*_{\alpha _\text {L},i_\text {R}} = \sum _{i_\text {L}} \psi ^*_{i_\text {L},i_\text {R}} \langle i_\text {L} \vert \alpha _\text {L} \rangle . \tag{4} \]

Inserting Eqs. (4) into (3), we obtain

\[ \Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2 = 1 - \sum _{\alpha _\text {L}=1}^{D_\text {L}} \langle \alpha _\text {L} \vert \sigma _\text {L} \vert \alpha _\text {L} \rangle , \tag{5} \]

where we have introduced the reduced density matrix of the state \(\vert \psi \rangle \) in the subspace of block L

\[ \sigma _\text {L} = \text {Tr}_\text {R} \, \rho = \sum _{i_\text {R}} \langle i_\text {R} \vert \rho \vert i_\text {R} \rangle = \sum _{i_\text {L}, i'_\text {L}, i_\text {R}} \psi _{i_\text {L},i_\text {R}} \psi ^*_{i'_\text {L},i_\text {R}} \vert i_\text {L} \rangle \langle i'_\text {L} \vert , \tag{6} \]

defined in terms of the full density matrix

\[ \rho = \vert \psi \rangle \langle \psi \vert . \tag{7} \]

Looking at Eq. (5), we observe that it involves a partial trace of the reduced density matrix \(\sigma _\text {L}\) (note that \(\sigma _\text {L}\) is an \(N_\text {L} \times N_\text {L}\) matrix, but the sum over \(\alpha _\text {L}\) runs over \(D_\text {L}\) terms only). Since \(\sigma _\text {L}\) is a density matrix, its full trace must be equal to 1, in which case the minimization of \(\Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2\) is accomplished by maximizing the partial trace of \(\sigma _\text {L}\). Per the Schur–Horn theorem [33, 34], the states \(\vert \alpha _\text {L} \rangle \) that accomplish this are those that diagonalize \(\sigma _\text {L}\) with highest eigenvalues \(\lambda _{\alpha _\text {L}}\) (which are all non-negative, since any density matrix is positive semi-definite), that is

\[ \sigma _\text {L} \vert \alpha _\text {L} \rangle = \lambda _{\alpha _\text {L}} \vert \alpha _\text {L} \rangle , \tag{8} \]

thus leading to

\[ \Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2 = 1 - \sum _{\alpha _\text {L}=1}^{D_\text {L}} \lambda _{\alpha _\text {L}} . \tag{9} \]

Let us now put into words what we have just demonstrated. Starting from an exact wave function \(\vert \psi \rangle \), we can obtain a truncated (in the subspace of the block L) wave function \(\vert {\tilde{\psi }} \rangle \) that best approximates \(\vert \psi \rangle \) by going through the following protocol. First, we build the density matrix \(\rho = \vert \psi \rangle \langle \psi \vert \) and compute the reduced density matrix \(\sigma _\text {L} = \text {Tr}_\text {R} \rho \). Then, we diagonalize \(\sigma _\text {L}\) and form a \(D_\text {L} \times N_\text {L}\) matrix O whose rows are the eigenvectors of \(\sigma _\text {L}\) with the highest eigenvalues. Finally, \(\vert {\tilde{\psi }} \rangle \) is obtained as \(\vert {\tilde{\psi }} \rangle = O \vert \psi \rangle \), with O acting on the subspace of the block L. Repeating the same strategy for the block R, for which the derivation is completely analogous, we arrive at the truncation scheme described in Sect. 3.1.1.
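The protocol can be verified numerically on a small example. In the sketch below, which assumes a random real wave function and illustrative dimensions, the squared distance between the exact and truncated states is compared with the discarded weight of the reduced density matrix.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n_l, n_r, d_l = 6, 5, 3                     # illustrative dimensions (assumed)

# Random normalized state, stored as the coefficient matrix psi[i_L, i_R].
psi = rng.normal(size=(n_l, n_r))
psi /= np.linalg.norm(psi)

# Reduced density matrix of block L and its spectrum.
sigma_l = psi @ psi.T
eigvals, eigvecs = np.linalg.eigh(sigma_l)  # ascending eigenvalues
o = eigvecs[:, -d_l:].T                     # rows = top-d_l eigenvectors (D_L x N_L)

# Variational coefficients c = O psi, and the truncated state in the full basis.
c = o @ psi
psi_trunc = o.T @ c

error = np.linalg.norm(psi - psi_trunc) ** 2
discarded = 1.0 - eigvals[-d_l:].sum()
print(error, discarded)                     # the two numbers should coincide
```

Any other choice of \(D_\text {L}\) orthonormal states for the block L, for instance a random one, yields an error that is at least as large.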

The calculation of \(\Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2\) at every iteration of the algorithm, using Eq. (9), can be used as a measure of the quality of the corresponding truncation. Therefore, instead of fixing a given \(D_\text {L}\), we can impose a maximum tolerance for \(\Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2\), obtaining an adaptive truncation scheme. As a final remark, we note that, while the general derivation presented here applies to any state \(\vert \psi \rangle \) of an arbitrary quantum problem, the efficiency of DMRG relies on how large \(D_\text {L}\) must be to ensure that the truncation does not compromise the accurate quantitative description of the system under study. This subject is addressed below.
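The adaptive scheme amounts to choosing, at every iteration, the smallest number of kept states whose discarded weight stays below the tolerance. A minimal sketch follows; the function name `adaptive_dimension` and the example spectrum are hypothetical.

```python
import numpy as np


def adaptive_dimension(eigvals, tol):
    """Smallest number of kept eigenvalues whose discarded weight is <= tol."""
    lam = np.sort(np.asarray(eigvals, dtype=float))[::-1]   # descending order
    discarded = 1.0 - np.cumsum(lam)         # error after keeping 1, 2, ... states
    ok = np.nonzero(discarded <= tol)[0]
    # If the tolerance cannot be met, keep every state.
    return int(ok[0]) + 1 if ok.size else lam.size


spectrum = [0.7, 0.2, 0.09, 0.01]            # made-up eigenvalues of sigma_L
print(adaptive_dimension(spectrum, tol=0.05))
print(adaptive_dimension(spectrum, tol=0.2))
```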

3.1.3 Efficiency

Recalling Eq. (9), it is apparent that the efficiency of DMRG relies on how fast the eigenvalues of the reduced density matrices decay for the quantum state \(\vert {\psi } \rangle \) under study. However, this property is, in general, unknown.Footnote 5 Instead, the entanglement entropy—for which general results are known or conjectured [37]—can be used as a proxy, as explained below.

Relation between dimensionality and range of interactions on a lattice model. In the example depicted, a \(3 \times 3\) two-dimensional square lattice with nearest-neighbor hopping terms is described as a 1D chain with hoppings up to fifth neighbors. In general, the same mapping applied to an \(N \times N\) lattice leads to (nonlocal) hopping terms between sites separated by up to \(2N-1\) units of the 1D chain

The blocks L and R form a bipartition of the full system, represented by the block SB. We can therefore define the von Neumann entanglement entropy (of the state \(\vert \psi \rangle \)) between L and R as

\[ {\mathcal {S}} = - \text {Tr} \left( \sigma _\text {L} \log _2 \sigma _\text {L} \right) = - \text {Tr} \left( \sigma _\text {R} \log _2 \sigma _\text {R} \right) . \tag{10} \]

Focusing on the block L, without loss of generality, we write

\[ {\mathcal {S}} = - \sum _{\alpha _\text {L}=1}^{N_\text {L}} \lambda _{\alpha _\text {L}} \log _2 \lambda _{\alpha _\text {L}} \simeq - \sum _{\alpha _\text {L}=1}^{D_\text {L}} \lambda _{\alpha _\text {L}} \log _2 \lambda _{\alpha _\text {L}} , \tag{11} \]

where we have restricted the sum over \(\alpha _\text {L}\) to the \(D_\text {L}\) highest eigenvalues of \(\sigma _\text {L}\). This approximation is valid, since we are fixing \(D_\text {L}\), so that \(\Vert \vert \psi \rangle - \vert {\tilde{\psi }} \rangle \Vert ^2 \simeq 0\), which implies, by virtue of Eq. (9), that the remaining eigenvalues are close to zero; given that \(\lim \nolimits _{\lambda \rightarrow 0^+} \lambda \log _2 \lambda = 0\), it follows that the lowest eigenvalues of \(\sigma _\text {L}\) can be safely discarded in the calculation of the entanglement entropy. Within this assumption, it is also straightforward to check that \({\mathcal {S}}\) is maximal if \(\lambda _{\alpha _\text {L}} = 1/D_\text {L}, \ \alpha _\text {L} = 1, 2,\ldots , D_\text {L}\), which allows us to write

\[ {\mathcal {S}} \le - \sum _{\alpha _\text {L}=1}^{D_\text {L}} \frac{1}{D_\text {L}} \log _2 \frac{1}{D_\text {L}} = \log _2 D_\text {L} , \tag{12} \]

leading to

\[ D_\text {L} \ge 2^{{\mathcal {S}}} . \tag{13} \]

Using Eq. (13), we can make a rough estimate of the order of magnitude of \(D_\text {L}\)

\[ D_\text {L} \sim 2^{{\mathcal {S}}} . \tag{14} \]

The scaling of \({\mathcal {S}}\) with the size of a translationally invariant quantum system is a property that is widely studied. In particular, there are exceptional quantum states that obey the so-called area laws [37], meaning that \({\mathcal {S}}\), instead of being an extensive quantity,Footnote 6 is at most proportional to the boundary of the two partitions. The area laws are commonly found to hold for the ground states of gapped Hamiltonians with local interactions [37]; this result has been rigorously demonstrated in the 1D case [38]. It should also be noted that, for the ground states of 1D critical/gapless local models, the scenario is not dramatically worse as \({\mathcal {S}}\) is typically verified to scale only logarithmically with the chain length [39, 40].
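For concreteness, the entanglement entropy of a bipartition can be computed directly from the coefficient matrix of the wave function. The sketch below uses the fact that the squared singular values of that matrix are the eigenvalues of the reduced density matrices; the function name and the two test states are illustrative.

```python
import numpy as np


def entanglement_entropy(psi):
    """Von Neumann entropy (in bits) of the bipartition encoded in psi[i_L, i_R]."""
    # Squared singular values of psi are the eigenvalues of sigma_L (and sigma_R).
    lam = np.linalg.svd(psi, compute_uv=False) ** 2
    lam = lam[lam > 1e-15]                  # lambda * log2(lambda) -> 0 as lambda -> 0
    return float(-np.sum(lam * np.log2(lam)))


# Product state |00>: the two halves are not entangled.
product = np.array([[1.0, 0.0], [0.0, 0.0]])
# Two-spin singlet (|01> - |10>)/sqrt(2): maximally entangled, S = log2(2) = 1.
singlet = np.array([[0.0, 1.0], [-1.0, 0.0]]) / np.sqrt(2.0)

print(entanglement_entropy(product), entanglement_entropy(singlet))
```

The singlet saturates the bound \({\mathcal {S}} \le \log _2 D_\text {L}\) with \(D_\text {L} = 2\), whereas a product state is described exactly with \(D_\text {L} = 1\).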

In summary, considering the ground state of a local Hamiltonian describing a \({\mathcal {D}}\)-dimensional system of size \({\mathcal {L}}\) in each dimension, we expect to have:

- \({\mathcal {S}} \sim \text {const.}\), for 1D gapped systems. This implies a favorable scaling \(D_\text {L} \sim 2^\text {const.}\).
- \({\mathcal {S}} \sim c \log _2 {\mathcal {L}}\), for 1D gapless models. This leads to \(D_\text {L} \sim 2^{c \log _2 {\mathcal {L}}}\), yielding a power law in \({\mathcal {L}}\), which is usually numerically manageable in practical cases.
- \({\mathcal {S}} \sim {\mathcal {L}}^{{\mathcal {D}}-1}\), for gapped systems in \({\mathcal {D}}=2,3\) dimensions. This implies \(D_\text {L} \sim 2^{{\mathcal {L}}^{{\mathcal {D}}-1}}\), resulting in an exponential scaling that severely restricts the scalability of numerical calculations.
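To get a feeling for the numbers involved, the three scalings can be turned into rough estimates of \(D_\text {L} \sim 2^{{\mathcal {S}}}\). The sketch below is purely indicative: the prefactor `c` is an assumed constant set to 1, and the function name is ours.

```python
import math


def bond_dimension_estimate(size, dim, gapped=True, c=1.0):
    """Rough estimate D_L ~ 2**S for a system of linear size `size`.

    The prefactor c is an assumed constant; in dim > 1 only the gapped
    (area-law) case listed above is considered.
    """
    if dim == 1:
        entropy = c if gapped else c * math.log2(size)
    else:
        entropy = c * size ** (dim - 1)
    return 2.0 ** entropy


print("1D gapped,  L = 100:", bond_dimension_estimate(100, 1, gapped=True))
print("1D gapless, L = 64: ", bond_dimension_estimate(64, 1, gapped=False))
print("2D gapped,  L = 10: ", bond_dimension_estimate(10, 2))
print("3D gapped,  L = 10: ", bond_dimension_estimate(10, 3))
```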

In short, we see that the truncation strategy employed in DMRG is in principle suitable for 1D quantum models (gapped or gapless), but not in higher dimensions. Notable exceptions are two-dimensional problems whose solutions can be obtained or extrapolated from lattices where the size along one of the two dimensions is rather small, such as stripes or cylinders (see Ref. [41] for a review on the use of DMRG to study two-dimensional systems). In fact, there is a relation between dimensionality and range of interactions in finite systems (Fig. 4), from which it also becomes apparent that DMRG is in practice only efficient when applied to models with short-range interactions. Finally, it is reasonable to expect that the previous statements may hold not only for ground states but also for a few low-lying states.
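The relation between dimensionality and interaction range can be made explicit by mapping a square lattice onto a chain. The sketch below assumes a snake-like (boustrophedon) ordering of the sites, which is one ordering that reproduces the maximal range of \(2N-1\) quoted for an \(N \times N\) lattice; the function names are illustrative.

```python
def snake_index(row, col, n):
    """Position along the 1D chain of site (row, col) of an n x n lattice."""
    # Even rows are traversed left to right, odd rows right to left.
    return row * n + (col if row % 2 == 0 else n - 1 - col)


def max_hopping_range(n):
    """Largest 1D separation between nearest neighbors of the 2D lattice."""
    longest = 0
    for row in range(n):
        for col in range(n):
            here = snake_index(row, col, n)
            if col + 1 < n:   # horizontal bond
                longest = max(longest, abs(snake_index(row, col + 1, n) - here))
            if row + 1 < n:   # vertical bond
                longest = max(longest, abs(snake_index(row + 1, col, n) - here))
    return longest


for n in (2, 3, 4, 8):
    print(n, max_hopping_range(n))
```

The interaction range of the effective 1D model therefore grows linearly with the width of the lattice, which is why narrow strips or cylinders remain tractable while wide lattices do not.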

3.1.4 Code implementation

Benchmark results of truncated iterative diagonalization and infinite-system DMRG methods applied to open-ended spin-1 Heisenberg chains. Ground-state energy per spin, as a function of the number of spins, obtained with TID (a) and infinite-system DMRG (b), for different values of D, which reflects the truncation employed, as described in the text. In both algorithms, every iteration implies the diagonalization of a Hamiltonian matrix of maximal dimension \(9D^2 \times 9D^2\). Larger matrices are allowed if degeneracies to within numerical precision are found at the truncation threshold, as explained in the code documentation. The dashed black line marks the known result in the thermodynamic limit [42]

In Supplementary Information, we present a didactic code implementation of the infinite-system DMRG algorithm, also made available at https://github.com/GCatarina/DMRG_didactic. In this documented Jupyter notebook, written in Python, we focus on tackling spin-1 Heisenberg chains with open boundary conditions. The generalization to different spin models is completely straightforward. As for other types of quantum problems (e.g., fermionic models), this code can be readily used after simply defining the operators that appear in the corresponding Hamiltonian. We also note that a slight modification of the algorithm has been proposed to better deal with periodic boundary conditions [43].
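Defining the operators of a model is the only model-specific input. As an illustration that is independent of the notebook, the sketch below builds the spin-1 operators in the basis \(\{\vert +1 \rangle , \vert 0 \rangle , \vert -1 \rangle \}\) and the two-site Heisenberg coupling; the exchange constant `j` and the function name are assumptions of this example.

```python
import numpy as np

# Spin-1 operators in the basis {|+1>, |0>, |-1>} (hbar = 1).
sz = np.diag([1.0, 0.0, -1.0])
sp = np.sqrt(2.0) * np.diag([1.0, 1.0], k=1)   # raising operator S^+
sm = sp.T                                       # lowering operator S^-


def heisenberg_bond(j=1.0):
    """Two-site Hamiltonian J S_1 . S_2 as a 9 x 9 matrix."""
    return j * (np.kron(sz, sz) + 0.5 * (np.kron(sp, sm) + np.kron(sm, sp)))


energies = np.linalg.eigvalsh(heisenberg_bond())
print(np.round(energies, 10))
```

For \(J = 1\), the spectrum consists of a singlet at \(-2\), a triplet at \(-1\), and a quintet at \(+1\), as expected from \(\textbf{S}_1 \cdot \textbf{S}_2 = [S(S+1) - 4]/2\) with total spin \(S = 0, 1, 2\).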

Magnetic properties of spin-1 Heisenberg chains computed by infinite-system DMRG. Local distribution of magnetization for the ground state with quantum number \(S_z = +1\) (where \(S_z\) denotes the total spin projection) of an open-ended chain composed of 100 spins. The calculated local moments are exponentially localized at both edges of the chain, reflecting the fractionalization of the ground state into two effective spin-1/2 edge states. These results were obtained with an adaptive implementation in which the truncation error at every iteration was imposed to be below \(10^{-4}\). A small Zeeman term was added to the Hamiltonian to target the \(S_z = +1\) ground state

For pedagogical purposes, our Jupyter notebook is structured in three parts. First, we adopt the scheme described in Fig. 3, but make no truncations. This is the same as doing exact diagonalization. It is observed that, at every iteration, the running time of the code increases dramatically, reflecting the exponential wall problem. Second, maintaining the same scheme, we make a truncation where the D lowest-energy states of the block L (R) are used to obtain the new block S (E). This is equivalent to the TID approach. In Fig. 5a, we plot the ground state energy per spin, as a function of the number of spins, obtained with this strategy, for different values of D. Our calculations show a disagreement of at least \(5 \%\) with the reference value [42], which does not appear to be overcome by considering larger values for D. Therefore, we conclude that TID is not fully reliable for this problem. Third, we implement the infinite-system DMRG, where we first set a fixed value for \(D \equiv D_\text {L} = D_\text {R}\) in the truncations. Computing the ground state energy per spin with this method, the results obtained are very close to the reference value, even for small values of D, as shown in Fig. 5b. For completeness, we also implement an adaptive version of the algorithm where the values of \(D_\text {L/R}\) used at every iteration are set so as to keep the truncation error, given by Eq. (9), below a certain threshold. This adaptive implementation is used to compute the expectation values presented in Fig. 6, which show a known signature of the emergence of fractional spin-1/2 edge states in the model [42].

3.2 Finite-system scheme

Breakdown of the finite-system DMRG routine. At the first stage, infinite-system DMRG is used to obtain an effective description for the target wave function of a system with desired size. This is followed by a sweeping protocol where one of the blocks is allowed to grow while the other is shrunk, thus keeping the total system size fixed. To prevent the exponential scaling, DMRG truncations (targeting the intended state) are employed for the growing blocks. The shrinking blocks are retrieved from memory, using stored data of the latest description of the block with such size (either from the infinite-system routine or from an earlier step of the sweeping procedure). The growth direction is reversed when the shrinking block reaches its minimal size. A typical strategy is to fix a maximal truncation error for the DMRG truncations, and perform sweeps until convergence in energy (and/or other physical quantities of interest) is attained; this approach ensures that the description of the target wave function is improved (or at least not worsened) at each step of the sweeping protocol

Within the infinite-system DMRG approach, the size of the system that we aim to describe increases at every iteration of the algorithm. Therefore, the wave function targeted at each step is different. This can lead to a poor convergence of the variational problem or even to incorrect results. For instance, a metastable state can be favored by edge effects in the early DMRG steps, where the embedding with the environment is not so effective due to its small size, and the lack of “thermalization” in the following iterations may not allow for a proper convergence to the target state.

In this subsection, we present the so-called finite-system DMRG method, which manages to fix the aforementioned issues to a large extent. The breakdown of this algorithm is shown in Fig. 7. Its first step consists in applying the infinite-system routine to obtain an effective description for the target wave function of a system with desired size. Then, a sweeping protocol is carried out to improve this description. In this part, one of the blocks is allowed to grow, while simultaneously shrinking the other, thus keeping the overall system size fixed. DMRG truncations (targeting the intended state) are employed for the growing blocks, whereas the shrinking blocks are retrieved from previous steps. When the shrinking block reaches its minimal size, the growth direction is reversed. A complete loop of this protocol, referred to as a sweep, entails the shrinkage of the two blocks to their minimal sizes, and the return to the initial block configuration. For a fixed truncation error, every step of a sweep must lead to a better (or at least equivalent) description of the target wave function; when the target is the ground state, this implies a variational optimization in which the estimated energy is a monotonically non-increasing function of the number of sweep steps performed. This property is at the heart of the MPS formulation of DMRG (see Sect. 5.1).
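The bookkeeping of a sweep can be illustrated without any physics. The sketch below lists the block sizes visited during one full sweep, assuming that the superblock is made of a left block, two sites, and a right block, and that the sweep starts from the symmetric configuration produced by the infinite-system stage. The function name and the default minimal block size of one site are illustrative choices.

```python
def sweep_schedule(n_sites, min_block=1):
    """Sizes (left block, right block) visited during one full sweep.

    Assumes an even n_sites and a superblock of the form
    left block + two sites + right block.
    """
    start = (n_sites - 2) // 2
    max_block = n_sites - 2 - min_block
    left = start
    steps = []
    # Grow the left block until the right one is minimal, then grow the right
    # block until the left one is minimal, and finally return to the start.
    for target in (max_block, min_block, start):
        step = 1 if target > left else -1
        while left != target:
            left += step
            steps.append((left, n_sites - 2 - left))
    return steps


print(sweep_schedule(8))
```

In an actual implementation, each entry of this list corresponds to one superblock diagonalization, with a DMRG truncation applied to the growing block and the shrinking block retrieved from memory.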

As a final remark, we wish to clarify a few subtleties related to the variational character of DMRG. To that end, let us focus on the case where the target is the ground-state wave function. According to the derivation presented in Sect. 3.1.2, it is straightforward to check that the DMRG truncations are variational in the number of kept states: a larger value of \(D_\text {L/R}\) implies a better (or at least equivalent) description of the exact wave function, and, hence, a non-increasing energy estimation. On top of that, we have just argued that, as long as we keep a fixed truncation error, the finite-system method is also variational in the number of sweeps. Hence, the finite-system algorithm has an additional knob of optimization, the number of sweeps, that allows one to improve the results of the infinite-system scheme.

4 Tensor-network basics

The modern formulation of DMRG is built upon tensor networks [14,15,16]. Indeed, virtually all state-of-the-art implementations of DMRG [17, 18] make use of MPSs and MPOs. Although pedagogical reviews on these and other tensor networks are available [44,45,46], their scope goes far beyond DMRG, as they provide the reader with the required background to explore the broader literature on tensor-network methods. Here, we take a more focused approach, giving the minimum necessary framework on tensor networks to understand the MPS-based version of the finite-system DMRG algorithm, which is discussed in detail in Sect. 5.

4.1 Diagrams and key operations

A tensor can be simply regarded as a mathematical object that stores information in a way that is determined by the number of indices \(r \in {\mathbb {N}}^0\) (referred to as the rank of the tensor), their dimensions \(\{d_i\}_{i=1}^{r}\) (i.e., the \(i^{\text {th}}\) index can take \(d_i \in {\mathbb {N}}^+\) different values), and the order by which those indices are organized. The total number of entries of a tensor is \(\prod _{i=1}^{r} d_i\). The most familiar examples of tensors are scalars (i.e., rank-0 tensors, each corresponding to a single number, thus not requiring any labels), vectors (i.e., rank-1 tensors, where every value is labeled by a single index that takes as many different values as the size of the vector), and matrices (i.e., rank-2 tensors, where every entry is characterized by two indices, one labeling the rows and another the columns). In general, each number stored in a rank-r tensor is labeled in terms of an ordered array of r indices, which can be regarded as its coordinates within the structure of the tensor. In Fig. 8a–c, we show how tensors are represented diagrammatically.

Diagrammatic representation of simple examples of tensors: a vector (i.e., rank-1 tensor), b matrix (i.e., rank-2 tensor), and c rank-3 tensor. Tensor networks are constructed by joining individual tensors, which is accomplished by contracting (i.e., summing over) indices in common. d Example of contraction between rank-2 and rank-3 tensors. Common index j is contracted. Free indices i, k, and l are represented through open legs. e Example of canonical tensor network, MPS. Each local tensor has one free index. There is one contracted index (also known as bond) between every pair of adjacent tensors. f Representation of generic rank-N tensor

Although the number of indices, their dimensions, and the order by which they are organized are crucial to unambiguously label the entries of a tensor, these properties—to which we shall refer as the shape of the tensor—are immaterial in the sense that we can fuse, split, or permute its indices without actually changing the information contained within it. For clarity, let us consider the following \(2 \times 4\) matrix \(A_{\alpha \beta }\) with \(\alpha \in \{0,1\}\), \(\beta \in \{0,1,2,3\}\):

We can reshape this rank-2 tensor by fusing its two indices, yielding the 8-dimensional vector \(A_{(\alpha , \beta )} \equiv A_{\gamma }\) if the row index \(\alpha \) is chosen to precede the column index \(\beta \)

or \(A_{(\beta , \alpha )} \equiv A_{\delta }\) if \(\beta \) takes precedence

Likewise, we can split the \(d=4\) column index \(\beta \) into two \(d=2\) indices, \(\beta _1, \beta _2 \in \{0,1\}\), corresponding to the least and most significant digits of the binary decomposition of \(\beta \), respectively. This yields the rank-3 tensor

where \(\beta _2\) corresponds to the rightmost leg in the diagrams above. Alternatively, we can leave the rank unchanged, permuting the row and column indices to yield the \(4 \times 2\) transpose matrix

In all three cases, even though we end up with tensors of different shape, all of them store exactly the same content as the original matrix, albeit in a different way. This is the key point: reshaping a tensor (by fusing or splitting indices) or simply permuting its indices merely restructures how the information is stored, leaving the information itself unaffected. In the context of numerical implementations, we note that these tensor operations can be applied to arbitrary-rank tensors via standard built-in functions (e.g., numpy.reshape and numpy.transpose in Python). The time complexity of reshaping a tensor or permuting its indices is typically negligible, as these operations usually just modify the metadata associated with the tensor that defines its shape and memory layout (its shape and strides, in NumPy) rather than actually moving its elements around in memory; a copy is only required in some cases, such as fusing indices right after a permutation.
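The fusing, splitting, and permuting operations can be tried out with a few lines of NumPy. The matrix below is a hypothetical stand-in (its entries are simply 0 to 7), not the matrix used in the text, and the digit ordering of the split follows NumPy's default row-major convention, which need not coincide with the convention of the diagrams.

```python
import numpy as np

# Hypothetical 2 x 4 matrix A[alpha, beta] with entries 0..7.
A = np.arange(8).reshape(2, 4)

# Fuse (alpha, beta) with alpha taking precedence: plain row-major flattening.
A_gamma = A.reshape(8)

# Fuse (beta, alpha) with beta taking precedence: permute first, then flatten.
A_delta = A.transpose(1, 0).reshape(8)

# Split beta (d = 4) into two d = 2 indices. In NumPy's default (C) order the
# first new index is the most significant digit: beta = 2 * b_first + b_last.
A_split = A.reshape(2, 2, 2)

# Permutation only: the 4 x 2 transpose.
A_T = A.transpose(1, 0)

# Views share memory with A; fusing after a permutation forces a copy.
transpose_is_view = np.shares_memory(A, A_T)
fused_after_permute_is_view = np.shares_memory(A, A_delta)
```

All four arrays hold the same eight numbers; only the way they are addressed differs.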

Comparison of two strategies to contract a tensor network comprising three tensors. All indices, both free and contracted, are assumed to have dimension D for the purpose of estimating scaling of cost of contractions. a First, index \(\gamma \) linking tensors B and C is contracted, and then, indices \(\alpha \) and \(\beta \) are summed over, yielding an overall cost of \({\mathcal {O}}(D^5)\). b First, index \(\alpha \) linking tensors A and B is contracted, and then, indices \(\beta \) and \(\gamma \) are summed over, resulting in \({\mathcal {O}}(D^4)\) cost. Even though both strategies yield the same outcome, b is preferred, since its execution time scales more favorably with the index dimension D

Thus far, we have only considered isolated tensors. However, based on the diagrammatic representations illustrated in Fig. 8a–c, where each index corresponds to a leg, we can think of joining two individual tensors by linking a pair of legs, one from each tensor, as shown in Fig. 8d. Algebraically, such link/bond corresponds to a sum over a common index shared by the two tensors; the outcome of this operation can be explicitly obtained in Python via numpy.einsum. Of course, this process can be generalized to an arbitrary number of tensors, resulting in tensor networks of arbitrary shapes and sizes. Here, we will focus on the so-called matrix product states (MPSs), relevant for DMRG. A diagram of an MPS is shown in Fig. 8e; it comprises both free indices (i.e., open legs) and contracted indices (i.e., bonds). The elements of an MPS are uniquely identified by the free indices, but, unlike the case of an isolated tensor, their values are not immediately available, as the contracted indices must be summed over to obtain them. In the context of DMRG, an MPS with N free/physical indices is typically used to represent a quantum state of a system with N sites.

Even though the order by which sums over contracted indices are performed does not affect the obtained result, different orders may produce substantially different times of execution, especially if the tensor networks in question are large. For the 1D tensor networks herein considered, the type of contractions that we need to deal with are essentially those shown in Fig. 9, for which there are two possible contraction strategies. Contracting multiple bonds of a tensor network essentially amounts to performing nested loops. When we sum over a given contracted index, corresponding to the current innermost loop, we effectively have to fix the dummy variables of the outer loops. However, all possible values that such dummy variables can take must be considered. In the scheme of Fig. 9a, we first contract the D-dimensional bond linking tensors B and C, which involves order \({\mathcal {O}}(D)\) operations on its own, but we must repeat this for all possible combinations of values of all other indices of tensors B and C, which are \({\mathcal {O}}(D^4)\), yielding a total scaling of \({\mathcal {O}}(D^5)\). The second step contracts both bonds linking A to BC, taking \({\mathcal {O}}(D^4)\) operations. For Fig. 9b, in turn, contracting first the bond between A and B takes \({\mathcal {O}}(D^4)\) operations, and the same scaling is obtained for the second step. Hence, (b) has an overall cost of \({\mathcal {O}}(D^4)\), which is more favorable than the \({\mathcal {O}}(D^5)\) scaling of (a). In general, the problem of determining the optimal contraction scheme is known to be NP-hard [47, 48], but this issue only arises in two and higher dimensions. For our purposes, the cases described above are all we need to know about tensor-network contractions.
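As a rough illustration of the two contraction orders, the sketch below builds three random tensors with the connectivity described for Fig. 9 (A shares one index with B and one with C, while B and C share a third) and contracts them both ways with numpy.einsum. The index labels and the operation counts are assumptions made for this example; the counts are simple estimates (summed dimensions times fixed dimensions), not measured timings.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 6
A = rng.standard_normal((D, D))      # indices (alpha, beta)
B = rng.standard_normal((D, D, D))   # indices (alpha, gamma, i)
C = rng.standard_normal((D, D, D))   # indices (beta, gamma, j)

# Strategy (a): contract gamma first. The intermediate has four open indices,
# so this step alone takes O(D^5) operations.
BC = np.einsum("agi,bgj->aibj", B, C)
out_a = np.einsum("ab,aibj->ij", A, BC)

# Strategy (b): contract alpha first. The intermediate has three open indices,
# and both steps take O(D^4) operations.
AB = np.einsum("ab,agi->bgi", A, B)
out_b = np.einsum("bgi,bgj->ij", AB, C)

# Estimated multiply-add counts for each strategy.
cost_a = D * D**4 + D**2 * D**2
cost_b = D * D**3 + D**2 * D**2
```

Both strategies return the same D x D result, but the estimated cost of (a) exceeds that of (b) by a factor that grows linearly with D.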

Tensor networks can be regarded as tensors with internal structure. Therein lies their great virtue: such internal structure allows for a compact storage of information, which greatly reduces the memory requirements of the variational methods that use these tensor networks as their ansätze. For concreteness, let us compare the N-site MPS shown in Fig. 8e to an isolated rank-N tensor (resultant, e.g., from contracting all the bonds of the N-site MPS), as shown in Fig. 8f. Assuming that free and contracted indices have dimension d and D, respectively, while the isolated tensor requires storing a total of \(d^{N}\) numbers in memory, the MPS only involves saving the entries of \(N-2\) rank-3 \(D \times d \times D\) tensors in the bulk and 2 rank-2 \(d \times D\) tensors at the ends, yielding \({\mathcal {O}}(N D^2 d)\) numbers saved in memory. In other words, the memory requirements of methods based on MPSs scale linearly with the system size N, in contrast with the exponential scaling associated with an unstructured tensor.
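The counting above is easy to reproduce. The helper names below are hypothetical, and a uniform bond dimension D is assumed on every bond, as in the text.

```python
def mps_entries(N, d, D):
    """Entries stored by an N-site MPS (N >= 2) with uniform bond dimension D."""
    bulk = (N - 2) * D * d * D  # rank-3 tensors in the bulk
    ends = 2 * d * D            # rank-2 tensors at the two ends
    return bulk + ends


def dense_entries(N, d):
    """Entries stored by an unstructured rank-N tensor."""
    return d ** N


# Example: spin-1/2 chain (d = 2) with bond dimension D = 4.
mps_10, mps_20 = mps_entries(10, 2, 4), mps_entries(20, 2, 4)
dense_10, dense_20 = dense_entries(10, 2), dense_entries(20, 2)
```

Doubling N from 10 to 20 adds a fixed number of entries per site to the MPS, whereas the dense tensor grows by a factor of \(d^{10}\).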

4.2 Singular value decomposition

The success of the original formulation of DMRG in tackling quantum many-body problems in a scalable way rests upon the projection of the Hilbert space onto the subspace spanned by the highest-weight eigenstates of the reduced density matrix on either side of the bipartition considered. In the MPS-based formulation, the analogous operation (see Sect. 5.2) corresponds to the singular value decomposition (SVD) of the local tensors that compose the MPS.

Singular value decomposition for image compression. The original photo (taken at Fisgas de Ermelo, Portugal) is stored as a \(3335 \times 2668\) matrix, where each entry corresponds to a pixel and the values encode the grayscale color. The compressed images are obtained by applying SVD to this matrix, keeping only the highest singular values (namely, \(1\%\) and \(5\%\) of the total 2668 singular values). The distribution of the singular values is shown in the rightmost panel

SVD consists of factorizing any \(m \times n\) real or complex matrix M in the form \(M = {\mathcal {U}} {\mathcal {S}} {\mathcal {V}}^{\dagger }\), where \({\mathcal {U}}\) and \({\mathcal {V}}\) are \(m \times m\) and \(n \times n\) unitary matrices, respectively, and \({\mathcal {S}}\) is an \(m \times n\) matrix with non-negative real numbers (some of which possibly zero) along the diagonal and all remaining entries equal to zero

In the schematic representations of SVD above, the parallel horizontal and vertical lines forming the grids within \({\mathcal {U}}\) and \({\mathcal {V}}^{\dagger }\) serve to illustrate that the respective rows and columns form an orthonormal set, which is the defining property of a unitary matrix. As highlighted by the shaded regions, all entries of the last \(n - m\) columns (if \(m < n\)) or the last \(m - n\) rows (if \(m > n\)) of \({\mathcal {S}}\) are zero, so we can remove such redundant information by truncating \({\mathcal {U}}\), \({\mathcal {S}}\) and \({\mathcal {V}}^{\dagger }\) (the truncated versions of which we write as U, S and \(V^\dagger \)) accordingly

This is the so-called thin or reduced SVD, as opposed to the full SVD described earlier. Both are implemented in Python via numpy.linalg.svd, setting the Boolean input parameter full_matrices appropriately. Henceforth, unless stated otherwise, we shall consider the thin SVD, as it yields the most compact factorization of the original matrix M.

A brief overview of some terminology related to SVD is in order. First, in the thin SVD diagrams above, \(V^{\dagger }\) in the \(m < n\) case and U in the \(m > n\) case are rectangular matrices, and therefore, neither is unitary. Nevertheless, as illustrated through the parallel lines, the rows of \(V^{\dagger }\) in the \(m < n\) case and the columns of U in the \(m > n\) case still form an orthonormal basis, so the former is said to be right-normalized (i.e., \(V^{\dagger } (V^{\dagger })^{\dagger } = V^{\dagger } V = \mathbb {1}\)) and the latter left-normalized (i.e., \(U^{\dagger } U = \mathbb {1}\)). The columns of U and the rows of \(V^{\dagger }\) are referred to as left- and right-singular vectors. The diagonal entries of the \({\text {min}}\{ m, n\} \times {\text {min}}\{ m, n\}\) matrix S are called singular values. The Schmidt rank \(r_{\text {S}} \le {\text {min}}\{ m, n\}\) is the number of nonzero singular values. By exploiting the gauge freedom of SVD (see Appendix A), the singular values are conventionally stored in descending order, which is useful when truncations are considered, as explained below.
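The difference between the full and thin SVD, and the one-sided normalization of the rectangular factor, can be checked numerically. The matrix below is a random example with \(m < n\); the variable names are chosen for this sketch only.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 3, 5
M = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))

# Full SVD: U is m x m and Vh is n x n; s holds the min(m, n) singular values.
U_full, s_full, Vh_full = np.linalg.svd(M, full_matrices=True)

# Thin SVD: for m < n, Vh is truncated to an m x n matrix.
U, s, Vh = np.linalg.svd(M, full_matrices=False)

# The thin factors still reproduce M exactly.
M_rebuilt = U @ np.diag(s) @ Vh

# Vh is right-normalized (orthonormal rows) but not unitary.
rows_orthonormal = np.allclose(Vh @ Vh.conj().T, np.eye(m))
cols_orthonormal = np.allclose(Vh.conj().T @ Vh, np.eye(n))
```

Note that numpy.linalg.svd returns the singular values as a one-dimensional array, already sorted in descending order, and returns \(V^{\dagger }\) rather than V.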

The application of the thin SVD to a rectangular matrix allows for a trivial truncation of the bond dimensions between the factorized matrices. Further truncations can be implemented by discarding singular values of negligible magnitude. If the discarded singular values are zero, this procedure is exact. Otherwise, some information is lost, but the strategy of discarding the lowest singular values is known to yield the optimal truncation [49, 50]. Therefore, SVD is widely used for data compression, being particularly efficient in cases where the singular values decay rapidly. In Fig. 10, we show such an example, where SVD is used to compress a black-and-white photograph. We observe that, by keeping only the \(1\%\) highest singular values, the image obtained already exhibits most of the features of the original photo, though noticeably blurred out. This blur is significantly reduced when the number of kept singular values is increased to just \(5\%\).
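A minimal sketch of an SVD truncation is given below for a random matrix (an assumption of this example; random matrices have slowly decaying singular values, so they compress poorly compared with the photograph of Fig. 10). It also checks the standard result that the Frobenius-norm error of the truncated matrix equals the root of the sum of the squared discarded singular values. The helper `truncate` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
M = rng.standard_normal((40, 30))
U, s, Vh = np.linalg.svd(M, full_matrices=False)


def truncate(U, s, Vh, k):
    """Keep only the k largest singular values and the matching vectors."""
    return U[:, :k], s[:k], Vh[:k, :]


k = 5
U_k, s_k, Vh_k = truncate(U, s, Vh, k)
M_k = (U_k * s_k) @ Vh_k  # rank-k approximation of M

# Frobenius-norm error versus the weight of the discarded singular values.
error = np.linalg.norm(M - M_k)
discarded_weight = np.sqrt(np.sum(s[k:] ** 2))
```

In a DMRG setting, the analogous discarded weight is what one would monitor as the truncation error.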

Singular value decomposition of rank-3 tensor A belonging to a matrix product state. a Central/physical index \(\beta \) is fused with leftmost index \(\alpha \) to yield left-normalized tensor U at current site after SVD and index splitting. The remaining \(S V^{\dagger }\) is contracted with the local tensor that appears to the right of A in the MPS. b Central/physical index \(\beta \) is fused with rightmost index \(\gamma \) to yield right-normalized tensor \(V^{\dagger }\) at current site after SVD and index splitting. The remaining US is contracted with the local tensor that appears to the left of A in the MPS. Triangular shapes indicate left- and right-normalization of U and \(V^\dagger \), respectively. Diamond-shaped diagram illustrates that S is diagonal

A wave function can always be exactly represented by an MPS, although this will generally entail an exponential growth of the bond dimensions from the ends toward the center of the MPS (see Sect. 4.3.3). Within the context of MPS-based DMRG, SVD is adopted both to truncate the bond dimensions of the MPS and to transform it into convenient canonical forms, which we shall introduce in Sect. 4.3.2. However, since SVD is a linear algebraic method, it applies to matrices and not to the rank-3 tensors found in the non-terminal sites of an MPS. As a result, these tensors have to be reshaped by fusing two indices. There are two possibilities for this, depending on which leg we choose to fuse the physical index with (Fig. 11). In Fig. 11a, we end up with a left-normalized tensor U at the current site, with the remaining \(S V^{\dagger }\) being contracted with the next local tensor to the right of the MPS. In Fig. 11b, the right-normalized tensor \(V^{\dagger }\) is the final form of the tensor at the current site, and US is contracted with the next local tensor to the left. The expressive power of an MPS is determined by the bond dimension cutoff D, which sets the maximum size of the contracted indices (e.g., \(\alpha \), \(\gamma \), and \(\epsilon \) in Fig. 11). The dimension d of the physical indices (e.g., \(\beta \) in Fig. 11) is fixed by the local degrees of freedom of the problem under consideration (e.g., \(d = 2s+1\) for a spin-s quantum model). As a result, in Fig. 11a, b, both dimensions of the matrix resulting from reshaping the rank-3 tensor are \({\mathcal {O}}(D)\). Computing the SVD of an \(m \times n\) matrix (with \(m > n\)) takes \({\mathcal {O}}(m^2 n + n^3)\) floating-point operations [51]. Hence, within the context of MPS-based DMRG, the time complexity of SVD is \({\mathcal {O}}(D^3)\).
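The two ways of fusing the physical index before the SVD can be sketched as follows. The index order (left bond, physical, right bond) and the function names `split_left` and `split_right` are assumptions of this example; no truncation is applied.

```python
import numpy as np


def split_left(A):
    """Fuse (left bond, physical); return left-normalized U and the remainder S Vh."""
    Dl, d, Dr = A.shape
    U, s, Vh = np.linalg.svd(A.reshape(Dl * d, Dr), full_matrices=False)
    return U.reshape(Dl, d, -1), np.diag(s) @ Vh


def split_right(A):
    """Fuse (physical, right bond); return the remainder U S and right-normalized Vh."""
    Dl, d, Dr = A.shape
    U, s, Vh = np.linalg.svd(A.reshape(Dl, d * Dr), full_matrices=False)
    return U * s, Vh.reshape(-1, d, Dr)


rng = np.random.default_rng(3)
A = rng.standard_normal((4, 2, 3))

U, SVh = split_left(A)
left_ok = np.allclose(np.einsum("asb,asc->bc", U.conj(), U), np.eye(U.shape[2]))
A_from_left = np.einsum("asb,bc->asc", U, SVh)

US, V = split_right(A)
right_ok = np.allclose(np.einsum("asb,csb->ac", V, V.conj()), np.eye(V.shape[0]))
A_from_right = np.einsum("ab,bsc->asc", US, V)
```

In an MPS, `SVh` would be absorbed into the tensor to the right and `US` into the tensor to the left, as described for Fig. 11a, b.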

4.3 Matrix product states

This subsection introduces the key operations required to manipulate MPSs. In particular, we discuss how to compute overlaps between two MPSs and expectation values of MPSs for local operators (see Footnote 7). Three MPS canonical forms that simplify some of these computations are introduced; the construction of all of them merely involves a sequential sitewise application of SVD, as described in Fig. 11. For completeness, we also explain how to obtain an MPS representation of a general wave function, even though this procedure is not essential for DMRG. In general, we shall consider N-site MPSs with bond dimension D and physical index dimension d.

4.3.1 Overlaps

Diagrammatic representation of a MPS for ket \(\vert {\psi } \rangle \), b MPS for bra \(\langle {\psi }\vert \), and c contraction of two previous MPSs to compute norm \(\langle \psi |\psi \rangle \). In c, singleton dummy indices \(\beta _0\), \(\beta '_0\), \(\beta _N\), and \(\beta '_N\) were added on either side of both MPSs to ease discussion of efficient method to contract tensors down to scalar \(\langle \psi |\psi \rangle \) (see Fig. 13)

Using Dirac’s bra–ket notation, the MPS representations of a ket \(\vert {\psi } \rangle \) and its bra \(\langle {\psi }\vert \) are shown in Fig. 12a, b, respectively. The diagrammatic representation of the norm of this state, \(\langle \psi |\psi \rangle \), amounts to linking the two MPSs by joining the physical indices \(\{ \sigma _i \}_{i = 1}^{N}\), as shown in Fig. 12c. The question, then, is how to contract such a tensor network to arrive at the scalar \(\langle \psi |\psi \rangle \). A naive approach would be to fix the same set of physical indices in the bra and the ket (\(\sigma _i = \sigma '_i\)), contract the remaining bonds (\(N-1\) at the ket and \(N-1\) at the bra), multiply the scalars obtained in the bra and the ket, and then sum over all possible values of the physical indices. The problem, however, is that \(\{ \sigma _i \}_{i = 1}^{N}\) take \(d^{N}\) different values, so this would be exponentially costly in N. Fortunately, there is a contraction scheme linear in N that resembles the process of closing a zipper [52].

Schematic description of closing-the-zipper strategy to perform contraction of tensor network resulting from the overlap between two MPSs representing the ket \(\vert {\psi } \rangle \) and the bra \(\langle {\psi }\vert \) of a given state to yield \(\langle \psi |\psi \rangle \). The steps are ordered from top to bottom. In the first step, \(C_{[0]}\) is initialized as the \(1 \times 1\) identity matrix and introduced on the left end of the tensor network, being contracted with the leftmost local tensors \(A_{[1]}\) and \(A^{\dagger }_{[1]}\) through the singleton dummy indices \(\beta _0\) and \(\beta '_0\). The contraction of the three tensors \(C_{[0]}\), \(A_{[1]}\), and \(A^{\dagger }_{[1]}\)—following the strategy described in Fig. 9b—produces the rank-2 tensor \(C_{[1]}\). This three-tensor contraction is repeated \(N-1\) times until arriving at the final \(1 \times 1\) \(C_{[N]}\), which is just the desired \(\langle \psi |\psi \rangle \). Although this figure considers the computation of the norm of a state \(\vert {\psi } \rangle \), this scheme can be identically applied to compute the overlap between two distinct MPSs. The closing-the-zipper method can be similarly performed from right to left instead. Assuming the free indices of the MPSs have dimension d and the bond dimension cutoff is D, the closing-the-zipper method cost scales as \({\mathcal {O}}(N D^3 d)\)

In Fig. 13, we illustrate this closing-the-zipper contraction scheme of the overlap between two MPSs (see Footnote 8). The contraction is divided into N steps; at the \(n^{\text {th}}\) step, the local tensors \(A_{[n]}\) and \(A^{\dagger }_{[n]}\) are contracted with the tensor \(C_{[n-1]}\) that stores the outcome of all contractions from previous steps, yielding the tensor \(C_{[n]}\) to be used in the next step

To make sense of the first and final steps, it is helpful to add singleton dummy indices at each end of the two MPSs, as illustrated in Fig. 12c. This allows us to apply the first step of the recursive process depicted in Fig. 13 with \(C_{[0]}\) initialized as the \(1 \times 1\) identity matrix (i.e., the scalar 1). At the \(N^{\text {th}}\) and final step, the recursive relation results in the rank-2 tensor \(C_{[N]}\), with both of its indices \(\beta _N\) and \(\beta '_N\) having trivial dimension 1. This scalar corresponds precisely to the norm \( \langle \psi \vert \psi \rangle \) we were after. Of course, we can cover the tensor network from right to left instead, producing exactly the same outcome. At each step, we make use of the tensor contraction scheme discussed in Sect. 4.1 (see Fig. 9b), resulting in an \({\mathcal {O}}(N D^3 d) \sim {\mathcal {O}}(N D^3)\) scaling overall. Unlike the naive approach, the closing-the-zipper strategy allows for a scalable computation of overlaps between MPSs, which is crucial for the practicality of MPS-based DMRG.
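A minimal sketch of the closing-the-zipper scheme is given below. It assumes each MPS is stored as a list of arrays with index order (left bond, physical, right bond) and singleton bonds at both ends; the helpers `random_mps`, `overlap`, and `to_dense` are hypothetical names. The dense contraction in `to_dense` costs \(d^{N}\) and is included only to check the result on a small chain.

```python
import numpy as np


def random_mps(N, d, D, rng):
    """Random complex MPS: list of (Dl, d, Dr) arrays with singleton end bonds."""
    dims = [1] + [D] * (N - 1) + [1]
    return [rng.standard_normal((dims[i], d, dims[i + 1]))
            + 1j * rng.standard_normal((dims[i], d, dims[i + 1]))
            for i in range(N)]


def overlap(bra, ket):
    """Compute <bra|ket> from left to right at O(N D^3 d) cost."""
    C = np.ones((1, 1), dtype=complex)  # C_[0]; indices (bra bond, ket bond)
    for A_bra, A_ket in zip(bra, ket):
        # First absorb the ket tensor, then the conjugated bra tensor (Fig. 9b order).
        T = np.einsum("xa,asb->xsb", C, A_ket)
        C = np.einsum("xsb,xsy->yb", T, A_bra.conj())
    return C[0, 0]


def to_dense(mps):
    """Contract all bonds into a d**N vector (exponential cost; for checks only)."""
    psi = np.ones(1, dtype=complex)
    for A in mps:
        psi = np.tensordot(psi, A, axes=([-1], [0]))
    return psi.reshape(-1)


rng = np.random.default_rng(4)
ket = random_mps(5, 2, 3, rng)
bra = random_mps(5, 2, 3, rng)
zipper_value = overlap(bra, ket)
dense_value = np.vdot(to_dense(bra), to_dense(ket))
norm_squared = overlap(ket, ket)
```

The same routine yields the squared norm when the bra and the ket are the same MPS.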

4.3.2 Canonical forms

It is possible to cast the MPS in a suitable form that effectively renders most or even all steps of the closing-the-zipper scheme trivial, which considerably simplifies the tensor-network diagrams without requiring any detailed calculations. Suppose the MPS is in left-canonical form, in which case all local tensors \(\{ A_{[i]} \}_{i=1}^{N}\) are left-normalized, that is

or \(\sum _{\beta _{i-1}, \sigma _i} A^{*}_{\beta '_{i}, \sigma _i, \beta _{i-1}} A_{\beta _{i-1}, \sigma _i, \beta _{i}} = \delta _{\beta '_{i}, \beta _{i}}\) algebraically. In such case, all \(\{ C_{[n]} \}_{n=0}^{N}\) in the closing-the-zipper scheme of Fig. 13 are just resolutions of the identity, so all steps are trivial and the MPS is normalized, \(\langle \psi |\psi \rangle = 1\). The same conclusions hold if the closing-the-zipper scheme is performed from right to left and the local tensors are all right-normalized

or \(\sum _{\beta _{i}, \sigma _i} A_{\beta _{i-1}, \sigma _i, \beta _{i}} A^{*}_{\beta _{i}, \sigma _i, \beta '_{i-1}} = \delta _{ \beta _{i-1}, \beta '_{i-1}}\) algebraically. This is the right-canonical form.

For the purposes of computing expectation values of local operators, it is convenient to introduce another canonical form, the so-called mixed-canonical MPS, whereby all local tensors to the left of the site on which the local operator acts nontrivially are left-normalized, and all local tensors to the right are right-normalized. To show the usefulness of the mixed-canonical form, let us consider the expectation value \(\langle {\psi }\vert {\hat{O}}_{[i]} \vert {\psi } \rangle \) of a one-site operator (acting on a given site i) \({\hat{O}}_{[i]} = \sum _{\sigma _i, \sigma '_i} O_{\sigma _{i}, \sigma '_{i}} \vert {\sigma _i} \rangle \langle {\sigma '_i}\vert \), represented diagrammatically as

Upon making use of the left- and right-normalization of the tensors to the left and to the right of site i, closing the zipper on either side reduces this one-site-operator expectation value to

Any MPS can be converted into left-canonical form by performing SVD on one site at a time, covering the full chain from left to right. As discussed in the final paragraph of Sect. 4.2, each local rank-3 tensor \(A_{[i]}\) can be reshaped by fusing the leftmost index with the physical index; the thin SVD of the resulting matrix yields a matrix U with orthonormal columns, which becomes a left-normalized rank-3 tensor upon splitting the two indices that were originally fused. Hence, at each site, we replace the original local tensor \(A_{[i]}\) with the reshaped U, absorbing the remaining \(S V^{\dagger }\) in the following local tensor \(A_{[i+1]}\). At the very last site, because the rightmost index is a singleton dummy index, \(V^{\dagger }\) is just a complex number of modulus 1, so we can neglect it as any wave function is defined up to a global phase factor. Moreover, S is a positive real number that corresponds to the norm of the original MPS. Typically, S is also discarded, in which case the left-canonical MPS becomes normalized. To obtain a right-canonical MPS, one proceeds analogously to the left-canonical case, with the main differences being that the chain is covered from right to left, the local tensor \(A_{[i]}\) is replaced by the right-normalized \(V^{\dagger }\) resulting from the SVD at that site, and the remaining US is absorbed by \(A_{[i-1]}\). For a mixed-canonical MPS, each of the two processes is carried out on the corresponding side of the selected site.
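The left-to-right procedure just described can be sketched as follows, assuming the MPS is a list of arrays with index order (left bond, physical, right bond) and singleton bonds at both ends. The function name `left_canonicalize` is hypothetical, and no truncation is applied.

```python
import numpy as np


def left_canonicalize(mps):
    """Return a normalized left-canonical copy of mps and the norm of the original."""
    mps = [A.copy() for A in mps]
    N = len(mps)
    norm = None
    for i in range(N):
        Dl, d, Dr = mps[i].shape
        U, s, Vh = np.linalg.svd(mps[i].reshape(Dl * d, Dr), full_matrices=False)
        mps[i] = U.reshape(Dl, d, -1)  # left-normalized tensor at site i
        if i < N - 1:
            # Absorb the remainder S Vh into the next local tensor.
            mps[i + 1] = np.einsum("ab,bsc->asc", np.diag(s) @ Vh, mps[i + 1])
        else:
            # Last site: S is the norm and Vh a phase; both are discarded.
            norm = s[0]
    return mps, norm


rng = np.random.default_rng(5)
dims = [1, 3, 3, 3, 1]
mps = [rng.standard_normal((dims[i], 2, dims[i + 1])) for i in range(4)]
canon, norm = left_canonicalize(mps)

# Check left-normalization of every local tensor.
checks = [np.allclose(np.einsum("asb,asc->bc", A.conj(), A), np.eye(A.shape[2]))
          for A in canon]

# Compare with the norm obtained from the dense state (small N only).
psi = np.ones(1)
for A in mps:
    psi = np.tensordot(psi, A, axes=([-1], [0]))
dense_norm = np.linalg.norm(psi)
```

A right-canonical version would follow the same pattern with the chain traversed in reverse and the physical index fused with the right bond instead.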

4.3.3 General wave function representation

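Being 1D tensor networks, MPSs are most naturally suited for the representation of wave functions of 1D quantum systems. However, it should be stressed that any wave function, regardless of its dimensionality or entanglement structure, can be represented as an MPS, though possibly with exceedingly large bond dimensions. Suppose we are given the wave function of a quantum system defined on an N-site lattice

\[ \vert {\psi } \rangle = \sum _{\sigma _1, \sigma _2, \ldots , \sigma _N} \psi _{\sigma _1,\sigma _2,\ldots ,\sigma _N} \vert {\sigma _1} \rangle \otimes \vert {\sigma _2} \rangle \otimes \cdots \otimes \vert {\sigma _N} \rangle , \]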

where \(\vert {\sigma _i} \rangle \) denotes the local basis of site i. Assuming the dimension of the local Hilbert space at each site is d, the amplitudes of the wave function, \(\psi _{\sigma _1,\sigma _2,\ldots ,\sigma _N}\), typically cast in the form of a \(d^{N}\)-dimensional vector, can be reshaped into a rank-N tensor such as the one shown in Fig. 8f, with each index having dimension d. To convert this rank-N tensor into the corresponding N-site MPS (Fig. 8e), one can perform SVD one site at a time following some path that covers every lattice site once (see Footnote 9). At the first site, the original rank-N tensor is reshaped into a \(d \times d^{N-1}\) matrix; its SVD produces a unitary \(d \times d\) matrix U, which is the first local tensor \(A_{[1]}\) of the MPS. The remainder of the SVD, the \(d \times d^{N-1}\) matrix \(S V^{\dagger }\), is reshaped into a \(d^2 \times d^{N-2}\) matrix, the SVD of which yields a unitary \(d^{2} \times d^{2}\) matrix U, which is reshaped into a left-normalized rank-3 tensor with shape \(d \times d \times d^{2}\), corresponding to the second local tensor \(A_{[2]}\) of the MPS. This sequence of sitewise SVDs is carried out until reaching the last site, where one obtains a rank-2 tensor \(A_{[N]}\) of dimensions \(d \times d\). The final outcome is, therefore, a left-canonical MPS. Importantly, because no truncations were performed, until the center of the MPS is reached, the bond dimension keeps on increasing by a factor of d at each site, yielding a maximum bond dimension of \(d^{\lfloor N/2 \rfloor }\), which is exponentially large in the system size. This is consistent with the fact that no information was lost, so the number of entries of the MPS is \({\mathcal {O}}(d^N)\), as for the original rank-N tensor.
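The sequence of sitewise SVDs can be sketched as follows for a small random state. The function name `state_to_mps` is hypothetical; the sketch assumes the amplitudes are stored as a flat vector with \(\sigma _1\) as the most significant index (NumPy's row-major order), uses the thin SVD throughout, and performs no truncation.

```python
import numpy as np


def state_to_mps(psi, N, d):
    """Exact MPS of a d**N state vector, built by sitewise thin SVDs (left to right)."""
    mps = []
    rest = psi.reshape(1, -1)  # (current bond, all remaining physical indices)
    for _ in range(N - 1):
        Dl = rest.shape[0]
        U, s, Vh = np.linalg.svd(rest.reshape(Dl * d, -1), full_matrices=False)
        mps.append(U.reshape(Dl, d, -1))  # left-normalized local tensor
        rest = np.diag(s) @ Vh            # remainder carried to the next site
    mps.append(rest.reshape(-1, d, 1))    # last tensor, with a singleton right bond
    return mps


rng = np.random.default_rng(6)
N, d = 6, 2
psi = rng.standard_normal(d**N) + 1j * rng.standard_normal(d**N)
psi /= np.linalg.norm(psi)

mps = state_to_mps(psi, N, d)
bonds = [A.shape[2] for A in mps[:-1]]

# Contract the MPS back into a vector to confirm that no information was lost.
psi_back = np.ones(1, dtype=complex)
for A in mps:
    psi_back = np.tensordot(psi_back, A, axes=([-1], [0]))
psi_back = psi_back.reshape(-1)
```

For this six-site example with \(d = 2\), the bond dimensions grow up to the center and then decrease symmetrically, with a maximum of \(d^{\lfloor N/2 \rfloor } = 8\).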

The exact conversion of a wave function into an MPS ultimately defeats the purpose of using MPSs (or tensor networks, more generally), which is to provide a more compact representation without compromising the quantitative description of the system under study. A more scalable approach would involve truncating the bond dimension of the MPS to a cutoff D set beforehand, although this only produces an approximation of the original state, in general. The remarkable success of MPS-based methods in the study of 1D quantum phenomena is rooted in the favorable scaling of the required bond dimension cutoff D of MPSs with the system size N, in accordance with the entanglement area laws discussed in Sect. 3.1.3. The relation between the entanglement entropy of a state in a given bipartition and the corresponding bond dimension D of its MPS representation will be clarified in Sect. 5.2.

4.4 Matrix product operators

A matrix product operator (MPO) is a 1D tensor network of the form shown diagrammatically in Fig. 14a. The structure of an MPO is similar to that of an MPS, except for the number of physical indices. While an MPS has a single physical index per site, an MPO has two, the top one to act on kets and the bottom one to act on bras, following the convention adopted in Fig. 12. MPOs constitute the most convenient representation of operators for MPS-based methods, as they allow for a sitewise update of the MPS ansatz. In particular, the MPS-based formulation of DMRG discussed in Sect. 5 involves expressing the Hamiltonian under study as an MPO.

Applying an MPO onto an MPS yields another MPS of greater bond dimensions (Fig. 14b). To obtain this MPS, at every site \(i = 1, 2,\ldots , N\), one contracts the local tensor \(A_{[i]}\) from the original MPS with the corresponding local tensor \(O_{[i]}\) from the MPO, fusing the pairs of bonds on either side to retrieve a rank-3 tensor \(B_{[i]}\)

Due to this fusion of indices, the bond dimensions of the final MPS are the product of the bond dimensions of the original MPS and the MPO. The cost of contracting an MPO of bond dimension w (which is typically a small constant, as we shall see below for the case of a short-ranged Hamiltonian) with an MPS of bond dimension D, both with N sites and physical index dimension d, is \({\mathcal {O}}(N D^2 w^2 d^2) \sim {\mathcal {O}}(N D^2)\).
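The sitewise application of an MPO to an MPS can be sketched as follows. The index conventions are assumptions of this example: MPS tensors are stored as (left bond, physical, right bond) and MPO tensors as (left bond, output physical, input physical, right bond). The function names are hypothetical. Correctness is checked against a dense calculation for a product of single-site operators (bond dimension \(w = 1\)), and the growth of the bond dimension is checked for a random MPO with \(w = 2\).

```python
import numpy as np


def apply_mpo(mpo, mps):
    """Apply an MPO to an MPS site by site, fusing the bonds on either side."""
    out = []
    for W, A in zip(mpo, mps):
        wl, d, _, wr = W.shape
        Dl, _, Dr = A.shape
        # Sum over the input physical index: O(D^2 w^2 d^2) operations per site.
        B = np.einsum("xpsy,asb->xapyb", W, A)
        out.append(B.reshape(wl * Dl, d, wr * Dr))
    return out


def to_dense(mps):
    """Contract all bonds into a d**N vector (exponential cost; for checks only)."""
    psi = np.ones(1)
    for A in mps:
        psi = np.tensordot(psi, A, axes=([-1], [0]))
    return psi.reshape(-1)


rng = np.random.default_rng(7)
N, d, D, w = 3, 2, 3, 2
dims = [1, D, D, 1]
mps = [rng.standard_normal((dims[i], d, dims[i + 1])) for i in range(N)]

# Product of single-site operators as an MPO with trivial bonds (w = 1).
ops = [rng.standard_normal((d, d)) for _ in range(N)]
product_mpo = [O.reshape(1, d, d, 1) for O in ops]
lhs = to_dense(apply_mpo(product_mpo, mps))
rhs = np.kron(np.kron(ops[0], ops[1]), ops[2]) @ to_dense(mps)

# Random MPO with bond dimension w: the bond dimensions of the result multiply.
wdims = [1, w, w, 1]
random_mpo = [rng.standard_normal((wdims[i], d, d, wdims[i + 1])) for i in range(N)]
new_mps = apply_mpo(random_mpo, mps)
```

In practice, the enlarged bonds of the resulting MPS would usually be truncated back to a chosen cutoff by SVD.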

a Diagram of N-site operator \({\hat{O}}\) as an MPO. b Applying MPO of \({\hat{O}}\) onto MPS of \(\vert {\psi } \rangle \) yields another MPS. At \(m^{\text {th}}\) bond, contracted index \(\beta _{m}\) of MPS of \({\hat{O}} \vert {\psi } \rangle \) results from fusion of corresponding indices \(\gamma _m\) and \(\alpha _{m}\) of MPO and original MPS, so the dimension of \(\beta _m\) is the product of the dimensions of \(\alpha _m\) and \(\gamma _m\), hence the bold line representation in the diagram. c Diagram of expectation value \(\langle {\psi }\vert {\hat{O}} \vert {\psi } \rangle \). The contraction of the tensor network can be done in two ways. Either the MPO is applied to one of the MPSs, and then, the closing-the-zipper strategy (Fig. 13) is adopted to compute the overlap between the two remaining MPSs or the closing-the-zipper method is applied directly to this three-layer tensor network, as described in the text. Assuming the physical indices have dimension d and the bond dimension cutoffs are D for the MPS and w for the MPO, the cost of the two strategies scales as \({\mathcal {O}}(N D^3 w^2 d)\) and \({\mathcal {O}}(N D^3 w d)\), respectively

The expectation value of an operator \({\hat{O}}\) cast in the form of an N-site MPO with respect to a state \(\vert {\psi } \rangle \) expressed as an N-site MPS is represented in Fig. 14c. One way to obtain \(\langle {\psi }\vert {\hat{O}} \vert {\psi } \rangle \) is to calculate the MPS corresponding to \({\hat{O}} \vert {\psi } \rangle \), following the prescription provided in the previous paragraph, and then compute the overlap between the two resulting MPSs, one for \({\hat{O}} \vert {\psi } \rangle \) and another for \(\langle {\psi }\vert \), using the closing-the-zipper method introduced in Sect. 4.3.1. The cost of this approach scales as \({\mathcal {O}}(N D^3 w^2 d) \sim {\mathcal {O}}(N D^3)\). Alternatively, the closing-the-zipper strategy can be adapted to contract this three-layer tensor network. Specifically, at the \(n^{\text {th}}\) iteration, we have

and the indices are contracted as follows:

1. Sum over \(\alpha _{n-1}\) with fixed \(\sigma _n\), \(\alpha _n\), \(\gamma _{n-1}\) and \(\alpha '_{n-1}\) at cost \({\mathcal {O}}(D^3 w d)\).

2. Sum over \(\gamma _{n-1}\) and \(\sigma _n\) with fixed \(\alpha _n\), \(\gamma _n\), \(\sigma '_{n}\) and \(\alpha '_{n-1}\) at cost \({\mathcal {O}}(D^2 w^2 d^2)\).

3. Sum over \(\alpha '_{n-1}\) and \(\sigma '_n\) with fixed \(\alpha _n\), \(\gamma _n\) and \(\alpha '_{n}\) at cost \({\mathcal {O}}(D^3 w d)\).

Upon completing the N iterations to go through all sites, the overall scaling is \({\mathcal {O}}(N D^3 w d) \sim {\mathcal {O}}(N D^3)\). For technical reasons that will be apparent in Sect. 5.1, this contraction scheme is preferred in the implementation of the finite-system DMRG algorithm.
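The three-step contraction described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the code that accompanies the manuscript: the function name `expectation_value` and the storage conventions (MPS tensors with indices ordered as \((\alpha _{n-1}, \sigma _n, \alpha _n)\), MPO tensors as \((\gamma _{n-1}, \gamma _n, \sigma '_n, \sigma _n)\), trivial boundary bonds kept as singleton dimensions) are choices made here for the example.

```python
import numpy as np

def expectation_value(mps, mpo):
    """<psi|O|psi> via a left-to-right sweep through the three-layer network.

    Assumed conventions (illustrative only):
      mps[n]: shape (D_left, d, D_right)      -> (alpha_{n-1}, sigma_n, alpha_n)
      mpo[n]: shape (w_left, w_right, d, d)   -> (gamma_{n-1}, gamma_n, sigma'_n, sigma_n)
    """
    # Environment with indices (alpha'_{n-1}, gamma_{n-1}, alpha_{n-1});
    # it is a trivial 1x1x1 tensor at the left edge.
    E = np.ones((1, 1, 1))
    for A, W in zip(mps, mpo):
        # Step 1: sum over alpha_{n-1}; cost O(D^3 w d)
        T = np.einsum('pga,asb->pgsb', E, A)
        # Step 2: sum over gamma_{n-1} and sigma_n; cost O(D^2 w^2 d^2)
        T = np.einsum('pgsb,ghts->phtb', T, W)
        # Step 3: sum over alpha'_{n-1} and sigma'_n; cost O(D^3 w d)
        E = np.einsum('ptq,phtb->qhb', A.conj(), T)
    # All boundary bonds are trivial, so a single number is left.
    return E[0, 0, 0]
```

Only the environment tensor, of size \(D^2 w\), is carried from one site to the next, which is what keeps the cost of each iteration at \({\mathcal {O}}(D^3 w d)\) for large D.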

Any N-site operator can be expressed as an MPO by performing an SVD one site at a time, in a similar spirit to the representation of an arbitrary wave function in terms of an MPS, discussed in Sect. 4.3.3. The problem with this approach is that the bond dimension of the resulting MPO grows by a factor of \(d^2\) at every iteration until reaching the middle of the MPO, thus leading to \({\mathcal {O}}(d^N)\) bond dimensions. The MPO representation of an arbitrary tensor product of single-site operators is straightforward: each local operator is reshaped into a rank-4 tensor with two singleton dummy indices (corresponding to the trivial bonds with dimension \(w = 1\)), which are contracted with those from the adjacent sites to form the MPO. MPOs like those described above can also be summed (footnote 10) to obtain the MPO representation of more generic operators. It must be noted, however, that the previous strategy, although versatile, does not always lead to the lowest possible bond dimensions of the final MPO. In particular, it is possible to represent local Hamiltonians in terms of MPOs with \({\mathcal {O}}(1)\) bond dimension (i.e., constant with respect to the system size N), as explained below.
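As a rough illustration of the two constructions just mentioned, the sketch below builds the bond-dimension-1 MPO of a tensor product of single-site operators and sums two MPOs by stacking their local tensors block-diagonally in the bond indices, which is one standard way of adding MPOs. The helper names (`product_mpo`, `add_mpos`, `mpo_to_dense`) and the index ordering \((\gamma _{n-1}, \gamma _n, \sigma '_n, \sigma _n)\) are assumptions of this example, and at least two sites are assumed.

```python
import numpy as np

def product_mpo(ops):
    """MPO of op_1 (x) ... (x) op_N: every d x d operator gets two singleton bonds."""
    return [op.reshape(1, 1, *op.shape) for op in ops]

def add_mpos(mpo_a, mpo_b):
    """MPO of the sum of two operators (N >= 2 sites assumed).

    Bulk tensors are stacked block-diagonally in the bond indices, so the
    bond dimensions add up; the edge tensors become a row and a column.
    """
    N = len(mpo_a)
    out = []
    for n, (Wa, Wb) in enumerate(zip(mpo_a, mpo_b)):
        if n == 0:            # left edge: row vector [Wa, Wb]
            W = np.concatenate([Wa, Wb], axis=1)
        elif n == N - 1:      # right edge: column vector [Wa; Wb]
            W = np.concatenate([Wa, Wb], axis=0)
        else:                 # bulk: block-diagonal matrix
            wla, wra, d, _ = Wa.shape
            wlb, wrb = Wb.shape[:2]
            W = np.zeros((wla + wlb, wra + wrb, d, d), dtype=np.result_type(Wa, Wb))
            W[:wla, :wra] = Wa
            W[wla:, wra:] = Wb
        out.append(W)
    return out

def mpo_to_dense(mpo):
    """Contract an MPO into its full d^N x d^N matrix (small N only)."""
    M = mpo[0][0]             # drop the trivial left bond: shape (w, d, d)
    for W in mpo[1:]:
        M = np.einsum('gIJ,ghts->hItJs', M, W)
        M = M.reshape(M.shape[0], M.shape[1] * M.shape[2], M.shape[3] * M.shape[4])
    return M[0]               # drop the trivial right bond
```

Summing k product operators in this way yields bond dimension k, which is why this generic recipe is usually not optimal for local Hamiltonians.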

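The exact MPO of a local Hamiltonian can be obtained through an analytical method originally proposed by McCulloch [21]. For concreteness, let us consider the Heisenberg model for an open-ended spin-s chain with a Zeeman term

\[\hat{{\mathcal {H}}} = J \sum _{i=1}^{N-1} \hat{\vec {S}}_i \cdot \hat{\vec {S}}_{i+1} - h \sum _{i=1}^{N} {\hat{S}}^{z}_i = \sum _{i=1}^{N-1} \left[ J {\hat{S}}^{z}_i {\hat{S}}^{z}_{i+1} + \frac{J}{2} \left( {\hat{S}}^{+}_i {\hat{S}}^{-}_{i+1} + {\hat{S}}^{-}_i {\hat{S}}^{+}_{i+1} \right) \right] - h \sum _{i=1}^{N} {\hat{S}}^{z}_i , \qquad (17)\]

where J and h are model parameters, \(\hat{\vec {S}}_i = ({\hat{S}}^{x}_i, {\hat{S}}^{y}_i, {\hat{S}}^{z}_i)\) is the vector of spin-s operators at site \(i \in \{1,2,\ldots ,N\}\), and \({\hat{S}}^{\pm }_i = {\hat{S}}^{x}_i \pm \textrm{i} {\hat{S}}^{y}_i\) are the corresponding spin ladder operators. Our goal is to obtain the local tensors \(\{H_{[i]}\}_{i=1}^{N}\) of the MPO that encodes this Hamiltonian. Four different types of terms arise in Eq. (17)

\[\begin{aligned} &\cdots \overset{5}{\otimes } \hat{\mathbb {1}} \overset{5}{\otimes } \left( -h {\hat{S}}^{z} \right) \overset{1}{\otimes } \hat{\mathbb {1}} \overset{1}{\otimes } \cdots , \\ &\cdots \overset{5}{\otimes } \hat{\mathbb {1}} \overset{5}{\otimes } \left( J {\hat{S}}^{z} \right) \overset{2}{\otimes } {\hat{S}}^{z} \overset{1}{\otimes } \hat{\mathbb {1}} \overset{1}{\otimes } \cdots , \\ &\cdots \overset{5}{\otimes } \hat{\mathbb {1}} \overset{5}{\otimes } \left( \tfrac{J}{2} {\hat{S}}^{+} \right) \overset{3}{\otimes } {\hat{S}}^{-} \overset{1}{\otimes } \hat{\mathbb {1}} \overset{1}{\otimes } \cdots , \\ &\cdots \overset{5}{\otimes } \hat{\mathbb {1}} \overset{5}{\otimes } \left( \tfrac{J}{2} {\hat{S}}^{-} \right) \overset{4}{\otimes } {\hat{S}}^{+} \overset{1}{\otimes } \hat{\mathbb {1}} \overset{1}{\otimes } \cdots . \end{aligned}\]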

The numbers above the tensor product signs identify one of the following five ‘states’:

- ‘State’ 1: Only identity operators \(\hat{\mathbb {1}}\) to the right.

- ‘State’ 2: One \({\hat{S}}^{z}\) operator just to the right, followed by \(\hat{\mathbb {1}}\) operators.

- ‘State’ 3: One \({\hat{S}}^{-}\) operator just to the right, followed by \(\hat{\mathbb {1}}\) operators.

- ‘State’ 4: One \({\hat{S}}^{+}\) operator just to the right, followed by \(\hat{\mathbb {1}}\) operators.

- ‘State’ 5: One complete term somewhere to the right.

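For a given bulk site i, the local rank-4 tensor \(H_{[i]}\), cast in the form of a \(w \times w\) matrix where each entry is itself a \(d \times d\) matrix (with \(w=5\) the bond dimension of the MPO, determined by the number of ‘states’, and \(d = 2s + 1\) the physical index dimension), is constructed in such a way that its (k, l) entry corresponds to the operator that makes the transition from ‘state’ l to ‘state’ k toward the left

\[H_{[i]} = \begin{pmatrix} \hat{\mathbb {1}} & 0 & 0 & 0 & 0 \\ {\hat{S}}^{z} & 0 & 0 & 0 & 0 \\ {\hat{S}}^{-} & 0 & 0 & 0 & 0 \\ {\hat{S}}^{+} & 0 & 0 & 0 & 0 \\ -h {\hat{S}}^{z} & J {\hat{S}}^{z} & \frac{J}{2} {\hat{S}}^{+} & \frac{J}{2} {\hat{S}}^{-} & \hat{\mathbb {1}} \end{pmatrix} . \qquad (18)\]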

For the terminal sites, due to the open boundary conditions, we have two rank-3 tensors, one corresponding to the last row of Eq. (18) for the leftmost site \(i = 1\) and another corresponding to the first column of Eq. (18) for the rightmost site \(i = N\).

To confirm that the constructed MPO does indeed give rise to the Hamiltonian stated in Eq. (17), one can perform by hand the matrix multiplication of the local tensors in the form shown in Eq. (18), but with the usual scalar multiplications being replaced by tensor products as each entry is itself a rank-2 tensor [54]. Alternatively, the MPO can be contracted and compared directly to the full \(d^{N} \times d^{N}\) matrix representation of the model Hamiltonian. This sanity check is performed for small system sizes N in the code that complements this manuscript (see Supplementary Information). In this code, we also construct the MPO Hamiltonian for two other quantum spin models, the Majumdar–Ghosh [55, 56] and the Affleck–Kennedy–Lieb–Tasaki [57] models. These two additional examples suffice to demonstrate how to apply McCulloch’s method in general, namely by adding next-nearest-neighbor interactions and further nearest-neighbor interactions, respectively. Assuming the most conventional case of model Hamiltonians with terms acting nontrivially on one or two sites only, the bond dimension of the MPO obtained with this method starts at two and increases by one for every new type of two-site term and/or unit of interaction range [16]. There are, however, notable exceptions to this rule, such as long-range Hamiltonians that allow for a more compact but still exact MPO representation [58, 59]. More complex Hamiltonians such as those arising in quantum chemistry [60] or in two-dimensional lattice models on a cylinder in hybrid real and momentum space [61] may require more sophisticated numerical approaches to reduce the bond dimension of the corresponding MPO [53].
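The sanity check described above can be sketched as follows. This is an independent minimal example rather than the code of the Supplementary Information: the function names are hypothetical, the MPO tensors are stored with indices ordered as (left bond, right bond, \(\sigma '\), \(\sigma \)), and the Hamiltonian is assumed to be \(J \sum _i \hat{\vec {S}}_i \cdot \hat{\vec {S}}_{i+1} - h \sum _i {\hat{S}}^{z}_i\), with the sign of the Zeeman term being an assumption of this sketch. The terminal tensors keep a singleton bond instead of being stored as rank-3 tensors.

```python
import numpy as np

def spin_operators(s):
    """Return (Sz, S+, S-, identity) for spin s in the basis m = s, s-1, ..., -s."""
    d = int(round(2 * s + 1))
    m = s - np.arange(d)
    Sz = np.diag(m)
    # <m+1|S+|m> = sqrt(s(s+1) - m(m+1)) sits on the first superdiagonal
    Sp = np.diag(np.sqrt(s * (s + 1) - m[1:] * (m[1:] + 1)), k=1)
    return Sz, Sp, Sp.T.copy(), np.eye(d)

def heisenberg_mpo(N, s=0.5, J=1.0, h=0.0):
    """Local MPO tensors, shape (w_left, w_right, d, d) with w = 5 in the bulk (N >= 2)."""
    Sz, Sp, Sm, Id = spin_operators(s)
    d = Id.shape[0]
    W = np.zeros((5, 5, d, d))
    W[0, 0] = Id                      # 'state' 1 -> 1
    W[1, 0], W[2, 0], W[3, 0] = Sz, Sm, Sp   # open a two-site term
    W[4, 0] = -h * Sz                 # complete one-site term
    W[4, 1] = J * Sz                  # close the Sz Sz term
    W[4, 2] = 0.5 * J * Sp            # close the S+ S- term
    W[4, 3] = 0.5 * J * Sm            # close the S- S+ term
    W[4, 4] = Id                      # 'state' 5 -> 5
    # Open boundaries: last row on the left edge, first column on the right edge
    return [W[4:5]] + [W] * (N - 2) + [W[:, 0:1]]

def mpo_to_dense(mpo):
    """Contract an MPO into its full d^N x d^N matrix (small N only)."""
    M = mpo[0][0]
    for W in mpo[1:]:
        M = np.einsum('gIJ,ghts->hItJs', M, W)
        M = M.reshape(M.shape[0], M.shape[1] * M.shape[2], M.shape[3] * M.shape[4])
    return M[0]

def heisenberg_dense(N, s=0.5, J=1.0, h=0.0):
    """Reference Hamiltonian built directly from Kronecker products."""
    Sz, Sp, Sm, Id = spin_operators(s)

    def site_op(op, i):
        out = np.eye(1)
        for j in range(N):
            out = np.kron(out, op if j == i else Id)
        return out

    H = sum(-h * site_op(Sz, i) for i in range(N))
    for i in range(N - 1):
        H = H + J * site_op(Sz, i) @ site_op(Sz, i + 1)
        H = H + 0.5 * J * (site_op(Sp, i) @ site_op(Sm, i + 1)
                           + site_op(Sm, i) @ site_op(Sp, i + 1))
    return H
```

For example, `np.allclose(mpo_to_dense(heisenberg_mpo(4, 0.5, 1.0, 0.3)), heisenberg_dense(4, 0.5, 1.0, 0.3))` compares the two constructions for a four-site spin-1/2 chain.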

5 Finite-system DMRG in the language of tensor networks

5.1 Derivation: one-site update

Tensor-network diagram of the “effective” matrix \(M_{[i]}\) of the eigenvalue problem (Eq. (20)) associated with one iteration—corresponding to the local optimization of the MPS at site i—of the one-site-update finite-system DMRG algorithm. To optimize the computational performance of the DMRG algorithm, \(M_{[i]}\) is stored in terms of three tensors, \(L_{[i]}\), \(H_{[i]}\), and \(R_{[i]}\) (see Appendix B for details). The pseudocode of the algorithm is shown in Fig. 19 of Appendix D

The starting point for the derivation of the MPS-based finite-system DMRG algorithm is to consider the set of all N-site MPS representations of a ket \(\vert {\psi } \rangle \) with (maximum) bond dimension D as a variational space. The local tensor of the MPS at site i is denoted by \(A_{[i]}\); for the sake of simplicity, we consider that the physical index dimension is d at all sites. We assume we are given the N-site MPO representation of the Hamiltonian \(\hat{{\mathcal {H}}}\), with bond dimension \(w \sim {\mathcal {O}}(1)\) and physical index dimension d; its local rank-4 tensor at site i is denoted by \(H_{[i]}\). The goal is to minimize the energy \(\langle {\psi }\vert \hat{{\mathcal {H}}} \vert {\psi } \rangle \), subject to the normalization constraint \(\langle \psi |\psi \rangle = 1\). This can be achieved by minimizing the cost function \(\langle {\psi }\vert \hat{{\mathcal {H}}} \vert {\psi } \rangle - \lambda \langle \psi |\psi \rangle \), where \(\lambda \) denotes the Lagrange multiplier. The one-site-update version of the algorithm consists of finding the stationary points of the cost function with respect to one local tensor \(A^\dagger _{[i]}\) at a time, that is

\[\frac{\partial }{\partial A^\dagger _{[i]}} \left( \langle {\psi }\vert \hat{{\mathcal {H}}} \vert {\psi } \rangle - \lambda \langle \psi |\psi \rangle \right) = 0 . \qquad (19)\]

Making use of the diagrammatic representation, and taking into account that all contractions on a tensor network are linear operations, the derivative with respect to \(A^\dagger _{[i]}\) amounts to punching a hole [52] at the position of the tensor \(A^\dagger _{[i]}\), leading to

which can be understood as a generalized eigenvalue problem for \(A_{[i]}\). By casting the MPS in mixed-canonical form with respect to site i, the bottom part of the previous equation simplifies trivially (the contractions to the left and to the right of site i in the norm reduce to identities), yielding an eigenvalue problem for \(A_{[i]}\) that we write as

\[\sum _{a} M^{a',a}_{[i]} A^{a}_{[i]} = \lambda A^{a'}_{[i]} , \qquad (20)\]

with \(a \equiv (\beta _{i-1},\sigma _i,\beta _i)\) and \(M^{a',a}_{[i]}\) defined by the diagram shown in Fig. 15.

Having derived an eigenvalue problem [Eq. (20)] from the local optimization of the MPS at site i [Eq. (19)], the optimal update of the corresponding local tensor \(A_{[i]}\) is simply the eigenstate with lowest eigenvalue, both of which can be obtained through the Lanczos algorithm [62]. Although it is common practice to provide a randomly generated state as the input state to the Lanczos algorithm, in this case, it is preferable to use the current version of the local tensor \(A_{[i]}\) as the initial state, as this is a more educated guess of the ground state, thus reducing the number of iterations of the Lanczos algorithm. In addition to the obtained eigenstate being the variationally optimized \(A_{[i]}\), the corresponding eigenvalue is also the current estimate of the ground-state energy of the full system. This step of the DMRG algorithm is repeated, sweeping i back and forth between 1 and N. As for the initialization, the typical approach is to start with a random MPS.
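A single local update of this kind might look as follows in Python. The sketch assumes that the left and right environments are already available as rank-3 tensors `L` and `R` with indices ordered as (bra bond, MPO bond, ket bond), that the MPO tensor `W` is ordered as (left bond, right bond, \(\sigma '\), \(\sigma \)), and that the effective matrix is Hermitian; the function name `one_site_update` is hypothetical. The effective matrix is contracted explicitly for simplicity, and the current tensor is passed to `scipy.sparse.linalg.eigsh` as the starting vector through its `v0` argument.

```python
import numpy as np
from scipy.sparse.linalg import eigsh

def one_site_update(L, W, R, A):
    """Return (energy, updated A) for the local eigenvalue problem at one site.

    Assumed conventions (illustrative only):
      L: (D_left, w_left, D_left), R: (D_right, w_right, D_right),
      W: (w_left, w_right, d, d), A: (D_left, d, D_right).
    """
    Dl, d, Dr = A.shape
    n = Dl * d * Dr
    # Explicit effective matrix M[(p,t,q),(a,s,b)]; simple but not the cheapest option
    M = np.einsum('pga,ghts,qhb->ptqasb', L, W, R).reshape(n, n)
    # Lowest algebraic eigenpair, with the current tensor as the initial guess
    energy, vec = eigsh(M, k=1, which='SA', v0=A.reshape(-1))
    return energy[0], vec[:, 0].reshape(Dl, d, Dr)
```

The returned eigenvector is normalized, so the updated tensor directly plays the role of the orthogonality center of a normalized MPS.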

Two additional technical remarks regarding the implementation of the DMRG algorithm derived above are in order. First, at every step of the algorithm, after having obtained the updated local tensor \(A_{[i]}\) as the ground state of the eigenvalue problem, its SVD is performed to ensure that the MPS is in the appropriate mixed-canonical form in the next step of the sweep, thus avoiding a generalized eigenvalue equation. Second, the “effective” matrix of the eigenvalue problem, \(M_{[i]}\), is stored in terms of three separate tensors, \(L_{[i]}\), \(H_{[i]}\), and \(R_{[i]}\) (Fig. 15). As the notation suggests, the rank-4 tensor \(H_{[i]}\) is just the local tensor at site i of the MPO that encodes the Hamiltonian \(\hat{{\mathcal {H}}}\). As for the rank-3 tensors \(L_{[i]}\) and \(R_{[i]}\), they result from the contraction of all tensors to the left and to the right of site i, respectively. The efficient computation of \(L_{[i]}\) and \(R_{[i]}\) over the multiple sweeps of the DMRG algorithm is detailed in Appendix B.
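The SVD step mentioned in the first remark can be illustrated for a left-to-right move of the sweep. In this minimal sketch, whose function name and index ordering \((\alpha _{i-1}, \sigma _i, \alpha _i)\) are assumptions, the updated tensor is split so that the isometry stays at site i and the remaining factors are absorbed into the tensor at site i+1, which becomes the new orthogonality center.

```python
import numpy as np

def shift_center_right(A, A_next):
    """Move the orthogonality center from site i to site i+1 via an SVD.

    A:      (D_left, d, D_mid), the freshly updated tensor at site i
    A_next: (D_mid, d, D_right), the tensor at site i+1
    """
    Dl, d, Dm = A.shape
    # Group (left bond, physical index) as rows and the right bond as columns
    U, S, Vh = np.linalg.svd(A.reshape(Dl * d, Dm), full_matrices=False)
    A_left = U.reshape(Dl, d, -1)                  # left-canonical tensor at site i
    # Absorb S Vh into the next site; the represented state is unchanged
    A_next_new = np.tensordot(np.diag(S) @ Vh, A_next, axes=([1], [0]))
    return A_left, A_next_new
```

The mirror-image operation, with the right bond grouped with the physical index and `Vh` kept at site i, would be used when sweeping from right to left.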

Making use of the internal structure of the matrix \(M_{[i]}\), the time complexity of solving the eigenvalue problem stated in Eq. (20), required to update one local tensor of the MPS, is \({\mathcal {O}}(D^3)\). This scaling results largely from the matrix-vector multiplications involved in the construction of the Krylov space within the Lanczos algorithm [63]. Note that the naive explicit contraction of \(M_{[i]}\) into a \((D^2 d) \times (D^2 d)\) matrix would have resulted in an \({\mathcal {O}}(D^4)\) scaling of the matrix-vector multiplications, as opposed to the \({\mathcal {O}}(D^3)\) obtained using the \(L_{[i]}\), \(H_{[i]}\), and \(R_{[i]}\) tensors. In the end, all key steps of one iteration of the one-site-update finite-system DMRG algorithm, namely the closing-the-zipper contraction (as described in Appendix B), the eigenvalue problem, and the SVD, have the same \({\mathcal {O}}(D^3)\) computational cost, so the overall cost of a full sweep scales as \({\mathcal {O}}(N D^3)\). It must be noted that, since the simplest way to use the standard Python functions that implement the Lanczos algorithm (e.g., scipy.sparse.linalg.eigsh) is to pass the matrix explicitly as input, the naive explicit contraction of \(M_{[i]}\) was adopted in the code that complements this manuscript, trading efficiency for simplicity.
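For readers who wish to recover the \({\mathcal {O}}(D^3)\) scaling in Python, one possible route is to wrap the three-tensor contraction in a `scipy.sparse.linalg.LinearOperator`, which `eigsh` also accepts in place of an explicit matrix. The sketch below is not part of the code that complements the manuscript; the function name `effective_operator` and the index orderings (`L` and `R` as (bra bond, MPO bond, ket bond), `W` as (left bond, right bond, \(\sigma '\), \(\sigma \))) are assumptions, and the effective matrix is assumed to be Hermitian.

```python
import numpy as np
from scipy.sparse.linalg import LinearOperator, eigsh

def effective_operator(L, W, R):
    """Matrix-free action of the effective matrix built from L, W and R.

    Assumed shapes: L (D_l, w_l, D_l), W (w_l, w_r, d, d), R (D_r, w_r, D_r).
    """
    Dl, d, Dr = L.shape[2], W.shape[3], R.shape[2]

    def matvec(x):
        x = x.reshape(Dl, d, Dr)
        T = np.einsum('pga,asb->pgsb', L, x)     # cost O(D^3 w d)
        T = np.einsum('pgsb,ghts->phtb', T, W)   # cost O(D^2 w^2 d^2)
        y = np.einsum('phtb,qhb->ptq', T, R)     # cost O(D^3 w d)
        return y.reshape(-1)

    n = Dl * d * Dr
    return LinearOperator((n, n), matvec=matvec, dtype=np.result_type(L, W, R))
```

A call such as `eigsh(effective_operator(L, W, R), k=1, which='SA', v0=A.reshape(-1))` then returns the lowest eigenpair without the \((D^2 d) \times (D^2 d)\) matrix ever being stored.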

Finally, although this discussion has been restricted to the computation of the ground state, it is straightforward to extend it to the calculation of low-lying excited states. For concreteness, let us suppose we have already determined the ground state \(\vert {{\text {GS}}} \rangle \) in a previous run of the DMRG algorithm and wish to obtain the first excited state. Exploiting the orthogonality of the eigenbasis of the Hamiltonian, we merely have to impose the additional constraint \(\langle \psi \vert {\text {GS}} \rangle = 0\) through another Lagrange multiplier in the cost function. This additional term effectively imposes an energy penalty on the variational states \(\vert {\psi } \rangle \) that have nonzero overlap with \(\vert {{\text {GS}}} \rangle \). In other words, the eigenvalue problem is restricted to a subspace orthogonal to \(\vert {{\text {GS}}} \rangle \). In practice, this condition can be imposed by setting \(\vert {{\text {GS}}} \rangle \) as the first Krylov state in the Lanczos algorithm but performing the diagonalization of the tridiagonal matrix defined in the Krylov subspace spanned by all but the first Krylov state [63].
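The energy-penalty picture can be illustrated on a small dense matrix. The toy sketch below is not the Krylov-based procedure described above and does not involve an MPS: it simply adds a term proportional to the projector onto the ground state, which is an assumption made here for illustration, and shows that the lowest eigenstate of the penalized matrix is the first excited state as long as the penalty exceeds the gap. The function name and the `penalty` parameter are hypothetical.

```python
import numpy as np

def first_excited_state(H, gs, penalty):
    """Lowest eigenpair of H + penalty * |gs><gs| for a dense Hermitian H.

    gs is assumed to be the normalized ground state of H, and penalty is
    assumed to be larger than the gap to the first excited state.
    """
    H_pen = H + penalty * np.outer(gs, gs.conj())
    evals, evecs = np.linalg.eigh(H_pen)
    return evals[0], evecs[:, 0]
```

In this toy setting the ground-state energy is shifted upward by `penalty` while all other eigenpairs are left untouched, so the state returned is orthogonal to `gs`.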

5.2 Connection to original formalism