Abstract

Knowledge about the location of a defect is essential for damage assessment. In digitalised maintenance, inspection data is combined with position information. The presented approach addresses manual ultrasonic inspection, in which both the ultrasonic probe and the inspected component are hand-held. Markerless tracking technologies make it possible to track the component without attaching any markers to it, while the ultrasonic probe is tracked by a more stable marker-based tracking technology. The resulting hybrid tracking system allows the non-destructive testing (NDT) data to be referenced directly to the local coordinate system of the 3D model of the inspected component. Transferring this approach to other manual inspection technologies allows recorded NDT data to be superimposed without any postprocessing or transformation, which enables a better damage assessment. The inspection system, the inspection tool calibration and the camera registration process are described and analysed in detail. The focus of this work is the analysis of the system accuracy, which is carried out using a reference body.



1 Introduction

Along with the fourth industrial revolution, nondestructive evaluation is also changing (NDE 4.0). This change means integrating digital technologies and physical testing methods for safety and economic value [1]. Despite increasing automation and digitalisation, however, human workers will remain an essential part of the maintenance process [2]. This is especially relevant for inspection, where the acquisition, interpretation and valid assessment of structural defects are essential for safe, reliable and economic operation. This work addresses manual ultrasonic inspection, whose conventional practice has disadvantages in defect sizing, interpretation, documentation and localisation [3].

In a digitised inspection process, the human worker has to be properly integrated and assisted, and information about the defect position has to be combined with machine-processable position information so that it can be used in a virtual world. This enables the following applications:

- Assistance of human workers
- Digitalisation of manual inspection processes
- Localisation of inspection data on a component
- Feedback on complete areal coverage of the inspection
- Visualisation of inspection data directly on the component
- Superimposition with further inspection data
- Improved damage assessment
- Data basis for future digital twins

Optical tracking systems can be used to determine the position of an inspected component. A main requirement is dynamic pose determination, so that the inspected component and the inspection tool can be moved and rotated during the inspection. This is important because, as described in [4], being able to view and touch the component yields the highest accuracy in detecting and classifying defects. Using fiducial markers to track the inspected component is not always possible and would, moreover, have a serious disadvantage: if any kind of external reference aid (e.g. a component carrier or tracking marker) is used, recorded inspection data is never related directly to the component, but always to the reference aid. The reference aid can be calibrated to the component, but the component can then only be moved if the reference aid is moved with it. In Fig. 1, for example, the component has been removed from a component carrier, but the visualisation of the data remains with its virtual component at the component carrier. Markerless tracking systems are therefore key to digitalising manual inspection processes.

To superimpose inspection data from different sources without any transformation work, a consistent reference is necessary. The local coordinate system of the inspected component, as defined in its CAD data, may serve this purpose. This work is a follow-up to the developments presented in [5].

In this work, a system using a hybrid tracking approach is presented, and the necessary transformation calculations and data processing software are described. The system accuracy is then verified, starting with a cause analysis. The tool calibration and camera registration processes are described and analysed in detail. Finally, the overall localisation accuracy of the inspection system is determined using a reference body.

2 Combining NDT Data with Position Data

Localising inspection data, i.e. combining it with position data, is the subject of several studies. This section discusses inspection systems for manual ultrasonic inspection that have potential for industrial use.

A commercial system called WiiPA tracks the position of a phased array ultrasonic probe and references it to a component carrier [6, 7]. The probe and the component carrier are each equipped with retroreflective ball markers, which are tracked by an infrared-based motion capture system. Ultrasonic data can be visualised directly on the component during or after the inspection with the augmented reality device Microsoft HoloLens.

Olympus developed a system that precisely determines the position and orientation of an ultrasonic probe with an infrared-based motion capture system, for which the probe is equipped with retroreflective ball markers [8, 9]. In addition, data glasses guide the human worker along a predefined inspection path.

The Fraunhofer IZFP presented its 3D-SmartInspect system, which uses an optically tracked coloured marker on top of the inspection tool [10]. With this approach, pose determination is limited to two-dimensional tracking, and the inspected component is not tracked. Inspection data is visualised on a computer screen or with a Microsoft HoloLens.

In [11], an inspection system called DIMUTA was developed for inspecting large castings; it tracks an ultrasonic probe equipped with active infrared markers, but does not track the component. A subsequent verification process determined the accuracy and precision of the inspection system [12].

A recent publication demonstrates an ultrasonic inspection of a helicopter fuselage in which the ultrasonic probe, equipped with retroreflective ball markers, is tracked by an infrared-based motion tracking system. During inspection, the localised ultrasonic data is used to texture a 3D model of the inspected component, which is visualised with a Mixed Reality device [13]. As a main future improvement, the outlook of that work aims at automatically aligning the virtual model with its real counterpart, which can be realised with markerless tracking. The markerless tracking system referred to is the product CAPTA [14], which has already been investigated for this task in [5]. A main conclusion of [5] was that a monocular camera used for markerless tracking has limited accuracy in determining the object position in depth.

To combine the advantages of marker-based and markerless tracking, the presented system follows the approach of hybrid tracking.

3 Inspection System Using Hybrid Tracking

The presented inspection system combines the tracking capabilities of marker-based tracking (MBT) and markerless tracking (MLT). MBT is well developed, fast, stable and accurate, but requires fiducial markers as reference points. MLT uses the tracked component itself or its distinctive features as a reference to determine its pose. However, this technology is still under development, and its stability and accuracy have yet to be proven.

3.1 General Functionality

The developed inspection system aims to digitalise an ultrasonic inspection process in which the inspection tool and the inspected component are both hand-guided and ultrasonic data is linked with position information. The position information is saved as a point cloud that depends on spatial tracking data only. The point cloud is then coloured according to the ultrasonic data. To reference this inspection data to the local coordinate system of the inspected component, the component's pose must be tracked dynamically. Without equipping the component with fiducial markers or coupling it to linear measurement systems, only MLT technologies can be used for this purpose. The pose of the inspection tool is tracked with the more robust MBT technology.

Figure 2 shows the hardware structure of the physical setup in Fig. 3. All subsystems are connected to a laptop via USB or Ethernet cable. For MBT, the mobile tracking solution SMARTTRACK3 by Advanced Realtime Tracking GmbH & Co. KG [15] is used. The corresponding software DTrack provides the position and orientation of the tracked body via UDP. For this purpose, an ultrasonic testing (UT) probe is equipped with a tree target consisting of five retroreflective marker balls. The probe is a single-channel pulse-echo probe, Olympus V205-RM 15MHz, with a probe diameter (more precisely, the diameter of its delay line) of \(6\,\textrm{mm}\); a gel couplant is used for insonification. The location of the UT probe contact surface relative to the tree target has to be calibrated before use, see Sect. 4.6. The UT probe itself is connected to the ultrasonic frontend PCUS pro Single by Fraunhofer IKTS [16]. A LabVIEW program running on the laptop provides the ultrasonic data via UDP.

When selecting a suitable MLT, the finding from [5] was taken into account that additional information about the distance between the tracked component and the camera is needed for exact pose determination. A state-of-the-art technology is the so-called Iterative Corresponding Geometry (ICG) presented in [17]. It fuses region and depth information for pose determination and can be used with a Microsoft Azure Kinect camera. To track the pose of an inspected component with ICG, only a 3D model without any texture information is required. The pose data from ICG is likewise provided via UDP.

Figure 4 shows images captured by the Azure Kinect colour and depth cameras during an inspection. A human worker holds the inspected component in one hand and examines it with the inspection tool held in the other. The coloured virtual model fitted over the inspected component indicates that MLT is active. While the component is tracked, the target of the inspection tool is tracked simultaneously by the MBT system. Knowing both poses, the pose of the UT probe relative to the component can be calculated and combined with the ultrasonic inspection data.

3.2 Forward Kinematics

To determine the position of the UT probe contact surface (PCS) relative to the component coordinate system (CS), forward kinematics methods from robotics are applied. If geometric information about every joint of a serial-chain manipulator is given, the position and orientation of the end-effector relative to its base can be determined [18].

The presented hybrid inspection system can be described as a serial-chain manipulator, as shown in Fig. 5. The inspected component (IC) represents the base and the PCS the end-effector. A CS is defined for every joint. The links between the tracking cameras, MLT\(\rightarrow \)MBT, and between the UT probe and the tree target (TT), TT\(\rightarrow \)PCS, are realised by 3D-printed adapters and can therefore be regarded as rigid. The links MLT\(\rightarrow \)IC and MBT\(\rightarrow \)TT, in contrast, change during the inspection, so their geometric parameters must be determined dynamically with the corresponding optical tracking systems.

At this point, homogeneous transformation matrices as defined in Eq. 1 are introduced. For example, the matrix \(^{MBT}T_{TT}\) describes the pose of \((CS)_{TT}\) in the coordinate system \((CS)_{MBT}\). The component \(^{i-1}R_{i}\) of Eq. 1 is a 3x3 rotation matrix describing the orientation of the regarded CS, and the component \(_{(i-1)}r_{i}\) of Eq. 1 describes its position as an xyz vector.

Originally, the pose of \((CS)_{IC}\) is determined in the coordinate system \((CS)_{MLT}\). To define the IC as the base, the homogeneous transformation matrix \(^{MLT}T_{IC}\) has to be inverted, see Eq. 2. The pose of \((CS)_{MLT}\) can then be described in the coordinate system \((CS)_{IC}\).

If every link is described by a homogeneous transformation matrix, the matrices can be chained to describe a serial-chain manipulator [18], see Eq. 3.
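In the notation defined above and following the standard robotics formulation [18], Eqs. 1 to 3 presumably take the following form. This is a reconstruction from the surrounding definitions, not the original typesetting of the equations:

```latex
% Eq. 1: pose of (CS)_i in (CS)_{i-1}
{}^{i-1}T_{i} =
\begin{pmatrix}
  {}^{i-1}R_{i} & {}_{(i-1)}r_{i} \\
  \mathbf{0}^{\mathsf{T}} & 1
\end{pmatrix}

% Eq. 2: inversion so that the IC becomes the base (rigid-body inverse)
{}^{IC}T_{MLT} = \left({}^{MLT}T_{IC}\right)^{-1} =
\begin{pmatrix}
  \left({}^{MLT}R_{IC}\right)^{\mathsf{T}} & -\left({}^{MLT}R_{IC}\right)^{\mathsf{T}}\,{}_{MLT}r_{IC} \\
  \mathbf{0}^{\mathsf{T}} & 1
\end{pmatrix}

% Eq. 3: serial chain from the inspected component to the probe contact surface
{}^{IC}T_{PCS} = {}^{IC}T_{MLT}\;{}^{MLT}T_{MBT}\;{}^{MBT}T_{TT}\;{}^{TT}T_{PCS}
```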

The transformation \(^{MLT}T_{MLTD}\) is not part of the equation, because it is a device-internal calibration of the Azure Kinect camera. The transformation \(^{TT}T_{PCS}\) is determined in a calibration process, described in Sect. 4.6. The transformation \(^{MLT}T_{MBT}\) is determined during a camera registration process, described in Sect. 4.7. All remaining transformations are determined dynamically during the inspection process.
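The chain of Eq. 3 can be evaluated with a few lines of code. The sketch below is a minimal, dependency-free illustration, assuming 4x4 matrices stored as nested Python lists and millimetres as the length unit; the function names (`homogeneous`, `invert_rigid`, `probe_in_component`) are hypothetical and not taken from the authors' software. The inverse of \(^{MLT}T_{IC}\) uses the rigid-body shortcut (rotation \(R^\mathsf{T}\), translation \(-R^\mathsf{T}r\)) instead of a general matrix inverse.

```python
def homogeneous(R, r):
    """4x4 homogeneous transform from a 3x3 rotation R and a position vector r (Eq. 1)."""
    return [
        [R[0][0], R[0][1], R[0][2], r[0]],
        [R[1][0], R[1][1], R[1][2], r[1]],
        [R[2][0], R[2][1], R[2][2], r[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(T):
    """Inverse of a rigid transform (Eq. 2): rotation R^T, translation -R^T r."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]  # transposed rotation block
    r = [T[i][3] for i in range(3)]
    t = [-sum(Rt[i][k] * r[k] for k in range(3)) for i in range(3)]
    return homogeneous(Rt, t)


def probe_in_component(T_mlt_ic, T_mlt_mbt, T_mbt_tt, T_tt_pcs):
    """Eq. 3: IC_T_PCS = (MLT_T_IC)^-1 * MLT_T_MBT * MBT_T_TT * TT_T_PCS."""
    T = invert_rigid(T_mlt_ic)
    for link in (T_mlt_mbt, T_mbt_tt, T_tt_pcs):
        T = matmul(T, link)
    return T


# Usage example with identity rotations (all values hypothetical, in mm)
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_ic_pcs = probe_in_component(
    homogeneous(I3, [0.0, 0.0, 500.0]),     # component pose in the MLT camera frame
    homogeneous(I3, [100.0, 0.0, 0.0]),     # MBT camera pose in the MLT camera frame
    homogeneous(I3, [-100.0, 0.0, 450.0]),  # tree target pose in the MBT frame
    homogeneous(I3, [0.0, 0.0, 40.0]),      # PCS pose in the tree target frame
)
print([row[3] for row in T_ic_pcs[:3]])  # position of the PCS in the component CS
```

In practice, `T_mlt_ic` and `T_mbt_tt` would be rebuilt from every incoming tracking pose, whereas `T_mlt_mbt` and `T_tt_pcs` stay constant after registration and calibration.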

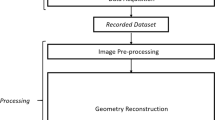

3.3 Data Processing

Three measuring systems provide data, see Fig. 2. The ultrasonic device provides inspection data, in particular a sample time increment, an amplitude value, the number of samples between the first and second amplitude (time of flight: ToF) and the sample values. The MBT system provides the pose of the TT, and the MLT system provides the pose of the IC. Pose data is given as an xyz position and a rotation matrix. All data from these measuring systems is transmitted via UDP.

A data processing program was written in Python 3.8; its structure chart is shown in Fig. 6. After the program starts, it first creates a CSV file with header information. This data format was chosen because the incoming data streams are already structured.

Two UDP servers for receiving the MBT and the ultrasonic data streams are instantiated, each running in the background in its own thread. A third UDP server for receiving the MLT data is started in the main thread. Every UDP server runs a while loop to keep its receiving thread active. Directly after a data set is received, an incoming timestamp is set and the data set is converted into arrays and matrices so that it is available for subsequent computations. No additional filter (e.g. for smoothing position data) is needed for the MBT and US data, because these are already preprocessed before being sent. Only the MLT data is not smoothed by any technique, which will be discussed later.

Figure 6 shows that the while loop of the MLT UDP server requests data from the other UDP servers. With this program structure, data synchronisation is achieved by letting the slowest stream set the measurement frequency (MLT: \(30\,\textrm{Hz}\)), whereas the ultrasonic frontend allows a measurement frequency of up to \(2\,\textrm{kHz}\) and the marker-based tracking system up to \(150\,\textrm{Hz}\).
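A minimal sketch of this synchronisation scheme, assuming plain UDP sockets and one complete data set per datagram. Parsing of the DTrack, ICG and LabVIEW payloads is omitted, and the names `LatestSample`, `listen`, `start_listener` and `main_loop` are hypothetical. The fast streams (MBT, ultrasonic) only overwrite their latest value in background threads, while the loop driven by the slowest stream (MLT) pulls the most recent value of the other two whenever a new component pose arrives:

```python
import socket
import threading
import time


class LatestSample:
    """Thread-safe holder for the newest data set of one stream and its incoming timestamp."""

    def __init__(self):
        self._lock = threading.Lock()
        self._data = None
        self._stamp = None

    def set(self, data):
        with self._lock:
            self._data = data
            self._stamp = time.monotonic()  # incoming timestamp

    def get(self):
        with self._lock:
            return self._data, self._stamp


def listen(sock, store, stop, bufsize=4096):
    """Background receiver: overwrite the stored value with every new datagram."""
    sock.settimeout(0.1)  # wake up regularly so that the stop event is noticed
    while not stop.is_set():
        try:
            data, _addr = sock.recvfrom(bufsize)
        except socket.timeout:
            continue
        store.set(data)  # conversion into arrays/matrices would happen here


def start_listener(sock, stop):
    """Start a daemon thread for a fast stream (e.g. MBT or ultrasonic data)."""
    store = LatestSample()
    worker = threading.Thread(target=listen, args=(sock, store, stop), daemon=True)
    worker.start()
    return store, worker


def main_loop(mlt_sock, mbt_store, us_store, handle, stop, bufsize=4096):
    """Loop driven by the slowest stream (MLT): every new IC pose pulls the
    latest MBT and ultrasonic data, which synchronises the three streams."""
    mlt_sock.settimeout(0.1)
    while not stop.is_set():
        try:
            mlt_data, _addr = mlt_sock.recvfrom(bufsize)
        except socket.timeout:
            continue
        handle(time.monotonic(), mlt_data, mbt_store.get(), us_store.get())
```

In a complete program, `handle` would compute \(^{IC}T_{PCS}\) and write the record; the port numbers used by the three systems are not given here and would have to be configured accordingly.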

After receiving and preprocessing the three data sets, the transformation described in Sect. 3.2 can be executed to get \(^{IC}T_{PCS}\). By that, the pose of \((CS)_{PCS}\) relative to \((CS)_{IC}\) is calculated, compare with Fig. 5.

Data is only written to the CSV file if a material thickness is measured with the ultrasonic probe. This allows the inspection tool to be moved to another measuring point without recording data. For visualisation purposes, the ultrasonic data set linked to the calculated pose information can be assigned a colour code indicating its magnitude. Given the speed of sound in the examined material (determined by a calibration on the inspected component), the ultrasonic ToF can be used to calculate the material thickness up to the first defect or the back wall of the IC. The settings used for the ultrasonic device are shown in Table 1. During inspection, the ultrasonic testing software displays the A-scan as feedback, and a comment box shows the current probe position together with the measured material thickness. A live visualisation of the probe position on the CAD model is not implemented at the current stage of development.
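The thickness calculation follows from the pulse-echo principle: the ToF measured between the entry echo and the first echo covers the path to the reflector and back, so the thickness is half the product of sound speed and ToF. The sketch below assumes exactly this round-trip definition of the ToF; the red-to-green colour map and all function names are hypothetical illustrations, not the colour code used by the authors.

```python
def sound_speed_from_reference(thickness_mm, tof_s):
    """Calibrate the sound speed (m/s) on a region of known thickness: c = 2 d / ToF."""
    return 2.0 * thickness_mm / 1000.0 / tof_s


def thickness_mm(tof_s, sound_speed_m_s):
    """Pulse-echo thickness: the ToF covers the way to the reflector and back."""
    return sound_speed_m_s * tof_s / 2.0 * 1000.0  # metres -> millimetres


def colour_code(thickness, t_min, t_max):
    """Hypothetical linear colour map (RGB 0-255): t_min and below red, t_max and above green."""
    if t_max <= t_min:
        raise ValueError("t_max must be larger than t_min")
    u = min(max((thickness - t_min) / (t_max - t_min), 0.0), 1.0)  # clamp to [0, 1]
    return (round(255 * (1.0 - u)), round(255 * u), 0)
```

For example, assuming a longitudinal sound speed of about 6320 m/s (a typical handbook value for aluminium), a ToF of 2 µs corresponds to a thickness of 6.32 mm.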

In a last step, the incoming timestamps, the pose data of \(^{IC}T_{PCS}\), the ultrasonic data, the material thickness and the colour code are combined into one data set and appended to the CSV file initialised at the beginning of the program.
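A minimal sketch of this final step with Python's standard `csv` module, assuming a row-major rotation matrix and a hypothetical column layout (the actual header used by the authors is not given here). Records without a measured thickness are skipped, mirroring the rule that data is only written when a material thickness is measured:

```python
import csv

# Hypothetical column layout: three incoming timestamps, PCS position, rotation (row-major), US data
HEADER = ["t_mlt", "t_mbt", "t_us", "x", "y", "z",
          "r11", "r12", "r13", "r21", "r22", "r23", "r31", "r32", "r33",
          "tof_samples", "thickness_mm", "colour"]


def write_header(f):
    """Write the header once when the file is created."""
    csv.writer(f).writerow(HEADER)


def append_record(f, stamps, T_ic_pcs, tof_samples, thickness, colour):
    """Append one combined data set; returns False (and writes nothing) without a thickness."""
    if thickness is None:
        return False
    position = [T_ic_pcs[i][3] for i in range(3)]
    rotation = [T_ic_pcs[i][j] for i in range(3) for j in range(3)]  # row-major
    csv.writer(f).writerow([*stamps, *position, *rotation, tof_samples, thickness, colour])
    return True
```

Any object with a `write` method can serve as `f`, e.g. a file opened with `open(path, "a", newline="")`.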

This paragraph explains the signal processing settings of the ultrasonic device, compare with Fig. 7 and Table 1. The recording delay specifies the number of samples skipped after excitation, which is useful for probes with a delay line. The recording length must be set so that the back wall echo is displayed. All received signals are represented via a Hilbert transformation, i.e. as their envelope. The sample rate defines the number of samples per second. Gate 1 and gate 2 are used to determine the number of samples between the front wall echo (or rather the entry echo) and the first echo, which can be either the back wall echo or a defect echo. By setting the offset from the delay, the length and the level of each gate, the sample at which the considered amplitude intersects the gate can be determined. Knowing the sample rate, the number of samples between the two gates can be converted into the time of flight (ToF). Figure 7 shows the entry echo and the first echo of a defect.
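The gate logic can be illustrated as follows. The sketch assumes that the envelope of the A-scan is already available as a list of amplitudes starting after the recording delay (it could, for instance, be computed as the magnitude of `scipy.signal.hilbert` applied to the raw signal), and that each gate is a hypothetical `(offset, length, level)` tuple in samples and amplitude units. The ToF is taken from the first sample in each gate whose envelope reaches the gate level:

```python
def first_crossing(envelope, offset, length, level):
    """Index of the first sample in [offset, offset + length) whose envelope
    amplitude reaches the gate level; None if the gate is never crossed."""
    end = min(offset + length, len(envelope))
    for i in range(max(offset, 0), end):
        if envelope[i] >= level:
            return i
    return None


def time_of_flight(envelope, gate1, gate2, sample_rate_hz):
    """ToF between the entry echo (gate 1) and the first echo (gate 2), in seconds."""
    s1 = first_crossing(envelope, *gate1)
    s2 = first_crossing(envelope, *gate2)
    if s1 is None or s2 is None:
        return None  # no valid thickness measurement, so nothing would be recorded
    return (s2 - s1) / sample_rate_hz
```

Returning `None` when a gate is not crossed fits the rule above that data is only recorded when a material thickness is actually measured.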

4 Verification of System Accuracy

It is essential to know the accuracy of an inspection system and especially the ability to localise defects. Having a higher accuracy and precision, which means a lower systematic and random error of the inspection system, allows the localisation of smaller defects and the inspection of smaller components.

The presented inspection system consists of several individual parts. A cause-and-effect analysis with an Ishikawa diagram that focuses on one main problem makes it possible to examine several potential causes and their effects [19]. As shown in Fig. 8, a deviation in localising recorded NDT data is identified as the central problem. Specifically, this means that the recorded sensor position does not match the true position.

A detailed analysis of each potential cause is left for future work. As a first step, the causes are described below in order to understand their particular impact on the identified problem.

4.1 Mother Nature

The environment always has to be considered during measurements. The speed of light depends on temperature, humidity and pressure, so pose determination by MBT and MLT is affected, because both are based on infrared or visible light. However, at the distances of less than \(5\,\textrm{m}\) considered here, this effect is negligible. Direct sunlight on the measuring systems should be avoided, and the room temperature should remain stable. For MBT, sunlight coming through a window can lead to interference signals or unwanted reflections from the background. For MLT, work area illumination and the colour contrast of the background significantly influence component detection and tracking. If the illumination is too low or the background too cluttered, computer vision algorithms might not recognise component contours or features properly.

4.2 Measurement

The largest contribution to localisation deviations is expected from this category. Due to the system setup and the transformation calculations (Fig. 5 and Eq. 3), each individual error in spatial tracking, tool calibration or camera registration enters the error chain with equal weight. Ultimately, the overall accuracy determines which defect size can be detected with the inspection system. The ultrasonic testing probe is part of the tool calibration. In this work, it is assumed that the ultrasonic signal can be assigned to the center of the contact surface.

Software can also introduce errors, for example through rounding of numerical values or through latencies. Latencies can also occur during data transmission.

If a required warm-up phase of a system component is not accounted for, unstable or inaccurate pose estimations can result. For example, if the warm-up phase of at least \(60\,\textrm{min}\) required by the Azure Kinect depth camera is ignored, an error of around \(2\,\textrm{mm}\) in distance estimation results at a distance of \(90\,\textrm{cm}\) [20].

4.3 Material

Trackability means that objects can be tracked reliably, accurately and stably. For MBT, targets must have more than three markers arranged asymmetrically, with the Euclidean distances between the individual markers maximised [21]. For MLT, the object must be asymmetric overall so that it can be detected uniquely. Circumferential chamfers on the component can also lead to incorrect detection, especially in an unfortunate combination of poor illumination and a cluttered background.
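A simple way to check the asymmetry requirement for a marker target is to compare all pairwise marker distances: if two distances are nearly equal, the identification of the target can become ambiguous. The sketch below is an illustrative heuristic with a hypothetical minimum gap `min_gap_mm`, not the acceptance criterion of the tracking software discussed in [21]:

```python
from itertools import combinations
from math import dist


def pairwise_distances(markers):
    """Sorted Euclidean distances between all marker pairs (mm)."""
    return sorted(dist(a, b) for a, b in combinations(markers, 2))


def is_distinguishable(markers, min_gap_mm):
    """Heuristic check: more than three markers, and all pairwise distances
    differ from each other by at least min_gap_mm (hypothetical threshold)."""
    if len(markers) <= 3:
        return False
    d = pairwise_distances(markers)
    return all(b - a >= min_gap_mm for a, b in zip(d, d[1:]))
```

The largest pairwise distance, `max(pairwise_distances(markers))`, gives a quick indication of the target's spatial extent, which the maximised-distance requirement aims to increase.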

Finally, the surface condition is discussed. A damaged retroreflective coating on markers can lead to incorrect detection. Mirror-like surfaces on an object tracked without markers can lead to erroneous recognition during image processing.

4.4 Method

A smoothing technique, where implemented, reduces signal noise and thus makes pose estimation more reliable; it can also serve to smooth or predict the output signal.

In [22], many definitions and details about MBT and MLT are presented, and accuracy-influencing parameters are listed: the tracked object itself, camera resolution, workspace dimensions, working distance, etc.

4.5 Man

The MBT and MLT presented here are optical tracking methods that require a free line of sight. The characteristics of the implemented algorithms and of the markers determine the sensitivity to occlusions. Occlusion handling for the MLT implemented here is explained in detail in [17].

Motion blur as a result of fast movements can change the appearance of both texture and edges of the tracked object [17].

Given these points, training the inspector to avoid occlusions and fast movements is an important part of a successful inspection.

At this development stage, calibration of the inspection tool and the camera registration process are examined in detail, because they are required to set up the inspection system. Additionally, the overall localisation accuracy is examined with the aid of a reference body.

4.6 Inspection Tool Calibration Process

The inspection tool generates the ultrasonic data basis. A purpose-designed adapter is needed to connect a UT probe to a tree target, compare with Fig. 9. A two-step calibration process is required:

1. Calibration of the used tree target
2. Determination of the vector between \(CS_{TT}\) and \(CS_{PCS}\)

For the calibration of the tree target, the marker-based tracking system ARTTRACK5 [23] with eight ceiling-mounted cameras is used. This tracking system has a higher accuracy than the SMARTTRACK3, which is used in the hybrid inspection system only for mobility reasons.

To identify a target unambiguously, at least three marker balls must be visible at all times. It is therefore recommended to use more than three marker balls. In addition, they have to be arranged asymmetrically, with the Euclidean distances between the balls maximised. In this way, a CS spanned by three marker balls can be defined during target calibration. Because the determination of ball marker positions from the acquired images is always subject to random errors, the calibration is performed ten times with the software DTrack, and the average coordinate values of the individual ball marker positions are then applied. For non-zero coordinate values, the MBT achieves an average standard deviation of \(0.03\,\textrm{mm}\).
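The averaging step can be sketched as follows, assuming each calibration run yields the marker coordinates in \(CS_{TT}\) as `(x, y, z)` tuples in the same marker order. Coordinates that are zero by construction (the origin marker and the components fixed by the axes spanned by three markers) have no spread, which is presumably why the standard deviation is only averaged over non-zero coordinates. The function name is hypothetical:

```python
from statistics import mean, stdev


def average_calibration(runs):
    """runs: list of calibration runs, each a list of (x, y, z) marker coordinates.
    Returns the averaged coordinates and the mean standard deviation over all
    coordinates that are not fixed to zero by the CS definition."""
    n_markers = len(runs[0])
    averaged, sds = [], []
    for m in range(n_markers):
        coords = []
        for axis in range(3):
            values = [run[m][axis] for run in runs]
            coords.append(mean(values))
            if any(v != 0.0 for v in values):  # skip coordinates that are zero by definition
                sds.append(stdev(values))
        averaged.append(tuple(coords))
    return averaged, mean(sds)
```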

After the tree target coordinate system \(CS_{TT}\) has been defined, the location of \(CS_{PCS}\) relative to it has to be determined in a calibration process, compare with Fig. 9. Ideally, the distance vector for the transformation matrix \(^{TT}T_{PCS}\) could be taken from a CAD model. However, deviations between nominal and actual geometries always exist. For example, a deviation analysis between the nominal CAD model and a 3D scan of the tree target showed that the base of the tree target is \(0.11\,\textrm{mm}\) shorter than specified by the nominal model.

For this reason, the entire inspection tool is scanned with the GOM Compact Scan 3D scanner, and the distance vector from \(CS_{TT}\) to the PCS center is measured with CAD software. For this purpose, \(CS_{TT}\) first had to be reconstructed from the scanned sphere markers.
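Reconstructing \(CS_{TT}\) from three marker centers and expressing the PCS center in it can be sketched as below. The axis convention (origin at the first marker, x-axis towards the second, z-axis normal to the plane of the three markers) is an assumption for illustration; the convention actually used by DTrack may differ. Local coordinates are obtained as \(R^\mathsf{T}(p - o)\), i.e. by projecting the offset onto each axis:

```python
from math import sqrt


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a):
    n = sqrt(dot(a, a))
    if n == 0.0:
        raise ValueError("degenerate marker configuration")
    return tuple(x / n for x in a)


def frame_from_markers(p0, p1, p2):
    """Assumed convention: origin p0, x towards p1, z normal to the marker plane, y = z cross x."""
    x = unit(sub(p1, p0))
    z = unit(cross(x, sub(p2, p0)))
    y = cross(z, x)  # already unit length because z and x are orthonormal
    return p0, (x, y, z)


def to_local(point, origin, axes):
    """Coordinates of a point in the frame: R^T (p - o), one dot product per axis."""
    d = sub(point, origin)
    return tuple(dot(axis, d) for axis in axes)
```

With the marker centers taken from the scan, `to_local(pcs_center, *frame_from_markers(m0, m1, m2))` would give the translation part of \(^{TT}T_{PCS}\) under this convention.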

To verify the determined transformation parameters, a dedicated test rig is placed in the middle of a tracking room, compare with Fig. 10. Its motorised part is a stepper motor corresponding to the test setup shown in [21]. An adapter centres the UT probe on the stepper motor, so that the inspection tool rotates around the center of the probe contact surface. If the measured transformation parameters are correct, the tracked point cloud collapses to nearly a single point, see Fig. 11. The second part of the test rig is a distance gauge of \(4.0\,\textrm{mm}\) thickness, clamped into a 3D-printed gauge holder. By moving the inspection tool with its PCS along the right and left sides of the distance gauge, the distance between the two tracked point clouds can be measured. This verifies whether \(CS_{PCS}\) lies exactly on the contact surface.

The test is repeated three times. The recorded point clouds are evaluated by fitting cylinders and planes to them. After rotating the inspection tool, the transformed point cloud covers a cylindrical volume of about \(0.23\,\textrm{mm}\) radius and \(0.29\,\textrm{mm}\) height. The variation in height is already present in the untransformed point cloud and can therefore be attributed to the tracking system. The measured distance between the point clouds of the right and left sides of the distance gauge is \(4.20\,\textrm{mm}\).
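The evaluation of the rotation test and the gauge test can be approximated with simple statistics. The sketch below is a stand-in for the cylinder and plane fitting described above, assuming the rotation axis is parallel to the z-axis of the point cloud coordinates. The gauge function additionally assumes that the whole deviation stems from an offset of \(CS_{PCS}\) along the probe axis, which shifts both point clouds outwards by the same amount. Function names are hypothetical:

```python
from math import hypot
from statistics import mean


def cylinder_extent(points):
    """Radius and height of the cylinder centred on the point cloud's centroid that
    encloses all points, assuming the rotation axis is parallel to the z-axis."""
    cx = mean(p[0] for p in points)
    cy = mean(p[1] for p in points)
    radius = max(hypot(p[0] - cx, p[1] - cy) for p in points)
    zs = [p[2] for p in points]
    return radius, max(zs) - min(zs)


def axial_offset_from_gauge(measured_mm, gauge_mm):
    """Offset of CS_PCS along the probe axis if it alone explained the gauge deviation:
    both point clouds shift outwards by the offset, so the distance grows by twice it."""
    return (measured_mm - gauge_mm) / 2.0
```

Under that simplifying assumption, the measured 4.20 mm against the 4.0 mm gauge would correspond to an offset of about 0.10 mm; in reality, tracking noise and the manual movement contribute as well.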

These values indicate the maximum achievable accuracy in determining the PCS position. They are influenced by random and systematic errors of the tracking system used and of the calibration method itself. The stepper motor and its coupling may have a slight eccentricity, and the manual movement along the distance gauge is not ideal for calibration testing. In the end, this calibration result is only one error element in the measurement chain of the complete inspection system.

4.7 Camera Registration Process

The purpose of the camera registration process is to determine the transformation parameters for \(^{MLT}T_{MBT}\), compare with Fig. 5. Thus, \(CS_{MLT}\) and \(CS_{MBT}\) will be aligned to each other after transformation. By that, the pose of the IC and the inspection tool are referred to the same global CS. Ideally, the two CS are aligned exactly, but there will always be slight variations and deviations due to the registration process. In general, there are two main steps in the camera registration process, both performed in separate A.R.T.-developed software solutions [24]:

1. Determination of the MLT camera intrinsic parameters
2. Registration of the MBT camera to the MLT camera

4.7.1 Camera intrinsics

On the basis of images of a ChArUco board, acquired from various positions and orientations, the camera intrinsic parameters can be determined. For more details about camera calibration, see [25]. The used ChArUco board is equipped with a set of retroreflective circular markers in the outer frame, which serve as a reference for MBT during the registration process, compare with [26].

To minimise effects caused by the Azure Kinect autofocus, the images should ideally be acquired at a distance similar to the later working distance. Due to the wide angle of the Azure Kinect RGB camera (field of view 90 x 74 deg), the image corners are slightly blurred and irregularly distorted, so these marginal areas should be avoided during image acquisition. The camera intrinsic parameters such as focal length, principal point and distortion [27] are determined from automatically detected 2D ChArUco marker positions, which serve as observations for a self-calibrating bundle adjustment [28, 29]. The 3D coordinates of the ChArUco markers are calculated within a free network adjustment with trace minimisation [30, 31], so the geometry of the board itself does not have to be known beforehand. The overall scale is derived from the chessboard square size of the board. In addition, the circular retroreflective markers are measured semi-automatically in all images by an ellipse fitting routine and are also included in the bundle adjustment. As a result, the retroreflective markers and the ChArUco markers refer to the same coordinate system. The derived 3D ChArUco marker coordinates are later used for the registration, while the coordinates of the retroreflective markers constitute the geometry of a 6D target trackable by the SMARTTRACK3. The intrinsic parameters have to be determined at least once to enable an accurate registration and can be redetermined when necessary.
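The role of the intrinsic parameters can be illustrated with a pinhole projection. The sketch below assumes a simple model with focal lengths `fx`, `fy`, principal point `cx`, `cy` and two radial distortion coefficients `k1`, `k2` (tangential terms omitted); the distortion model actually estimated by the A.R.T. software, or used internally by the Azure Kinect, may contain more terms [27]. Points are given in camera coordinates:

```python
def project(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point (camera coordinates) to pixel coordinates using a pinhole
    model with two radial distortion terms (tangential distortion omitted)."""
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera")
    x, y = X / Z, Y / Z               # normalised image coordinates
    r2 = x * x + y * y
    d = 1.0 + k1 * r2 + k2 * r2 * r2  # radial distortion factor
    return fx * d * x + cx, fy * d * y + cy
```

Radial distortion grows with the distance from the image center, which is consistent with the recommendation to avoid the marginal image areas of the wide-angle camera.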

4.7.2 Registration

The registration step registers the room calibration of the MBT system (SMARTTRACK3) to a calibrated camera (Azure Kinect) via a ChArUco board with retroreflective markers. The setup can be seen in Fig. 12. To execute the registration, the coordinates of the markers attached to the ChArUco board have to be imported into the marker-based tracking software, and the camera calibration data is required by the registration software. The individual process steps of the registration are:

1. Measurement of the marker body pose by the MBT system
2. Image acquisition of the board by the MLT camera
3. Import of the board geometry and camera calibration data into the registration software
4. Detection of the ChArUco markers and resection of the camera relative to the board
5. Setting the room calibration of the MBT camera relative to the resected camera
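Conceptually, steps 1 and 4 provide the pose of the board coordinate system \(B\) in both camera systems: the MBT measures \(^{MBT}T_{B}\), and the resection yields \(^{MLT}T_{B}\). Because the retroreflective markers and the ChArUco markers refer to the same board coordinate system (Sect. 4.7.1), the registration transformation follows from chaining both poses. This is a sketch of the underlying relation, not a description of the A.R.T. software's internal computation:

```latex
{}^{MLT}T_{MBT} = {}^{MLT}T_{B}\;\left({}^{MBT}T_{B}\right)^{-1} = {}^{MLT}T_{B}\;{}^{B}T_{MBT}
```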

After a successful registration, the transformation parameters between \(CS_{MBT}\) and \(CS_{MLT}\) are determined. Marker-based tracking results, given as pose data, now refer to that coordinate system and are therefore in the same coordinate system as the markerless ICG tracking results. The registration software provides additional information about the registration result, which can be used for quality assessment.

4.7.3 Variance of registration results

The registration results are analysed in two test series of ten runs each. For the first series, called "static", the ChArUco board is positioned centrally, with a frontal orientation, at a distance of \(60\,\textrm{cm}\) from the Azure Kinect camera. For the second series, called "dynamic", the board is positioned at varying orientations and locations at a distance of around \(60\,\textrm{cm}\). This distance is chosen because it is the maximum distance at which the ArUco and ChArUco markers are reliably recognised by the Azure Kinect camera. The illumination is adjusted so that the workplace is illuminated with at least \(1000\,\textrm{lux}\) at every corner, in accordance with the workplace guideline for surface inspections [32].

As a result, the registration software provides the required transformation values for \(^{MLT}T_{MBT}\), see Table 2. The table lists the average and standard deviation (SD) of each transformation parameter. While the average values of the two series differ only by tenths of a millimeter, the standard deviations differ more. This results from the varying perspectives of the "dynamic" series: the registration software cannot detect all markers of the board if the angle between board and camera is too large or if the board is not completely inside the camera field of view, see Table 3.

The listed values "camera projection error" and "marker ellipse fit" indicate the quality of the registration process. The first is calculated by projecting the 3D board coordinates into image space and comparing them with the detected image positions; it should be in the subpixel range. A mean projection error below \(0.15\,\textrm{pixel}\) is considered good. The second value is the average deviation, in pixels, between all visible ellipse centers detected in image space and the corresponding projections of the 6D body markers tracked by the SMARTTRACK3. This provides a thorough check that MLT and MBT refer to the same coordinate system. For the camera registration, the average values of the "static" series are used and entered into the MBT software.
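A minimal sketch of a mean projection error, assuming the 3D points are already expressed in the camera frame and using an ideal pinhole model without the distortion terms [27] that a full calibration would include. All numbers are hypothetical; the example offsets every detection by 0.1 px, so the mean error is 0.1 px and stays below the 0.15 pixel threshold given in the text.

```python
import numpy as np


def project_pinhole(points_cam, fx, fy, cx, cy):
    """Project camera-frame 3D points with an ideal pinhole model.

    Lens distortion is ignored here for brevity (assumption).
    """
    X, Y, Z = points_cam[:, 0], points_cam[:, 1], points_cam[:, 2]
    return np.column_stack((fx * X / Z + cx, fy * Y / Z + cy))


def mean_projection_error(points_cam, observed_px, fx, fy, cx, cy):
    """Mean Euclidean distance (pixels) between projected and detected points."""
    projected = project_pinhole(points_cam, fx, fy, cx, cy)
    return float(np.linalg.norm(projected - observed_px, axis=1).mean())


# Hypothetical board points 600 mm in front of the camera
pts = np.array([[0.0, 0.0, 600.0], [50.0, 0.0, 600.0], [0.0, 50.0, 600.0]])
fx = fy = 900.0
cx, cy = 960.0, 540.0
obs = project_pinhole(pts, fx, fy, cx, cy) + np.array([0.1, 0.0])
err = mean_projection_error(pts, obs, fx, fy, cx, cy)
```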

The increasing values of the "dynamic" series in Table 3 indicate a decreasing registration quality. However, despite the reduced quality values, the "dynamic" transformation results deviate only slightly from those of the "static" series, which indicates the robustness of the registration software. The registration quality is highly relevant to the overall localisation result, because any translation or orientation error directly causes deviations in localisation.

4.7.4 Current limitations

The Azure Kinect RGB camera can be operated at six different resolutions, each with its own set of intrinsic parameters, which the manufacturer determines individually for each camera. At the current stage of development, the self-determined intrinsic parameters are used only for the registration process and not for calibrating the Azure Kinect camera for MLT, because the two parameter sets (Azure Kinect and the self-determined set from the A.R.T. software) are not compatible and thus cannot be imported into the tracking software ICG. ICG uses the factory parameter set of the Azure Kinect camera instead. In addition, the A.R.T. software currently only works with an image resolution of \(3072\,\textrm{p}\), whereas the tracking software ICG works at \(1080\,\textrm{p}\). Whether this has a negative impact on the localisation error cannot be assessed at this point.

The extracted factory parameter sets of the Azure Kinect camera show that the principal point and the distortion parameters remain unchanged across the different resolutions, and that the principal point of the self-determined parameters agrees with the factory values, see [25]. This indicates that the camera registration can be performed at a different RGB camera resolution than the one used later for MLT.

4.8 Localisation Accuracy

To analyse the accuracy of the inspection system, a reference body is used. It provides characteristic geometric features for tracking as well as eight drill holes with varying diameters and depths and a triangular pocket on the back side, see Table 5. These artificial defects are used to verify the absolute system accuracy, as in [11, 33, 34]. The reference body is made of aluminum alloy 7075-T6 and has outer dimensions of \(200\,\textrm{mm} \times 250\,\textrm{mm}\). The maximum thickness is \(30\,\textrm{mm}\).

The inspection tool (IT), described in Chapter 4.6, is moved across the top surface of the reference body to inspect the artificial defects. The reference body is held by hand at a distance of about \(60\,\textrm{cm}\) from the cameras, and the working area is illuminated with at least \(1000\,\textrm{lux}\).

During inspection, the position of the IT is saved as a point cloud. The corresponding ultrasonic data is converted into a remaining component thickness, which is afterwards used to colour the point cloud according to the measured depth. All points that are not coloured red can be assigned either to a drill hole or to the pocket. The required speed of sound (\(6250\,\mathrm {m/s}\)) is calculated beforehand from the ultrasonically measured time of flight (ToF) and the physically measured component thickness. Without any postprocessing or data transformation, the recorded inspection data (in xyzrgb format) can be imported into CAD software and superimposed on the corresponding CAD model, see the visualisation in Fig. 13.
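The speed-of-sound calibration and the thickness conversion follow from the pulse-echo principle, in which the measured ToF covers the wall thickness twice: \(c = 2d/t\) and \(d_{rem} = c\,t/2\). The sketch assumes that the ToF is the round-trip time inside the material; the ToF values (9.6 µs, 4.8 µs) and the tolerance that decides whether a point is treated as defect-free (red) are hypothetical.

```python
def speed_of_sound_m_per_s(thickness_mm, tof_us):
    """Pulse-echo calibration: the sound wave travels the thickness twice."""
    return 2.0 * (thickness_mm * 1e-3) / (tof_us * 1e-6)


def remaining_thickness_mm(tof_us, c_m_per_s):
    """Convert a round-trip ToF into the remaining wall thickness in mm."""
    return c_m_per_s * (tof_us * 1e-6) / 2.0 * 1e3


def is_defect(remaining_mm, nominal_mm, tol_mm=0.5):
    """True if clearly thinner than nominal, i.e. the point is not coloured red.

    tol_mm is a hypothetical tolerance, not a value from this work.
    """
    return remaining_mm < nominal_mm - tol_mm


c = speed_of_sound_m_per_s(30.0, 9.6)   # about 6250 m/s
d = remaining_thickness_mm(4.8, c)      # about 15 mm remaining wall
```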

4.8.1 Localisation accuracy in z-direction

To analyse the localisation accuracy in depth (z-direction of \(CS_{IC}\)), all points measured by the spatial tracking systems on the highest platform of the reference body are expected at \(30.0\,\textrm{mm}\) in z-direction. As shown in Table 4, the average z-value is about \(27.9\,\textrm{mm}\), which deviates by \(-2.1\,\textrm{mm}\) from the nominal value. The SD is about \(2.5\,\textrm{mm}\). Considering the SD, the minimum and maximum deviations can be regarded as outliers.
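The depth evaluation reduces to a few statistics over the z-coordinates of the points on the highest platform. This sketch uses the sample standard deviation (`ddof=1`), which is an assumption since the estimator used is not stated; the input values are synthetic and not the measured data.

```python
import numpy as np


def z_statistics(z_mm, nominal_mm=30.0):
    """Mean, deviation from nominal, sample SD and extreme deviations of z."""
    z = np.asarray(z_mm, dtype=float)
    mean = float(z.mean())
    return {
        "mean": mean,
        "deviation": mean - nominal_mm,
        "sd": float(z.std(ddof=1)),  # sample standard deviation (assumption)
        "min_dev": float(z.min()) - nominal_mm,
        "max_dev": float(z.max()) - nominal_mm,
    }


stats = z_statistics([27.0, 28.0, 29.0])  # synthetic z-values in mm
```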

4.8.2 Localisation accuracy in x- and y-direction

The determined remaining component thickness and its corresponding colour can be used to separate the red points (indicating that no defect was measured at the respective position) from the others. Figure 14 shows the top view of the reference body with only the drill hole positions and diameters visualised. Every visualised point is subject to measurement uncertainty, but this is only obvious for the points outside the green circles. Each coloured point outside a green circle is incorrectly localised, because the corresponding ultrasonic data must have been recorded inside the encircled areas. To analyse the localisation accuracy in the x-y plane, the nominal diameters and positions of the drill holes serve as individual references. For this purpose, the point cloud has to be separated according to the drill holes. By calculating the arithmetic mean of each separated point cloud, the difference from the nominal position can be determined, see Table 5.
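The separation method is not specified in detail. One simple option, assumed here, is to assign every non-red point to the nearest nominal hole center, take the arithmetic mean of each group as the measured position, and count the points outside the nominal radius as outliers. Pocket points are assumed to have been removed beforehand; hole positions, diameters and points in the example are hypothetical.

```python
import numpy as np


def analyse_drill_holes(points_xy, hole_centres_xy, hole_diameters_mm):
    """Per-hole mean offset and outlier percentage for defect points (x, y in mm)."""
    pts = np.asarray(points_xy, dtype=float)
    centres = np.asarray(hole_centres_xy, dtype=float)
    radii = np.asarray(hole_diameters_mm, dtype=float) / 2.0
    # Distance of every point to every nominal hole center, shape (n_points, n_holes)
    dists = np.linalg.norm(pts[:, None, :] - centres[None, :, :], axis=2)
    nearest = dists.argmin(axis=1)  # assumption: nearest-center assignment
    results = []
    for k in range(len(centres)):
        mask = nearest == k
        if not mask.any():
            results.append(None)  # hole not covered by any defect point
            continue
        offset = pts[mask].mean(axis=0) - centres[k]  # systematic x-y error
        outside = dists[mask, k] > radii[k]           # points outside the circle
        results.append({
            "offset": offset,
            "n_points": int(mask.sum()),
            "outlier_pct": 100.0 * float(outside.mean()),
        })
    return results


# Two hypothetical holes: d = 10 mm at (0, 0) and d = 6 mm at (50, 0)
holes = [(0.0, 0.0), (50.0, 0.0)]
diameters = [10.0, 6.0]
points = [(1, 0), (-1, 0), (1, 2), (1, -2), (50, 0), (52, 0), (54, 0)]
res = analyse_drill_holes(points, holes, diameters)
```

In the example, the second hole gets an offset of 2 mm in x and one of its three points lies outside the 3 mm radius.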

Because the IT was moved across the whole component, only the systematic localisation error can be determined. For this reason, no standard deviation is given in Table 5. An accurate determination of the systematic error requires the area of each drill hole to be scanned completely and uniformly. Since this cannot be fully guaranteed in manual inspection, the following analysis provides only a rough indication.

Table 5 also gives the percentage of outliers, i.e. the proportion of incorrectly localised IT positions. It must be considered, however, that the points inside the green circles are also subject to measurement uncertainty. No relationship between drill hole diameter and the number of outliers can be observed.

The average values of the separated point clouds give the measured x- and y-positions of the drill holes. The systematic error can be assessed from their difference to the nominal values.

The localisation error determined with the presented reference body is around \(-0.6\,\textrm{mm}\) in x- and \(0.6\,\textrm{mm}\) in y-direction of the component's CS. The individual values range from \(-2.5\) to \(1.5\,\textrm{mm}\) in x- and from \(-1.9\) to \(2.5\,\textrm{mm}\) in y-direction. The validity of the determined systematic error in x- and y-direction is limited, because the large drill hole diameters do not provide an exact reference. Additionally, a uniform scan of the drill holes cannot be guaranteed in the manual inspection process. For the error in z-direction, an exact reference is given by the top surface.

5 Discussion and Outlook

As mentioned in Chapter 4, several error-influencing variables affect the NDT data localisation accuracy. Calibration of the inspection tool, camera registration and the measuring accuracy of the tracking systems used have the highest impact. The inspection system has no spatially fixed reference system, because the local coordinate system of the dynamically tracked and inspected component is used as the reference system. Any faulty pose determination of the inspection tool or the component therefore leads directly to a faulty NDT data localisation.

5.1 Tool Calibration

The tool calibration process, described in Chapter 4.6, is realised by measuring the 3D-scanned geometry of the inspection tool. Any test procedure to verify the calibration parameters is limited by the accuracy of the marker-based tracking system used. Nevertheless, it could be shown that the point cloud of the calibrated inspection tool covers a cylindrical volume of about \(0.23\,\textrm{mm}\) radius and \(0.29\,\textrm{mm}\) height.
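One way to express such a result, assuming the calibrated tool points are given in a frame whose z-axis is the cylinder axis, is to take the maximum radial distance from the mean point as the radius and the extent along the axis as the height. The function is a hypothetical illustration, not the verification procedure used in this work; the points are synthetic.

```python
import numpy as np


def cylinder_envelope(points, axis=(0.0, 0.0, 1.0)):
    """Radius and height of the cylinder (about the mean point) enclosing all points."""
    p = np.asarray(points, dtype=float)
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)              # unit cylinder axis (assumed known)
    centred = p - p.mean(axis=0)
    along = centred @ a                    # coordinate along the axis
    radial = centred - np.outer(along, a)  # component perpendicular to the axis
    radius = float(np.linalg.norm(radial, axis=1).max())
    height = float(along.max() - along.min())
    return radius, height


pts = [(0.2, 0.0, 0.0), (-0.2, 0.0, 0.0), (0.0, 0.2, 0.1), (0.0, -0.2, -0.1)]
radius, height = cylinder_envelope(pts)  # both 0.2 mm for these synthetic points
```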

5.2 Camera Registration

The camera registration process, described in Chapter 4.7, is realised by a ChArUco board equipped with a set of retroreflective circular markers in the outer frame. An analysis of the registration results shows a random error in the transformation parameters. This error increases if the board is positioned at an unfavourable distance or orientation. The systematic error of the camera registration is not assessed in this work.

5.3 Overall Accuracy

To analyse the overall accuracy of the inspection system, a reference body with artificial defects is used. These defects can be detected by an ultrasonic probe, and the recorded NDT data is combined with position information. For each defect, the center point of the recorded point cloud is determined. The resulting average overall localisation error is \(-0.6\,\textrm{mm}\) in x-, \(0.6\,\textrm{mm}\) in y- and \(-2.1\,\textrm{mm}\) in z-direction relative to the coordinate system of the component. However, this result applies only to the reference body, working distance, working area illumination, tool calibration, camera registration and marker-based and markerless tracking technologies used here.

By using the developed inspection system, it is possible to localise the center of a drill hole with an accuracy of \(\pm 2.5\,\textrm{mm}\). The achieved accuracy of the inspection system can be considered good, especially since there is still potential for optimisation.

Latencies in pose estimation, data transmission and data processing are not yet taken into account in the accuracy analysis but will be addressed in future work. The effect of pose estimation latencies mainly depends on the direction and speed of the hand-guided motions. However, ultrasonic inspection requires slow motions, which reduces the effect of these latencies.
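The effect of latency on localisation can be estimated to first order as the distance travelled during the delay, \(\Delta s = v\,\Delta t\), assuming constant hand speed. The speeds and the latency in the sketch are hypothetical values, not measurements from this work; for example, 20 mm/s combined with 50 ms gives an offset of 1 mm, which illustrates why slow ultrasonic scanning keeps this error small.

```python
def latency_offset_mm(speed_mm_per_s, latency_ms):
    """First-order position offset caused by a latency at constant hand speed."""
    return speed_mm_per_s * latency_ms / 1000.0


# Hypothetical scanning speeds (mm/s) combined with a hypothetical 50 ms latency
for speed in (10.0, 20.0, 50.0):
    print(f"{speed:4.0f} mm/s -> {latency_offset_mm(speed, 50.0):.2f} mm")
```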

5.4 UT Accuracy

In this work, a UT probe with a small diameter of \(6\,\textrm{mm}\) was used. Thus, the assumption that the measured echo can be attributed to the center of the probe contact surface is valid at this stage of development. For larger UT probes, especially phased array probes, the determined position information would have to be adapted to the dimensions of the UT probe, see [33]. In addition, it must be taken into account that the echo signal only reaches its maximum value when the probe fully covers a defect, since only a damped signal is received at the defect borders.

5.5 System Optimisation

The overall localisation accuracy of the presented inspection system can be improved. The mobile tracking system SMARTTRACK3 can be replaced by the stationary system ARTTRACK5, which has a higher accuracy. The markerless tracking software ICG can be extended by a filter that smooths or predicts the position data of the tracked component. The camera calibration parameters determined during the camera registration process can be imported into the markerless tracking software ICG; this requires an adaptation of the registration software.
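As one possible choice of such a filter (not necessarily what would be implemented in ICG), an alpha-beta filter both smooths noisy positions and predicts them ahead in time under a constant-velocity assumption. The sketch filters positions only; orientations would need separate treatment, for example on quaternions. The gains `alpha` and `beta` are hypothetical tuning values.

```python
from dataclasses import dataclass
from typing import Optional

import numpy as np


@dataclass
class AlphaBetaFilter:
    """Constant-velocity alpha-beta filter for 3D positions (orientation not handled)."""
    alpha: float = 0.5              # position correction gain (hypothetical)
    beta: float = 0.1               # velocity correction gain (hypothetical)
    x: Optional[np.ndarray] = None  # filtered position
    v: Optional[np.ndarray] = None  # estimated velocity

    def update(self, z, dt):
        """Fuse a new position measurement z taken dt seconds after the last one."""
        z = np.asarray(z, dtype=float)
        if self.x is None:
            self.x, self.v = z.copy(), np.zeros_like(z)
            return self.x
        x_pred = self.x + self.v * dt  # predict with constant velocity
        residual = z - x_pred          # innovation
        self.x = x_pred + self.alpha * residual
        self.v = self.v + (self.beta / dt) * residual
        return self.x

    def predict(self, dt):
        """Extrapolate the position dt seconds ahead, e.g. to bridge a latency."""
        return self.x + self.v * dt


f = AlphaBetaFilter()
for k in range(100):  # synthetic component moving 1 mm per step
    f.update([float(k), 0.0, 0.0], dt=1.0)
```

After the filter has settled, `f.predict(1.0)` returns approximately the next position, (100, 0, 0).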

5.6 Further System Analysis

The tracking systems used have to be analysed in detail in terms of accuracy and measurement uncertainty. General statements about the overall localisation accuracy of the presented inspection system will be necessary. For this purpose, the reference body must be modified to allow more stable markerless tracking and a better analysis of the localisation accuracy. Additionally, decision guidelines for the selection of system components must be developed.

A further analysis of the markerlessly tracked component itself can be performed. Because the component can deviate from the virtual model used to train the markerless tracking (for example after collision, deformation or erosion), the tracking result can be negatively affected.

5.7 System Application

The inspection system can be combined with augmented reality devices, as already presented in [5, 10, 13]. This enables data visualisation directly on the inspected component, either during the inspection process (for which real-time capability still has to be realised) or afterwards. Accuracy issues are addressed in [35] in the analysis of a calibration procedure for an augmented reality device. In terms of NDE 4.0, the recorded and localised NDT data can be stored in a virtual environment, which allows for further analysis or the superimposition of several NDT datasets.

The approach is not limited to the aerospace sector or to UT inspections. Rather, it is suitable for any application in which the position of damage is needed in digital form for comprehensive documentation or further damage assessment. Additionally, the approach can be transferred to other NDT processes such as thermography [36] or radiography [37].

6 Conclusion

The presented inspection system enables the localisation of recorded inspection data directly referenced to the local coordinate system of the inspected component. The inspection tool as well as the component can be hand-held in order to move them without restrictions. This is realised by combining two tracking technologies: a marker-based tracking to determine the pose of the inspection tool and a markerless tracking to determine the pose of the component.

In this way, the component itself can be used as a reference system for data localisation and it is not necessary to equip the component with any fiducial markers or to use any part carrier with external references. Thus, it has a high potential to be used in manual inspection processes. Of course, it is possible to calibrate a component to a rigid workpiece holder serving as a reference aid, but this limits the free movement and rotation of the component held in the hand. In addition, the component must not move in the fixture.

The system development was successful, and ultrasonic data was localised relative to the coordinate system of the component. This potentially allows for a better damage assessment by superimposing NDT data from different inspection technologies without any postprocessing or data transformation, so no additional work steps are required. The overall localisation accuracy of the developed inspection system was analysed, and further system optimisations and applications were discussed. The question of localisation accuracy has not yet been fully answered, but it will be the main topic of future analyses.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Meyendorf, N., Ida, N., Singh, R., Vrana, J.: Handbook of Nondestructive Evaluation 4.0, 1st edn. Springer International Publishing, New York (2022)

Nguyen Ngoc, H., Lasa, G., Iriarte, I.: Human-centred design in industry 4.0: case study review and opportunities for future research. J. Intell. Manuf. 33, 35–76 (2022). https://doi.org/10.1007/s10845-021-01796-x

Okulla, M., Uwe, D., Bulavinov, A., Pinchuk, R.: Fortschrittliche Prüfmethoden zur Prüfung von CFK-Großkomponenten mit komplexer Geometrie. DGZfP-Jahrestagung 2017 (2017)

Aust, J., Mitrovic, A., Pons, D.: Comparison of visual and visual-tactile inspection of aircraft engine blades. Aerospace 8, 313 (2021). https://doi.org/10.3390/aerospace8110313

Wilken, A., Heilemann, F., Turgut, L., Helfrich, G.: Concept for digitalisation of an inspection process using hybrid tracking of part and probe for future maintenance and digital twins. Deutscher Luft- und Raumfahrtkongress 2021. https://doi.org/10.25967/550009 (2021)

Lasagni, F. et al.: C-Scan ultrasonic generation using wireless encoder based on passive markers. In: 6th International Symposium on NDT in Aerospace (2014)

Lopez, B.: Camera encoded phased array for semi-automated inspection of complex composite components: CAMUS 3D. Nondestructive Evaluation of Aerospace Materials & Structures 2018 (ASNT) (2018)

Lamarre, A., Habermehl, J.: Dynamic tracking of ultrasonic probe and real-time volumetric ray-tracing as a support to manual phased-array inspection of complex components. In: 11th European Conference on Non-Destructive Testing (2014)

Langlois, P., Lepage, B., St-Laurent, M., Habermehl, J.: EP2846158A2. European Patent Office, Munich (2014)

IZFP, Fraunhofer: 3D-SmartInspect: Intelligent inspection monitoring and documentation by optical tracking system for ultrasound and eddy current inspection. https://www.izfp.fraunhofer.de/content/dam/izfp/en/documents/2017/3D-SmartInspect-en.pdf. Accessed 09 Jan 2023 (2017)

Schmitt, R., Nienheysen, P., Lehmann, N., et al.: Digitalized ultrasonic inspection by optical tracking. In: IEEE/SICE International Symposium on System Integration (SII). https://doi.org/10.1109/SII.2019.8700372 (2019)

Schmitt, R., Lehmann, N., Nienheysen, P.: Qualifizierung einer digitalisierten Ultraschallprüfprozesskette. tm - Technisches Messen 86, 509–521 (2019). https://doi.org/10.1515/teme-2019-0101

Rehbein, J., Lorenz, S.-J., Holtmannspötter, J., Valeske, B.: 3D-visualization of ultrasonic NDT data using mixed reality. J. Nondestruct. Eval. 8, 41 (2022). https://doi.org/10.1007/s10921-022-00860-7

Advanced Realtime Tracking GmbH & Co. KG.: Products in connection with the software CAPTA. https://ar-tracking.com/en/product-program/products-connection-capta-software. Accessed 09 Jan 2023 (2022)

Advanced Realtime Tracking GmbH & Co. KG.: SMARTTRACK3 & SMARTTRACK3/M - plug & play tracking solution for small volumes. https://ar-tracking.com/en/product-program/smarttrack3. Accessed 09 Jan 2023 (2022)

Fraunhofer IKTS.: PCUS pro SINGLE. https://www.ikts.fraunhofer.de/de/industrieloesungen/ultraschall/elektronik.html. Accessed 09 Jan 2023 (2023)

Stoiber, M., Sundermeyer, M., Triebel, R.: Iterative corresponding geometry: fusing region and depth for highly efficient 3D tracking of textureless objects. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Siciliano, B., Khatib, O.: Springer Handbook of Robotics. Springer, Berlin (2016)

Keferstein, C.P., Marxer, M., Bach, C.: Fertigungsmesstechnik. Alles zu Messunsicherheit, konventioneller Messtechnik und Multisensorik, 9th edn. Springer, Wiesbaden (2018)

Tölgyessy, M., Dekan, M., Chovanec, Ľ., Hubinský, P.: Evaluation of the Azure Kinect and its comparison to Kinect V1 and Kinect V2. Sensors 21(2), 413 (2021). https://doi.org/10.3390/s21020413

Cai, Q., Peng, C., Lu, J.-Y., Prieto, J.C., Rosenbaum, A.J., Stringer, J.S.A., Jiang, X.: Performance enhanced ultrasound probe tracking with a hemispherical marker rigid body. IEEE Trans. Ultrasonics Ferroelect. Freq. Control 68, 2155–2163 (2021). https://doi.org/10.1109/TUFFC.2021.3058145

Schreiber, W., Zürl, K., Zimmermann, P.: Web-basierte Anwendungen Virtueller Techniken. Springer, Berlin (2017). https://doi.org/10.1007/978-3-662-52956-0

Advanced Realtime Tracking GmbH & Co. KG.: SMARTTRACK3 & ARTTRACK5. https://ar-tracking.com/de/produktprogramm/arttrack5. Accessed 15 Dec 2022 (2022)

Advanced Realtime Tracking GmbH & Co. KG.: Product program. https://ar-tracking.com/en/product-program/products-connection-software-dtrack. Accessed 09 Jan 2023 (2022)

Open Source Computer Vision (OpenCV): Camera calibration documentation. https://docs.opencv.org/4.x/dc/dbb/tutorial_py_calibration.html. Accessed 09 Jan 2023

Albert, J.A., Owolabi, V., Gebel, A., Brahms, C.M., Granacher, U., Arnrich, B.: Evaluation of the pose tracking performance of the Azure Kinect and Kinect v2 for gait analysis in comparison with a gold standard: a pilot study. Sensors 20(18), 5104 (2020). https://doi.org/10.3390/s20185104

Brown, D.C.: Close-range camera calibration. Photogramm. Eng. 37(8), 855–866 (1971)

Wester-Ebbinghaus, W.: Einzelstandpunkt-Selbstkalibrierung-Ein Verfahren zur Feldkalibrierung von Aufnahmekammern. Deutsche Geodätische Kommission. München. ISBN 3-769693-39-6 (1983)

Luhmann, T., Robson, S., Kyle, S., Harley, I.: Close Range Photogrammetry. Whittles Publishing, Dunbeath (2006)

Niemeier, W.: Ausgleichungsrechnung: Statistische Auswertemethoden, vol. 2. De Gruyter, Berlin (2008)

Triggs, B., McLauchlan, P.F., Hartley, R.I., Fitzgibbon, A.W.: Bundle adjustment: a modern synthesis. In: Triggs, B., Zisserman, A., Szeliski, R. (eds.) Vision Algorithms: Theory and Practice. Lecture Notes in Computer Science, vol. 1883, pp. 298–372. Springer, Berlin (2000)

Bundesanstalt für Arbeitsschutz und Arbeitsmedizin: Technische Regel für Arbeitsstätten ASR A3.4 Beleuchtung (2011)

Beausoleil, A., Walter, J., Morrow, F., Charlebois, A., Giguere, D.: Dynamic tracking of phased-array probe and 3D C-scan reconstruction for in-service inspection of CFRP aircrafts components. In: 11th International Symposium on NDT in Aerospace (2020)

Deppe, R., Nemitz, O., Herder, J.: Augmented reality for supporting manual non-destructive ultrasonic testing of metal pipes and plates. Virtuelle und Erweiterte Realität - 15. Workshop der GI-Fachgruppe VR/AR: 45–52. https://doi.org/10.2370/9783844062151 (2018)

Brand, M., Wulff, L.A., Hamdani, Y., Schüppstuhl, T.: Accuracy of marker tracking on an optical see-through head mounted display. In: Schüppstuhl, T., Tracht, K., Henrich, D. (eds.) Annals of Scientific Society for Assembly, Handling and Industrial Robotics, pp. 21–31. Springer, Berlin (2020)

Soldan, S., Ouellet, D., Hedayati, P., Bendada, H., Laurendeau, D., Kroll, A.: Mapping non-destructive testing data on the 3D geometry of objects with complex shapes. In: 12th International Conference for Quantitative InfraRed Thermography (QIRT 2014) (2014)

Chen, L., Li, B., Zhang, L., Shang, Z.: 3D positioning of defects for gas turbine blades based on digital radiographic projective imaging. NDT & E International 133, 102751 (2023)

Funding

Open Access funding enabled and organized by Projekt DEAL. The authors have no relevant financial interests to disclose.

Author information

Contributions

AW carried out all studies and wrote the manuscript. JW developed the camera registration software and contributed to section 4.2. RR, GW and JF participated in technical discussions and supervision. JW, RR, GW and JF participated in the revisions of the manuscript. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

Not applicable.

Consent for Publication

All authors consent to publish this work.

Ethical Approval

All ethical COPE guidelines have been considered.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wilken, A., Willneff, J., Rodeck, R. et al. Localisation of Ultrasonic NDT Data Using Hybrid Tracking of Component and Probe. J Nondestruct Eval 42, 65 (2023). https://doi.org/10.1007/s10921-023-00976-4

DOI: https://doi.org/10.1007/s10921-023-00976-4