Abstract

Environmental and commercial drivers are leading to a circular economy, where systems and components are routinely recycled or remanufactured. Unlike traditional manufacturing, where components are typically produced to tight tolerances, components in the remanufacturing process may have seen decades of wear, resulting in a wider variation of geometries. This makes it difficult to translate existing automation techniques to perform Non-Destructive Testing (NDT) of such components autonomously. Automated inspection with off-line tool-paths developed from Computer Aided Design (CAD) models is challenging because those paths typically lack the required accuracy: besides the fact that CAD models are less often available for old parts, these parts often differ from their respective virtual models. This paper considers flexible automation by combining part geometry reconstruction with ultrasonic tool-path generation to perform ultrasonic NDT. It presents an approach to custom vision-guided ultrasonic inspection of components, achieved by integrating an automated vision system and a purpose-developed graphical user interface with a robotic work-cell. The vision system, based on structure from motion, creates 3D models of the parts. This work also compares four different tool-paths for optimum image capture. The resulting optimum 3D models are used in a virtual twin environment of the robotic inspection cell, enabling the user to select any point of interest for ultrasonic inspection. This removes the need for off-line robot path-planning and part orientation to assess specific locations on a part, which is typically a very time-consuming phase.

Introduction

Robotic inspection of components in the manufacturing and remanufacturing industry has been a growing research area [2, 13]. Ultrasonic-based inspection techniques have been used in robotic systems to speed up the NDT inspection of critical components [26, 27, 34]. Although such robotic systems have increased inspection rates, they rely on robots that manipulate ultrasonic sensors along predefined tool paths, which are typically defined on the digital computer aided design (CAD) models of the parts to be inspected. Unfortunately, parts and components undergoing remanufacturing often differ from their respective virtual models. Therefore, robotic inspection systems still lack strategies to achieve flexible automation at a reasonable cost, and there is an increasing need to automate robotic tool path generation to enable effective NDT inspection of components with complex geometries. A robotic tool path can be generated by time-intensive manual measurement of the object, by expensive 3D scanning of the object [40], or by photogrammetry, which is cheap and readily accessible. Photogrammetry methods have found widespread application and contribute to flexible autonomy in fields such as engineering, geology, health, safety and manufacturing [11, 21, 22, 36, 39, 41]. They provide a cost-effective way to acquire part geometries in the form of 3D models. 3D models produced through techniques such as structure from motion (SfM) can be used to construct suitable sub-millimetre accurate tool paths for robotic ultrasonic testing (UT) [44]. In this paper, we present a photogrammetry framework that starts with automated image acquisition, capturing images of the component placed in the robotic work-cell with a single red, green, and blue (RGB) colour machine vision camera mounted at the end effector of the KUKA AGILUS robot arm [23].
The collected images are used by SfM [24], a 3D reconstruction technique, to obtain sparse scene geometry and camera poses. Then, patch-based multi-view stereo is used to obtain the dense scene geometry, producing a 3D model of the object. This 3D model is used to construct the tool path for a robot to perform the NDT inspection of specific locations through an ultrasonic probe.

SfM-based 3D reconstruction generates a computer model of the 3D appearance of an object from a set of 2D images and provides an alternative to costly and cumbersome 3D scanners such as the Velodyne Puck [42]. Because projection onto an image discards depth, estimating the true 3D geometry is an ill-posed problem (e.g. different 3D surfaces may produce the same set of images). The 3D information can be recovered by solving for pixel-wise and/or point-based correspondences across multiple images. However, images of specimens acquired in industrial environments can be challenging to use, because of the self-similar nature of industrial work-pieces, their reflective surfaces, and the limited perspective of each image (when scanning at a fixed stand-off). This makes it difficult to produce an accurate 3D model of a component and the associated robotic tool path. An accuracy of 1.0-3.0 mm is typically required for automated ultrasonic testing. Furthermore, automating the NDT inspection of components in the manufacturing and remanufacturing industries is difficult because of inconsistencies in the size and geometry of the components to inspect. We used the SfM technique to develop an autonomous 3D vision system. As SfM-based 3D reconstruction is sensitive to environmental factors such as motion blur, non-uniform lighting, and changes in contrast, the image acquisition approach influences the resulting 3D model. We therefore developed four approaches to acquire images of the sample objects. Moreover, UT-based NDT inspection requires accurate positioning of the ultrasonic probe on the surface of the object. Our work therefore aimed to produce complete and accurate 3D models that could be used to generate the required tool path for UT. This paper contributes to the existing state of the art in two ways:

-

The work compares different image acquisition techniques and presents the results of each method on SfM-produced 3D models.

-

The work introduces the use of accurate 3D models, resulting from the 3D vision system, to produce the robot tool paths for ultrasonic NDT inspection.

Section “Related work” reviews the relevant literature on robot vision supporting various visual inspection tasks. Section “Experimental setup” briefly describes the experimental setup and is followed by an overview of the proposed system in “Overview of the vision-guided robotic UT system”. Section “Three-dimensional vision system” presents the image acquisition system, the 3D modelling, and the integration of the resulting 3D model into the in-house interactive graphical user interface (GUI) for vision-guided NDT. The experimental design is presented in “Experimental design”. Section “Results and discussion” presents and discusses the results obtained with the experimental setup. Section “Conclusion” provides the concluding remarks.

Related work

Robots can be equipped with sensors such as cameras [35], lasers [17], radars [16], and ultrasonic probes [26]. For example, in a factory setting, robots equipped with ultrasonic probes have performed NDT inspection successfully [26, 27, 37]. Although artificial intelligence (AI) might introduce many layers of autonomy in several robotic systems, a degree of human involvement is expected to remain in the automated NDT inspection of safety-critical components (e.g. in specifying the areas to inspect and in assessing the inspection results). With the advancements in robot vision, new robot control systems have the potential to enable flexible automation. In particular, 3D reconstruction methods can provide component digital twins to drive a robot to user-defined areas of interest [29, 30].

A plethora of 3D reconstruction techniques have been developed, mainly categorised as RGBD (using RGB and Depth images), monocular, and multi-camera-based methods. RGBD sensors operate on the basis of either structured light or Time-of-Flight (ToF) technologies. Structured light cameras project an infrared light pattern onto the scene and estimate the disparity given by the perspective distortion of the pattern due to the varying depth of the object. ToF cameras measure the time that light emitted by an illumination unit takes to travel to an object and back to a detector. RGBD cameras such as Kinect achieve real-time depth sensing (30 FPS), but the quality of the depth map for each image frame is limited [20]. KinectFusion [18, 31] is recommended for the 3D reconstruction of static objects or indoor scenes in mobile applications, with an absolute tracking error of 2.1-7.0 cm.
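Both depth principles reduce to short formulas. The sketch below is a minimal illustration under ideal assumptions: a ToF camera halves the round-trip path of light (Z = c·Δt/2), and an idealised, rectified projector/camera pair triangulates depth from disparity (Z = f·B/δ, with f the focal length in pixels, B the baseline and δ the disparity in pixels). The function names and numbers are hypothetical and do not describe any specific Kinect model.

```python
# Minimal sketch of the two RGBD depth principles described above.
# Assumptions: noise-free measurements, vacuum speed of light, and a
# rectified projector/camera pair for the structured-light case.

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_depth_m(round_trip_time_s: float) -> float:
    """Time-of-Flight: light travels to the object and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def structured_light_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Idealised triangulation for a rectified pair: Z = f * B / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # A 10 ns round trip corresponds to roughly 1.5 m (hypothetical values).
    print(f"ToF depth: {tof_depth_m(10e-9):.3f} m")
    print(f"Structured-light depth: {structured_light_depth_m(580.0, 0.075, 29.0):.3f} m")
```

At these time scales, a timing error of 1 ns already corresponds to about 15 cm of depth.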

In recent years, simultaneous localisation and mapping (SLAM) using only cameras [7, 8, 10, 32] has been actively discussed, as this sensor configuration is simple, operates in real time, and achieves drift-free performance inaccessible to Structure from Motion approaches.

One limitation of RGBD sensors is that they fail to produce depth information for glossy and dark solid-colour objects, leading to holes and noisy point clouds. Additionally, in visual SLAM, loss of tracking requires the camera pose to be recomputed with respect to the map, a process called re-localisation [38]. RGBD sensors are better suited to applications that require real-time operation at the cost of accuracy, such as 3D mapping and localisation, path planning, autonomous navigation, object recognition, and people tracking.

Multi-camera systems promise 3D reconstruction with the highest accuracy, as reported in [1, 3, 33, 45], although the cost of setup and maintenance is high. Figure 1 illustrates a typical work-flow of a multi-camera 3D vision system.

Structure from motion (SfM) is a classical method based on a single camera to perform 3D reconstruction; however, achieving millimetre to sub-millimetre accuracy is challenging. An SfM-based 3D reconstruction pipeline is illustrated in Fig. 2.
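At the heart of SfM is triangulation: once the camera poses are estimated, each matched feature defines a ray from each camera centre, and the 3D point is recovered where the rays (nearly) meet. The following is a minimal sketch using the midpoint method, one simple triangulation technique chosen here for clarity; it is not necessarily what VisualSFM uses internally, and the camera centres and ray directions are assumed to be already known in a common frame.

```python
# Minimal sketch: triangulate one 3D point from two calibrated views with the
# midpoint method (midpoint of the shortest segment between two back-projected
# rays). Real SfM pipelines refine such estimates with bundle adjustment.
from typing import Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin: Vec, direction: Vec, s: float) -> Vec:
    return (origin[0] + s * direction[0],
            origin[1] + s * direction[1],
            origin[2] + s * direction[2])


def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Midpoint of the closest points on rays c1 + s*d1 and c2 + t*d2."""
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel: depth is unobservable")
    s = (b * e - c * d) / denom   # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom   # parameter of the closest point on ray 2
    p1, p2 = _along(c1, d1, s), _along(c2, d2, t)
    return ((p1[0] + p2[0]) / 2.0, (p1[1] + p2[1]) / 2.0, (p1[2] + p2[2]) / 2.0)
```

When the two rays are parallel (for example, with no baseline between the views), depth cannot be recovered, which is one reason why the camera must move between shots.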

Experimental setup

This section provides a description of the hardware and software technologies used to realise the work reported in this paper.

Robotic arm

The KUKA KR AGILUS is a compact six-axis robot designed for particularly high working speeds. It has a maximum reach of 901 mm, a maximum payload of 6.7 kg, and a pose repeatability (ISO 9283) of 0.02 mm [23].

Machine vision camera system

An industrial FLIR machine vision camera, BFLY-PGE-50S5C-CS, supporting the USB 3.0 interface, was used; it combines a Sony IMX264 global-shutter CMOS sensor with an M0824-MPW2 lens. The compact 2/3” sensor has a resolution of 5 MP (2456 × 2054 px), is well suited to low-light conditions, and produces extremely low-noise images at full resolution. The chosen lens matches the sensor size and resolution; it has a fixed focal length of 8 mm and manual iris control, and connects to the camera through the C-mount interface. The camera system can be mounted on the end effector of the robot arm, as shown in Fig. 3.

Ultrasonic wheel probe

A dry-coupled wheel transducer [9, 25], housing two piezoelectric ultrasonic elements with a nominal centre frequency of 5 MHz, is attached to the robot as shown in Fig. 4. It operates in pulse-echo mode, both transmitting ultrasonic waves and receiving the echoes reflected back to the device. The ultrasound is reflected by interfaces such as the back wall of the object or an imperfection within it. The probe shown in Fig. 4 is a dry-coupled transducer, which was selected to avoid using liquid couplant during the robotic ultrasonic inspection.
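In pulse-echo mode the sound crosses the material twice, so the distance to a reflector follows from the echo delay as d = v·Δt/2. The sketch below assumes the front-surface and back-wall echo arrival times have already been picked from the recorded signal, and uses about 6320 m/s, a common textbook longitudinal velocity for aluminium; the real value depends on the alloy and should be calibrated. The function name is hypothetical.

```python
# Minimal sketch of pulse-echo thickness gauging.
# Assumption: ~6320 m/s longitudinal wave speed (typical textbook value for
# aluminium); calibrate on a reference block for real measurements.

ALUMINIUM_LONGITUDINAL_SPEED_M_S = 6320.0


def thickness_from_echoes_mm(t_front_s: float, t_back_s: float,
                             speed_m_s: float = ALUMINIUM_LONGITUDINAL_SPEED_M_S) -> float:
    """Thickness = v * (t_back - t_front) / 2, since the pulse goes down and back."""
    dt = t_back_s - t_front_s
    if dt <= 0:
        raise ValueError("back-wall echo must arrive after the front-surface echo")
    return speed_m_s * dt / 2.0 * 1000.0  # metres -> millimetres


if __name__ == "__main__":
    # Echoes 2 microseconds apart in aluminium (hypothetical timings).
    print(f"{thickness_from_echoes_mm(10.0e-6, 12.0e-6):.2f} mm")
```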

Software technologies

For robot control and path planning, a C++ toolbox, the Interfacing Toolbox for Robotic Arms (ITRA), developed by some of the authors of this work, was used [28, 29, 30]. ITRA enables fast, adaptive robotic systems, with latencies as low as 30 ms, and facilitates the integration of industrial robotic arms with server computers, sensors, and actuators. We accessed ITRA through a MATLAB-based interface.

The Python interface of the FLIR Spinnaker SDK [12], which supports the FLIR camera systems, was used to automate the image acquisition process.

VisualSFM [43] is a 3D reconstruction application based on structure from motion (SfM). It integrates the execution of Yasutaka Furukawa’s PMVS/CMVS [14] tool chain, which was used to produce the dense reconstruction. The densely reconstructed model was post-processed in MeshLab [4].

To mitigate the environmental brightness variations, we used four additional 135-W lights with a colour temperature of 5500K and set them up at suitable locations around the robotic work-cell.

A workstation consisting of Intel(R) Core(TM) i7-4710HQ CPU, 16 GB of memory, and the 64-bit Windows 10 operating system was used to perform image acquisition, 3D modelling, and ultrasonic NDT testing guided by the 3D vision system.

To compare the 3D models produced by the 3D vision system quantitatively with the CAD models, GOM Inspect software [15] was used to perform mesh surface analysis against the CAD model. The mesh obtained from 3D modelling was aligned with the CAD model, and the surface analysis then computed the standard deviation of the distance between them as an error metric.
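The error metric can be illustrated with a minimal sketch: after alignment, compute the signed distance of each reconstructed point to the reference surface and take the standard deviation. Here a plane stands in for the CAD surface (a hypothetical simplification; GOM Inspect measures against the full CAD mesh), and the population standard deviation is assumed.

```python
# Minimal sketch of the error metric: standard deviation of signed
# point-to-reference distances. A plane is a hypothetical stand-in for the
# CAD surface; population standard deviation is an assumption.
import math
from statistics import pstdev
from typing import Iterable, List, Tuple

Vec = Tuple[float, float, float]


def signed_plane_distances(points: Iterable[Vec], origin: Vec, normal: Vec) -> List[float]:
    """Signed distance of each point to the plane through `origin` with normal `normal`."""
    n_len = math.sqrt(sum(c * c for c in normal))
    n = tuple(c / n_len for c in normal)
    return [sum((p[i] - origin[i]) * n[i] for i in range(3)) for p in points]


def deviation_std(points: Iterable[Vec], origin: Vec, normal: Vec) -> float:
    """Spread of the deviations, in the same units as the input coordinates."""
    return pstdev(signed_plane_distances(points, origin, normal))
```

Because the mean is removed, a constant offset left over from alignment does not inflate this metric; it measures only the spread of the deviations.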

Overview of the vision-guided robotic UT system

Obtaining the image data for a component newly introduced into the robotic work-cell is an important stage. To acquire image data free from motion blur, captured under uniform lighting, and with 75%-85% overlap between image pairs, we developed an automated image acquisition system, as part of the 3D vision system, that gathers images of a given sample object in the robotic work-cell. This image acquisition system can execute four different approaches to capture the appearance of the object in the work-cell, and each approach was investigated for its impact on the 3D model produced by the 3D vision system.

Images collected by the vision system were used to produce a sparse point cloud of the sample object, along with the associated camera poses, using structure from motion. Both the sparse point cloud and the camera poses were used by patch-based multi-view stereo (PMVS2) [14] to obtain a dense point cloud. The dense point cloud was further processed into a mesh, which was finally integrated into the KUKA KR5 robot control graphical user interface (GUI). The schematic workflow of the vision-guided robotic UT inspection system is illustrated by the flow chart in Fig. 5. The GUI (see Fig. 6) represents the reconstructed mesh in the virtual simulation environment of the robotic work-cell. This allows the robot action to be pre-planned by selecting a surface point on the object mesh, making the system easier to use for the operator. Robot actions were then invoked by finding the optimum tool path for the ultrasonic wheel probe to approach the selected point on the object. Finally, a trajectory normal to the object surface brought the probe into contact with the component, and UT inspection was carried out at the requested point.

Three-dimensional vision system

This section describes an automated 3D vision system that performs image acquisition for an object in a robotic work-cell and produces a 3D model from the collected images using SfM. The following sub-sections provide further details on the 3D vision system.

Automated image acquisition

The image acquisition system was fully automated and could capture images of a component placed in the robotic work-cell using four different robot tool paths. These tool paths were designed around the geometric shape of the objects and to ensure sufficient overlap (75%-85%) between image pairs, which is required to obtain complete and accurate 3D models using SfM. Acquiring images with a robot-mounted camera prevented motion blur and reduced contrast variance, thereby producing high-quality image datasets. The four image acquisition tool paths tested to reconstruct the geometry of the sample object are briefly described below.
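The 75%-85% overlap requirement translates directly into the spacing between capture positions. A minimal sketch, assuming a pinhole camera looking straight at a flat surface: the imaged width is footprint = d·s/f (stand-off d, sensor width s, focal length f), and consecutive shots must be at most footprint·(1 − overlap) apart along that image axis. The 8 mm focal length is that of the lens used in this work; the 8.4 mm sensor width and the 300 mm stand-off are assumptions to be checked against the datasheet and the actual setup.

```python
# Minimal sketch: capture spacing for a target overlap between consecutive
# images, using a pinhole footprint model on a fronto-parallel surface.
# Assumed sensor width ~8.4 mm (2/3" class); verify against the datasheet.


def footprint_mm(standoff_mm: float, sensor_mm: float, focal_mm: float) -> float:
    """Width of the imaged area on a flat surface facing the camera: d * s / f."""
    return standoff_mm * sensor_mm / focal_mm


def capture_spacing_mm(standoff_mm: float, overlap: float,
                       sensor_mm: float = 8.4, focal_mm: float = 8.0) -> float:
    """Distance between capture positions giving the requested overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_mm(standoff_mm, sensor_mm, focal_mm) * (1.0 - overlap)


if __name__ == "__main__":
    # Hypothetical 300 mm stand-off with the 8 mm lens.
    for ov in (0.75, 0.80, 0.85):
        print(f"overlap {ov:.0%}: move {capture_spacing_mm(300.0, ov):.1f} mm between shots")
```

Higher overlap means more images for the same area, which is the trade-off behind the image counts reported for each tool path.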

Raster

A raster path is a scanning pattern of parallel lines (passes) along which pictures are collected at a constant pitch (Fig. 7a). Raster tool-paths are best suited to larger components.
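A raster path of this kind can be generated in a few lines. The sketch below is illustrative only: it assumes a rectangular area scanned at a constant stand-off height, with passes along x, and reverses every other pass (a serpentine ordering) to avoid long return moves; the dimensions and pitches are hypothetical.

```python
# Minimal sketch of a raster capture path over a rectangle at a fixed
# stand-off height. Serpentine ordering, dimensions and pitches are
# illustrative assumptions, not the paths used in this work.
from typing import List, Tuple

Point = Tuple[float, float, float]


def raster_waypoints(width_mm: float, length_mm: float, pass_pitch_mm: float,
                     shot_pitch_mm: float, standoff_mm: float) -> List[Point]:
    """Passes along x, `pass_pitch_mm` apart in y, one shot every `shot_pitch_mm`."""
    n_shots = int(width_mm // shot_pitch_mm) + 1
    n_passes = int(length_mm // pass_pitch_mm) + 1
    xs = [i * shot_pitch_mm for i in range(n_shots)]
    path: List[Point] = []
    for j in range(n_passes):
        y = j * pass_pitch_mm
        # Reverse every other pass to avoid a long return move between passes.
        row = xs if j % 2 == 0 else list(reversed(xs))
        path.extend((x, y, standoff_mm) for x in row)
    return path
```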

Polar raster

A polar raster is the polar-coordinate counterpart of the Cartesian raster. The passes are hemisphere meridians separated by a constant angle (the scanning pitch), and pictures are taken at a constant angular pitch along each pass (Fig. 7b). A drawback of the polar raster is that the density of capture locations increases towards the pole, where redundant pictures are collected.
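The redundancy at the pole is easy to see by generating a polar raster. In this minimal sketch, assuming a hemisphere of radius r centred on the part, meridians are spaced by a constant azimuth step and shots by a constant polar-angle step measured from the pole, so every meridian starts at the same pole position. The radius and angular pitches are hypothetical.

```python
# Minimal sketch of a polar raster on a hemisphere of radius r: meridians
# separated by a constant azimuth step, shots at a constant polar-angle step
# along each meridian. Radius and angular pitches are illustrative only.
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def polar_raster(radius: float, meridian_step_deg: float, shot_step_deg: float) -> List[Point]:
    points: List[Point] = []
    n_meridians = int(round(360.0 / meridian_step_deg))
    n_shots = int(round(90.0 / shot_step_deg)) + 1  # pole (0 deg) down to the equator (90 deg)
    for m in range(n_meridians):
        phi = math.radians(m * meridian_step_deg)       # azimuth of this meridian
        for k in range(n_shots):
            theta = math.radians(k * shot_step_deg)     # polar angle from the pole
            points.append((radius * math.sin(theta) * math.cos(phi),
                           radius * math.sin(theta) * math.sin(phi),
                           radius * math.cos(theta)))
    return points


def shots_near_pole(points: List[Point], radius: float, tol: float = 1e-6) -> int:
    """Count capture positions that coincide with the pole (x = y = 0, z = r)."""
    return sum(1 for x, y, z in points if math.hypot(x, y) < tol and abs(z - radius) < tol)
```

For example, `polar_raster(300.0, 30.0, 15.0)` yields 12 meridians × 7 shots = 84 positions, 12 of which coincide at the pole.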

Helical path constructed on a hemisphere

To overcome the limitations of the raster and polar raster scanning patterns, helicoid (spiral) tool-paths were tested. A circular helicoid is a ruled surface having a cylindrical helix as its boundary; in fact, the cylindrical helix is the curve of intersection between the cylinder and the helicoid. The circular helicoid of radius r bounded by the planes z = a and z = b is given in parametric form by:

\[ x = u\cos t, \qquad y = u\sin t, \qquad z = t, \qquad u \in [0, r],\ t \in [a, b] \tag{1} \]

Let f be a positive continuous function. A surface of revolution generated by rotating the curve \(y = f(z)\), with \(z \in [a, b]\), around the z-axis has the equation:

\[ x^{2} + y^{2} = f(z)^{2}, \qquad z \in [a, b] \tag{2} \]

and a parametric representation can be given by:

\[ x = f(v)\cos\theta, \qquad y = f(v)\sin\theta, \qquad z = v, \qquad v \in [a, b],\ \theta \in [0, 2\pi) \tag{3} \]

As the intersection of a circular helicoid and a cylinder is a cylindrical helix, the intersection of a helicoid and another surface of revolution gives rise to a three-dimensional spiral. Equating x, y and z, respectively, from Eqs. 1 and 3 one gets:

\[ u\cos t = f(v)\cos\theta, \qquad u\sin t = f(v)\sin\theta, \qquad t = v \tag{4} \]

Imposing the condition \(f(t) \in [0, r]\), it follows that \(u = f(t)\) (and \(\theta = t\)); hence the equations of the three-dimensional spiral are given by:

\[ x = f(t)\cos t, \qquad y = f(t)\sin t, \qquad z = t, \qquad t \in [a, b] \tag{5} \]

The hemisphere of radius r is generated by the function \(f : [0, r] \to [0, r],\ f(z) = \sqrt{r^{2} - z^{2}}\). Then the hemispherical helical curve is given by:

\[ x = \sqrt{r^{2} - t^{2}}\cos t, \qquad y = \sqrt{r^{2} - t^{2}}\sin t, \qquad z = t, \qquad t \in [0, r] \tag{6} \]

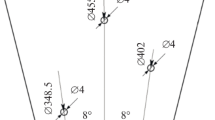

Figure 8 shows the top and lateral view of a hemispherical helicoid path, used for robotic photogrammetric scan.

Helical path constructed on paraboloid

Consider the generating parabola of a paraboloid of revolution, given by \(f(z) = a\sqrt{b - z}\) with \(z \in [a, b]\); the equations of the paraboloid spiral are:

\[ x = a\sqrt{b - t}\cos t, \qquad y = a\sqrt{b - t}\sin t, \qquad z = t, \qquad t \in [a, b] \tag{7} \]

Figure 9 shows the top and lateral view of a paraboloid helicoid path, used for robotic photogrammetric scan.
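Both helical paths are spirals lying on a surface of revolution, x² + y² = f(z)², so one routine can sample them. The sketch below is a minimal illustration under stated assumptions: the azimuth advances linearly with height and completes a chosen number of turns between the start and end heights, and the turn count, sample count and dimensions (in mm) are hypothetical tuning parameters rather than values from this work. Camera orientation (e.g. pointing at the part centre) is left out.

```python
# Minimal sketch: sample capture positions along a spiral lying on a surface
# of revolution x^2 + y^2 = f(z)^2. Turn count, sample count and dimensions
# are hypothetical; camera orientation is not computed here.
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


def spiral_on_revolution(f: Callable[[float], float], z_start: float, z_end: float,
                         turns: float, n_samples: int) -> List[Point]:
    """Spiral on x^2 + y^2 = f(z)^2 whose azimuth grows linearly with height."""
    if n_samples < 2:
        raise ValueError("need at least two samples")
    points: List[Point] = []
    for k in range(n_samples):
        s = k / (n_samples - 1)                  # normalised progress along the path, 0..1
        z = z_start + (z_end - z_start) * s
        theta = 2.0 * math.pi * turns * s        # azimuth: `turns` full revolutions overall
        rho = f(z)                               # radius of the surface at height z
        points.append((rho * math.cos(theta), rho * math.sin(theta), z))
    return points


def hemisphere(r: float) -> Callable[[float], float]:
    """Generating curve of a hemisphere of radius r: f(z) = sqrt(r^2 - z^2)."""
    return lambda z: math.sqrt(max(r * r - z * z, 0.0))


def paraboloid(a: float, b: float) -> Callable[[float], float]:
    """Generating parabola of a paraboloid of revolution: f(z) = a * sqrt(b - z)."""
    return lambda z: a * math.sqrt(max(b - z, 0.0))


if __name__ == "__main__":
    # Hypothetical dimensions in mm, 6 turns, 120 capture positions per path.
    hemi_path = spiral_on_revolution(hemisphere(300.0), 0.0, 300.0, turns=6, n_samples=120)
    para_path = spiral_on_revolution(paraboloid(15.0, 300.0), 15.0, 300.0, turns=6, n_samples=120)
    print(len(hemi_path), len(para_path))
```

Sampling uniformly in z gives unequal spacing along the path; in practice, the sample positions would be chosen to meet the overlap requirement.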

Three-dimensional modelling

SfM was used to reconstruct the scene incrementally; it does not require the 2D images to be in any particular order. It takes a set of images as input, computes image features (SIFT in this study) and image matches, and outputs the camera poses and the sparse scene geometry. We used patch-based multi-view stereo [14] to densify this sparse representation from SfM, producing a dense scene geometry in the form of a point cloud with computed surface normals. MeshLab commands were used to post-process the point cloud into a mesh, i.e. a collection of vertices, edges, and faces that describe the shape of a 3D object. The resulting mesh and its surface normals underpin the UT tool path for any chosen point on the object under inspection: they allow the robot to guide the UT wheel probe to the selected point and bring it into contact with the surface. Typical UT measurements can then be taken for further analysis.
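Given a selected vertex and its surface normal, the final approach can be expressed as a straight line along the normal. The minimal sketch below assumes the normal points outwards from the part and uses a hypothetical 50 mm stand-off and step count; probe orientation, the wheel geometry and collision checking are omitted, and it is not the authors' ITRA/MATLAB implementation.

```python
# Minimal sketch: approach waypoints for the probe along the surface normal of
# a selected mesh vertex, ending in contact at the vertex. Assumes an outward
# normal; stand-off and step count are hypothetical values.
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def normalise(v: Vec) -> Vec:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0.0:
        raise ValueError("zero-length normal")
    return (v[0] / n, v[1] / n, v[2] / n)


def approach_along_normal(point: Vec, normal: Vec, standoff_mm: float = 50.0,
                          steps: int = 5) -> List[Vec]:
    """Waypoints from point + standoff * n down to point itself (n = unit outward normal)."""
    n = normalise(normal)
    return [tuple(point[i] + n[i] * standoff_mm * (1.0 - k / steps) for i in range(3))
            for k in range(steps + 1)]
```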

Experimental design

This section presents the experimental setup used to test and validate the proposed vision-guided robotic component inspection system.

Samples and ground truth

Two sample objects were chosen: a circular steel flow iron pipe segment (shown in Fig. 10a) and an aluminium stair case (shown in Fig. 10b). Both contain self-similar geometric shapes, low texture, and reflective surfaces, representative of the components that typically undergo inspection in the manufacturing and remanufacturing industry.

A GOM Scanner [5] was used to acquire the ground truth/CAD model of each sample in the form of a mesh. Notably, because of the self-similar geometry and glossy appearance of these samples, the GOM Scanner initially also struggled to produce a complete and accurate model. Reference markers were therefore applied to the sample objects to enrich their surfaces with texture, and a matt developer spray was used to dull the finish and mitigate glossy and reflective effects.

Acquiring image datasets

This sub-section describes the setup of the image acquisition system and the tool-path approaches used to capture the view sphere of the objects. Images of an object were captured such that the overlap between two consecutive images was 70%-90%. These approaches were motivated by the greater flexibility of robot control compared with a turntable, and were designed within the reach limits of the KUKA KR5 robot arm; nevertheless, they are flexible and hardware independent. A discrete robot movement was used for all the tool paths: the robot stopped at every target location and triggered the image capture on the data collection computer by setting a digital Boolean output high. The system allowed the user to specify the required number of images. The following sections describe these approaches as used in the experiments.
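The discrete move-stop-trigger loop can be sketched as follows. `Robot` and `Camera` are hypothetical interfaces introduced for illustration only: in the actual system the robot is driven through ITRA from MATLAB and the camera is handled by a Python script using the Spinnaker SDK, whose APIs are not reproduced here. The trigger channel number is also an assumption.

```python
# Minimal sketch of the discrete "move, stop, trigger" acquisition loop.
# Robot and Camera are hypothetical interfaces, not ITRA or Spinnaker APIs.
from typing import List, Protocol, Sequence, Tuple

Pose = Tuple[float, float, float]


class Robot(Protocol):
    def move_to(self, pose: Pose) -> None: ...                      # returns once the robot has stopped
    def set_digital_output(self, channel: int, value: bool) -> None: ...


class Camera(Protocol):
    def wait_and_grab(self) -> str: ...                              # returns an image identifier


def acquire(robot: Robot, camera: Camera, poses: Sequence[Pose],
            trigger_channel: int = 1) -> List[str]:
    images: List[str] = []
    for pose in poses:
        robot.move_to(pose)                                # stationary at capture: no motion blur
        robot.set_digital_output(trigger_channel, True)    # signal the capture computer
        images.append(camera.wait_and_grab())
        robot.set_digital_output(trigger_channel, False)   # reset the line for the next target
    return images
```

The number of poses passed in sets the number of images, mirroring the user-specified image count described above.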

Aluminium stair case sample

For rectangular objects such as the aluminium stair case sample, the raster scan was used; in total, 600 images were acquired with this tool path (sample images are shown in Fig. 11). The measured illuminance at the aluminium stair case object was 410 lux.

Steel flow iron pipe segment sample

For components with complex appearances such as steel flow iron pipe segments (sample images are shown in Fig. 12), more sophisticated tool paths were required to acquire images in order to capture the fine details observed in the different views of the surface of the object. In all, 2298 frames were acquired using the polar raster tool path, 2294 using the hemispherical spiral, and 857 using the paraboloid spiral. The number of images acquired per tool path was influenced by the reach limit of the robot and the consistency constraint on the image pairs for a given object.

Obtaining 3D model

We used VisualSFM [43] to estimate the camera positions and the sparse scene geometry, and PMVS2 [14] to generate the dense scene geometry. VisualSFM supports GPU acceleration, which reduces the computation time. The dense point cloud representing the scene geometry was processed with MeshLab commands [4] to segment, clean, and scale it. The Poisson surface reconstruction algorithm [19] was then used to reconstruct the surface from the point cloud as a polygon mesh, i.e. a collection of vertices, edges, and faces that make up a 3D object.

Integrating into robot kinematic chain

A MATLAB script was developed to perform the robot control actions for the image acquisition tasks and to generate the ultrasonic tool path for NDT inspection. The mesh obtained in the previous section was transformed into the base frame of the KUKA KR5 robot arm, where it could be visualised in the robot control graphical user interface (GUI) developed in-house. This GUI (see Fig. 6) allows user-friendly interaction in a simulated environment to select a surface point on the component, which can then be inspected with UT-based NDT.
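Bringing the mesh into the robot base frame amounts to applying a 4×4 homogeneous transform to every vertex. The minimal sketch below assumes the transform is already known; how it is estimated (for example, from the camera poses recorded at capture time and the scale fixed during post-processing) is not shown, and the yaw-only rotation and the numbers are hypothetical.

```python
# Minimal sketch: express mesh vertices in the robot base frame with a 4x4
# homogeneous similarity transform. The transform values are hypothetical.
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Mat4 = Sequence[Sequence[float]]


def make_transform(yaw_rad: float, translation: Vec, scale: float = 1.0) -> List[List[float]]:
    """Uniform scale and rotation about z, followed by a translation."""
    c, s = math.cos(yaw_rad) * scale, math.sin(yaw_rad) * scale
    tx, ty, tz = translation
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, scale, tz],
            [0.0, 0.0, 0.0, 1.0]]


def transform_vertices(T: Mat4, vertices: Sequence[Vec]) -> List[Vec]:
    """Apply T to each vertex (homogeneous coordinate w = 1)."""
    out: List[Vec] = []
    for x, y, z in vertices:
        out.append(tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3)))
    return out
```

Surface normals should be transformed with the rotation part only and then renormalised, since translation does not apply to directions.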

Results and discussion

This section presents the experimental results used to evaluate and validate the proposed vision-guided robotic component inspection system for the manufacturing and remanufacturing industry. First, we evaluated the image acquisition approaches of the 3D vision system by comparing the obtained mesh surfaces with their respective CAD models. Second, we validated the accuracy and repeatability of the vision-guided robotic component inspection system. For simplicity in demonstrating the proof of concept, we report the experimental results obtained for the aluminium stair case sample, with the robot executing the ultrasonic tool path to perform the NDT inspection.

We analysed the four tool-path approaches of the automated image acquisition system by comparing the quality of the image datasets they produced. Because the quality of the dense point cloud of an object is unknown until the final result, image quality metrics were used to analyse each image dataset at the beginning of the process. We used mutual information (MI) as the metric of image dataset quality. It measures how well the signal in the second image can be predicted given the signal intensity in the first image [6]. With this simple technique, the cost of matching image pairs can be measured without requiring the same signal in the two images. A lower MI value for the image pairs translated into a high content overlap. Table 1 summarises the MI metrics computed for the dataset obtained from each image acquisition tool path. For the raster tool path, a standard deviation of 0.32 was observed, translating to a high content overlap for the image pairs. The observed standard deviations for the polar, hemispherical spiral, and paraboloid spiral tool paths were 0.69, 0.44, and 0.46, respectively. From Table 1, we deduced that even though the paraboloid spiral dataset contained roughly two-thirds fewer images, it achieved a standard deviation of 0.46, translating to a better content overlap between the image pairs than for the polar raster and hemispherical spiral tool paths.
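For reference, MI between two images can be computed from their joint intensity histogram, MI = Σ p(a,b) log₂[p(a,b) / (p(a) p(b))]. The sketch below is a minimal illustration for equally sized 8-bit grayscale images flattened into lists; the number of bins, the base-2 logarithm, and the aggregation of pairwise values into a per-dataset standard deviation over consecutive pairs are assumptions, since the exact procedure behind Table 1 is not specified here.

```python
# Minimal sketch of mutual information between two 8-bit grayscale images
# (flattened lists of equal length) from their joint intensity histogram.
# Bin count, log base and the per-dataset aggregation are assumptions.
import math
from collections import Counter
from statistics import pstdev
from typing import Sequence


def mutual_information(img_a: Sequence[int], img_b: Sequence[int], bins: int = 32) -> float:
    if len(img_a) != len(img_b) or not img_a:
        raise ValueError("images must be non-empty and the same size")
    if bins <= 0 or 256 % bins != 0:
        raise ValueError("bins must divide 256")
    width = 256 // bins
    qa = [v // width for v in img_a]   # quantise intensities into bins
    qb = [v // width for v in img_b]
    n = len(qa)
    joint = Counter(zip(qa, qb))
    pa, pb = Counter(qa), Counter(qb)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((pa[a] / n) * (pb[b] / n)))
    return mi


def consecutive_mi_spread(images: Sequence[Sequence[int]], bins: int = 32) -> float:
    """Population std of MI over consecutive image pairs (assumed aggregation)."""
    values = [mutual_information(a, b, bins) for a, b in zip(images, images[1:])]
    return pstdev(values)
```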

In the following sections, we analyse the 3D models produced from each image dataset and compare them with their respective ground truth.

Aluminium stair case sample

Figure 13a shows the mesh produced for the aluminium sample from the images obtained with the raster tool path. The artefacts on the sides of the mesh were of little consequence: the raster scan could not image the sides of the aluminium sample entirely, and only the top surface needed to be reconstructed accurately to validate the proof of concept proposed in this paper. The surface analysis of the obtained mesh on the CAD model is shown in Fig. 13b; a distance standard deviation of 2.77 mm was achieved.

Steel flow iron pipe segment sample

This sub-section discusses the performance of the three tool paths (polar, hemispherical spiral, and paraboloid spiral) used to acquire the three image datasets of the steel flow iron pipe segment sample. The three resulting meshes are shown in Fig. 14. The meshes resulting from the polar and the hemispherical spiral image datasets (Fig. 14a and b, respectively) contain more artefacts than the mesh resulting from the paraboloid spiral image dataset (Fig. 14c).

Both the polar and the hemispherical spiral tool paths produced image datasets centred on the top of the object, resulting in an incomplete and deformed mesh at the bottom of the steel flow iron pipe segment. Moreover, the polar tool path produced redundant images, because the same camera position was revisited multiple times. The hemispherical spiral tool path performed better, producing a slightly smaller image dataset that yielded a more complete mesh with fewer artefacts. The paraboloid spiral produced the best image dataset, from which a complete triangulated model with the fewest artefacts was obtained.

Surface analysis plots are shown in Fig. 15. Figure 15a corresponds to the polar-based image dataset, Fig. 15b is related to the hemispherical spiral-based image dataset, and Fig. 15c represents the paraboloid spiral-based image dataset.

Fig. 15 Surface comparison of the steel flow iron pipe segment mesh on the CAD model: (a) polar tool path, standard deviation of 2.53 mm; (b) hemispherical spiral tool path, 2.34 mm; (c) paraboloid spiral tool path, 1.28 mm; (d) sub-region of the paraboloid spiral tool path, 0.43 mm

As shown in Fig. 15a, the mesh resulting from the polar raster image dataset had a distance standard deviation of 2.53 mm from the CAD model. The dataset from the hemispherical spiral tool path produced a mesh with a 2.35-mm standard deviation of the distance from the CAD model (Fig. 15b), and the mesh produced by the paraboloid spiral tool path had a 1.2-mm standard deviation. Note that because of the robot’s reach limit, coverage of the far side of the steel flow iron pipe segment was compromised, resulting in artefacts on that side of the object. We therefore selected the points on the surface comparison plot in Fig. 15c that correspond to the region actually covered by the helical paraboloid scan; this sub-region, depicted in Fig. 15d, achieves sub-millimetre accuracy. Table 2 summarises the distance-wise surface comparison of the mesh produced by each tool path against the CAD models. Tables 1 and 2 together show that the paraboloid spiral tool path produced image data with a high content overlap between image pairs and generated the most accurate 3D model, with a reduced number of images.

Vision guided robotic component inspection

To demonstrate the validity of the proposed robotic component NDT inspection system, the tool path generated by the 3D vision system was used to perform robotic component inspection: the robot approached the top surface of the aluminium stair case sample and positioned the ultrasonic wheel probe in contact with the chosen surface point (Fig. 16). The excitation pulse was generated with a JSR Ultrasonics DPR35 Pulser-Receiver, and the signals were recorded with a PicoScope 5000a Series oscilloscope controlled by LabVIEW, as shown in Fig. 17. With the proposed method, the success rate for obtaining an ultrasonic measurement at the selected surface points on the sample under inspection was 80%.

Our proposed method addresses the challenges that typical manufactured and remanufactured components, which are plain, glossy and/or lacking in texture, pose to photogrammetry-based 3D approaches. Existing robotic systems [26, 27, 34] that manipulate ultrasonic sensors through pre-defined tool-paths can carry out inspection in both the manufacturing and remanufacturing industries. However, their tool-paths are typically defined on the CAD models of the components under inspection, which are not always available. In such cases, the proposed vision-guided robotic inspection system provides a way to inspect parts in the manufacturing and remanufacturing industry. For example, the oil and gas industry could benefit from the system for pipe inspection during manufacturing and remanufacturing: as new line pipe is rolled and/or fabricated, inspection is critical to ensure that no faulty pipe or materials are installed. A complete pipe inspection involves both on-surface (e.g. photogrammetry) and sub-surface (e.g. UT) analysis of the pipe component, and the proposed method addresses both.

Conclusion

The authors present an automated, vision-guided NDT inspection method based on ultrasonic tool paths for components in the manufacturing and remanufacturing industry. To add flexibility to autonomous ultrasonic NDT inspection, a 3D vision system based on SfM was used to generate complete 3D models with sub-millimetre accuracy. These 3D models were used to obtain an ultrasonic tool path, which enabled autonomous NDT inspection. The 3D vision system includes an automated image acquisition sub-module, for which four different tool-path approaches were tested, evaluated and analysed. The paraboloid spiral image acquisition approach produced a UT tool path with an accuracy of 0.43 mm. This tool path was used to approach the top surface of the aluminium staircase sample, position the ultrasonic wheel probe in contact with the chosen surface point, and take ultrasonic measurements. Pulse-echo data were successfully captured for 80% of the desired surface points on the sample under inspection. Overall, this paper contributes to the state of the art by proposing a new practical method for accurate 3D reconstruction that is instrumental in producing robot tool paths for ultrasonic NDT inspection.
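The UT tool-path accuracy is reported above as a single figure (0.43 mm). The calculation behind it is not restated in this section. The sketch below is a hedged illustration of one common way to summarise such accuracy, not the authors' evaluation procedure. It makes several assumptions:

- Each generated tool-path point has already been paired with a corresponding reference point, for example one measured on a reference scan of the sample.
- The function name `path_deviation` is hypothetical.
- Using mean, RMS and maximum deviation as the summary statistics is an illustrative choice.

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def path_deviation(generated: Sequence[Vec3],
                   reference: Sequence[Vec3]) -> Dict[str, float]:
    """Hypothetical metric: point-wise Euclidean deviation (same units as
    the inputs, e.g. mm) between paired generated and reference points."""
    if not generated or len(generated) != len(reference):
        raise ValueError("need two non-empty point lists of equal length")
    # math.dist (Python 3.8+) gives the Euclidean distance of each pair.
    errors = [math.dist(g, r) for g, r in zip(generated, reference)]
    n = len(errors)
    return {
        "mean": sum(errors) / n,
        "rms": math.sqrt(sum(e * e for e in errors) / n),
        "max": max(errors),
    }
```

For example, `path_deviation([(0.0, 0.0, 0.0)], [(0.3, 0.4, 0.0)])` reports a deviation of about 0.5 for the single point pair. Reporting the maximum alongside the mean helps reveal isolated points that could cause a missed probe contact.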

References

Bitzidou M, Chrysostomou D, Gasteratos A (2012) Multi-camera 3D object reconstruction for industrial automation. In: Emmanouilidis C, Taisch M, Kiritsis D (eds) Advances in Production Management Systems. Competitive Manufacturing for Innovative Products and Services. 19th Advances in Production Management Systems (APMS), Part 2: Design, Manufacturing and Production Management, vol AICT-397. Springer, Rhodes, pp 526–533. https://doi.org/10.1007/978-3-642-40352-1_66. https://hal.inria.fr/hal-01472287

Caggiano A, Teti R (2018) Digital factory technologies for robotic automation and enhanced manufacturing cell design. Cogent Eng 5(1):1426676. https://doi.org/10.1080/23311916.2018.1426676

Chrysostomou D, Kyriakoulis N, Gasteratos A (2010) Multi-camera 3D scene reconstruction from vanishing points. In: 2010 IEEE International conference on imaging systems and techniques, pp 343–348. https://doi.org/10.1109/IST.2010.5548495

Cignoni P, Callieri M, Corsini M, Dellepiane M, Ganovelli F, Ranzuglia G (2008) MeshLab: an open-source mesh processing tool. In: Scarano V, De Chiara R, Erra U (eds) Eurographics Italian Chapter Conference. The Eurographics Association, pp 129–136. https://doi.org/10.2312/LocalChapterEvents/ItalChap/ItalianChapConf2008/129-136

GOM, a ZEISS company: GOM scanner. https://www.gom.com/metrology-systems/atos.html. [Online; accessed 04-February-2020]

Cover TM, Thomas JA (2006) Elements of information theory. Wiley, New York

Davison A (2003) Real-time simultaneous localisation and mapping with a single camera. In: Proceedings of the Ninth IEEE International Conference on Computer Vision (ICCV 2003). IEEE, pp 1403–1410

Davison A, Reid ID, Molton ND, Stasse O (2007) MonoSLAM: real-time single camera SLAM. IEEE Trans Pattern Anal Mach Intell 29(6):1052–1067. https://doi.org/10.1109/TPAMI.2007.1049

Eddyfi: A dry-coupled wheel probe. https://www.eddyfi.com/. [Online; accessed 04-February-2020]

Engel J, Schöps T, Cremers D (2014) LSD-SLAM: large-scale direct monocular SLAM. In: Computer vision–ECCV 2014. Springer, pp 834–849

Fawcett D, Blanco-Sacristán J, Benaud P (2019) Two decades of digital photogrammetry: revisiting Chandler’s 1999 paper on “effective application of automated digital photogrammetry for geomorphological research” – a synthesis. Progress Phys Geogr Earth Environ 43(2):299–312. https://doi.org/10.1177/0309133319832863

FLIR: Spinnaker SDK and firmware. https://www.flir.co.uk/support-center/iis/machine-vision/downloads/spinnaker-sdk-and-firmware-download/. [Online; accessed 04-February-2020]

French R, Benakis M, Marin-Reyes H (2017) Intelligent sensing for robotic re-manufacturing in aerospace — an industry 4.0 design based prototype. In: 2017 IEEE International symposium on robotics and intelligent sensors (IRIS), pp 272–277. https://doi.org/10.1109/IRIS.2017.8250134

Furukawa Y, Ponce J (2010) Accurate, dense, and robust multiview stereopsis. IEEE Trans Pattern Anal Mach Intell 32(8):1362–1376. https://doi.org/10.1109/TPAMI.2009.161

GOM: GOM Inspect software. https://www.gom.com/3d-software/gom-inspect.html. [Online; accessed 04-February-2020]

Oxford Robotics Institute: Radar sensors. https://ori.ox.ac.uk/radar-for-mobile-autonomy/. [Online; accessed 04-April-2020]

Instructables: LiDAR sensor. https://www.instructables.com/id/ROSbot-Autonomous-Robot-With-LiDAR/. [Online; accessed 04-April-2020]

Izadi S, Kim D, Hilliges O, Molyneaux D, Newcombe R, Kohli P, Shotton J, Hodges S, Freeman D, Davison A et al (2011) KinectFusion: real-time 3D reconstruction and interaction using a moving depth camera. In: Proceedings of the 24th annual ACM symposium on User interface software and technology. ACM, pp 559–568

Kazhdan M, Bolitho M, Hoppe H (2006) Poisson surface reconstruction. In: Proceedings of the Fourth Eurographics Symposium on Geometry Processing, SGP ’06. Eurographics Association, Goslar DEU, pp 61–70

Khoshelham K, Elberink SO (2012) Accuracy and resolution of Kinect depth data for indoor mapping applications. Sensors (Basel, Switzerland) 12(2):1437–1454

Khurshid A, Scharcanski J (2020) An adaptive face tracker with application in yawning detection. Sensors (Basel, Switzerland) 20(5). https://doi.org/10.3390/s20051494

Khurshid A, Tamayo SC, Fernandes E, Gadelha MR, Teofilo M (2019) A robust and real-time face anti-spoofing method based on texture feature analysis. In: International conference on human-computer interaction. Springer, pp 484–496

KUKA: AGILUS hardware specifications. https://www.kuka.com/. [Online; accessed 04-February-2020]

Longuet-Higgins H (1987) A computer algorithm for reconstructing a scene from two projections. In: Fischler MA, Firschein O (eds) Readings in Computer Vision. Morgan Kaufmann, San Francisco, pp 61–62. https://doi.org/10.1016/B978-0-08-051581-6.50012-X. http://www.sciencedirect.com/science/article/pii/B978008051581650012X

MacLeod C, Dobie G, Pierce S, Summan R, Morozov M (2018) Machining-based coverage path planning for automated structural inspection. IEEE Trans Autom Sci Eng 15(1):202–213. https://doi.org/10.1109/TASE.2016.2601880

Mineo C, MacLeod C, Morozov M, Pierce S, Summan R, Rodden T, Kahani D, Powell J, McCubbin P, McCubbin C, Munro G, Paton S, Watson D (2017) Flexible integration of robotics, ultrasonics and metrology for the inspection of aerospace components. AIP Conf Proc 1806(1):020026. https://doi.org/10.1063/1.4974567

Mineo C, Pierce S, Nicholson PI, Cooper I (2016) Robotic path planning for non-destructive testing – a custom MATLAB toolbox approach. Robot Comput-Integr Manuf 37:1–12. https://doi.org/10.1016/j.rcim.2015.05.003. http://www.sciencedirect.com/science/article/pii/S0736584515000666

Mineo C, Vasilev M, Cowan B, MacLeod C, Pierce S, Wong C, Yang E, Fuentes R, Cross E (2020) Enabling robotic adaptive behaviour capabilities for new industry 4.0 automated quality inspection paradigms. Insight: J British Inst Non-Destruct Test 62(6):338–344. https://doi.org/10.1784/insi.2020.62.6.338

Mineo C, Vasilev M, MacLeod C, Su R, Pierce S (2018) Enabling robotic adaptive behaviour capabilities for new industry 4.0 automated quality inspection paradigms. In: 57th Annual British Conference on Non-Destructive Testing. GBR, pp 1–12. https://strathprints.strath.ac.uk/65367/

Mineo C, Wong C, Vasilev M, Cowan B, MacLeod C, Pierce S, Yang E: Interfacing toolbox for robotic arms with real-time adaptive behavior capabilities. https://strathprints.strath.ac.uk/70008/. [Online; accessed 04-February-2020]

Newcombe RA, Izadi S, Hilliges O, Molyneaux D, Kim D, Davison A, Kohli P, Shotton J, Hodges S, Fitzgibbon A (2011) KinectFusion: real-time dense surface mapping and tracking. In: 2011 10th IEEE international symposium on Mixed and augmented reality (ISMAR). IEEE, pp 127–136

Newcombe RA, Lovegrove SJ, Davison A (2011) DTAM: dense tracking and mapping in real-time. In: 2011 International conference on computer vision, pp 2320–2327. https://doi.org/10.1109/ICCV.2011.6126513

Peng Q, Tu L, Zhong S (2015) Automated 3D scenes reconstruction using multiple stereo pairs from portable four-camera photographic measurement system. https://doi.org/10.1155/2015/471681

Pierce S (2015) PAUT inspection of complex-shaped composite materials through six DOFs robotic manipulators

Robotiq: Wrist camera. https://robotiq.com/products/wrist-camera. [Online; accessed 04-April-2020]

Samardzhieva I, Khan A (2018) Necessity of bio-imaging hybrid approaches accelerating drug discovery process (mini-review). Int J Comput Appl 182(6):1–10. https://doi.org/10.5120/ijca2018917564. http://www.ijcaonline.org/archives/volume182/number6/29763-2018917564

Tabatabaeipour M, Trushkevych O, Dobie G, Edwards R, Dixon S, MacLeod C, Gachagan A, Pierce S (2019) A feasibility study on guided wave-based robotic mapping

Taketomi T, Uchiyama H, Ikeda S (2017) Visual SLAM algorithms: a survey from 2010 to 2016. IPSJ Trans Comput Vis Appl 9(1):16. https://doi.org/10.1186/s41074-017-0027-2

Thevara DJ, Kumar CV (2018) Application of photogrammetry to automated finishing operations. IOP Conf Ser Mater Sci Eng 402:012025. https://doi.org/10.1088/1757-899x/402/1/012025

Thinkscan: Thinkscan 3D scanning metrology system. https://www.thinkscan.co.uk/3d-scanning-service-bureau. [Online; accessed 04-April-2020]

Valença J, Júlio ENBS, Araújo HJ (2012) Applications of photogrammetry to structural assessment. Exp Tech 36(5):71–81. https://doi.org/10.1111/j.1747-1567.2011.00731.x

Velodyne: Velodyne LiDAR. https://velodynelidar.com/products/puck/. [Online; accessed 04-February-2020]

Wu C (2013) Towards linear-time incremental structure from motion. In: 2013 International conference on 3D vision - 3DV 2013, pp 127–134. https://doi.org/10.1109/3DV.2013.25

Zhang D, Watson R, Dobie G, MacLeod C, Khan A, Pierce G (2020) Quantifying impacts on remote photogrammetric inspection using unmanned aerial vehicles. Engineering Structures. https://doi.org/10.1016/j.engstruct.2019.109940

Zhang T, Liu J, Liu S, Tang C, Jin P (2017) A 3D reconstruction method for pipeline inspection based on multi-vision. Measurement 98:35–48. https://doi.org/10.1016/j.measurement.2016.11.004. http://www.sciencedirect.com/science/article/pii/S0263224116306418

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khan, A., Mineo, C., Dobie, G. et al. Vision guided robotic inspection for parts in manufacturing and remanufacturing industry. Jnl Remanufactur 11, 49–70 (2021). https://doi.org/10.1007/s13243-020-00091-x

DOI: https://doi.org/10.1007/s13243-020-00091-x