Abstract

People frequently report feeling more than one emotion at the same time (i.e., blended emotions), but studies on nonverbal communication of such complex states remain scarce. Actors (N = 18) expressed blended emotions consisting of all pairwise combinations of anger, disgust, fear, happiness, and sadness – using facial gestures, body movement, and vocal sounds – with the intention that both emotions should be equally prominent in the resulting expression. Accuracy of blended emotion recognition was assessed in two preregistered studies using a combined forced-choice and rating scale task. For each recording, participants were instructed to choose two scales (out of 5 available scales: anger, disgust, fear, happiness, and sadness) that best described their perception of the emotional content and judge how clearly each of the two chosen emotions were perceived. Study 1 (N = 38) showed that all emotion combinations were accurately recognized from multimodal (facial/bodily/vocal) expressions, with significantly higher ratings on scales corresponding to intended vs. non-intended emotions. Study 2 (N = 51) showed that all emotion combinations were also accurately perceived when the recordings were presented in unimodal visual (facial/bodily) and auditory (vocal) conditions, although accuracy was lower in the auditory condition. To summarize, results suggest that blended emotions, including combinations of both same-valence and other-valence emotions, can be accurately recognized from dynamic facial/bodily and vocal expressions. The validated recordings of blended emotion expressions are freely available for research purposes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Many situations in life are not clear-cut and lead to complex appraisals and emotional responses (e.g., Oh & Tong, 2022). Self-report studies indicate that people can frequently experience more than one emotion at the same time (e.g., Oatley & Duncan, 1994; Moeller et al., 2018; Scherer & Meuleman, 2013; Watson & Stanton, 2017). Subjective feelings where positive and negative states co-occur are usually called mixed emotions, and they can be viewed as a subset of blended (or compound) emotions, which refers to the co-occurrence of more than one emotion regardless of valence (for reviews, see Berrios et al., 2015; Larsen & McGraw, 2014). Current research on nonverbal communication of emotion is focused on expanding the number and complexity of emotions, and recent studies suggest that dozens of distinct emotions can be perceived from facial gestures, body movements, and vocal sounds (Cowen et al., 2019). Although the experience of blended emotions is an important aspect of the emotional complexity of everyday life (Grossmann et al., 2016), few prior studies have investigated if expressions of co-occurring emotions can be communicated nonverbally through facial and vocal expressions – which is the aim of this paper.

Facial Expression of Blended Emotions

Facial expression is one of the main topics in research on nonverbal behavior and emotion (see e.g., Fernàndez-Dols & Russell, 2017), but the vast majority of studies have focused on the expression and perception of single emotions. However, a handful of studies have reported that people may perceive more than one emotion from facial expressions of single emotions, and that they also agree upon the non-target emotion (e.g., Ekman et al., 1987; Fang et al., 2018; Kayyal & Russell, 2013). This has been explained by similarities in morphology (Yrizarry et al., 1998). For example, facial expressions of anger and disgust are both associated with features such as facial action unit (AU) 7 (lid tightener) and AU4 (brow lowerer) (see Ekman et al., 2002). However, perception of more than one emotion could also simply reflect that many stimuli used in research – although intended as exemplars of single emotions – in fact contain features from more than one emotion. Ekman and Friesen (1975) proposed that blended emotion expressions could be created by combining AUs associated with two different emotions and also provided several examples of what such expressions could look like.

Initial evidence that observers are able to perceive blended emotions from facial expression photographs was presented by Nummenmaa (1964, 1988), who asked actors to express blended emotions and assessed emotion recognition using a forced-choice format task. Results indicated that all pairwise combinations of anger, pleasure, and surprise (Nummenmaa, 1964) and 6 out of 10 of the combinations of fear, hate, pleasure, sorrow and surprise (Nummenmaa, 1988) could be accurately recognized. Nummenmaa’s results are suggestive, but also limited because each study was based on portrayals from one actor only.

In a more comprehensive effort, Du et al. (2014) created a large database of photographs of blended expressions. They instructed 230 participants to produce 15 blended facial expressions (e.g., angrily surprised) by combining facial features from different emotions, partly by showing the actors examples from Ekman’s work. They presented detailed information about which AUs were employed to produce each emotion combination, and proceeded to train machine learning algorithms to classify the blended emotions based on facial features extracted from the photographs. The classification was successful and indicated that the facial features differed systematically between the different emotion combinations. No human validation of the stimuli was presented in Du et al. (2014), but Pallett and Martinez (2014) reported that 7 out of the 15 emotion combinations were accurately labeled by human observers. For other examples of machine learning classification of blended facial expressions, see Guo et al. (2018) and Li and Deng (2019) – these studies generally find that while classification is successful for several blended emotions, accuracy remains lower than for single emotions.

Perception of blended emotions has also been studied using various methods to create synthetic facial stimuli (e.g., Calder et al., 2000; Martin et al., 2006; Young et al., 1997). In one representative study, Mäkäräinen et al. (2018) produced dynamic facial expressions, going from neutral to peak expression, using a physically based facial model capable of producing all necessary muscle actions. Expressions of all pairwise combinations of anger, disgust, fear, happiness, sadness, and surprise were created by combining AUs associated with both of the target emotions. Human validation of the stimuli showed that observers perceived same-valence combinations mainly as single emotions (e.g., a combination of anger and fear was perceived as only anger), whereas other-valence combinations were perceived as neither single nor blended emotions, but rather as other complex emotions. Only one of the blended emotions (happiness-surprise) was perceived as a combination of the two intended emotions.

Vocal Expression of Blended Emotions

Research on how emotions are nonverbally expressed though the voice is more limited than research on facial expressions (e.g., Scherer 2019). In addition, past research has focused almost exclusively on single emotions, which makes studies on vocal expression of blended emotions particularly scarce. In one relevant study, Juslin et al. (2021) recorded naturalistic vocal expressions in a field setting. The ground truth for the expression of each recording was provided by the speakers themselves, and 41% of the recordings were reported to reflect situations where the speakers experienced blended emotions. However, vocal expressions of blended emotions were typically perceived by human judges as conveying one or the other of the two co-occurring felt emotions. Only a few blended emotions were perceived as combinations of the two felt emotions, and Juslin et al. (2021) provided examples of acoustic features associated with the best recognized combinations.

In another relevant study, Burkhardt and Weiss (2018) used speech synthesis to create vocal expressions of both single emotions and pair-wise combinations of anger, fear, joy, and sadness. For the emotion combinations, they manipulated one set of acoustic features (pitch and duration) to express the “primary” emotion, and another set of features (voice quality and articulation) to express the “secondary” emotion. Both sets of features were used to synthesize single emotions. Results showed that human judges did perceive the synthesized single emotions as intended, and were also able to recognize the “primary” intended emotions from the blended expressions. It proved more difficult to perceive the “secondary” emotions, and judges only provided two emotion judgments in 38% of the cases. However, when analyses were restricted to these cases, results suggested that judges were able to recognize at least some of the blends (e.g., anger-fear, and anger-sadness).

Contribution of the Present Study

In summary, previous studies suggest that some blended emotions may be recognized from facial and vocal expressions, but results remain inconclusive. Previous studies have also mainly investigated facial or vocal expressions in isolation, often using still pictures of facial expressions (Dawel et al., 2022), although in everyday life emotion expressions are not restricted to a single modality (e.g., Bänziger et al., 2012) and evolve dynamically over time (e.g., Krumhuber et al., 2023). We therefore present two studies that directly investigate if blended emotions – consisting of pairwise combinations of both same-valence and other-valence emotions – can be recognized from dynamic multimodal (facial/bodily/vocal) expressions (Study 1) and unimodal visual (facial/bodily) and auditory (vocal) expressions (Study 2).

Study 1

The aim of Study 1 was to investigate if blended emotions can be recognized from multimodal expressions wherein actors express combinations of two emotions. Data and code are available on the Open Science Framework (https://osf.io/xu4rd/), and all analyses were planned and preregistered (https://osf.io/6xm8w). Ethical approval was granted by the Swedish Ethical Review Authority (decision no. 2021 − 00972).

Method

Participants

Thirty-eight adults (20 women) who were between 19 and 57 years (M = 32, SD = 8) took part in the experiment. They were recruited through advertisements on online recruitment sites and were compensated with two movie tickets, which were sent to them after completion of the perception test. Informed consent was collected online from each participant. Only individuals between 18 and 65 years and who understood Swedish were eligible for participation. Sample size was determined based on power analysis for one-way repeated measures analysis of variance (ANOVA) with 10 measurements. We used G*Power (Faul et al., 2007) for the analysis and our goal was to obtain 0.95 power to detect a medium effect size (f = 0.25) at standard 0.05 alpha error probability. Nonsphericity correction epsilon was set to 1 and the correlation among repeated measures was conservatively set to 0. With these settings, G*Power suggested a sample size of N = 39.

Emotion Portrayals

Portrayals of blended emotions were recorded as part of a larger project on dynamic multimodal emotion expression wherein actors express a wide range of emotions through facial gestures, body movement and vocal sounds. Here, actors were instructed to express blended emotions consisting of all pairwise combinations of anger, disgust, fear, happiness, and sadness (i.e. 10 emotion combinations), with the intention that both emotions should be equally prominent in the resulting expression. These emotions were chosen because previous research has established that they are well recognized as single emotions from both facial and vocal expressions (for reviews, see Elfenbein & Ambady, 2002; Laukka & Elfenbein, 2021), and because diary studies have shown that combinations of these emotions co-occur in people’s self-reports of subjective feelings (Oatley & Duncan, 1994). The actors were instructed that the emotions should be conveyed as convincingly as possible, as if interacting with another person (the camera), but without using overtly stereotypical expressions. It was also specified that they should try to express the emotion simultaneously through both the face/body and the voice. For the vocal expressions, they were free to choose any non-linguistic vocalization (e.g., cries, laughter, groans), but no real or fake words were allowed (e.g., Scherer 1994). All actors were further provided with definitions and scenarios for each single emotion based on previous studies (see Laukka et al., 2016), but they were free to come up with their own scenario for each emotion combination. They were instructed to try to remember a similar situation that they had experienced personally, and that had evoked the specified emotion combination with a moderately high emotion intensity, and if possible try to put themselves into a similar state of mind. The actors’ intended emotion combinations were used as ground truth for determining whether the participants’ emotion judgments were correct or incorrect.

Recordings were conducted in a room with studio lighting and dampened acoustics, using a high-quality camera (Blackmagic Pocket Cinema Camera 6 K, Blackmagic Design Pty Ltd, Port Melbourne, Australia), lens (Sigma 35 mm F1.4 DG HSM, Sigma Corporation, Kawasaki, Japan) and microphone (Røde NTG3B, Røde Microphones, Sydney, Australia) setup. The audio level was calibrated relative to the loudest expected level and then kept constant during the recording session. The camera was placed in front of the actor at a distance of approximately 1.2 m, and the microphone was located 0.5 m above the actor and directed at the actor’s chest.

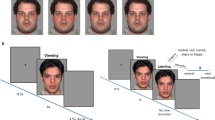

For the current study, we selected recordings from 18 actors (9 women) and each actor provided expressions of each of the 10 different emotion combinations, for a total of 180 brief video clips. Each clip showed a frontal view of the actor’s upper-torso and face. The duration of the clips ranged from 1 to 5 s. Examples of stills from the videos are presented in Fig. 1.

Emotion Perception Task

All participants rated all of the 180 video clips described above in an online experiment. The experiment was constructed in PsychoPy (Peirce et al., 2019) and was presented online using Pavlovia (https://pavlovia.org/), which is a platform for launching PsychoPy experiments online.

The emotion perception task combined elements of forced-choice and rating scale tasks, and was designed to assess which emotions were perceived and how clearly they were perceived. For each video clip, participants were presented with 5 rating scales (anger, disgust, fear, happiness, and sadness). They were instructed that they had to choose 2 out of the 5 available scales, based on their perception of the emotional content of the recording, and use these scales to indicate how clearly both of the chosen emotions were perceived. The rating scales ranged from 0 (emotion not at all prominent) to 10 (emotion very prominent). Stimuli were presented in random order (different for each participant) to avoid order effects.

The task included step-by-step instructions and participants were given the same definitions of the included emotions as were given to the actors. Instructions also emphasized that participants should try to make sure that they would not be interrupted while doing the task, and that they needed to perform the task on a computer and use headphones in order to hear the stimuli properly. Three practice stimuli were presented before the start of the experiment. Participants were instructed to adjust the audio to a comfortably loud level during the practice trials and then keep the same sound level during the rest of the task. The task was self-paced, and participants were allowed to play the clips as many times as needed to perform the ratings. The whole experiment took around 45–60 min to complete.

Results

Emotion Ratings

Emotion ratings were analyzed using one-way repeated measures ANOVAs with intended emotion combination (10 levels: anger-disgust, anger-fear, anger-happiness, anger-sadness, disgust-fear, disgust-happiness, disgust-sadness, fear-happiness, fear-sadness, happiness-sadness) as a within-subjects variable. Separate ANOVAs were performed for the participants’ mean ratings on each rating scale (anger, fear, disgust, happiness, and sadness). Significant main effects showed that ratings varied systematically as a function of intended emotion combination for all rating scales: anger (F(9, 333) = 113.44, p < .0001, η2p = 0.75, η2G = 0.64), disgust (F(9, 333) = 111.09, p < .0001, η2p = 0.75, η2G = 0.62), fear (F(9, 333) = 81.60, p < .0001, η2p = 0.69, η2G = 0.42), happiness (F(9, 333) = 148.38, p < .0001, η2p = 0.80, η2G = 0.75), and sadness (F(9, 333) = 74.81, p < .0001, η2p = 0.67, η2G = 0.45). Mauchly’s test of sphericity indicated that none of the scales met the assumption of sphericity, but effects remained statistically significantly also after Greenhouse-Geisser correction (all corrected ps < 0.0001). We report partial eta squared (η2p) in order to estimate the amount of variability explained by the within-subjects factor when controlling for the number of factors, and generalized eta squared (η2G) to estimate the variability explained by the within-subjects factor while accounting for both number of factors and individual differences (which enables comparison across studies regardless of design, see Olejnik & Algina, 2003).

Table 1 shows that target emotion combinations (i.e. where the rating scale matches with one of the intended emotions) received higher ratings than non-target emotion combinations (i.e. where the scale and intended emotions do not match) for each rating scale. For example, in the column for anger ratings one can see that the target combination anger-sadness (M = 4.40) received higher ratings than the non-target combination happiness-sadness (M = 0.40). In fact, post-hoc multiple comparisons (repeated measures t-tests) confirmed that all differences between target and non-target emotion combinations were significant for each rating scale (all ps < 0.0001, Bonferroni corrected alpha level = 0.0017, i.e. corrected for 30 comparisons per scale). Details about the comparisons between target and non-target combinations are presented in the online supplementary information (Table S1).

Table 1 also shows that target emotions did not receive equally high ratings for all target emotion combinations. For example, anger ratings were higher when anger was combined with fear or sadness, than when anger was combined with disgust or happiness. Disgust ratings were higher for disgust-fear, and fear ratings for fear-sadness, vs. other target combinations. Happiness ratings, in turn, were lower for anger-happiness than for other target combinations (all ps < 0.001, Bonferroni corrected alpha = 0.0017). However, there were no significant differences for sadness ratings between any of the target combinations (see online supplementary Table S2 for details of comparisons between target combinations).

Accuracy Indices

Emotion ratings were further used to calculate two indices of emotion recognition accuracy (see Juslin et al., 2021). Generous accuracy is a measure of the ability to correctly perceive at least one of the two intended emotions, whereas strict accuracy is a measure of the ability to correctly perceive both of the two intended emotions. For generous accuracy, a response was scored as correct if either of the used rating scales corresponded to either of the two intended emotions of a stimulus. For strict accuracy, a response was only scored as correct if the chosen rating scales corresponded to both of the two intended emotions of a stimulus. For both indices, correct responses were coded as 1 and incorrect responses as 0, and recognition accuracy was then calculated as the proportion of correct responses. For generous accuracy, the chance level is 0.4 (2 out of 5). For strict accuracy, the probability of getting the first emotion correct is 2/5, and the probability of getting the second emotion right is 1/(5 − 1) = 0.25. The combined probability of getting both emotions right by chance is thus 0.10. Table 1 presents accuracy values for each emotion combination and shows that both generous and strict accuracy were significantly higher than chance for all combinations, as indicated by 95% CI not overlapping with the chance level of each index.

Study 2

Results from Study 1 showed that participants were able to accurately recognize blended emotions from multimodal (facial/bodily/vocal) expressions. The aim of Study 2 was to investigate if blended emotions can also be accurately recognized from unimodal visual (facial/bodily) and auditory (vocal) expressions. Data and code are available on the Open Science Framework (https://osf.io/xu4rd/), and all analyses were planned and preregistered (https://osf.io/sa9wk). Ethical approval was granted by the Swedish Ethical Review Authority (decision no. 2021 − 00972).

Method

Participants

Participants consisted of 51 adults (25 women, 24 men, 2 participants did not report gender) who were between 18 and 57 years (M = 31, SD = 11). They were recruited through online recruitment sites and were compensated with two movie tickets. Informed consent was collected online from each participant. Only individuals who understood Swedish and were between 18 and 65 years old were eligible for participation. None of the participants had taken part in Study 1. Sample size was determined using the ANOVA_power shiny app (Lakens & Caldwell, 2021), which is an online tool for performing Monte Carlo simulations of factorial experimental designs. The power analysis required an a priori estimation of the expected results, and we specified a two-way repeated measures ANOVA with a large main effect of intended emotion combination (10 repeated measures: the 10 emotion combinations), a medium effect of presentation modality (2 repeated measures: video-only, audio-only), and a small emotion combination × modality interaction effect. Using an iterative process, we found that a sample size of N = 50 provided around 90% power to detect both a medium main effect of modality and a small interaction effect. We refer to the analysis plan for more details about the power analysis (https://osf.io/sa9wk).

Emotion Portrayals

Stimulus selection was based on the results from Study 1, and we selected the 8 best recognized video clips for each emotion blend provided by female (4 clips) and male (4 clips) actors. An additional constraint was that clips where the actors did not produce any audible sounds were excluded. In total, 80 clips were selected and the selection contained clips from each of the 18 original actors from Study 1. Two versions of each selected stimulus were prepared – one with only video and no sound, and one with only sound but no video – leading to a total of 160 stimuli (80 video-only and 80 audio-only).

Emotion Perception Task

All participants rated all of the 160 stimuli described above in an online experiment, which was constructed in PsychoPy (Peirce et al., 2019) and presented online using Pavlovia (https://pavlovia.org/). As in Study 1, participants were instructed to rate each stimulus on 2 out of 5 available rating scales (anger, disgust, fear, happiness, and sadness) ranging from 0 to 10, with regard to how clearly they perceived the different emotions. The 160 stimuli were presented in blocks consisting of 80 video-only and 80 audio-only stimuli. Stimuli were presented in random order within blocks and the order of blocks was counterbalanced to avoid order effects. The procedure was in other aspects the same as in Study 1. The whole experiment took around 45–60 min.

Results

Emotion Ratings

Emotion ratings were analyzed using two-way repeated measures ANOVAs with modality (2 levels: video-only and audio-only) and intended emotion combination (10 levels: anger-disgust, anger-fear, anger-happiness, anger-sadness, disgust-fear, disgust-happiness, disgust-sadness, fear-happiness, fear-sadness, happiness-sadness) as within-subject factors. Separate ANOVAs were performed for the participants’ mean ratings on each rating scale (anger, fear, disgust, happiness, and sadness). Table 2 shows the results from the ANOVAs. As can be seen, the main effect of emotion was significant for all rating scales, which indicates that emotion ratings overall varied systematically as a function of intended emotion combination. The main effect of modality was significant for the anger, fear, and happiness scales, with higher ratings in the video vs. audio modality, but not for the disgust and sadness scales. Descriptive statistics for the main effects are shown in the online supplementary Table S3.

The above main effects were qualified by significant modality × emotion combination interaction effects for all rating scales (see Table 2). Descriptive statistics for the interaction effects are shown in Table 3. In the following, we further explore these interaction effects using post-hoc multiple comparisons (repeated measures t-tests) with a Bonferroni corrected alpha level of 0.0008 (i.e. corrected for 64 comparisons per scale). We first investigate if target emotion combinations received higher ratings than non-target emotion combinations, followed by an investigation of differences between target emotion combinations.

Target vs. non-target emotion combinations. Table 3 shows that the target emotion combinations were rated higher than non-target combinations for each rating scale in both the video-only and the audio-only modality. All differences between target and non-target ratings were significant in the video modality. All differences were also significant in the audio modality, with the exception that disgust ratings did not differ significantly between disgust-sadness (target) and anger-fear (non-target). Details about the comparisons between target and non-target comparisons are shown in online supplementary Table S4.

Differences between target emotion combinations. Table 3 also shows that target emotions did not receive equally high ratings for all target emotion combinations. For example, anger ratings were higher for anger-fear, and lower for anger-happiness, vs. other target combinations in the video modality. Anger ratings were also lower for anger-happiness vs. other target combinations in the audio modality. Disgust ratings were higher for disgust-fear in the video modality, and lower for disgust-sadness in the audio modality, compared to other target combinations. Some significant differences were also observed for ratings of fear (anger-fear lower than other targets for video), happiness (anger-happiness higher than other targets for audio) and sadness (fear-sadness higher than other targets for video). See online supplementary Table S5 for details of the comparisons between target combinations.

Finally, we investigated whether ratings of target emotion combinations differed between the video-only and audio-only conditions. Significant differences were observed for all target combinations for anger and happiness ratings, and three out of four combinations for disgust and fear ratings, but only one target combination (fear-sadness) for sadness ratings, see Table 3. Video-only ratings were higher than audio-only ratings for all significant differences, except for disgust-happiness which had higher disgust ratings in the audio-only condition. Details of the comparisons between modalities are available in online supplementary Table S6.

Accuracy Indices

Like in Study 1, emotion ratings were also used to calculate measures of the extent to which participants recognized one of the two intended emotions (generous accuracy) or both of the two intended emotions (strict accuracy; see Juslin et al., 2021). Table 3 presents the accuracy indices for all emotion combinations for each modality. Overall, generous accuracy was high both in the video modality (M = 0.93, SD = 0.18, 95% CI [0.92, 0.95]) and the audio modality (M = 0.87, SD = 0.21, 95% CI [0.85, 0.89]). Values for strict accuracy were lower, with a proportion of correct responses of 0.51 (SD = 0.30, CI 95% [0.49, 0.54]) in the video modality and 0.30 (SD = 0.25, CI 95% [0.28, 0.32]) in the audio modality. These numbers can be compared to the accuracy values that the selected eighty stimuli obtained originally in Study 1, where the multimodal (both video and audio) versions received a generous accuracy of 0.95 (SD = 0.15, 95% CI [0.93, 0.96]) and a strict accuracy of 0.59 (SD = 0.31, 95% CI [0.56, 0.62]).

The highest strict accuracy was observed for disgust-fear (0.64) and fear-happiness (0.63) in the video modality, and for happiness-sadness (0.39) and fear-sadness (0.38) in the audio modality. Lowest strict accuracy was in turn observed for anger-fear (0.40) and disgust-sadness (0.40) in the video modality, and for anger-fear (0.19) and fear-happiness (0.18) in the audio modality. The differences between modalities were notable in that the lowest video-only accuracy was higher than the highest audio-only accuracy. We also note that while strict accuracy for fear-happiness was among the highest in the video modality, it was the lowest in the audio modality. However, as shown in Table 3, both generous and strict accuracy were nevertheless higher than what would be expected by chance guessing for each emotion combination in both the video-only and the audio-only modality, as indicated by 95% CIs not overlapping the chance level.

Discussion

To summarize, results showed that blended emotions, including both same-valence and other-valence combinations, can be accurately recognized from dynamic multimodal (facial/bodily/vocal) expressions (Study 1) and unimodal visual (facial/bodily) and auditory (vocal) expressions (Study 2). Target emotion combinations were on average rated higher than non-target combinations for all emotion scales and in all presentation conditions. In addition, the measures of strict accuracy indicated that participants were able to correctly judge both of the intended emotions for each emotion combination with higher-than-chance accuracy in all conditions. Emotion ratings and accuracy varied across presentation modalities in Study 2, with higher recognition in visual vs. auditory conditions. A comparison of the accuracy rates for the subset of stimuli that was used in both Studies 1 and 2 suggested that recognition was higher for multimodal vs. unimodal expressions. This pattern of findings (multimodal > video > audio) is in line with previous studies that have compared recognition of single emotions across modalities (e.g., Laukka et al., 2021). Finally, results also detailed how emotion ratings varied across emotion combinations and modalities (e.g., regarding which target emotions were most clearly perceived from which combinations for the different modalities), although we suggest that these aspects of the results require replication before given a more in-depth interpretation.

Overall, the current findings provide clearer evidence for accurate recognition of blended emotions than previous studies on (mainly static) facial (e.g., Nummenmaa 1988; Pallett & Martinez, 2014) or vocal (e.g., Juslin et al., 2021) expressions. We argue that the multimodal and dynamic nature of the current stimuli allowed for a richer set of physical expression features. A body of evidence suggests that emotional information that is presented simultaneously in multiple modalities (e.g., faces and voices) is processed in a synergistic fashion where multimodal information is integrated throughout the process, from early perceptual to later conceptual stages (e.g., Klasen et al., 2012; Schirmer & Adolphs, 2017). Dynamic expressions in turn provide temporal information regarding how expressive features change over time (e.g., in what direction, how much, and how fast) which could recruit higher-level cognitive processing and enhance emotion perception (Krumhuber et al., 2023).

While our study focused on perception only, future studies could investigate the expressive features of blended emotions. This could be achieved, for example, by analyzing facial AUs (Ekman et al., 2002) and various emotion-related acoustic features (e.g., Scherer 2019), and relating them to participant judgments (e.g., Laukka et al., 2016). Such analyses could provide clues about underlying mechanisms and provide answers to questions such as: Are blended emotions expressed by superimposing the feature patterns of both emotions, or do they contain some features from one emotion (e.g., angry eyes) and other features from the other emotion (e.g., happy mouth), or do they contain unique feature patterns not observed in single emotions? Or do dynamic blended expressions fluctuate over time, rapidly changing from one emotion to another? Or are different emotions expressed in different modalities (e.g., happy voice combined with a sad face)? (Although this last possibility seems less likely based on the current findings where blended emotions could be perceived from both video-only and audio-only stimuli). We are currently investigating these research questions in our lab.

This study provides a validated set of blended expressions that is freely available for research purposes. The stimuli could be used, to give one example among many, to study individual differences in the ability to recognize blended emotions. Such studies would complement previous studies on how emotion recognition ability varies as a function of age (Cortes et al., 2021; Hayes et al., 2020), gender (Hall et al., 2016), and psychiatric disorders (Phillips et al., 2003) – which have usually been based on single emotions. We believe that incorporating blended emotions into such studies has the potential to lead to new insights about interindividual variability in emotional abilities.

Limitations

Limitations include that results are based on posed expressions, which may differ from naturalistic emotion expressions in subtle yet systematic ways (e.g., Juslin et al., 2018; Namba et al., 2017). The development of sets of spontaneous blended expressions represents an interesting challenge for future research. Such work could potentially also provide data on how frequently different emotion combinations are expressed in different types of everyday situations. Although all of the emotion combinations that were included in the current study have also been reported in studies on the subjective experience of blended emotions (e.g., Oatley & Duncan, 1994), the relevance of specific combinations for everyday social interactions remains to be determined.

Another possible limitation concerns the response format, which may have increased recognition accuracy because participants were prompted to provide ratings on two scales for each stimulus. This was done to provide a direct measure of how well participants could recognize both of the intended emotions from each emotion combination. However, it would be interesting to also investigate what would happen if there were no limits or restrictions on the number of choices. For example, it remains a possibility that one of the two intended emotions could dominate the judges’ impressions, so that they would only report one emotion if not asked specifically to provide ratings on two scales (Juslin et al., 2021; Mäkäräinen et al., 2018). But it also remains a possibility that the judges might be able to systematically rate the blended emotions on more than two scales. Finally, some theories have proposed that when two emotions are blended, this creates a new emotion that is distinct from the two original emotions (e.g., the experience of shame has been proposed to consist of a combination of fear and disgust; see Plutchik 1980). To account for such possibilities, it would be interesting to investigate perception of blended emotions using response formats that do not limit participants to experimenter-imposed selections (e.g., open-ended responses, see Elfenbein et al., 2022).

Conclusions

Results showed that blended emotions can be accurately recognized from dynamic facial and vocal expressions, which suggests that emotion perception is more nuanced and flexible than has previously been thought. As many types of situations give rise to blended emotions (e.g., Oh & Tong, 2022), it makes sense that we should also be able to communicate nonverbally our emotional responses in such situations, as this helps us interpret, understand and adaptively respond to a complex environment (e.g., Berrios et al., 2015).

References

Bänziger, T., Mortillaro, M., & Scherer, K. R. (2012). Introducing the Geneva multimodal expression corpus for experimental research on emotion perception. Emotion, 12(5), 1161–1179. https://doi.org/10.1037/a0025827.

Berrios, R., Totterdell, P., & Kellett, S. (2015). Eliciting mixed emotions: A meta-analysis comparing models, types, and measures. Frontiers in Psychology, 6, 428. https://doi.org/10.3389/fpsyg.2015.00428.

Burkhardt, F., & Weiss, B. (2018). Speech synthesizing simultaneous emotion-related states. In A. Karpov, O. Jokisch, & R. Potapova (Eds.), Speech and computer: Proceedings of the 20th international conference, SPECOM 2018 (pp. 76–85). Springer. https://doi.org/10.1007/978-3-319-99579-3_9

Calder, A. J., Young, A. W., Keane, J., & Dean, M. (2000). Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance, 26(2), 527–551. https://doi.org/10.1037/0096-1523.26.2.527.

Cortes, D. S., Tornberg, C., Bänziger, T., Elfenbein, H. A., Fischer, H., & Laukka, P. (2021). Effects of aging on emotion recognition from dynamic multimodal expressions and vocalizations. Scientific Reports, 11(1), 2647. https://doi.org/10.1038/s41598-021-82135-1.

Cowen, A., Sauter, D., Tracy, J. L., & Keltner, D. (2019). Mapping the passions: Toward a high-dimensional taxonomy of emotional experience and expression. Psychological Science in the Public Interest, 20(1), 69–90. https://doi.org/10.1177/1529100619850176.

Dawel, A., Miller, E. J., Horsburgh, A., & Ford, P. (2022). A systematic survey of face stimuli used in psychological research 2000–2020. Behavior Research Methods, 54(4), 1889–1901. https://doi.org/10.3758/s13428-021-01705-3.

Du, S., Tao, Y., & Martinez, A. M. (2014). Compound facial expressions of emotion. Proceedings of the National Academy of Sciences of the United States of America, 111(15), E1454–E1462. https://doi.org/10.1073/pnas.1322355111.

Ekman, P., & Friesen, W. V. (1975). Unmasking the face: A guide to recognizing emotions from facial clues. Prentice-Hall.

Ekman, P., Friesen, W. V., O’Sullivan, M., Chan, A., Diacoyanni-Tarlatzis, I., Heider, K., Krause, R., LeCompte, W. A., Pitcairn, T., Ricci-Bitti, P. E., Scherer, K., Tomita, M., & Tzavaras, A. (1987). Universals and cultural differences in the judgments of facial expressions of emotion. Journal of Personality and Social Psychology, 53(4), 712–717. https://doi.org/10.1037//0022-3514.53.4.712.

Ekman, P., Friesen, W. V., & Hager, J. V. (2002). Facial action coding system (2nd ed.). Research Nexus Ebook.

Elfenbein, H. A., & Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128(2), 203–235. https://doi.org/10.1037/0033-2909.128.2.203.

Elfenbein, H. A., Laukka, P., Althoff, J., Chui, W., Iraki, F. K., Rockstuhl, T., & Thingujam, N. S. (2022). What do we hear in the voice? An open-ended judgment study of emotional speech prosody. Personality and Social Psychology Bulletin, 48(7), 1087–1104. https://doi.org/10.1177/01461672211029786.

Fang, X., Sauter, D. A., & van Kleef, G. A. (2018). Seeing mixed emotions: The specificity of emotion perception from static and dynamic facial expressions across cultures. Journal of Cross-Cultural Psychology, 49(1), 130–148. https://doi.org/10.1177/0022022117736270.

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/BF03193146.

Fernández-Dols, J. M., & Russell, J. A. (Eds.). (2017). The science of facial expression. Oxford University Press.

Grossmann, I., Huynh, A. C., & Ellsworth, P. C. (2016). Emotional complexity: Clarifying definitions and cultural correlates. Journal of Personality and Social Psychology, 111(6), 895–916. https://doi.org/10.1037/pspp0000084.

Guo, J., Lei, Z., Wan, J., Avots, E., Hajarolasvadi, N., Knyazev, B., Kuharenko, A., Junior, J. C. S. J., Baró, X., Demirel, H., Escalera, S., Allik, J., & Anbarjafari, G. (2018). Dominant and complementary emotion recognition from still images of faces. IEEE Access, 6, 26391–26403. https://doi.org/10.1109/ACCESS.2018.2831927.

Hall, J. A., Gunnery, S. D., & Horgan, T. G. (2016). Gender differences in interpersonal accuracy. In J. A. Hall, M. Schmid Mast, & T. V. West (Eds.), The social psychology of perceiving others accurately (pp. 309–327). Cambridge University Press.

Hayes, G. S., McLennan, S. N., Henry, J. D., Phillips, L. H., Terrett, G., Rendell, P. G., Pelly, R. M., & Labuschagne, I. (2020). Task characteristics influence facial emotion recognition age-effects: A meta-analytic review. Psychology and Aging, 35(2), 295–315. https://doi.org/10.1037/pag0000441.

Juslin, P. N., Laukka, P., & Bänziger, T. (2018). The mirror to our soul? Comparisons of spontaneous and posed vocal expression of emotion. Journal of Nonverbal Behavior, 42(1), 1–40. https://doi.org/10.1007/s10919-017-0268-x.

Juslin, P. N., Laukka, P., Harmat, L., & Ovsiannikow, M. (2021). Spontaneous vocal expressions from everyday life convey discrete emotions to listeners. Emotion, 21(6), 1281–1301. https://doi.org/10.1037/emo0000762.

Kayyal, M. H., & Russell, J. A. (2013). Americans and Palestinians judge spontaneous facial expressions of emotion. Emotion, 13(5), 891–904. https://doi.org/10.1037/a0033244.

Klasen, M., Chen, Y. H., & Mathiak, K. (2012). Multisensory emotions: Perception, combination and underlying neural processes. Reviews in the Neurosciences, 23(4), 381–392. https://doi.org/10.1515/revneuro-2012-0040.

Krumhuber, E. G., Skora, L. I., Hill, H. C. H., & Lander, K. (2023). The role of facial movements in emotion recognition. Nature Reviews Psychology, 2(5), 283-296. https://doi.org/10.1038/s44159-023-00172-1.

Lakens, D., & Caldwell, A. R. (2021). Simulation-based power analysis for factorial analysis of variance designs. Advances in Methods and Practices in Psychological Science, 4(1), 2515245920951503. https://doi.org/10.1177/2515245920951503.

Larsen, J. T., & McGraw, A. P. (2014). The case for mixed emotions. Social and Personality Psychology Compass, 8(6), 263–274. https://doi.org/10.1111/spc3.12108.

Laukka, P., & Elfenbein, H. A. (2021). Cross-cultural emotion recognition and in-group advantage in vocal expression: A meta-analysis. Emotion Review, 13(1), 3–11. https://doi.org/10.1177/1754073919897295.

Laukka, P., Elfenbein, H. A., Thingujam, N. S., Rockstuhl, T., Iraki, F. K., Chui, W., & Althoff, J. (2016). The expression and recognition of emotions in the voice across five nations: A lens model analysis based on acoustic features. Journal of Personality and Social Psychology, 111(5), 686–705. https://doi.org/10.1037/pspi0000066.

Laukka, P., Bänziger, T., Israelsson, A., Cortes, D. S., Tornberg, C., Scherer, K. R., & Fischer, H. (2021). Investigating individual differences in emotion recognition ability using the ERAM test. Acta Psychologica, 220, 103422. https://doi.org/10.1016/j.actpsy.2021.103422.

Li, S., & Deng, W. (2019). Blended emotion in-the-wild: Multi-label facial expression recognition using crowdsourced annotations and deep locality feature learning. International Journal of Computer Vision, 127, 884–906. https://doi.org/10.1007/s11263-018-1131-1.

Mäkäräinen, M., Kätsyri, J., & Takala, T. (2018). Perception of basic emotion blends from facial expressions of virtual characters: Pure, mixed or complex? In V. Skala (Ed.), Proceedings of the 26th International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision (WSCG 2018) (pp. 135–142). University of West Bohemia. https://doi.org/10.24132/CSRN.2018.2802.17

Martin, J. C., Niewiadomski, R., Devillers, L., Buisine, S., & Pelachaud, C. (2006). Multimodal complex emotions: Gesture expressivity and blended facial expressions. International Journal of Humanoid Robotics, 3(3), 269–291. https://doi.org/10.1142/S0219843606000825.

Moeller, J., Ivcevic, Z., Brackett, M. A., & White, A. E. (2018). Mixed emotions: Network analyses of intra-individual co-occurrences within and across situations. Emotion, 18(8), 1106–1121. https://doi.org/10.1037/emo0000419.

Namba, S., Makihara, S., Kabir, R. S., Miyatani, M., & Nakao, T. (2017). Spontaneous facial expressions are different from posed facial expressions: Morphological properties and dynamic sequences. Current Psychology, 36(3), 593–605. https://doi.org/10.1007/s12144-016-9448-9.

Nummenmaa, T. (1964). The language of the face. Jyväskylä studies in education, psychology, and social research (Vol. 9). Jyväskylän Yliopistoyhdistys. https://jyx.jyu.fi/handle/123456789/71907

Nummenmaa, T. (1988). The recognition of pure and blended facial expressions of emotion from still photographs. Scandinavian Journal of Psychology, 29(1), 33–47. https://doi.org/10.1111/j.1467-9450.1988.tb00773.x.

Oatley, K., & Duncan, E. (1994). The experience of emotions in everyday life. Cognition and Emotion, 8(4), 369–381. https://doi.org/10.1080/02699939408408947.

Oh, V. Y. S., & Tong, E. M. W. (2022). Specificity in the study of mixed emotions: A theoretical framework. Personality and Social Psychology Review, 26(4), 283–314. https://doi.org/10.1177/10888683221083398.

Olejnik, S., & Algina, J. (2003). Generalized eta and omega squared statistics: Measures of effect size for some common research designs. Psychological Methods, 8(4), 434–447. https://doi.org/10.1037/1082-989X.8.4.434.

Pallett, P., & Martinez, A. (2014). Beyond the basics: Facial expressions of compound emotions. Journal of Vision, 14(10), 1401–1401. https://doi.org/10.1167/14.10.1401.

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., Kastman, E., & Lindeløv, J. K. (2019). PsychoPy2: Experiments in behavior made easy. Behavior Research Methods, 51(1), 195–203. https://doi.org/10.3758/s13428-018-01193-y.

Phillips, K. L., Drevets, W. C., Rauch, S. L., & Lane, R. (2003). Neurobiology of emotion perception II: Implications for major psychiatric disorders. Biological Psychiatry, 54(5), 515–528. https://doi.org/10.1016/s0006-3223(03)00171-9.

Plutchik, R. (1980). Emotion: A psychoevolutionary synthesis. Harper and Row.

Scherer, K. R. (1994). Affect bursts. In S. van Goozen, N. E. van de Poll, & J. A. Sergeant (Eds.), Emotions: Essays on emotion theory (pp. 161–196). Lawrence Erlbaum Associates.

Scherer, K. R. (2019). Acoustic patterning of emotion vocalizations. In S. Frühholz, & P. Belin (Eds.), The Oxford handbook of voice perception (pp. 61–93). Oxford University Press. https://doi.org/10.1093/oxfordhb/9780198743187.013.4.

Scherer, K. R., & Meuleman, B. (2013). Human emotion experiences can be predicted on theoretical grounds: Evidence from verbal labeling. PLoS One, 8(3), e58166. https://doi.org/10.1371/journal.pone.0058166.

Schirmer, A., & Adolphs, R. (2017). Emotion perception from face, voice, and touch: Comparisons and convergence. Trends in Cognitive Sciences, 21(3), 216–228. https://doi.org/10.1016/j.tics.2017.01.001.

Watson, D., & Stanton, K. (2017). Emotion blends and mixed emotions in the hierarchical structure of affect. Emotion Review, 9(2), 99–104. https://doi.org/10.1177/1754073916639659.

Young, A. W., Rowland, D., Calder, A. J., Etcoff, N. L., Seth, A., & Perrett, D. I. (1997). Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition, 63(3), 271–313. https://doi.org/10.1016/S0010-0277(97)00003-6.

Yrizarry, N., Matsumoto, D., & Wilson-Cohn, C. (1998). American-Japanese differences in multiscalar intensity ratings of universal facial expressions of emotion. Motivation and Emotion, 22(4), 315–327. https://doi.org/10.1023/A:1021304407227.

Acknowledgements

Data and code are available on the Open Science Framework (https://osf.io/xu4rd/). The two studies that are presented in this article were pre-registered: study 1 (https://osf.io/6xm8w) and study 2 (https://osf.io/sa9wk). The stimulus set used in this study is freely available for academic research upon request.

We thank Christina Tornberg and Christine Malapetsa for assistance with the recordings and data collection, respectively.

Funding

This research was supported by the Marianne and Marcus Wallenberg Foundation (MMW 2018.0059). Open access funding provided by Stockholm University.

Author information

Authors and Affiliations

Contributions

The study was conceived by PL and all authors contributed to the design. PL and AI contributed materials, and AI and AS contributed to data collection. All authors contributed to data analysis. PL and AI wrote the paper with input from AS.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic Supplementary Material

Below is the link to the electronic supplementary material.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Israelsson, A., Seiger, A. & Laukka, P. Blended Emotions can be Accurately Recognized from Dynamic Facial and Vocal Expressions. J Nonverbal Behav 47, 267–284 (2023). https://doi.org/10.1007/s10919-023-00426-9

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10919-023-00426-9