Abstract

Affective states are expressed in an individual’s physical appearance, ranging from facial expressions and body postures to indicators of physiological arousal (e.g., a blush). Confirming the claimed communicative function of these markers, humans are capable of distinguishing between a variety of discrete emotion displays. To explain the underlying mechanism, it has been suggested that characteristic bodily changes within the observer, including physiological arousal and mimicry, facilitate the interpretation of an expression. The current study aims to create a holistic picture of emotion perception by (1) using three different sources of emotional information (prototypical facial expressions, bodily expressions, and subtle facial cues) and (2) measuring changes in multiple physiological signals (facial electromyography, skin conductance level, skin temperature, and pupil size). While participants clearly discriminated between the perceived emotional expressions, there was no overall one-to-one correspondence with their physiological responses. Some specific but robust effects were observed. Angry facial expressions were consistently responded to with a peak in skin conductance level. Furthermore, sad body expressions were associated with a drop in skin temperature. Happy faces, the best recognized expression, also elicited congruent facial muscle responses, which supports the potential role of embodied simulation in emotion recognition. Lastly, tears were not only rated as highly emotionally intense but also evoked a peak in skin conductance level in the observer. The absence of distinct physiological responses to other expressions could be explained by affect sharing serving no function in a non-interactive experimental context. Consequently, emotional alignment in body and mind might occur especially in real social situations, which future research should take into account.


Humans are highly responsive to others’ displays of emotions. While these can differ in form, content and context, they share the potential to resonate in the observer’s body: For example, one’s heart starts beating faster when seeing a person blush during a talk, one’s eyes get wet when watching a grieving person in the movies and even a smiling face in an ad can make the observer mirror the expression. From a functional perspective, physiological changes in the context of emotion perception have been suggested to assist the identification of the observed person’s affective state (Niedenthal, 2007; Prochazkova & Kret, 2017). In the current study, we aim to shed light on the perception of discrete emotional expressions from the face and body, subtle emotion cues, and their corresponding physiological dynamics.

Nonverbal communication of emotion with conspecifics is a shared mechanism among social animals to sustain life in groups (Kim & Kret, in press; Kret et al., 2020). Communicating emotional states can have direct survival benefits: For example, signaling disgust when faced with rotten food or displaying fear when a predator is approaching can inform conspecifics to adjust their behavior (Marsh et al., 2005; Seidel et al., 2010; Curtis et al., 2011). In the long run, understanding and responding to emotions of group members can strengthen social bonds (Keltner & Haidt, 1999; Fischer & Manstead, 2016; Palagi et al., 2020). While leading research on emotion displays in humans has focused on prototypical facial expressions (Ekman et al., 1980; Ekman, 1992, 1993), the repertoire of nonverbal emotion signals is much broader in real life: not only the face but the entire body is critically involved in communicating affect, via posture, movements, or gestures (de Gelder, 2009; Dael et al., 2012; Witkower & Tracy, 2019). In addition, changes in physiological arousal can be reflected in an individual’s face, for example as a blush or dilated pupils. These “emotional byproducts” can provide additional cues to the observer (Levenson, 2003; Shariff & Tracy, 2011; Kret, 2015). To date, our knowledge about how different types of expressions are processed and perceived is still limited (e.g., Crivelli et al., 2016; Kret & Straffon, 2018).

In concert with central nervous system processes, physiological responses, i.e., (de)activations of the peripheral nervous system, accompany and might even inform the emotional experience elicited in observers. For example, changes in facial muscle activity associated with distinct affective states (Brown & Schwartz, 1980; Ekman & Rosenberg, 2005) have frequently been described during viewing of images of prototypical emotional facial expressions (e.g., Rymarczyk et al., 2011; Bornemann et al., 2012; Varcin et al., 2019). Furthermore, increases in sympathetic arousal, as indexed by changes in electrodermal activity (e.g., Vrana & Gross, 2004; Tsunoda et al., 2008; Banks et al., 2012) or pupil dilation (Kret, Roelofs, et al., 2013; Kret, Stekelenburg, et al., 2013; Jessen et al., 2016; Burley et al., 2017), have been observed when participants were shown different prototypical facial emotion displays. In contrast to specific facial muscle activations, however, changes in these markers of sympathetic activity have been suggested to arise from perceiving highly emotionally arousing stimuli in general, independent of their affective content (Bradley et al., 2008, 2017). Activation of the parasympathetic branch of the autonomic nervous system (ANS), resulting in an initial decrease in heart rate (reflecting a freezing response), has been described specifically in response to expressions of anger (Roelofs et al., 2010; Noordewier et al., 2020; however, see Dimberg, 1982). While these findings support the general idea that perceived emotional expressions resonate within the observer’s body, little is known about the generalizability of these effects across expression modalities and physiological channels, since these are rarely compared directly (however, see Alpers et al., 2011; Kret, Roelofs, et al., 2013; Kret, Stekelenburg, et al., 2013).
Using multiple physiological measures, the current study explores the specificity of bodily responses when perceiving prototypical facial expressions of emotion, bodily expressions of emotion, and subtle emotion cues.

In line with influential emotion theories that highlight bodily states as constitutive parts of affect, such as the James-Lange Theory of Emotion (James, 1884; Lange, 1912) or the Somatic marker hypothesis (Damasio, 1996), researchers have tried to identify patterns in ANS activity for the experience of distinct emotional states (Friedman, 2010). Although physiological information might not be sufficient for a precise classification (Siegel et al., 2018), integrated signals from multiple bodily systems as well as predictions about one’s affective state have been proposed to inform subjective emotional experience (Garfinkel & Critchley, 2013; Pace-Schott et al., 2019). But how does this relate to cases in which our own body becomes a platform on which other individuals’ emotions are reflected? Spontaneous mimicry of emotional expressions has been suggested not only to influence the emotional experience of the mimicker (Hatfield et al., 1993; Prochazkova & Kret, 2017) but also to facilitate recognition of the mimicked individual’s emotions (Niedenthal, 2007; Palagi et al., 2020). To date, the role of mimicry in emotion recognition has mostly been investigated in facial muscle activity, and evidence for a supporting role is mixed (against: Blairy et al., 1999; Hess & Blairy, 2001; for: Sato et al., 2013; meta-analysis: Holland et al., 2020). Importantly, physiological responses to another person’s emotional expression can go beyond facial mimicry (Prochazkova & Kret, 2017), and access to a variety of signals and their integration might be crucial for emotion recognition.

The current study investigates how perceiving emotional expressions that vary in display modality and content affects the observer’s interpretation and physiology: we (1) measured multiple bodily signals while participants were presented with prototypical facial and bodily expressions of emotion as well as subtle facial emotion cues, and (2) asked participants to report how they interpreted each emotion and how intensely they perceived it. Without a priori hypotheses about the interplay between the different variables, this approach allowed us to explore the possibility of distinct bodily responses to different emotional expressions and to evaluate their subjective interpretations, thus gaining insight into emotion processing on multiple levels.

Method

Participants

In total, 71 students from Leiden University, the Netherlands, participated in the experiment (42 female; mean age = 23.36 years, SD = 3.22, range: 19–34 years). Inclusion criteria were normal or corrected-to-normal vision, no regular use of medication or other substances, and no history of psychiatric or neuropsychological disorders. Informed consent was provided prior to participation, and participants were reimbursed with either 3 course credits or €10.50. The experimental procedures were in accordance with the Declaration of Helsinki, and the study was reviewed and approved by the Psychology Ethics Committee of Leiden University (CEP18-1029/406; November 2018). Of the 71 participants tested, the facial electromyography, skin conductance, and skin temperature recordings of three participants were affected by technical problems, and pupil size was not measured for three other participants, leaving N = 68 for each set of measures.

Stimuli

Pictures for the three different expression modalities, namely face, body, and subtle cues, were taken from existing stimulus databases and edited in Adobe Photoshop (version CC). For the prototypical facial expressions, we selected pictures of 8 identities from the NimStim set of Facial Expressions (Tottenham et al., 2009), each displaying happy, angry, sad, fearful, and neutral expressions (40 stimuli in total; overall recognition rate in validation studies: M = 82.14% and SD = 5.42%). The bodily expressions were taken from the bodily expressive action stimulus test (BEAST; de Gelder & Van den Stock, 2011); similarly, our set encompassed 8 identities, each displaying happy, angry, sad, fearful, and neutral postures (40 stimuli in total; overall recognition rate in validation studies: M = 94.93% and SD = 2.29%). The backgrounds of the facial and bodily stimuli were cut out and replaced with a uniform gray background (RGB: 145, 145, 145). Furthermore, gray-scale versions of all body stimuli were created to control for effects of clothing color, and a Gaussian blur was applied to the faces in the body stimuli to control for facial expressions. In addition, three subtle facial cue stimuli (blush, dilated pupils, and tears) were created by manipulating the neutral expression of each of the eight identities, resulting in 24 subtle cue stimuli (for an example, see Fig. 1a). For the stimuli with dilated pupils, the original pupil size in each picture was increased by 23% on average so that the dilation was clearly visible. The “tears” stimuli were made by artificially adding a tear to the actor’s left cheek, increasing the redness of the sclera by making the veins more visible, and adding a reflection and watery blur to the eyes. Lastly, “blush” stimuli were created by increasing the redness of the cheek region. In total, there were 104 stimuli.

a Visualization of the subtle cue stimuli for one stimulus identity. The respective neutral facial expression from the NimStim set of Facial Expressions (first; Tottenham et al., 2009) was manipulated by adding a blush (second), tears (third) or dilated pupils (last). b Trial structure of the Passive Viewing task (left) and the Emotion Labeling task (right)

Procedure

After participants provided informed consent, the physiological recording equipment was applied, starting with the electrodes for skin conductance level (SCL), then the electrodes for facial electromyography (EMG), and lastly a skin temperature (SKT) sensor (for details, see Measurements section). To allow the signals to reach a stable baseline, a rest period of approximately 10 min preceded data collection. In total, participants performed three tasks: a passive viewing task (PVT), an emotion labeling task (ELT), and an emotional dot-probe task, only the first two of which are discussed in this paper. During both the PVT and the ELT, eyetracking data was recorded (see Measurements section), and a chin rest was used to ensure a stable head position.

The tasks were presented using E-Prime (version 2; Schneider et al., 2002) on a 21.5-inch Dell S2240Tb touch screen (1920 × 1080 resolution, 60 Hz refresh rate). The background color of all screens (fixation, stimulus, blank) was set to gray (RGB: 145, 145, 145). All participants first completed the PVT, which allowed us to measure the initial response to the emotional expressions without a secondary task. Each trial started with a fixation cross presented for 500 ms, followed by a 4,000-ms presentation of one of the stimuli described above (460 × 510 pixels). Each stimulus was followed by a blank screen of 3,500, 4,000, or 4,500 ms before the next trial (the inter-trial interval duration varied between participants). Due to a coding error, a fearful face instead of a face with added tears was presented for one of the 8 stimulus identities; this trial was excluded from data analysis (seven instead of eight trials for this stimulus category). Apart from that, each of the remaining 102 stimuli was presented once, in randomized order. After a short break, participants continued with the ELT. Each trial started with a fixation cross lasting 500 ms, followed by one of the expressions for 1 s. Afterwards, a question appeared next to the stimulus, asking participants to indicate which of five expression categories (angry, happy, scared [hereafter referred to as “fearful”], sad, or neutral) was displayed in the picture. In a second step, they rated how emotionally intense they perceived the stimulus to be, using a slider ranging from neutral to very emotional (scale 0–100). There were no time constraints on the ratings, and each expression was rated twice (208 trials; see Fig. 1b for a visualization of the tasks). The eyetracking recording was then stopped, and all electrodes were removed for the subsequent emotional dot-probe task.
Upon completion of all three tasks, participants completed the self-report version of the Liebowitz Social Anxiety Scale (LSAS-SR; Liebowitz, 1987; Fresco et al., 2001), the Autism Spectrum Quotient (AQ; Baron-Cohen et al., 2001), and the short version of the Empathy Quotient (EQ; Baron-Cohen & Wheelwright, 2004), in that order. As the questionnaire scores were not included in the main analyses, descriptive statistics of these measures can be found in Table 1 in Online Resource 2. The total duration of the study was approximately 90 min.

Measurements

Pupil Size

Eyetracking data was recorded using a Tobii X2-60 eyetracker (sampling rate: 60 Hz) to which event markers were sent via the presentation software. Filtering of the data as well as artifact identification and rejection were undertaken in the PhysioData Toolbox (Sjak-Shie, 2019) according to the guidelines described in Kret and Sjak-Shie (2019).
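Among other steps, the cited guidelines involve rejecting pupil samples whose dilation speed is implausibly high. The sketch below illustrates only that step, in simplified form and under our own assumptions: samples arrive as parallel lists of timestamps (in seconds) and pupil diameters, the speed of each sample is the larger of its backward and forward absolute change per second, and samples whose speed exceeds the median plus a multiple of the median absolute deviation (MAD) are dropped. The multiplier `n_mad` and its default value are hypothetical, and this is not the PhysioData Toolbox implementation.

```python
from statistics import median


def dilation_speed(t, d):
    """Larger of the backward and forward absolute change per second at each sample."""
    n = len(d)
    speeds = []
    for i in range(n):
        back = abs(d[i] - d[i - 1]) / (t[i] - t[i - 1]) if i > 0 else 0.0
        fwd = abs(d[i + 1] - d[i]) / (t[i + 1] - t[i]) if i < n - 1 else 0.0
        speeds.append(max(back, fwd))
    return speeds


def reject_speed_outliers(t, d, n_mad=16.0):
    """Replace samples whose dilation speed exceeds median + n_mad * MAD with None.

    `n_mad` is a hypothetical default. Samples adjacent to a spike are flagged
    too, because their backward or forward difference includes the spike.
    """
    speeds = dilation_speed(t, d)
    med = median(speeds)
    mad = median(abs(s - med) for s in speeds)
    threshold = med + n_mad * mad
    return [None if s > threshold else x for s, x in zip(speeds, d)]
```

Rejected samples are returned as `None` so that they can later be treated as missing, rather than silently interpolated.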

EMG

Facial muscle activity related to the observation of emotional expressions was measured over the Corrugator supercilii and Zygomaticus major regions (hereafter referred to as “corrugator” and “zygomaticus”). In total, five 4-mm reusable Ag/AgCl surface electrodes were attached to the participant’s face: two over each region of interest on the left side of the face and one ground electrode at the center of the forehead, just below the hairline, following the guidelines of Fridlund and Cacioppo (1986). Data was recorded with the BIOPAC Dual Wireless EMG BioNomadix System (2000 Hz sampling rate). Initial preprocessing of the raw EMG data was performed in the PhysioData Toolbox (Sjak-Shie, 2019). Before rectification of the signal, a 28-Hz high-pass FIR filter, a 200-Hz low-pass FIR filter, and a 50-Hz notch filter were applied to the EMG data.

Skin Conductance

The electrodes measuring changes in SCL were attached to the index and ring fingers of the participant’s non-dominant hand. Data was recorded with the BIOPAC Systems EDA 100C module (2000 Hz sampling rate, gain: 5 µS/V, 10 Hz low-pass filter), and event triggers were sent from the presentation software via parallel port. Within the PhysioData Toolbox (Sjak-Shie, 2019), the recorded data was filtered with a 2 Hz low-pass filter (Chênes et al., 2013).

Skin Temperature

A fast-response thermistor (TSD202A, BIOPAC) was placed below the participant’s right cheekbone to record changes in cheek temperature. Data was acquired with the BIOPAC Systems SKT100C module (2000 Hz sampling rate, gain: 2 °F/V, 10 Hz low-pass filter). As for the other measures, the PhysioData Toolbox (Sjak-Shie, 2019) was used for further filtering (1 Hz low-pass; Chênes et al., 2013).

Data Analysis

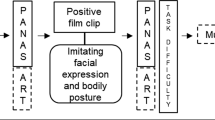

To shed light on different aspects of the processing of emotional expressions, we defined three analyses investigating (1) subjective interpretation, (2) physiological signal changes, and (3) the link between the two levels (see Fig. 2 for a visualization and further explanation). Since the study was not specifically designed for the third analysis, it should be considered a pilot analysis, and it is described only in Online Resource 4. Prior to the analysis, we screened each dependent variable for irregularities. For the physiological measures, we combined repeated visual inspection with statistical and literature-based thresholds; an overview of the outlier criteria can be found in Online Resource 1. In addition, missing trials in the EMG, SKT, and SCL recordings were coded as missing values (subject 8: 3 trials; subject 21: 2 trials). The data for all physiological channels were downsampled by exporting average values within five 100-ms time bins before stimulus onset (baseline window) and 75 100-ms time bins after stimulus onset (response window). A baseline correction was then performed for each trial by subtracting the baseline from all data points of the corresponding response window. While the entire response window (4 s stimulus presentation and 3.5 s blank screen) was used in the analysis of the relatively slowly changing SCL and SKT signals (Shearn et al., 1990; Dawson et al., 2016), EMG activity was examined only during stimulus presentation (Kret, Roelofs, et al., 2013; Kret, Stekelenburg, et al., 2013). To avoid distortions by the initial light reflex following stimulus onset (Bradley et al., 2008), the analysis of pupil size changes was restricted to the last two seconds of stimulus presentation.

Visualization of the three analysis approaches. In Analysis 1, the subjective interpretation (emotion recognition and intensity judgments) of the different emotional expressions was examined. In Analysis 2, the effect of perceiving different emotional expressions belonging to the same modality on the shape of five different physiological signals was explored. In Analysis 3, trial-wise summary measures of expression-specific signal changes in all physiological channels were taken to fit a model on self-reported emotion labels and the generalizability of these observed patterns was evaluated using different data sets (test sample, inaccurate trials and subtle emotional cues; see Online Resource 4 for a more detailed description)
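The downsampling and baseline correction described at the start of the Data Analysis section can be sketched as follows. This is a minimal illustration, not the authors’ pipeline (which exported bin averages from the PhysioData Toolbox). It assumes one channel stored as a list of samples, a known sampling rate `fs`, the sample index of stimulus onset, and that the baseline is the mean of the five pre-onset bins. The hypothetical `WINDOWS` mapping restates the channel-specific analysis windows from the text in bin units.

```python
from statistics import fmean

BIN_MS = 100  # bin width used in the text


def bin_means(signal, fs, start, n_bins, bin_ms=BIN_MS):
    """Average `n_bins` consecutive bins of `bin_ms` starting at sample `start`."""
    per_bin = int(round(fs * bin_ms / 1000))
    if start < 0 or start + n_bins * per_bin > len(signal):
        raise ValueError("requested bins fall outside the recorded signal")
    return [fmean(signal[start + k * per_bin:start + (k + 1) * per_bin])
            for k in range(n_bins)]


def baseline_corrected_bins(signal, fs, onset, n_baseline=5, n_response=75):
    """Response bins of one trial minus the mean of the pre-onset baseline bins."""
    per_bin = int(round(fs * BIN_MS / 1000))
    baseline = fmean(bin_means(signal, fs, onset - n_baseline * per_bin, n_baseline))
    response = bin_means(signal, fs, onset, n_response)
    return [r - baseline for r in response]


# Response bins analysed per channel (bin 0 = first 100 ms after onset):
# SCL/SKT use the full 7.5 s, EMG the 4-s stimulus, pupil the last 2 s of it.
WINDOWS = {
    "scl": slice(0, 75),
    "skt": slice(0, 75),
    "emg": slice(0, 40),
    "pupil": slice(20, 40),
}
```

At 2000 Hz each 100-ms bin averages 200 samples; at the eyetracker’s 60 Hz it averages 6.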

Analysis 1 (Behavioral Analysis)

In the behavioral analysis, we investigated whether the specific content of the emotional expressions (categories: happy, angry, sad, or fearful versus neutral) and the modality in which it was displayed (face versus body) influenced recognition performance and perceived intensity in the ELT. In the first step, we therefore examined differences in the accuracy of recognizing specific emotional expressions from different expression modalities. Working at the trial level, we fitted a binomial generalized linear mixed-effects model on accuracy (0 or 1) with emotion category, expression modality, and their interaction as predictors. To account for individual differences in overall emotion recognition ability, we included a random intercept for subject.

In order to examine whether the perceived intensity of an emotional expression systematically varied depending on expressed emotion and/or the expression modality, we fitted a linear mixed-effects model on the intensity ratings of each participant with regard to the facial and bodily expressions. As in the analysis above, emotion category, expression modality, and an interaction between the two of them were defined as fixed effects and we added a random intercept for each subject.
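The two models above can be written out as equations. The notation is our own and assumes treatment (dummy) coding with neutral and body as reference levels: C = {angry, happy, sad, fearful}; E_{c,ij} = 1 if trial i of subject j shows emotion c (0 otherwise); F_{ij} = 1 for face stimuli; u_{0j} is the subject’s random intercept. The β and γ coefficients are estimated separately in each model. In lme4 syntax, one plausible specification would be `glmer(accuracy ~ emotion * modality + (1 | subject), family = binomial)` and `lmer(intensity ~ emotion * modality + (1 | subject))`, where the variable names are hypothetical.

```latex
% Accuracy: binomial GLMM with logit link
\[
\operatorname{logit}\Pr(\mathrm{correct}_{ij} = 1)
  = \beta_0 + \sum_{c \in C} \beta_c E_{c,ij} + \beta_F F_{ij}
    + \sum_{c \in C} \gamma_c E_{c,ij} F_{ij} + u_{0j},
  \qquad u_{0j} \sim \mathcal{N}(0, \sigma_u^2)
\]
% Perceived intensity: LMM with identity link
\[
\mathrm{intensity}_{ij}
  = \beta_0 + \sum_{c \in C} \beta_c E_{c,ij} + \beta_F F_{ij}
    + \sum_{c \in C} \gamma_c E_{c,ij} F_{ij} + u_{0j} + \varepsilon_{ij},
  \qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
\]
```

Under this coding, the γ_c terms carry the emotion × modality interaction: they state how much the face-versus-body difference for emotion c departs from that for neutral expressions.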

Finally, we examined the ratings of subtle facial cues. Given that their nature was very different from that of the other stimuli (i.e., artificially created and exclusively added to neutral facial expressions), we analyzed this modality separately. Furthermore, we focused on their perceived intensity, since there is no prior evidence that a specific emotion is associated with these cues; hence, they cannot be accurately labeled (see Table 2 in Online Resource 2 for an overview of the provided emotion labels). Thus, we used cue type (tear, blush, dilated pupils versus no cue/neutral) as the sole predictor in the LMM on the intensity scores and added a random intercept for subject.

All three models were fitted using the lme4 package (v1.1-23; Bates et al., 2015) in R 3.6.3 (R Core Team, 2020). After fitting each model, post hoc pairwise comparisons between factor levels and their interactions were calculated by contrasting estimated marginal means with the emmeans package (v1.4.8; Lenth, 2020). Because reporting the results of all pairwise comparisons would exceed the scope of this paper, they are listed in Tables 3–8 in Online Resource 2. Online Resource 2 also describes, and reports the results of, analyses exploring the effect of demographic and personality variables on emotion recognition performance and on the perceived intensity of emotional expressions.
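To illustrate what the post hoc comparisons contrast, the sketch below recovers cell means from the coefficients of a treatment-coded model with an emotion × modality interaction and forms pairwise differences within and between modalities. For a model containing only these categorical predictors and their full interaction, each cell’s estimated marginal mean equals its linear predictor, which is what `cell_means` returns. This is a simplification under stated assumptions: coefficient names follow R’s default naming for treatment contrasts, the coefficient values in any example are hypothetical, and for the binomial accuracy model the means are on the logit scale. emmeans additionally provides standard errors, tests, and multiplicity-adjusted p values, which are not reproduced here.

```python
from itertools import combinations

# Factor levels; whichever level is the reference simply has no coefficient.
EMOTIONS = ["neutral", "angry", "happy", "sad", "fearful"]
MODALITIES = ["body", "face"]


def cell_means(coef):
    """Linear predictor for every emotion x modality cell.

    Coefficient names follow R's treatment-contrast convention,
    e.g. 'emotionhappy', 'modalityface', 'emotionhappy:modalityface'.
    """
    means = {}
    for e in EMOTIONS:
        for m in MODALITIES:
            means[(e, m)] = (
                coef["(Intercept)"]
                + coef.get(f"emotion{e}", 0.0)
                + coef.get(f"modality{m}", 0.0)
                + coef.get(f"emotion{e}:modality{m}", 0.0)
            )
    return means


def pairwise_within(means, modality):
    """Differences between all pairs of emotion categories within one modality."""
    return {
        (a, b): means[(a, modality)] - means[(b, modality)]
        for a, b in combinations(EMOTIONS, 2)
    }


def between_modalities(means):
    """Face minus body difference for each emotion category."""
    return {e: means[(e, "face")] - means[(e, "body")] for e in EMOTIONS}
```

With five categories, `pairwise_within` yields ten contrasts per modality, and `between_modalities` yields the five face-versus-body contrasts.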

Analysis 2 (Physiological Analysis)

In the analysis of physiological data, we were specifically interested in identifying expression-specific changes in the shape of each physiological signal during passive viewing of emotional expressions. We therefore aimed to describe the entire time course within the response window of interest, whose duration depended on each signal’s temporal dynamics (see Data Analysis section). For modeling changes in pupil size, SKT, and SCL, we extended the approach of studies examining factors that affect pupil dilation (Wehebrink et al., 2018; Quesque et al., 2019) and used higher-order polynomials in linear mixed models (LMMs). Given the fast changes in EMG activity related to affective states (Van Boxtel, 2010) as well as variations in response shapes (Cacioppo et al., 1988), we did not expect higher-order polynomials to reliably capture signal changes in the two EMG channels within the 4-s response window. In previous research on the perception of static emotional expressions, EMG data was mostly analyzed over time periods of 1.5–2.5 s (Sato et al., 2008; Hermans et al., 2009; Rymarczyk et al., 2011, 2016; Bornemann et al., 2012) and, even when longer time windows were examined, the EMG signal was averaged over time (Kret, Roelofs, et al., 2013; Kret, Stekelenburg, et al., 2013; Vrana & Gross, 2004). To keep the temporal resolution similar across measures while still allowing a fine-grained description of the EMG time courses, we therefore chose to identify time bins in which the stimulus content affected the signal rather than describing the signal as a whole, similar to the approach of Achaibou and colleagues (2008). Both analysis approaches are outlined in more detail below.

Pupillometry, Skin Conductance, and Skin Temperature

The time courses of the pupil size, SCL, and SKT data were modeled using growth curve analysis (Mirman, 2014) with the nlme package (Pinheiro et al., 2020) in R (R Core Team, 2020). Separate analyses were run for the three emotional expression modalities (prototypical facial expressions, bodily expressions, and subtle facial cues). LMMs were fitted as follows: to capture the shape of the signal, first- and second-order orthogonal polynomials were used to model changes in pupil size, and first-, second-, and third-order polynomials were used for the SCL and SKT models, based on visual inspection of the overall shape of the time courses per subject. Within each expression modality, the emotion category (subtle cues: cue type) of the stimulus was included as a categorical predictor (prototypical facial/bodily: angry, happy, sad, fearful, and neutral; subtle: blush, dilated pupils, tears, and neutral). Since these predictors of interest were assumed to influence the shape of the signal, their interactions with the polynomial terms were added as fixed effects. Given the observed individual differences in the overall shape of the time series, a random intercept and random slopes for the polynomial terms were specified at the subject level. To account for autocorrelation between subsequent data points, an autoregressive correlation structure with trials nested in subjects as grouping factor was included. The Nelder-Mead method was chosen for optimization. Given the complex model structure, we increased the maximum number of iterations and the maximum number of iterations for the optimization step inside the optimization (msMaxIter) to 5000, and the number of iterations for the EM algorithm (niterEM) and the maximum number of evaluations to 1000. Since the model residuals were not normally distributed, we additionally applied clustered bootstrapping to estimate confidence intervals of the coefficients.
Thus, in addition to the parametric approach of determining the statistical significance of fixed effects with conditional F-tests and of individual fixed-effect coefficients with conditional t-tests, we calculated the corresponding nonparametric confidence intervals. Given the large number of statistical parameters, only the results of the F-tests and the interpretation of the analyses are reported in the text, whereas the t-statistics and the nonparametric confidence intervals can be found in Tables 1–6 in Online Resource 3. Based on previous findings (e.g., Lang et al., 1993; Bradley et al., 2008, 2017; Kosonogov et al., 2017), we additionally explored whether overall emotional intensity, rather than specific emotion expression categories, could explain a large amount of variation in the physiological signal changes (see Online Resource 3, Tables 10–12 and Fig. 2). Because our stimuli were not controlled for global and local brightness and contrast, pupil size changes related to emotional content might have been confounded by these visual properties in our analyses. For conciseness, these results are reported only in Online Resource 3 (Tables 7–9 and Fig. 1).
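Two ingredients of these growth curve models can be illustrated in a few lines. The first function builds orthonormal polynomial time terms of the kind commonly used in growth curve analysis (R’s `poly()` returns the same columns up to sign), here via modified Gram-Schmidt on centred powers of time. The second computes a percentile confidence interval by resampling whole subjects with replacement, one common form of clustered bootstrapping. Both are sketches under our own assumptions: the exact bootstrap scheme is not specified here, and `mean_of_subject_means` is a hypothetical stand-in for refitting the LMM on each bootstrap sample.

```python
import random
from math import sqrt


def orthogonal_poly(times, degree):
    """Orthonormal polynomial time terms of degree 1..degree.

    Columns sum to zero, are mutually orthogonal, and have unit length.
    """
    n = len(times)
    mean_t = sum(times) / n
    x = [t - mean_t for t in times]          # centre time for numerical stability
    basis = [[1.0 / sqrt(n)] * n]             # normalised constant column
    for p in range(1, degree + 1):
        v = [xi ** p for xi in x]
        for q in basis:                       # modified Gram-Schmidt step
            dot = sum(a * b for a, b in zip(v, q))
            v = [a - dot * b for a, b in zip(v, q)]
        norm = sqrt(sum(a * a for a in v))
        basis.append([a / norm for a in v])
    return basis[1:]                          # drop the constant column


def cluster_bootstrap_ci(clusters, statistic, n_boot=1000, alpha=0.05, seed=1):
    """Percentile CI from resampling whole clusters (subjects) with replacement."""
    rng = random.Random(seed)
    estimates = sorted(
        statistic([rng.choice(clusters) for _ in clusters])
        for _ in range(n_boot)
    )
    lower = estimates[int(alpha / 2 * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


def mean_of_subject_means(clusters):
    """Hypothetical stand-in statistic (the models above would be refitted instead)."""
    return sum(sum(c) / len(c) for c in clusters) / len(clusters)
```

Resampling subjects rather than individual time points keeps each subject’s autocorrelated trials together, which is why the cluster, not the sample, is the unit of resampling. For the 75 SCL and SKT response bins, `orthogonal_poly(range(75), 3)` would give linear, quadratic, and cubic terms whose coefficients can be interpreted independently.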

Facial EMG

Since there was no empirical basis for expecting a particular shape of the two EMG signals during our stimulus presentation window (4 s), our analysis aimed to identify the parts of the signal in which a specific emotional expression differed significantly from the respective neutral expression. Here, we extended an approach by Achaibou and colleagues (2008), who tested for significant differences in EMG activity during stimulus presentation by computing t-tests between activations related to angry versus happy facial expressions in 100-ms time bins. In contrast to their analysis, however, we (1) ran multilevel models instead of t-tests (including random variation and using the nlme package [Pinheiro et al., 2020], consistent with the other analyses reported here), (2) compared each emotion category (happy, angry, fearful, and sad) against neutral as a control condition, and (3) used a split-half approach (i.e., we first tested for effects in one half of the sample [training set] and then validated the significant results in the other half [test set]). The two sets were matched by gender but otherwise randomly generated. This third adjustment allowed for hypothesis-free exploration in one half of the data and confirmatory tests in the other half (Wagenmakers et al., 2012). As for the pupil size, SCL, and SKT data, separate analyses were performed for the different expression modalities. Furthermore, data from the corrugator and zygomaticus regions were analyzed separately and, similarly to Achaibou et al. (2008), only two conditions were contrasted in each test (i.e., one emotion category against neutral). Thus, for each of the 40 100-ms time bins and for each emotional expression, we fitted separate LMMs on the mean EMG activity (filtered and rectified, see Measurements section) of the corrugator and the zygomaticus, with emotion category as fixed effect and participant ID as random effect, using the training sample.
If an emotion category differed significantly from neutral in a time bin (p < 0.05), the same model was fitted to the data from the test sample. Only if the difference between the signals related to the emotional and the neutral expression was significant in both the training and the test sample was the EMG signal regarded as affected by the presentation of the respective emotional expression within this time bin.
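The split-half logic can be sketched as follows, under our own assumptions: subjects are split at random within each gender so that both halves have matched gender counts, and a time bin counts as affected only when the emotion-versus-neutral contrast is significant in the training half and again in the test half. The per-bin LMM is abstracted away: `p_train` holds hypothetical training-half p values, and `test_p_value` is a hypothetical callback that would fit the same model on the test half.

```python
import random


def gender_matched_split(gender_by_subject, seed=0):
    """Randomly split subjects into two halves, separately within each gender.

    When a gender group has an odd size, the extra subject goes to the
    second (test) half.
    """
    rng = random.Random(seed)
    groups = {}
    for subject, gender in gender_by_subject.items():
        groups.setdefault(gender, []).append(subject)
    train, test = [], []
    for gender in sorted(groups):
        members = sorted(groups[gender])  # sort so the seed alone fixes the split
        rng.shuffle(members)
        half = len(members) // 2
        train.extend(members[:half])
        test.extend(members[half:])
    return train, test


def confirmed_bins(p_train, test_p_value, alpha=0.05):
    """Bins significant in the training half and, when re-tested, in the test half.

    `test_p_value(bin_index)` is only called for bins that were significant
    in the training half (short-circuit `and`).
    """
    confirmed = []
    for i, p in enumerate(p_train):
        if p < alpha and test_p_value(i) < alpha:
            confirmed.append(i)
    return confirmed
```

Because the confirmatory test runs only on bins selected in the independent training half, a bin is retained only when the effect replicates in new subjects.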

Results

Behavioral Results (Analysis 1)

Descriptive statistics of the behavioral responses can be found in Table 1 in Online Resource 2. Contrary to expectations based on the stimulus validation studies (Tottenham et al., 2009; de Gelder & Van den Stock, 2011), recognition rates were lower for bodily expressions (M = 0.776, SD = 0.098) than for prototypical facial expressions (M = 0.885, SD = 0.081). The recognition rates for the same bodily expressions in the original validation study were higher, but our results are in line with the means obtained in previous research (Kret, Roelofs, et al., 2013; Kret, Stekelenburg, et al., 2013).

Prototypical Facial and Bodily Expressions of Emotion

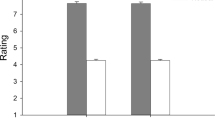

The model on accuracy in emotion recognition yielded significant main effects of emotion category, χ2(4) = 185.788, p < 0.001, and modality (body versus face), χ2(1) = 39.921, p < 0.001. Importantly, the significant interaction between emotion category and expression modality, χ2(4) = 203.438, p < 0.001, sheds more light on the interplay between the two variables affecting accuracy in emotion recognition (see Fig. 3a and Table 3 in Online Resource 2). Overall, emotional expressions were recognized better than neutral expressions when displayed on the face, whereas the opposite was observed for the body. Specifically, within the bodily expressions, the neutral expression was recognized significantly better than all emotional bodily expressions except fear. Fearful body expressions were recognized better than angry and happy bodily expressions. Finally, both angry and sad bodily expressions were more likely to be labeled correctly than their happy counterparts. In contrast, when emotions were presented on the face, happy facial expressions were best recognized, followed by angry facial expressions and then fearful faces, which received higher accuracy rates than sad facial expressions (all p values ≤ 0.029). Lastly, neutral facial expressions were least well recognized. When comparing between modalities, there was no difference in the odds of labeling sad facial and bodily expressions accurately. However, while angry, happy, and fearful expressions were more likely to be accurately recognized when they were displayed on the face, neutral expressions were more easily recognized from the body (see Table 4 in Online Resource 2).

Fig. 3. (a) Predicted accuracies of labeling stimuli belonging to the four emotion categories (angry, happy, sad, fearful) and neutral within the body (red) and face (blue) modality and (b) their respective predicted intensity ratings. (c) Predicted intensity ratings for the subtle facial expressions by cue type. Whiskers represent confidence intervals. Significant differences between factor levels are indicated by brackets (red = between categories within bodily expressions, blue = between categories within facial expressions, grey = within category across modalities or between subtle cue types). Solid line = p < .001, dashed line = p < .01, dotted line = p < .05.

Both emotion category and modality were significant predictors in the model on perceived emotional intensity, category: F(4, 5521) = 420.987, p < 0.001; modality: F(1, 5521) = 3.865, p = 0.049. The significant interaction between the two predictors highlighted their interdependency, F(4, 5521) = 37.339, p < 0.001 (see Fig. 3b and Table 5 in Online Resource 2). Within the facial modality, sad expressions received lower intensity ratings than the three other emotions. Happy and fearful facial expressions received lower intensity scores than angry ones but did not differ significantly from each other. In contrast, among the bodily expressions, happy expressions received the second-lowest intensity scores and were rated significantly lower in intensity than angry, sad, and fearful expressions, which did not differ significantly from each other. Comparing the two modalities, angry, happy, neutral, and fearful expressions were all perceived as more intense when displayed on the face, whereas there was no difference for sad expressions (see Table 6 in Online Resource 2).

Subtle Facial Cues

A separate model on the perceived intensity of the subtle facial cues revealed that the presence of a cue was a significant predictor of the intensity rating, F(3, 2097) = 669.31, p < 0.001. Faces with dilated pupils were rated as intense as the same faces with average pupil size (neutral). In contrast, faces with a blush received higher ratings than both neutral faces and faces with dilated pupils. Faces with tears were rated as significantly more intense than faces with either of the other two cue types and than neutral faces (see Fig. 3c and Tables 7 and 8 in Online Resource 2).

Physiological Results (Analysis 2)

Skin Conductance

Prototypical Facial Expressions

In the LMM, the linear polynomial was a significant predictor of changes in SCL, F_linear(1, 181345) = 9.457, p = 0.002. Furthermore, all interactions between emotion category and the three polynomials were significant, F_linear×category(4, 181345) = 5.596, p < 0.001; F_quadratic×category(4, 181345) = 12.274, p < 0.001; F_cubic×category(4, 181345) = 15.145, p < 0.001, indicating that the shape of the signal differed between emotional and neutral expressions. Based on the t-statistics (Table 1 in Online Resource 3) and the predicted value graphs (Fig. 4a) for the distinct emotion categories, angry, happy, and sad facial expressions were more strongly associated than neutral expressions with an initial peak at around 2 s, followed by a decline over time that was strongest for happy expressions. Fearful faces were followed by a cubic component in the signal, but this component was weaker than for the other categories and lacked the pronounced initial peak. Notably, only for the interaction between angry facial expressions and the cubic trend did the bootstrap confidence interval of the model coefficient exclude 0, indicating that this was the only robust effect.
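The SCL and SKT models describe each time course with linear, quadratic, and cubic polynomials of time and test how emotion category interacts with each term. The section does not state how the polynomials were constructed; assuming orthogonal polynomials, as is common in growth curve analysis, the sketch below builds such terms by Gram–Schmidt orthogonalization (similar in spirit to R's `poly()`) and combines hypothetical coefficients into a predicted curve. The function names, the 0–8 s grid, and the coefficient values are illustrative assumptions.

```python
import math


def orthogonal_time_polynomials(times, degree=3):
    """Orthonormal linear, quadratic, ... time terms, each orthogonal to the
    intercept and to all lower-order terms."""
    n = len(times)
    mean_t = sum(times) / n
    sd_t = math.sqrt(sum((t - mean_t) ** 2 for t in times) / n)
    # Standardize first so that high powers of time stay numerically well scaled
    z = [(t - mean_t) / sd_t for t in times]
    basis = [[1.0 / math.sqrt(n)] * n]  # normalized intercept column
    for d in range(1, degree + 1):
        v = [zi ** d for zi in z]
        # Modified Gram-Schmidt: remove the projection on each earlier column in turn
        for b in basis:
            proj = sum(vi * bi for vi, bi in zip(v, b))
            v = [vi - proj * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        basis.append([vi / norm for vi in v])
    return basis[1:]  # [linear, quadratic, cubic, ...] without the intercept


def predicted_curve(intercept, coefs, polys):
    """Combine condition-specific polynomial coefficients into a time course."""
    return [intercept + sum(c * p[i] for c, p in zip(coefs, polys))
            for i in range(len(polys[0]))]


times = [i / 10 for i in range(81)]  # hypothetical 0-8 s grid in 100 ms steps
linear, quadratic, cubic = orthogonal_time_polynomials(times)
# Hypothetical coefficients: a negative quadratic weight gives an inverted-U (peak) shape
curve = predicted_curve(0.0, [0.0, -1.0, 0.0], [linear, quadratic, cubic])
print(times[curve.index(max(curve))])  # 4.0 for this symmetric toy example
```

Because the terms are orthogonal, each coefficient (and each emotion-by-polynomial interaction) describes one aspect of the curve's shape independently of the others; an early peak like the one for angry faces arises from combining several terms.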

Fig. 4. Predicted time course of the baseline-corrected skin conductance level (SCL) signal related to passive viewing of (a) prototypical facial expressions and (b) bodily expressions by emotion category, as well as (c) subtle facial cues by cue type. The shaded areas indicate standard errors of the predicted means. Colored arrows indicate robust results in the clustered bootstrap analysis.

Bodily Expressions

As in the model on facial expressions, the linear polynomial significantly predicted SCL, F_linear(1, 181420) = 9.981, p = 0.002. In addition, the linear and cubic polynomials were involved in significant interactions with emotion category, F_linear×category(4, 181420) = 22.935, p < 0.001; F_cubic×category(4, 181420) = 5.541, p < 0.001, suggesting that emotional expressions conveyed by the body also affected the shape of the SCL signal. In this modality, however, only happy and, to a lesser degree, fearful expressions were associated with an increase in SCL, whereas angry expressions were associated with a stronger decline than neutral expressions (see Fig. 4b). SCL also decreased over time for sad bodily expressions, but this decrease followed a more cubic shape than for neutral expressions. The bootstrap analysis did not confirm the robustness of the direction of any effect in this model (see Table 2 in Online Resource 3 for all statistics).

Subtle Facial Cues

In the last SCL model, the linear polynomial was again a significant predictor, F_linear(1, 140024) = 8.855, p = 0.003, as were the interactions between all three polynomials and emotion category, F_linear×category(3, 140024) = 16.339, p < 0.001; F_quadratic×category(3, 140024) = 45.746, p < 0.001; F_cubic×category(3, 140024) = 11.745, p < 0.001. Thus, facial signs of emotional involvement, even without the context of prototypical emotion displays, affected SCL: based on the statistics (Table 3 in Online Resource 3) and the predicted time courses (Fig. 4c) for the three cue types versus neutral (no cue), the SCL signal decreased less for faces with an added blush and faces with dilated pupils, with even a slight late increase for the latter. Moreover, when participants observed faces with added tears, their SCL increased steeply, peaking around 2.5 s and declining quickly. Importantly, the coefficient for the interaction between the quadratic trend and the tears cue was the only coefficient that was consistently below 0 across the bootstrap samples, indicating that the observed SCL peak for tears was stable.
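Robustness in these results is judged by whether a coefficient's clustered bootstrap confidence interval excludes 0. The sketch below illustrates only the resampling logic, under simplifying assumptions: whole participants (clusters) are resampled with replacement, and a plain mean of per-trial effects stands in for refitting the mixed model and extracting the coefficient of interest on each bootstrap sample. The percentile interval, the function names, and the toy data are hypothetical.

```python
import random
import statistics


def clustered_bootstrap_ci(effects_by_participant, n_boot=2000, alpha=0.05, seed=1):
    """Percentile CI for a mean effect, resampling participants with replacement
    and keeping all trials of a drawn participant together."""
    rng = random.Random(seed)
    ids = list(effects_by_participant)
    boot = []
    for _ in range(n_boot):
        drawn = [rng.choice(ids) for _ in ids]  # same number of clusters as the data
        pooled = [x for pid in drawn for x in effects_by_participant[pid]]
        boot.append(statistics.fmean(pooled))  # stand-in for a refitted LMM coefficient
    boot.sort()
    k = int(n_boot * alpha / 2)
    return boot[k], boot[n_boot - k - 1]


def excludes_zero(ci):
    """Treat an effect as robust if its interval lies entirely above or below 0."""
    low, high = ci
    return low > 0 or high < 0


rng = random.Random(0)
# Hypothetical per-trial effect estimates for 30 participants x 20 trials
toy = {f"p{i:02d}": [rng.gauss(-0.3, 1.0) for _ in range(20)] for i in range(30)}
ci = clustered_bootstrap_ci(toy)
print(ci, excludes_zero(ci))
```

Resampling participants rather than single trials respects the dependence of trials within a person, which is why an effect can be significant in the LMM yet fail this robustness check.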

Skin Temperature

Prototypical Facial Expressions

Only the linear and cubic polynomials were significant predictors of the SKT signal in the response window, F_linear(1, 182620) = 5.622, p = 0.018; F_cubic(1, 182620) = 4.909, p = 0.027, but all interactions between the three polynomials and emotion category were significant, F_linear×category(4, 182620) = 8.518, p < 0.001; F_quadratic×category(4, 182620) = 6.948, p < 0.001; F_cubic×category(4, 182620) = 4.757, p = 0.001. Emotional and neutral facial expressions therefore also appeared to affect SKT changes differently. According to the model statistics (Table 4 in Online Resource 3) and predicted value plots (Fig. 5a), compared to neutral expressions, SKT increased more strongly following happy and fearful expressions, and the late increase was diminished following angry expressions. In addition, after an initial increase, cheek temperature already began to decline after approximately 6 s for sad and fearful expressions, which was not the case for the other facial expression categories. Importantly, no coefficient for any predictor was consistently larger or smaller than 0 in the bootstrap analysis.
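All LMM F tests in this section have denominator degrees of freedom in the hundreds of thousands. In that regime, df1 × F is approximately chi-square distributed with df1 degrees of freedom, so the reported p values can be sanity-checked with a closed-form chi-square tail. This is an approximation for checking only, not the procedure used to obtain the published p values; the helper names are hypothetical.

```python
import math


def chi2_sf(x, k):
    """Upper-tail probability of a chi-square(k) variable, closed form for integer k >= 1."""
    half = x / 2.0
    if k % 2 == 0:
        # Even k: exp(-x/2) * sum_{i < k/2} (x/2)^i / i!
        return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(k // 2))
    # Odd k: erfc(sqrt(x/2)) plus a finite series in half-integer powers of x/2
    tail = math.erfc(math.sqrt(half))
    tail += math.exp(-half) * sum(half ** (i - 0.5) / math.gamma(i + 0.5)
                                  for i in range(1, (k - 1) // 2 + 1))
    return tail


def approx_f_pvalue(f_value, df1):
    """Approximate p for F(df1, df2) when df2 is very large: df1 * F ~ chi-square(df1)."""
    return chi2_sf(df1 * f_value, df1)


print(round(approx_f_pvalue(5.622, 1), 3))  # F(1, 182620) = 5.622, reported p = 0.018
print(round(approx_f_pvalue(4.757, 4), 3))  # F(4, 182620) = 4.757, reported p = 0.001
```

With df1 = 1 the check reduces to a two-sided normal test on √F; for example, F = 5.622 corresponds to z ≈ 2.37.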

Fig. 5. Predicted time course of the baseline-corrected skin temperature (SKT) signal related to passive viewing of (a) prototypical facial expressions and (b) bodily expressions by emotion category, as well as (c) subtle facial cues by cue type. The shaded areas indicate standard errors of the predicted means. Colored arrows indicate robust results in the clustered bootstrap analysis.

Bodily Expressions

In the model describing SKT changes associated with viewing bodily expressions of emotion, the linear polynomial as well as the three interactions between each polynomial and emotion category were significant, F_linear(1, 182845) = 4.220, p = 0.040; F_linear×category(4, 182845) = 9.937, p < 0.001; F_quadratic×category(4, 182845) = 20.160, p < 0.001; F_cubic×category(4, 182845) = 6.151, p < 0.001. A closer look at the effect of bodily emotion on the shape of the signal showed that SKT rose for all emotions compared to neutral (Fig. 5b). However, while this increase was roughly linear for angry expressions, both happy and fearful expressions were associated with a more cubic-like signal shape, with stronger increases at the very beginning and end of the response window. In addition, SKT first decreased after viewing sad expressions and only started to increase after approx. 2.5 s. The coefficient describing this initial dip was also the only coefficient whose bootstrap confidence interval did not include zero, indicating its stability (see Table 5 in Online Resource 3 for an overview).

Subtle Facial Cues

Both linear and cubic polynomials significantly predicted changes in SKT in the subtle facial cue model, F_linear(1, 141599) = 7.225, p = 0.007; F_cubic(1, 141599) = 5.227, p = 0.022. Additionally, all interactions between emotion category and the three polynomials were significant, F_linear×category(3, 141599) = 5.543, p = 0.001; F_quadratic×category(3, 141599) = 24.200, p < 0.001; F_cubic×category(3, 141599) = 5.095, p = 0.002. Thus, adding subtle emotional cues to a neutral face may already alter the SKT response of the observer. According to the model statistics (Table 6 in Online Resource 3) and the predicted value graph (Fig. 5c), both faces with added tears and faces with dilated pupils were associated with an initial dip. For faces with tears, this dip turned into an increase after approximately 2 s (reaching a temperature level similar to that for faces without a cue); for faces with dilated pupils, it did not. Faces with a blush yielded a strong increase in cheek temperature that attenuated over time. The subsequent bootstrap analysis did not support the direction of any of these effects.

Facial EMG

Corrugator Supercilii

The split-half tests on differences in facial muscle activity between emotional and neutral expressions within distinct time bins yielded emotion- as well as time-bin-specific findings. When participants viewed happy compared to neutral facial expressions, activity over the corrugator supercilii region was significantly reduced in both the training and the test sample 500 ms, 600 ms, 1600 ms, and 3800 ms after stimulus onset (all ps < 0.05). Furthermore, angry facial expressions yielded lower EMG activity than neutral expressions 200 ms and 600 ms after stimulus onset, and the same was observed for fearful facial expressions 3600 ms after stimulus onset. Lastly, we did not find a replicable effect of sad facial expressions on the EMG signal (see Fig. 6a below and Table 13 in Online Resource 3). The analyses on the other expression modalities revealed that neither the emotional bodily expressions nor the emotional facial cues had a consistent effect on the corrugator signal across the training and the test sample.
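The EMG analysis keeps only time bins in which an emotional-versus-neutral difference is significant in both a training and a test sample. The section does not name the per-bin test, so the sketch below swaps in an exact sign-flip permutation test on per-participant differences; the random split, the alpha level, the same-sign requirement, and the function names are assumptions. Exact enumeration costs 2^n sign patterns per bin, so it is only practical for small halves; larger samples would need random sign flips instead.

```python
import itertools
import random
import statistics


def sign_flip_p(diffs):
    """Exact two-sided sign-flip permutation p-value for H0: mean difference = 0."""
    observed = abs(statistics.fmean(diffs))
    hits = total = 0
    for signs in itertools.product((1.0, -1.0), repeat=len(diffs)):
        flipped = [s * d for s, d in zip(signs, diffs)]
        total += 1
        if abs(statistics.fmean(flipped)) >= observed - 1e-12:
            hits += 1
    return hits / total


def replicated_bins(diffs_by_participant, alpha=0.05, seed=0):
    """Randomly split participants into two halves and return the indices of bins
    whose emotional-minus-neutral difference is significant, with the same sign,
    in both halves."""
    ids = sorted(diffs_by_participant)
    random.Random(seed).shuffle(ids)
    half = len(ids) // 2
    train, test = ids[:half], ids[half:]
    n_bins = len(diffs_by_participant[ids[0]])
    kept = []
    for b in range(n_bins):
        results = []
        for group in (train, test):
            d = [diffs_by_participant[pid][b] for pid in group]
            results.append((sign_flip_p(d), statistics.fmean(d)))
        (p1, m1), (p2, m2) = results
        if p1 < alpha and p2 < alpha and m1 * m2 > 0:
            kept.append(b)
    return kept
```

Requiring significance in two independent halves trades sensitivity for replicability, which is consistent with only a few isolated bins surviving for angry and fearful faces.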

Fig. 6. Time course of the filtered, baseline-corrected, and z-scored facial electromyography (EMG) signal over (a) the corrugator supercilii region and (b) the zygomaticus major region related to passive viewing of prototypical facial expressions by emotion category. The colored shaded areas around the values indicate standard errors of the predicted means. Signals were plotted and analyzed in time bins of 100 ms each. Colored vertical lines (and gray-shaded background areas between them) highlight time bins in which the EMG signal for an emotional expression differs significantly from neutral in both samples (training and test), with the color indicating the corresponding emotion category.
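The EMG figure describes a filtered, baseline-corrected, and z-scored signal analyzed in 100 ms bins. A minimal sketch of the binning, baseline-correction, and z-scoring steps follows, assuming that the raw trace has already been band-pass filtered and rectified, that the baseline consists of pre-stimulus bins, and that z-scoring pools all binned values within a participant. The sampling rate, the baseline length, and the function names are hypothetical.

```python
import statistics


def bin_signal(samples, fs_hz, bin_ms=100):
    """Average a (filtered, rectified) EMG trace into consecutive non-overlapping bins."""
    per_bin = int(round(fs_hz * bin_ms / 1000))
    n_full = len(samples) // per_bin  # drop an incomplete trailing bin
    return [statistics.fmean(samples[i * per_bin:(i + 1) * per_bin]) for i in range(n_full)]


def baseline_correct(bins, n_baseline_bins):
    """Subtract the mean of the first (pre-stimulus) bins from every bin."""
    base = statistics.fmean(bins[:n_baseline_bins])
    return [b - base for b in bins]


def zscore_within_participant(trials):
    """z-score all binned values of one participant with that participant's pooled mean and SD."""
    pooled = [v for trial in trials for v in trial]
    mu, sd = statistics.fmean(pooled), statistics.pstdev(pooled)
    return [[(v - mu) / sd for v in trial] for trial in trials]


# Hypothetical 1000 Hz trace: 200 ms baseline followed by 300 ms of stronger activity
trace = [1.0] * 200 + [2.0] * 300
bins = baseline_correct(bin_signal(trace, fs_hz=1000), n_baseline_bins=2)
print(bins)  # [0.0, 0.0, 1.0, 1.0, 1.0]
```

Z-scoring within participants removes between-person differences in overall EMG amplitude, so condition differences in each bin are expressed on a common scale.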

Zygomaticus Major

EMG activity over the zygomaticus major region was consistently elevated for happy versus neutral facial expressions from 700 ms after stimulus onset through almost the entire stimulus presentation (700–2600 ms, 2800–2900 ms, 3200–3900 ms; all ps < 0.05). Moreover, seeing a fearful facial expression was related to an enhanced EMG signal 1800–2200 ms after stimulus onset in both the training and the test sample. Activity during the presentation of angry and sad facial expressions did not differ significantly from that during neutral expressions (see Fig. 6b and Table 14 in Online Resource 3). Finally, activity over the zygomaticus major region was not altered when any of the emotional bodily expressions or facial cues were shown, compared to their neutral counterparts.
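Significant bins are reported here as contiguous ranges (e.g., 700–2600 ms). Assuming that each 100 ms bin is labeled by its onset, a short helper can collapse the replicated bins into such ranges. The bin list in the example is reconstructed from the ranges in the text, and the function name is hypothetical.

```python
def merge_bins(onsets_ms, bin_ms=100):
    """Collapse bin onsets into (first_onset, last_onset) runs of adjacent bins."""
    runs = []
    for t in sorted(onsets_ms):
        if runs and t - runs[-1][1] == bin_ms:
            runs[-1][1] = t  # extend the current run by one adjacent bin
        else:
            runs.append([t, t])  # start a new run
    return [tuple(r) for r in runs]


# Zygomaticus bins for happy vs neutral faces, reconstructed from the reported ranges
significant = list(range(700, 2700, 100)) + [2800, 2900] + list(range(3200, 4000, 100))
print(merge_bins(significant))  # [(700, 2600), (2800, 2900), (3200, 3900)]
```

Isolated bins, such as the corrugator effects at 500, 600, 1600, and 3800 ms for happy faces, come out as runs of length one or two.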

Discussion

The aim of our study was to explore how expressions of emotion resonate in an observer’s body and mind. There are three main findings. First, while participants distinguished between different emotional expressions in self-reports, their physiological changes did not strictly correspond to distinct emotion categories. Even though there was no 1–1 relationship between perception and physiological response, some robust physiological responses could be linked to the perception of certain emotional expressions, i.e., a peak in SCL for angry facial expressions and a decrease in SKT for sad bodily expressions. Second, specific facial muscle (de)activations were reproducibly observed following facial but not bodily expressions of emotion. Third, faces with tears were not only perceived as emotionally intense but also elicited a robust peak in observers’ skin conductance levels. In the remainder of the discussion, we elaborate on these findings in more detail.

Overall, participants were well able to recognize all emotional expressions. However, recognition varied across expression modalities as well as emotion categories: apart from displays of sadness, emotional facial expressions were better recognized than emotional bodily expressions, with happy faces being most easily identified (see also Kret, Roelofs, et al., 2013; Kret, Stekelenburg, et al., 2013; Martinez et al., 2016). One driving factor of this finding might be the intensity of the expression, which has been suggested to play a crucial role in recognizing emotions from both the body (Aviezer et al., 2012) and the face (Hess et al., 1997). Happy facial expressions, together with fearful facial expressions, received the second-highest intensity ratings in our study, which might have facilitated their recognition. While bodily expressions have been shown to be especially informative for the recognition of high-intensity emotions (Aviezer et al., 2012), the emotional body stimuli in our study were predominantly rated as less intense than their facial counterparts. Only sad expressions, which were also similarly well recognized in both modalities, did not differ in their intensity ratings between face and body. Our study thus supports the notion that intensity is a relevant factor in the recognition of emotional expressions. Given the overall high accuracy rates, our results further support that humans are highly capable of identifying and discriminating a variety of emotional expressions (Cowen & Keltner, 2019; Witkower & Tracy, 2019).

The clear distinction between emotions in self-reports was not reflected in participants’ physiology. Nevertheless, a few consistent relationships between discrete emotion categories and physiological markers were observed. Specifically, in the SCL signal, a cubic trend with an early peak appeared when participants observed angry facial expressions. This result is in line with previous studies showing that observing negative facial expressions (Banks et al., 2012), and anger in particular (Kreibig, 2010), tends to increase SCL (but see Vrana & Gross, 2004 for different results). As signals of direct threat, angry faces take a special role in emotion perception. Compared to other emotional expressions, their detection and processing occur in a privileged, speedy, and automatic manner (Feldmann-Wüstefeld et al., 2011), a phenomenon called the “anger-superiority effect” (Hansen & Hansen, 1988). Perceiving threat immediately puts the body into a fight-or-flight mode (Cannon, 1914), which is typically characterized by autonomic arousal and, among other changes, an increased SCL (Darrow, 1936). This automatic response may explain the observed increase in SCL in our study, with the early peak reflecting the fast processing of facial displays of anger as a potential threat.

The current study also yielded some novel findings related to the processing of sad expressions. Sadness is characterized by a low-arousal physiological state (Huron, 2018) and a conservation-withdrawal tendency which, however, is not consistently reflected across physiological channels (Kreibig et al., 2007). When observing sad bodily expressions, the participants in the current study showed an initial drop in their cheek temperature (see Salazar-López et al., 2015 for similar findings on negative images with low arousal). As previous research has shown that watching sad body movements can induce sadness in observers (Shafir et al., 2013), the cheek temperature drop in our study might result from induced sadness. However, compared to other facial regions, cheek temperature variations have not yet been studied extensively in the context of emotional responses (Ioannou et al., 2014; Clay-Warner & Robinson, 2015), so further research is needed to substantiate this suggestion. Apart from these two observations, we did not find evidence for a robust link between the perception of basic emotion displays and distinct ANS responses. Our findings thus challenge the idea that observers could use signals from their own ANS as a reliable indication of the observed individual’s state.

One characteristic of facial emotional expressions is that they tend to be mimicked (e.g., Rymarczyk et al., 2011; Bornemann et al., 2012; Varcin et al., 2019), which is believed to aid their recognition (Niedenthal, 2007; Palagi et al., 2020). Our examination of facial muscle responses revealed that happy facial expressions were not only best recognized in the current study but also elicited the most prominent and prolonged changes: an increase in zygomaticus activity and a decrease in corrugator activity, replicating previous findings (e.g., Vrana & Gross, 2004; Rymarczyk et al., 2011). The question arises why smiles, compared to other expressions, yielded such strong effects. In daily life, humans are constantly exposed to smiling faces, making the smile the most frequently observed expression (Somerville & Whalen, 2006). These smiles can have different meanings and may signal reward, dominance, or affiliation (Martin et al., 2017). In line with their assumed function to create and maintain social bonds (Keltner, 1995), past research has shown that smiles are frequently reciprocated in social interactions (Hess & Bourgeois, 2010). The relevance of smiles in interpersonal bonding might therefore be one explanation for the pronounced mimicry of smiles in our study. In addition, smiles have also been found to be mimicked when the observer is not directly addressed (e.g., see Mojzisch et al., 2006). These congruent facial responses have further been linked to specific neural activations in areas associated with embodiment and self-other distinction (Schilbach et al., 2008; see also Schilbach, 2015). According to the Simulation of Smiles model (SIMS; Niedenthal et al., 2010), congruent facial responses to smiles in a non-communicative context can also originate from knowledge-based simulations of the other’s emotional state instead of “real” emotional contagion.
Given that only expressions from the same modality, i.e., the face, and with high social signaling value, i.e., a smile, elicited facial muscle responses in the current study, embodied simulation might be a plausible explanation for our EMG findings. Without necessarily evoking the experience of happiness, the simulation of smiles could potentially even have facilitated emotion recognition (however, see Holland et al., 2020).

Crying has been claimed to be a uniquely human behavior and is linked to a complex pattern of ANS responses, with sympathetic activation being found most consistently (Bylsma et al., 2019). Another key finding of the current study is that faces with tears were perceived as emotionally intense and increased sympathetic arousal in the participants observing them. More specifically, adding tears to a neutral expression resulted in a robust peak in the observers’ SCL. Other research has demonstrated that tears increase the perceived sadness of others and thereby also the wish to help them (Küster, 2018). Perceiving tears thus seems to elicit approach behavior and induce sympathetic arousal. Although research on physiological responses to the observation of crying individuals is scarce, our findings substantiate the suggested function of tears as an effective call for social support (Balsters et al., 2013; Gračanin et al., 2018) by highlighting their strong resonance in the observer.

The above-described results include all robust findings of our study. Apart from these results, various other, non-robust emotion-specific effects on the physiological measures require further examination. For now, these effects should be considered confined to our sample and should not be generalized. Most remarkably, these physiological effects diverge considerably across the different expression modalities and emotion categories in our stimulus materials. The most prominent example is anger, which, compared to the respective neutral expressions, was responded to with an increase in SCL when shown in the face and a decrease in SCL when expressed by the body. In contrast, the exact opposite pattern became apparent in the SKT responses to angry faces (SKT decrease) and angry bodies (SKT increase). This lack of coherence between physiological channels is in line with our first key finding and complements evidence against an ‘all-or-none’ activation of the sympathetic nervous system and for a more differentiated view of ANS targets (Ax, 1953; Kreibig, 2010). Moreover, while bodily expressions of emotion have been described as being automatically integrated into the processing of facial emotional expressions and facilitating their recognition (de Gelder, 2006; Kret, Roelofs, et al., 2013; Kret, Stekelenburg, et al., 2013; Poyo Solanas et al., 2018), isolated expressions from the two modalities might not automatically resonate similarly in an observer’s body.

From a functional standpoint, the limited extent of consistent autonomic tuning to prototypical emotional expressions does make sense: instead of requiring affect sharing for informative or affiliative purposes, our passive viewing task provided participants with a stream of static, posed displays of emotion without a relevant social context (Fridlund, 1991; Hess & Fischer, 2013). The use of static images also limited ecological validity compared to real, dynamic expressions (Krumhuber et al., 2013). Furthermore, our participants were automatically put in the role of a passive observer, knowing that the displayed individual was not receiving any information about their own expressions. Importantly, the opportunity to interact with a social stimulus has been described as highly influential in social attention (Laidlaw et al., 2011). Similarly, knowing that the counterpart has access to one’s own expressions can alter observational tendencies, enhance social signaling, and promote prosocial choices (Frith, 2009; Gobel et al., 2015; Cañigueral & Hamilton, 2019). The degree of interactivity with a stimulus may thus determine the quality and strength of responses on multiple levels, including physiological signals (Schilbach et al., 2013). Based on experimental evidence on different aspects of social cognition and behavior, Schilbach and colleagues (2013) called for a turn to a “second-person neuroscience”: social phenomena should be investigated in real social settings with two (or more) actively involved individuals, allowing researchers to examine dynamics between, rather than only within, individuals. In recent years, this approach has yielded promising insights into the behavioral and neural mechanisms underlying social interactions (Redcay & Schilbach, 2019).
Recent findings have extended this interactive perspective to the physiological level: cooperation, as a facet of prosocial behavior, was found to be positively associated with two interactants’ synchronization in SCL (Behrens et al., 2020). Synchrony in SCL, as well as in heart rate, was further shown to predict interpersonal attraction (Prochazkova et al., 2021). Consequently, while facial mimicry of discrete emotions might inform the automatic categorization of emotional expressions in passive observers, the ANS might only be strongly activated by social signals in real social settings, with the dynamics between interactants reflecting their (emotional) alignment.

In the future, researchers should try to keep experimental paradigms as close to real-life situations as possible. In cases in which passive observation of stimuli is required, it can already be beneficial to use dynamic and naturalistic, non-posed expressions (Kret et al., 2020). Compared to static and posed emotional expressions, these types of stimuli elicit stronger facial mimicry (Sato et al., 2008; Rymarczyk et al., 2011). In attempts to link physiological changes with subjective experiences of others’ emotions, it would, additionally, be interesting to include measures of interoceptive abilities. As understanding one’s own body has already successfully been linked to understanding one’s own emotions (Kanbara & Fukunaga, 2016; Critchley & Garfinkel, 2017), accurate interoceptive inferences might also be important prerequisites to connect to others’ emotions (Arnold et al., 2019).

To sum up, we confirmed existing evidence that the interpretation of emotional expressions depends on both the modality of the expression and its affective content. However, even if only information from the face or the body was available, emotion signals were still accurately perceived. Given that such situations have become more frequent due to digitalization or safety measures during the COVID-19 pandemic, it is reassuring that emotion recognition, as an essential process, is not severely affected. Using static and posed expressions, we found limited evidence for a physiological signature of discrete emotions in the observer. The robust effects we did find might arise from social cues eliciting strong motivational tendencies (e.g., SCL peaks in response to tears or angry faces) or from embodied simulation of frequently observed expressions (e.g., EMG responses to happy faces). Based on recent perspectives on social cognition, however, an actual alignment in emotional states, which goes beyond emotion recognition, might only happen in a “real” social context. Consequently, to describe a link between the sharing of emotions at different levels of observation (experiential and physiological), future studies should involve interactive paradigms and examine the role of variables indexing an individual’s access to internal signals. A mechanistic understanding could eventually inform the development of interventions that target the identification of others’ emotions and thus facilitate building social connections.

Notes

Adding random slopes for emotion category and/or expression modality resulted in convergence issues. To keep the behavioral models consistent, we therefore did not include random slopes in any of the models.

References

Achaibou, A., Pourtois, G., Schwartz, S., & Vuilleumier, P. (2008). Simultaneous recording of EEG and facial muscle reactions during spontaneous emotional mimicry. Neuropsychologia, 46(4), 1104–1113. https://doi.org/10.1016/j.neuropsychologia.2007.10.019

Alpers, G. W., Adolph, D., & Pauli, P. (2011). Emotional scenes and facial expressions elicit different psychophysiological responses. International Journal of Psychophysiology, 80(3), 173–181. https://doi.org/10.1016/j.ijpsycho.2011.01.010

Arnold, A. J., Winkielman, P., & Dobkins, K. (2019). Interoception and social connection. Frontiers in Psychology, 10, 1–6. https://doi.org/10.3389/fpsyg.2019.02589

Aviezer, H., Trope, Y., & Todorov, A. (2012). Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science, 338(6111), 1225–1229. https://doi.org/10.1126/science.1224313

Ax, A. F. (1953). The physiological differentiation between fear and anger in humans. Psychosomatic Medicine, 15(5), 433–442. https://doi.org/10.1097/00006842-195309000-00007

Balsters, M. J. H., Krahmer, E. J., Swerts, M. G. J., & Vingerhoets, A. J. J. M. (2013). Emotional tears facilitate the recognition of sadness and the perceived need for social support. Evolutionary Psychology, 11(1), 148–158. https://doi.org/10.1177/147470491301100114

Banks, S. J., Bellerose, J., Douglas, D., & Jones-Gotman, M. (2012). Bilateral skin conductance responses to emotional faces. Applied Psychophysiology and Biofeedback, 37(3), 145–152. https://doi.org/10.1007/s10484-011-9177-7

Baron-Cohen, S., & Wheelwright, S. (2004). The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders, 34(2), 163–175. https://doi.org/10.1023/b:jadd.0000022607.19833.00

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1), 5–17. https://doi.org/10.1023/A:1005653411471

Bates, D., Mächler, M., Bolker, B. M., & Walker, S. C. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01

Behrens, F., Snijdewint, J. A., Moulder, R. G., Prochazkova, E., Sjak-Shie, E. E., Boker, S. M., & Kret, M. E. (2020). Physiological synchrony is associated with cooperative success in real-life interactions. Scientific Reports, 10(1), 1–9. https://doi.org/10.1038/s41598-020-76539-8

Blairy, S., Herrera, P., & Hess, U. (1999). Mimicry and the judgment of emotional facial expressions. Journal of Nonverbal Behavior, 23(1), 5–41. https://doi.org/10.1023/A:1021370825283

Bornemann, B., Winkielman, P., & Van der Meer, E. (2012). Can you feel what you do not see? Using internal feedback to detect briefly presented emotional stimuli. International Journal of Psychophysiology, 85(1), 116–124. https://doi.org/10.1016/j.ijpsycho.2011.04.007

Bradley, M. M., Miccoli, L., Escrig, M. A., & Lang, P. J. (2008). The pupil as a measure of emotional arousal and automatic activation. Psychophysiology, 45(4), 602–607. https://doi.org/10.1111/j.1469-8986.2008.00654.x

Bradley, M. M., Sapigao, R. G., & Lang, P. J. (2017). Sympathetic ANS modulation of pupil diameter in emotional scene perception: Effects of hedonic content, brightness, and contrast. Psychophysiology, 54(10), 1419–1435. https://doi.org/10.1111/psyp.12890

Brown, S. L., & Schwartz, G. E. (1980). Relationships between facial electromyography and subjective experience during affective imagery. Biological Psychology, 11(1), 49–62. https://doi.org/10.1016/0301-0511(80)90026-5

Burley, D. T., Gray, N. S., & Snowden, R. J. (2017). As far as the eye can see: Relationship between psychopathic traits and pupil response to affective stimuli. PLoS One, 12(1), 1–22. https://doi.org/10.1371/journal.pone.0167436

Bylsma, L. M., Gračanin, A., & Vingerhoets, A. (2019). The neurobiology of human crying. Clinical Autonomic Research, 29(1), 63–73. https://doi.org/10.1007/s10286-018-0526-y

Cacioppo, J. T., Martzke, J. S., Petty, R. E., & Tassinary, L. G. (1988). Specific forms of facial EMG response index emotions during an interview: From Darwin to the continuous flow hypothesis of affect-laden information processing. Journal of Personality and Social Psychology, 54(4), 592–604. https://doi.org/10.1037/0022-3514.54.4.592

Cañigueral, R., & Hamilton, A. F. D. C. (2019). Being watched: Effects of an audience on eye gaze and prosocial behaviour. Acta Psychologica, 195, 50–63. https://doi.org/10.1016/j.actpsy.2019.02.002

Cannon, W. B. (1914). The emergency function of the adrenal medulla in pain and the major emotions. American Journal of Physiology, 33(2), 356–372. https://doi.org/10.1152/ajplegacy.1914.33.2.356

Chênes, C., Chanel, G., Soleymani, M., & Pun, T. (2013). Highlight detection in movie scenes through inter-users, physiological linkage. In N. Ramzan, R. Van Zwol, J.-S. Lee, K. Clüver, & X.-S. Hua (Eds.), Social media retrieval (pp. 217–237). Springer. https://doi.org/10.1007/978-1-4471-4555-4_10

Clay-Warner, J., & Robinson, D. T. (2015). Infrared thermography as a measure of emotion response. Emotion Review, 7(2), 157–162. https://doi.org/10.1177/1754073914554783

Cowen, A. S., & Keltner, D. (2019). What the face displays: Mapping 28 emotions conveyed by naturalistic expression. American Psychologist, 75(3), 349–364. https://doi.org/10.1037/amp0000488

Critchley, H. D., & Garfinkel, S. N. (2017). Interoception and emotion. Current Opinion in Psychology, 17, 7–14. https://doi.org/10.1016/j.copsyc.2017.04.020

Crivelli, C., Russell, J. A., Jarillo, S., & Fernández-Dols, J. M. (2016). The fear gasping face as a threat display in a Melanesian society. Proceedings of the National Academy of Sciences of the United States of America, 113(44), 12403–12407. https://doi.org/10.1073/pnas.1611622113

Curtis, V., de Barra, M., & Aunger, R. (2011). Disgust as an adaptive system for disease avoidance behaviour. Philosophical Transactions of the Royal Society B: Biological Sciences, 366(1563), 389–401. https://doi.org/10.1098/rstb.2010.0117

Dael, N., Mortillaro, M., & Scherer, K. R. (2012). Emotion expression in body action and posture. Emotion, 12(5), 1085–1101. https://doi.org/10.1037/a0025737

Damasio, A. (1996). The somatic marker hypothesis and the possible functions of the prefrontal cortex. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 351, 1413–1420. https://doi.org/10.1098/rstb.1996.0125

Darrow, C. W. (1936). The galvanic skin reflex (sweating) and blood-pressure as preparatory and facilitative functions. Psychological Bulletin, 33(2), 73–94. https://doi.org/10.1037/h0051940

Dawson, M. E., Schell, A. M., & Filion, D. L. (2016). The electrodermal system. In J. T. Cacioppo, L. G. Tassinary, & G. G. Berntson (Eds.), Handbook of psychophysiology (4th ed., pp. 217–243). Cambridge University Press. https://doi.org/10.1017/9781107415782.010

de Gelder, B. (2006). Towards the neurobiology of emotional body language. Nature Reviews Neuroscience, 7(3), 242–249. https://doi.org/10.1038/nrn1872

de Gelder, B. (2009). Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philosophical Transactions of the Royal Society B: Biological Sciences, 364(1535), 3475–3484. https://doi.org/10.1098/rstb.2009.0190

de Gelder, B., & Van den Stock, J. (2011). The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Frontiers in Psychology, 2, 1–6. https://doi.org/10.3389/fpsyg.2011.00181

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology, 19(6), 643–647. https://doi.org/10.1111/j.1469-8986.1982.tb02516.x

Ekman, P. (1992). An argument for basic emotions. Cognition and Emotion, 6(3–4), 169–200. https://doi.org/10.1080/02699939208411068

Ekman, P. (1993). Facial expression and emotion. American Psychologist, 48(4), 384–392. https://doi.org/10.1037/0003-066X.48.4.384

Ekman, P., Friesen, W., & Ancoli, S. (1980). Facial signs of emotional experience. Journal of Personality and Social Psychology, 39(6), 1123–1134.

Ekman, P., & Rosenberg, E. (2005). What the face reveals: Basic and applied studies of spontaneous expression using the facial action coding system (FACS). Oxford University Press.

Feldmann-Wüstefeld, T., Schmidt-Daffy, M., & Schubö, A. (2011). Neural evidence for the threat detection advantage: Differential attention allocation to angry and happy faces. Psychophysiology, 48(5), 697–707. https://doi.org/10.1111/j.1469-8986.2010.01130.x

Fischer, A. H., & Manstead, A. S. R. (2016). Social functions of emotion and emotion regulation. In M. Lewis, J. Haviland-Jones, & L. F. Barrett (Eds.), Handbook of Emotions (4th ed., pp. 424–439). The Guilford Press.

Fresco, D. M., Coles, M. E., Heimberg, R. G., Liebowitz, M. R., Hami, S., Stein, M. B., & Goetz, D. (2001). The Liebowitz social anxiety scale: A comparison of the psychometric properties of self-report and clinician-administered formats. Psychological Medicine, 31(6), 1025–1035. https://doi.org/10.1017/S0033291701004056

Fridlund, A. J. (1991). Sociality of solitary smiling: Potentiation by an implicit audience. Journal of Personality and Social Psychology, 60(2), 229–240. https://doi.org/10.1037/0022-3514.60.2.229

Fridlund, A. J., & Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology, 23(5), 567–589. https://doi.org/10.1111/j.1469-8986.1986.tb00676.x

Friedman, B. H. (2010). Feelings and the body: The Jamesian perspective on autonomic specificity of emotion. Biological Psychology, 84(3), 383–393. https://doi.org/10.1016/j.biopsycho.2009.10.006

Frith, C. (2009). Role of facial expressions in social interactions. Philosophical Transactions of the Royal Society B: Biological Sciences, 364(1535), 3453–3458. https://doi.org/10.1098/rstb.2009.0142

Garfinkel, S. N., & Critchley, H. D. (2013). Interoception, emotion and brain: New insights link internal physiology to social behaviour. Commentary on: “Anterior insular cortex mediates bodily sensibility and social anxiety” by Terasawa et al. (2012). Social Cognitive and Affective Neuroscience, 8(3), 231–234. https://doi.org/10.1093/scan/nss140

Gobel, M. S., Kim, H. S., & Richardson, D. C. (2015). The dual function of social gaze. Cognition, 136, 359–364. https://doi.org/10.1016/j.cognition.2014.11.040

Gračanin, A., Bylsma, L. M., & Vingerhoets, A. J. J. M. (2018). Why only humans shed emotional tears: Evolutionary and cultural perspectives. Human Nature, 29(2), 104–133. https://doi.org/10.1007/s12110-018-9312-8

Hansen, C. H., & Hansen, R. D. (1988). Finding the face in the crowd: An anger superiority effect. Journal of Personality and Social Psychology, 54(6), 917–924. https://doi.org/10.1037/0022-3514.54.6.917

Hatfield, E., Cacioppo, J. T., & Rapson, R. L. (1993). Emotional contagion. Current Directions in Psychological Science, 2(3), 96–99. https://doi.org/10.1111/1467-8721.ep10770953

Hermans, E. J., van Wingen, G., Bos, P. A., Putman, P., & van Honk, J. (2009). Reduced spontaneous facial mimicry in women with autistic traits. Biological Psychology, 80(3), 348–353. https://doi.org/10.1016/j.biopsycho.2008.12.002

Hess, U., & Blairy, S. (2001). Facial mimicry and emotional contagion to dynamic emotional facial expressions and their influence on decoding accuracy. International Journal of Psychophysiology, 40(2), 129–141. https://doi.org/10.1016/S0167-8760(00)00161-6

Hess, U., Blairy, S., & Kleck, R. E. (1997). The intensity of emotional facial expressions and decoding accuracy. Journal of Nonverbal Behavior, 21(4), 241–257. https://doi.org/10.1023/A:1024952730333

Hess, U., & Bourgeois, P. (2010). You smile–I smile: Emotion expression in social interaction. Biological Psychology, 84(3), 514–520. https://doi.org/10.1016/j.biopsycho.2009.11.001

Hess, U., & Fischer, A. (2013). Emotional mimicry as social regulation. Personality and Social Psychology Review, 17(2), 142–157. https://doi.org/10.1177/1088868312472607

Holland, A. C., O’Connell, G., & Dziobek, I. (2020). Facial mimicry, empathy, and emotion recognition: A meta-analysis of correlations. Cognition and Emotion. https://doi.org/10.1080/02699931.2020.1815655

Huron, D. (2018). On the functions of sadness and grief. In H. Lench (Ed.), The function of emotions. Springer. https://doi.org/10.1007/978-3-319-77619-4_5

Ioannou, S., Gallese, V., & Merla, A. (2014). Thermal infrared imaging in psychophysiology: Potentialities and limits. Psychophysiology, 51, 951–963. https://doi.org/10.1111/psyp.12243

James, W. (1884). What is an emotion? Mind, 9(34), 188–205. http://mind.oxfordjournals.org/content/os-IX/34/188

Jessen, S., Altvater-Mackensen, N., & Grossmann, T. (2016). Pupillary responses reveal infants’ discrimination of facial emotions independent of conscious perception. Cognition, 150, 163–169. https://doi.org/10.1016/j.cognition.2016.02.010

Kanbara, K., & Fukunaga, M. (2016). Links among emotional awareness, somatic awareness and autonomic homeostatic processing. BioPsychoSocial Medicine, 10(1), 1–11. https://doi.org/10.1186/S13030-016-0059-3

Keltner, D. (1995). Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame. Journal of Personality and Social Psychology, 68(3), 441–454. https://doi.org/10.1037/0022-3514.68.3.441

Keltner, D., & Haidt, J. (1999). Social functions of emotions at four levels of analysis. Cognition and Emotion, 13(5), 505–521. https://doi.org/10.1080/026999399379168

Kim, Y., & Kret, M. E. (in press). The emotional expressions and emotion perception in nonhuman primates. In D. Dukes, A. C. Samson, & E. A. Walle (Eds.), The Oxford handbook of emotional development. Oxford University Press.

Kosonogov, V., De Zorzi, L., Honoré, J., Martínez-Velázquez, E. S., Nandrino, J. L., Martinez-Selva, J. M., & Sequeira, H. (2017). Facial thermal variations: A new marker of emotional arousal. PLoS One, 12(9), 1–16. https://doi.org/10.1371/journal.pone.0183592

Kreibig, S. D. (2010). Autonomic nervous system activity in emotion: A review. Biological Psychology, 84(3), 394–421. https://doi.org/10.1016/j.biopsycho.2010.03.010

Kreibig, S. D., Wilhelm, F. H., Roth, W. T., & Gross, J. J. (2007). Cardiovascular, electrodermal, and respiratory response patterns to fear- and sadness-inducing films. Psychophysiology, 44(5), 787–806. https://doi.org/10.1111/j.1469-8986.2007.00550.x

Kret, M. E. (2015). Emotional expressions beyond facial muscle actions. A call for studying autonomic signals and their impact on social perception. Frontiers in Psychology, 6, 1–10. https://doi.org/10.3389/fpsyg.2015.00711

Kret, M. E., Prochazkova, E., Sterck, E. H. M., & Clay, Z. (2020). Emotional expressions in human and non-human great apes. Neuroscience and Biobehavioral Reviews, 115, 378–395. https://doi.org/10.1016/j.neubiorev.2020.01.027

Kret, M. E., Roelofs, K., Stekelenburg, J. J., & de Gelder, B. (2013). Emotional signals from faces, bodies and scenes influence observers’ face expressions, fixations and pupil-size. Frontiers in Human Neuroscience, 7, 1–9. https://doi.org/10.3389/fnhum.2013.00810

Kret, M. E., & Sjak-Shie, E. E. (2019). Preprocessing pupil size data: Guidelines and code. Behavior Research Methods, 51(3), 1336–1342. https://doi.org/10.3758/s13428-018-1075-y

Kret, M. E., Stekelenburg, J. J., Roelofs, K., & de Gelder, B. (2013). Perception of face and body expressions using electromyography, pupillometry and gaze measures. Frontiers in Psychology, 4, 1–12. https://doi.org/10.3389/fpsyg.2013.00028

Kret, M. E., & Straffon, L. M. (2018). Reply to Crivelli et al.: The different faces of fear and threat. Evolutionary and cultural insights. Journal of Human Evolution, 125, 193–197. https://doi.org/10.1016/j.jhevol.2017.11.006

Krumhuber, E. G., Kappas, A., & Manstead, A. S. R. (2013). Effects of dynamic aspects of facial expressions: A review. Emotion Review, 5(1), 41–46. https://doi.org/10.1177/1754073912451349

Küster, D. (2018). Social effects of tears and small pupils are mediated by felt sadness: An evolutionary view. Evolutionary Psychology, 16(1), 1–9. https://doi.org/10.1177/1474704918761104

Laidlaw, K. E. W., Foulsham, T., Kuhn, G., & Kingstone, A. (2011). Potential social interactions are important to social attention. Proceedings of the National Academy of Sciences of the United States of America, 108(14), 5548–5553. https://doi.org/10.1073/pnas.1017022108

Lang, P. J., Greenwald, M. K., Bradley, M. M., & Hamm, A. O. (1993). Looking at pictures: Affective, facial, visceral, and behavioral reactions. Psychophysiology, 30(3), 261–273. https://doi.org/10.1111/j.1469-8986.1993.tb03352.x

Lange, C. G. (1912). The mechanism of the emotions (B. Rand, Trans.). In B. Rand (Ed.), The classical psychologists (pp. 672–684). Houghton Mifflin. (Original work published 1885 in Copenhagen as Om Sindsbevaegelser et Psyko-Fysiologisk Studie)

Lenth, R. (2020). emmeans: Estimated marginal means, aka least-squares means. R package version 4.8. https://CRAN.R-project.org/package=emmeans

Levenson, R. W. (2003). Blood, sweat, and fears: The autonomic architecture of emotion. Annals of the New York Academy of Sciences, 1000, 348–366. https://doi.org/10.1196/annals.1280.016

Liebowitz, M. R. (1987). Social phobia. Modern Problems in Pharmacopsychiatry, 22, 141–173. https://doi.org/10.1159/000414022

Marsh, A. A., Ambady, N., & Kleck, R. E. (2005). The effects of fear and anger facial expressions on approach- and avoidance-related behaviors. Emotion, 5(1), 119–124. https://doi.org/10.1037/1528-3542.5.1.119

Martin, J., Rychlowska, M., Wood, A., & Niedenthal, P. (2017). Smiles as multipurpose social signals. Trends in Cognitive Sciences, 21(11), 864–877. https://doi.org/10.1016/j.tics.2017.08.007

Martinez, L., Falvello, V. B., Aviezer, H., & Todorov, A. (2016). Contributions of facial expressions and body language to the rapid perception of dynamic emotions. Cognition and Emotion, 30(5), 939–952. https://doi.org/10.1080/02699931.2015.1035229

Mirman, D. (2014). Growth curve analysis and visualization using R (The R series). Chapman and Hall. https://doi.org/10.1177/0962280215570173