Abstract

Radial basis function methods are powerful tools in numerical analysis and have demonstrated good properties in many different simulations. However, for time-dependent partial differential equations, only a few stability results are known. In particular, if boundary conditions are included, stability issues frequently occur. The question we address in this paper is how provable stability for RBF methods can be obtained. We develop a stability theory for global radial basis function methods using the general framework of summation-by-parts operators often used in the Finite Difference and Finite Element communities. Although we address their practical construction, we restrict the discussion to basic numerical simulations and focus on providing a proof of concept.


1 Introduction

We investigate energy stability of global radial basis function (RBF) methods for time-dependent partial differential equations (PDEs). Unlike finite difference (FD) or finite element (FE) methods, RBF schemes are mesh-free, making them very flexible with respect to the geometry of the computational domain, since the only geometrical property used is the pairwise distance between two centers. Further, they are suitable for problems with scattered data, as in climate [12, 34] or stock market [6, 41] simulations. Finally, for smooth solutions, one can reach spectral convergence [11, 13]. In addition, they have recently become increasingly popular for solving time-dependent problems in quantum mechanics, fluid dynamics, etc. [7, 29, 30, 52]. One distinguishes between global RBF methods (Kansa’s methods) [31] and local RBF methods, such as the RBF generated finite difference (RBF-FD) [50] and RBF partition of unity (RBF-PUM) [55] methods. However, there are some nuances regarding the computational efficiency to take into account. For instance, a naive approach results in a large dense differentiation matrix. Furthermore, care must be taken regarding the conditioning of the differentiation and associated Vandermonde matrices. There exist several strategies to combat these issues, including stable bases, compactly supported RBFs [3, 54], domain decomposition [9, 58], and local variants of RBF methods [12, 55]. Also see the monograph [14] and references therein. Even though the efficiency and good performance of RBF methods have been demonstrated for various problems, only a few stability results are known for advection-dominated problems. For example, an eigenvalue analysis was performed for a linear advection equation in [42], and it was found that RBF discretizations often produce eigenvalues with positive real part, leading to an exponential increase of the \(L_2\) norm when boundary conditions were introduced.
To illustrate this, consider the following example (also found in [21, Section 6.1]):

with \(x \in [-1,1]\), \(t>0\), and where periodic boundary conditions are applied. In this example, a bump travels to the right, leaving the domain and re-entering at the left boundary.

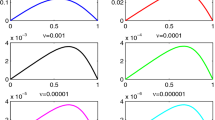

In Figure 1, we plot the numerical solution and its energy up to \(t=10\) using a global RBF method with a Gaussian kernel and \(N=20\) points. An increase in the bump’s size and energy can be seen. For longer times, the computation breaks down. The discrete setting does not reflect the continuous one, which has zero energy growth, and thus demonstrates the stability problem. To overcome such issues, it was shown in [21] that a weak formulation as used in classical FE methods could result in a stable method, whereas in [24] weakly imposed boundary conditions together with properly constructed boundary operators were used. Recently, \(L_2\) estimates were also obtained using an oversampling technique [53], assuming that a sufficient number of evaluation points is used. All these efforts use special techniques, and the question we address in this paper is how to stabilize RBF methods in a general way.

Classical summation-by-parts (SBP) operators were introduced during the 1970s in the context of FD schemes. They allow for a systematic development of energy-stable semi-discretizations of well-posed initial-boundary-value problems (IBVPs) [8, 49]. The SBP property is a discrete analog to integration by parts, and proofs from the continuous setting carry over directly to the discrete framework [38] if proper boundary procedures are added [49]. Based initially on polynomial approximations, the SBP theory has recently been extended to general function spaces, developing so-called FSBP operators in [25]. Here, we investigate stability of global RBF methods through the lens of the FSBP theory. We demonstrate that many existing RBF discretizations do not satisfy the FSBP property, which explains the instability of these methods. Based on these findings, we show how RBF discretizations can be modified to obtain an SBP property. This then allows for a systematic development of energy-stable RBF methods. We provide some specific examples, including the most frequently used RBFs. Furthermore, we connect some recent stability results from [53], where oversampling was used, to the FSBP property. For simplicity, we focus on the univariate setting for developing an SBP theory in the context of global RBF methods, which avoids technical difficulties that might otherwise distract the reader from the core concept. That said, RBF methods and SBP operators can easily be extended to the multivariate setting, as demonstrated in our numerical tests. The focus of the present paper is to provide a proof of concept and use the FSBP theory to develop provably energy-stable global RBF methods. Future work will address the multi-dimensional case in more detail, as well as local RBF methods, accuracy, and efficient implementations.

The rest of this work is organized as follows. In Section 2, we provide some preliminaries on energy stability of IBVPs and global RBF methods. Next, the concept of FSBP operators is briefly revisited in Section 3. We adapt the FSBP theory to RBF function spaces in Section 4. Here, we also demonstrate that many existing RBF methods do not satisfy the SBP property and show how to construct RBF operators in SBP form (RBFSBP). In Section 5, we give some concrete examples of RBFSBP operators resulting in energy-stable methods. Finally, we provide numerical tests in Section 6 and concluding thoughts in Section 7.

2 Preliminaries

We now provide a few preliminaries on IBVPs and RBF methods.

2.1 Well-posedness and Energy Stability

Following [28, 38, 49], we consider

where u is the solution and \({\mathcal {L}}\) is a differential operator with smooth coefficients. Further, \(B_0\) and \(B_1\) are operators defining the boundary conditions, \({\mathcal {{\hat{F}}}}\) is a forcing function, f is the initial data, and \(g_{x_L}, g_{x_R}\) denote the boundary data. Examples of (2) include the advection equation

with constant \(a \in {\mathbb {R}}\), the diffusion equation

with \(\kappa \in {\mathbb {R}}\) depending on x, t, as well as combinations of (3) and (4). Let us now formalize what we mean by the IBVP (2) being well-posed.

Definition 1

The IBVP (2) with \( {\mathcal {{\hat{F}}}}=0\) and \(g_{x_L}=g_{x_R}=0\) is well-posed, if for every \(f\in C^{\infty }\) that vanishes in a neighborhood of \(x=x_L,x_R\), (2) has a unique smooth solution u that satisfies

where \(C, \alpha _c\) are constants independent of f. Moreover, the IBVP (2) is strongly well-posed, if it is well-posed and

holds, where the function C(t) is bounded for finite t and independent of \({\mathcal {{\hat{F}}}}, g_{x_L}, g_{x_R}\), and f.

Switching to the discrete framework, our numerical approximation \(u^h\) of (2) should be constructed in such a way that similar estimates to (5) and (6) are obtained. We denote our grid quantity (a measure of the grid size) by h. In the context of RBF methods, h denotes the maximum distance between two neighboring points. We henceforth denote by \(\Vert \cdot \Vert _h\) a discrete version of the \(L_2\)-norm and \(\left| \left| \cdot \right| \right| _b\) represents a discrete boundary norm. Then, we define stability of the numerical solution as follows.

Definition 2

Let \( {\mathcal {{\hat{F}}}}=0\), \(g_{x_L}=g_{x_R}=0\), and \(f^h\) be an adequate projection (see Footnote 1) of the initial data f which vanishes at the boundaries. The approximation \(u^h\) is stable if

holds for all sufficiently small h, where C and \(\alpha _d\) are constants independent of \(f^h\). The approximated solution \(u^h\) is called strongly energy stable if it is stable and

holds for all sufficiently small h. The function C(t) is bounded for finite t and independent of \({\mathcal {{\hat{F}}}}, g_{x_L}, g_{x_R}\), and \(f^h\).

2.2 Discretization

To discretize the IBVP (2), we apply the method of lines. The space discretization is done using a global RBF method resulting in a system of ordinary differential equations (ODEs):

Here, \({\textbf{u}}\) denotes the vector of coefficients and \({\text {L}}\) represents the spatial operator. We used the explicit strong stability preserving (SSP) Runge–Kutta (RK) method of third-order with three stages (SSPRK(3,3)) [47] for all subsequent numerical tests.
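
As a minimal sketch of this time-stepping strategy (not the implementation used for the reported results), the widely used Shu-Osher form of SSPRK(3,3) can be written as follows. The names `ssprk33_step`, `integrate`, and `rhs` are hypothetical; `rhs(t, u)` stands for the semi-discrete right-hand side \({\text {L}}({\textbf{u}})\).

```python
import numpy as np

def ssprk33_step(rhs, t, u, dt):
    """One step of the three-stage, third-order SSP Runge-Kutta method
    in Shu-Osher form (a convex combination of forward Euler steps)."""
    u1 = u + dt * rhs(t, u)
    u2 = 0.75 * u + 0.25 * (u1 + dt * rhs(t + dt, u1))
    return u / 3.0 + 2.0 / 3.0 * (u2 + dt * rhs(t + 0.5 * dt, u2))

def integrate(rhs, u0, t_end, n_steps):
    """Evolve u' = rhs(t, u) from t = 0 to t = t_end with n_steps equal steps."""
    u = np.array(u0, dtype=float)
    dt = t_end / n_steps
    t = 0.0
    for _ in range(n_steps):
        u = ssprk33_step(rhs, t, u, dt)
        t += dt
    return u

# Usage on the scalar test problem u' = -u, u(0) = 1 (illustration only).
u_end = integrate(lambda t, u: -u, [1.0], 1.0, 100)
```

For a method-of-lines discretization, `rhs` would evaluate the spatial operator on the current coefficient vector; the scalar test problem above is only used to keep the sketch self-contained.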

2.2.1 Radial Basis Function Interpolation

RBFs are powerful tools for interpolation and approximation [10, 14, 56]. In the context of the present work, we are especially interested in RBF interpolants. Let \(u: {\mathbb {R}}\supset \Omega \rightarrow {\mathbb {R}}\) be a scalar valued function and \(X_K = \{x_1,\dots ,x_K\}\) a set of interpolation points, referred to as centers. The RBF interpolant of u is

Here, \(\varphi : {\mathbb {R}}_0^+ \rightarrow {\mathbb {R}}\) is the RBF (also called kernel) and \(\{p_l\}_{l=1}^m\) is a basis for the space of polynomials up to degree \(m-1\), denoted by \({\mathbb {P}}_{m-1}\). In our numerical section, we mostly use \(m=1\), meaning that we include constants in our approximation space. Furthermore, the RBF interpolant (10) is uniquely determined by the conditions

Note that (11) and (12) can be reformulated as a linear system for the coefficient vectors \(\varvec{\alpha } = [\alpha _1,\dots ,\alpha _K]^T\) and \(\varvec{\beta } = [\beta _1,\dots ,\beta _m]^T\):

where \({\textbf{u}} = [u(x_1),\dots ,u(x_K)]^T\) and

Incorporating polynomial terms of degree up to \(m-1\) in the RBF interpolant (10) is important for several reasons:

(i) The RBF interpolant (10) becomes exact for polynomials of degree up to \(m-1\), i.e., \(u^h = u\) for \(u \in {\mathbb {P}}_{m-1}\).

(ii) For some (conditionally positive) kernels \(\varphi \), the RBF interpolant (10) only exists uniquely when polynomials up to a certain degree are incorporated.

In addition, we will show that (i) is needed for the RBF method to be conservative [21, 25]. The property (ii) is explained in more detail in (1) as well as in [10, Chapter 7] and [18, Chapter 3.1]. For simplicity and clarity, we will focus on the choices of RBFs listed in Table 1. More types of RBFs and their properties can be found in the monographs [10, 14, 56].
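
The interpolation conditions and the polynomial constraint can be combined into one symmetric block system for \(\varvec{\alpha }\) and \(\varvec{\beta }\). The following is a minimal sketch under stated assumptions: the function name `rbf_interpolant` is hypothetical, the monomials \(1, x, \dots , x^{m-1}\) are used as the polynomial basis, and a dense direct solve is used without any stabilization, which is only reasonable for small, well-conditioned examples.

```python
import numpy as np

def rbf_interpolant(centers, values, phi, m=1):
    """Return a callable u_h interpolating `values` at `centers`, using the
    kernel `phi` augmented with polynomials of degree up to m - 1."""
    K = len(centers)
    Phi = phi(np.abs(centers[:, None] - centers[None, :]))  # kernel matrix
    Pm = np.vander(centers, m, increasing=True)             # columns 1, x, ..., x^(m-1)
    # Block system: interpolation conditions on top, polynomial constraint below.
    A = np.block([[Phi, Pm], [Pm.T, np.zeros((m, m))]])
    rhs = np.concatenate([values, np.zeros(m)])
    coef = np.linalg.solve(A, rhs)
    alpha, beta = coef[:K], coef[K:]

    def u_h(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        kernel_part = phi(np.abs(x[:, None] - centers[None, :])) @ alpha
        poly_part = np.vander(x, m, increasing=True) @ beta
        return kernel_part + poly_part

    return u_h

# Usage: Gaussian kernel with constants (m = 1) on five equidistant centers.
centers = np.linspace(0.0, 1.0, 5)
gauss = lambda r: np.exp(-r**2)
u_h = rbf_interpolant(centers, np.sin(centers), gauss, m=1)
```

With \(m=1\), interpolating constant data returns the constant itself, which illustrates property (i) in the simplest case.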

Note that the set of all RBF interpolants (10) forms a K-dimensional linear space, denoted by \({\mathcal {R}}_{m}(X_K)\). This space is spanned by the cardinal functions

which are uniquely determined by the cardinal property

and condition (12). They also provide us with the following (nodal) representation of the RBF interpolant:

2.2.2 Radial Basis Function Methods

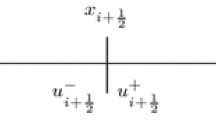

We outline the standard global RBF method for the IBVP (2). The domain \(\Omega \) on which we solve (2) is discretized using two point sets:

- The nodal point set (centers) \(X_K=\{x_1, \cdots , x_K \}\) used for constructing the cardinal basis functions (15).

- The grid (evaluation) point set \(Y_N=\{y_1, \cdots , y_N \}\) for describing the IBVP (2), where \(N\ge K\).

By selecting \(Y_N=X_K\), we get a collocation method, and with \(N>K\), a method using oversampling. The numerical solution \({\textbf{u}}\) is defined by the values of \(u^h\) at \(Y_N\) and the operator \(L({\textbf{u}})\) by using the spatial derivative of the RBF interpolant \(u^h\), also at \(Y_N\). The RBF discretization can be summarized in the following three steps:

1. Determine the RBF interpolant \(u^h\in {\mathcal {R}}_{m}(X_K)\).

2. Define \(L({\textbf{u}})\) in the semidiscrete equation by inserting (17) into the continuous spatial operator. This yields

$$\begin{aligned} {\text {L}}({\textbf{u}})=&\left( {\mathcal {L}}(y_n,t, \partial _x) u^h(t,y_n)+ {\mathcal {{\hat{F}}}}(t,y_n) \right) _{n=1}^N. \end{aligned} \tag{18}$$

3. Use a classical time integration scheme to evolve (9).

Global RBF methods come with several free parameters. These include the center and evaluation points \(X_K\) and \(Y_N\), the kernel \(\varphi \), and the degree \(m-1\) of the polynomial term included in the RBF interpolant (10). The kernel \(\varphi \) might come with additional free parameters such as the shape parameter \(\varepsilon \). Finally, we note that the basis \(c_k\) of the RBF approximation space \({\mathcal {R}}_{m}(X_K)\) that one uses for numerically computing the RBF approximation \(u^h\) and its derivatives can also influence how well-conditioned the RBF method is in practice. Discussions of appropriate choices for these parameters fill entire books [10, 14, 15, 56] and are not pursued here. In this work, we have a different point in mind and focus on the basic stability conditions of RBF methods.

3 Summation-by-parts Operators on General Function Spaces

SBP operators were developed to mimic the behavior of integration by parts in the continuous setting and provide a systematic way to build energy-stable semi-discrete approximations. First constructed for an underlying polynomial approximation in space, the theory was recently extended to general function spaces in [22, 25]. For completeness, we briefly review the extended framework of FSBP operators and repeat their basic properties. We consider the FSBP concept on the interval \([x_L, x_R]\) where the boundary points are included in the evaluation points \(Y_N\). Using this framework, we give the following definition originally found in [25]:

Definition 3

(FSBP operators) Let \({\mathcal {F}} \subset C^1([x_L,x_R])\) be a finite-dimensional function space. An operator \(D = P^{-1} Q\) is an \({\mathcal {F}}\)-based SBP (FSBP) operator if

(i) \(D f({\textbf{y}}) =f'({\textbf{y}})\) for all \(f \in {\mathcal {F}}\),

(ii) P is a symmetric positive definite matrix, and

(iii) \(Q + Q^T = B = {{\,\textrm{diag}\,}}(-1,0,\dots ,0,1)\).

Here, \(f({\textbf{y}}) = [f(y_1), \dots , f(y_N)]^T\) and \(f'({\textbf{y}}) = [f'(y_1),\dots , f'(y_N)]^T\) respectively denote the vector of the function values of f and its derivative \(f'\) at the evaluation points \(y_1,\dots ,y_N\).

Further, D denotes the differentiation matrix and P is a matrix defining a discrete norm. In order to produce an energy estimate, we use that P is positive definite and symmetric such that it induces a norm. In this manuscript and in [25], we focus for stability reasons on diagonal-norm FSBP operators [17, 35, 43]. The matrix Q is nearly skew-symmetric and can be seen as the stiffness matrix in the context of FE. With these operators, integration by parts is mimicked discretely as:

for all \(f,g \in {\mathcal {F}}\).
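
To make the discrete integration-by-parts property concrete, the following sketch uses the classical second-order diagonal-norm FD-SBP operator, i.e., the polynomial special case rather than an RBF-based operator. The function name `classical_sbp_21` is hypothetical. Since \(Q + Q^T = B\), the identity \(f^T P D g + (D f)^T P g = f_N g_N - f_1 g_1\) holds for arbitrary grid vectors, which the usage lines check for random data.

```python
import numpy as np

def classical_sbp_21(N, xL=0.0, xR=1.0):
    """Classical second-order diagonal-norm FD-SBP operator on N equidistant points."""
    h = (xR - xL) / (N - 1)
    x = np.linspace(xL, xR, N)
    P = h * np.eye(N)
    P[0, 0] = P[-1, -1] = h / 2                    # trapezoidal-rule weights
    Q = 0.5 * (np.eye(N, k=1) - np.eye(N, k=-1))   # skew-symmetric interior part
    Q[0, 0], Q[-1, -1] = -0.5, 0.5                 # boundary part, so that Q + Q^T = B
    D = np.linalg.solve(P, Q)                      # D = P^{-1} Q
    return x, P, Q, D

# Usage: compare both sides of the discrete integration-by-parts identity.
x, P, Q, D = classical_sbp_21(11)
rng = np.random.default_rng(0)
f, g = rng.standard_normal(11), rng.standard_normal(11)
lhs = f @ P @ D @ g + (D @ f) @ P @ g
rhs = f[-1] * g[-1] - f[0] * g[0]
```

In this example `lhs` and `rhs` agree up to round-off, and `D` differentiates constants and linear functions exactly, which corresponds to condition (i) for \({\mathcal {F}} = {\mathbb {P}}_1\).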

3.1 Properties of FSBP Operators

In [25], the authors proved that the FSBP-SAT semi-discretization of the linear advection equation is energy stable. The so-called SAT term imposes the boundary condition weakly. Moreover, the underlying function space \({\mathcal {F}}\) should contain constants in order to ensure conservation.

In the context of RBF methods, constants have to be included in the RBF interpolants (10), also for the reasons discussed above.

We will extend the previous investigation to the linear advection-diffusion equation.

where \(a>0\) is a constant and \(\kappa >0\) can depend on x and t. The problem (20) is strongly well-posed, as can be seen by the energy rate

with \( \left| \left| u_x\right| \right| ^2_{\kappa }= \int _{x_L}^{x_R} (\partial _x u)^2 \kappa \textrm{d} x.\) To translate this estimate to the discrete setting, we discretize (20). The most straightforward FSBP-SAT discretization reads

with \( {\mathcal {K}}= {{\,\textrm{diag}\,}}(\mathbf {\kappa })\) and

We can prove the following result using Definition 3 where we additionally assume that \({\mathcal {K}}D{\textbf{u}} \subset {\mathcal {F}}\). Note that \({\mathcal {K}}D{\textbf{u}} \subset {\mathcal {F}}\) is always satisfied when \({\mathcal {F}}\) is invariant under differentiation (\({\mathcal {F}} ' \subset {\mathcal {F}} )\) and \(\kappa \) does not depend on x.

Theorem 1

The scheme (22) is strongly stable with \(\sigma _0=-1\) and \({\sigma _1=-1}\).

Proof

We use the energy method together with the FSBP property. Multiplying (22) with \({\textbf{u}}^T P\) from the left, we get

The FSBP property \(P D + D^T P = B\) implies \({\textbf{u}}^T P D {\textbf{u}} = {\textbf{u}}^T B {\textbf{u}} - {\textbf{u}}^T D^T P {\textbf{u}}\). Substituting this into (24) yields

Observe that \({\textbf{u}}^T D^T P {\textbf{u}} = \left( {\textbf{u}}^T D^T P {\textbf{u}} \right) ^T = {\textbf{u}}^T P D {\textbf{u}}\) since this is a scalar term. Hence, adding (24) and (25), we get

Furthermore, the FSBP property also implies \({\textbf{u}}^T P D( {\mathcal {K}} D {\textbf{u}} ) = {\textbf{u}}^T B {\mathcal {K}} D {\textbf{u}} - ( D {\textbf{u}} )^T P {\mathcal {K}} ( D {\textbf{u}} )\). This transforms (26) into

Using \(\Vert {\textbf{u}} \Vert ^2_t = 2 {\textbf{u}}^T P{\textbf{u}}_t\) and \(\left| \left| D{\textbf{u}}\right| \right| ^2_{ {\mathcal {K}}} = (D{\textbf{u}})^T P {\mathcal {K}}D{\textbf{u}}\), we get

Finally, substituting (23) for the SAT term yields

resulting in

By elementary transformation, we obtain

which is a discrete analog of the continuous estimate (21). Note that P and \( {\mathcal {K}}\) have to be diagonal to ensure that we obtain our energy estimate. \(\square \)
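
As a hedged illustration of the energy argument, consider only the advection part of the scheme (formally \({\mathcal {K}}=0\)) with homogeneous inflow data. Assume, purely for this sketch, a left-boundary SAT of the form \(\sigma _0 a P^{-1} {\textbf{e}}_0 u_0\) with \({\textbf{e}}_0 = [1,0,\dots ,0]^T\), where \(u_0\) and \(u_N\) denote the first and last entries of \({\textbf{u}}\); the SAT in (23) may be scaled differently. Using \({\textbf{u}}^T Q {\textbf{u}} = \frac{1}{2} {\textbf{u}}^T (Q+Q^T) {\textbf{u}} = \frac{1}{2} {\textbf{u}}^T B {\textbf{u}}\) and \(\sigma _0=-1\), one obtains

$$\begin{aligned} \frac{\textrm{d}}{\textrm{d}t} \left( {\textbf{u}}^T P {\textbf{u}} \right) = 2 {\textbf{u}}^T P {\textbf{u}}_t = -a {\textbf{u}}^T B {\textbf{u}} + 2 \sigma _0 a u_0^2 = a u_0^2 - a u_N^2 - 2 a u_0^2 = -a \left( u_0^2 + u_N^2 \right) \le 0. \end{aligned}$$

The inflow boundary term is thus controlled by the SAT, and the outflow term is dissipative by itself, mirroring the continuous energy estimate for the advection part.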

Clearly, the FSBP operators automatically reproduce the results from the continuous setting, similar to the classical SBP operators based on polynomial approximations [49]. Note that nothing is assumed about the specific function space, grid, or underlying method. The only factors of importance are that the FSBP property is fulfilled and that well-posed boundary conditions are used.

Remark 1

(Second-derivative FSBP operators) In our analysis, we utilize the first-order derivative matrix twice to obtain a representation for the second derivative. Additionally, we assume \({\mathcal {K}}D{\textbf{u}} \in {\mathcal {F}}\). This ensures that the first term on the right-hand side of (22) provides a discrete representation of the second-derivative operator within the function space. Notably, this assumption is not required for stability, only for accuracy. For an in-depth examination of second-derivative FSBP operators, we refer to our recent publication [23].

4 SBP operators for RBFs

First, we adapt the FSBP theory from Section 3 to the RBF framework. Next, we investigate classical RBF methods concerning the FSBP property and demonstrate that standard global RBF schemes do not fulfill this property. Finally, we describe how RBFSBP operators that lead to stability can be constructed.

4.1 RBF-based SBP operators

The function space \({\mathcal {F}} \subset C^1\) for RBF methods is defined by the description in Subsection 2.2. Consider a set of K points, \(X_K =\{x_1, \cdots , x_K \} \subset [x_L, x_R]\). The set of all RBF interpolants (10) forms a K-dimensional approximation space, which we denote by \({\mathcal {R}}_{m}(X_K)\). Let \(\{c_k\}_{k=1}^K\) be a basis in \({\mathcal {R}}_{m}(X_K)\). Further, we have the grid points \(Y_N =\{y_1, \cdots , y_N \} \subset [x_L, x_R]\) which include the boundaries. They are used to define the RBFSBP operators.

Definition 4

(RBF Summation-by-Parts Operators) An operator \(D = P^{-1} Q \in {\mathbb {R}}^{N \times N}\) is an RBFSBP operator on the grid points \(Y_N\) if

(i) \(D c_k({\textbf{y}}) = c_k'({\textbf{y}})\) for \(k=1,2,\dots ,K\) and \(c_k \in {\mathcal {R}}_{m}(X_K)\),

(ii) \(P \in {\mathbb {R}}^{N\times N}\) is a symmetric positive definite matrix, and

(iii) \(Q + Q^T = B\).

In the classical RBF discretizations, the exactness of the derivatives of the cardinal functions is the only condition which is imposed. However, to construct energy stable RBF methods, the existence of an adequate norm is as important as the condition on the derivative matrix. Hence it is often necessary to use a higher number of grid points than centers to ensure the existence of a positive quadrature formula to guarantee the conditions in Definition 4.

The norm matrix P in Definition 4 has only been assumed to be symmetric positive definite. However, as mentioned above, for the remainder of this work we restrict ourselves to diagonal norm matrices \(P={{\,\textrm{diag}\,}}(\omega _1, \cdots ,\omega _N)\), where \(\omega _i\) is the associated quadrature weight, because diagonal-norm operators are

i) required for certain splitting techniques [17, 37, 40] and variable coefficients, see for example (31),

ii) better suited to conserve nonquadratic quantities for nonlinear stability [32], and

iii) easier to extend to, for instance, curvilinear coordinates [5, 43, 48].

Remark 2

In Definition 4, we have two sets of points, the interpolation points \(X_K\) and the grid points \(Y_N\). The derivative matrix is constructed to be exact for the cardinal functions \(c_k\) related to the interpolation points \(X_K\). However, all operators are constructed with respect to the grid points \(Y_N\), i.e., \(D,P,Q \in {\mathbb {R}}^{N\times N}\). This is in particular essential for ensuring the existence of a suitable norm matrix P. This means that the size of the SBP operator is determined by the quadrature formula. Hence, the number of grid points and their positioning strongly affect the size of the operators and thus the efficiency of the underlying method itself. In the future, this will be investigated in more detail.

4.2 Existing Collocation RBF Methods and the FSBP Property

In this part, we briefly investigate whether classical collocation RBF methods fulfill the FSBP property for their underlying function space. In the classical collocation RBF approach, the centers coincide with the grid points, i.e., \(X_K=Y_N\). It was shown in [25] that a diagonal-norm \({\mathcal {F}}\)-exact SBP operator exists on the grid \(Y_N = \{y_1, \cdots , y_N\} \) if and only if a positive and \(({\mathcal {F}}{\mathcal {F}})'\)-exact quadrature formula exists on the same grid (the same requirement as for classical SBP operators). The differentiation matrix \(D \in {\mathbb {R}}^{N \times N}\) of a collocation RBF method can thus only satisfy the FSBP property if there exists a positive and \(({\mathcal {R}}_{m}(Y_N) {\mathcal {R}}_{m}(Y_N))'\)-exact quadrature formula on the grid \(Y_N\). The weights \({\textbf{w}} \in {\mathbb {R}}^N\) of such a quadrature formula would have to satisfy

with the coefficient matrix G and vector of moments \({\textbf{m}}\) given by

In (33), \(\{g_l\}_{l=1}^L\) is a basis of the function space \(({\mathcal {R}}_{m}(Y_N) {\mathcal {R}}_{m}(Y_N))'\). In many cases, the dimension L of \(({\mathcal {R}}_{m}(Y_N) {\mathcal {R}}_{m}(Y_N))'\) is larger than the dimension N of \({\mathcal {R}}_{m}(Y_N)\). In this case, \(L > N\), so the linear system (32) is overdetermined and in general has no solution. This is demonstrated in Table 2, which reports the residual and smallest element of the least squares solution (solution with minimal \(\ell ^2\)-error) of (32) for different cases. In all of our considered tests, the residuals were larger than zero, indicating that the classical RBF operators investigated are not in SBP form. Similar results are obtained for non-diagonal norm matrices P, as outlined in the Appendix.

Remark 3

(Least Squares RBF Methods) It was observed in [51, 53] that using least squares RBF methods instead of collocation RBF methods leads to improved stability. The above discussion sheds some new light on this observation. For a least squares RBF method, the centers \(X_K\) and grid points \(Y_N\) differ, with \(N > K\). The differentiation matrix D of the method can satisfy the FSBP property if and only if there exists a positive and \(({\mathcal {R}}_{m}(X_K) {\mathcal {R}}_{m}(X_K))'\)-exact quadrature formula on the grid \(Y_N\). If N is sufficiently large, the linear system (32) becomes underdetermined (\(N>L\)) and will eventually admit a positive solution \({\textbf{w}}\), see [20]. Indeed, one possible positive and exact solution is given by least squares quadratures, which we will subsequently use to construct RBF methods satisfying the SBP property. The (quasi-)Monte Carlo formula, used as part of the stability analysis in [53], gives a positive but inexact quadrature formula and therefore does not yield an exact SBP property.

4.3 Existence and Construction of RBFSBP Operators

Translating the main result from [25], we need quadrature formulas that ensure the exact integration of \(({\mathcal {R}}_{m}(X_K) {\mathcal {R}}_{m}(X_K))'\). For RBF spaces, we use least squares formulas, which can be used on almost arbitrary sets of grid points \(Y_N\) and to any degree of exactness. The least squares ansatz always leads to a positive and \(({\mathcal {R}}_{m}(X_K) {\mathcal {R}}_{m}(X_K))'\)-exact quadrature formula as long as a sufficiently large number of data points \(Y_N\) is used.

Remark 4

Existing results on positivity and exactness of least squares quadrature formulas usually assume that the function space contains constants [19, 20]. Translating this to our setting, we need this property to be fulfilled for \(({\mathcal {R}}_{m}(X_K) {\mathcal {R}}_{m}(X_K))'\). Therefore, \({\mathcal {R}}_{m}(X_K)\) should contain constants and linear functions. However, this assumption is primarily made for technical reasons and can be relaxed. Indeed, even when \({\mathcal {R}}_{m}(X_K)\) only contained constants, we were still able to construct positive and \(({\mathcal {R}}_{m}(X_K) {\mathcal {R}}_{m}(X_K))'\)-exact least squares quadrature formulas in all our examples. Future work will provide a theoretical justification for this.

Due to the least squares ansatz, we may always assume that we have a positive and \(({\mathcal {R}}_{m}(X_K) {\mathcal {R}}_{m}(X_K))'\)-exact quadrature formula. With that ensured, we summarize the algorithm to construct a diagonal-norm RBFSBP operator in the following steps:

1. Build P by setting the quadrature weights on the diagonal.

2. Split Q into its known symmetric part \(\frac{1}{2}B\) and its unknown anti-symmetric part \(Q_A\).

3. Calculate \(Q_A\) by using

$$\begin{aligned} Q_A C = P C_x -\frac{1}{2} B C \quad \text {with} \quad C = [c_1({\textbf{y}}), \dots , c_K({\textbf{y}})] = \begin{bmatrix} c_1(y_1) & \dots & c_K(y_1) \\ \vdots & & \vdots \\ c_1(y_N) & \dots & c_K(y_N) \end{bmatrix}, \end{aligned}$$

where \(C_x = [c_1'({\textbf{y}}), \dots , c_K'({\textbf{y}})]\) is defined analogously to C and \(\{c_1,\dots ,c_K\}\) is a basis of the K-dimensional function space.

4. Use \(Q_A\) in \(Q= Q_A+\frac{1}{2} B\) to calculate Q.

5. \(D=P^{-1}Q\) gives the RBFSBP operator.

In the RBF context, one can always use cardinal functions as the basis. However, for simplicity, it can be wise to use another basis representation derived from the cardinal functions.
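
The construction steps can be sketched in a few lines of NumPy for the cubic kernel \(\varphi (r)=r^3\) augmented with constants. This is a sketch under stated assumptions, not the authors' implementation: all function names are hypothetical, the cardinal basis is used directly, the quadrature weights are taken as the unweighted minimum-norm least squares solution (the least squares quadrature referred to in the text may use a weighted variant), and \(Q_A\) is obtained from one particular pseudoinverse-based formula, since the text does not specify how the linear system in step 3 is solved. The moments are computed from \(\int (c_k c_l)' \,\textrm{d}x = [c_k c_l]_{x_L}^{x_R}\), assuming the first and last grid points are the boundaries.

```python
import numpy as np

def cardinal_basis(centers):
    """Cardinal functions c_k and derivatives for phi(r) = r^3 plus constants (m = 1)."""
    K = len(centers)
    Phi = np.abs(centers[:, None] - centers[None, :]) ** 3
    ones = np.ones((K, 1))
    A = np.block([[Phi, ones], [ones.T, np.zeros((1, 1))]])
    coef = np.linalg.solve(A, np.vstack([np.eye(K), np.zeros((1, K))]))
    alpha, beta = coef[:K], coef[K]              # column k belongs to c_k

    def C(y):                                    # C[n, k] = c_k(y_n)
        d = y[:, None] - centers[None, :]
        return np.abs(d) ** 3 @ alpha + beta

    def Cx(y):                                   # Cx[n, k] = c_k'(y_n)
        d = y[:, None] - centers[None, :]
        return 3.0 * d * np.abs(d) @ alpha

    return C, Cx

def ls_quadrature(C, Cx, y):
    """Minimum-norm weights that integrate all (c_k c_l)' exactly, if possible."""
    Cy, Cxy = C(y), Cx(y)
    ends = C(np.array([y[0], y[-1]]))            # basis values at x_L and x_R
    K = Cy.shape[1]
    rows, moments = [], []
    for k in range(K):
        for l in range(k, K):
            rows.append(Cxy[:, k] * Cy[:, l] + Cy[:, k] * Cxy[:, l])
            moments.append(ends[1, k] * ends[1, l] - ends[0, k] * ends[0, l])
    G, m = np.array(rows), np.array(moments)
    w = np.linalg.lstsq(G, m, rcond=1e-12)[0]
    return w, np.linalg.norm(G @ w - m)

def rbfsbp_operator(centers, y):
    """Follow steps 1-5: P from the weights, then Q_A, Q, and D = P^{-1} Q."""
    C, Cx = cardinal_basis(centers)
    w, residual = ls_quadrature(C, Cx, y)
    Cy, Cxy = C(y), Cx(y)
    N = len(y)
    P = np.diag(w)                               # step 1
    B = np.zeros((N, N))
    B[0, 0], B[-1, -1] = -1.0, 1.0
    R = P @ Cxy - 0.5 * B @ Cy                   # right-hand side of step 3
    Cp = np.linalg.pinv(Cy)
    M = R @ Cp
    QA = M - M.T @ (np.eye(N) - Cy @ Cp)         # satisfies QA @ Cy = R
    QA = 0.5 * (QA - QA.T)                       # remove round-off asymmetry
    Q = QA + 0.5 * B                             # step 4
    D = np.linalg.solve(P, Q)                    # step 5
    return w, P, Q, D, residual

# Usage: three centers and four equidistant grid points on [0, 1].
centers = np.array([0.0, 0.5, 1.0])
y = np.linspace(0.0, 1.0, 4)
w, P, Q, D, residual = rbfsbp_operator(centers, y)
```

The formula for `QA` is anti-symmetric up to round-off only when the quadrature is exact for \(({\mathcal {R}}_{1}(X_K){\mathcal {R}}_{1}(X_K))'\), so `residual` should be checked before `D` is used. For the configuration in the usage lines, the exact weights are uniquely determined by the exactness conditions and agree with the norm matrix reported in Section 5.1.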

5 Examples of RBFSBP operators

Next, we construct RBFSBP operators for a few frequently used kernels (see Footnote 2). We consider a set of K points, \({X_K = \{x_1,\dots ,x_K\} \subset [x_L,x_R]}\), and assume that these include the boundaries \(x_L\) and \(x_R\). Henceforth, we will consider the kernels listed in Table 1 and augment them with constants. The set of all RBF interpolants (10) including constants forms a K-dimensional approximation space, which we denote by \({\mathcal {R}}_{1}(X_K)\). Recall that \(m=1\) implies that constants, but no higher-order polynomials, are included in the RBF approximation space. This space is spanned by the cardinal functions \(c_k \in {\mathcal {R}}_{1}(X_K)\), which are uniquely determined by (16). The matching constraint is then simply \( \sum _{k=1}^K \alpha _k = 0. \) That is,

with the approximation space \({\mathcal {R}}_{1}(X_K)\) having dimension K.

The product space \({\mathcal {R}}_{1}(X_K){\mathcal {R}}_{1}(X_K)\) and its derivative space \(({\mathcal {R}}_{1}(X_K){\mathcal {R}}_{1}(X_K))'\) are respectively given by

Note that the right-hand sides of (35) and (36) both use \(K^2\) elements to span the product space \({\mathcal {R}}_{1}(X_K){\mathcal {R}}_{1}(X_K)\) and its derivative space \(({\mathcal {R}}_{1}(X_K){\mathcal {R}}_{1}(X_K))'\). However, these elements are not linearly independent and the dimensions of \({\mathcal {R}}_{1}(X_K){\mathcal {R}}_{1}(X_K)\) and \(({\mathcal {R}}_{1}(X_K){\mathcal {R}}_{1}(X_K))'\) are smaller than \(K^2\). Indeed, we can observe that \(c_k c_l = c_l c_k\) and the dimension of (35) is therefore bounded from above by

Subsequently, for ease of presentation, we round all reported numbers to the second decimal place.

5.1 RBFSBP Operators using Polyharmonic Splines

In the first test, we work with cubic polyharmonic splines, \(\varphi (r) = r^3\). On \([x_L,x_R] = [0,1]\) and for the centers \(X_3 = \{0,1/2,1\}\), the three-dimensional cubic RBF approximation space (34) is given by \( {\mathcal {R}}_{1}(X_3) = \textrm{span}\{ \, c_1, c_2, c_3 \, \} = \textrm{span}\{ \, b_1, b_2, b_3 \, \} \) with cardinal functions

and alternative basis functions (see Footnote 3)

We make the transformation to the basis representation \(\textrm{span}\{b_1,b_2, b_3\}\) to simplify the determination of \(({\mathcal {R}}_{1}(X_3){\mathcal {R}}_{1}(X_3))'\). In this alternative basis representation, the product space \({\mathcal {R}}_{1}(X_3){\mathcal {R}}_{1}(X_3)\) and its derivative space \(({\mathcal {R}}_{1}(X_3){\mathcal {R}}_{1}(X_3))' \) are respectively given by

Next, we have to find an \(({\mathcal {R}}_{1}(X_3){\mathcal {R}}_{1}(X_3))'\)-exact quadrature formula with positive weights. For the chosen \(N=4\) equidistant grid points, the least-squares quadrature formula has positive weights and is \(({\mathcal {R}}_{1}(X_3){\mathcal {R}}_{1}(X_3))'\)-exact. The points and weights are \({\textbf{x}} = \left[ 0, \frac{1}{3}, \frac{2}{3}, 1 \right] ^T\) and \( P = {{\,\textrm{diag}\,}}\left( \frac{16}{129}, \frac{81}{215}, \frac{81}{215}, \frac{16}{129}\right) \). The corresponding matrices Q and D of the RBFSBP operator \(D = P^{-1} Q\) obtained from the construction procedure described before are

This example was presented in less detail in [25].

5.2 RBFSBP Operators using Gaussian Kernels

Next, we consider the Gaussian kernel \( \varphi (r) = \exp (-r^2)\) on \([x_L,x_R] = [0,1]\) for the centers \(X_3 = \{0,1/2,1\}\). The three-dimensional Gaussian RBF approximation space (34) is given by \( {\mathcal {R}}_{1}(X_3) = \textrm{span}\{ \, c_1, c_2, c_3 \, \} \) with cardinal functions

Again, for \(N=4\) equidistant grid points, the least squares quadrature formula is exact and has positive weights. The points and weights are \( {\textbf{x}} = \left[ 0, \frac{1}{3}, \frac{2}{3}, 1 \right] ^T\) and \( P = {{\,\textrm{diag}\,}}\left( 0.15, 0.36, 0.36,0.15 \right) \). The corresponding matrices Q and D of the RBFSBP operator \(D = P^{-1} Q\) obtained from the construction procedure described before are

To include an example with non-equidistant centers, we also build matrices and FSBP operators with Halton points \(X_3\) for this case. Somewhat surprisingly, we need twice as many grid points as in the equidistant case to get a positive exact quadrature formula. We obtain an exact quadrature using the nodes \( {\textbf{x}} = \left[ 0, \frac{1}{7}, \dots , 1 \right] ^T\), i.e., \(x_i = i/7\) with \(i=0,\dots ,7\), and the weights \( P = {{\,\textrm{diag}\,}}\left( 0.04, 0.12, 0.19, 0.13, 0.04, 0.10, 0.30, 0.08 \right) \). The corresponding matrices Q and D are in \({\mathbb {R}}^{8 \times 8}\) and are given by

5.3 RBFSBP Operators using Multiquadric Kernels

As the last example, we consider the RBFSBP operators using multiquadric kernels \( \varphi (r) = \sqrt{1+r^2}\) on \([x_L,x_R] = [0,0.5]\) and centers \(X_3 = \{0,1/4,1/2\}\). The \(({\mathcal {R}}_{1}(X_3){\mathcal {R}}_{1}(X_3))'\)-exact least-squares ansatz yields the points \({\textbf{x}} = \left[ 0, \frac{1}{6}, \frac{1}{3}, \frac{1}{2}\right] ^T\) and norm matrix \( P = {{\,\textrm{diag}\,}}\left( 0.07, 0.18, 0.18,0.07 \right) . \) With this norm matrix, we finally obtain

6 Numerical Results

For all numerical tests presented in this work, we used explicit SSP-RK methods. The step size \(\Delta t\) was chosen sufficiently small so as not to influence the accuracy. To guarantee stability, we applied weakly enforced boundary conditions using Simultaneous Approximation Terms (SATs), as is usually done in the SBP community [1, 2, 39], and for RBFs in [24]. To avoid ill-conditioned matrix calculations inside the construction and application, we use a multi-block structure in some of our tests. In each block, a global RBF method is used and the blocks are coupled using SATs as in [4, 26]. While this is beyond the scope of this work, future work will address other techniques to overcome ill-conditioned matrices, including tailored point distributions, kernels, shape parameters, alternative bases, and efficient implementations. Additionally, most of the results can also be computed with classical SBP-FD operators, which yield analogous results. We stress that this section provides a proof of concept: we focus on global RBF methods and their stability properties, not on efficiency or real-world application tests.
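The text does not state which SSP-RK scheme was used. As an illustration only, the following sketch assumes the classical three-stage, third-order SSP method in Shu-Osher form; the function names `ssprk3_step` and `integrate` are hypothetical, and the semi-discrete right-hand side is passed in as a plain Python function.

```python
import math

def ssprk3_step(rhs, u, dt):
    """One step of the three-stage, third-order SSP Runge-Kutta method.

    Each stage is a convex combination of forward Euler steps.
    """
    def combine(alpha, x, beta, y):
        return [alpha * xi + beta * yi for xi, yi in zip(x, y)]

    u1 = combine(1.0, u, dt, rhs(u))                # Euler step from u
    e2 = combine(1.0, u1, dt, rhs(u1))              # Euler step from u1
    u2 = combine(0.75, u, 0.25, e2)
    e3 = combine(1.0, u2, dt, rhs(u2))              # Euler step from u2
    return combine(1.0 / 3.0, u, 2.0 / 3.0, e3)

def integrate(rhs, u0, t_end, n_steps):
    """Advance u' = rhs(u) from t = 0 to t = t_end with a fixed step size."""
    dt = t_end / n_steps
    u = list(u0)
    for _ in range(n_steps):
        u = ssprk3_step(rhs, u, dt)
    return u

if __name__ == "__main__":
    # Scalar test problem u' = -u with exact solution exp(-t).
    u = integrate(lambda v: [-x for x in v], [1.0], 1.0, 100)
    print(abs(u[0] - math.exp(-1.0)))
```

For a semi-discretization of the form used here, `rhs` would return the spatial operator applied to the coefficient vector, including the SAT contribution.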

Most numerical simulations are performed using polyharmonic splines to avoid a discussion about the shape parameter, which strongly affects the accuracy and stability properties of the RBF approach [14, 30]. In future work, we will investigate the connection between the selection of the shape parameter and the construction of RBFSBP operators.

6.1 Advection with Periodic Boundary Conditions

In the first test, we consider the linear advection equation \(\partial _t u + a \partial _x u = 0\)

with \(a=1\) and periodic BCs. The initial condition is \(u(x,0) =\textrm{e}^{-20x^2} \) from the introductory example (1) and the domain is \([-1,1]\). We are in the same setting as shown in Figure 1. We compare a classical collocation RBF method with our new RBFSBP methods, focus on cubic splines, and take the final time to be \(T=2\). In Figure 2a and Figure 2c, the solutions obtained with the collocation RBF method and the RBFSBP approach are plotted. In Figure 2a, we select \(K=15\) for both approximations and \(N=29\) evaluation points for the RBFSBP operator. The collocation RBF method dampens the Gaussian bump significantly, while the RBFSBP method does better. The decrease can also be seen in the energy profile in Figure 2b, where the collocation approach loses more energy. To obtain a comparable result between the collocation and RBFSBP methods, we double the number of interpolation points K for the collocation RBF method in our second simulation, cf. Figure 2c and Figure 2d. The RBFSBP method still performs better, which demonstrates the advantage of the RBFSBP approach.

Next, we focus only on RBFSBP methods and demonstrate the high accuracy of the approach by increasing the degrees of freedom. In Figure 3, we plot the result and the energy using Gaussian (\(\epsilon =1\)) and cubic kernels. We use \(K=5\) and \(I=20\) blocks. We obtain a highly accurate solution and the energy remains constant.

6.2 Advection with Inflow Boundary Conditions

In the following test from [24], we consider the advection equation (44) with \(a=1\) in the domain [0, 1]. The BC and IC are

We have a smooth IC and an inflow BC at the left boundary \(x=0\). We apply cubic splines with constants as basis functions and the discretization

with the simultaneous approximation term (SAT) \( {\mathbb {S}} := [{\mathbb {S}}_0,0,\dots ,0]^T, \quad {\mathbb {S}}_0 := - (u_0-g).\) In Figures 4a - 4b, we show the solutions at time \(t = 0.5\) with \(K=5\) and \(I=15, 20\) elements using equidistant points and randomly disturbed equidistant points. The numerical solution using disturbed points in Figure 4a has wiggles, but these are reduced by increasing the number of blocks, see Figure 4b. Note that the wiggles are more pronounced if the points are not distributed symmetrically around the midpoints, e.g. for the Halton points in Figures 4c - 4d. Next, we focus on the error behavior. As mentioned before, RBF methods can reach spectral accuracy for smooth solutions. In Figure 5, the error behavior for \(K=3-7\) basis functions using 20 blocks is plotted on a logarithmic scale. Spectral accuracy is indicated by the (almost) constant slope.
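The effect of the SAT on the energy can be illustrated with a small sketch. It assumes that the semi-discretization has the usual SBP-SAT form \({\textbf{u}}_t + a D {\textbf{u}} = P^{-1} {\mathbb {S}}\), and it deliberately swaps in the classical second-order SBP-FD operator for the RBFSBP operator, since only the property \(Q + Q^T = B\) matters for the estimate. The helper names `sbp_fd2`, `sat_rhs`, and `energy_rate` are hypothetical.

```python
def sbp_fd2(n, h):
    """Classical second-order SBP-FD operator on n + 1 equidistant nodes.

    Returns the diagonal of P and the matrix Q, which satisfy
    Q + Q^T = B = diag(-1, 0, ..., 0, 1) and D = P^{-1} Q.
    """
    p = [h] * (n + 1)
    p[0] = p[n] = h / 2
    q = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(n):
        q[i][i + 1] = 0.5
        q[i + 1][i] = -0.5
    q[0][0], q[n][n] = -0.5, 0.5
    return p, q

def sat_rhs(u, g, p, q, a=1.0):
    """du/dt = -a D u + P^{-1} S with S = [-(u_0 - g), 0, ..., 0]^T."""
    n = len(u)
    rhs = [-a * sum(q[i][j] * u[j] for j in range(n)) / p[i] for i in range(n)]
    rhs[0] += -(u[0] - g) / p[0]
    return rhs

def energy_rate(u, g, p, q, a=1.0):
    """Time derivative of the discrete energy u^T P u, i.e. 2 u^T P du/dt."""
    return 2.0 * sum(pi * ui * ri for pi, ui, ri in zip(p, u, sat_rhs(u, g, p, q, a)))

if __name__ == "__main__":
    n = 10
    p, q = sbp_fd2(n, 1.0 / n)
    u = [(i / n) ** 2 + 0.3 for i in range(n + 1)]
    g = 0.2
    # For a = 1 the SBP property gives g^2 - (u_0 - g)^2 - u_N^2.
    print(energy_rate(u, g, p, q), g * g - (u[0] - g) ** 2 - u[-1] ** 2)
```

For \(a=1\) the energy rate equals \(g^2 - (u_0-g)^2 - u_N^2\), so the discrete energy is bounded by the boundary data, mirroring the continuous estimate.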

Remark 5

(Accuracy) For different point selections, we obtain similar convergence rates, i.e., the same slopes, but the size of the error can differ. This can be seen in the numerical results using Halton points, which are not as accurate as the ones using equidistant points, cf. Figure 4.

Error plots using cubic kernels with approximation space \(K=4-7\) on equidistant points with \(I=20\) blocks. For \(K=5\), the errors correspond to the solutions printed in the red dotted line on the right side of Figure 4

6.3 Advection-Diffusion

Next, the boundary layer problem from [59] is considered

The initial condition is \(u(x,0)=2x\) and the boundary conditions are \(u(0,t)=0\) and \(u(0.5,t)=1\). The exact steady state solution is \( u(x)= \frac{\exp \left( \frac{x}{\kappa } \right) -1}{\exp \left( \frac{1}{2\kappa } \right) -1}. \) Cubic splines and Gaussian kernels with shape parameter 1 are used together with constants. We expect to obtain better results using Gaussian kernels due to the structure of the steady state solution. In Figure 6, we show the solutions for different times using \(K=5\) elements on equidistant grid points with diffusion parameters \(\kappa =0.2\) and \(\kappa =0.1\).
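The stated steady state can be checked by hand. The following sketch assumes that the boundary layer problem has the form \(\partial _t u + \partial _x u = \kappa \partial _{xx} u\) on \((0, 1/2)\); this form is inferred from the exact solution and the boundary conditions given above and is not quoted from [59].

```latex
\begin{align*}
  \text{steady state:}\quad & u'(x) = \kappa\, u''(x)
    \;\Longrightarrow\; u(x) = A + B\,\mathrm{e}^{x/\kappa}, \\
  u(0) = 0:\quad & A = -B, \\
  u(1/2) = 1:\quad & B\left(\mathrm{e}^{1/(2\kappa)} - 1\right) = 1, \\
  \text{hence}\quad & u(x) = \frac{\mathrm{e}^{x/\kappa} - 1}{\mathrm{e}^{1/(2\kappa)} - 1}.
\end{align*}
```

Since \(\textrm{e}^{x/\kappa }\) varies on the length scale \(\kappa \), the solution stays close to zero away from \(x=1/2\) and rises to 1 within a layer of width \({\mathcal {O}}(\kappa )\), which is why the profile for \(\kappa =0.1\) is steeper than for \(\kappa =0.2\).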

Some overshoots can be seen in the steeper case \(\kappa =0.1\). This behavior can be circumvented by using more degrees of freedom and multiple blocks, which we deliberately avoid in this test.

6.4 2D Linear Advection

We conclude our examples with a 2D case and consider the linear advection equation \(\partial _t u + a \partial _x u + b \partial _y u = 0\)

with constants \(a,b \in {\mathbb {R}}\).

6.4.1 Periodic Boundary Conditions

In our first test, \(a=b=1\) are used in (47). The initial condition is \( u(x,y,0)= \textrm{e}^{-20\left( (x-0.5)^2+(y-0.5)^2 \right) }\) for \((x,y) \in [0,1]^2\) and periodic boundary conditions, i.e., \(u(0,y,t)=u(1,y,t)\) and \(u(x,0,t)=u(x,1,t)\), are considered. The coupling at the boundary was again done via SATs. We use cubic kernels (\(K=13\)) equipped with constants in each direction. Figure 7b illustrates the numerical solution at time \(T=1\). By then, the bump has left the domain once through the upper right corner, re-entered at the lower left corner, and returned to its initial position. No visible differences between the numerical solution at \(T=1\) and the initial condition can be seen. In Figure 7d, the energy is reported over time. We notice a slight decrease of energy when the bump is leaving the domain (at \(t=0.5\)) due to the weakly enforced, slightly dissipative SBP-SAT coupling.
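The reference solution for this test is the periodically extended initial bump transported along the direction \((a,b)\). A minimal sketch, with hypothetical function names `initial_bump` and `exact_periodic`, makes the two statements above concrete: the bump center sits at the corner at \(t=0.5\) and the initial state is recovered at \(T=1\).

```python
import math

def initial_bump(x, y):
    """Initial condition exp(-20 ((x - 0.5)^2 + (y - 0.5)^2)) on the unit square."""
    return math.exp(-20.0 * ((x - 0.5) ** 2 + (y - 0.5) ** 2))

def exact_periodic(x, y, t, a=1.0, b=1.0):
    """Transport the periodically extended bump with velocity (a, b)."""
    return initial_bump((x - a * t) % 1.0, (y - b * t) % 1.0)

if __name__ == "__main__":
    # At t = 0.5 the bump center has moved to the corner of the domain.
    print(exact_periodic(0.0, 0.0, 0.5))
    # At T = 1 the solution coincides with the initial condition.
    print(exact_periodic(0.3, 0.7, 1.0) - initial_bump(0.3, 0.7))
```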

6.4.2 Dirichlet-Inflow Conditions

In the last simulation, we consider (47) with \(a=0.5\), \(b=1\), initial condition \(u(x,y,0)= \textrm{e}^{-20\left( (x-0.25)^2+(y-0.25)^2 \right) }\) for \((x,y) \in [0,1]^2\) and zero inflow \(u(0,y,t)=0\), and \(u(x,0,t)=0\). We again use cubic kernels (\(K=13\)) equipped with constants. The boundary conditions are enforced weakly via SATs. The initial condition lies in the lower left corner, cf. Figure 7a. In Figure 8b, the numerical solution is shown. The bump moves in y-direction with speed one and in x-direction with speed 0.5. Figure 8c shows a slight decrease of the energy over time due to the bump leaving the domain.

6.5 Conditioning of the RBFSBP operators

A significant challenge associated with global RBF methods is that a direct approach leads to a large and dense differentiation matrix. Furthermore, the differentiation matrix, the corresponding norm matrix, and the associated Vandermonde matrix can become highly ill-conditioned, resulting in stability issues of the scheme. To address these issues, extensive efforts have been made to analyze the conditioning of RBF methods and to improve the schemes. To give a concrete example: the selection of basis functions, together with their associated shape parameters and point distributions, can substantially impact the efficiency of the methods. As noted in [44], a close relationship exists between the flatness of RBFs (i.e., a small shape parameter) and the resulting ill-conditioning of the matrix in equation (13). This directly affects the accuracy of the RBF interpolant, a concept referred to as the uncertainty or trade-off principle of direct RBF methods. For potential solutions, as well as applications and comprehensive discussions on this matter, we direct readers to references such as [16, 33, 36, 45, 46, 57].

In this paper, we focus on other issues but provide a concrete example of the condition numbers of the differentiation matrices, the norm matrices, and the Vandermonde matrices in the collocation approach. We consider cubic splines on equidistant points, similar to the setup in Subsection 6.1. The numerical values of these condition numbers are presented in Table 3. Notably, the condition numbers increase; in particular, the condition number of the norm matrix grows with the number of evaluation points N.

Table 3 is provided to give a first impression of the condition numbers in classical RBF methods. We avoid further investigation of the collocation approach and instead point to the aforementioned literature concerning this situation, cf. [10, 15, 16, 33, 45, 46, 56] and the references therein. We then focus on assessing the efficiency and conditioning of our RBFSBP operators. Note that the differentiation matrices within the (function-space) SBP framework are not regular, while the norm matrix remains exact within the finite function space. Therefore, focusing on the condition numbers of D and P does not provide any information about the performance of our algorithm: if these matrices exist, the schemes are energy stable, as demonstrated in Section 3. The challenging aspect within our RBFSBP framework lies in constructing an appropriate norm matrix P corresponding to the derivative matrix D. As underscored in Remark 2, the dimensions of the matrices are determined by the availability of a suitable norm matrix. In our construction procedure, we apply a least-squares method to build P, as elaborated in Section 4.3. This involves iteratively solving the linear systems presented in equation (32), increasing the number of evaluation points with each iteration. The iteration continues until a suitable norm matrix P corresponding to the derivative matrix D is found, ensuring the FSBP property.
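The iteration described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the monomials \(1, x, \dots , x^d\) on \([0,1]\) stand in for the function space \(({\mathcal {R}}_{1}{\mathcal {R}}_{1})'\), the minimum-norm least-squares weights are computed as \({\textbf{w}} = G^T (G G^T)^{-1} {\textbf{m}}\) in exact rational arithmetic, and the names `solve`, `least_squares_weights`, and `positive_exact_rule` are hypothetical.

```python
from fractions import Fraction

def solve(a, b):
    """Solve a x = b exactly by Gauss-Jordan elimination (a square, nonsingular)."""
    n = len(a)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n] for row in aug]

def least_squares_weights(degree, n_points):
    """Minimum-norm weights that integrate monomials up to `degree` exactly on [0, 1]."""
    xs = [Fraction(i, n_points - 1) for i in range(n_points)]
    g = [[x ** k for x in xs] for k in range(degree + 1)]    # rows: basis functions
    m = [Fraction(1, k + 1) for k in range(degree + 1)]      # exact moments
    gram = [[sum(u * v for u, v in zip(ri, rj)) for rj in g] for ri in g]
    lam = solve(gram, m)
    w = [sum(lam[k] * g[k][j] for k in range(degree + 1)) for j in range(n_points)]
    return xs, w

def positive_exact_rule(degree, max_points=400):
    """Increase the number of equidistant points until all weights are positive."""
    for n_points in range(max(degree + 1, 2), max_points + 1):
        xs, w = least_squares_weights(degree, n_points)
        if all(wi > 0 for wi in w):
            return xs, w
    raise RuntimeError("no positive rule found up to max_points")

if __name__ == "__main__":
    xs, w = positive_exact_rule(8)
    print(len(w), all(wi > 0 for wi in w))
```

The diagonal norm matrix would then be \(P = {{\,\textrm{diag}\,}}({\textbf{w}})\). For degree 8, the interpolatory rule on nine equidistant points has negative weights, so the loop has to oversample before it terminates.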

Throughout all our computations up to this point, the evaluation points Y have been chosen equidistant. The matrix G is a Vandermonde-like matrix. It is well known that, in classical polynomial interpolation, the Vandermonde matrix formulated with respect to an unsuitable basis, e.g. monomials, becomes highly ill-conditioned for increasing N. We see a similar behavior for most of our matrices \(G \in {\mathbb {R}}^{L\times N}\), even for small K.
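The growth of the condition number for the monomial basis is easy to reproduce. The sketch below is only an illustration of the classical polynomial case mentioned above, not of the matrices G used in the paper. It uses the infinity-norm condition number (an assumption made for simplicity, since it can be computed exactly with rational arithmetic) and hypothetical helper names.

```python
from fractions import Fraction

def vandermonde(n):
    """Square Vandermonde matrix for monomials on n equidistant points in [0, 1]."""
    xs = [Fraction(i, n - 1) for i in range(n)]
    return [[x ** j for j in range(n)] for x in xs]

def inverse(a):
    """Exact inverse by Gauss-Jordan elimination."""
    n = len(a)
    aug = [row[:] + [Fraction(int(i == j)) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def norm_inf(a):
    """Maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in a)

def cond_inf(a):
    """Condition number in the infinity norm."""
    return norm_inf(a) * norm_inf(inverse(a))

if __name__ == "__main__":
    for n in (4, 6, 8, 10):
        print(n, float(cond_inf(vandermonde(n))))
```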

In the subsequent analysis, we provide an initial study of the overall performance of our algorithm focussing on these three properties: the condition number of \(G^TG\), the number of points required for constructing the matrix P, and the norm of matrix D. We use cubic splines on equidistant points for the center points X.

Table 4 offers a first impression of the conditioning of our methods, revealing that in this simulation all three values remain small. This finding is consistent with the observations made in our previous numerical simulations, where no issues were encountered.

In Table 5, we present comparable results, now employing Halton points as the center points X. Note that even for small values of K, the condition number of \(G^TG\) becomes high. A noteworthy observation is that increasing the dimension K does not necessarily lead to an increase in the required number of evaluation points N. Note that our evaluation procedure remains confined to equidistant points, i.e., we employ an equidistant point distribution for the evaluation points Y as opposed to using Halton points or any optimization procedure for the point distribution. While potential enhancements could stem from adopting different point selections for Y, these considerations are deferred to future investigations.

As mentioned before, the shape parameter strongly affects the condition numbers of the corresponding RBF methods. For flat RBFs, meaning small \(\epsilon \), the matrices become ill-conditioned. We see a similar behavior for the RBFSBP operators. In Table 6, we give the numbers using \(\epsilon =1\) in a Gaussian kernel. We recognize that the condition number of \(G^TG\) is high and stress that, even if we could derive G, our construction procedure of the RBFSBP methods from Subsection 4.3 would be problematic. The reason is that we have to solve a linear system to obtain \(Q_A\), where the corresponding Vandermonde matrix is also ill-conditioned. By increasing the shape parameter to 5, we get better results, as reported in Table 7. In this example, we do not run into the ill-conditioning problem and we can increase the dimension K.
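The influence of the shape parameter can be illustrated on the plain Gaussian kernel matrix. The sketch below is not the matrix \(G^TG\) from the tables: it assumes the kernel \(\varphi (r) = \exp (-(\epsilon r)^2)\) on three equidistant centers, measures conditioning in the infinity norm for simplicity, and uses hypothetical helper names.

```python
import math

def gaussian_kernel_matrix(centers, eps):
    """Kernel matrix with entries exp(-(eps |x_i - x_j|)^2)."""
    return [[math.exp(-(eps * (xi - xj)) ** 2) for xj in centers] for xi in centers]

def inverse(a):
    """Inverse by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def cond_inf(a):
    """Condition number in the infinity norm (maximum absolute row sum)."""
    def norm(m):
        return max(sum(abs(v) for v in row) for row in m)
    return norm(a) * norm(inverse(a))

if __name__ == "__main__":
    centers = [0.0, 0.5, 1.0]
    for eps in (1.0, 5.0):
        print(eps, cond_inf(gaussian_kernel_matrix(centers, eps)))
```

For \(\epsilon =5\) the kernel matrix is close to the identity, whereas for \(\epsilon =1\) the basis functions overlap strongly and the condition number is noticeably larger.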

7 Concluding Thoughts

RBF methods are a popular tool for numerical PDEs. However, despite their success for problems with sufficient inherent dissipation, stability issues are often observed for advection-dominated problems. In this work, we used the FSBP theory combined with a weak enforcement of BCs to develop provable energy-stable RBF methods. We found that one can construct RBFSBP operators by using oversampling to obtain suitable positive quadrature formulas. Existing RBF methods do not satisfy such an RBFSBP property, either because they are based on collocation or because an inappropriate quadrature is used. This is demonstrated for simple test cases and the one-dimensional setting. Our findings imply that the FSBP theory provides a building block for systematically developing stable RBF methods, filling a critical gap in the RBF theory.

In future work, it would be interesting to analyze the ill-conditioning of the matrices with respect to the shape parameter using well-known techniques from the RBF community and to study the connection between the classical RBF and the RBFSBP framework. Additionally, it would be interesting to improve the quadrature procedure in the construction and optimize the selection of the evaluation points Y to avoid the ill-conditioning of G. Also, instead of working with the basis representation of the cardinal functions, one may change the basis to avoid ill-conditioning of the Vandermonde matrices. However, these points are left for future work. Our investigation is a first consideration of the conditioning properties of the RBFSBP approach. Additionally, future work will address the extension to local RBF methods and multiple dimensions on complex domains.

Data Availability

Parts of the MATLAB code to replicate the results are provided in the corresponding repository https://github.com/phioeffn/Energy_stable_RBF. Further parts are not public but are available from the corresponding author on reasonable request.

Notes

By “adequate projection”, we mean either evaluating the function f at the grid points, or employing \(L^2\) projection operators on f, leading to the projection of f into the underlying approximation spaces.

The MATLAB code to replicate the results is provided in the corresponding repository https://github.com/phioeffn/Energy_stable_RBF.

This basis can be constructed using simple Gaussian elimination.

References

Abgrall, R., Nordström, J., Öffner, P., Tokareva, S.: Analysis of the SBP-SAT stabilization for finite element methods I: linear problems. J. Sci. Comput. 85(2), 28 (2020). https://doi.org/10.1007/s10915-020-01349-z

Abgrall, R., Nordström, J., Öffner, P., Tokareva, S.: Analysis of the SBP-SAT stabilization for finite element methods part ii: Entropy stability. Communications on Applied Mathematics and Computation pp. 1–23 (2021)

Buhmann, M.D.: Radial functions on compact support. Proc. Edinb. Math. Soc., II. Ser. 41(1), 33–46 (1998). https://doi.org/10.1017/S0013091500019416

Carpenter, M.H., Nordström, J., Gottlieb, D.: Revisiting and extending interface penalties for multi-domain summation-by-parts operators. J. Sci. Comput. 45(1–3), 118–150 (2010). https://doi.org/10.1007/s10915-009-9301-5

Chan, J., Del Rey Fernández, D.C., Carpenter, M.H.: Efficient entropy stable Gauss collocation methods. SIAM J. Sci. Comput. 41(5), a2938–a2966 (2019). https://doi.org/10.1137/18M1209234

Cuomo, S., Sica, F., Toraldo, G.: Greeks computation in the option pricing problem by means of RBF-PU methods. J. Comput. Appl. Math. 376, 14 (2020). https://doi.org/10.1016/j.cam.2020.112882

Dehghan, M., Mohammadi, V.: A numerical scheme based on radial basis function finite difference (RBF-FD) technique for solving the high-dimensional nonlinear Schrödinger equations using an explicit time discretization: Runge-Kutta method. Comput. Phys. Commun. 217, 23–34 (2017)

Del Rey Fernández, D.C., Hicken, J.E., Zingg, D.W.: Review of summation-by-parts operators with simultaneous approximation terms for the numerical solution of partial differential equations. Comput. Fluids 95, 171–196 (2014)

Fallah, A., Jabbari, E., Babaee, R.: Development of the Kansa method for solving seepage problems using a new algorithm for the shape parameter optimization. Comput. Math. Appl. 77(3), 815–829 (2019). https://doi.org/10.1016/j.camwa.2018.10.021

Fasshauer, G.E.: Meshfree Approximation Methods with MATLAB, vol. 6. World Scientific, Singapore (2007)

Flyer, N., Fornberg, B., Bayona, V., Barnett, G.A.: On the role of polynomials in RBF-FD approximations. I: Interpolation and accuracy. J. Comput. Phys. 321, 21–38 (2016). https://doi.org/10.1016/j.jcp.2016.05.026

Flyer, N., Lehto, E., Blaise, S., Wright, G.B., St-Cyr, A.: A guide to RBF-generated finite differences for nonlinear transport: shallow water simulations on a sphere. J. Comput. Phys. 231(11), 4078–4095 (2012). https://doi.org/10.1016/j.jcp.2012.01.028

Fornberg, B., Flyer, N.: Accuracy of radial basis function interpolation and derivative approximations on 1-D infinite grids. Adv. Computat. Math. 23(1–2), 5–20 (2005)

Fornberg, B., Flyer, N.: A Primer on Radial Basis Functions With Applications to the Geosciences. SIAM, New Delhi (2015)

Fornberg, B., Larsson, E., Flyer, N.: Stable computations with Gaussian radial basis functions. SIAM J. Sci. Comput. 33(2), 869–892 (2011)

Fornberg, B., Larsson, E., Flyer, N.: Stable computations with Gaussian radial basis functions. SIAM J. Sci. Comput. 33(2), 869–892 (2011). https://doi.org/10.1137/09076756X

Gassner, G.J., Winters, A.R., Kopriva, D.A.: Split form nodal discontinuous Galerkin schemes with summation-by-parts property for the compressible Euler equations. J. Comput. Phys. 327, 39–66 (2016). https://doi.org/10.1016/j.jcp.2016.09.013

Glaubitz, J.: Shock Capturing and High-Order Methods for Hyperbolic Conservation Laws. Logos Verlag Berlin GmbH (2020)

Glaubitz, J.: Stable high order quadrature rules for scattered data and general weight functions. SIAM J. Numer. Anal. 58(4), 2144–2164 (2020). https://doi.org/10.1137/19M1257901

Glaubitz, J.: Construction and application of provable positive and exact cubature formulas. IMA J. Numer. Anal. (2022). https://doi.org/10.1093/imanum/drac017

Glaubitz, J., Gelb, A.: Stabilizing radial basis function methods for conservation laws using weakly enforced boundary conditions. J. Sci. Comput. 87(2), 29 (2021). https://doi.org/10.1007/s10915-021-01453-8

Glaubitz, J., Klein, S.C., Nordström, J., Öffner, P.: Multi-dimensional summation-by-parts operators for general function spaces: Theory and construction. J. Comput. Phys. 491 (2023)

Glaubitz, J., Klein, S.C., Nordström, J., Öffner, P.: Summation-by-parts operators for general function spaces: The second derivative. arXiv preprint arXiv:2306.16314 (2023)

Glaubitz, J., Le Meledo, E., Öffner, P.: Towards stable radial basis function methods for linear advection problems. Comput. Math. Appl. 85, 84–97 (2021). https://doi.org/10.1016/j.camwa.2021.01.012

Glaubitz, J., Nordström, J., Öffner, P.: Summation-by-parts operators for general function spaces. SIAM J. Numer. Anal. 61(2), 733–754 (2023). https://doi.org/10.1137/22M1470141

Gong, J., Nordström, J.: Interface procedures for finite difference approximations of the advection-diffusion equation. J. Comput. Appl. Math. 236(5), 602–620 (2011). https://doi.org/10.1016/j.cam.2011.08.009

Grant, M., Boyd, S.: CVX: Matlab software for disciplined convex programming (2014). Version 2.2

Gustafsson, B., Kreiss, H.O., Oliger, J.: Time Dependent Problems and Difference Methods, vol. 24. Wiley, Hoboken (1995)

Hesthaven, J.S., Mönkeberg, F., Zaninelli, S.: RBF based CWENO method. In: Spectral and high order methods for partial differential equations, ICOSAHOM 2018. Selected papers from the ICOSAHOM conference, London, UK, July 9–13, 2018, pp. 191–201. Cham: Springer (2020)

Iske, A.: Ten good reasons for using polyharmonic spline reconstruction in particle fluid flow simulations. Continuum Mechanics, Applied Mathematics and Scientific Computing: Godunov’s Legacy pp. 193–199 (2020)

Kansa, E.J.: Multiquadrics - a scattered data approximation scheme with applications to computational fluid-dynamics. II: Solutions to parabolic, hyperbolic and elliptic partial differential equations. Comput. Math. Appl. 19(8–9), 147–161 (1990)

Kitson, A., McLachlan, R.I., Robidoux, N.: Skew-adjoint finite difference methods on nonuniform grids. New Zealand J. Math 32(2), 139–159 (2003)

Larsson, E., Fornberg, B.: Theoretical and computational aspects of multivariate interpolation with increasingly flat radial basis functions. Comput. Math. Appl. 49(1), 103–130 (2005). https://doi.org/10.1016/j.camwa.2005.01.010

Lazzaro, D., Montefusco, L.B.: Radial basis functions for the multivariate interpolation of large scattered data sets. J. Comput. Appl. Math. 140(1–2), 521–536 (2002)

Linders, V., Nordström, J., Frankel, S.H.: Properties of Runge–Kutta-summation-by-parts methods. J. Comput. Phys. 419, 109684 (2020)

Nassajian Mojarrad, F., Han Veiga, M., Hesthaven, J.S., Öffner, P.: A new variable shape parameter strategy for RBF approximation using neural networks. Comput. Math. Appl. 143, 151–168 (2023). https://doi.org/10.1016/j.camwa.2023.05.005

Nordström, J.: Conservative finite difference formulations, variable coefficients, energy estimates and artificial dissipation. J. Sci. Comput. 29(3), 375–404 (2006)

Nordström, J.: A roadmap to well posed and stable problems in computational physics. J. Sci. Comput. 71(1), 365–385 (2017). https://doi.org/10.1007/s10915-016-0303-9

Öffner, P.: Approximation and Stability Properties of Numerical Methods for Hyperbolic Conservation Laws. Springer Nature, Berlin (2023)

Öffner, P., Ranocha, H.: Error boundedness of discontinuous Galerkin methods with variable coefficients. J. Sci. Comput. 79(3), 1572–1607 (2019). https://doi.org/10.1007/s10915-018-00902-1

Pettersson, U., Larsson, E., Marcusson, G., Persson, J.: Improved radial basis function methods for multi-dimensional option pricing. J. Comput. Appl. Math. 222(1), 82–93 (2008)

Platte, R.B., Driscoll, T.A.: Eigenvalue stability of radial basis function discretizations for time-dependent problems. Comput. Math. Appl. 51(8), 1251–1268 (2006)

Ranocha, H., Öffner, P., Sonar, T.: Extended skew-symmetric form for summation-by-parts operators and varying Jacobians. J. Comput. Phys. 342, 13–28 (2017). https://doi.org/10.1016/j.jcp.2017.04.044

Schaback, R.: Error estimates and condition numbers for radial basis function interpolation. Adv. Comput. Math. 3(3), 251–264 (1995). https://doi.org/10.1007/BF02432002

Schaback, R.: Multivariate interpolation by polynomials and radial basis functions. Constr. Approx. 21(3), 293–317 (2005). https://doi.org/10.1007/s00365-004-0585-2

Schaback, R.: Small errors imply large evaluation instabilities. Adv. Comput. Math. 49(2), 27 (2023). https://doi.org/10.1007/s10444-023-10026-2

Shu, C.: Total-variation-diminishing time discretizations. SIAM J. Sci. Stat. Comput. 9(6), 1073–1084 (1988). https://doi.org/10.1137/0909073

Svärd, M.: On coordinate transformations for summation-by-parts operators. J. Sci. Comput. 20(1), 29–42 (2004)

Svärd, M., Nordström, J.: Review of summation-by-parts schemes for initial-boundary-value problems. J. Comput. Phys. 268, 17–38 (2014). https://doi.org/10.1016/j.jcp.2014.02.031

Tolstykh, A.I.: On using RBF-based differencing formulas for unstructured and mixed structured-unstructured grid calculations. In: Proceedings of the 16th IMACS world congress, vol. 228, pp. 4606–4624. Lausanne (2000)

Tominec, I., Larsson, E., Heryudono, A.: A least squares radial basis function finite difference method with improved stability properties. SIAM J. Sci. Comput. 43(2), a1441–a1471 (2021). https://doi.org/10.1137/20M1320079

Tominec, I., Nazarov, M.: Residual viscosity stabilized RBF-FD methods for solving nonlinear conservation laws. J. Sci. Comput. 94(1), 1–31 (2023)

Tominec, I., Nazarov, M., Larsson, E.: Stability estimates for radial basis function methods applied to time-dependent hyperbolic PDEs. arXiv preprint arXiv:2110.14548 (2021)

Wendland, H.: Piecewise polynomial, positive definite and compactly supported radial functions of minimal degree. Adv. Comput. Math. 4(4), 389–396 (1995). https://doi.org/10.1007/BF02123482

Wendland, H.: Fast evaluation of radial basis functions: Methods based on partition of unity. In: Approximation Theory X: Wavelets, Splines, and Applications. Citeseer (2002)

Wendland, H.: Scattered Data Approximation, vol. 17. Cambridge University Press, Cambridge (2004)

Wright, G.B., Fornberg, B.: Stable computations with flat radial basis functions using vector-valued rational approximations. J. Comput. Phys. 331, 137–156 (2017). https://doi.org/10.1016/j.jcp.2016.11.030

Xiong, J., Wen, J., Zheng, H.: An improved local radial basis function collocation method based on the domain decomposition for composite wall. Eng. Anal. Bound. Elem. 120, 246–252 (2020). https://doi.org/10.1016/j.enganabound.2020.09.002

Yuan, L., Shu, C.W.: Discontinuous Galerkin method based on non-polynomial approximation spaces. J. Comput. Phys. 218(1), 295–323 (2006). https://doi.org/10.1016/j.jcp.2006.02.013

Funding

Open Access funding enabled and organized by Projekt DEAL. JG was supported by AFOSR #F9550-18-1-0316 and ONR MURI #N00014-20-1-2595. JN was supported by Vetenskapsrådet, Sweden grant 2018-05084 VR and 2021-05484 VR, and the University of Johannesburg. PÖ was supported by the Gutenberg Research College, JGU Mainz.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix

1.1 Necessity of Polynomials in RBFs

For completeness, we briefly explain why the RBF interpolant (10) exists uniquely when the kernel \(\varphi \) is conditionally positive definite of order m and polynomials of degree up to \(m-1\) are incorporated. To this end, recall that \(\varphi \) is conditionally positive definite of order m when

for all \(\varvec{\alpha } \in {\mathbb {R}}^K\setminus \{{\textbf{0}}\}\) that satisfy (12), where \(\Phi \) is given by (14). Further, (12) is equivalent to \(P^T \varvec{\alpha } = {\textbf{0}}\). Next note that the RBF interpolant (10) exists uniquely if and only if the linear system (13) has a unique solution for every \({\textbf{u}}\), which is equivalent to the corresponding homogeneous linear system

admitting only the trivial solution, \(\varvec{\alpha } = {\textbf{0}}\) and \(\varvec{\beta } = {\textbf{0}}\). To show that this is the case, we multiply both sides of (49) by \(\varvec{\alpha }^T\) from the left, which yields

since \(\varvec{\alpha }^T P = {\textbf{0}}^T\) due to (50). Further, for conditionally positive definite \(\varphi \), (51) implies \(\varvec{\alpha } = {\textbf{0}}\). Substituting \(\varvec{\alpha } = {\textbf{0}}\) into (49) yields \(P \varvec{\beta } = {\textbf{0}}\), which means that the polynomial

has zeros \(x_1,\dots ,x_K\). Finally, for \(m \le K\), this can only be the case if \(\varvec{\beta } = {\textbf{0}}\).
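The argument can be written out compactly as follows. The display is a reconstruction under the standard notation for RBF interpolation with polynomial augmentation (kernel matrix \(\Phi \), polynomial matrix P, coefficient vectors \(\varvec{\alpha }\) and \(\varvec{\beta }\)); the monomial basis for the polynomial part and the correspondence with the equation numbers (48) to (52) used in the text are assumptions.

```latex
\begin{align*}
  &\text{conditional positive definiteness:}
    && \boldsymbol{\alpha}^T \Phi \boldsymbol{\alpha} > 0
       \quad \text{for } \boldsymbol{\alpha} \neq \mathbf{0},\ P^T \boldsymbol{\alpha} = \mathbf{0}, \\
  &\text{homogeneous system:}
    && \Phi \boldsymbol{\alpha} + P \boldsymbol{\beta} = \mathbf{0}, \qquad
       P^T \boldsymbol{\alpha} = \mathbf{0}, \\
  &\text{multiplication by } \boldsymbol{\alpha}^T:
    && \boldsymbol{\alpha}^T \Phi \boldsymbol{\alpha}
       + \underbrace{\boldsymbol{\alpha}^T P}_{=\,\mathbf{0}^T} \boldsymbol{\beta}
       = \boldsymbol{\alpha}^T \Phi \boldsymbol{\alpha} = 0
       \;\Longrightarrow\; \boldsymbol{\alpha} = \mathbf{0}, \\
  &\text{remaining equation:}
    && P \boldsymbol{\beta} = \mathbf{0}
       \;\Longleftrightarrow\; p(x_k) = 0,\ k = 1,\dots,K,
       \quad p(x) = \sum_{l=1}^{m} \beta_l\, x^{l-1}.
\end{align*}
```

In one dimension with pairwise distinct centers, a polynomial of degree at most \(m-1\) with \(K \ge m\) zeros must vanish identically, which gives \(\varvec{\beta } = {\textbf{0}}\).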

1.2 RBFSBP property with Non-diagonal Norm Matrix

In Section 4.2, we demonstrated that, in general, there exists no diagonal P such that the RBFSBP properties are fulfilled. In the general definition (4), P must only be symmetric positive definite and not necessarily diagonal. Therefore, some non-diagonal norm matrix might exist that fulfills the RBFSBP property. Here, we demonstrate that this is not the case. To investigate this, we set \(X_K=Y_N\). The differentiation operators \({D \in {\mathbb {R}}^{N \times N}}\) of classic global RBF methods are usually constructed to be exact for the elements of the finite dimensional function space \({\mathcal {R}}_{m}(Y_N)\). Unfortunately, neither the norm matrix P nor the matrix Q are explicitly part of RBF methods, which only come with an RBF-exact differentiation operator \({D \in {\mathbb {R}}^{N \times N}}\) for the cardinal functions. That said, we will now demonstrate that in many cases existing collocation RBF methods cannot satisfy the RBFSBP property since certain conditions are violated.

To this end, let \({D \in {\mathbb {R}}^{N \times N}}\) be the RBF-differentiation operator. We assume that there exists a positive definite and symmetric norm matrix \(P \in {\mathbb {R}}^{N \times N}\) and a matrix \(Q \in {\mathbb {R}}^{N \times N}\) such that (see (4))

The two conditions in (53) can be combined to

Next, we assume that the RBF interpolant includes polynomials of degree at most \(m-1 \ge 0\). In this case, \({\mathcal {R}}_{1}(Y_N)\) contains constants and P must be associated with a \({\mathcal {R}}_{1}(Y_N)\)-exact quadrature formula. Since D is \({\mathcal {R}}_{1}(Y_N)\)-exact, this can be reformulated as

This holds since D and \({\textbf{f}}\) are formulated with respect to the same basis \(\{c_k\}\). The entries of D are given by \( D_{jk} =c_k'(x_j) \) with collocation points \(x_j\). Hence, (55) is used for every basis element \(c_i\), e.g. for \(c_1\):

Here, the \(\mathbf {c_i'}\) are the columns of the derivative matrix. Collecting every basis element using (56) results in

with \({\textbf{m}} = [ c_1|_{x_L}^{x_R}, \dots , c_N|_{x_L}^{x_R} ]\). We shall now summarize the above discussion: Let \(m-1 \ge 0\) and \({b(P):= \Vert PD + (PD)^T - B \Vert _2}\). Moreover, for given D let us consider the following optimization problem:

If the differentiation operator D of a classic global RBF method satisfies the FSBP property, then minimizers \(P^*\) of the optimization problem (58) satisfy \(b(P^*) = 0\). In that case, there exists a suitable quadrature formula, and P can be determined through the minimization problem (58). It should be stressed that \(b(P) = 0\) is necessary for the given D to satisfy the SBP property, but not sufficient. This follows directly from [25, Lemma 4.3], which contains the fact that the derivatives of the basis functions are integrated exactly.

In our implementation, we solved (58) using the Matlab package CVX [27]. The results for different numbers and types of grid points \({\textbf{x}}\) as well as kernels \(\varphi \) and polynomial degrees \(m-1\) can be found in Table 8. Our numerical findings indicate that in all cases classic global RBF methods do not satisfy the RBFSBP property. This can be seen from the residual \(b(P) = \Vert PD + (PD)^T - B \Vert _2\) corresponding to the minimizer P of (58) being distinctly different from zero (machine precision in our implementation is around \(10^{-16}\)). This result is not surprising and is in accordance with the observations made in the literature [21, 53].
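The residual check itself can be illustrated with a minimal Python sketch. It does not reproduce the CVX-based optimization and does not use an RBF operator: as an assumption for illustration, it uses a standard second-order finite difference SBP operator, for which a norm matrix with \(b(P) = 0\) is known, and compares it with a deliberately wrong norm matrix. The function names `sbp_residual` and `fd_sbp_operator` are hypothetical.

```python
import numpy as np


def sbp_residual(P, D, B):
    """Return b(P) = || P D + (P D)^T - B ||_2 (spectral norm)."""
    PD = P @ D
    return np.linalg.norm(PD + PD.T - B, 2)


def fd_sbp_operator(n, x_left=0.0, x_right=1.0):
    """Second-order finite difference SBP operator on n equidistant points.

    Assumption for illustration only: this is NOT an RBF operator. It is a
    textbook case for which a norm matrix P with b(P) = 0 is known.
    """
    h = (x_right - x_left) / (n - 1)
    p = np.full(n, h)
    p[0] = p[-1] = h / 2.0            # trapezoidal quadrature weights
    P = np.diag(p)
    # Skew-symmetric interior part plus boundary corrections, so Q + Q^T = B.
    Q = 0.5 * (np.diag(np.ones(n - 1), 1) - np.diag(np.ones(n - 1), -1))
    Q[0, 0], Q[-1, -1] = -0.5, 0.5
    D = np.linalg.solve(P, Q)         # D = P^{-1} Q
    B = np.zeros((n, n))
    B[0, 0], B[-1, -1] = -1.0, 1.0    # boundary matrix: f(x_R) - f(x_L)
    return P, D, B


if __name__ == "__main__":
    n = 11
    P, D, B = fd_sbp_operator(n)
    h = 1.0 / (n - 1)
    # Residual at rounding-error level for the matching norm matrix.
    print("b(P) with the SBP norm matrix:", sbp_residual(P, D, B))
    # Residual clearly away from zero for a non-matching norm matrix.
    print("b(h*I) with a wrong norm matrix:", sbp_residual(h * np.eye(n), D, B))
    # Quadrature exactness: 1^T P D picks out the boundary values.
    print("1^T P D:", np.ones(n) @ P @ D)
```

For a global RBF differentiation matrix D, the same residual function would be evaluated at the minimizer P returned by the optimization; a value well above rounding error then indicates that no admissible norm matrix exists for that D.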

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Glaubitz, J., Nordström, J. & Öffner, P. Energy-Stable Global Radial Basis Function Methods on Summation-By-Parts Form. J Sci Comput 98, 30 (2024). https://doi.org/10.1007/s10915-023-02427-8

DOI: https://doi.org/10.1007/s10915-023-02427-8

Keywords

- Global radial basis functions

- Time-dependent partial differential equations

- Energy stability

- Summation-by-parts operators