Abstract

Approximating the Hadamard finite-part integral by quadratic interpolation polynomials, we obtain a scheme for approximating the Riemann-Liouville fractional derivative of order \(\alpha \in (1, 2)\), and we show that its error has the asymptotic expansion \( \big ( d_{3} \tau ^{3- \alpha } + d_{4} \tau ^{4-\alpha } + d_{5} \tau ^{5-\alpha } + \cdots \big ) + \big ( d_{2}^{*} \tau ^{4} + d_{3}^{*} \tau ^{6} + d_{4}^{*} \tau ^{8} + \cdots \big ) \) at any fixed time, where \(\tau \) denotes the step size and \(d_{l}, l=3, 4, \dots \) and \(d_{l}^{*}, l=2, 3, \dots \) are suitable constants. Applying the proposed scheme in the temporal direction and the central difference scheme in the spatial direction, we develop a new finite difference method for the time fractional wave equation. The proposed method is unconditionally stable and convergent of order \(O (\tau ^{3- \alpha }), \alpha \in (1, 2)\), and its error admits an asymptotic expansion. Richardson extrapolation is applied to improve the accuracy of the numerical method: after the first two extrapolations, the convergence orders are \(O ( \tau ^{4- \alpha })\) and \(O ( \tau ^{2(3- \alpha )}), \alpha \in (1, 2)\), respectively. Numerical examples show that the numerical results are consistent with the theoretical findings.



1 Introduction

The aim of this paper is to construct a higher order numerical method for approximating the following time fractional wave equation for \(\alpha \in (1, 2)\)

where \(f, \, \varphi \) and \( \psi \) are given functions and \(\, _0^C D_{t}^{\alpha } u (x, t)\) denotes the time fractional derivative in the Caputo sense.

To keep the exposition simple, we use the one-dimensional spatial domain \(\varOmega =(0,1)\). Our subsequent analysis, however, extends to \(\varOmega =(0,1)^d, d=2,3\), with homogeneous Dirichlet or periodic boundary conditions. For Neumann boundary conditions, one needs to extend the domain and apply the central difference scheme to approximate the derivative on the boundary, which is quite standard. Moreover, as a first step towards higher order schemes in time for the problem (1)–(3), we assume henceforth that the solution is as smooth as required by our subsequent error analysis. Higher order schemes in time for problems with less smooth solutions will be discussed in future work.

The time fractional wave equation is a basic model for many different phenomena, such as the propagation of dislocations in crystals, the behavior of elementary particles in relativistic quantum mechanics, flux motion in large Josephson junctions in superconductivity, spin waves, nonlinear optics and other problems in mathematical physics; see [8, Chap. 8.2] for details. Since analytic solutions of the general time fractional wave equation are difficult to find, various numerical methods have been proposed for approximating its solution.

Sun and Wu [36] applied the order reduction technique and the L1 scheme to obtain a numerical method for the time fractional wave equation with convergence order \(O(\tau ^{3- \alpha })\), where \(\tau \) denotes the time step size. Gao and Sun [13] employed the weighted and shifted Grünwald-Letnikov method and the order reduction technique to obtain a second order \(O(\tau ^{2})\) scheme. Applying the \(L2-1_{\sigma }\) scheme and the order reduction technique, Lyu et al. [28] also obtained a second order \(O(\tau ^{2})\) scheme. Sun et al. [37] introduced and analyzed a second order time discretization scheme using the order reduction technique and higher order interpolation polynomials. Using higher order interpolation polynomials, Zhao et al. [41] proposed a second order time discretization scheme for the variable-order fractional wave equation. By correcting some starting steps, Jin et al. [17, 18] developed higher order numerical methods for time fractional wave equations with nonsmooth data. Fairweather et al. [12] considered a two-dimensional time fractional wave equation, where an alternating direction implicit method is used to approximate the time fractional derivative and the orthogonal spline collocation method is applied for the spatial discretization; see also [24, 33]. More references on approximating the time fractional wave equation can be found in [1, 10, 25, 29, 35, 38, 40] and the references therein.

The order of convergence in time of all the numerical methods mentioned above for the time fractional wave equation is at most 2, and all these methods are based on order reduction techniques as in [36]. Richardson extrapolation is another way to improve the convergence order when the error of a numerical method admits an asymptotic expansion. Recently, Qi and Sun [32] applied Richardson extrapolation to the subdiffusion problem with \(\alpha \in (0, 1)\), based on the asymptotic expansion of the L1 approximation scheme proved by Dimitrov [6, 7]; see also [16, 22].

In this paper, we introduce a new time discretization scheme for (1)–(3) without using the order reduction technique. By approximating the Hadamard finite-part integral with quadratic interpolation polynomials, we construct a scheme to approximate the Riemann-Liouville fractional derivative of order \(\alpha \in (1,2)\) and show that its error has the asymptotic expansion \( \big ( d_{3} \tau ^{3- \alpha } + d_{4} \tau ^{4-\alpha } + d_{5} \tau ^{5-\alpha } + \cdots \big ) + \big ( d_{2}^{*} \tau ^{4} + d_{3}^{*} \tau ^{6} + d_{4}^{*} \tau ^{8} + \dots \big ) \) for some suitable constants \(d_{i}, i=3, 4, \dots \) and \(d_{i}^{*}, i=2, 3, \dots \), where \(\tau \) denotes the step size. Applying this scheme in the temporal direction and the central difference method in the spatial direction, we derive a fully discrete scheme for the linear time fractional wave equation. The scheme is proved to be unconditionally stable and convergent of order \(O (\tau ^{3- \alpha }), \alpha \in (1, 2)\), and its error has an asymptotic expansion. The extrapolation method is applied to improve the accuracy of the numerical method; after the first two extrapolations, the orders of convergence are \(O ( \tau ^{4- \alpha })\) and \(O ( \tau ^{2(3- \alpha )})\), respectively.

The Hadamard finite-part integral approach has previously been used to approximate the subdiffusion problem with \(\alpha \in (0, 1)\) in [23]. To the best of our knowledge, there are hardly any results on approximating the time fractional wave equation with \( \alpha \in (1,2) \) by the Hadamard finite-part integral approach. The analysis of the proposed numerical method for the time fractional wave equation with \(\alpha \in (1,2)\) is completely different from that of the numerical method for the subdiffusion equation with \(\alpha \in (0, 1)\) in [23]. In particular, we need to study the properties of the weights in the approximation scheme of the Riemann-Liouville fractional derivative of order \(\alpha \in (1, 2)\).

The main contributions of this paper are as follows:

1. A scheme for approximating the Riemann-Liouville fractional derivative of order \( \alpha \in (1,2)\) is introduced. Its error has the asymptotic expansion

$$\begin{aligned} \big ( d_{3} \tau ^{3- \alpha } + d_{4} \tau ^{4-\alpha } + d_{5} \tau ^{5-\alpha } + \cdots \big ) + \big ( d_{2}^{*} \tau ^{4} + d_{3}^{*} \tau ^{6} + d_{4}^{*} \tau ^{8} + \cdots \big ) \end{aligned}\tag{4}$$

for some suitable constants \(d_{i}, i=3, 4, \dots \) and \(d_{i}^{*}, i=2, 3, \dots \), where \(\tau \) denotes the step size.

2. A new finite difference method for the linear time fractional wave equation is developed, and its error is shown to admit an asymptotic expansion.

3. After the first two extrapolations, the extrapolated values of the proposed numerical method for the linear time fractional wave equation have the convergence orders \(O ( \tau ^{4- \alpha })\) and \(O ( \tau ^{2(3- \alpha )}), \alpha \in (1, 2)\), respectively.

4. Numerical results confirm our theoretical findings.

The paper is organized as follows. In Sect. 2, we introduce a higher order scheme to approximate the Riemann-Liouville fractional derivative of order \(\alpha \in (1,2)\) and show that its error has an asymptotic expansion. In Sect. 3, we introduce a new finite difference method for the time fractional wave equation and prove that it is unconditionally stable with convergence order \(O(\tau ^{3- \alpha }), \alpha \in (1, 2)\). In Sect. 4, we derive the asymptotic expansion of the error of this finite difference method, on which the Richardson extrapolation is based; after the first two extrapolations, the convergence orders are \(O ( \tau ^{4- \alpha })\) and \(O ( \tau ^{2(3- \alpha )}), \alpha \in (1, 2)\), respectively. Numerical tests verifying the theoretical analysis are carried out in Sect. 5.

By \(C, c_{l}, c^{*}_{l}, d_{l}, d^{*}_{l}, l \in {\mathbb {Z}}^{+}\), we denote some positive constants independent of the step size \(\tau \), but not necessarily the same at different occurrences.

2 A New Scheme to Approximate the Riemann–Liouville Fractional Derivative

Based on the Hadamard finite-part integral approximation, we introduce a new scheme to approximate the Riemann-Liouville fractional derivative of order \(\alpha \in (1, 2)\) and show that the error has an asymptotic expansion.

2.1 The Scheme

Let \({\mathbb {N}}\) denote the set of all natural numbers. For \(p\notin {\mathbb {N}}\) and \(p>1\), the Hadamard finite-part integral on a general interval [a, b] is defined as follows (see [2]):

where \( R_{\mu }(x,a):=\frac{1}{\mu !}\int ^x_a(x-y)^{\mu }f^{(\mu +1)}(y)dy \) and \(\, = \!\!\!\!\!\!\!\int \, \) denotes the Hadamard finite-part integral. \(\lfloor {p}\rfloor \) denotes the largest integer not exceeding p, where \(p\not \in {\mathbb {N}}\).

It is well-known that the Riemann-Liouville fractional derivative for \(\alpha \in (1,2)\) can be written as

where the integral \(=\!\!\!\!\!\!\int _{0}^{t}\) is interpreted as the Hadamard finite-part integral, see Diethelm [3, p.233], Elliott [11, Theorem 2.1].

Let \(0 = t_{0}< t_{1}< \dots < t_{N} =T\) be a uniform partition of [0, T] with step size \(\tau =T/N\), i.e. \(t_{n}=n\tau \). At \(t=t_{n}, \, n=1, 2, \dots , N\), we write

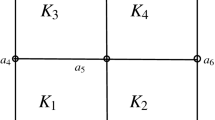

Let \(n \ge 2\) (the case \(n=1\) can be considered separately) and let \(w_{l}=\frac{l}{n}, l=0,1,2,\cdots , n\) be a partition of [0, 1]. We shall approximate \(g(w):=f(t_{n}-t_{n}w)\) by the following piecewise quadratic interpolation polynomial \(g_{2}(w)\)

and

We note that on the first interval \([w_{0}, w_{1}]\), the piecewise quadratic polynomial \(g_{2}(w)\) is defined on the nodes \(w_{0}, w_{1}, w_{2}\), and on the other intervals \([w_{k-1}, w_{k}], k=2, 3, \dots , n, n \ge 2\), the piecewise quadratic polynomial \(g_{2}(w)\) is defined on the nodes \(w_{k-2}, w_{k-1}, w_{k}\). Following the proof of [39, Lemma 2.1] with \(\alpha \in (0, 1)\), one may obtain the following lemma with \(\alpha \in (1, 2)\).

Lemma 1

Let \(\alpha \in (1, 2)\) and \( f \in C^{3}[0, T]\). Let \(g(w): = f(t_{n}-t_{n} w), n=2, 3, \dots , N\). Then, there holds

where \(R_{n} (g)\) denotes the remainder term. The weights \(\alpha _{kn}\) and \(w_{kn}\) have the following relation:

For \(n=2,\)

For \(n=3,\)

For \(n=4,\)

For \(n\ge 5,\)

Here, with \(k=2, 3, \cdots , n, n \ge 2\),

The following lemma shows the properties of E(k), F(k) and G(k).

Lemma 2

Let \(E(k), F(k), G(k), k=2,3, \dots , n, n=2,3, \dots , N\) be defined by (8), (9) and (10), respectively. Then, there hold

and

Proof

We first prove \(E(k)<0\) for \(k=2,3, \dots , n,\, n=2,3, \dots , N\). By the expression of E(k) and the series expansion of \((1-1/k)^{-\alpha +j}\) for \(j=1, 2\), one obtains

Using \(K(\alpha )>0\) and simplifying, we obtain

Similarly, we may prove \(F(k)<0\) and \(G(k)>0\). It remains to show \(E(k+1)-2F(k)>0\) for \(k=2,3, \dots , n,\, n=2,3, \dots , N-1\). Using \(K(\alpha )>0\), we observe that

Together these estimates complete the proof of this lemma. \(\square \)

The following lemma collects some properties of the weights \(w_{kn}\) defined in (7); they can be derived from the explicit expressions of \(w_{kn}\), and the proof is given in the Appendix.

Lemma 3

Let \(1<\alpha <2\). Then the coefficients \(w_{kn}\) defined by (7) satisfy the following properties

2.2 The Asymptotic Expansion of the Error for the Scheme (7)

In this subsection, we consider the asymptotic expansion of the error for the approximation of the Riemann-Liouville fractional derivative defined by (7).

Below, we state without proof the asymptotic expansion of the remainder term \(R_{n}(g)\) in (7). The proof follows by combining the techniques of [5, Theorem 1.3] and [39, Lemma 2.3].

Theorem 1

Let \(0=t_{0}< t_{1}< \dots < t_{N}=T\) with \( N \ge 2\) be a partition of [0, T] and \(\tau = T/N\) the step size. Let \(\alpha \in (1, 2)\) and let g be sufficiently smooth on [0, T]. Let \(R_{n} (g)\) be the remainder term in (7). Then we have, for \(n=2, 3, \dots , N\),

for some suitable coefficients \(d_{l}, \, l=3, 4, \dots \) and \(d_{l}^{*}, \, l=2, 3, \dots \), which are independent of n.
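The leading term \(d_{3}\tau ^{3-\alpha }\) in the expansion above can be observed numerically. The following Python sketch is not the authors' weight formula (7). It assumes the representation of the Riemann-Liouville derivative at \(t_n\) as \(t_n^{-\alpha }/\varGamma (-\alpha )\) times the finite-part integral of \(w^{-1-\alpha } g(w)\) over [0, 1], with \(g(w)=f(t_n-t_nw)\); it replaces g by the piecewise quadratic interpolant described in Sect. 2.1 (nodes \(w_0, w_1, w_2\) on the first interval and \(w_{k-2}, w_{k-1}, w_k\) otherwise) and integrates each piece exactly, using the finite-part rule \(=\!\!\!\!\!\!\int _0^b w^{\beta }\,dw = b^{\beta +1}/(\beta +1)\) for non-integer \(\beta <-1\). The function name `rl_derivative_quadratic` and the test functions \(t^3\), \(t^2\) are illustrative choices.

```python
import math

import numpy as np


def rl_derivative_quadratic(f, t_n, n, alpha):
    """Sketch: approximate the Riemann-Liouville derivative of order alpha in (1, 2)
    at t_n, assuming D^alpha f(t_n) = t_n^(-alpha) / Gamma(-alpha) * fp-int_0^1 w^(-1-alpha) g(w) dw
    with g(w) = f(t_n - t_n*w), where g is replaced by a piecewise quadratic interpolant."""
    assert 1.0 < alpha < 2.0 and n >= 2
    w = np.linspace(0.0, 1.0, n + 1)  # w_l = l/n
    g = f(t_n - t_n * w)

    def moment(a, b, j):
        # (Finite-part) integral of w^(j-1-alpha) over [a, b]. For a == 0 the
        # divergent lower-limit term is dropped, as the Hadamard finite part prescribes.
        p = j - alpha
        lower = a ** p if a > 0.0 else 0.0
        return (b ** p - lower) / p

    total = 0.0
    for k in range(1, n + 1):
        # Interpolation nodes: w_0, w_1, w_2 on [w_0, w_1]; w_{k-2}, w_{k-1}, w_k otherwise.
        i0 = 0 if k == 1 else k - 2
        x0, x1, x2 = w[i0], w[i0 + 1], w[i0 + 2]
        y0, y1, y2 = g[i0], g[i0 + 1], g[i0 + 2]
        # Newton divided differences, expanded to c0 + c1*w + c2*w**2.
        d1 = (y1 - y0) / (x1 - x0)
        d2 = ((y2 - y1) / (x2 - x1) - d1) / (x2 - x0)
        c0 = y0 - d1 * x0 + d2 * x0 * x1
        c1 = d1 - d2 * (x0 + x1)
        c2 = d2
        a, b = w[k - 1], w[k]
        total += c0 * moment(a, b, 0) + c1 * moment(a, b, 1) + c2 * moment(a, b, 2)
    return t_n ** (-alpha) / math.gamma(-alpha) * total


if __name__ == "__main__":
    alpha = 1.5
    exact = math.gamma(4) / math.gamma(4 - alpha)  # RL derivative of t^3 at t = 1
    errors = [abs(rl_derivative_quadratic(lambda t: t ** 3, 1.0, N, alpha) - exact)
              for N in (64, 128, 256, 512)]
    for e_coarse, e_fine in zip(errors, errors[1:]):
        print(math.log2(e_coarse / e_fine))  # expected to approach 3 - alpha
```

Since quadratics are interpolated exactly, \(f(t)=t^2\) is reproduced up to rounding, while for \(f(t)=t^3\) halving \(\tau \) should reduce the error by roughly the factor \(2^{3-\alpha }\).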

Remark 1

Since \(t_{n}= n \tau , n=1, 2, \dots , N\), with \( \tau = \frac{T}{N} = \frac{1}{N}\) (taking \(T=1\)), we write (14) for \(n=2, 3, \dots , N\) as

In particular, letting \(n=N\) with \(t_{N}=T=1\) in (15), one obtains

3 A New Finite Difference Method for Time Fractional Wave Equation

Based on the approximation scheme (7) of the Riemann-Liouville fractional derivative in Sect. 2, we introduce in this section a fully discrete scheme for the time fractional wave equation (1)–(3) and prove that the scheme is unconditionally stable with convergence order \(O(\tau ^{3- \alpha }), \alpha \in (1, 2)\).

To discretize the problem in the spatial direction, we define a uniform mesh of the interval [0, L] as \(\varOmega _{h}=\{x_{j}|x_{j}=jh, h=L/M, 0\le j \le M\}.\) Let \(\varOmega _{\tau }=\{t_{n}|t_{n}=n\tau , \tau =T/N, 0\le n \le N\}\) be a uniform mesh of the time interval [0, T]. Define the grid function spaces

Denote \(\delta _{x}u_{j-\frac{1}{2}}=\frac{u_{j}-u_{j-1}}{h},\ \ j=1,2,\cdots ,M, \; \delta _{t}u^{n -\frac{1}{2}}=\frac{u^{n}-u^{n-1}}{\tau },\ \ n=1,2,\cdots ,N, \) and

For \(\vec {u}, \vec {v} \in \mathcal {{\dot{U}}}_{h}\), we introduce the discrete inner product and norms as follows: \(( \vec {u},\vec {v})=h\sum _{j=1}^{M-1}u_{j}v_{j}, \quad \Vert \vec {u}\Vert =\sqrt{(\vec {u},\vec {u})}, \quad \Vert \vec {u} \Vert _{\infty }=\max _{0\le j \le M}|u_{j}|\), and

Lemma 4

([25]) For any \(\vec {u}, \vec {v} \in \mathcal {{\dot{U}}}_{h}\), there holds

Here, \(\Vert \delta _x \vec {u} \Vert \) is indeed a norm on \(\mathcal {{\dot{U}}}_{h}\), called the discrete \(H^1_h\)-norm.
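The discrete operators above are easy to check numerically. The following Python sketch (all function names are hypothetical) implements the discrete inner product, the difference quotient \(\delta _x\) and the central second difference, assuming the standard definition \(\Vert \delta _x \vec {u}\Vert ^2 = h\sum _{j=1}^{M} (\delta _x u_{j-\frac{1}{2}})^2\). For grid functions vanishing at \(x_0\) and \(x_M\), it illustrates the summation-by-parts identity \((-\delta _x^2 \vec {u}, \vec {v}) = (\delta _x \vec {u}, \delta _x \vec {v})\), which is the discrete counterpart of integration by parts used in energy estimates of the type in Theorem 2.

```python
import numpy as np


def inner(u, v, h):
    # Discrete inner product (u, v) = h * sum_{j=1}^{M-1} u_j v_j over interior nodes.
    return h * np.dot(u[1:-1], v[1:-1])


def delta_x(u, h):
    # delta_x u_{j-1/2} = (u_j - u_{j-1}) / h for j = 1, ..., M.
    return np.diff(u) / h


def h1_inner(u, v, h):
    # Assumed discrete H^1_h inner product: h * sum_{j=1}^{M} delta_x u_{j-1/2} * delta_x v_{j-1/2}.
    return h * np.dot(delta_x(u, h), delta_x(v, h))


def laplacian_h(u, h):
    # Central second difference at interior nodes; boundary entries are set to zero.
    out = np.zeros_like(u)
    out[1:-1] = (u[2:] - 2.0 * u[1:-1] + u[:-2]) / h ** 2
    return out


if __name__ == "__main__":
    M = 64
    h = 1.0 / M
    rng = np.random.default_rng(0)
    u = np.zeros(M + 1)
    v = np.zeros(M + 1)
    u[1:-1] = rng.standard_normal(M - 1)  # homogeneous Dirichlet data: u_0 = u_M = 0
    v[1:-1] = rng.standard_normal(M - 1)
    # Summation by parts: the two printed numbers agree up to rounding.
    print(-inner(laplacian_h(u, h), v, h), h1_inner(u, v, h))
```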

Lemma 5

([9]) For any \(\vec {u} \in \mathcal {{\dot{U}}}_{h}\), it holds that

Remark 2

In the two-dimensional case, inequality (17) has to be modified to include a factor \(\log \frac{1}{h}\); by the discrete Sobolev inequality (see [19, Lemma 3]),

In the three-dimensional case (and also in two dimensions), the following inequality holds

for which one needs an \(H^2_h\)-stability estimate. We indicate this estimate after deriving the \(H^1_h\)-stability. For the definitions, see [19] and [30].

We now introduce a new numerical scheme to approximate (1)–(3). At the grid points \((x_{j},t_{n})\), \(j=1,2,\cdots ,M-1, n=2,3,\cdots ,N\), using (7) and noting that \(\alpha \in (1, 2)\), we approximate the Caputo fractional derivative by

and approximate the spatial derivative by \(\frac{\partial ^2 u(x_{j},t_{n})}{\partial x^2} =\frac{ u(x_{j+1}, t_{n}) -2 u(x_{j}, t_{n}) + u(x_{j-1}, t_{n})}{h^{2}}+O(h^{2}). \) Then, with \(u_{j}^{n}= u(x_{j}, t_{n})\), Eqs. (1)–(3) can be rewritten as

Remark 3

In our numerical simulations, we have to compute the weights \(w_{kn}\) for \(k=0, 1, \dots , n\) and \(n=1, 2, \dots , N\). We have observed that the cost of calculating these weights is moderate and comparable to that of computing the weights of the L1 scheme for the subdiffusion problem with \(\alpha \in (0, 1)\).

Let \(U_{j}^{n} \approx u(x_{j}, t_{n}), \, j=0, 1, \dots , M, \, n=0, 1, \dots , N\) denote the approximate solution of \(u(x_{j}, t_{n})\). We then define the following finite difference method for solving (1)–(3): with \(f_{j}^{n}= f(x_{j}, t_{n})\),

Remark 4

The values \(U_{j}^{1}, j=1, 2, \dots , M-1\) must be computed separately by an alternative numerical method whose accuracy meets the assumption of Theorem 4. One possible way to achieve this accuracy is the following scheme proposed in Shen et al. [34]. Let \( t_{\frac{1}{2}} = \frac{1}{2} ( t_{1} + t_{0})\). At \(t= t_{\frac{1}{2}}\), we have, with \(j=1, 2, \dots , M-1\),

We approximate \(\, _{0}^{C}D_{t}^{\alpha } u (x_{j}, t_{\frac{1}{2}})\), \( \frac{\partial ^{2} u (x_{j}, t_{\frac{1}{2}})}{\partial x^2}\) and \(f(x_{j},t_{\frac{1}{2}})\) by

respectively. Here \( b_{0}^{(1, \alpha )} = \frac{2 \tau ^{1- \alpha }}{2- \alpha } \big ( (1- \frac{1}{2})^{2- \alpha } \big )\), see [34, (2.3)].

Let \(U_{j}^{1} \approx u_{j}^{1} = u(x_{j}, t_{1}), j=0, 1, \dots , M\) be the approximate solution of \( u_{j}^{1}\). We define the following scheme, with \( j=1, 2, \dots , M-1\),

By [34, Theorem 3.2], we have, with \( e_{j}^{1} = u_{j}^{1} - U_{j}^{1}\) and \(\vec {e}^{1} = [ e_{0}^{1} \; e_{1}^{1} \; \dots \; e_{M}^{1}]\),

To prove the stability and error estimates of the finite difference scheme for the time fractional wave equation (1)–(3), we need to rewrite the approximation scheme for the Caputo fractional derivative. At \(t=t_{n}\) for \(n=2,3,\cdots ,N\), we have

which implies that

Setting

we arrive at

Using (33), we may rewrite (25)–(28) as

Theorem 2

Let \(\{U_{j}^{n}, 1\le j\le M-1, 0\le n\le N\}\) be the solution of the difference scheme (25)–(28), or equivalently (34)–(37). Assume that \(U^{1}\) satisfies \(\Vert \delta _{x}U^{1}\Vert ^{2} \le \Vert \delta _{x}U^{0}\Vert ^{2} +\frac{\tau ^{2-\alpha }}{\varGamma (3-\alpha )}\Vert \psi \Vert ^{2} +\tau ^{\alpha }\varGamma (2-\alpha )\Vert f^{1}\Vert ^{2}\). Then there holds, with \( n=2, 3, \cdots , N\),

To prove Theorem 2, we need the following lemma.

Lemma 6

Let \(1<\alpha <2\). Then, the coefficients \(p_{l}\) defined by (32) satisfy the following properties

Proof

As in the proof of Lemma 3, we only prove the case \(n=5,6,\cdots , N\); the cases \(n=2,3,4\) can be proved similarly. We first show (39). According to (13), it is easy to see that

We next prove (40). Recalling (12), we arrive at

Finally, we prove (41). We shall show that, with \(1<\alpha <2\) and \(n\ge 1\),

Assuming for the moment that (42) holds, we have, for \(n=5,6,\cdots , N\),

which is (41).

It remains to show (42), which we prove by induction on n. For \(n=1\), (42) reads \(1 \le \frac{1}{2-\alpha }\), which holds since \(2-\alpha \in (0,1)\). Assume that (42) holds for \(n=1,2,\cdots ,k-1\). We need to prove that it also holds for \(n=k\), that is, \( \sum \limits _{l=1}^{k}l^{1-\alpha }\le \frac{k^{2-\alpha }}{2-\alpha }. \) By the inductive hypothesis, it suffices to show \( \frac{(k-1)^{2-\alpha }}{2-\alpha }+k^{1-\alpha }\le \frac{k^{2-\alpha }}{2-\alpha }, \) that is, \( \frac{(2-\alpha )k^{1-\alpha }}{k^{2-\alpha }-(k-1)^{2-\alpha }}\le 1. \) Using again the series expansion of \((1-1/k)^{2-\alpha }\), we note that

This shows the result is also true for \(n=k\) and by the principle of induction, we obtain (42). The proof of Lemma 6 is now complete. \(\square \)
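For completeness, the inequality needed in the inductive step also follows directly from the mean value theorem, as an alternative to the series expansion. For \(k\ge 2\) there exists \(\xi \in (k-1,k)\) such that

$$\begin{aligned} k^{2-\alpha }-(k-1)^{2-\alpha } = (2-\alpha )\,\xi ^{1-\alpha } \ge (2-\alpha )\,k^{1-\alpha }, \end{aligned}$$

where the inequality holds because \(1-\alpha <0\), so that \(s\mapsto s^{1-\alpha }\) is decreasing on \((0,\infty )\). Dividing by \(k^{2-\alpha }-(k-1)^{2-\alpha }>0\) gives \( \frac{(2-\alpha )k^{1-\alpha }}{k^{2-\alpha }-(k-1)^{2-\alpha }}\le 1\).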

Now we turn to the proof of Theorem 2.

Proof of Theorem 2

Forming the inner product between (34) and \(\delta _{t}U^{n-\frac{1}{2}}\), we obtain

Since \(a(a-b) = \frac{1}{2}( a^2-b^2) + \frac{1}{2}(a-b)^2 \ge \frac{1}{2}( a^2-b^2)\) for all real a and b, we have

Substituting (44) into (43) and using the Cauchy-Schwarz and Young inequalities, we obtain

Hence, we arrive at, noting \(\sum _{l=1}^{n-1} \big (p_{n-l} -p_{n-l+1} \big ) + p_{n} =p_{1}\),

Hence, we obtain

Denoting \(Q^{0}=\Vert \delta _{x}U^{0}\Vert ^{2}\) and \(Q^{n}=\Vert \delta _{x}U^{n}\Vert ^{2}+\tau ^{2-\alpha } \sum \limits _{l=1}^{n}p_{n-l+1}\Vert \delta _{t}U^{l-\frac{1}{2}}\Vert ^{2}\), it follows from (46) that

Furthermore, it follows that

An application of Young’s inequality yields

that is,

By Lemma 6, there holds

Hence, we arrive at

This completes the proof. \(\square \)

As a consequence of the \(H_h^1\)-stability (47) in Theorem 2 and (17), we obtain the following discrete maximum-norm stability result for the one-dimensional problem.

Theorem 3

Assume that the assumptions in Theorem 2 hold. Further assume that \(f \in L^{\infty } (0, T; L^{\infty })\). Then there exists a positive constant C such that

Remark 5

In the 2D case, the Sobolev inequality (18) implies discrete maximum-norm stability with a log term. In the 3D case, based on the proof technique of Theorem 2, we sketch a proof of discrete \(H^2_h\) stability, from which we obtain discrete maximum-norm stability using (19). We indicate the changes in the 1D case; the same analysis, with the appropriate changes due to the higher dimensional spatial discretization, yields \(H^2_h\) stability in the 2D and 3D cases. Write \(\partial ^2_x {U}^n \) as \(\varDelta _h U^n\) and \(\partial _x U^n\) as \(\nabla _h U^n.\) Now rewrite the \(H^1_h\) norm as \(\Vert U^n\Vert _{H^1_h}=\Vert \nabla _h U^n\Vert \) and define the \(H^2_h\) norm as \(\Vert \varDelta _hU^n\Vert \), with \((-\varDelta _h U^n,V^n) = (\nabla _h U^n, \nabla _h V^n).\) Forming the inner product between (34) and \(-\varDelta _h \delta _{t}U^{n-\frac{1}{2}}\), and following exactly the analysis in the proof of Theorem 2, we similarly obtain

Define \(Q_1^{0}=\Vert \varDelta _{h}U^{0}\Vert ^{2}\) and \(Q_1^{n}=\Vert \varDelta _{h}U^{n}\Vert ^{2}+\tau ^{2-\alpha } \sum \limits _{l=1}^{n}p_{n-l+1}\Vert \nabla _h\delta _{t}U^{l-\frac{1}{2}}\Vert ^{2}.\) From (48), we arrive at

and hence, summing and applying Young’s inequality, we obtain

Therefore,

which completes the proof of stability in \(H^2_h.\)

Setting \(e_{j}^{n}:=u_{j}^{n}-U_{j}^{n}\), we derive the error equation with local truncation error \(R_{j}^{n} = O(\tau ^{3-\alpha } + h^2)\) as

Below, we discuss a superconvergence result in the discrete \(H^1_h\)-norm.

Theorem 4

Let \(\{u_{j}^{n}, 0 \le j\le M, 0 \le n \le N \}\) be the solution of (21)–(24) and further, let \(\{U_{j}^{n}, 0 \le j\le M, 0\le n \le N\}\) be the solution of the difference scheme (34)–(37). Denote \( \vec {e}^{n} = (u_{0}^{n}-U_{0}^{n}, u_{1}^{n}-U_{1}^{n}, \dots , u_{M}^{n}-U_{M}^{n})\). Assume that \(u \in C^0([0,T];C^{4} ({\bar{\varOmega }})) \cap C^3 ([0,T]; C^0({\bar{\varOmega }}))\) and \(\Vert \delta _{x} \vec {e} ^{1} \Vert \le C(\tau ^{3-\alpha }+h^{2}) \) for some constant C. Then, there exists a constant C, independent of h and \(\tau \) such that

Proof

In view of Theorem 2 and the error equation (50)–(53), we obtain

and hence \( \Vert \delta _{x} \vec {e}^{n}\Vert \le \sqrt{T^{\alpha }\varGamma (2-\alpha )}C(\tau ^{3-\alpha }+h^{2}). \) This completes the proof. \(\square \)

As a corollary, we derive the following discrete maximum error estimate.

Corollary 1

There holds, for \(n=2, 3, \dots , N\),

Proof

For the 1D case, the estimate (54) follows from Lemma 5 and Theorem 4. In the 2D case, as a consequence of the superconvergence result in the \(H^1_h\)-norm and the discrete Sobolev inequality (18), we find that

For the 3D case (and also for 2D, without the log term), we apply the \(H^2_h\) stability to the error Eq. (50) and use the truncation error estimates under higher regularity to infer the \(H^2_h\) error estimate:

Then apply (19) to complete the rest of the proof. \(\square \)

4 Asymptotic Expansion of the Error of the Numerical Method (25)–(28)

In this section, we consider the asymptotic expansion of the error of the finite difference method (25)–(28).

Assume that u(x, t) is sufficiently smooth. Then, by (7) and (16), there exist some smooth functions \({\tilde{h}}_{l}(x, t), l=1, 2, \dots \) and \({\tilde{g}}_{m}(x, t), m=1, 2, \dots \), such that, for fixed \(n=2, 3, \dots , N\) and \(1 \le j \le M-1\),

Theorem 5

Let \(\{U_{j}^{n}, 1\le j\le M-1, 0\le n\le N\}\) be the solution of the finite difference method (25)–(28) with the time step size \(\tau \) and the space step size h. Then, there holds for \(\alpha \in (1, 2)\)

where \({\tilde{p}}_{l}(x, t), l=1, 2\) satisfy, with \({\tilde{h}}_{l}(x, t), l=1, 2\) introduced by (57),

Proof

We first show (58). Denote

where \(u(x_{j}, t_{n}), U_{j}^{n}\) and \( v(x_{j}, t_{n})\) are the solutions of (21)–(24), (25)–(28) and (60), respectively.

We shall show that \(\epsilon _{j}^{n}\) satisfies the following system

Assume for the moment that (61)–(64) hold. An application of Theorem 3 to (61)–(64) shows

which implies that

It remains to show (61)–(64). In fact, it follows, with \( e_{j}^{n} = u(x_{j}, t_{n}) - U_{j}^{n}\), that

Note that

Apply the asymptotic expansion formula (57) to arrive at

Further from (60), it follows that

Thus, by (66)–(68) with \(\alpha \in (1, 2)\), there follows

which shows (61). The initial and boundary conditions (62)–(64) clearly hold. This proves (58).

Now we turn to the proof of (59). Let

where \(u(x_{j}, t_{n}), U_{j}^{n}\) and \( v(x_{j}, t_{n})\) are the solutions of (21)–(24), (25)–(28) and (60), respectively.

We shall show that \(E_{j}^{n}\) satisfies the following system, with \(\alpha \in (1, 2)\),

Assume for the moment that (69)–(72) hold. An application of Theorem 3 shows

which implies that

It remains to show (69)–(72). In fact, it follows, with \( e_{j}^{n} = u(x_{j}, t_{n}) - U_{j}^{n}\), that

Following the proof of (67), we note that

Further, from (60), we obtain

Thus, we obtain, for \(\alpha \in (1, 2)\),

which shows (69). The initial and boundary conditions (70)–(72) clearly hold. Hence (59) also holds, which completes the proof of Theorem 5. \(\square \)

Remark 6

By (57), we may consider more than two terms in the asymptotic expansion of \(U_{j}^{n} - u(x_{j}, t_{n})\). For example, one may show that, following the proof of (59),

where \({\tilde{p}}_{3}(x, t)\) satisfies (60) with \(l=3\). Since \(\alpha \in (1, 2)\), the highest convergence order of (78) is \(O(\tau ^{\min ( 5- \alpha , 2(3- \alpha ))}) = O (\tau ^{2 (3- \alpha )})\), which is the same as in (59). Hence we only consider two terms in the asymptotic expansion of the error \(U_{j}^{n} - u(x_{j}, t_{n})\) in (59) in Theorem 5.
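The ordering of the exponents used in Remark 6, and behind the orders obtained after two extrapolations, can be checked directly. For \(\alpha \in (1,2)\),

$$\begin{aligned} (4-\alpha )-(3-\alpha )&=1>0, \qquad 2(3-\alpha )-(4-\alpha ) = 2-\alpha >0,\\ (5-\alpha )-2(3-\alpha )&=\alpha -1>0, \qquad 4-2(3-\alpha ) = 2(\alpha -1)>0, \end{aligned}$$

so that \(3-\alpha< 4-\alpha< 2(3-\alpha ) < \min (5-\alpha , 4)\). Hence, once the terms of order \(\tau ^{3-\alpha }\) and \(\tau ^{4-\alpha }\) are eliminated by two Richardson steps, the leading remaining error term is of order \(\tau ^{2(3-\alpha )}\).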

5 Numerical Simulations

In this section, we shall give two numerical examples for approximating the time fractional wave equation using Richardson extrapolation.

Let us first recall the Richardson extrapolation algorithm. Let \(A_{0}(\tau )\) denote the approximation of A calculated by an algorithm with the step size \(\tau \). Assume that the error has the following asymptotic expansion

where \(a_{j}, j=0, 1, 2, \dots \) are unknown constants and \(0< \lambda _{0}< \lambda _{1}< \lambda _{2} < \dots \) are some positive numbers.

Let \(b \in {\mathbb {Z}}^{+}, \, b >1\) (usually \(b=2\)). Let \(A_{0} \big (\frac{\tau }{b} \big ) \) denote the approximation of A calculated with the step size \(\frac{\tau }{b}\) by the same algorithm as for calculating \(A_{0}(\tau )\). Then by (79), there holds

Similarly, we may calculate the approximations \(A_{0} \big (\frac{\tau }{b^2} \big ), A_{0} \big (\frac{\tau }{b^3} \big ), \dots \) of A by using the step sizes \( \frac{\tau }{b^2}, \frac{\tau }{b^3}, \dots \).

By (79), we note that

that is, the approximation \(A_{0}(\tau )\) has the convergence order \(O(\tau ^{\lambda _{0}})\). The convergence order \(\lambda _{0}\) can be estimated numerically. By (81), there exists a constant C such that, for sufficiently small \(\tau \), \( |A- A_{0} (\tau ) | \approx C \tau ^{\lambda _{0}} \) and \( \Big |A- A_{0} \big (\frac{\tau }{b} \big ) \Big | \approx C \big (\frac{\tau }{b} \big )^{\lambda _{0}}. \) Hence, we obtain

which implies that the convergence order \(\lambda _{0}\) can be calculated by

Since the error in (79) has the asymptotic expansion, we may use the Richardson extrapolation to construct a new approximation of A which has higher convergence order \(O(\tau ^{\lambda _{1}}), \, \lambda _{1} > \lambda _{0}\). To see this, multiplying (80) by \(b^{\lambda _{0}}\), we arrive at

Subtracting (79) from (83), we obtain

Denote

we then arrive at

for some suitable constants \(b_{1}, b_{2}, \dots \). Note that the new approximation \(A_{1}(\tau )\) is computed from \(A_{0}(\tau )\) and \(A_{0}(\tau /b)\) using only b and \(\lambda _{0}\); it does not require the unknown coefficients \(a_{j}, j=0, 1, 2, \dots \) in (79). Hence \(A_{1}(\tau )\) is a new approximation of A with the higher convergence order \(O(\tau ^{\lambda _{1}})\). To estimate the convergence order \(\lambda _{1}\), we use (84) to calculate \( A_{1}\big ( \frac{\tau }{b} \big ) = \frac{b^{\lambda _{0}} A_{0} \big ( \frac{\tau }{b^2} \big ) - A_{0} \big (\frac{\tau }{b} \big )}{ b^{\lambda _{0}}-1}. \) Then, by (85), it follows that \( \frac{| A- A_{1}(\tau )|}{| A- A_{1} \big (\frac{\tau }{b} \big )|} \approx b^{\lambda _{1}}, \) and \( \lambda _{1} \approx \log _{b} \Big ( \frac{| A- A_{1}(\tau )|}{| A- A_{1} \big (\frac{\tau }{b} \big )|} \Big ). \)

Continuing this process, we may construct the approximations \(A_{2}(\tau ), A_{3}(\tau ), \dots \) which have the convergence orders \(O(\tau ^{\lambda _{2}})\), \(O(\tau ^{\lambda _{3}}), \dots . \)
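The extrapolation procedure above is straightforward to implement. The following Python sketch (the function names `richardson_table` and `observed_orders` are hypothetical) builds the columns \(A_1, A_2, \dots \) from approximations computed with the step sizes \(\tau , \tau /b, \tau /b^2, \dots \), using \(A_{k+1}(\tau ) = \big (b^{\lambda _k}A_k(\tau /b) - A_k(\tau )\big )/(b^{\lambda _k}-1)\), and estimates the orders by the logarithmic formula for \(\lambda _0\) above. It is exercised on synthetic data \(A + \tau ^{\lambda _0}+\tau ^{\lambda _1}+\tau ^{\lambda _2}\) with \(\lambda _0 = 3-\alpha \), \(\lambda _1 = 4-\alpha \), \(\lambda _2 = 2(3-\alpha )\) and \(\alpha =1.5\); these values are made up for illustration and are not results from the tables below.

```python
import math


def richardson_table(values, b, lambdas):
    """values[i] = A_0(tau / b**i); lambdas[k] is the exponent removed at step k.

    Returns a list of columns with table[k][i] = A_k(tau / b**i), where
    A_{k+1}(tau) = (b**lambda_k * A_k(tau / b) - A_k(tau)) / (b**lambda_k - 1).
    """
    table = [list(values)]
    for lam in lambdas:
        prev = table[-1]
        if len(prev) < 2:
            break  # not enough step sizes left for another extrapolation
        factor = b ** lam
        table.append([(factor * prev[i + 1] - prev[i]) / (factor - 1.0)
                      for i in range(len(prev) - 1)])
    return table


def observed_orders(approx, exact, b):
    """Orders log_b(|A - A(tau/b^i)| / |A - A(tau/b^(i+1))|) for consecutive step sizes."""
    errors = [abs(exact - a) for a in approx]
    return [math.log(errors[i] / errors[i + 1], b) for i in range(len(errors) - 1)]


if __name__ == "__main__":
    alpha, b, A = 1.5, 2, 1.0
    lams = [3 - alpha, 4 - alpha, 2 * (3 - alpha)]
    taus = [2.0 ** (-k) for k in range(4, 9)]  # tau = 2^-4, ..., 2^-8
    A0 = [A + sum(t ** lam for lam in lams) for t in taus]  # synthetic A_0(tau)
    table = richardson_table(A0, b, lams[:2])  # two extrapolation steps
    for k, column in enumerate(table):
        print(k, observed_orders(column, A, b))
```

With this synthetic model, the second extrapolated column contains only the \(\tau ^{2(3-\alpha )}\) term, so its observed orders equal \(2(3-\alpha )=3\) up to rounding. In the examples below the exact solution is known, so A can be taken as the exact value at the chosen grid point.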

Example 1

Consider the time fractional wave equations (1)–(3) with

The exact solution is \( u(x, t) = t^{3} (x- x^2)\), and \(\varphi (x) = \psi (x) =0\).

We shall use the numerical method (25)–(28) to solve (1)–(3). Choose \(T=1\) and the different time step sizes \(\tau = 2^{-4}, 2^{-5}, 2^{-6}, 2^{-7}\) and \(2^{-8}\). Let \(0=x_{0}< x_{1}< \dots < x_{M}=1\) be the spatial partition of [0, 1] and h the spatial step size with \(h=2^{-10}\).

In Table 1, we show the experimentally determined orders of convergence (EOC) with respect to the temporal step size \(\tau \). We see that the numerical results are indeed consistent with our theoretical convergence orders \(O(\tau ^{3-\alpha })\).

Now we consider the extrapolated values. Denote

For fixed \(n=N, t_{N}=T=1\) and \(j=1, 2, \dots , M-1\), we further denote, with \(b=2\),

Calculating

we then have, by the Richardson extrapolation,

In Tables 2, 3 and 4, we obtain the extrapolated values of the approximate solutions with the time step sizes \( \big (2^{-4}, 2^{-5}, 2^{-6}, 2^{-7}, 2^{-8} \big )\) and the space step size \(h = 2^{-10}\).

We observe that the convergence order of the approximations in the first column is \(O(\tau ^{3- \alpha })\) with \(\alpha \in (1, 2)\). After the first two extrapolations, the new approximations have the convergence orders \(O(\tau ^{4- \alpha })\) and \(O(\tau ^{2(3- \alpha )})\), respectively, as expected.

Example 2

Consider the time fractional wave equation (1)–(3) with the following data (see [36]):

The exact solution is

where \(E_{\alpha ,1}(z)\) denotes the Mittag–Leffler function, see, e.g., [3].

Choose \(T=1\), the space step size \(h = 2^{-10}\) and the different time step sizes \(\tau = 2^{-4}, 2^{-5}, 2^{-6}, 2^{-7}\) and \(2^{-8}\). In Table 5, we show the experimentally determined orders of convergence (EOC) with respect to the temporal step size \(\tau \). We see that the experimentally determined orders of convergence are lower than the theoretical convergence order \(O(\tau ^{3-\alpha })\) since the solution of the problem is not smooth enough.

In Tables 6, 7 and 8, we show the extrapolated values of the approximate solutions with the time step sizes \(\tau = 2^{-4}, 2^{-5}, 2^{-6}, 2^{-7}, 2^{-8}\). The observed convergence orders are much lower than the expected orders, owing to the nonsmoothness of the solution.

6 Conclusion

Based on the Hadamard finite-part integral expression of the Riemann-Liouville fractional derivative, we construct in this paper a new higher order scheme for approximating the Riemann-Liouville fractional derivative of order \(\alpha \in (1, 2)\), and we derive and analyze the asymptotic expansion of its approximation error. Using this scheme in the temporal direction, we obtain a higher order numerical method for solving the linear time fractional wave equation and prove that its error also has an asymptotic expansion. The numerical experiments show that, for smooth solutions, the numerical results are consistent with our theoretical findings. All estimates proved in this paper remain valid for the problem (1)–(3) in higher space dimensions. As a first step towards higher order schemes in time, all results have been established under the assumption that the solution is sufficiently smooth; analogous results for nonsmooth solutions will be the subject of future work.

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Chen, A., Li, C.: Numerical solution of fractional diffusion-wave equation. Numer. Func. Anal. Optim. 37, 19–39 (2016)

Diethelm, K.: An algorithm for the numerical solution of differential equations of fractional order. Electron. Trans. Numer. Anal. 5, 1–6 (1997)

Diethelm, K.: The Analysis of Fractional Differential Equations, Lecture Notes in Mathematics. Springer, Berlin (2010)

Diethelm, K.: Generalized compound quadrature formulae for finite-part integrals. IMA J. Numer. Anal. 17, 479–493 (1997)

Diethelm, K., Walz, G.: Numerical solution of fractional order differential equations by extrapolation. Numer. Algorithms 16, 231–253 (1997)

Dimitrov, Y.: A second order approximation for the Caputo fractional derivative. J. Fract. Calc. Appl. 7, 175–195 (2016)

Dimitrov, Y.: Three-point approximation for Caputo fractional derivative. Commun. Appl. Math. Comput. 31, 413–442 (2017)

Drazin, P.J., Johnson, R.S.: Solitons: An Introduction. Cambridge University Press, Cambridge (1989)

Du, R., Cao, W.R., Sun, Z.Z.: A compact difference scheme for the fractional diffusion-wave equation. Appl. Math. Model. 34, 2998–3007 (2010)

Du, R., Yan, Y., Liang, Z.: A high-order scheme to approximate the Caputo fractional derivative and its application to solve the fractional diffusion wave equation. J. Comput. Phys. 376, 1312–1330 (2019)

Elliott, D.: An asymptotic analysis of two algorithms for certain Hadamard finite-part integrals. IMA J. Numer. Anal. 13, 445–462 (1993)

Fairweather, G., Yang, X., Xu, D., Zhang, H.: An ADI Crank–Nicolson orthogonal spline collocation method for the two-dimensional fractional diffusion-wave equation. J. Sci. Comput. 65, 1217–1239 (2015)

Gao, G.H., Sun, Z.Z.: Two alternating direction implicit difference schemes for solving the two-dimensional time distributed-order wave equations. J. Sci. Comput. 69, 506–531 (2016)

Gohar, M., Li, C., Li, Z.: Finite difference methods for Caputo–Hadamard fractional differential equations. Mediterr. J. Math. 17, 194 (2020)

Gohar, M., Li, C., Yin, C.: On Caputo Hadamard fractional differential equations. Int. J. Comput. Math. 97, 1459–1483 (2020)

Hao, Z., Cao, W., Li, S.: Numerical correction of finite difference solution for two-dimensional space-fractional diffusion equations with boundary singularity. Numer. Algorithms 86, 1071–1087 (2021)

Jin, B., Lazarov, R., Zhou, Z.: Two fully discrete schemes for fractional diffusion and diffusion-wave equations with nonsmooth data. SIAM J. Sci. Comput. 38, A146–A170 (2016)

Jin, B., Li, B.Y., Zhou, Z.: Correction of high-order BDF convolution quadrature for fractional evolution equations. SIAM J. Sci. Comput. 39, A3129–A3152 (2017)

Jovanovic, B.S., Popovic, B.Z.: Convergence of a finite difference scheme for the third boundary value problem for elliptic equation with variable coefficients. Comput. Methods Appl. Math. 1, 356–366 (2001)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Li, C., Cai, M.: Theory and Numerical Approximations of Fractional Integrals and Derivatives. SIAM, Philadelphia (2019)

Li, S., Cao, W., Hao, Z.: An extrapolated finite difference method for two-dimensional fractional boundary value problems with non-smooth solution. Int. J. Comput. Math. 99, 274–291 (2022). https://doi.org/10.1080/00207160.2021.1907356

Li, Z., Liang, Z., Yan, Y.: High-order numerical methods for solving time fractional partial differential equations. J. Sci. Comput. 71, 785–803 (2017)

Li, L., Xu, D., Luo, M.: Alternating direction implicit Galerkin finite element method for the two-dimensional fractional diffusion-wave equation. J. Comput. Phys. 255, 471–485 (2013)

Li, C., Zeng, F.: Numerical Methods for Fractional Calculus. Chapman and Hall/CRC, Boca Raton (2015)

Liu, Y., Roberts, J., Yan, Y.: Detailed error analysis for a fractional Adams method with graded meshes. Numer. Algorithms 78, 1195–1216 (2018)

Lyness, J.N.: Finite-part integrals and the Euler–MacLaurin expansion. In: Zahar, R.V.M. (ed.) Approximation and Computation, Internat. Ser. Numer. Math. 119, pp. 397–407. Birkhäuser, Basel (1994)

Lyu, P., Liang, Y., Wang, Z.: A fast linearized finite difference method for the nonlinear multi-term time fractional wave equation. Appl. Numer. Math. 151, 448–471 (2020)

McLean, W., Mustapha, K.: Time-stepping error bounds for fractional diffusion problems with non-smooth initial data. J. Comput. Phys. 293, 201–217 (2015)

Pani, A.K., Thomée, V., Vasudeva Murthy, A.S.: A first-order explicit-implicit splitting method for a convection-diffusion problem. Comput. Methods Appl. Math. 20, 769–782 (2020)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Qi, R., Sun, Z.: Some numerical extrapolation methods for the fractional sub-diffusion equation and fractional wave equation based on the L1 formula. Commun. Appl. Math. Comput. (2022). https://doi.org/10.1007/s42967-021-00177-8

Qiao, L., Xu, D., Yan, Y.: High-order ADI orthogonal spline collocation method for a new 2D fractional integro-differential problem. Math. Methods Appl. Sci. 43(8), 5162–5178 (2020)

Shen, J., Li, C., Sun, Z.: An H2N2 interpolation for Caputo derivative with order in \((1, 2)\) and its application to time-fractional wave equations in more than one-space dimension. J. Sci. Comput. 83, 38 (2020)

Shi, Z., Zhao, Y., Liu, F., Wang, F., Tang, Y.: Nonconforming quasi-Wilson finite element method for 2D multi-term time fractional diffusion-wave equation on regular and anisotropic meshes. Appl. Math. Comput. 338, 290–304 (2018)

Sun, Z., Wu, X.: A fully discrete difference scheme for a diffusion-wave system. Appl. Numer. Math. 56(2), 193–209 (2006)

Sun, H., Zhao, X., Sun, Z.Z.: The temporal second order difference schemes based on the interpolation approximation for the time multi-term fractional wave equation. J. Sci. Comput. 78, 467–498 (2019)

Wang, Z., Vong, S.: Compact difference schemes for the modified anomalous fractional subdiffusion equation and the fractional diffusion-wave equation. J. Comput. Phys. 277, 1–15 (2014)

Yan, Y., Pal, K., Ford, N.J.: Higher order numerical methods for solving fractional differential equations. BIT Numer. Math. 54, 555–584 (2014)

Yang, J., Huang, J., Liang, D., Tang, Y.: Numerical solution of fractional diffusion wave equation based on fractional multistep method. Appl. Math. Model. 38, 3652–3661 (2014)

Zhao, X., Sun, Z.Z., Karniadakis, G.E.: Second-order approximations for variable order fractional derivatives: algorithms and applications. J. Comput. Phys. 293, 184–200 (2015)

Funding

The first author (JY) acknowledges the support from the Natural Science Foundation of Shanxi Province (201801D121010). The third author (AKP) acknowledges the support from SERB, Govt. India via MATRIX grant No. MTR/201 S/000309.

Author information

Contributions

The authors contributed equally to this work.

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

In this Appendix, we shall give the proof of Lemma 3.

Proof of Lemma 3

We only prove the case \(n\ge 5\); the cases \(n=2,3,4\) can be proved in a similar manner. We first show (11). Note that, for \(n=5,6,\cdots , N\),

Since

and

we obtain, with \(n=5,6,\cdots , N\),

It follows from Lemma 2 that, with \(n=5,6,\cdots , N\),

Similarly, for \(k=3,4,\cdots , n-2,\,n=5,6,\cdots , N\), there holds

Using Lemma 2 again, it holds that, with \(n=5,6,\cdots , N\),

and \(w_{nn}=\frac{1}{\varGamma (3-\alpha )}\frac{1}{2}G(n)>0.\)

We next prove (12). For \(l=1,2,\cdots ,n, n=5,6,\cdots ,N\), since

we obtain, taking \( f(t) \equiv 1\),

Finally, we give the proof of (13). Letting \(f(t)\equiv t\), we have, at \(t=t_{l}\),

that is

i.e.

Together, these estimates complete the proof of Lemma 3. \(\square \)
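
The identities (12) and (13) rest on taking \(f(t)\equiv 1\) and \(f(t)\equiv t\), for which the Riemann–Liouville derivative is known in closed form: \(\, _0 D_{t}^{\alpha } t^{\beta } = \frac{\Gamma (\beta +1)}{\Gamma (\beta +1-\alpha )} t^{\beta -\alpha }\) for \(\beta >-1\). Presumably, because the scheme is built from quadratic interpolation, it reproduces these values exactly at the grid points. The hypothetical helper `rl_power` below evaluates the formula. Unlike the Caputo derivative, the Riemann–Liouville derivative of a constant does not vanish, and for \(\alpha \in (1,2)\) it is negative because \(\Gamma (1-\alpha )<0\).

```python
import math


def rl_power(beta, alpha, t):
    """Riemann-Liouville derivative of order alpha of t^beta (beta > -1, t > 0):
    Gamma(beta + 1) / Gamma(beta + 1 - alpha) * t^(beta - alpha).
    Returns 0 when beta + 1 - alpha is a non-positive integer, where 1/Gamma vanishes."""
    c = beta + 1.0 - alpha
    if c <= 0 and c == int(c):
        return 0.0
    return math.gamma(beta + 1.0) / math.gamma(c) * t ** (beta - alpha)


alpha = 1.5
for t in (0.25, 1.0):
    # f = 1 and f = t, the two test functions used for (12) and (13)
    print(t, rl_power(0, alpha, t), rl_power(1, alpha, t))
```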

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, J., Green, C.W.H., Pani, A.K. et al. Unconditionally Stable and Convergent Difference Scheme for Superdiffusion with Extrapolation. J Sci Comput 98, 12 (2024). https://doi.org/10.1007/s10915-023-02395-z

DOI: https://doi.org/10.1007/s10915-023-02395-z

Keywords

- Time fractional wave equation

- Higher order scheme

- Stability

- Error estimates

- Asymptotic expansion

- Extrapolation