Abstract

In this paper we introduce a procedure for identifying optimal methods in parametric families of numerical schemes for initial value problems in partial differential equations. The procedure maximizes accuracy by adaptively computing optimal parameters that minimize a defect-based estimate of the local error at each time step. Viable refinements are proposed to reduce the computational overheads involved in the solution of the optimization problem, and to maintain conservation properties of the original methods. We apply the new strategy to recently introduced families of conservative schemes for the Korteweg-de Vries equation and for a nonlinear heat equation. Numerical tests demonstrate the improved efficiency of the new technique in comparison with existing methods.


1 Introduction

Many highly effective methods for initial value problems in partial differential equations appear as parametric families of numerical schemes. These include exponential splittings (see, e.g., [8, 25]), where the free parameters constitute the coefficients of the splitting, and rational Krylov methods [20], where the free parameters are the poles of rational approximants.

A new technique that uses symbolic algebra to develop bespoke finite difference methods that preserve multiple local conservation laws has been recently introduced in [14]. This approach has been further refined in [16], and new families of conservative schemes have been introduced for a range of partial differential equations (PDEs) in [13,14,15,16]. These numerical schemes feature certain free parameters that can be arbitrarily chosen without compromising the preservation of the conservation laws.

A convenient choice of the free parameters yields numerical solutions with superior accuracy in all these cases. Coefficients of exponential splittings are typically determined a priori by algebraic means in the pursuit of high order of accuracy [28] and may be specialized for specific PDEs [31]. Optimal pole selection for rational Krylov methods remains an active area of research, and strategies include a priori choices based on analytical reasoning [17] and a posteriori fitting [7]. Optimal parameters for the finite difference methods in [13,14,15,16] are identified using a brute force sweep through the entire parameter space, and comparisons against reference solutions show that suitable choices of the parameters yield errors up to 20 times smaller than existing methods for the proposed benchmark tests.

In practice, the optimal choice of such free parameters depends heavily on the initial conditions and may also vary with the time-step. Consequently, while the results in [13,14,15,16] do highlight the potential advantages of choosing good parameters, no algorithm is known for identifying them. To overcome this issue, we propose here a new approach for adaptively identifying optimal parameters for families of numerical schemes for PDEs where convenient values are not known a priori.

To estimate the optimal parameters, we adaptively minimize an estimate of the local error introduced by the time integrator. For this approach to be effective, we assume throughout the paper that the spatial approximation is accurate and that the error in the solution is mainly due to the time discretization. This is not too restrictive an assumption, as in many instances PDEs are approximated very accurately in space, for example by spectral semidiscretizations. In the case of finite difference schemes, this amounts to either considering higher order discretizations in space, or restricting attention to cases where \(\varDelta t\gg \varDelta x\). Large time-steps reduce computational expenses and are generally desirable, provided that stability is not compromised. In particular, \(\varDelta t\gg \varDelta x\) is a typical setting when using implicit schemes.

In the proposed approach, at each time-step of a one-step numerical scheme, we seek to compute the optimal parameters that minimize the local error. This requires a reasonably accurate but inexpensive estimate of the local error and its dependence on the parameters. For an a posteriori estimate of the local error, we resort to the “defect” based approach outlined in [6]. In the context of backward error analysis, the defect measures the discrepancy between the differential equation satisfied by the numerical solution and the original equation [30]. Defect based error estimates have been utilized widely in the development of time-adaptive methods for ordinary differential equations (ODEs) (see, e.g., [12, 21]) and PDEs [3, 4, 6], but, to the best of our knowledge, these have not been employed for the estimation of optimal parameters.

In this paper we do not aim for adaptive time stepping. Indeed, unlike adaptive techniques for choosing time-steps, where the local error can be assumed to decrease monotonically with the time-step, in the proposed approach an optimization problem needs to be solved for finding the values of the parameters. The optimization problem for minimizing the local error estimate is solved in an efficient manner by computing the defect on a coarser (but still accurate) spatial grid, and utilizing an iterative method with a Gauss–Newton approximation to the Hessian for achieving fast local convergence [29].

We apply the new procedure to families of schemes introduced in [14] for the Korteweg–de Vries (KdV) equation and a nonlinear heat (NLH) equation. The main feature of these schemes is that each of them preserves specific discretizations of some conservation laws. However, since the discrete conservation laws also depend on the parameters, they cannot be preserved when the parameters are chosen adaptively. Where conservation of these properties is of paramount importance, we suggest a more conservative version of the algorithm that uses fixed parameters derived from a sequence of values obtained adaptively.

Although the conservation properties of the schemes in [14] give them good stability and accuracy, by applying the technique proposed in this paper we achieve significantly higher accuracy with moderate overheads. While the defect based approach is asymptotic in the time-step \(\varDelta t\), in practice the procedure also works well in the large time-step regime and, in some cases, also confers a notable stability advantage.

In Sect. 2 we discuss the validity of a defect based approximation of the local error for the purpose of adaptively identifying optimal parameters. In Sect. 3 we outline the defect based approach used for finding optimal values of free parameters in a numerical scheme, and introduce the two algorithms briefly outlined above. In Sect. 4, we apply the new techniques to families of conservative schemes introduced in [14] for the KdV equation and the NLH equation, giving explicit expressions for the defect. In Sect. 5, we show numerical results that demonstrate the effectiveness of the proposed algorithms in finding good estimates of the optimal parameters, together with their higher accuracy and efficiency in comparison to a default choice of the parameters and other schemes from the literature. Concluding remarks are presented in Sect. 6.

2 Defect Based Approximation of Local Error

We consider a PDE,

$$\begin{aligned} u_t=\mathcal {A}(u),\qquad u(0)=u_0, \end{aligned} \tag{1}$$

written as an Initial Value Problem on the Hilbert space \(\mathcal {H}\), where \(\mathcal {A}: \mathcal {H} \rightarrow \mathcal {H}\). Boundary conditions and non-autonomous PDEs can also be incorporated into our approach in a straightforward manner, as demonstrated with concrete examples in Sect. 4.2.

Following spatial discretization, the solution of (1) is approximated by the solution of the system of ODEs,

$$\begin{aligned} \mathcal {D}_t\varvec{u}(t)=A(\varvec{u}(t)),\qquad \varvec{u}(0)=\varvec{u}_0, \end{aligned} \tag{2}$$

where here and henceforth \(\mathcal {D}_z\) denotes the total derivative with respect to z, and \(\varvec{u}(t)\) represents a finite dimensional approximation of u(t). For instance, this could involve a finite difference approximation on a uniform grid on the domain [a, b] with Dirichlet boundaries,

Let T be the final time of integration,

$$\begin{aligned} t_n=n\varDelta t,\quad n=0,\ldots ,N,\qquad \varDelta t=\frac{T}{N}, \end{aligned}$$

be the time nodes and stepsize, respectively, \(u_{m,n}\) an approximation of \(u(x_m,\!t_n)\), and \(\varvec{u}_n\) the column vector whose m-th entry is \(u_{m,n}\).

The exact solution of (2) is described by the flow \(\mathcal {E}: \mathbb {R}^+ \times \mathbb {R}^M \rightarrow \mathbb {R}^M\),

$$\begin{aligned} \varvec{u}(t)=\mathcal {E}(t,\varvec{u}_0). \end{aligned}$$

Similarly, a single step numerical scheme for (2) can be described by the numerical flow,

$$\begin{aligned} \varvec{u}_{n+1}=\Phi (\varDelta t,\varvec{u}_n). \end{aligned}$$

Note that the numerical flow \(\Phi \) also exists for implicit methods, even if not specified in an explicit form.

In this manuscript, we consider numerical schemes in the form

$$\begin{aligned} \varvec{u}_{n+1}=\Phi (\varDelta t,\varvec{u}_n,\chi ),\qquad \chi \in \varOmega , \end{aligned} \tag{3}$$

where \(\varOmega \) is a compact subset of \(\mathbb {R}^K\), and \(\Phi \) depends on a vector of free parameters \(\chi \in \varOmega \) that affect the accuracy of the scheme. In our theoretical discussion we assume that the vector field A, the exact flow \(\mathcal {E}\) and the numerical flow \(\Phi \) are smooth with respect to all arguments and that the method,

$$\begin{aligned} \varvec{u}_{n+1}=\Phi (\varDelta t,\varvec{u}_n,\chi (t_n)), \end{aligned} \tag{4}$$

is stable and convergent for arbitrary choices of \(\chi : \mathbb {R}^+ \rightarrow \varOmega \).

The local error in the numerical method (3) is defined as

$$\begin{aligned} \mathcal {L}(\varDelta t,\varvec{u}_n,\chi )=\Phi (\varDelta t,\varvec{u}_n,\chi )-\mathcal {E}(\varDelta t,\varvec{u}_n). \end{aligned} \tag{5}$$

In general, \(\mathcal {L}(\varDelta t,\varvec{u}_n,\chi )\) is not a computable quantity since the exact solution \(\mathcal {E}(\varDelta t, \varvec{u}_n)\) is not available in practice. Consequently, we resort to defect-based approximations (see [5, 6]) to obtain a posteriori estimates. The defect or residual of \(\Phi \),

$$\begin{aligned} \mathcal {R}(\varDelta t,\varvec{u}_n,\chi )=\mathcal {D}_{\varDelta t}\Phi (\varDelta t,\varvec{u}_n,\chi )-A\big (\Phi (\varDelta t,\varvec{u}_n,\chi )\big ), \end{aligned} \tag{6}$$

quantifies the extent to which the numerical flow \(\Phi \) fails to satisfy (2).
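To make definition (6) concrete, the sketch below computes the defect for a toy problem. It assumes the scalar ODE \(u'=f(u)=-u^2\) in place of (2) and the explicit two-stage Runge–Kutta family with node \(c_2=\chi \) and weights \(b_1=1-1/(2\chi )\), \(b_2=1/(2\chi )\), which is second order for every \(\chi \ne 0\). Both are illustrative choices of ours, not the schemes of [14], and the function names (`phi`, `dphi_ddt`, `defect`) are hypothetical. For an explicit scheme, \(\mathcal {D}_{\varDelta t}\Phi \) follows directly from the chain rule.

```python
import numpy as np

# Hypothetical test problem: scalar ODE u' = f(u) = -u**2 (not from the paper).
def f(u):
    return -u**2

def df(u):
    return -2.0 * u

def phi(dt, u, chi):
    """One step of the explicit two-stage RK family with node c2 = chi.

    Weights b1 = 1 - 1/(2 chi), b2 = 1/(2 chi) give order 2 for every chi != 0.
    """
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    k2 = f(u + chi * dt * k1)
    return u + dt * ((1.0 - b2) * k1 + b2 * k2)

def dphi_ddt(dt, u, chi):
    """Exact derivative of phi with respect to the step size dt (chain rule)."""
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    v = u + chi * dt * k1
    # d/ddt [dt * b2 * f(v(dt))] contributes b2 * f(v) + dt * b2 * f'(v) * chi * k1
    return (1.0 - b2) * k1 + b2 * f(v) + dt * b2 * df(v) * chi * k1

def defect(dt, u, chi):
    """Defect (6): R = D_dt Phi - A(Phi), with A = f for this scalar problem."""
    return dphi_ddt(dt, u, chi) - f(phi(dt, u, chi))

# For an order-2 method the defect should shrink roughly like dt**2.
for dt in (0.1, 0.05, 0.025):
    print(f"dt = {dt:6.3f}   R = {defect(dt, 1.0, 0.5): .3e}")
```

A central finite difference of `phi` in `dt` can be used to sanity-check `dphi_ddt`; for implicit schemes, where no chain rule is available, the derivative has to be obtained by implicit differentiation instead.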

To study the accuracy of the defect (6) as an approximation of the local error, we resort to the following nonlinear variation-of-constants formula, valid for nonlinear parabolic PDEs and time-reversible equations [4, 11].

Lemma 1

(Gröbner–Alekseev formula) The analytical solutions of the following initial value problems

$$\begin{aligned} \mathcal {D}_t\varvec{u}(t)&=A(\varvec{u}(t)),&\varvec{u}(0)&=\varvec{u}_0,\\ \mathcal {D}_t\varvec{v}(t)&=A(\varvec{v}(t))+\varvec{r}(t),&\varvec{v}(0)&=\varvec{u}_0, \end{aligned}$$

are related through the nonlinear variation-of-constants formula

$$\begin{aligned} \varvec{v}(t)-\varvec{u}(t)=\int _0^t\partial _2\mathcal {E}\big (t-\tau ,\varvec{v}(\tau )\big )\cdot \varvec{r}(\tau )\,\textrm{d}\tau . \end{aligned}$$

Here and henceforth, \(\partial _k f\) denotes the Fréchet derivative of a function f with respect to the k-th argument.

Applying Lemma 1 to (2) and (6) yields the following formula for the local error (5),

$$\begin{aligned} \mathcal {L}(\varDelta t,\varvec{u}_n,\chi )=\int _0^{\varDelta t}\varTheta (\tau ,\varvec{u}_n,\chi )\,\textrm{d}\tau ,\qquad \varTheta (\tau ,\varvec{u}_n,\chi )=\partial _2\mathcal {E}\big (\varDelta t-\tau ,\Phi (\tau ,\varvec{u}_n,\chi )\big )\cdot \mathcal {R}(\tau ,\varvec{u}_n,\chi ). \end{aligned} \tag{7}$$

Theorem 1

Given a numerical scheme \(\Phi \) (3) of order p, the defect-based estimator

$$\begin{aligned} L(\varDelta t,\varvec{u}_n,\chi )=\frac{\varDelta t}{p+1}\,\mathcal {R}(\varDelta t,\varvec{u}_n,\chi ) \end{aligned} \tag{8}$$

is an asymptotically correct estimator of the local error (5), uniformly for \(\chi \in \varOmega \), i.e.

$$\begin{aligned} \big \Vert L(\varDelta t,\varvec{u}_n,\chi )-\mathcal {L}(\varDelta t,\varvec{u}_n,\chi )\big \Vert \le C\varDelta t^{p+2}, \end{aligned}$$

with C independent of \(\chi \).

Proof

Since the method is of order p, \(\mathcal {L}(\varDelta t,\varvec{u}_n,\chi )=\mathcal {O}(\varDelta t^{p+1})\) and \(\varTheta (\tau ,\varvec{u}_n,\chi )=\mathcal {O}(\tau ^{p})\) for any \(\varDelta t,\) \(\tau \in [0,\varDelta t]\), and \(\chi \in \varOmega .\) Taylor expanding \(\varTheta \) in \(\tau \) around 0, there exists \(\xi _1 \in [0,\tau ]\) such that

Therefore,

By the smoothness of \(\varTheta \), \(\partial _1^{p+1}\varTheta \) is continuous and hence bounded over the compact set \([0,\varDelta t] \times \varOmega \), so that we may define

Note that by definition of \(\varTheta \) in (7), \(\varTheta (\varDelta t,\varvec{u}_n,\chi ) = \partial _2 \mathcal {E}\left( 0, {\Phi }(\varDelta t, \varvec{u}_n, \chi ) \right) \cdot \mathcal {R}(\varDelta t, \varvec{u}_n, \chi ) = \mathcal {R}(\varDelta t,\varvec{u}_n,\chi )\). Using (10) and applying (9) with \(\tau = \varDelta t\), for some \(\xi _1,\xi _2 \in [0,\varDelta t]\),

where

is independent of \(\varDelta t\) and \(\chi \). Therefore, indeed

$$\begin{aligned} \big \Vert L(\varDelta t,\varvec{u}_n,\chi )-\mathcal {L}(\varDelta t,\varvec{u}_n,\chi )\big \Vert \le C\varDelta t^{p+2} \end{aligned}$$

uniformly for any value of \(\chi \in \varOmega \).
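Theorem 1 can be checked numerically on a toy problem. The sketch below assumes the scalar ODE \(u'=-u^2\), whose exact flow \(\mathcal {E}(\varDelta t,u)=u/(1+\varDelta t\,u)\) is known in closed form, and the explicit two-stage Runge–Kutta family with node \(c_2=\chi \), which has order \(p=2\) for every \(\chi \ne 0\). These are illustrative choices of ours rather than schemes from [14], and all function names are hypothetical. The script measures the observed order of \(|L-\mathcal {L}|\) under successive halvings of \(\varDelta t\) for several fixed values of \(\chi \).

```python
import numpy as np

P = 2  # order of the two-stage RK family below (assumption of this sketch)

def f(u):
    return -u**2

def phi(dt, u, chi):
    """Explicit two-stage RK step with node c2 = chi (second order for chi != 0)."""
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    k2 = f(u + chi * dt * k1)
    return u + dt * ((1.0 - b2) * k1 + b2 * k2)

def dphi_ddt(dt, u, chi):
    """Derivative of phi with respect to dt, using f'(v) = -2 v."""
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    v = u + chi * dt * k1
    return (1.0 - b2) * k1 + b2 * f(v) + dt * b2 * (-2.0 * v) * chi * k1

def estimator(dt, u, chi):
    """Defect-based estimate (8): L = dt/(p+1) * R, with R from (6)."""
    R = dphi_ddt(dt, u, chi) - f(phi(dt, u, chi))
    return dt / (P + 1) * R

def local_error(dt, u, chi):
    """True local error (5), using the exact flow u/(1 + dt*u) of u' = -u**2."""
    return phi(dt, u, chi) - u / (1.0 + dt * u)

def observed_orders(u=1.0, chi=0.5, dts=(0.1, 0.05, 0.025, 0.0125)):
    """log2 ratios of |L - local error| for successive halvings of dt."""
    gaps = np.array([abs(estimator(dt, u, chi) - local_error(dt, u, chi)) for dt in dts])
    return np.log2(gaps[:-1] / gaps[1:])

for chi in (0.5, 1.0, 1.5):
    print(chi, observed_orders(chi=chi))
```

For \(\chi =0.5\) and \(\chi =1.5\) the observed order should approach \(p+2=4\); for \(\chi =1\) the leading term of the gap happens to vanish for this particular problem, so the gap decays even faster. In every case the bound is at least of order \(\varDelta t^{p+2}\), in line with the uniformity in \(\chi \).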

Remark 1

Theorem 1 is a generalisation of the results of [6], where the defect based estimator (8) is shown to be an asymptotically correct estimator for the local error. An important distinction here is the correctness of this estimator uniformly with respect to the parameters \(\chi \), which is essential for applications in optimal parameter selection. Note that the compactness of the parameter set \(\varOmega \) is crucial for the proof, but in practice is typically not a severe restriction.

Remark 2

Formula (8) provides an estimate of the local error of the time integrator. However, there is no guarantee that the global error behaves in a similar way. This may be the case even when accurate space discretizations are used, for example because of instabilities or constraints on the ratio of the discretization stepsizes. Nevertheless, in Sect. 5 we show an example where the proposed approach, based on finding the parameters \(\chi \) that minimize the quantity L in (8), prevents the occurrence of instabilities.

3 Defect Based Identification of Optimal Parameters

In this section, we propose the use of the defect based error estimate (8) for finding, at every time-step, optimal parameters \(\chi _n^* \in \varOmega \subset \mathbb {R}^K\), defined as

$$\begin{aligned} \chi _n^*=\mathop {\textrm{arg}\,\textrm{min}}\limits _{\chi \in \varOmega }\big \Vert L(\varDelta t,\varvec{u}_n,\chi )\big \Vert _2, \end{aligned} \tag{11}$$

where \(\varvec{u}_n\) and \(\varDelta t\) are fixed. The result of Theorem 1 assures us that

$$\begin{aligned} \big \Vert L(\varDelta t,\varvec{u}_n,\chi _n^*)-\mathcal {L}(\varDelta t,\varvec{u}_n,\chi _n^*)\big \Vert \le C\varDelta t^{p+2}, \end{aligned}$$

and guarantees that the choice of parameters \(\chi _n^*\) keeps the true local error, \(\mathcal {L}\), close to \(L(\varDelta t,\varvec{u}_n,\chi _n^*)\) in the asymptotic limit \(\varDelta t\rightarrow 0\) and, therefore, small.
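The practical meaning of (11) can be illustrated by comparing the minimizer of the computable estimate \(L\) with the minimizer of the true local error \(\mathcal {L}\), which is not available in practice. This sketch again assumes the toy problem \(u'=-u^2\) and the explicit two-stage Runge–Kutta family with node \(c_2=\chi \) (our choices, not the schemes of [14]), and replaces the optimizer of Sect. 3.1 by a brute-force scan over a hypothetical parameter set \(\varOmega =[0.5,3]\).

```python
import numpy as np

def f(u):
    return -u**2

def phi(dt, u, chi):
    """Explicit two-stage RK step with node c2 = chi; works with array-valued chi."""
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    k2 = f(u + chi * dt * k1)
    return u + dt * ((1.0 - b2) * k1 + b2 * k2)

def dphi_ddt(dt, u, chi):
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    v = u + chi * dt * k1
    return (1.0 - b2) * k1 + b2 * f(v) + dt * b2 * (-2.0 * v) * chi * k1

def estimator(dt, u, chi, p=2):
    """Computable estimate (8) of the local error."""
    return dt / (p + 1) * (dphi_ddt(dt, u, chi) - f(phi(dt, u, chi)))

def true_local_error(dt, u, chi):
    """Local error (5); needs the exact flow u/(1 + dt*u), unavailable in practice."""
    return phi(dt, u, chi) - u / (1.0 + dt * u)

dt, u = 0.05, 1.0
chis = np.linspace(0.5, 3.0, 2501)          # brute-force scan of Omega = [0.5, 3]
chi_est = chis[np.argmin(np.abs(estimator(dt, u, chis)))]
chi_true = chis[np.argmin(np.abs(true_local_error(dt, u, chis)))]
print(chi_est, chi_true)
print(abs(true_local_error(dt, u, chi_est)), abs(true_local_error(dt, u, 1.0)))
```

For this step size the two minimizers should be close (roughly 1.88 and 1.90), and the true local error at the estimated optimum is more than an order of magnitude smaller than at the midpoint choice \(\chi =1\).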

Remark 3

The application of defect based error estimates for choosing optimal parameters differs from their application in the context of time-adaptivity in two crucial respects.

1. Since L is neither monotone in \(\chi \), nor are we interested in asymptotic limits for small \(\chi \) (unlike the case of \(\varDelta t\) in the context of time-adaptivity), the defect based estimate \(L(\varDelta t,\varvec{u}_n,\chi )\) needs to be computed for multiple values of \(\chi \) within an optimization routine.

2. The perturbation of L by a term \(\rho \) independent of \(\chi \) has no effect on \(\chi ^*\). This is in contrast to time-adaptivity, where we seek the largest \(\varDelta t^*\) such that \(\left\| L(\varDelta t^*,\varvec{u}_n,\chi ) \right\| _{2} < \delta \) for some user-specified tolerance \(\delta \), and \(\rho \ne 0\) affects the choice of \(\varDelta t^*\).

The first observation in Remark 3 suggests that the application of defect based estimates for choosing optimal parameters can be prohibitively expensive. However, the second suggests that we can resort to inexpensive approximations of the defect and still hope to arrive at a good choice of parameters.

In Sect. 3.2, we see that under reasonable assumptions the number of optimization steps is not expected to be large, and only a few Gauss–Newton iterations are required. In the large time-step regime, approximating the defect on a coarse spatial grid also proves sufficient for the purposes described here. Overall, this leads to a procedure for identifying optimal parameters with very reasonable overheads, producing highly efficient schemes.

3.1 Optimization Procedure

In practice, we minimize the square of the defect,

$$\begin{aligned} \min _{\chi \in \varOmega }\ \frac{1}{2}\big \Vert \mathcal {R}(\varDelta t,\varvec{u}_n,\chi )\big \Vert _2^2, \end{aligned}$$

using the gradient,

$$\begin{aligned} g(\chi )=\mathcal {D}_\chi \mathcal {R}(\varDelta t,\varvec{u}_n,\chi )^T\,\mathcal {R}(\varDelta t,\varvec{u}_n,\chi ), \end{aligned} \tag{12}$$

where \(\mathcal {D}_\chi \mathcal {R}\) is the Jacobian of the defect with respect to \(\chi \), and a Gauss–Newton approximation to the Hessian,

$$\begin{aligned} H_{\textrm{GN}}(\chi )=\mathcal {D}_\chi \mathcal {R}(\varDelta t,\varvec{u}_n,\chi )^T\,\mathcal {D}_\chi \mathcal {R}(\varDelta t,\varvec{u}_n,\chi ). \end{aligned} \tag{13}$$

Utilizing the Gauss–Newton Hessian in the context of trust region algorithms yields a sequence of parameters \(\chi _n^k\) that quickly converges to the optimal \(\chi ^*_n\), with reliable global convergence properties [26]. At the same time, the procedure remains relatively inexpensive for a small number of parameters, K, since we only need to compute the first derivatives of the defect with respect to \(\chi \). These can be computed either analytically or approximately using finite differences.
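The core of the optimization step can be sketched as a plain Gauss–Newton loop built from the gradient (12) and the Gauss–Newton Hessian (13), with \(\mathcal {D}_\chi \mathcal {R}\) approximated by forward differences (one of the two options mentioned above). This is a minimal sketch under our own assumptions: it omits the trust-region safeguards discussed next, and the names `fd_jacobian` and `gauss_newton` are hypothetical. The residual is any callable mapping \(\chi \in \mathbb {R}^K\) to a defect vector in \(\mathbb {R}^M\).

```python
import numpy as np

def fd_jacobian(residual, chi, h=1e-7):
    """Forward-difference approximation of D_chi R (shape M x K)."""
    r0 = residual(chi)
    J = np.empty((r0.size, chi.size))
    for k in range(chi.size):
        e = np.zeros_like(chi)
        e[k] = h
        J[:, k] = (residual(chi + e) - r0) / h
    return J

def gauss_newton(residual, chi0, tol=1e-10, max_iter=20):
    """Minimize 0.5*||R(chi)||^2 with plain (undamped) Gauss-Newton steps."""
    chi = np.asarray(chi0, dtype=float).copy()
    for _ in range(max_iter):
        r = residual(chi)
        J = fd_jacobian(residual, chi)
        g = J.T @ r                      # gradient, cf. (12)
        H = J.T @ J                      # Gauss-Newton Hessian, cf. (13)
        step = np.linalg.solve(H, g)     # K x K system, cheap for small K
        chi = chi - step
        if np.linalg.norm(step) <= tol * (1.0 + np.linalg.norm(chi)):
            break
    return chi
```

Since only K extra residual evaluations are needed per Jacobian, the cost is dominated by evaluating the defect itself, which motivates the coarse-grid evaluation described in Sect. 3.2. For a linear residual \(A\chi -b\) the loop reproduces the least-squares solution after a single step.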

The defect, \(\mathcal {R}(\varDelta t, \varvec{u}_n, \chi _n^k)\), is computed using (6). This requires the computation of a temporary solution, \(\widetilde{\varvec{u}}_{n+1}^k\!=\!\Phi (\varDelta t, \varvec{u}_n, \chi _n^k)\), and of \(\mathcal D_{\varDelta t} \Phi (\varDelta t,\varvec{u}_n, \chi _n^k)\), the latter of which can be computed analytically as outlined with concrete examples in Sect. 4.

Note that, in general, at each iteration a trust region algorithm computes \(\mathcal {R}\) at candidate parameters \(\chi =\widetilde{\chi }_n^{k+1}\) before deciding whether to accept or reject the candidate and/or to update the trust region radius \(\varDelta _n^k\). For a detailed introduction to trust region algorithms, we refer the reader to [26].

3.2 Practical Considerations for Efficiency

Evaluating the defect can be very expensive, as it requires the computation of the temporary solution \(\widetilde{\varvec{u}}_{n+1}^k\) at every iteration, and each of these is as expensive as a step of the original numerical solver \(\Phi \). In practice, therefore, we identify \(\chi _n^*\) by optimizing the defect on a coarse spatial grid, and resort to the fine computational grid only for evaluating \(\varvec{u}_{n+1}\) once \(\chi _n^*\) has been identified.

The coarse grid is obtained as a subgrid of the fine grid with resolution \(r \varDelta x\), with r an integer that divides \(M+1\). Let \(\mathcal {P}_r\) denote the projection operator from the fine grid to the coarse grid, defined as

where

At the k-th iteration of the Gauss–Newton algorithm, we evaluate the defect (6) on the coarse grid as

$$\begin{aligned} \mathcal {R}(\varDelta t,\widehat{\varvec{u}}_n,\chi _n^k)=\mathcal {D}_{\varDelta t}\Phi (\varDelta t,\widehat{\varvec{u}}_n,\chi _n^k)-A\big (\Phi (\varDelta t,\widehat{\varvec{u}}_n,\chi _n^k)\big ),\qquad \widehat{\varvec{u}}_n=\mathcal {P}_r\varvec{u}_n. \end{aligned}$$

This requires the computation of \(\widetilde{\varvec{u}}_{n+1}^k = \Phi (\varDelta t, \widehat{\varvec{u}}_n, \chi _n^k)\). On a grid with resolution \(r\varDelta x\), the dimension of the problem is reduced by a factor of r, which typically leads to a significant speedup in the computation of \(\chi _n^*\). This speedup is expected to be particularly pronounced in two- or three-dimensional problems, where the coarse grid is smaller than the fine computational grid by factors of \(r^2\) and \(r^3\), respectively.

For a method of order p in space and time, an error term of order \(\mathcal {O}(r^p\varDelta x^p)\) is introduced in the evaluation of the defect. This error is negligible if \(r\varDelta x\ll \varDelta t\) and, in light of Remark 3, is not expected to have a significant effect on the estimate of the optimal parameters \(\chi _n^*\).
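The grid reduction can be sketched as follows. Since the definition of \(\mathcal {P}_r\) is not reproduced here, this sketch assumes that \(\mathcal {P}_r\) is pointwise injection onto the subgrid formed by every r-th node, and that the fine grid consists of the nodes \(x_0,\ldots ,x_{M+1}\), i.e. \(M+1\) intervals; the function name `restrict` is hypothetical.

```python
import numpy as np

def restrict(u_fine, r):
    """Injection onto the subgrid made of every r-th node (assumed form of P_r).

    u_fine holds values at the nodes x_0, ..., x_{M+1}, i.e. M + 1 intervals.
    """
    n_intervals = u_fine.size - 1          # = M + 1
    if n_intervals % r != 0:
        raise ValueError("r must divide M + 1")
    return u_fine[::r]                     # keeps both end nodes

# Example: 120 fine intervals on [0, 2*pi] restricted with r = 4.
x = np.linspace(0.0, 2.0 * np.pi, 121)
u_hat = restrict(np.sin(x), 4)
print(u_hat.size)                           # 31 coarse nodes, 30 coarse intervals
```

With injection the coarse values are exact samples of the fine-grid solution; other choices of \(\mathcal {P}_r\) (for example local averaging) would fit the same interface.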

Remark 4

With larger values of r, the additional cost of identifying \(\chi _n^*\) becomes marginal, while the advantages of identifying good parameters could still be significant. We can expect, however, that once r is large enough such that \(r\varDelta x\ll \varDelta t\) is no longer valid, spatial discretization errors will start to dominate and the computation of defect may become too inaccurate to be useful. In light of these observations, as a rule of thumb, the largest r we recommend is the largest divisor of \(M+1\) such that \(r<\varDelta t/\varDelta x\).
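The rule of thumb in Remark 4 is straightforward to encode. The helper below is a hypothetical sketch that returns the largest divisor r of \(M+1\) satisfying \(r<\varDelta t/\varDelta x\), and falls back to \(r=1\) (no coarsening) when no such divisor exists.

```python
def largest_coarsening_factor(M, dt, dx):
    """Largest divisor r of M + 1 with r < dt/dx (rule of thumb of Remark 4)."""
    ratio = dt / dx
    candidates = [r for r in range(1, M + 2) if (M + 1) % r == 0 and r < ratio]
    return max(candidates) if candidates else 1

# Example: M + 1 = 512 intervals and dt/dx = 10 give r = 8.
print(largest_coarsening_factor(511, 0.1, 0.01))
```

When \(\varDelta t\le \varDelta x\) the function returns 1, consistent with the observation that coarsening is only worthwhile in the regime \(\varDelta t\gg \varDelta x\).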

Lipschitz continuity. Further gains can be obtained by exploiting the temporal smoothness of the optimal parameters \(\chi ^*\). For some methods it may be reasonable to assume that the optimal parameters are described by a Lipschitz function \(\chi ^*(t)\), i.e. \(|\chi ^*_{n} - \chi ^*_{n-1}| \le \widetilde{C} \varDelta t\) for some \(\widetilde{C}<\infty \) independent of n. For small enough \(\widetilde{C}\varDelta t\), \(\chi ^*_{n-1}\) is close to \(\chi ^*_{n}\), so the previous value of the optimal parameter serves as a good initial guess for the next time-step, \(\chi ^{0}_{n} = \chi ^*_{n-1}\). With \(\widetilde{C}\) and \(\varDelta t\) small enough, \(\chi ^{0}_{n}\) can be expected to be close enough to \(\chi ^{*}_{n}\) that fast local convergence is guaranteed without the trust-region safeguards. In such a case, it suffices to use the simple Newton-type iteration,

$$\begin{aligned} \chi _n^{k+1}=\chi _n^k-H_{\textrm{GN}}(\chi _n^k)^{-1}g(\chi _n^k), \end{aligned} \tag{15}$$

in place of the trust-region algorithm, where g and \(H_{\textrm{GN}}\) are given by (12) and (13), respectively. In practice, just a couple of steps of (15) suffice, except at the first time-step (\(n=0\)), when the arbitrary initial guess may be very far from the optimal value, requiring more optimization steps and the use of trust regions.

The Gauss–Newton iteration is repeated until the stopping criterion,

is satisfied for a suitable tolerance. Then we set \({\chi }^*_n = \chi _n^{k+1}\) and the solution at the next time-step is obtained on the finer computational grid as

$$\begin{aligned} \varvec{u}_{n+1}=\Phi (\varDelta t,\varvec{u}_n,\chi _n^*). \end{aligned}$$

The overall procedure introduced in this section is summarized in Algorithm 1.
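The structure of Algorithm 1 (warm-started Newton-type iteration (15) on the defect at every step, followed by one step with the optimized parameter) can be sketched on a toy problem. This sketch assumes the scalar ODE \(u'=-u^2\) and the explicit two-stage Runge–Kutta family with node \(c_2=\chi \), neither of which comes from the paper; there is no spatial grid, so the coarse-grid evaluation is omitted, and all function names are hypothetical. For \(K=1\) the update \(H_{\textrm{GN}}^{-1}g\) reduces to \(R/\partial _\chi R\).

```python
import numpy as np

def f(u):
    return -u**2

def phi(dt, u, chi):
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    k2 = f(u + chi * dt * k1)
    return u + dt * ((1.0 - b2) * k1 + b2 * k2)

def dphi_ddt(dt, u, chi):
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    v = u + chi * dt * k1
    return (1.0 - b2) * k1 + b2 * f(v) + dt * b2 * (-2.0 * v) * chi * k1

def defect(dt, u, chi):
    return dphi_ddt(dt, u, chi) - f(phi(dt, u, chi))

def optimal_chi(dt, u, chi0, tol=1e-12, max_iter=10, h=1e-7):
    """Scalar Gauss-Newton iteration (15) on the defect, warm-started at chi0."""
    chi = chi0
    for _ in range(max_iter):
        r = defect(dt, u, chi)
        jac = (defect(dt, u, chi + h) - r) / h     # forward-difference D_chi R
        step = (jac * r) / (jac * jac)             # H_GN^{-1} g for K = 1
        chi -= step
        if abs(step) < tol:
            break
    return chi

def algorithm1(u0, T, N, chi_init=1.0):
    """Adaptive run: optimize chi at every step, then advance with chi_n^*."""
    dt = T / N
    u, chi = u0, chi_init
    chis = []
    for _ in range(N):
        chi = optimal_chi(dt, u, chi)   # warm start: chi_n^0 = chi_{n-1}^*
        chis.append(chi)
        u = phi(dt, u, chi)
    return u, np.array(chis)

def fixed_chi(u0, T, N, chi):
    dt = T / N
    u = u0
    for _ in range(N):
        u = phi(dt, u, chi)
    return u

u_ad, chis = algorithm1(1.0, 1.0, 10)
print(abs(u_ad - 0.5), abs(fixed_chi(1.0, 1.0, 10, 1.0) - 0.5))  # exact u(1) = 0.5
```

In this toy setting the adaptive parameter drifts slowly from step to step, so the warm start keeps the per-step iteration count small, and the final error is markedly smaller than with the fixed midpoint choice \(\chi =1\).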

Assuming that the method \(\Phi \) in (4) is stable and convergent for a time-dependent choice of parameters \(\chi : \mathbb {R}^+ \rightarrow \varOmega \), it follows that the time-adaptive procedure is also stable and has the same order of convergence.

3.3 Modifications for Conservative Schemes

Algorithm 1 is very effective in improving the accuracy of standard numerical methods with free parameters, and of geometric integrators that preserve a structure independent of the parameters. However, some numerical schemes, e.g. those described in [14], preserve conservation laws that depend explicitly on the parameters. Since the optimal parameters generated by Algorithm 1 change from one time-step to the next, the conservation laws of these schemes are no longer preserved.

We present an alternative approach for these numerical schemes, which post-processes the time-dependent optimal parameters into constant-in-time values, e.g. their mean values. This allows numerical schemes with parameter-dependent conservation laws to preserve them, while still benefitting from small local errors. We modify Algorithm 1 as follows.

1. Project the initial condition onto a grid with resolution \(r\varDelta x\).

2. Find the optimal \(\chi _0^*\) and use it to advance one step in time on the coarse grid. Iterate this step until the final time, obtaining the optimal parameters \(\chi _n^*\) for \(n=0,\ldots ,N-1\).

3. Compute the average optimal parameters as \(\overline{\chi }^* = \frac{1}{N} \sum _{n=0}^{N-1} \chi _n^*\).

4. Compute the numerical solution on the full computational grid using the average parameters, \(\varvec{u}_{n+1} = \Phi (\varDelta t, \varvec{u}_n, \overline{\chi }^*)\), for \(n=0,\ldots ,N-1\).

We summarize this conservative version of our procedure in Algorithm 2. Note that the numerical solution obtained on the coarse grid, \(\widehat{\varvec{u}}_n\), is used only for estimating the optimal parameters and later discarded.
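The two-pass structure of Algorithm 2 can be sketched on the same toy problem used above. This is a minimal sketch under our own assumptions: the scalar ODE \(u'=-u^2\) and the explicit two-stage Runge–Kutta family with node \(c_2=\chi \) are stand-ins with no conservation law and no spatial grid, so step 1 (projection) is trivial and the first pass runs on the same "grid" as the second. The function names are hypothetical.

```python
import numpy as np

def f(u):
    return -u**2

def phi(dt, u, chi):
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    k2 = f(u + chi * dt * k1)
    return u + dt * ((1.0 - b2) * k1 + b2 * k2)

def dphi_ddt(dt, u, chi):
    b2 = 1.0 / (2.0 * chi)
    k1 = f(u)
    v = u + chi * dt * k1
    return (1.0 - b2) * k1 + b2 * f(v) + dt * b2 * (-2.0 * v) * chi * k1

def defect(dt, u, chi):
    return dphi_ddt(dt, u, chi) - f(phi(dt, u, chi))

def optimal_chi(dt, u, chi0, tol=1e-12, max_iter=10, h=1e-7):
    """Scalar Newton-type iteration (15) on the defect."""
    chi = chi0
    for _ in range(max_iter):
        r = defect(dt, u, chi)
        jac = (defect(dt, u, chi + h) - r) / h
        step = r / jac
        chi -= step
        if abs(step) < tol:
            break
    return chi

def algorithm2(u0, T, N, chi_init=1.0):
    dt = T / N
    # Step 2: adaptive pass that only records chi_n^* (u_hat is later discarded).
    u_hat, chi = u0, chi_init
    chis = np.empty(N)
    for n in range(N):
        chi = optimal_chi(dt, u_hat, chi)
        chis[n] = chi
        u_hat = phi(dt, u_hat, chi)
    # Step 3: constant-in-time parameter.
    chi_bar = chis.mean()
    # Step 4: rerun with the fixed average parameter.
    u = u0
    for _ in range(N):
        u = phi(dt, u, chi_bar)
    return u, chi_bar, chis

u2, chi_bar, chis = algorithm2(1.0, 1.0, 10)
print(chi_bar, abs(u2 - 0.5))   # exact solution at T = 1 is 0.5
```

Because the second pass uses a single parameter value, any conservation law that depends on \(\chi \) would be preserved exactly in that pass; the full sequence `chis` remains available for alternative post-processing, as discussed in Remark 5.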

Since our estimate of the optimal parameters in Algorithm 2 relies on the solution of the problem on a coarser grid, this algorithm is also affected by the accumulation of errors in space, which may be particularly pronounced for large values of r. On the other hand, the parameters \(\chi _n^*\) need not be identified with too high an accuracy since we only need a good average choice, \(\overline{\chi }^*.\)

Using the average parameters on the fine grid is a good option, particularly for solutions with simple and smooth dynamics (e.g. travelling waves), as the values obtained at the different time-steps are all reasonably close to each other.

Remark 5

Note that at the end of step 2 the full sequence of optimal parameters, \(\chi _0^*, \ldots , \chi _{N-1}^*\), and the corresponding local error estimates are available to the user, so alternatives to the average values of the parameters can be considered. This could be particularly useful when the solution changes its character substantially and the parameter values vary over a wide range during the integration. For example, one may choose the value corresponding to a particularly delicate stage of the time evolution.

4 Approximation of Defect for Specific Schemes

In this section we consider the application of the proposed approach to two partial differential equations—the KdV equation and a nonlinear heat equation—with suitable initial and boundary conditions. In particular, we present the computation of defect (6) for numerical schemes introduced in [14], which is required in Algorithms 1 and 2.

We define the forward shifts in space and time,

respectively, the forward difference and average operators,

and the centred difference operator,

approximating the space derivatives of order 2k and \(2k-1\), respectively, with second order of accuracy. The action of these operators on vectors is defined entrywise. Moreover, we denote by \(\circ \) the Hadamard product, i.e. entrywise multiplication of vectors.

For the two equations considered here, families of second order finite difference methods that depend on one or more free parameters have been introduced in [14] by means of a strategy based on the fact that, just like total divergences form the kernel of an Euler operator [27], there exists a discrete Euler operator whose kernel is the space of the difference divergences [23, 24]. We consider in this section some of these families of schemes.

Remark 6

We restrict attention to the setting where \(\varDelta t>\varDelta x\), and the parameters \(\chi \) featured in the numerical schemes from [14] are \(\mathcal {O}(\varDelta t^2)\). These small parameters \(\chi \) correspond to perturbation terms that have no counterpart in the continuous problem, and whose contributions vanish in the limit \(\varDelta t \rightarrow 0\). Thus, it is reasonable to restrict our search to a neighbourhood of \(\textbf{0}\) of size \(\mathcal {O}(\varDelta t^2)\), \(\varOmega \subset \overline{B}_{C\varDelta t^2}(\textbf{0};\mathbb {R}^K)\), for some constant \(C>0\). Therefore, when applying the algorithms outlined in Sect. 3 we can expect that \(\chi =\textbf{0}\) is a reasonable initial guess, and we can find convenient values of the parameters by using the simple iteration (15) without the aid of trust region methods.

Remark 7

The schemes considered here are all implicit. We assume that \(\mathcal {J}\), the Jacobian operator defined by the partial derivatives of the scheme with respect to \(\varvec{u}_{n+1}\), is never singular. Solutions can then be obtained by iteration of Newton’s method.

An important property of the numerical schemes considered here is that they preserve some conservation laws. Conservation laws are defined as total divergences,

that vanish on solutions of the PDE. The functions F and G are the flux and the density of the conservation law and depend on x, t, u and its derivatives. The methods in [14] preserve second order approximations of specific conservation laws in the form

where here and henceforth tildes represent approximations of the corresponding continuous terms, and \(\varvec{x}\) is the column vector whose m-th entry is \(x_m\).

4.1 Schemes for KdV Equation

In this section we apply the approach outlined in Sect. 3 to parametric families of schemes for the KdV equation,

with initial condition

For simplicity, we restrict attention to periodic or zero boundary conditions. However, as shown in Sect. 4.2, the entire discussion can also be adapted to boundary conditions of a different type. The KdV equation has infinitely many independent conservation laws. The first three, in increasing order,

describe the local conservation laws of mass, momentum and energy, respectively. For this equation we define the semidiscrete operator A in (2) in the most natural way, as

The results obtained are independent of the particular form of this operator under spatial discretization, as we work under the assumption that the leading source of error is given by the time integration.

Energy conserving methods

We consider here the following family of mass and energy-conserving methods described in [14],

where

We denote with \(\text {EC}(\alpha )\) the schemes in Eq. (19), where, according to Remark 6, \(\alpha \) is a free parameter in a neighbourhood of 0, \(\varOmega \subset \overline{B}_{C\varDelta t^2}(0;\mathbb {R})=[-C\varDelta t^2,C\varDelta t^2]\) for some constant \(C>0\). For any choice of the parameter \(\alpha \in \varOmega \) schemes EC\((\alpha )\) are defined on the 10-point stencil in Fig. 1, they are implicit and second order accurate. Moreover, they have a discrete version of the conservation law of the mass (17) given by their definition (19) with,

and satisfy the discrete energy conservation law,

Since these methods are implicit, an explicit expression for \(\Phi \) in (3) is not available. Nevertheless, an analytical expression of its time derivative can be obtained by substituting (3) in (19) and differentiating. This yields,

where \(\varvec{u}_{n+1}=\Phi (\varDelta t,\varvec{u}_n,\alpha )\), and \(\mathcal {J}\) denotes the Jacobian matrix defined in Remark 7.
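The same implicit-differentiation idea applies to any implicit one-step scheme. Writing the scheme abstractly as \(G(\varvec{u}_{n+1},\varvec{u}_n,\varDelta t)=0\) (our notation), differentiation in \(\varDelta t\) gives \(\mathcal {J}\,\mathcal {D}_{\varDelta t}\Phi =-\partial _{\varDelta t}G\), so a single extra linear solve with the Jacobian of Remark 7 yields the derivative. The sketch below demonstrates this for the implicit midpoint rule applied to a pendulum system; both the scheme and the test problem are illustrative assumptions, not EC(\(\alpha \)), and the function names are hypothetical.

```python
import numpy as np

def f(u):
    """Pendulum vector field, used only as a hypothetical test problem."""
    return np.array([u[1], -np.sin(u[0])])

def df(u):
    return np.array([[0.0, 1.0], [-np.cos(u[0]), 0.0]])

def midpoint_step(dt, u0, tol=1e-14, max_iter=50):
    """Solve G(u1) = u1 - u0 - dt*f((u0+u1)/2) = 0 with Newton's method."""
    u1 = u0.copy()
    for _ in range(max_iter):
        mid = 0.5 * (u0 + u1)
        G = u1 - u0 - dt * f(mid)
        J = np.eye(u0.size) - 0.5 * dt * df(mid)   # Jacobian dG/du1
        delta = np.linalg.solve(J, G)
        u1 = u1 - delta
        if np.linalg.norm(delta) < tol:
            break
    return u1

def dphi_ddt(dt, u0):
    """D_dt Phi from the implicit function theorem: J * dPhi/ddt = -dG/ddt."""
    u1 = midpoint_step(dt, u0)
    mid = 0.5 * (u0 + u1)
    J = np.eye(u0.size) - 0.5 * dt * df(mid)
    return np.linalg.solve(J, f(mid))   # since dG/ddt = -f(mid)

u0 = np.array([1.0, 0.3])
dt = 0.2
print(dphi_ddt(dt, u0) - f(midpoint_step(dt, u0)))   # defect (6) for this step
```

Reusing the converged Newton Jacobian means the derivative costs only one additional linear solve per defect evaluation, which matters because the defect is evaluated repeatedly inside the optimization loop.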

Optimal values of \(\alpha \) are then computed according to (11), where the defect is calculated according to (6) with (18) and (21).

Remark 8

As noted in Sect. 3.3, since the value of \(\alpha \) changes at every time-step in Algorithm 1, it cannot preserve the conservation laws (19) and (20) of EC(\(\alpha \)) because they depend on \(\alpha \). However, since the boundary conditions are conservative, summing the entries of the vectors in (19) and (20) yields

Therefore, EC(\(\alpha \)) also preserves the following approximations of the global mass and energy:

These two global invariants are independent of \(\alpha \), and therefore they are conserved by both algorithms introduced in Sect. 3.

Momentum conserving methods

A two-parameter family of mass and momentum conserving schemes described in [14] is

We denote with \(\text {MC}(\beta ,\gamma )\) the two-parameter family of schemes (22). For any value of the parameters \((\beta ,\gamma )\in \varOmega \subset \overline{B}_{C\varDelta t^2}(0;\mathbb {R}^2), C>0\), the schemes \(\text {MC}(\beta ,\gamma )\) are defined on the 10-point stencil in Fig. 1, are implicit and second order accurate. Solutions of MC\((\beta ,\gamma )\) satisfy the local mass conservation law given by (22), and the local momentum conservation law,

where

As these schemes are also fully implicit, we substitute (3) in (22) and differentiate in time. This yields,

The defect and the local error estimate (8) are then computed using (18) and (24).

Remark 9

Summation of (22) and (23) gives that

are the discretizations of the global mass and momentum, respectively. The discrete global mass is independent of the parameters, and therefore it is conserved by both Algorithms 1 and 2. However, the discrete global momentum and the local conservation laws (22) and (23) are only preserved by Algorithm 2.

4.2 Schemes for a Nonlinear Heat Equation

In this section we consider the nonlinear heat equation,

with initial condition and Dirichlet boundary conditions,

Equation (25) has only two independent conservation laws,

We denote with CS\((\lambda )\) the one-parameter family of methods described in [14], given by

The scheme CS\((\lambda )\) has the following discrete versions of the conservation laws (26) and (27),

In order to evaluate the defect, we consider a centred difference space discretization of (25) in the form (2) with

where \(\varvec{e}_j\) is the j-th unit vector, and we define \(\hat{D}_{2,\varDelta x} = D_{2,\varDelta x}\vert _{\varphi _L=\varphi _R=0}\) in order to isolate the contribution of the boundary conditions. The methods in (28) are linearly implicit so they can be written in the form (3) with

Differentiating (32) in time yields,

which, together with (31), is utilized in (8) and (6) to estimate the local error.
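For linearly implicit schemes the time derivative of \(\Phi \) is available in closed form. The sketch below assumes a hypothetical step \((I-\varDelta t\,B(\varvec{u}_n))\varvec{u}_{n+1}=\varvec{u}_n+\varDelta t\,\varvec{b}(\varvec{u}_n)\), where \(B\) is a lagged diffusion operator with diffusivity \(1+u^2\) and \(\varvec{b}\) collects the Dirichlet data; it is not the scheme CS(\(\lambda \)) in (28), whose coefficients are not reproduced here. Differentiating in \(\varDelta t\) gives \((I-\varDelta t B)\,\mathcal {D}_{\varDelta t}\Phi =B\Phi +\varvec{b}\).

```python
import numpy as np

def second_difference(M, dx):
    """Centred second difference on M interior nodes with zero boundary values."""
    main = -2.0 * np.ones(M)
    off = np.ones(M - 1)
    return (np.diag(main) + np.diag(off, 1) + np.diag(off, -1)) / dx**2

def lagged_operator(u, dx):
    # Hypothetical lagged diffusivity k(u) = 1 + u**2, frozen at the old time level.
    return np.diag(1.0 + u**2) @ second_difference(u.size, dx)

def boundary_vector(u, dx, phi_left, phi_right):
    # Contribution of the Dirichlet data to the first and last interior rows.
    b = np.zeros(u.size)
    b[0] = (1.0 + u[0]**2) * phi_left / dx**2
    b[-1] = (1.0 + u[-1]**2) * phi_right / dx**2
    return b

def phi(dt, u, dx, phi_left, phi_right):
    """Linearly implicit step: (I - dt*B(u)) u_new = u + dt*b(u)."""
    B = lagged_operator(u, dx)
    b = boundary_vector(u, dx, phi_left, phi_right)
    return np.linalg.solve(np.eye(u.size) - dt * B, u + dt * b)

def dphi_ddt(dt, u, dx, phi_left, phi_right):
    """Differentiate (I - dt*B) Phi = u + dt*b in dt: (I - dt*B) Phi' = B Phi + b."""
    B = lagged_operator(u, dx)
    b = boundary_vector(u, dx, phi_left, phi_right)
    K = np.eye(u.size) - dt * B
    u_new = np.linalg.solve(K, u + dt * b)
    return np.linalg.solve(K, B @ u_new + b)

M = 20
dx = 1.0 / (M + 1)
x = dx * np.arange(1, M + 1)
u = 0.5 + 0.2 * np.sin(np.pi * x)
dt = 0.01
print(dphi_ddt(dt, u, dx, 0.5, 0.5)[:3])
```

Since \(B\) and \(\varvec{b}\) are frozen at the old time level, the same matrix \(I-\varDelta t B\) is used for both the step and its derivative, so one factorization could serve both solves.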

Remark 10

If the boundary conditions are conservative, summing the entries of the vectors in (29) and (30) yields

giving the following approximations of the global invariants

Since the former is independent of \(\lambda \), this is a conserved invariant when using either of Algorithms 1 and 2. In general, the latter is conserved only by Algorithm 2. However, if the boundary conditions are such that

the conservation of

is also guaranteed by Algorithm 1. On a uniform spatial grid, this is achieved, for example, with zero boundary conditions.

5 Numerical Examples

In this section we consider a range of benchmark problems for the KdV Eq. (16) and the nonlinear heat Eq. (25), and investigate the performance of the methods described in Sect. 4 with optimal parameters obtained by the two algorithms introduced in Sect. 3. Comparisons between different numerical schemes are based on:

-

Relative error in the solution at the final time \(t=T\), defined as

$$\begin{aligned} \frac{\Vert \varvec{u}_N-u_\textrm{exact}(T)\Vert }{\Vert u_\textrm{exact}(T)\Vert }, \end{aligned}$$
where \(\Vert \cdot \Vert \) denotes the discrete \(L^2\) norm and \(u_\textrm{exact}\) is the solution of (1).

-

Error in the variation of the global densities. If the method preserves the k-th conservation law,

$$\begin{aligned} D_{\varDelta x} \widetilde{F}_k(\varvec{x},\varvec{u}_n,\varvec{u}_{n+1},\chi )+D_{\varDelta t} \widetilde{G}_k(\varvec{x},\varvec{u}_n,\chi )=0, \end{aligned}$$
the error in the global variation of \(G_k\) is defined as

$$\begin{aligned} \textrm{Err}_k=\varDelta x \max _{n=1,\ldots ,N}\left| (\varvec{e}_{M+1}-\varvec{e}_{1})^T\widetilde{F}_k(\varvec{x},\varvec{u}_n,\varvec{u}_{n+1},\chi )+\textbf{1}^T D_{\varDelta t}\widetilde{G}_k\left( \varvec{x},\varvec{u}_n,\chi \right) \right| , \end{aligned}\tag{33}$$
where \(\varvec{e}_j\) denotes the j-th column vector of the standard basis of \(\mathbb {R}^{M+1}\), and \(\textbf{1}\in \mathbb {R}^{M+1}\) is the column vector with all entries equal to one. When the boundary conditions are periodic, we consider instead the maximum error on the k-th global invariant, defined as

$$\begin{aligned} \textrm{Err}_k=\varDelta x\max _{n=1,\ldots ,N}\left| \textbf{1}^T\left( \widetilde{G}_k\left( \varvec{x}, \varvec{u}_n,\chi \right) -\widetilde{G}_k\left( \varvec{x}, \varvec{u}_0,\chi \right) \right) \right| . \end{aligned}\tag{34}$$

-

Computational cost, measured in terms of computation time in seconds.
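The first two metrics are straightforward to compute from stored snapshots \(\varvec{u}_0,\ldots ,\varvec{u}_N\). The sketch below is a minimal illustration for the relative error and the periodic case (34), assuming a uniform grid, the weighted norm \(\Vert \varvec{v}\Vert =(\varDelta x\sum _j v_j^2)^{1/2}\) as the discrete \(L^2\) norm, and a user-supplied function returning the vector of discrete densities in place of \(\widetilde{G}_k\); all function names are hypothetical.

```python
import numpy as np


def discrete_l2_norm(v, dx):
    # Discrete L^2 norm on a uniform grid (assumed weighting: sqrt(dx * sum v_j^2)).
    return np.sqrt(dx * np.sum(np.asarray(v, dtype=float) ** 2))


def relative_l2_error(u_final, u_exact_final, dx):
    # Relative error ||u_N - u_exact(T)|| / ||u_exact(T)||; the dx weight cancels.
    diff = np.asarray(u_final, dtype=float) - np.asarray(u_exact_final, dtype=float)
    return discrete_l2_norm(diff, dx) / discrete_l2_norm(u_exact_final, dx)


def invariant_error(snapshots, density, dx):
    # Err_k as in (34): dx * max_{n=1..N} |1^T (G(u_n) - G(u_0))|.
    # `snapshots` has shape (N+1, M+1); `density` maps one snapshot to the
    # vector of discrete densities (a hypothetical stand-in for G_k).
    g0 = np.sum(density(snapshots[0]))
    return dx * max(abs(np.sum(density(u)) - g0) for u in snapshots[1:])


if __name__ == "__main__":
    dx = 0.1
    u_exact = np.array([1.0, 2.0, 2.0, 1.0])
    print(relative_l2_error(u_exact + 0.1, u_exact, dx))
    # Mass density G = u: a sequence with constant total mass has zero drift.
    snaps = np.array([[1.0, 2.0, 3.0], [2.0, 2.0, 2.0], [3.0, 2.0, 1.0]])
    print(invariant_error(snaps, lambda u: u, dx))
```

For the non-periodic error (33), the boundary fluxes \(\widetilde{F}_k\) at the first and last nodes would have to be stored as well.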

For each family of schemes described in Sect. 4, we consider the following choices of the vector of free parameters:

-

\(\chi =\textbf{0}\), default choice, fixed at each step.

-

\(\chi =\chi ^*\), the globally optimal fixed value that minimizes the solution error for this specific problem. This value is obtained by a brute-force search based on empirical comparisons with the exact solution and is not available a priori. Thus, this choice of parameters does not constitute a practical numerical algorithm, and we do not report its computation time, which is excessive.

-

\(\{\chi _{r,n}^*\}_{n\geqslant 0}\), sequence of values, obtained using Algorithm 1 with projection onto a grid with spatial resolution \(r\varDelta x\), that minimize the estimated local error at each time step.

-

\(\chi =\overline{\chi }^*_r\), fixed value obtained using Algorithm 2 with projection onto a coarse grid with spatial resolution \(r\varDelta x\).

As discussed in Remark 6, we apply both Algorithms 1 and 2 with the simplified Gauss–Newton step (15).
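For readers less familiar with the method, the sketch below shows a generic Gauss–Newton update for minimizing the squared norm of a residual vector that depends on the free parameters \(\chi \), with a forward-difference Jacobian. It is an illustration under our own assumptions, not a transcription of the simplified step (15): the residual function, the increment `eps`, and the idea of warm-starting from the previous time step's parameters are hypothetical choices.

```python
import numpy as np


def gauss_newton(residual, chi0, steps=1, eps=1e-7):
    """Generic Gauss-Newton iteration for min_chi ||residual(chi)||_2^2.

    `residual` maps a parameter vector chi to a residual vector (standing in,
    hypothetically, for a defect-based error estimate). With steps=1 this performs
    a single update, e.g. warm-started from the previous time step's parameters.
    """
    chi = np.atleast_1d(np.array(chi0, dtype=float))
    for _ in range(steps):
        r = np.atleast_1d(residual(chi))
        # Forward-difference approximation of the Jacobian dr/dchi.
        J = np.empty((r.size, chi.size))
        for i in range(chi.size):
            step = np.zeros_like(chi)
            step[i] = eps
            J[:, i] = (np.atleast_1d(residual(chi + step)) - r) / eps
        # Solve the linearized least-squares problem J @ delta ~= -r.
        delta, *_ = np.linalg.lstsq(J, -r, rcond=None)
        chi = chi + delta
    return chi


if __name__ == "__main__":
    # For an affine residual A chi - b, one step reaches the least-squares solution.
    A = np.array([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])
    b = np.array([1.0, 2.0, 3.0])
    print(gauss_newton(lambda c: A @ c - b, np.zeros(2)))
    print(np.linalg.lstsq(A, b, rcond=None)[0])
```

Each update costs one residual evaluation plus one per free parameter for the Jacobian, which stays small for families with one or two free parameters, such as EC(\(\alpha \)) and MC(\(\beta ,\gamma \)).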

For all the experiments in this section, \(\varDelta t\) and \(\varDelta x\) are such that \(4<\varDelta t/\varDelta x<10\). In order to verify the validity of the observations in Remark 4, we apply the proposed algorithms with \(r=1,2,4,10\).
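The constraint on \(\varDelta t/\varDelta x\) can be checked directly against the grid parameters quoted for the individual tests in Sects. 5.1 and 5.2 (the grid of the two-soliton test is not reproduced in this version of the text, so it is omitted). The sketch lists, for each test, which of the values \(r=1,2,4,10\) stay below \(\varDelta t/\varDelta x\), the threshold discussed in connection with Remark 4; the helper name is hypothetical.

```python
# (dx, dt) pairs quoted in Sects. 5.1 and 5.2 of the text.
TESTS = {
    "KdV one-soliton": (0.05, 0.4),
    "NLH travelling wave": (0.025, 0.12),
    "NLH Barenblatt": (0.02, 0.09),
}


def admissible_coarsening(dx, dt, candidates=(1, 2, 4, 10)):
    """Return dt/dx and the candidate factors r with r < dt/dx (hypothetical helper)."""
    ratio = dt / dx
    return ratio, [r for r in candidates if r < ratio]


for name, (dx, dt) in TESTS.items():
    ratio, ok = admissible_coarsening(dx, dt)
    print(f"{name}: dt/dx = {ratio:.2f}, r < dt/dx for r in {ok}")
```

In all three cases only \(r=10\) exceeds \(\varDelta t/\varDelta x\), which is the setting whose effect on the parameters is examined below.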

5.1 KdV Equation

In this section we solve the KdV equation (16), comparing schemes in Sect. 4.1 with different choices of the parameters against schemes from the literature, namely the multisymplectic method,

and the narrow box scheme,

introduced in [1, 2]. Both schemes preserve, by construction, a discrete conservation law for the mass, but not for the momentum or the energy. These schemes are more compact than those defined in Sect. 4.1 and are not centred on the grid. Therefore, we evaluate the error in the global momentum and energy according to (34) with

For methods EC(\(\alpha \)) (resp. MC(\(\beta ,\gamma \))) we evaluate the error (34) in the conservation of the momentum (resp. the energy) with \(\widetilde{F}_2\) and \(\widetilde{G}_2\) (resp. \(\widetilde{F}_3\) and \(\widetilde{G}_3\)) given in (23) (resp. (20)) with all parameters set to zero. As a first numerical test we consider the motion of a soliton. The initial condition is obtained from the exact solution on \(\mathbb {R}\),

evaluated at \(t=0\). We solve this problem over \([a,b]=[-20,20]\) up to the final time \(T=10\), on a grid with \(\varDelta x=0.05\) and \(\varDelta t=0.4\).
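For concreteness, the sketch below builds the uniform grid and the time levels implied by these values. It assumes that the \(M+1\) nodes include both end points of \([a,b]\), consistent with the vectors in \(\mathbb {R}^{M+1}\) used in (33); the variable names are our own.

```python
import numpy as np

a, b = -20.0, 20.0   # spatial domain [a, b]
T = 10.0             # final time
dx, dt = 0.05, 0.4   # grid spacings used in the one-soliton test

M = round((b - a) / dx)       # number of spatial intervals
N = round(T / dt)             # number of time steps
x = np.linspace(a, b, M + 1)  # M + 1 nodes, end points included (assumption)
t = np.linspace(0.0, T, N + 1)

print(M, N, x.size, t.size)  # 800 25 801 26
```

The whole simulation therefore takes only 25 time steps, each of which involves one optimization problem for the free parameters.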

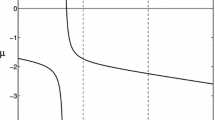

We first find estimates for the optimal parameters of the schemes EC(\(\alpha \)) and MC(\(\beta ,\gamma \)) by applying the two new algorithms with projection (14) onto grids with spatial resolution \(r\varDelta x\), \(r=1,2,4,10\). In Fig. 2 we show the sequences of optimal values given by Algorithms 1 and 2 at each time step of scheme EC. Similarly, in Fig. 3 we show the sequences obtained at each time step of scheme MC.
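Projection (14) is not reproduced in this part of the text. Purely as an illustration, the sketch below assumes that it amounts to keeping every r-th node of the computational grid (with the number of intervals divisible by r), which shows how the size of the optimization problem shrinks roughly by a factor r. The function name `coarsen` is hypothetical, and the actual projection (14) may differ.

```python
import numpy as np


def coarsen(u, r):
    """Keep every r-th node of a grid function (assumed stand-in for projection (14)).

    Requires (len(u) - 1) % r == 0 so that both end points are kept; the coarse
    grid then has spacing r*dx.
    """
    u = np.asarray(u)
    if (u.size - 1) % r != 0:
        raise ValueError("number of intervals must be divisible by r")
    return u[::r]


x = np.linspace(-20.0, 20.0, 801)  # one-soliton grid, dx = 0.05
for r in (1, 2, 4, 10):
    xc = coarsen(x, r)
    print(r, xc.size, xc[1] - xc[0])
```

With this assumption the optimization for the one-soliton test works with 801, 401, 201 and 81 nodes for \(r=1,2,4,10\), respectively.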

Since the solution simply travels in one direction at constant speed, after a few initial steps the sequences of parameters stabilize around constant values.

The sequences obtained by the two algorithms with \(r=1,2,4\) are all very close to each other (within a maximum distance of \(5\cdot 10^{-3}\)). In agreement with Remark 4, those obtained with \(r=10>\varDelta t/\varDelta x\) show the effect of the space error on the coarser grid, which shows that the accuracy of the solution can be compromised when r is too large. Nevertheless, these parameters are not far from the values obtained on the computational grid and still yield good accuracy in all cases, while further reducing the computational overheads involved in the solution of the optimization problem.

In Table 1 we compare different schemes. The results obtained show that:

-

The values of the maximum error on the k-th global invariant, Err\(_k\), \(k=1,2,3\), evaluated according to (34), show that schemes EC and MC with fixed values of the parameters exactly preserve two conservation laws, and therefore two global invariants.

-

According to Remark 8, owing to the conservative boundary conditions, the sequence of schemes EC\((\alpha ^*_{r,n})_{n\geqslant 0}\) preserves the global mass and the global energy. By Remark 9, the sequence of schemes MC\((\beta ^*_{r,n},\gamma ^*_{r,n})_{n\geqslant 0}\) preserves the global mass, but not the global momentum.

-

The computational cost of both proposed algorithms decreases as r increases, i.e., as the grid used for the solution of the optimization problem (see Eq. (14)) becomes coarser. With respect to solving the optimization problem on the same grid as the differential problem (\(r=1\)), the overall computational time drops to less than half when \(r=2\), and to less than a quarter when \(r=4\), making the computational cost of the methods proposed in this paper comparable to that of the other schemes in the literature. As expected, setting \(r=10\) reduces the computational time further.

-

All the approximations obtained with the sequences of parameters given by Algorithms 1 and 2 are more accurate than the solutions of the schemes from the literature and of EC(0) and MC(0,0). However, schemes EC(\(\alpha ^*\)) and MC(\(\beta ^*,\gamma ^*\)), where the parameters are obtained by brute force, are more accurate still. This shows that there exist sequences of parameters yielding higher accuracy than those obtained using the new algorithms. This is because our approach minimizes (8), which is an estimate of the local error: reducing the local error at each step does not necessarily minimize the global error.

-

The accuracy of the solutions obtained using the two new algorithms is only marginally affected by the different choices of \(r<\varDelta t/\varDelta x\). However, for \(r=10>\varDelta t/\varDelta x\) the spatial error has a visible effect on the sequences of parameters obtained, as is evident in Figs. 2 and 3. In this test the accuracy of the solution is still only marginally affected, but this may not hold in general. In agreement with Remark 4, setting \(r=4\) gives the best compromise between reliability and speed of computation.

-

Algorithms 1 and 2 with \(r=4\) are significantly more efficient than the schemes from the literature: while computation times are comparable, the solution error is roughly three times lower.

One-soliton problem for KdV (16). Left: Initial condition (dashed line) and solution of EC(\(\overline{\alpha }^*_{4})\). Right: Pointwise error for different schemes at the final time

In the left plot of Fig. 4 we show the initial profile and, as an example, the solution of EC(\(\overline{\alpha }_{4}^*\)) at the final time. In the right plot we show the pointwise absolute error of EC(\(\overline{\alpha }_{4}^*\)), in comparison with EC(0), EC(\(\alpha ^*\)), and the narrow box scheme. For all schemes, the bulk of the error is located around the final position of the soliton and is due to a delay introduced by all numerical schemes. However, the maximum error of EC(\(\overline{\alpha }_{4}^*\)) is less than 40% of that given by EC(0) and by the narrow box scheme, and only slightly larger than the error given by EC(\(\alpha ^*\)).

As a second numerical test we consider the interaction of two solitons over \([a,b]=[-30,30]\) up to time \(T=15\). The initial condition is obtained from the exact solution on \(\mathbb {R}\),

with

We set

In Figs. 5 and 6 we show the sequences of parameters obtained at each time step of schemes EC and MC, respectively. Again, all the sequences obtained by solving the optimization problems on a grid up to four times coarser are very close (within a distance of \(6\cdot 10^{-3}\)) to those obtained on the computational grid.

We notice that the parameters change rapidly when the solitons interact, but vary only slowly before and after the interaction.

In Table 2 we compare the accuracy, efficiency and conservation properties of different schemes. The observations made for the motion of a single solitary wave also hold in this case. As before, the computational times of the new algorithms with \(r=4\) are comparable to those of the methods from the literature, and their greater efficiency is reflected in much lower solution errors.

Two-soliton problem for KdV (16). Left: Initial condition (dashed line) and solution of MC\((\beta _{4,n}^*,\gamma _{4,n}^*)_{n\ge 0}\). Right: Pointwise error for different schemes at the final time

In the left plot of Fig. 7, we show the initial condition together with the solution of the sequence MC\((\beta _{4,n}^*,\gamma _{4,n}^*)_{n\ge 0}\). In the right plot, we show the pointwise error of MC\((\beta _{4,n}^*,\gamma _{4,n}^*)_{n\ge 0}\), MC(0,0), and MC\((\beta ^*,\gamma ^*)\) at the final time. We omit the results for the multisymplectic and narrow box schemes, as they are similar to those of MC(0,0). The methods obtained using the approaches introduced in this paper are very accurate around the final location of the faster soliton, where the bulk of the error given by MC(0,0) and by the schemes from the literature is located. However, their error around the slower soliton is larger, and small oscillations can be seen far from the solitons. MC\((\beta ^*,\gamma ^*)\) gives a slightly smaller error around the slower soliton, but introduces more oscillations.

5.2 Nonlinear Heat Equation

In this section we apply the new algorithms to the nonlinear heat Eq. (25) using the methods CS(\(\lambda \)) from Sect. 4.2.

We consider here two benchmark problems whose (weak) energy solutions are not classical solutions, since each has at least one point of non-differentiability. Although the analysis in Sect. 3 assumes a smooth solution, we show that the strategies introduced in this paper are also effective in this setting.

In order to converge, explicit and implicit finite difference methods for (25) in the literature typically require \(\varDelta t=\mathcal {O}(\varDelta x^2)\) and \(\varDelta t=\mathcal {O}(\varDelta x)\), respectively [9, 10, 18, 19, 22]. Under such small time-step restrictions, the Crank-Nicolson method applied to the semidiscretization (31) turns out to be very accurate and efficient. However, it fails to converge for the two benchmark tests in this section with \(\varDelta t>\varDelta x\). Such instabilities may also occur when using a CS\((\lambda )\) method with the default choice of the parameter, \(\lambda =0\). In contrast, we find that the two algorithms proposed in Sect. 3, based on the optimization of a defect-based error estimate, are able to avoid these instabilities.

The first benchmark problem is given by Eq. (25) with initial and boundary conditions,

The solution of this problem is a linear wave travelling in an undisturbed medium with unit speed,

We discretize the initial boundary value problem described by (25) and (35), choosing \(\varDelta x=0.025\) and \(\varDelta t=0.12\). The graphs in Fig. 8 show the sequences of parameters obtained from Algorithms 1 and 2 at each time step, where the search for the optimal parameters is carried out after applying the projection (14) with spatial resolutions \(r\varDelta x\), \(r=1,2,4,10\). Although the values of the parameters given by the two algorithms differ over the first time steps, for the smallest values of r the two procedures converge to the same value.

In Table 3 we compare the different choices for the parameter \(\lambda \). The error in the conservation laws is calculated according to (33) and the figures in the table show that these are preserved to machine accuracy by all the methods that use a fixed value of the free parameter.

The two algorithms introduced in this paper give equally accurate solutions. By increasing r, the computation time decreases, while the accuracy in the solution is only marginally affected. Moreover, both new algorithms avoid instabilities that occur when \(\lambda =0\). This is shown on the left of Fig. 9 where, as an example, we show the solution of CS(\(\overline{\lambda }^*_{4}\)) (marks at every tenth grid point) and CS(0) at the final time.

On the right of Fig. 9, we plot the pointwise errors given by CS(\(\lambda ^*\)) and CS(\(\overline{\lambda }^*_{4}\)). The error obtained with \(\lambda =\overline{\lambda }^*_{4}\) is almost entirely located at the interface, where the solution is non-differentiable. Fixing \(\lambda =\lambda ^*\), the \(L^2\) error is lower and the solution is more accurate around the interface. However, spurious oscillations appear where the true solution is smooth.

The second benchmark problem is (25) with

where \(f_+=\max (f,0).\) The solution of this problem is the Barenblatt profile,

This solution has compact support and is not differentiable at the interface points, which move outward at a finite speed. We solve this problem with \(\varDelta x=0.02\) and \(\varDelta t=0.09\).
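As a rough indication of how far these two tests are from the usual restrictions, and ignoring the (unspecified) constants in \(\varDelta t=\mathcal {O}(\varDelta x^2)\) and \(\varDelta t=\mathcal {O}(\varDelta x)\), the step sizes of the two benchmark problems give

$$\begin{aligned} \text{travelling wave:}\quad &\frac{\varDelta t}{\varDelta x^2}=\frac{0.12}{(0.025)^2}=192,\qquad \frac{\varDelta t}{\varDelta x}=\frac{0.12}{0.025}=4.8,\\ \text{Barenblatt profile:}\quad &\frac{\varDelta t}{\varDelta x^2}=\frac{0.09}{(0.02)^2}=225,\qquad \frac{\varDelta t}{\varDelta x}=\frac{0.09}{0.02}=4.5, \end{aligned}$$

so the time steps exceed \(\varDelta x^2\) by more than two orders of magnitude and \(\varDelta x\) by a factor between four and five, within the range \(4<\varDelta t/\varDelta x<10\) stated at the beginning of this section.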

We show in Fig. 10 that in this case the two proposed algorithms generate sequences of parameters that approach a small negative value for all the considered values of r.

Table 4 shows that all the schemes preserve a discrete version of the global invariants. When the value of the parameter is fixed during the iteration, this is a consequence of the preservation of the local conservation laws. When a sequence of different parameters is used, this is instead due to the zero boundary conditions (see Remark 10). Although in this case the conservation laws are not preserved locally, the error in the solution is the lowest.

The figures in Table 4 show that with increasing r the computation time decreases, while the accuracy is not significantly affected, even for \(r=10\). This is a consequence of the fact that the sequences of parameters obtained for different values of r quickly approach each other after just a few time steps (see Fig. 10). Once again, Fig. 11 (left) shows that the sequence of parameters \(\{\lambda _{4,n}^*\}_{n\geqslant 0}\) obtained from Algorithm 1 (marks at every tenth grid point) avoids the numerical instability that occurs under the default choice, \(\lambda =0\).

On the right half of Fig. 11 we show the solution error of CS(\(\lambda _{4,n}^*\))\(_{n\geqslant 0}\) and of CS(\(\lambda ^*\)). The solution error obtained using the sequence of parameters given by Algorithm 1 is mainly located at the points where the solution is not differentiable. Although \(\lambda =\lambda ^*\) minimizes the \(L^2\) norm of the error with respect to any other fixed value of \(\lambda \), we notice that the error at the interface can be further reduced by changing the parameter at every time-step. Moreover, for \(\lambda =\lambda ^*\), relatively large components of error appear near \(\pm 2.5\) where the solution is otherwise smooth. These are not visible in the solution errors obtained using either the adaptive sequences given by Algorithm 1 or the fixed parameters given by Algorithm 2.

NLH (25) with initial and boundary conditions (36). Exact and numerical solutions of CS(\(\lambda \)), (28), with \(\lambda =0\) and \(\lambda =\{\lambda _{4,n}^*\}_{n\geqslant 0}\) (left). Solution error for \(\lambda =\{\lambda _{4,n}^*\}_{n\geqslant 0}\) and \(\lambda =\lambda ^*\) (right)

6 Conclusions

In this paper we have proposed two approaches for identifying an optimal method in a parameter-dependent family of numerical schemes, based on a minimization of the defect as an estimate of the local error. The first approach uses different (adaptive) values of the parameters at every time step. In the second approach, fixed values of the parameters are derived from a sequence. The latter approach does not compromise the parameter-dependent conservation properties of geometric integrators.
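The idea behind the first approach can be illustrated on a toy problem far simpler than the PDE schemes of Sect. 4. The sketch below is our own illustration, not the estimate (8) or either algorithm of this paper: it takes the one-parameter \(\theta \)-method for the scalar test equation \(y'=\lambda y\), builds the cubic Hermite interpolant of each step, and estimates the local error by integrating its defect \(p'(t)-\lambda p(t)\) with Simpson's rule (the defect vanishes at both ends of the step). The parameter \(\theta \) is then chosen at each step by a brute-force grid search; the function names and the grid of \(\theta \) values are hypothetical.

```python
import numpy as np


def theta_step(y0, h, lam, theta):
    # One step of the theta-method for y' = lam*y:
    # y1 = y0 + h*((1 - theta)*lam*y0 + theta*lam*y1).
    return y0 * (1.0 + (1.0 - theta) * h * lam) / (1.0 - theta * h * lam)


def defect_estimate(y0, y1, h, lam):
    # Cubic Hermite interpolant p on [t_n, t_n + h] matching y and f(y) = lam*y
    # at both end points, so the defect d = p' - lam*p vanishes there.
    f0, f1 = lam * y0, lam * y1
    p_mid = 0.5 * (y0 + y1) + h / 8.0 * (f0 - f1)
    dp_mid = 1.5 * (y1 - y0) / h - 0.25 * (f0 + f1)
    d_mid = dp_mid - lam * p_mid
    # Simpson's rule with zero end-point defects: integral of d ~ (2h/3)*d_mid,
    # which approximates the local error to leading order.
    return abs(2.0 * h / 3.0 * d_mid)


def optimal_theta(y0, h, lam, thetas=None):
    # Brute-force minimization of the defect-based estimate over theta.
    if thetas is None:
        thetas = np.linspace(0.0, 1.0, 1001)
    est = [defect_estimate(y0, theta_step(y0, h, lam, th), h, lam) for th in thetas]
    return float(thetas[int(np.argmin(est))])


if __name__ == "__main__":
    lam, h, y = -1.0, 0.5, 1.0
    for n in range(4):
        th = optimal_theta(y, h, lam)  # parameter chosen afresh at every step
        y = theta_step(y, h, lam, th)
        print(n, th, y, np.exp(lam * h * (n + 1)))
```

For this linear test problem the estimated defect vanishes exactly when the amplification factor of the step equals the (2,2) Padé approximant of \(e^{h\lambda }\); for \(h\lambda =-1/2\) this gives \(\theta =13/24\approx 0.542\) at every step, which is noticeably more accurate than the trapezoidal choice \(\theta =1/2\). For nonlinear problems the selected value would in general change from step to step.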

The new algorithms solve an optimization problem at each time-step in order to identify the optimal values of the parameter. In principle, this can increase the computational cost of the original method prohibitively. However, in the large time-step regime, it is possible to solve the optimization problem on coarser spatial grids without compromising the accuracy of the optimal parameters, significantly decreasing the computational time.

The new approaches have been applied to families of schemes for the KdV equation and a nonlinear heat equation that preserve local conservation laws. The proposed numerical tests show that, on the one hand, the new strategies effectively identify very accurate methods in each considered family of schemes. On the other hand, introducing a coarse grid for the solution of the optimization problem greatly improves the efficiency of the new strategies. Overall, the computational time is comparable to that of other schemes in the literature, while the proposed approach is considerably more accurate.

Data Availability

All data generated or analyzed during this study are included in this manuscript.

References

Ascher, U.M., McLachlan, R.I.: Multisymplectic box schemes and the Korteweg-de Vries equation. Appl. Numer. Math. 48, 255–269 (2004). https://doi.org/10.1016/j.apnum.2003.09.002

Ascher, U.M., McLachlan, R.I.: On symplectic and multisymplectic schemes for the KdV equation. J. Sci. Comput. 25, 83–104 (2005). https://doi.org/10.1007/s10915-004-4634-6

Auzinger, W., Hofstätter, H., Koch, O., Kropielnicka, K., Singh, P.: Time adaptive Zassenhaus splittings for the Schrödinger equation in the semiclassical regime. Appl. Math. Comput. 362, 124550 (2019). https://doi.org/10.1016/j.amc.2019.06.064

Auzinger, W., Hofstätter, H., Koch, O.: Symmetrized local error estimators for time-reversible one-step methods in nonlinear evolution equations. J. Comput. Appl. Math. 356, 339–357 (2019). https://doi.org/10.1016/j.cam.2019.02.011

Auzinger, W., Koch, O., Thalhammer, M.: Defect-based local error estimators for splitting methods, with application to Schrödinger equations, Part I: the linear case. J. Comput. Appl. Math. 236(10), 2643–2659 (2012). https://doi.org/10.1016/j.cam.2012.01.001

Auzinger, W., Koch, O., Thalhammer, M.: Defect-based local error estimators for splitting methods, with application to Schrödinger equations, Part II: higher-order methods for linear problems. J. Comput. Appl. Math. 255, 384–403 (2013). https://doi.org/10.1016/j.cam.2013.04.043

Berljafa, M., Güttel, S.: The RKFIT algorithm for nonlinear rational approximation. SIAM J. Sci. Comput. 39, A2049–A2071 (2017). https://doi.org/10.1137/15M1025426

Blanes, S., Casas, F., Murua, A.: Splitting and composition methods in the numerical integration of differential equations. Bol. Soc. Esp. Mat. Apl. 45, 89–145 (2008). https://doi.org/10.1016/j.cam.2010.06.018

Del Teso, F.: Finite difference method for a fractional porous medium equation. Calcolo 51, 615–638 (2014). https://doi.org/10.1007/s10092-013-0103-7

Del Teso, F., Endal, J., Jakobsen, E.: Robust numerical methods for nonlocal (and local) equations of porous medium type. Part II: schemes and experiments. SIAM J. Numer. Anal. 56, 3611–3647 (2018). https://doi.org/10.1137/18M1180748

Descombes, S., Thalhammer, M.: The Lie-Trotter splitting method for nonlinear evolutionary problems involving critical parameters. An exact local error representation and application to nonlinear Schrödinger equations in the semi-classical regime. IMA J. Numer. Anal. 33, 722–745 (2013). https://doi.org/10.1093/imanum/drs021

Enright, W.: A new error-control for initial value solvers. Appl. Math. Comput. 31, 288–301 (1989). https://doi.org/10.1016/0096-3003(89)90123-9

Frasca-Caccia, G., Hydon, P.E.: Locally conservative finite difference schemes for the modified KdV equation. J. Comput. Dyn. 6, 162–179 (2019). https://doi.org/10.3934/jcd.2019015

Frasca-Caccia, G., Hydon, P.E.: Simple bespoke preservation of two conservation laws. IMA J. Numer. Anal. 40, 1294–1329 (2020). https://doi.org/10.1093/imanum/dry087

Frasca-Caccia, G., Hydon, P.E.: Numerical preservation of multiple local conservation laws. Appl. Math. Comput. 403, 126203 (2021). https://doi.org/10.1016/j.amc.2021.126203

Frasca-Caccia, G., Hydon, P.E.: A new technique for preserving conservation laws. Found. Comput. Math. 22, 477–506 (2022). https://doi.org/10.1007/s10208-021-09511-1

Göckler, T., Grimm, V.: Uniform approximation of \(\varphi \)-functions in exponential integrators by a rational Krylov subspace method with simple poles. SIAM J. Matrix Anal. Appl. 35(4), 1467–1489 (2014). https://doi.org/10.1137/140964655

Graveleau, J.L., Jamet, P.: A finite difference approach to some degenerate nonlinear parabolic equations. SIAM J. Appl. Math. 20, 199–223 (1971). https://doi.org/10.1137/0120027

Gurtin, M.E., MacCamy, R.C., Socolovsky, E.: A coordinate transformation for the porous media equation that renders the free-boundary stationary. Quart. Appl. Math. 47, 345–358 (1984). https://doi.org/10.1090/QAM/757173

Güttel, S.: Rational Krylov approximation of matrix functions: numerical methods and optimal pole selection. GAMM-Mitteilungen 36(1), 8–31 (2013). https://doi.org/10.1002/gamm.201310002

Higham, D.J.: Robust defect control with Runge–Kutta schemes. SIAM J. Numer. Anal. 26(5), 1175–1183 (1989). https://doi.org/10.1137/0726065

Hoff, D.: A linearly implicit finite-difference scheme for the one-dimensional porous medium equation. Math. Comp. 45, 23–33 (1985). https://doi.org/10.2307/2008047

Hydon, P.E., Mansfield, E.L.: A variational complex for difference equations. Found. Comput. Math. 4, 187–217 (2004). https://doi.org/10.1007/s10208-002-0071-9

Kupershmidt, B.A.: Discrete Lax equations and differential-difference calculus, vol. 123. Société mathématique de France (1985)

McLachlan, R.I., Quispel, G.R.W.: Splitting methods. Acta Numer. 11, 341–434 (2002). https://doi.org/10.1017/S0962492902000053

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, New York (2006). https://doi.org/10.1007/978-0-387-40065-5

Olver, P.J.: Applications of Lie Groups to Differential Equations, vol. 107, 2nd edn. Springer Science & Business Media, New York (1993). https://doi.org/10.1007/978-1-4684-0274-2

Omelyan, I., Mryglod, I., Folk, R.: Symplectic analytically integrable decomposition algorithms: classification, derivation, and application to molecular dynamics, quantum and celestial mechanics simulations. Comput. Phys. Commun. 151(3), 272–314 (2003). https://doi.org/10.1016/S0010-4655(02)00754-3

Schaback, R.: Convergence analysis of the general Gauss-Newton algorithm. Numer. Math. 46, 281–309 (1985). https://doi.org/10.1007/BF01390425

Shampine, L.F.: Error estimation and control for ODEs. J. Sci. Comput. 25, 3–16 (2005). https://doi.org/10.1007/s10915-004-4629-3

Singh, P.: Sixth-order schemes for laser-matter interaction in the Schrödinger equation. J. Chem. Phys. 150(15), 154111 (2019). https://doi.org/10.1063/1.5065902

Acknowledgements

The authors would like to thank the Isaac Newton Institute for Mathematical Sciences for support and hospitality during the programme Geometry, Compatibility and Structure Preservation in Computational Differential Equations, when work on this paper was undertaken. This work was supported by EPSRC grant number EP/R014604/1. The first author is a member of the INdAM Research group GNCS.

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Frasca-Caccia, G., Singh, P. Optimal Parameters for Numerical Solvers of PDEs. J Sci Comput 97, 11 (2023). https://doi.org/10.1007/s10915-023-02324-0


DOI: https://doi.org/10.1007/s10915-023-02324-0

Keywords

- Parameter optimization

- Finite difference methods

- Conservation laws

- KdV equation

- Nonlinear diffusion equation