Abstract

A novel optimization procedure for the generation of stability polynomials of stabilized explicit Runge–Kutta methods is devised. Intended for semidiscretizations of hyperbolic partial differential equations, the herein developed approach allows the optimization of stability polynomials with more than hundred stages. A potential application of these high degree stability polynomials are problems with locally varying characteristic speeds as found for non-uniformly refined meshes and spatially varying wave speeds. To demonstrate the applicability of the stability polynomials we construct 2N-storage many-stage Runge–Kutta methods that match their designed second order of accuracy when applied to a range of linear and nonlinear hyperbolic PDEs with smooth solutions. These methods are constructed to reduce the amplification of round off errors which becomes a significant concern for these many-stage methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Stabilized Explicit Runge–Kutta Methods

Explicit Runge–Kutta methods are commonly considered the default choice for the integration of hyperbolic partial differential equations (PDEs). In contrast to implicit methods, explicit methods require only evaluations of the right-hand side instead of solving a potentially nonlinear system of equations. In fact, in the context of hyperbolic PDEs typically nonlinear fluxes are of interest. Explicit methods come with the drawback that the maximum stable timestep needs to be significantly reduced which is in the context of (hyperbolic) PDEs commonly referred to as the CFL condition [1].

To increase computational efficiency, stabilized explicit Runge–Kutta methods have been introduced already in the 1960s [2,3,4] targeting semidiscretizations

of parabolic PDEs. The approach of discretizing the spatial derivatives and leaving time continuous at first is commonly referred to as the Method of Lines approach. Although originally termed for finite difference based spatial discretizations [5] the name persisted and is used nowadays to comprise different discretization techniques like finite elements, finite volumes, and discontinuous Galerkin.

The central idea of stabilized explicit Runge–Kutta methods is to use additional stages primarily to improve the stability properties of the scheme, i.e., allow for larger timesteps. In particular, one usually settles for a moderate order of accuracy and uses the additional degrees of freedom to improve the stability properties of the scheme for a certain spectrum [6,7,8]. The fact that the eigenvalues of the Jacobian

are for parabolic PDES distributed on the negative real axis enables a successful construction of stabilized explicit Runge–Kutta methods optimized for this special case, see for instance the reviews [9, 10]. The fact that the optimal stability polynomial \(P_{S,1}(z)\) of first order accurate methods with S stages is given by the shifted Chebyshev polynomial of first kind \(T_S(z)\) [2, 3, 11, 12] serves as a valuable guidance to construct higher order approximately optimal stability polynomials [13,14,15,16,17,18] which stand on solid ground due to proven existence and uniqueness of optimal stability polynomials with maximum (negative) real axis inclusion [19].

For the purely hyperbolic case, i.e., for eigenvalues \(\lambda \in \sigma (J)\) exclusively on the imaginary axis also results for first order accurate methods in terms of Chebyshev polynomials of first kind are known [16, 20,21,22]. Approximations to higher order accurate stability polynomials can be found in [23].

For the more general case, i.e., where not only either the real or complex line are of interest, but rather a two-dimensional part of the complex plane, concrete results for stability polynomials are rare. To the best of our knowledge, only for disks results for first [24] and second [25, 26] order accurate optimal stability polynomials of variable degree S are available. In contrast, one usually has to resort to numerical optimization of the stability polynomials. A nonextensive list includes optimized methods for certain geometrically defined spectra [27, 28], particular equations [29,30,31,32] and spatial discretization techniques [33,34,35].

A general framework for maximizing the region of absolute stability of an explicit Runge–Kutta methods for a particular spectrum has been developed in [36] which has been extensively used [33, 34, 37,38,39,40,41,42,43,44]. The approach presented in [36] optimizes the coefficients \(\varvec{\alpha }\in {\mathbb {R}}\) of the stability polynomial

where \(\{q_j(z)\}_{j=0,\dots , S}\) forms a basis for the complex-valued polynomials with real coefficients. In the simplest case, \(q_j\) are given by the monomials \(z^j\) which lead for higher degrees S to an increasingly ill-conditioned optimization problem. This choice of basis and optimization in monomial coefficients is also employed in [27, 30, 31] where polynomials are optimized up to maximum degrees 14, 7, and 8, respectively. If a suitable basis \(\{q_j(z)\}_{j=0,\dots , S}\) for the spectrum of interest can be found, stability polynomials of high degrees (up to \(S=80\) are reported in [36]) can be constructed. In [36], suitable bases are proposed for parabolic (negative real-line), purely hyperbolic (imaginary axis) and disk-spectra. In general, however, finding such a basis for a complicated real-world spectrum is a challenging task. Consequently, the works [33, 34, 37,38,39,40,41,42,43,44] employ the monomial basis which limits the maximum degree of the stability polynomials to 16-20.

In this work, we develop a formulation that avoids the search of a suitable basis. In particular, instead of parametrizing the polynomial in terms of the basis-coefficients, the stability polynomial P(z) is characterized by the roots of \(\big (P(z)-1\big )/z\). This can be seen as a generalization of the approach employed in [28] wherein the stability polynomial is described by its extrema. Although this approach leads to a highly nonlinear formulation, it will be shown that for a relevant class of spectra an excellent initial guess can be supplied allowing the successful optimization of the highly nonlinear problem. By doing so, we are able to optimize stability polynomials of degrees larger than 100, which to the best of our knowledge is an unprecedented success for spectra of hyperbolic semidiscretizations. We emphasize that our approach involves for an efficient optimization the application of the coefficient-based method developed in [36] which forms thus an integral building block of our algorithm.

A potential application of these high degree stability polynomials are the recently published Paired-Explicit Runge–Kutta (P-ERK) methods [39, 40, 45] which achieve local time stepping effects by combining a set of stabilized methods. In particular, due to their construction as partitioned Runge–Kutta methods [46] they ensure consistency and conservation which are not trivially satisfied by other classical multirate methods [47]. Being based on the optimization approach developed in [36], the stability polynomials and corresponding P-ERK methods are currently limited to 16 stages. The availability of higher degree polynomials allows for a potentially even more efficient treatment of e.g. locally refined meshes or varying characteristic speeds. In this work, however, we focus on the optimization of high degree stability polynomials and methods that can be directly constructed thereof. The application to P-ERK methods is left for future work.

The paper is organized as follows. The motivation for the new optimization approach is presented in Sects. 2 and 3, based on findings for the proven optimal stability polynomials for disk-like spectra. The necessary details required for successful optimization of the problem are discussed in Sect. 4 which is followed by a discussion of the implementation in Sect. 5. In Sect. 6 a couple of high degree optimized stability polynomials are presented. Extensions of the original approach to non-convex spectra are covered in Sect. 7 and applied in Sect. 8. The construction of actual Runge–Kutta methods from the high-degree stability polynomials follows in Sect. 9 with a special focus on internal stability. Section 10 presents the application of the many-stage methods to linear and nonlinear problems. Section 11 concludes the paper.

2 Note on Terminology

For the sake of readability, we will refer to the shifted Chebyshev polynomials of first kind simply as Chebyshev polynomials. As the extreme points of the (shifted) Chebyshev polynomials (of first kind) play a crucial role in this work, we will call them in the interest of brevity simply Chebyshev extreme points. Furthermore, we will refer to the the Chebyshev extreme points with extremal value \(+1\) as positive Chebyshev extreme points.

Since a significant part of the paper centers around stability polynomials from which Runge–Kutta methods of certain order may be constructed, we will say that a polynomial of degree S is of order/accuracy p whenever the first \(i= 0, \dots , p\) coefficients match the first \(i=0, \dots , p\) coefficients of the Taylor series of the exponential.

3 Preliminaries

Runge–Kutta methods are single step methods, i.e., when applied to the constant coefficient linear initial value problem (IVP)

the new approximation \({\varvec{u}}_{n+1}\) can be computed from the previous iterate \({\varvec{u}}_{n}\) as

Here, R(z) denotes the stability function [6] of the Runge–Kutta method. For implicit methods R(z) is a rational function, while for explicit methods R(z) is a polynomial with real coefficients

By comparing (2.2) to the solution \({\varvec{u}}(t_{n+1}) = \exp (A \Delta t) {\varvec{u}}(t_n)\) of (2.1b) it follows from the definition of the exponential that for a \(p'\)th order (linearly) consistent approximation the coefficients \(\alpha _j\) need to satisfy

It is customary to define the family of polynomials with real coefficients over the complex numbers of degree S and corresponding order of accuracy p as \({\mathcal {P}}_{S,p}\).

Due to the fact that the stability function R(z) is a polynomial P(z) for explicit methods it follows that the region of absolute stability

is necessarily bounded. We further define the boundary of the region of absolute stability as

which will also be called in brief stability boundary.

3.1 Optimization Objective

The optimization objective is now to find the maximum possible timestep \(\Delta t_{S,p}^\star \) for a polynomial of degree S corresponding to a method with order of accuracy p:

Here, \(\{ \lambda ^{(m)} \}_{m=1, \dots , M} = \sigma (J)\) are the eigenvalues of the Jacobian (1.2) where the ordinary differential equation (ODE) system (1.1) corresponds in this work to the semidiscretization of PDEs describing typically physical processes. As a consequence, we assume that there are no amplifiying modes among the eigenvalues \(\lambda ^{(m)}\) corresponding to generation of energy, thus all eigenvalues should have non-positive real part:

It should be stressed that the maximum possible timestep \(\Delta t^\star \) is the single optimization target in this work. In particular, we do not focus on the reduction of dispersion or dissipation errors as done for instance in [48,49,50] or other objectives like maximum strong stability preserving (SSP) coefficients [34, 51, 52].

3.1.1 Convex Problem Formulation

As noted in [36] (2.7) is for fixed timestep \(\Delta t\) a convex optimization problem when parametrizing the stability polynomial \(P_{S,p}\) in terms of the coefficients \(\varvec{\alpha }\):

Paired with an outer bisection to determine the timestep, (2.9) leads to an efficient optimization routine that can be solved with standard software such as SeDuMi [53] or ECOS [54] for second order cone programs. The downside of formulation (2.9) is that, unless a suitable basis \(\{q_j(z)\}_{j=0,\dots , S}\) for the stability polynomial can be found, one has to resort to simple choices such as the monomials. For these, the problem becomes increasingly ill-conditioned for higher degrees since the monomial coefficients scale essentially as \(\alpha \sim 1/(j!)\), i.e., they become diminishingly small. Rescaling the coefficients as \(\widetilde{\alpha _j} :={\alpha _j}/{j!}\) improves this issue slightly, but ultimately cannot solve this difficulty fundamentally. This is due to the limited precision of floating point numbers which cannot meet the high sensitivity of higher degree polynomials with respect to the coefficients. This limits the monomial-based version of (2.9) in standard double precision to about 16 to 20 stages for general spectra. We remark that the usage of higher precision datatypes can mitigate this issue.

Avoiding the difficulty of finding a suitable basis that leads to a well-conditioned problem is the key achievement of our approach motivated in the next subsection.

3.2 Central Observation

The linear consistency requirement (2.4) implies that every at least first order linearly consistent stability polynomial \(P_{S,1}(z)\) can be written as

where the lower degree polynomial \({\widetilde{P}}_{S-1}\) is parametrized by its complex-conjugated roots \(\widetilde{{\varvec{r}}} \in {\mathbb {C}}^{S-1}\). Clearly, the roots of the lower-degree polynomial (called from now on pseudo-extrema of the original polynomial) form a subset of the stability boundary \(\partial {\mathcal {S}}\):

This observation is particularly useful when the shape of the stability boundary \(\partial {\mathcal {S}}\) can be a-priori roughly estimated. For optimized polynomials of higher degrees, \(\partial {\mathcal {S}}\) will follow the spectrum closely, thus one can use an envelope of the spectrum itself as a reasonable approximation to \(\partial {\mathcal {S}}\). This is discussed in Sects. 3.2 and 7 in more detail.

We remark that the formulation (2.10) bears some similarity to the basis \(q_j = (1 + z/S)^j\) used in [36] for generating stability polynomials for circular spectra.

4 Motivating the Optimization in Pseudo-Extrema

The optimization problem (2.7) for stability polynomials in terms of complex-conjugated pseudo-extrema reads

This is a highly nonlinear optimization problem for which we need an excellent initial guess in order to have a reasonable chance to reach the global optimum. In the next section, we will thoroughly motivate a suitable assumption on the distribution of the pseudo-extrema based on results of proven optimal stability polynomials.

4.1 Pseudo-Extrema of Proven Optimal Stability Polynomials for Disks

We direct our attention to examining the pseudo-extrema of known optimal stability polynomials \(P_{S,p}(z)\) for circular spectra. For the sake of completeness, the case for parabolic spectra, i.e., eigenvalues on the negative real line, is provided in “Appendix A.1”.

4.1.1 First Order Consistent Stability Polynomial

As shown in [24], the optimal first order stability polynomial with largest disk inclusion is given by a sequence of Forward Euler steps:

For this stability polynomial it holds that the disk with radius S

is contained in the region of absolute stability \({\mathcal {S}}\). Consider the positive Chebyshev extreme points \(x_j \in {\mathbb {R}}: T_S(x_j) = 1\) of the S degree polynomial on the here relevant \([-2S, 0]\) interval which are given by

We give two motivations for considering the Chebyshev extreme points in connection with the pseudo-extrema. First, as outlined in “Appendix A” the pseudo-extrema of (shifted) Chebyshev polynomials are trivially given by the (shifted) positive Chebyshev extreme points. This is of significance since the first order optimal stability polynomial for parabolic spectra is precisely given by the shifted Chebyshev polynomial of first kind. Second, we recall that the Chebyshev extreme points \(x_j\) can be perceived as the real part of the set of points \(z_j\) which partition the circle into segments with equal arc length [55]. In that case, the imaginary part \(y_j\) of \(z_j\) can be computed as

since \(\sin \left( \frac{2j \pi }{S} \right) \ge 0, j = 0, \dots , S/2\). Consequently, the points \(z_j = x_j \pm i y_j\) with \(x_j, y_j\) defined as above lie on the stability boundary \(\partial {\mathcal {S}}\) and are thus a valid candidate for the pseudo-extrema. In fact, one can readily show that these points are indeed the pseudo-extrema of \(P^\text {Disk}_{S, 1}(z)\), as stated in the following theorem.

Theorem 1

The \(S-1\) pseudo-extrema of \(P^\text {Disk}_{S, 1}(z)\) are given by the positive Chebyshev extreme points with \(x_j \ne 0\) of \(T_S(1+z/S)\) with projection onto the circle with radius S centered at \((-S, 0)\).

Proof

We compute

As a consequence, we have that

where we use the fact that \(P_{S, 1}(0, \widetilde{{\varvec{r}}}) = 1\) which excludes \(j=0\) from the set of pseudo-extrema.

Remark 1

Note that for even stability polynomials we have one purely real pseudo extremum \({\widetilde{r}}_0 = -S\) and \(S-2\) complex conjugated pseudo-extrema. For odd stability polynomials, all pseudo-extrema are complex conjugated. This is in accordance with the motivating observation (2.11) where we established that the pseudo-extrema lie on the boundary of the region of absolute stability \(\partial {\mathcal {S}}\). We also note that the complex-conjugated pseudo-extrema of \(P^\text {Disk}_{S, 1}(z)\) correspond to the real pseudo-extrema with root-multiplicity two of the Chebyshev polynomials \(T_S(1+z/S^2)\), see “Appendix A”.

4.1.2 Second Order Consistent Stability Polynomial

For disks/circular spectra there is also an explicit formula for the optimal second order accurate polynomial known [25]. The polynomial

contains the maximum disk \(D_{S-1}\) in its region of absolute stability [25, 26]. As a side note, we realize that \(P_{S, 2}(z)\) is identical to the second order accurate Runge–Kutta Chebyshev method [56, 57] with infinite damping [58]. Furthermore, as mentioned in [58] \(P_{S, 2}(z)\) can be written as a sequence of forward Euler steps and belongs consequently to the family of total varitation diminishing (TVD)/SSP integrators [59, 60] with optimal SSP coefficient [61].

Theorem 2

The \(S-1\) pseudo-extrema of \(P^\text {Disk}_{S, 2}\) are given by the positive Chebyshev extreme points \(x_j \ne 0\) of \(T_S\big (1+z/(S-1)\big )\) when projected onto the circle with radius \(S-1\) centered at \(\big (-(S-1), 0 \big )\).

Proof

Analogous to proof of Theorem 1.

Remark 2

As for the first order accurate stability polynomial, we have for even S one real pseudo-extremum \({\widetilde{r}}_0 = -(S-1)\) and \(S-2\) complex conjugated pseudo-extrema and for odd S a set of \((S-1)/2\) exclusively complex conjugated pseudo-extrema.

The significance of these results is that the pseudo-extrema are distributed with exact equal arc length on the hull of the spectrum, and consequently with approximately equal arc length on the stability boundary for a finite number of stages S. In fact, in the limit \(S\rightarrow \infty \) the stability boundaries converge to the circle and thus the pseudo-extrema are asymptotically distributed with exact equal arc length on the stability boundary.

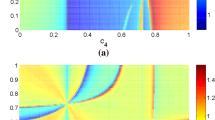

Especially higher degree stability polynomials have enough flexibility to fully adapt the stability boundary to the envelope of the spectrum. This is the case for even and odd polynomial degrees S as well as first and second order accurate polynomials. To illustrate this, we present Fig. 1 where the pseudo-extrema of second order accurate polynomials \(P^\text {Disk}_{S, 2}\) with degrees \(S=12\) and \(S=13\) are shown.

Optimal second order accurate stability polynomials \(P_{S, 2}(z)\) given by (3.11) for even (a) and odd degree (b) polynomial. The spectrum corresponds to the canonical first order Finite Volume Upwind/Godunov discretization [62] of the advection equation \(u_t + u_x = 0\) on the periodic \([-1, 1]\) domain discretized with 500 cells. In the even-degree case (a), the lower degree polynomial is odd and thus there is one pure real pseudo extremum at the left end of the spectrum. For odd polynomial degrees (b), the lower degree polynomial is of even-degree and we have only complex-conjugated pseudo-extrema. Note also that the segment crossing 0 is twice the length of the others, which follows from the fact that \(P_{S, p}(0) \equiv 1 \, \forall \, S, p \ge 1\), cf. (2.10)

4.2 Central Assumption

Based on the findings for the circle we make a central assumption regarding the pseudo-extrema of strictly convex spectra, i.e., where the eigenvalues define a strictly convex set of points in the complex plane. To establish an intuition for this type of spectra we present four exemplarily representatives falling into this category in Fig. 2. We assume that the pseudo-extrema of optimal stability polynomials are distributed with approximately equal arc length on the convex hull of strictly convex spectra.

4.2.1 Strictly Convex Spectra

To motivate this assumption extending the findings for proven optimal spectra of the circle, we display the pseudo-extrema of optimized \(P_{16,2}(z)\) polynomials for different spectra in Fig. 2. The optimal stability polynomials and optimal timesteps \(\Delta t_{16,2}\) have been computed using the approach developed in [36] and the pseudo-extrema are computed numerically.

We present the spectra of four nonlinear hyperbolic PDEs

-

1.

Burgers’ equation

-

2.

1D Shallow Water equations

-

3.

1D Ideal Compressible Magnetohydrodynamics (MHD) equations

-

4.

2D Compressible Euler equations

when discretized with the discontinuous Galerkin spectral element method (DGSEM) [63, 64] using Trixi.jl [65,66,67]. We use local polynomials of degrees 1, 2, 3 and a range of numerical fluxes and initial conditions, corresponding to both smooth and discontinuous solutions. For the sake of reproducibility we give the precise setups of the semidiscretizations corresponding to the displayed spectra in “Appendix B”.

As seen from Fig. 2 the pseudo-extrema are distributed with approximately equal arc length on the spectrum enclosing curve. Based on this observation we will initialize the pseudo-extrema such that they are distributed with equal arc length on the spectrum enclosing curve, which is assumed to be very close to the optimal stability boundary of higher degree polynomials. The deviations from the equal arc-length initialization are then courtesy of the optimizer.

The significance of the strictly convex spectra stems from the observation that the optimal stability boundary \(\partial {\mathcal {S}}^\star \) can be, for a sufficiently high polynomial degree S, reasonably well approximated with the convex hull of the eigenvalues.

This implies, in turn, that we can use the convex hull of the spectrum as an Ansatz for the initial placement of the pseudo-extrema \(\widetilde{{\varvec{r}}}\). Generalizing the proven results for the circle, the pseudo-extrema are then placed with equal arc length on the convex hull of the spectrum.

5 Detailed Formulation of the Optimization Problem

In this section we discuss the required details to successfully optimize a stability polynomial when parametrized by pseudo-extrema (3.1).

5.1 Constructing Complex-Conjugated Pseudo-Extrema

Since the lower-degree polynomial \({\widetilde{P}}_{S-1}\) has real coefficients, one has to ensure that the pseudo-extrema \(\widetilde{{\varvec{r}}}\) are complex-conjugated. In principle, this is a difficult task since throughout the optimization it might happen that a previously real pseudo-extremum is moved away from the real axis into the complex plane. Consequently, another real pseudo-extremum has to be set as the corresponding complex-conjugate, effectively changing the number of free optimization variables by introducing an additional constraint. To avoid this switch-like behaviour we set a-priori a number of real and complex-conjugated pseudo-extrema. Based on the previous results, we restrict ourselves for even-degree stability polynomials to one real and \(S-2\) complex conjugated pseudo-extrema and for odd stability polynomials to \(S-1\) complex conjugated ones.

5.2 Restriction to Second Quadrant

Due to symmetry around the real axis it suffices to consider either the second or third quadrant in the complex plane only. In this work, we choose the second quadrant, which implies that we consider only complex numbers with non-negative imaginary part. This implies that for real Jacobians (1.2) with complex-conjugated eigenvalues \(\lambda _j = \overline{\lambda _{j+1}}\) the stability requirement \(\vert P_{S}(\Delta t \lambda ^{(m)}) \vert \le 1\) needs only to be checked for the \(m = 1, \dots , {\widetilde{M}}\) eigenvalues with non-negative imaginary part, thereby also reducing the number of constraints.

5.3 Scaling of the Optimal Timestep

We briefly comment on the scaling of the optimal timestep \(\Delta t^\star \) with the degree of the stability polynomial S. It is well-known that the maximum admissible timestep scales for parabolic spectra covering the real-axis quadratic in S [9, 10]. In contrast, the one-sided imaginary axis inclusion of polynomials with real coefficients is bound by \(S-1\) as shown in [26]. In [23] the tighter maximum imaginary stability limit \(\sqrt{(S-1)^2 -1}\) was conjectured and proven for special cases in [68]. The proven optimal stability polynomials for the disk scale both linearly in S, see [24, 25]. As a consequence, we consider for general hyperbolic-parabolic spectra an asymptotically linear scaling

which is observed for instance in [33, 35, 36, 39, 42] as optimal. It should be mentioned that for \(S \sim {\mathcal {O}}(1)\) better than linear scalings are possible, as for these degrees qualitative changes in the shape of the stability boundary still occur. Once the stability boundary is closely adapted to the spectrum, the linear scaling is recovered.

It is immediately clear that once the timestep increases only linearly with more stages S there is no additional efficiency gained. In particular, we observe for the methods with a large number of stages additional complications due to internal stability, see Sect. 9.1 which render the application of an individual many-stage scheme unattractive. Nevertheless, many-stage stability polynomials are of great value in the context of multirate partitioned Runge–Kutta methods where an ensemble of methods is used. As mentioned in the introduction, the P-ERK schemes [39, 40, 45] would benefit from high degree stability polynomials which enable the efficient integration of systems even in the presence of locally restricted CFL numbers.

5.4 Constraints for Higher Order

For higher order p-consistent methods \(p-1\) equality constraints have to be added to the stability inequality constraints. To obtain second order consistent methods, for instance, the pseudo-extrema have to satisfy

which follows from Vieta’s formulas which establish a link between the roots and coefficients of a polynomial. More generally, for a stability polynomial that matches the first \(k=0, \dots , p\) coefficients of the exponential 1/(j!) we have the additional constraints

In this work we focus on second order accurate polynomials since for these the linear order constraints (4.3) imply second order convergence also for nonlinear system, without any additional constraints placed on the coefficients of the method [6, 46]. Nevertheless, we also constructed third order accurate stability polynomials for some selected cases.

5.5 Focus on Even-Degree Stability Polynomials

From now on we focus on even-degree polynomials since they lead to a slightly simpler formulation of the optimization problem. As we are interested in the many-stage case, focusing on either even or odd degree case is no severe restriction.

Even-degree stability polynomials come with two main advantages. First, we observe for all considered spectra (not only strictly convex) that the left endpoint of the spectrum, in our cases a real eigenvalue, is always selected as one pseudo-extremum, allowing for an even improved initialization. For strictly convex spectra, this is the only real pseudo-extremum, cf. Figs. 1a, 2, and 3. The remaining pseudo-extrema can then be initialized with equal arc length starting from the left endpoint of the spectrum.

Second, under the assumption motivated in Sect. 3.2 and the linear scaling of the optimal timestep Sect. 4.3 one can readily re-use the optimal pseudo-extrema of the S/2 degree polynomial as an advanced initialization for the S degree polynomial. In particular, every second pseudo-extremum of the S stability polynomial is essentially known, cf. Fig. 3.

Scaled spectrum \(\sigma _{12, 2}\) of the 1D linear advection equation \(u_t +u_x =0\) discretized through the DGSEM on [0, 1] using 16 cells/elements with DG polynomial degree 3 and Rusanov/Local Lax-Friedrichs flux. The pseudo-extrema of the 12 and 24 degree second order accurate polynomial are computed. To highlight that the relative positions of every second pseudo extremum \({\tilde{r}}_{2j-1}^{2S}\) agree with \({\tilde{r}}_j^S\), the former are scaled by 0.5 due to the linear scaling of the timestep according to (4.1)

5.6 Construction of the Convex Hull Interpolant

For strictly convex spectra the convex hull defines in the second quadrant actually a strictly concave (strictly convex in the third quadrant) function, which allows to uniquely map the real part of the pseduo-extrema to some corresponding imaginary part. To relax the very steep section near the imaginary axis we add the origin (0, 0) to the hull which helps to reduce the slope in that particular segment. This allows to carry out the optimization actually in terms of the real parts of the pseudo-extrema only, cutting the number of optimization variables in half.

Given the (strict) convex hull of the spectrum defined through a set of eigenvalues \(\mu ^{(j)} \in \sigma ^\text {Hull}\) and the expected maximum timestep \(\Delta t_{S,p}\), we define the scaled spectrum

Based on this we construct a piece-wise linear interpolant I as

While quadratic or the classic cubic splines would result in a continuously differentiable interpolant I, they lead to numerical artifacts in the very steep sections near the imaginary axis that are a common feature of the higher order discontinuous Galerkin (DG) spectra. For these parts it is observed that linear splines capture the boundary of the stability domain \(\partial {\mathcal {S}}\) best and should be used there. For the less steep regions quadratic and cubic splines were tested, but the increase in approximation quality compared to linear splines was for the majority of cases too small to justify the increased computational costs.

Global interpolation techniques were also tested but familiar problems like Runge’s phenomenon and large over/undershoots in the intervals between the eigenvalues are observed for a variety of approaches, including Bernstein polynomials or mapping to Chebyshev points [69].

It should be mentioned that the spectrum close to the imaginary axis becomes arbitrarily steep and thus the interpolation becomes less reliable in this part. This becomes an issue for stability polynomials of degrees \({\mathcal {O}}(100)\) since then pseudo-extrema are placed in that region. To solve this problem, one can work with a spectrum enclosing curve \(\gamma (\tau ): {\mathbb {R}} \rightarrow {\mathbb {C}}\) instead of a spectrum enclosing function \(I:{\mathbb {R}} \rightarrow {\mathbb {R}}\). By identifying the eigenvalues on the hull \(\mu ^{(j)} \in \sigma ^{\text {Hull}}\) with an arc length parameter \(0 \le \tau ^{(j)} \le 1\) the imaginary part can be computed as

which removes the vanishingly small difference in real parts from the denominator. This, however, comes at the expense that we have to do the interpolation (4.7) also for the real part of the pseudo-extrema, thus doubling the computational work load. As discussed in Sect. 10, methods with more than hundred stages introduce additional complications related to internal stability. Consequently, we tailor the optimization approach around the more robust \(S \sim {\mathcal {O}}(10)\) case and use the real parts of the pseudo-extrema as the optimization variables.

6 Implementation

In this section, we bundle the aforementioned details into the precise formulation of the solved problems.

6.1 Optimization Problems

Equipped with the interpolant I(x) the optimization of lower-degree polynomial can now be efficiently conducted. The optimization problem (2.7) is first conducted in the real parts \(x_j = \text {Re}({\widetilde{r}}_j)\) only, i.e.,

Note that the number of optimization variables N equals for even stability polynomials \(1 + \frac{S-2}{2} = \frac{S}{2}\) since we expect one real pseudo extremum and \(S-2\) complex conjugated ones.

Due to the sensitive dependence of the stability and order constraints on the pseudo-extrema we conduct a second optimization run where we allow for small corrections y in the imaginary parts of the pseudo-extrema:

Here, \(\varepsilon \) is the sole hyperparameter needed in our approach which could for all problems be set to \({\mathcal {O}} \left( 10^{-2}\right) \) with default choice 0.02. The second stage optimization problem reads then

The entire algorithm is given in Algorithm 1. In principle, it is also possible to conduct the optimization in real parts and imaginary corrections (5.3) only, i.e., without solving (5.1) first. In all considered cases, however, optimizing the real parts of the pseudo-extrema first led not only to a more robust, but also overall faster optimization process.

6.2 Feasibility Problems

Under assumption that we can indeed realize the optimal timestep \(\Delta t_{S,p}\) one can formulate the optimization problems (5.1), (5.3) actually as feasibility problems by fixing \(\Delta t = \Delta t_{S,p}\) and search for \({\varvec{x}}, {\varvec{y}}\) satisfying the stability and order constraints. The feasibility problem corresponding to (5.1) reads then

and the analogy to (5.3) is given by

In practice, the feasibility problems are solved significantly faster than their optimization counterparts. Note that no conceptual changes to Algorithm 1 are necessary, as the timestep handling in Lines Lines 11 and 16 can be omitted and (5.4) and (5.5) are solved in Lines 12 and 14, respectively.

6.3 Software

Both optimization and feasibility problem are solved via

Ipopt: Interioir Point OPTimizer [70], an optimization software designed for general non-linear problems. The herein used required linear solver is MUMPS: MUltifrontal Massively Parallel sparse direct Solver [71, 72] with optional package METIS [73]. Being a derivative based optimizer, Ipopt requires the Jacobian matrix and Hessian tensor of the constraints (and objective) to construct the Lagrangian of the problem. Here, the derivatives are computed algorithmically via dco/c++ [74] which requires only the adaption of some boilerplate code. Besides being much more computationally efficient than approximating the derivatives using finite differences, algorithmic differentiation provides exact derivatives (up to machine precision).

This is especially relevant in this case, since the stability constraints are very sensitive with respect to the pseudo-extrema, i.e., small deviations in \({\varvec{x}}\) can make the difference between a stable and an unstable method. This was observed when Matlab’s function for general nonlinear optimization, fmincon failed in finding the optimal solution. Therein, finite difference approximations of Jacobians and Hessians are used and consequently, the optimization is much less reliable than Ipopt combined with dco/c++.

In terms of complexity the proposed algorithms scales linearly in the number of constraints (\({\widetilde{M}}\) stability constraints at eigenvalues and \(p-1\) order constraints) and quadratic in the number of unknowns (pseudo-extrema with distinct real part) N.

It should be mentioned that Ipopt provides many options which can significantly speed up the optimization/feasible point search. Most notably, one should specify grad_f_constant yes which ensures that the gradient of the objective is only evaluated once and the Hessian not at all. An option for a constant objective function as it is the case for the feasibility problems is not supported, but can actually be implemented by changing only two lines of the Ipopt source code [75]. Furthermore, for many problems the Hessian of the Lagrangian may be successfully approximated using the Limited-memory BFGS method [76] which is activated by specifying the option hessian_approximation limited-memory, resulting in significant speed up. In terms of tolerances, we demand satisfaction of the constraints to machine precision by setting constr_viol_tol 1e-16.

Furthermore, in most cases it suffices to supply only the convex hull and a small subset of the eigenvalues instead of the entire spectrum. This can be seen from e.g. Fig. 4a, d

where the stability constraint at the “interior” eigenvalues is usually satisfied without being explicitly enforced. One has to ensure, however, that there is always a larger number of constraints than pseudo-extrema to prevent degenerate cases where the roots of the stability polynomial are placed exactly on the eigenvalues, thus allowing in principle infinitely large timesteps.

The source code is made publicly available on GitHub [77].

7 Optimal Stability Polynomials for Strictly Convex Spectra

7.1 Comparison to Coefficient-Based Approach

Before coming to applications of Algorithm 1 to spectra of practical semidiscretizations, we seek to compare the herein developed approach to results obtained by applying the method developed in [36]. For this, we consider the problem of maximum disk inclusion, i.e., finding a stability polynomial such that the radius \(\Delta t\) of the disk

is contained in the region of absolute stability \({\mathcal {S}}\). In particular, we construct third-order accurate stability polynomials \(P^\text {Disk}_{S,3}\) with Algorithm 1 and compare the maximum possible timestep of this approach to the timestep obtained when applying the coefficient-based approach from [36]. Using the basis recommended in [36] we construct stability polynomials and corresponding optimal timesteps for \(S=20, 30, \dots , 80\). The timestep obtained in this way is then used twofold: First, we use it as the fixed, supplied timestep for the feasibility problems (5.4), (5.5) to check if Algorithm 1 is able to find a stability polynomial which is also A-stable for the supplied spectrum. This is indeed for every considered S the case, the corresponding pseudo-extrema are provided in the supplementary material.

Next, we test also the optimization problems (5.1), (5.3) whether they report a similar optimal timestep. Here, we supply the expected timestep based on \(\Delta t_{20, 3}\), cf. (4.1) instead of the optimal timestep found using the coefficient-based approach. Table 1 compares the ratio \(\Delta t/S\) for the coefficient-based approach to the one corresponding to the obtained by applying Algorithm 1. For all considered values of S the ratios are quite similar and seem to approach a constant value, which is in accordance with the asymptotically linear scaling of the optimal timestep Sect. 4.3.

7.2 Applications

Here, we present many-stage optimal stability polynomials corresponding to first and second order for the spectra shown in Fig. 2. For each spectrum, we compute \(\Delta t_{16,p}\) and \(P_{16, p}(z)\) using the approach developed in [36]. Equipped with the optimal timestep, we supply the expected optimal timestep according to (4.1) to the feasibility problems (5.4), (5.5). In particular, we consider the constructed polynomial as optimal when the linear scaling of the maximum timestep is realized, which is the case for all examples mentioned in this section. For every spectrum, we start with a moderate number of stages (\(26-32\)) and then double the number of stages to utilize the equal arc length property for even spectra discussed in Sect. 4.5.

The computed pseudo-extrema alongside the constraining list of eigenvalues are provided as supplementary material to this manuscript. Optimal stability polynomials are constructed for:

-

1.

Burgers’ Equation: We find the optimal stability polynomials of first, second, and third order with degrees 32, 64, 128 constrained by \({\widetilde{M}} = 129\) eigenvalues. In particular, we make use of the property discussed in Sect. 4.5 by conducting the optimization sequentially, i.e., first performing the optimization for \( S=32\), re-using this results for the initialization of the \(S=64\) polynomial and in turn using this results for the final \(S=128\) polynomial. The pseudo-extrema and contours of the optimal \(P_{64,2}(z)\) stability polynomial are displayed in Fig. 4a. To highlight the efficiency of our approach, we provide the Ipopt runtimes for the feasibility problem runs in Table 2. We emphasize that we do not intend to compare the runtimes of Algorithm 1 to the optimization problem from [36]—instead, we would like to stress that equipped with an optimal timestep for a low degree polynomial, we can quickly compute the higher degree optimal ones.

-

2.

1D Shallow Water equations: Here we optimize polynomials of degrees 30, 60, and 120 constrained by \({\widetilde{M}} = 117\) eigenvalues, again in a sequential manner. The pseudo-extrema and contours of the optimal \(P_{60,2}(z)\) stability polynomial are displayed in Fig. 4b. The CPU times are very similar to Burgers’ equation with total 30.687s for the computation of the first order accurate stability polynomial and 28.726s for the second order case.

-

3.

1D Ideal compressible magnetohydrodynamics (MHD): Polynomials of degrees 28, 56, and 112 are found satisfying the \({\widetilde{M}} = 252\) stability constraints due to the eigenvalues. The pseudo-extrema and contours of the optimal \(P_{56,2}(z)\) stability polynomial are displayed in Fig. 4c. The generation of the 112 degree stability polynomial takes 94.433s and 98.377s for first and second order case, respectively.

-

4.

2D Compressible Euler equations: To conclude this result section, we generate stability polynomials with degrees 26, 52, and 104 for a reduced set of \({\widetilde{M}} = 717\) eigenvalue constraints. The pseudo-extrema and contours of the optimal \(P_{52,2}(z)\) stability polynomial are displayed in Fig. 4d. Due to the quadratic scaling in the number of constraints we have significantly longer runtimes then in the previous cases, with total \(2.456 \text { min}\) for the first order and \(8.55 \text { min}\) for the second order case. Note that these runtimes can be significantly reduced if only a small portion of the eigenvalues alongside the convex hull thereof is supplied as constraints.

8 Non-Convex Spectra

While a rich set of spatial discretization, equation, boundary and initial condition combinations leads to strictly convex spectra, especially in one spatial dimension, at least equally many cases with non-convex spectra are observed. In this section, we propose two possible treatments thereof.

First, alpha shapes [78, 79] are proposed as a potentially accurate, yet more expensive candidate which come with an unknown hyperparameter. Second, we follow the previous sections and use a convex hull approach also for non-convex spectra.

8.1 Alpha Shapes

Alpha shapes [78, 79] provide a rigorous way of constructing a point enclosing shape that is potentially closer to the point set than the convex hull. Alpha shapes are parametrized by the real scalar \(\alpha \) parameter that defines how close the alpha shape is shrinked to the points. For \(\alpha > 0\), the construction of alpha shapes can intuitively described by rolling a disk with radius \(1/\alpha \) around the points and drawing a line between two points of the set whenever they are on the edge of the disk and no points are in the interior of the disk. For \(\alpha = 0\), the disk has infinite radius, i.e., degenerates to a straight line and the resulting shape is equivalent to the convex hull which is constructed by tilting the straight line around the points. While alpha shapes provide potentially a very accurate ansatz for the stability boundary, cf. Fig. 5 they come with two main drawbacks.

First, alpha shapes introduce a hyperparameter whose choice is not clear. While in general higher degree polynomials follow the stability boundary more closely, i.e., correspond to larger alpha values the best choice is highly dependent on the individual spectra. Furthermore, for high \(\alpha \) corresponding to small radii there is always the danger of obtaining a disjoint alpha shape which is not useful in this context. Both of these issues can be in principle addressed through manual inspection, which is clearly not attractive.

Secondly, and more severely, alpha shapes provide in general only a spectrum enclosing curve \(\gamma _\alpha (\tau ): {\mathbb {R}} \rightarrow {\mathbb {C}}\), i.e., no spectrum enclosing function. This implies that for both the imaginary part (cf. (4.7)) and real part

of the pseudo-extrema interpolation has to be performed. Here, \(\mu ^{(j)} \in \sigma ^\alpha \) are the eigenvalues selected as the upper part of the alpha shape with corresponding arc length parameter \(0 \le \tau ^{(j)} \le 1\). This results in doubled computational costs and an overall more difficult optimization task.

Nevertheless, we stress that the general idea of distributing the pseudo-extrema with equal arc length, i.e., equal distances \(\Delta \tau = \tau ^{(j+1)} - \tau ^{(j)}, \, \forall j\) is still applicable and Algorithm 1 can be used under the appropriate changes.

Approximation of the optimal stability boundary by the contour of an alpha shape. The spectrum \(\sigma \) is obtained from a DGSEM discretization of the 2D linear advection equation with velocities \(a_x = 0.5, a_y = -0.1\) on the periodic domain \(\Omega = [-1,1]^2\) discretized by a \(6\times 6\) mesh with local polynomials of degree 3 and Rusanov/Local Lax-Friedrichs flux

8.2 Convex Hull Ansatz

By constructing the convex hull for non-convex spectra the framework prepared in the previous sections is recovered. Precisely, even if the hull of spectrum itself may be nonconvex, the convex hull is constructed and used as the ansatz for the initial placement of the pseudo-extrema. Using the convex hull difficulties with additional hyperparameters or the danger of disjoint alpha shapes are ruled out. This comes with the drawback that we can no longer expect to actually realize the maximum possible optimal timestep according to (4.1). Consequently, one has to indeed perform the optimization problems (5.1), (5.3) as the feasibility problems with fixed timestep will fail. Depending on the spectrum there are a priori no guarantees how efficient this approach will be. In practice, however, we find for many real-world spectra excellent results, as illustrated in the next section.

9 Optimal Stability Polynomials for Nonconvex Spectra

Here, we present results for two relevant, exemplary nonconvex spectra for which we use the convex hull-based approach.

-

1.

Burgers’ Equation with Riemannian initial data \(u_0(x) = {\left\{ \begin{array}{ll} 1.5 &{} x < 0.5 \\ 0.5 &{} x \ge 0 \end{array}\right. }\). As before, we discretize \(\Omega = [0, 1]\) with 64 cells and use Godunov’s flux. At \(x=0\) we fix \(u(t, 0) = 1.5\) while at \(x=1\) an outflow boundary is used. The solution is reconstructed using fourth order local polynomials. The corresponding spectrum is displayed in Fig. 6a alongside its convex hull. The convex hull is in most parts extremely close the spectrum and we thus expect maximal timesteps very close to the optimal ones according to the linear scaling (4.1). Indeed, for 30, 60, and 120 degree polynomials we can realize the optimal timestep. As for the convex spectra, we display the \(S=60\) case in Fig. 6a.

-

2.

2D compressible Euler equations: Isentropic vortex advection. The advection of an isentropic vortex is a classic, fully nonlinear testcase with known analytical solution [59, 80]. In terms of the physical parameters we use the same setup as in [39,40,41] which is spelled out in Sect. 10.2.2. The numerical scheme is composed of Rusanov/Local Lax-Friedrichs flux, second order local polynomials and 8 cells in each direction. This little resolution is required to execute the eigenvalue decomposition in reasonable time. The corresponding spectrum is shown in Fig. 6b alongside its convex hull. Similar to Burgers’ Equation, the spectrum is in most parts reasonably close to the spectrum. For \(S=28\) we can realize about \(97.9\%\) of the theoretically optimal timestep and for \(S=56, S=112\) indeed the optimal timestep according to the linear timestep scaling (4.1).

10 Construction of Many-Stage Runge–Kutta Methods

As discussed before, the primary use case of the high degree stability polynomials is the possibility to construct many-stage methods for e.g. partitioned multirate Runge–Kutta methods such as the P-ERK [39, 40, 45] schemes. Nevertheless, we seek to demonstrate the capabilities of the optimized stability polynomials by constructing many-stage standalone methods. Here, we limit ourselves to second order accurate methods for which the linear order constraints (2.4) imply second order convergence even in the nonlinear case, without any additional constraints [6, 46]. Furthermore, we leave the construction of SSP methods for future work and focus here on hyperbolic PDEs with smooth solutions.

Due to the factorized form of the stability polynomial one can directly read-off a possible choice of the intermediate steps:

Note that we group the complex-conjugated pseudo-extrema to avoid complex-valued timesteps. We focus on real timesteps since they allow easy implementation into existing codes and spare us from technical complications. Readers interested in complex-valued timesteps are pointed to [81] and references therein.

Being equipped with a stability polynomial, one has now in principle infinitely many possible choices for the actual Runge–Kutta method. It is well-known that internal stability, i.e., the propagation of round-off errors is a relevant concern for many-stage explicit methods [56, 57, 82, 83]. This issue arises also here and seems to be the major difficulty in realizing the maximum timestep.

Given the factorized form of the stability polynomial it is natural to construct Runge–Kutta methods with a sequential structure. Here, we specify the methods in modified Shu-Osher form [82, 84]

and set \(v_1 = 1, v_k = 0, k > 1\) which implies \({\varvec{Y}}_1 = {\varvec{U}}_n\). Following [82] we introduce the notation

with \(\alpha _{1:S}, \beta _{1:S} \in {\mathbb {R}}^{S \times S}\) resembling the coefficients of the intermediate stages. The parameters of the final stage are denoted via \(\varvec{\alpha }_{S+1}, \varvec{\beta }_{S+1} \in {\mathbb {R}}^{1\times S}\), i.e.,

which are in the present case conveniently chosen as

due to the particular representation of the stability polynomial (9.1).

The two-stage submethod (cf. (9.1)) corresponding to pseudo-extrema \({\widetilde{r}}_j, {\widetilde{r}}_j^\star \) are naturally represented via the low-storage scheme

The Forward Euler steps are readily reflected by \(\alpha _{k,k-1}^{(j)} = 1, \beta _{k,k-1}^{(j)} = \frac{-1}{{\widetilde{r}}_j}\). To meet the stability polynomial (9.1) we have for the two-stage methods the constraints

besides the usual requirement for consistency \(\sum _{l=0}^{k-1} \alpha _{k,l} = 1\) [60]. The timestamps/abscissae are computed via \({\varvec{c}} :=(I - \alpha _{1:S})^{-1} \beta _{1:S} {\varvec{1}}\) [82] where \({\varvec{1}}\) denotes a column vector of ones. The ordering of the two-stage submethods, i.e., the relation of j to k is determined based on the accumulated \(\beta \) values

The methods are then ordered such that \(\left\| \varvec{\beta }^{(j)}_k \right\| _1\) increases with k.

10.1 Internal Stability

For the discussion of internal stability we follow the main results derived in [82]. Under the influence of round-off errors \({\varvec{e}}_k\) the modified Shu-Osher form (9.2a) reads

where we denote the perturbed, round-off error bearing stages by \(\widetilde{{\varvec{Y}}}_k\). In [82] it was shown that the defect from actually computed, perturbed solution \(\widetilde{{\varvec{U}}}_n\) to the true solution \({\varvec{U}}(t_n)\) can for linear ordinary differential equations (ODEs) be estimated as

In addition to the usual truncation error \({\mathcal {O}}(\Delta t^{p+1})\) we have the amplification of round-off errors \(\sum _k\Vert Q_k(z) \Vert \Vert {\varvec{e}}_k \Vert \). Internal stability becomes a concern when both are of similar magnitude [82].

In the estimate of the error of the perturbed iterates (9.10) \( Q_j(z)\) denote the internal stability polynomials [56, 82] which are for a method in Shu-Osher form computed as [82]

For explicit methods there is no initial round-off error \({\varvec{e}}_1\) [82] and it suffices to consider the internal stability polynomials starting from second stage. To estimate the potential of round-off error amplification it is customary to investigate

which is a more precise variant of the maximum internal amplification factor proposed in [82]. For standard double precision floating point datatypes we expect the round-off errors to be \(\varepsilon = {\mathcal {O}}\left( 10^{-15}\right) \) at most, thus we expect the overall internal errors to be of order \(\widetilde{{\mathcal {M}}} \cdot 10^{-15}\). This can then be compared to \({\mathcal {O}}(\Delta t^{p+1})\) yielding an approximate criterion to estimate the influence of round-off errors. Both metrics are reported for the examples considered in Sect. 10.

In principle, one could now set out to minimize \(\widetilde{{\mathcal {M}}}\) over \(\alpha , \beta \) under the constraints (9.7). Given that even for methods with two intermediate stages (9.6) we have \(\frac{5}{2} S_\text {Complex} + S_\text {Real}\) free optimization variables and one is minimizing not only one, but S polynomials at M eigenvalues this is a significantly harder optimization problem than the original one (2.7). In addition, there are no immediate properties of the internal stability polynomials \(Q_j\) available, rendering this problem practically infeasible. As an alternative, we propose a significantly cheaper heuristically motivated approach in the next section.

10.2 Internal Stability Optimization: Heuristic Approach

We begin by recalling that \(\alpha _{1:S}, \beta _{1:S}\) are for explicit methods strictly lower triangular, i.e., nilpotent matrices with index S. Consequently, the identity

holds and allows an alternative to the inversion of the typically ill-conditioned matrix. We make now the following heuristic argument: For \(\alpha _{k,l} \in [0,1]\) and \(\vert z \vert \sim {\mathcal {O}}(100)\) for the many-stage methods we expect a significantly higher sensitivity of \({\widetilde{M}}\) with respect to \(\beta _{k,l}\) than \(\alpha _{k,l}\). In order to minimize \({\widetilde{M}}\) we thus minimize the accumulated \(\beta _{k,l}\) values (9.8) for each two-stage submethod individually. For these optimization problems constraints (9.7) satisfying local minima are easily found. Note that negative \(\beta _{k,l}\) are allowed here, which allow further reduction of \({\widetilde{M}}\) due to the increased flexibility. To illustrate this, consider the 104 degree stability polynomial optimized for the scalar advection equation (see Sect. 10.1.1) for which we construct Runge–Kutta methods with non-negative and unconstrained \(\beta _{k,l}\). For this case, values of \({\mathcal {M}}\) for grouped and non grouped pseudo-extrema (see next section) with non-negative and unconstrained \(\beta _{k,l}\) are tabulated in Table 3.

10.3 Generalized Lebedev’s Idea

For the higher order DG spectra we observe a couple of pseudo-extrema near the imaginary axis with \(\left| \text {Im}(\widetilde{{\varvec{r}}}_j) \right| \gg -\text {Re}(\widetilde{{\varvec{r}}}_j)\) which can lead to \(\beta _{k,l} \gg 1\), which should be avoided. Similar to Lebedev’s Idea/Realization [85, 86] we group the complex conjugated pseudo-extrema with \(\text {Re}(\widetilde{{\varvec{r}}}_j) > -0.5\) with the pseudo-extrema of largest \(\left| \text {Re}(\widetilde{{\varvec{r}}}_j) \right| \). By staying with the two register form (9.6) we have now 13 free parameters, three linear constraints due to \(\sum _{k=0}^{j-1} \alpha _{j,k} = 1\) and four nonlinear constraints which are derived similar to (9.7) by multplying out polynomials. As they are quite lengthy but readily obtained we omit them here. As for the remaining two-stage methods, we optimize \(\alpha , \beta \) to minimize \(\left\| \varvec{\beta }^{(j)}_k \right\| _1\).

The grouping of pseudo-extrema with small and large real part has a dramatic influence on the internal stability properties. For instance, consider again the 104 degree, second order accurate stability polynomial optimized for the scalar advection equation (see Sect. 10.1.1) for which we construct Runge–Kutta methods with and without grouping. Values for \(\widetilde{{\mathcal {M}}}\) are tabulated in Table 3 highlighting the importance of the grouping. Due to this results all in the following constructed methods bear grouping of pseudo-extrema and may have negative \(\beta _{k,l}\).

10.4 Note on SSP Properties

It is natural to ask for SSP properties of Runge–Kutta schemes intended for the integration of (nonlinear) hyperbolic systems. Furthermore, given the representation of the method in Shu-Osher form (9.2) one can readily tell whether the method is monotonicity preserving or not. For the methods resembling the factorized form of the stability polynomial the coefficients of the last stage are chosen according to (9.5). Consequently, the SSP cofficient [60]

is due to \(\alpha _{S+1,S} =0, \beta _{S+1,S} = 1\) always zero, regardless of the parametrization of the intermediate stages. This implies that there exists no timestep \(\Delta t >0\) such that the Runge–Kutta method is guaranteed to be monotonicity preserving. One will also need to revisit whether negative \(\beta _{k,l}\) should be allowed as they demand special treatment for SSP methods [60]. The construction of many-stage SSP methods is ongoing research.

11 Many-Stage Runge–Kutta Methods: Results

We present convergence studies of the many-stage Runge–Kutta methods which are constructed from the factorized form of the stability polynomial using the generalization of Lebedev’s Idea and possibly negative \(\beta _{k,l}\).

11.1 Linear Problems

11.1.1 1D Advection of a Gaussian Pulse

As a first example, we consider the linear advection equation with unit transport velocity and Gaussian initial data

on \(\Omega \) = \([-5, 5]\) equipped with periodic boundaries. We discretize \(\Omega \) with 512 cells and (10.1) via the DGSEM with third order polynomials and Rusanov/Local Lax-Friedrichs flux implemented in Trixi.jl [65,66,67]. Using Trixi.jl we can generate the Jacobian using algorithmic differentiation and compute the corresponding spectrum. For this spectrum we compute the reference timestep \(\Delta _{16,3} \approx 3.53 \cdot 10^{-2}\) using the algorithm proposed in [36] which is then supplied to the feasibility problems (5.4) and (5.5).

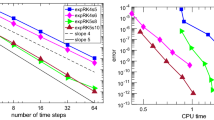

We compute ten passes through the domain corresponding to final time \(t_f = 100\) with 26, 52, and 104 stage, third order accurate methods using the Runge–Kutta parametrization with negative \(\beta \) and generalized Lebedev’s idea. As both the timestep as the computational cost (due to the low-storage formulation of the Runge–Kutta method) scale linearly with the number of stages S, we obtain constant runtimes for this examples. The used timestep \(\Delta t\), number of taken timesteps \(N_t\), internal amplification factors \(\widetilde{{\mathcal {M}}}\) and the order of the truncation error \(\Delta t^4\) are reported in Table 4. The third order convergence in \(L^\infty \)-norm

is observed until spatial discretization errors are becoming relevant, cf. Fig. 8a. In weighted \(L^1\)-norm

third order convergence is observed except for the smallest timestep, as displayed in Fig. 8b. We observe that the error constant increases with stage number, which seems to be a general phenomenon for the herein constructed methods.

Third order convergence in \(L^\infty , L^1\) for methods with 26, 52, and 104 stages optimized for the 1D scalar advection equation (10.1)

11.1.2 2D Linearized Euler Equations

The linearized Euler equations with source term read in two spatial dimensions, primitive variables

where \(({\bar{\rho }}, {\bar{u}}, {\bar{v}}, c) = (1, 1, 1, 1)\) denote the base state. By the method of manufactured solutions [87, 88] we set the solution to

with corresponding source terms

The linearized Euler equations (10.4) are discretized on \(\Omega = [0, 1]^2\) with 64 elements in each direction. We employ the DGSEM with fourth order local polynomials and Harten-Lax-Van Leer (HLL) [89] flux on a periodic domain. To leverage the computational costs of the eigenvalue decomposition, we compute the spectrum for a discretization with 8 cells in each coordinate direction. For the resulting set of eigenvalues we compute the reference timestep \(\Delta t_{16,2} \approx 4.53 \cdot 10^{-2}\) using the algorithm proposed in [36] which is then supplied to the feasibility problems (5.4) and (5.5).

For the actual simulation on the 8 times finer mesh we reduce the obtained timesteps accordingly. A convergence study is carried out in terms of the error in density fluctuation \(\rho \) at final time \(t_f = 10.5\) and displayed in Fig. 9. We use \(t_f= 10.5\) since we observed for \(t_f = 10\) spurious convergence of higher than second order, which is a peculiarity of the employed testcase rather than a general phenomenon. Again, second order convergence is observed in \(L^\infty \) for all timesteps. Here, we construct polynomials of degrees 32, 64, and 128 and the corresponding methods with parametrization using potentially negative \(\beta \) and the generalized Lebedev’s idea. Since the right-hand-side of the ODE system (1.1b) depends also explicitly on time this example also showcases convergence of the many-stage methods for non-homogeneous problems.

In contrast to the previous example, we observe that the theoretically possible maximum stable timestep has to be reduced by factor \(\text {CFL}\) (see Table 5) for a stable simulation which we attribute to issues with internal stability.

Consider for instance the 128 stage method with \(\text {CFL} = 1.0\) (corresponding to an unstable simulation) with \(\widetilde{{\mathcal {M}}} \cdot 10^{-15} \approx 7.60 \cdot 10^{-2}\) while \(\Delta t^3 \approx 9.29 \cdot 10^{-4}\). As discussed in [82], we observe in this case stability problems when error terms due to round off are of larger magnitude than the truncation error.

For the \(S=64\) stability polynomial the reduction in optimal timestep is a consequence of the optimization of the stability polynomials for the reduced spectrum, rather than related to internal stability.

11.2 Nonlinear Problems

11.2.1 Burgers’ Equation with Source Term

We consider Burgers’ Equation as the classic prototype equation for nonlinear hyperbolic PDEs. With source term s(t, x), Burgers’ Equation reads

The presence of the source term allows a convenient construction of a continuously differentiable solution \(u(t, x) \in C^1\) via the method of manufactured solutions. Here, the solution is set to

which corresponds to a simple advection of the initial condition, although this time through a nonlinear partial differential equation (PDE). Note that u(t, x) is periodic on [0, 1] for all times t. The source term is then computed from the governing PDE (10.7) as

The spatial discretization is realized using the DGSEM with third order polynomials on a 256 element mesh \(\Omega ^{(h)}\) with periodic boundaries and Godunov flux [90].

Despite being effectively also only the simple advection of the initial data \(u_0(x)\), it is now observed that the effectively stable timestep cannot be kept constant as in the case of the advection equation discussed previously. This is attributed to the fact that the perturbations \({\varvec{e}}_j\) do not only corrupt the stage updates (cf. (9.9)), but thereby also change the point of evaluation of the Jacobian and thus the spectrum. This can have dramatic effects since the stability polynomial is only optimized for the spectrum corresponding to the unperturbed solution. This is further amplified by the lack of the SSP property of the constructed schemes. Thus, as the time integration schemes used here have no oscillation-surpressing guarantees, these do arise and further perturb the spectrum. To measure this, we compute the increase of the total variation

where the total variation semi-norm is defined as usual

For methods with \(S= 28, 56\), and 112 stages we observe indeed increase in total variation, cf. Table 6. Here, the significant decrease of the optimal timestep even for methods with moderate stages is not expected to follow from issues with internal stability. Although the estimate for the perturbed approximation (9.10) is only valid for linear systems, we consider it also here as a crude estimate for the influence of internal stability. As note in table Table 6 the maximum possible timestep \(\Delta t_{28,2}\) has to be decreased to \(72\%\) of its theoretically possible value. As \({\widetilde{M}} \cdot 10^{-15}\) is still 4 orders of magnitude smaller than \(\Delta t^3\) we do not expect the round off errors to limit the stability.

Instead, we believe that the lack of the SSP property causes the limitations in timestep. To provide reason for this, we consider SSP the third order accurate methods with variable number of stages \(S_n = n^2\) proposed in [91]. For the \(S=15^2 = 225 \) method we have \({\widetilde{M}} \cdot 10^{-15} \approx 2.13 \cdot 10^{-13}\) and \(\Delta t^4 \approx 5.42 \cdot 10^{-8}\), i.e., similar values to the 28 stage method constructed here. In that case, however, we have \(e_\text {TV} \equiv 0\), as expected for the TVD time integration when applied to a smooth solution. For the schemes constructed here the spurious oscillations can even produce amplifying modes in the spectrum of the semidiscretization, i.e., move the system into an unphysical, energy-generating state. In that case, the true solution itself is unstable - this is a qualitatively different scenario to the lack of absolute stability where the numerical scheme is unstable for a certain eigenvalue. For \(S=28\) and \(\Delta t\) from Table 6 we have for instance at \(t=0.5\) an eigenvalue with \(\text {Re} \left( \lambda \right) = 8.57 \cdot 10^{-12}\) which bears the possibility to cause the whole computation to diverge. This effect becomes especially severe for the 112 stage method where the \(\text {CFL}\) number has to be dramatically decreased for a stable simulation.

Nevertheless, all methods achieve second order convergence in \(L^\infty \)-norm once the oscillations vanished which is the case for \(\Delta t \le \frac{1}{4} \Delta t_{S,2}\), cf. Fig. 10a. In weighted \(L^1\)-norm (10.3) second order convergence is observed for every timestep as displayed in Fig. 10b.

Second order convergence in \(L^\infty , L^1\) for methods optimized for Burgers’ equation with source (10.7)

11.2.2 2D Compressible Euler Equations: Isentropic Vortex Advection

To conclude the examples, we consider the 2D compressible Euler equations

with total energy \(E = E(\rho , {\varvec{v}}, p) = \rho \left( \frac{p}{\gamma - 1} + \frac{1}{2} \left( v_x^2 + v_y^2\right) \right) \). As a testcase we consider the advection of an isentropic vortex [80, 92]. Here, we use similar parameters to the ones used in [39, 40]. In particular, the base state is set to

with \(\text {Ma}_\infty = 0.4\). To localize the effect of the vortex centered at \( {\varvec{c}}(t, x, y) :=\begin{pmatrix} x \\ y \end{pmatrix} - {\varvec{v}}_\infty t\) the perturbations are weighted with the Gaussian \(g(t, x, y) :=\exp \left( \frac{1 - \Vert {\varvec{c}}(t, x, y) \Vert _2^2}{2R^2}\right) \) where \(R= 1.5\). While the size of the vortex is governed by R, its intensity/strength is quantified by I which is set here to 13.5 following [39, 40]. The density is given by

and the corresponding perturbed velocities are

while the pressure is computed analogous to the base pressure (10.13) as

The advection of the vortex is simulated on \(\Omega = [-10,10]^2\) discretized with \(64\times 64\) elements, sixth order local polynomials, and HLLC Flux [93]. Final time \(t_f\) is set to 20 which corresponds to a full traversion of the vortex through the periodic domain. For this setup 30, 60, and 120 degree methods of second order accuracy are constructed. As for the previous example, we observe a reduction of the theoretically stable timestep as given in Table 7 which we attribute again to the lack of SSP guarantees, which cause the rise of oscillations that move the system into an amplifying state.

For the \(L^\infty \) error in density \(\Vert \rho ^{(h)}(t_f, x, y) - \rho (t_f, x, y) \Vert _\infty \) second order convergence is observed, until spatial discretization errors start to become significant, cf. Fig. 11.

12 Conclusions

In this work, a novel optimization approach for the generation of optimal stability polynomials for spectra of hyperbolic PDEs is devised. Parametrizing the stability polynomials in terms of the herein introduced pseudo-extrema offers both a numerically stable and readily interpretable representation. The optimization approach is motivated by examining the properties of the pseudo-extrema for the proven optimal stability polynomials of first and second order for disks. These findings are directly extended to strictly convex spectra for which an optimization procedure is developed in detail. For non-convex spectra, possible remedies are discussed and optimal stability polynomials for both convex and nonconvex spectra are presented. Stability polynomials with degrees larger than 100 are constructed for a range of classical hyperbolic PDEs that match the linear consistency requirements up to order three.

Equipped with high degree stability polynomials in factorized form a possible choice for the actual Runge–Kutta methods is proposed. The construction of the numerical schemes centers around minimizing the propagation and amplification of round-off errors, which can become a concern for many-stage methods. Here, methods of second order are constructed which match their designed order of accuracy for both linear and nonlinear problems. For linear problems, only internal stability might limit the theoretically possible maximum timestep while for nonlinear problems the lack of the SSP property is much more severe which spoils the effectiveness of the very high-stage methods.

As mentioned earlier, the application of individual optimized many-stage methods do not provide efficiency gains compared to medium- or few-stage methods as both the stable timestep and the computational cost scale linearly in the number of stages. In fact, the main motivation for the construction of such high degree stability polynomials are multiscale problems where a composition of stabilized Runge–Kutta methods is applied to integrate the system more efficiently. In this scenario, one would adjust the number of stages according to the characteristic speeds in a part of the domain with the aim to keep the overall timestep constant. For this case, the relatively robust medium-stage methods with up to, say, 32 stages are a promising candidate to be included in the family of Runge–Kutta methods.

Data availibility

All data generated or analysed during this study are included in this published article and its supplementary information files.

References

Courant, R., Friedrichs, K., Lewy, H.: Über die partiellen Differenzengleichungen der mathematischen Physik. Mathematische Annalen 100, 32–74 (1928). https://doi.org/10.1007/BF01448839

Franklin, J.: Numerical stability in digital and analog computation for diffusion problems. J. Math. Phys. 37, 305–315 (1958)

Guillou, A., Lago, B.: Domaine de stabilité associé aux formules d’intégration numérique d’équations différentielles, a pas séparés et a pas liés. recherche de formules a grand rayon de stabilité, Ier Congr. Ass. Fran. Calcul., AFCAL, pp. 43–56 (1960)

Saul’ev, V.: Integration of parabolic equations by the grid method. Fizmatgiz Moscow 13, 14–19 (1960)

Forsythe, G.E., Wasow, W.R.: Finite-Difference Methods for Partial Differential Equations. Applied Mathematics Series, Wiley, New York (1960)

Wanner, G., Hairer, E.: Solving Ordinary Differential Equations II: Stiff and Differential-Algebraic Problems, vol. 375. Springer, Berlin (1996). https://doi.org/10.1007/978-3-642-05221-7

Hundsdorfer, W.H., Verwer, J.G.: Numerical Solution of Time-Dependent Advection-Diffusion-Reaction Equations. Springer Series in Computational Mathematics, vol. 375, 1st edn. Springer, Berlin (2010)

Verwer, J.G.: Explicit Runge–Kutta methods for parabolic partial differential equations. Appl. Numer. Math. 22, 359–379 (1996). https://doi.org/10.1016/S0168-9274(96)00022-0

Abdulle, A.: Explicit stabilized Runge–Kutta methods, Technical Report. Mathematics Institute of Computational Science and Engineering, School of Basic Sciences, Section of Mathematics EPFL Lausanne (2011)

Van der Houwen, P.: The development of Runge–Kutta methods for partial differential equations. Appl. Numer. Math. 20, 261–272 (1996). https://doi.org/10.1016/0168-9274(95)00109-3

Chzao-Din, Y.: Some difference schemes for the solution of the first boundary value problem for linear differential equations with partial derivatives. Moscow Stage University, Thesis (1958)

Burrage, K.: Order and stability properties of explicit multivalue methods. Appl. Numer. Math. 1, 363–379 (1985). https://doi.org/10.1016/0168-9274(85)90001-7

Lomax, H.: On the Construction of Highly Stable, Explicit, Numerical Methods for Integrating Coupled Ordinary Differential Equations with Parasitic Eigenvalues. National Aeronautics and Space Administration (1968)

Lebedev, V.: A new method for determining the roots of polynomials of least deviation on a segment with weight and subject to additional conditions. Part I. Russ. J. Numer. Anal. Math. Model. 8, 195–222 (1993a). https://doi.org/10.1515/rnam.1993.8.3.195

Lebedev, V.: A new method for determining the roots of polynomials of least deviation on a segment with weight and subject to additional conditions. Part II, Russ. J. Numer. Anal. Math. Model. 8, 397–426 (1993b). https://doi.org/10.1515/rnam.1993.8.5.397

Van der Houwen, P.J.: Construction of integration formulas for initial value problems. Technical Report, Stichting Mathematisch Centrum, Amsterdam (1977)

Van der Houwen, P., Kok, J.: Numerical Solution of a Minimax Problem. Stichting Mathematisch Centrum, Toegepaste Wiskunde (1971)

Abdulle, A., Medovikov, A.A.: Second order Chebyshev methods based on orthogonal polynomials. Numer. Math. 90, 1–18 (2001). https://doi.org/10.1007/s002110100292

Riha, W.: Optimal stability polynomials. Computing 9, 37–43 (1972). https://doi.org/10.1007/BF02236374

Van der Houwen, P.: Explicit Runge–Kutta formulas with increased stability boundaries. Numer. Math. 20, 149–164 (1972). https://doi.org/10.1007/BF01404404

Kinnmark, I.P., Gray, W.G.: One step integration methods with maximum stability regions. Math. Comput. Simul. 26, 87–92 (1984). https://doi.org/10.1016/0378-4754(84)90039-9

Sonneveld, P., Van Leer, B.: A minimax problem along the imaginary axis, Technical Report 4. Technische Hogeschool Delft, Onderafdeling der Wiskunde en Informatica (1984)

Kinnmark, I.P., Gray, W.G.: One step integration methods of third-fourth order accuracy with large hyperbolic stability limits. Math. Comput. Simul. 26, 181–188 (1984). https://doi.org/10.1016/0378-4754(84)90056-9

Jeltsch, R., Nevanlinna, O.: Largest disk of stability of explicit Runge–Kutta methods. BIT Numer. Math. 18, 500–502 (1978)

Owren, B., Seip, K.: Some stability results for explicit Runge–Kutta methods. BIT Numer. Math. 30, 700–706 (1990). https://doi.org/10.1007/BF01933217

Vichnevetsky, R.: New stability theorems concerning one-step numerical methods for ordinary differential equations. Math. Comput. Simul. 25, 199–205 (1983). https://doi.org/10.1016/0378-4754(83)90092-7

Niegemann, J., Diehl, R., Busch, K.: Efficient low-storage Runge–Kutta schemes with optimized stability regions. J. Comput. Phys. 231, 364–372 (2012). https://doi.org/10.1016/j.jcp.2011.09.003

Torrilhon, M., Jeltsch, R.: Essentially optimal explicit Runge–Kutta methods with application to hyperbolic-parabolic equations. Numer. Math. 106, 303–334 (2007). https://doi.org/10.1007/s00211-006-0059-5

Kennedy, C.A., Carpenter, M.H., Lewis, R.: Low-storage, explicit Runge–Kutta schemes for the compressible Navier–Stokes equations. Appl. Numer. Math. 35, 177–219 (2000). https://doi.org/10.1016/S0168-9274(99)00141-5

Allampalli, V., Hixon, R., Nallasamy, M., Sawyer, S.D.: High-accuracy large-step explicit Runge–Kutta (HALE-RK) schemes for computational aeroacoustics. J. Comput. Phys. 228, 3837–3850 (2009). https://doi.org/10.1016/j.jcp.2009.02.015

Toulorge, T., Desmet, W.: Optimal Runge–Kutta schemes for discontinuous Galerkin space discretizations applied to wave propagation problems. J. Comput. Phys. 231, 2067–2091 (2012). https://doi.org/10.1016/j.jcp.2011.11.024

Mead, J., Renaut, R.: Optimal Runge–Kutta methods for first order pseudospectral operators. J. Comput. Phys. 152, 404–419 (1999). https://doi.org/10.1006/jcph.1999.6260

Al Jahdali, R., Boukharfane, R, Dalcin, L., Parsani, M.: Optimized explicit Runge–Kutta schemes for entropy stable discontinuous collocated methods applied to the Euler and Navier–Stokes equations. In: AIAA Scitech 2021 Forum, 2021, p. 0633. https://doi.org/10.2514/6.2021-0633