Abstract

We present a three-tier data-driven approach, based on machine learning, for the numerical solution of the inverse problem in Partial Differential Equations (PDEs) and for their numerical bifurcation analysis from spatio-temporal data produced by Lattice Boltzmann model simulations. In the first step, we exploit manifold learning, and in particular parsimonious Diffusion Maps with leave-one-out cross-validation (LOOCV), both to identify the intrinsic dimension of the manifold on which the emergent dynamics evolve and to perform feature selection over the parameter space. In the second step, based on the selected features, we learn the right-hand-side of the effective PDEs using two machine learning schemes, namely shallow Feedforward Neural Networks (FNNs) with two hidden layers and single-layer Random Projection Neural Networks (RPNNs), whose basis functions are constructed using an appropriate random sampling approach. Finally, based on the learned black-box PDE model, we construct the corresponding bifurcation diagram by exploiting the numerical bifurcation analysis toolkit. For our illustrations, we applied the proposed method to the numerical bifurcation analysis of the 1D FitzHugh-Nagumo PDEs from data generated by D1Q3 Lattice Boltzmann simulations. The proposed method was quite effective in terms of numerical accuracy regarding the construction of the coarse-scale bifurcation diagram. Furthermore, the training phase of the proposed RPNN scheme was \(\sim \) 20 to 30 times less costly than that of the traditional shallow FNNs, making it a promising alternative to deep learning for the data-driven numerical solution of the inverse problem for high-dimensional PDEs.


1 Introduction

The discovery of physical laws and the numerical solution of the inverse problem in complex systems modelling, i.e. the construction of Partial Differential Equations (PDEs) for the emergent dynamics from data and the subsequent systematic analysis of their dynamics with established numerical analysis techniques, is a holy grail in the study of complex systems and has been the focus of intense research efforts over the last years [1,2,3,4]. Since the early ’90s, exploiting both theoretical and technological advances, researchers have employed machine learning algorithms for system identification from macroscopic observations, i.e. assuming that the set of coarse variables needed to model the underlying dynamics is already known, and for the derivation of normal forms ([5,6,7,8,9,10,11,12,13,14,15]). More recently, Bongard and Lipson [16] proposed a method for generating symbolic equations from time series for nonlinear dynamical systems that can be described by ordinary differential equations (ODEs). Brunton et al. [17] introduced the so-called sparse identification of nonlinear dynamics (SINDy) method to obtain explicit data-driven PDEs when the variables are known, and to construct normal forms for bifurcation analysis. Wang et al. [3] proposed a physics-informed machine learning scheme based on deep learning to learn the solution operator of arbitrary PDEs. Kovachki et al. [4] introduced the concept of Neural Operators, i.e. mesh-free, infinite-dimensional operators built with neural networks, to learn surrogate functional maps for the solution operators of PDEs. Recently, Vlachas et al. [18] used artificial neural networks (ANNs) and autoencoders to learn the dynamics of complex systems, exploiting the concept of lifting and restriction operators as introduced in the Equation-free framework.

However, for complex systems, such “good” macroscopic observables that can be used effectively for modelling the dynamics of the emergent patterns are not always directly available. Thus, an appropriate set of “hidden” macroscopic variables has to be identified from data. Such data can be obtained directly from experiments and/or from detailed simulations using, for example, molecular dynamics, agent-based models, Monte-Carlo and kinetic-based model simulations. Hence, all in all, we are confronted with two major problems: (a) the identification of a set of appropriate variables that define (parametrize) the emerging (coarse-grained) dynamics, and (b) the construction of models based on these variables. In the early 2000s, the Equation-Free and Variable-Free multiscale framework [19,20,21,22,23] provided a systematic framework for the numerical analysis (numerical bifurcation analysis, design of controllers, optimization, rare-events analysis) of the emergent dynamics as well as for the acceleration of microscopic/mesoscale/large-scale simulations, by bridging the micro/mesoscale, where the physical laws may be known, and the macroscopic scale, where the emergent dynamics evolve. This bridging is achieved via the concept of “coarse time steppers”, i.e. the construction of a black-box map on the macroscopic scale. By doing so, one can perform multiscale numerical analysis tasks, even for microscopically large-scale systems, by exploiting the algorithms (toolkit) of matrix-free methods in the Krylov subspace [19, 23,24,25,26,27], thus bypassing the need to explicitly construct models in the form of PDEs. When the macroscopic variables are not known a priori, one can resort to non-linear manifold learning algorithms such as Diffusion maps [28,29,30,31] to identify the intrinsic dimension of the slow manifold on which the emergent dynamics evolve.

Over the last few years, efforts have focused on developing physics-informed machine learning methods for solving both the forward problem, i.e. the numerical solution of high-dimensional multiscale problems described by PDEs, and the inverse problem, i.e. that of discovering the hidden physics [1, 32,33,34,35], thus both identifying the set of coarse observables and, based on them, learning the effective PDEs. Lee et al. [35] presented a methodology to find the right-hand-side of macroscopic PDEs directly from data using Diffusion maps and Automatic Relevance Determination for selecting a good set of macroscopic variables, and Gaussian processes and artificial neural networks for modelling purposes. The approach was applied to learn a “black-box” PDE from data produced by Lattice Boltzmann simulations of the FitzHugh-Nagumo PDEs at a specific value of the bifurcation parameter where sustained oscillations are observed.

In this paper, building on previous efforts [35], we present a data-driven numerical framework based on machine learning for the numerical bifurcation analysis of PDEs from spatio-temporal data produced by high-dimensional kinetic-based model simulations (here, a D1Q3 Lattice Boltzmann model). For the discovery of an appropriate set of coarse-grained variables, we used parsimonious Diffusion maps [36, 38], while for the identification of the right-hand side of the coarse-grained PDEs, we used shallow Feedforward Neural Networks (FNNs) and Random Projection Neural Networks (RPNNs), for which we propose an appropriate sampling approach for the construction of the (random) basis functions. For our illustrations, we used a D1Q3 Lattice Boltzmann model simulator of the FitzHugh-Nagumo (FHN) spatio-temporal dynamics. Upon training, the coarse-grained bifurcation diagram was traced by coupling the machine learning models with the pseudo-arc-length numerical continuation approach. The performance of the machine learning schemes was assessed against the reference bifurcation diagram obtained with a central finite difference discretization of the FHN PDEs.

2 Methodology

The pipeline of the proposed machine learning framework for the coarse-grained numerical bifurcation analysis from spatio-temporal data produced by detailed, high-dimensional Lattice Boltzmann model (LBM) simulations consists of three tasks: (a) the identification of a set of coarse-scale variables from the spatio-temporal data using manifold learning, and in particular parsimonious Diffusion maps with leave-one-out cross-validation (LOOCV); (b) based on the parsimonious set of coarse-grained variables, the reconstruction of the right-hand-side of the effective PDEs using machine learning; and (c) based on the machine learning models, the construction of the coarse-scale bifurcation diagrams of the emergent dynamics using the numerical bifurcation analysis toolkit.

The assumption here is that the emergent dynamics of the complex system under study on a domain \(\Omega \times [t_0,t_{end}] \subseteq {\mathbb {R}}^d\times {\mathbb {R}}\) can be modelled by a system of, say, m PDEs of the form:

where \(\varvec{u}(\varvec{x},t)=[u^{(1)}(\varvec{x},t),\dots ,u^{(m)}(\varvec{x},t)]\), \(F^{(i)}\), \(i=1,2,\dots ,m\), is a non-linear operator, and \({\mathcal {D}}^{\varvec{\nu }}\varvec{u}(\varvec{x},t)\) is the generic multi-index \(\varvec{\nu }\)-th order spatial derivative at time t, i.e.:

and \(\varvec{\varepsilon }\) denotes the (bifurcation) parameters of the system.

The boundary conditions read:

where \(\{\partial \Omega _l\}\) denotes a partition of the boundary of \(\Omega \), and the initial conditions read

The right-hand-side of the i-th PDE depends on, say, \(\gamma ^{(i)}\) variables and bifurcation parameters from the set of variables

Let us denote this set as \({\mathcal {S}}^{(i)}\), with cardinality \(|{\mathcal {S}}^{(i)}|=\gamma (i)\). Hence, at each spatial point \(\varvec{x}_q,q=1,2,\dots ,M\) and time instant \(t_s,s=1,2,\dots ,N\) the set of features for the i-th PDE can be described by a vector \({\varvec{z}}_q(t_s)\in {\mathbb {R}}^{\gamma (i)}\).

Here, we assume that such PDEs exist in principle but are not available in closed form.

Instead, we assume that we have observations from detailed, high-dimensional kinetic-based model simulations, from which we can compute the time and spatial derivatives of all the observables at N points in time and M points in space using, e.g., finite differences. Thus, we aim to (a) identify the intrinsic dimension of the manifold on which the coarse-grained dynamics evolve, i.e. for each PDE identify \(\gamma (i)\) and the coordinates that define the low-dimensional manifold, i.e., the sets \({\mathcal {S}}^{(i)}\), and, based on them, (b) identify the right-hand-side (RHS) of the effective PDEs using machine learning.
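As an illustration of this preprocessing step, the following Python/NumPy sketch computes finite-difference derivatives of two observables sampled on a uniform space-time grid and stacks them into one feature vector per point \((\varvec{x}_q,t_s)\). It is a minimal sketch under stated assumptions: a uniform grid, homogeneous Neumann boundaries handled with mirrored ghost nodes, and the hypothetical helper names `build_features` and `second_derivative_neumann`; the stencils actually applied to the LBM data may differ.

```python
import numpy as np

def second_derivative_neumann(w, dx):
    """Three-point second derivative along axis 1, assuming zero-flux boundaries."""
    w_xx = np.empty_like(w)
    w_xx[:, 1:-1] = (w[:, 2:] - 2.0 * w[:, 1:-1] + w[:, :-2]) / dx**2
    # Mirrored ghost nodes (w_{-1} = w_1, w_M = w_{M-2}) enforce w_x = 0 at the ends.
    w_xx[:, 0] = 2.0 * (w[:, 1] - w[:, 0]) / dx**2
    w_xx[:, -1] = 2.0 * (w[:, -2] - w[:, -1]) / dx**2
    return w_xx

def build_features(u, v, dx, dt):
    """u, v: arrays of shape (N, M) = (time instants, grid points).

    Returns the feature matrix Z (MN x 6), the time-derivative targets (MN x 2)
    and the feature names. Row k = q + s*M (0-based) after C-order flattening.
    """
    feats = {
        "u": u,
        "v": v,
        "u_x": np.gradient(u, dx, axis=1, edge_order=2),
        "v_x": np.gradient(v, dx, axis=1, edge_order=2),
        "u_xx": second_derivative_neumann(u, dx),
        "v_xx": second_derivative_neumann(v, dx),
    }
    names = list(feats)
    Z = np.column_stack([feats[n].ravel() for n in names])
    targets = np.column_stack([
        np.gradient(u, dt, axis=0, edge_order=2).ravel(),   # u_t
        np.gradient(v, dt, axis=0, edge_order=2).ravel(),   # v_t
    ])
    return Z, targets, names
```

For a field such as \(u=e^{-t}\cos x\) on \([0,\pi ]\), the computed \(u_{xx}\) and \(u_t\) both approximate \(-u\), which is a quick consistency check of the stencils.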

To demonstrate the proposed approach, we have chosen to produce high-dimensional data from D1Q3 Lattice Boltzmann model (LBM) simulations of the coupled FitzHugh-Nagumo PDEs of activation-inhibition dynamics. Using the LBM simulator, we produced data in space and time from different initial conditions and values of the bifurcation parameter. For the identification of an appropriate set of coarse-scale variables that define the low-dimensional manifold on which the dynamics evolve, we performed feature selection using parsimonious Diffusion Maps [36,37,38]. Then, we trained the machine learning schemes to learn the right-hand-side of the PDEs on the low-dimensional manifold. Based on the constructed models, we performed numerical bifurcation analysis employing the pseudo-arc-length continuation method. The performance of the proposed data-driven scheme for constructing the coarse-grained bifurcation diagram was validated against the one computed with the FHN PDEs using finite difference discretization. A schematic overview of the proposed framework for the case of two effective PDEs (as in the problem of the FitzHugh-Nagumo activation-inhibition dynamics) is shown in Fig. 1.
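The pseudo-arc-length continuation step can be sketched as follows for a generic system \(F(\varvec{u},\varepsilon )=0\); in the proposed framework, F would be the learned right-hand-side evaluated on the spatial grid. This is a minimal sketch, not the implementation used in this work: the Jacobians are approximated by forward differences, and `jac_fd` and `pseudo_arclength` are hypothetical names. The usage example traces the scalar cubic branch \(\varepsilon + u - u^3 = 0\), which has two turning points.

```python
import numpy as np

def jac_fd(F, u, lam, h=1e-7):
    """Forward-difference Jacobians dF/du (n x n) and dF/dlam (n,)."""
    f0 = F(u, lam)
    n = u.size
    Ju = np.empty((n, n))
    for j in range(n):
        du = np.zeros(n)
        du[j] = h
        Ju[:, j] = (F(u + du, lam) - f0) / h
    Jl = (F(u, lam + h) - f0) / h
    return Ju, Jl

def pseudo_arclength(F, u0, lam0, tangent0, ds, n_steps, tol=1e-10, max_iter=20):
    """Trace a solution branch of F(u, lam) = 0 from a converged point (u0, lam0)."""
    x = np.append(u0, lam0)
    t = tangent0 / np.linalg.norm(tangent0)
    n = x.size - 1
    branch = [x.copy()]
    for _ in range(n_steps):
        y = x + ds * t                                   # tangent predictor
        for _ in range(max_iter):                        # Newton corrector
            res = np.append(F(y[:n], y[n]), t @ (y - x) - ds)
            if np.linalg.norm(res) < tol:
                break
            Ju, Jl = jac_fd(F, y[:n], y[n])
            J = np.vstack([np.column_stack([Ju, Jl]), t])
            y = y - np.linalg.solve(J, res)
        # New unit tangent: null vector of [Ju, Jl], oriented so that t_old . t_new > 0.
        Ju, Jl = jac_fd(F, y[:n], y[n])
        J = np.vstack([np.column_stack([Ju, Jl]), t])
        t_new = np.linalg.solve(J, np.append(np.zeros(n), 1.0))
        t = t_new / np.linalg.norm(t_new)
        x = y
        branch.append(x.copy())
    return np.array(branch)

# Usage: the cubic branch eps + u - u^3 = 0, starting at u = -1.5 (eps = u^3 - u).
F = lambda u, eps: eps + u - u**3
u0 = np.array([-1.5])
branch = pseudo_arclength(F, u0, (u0**3 - u0)[0], np.array([1.0, 5.75]), 0.05, 150)
```

The bordered (augmented) Jacobian stays nonsingular at the turning points, which is why the method can pass around folds where natural-parameter continuation in \(\varepsilon \) would fail.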

In what follows, we first describe the parsimonious Diffusion Maps algorithm for feature selection. Then, we present the machine learning schemes used for identifying the right-hand-side of the effective PDEs from the LBM simulations, and then we show how one can couple the machine learning models with the pseudo-arc-length continuation method to construct the coarse-scale bifurcation diagrams. Finally, we present the numerical results and compare the performance of the proposed machine learning schemes.

Fig. 1 Schematic of the three-stage workflow for constructing coarse-grained bifurcation diagrams from fine-scale observations using the paradigm of two parabolic PDEs: 1) identify a set of parsimonious coarse-grained observables using Diffusion Maps from high-dimensional spatio-temporal data (here produced from D1Q3 Lattice Boltzmann model simulations) and compute their spatial and time derivatives using finite differences, 2) “learn” the right-hand-side of the PDEs using machine learning (here shallow FNNs with 2 hidden layers and single-layer RPNNs), and 3) employ the numerical bifurcation analysis toolkit (here the pseudo-arc-length continuation method) to construct the coarse-grained bifurcation diagram

2.1 Parsimonious Diffusion Maps

Diffusion Maps is a non-linear manifold learning algorithm [28,29,30] that identifies a low-dimensional representation \({\varvec{y}}_i\in {\mathbb {R}}^{\mu }\) of a point \({\varvec{z}}_i\in {\mathbb {R}}^{n}, i=1,2,\dots N\) in the high-dimensional space (\(\mu \ll n\)), using the diffusion distance among points as the preserved metric [30]. Diffusion Maps assume that the high-dimensional data are embedded in a smooth low-dimensional manifold. It can be shown that the eigenvectors of the large normalized kernel matrices constructed from the data converge to the eigenfunctions of the Laplace-Beltrami operator on this manifold in the limit of infinite data [28, 30]. This Laplace-Beltrami operator is approximated by representing the weighted edges connecting nodes i and j by a normalized diffusion kernel matrix W, commonly with elements:

Then, one can define the \(N\times N\) diffusion matrix P by:

whose elements \( p_{ij}\) correspond to the probability of jumping from one point to another in the high-dimensional space.

Raising the diffusion matrix P to the power t is equivalent to observing t steps forward of a Markov chain process \(Z_t\) on the data points. Thus, the transition probability of moving from point i to point j reads:

On the weighted graph, the random walk can be defined as:

where \(deg(z_i)\) denotes the weighted degree of the point i defined as:

At the next step, it is easy to compute the graph Laplacian matrix \(\tilde{P}\) defined as:

The eigendecomposition of \({\tilde{P}}\) results in \({\tilde{P}}=U\Lambda U^{*}\), where \(\Lambda \) is a diagonal matrix with the eigenvalues and U is the matrix whose columns are the eigenvectors of \({\tilde{P}}\). The eigenvalues of P are the same as those of \({\tilde{P}}\), since P is adjoint to the symmetric matrix \({\tilde{P}}\), while the left and right eigenvectors of P (say \(\Phi \) and \(\Psi \)) are related to those of \({\tilde{P}}\) as [39]:

The embedding of the manifold in \(\mu \) dimensions is realized by taking the first \(\mu \) non-trivial/parsimonious eigenvectors of \(\tilde{P}\):

where t denotes the number of diffusion steps (usually \(t=1\)) and \(\lambda _1,\dots ,\lambda _{\mu }\) the eigenvalues in descending order. For any pair of points \({\varvec{z}}_i\) and \({\varvec{z}}_j\), the diffusion distance at the time step t is defined as:

where \(\Phi _0\) denotes the stationary distribution of the random walk described by the diffusion matrix P [40]:

In practice, the embedding dimension \(\mu \) is determined by the spectral gap in the eigenvalues of the final decomposition. Such a numerical gap means that the first few eigenvalues are adequate for approximating the diffusion distance between all pairs of points [28, 29]. Here, we retain only the \(\mu \) parsimonious eigendimensions of the final decomposition, as proposed in [36, 38].
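The steps above can be sketched in a few lines of NumPy. This minimal sketch assumes a plain Gaussian kernel \(W_{ij}=\exp (-\Vert {\varvec{z}}_i-{\varvec{z}}_j\Vert ^2/\epsilon )\) without any additional density normalization and the random-walk matrix \(P=D^{-1}W\), with D the diagonal matrix of the weighted degrees; it diagonalizes the symmetric conjugate \(D^{-1/2}WD^{-1/2}\) for numerical stability. The function name and the bandwidth `eps` are illustrative choices, not those of the original implementation.

```python
import numpy as np

def diffusion_maps(Z, eps, n_coords, t=1):
    """Diffusion Maps embedding of the rows of Z (N points in R^n).

    Returns the leading eigenvalues (trivial one first) and the diffusion
    coordinates lambda_k^t * psi_k for k = 1..n_coords.
    """
    sq = np.sum(Z**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)
    W = np.exp(-d2 / eps)                       # Gaussian kernel
    deg = W.sum(axis=1)                         # weighted degree deg(z_i)
    d_isqrt = 1.0 / np.sqrt(deg)
    # Symmetric matrix D^{-1/2} W D^{-1/2}: same eigenvalues as P = D^{-1} W.
    P_sym = d_isqrt[:, None] * W * d_isqrt[None, :]
    lam, U = np.linalg.eigh(P_sym)
    order = np.argsort(lam)[::-1]               # descending eigenvalues
    lam, U = lam[order], U[:, order]
    psi = d_isqrt[:, None] * U                  # right eigenvectors of P
    Y = psi[:, 1:n_coords + 1] * lam[1:n_coords + 1] ** t
    return lam[:n_coords + 1], Y
```

For points sampled along a straight segment in \({\mathbb {R}}^3\), the first non-trivial coordinate behaves like \(\cos (\pi s)\) in the arc-length parameter s, i.e. it parametrizes the one-dimensional manifold, while the leading eigenvalue equals 1 because P is a stochastic matrix.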

2.1.1 Feature Selection Using Diffusion Maps with Leave-One-Out Cross-Validation (LOOCV)

Here, by identifying the coarse-scale spatio-temporal behaviour of a system of PDEs, we mean learning their right-hand-sides as a black-box model. Hence, we first have to discover a set of coarse-grained variables embedded in the high-dimensional input data space. For this task, one can employ various feature selection methods, such as LASSO [41, 42] and Random Forests [43,44,45]. In our framework, we used a method that extracts the dominant features based on manifold parametrization through output-informed Diffusion Maps [36]. The core assumption of this method is that a dataset given in a high-dimensional space can be parametrized on a lower-dimensional manifold.

For this purpose, given a set of Diffusion Maps eigenvectors \(\varvec{\phi }_1,\varvec{\phi }_2,\dots ,\varvec{\phi }_{k-1} \in {\mathbb {R}}^N\), for each element \(i=1,2,\dots ,N\) of \(\varvec{\phi }_k\) we use a local linear regression model:

to investigate whether \(\varvec{\phi }_k\) is a dependent eigendirection; here \(\Phi _{k-1,i}=[\phi _{1,i},\phi _{2,i},\dots ,\phi _{k-1,i}]^T\), \(\alpha _{k,i}\in {\mathbb {R}}\) and \(\beta _{k,i}\in {\mathbb {R}}^{k-1}\). The values of the parameters \(\alpha _{k,i}\) and \(\beta _{k,i}\) are found by solving an optimization problem of the form:

where K is a weighting kernel function, usually the Gaussian kernel:

where \(\sigma \) is the shape parameter. The final normalized leave-one-out cross-validation (LOOCV) error for this local linear fit is defined as:

For small values of \(r_k\), \(\varvec{\phi }_k\) is considered to be dependent on the other eigenvectors and hence a harmonic or repeated eigendirection, while large values of \(r_k\) suggest that \(\varvec{\phi }_k\) can serve as a new independent eigendirection.
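A compact sketch of this local linear LOOCV test follows. It is a minimal NumPy sketch, assuming the Gaussian kernel \(\exp (-\Vert \Phi _{k-1,i}-\Phi _{k-1,j}\Vert ^2/\sigma ^2)\) and, by default, a heuristic bandwidth equal to one third of the median pairwise distance; the function names are hypothetical. We also assume the usual convention that the first non-trivial eigenvector is always retained (\(r_1=1\)).

```python
import numpy as np

def loocv_residual(Phi_prev, phi_k, sigma=None):
    """Normalized LOOCV error r_k of a local linear fit of phi_k on Phi_prev.

    Phi_prev: (N, k-1) previously computed eigenvectors; phi_k: (N,).
    """
    N = phi_k.size
    diff = Phi_prev[:, None, :] - Phi_prev[None, :, :]
    d2 = np.sum(diff**2, axis=2)
    if sigma is None:
        # Heuristic bandwidth (an assumption): a third of the median distance.
        sigma = np.median(np.sqrt(d2[d2 > 0])) / 3.0
    K = np.exp(-d2 / sigma**2)
    X = np.column_stack([np.ones(N), Phi_prev])    # rows [1, Phi_{k-1,j}^T]
    pred = np.empty(N)
    for i in range(N):
        w = K[i].copy()
        w[i] = 0.0                                 # leave point i out
        Xw = X * w[:, None]
        # Weighted least squares: (X^T W X) [alpha; beta] = X^T W phi_k
        coef = np.linalg.lstsq(X.T @ Xw, Xw.T @ phi_k, rcond=None)[0]
        pred[i] = X[i] @ coef
    return np.sqrt(np.sum((phi_k - pred) ** 2) / np.sum(phi_k**2))

def parsimonious_residuals(Phi):
    """r_k for each column of Phi (trivial eigenvector removed); r_1 = 1."""
    r = [1.0]
    for k in range(1, Phi.shape[1]):
        r.append(loocv_residual(Phi[:, :k], Phi[:, k]))
    return np.array(r)
```

On a synthetic two-dimensional example with coordinates \(\cos (\pi s)\), \(\cos (\pi y)\) and the harmonic \(\cos (2\pi s)\), the residual is close to 1 for the independent direction \(\cos (\pi y)\) and clearly smaller for the harmonic, which would therefore be discarded.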

In our approach, we provide as inputs to the Diffusion Maps algorithm the combined input-output domain (the observables \({\varvec{z}}_i\) and their spatial and time derivatives). In principle, any subset of variables capable of parametrizing the embedding coordinates selected with the methodology described above can be considered as a new candidate input domain for learning the right-hand-side of the effective PDEs. Specifically, we seek the subsets of input variables that minimally parametrize the intrinsic embedding, quantifying this with a total regression loss \(L_T\) based on the mean squared error:

Here, as \(L_{\varvec{\phi }_k}\), we define the regression loss for representing the intrinsic coordinate \(\varvec{\phi }_k\) as:

where \(g(\cdot )\) is the output of the regressors, with the values of the features in the ambient space as inputs and the eigenvectors \(\varvec{\phi }_k\) as target values. Note that in this procedure we did not include the values of the bifurcation parameter in the dataset. Instead, we applied the above method separately to each subset of data corresponding to the same value of the bifurcation parameter, and finally selected the subset of features with the minimum sum of the total regression losses across all the embedding spaces.
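The selection of the feature subset can be sketched as an exhaustive search over candidate subsets, scoring each by the total regression loss summed over the datasets obtained at different values of the bifurcation parameter. The regressor \(g(\cdot )\) is not specified above, so this minimal sketch assumes a leave-one-out k-nearest-neighbour regressor on standardized features; all function names are hypothetical.

```python
import itertools
import numpy as np

def knn_loo_predict(X, Y, k=5):
    """Leave-one-out k-nearest-neighbour regression of the rows of Y on X."""
    sq = np.sum(X**2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    np.fill_diagonal(d2, np.inf)                  # exclude each point itself
    idx = np.argpartition(d2, k, axis=1)[:, :k]   # k nearest neighbours
    return Y[idx].mean(axis=1)

def total_regression_loss(X, Phi, k=5):
    """L_T: sum over embedding coordinates phi_k of the mean squared error."""
    pred = knn_loo_predict(X, Phi, k)
    return float(np.sum(np.mean((Phi - pred) ** 2, axis=0)))

def select_features(datasets, names, subset_size, k=5):
    """datasets: list of (F, Phi) pairs, one per value of the bifurcation parameter.

    F: (N, n_features) candidate features; Phi: (N, mu) retained eigenvectors.
    """
    best_cols, best_loss = None, np.inf
    for cols in itertools.combinations(range(len(names)), subset_size):
        loss = 0.0
        for F, Phi in datasets:
            Fs = (F - F.mean(axis=0)) / F.std(axis=0)   # standardize features
            loss += total_regression_loss(Fs[:, list(cols)], Phi, k)
        if loss < best_loss:
            best_cols, best_loss = cols, loss
    return [names[c] for c in best_cols], best_loss
```

The exhaustive search grows combinatorially with the number of candidate features, which remains affordable here because the candidate set (observables and a few low-order derivatives) is small.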

2.2 Shallow Feedforward Neural Networks

It is well known that FNNs are universal approximators of any (piecewise) continuous (multivariate) function, to any desired accuracy [46,47,48,49,50]. This implies that any failure of a network must arise from an inadequate choice/calibration of weights and biases or an insufficient number of hidden nodes.

The output of a FNN with two hidden layers, with H hidden units in each layer, that models the right-hand-side of the i-th PDE at each input point \({\varvec{z}}^{(i)}({\varvec{x}}_q,t_s) \in {\mathbb {R}}^{\gamma (i)}\), evaluated at each point in space \({\varvec{x}}_q,\, q=1,\dots ,M\), and time \(t_s, s=1,2,\dots ,N\) can be written as:

where \(\psi (.)\) is the activation function (in the above formulation, it is assumed to be the same for all nodes in the hidden layers), \(\varvec{\omega }^{o(i)}=(\omega _1^{o(i)},\omega _2^{o(i)},\dots ,\omega _H^{o(i)})\in {\mathbb {R}}^{1 \times H}\) are the external weights connecting the second hidden layer and the output, \(b^{o(i)} \in {\mathbb {R}}\) is the bias of the output node, the matrix \({\mathcal {W}}^{1(i)} \in {\mathbb {R}}^{H\times \gamma (i)}\), with rows \(\varvec{\omega }_l^{1(i)} \in {\mathbb {R}}^{\gamma (i)}\), contains the weights connecting the input and the first hidden layer, \({\varvec{b}}^{1(i)}=(b^{1(i)}_1,b^{1(i)}_2,\dots ,b^{1(i)}_H) \in {\mathbb {R}}^H\) are the biases of the nodes of the first hidden layer, the matrix \({\mathcal {W}}^{2(i)}\in {\mathbb {R}}^{H\times H}\) contains the weights \(\omega _{lj}\) connecting the l-th unit of the first hidden layer with the j-th unit of the second hidden layer, and \({\varvec{b}}^{2(i)}=(b^{2(i)}_1,b^{2(i)}_2,\dots ,b^{2(i)}_H)\in {\mathbb {R}}^H\) are the biases of the second hidden layer. The above formula extends directly to more than two hidden layers. Then, a loss function for each one of the m PDEs can be specified as:

Foresee and Hagan [51] showed that minimizing the cost function augmented with the regularization term \(E_{\omega }=\sum _{j=1}^H\omega ^2_j\) is equivalent to maximizing the posterior probability of the weights based on Bayes’ rule. Hence, the total cost function is:

where \(\lambda \) is the regularization parameter that has to be tuned. For our simulations, we used Bayesian regularized back-propagation, updating the weight values with the Levenberg-Marquardt algorithm [52], as implemented in MATLAB R2020b.
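For concreteness, the forward pass and the regularized cost can be written as the following NumPy sketch. It does not reproduce the Bayesian regularization with Levenberg-Marquardt updates used in MATLAB; it only evaluates the network output and the cost for given weights. The hyperbolic tangent activation, the choice of which weight arrays enter \(E_{\omega }\) (passed explicitly through the `weights` argument) and the function names are assumptions for illustration.

```python
import numpy as np

def fnn_two_hidden(Z, W1, b1, W2, b2, w_o, b_o, act=np.tanh):
    """Output of a two-hidden-layer FNN for a batch of inputs Z (points x gamma).

    W1: (H, gamma) with rows w_l^1; W2: (H, H) with W2[l, j] linking unit l of
    the first hidden layer to unit j of the second; w_o: (H,); b_o: scalar.
    """
    h1 = act(Z @ W1.T + b1)        # first hidden layer, (points, H)
    h2 = act(h1 @ W2 + b2)         # second hidden layer, (points, H)
    return h2 @ w_o + b_o          # linear output node, (points,)

def regularized_cost(u_t, u_hat, weights, lam):
    """Sum of squared errors E_D plus lam times the sum of squared weights E_w."""
    E_D = np.sum((u_t - u_hat) ** 2)
    E_w = sum(np.sum(w**2) for w in weights)
    return E_D + lam * E_w
```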

2.3 Random Projection Neural Networks

Random Projection Neural Networks (RPNNs) are a family of neural networks including Random Vector Functional Links (RVFLs) [53, 54], Reservoir Computing/Echo State Networks [55, 56], Extreme Learning Machines [57] and Liquid-State Networks [58]. The basic idea behind all these approaches, whose seeds can be found in the pioneering work of Rosenblatt in the 1960s [59], is to use FNNs with fixed weights between the input and the hidden layer(s), fixed biases for the nodes of the hidden layer(s), and a linear output layer. With this configuration, the output is projected linearly onto the functional subspace spanned by the nonlinear basis functions of the hidden layer, and the only remaining unknowns are the weights between the hidden and the output layer. These are estimated by solving a nonlinear regularized least squares problem [60, 61]. The universal approximation properties of RPNNs have been proved in a series of papers (see e.g. [53, 54, 56, 57]). In general, the universal approximation property of random projections can be rationalized by the celebrated Johnson-Lindenstrauss (JL) Theorem [62]:

Theorem 1

(Johnson-Lindenstrauss) Let \({\mathcal {Z}} \in {\mathbb {R}}^{n\times N}\) be a matrix with N points \({\varvec{z}}_i \in {\mathbb {R}}^n\). Then, \(\forall \epsilon \in (0,1)\) and \(\mu \in {\mathbb {N}}\) such that \(\mu \ge O (\frac{\ln {N}}{\epsilon ^2})\), there exists a map \(G: {\mathbb {R}}^n \rightarrow {\mathbb {R}}^{\mu }\) such that \(\forall {\varvec{z}}_i, {\varvec{z}}_j \in {\mathcal {Z}}\):

Note that while the above theorem is deterministic, its proof relies on probabilistic techniques combined with Kirszbraun’s theorem to yield a so-called extension mapping [62]. In particular, it can be shown that one of the many such embedding maps is simply a linear projection matrix R with entries \(r_{ij}\) that are i.i.d. random variables sampled from a normal distribution. Indeed, the JL Theorem may be proved using the following lemma.

Lemma 1

Let \({\mathcal {Z}}\) be a set of N points in \({\mathbb {R}}^n\) and let \(G({\varvec{z}})\) be the random projection defined by

where \(R = [r_{ij}] \in {\mathbb {R}}^{\mu \times n}\) has components that are i.i.d. random variables sampled from a normal distribution. Then, \(\forall \, {\varvec{z}} \in {\mathcal {Z}}\)

is true with probability \(p \ge 1-2\exp \left( -(\epsilon ^2-\epsilon ^3)\frac{\mu }{4} \right) \).

Similar proofs have been given for distributions different from the normal one (see, e.g. [63,64,65,66]).
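The statement of Lemma 1 can be checked numerically. The following sketch assumes the standard scaling \(G({\varvec{z}})=R{\varvec{z}}/\sqrt{\mu }\) with standard normal entries of R, projects N random points, and reports the fraction of pairwise squared distances preserved within a factor \(1\pm \epsilon \); the dimensions and the value of \(\epsilon \) are arbitrary illustrative choices.

```python
import numpy as np

def random_projection(Z, mu, rng):
    """Project the columns of Z (n x N) to R^mu with G(z) = R z / sqrt(mu)."""
    R = rng.standard_normal((mu, Z.shape[0]))
    return R @ Z / np.sqrt(mu)

def fraction_preserved(Z, G, eps):
    """Fraction of pairs whose squared distance is kept within [1-eps, 1+eps]."""
    N = Z.shape[1]
    ok, total = 0, 0
    for i in range(N):
        for j in range(i + 1, N):
            d_orig = np.sum((Z[:, i] - Z[:, j]) ** 2)
            d_proj = np.sum((G[:, i] - G[:, j]) ** 2)
            ok += (1 - eps) * d_orig <= d_proj <= (1 + eps) * d_orig
            total += 1
    return ok / total

rng = np.random.default_rng(0)
Z = rng.standard_normal((1000, 50))      # N = 50 points in R^1000
G = random_projection(Z, 400, rng)       # mapped to R^400
print(fraction_preserved(Z, G, 0.3))
```

Since each projected squared distance is distributed as a scaled \(\chi ^2_{\mu }\) variable, the probability bound of Lemma 1 implies that, for these sizes, practically all pairs satisfy the inequality.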

The above is a feature mapping, which may result in a dimensionality reduction (\(\mu <n\)) or, in analogy to the case of kernel-based manifold learning methods, a projection into a higher dimensional space (\(\mu >n\)). We also note that while the above linear random projection is but one of the choices for constructing a JL embedding, it has been experimentally demonstrated and/or theoretically proven that appropriately constructed nonlinear random embeddings may outperform simple linear random projections. For example, in [67] it was shown that deep networks with random weights for each layer result in even better approximation accuracy than the simple linear random projection.

Here, for learning the right-hand-side of the set of PDEs, we used RPNNs (in the form of an Extreme Learning Machine [57]) with a single hidden layer [60]. For H hidden units in the hidden layer, the output of the proposed RPNN can be written as:

where \({\varvec{z}}^{(i)}({\varvec{x}}_q,t_s) \in {\mathbb {R}}^{\gamma (i)}\) denotes the inputs computed at each point in space \({\varvec{x}}_q,\, q=1,\dots ,M\), and time \(t_s, s=1,2,\dots ,N\), \(\psi (.)\) is the activation function, \(\varvec{\omega }^{o(i)}=(\omega _1^{o(i)},\omega _2^{o(i)},\dots ,\omega _H^{o(i)})\in {\mathbb {R}}^{1 \times H}\) are the external weights connecting the hidden layer and the output, \(b^{o(i)} \in {\mathbb {R}}\) is the bias of the output node, and the matrix \({\mathcal {W}}^{(i)} \in {\mathbb {R}}^{H\times \gamma (i)}\), with rows \(\varvec{\omega }_j^{(i)} \in {\mathbb {R}}^{\gamma (i)}\), and \({\varvec{b}}^{(i)}=(b^{(i)}_1,b^{(i)}_2,\dots ,b^{(i)}_H) \in {\mathbb {R}}^H\) are the weights connecting the input and the hidden layer and the biases of the hidden layer, respectively. In the proposed RPNN scheme, \(\varvec{\omega }^{(i)}_j\) and \(b^{(i)}_j\) are random variables drawn from appropriate uniform distributions, the output bias \(b^{o(i)}\) is set to zero, and the output weights \(\varvec{\omega }^{o(i)} \in {\mathbb {R}}^{1\times H}\) are determined by solving a linear least squares problem:

where \(\hat{\varvec{u}}^{(i)}_t\in {\mathbb {R}}^{MN}\) is the vector collecting all the outputs \({\hat{u}}^{(i)}_t(\varvec{x}_q,t_s)\) of the RPNN for \(q=1,\dots ,M\) and \(s=1,\dots ,N\), and \({\mathcal {A}}^{(i)} \in {\mathbb {R}}^{H\times MN}\) is the matrix whose elements \({\mathcal {A}}_{j,k}\) are given by:

where \(\varvec{z}^{(i)}_k=\varvec{z}^{(i)}(\varvec{x}_q,t_s)\) and \(k=q+(s-1)M\). Since, in the regression problem, generally \(H\ll MN\), the system in Eq. (26) is over-determined. As the resulting projection matrix \({\mathcal {A}}\) is not guaranteed to have full row rank, the solution can be computed with the Singular Value Decomposition (SVD). Given the SVD of \({\mathcal {A}}\), the pseudo-inverse \({\mathcal {A}}^{+}\) is:

where \(U \in {\mathbb {R}}^{H\times H}\) and \(V \in {\mathbb {R}}^{MN \times MN}\) are the unitary matrices of left and right singular vectors, respectively, and \(\Sigma \in {\mathbb {R}}^{H\times MN}\) is the rectangular diagonal matrix of the H singular values \(\sigma _j\). Finally, to regularize the problem, we can retain only the \({\tilde{H}}\le H\) singular values \({\tilde{\sigma }}\) that are greater than a given tolerance, i.e., \({\tilde{\sigma }} \in \{\sigma _j\, |\,\sigma _j>tol, j=1,\dots ,H\}\). Hence, the output weights \(\varvec{\omega }^{o(i)}\) are computed as:

where \({\tilde{U}} \in {\mathbb {R}}^{H\times {\tilde{H}}}\), \({\tilde{V}} \in {\mathbb {R}}^{MN\times {\tilde{H}}}\) and \({\tilde{\Sigma }} \in {\mathbb {R}}^{{\tilde{H}}\times {\tilde{H}}}\) are restricted to the \({\tilde{\sigma }}\)s.
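The computation of the output weights can be sketched as follows. This minimal NumPy sketch assumes the logistic sigmoid activation and, only to keep the example self-contained, hidden weights and biases drawn from a uniform distribution as in the generic approach discussed in Sect. 2.3.1, rather than the proposed data-driven sampling; the tolerance, the test function and all function names are illustrative.

```python
import numpy as np

def sigmoid(y):
    return 1.0 / (1.0 + np.exp(-y))

def rpnn_design_matrix(Z, W, b):
    """A[j, k] = psi(w_j . z_k + b_j); Z: (MN, gamma), W: (H, gamma), b: (H,)."""
    return sigmoid(W @ Z.T + b[:, None])

def rpnn_output_weights(A, target, tol=1e-8):
    """Least-squares solution of w_o A = target via a truncated-SVD pseudo-inverse."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)   # A = U diag(s) Vt
    keep = s > tol                                     # retained singular values
    # A^+ restricted to the kept singular triplets: V_k diag(1/s_k) U_k^T
    A_pinv = (Vt[keep].T / s[keep]) @ U[:, keep].T
    return target @ A_pinv

# Usage: fit a smooth target on random 2D inputs with H = 200 basis functions.
rng = np.random.default_rng(0)
Z = rng.uniform(-1, 1, size=(2000, 2))
target = np.sin(Z[:, 0]) + Z[:, 1] ** 2
W = rng.uniform(-3, 3, size=(200, 2))
b = rng.uniform(-3, 3, size=200)
A = rpnn_design_matrix(Z, W, b)
w_o = rpnn_output_weights(A, target)
fit = w_o @ A
```

Using the reduced SVD (`full_matrices=False`) avoids forming the full \(MN\times MN\) matrix V, which would be prohibitive for realistic spatio-temporal datasets.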

For the regression problem, we aim at learning the right-hand-side of the PDEs from spatio-temporal data with single-layer RPNNs with H random basis functions:

Then the approximated function \({\hat{F}}^{(i)}\) is just a linear combination of the random basis functions \(\psi _j^{(i)}\). For our computations, we selected as activation function the logistic sigmoid \(\psi :y\in {\mathbb {R}}\rightarrow \psi (y) \in {\mathbb {R}}\) given by:

where y is given by the linear combination \(y=\varvec{\omega }_j^{(i)} \cdot {\varvec{z}}^{(i)}+b^{(i)}_j\).

2.3.1 Random Sampling Procedure

For the construction of an appropriate set of random basis functions for the solution of the inverse problem (i.e. that of learning the effective PDEs from data), we propose a random sampling procedure different from the one usually implemented in RPNNs, and in particular in Extreme Learning Machines [60, 61, 68,69,70,71], for the solution of the forward problem, i.e. the numerical solution of Partial Differential Equations. Since in the inverse problem we aim at solving a high-dimensional over-determined system (\(MN\gg H\)), it is important to select the underlying basis functions \(\psi _j^{(i)}\) parsimoniously, i.e. to seek appropriate internal weights \({\mathcal {W}}^{(i)}\) and biases \(\varvec{b}^{(i)}\) that lead to non-trivial functions.

In general, the weights \(\omega _j^{(i)}\) and biases \(b_j^{(i)}\) are sampled uniformly at random from a subset of the input/feature space, e.g., \(\omega _j^{(i)},\, b_j^{(i)} \sim {\mathcal {U}}([-1,1]^{\gamma (i)})\), where a high dimension \(\gamma (i)\) of the input/feature space leads to the phenomenon referred to as the “curse of dimensionality”. Indeed, many functions (\(H \propto 10^{\gamma (i)}\)) are needed to correctly “explore” the input space and obtain a good basis.

Hence, our goal is to construct \(\omega _j^{(i)}\) and \(b_j^{(i)}\) with a simple data-driven manifold learning procedure, in order to obtain a basis of functions \(\psi _j^{(i)}\) that describe well the manifold \({\mathcal {M}}^{(i)}\) on which the data \(\varvec{z}^{(i)}(\varvec{x}_q,t_s) \in {\mathcal {M}}^{(i)}, \forall q,\forall s\) are embedded. It is well known that the output of a neuron is given by a ridge function \(f:{\mathbb {R}}^n \rightarrow {\mathbb {R}}\) such that \(f(z_1,\dots ,z_n)=g({\varvec{a}}^T\cdot {\varvec{z}})\), where \(g:{\mathbb {R}} \rightarrow {\mathbb {R}}\) and \({\varvec{a}} \in {\mathbb {R}}^n \). The inflection point of the logistic sigmoid is at (\(y=0\), \(\psi (y)=1/2\)). The points that satisfy the following relation [60, 61]:

form a hyperplane \({\mathcal {H}}_j^{(i)}\) of \({\mathbb {R}}^{\gamma (i)}\) (the dimension of \({\varvec{z}}^{(i)}\)) with normal direction \(\varvec{\omega }_j^{(i)}\). On \({\mathcal {H}}_j^{(i)}\), \(\psi _j^{(i)}\) is constantly equal to 1/2. We call the points \(c^{(i)}_j \in {\mathcal {H}}_j^{(i)}\) the centers of the ridge function \(\psi _j^{(i)}\). Here, the goal is to select H centers \(c^{(i)}_j\) that lie on the manifold \({\mathcal {M}}^{(i)}\) (note that this is not achieved by random weights and biases) and to find directions \(\varvec{\omega }_j^{(i)}\) that make \(\psi _j^{(i)}\) non-constant/non-trivial along \({\mathcal {M}}^{(i)}\) (note that for ridge functions there are many directions for which this does not happen).

Thus, since \(H\ll MN\), we propose to sample uniformly at random H points \(c^{(i)}_j\) from the inputs \({\varvec{z}}(\varvec{x}_q,t_s)\) to serve as the centers of the functions \(\psi _j^{(i)}\): in this way, the inflection points of \(\psi _j^{(i)}\) lie on the manifold \({\mathcal {M}}^{(i)}\). We also independently sample at random another H points \({\tilde{c}}_j^{(i)}\) from the inputs \({\varvec{z}}(\varvec{x}_q,t_s)\). Then, we construct the hidden weights as:

in order to set the direction \(\varvec{\omega }^{(i)}_j\), normal to the hyperplane \({\mathcal {H}}_j^{(i)}\), parallel to the segment connecting \(c^{(i)}_j\) and \({\tilde{c}}^{(i)}_j\). By doing so, the ridge function is constant along directions orthogonal to the segment connecting the two points of the manifold \({\mathcal {M}}^{(i)}\) and varies along this segment, so it is able to discriminate between the points lying along this direction. Finally, the biases \(b_j^{(i)}\) are set to:

Eq. (32) ensures that \(c^{(i)}_j \in {\mathcal {H}}_j^{(i)}\) is a center of the ridge function.
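The sampling procedure can be sketched as follows. The exact normalization of the hidden weights defined above is not reproduced here; this minimal sketch assumes \(\varvec{\omega }_j^{(i)}=({\tilde{c}}_j^{(i)}-c_j^{(i)})/\Vert {\tilde{c}}_j^{(i)}-c_j^{(i)}\Vert ^2\), so that the sigmoid argument equals 0 at \(c_j^{(i)}\) and 1 at \({\tilde{c}}_j^{(i)}\), and sets the biases so that each center lies on its hyperplane, as required by Eq. (32). The function name is hypothetical.

```python
import numpy as np

def sample_hidden_parameters(Z, H, rng):
    """Data-driven hidden weights and biases from pairs of sampled input points.

    Z: (MN, gamma) inputs. Returns W (H, gamma), b (H,) and the centres c (H, gamma).
    """
    n = Z.shape[0]
    idx_c = rng.choice(n, size=H, replace=False)   # centres c_j on the data
    idx_t = rng.choice(n, size=H, replace=False)   # independent points c~_j
    # Redraw c~_j wherever it coincides with c_j (a zero direction is useless);
    # this assumes the data are not all identical.
    same = np.all(Z[idx_t] == Z[idx_c], axis=1)
    while np.any(same):
        idx_t[same] = rng.choice(n, size=int(same.sum()))
        same = np.all(Z[idx_t] == Z[idx_c], axis=1)
    c, c_tilde = Z[idx_c], Z[idx_t]
    diff = c_tilde - c
    W = diff / np.sum(diff**2, axis=1, keepdims=True)   # assumed scaling
    b = -np.sum(W * c, axis=1)                          # w_j . c_j + b_j = 0
    return W, b, c
```

Because the centers are data points, every basis function has its inflection point on the sampled manifold, independently of the ambient dimension \(\gamma (i)\).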

3 Coarse-Grained Numerical Bifurcation Analysis From Spatio-Temporal Data

For assessing the performance of the proposed scheme, we selected the celebrated, well-studied FitzHugh-Nagumo (FHN) model, first introduced in [72] to simplify the Hodgkin-Huxley model into a two-dimensional system of ODEs describing the dynamics of the voltage across a nerve cell. In particular, we considered the FHN PDEs, which add a spatial diffusion term to describe the propagation of an action potential as a traveling wave. The bifurcation diagram of this one-dimensional (in space) system of PDEs is known to have a turning point and two supercritical Andronov-Hopf bifurcation points. In what follows, we describe the model along with the initial and boundary conditions, and then we present the D1Q3 Lattice Boltzmann model.

3.1 The FitzHugh-Nagumo PDEs

The activation \(u:[x_0,x_{end}]\times [t_0,t_{end}]\rightarrow {\mathbb {R}}\) and inhibition \(v:[x_0,x_{end}]\times [t_0,t_{end}]\rightarrow {\mathbb {R}}\) dynamics are described by the following two coupled nonlinear parabolic PDEs:

with homogeneous Neumann boundary conditions:

Here, \(\alpha _0\) and \(\alpha _1\) are parameters and \(\varepsilon \) is the kinetic bifurcation parameter.

In our simulations, we set \(x_0=0\), \(x_{end}=20\), \(\alpha _1=2,\alpha _0=-0.03, D^u=1, D^v=4\) and varied the bifurcation parameter \(\varepsilon \) in the interval [0.005, 0.955] [73]. To explore the dynamic behaviour, we considered various initial conditions \(u_0(x)=u(x,0)\) and \(v_0(x)=v(x,0)\), selected randomly as follows:

where \({\mathcal {U}}(a,b)\) denotes the uniform distribution in the interval [a, b].

3.2 The D1Q3 Lattice Boltzmann model

Here, a Lattice Boltzmann model (LBM) serves as our higher-dimensional simulator. The LBM originated as a (mesoscopic) alternative for the numerical solution of PDEs in fluid mechanics (see e.g. [74,75,76,77,78,79]), based on the Boltzmann kinetic equation (see [75, 80] for a review and perspectives). To reduce the large number of equations and unknowns, the statistical description of the system at a mesoscopic level uses the concept of a distribution function \(f(\vec {r},\vec {c},t)\), i.e. \(f(\vec {r},\vec {c},t)d\vec {r} d\vec {c}\) is the infinitesimal probability of finding particles at location \(\vec {r}\) with velocities \(\vec {c}\) at a given time t. At this level, a system without an external force is governed by the Boltzmann transport equation [81]:

where the term \({\mathcal {R}}(f)\) describes the rate of collisions between particles. In 1954, Bhatnagar, Gross and Krook (BGK) [82] introduced an approximation model for the collision operator:

where \(\tau \) is the so-called relaxation time and \(f^{eq}\) denotes the local equilibrium distribution function. As recently shown in [83], the BGK model of the Boltzmann equation can serve as a generator of a broad class of nonlinear PDEs.

In the LBM approach, Eqs. (38)-(39) are collocated (i.e., assumed valid) along specific directions \(\vec {c}_i\) on a lattice:

and then Eq. (40) is discretized with a time step \(\Delta t\) as follows:

One common interpretation of Eq. (41) is to think of the distribution functions as fictitious particles that stream and collide along specified links of the lattice. Lattices are usually denoted by DnQm, where n is the spatial dimension of the problem and m refers to the number of connections of each node in the lattice. The nodes of the lattice coincide with the points of a spatial grid with spatial step \(\Delta x\).

Here, in order to estimate the coarse-scale observables u and v of the FHN dynamics, we considered the D1Q3 implementation, i.e. the one-dimensional lattice with three velocities \(c_i\): particles can stream to the right (\(c_1=\frac{\Delta x}{\Delta t}\)), stream to the left (\(c_{-1}=-\frac{\Delta x}{\Delta t}\)) or stay at rest on the node (\(c_0=0\)). We also assume the coexistence of two different distribution functions, \(f^u_i\) for the activator particles and \(f^v_i\) for the inhibitor particles, where the subscript i refers to the associated direction. Therefore, one can picture six fictitious particles on each node of the lattice at each instant: two resting on the node (with distributions \(f^u_0\) and \(f^v_0\)), two moving to the left (with distributions \(f^u_{-1}\) and \(f^v_{-1}\)) and two moving to the right (with distributions \(f^u_{1}\) and \(f^v_{1}\)). The relation between the above distributions and the coarse-scale densities u and v is given by the zeroth moment (across the velocity directions) of the overall distribution function:

The coexistence of multiple distributions makes it necessary to introduce weights \(\omega _i\) for the connections in the lattice, which should satisfy the following properties:

- (a) Normalization: \(\omega _0+\omega _1+\omega _{-1}=1\);

- (b) Symmetry: \(\omega _1-\omega _{-1}=0\);

- (c) Isotropy:

  - (c.1) \(\omega _0c_0^2+\omega _1c_1^2+\omega _{-1}c_{-1}^2=c_s^2\),

  - (c.2) \(\omega _0c_0^3+\omega _1c_1^3+\omega _{-1}c_{-1}^3=0\),

  - (c.3) \(\omega _0c_0^4+\omega _1c_1^4+\omega _{-1}c_{-1}^4=3c_s^4\),

where \(c_s\) is the speed of sound in the lattice. Thus, the weights are equal to \(\omega _{\pm 1}=1/6\) for the moving particles and \(\omega _0=4/6\) for the resting particle. The resulting speed of sound in the lattice is \(c_s=\frac{\sqrt{3}\Delta x}{3\Delta t}\).
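As a quick consistency check, the D1Q3 weights and speed of sound satisfy conditions (a) to (c). The sketch below verifies them with exact rational arithmetic in lattice units, assuming \(\Delta x=\Delta t=1\) only for the check, so that \(c_{\pm 1}=\pm 1\) and \(c_s^2=1/3\):

```python
from fractions import Fraction as Fr

# D1Q3 lattice in units with dx = dt = 1: velocities c_{-1}, c_0, c_{+1}
c = {-1: -1, 0: 0, 1: 1}
w = {-1: Fr(1, 6), 0: Fr(4, 6), 1: Fr(1, 6)}
cs2 = Fr(1, 3)  # c_s^2 = (sqrt(3)/3)^2

def moment(p):
    """p-th velocity moment sum_i w_i c_i^p, computed exactly."""
    return sum(w[i] * c[i] ** p for i in c)

assert moment(0) == 1            # (a) normalization
assert w[1] - w[-1] == 0         # (b) symmetry
assert moment(2) == cs2          # (c.1)
assert moment(3) == 0            # (c.2)
assert moment(4) == 3 * cs2**2   # (c.3)
```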

As the BGK operator (39) suggests, a key step in applying the LBM to reaction-advection-diffusion PDEs is to determine the local equilibrium distribution function \(f^{eq}\) associated with a given model. For particles with macroscopic density \(\rho \) moving in a medium with macroscopic velocity \(\vec {u}_m\), the Maxwell distribution is:

where d is the spatial dimension of the problem, T is the temperature and R is the universal gas constant. The exponential in Eq. (43) can be expanded in a Taylor series, neglecting terms of order \(O(|\vec {u}_m|^3)\) and higher, to obtain:

with \(\omega (\vec {c})=(2\pi RT)^{-d/2}\text {exp}\biggl (-\dfrac{\vec {c}\cdot \vec {c}}{2RT} \biggr )\) and \(RT=c_s^2\), where \(c_s\) is the speed of sound.

Since the FHN PDEs have no advection terms, the medium is stationary (\(\vec {u}_m=0\)) and the equilibrium distribution function, discretized along the lattice directions \(c_i\), simplifies to:

In the FHN model, we also need to account for the reaction terms \(R^l_i\); hence, the time evolution of the LBM simulator associated with the FHN model on a given D1Q3 lattice is:

where the superscript l denotes the activator u or the inhibitor v, and the reaction terms \(R^l_i\) are given by:

Finally, the relaxation coefficient \(\dfrac{\Delta t}{\tau ^l}\) is related to the macroscopic diffusion coefficient \(D^l\) of the FHN model and, in general, depends on the speed of sound \(c_s\) associated with the lattice [84]:
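A compact NumPy sketch of one D1Q3 collide-and-stream step for both species is given below. It illustrates the scheme under explicit assumptions and is not the authors' simulator: the FHN kinetics are assumed to take the standard form \(F^u=u-u^3-v\), \(F^v=\varepsilon (u-\alpha _1 v-\alpha _0)\); the reaction term is assumed to be distributed as \(R^l_i=\omega _i\Delta t F^l\); the relaxation coefficient is taken from the common diffusive relation \(D^l=c_s^2(\tau ^l-\Delta t/2)\), i.e. \(\Delta t/\tau ^l=2/(1+6D^l\Delta t/\Delta x^2)\); and the zero-flux (homogeneous Neumann) walls are imposed by bounce-back. All function names are hypothetical.

```python
import numpy as np

W = np.array([1.0 / 6.0, 4.0 / 6.0, 1.0 / 6.0])  # D1Q3 weights, rows ordered (c_-1, c_0, c_+1)

def fhn_reaction(u, v, eps, a0=-0.03, a1=2.0):
    """Assumed FHN kinetics: F_u = u - u^3 - v, F_v = eps (u - a1 v - a0)."""
    return u - u**3 - v, eps * (u - a1 * v - a0)

def relaxation(D, dt, dx):
    """Delta t / tau from D = c_s^2 (tau - dt/2) with c_s^2 = dx^2 / (3 dt^2)."""
    return 2.0 / (1.0 + 6.0 * D * dt / dx**2)

def lbm_step(fu, fv, Du, Dv, dt, dx, reaction):
    """One BGK collide-and-stream step for both species; fu, fv have shape (3, N)."""
    u, v = fu.sum(axis=0), fv.sum(axis=0)        # zeroth moments = coarse densities
    Fu, Fv = reaction(u, v)
    new_fs = []
    for f, rho, D, F in ((fu, u, Du, Fu), (fv, v, Dv, Fv)):
        feq = W[:, None] * rho                    # f_i^eq = w_i * rho (no advection)
        post = f - relaxation(D, dt, dx) * (f - feq) + dt * W[:, None] * F
        new = np.empty_like(post)
        new[1] = post[1]                          # resting population
        new[2, 1:] = post[2, :-1]                 # stream to the right
        new[0, :-1] = post[0, 1:]                 # stream to the left
        new[2, 0] = post[0, 0]                    # bounce-back at x_0: zero flux
        new[0, -1] = post[2, -1]                  # bounce-back at x_end: zero flux
        new_fs.append(new)
    return new_fs[0], new_fs[1]

# usage: FHN on [0, 20] with Du = 1, Dv = 4, eps = 0.1 (illustrative values)
N, dx, dt = 41, 0.5, 0.05
x = np.linspace(0.0, 20.0, N)
fu = W[:, None] * np.tanh(x - 10.0)               # start at local equilibrium
fv = W[:, None] * np.full(N, 0.1)
for _ in range(200):
    fu, fv = lbm_step(fu, fv, 1.0, 4.0, dt, dx, lambda a, b: fhn_reaction(a, b, 0.1))
u, v = fu.sum(axis=0), fv.sum(axis=0)
```

Without reaction terms, the bounce-back walls conserve the total mass of each species exactly, which is the discrete counterpart of the no-flux conditions.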

4 Algorithm Flow Chart

In summary, the proposed three-tier algorithm for constructing bifurcation diagrams from data is given as pseudocode in Algorithm 1. The first two steps concern the identification of the effective coarse-scale observables and the learning of the right-hand side of the effective PDEs. In the third step, the pseudo-arc-length continuation method is applied to trace the solution branch through saddle-node bifurcations.

5 Numerical Results

5.1 Numerical Bifurcation Analysis of the FHN PDEs

For comparison purposes, we first constructed the bifurcation diagram of the FHN PDEs using central finite differences. Discretizing the one-dimensional PDEs on M points with second-order central finite differences in the interval \(0\le x \le 20\) leads to the following system of \(2(M-2)\) nonlinear algebraic equations \(\forall x_j=(j-1)h, j=2,\dots M-1 \), \(h=\frac{20}{M-1}\):

At the boundaries, we imposed homogeneous Neumann boundary conditions. The above set of \(2(M-2)\) nonlinear algebraic equations is solved iteratively using Newton’s method. The non-zero elements of the Jacobian matrix are given by:
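The finite-difference steady-state problem and its Newton solution can be sketched as follows. The sketch rests on assumptions that are not taken from the text: the FHN right-hand sides are assumed to have the standard form \(D^uu_{xx}+u-u^3-v\) and \(D^vv_{xx}+\varepsilon (u-\alpha _1v-\alpha _0)\), and the Neumann conditions are imposed through the simple first-order closure \(u_1=u_2\), \(u_M=u_{M-1}\) (likewise for v), which eliminates the boundary values and leaves the \(2(M-2)\) interior unknowns. The authors' exact boundary treatment may differ, and the function names are hypothetical. The starting guess is a small perturbation of a spatially homogeneous steady state, to which Newton's method converges quickly.

```python
import numpy as np

# Assumed FHN steady-state problem (standard form; parameters from Sect. 3.1):
#   0 = Du*u_xx + u - u**3 - v,   0 = Dv*v_xx + eps*(u - a1*v - a0)
A0, A1, DU, DV, LX = -0.03, 2.0, 1.0, 4.0, 20.0

def neumann_laplacian(n, h):
    """Central second differences on the n = M-2 interior nodes, with the boundary
    values eliminated by the first-order closure z_1 = z_2, z_M = z_{M-1}."""
    K = (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
         + np.diag(np.ones(n - 1), -1))
    K[0, 0] = K[-1, -1] = -1.0
    return K / h**2

def residual(w, eps, M):
    """The 2(M-2) nonlinear algebraic equations; w stacks interior u and v."""
    n, h = M - 2, LX / (M - 1)
    u, v = w[:n], w[n:]
    K = neumann_laplacian(n, h)
    return np.concatenate((DU * (K @ u) + u - u**3 - v,
                           DV * (K @ v) + eps * (u - A1 * v - A0)))

def jacobian(w, eps, M):
    """Analytic Jacobian of residual(), arranged in 2x2 blocks."""
    n, h = M - 2, LX / (M - 1)
    u = w[:n]
    K, I = neumann_laplacian(n, h), np.eye(n)
    return np.block([[DU * K + np.diag(1.0 - 3.0 * u**2), -I],
                     [eps * I, DV * K - eps * A1 * I]])

def newton(w0, eps, M, tol=1e-10, maxit=25):
    """Plain Newton iteration; returns the iterate and a convergence flag."""
    w = np.array(w0, dtype=float)
    for _ in range(maxit):
        F = residual(w, eps, M)
        if np.linalg.norm(F, np.inf) < tol:
            return w, True
        w = w - np.linalg.solve(jacobian(w, eps, M), F)
    return w, bool(np.linalg.norm(residual(w, eps, M), np.inf) < tol)

# usage: start near the homogeneous steady state u ~ v ~ 0.03
M, eps = 41, 0.1
xi = np.linspace(0.0, LX, M)[1:-1]
w0 = np.concatenate((0.03 + 1e-3 * np.cos(np.pi * xi / LX), np.full(M - 2, 0.03)))
w, converged = newton(w0, eps, M)
```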

To trace the solution branch past the critical points, we used the pseudo-arc-length continuation method ([85,86,87]). This involves the parametrization of u(x), v(x) and \(\varepsilon \) by the arc-length s along the solution branch. The solution is sought in terms of \({\tilde{u}}(x,s)\), \({\tilde{v}}(x,s)\) and \({\tilde{\varepsilon }}(s)\) iteratively, by solving the following augmented system until convergence:

where

and

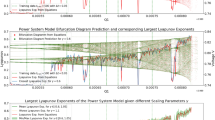

where (\({\tilde{u}}(x)_{-2}\),\({\tilde{v}}(x)_{-2}\)) and (\({\tilde{u}}(x)_{-1}\),\({\tilde{v}}(x)_{-1}\)) are two previously computed consecutive solutions for \({\tilde{\varepsilon }}_{-2}\) and \({\tilde{\varepsilon }}_{-1}\), respectively, and ds is the arc-length step with which a new solution around the previous solution \(({\tilde{u}}(x)_{-2},{\tilde{v}}(x)_{-2},{\tilde{\varepsilon }}_{-2})\) is sought along the solution branch. The corresponding reference bifurcation diagram is shown in Fig. 2. In this range of values, there is an Andronov-Hopf bifurcation at \(\varepsilon \approx 0.018497\) and a fold point at \(\varepsilon \approx 0.95874\). Finally, Fig. 3 depicts the convergence errors of the numerical solutions computed with finite differences.
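The secant predictor and Newton corrector of an augmented system can be illustrated on a scalar toy problem. The sketch below is a generic pseudo-arc-length step, not the authors' code: it assumes the common arc-length constraint \(\hat{t}^\top (y-y_{-1})-ds=0\), with the secant tangent \(\hat{t}\) computed from the two previous solutions \(y_{-2}, y_{-1}\) (each y stacks the state and the parameter); it uses a forward-difference Jacobian with \(\delta =10^{-6}\), the same step reported for the learned models; and it traces the hypothetical test function \(F(x,\lambda )=\lambda -x^2\), whose branch \(\lambda =x^2\) turns back at \((0,0)\).

```python
import numpy as np

def fd_jacobian(G, y, delta=1e-6):
    """Forward-difference Jacobian of G at y, one column per perturbed component."""
    g0 = G(y)
    J = np.empty((g0.size, y.size))
    for k in range(y.size):
        yk = y.copy()
        yk[k] += delta
        J[:, k] = (G(yk) - g0) / delta
    return J

def arclength_step(F, y_m2, y_m1, ds, tol=1e-10, maxit=30):
    """One pseudo-arc-length step; y stacks (state, parameter) and
    F(state, parameter) is the steady-state residual."""
    t = (y_m1 - y_m2) / np.linalg.norm(y_m1 - y_m2)   # secant tangent
    y = y_m1 + ds * t                                   # predictor

    def G(z):  # augmented system: steady state + arc-length constraint
        return np.append(F(z[:-1], z[-1]), t @ (z - y_m1) - ds)

    for _ in range(maxit):                              # Newton corrector
        g = G(y)
        if np.linalg.norm(g, np.inf) < tol:
            return y
        y = y - np.linalg.solve(fd_jacobian(G, y), g)
    raise RuntimeError("corrector did not converge")

def continuation(F, y0, y1, ds, nsteps):
    """Trace a branch starting from two consecutive solutions y0, y1."""
    branch = [y0, y1]
    for _ in range(nsteps):
        branch.append(arclength_step(F, branch[-2], branch[-1], ds))
    return np.array(branch)

# toy fold: the branch lam = x**2 turns back at (x, lam) = (0, 0)
F = lambda x, lam: lam - x**2
branch = continuation(F, np.array([1.0, 1.0]), np.array([0.95, 0.9025]), ds=0.05, nsteps=60)
```

Because the parameter is an unknown of the augmented system, the corrector remains well posed at the fold, where a natural-parameter continuation in \(\lambda \) would fail; the traced branch therefore passes from positive to negative x.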

Reference bifurcation diagram of the FHN PDEs with respect to \(\varepsilon \) as computed with FD and \(N=200\) points. (a) Mean values \(<u>\) for stable and unstable branches, (b) Mean values \(<v>\) for stable and unstable branches. Andronov-Hopf Point: \(HP_{\varepsilon }=0.01827931\). Turning Point: \(TP_{\varepsilon }=0.94457768\)

Contour plot of the \(l^2\) norms of the convergence errors of the finite-difference solutions with respect to the grid size N, computed as \(||u_{N}-u_{2N}||_{l^2}\), \(||v_{N}-v_{2N}||_{l^2}\). The convergence error was computed on 1001 grid points, using piecewise linear interpolation. (a) upper branch for u, (b) upper branch for v, (c) lower branch for u, (d) lower branch for v
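The convergence measure of Fig. 3 can be sketched as follows. The sketch assumes that the two solutions are stored as nodal values on uniform grids over [0, 20] and that the \(l^2\) norm is the plain Euclidean norm over the 1001 evaluation points. The helper name is hypothetical, and the smooth profile below merely stands in for the finite-difference solutions.

```python
import numpy as np

def convergence_error(x_n, z_n, x_2n, z_2n, n_eval=1001):
    """||z_N - z_2N||_l2 after mapping both nodal solutions onto n_eval uniform
    points by piecewise linear interpolation."""
    xe = np.linspace(x_n[0], x_n[-1], n_eval)
    return np.linalg.norm(np.interp(xe, x_n, z_n) - np.interp(xe, x_2n, z_2n))

# usage with a smooth stand-in profile on [0, 20]
profile = lambda x: np.cos(np.pi * x / 20.0)
grids = {n: np.linspace(0.0, 20.0, n) for n in (25, 50, 100)}
e_25 = convergence_error(grids[25], profile(grids[25]), grids[50], profile(grids[50]))
e_50 = convergence_error(grids[50], profile(grids[50]), grids[100], profile(grids[100]))
```

For this smooth stand-in, the measure drops by roughly a factor of four per grid doubling, as expected for second-order piecewise linear interpolation.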

Coarse initial conditions for (a) u and (b) v used for training. Each dot denotes a point whose \(\varepsilon \) and mean u (or v) were used as input data. Red dots are training points; blue dots are test points. The grid is spanned with Chebyshev-Gauss-Lobatto points for \(\varepsilon \) in the interval [0.005, 0.995] and the initial conditions are randomly selected as in Eq. (37)

5.2 Numerical Bifurcation Analysis from Lattice Boltzmann Model Simulations

We collected transients of u(x, t) and v(x, t), sampled every 1 s, from 10 different randomly sampled initial conditions for each of 40 different values of the bifurcation parameter \(\varepsilon \). In particular, we created a grid of 40 values of \(\varepsilon \) in [0.005, 0.955] using Chebyshev-Gauss-Lobatto points, while the 10 initial conditions were sampled according to Eq. (37). Figure 4 depicts all 400 training initial conditions. Thus, we end up with a dataset consisting of 40 (values of \(\varepsilon \)) \(\times 10\) (initial conditions) \(\times 448\) (time points, ignoring the first 2 s of the transient) \(\times 40\) (space points) \(= 7{,}168{,}000\) data points.
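The \(\varepsilon \) grid and the size of the dataset can be reproduced with a few lines of NumPy. The sketch uses the standard mapping of the Chebyshev-Gauss-Lobatto nodes \(-\cos (\pi k/(n-1))\), \(k=0,\dots ,n-1\), from \([-1,1]\) to \([a,b]\); the helper name is hypothetical.

```python
import numpy as np

def chebyshev_gauss_lobatto(a, b, n):
    """n Chebyshev-Gauss-Lobatto nodes on [a, b] in ascending order (endpoints included)."""
    t = -np.cos(np.pi * np.arange(n) / (n - 1))   # nodes on [-1, 1]
    return 0.5 * (a + b) + 0.5 * (b - a) * t

eps_grid = chebyshev_gauss_lobatto(0.005, 0.955, 40)

# epsilon values x initial conditions x time points x space points
n_data = 40 * 10 * 448 * 40
print(len(eps_grid), n_data)   # 40 7168000
```

The nodes cluster near both ends of the interval, which places more samples close to the Andronov-Hopf point at small \(\varepsilon \) and near the upper end of the range.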

To learn the coarse-grained dynamics and construct the corresponding bifurcation diagram, we trained two FNNs and two single-layer RPNNs (one for each of the variables u and v). The FNNs were constructed with two hidden layers of 12 units each. The hidden units used the hyperbolic tangent sigmoid activation function, and the regularization parameter was tuned and set to \(\lambda =0.01\). To train the FNNs, we used the Deep Learning Toolbox of MATLAB 2021a on an Intel Core i5-8265U (up to 3.9 GHz) with 8 GB of memory.

5.2.1 Numerical Bifurcation Analysis Without Feature Selection

Table 1 summarizes the performance of the two schemes on both the training and the test datasets. As shown, for all practical purposes, both schemes achieved equivalent numerical accuracy in all metrics. For the FNNs, the training phase (using the Deep Learning Toolbox in MATLAB R2020b) required \(\sim 1000\) epochs and \(\sim 4\) hours, with the minimum tolerance set to \(10^{-7}\).

Differences between the predicted \({\hat{u}}_t(x,t)\) and \({\hat{v}}_t(x,t)\) and the actual values of the time derivatives \(u_t(x,t)\) and \(v_t(x,t)\) for three different values of \(\varepsilon \) are shown in Fig. 5 when using FNNs and in Fig. 6 when using RPNNs.

Approximation accuracy in the test data without feature selection as obtained with FNNs. Contour plot of the absolute values of differences in space and time, of \(\vert u_t(x,t)-{\hat{u}}_t(x,t) \vert \)((a), (c), and (e)) and of \(\vert v_t(x,t)-{\hat{v}}_t(x,t) \vert \) ((b), (d), and (f)) for characteristic values of \(\varepsilon \): (a) and (b) \(\varepsilon =0.0114\) near the Andronov-Hopf point, (c), (d) \(\varepsilon =0.4\), (e) and (f) \(\varepsilon =0.9383\) near the turning point

Approximation accuracy in the test data without feature selection as obtained with RPNNs. Contour plot of the absolute values of differences in space and time, of \(|u_t(x,t)-{\hat{u}}_t(x,t) |\) ((a), (c), and (e)) and of \(|v_t(x,t)-{\hat{v}}_t(x,t) |\) ((b), (d), and (f)) for characteristic values of \(\varepsilon \): (a) and (b) \(\varepsilon =0.0114\) near the Andronov-Hopf point, (c), (d) \(\varepsilon =0.4\), (e) and (f) \(\varepsilon =0.9383\) near the turning point

In contrast, for the proposed RPNN scheme, the training phase, i.e. the solution of the regularized least-squares problem, required around 8 minutes, making it at least 20 times faster than that of the FNNs.
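Because the hidden layer of an RPNN is fixed, training reduces to a linear, Tikhonov-regularized least-squares problem for the output weights, which explains the short training time. A minimal sketch follows. It assumes a generic design matrix \(\Phi \) whose columns are the ridge functions \(\psi _j^{(i)}\) evaluated at the training inputs, and a placeholder regularization parameter \(\lambda \), whose value for the RPNNs is not reported here. The helper name is hypothetical.

```python
import numpy as np

def ridge_output_weights(Phi, y, lam):
    """Solve min_w ||Phi w - y||^2 + lam ||w||^2 as an augmented least-squares problem."""
    H = Phi.shape[1]
    A = np.vstack([Phi, np.sqrt(lam) * np.eye(H)])   # stack the Tikhonov term under Phi
    rhs = np.concatenate([y, np.zeros(H)])
    w, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return w

# usage with a synthetic design matrix (stand-in for the hidden-layer outputs)
rng = np.random.default_rng(0)
Phi = rng.random((200, 30))
y = rng.normal(size=200)
w = ridge_output_weights(Phi, y, lam=1e-3)
```

Stacking \(\sqrt{\lambda }I\) under \(\Phi \) solves the same problem as the normal equations \((\Phi ^\top \Phi +\lambda I)w=\Phi ^\top y\), while avoiding the squaring of the condition number that forming \(\Phi ^\top \Phi \) entails.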

After training, we used the FNNs and RPNNs to compute, with finite differences, the quantities required for the bifurcation analysis (see Eq. (49)), i.e.:

with \(\delta =10^{-6}\). The reconstructed bifurcation diagrams are shown in Fig. 7. Using the FNNs, we estimated the Andronov-Hopf point at \(\varepsilon \approx 0.0191\) and the turning point at \(\varepsilon \approx 0.9713\); using the RPNNs, we estimated the Andronov-Hopf point at \(\varepsilon \approx 0.0193\) and the turning point at \(\varepsilon \approx 0.9696\). With the finite-difference scheme of the previous section, the same points were located at \(\varepsilon \approx 0.0183\) (Andronov-Hopf point) and \(\varepsilon \approx 0.9446\) (turning point). Hence, compared with the FNNs, the RPNNs approximated the reference turning point slightly better.

Reconstructed bifurcation diagrams from the Lattice Boltzmann simulations of the FHN dynamics with respect to \(\varepsilon \) with FNNs and RPNNs with and without feature selection. (a) Mean values \(<u>\) for stable and unstable branches; the inset zooms near the Andronov-Hopf bifurcation point (b) zoom near the turning Point for \(<u>\), (c) Mean values \(<v>\) for stable and unstable branches; the inset zooms near the Andronov-Hopf bifurcation point, (d) zoom near the turning Point for \(<v>\)

5.3 Numerical Bifurcation Analysis with Feature Selection

We used Diffusion Maps (with the width parameter of the Gaussian kernel set to \(\sigma =10\)) to identify the three parsimonious leading eigenvectors, denoted as \(\phi _1,\phi _2,\phi _3\), as described in Sect. 2.1; for our computations, we used the datafold package in Python [88]. The three parsimonious Diffusion Maps coordinates for different values of the parameter \(\varepsilon \) are shown in Fig. 8. For \(\varepsilon =0.0114\), which is close to the Andronov-Hopf point, the embedded space is a two-dimensional “carpet” in the three-dimensional space. The oscillatory behaviour leads to different values of the time derivatives, which can be effectively parametrized, as shown by the coloring of the manifold (Fig. 8(a), (b)). For \(\varepsilon =0.4010\) and \(\varepsilon =0.9383\), the embedded space is a one-dimensional curve, since the time derivatives converge rapidly to zero (Fig. 8(c), (d), (e) and (f)). Based on the feature selection methodology, the “good” subsets of the input data domain are presented in Table 2. As expected, the best candidate features are \((u,v,u_{xx})\) for \(u_t\) and \((u,v,v_{xx})\) for \(v_t\), which are indeed the only features that appear in the closed form of the FHN PDEs.
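A bare-bones NumPy sketch of the two ingredients, Diffusion Maps and the leave-one-out local linear regression test used to flag parsimonious (non-harmonic) eigenvectors, may help fix ideas. It is an illustrative re-implementation, not the datafold code used by the authors. The kernel convention \(\exp (-d^2/\epsilon )\), the \(\alpha =1\) density normalization and the regression bandwidth (median pairwise distance divided by 3) are all assumptions. On a 2.5 \(\times \) 1 strip, \(\phi _2\) is a harmonic of \(\phi _1\) (a function of it), so its residual is expected to be small, whereas \(\phi _3\) varies along the second direction and should have a residual close to 1.

```python
import numpy as np

def diffusion_maps(X, eps, n_evecs):
    """Leading non-trivial Diffusion Maps eigenpairs of the points X (n, dim)."""
    D2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K = np.exp(-D2 / eps)                     # Gaussian kernel (assumed convention)
    q = K.sum(axis=1)
    K = K / np.outer(q, q)                    # alpha = 1: factor out sampling density
    d = K.sum(axis=1)
    S = K / np.sqrt(np.outer(d, d))           # symmetric conjugate of P = D^-1 K
    lam, V = np.linalg.eigh(S)
    order = np.argsort(lam)[::-1]
    lam, V = lam[order], V[:, order]
    Phi = V / np.sqrt(d)[:, None]             # right eigenvectors of P
    return lam[1:n_evecs + 1], Phi[:, 1:n_evecs + 1]   # skip the constant eigenvector

def llr_residuals(Phi):
    """Leave-one-out local linear regression residual r_k of phi_k on phi_1..phi_{k-1};
    r_k near 0 flags a harmonic (redundant) coordinate, r_k near 1 a new direction."""
    n, m = Phi.shape
    r = np.ones(m)                            # r_1 = 1 by convention
    for k in range(1, m):
        Z, y = Phi[:, :k], Phi[:, k]
        D = np.sqrt(np.sum((Z[:, None, :] - Z[None, :, :]) ** 2, axis=-1))
        h = np.median(D) / 3.0                # regression bandwidth (assumption)
        pred = np.empty(n)
        for i in range(n):
            sw = np.exp(-0.5 * (D[i] / h) ** 2)   # sqrt of Gaussian weights exp(-(D/h)^2)
            sw[i] = 0.0                       # leave point i out
            A = np.hstack([np.ones((n, 1)), Z - Z[i]])
            coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
            pred[i] = coef[0]                 # local intercept = prediction at Z[i]
        r[k] = np.sqrt(np.sum((y - pred) ** 2) / np.sum(y ** 2))
    return r

# usage on a regular grid over a 2.5 x 1 strip
xg, yg = np.meshgrid(np.linspace(0.0, 2.5, 26), np.linspace(0.0, 1.0, 11))
X = np.column_stack([xg.ravel(), yg.ravel()])
lam, Phi = diffusion_maps(X, eps=0.03, n_evecs=3)
r = llr_residuals(Phi)
```

In the setting of this section, the same test is applied to the embedding of the input data, and only eigenvectors with large residuals are retained as parsimonious coordinates.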

(a) and (b): the three parsimonious Diffusion Maps coordinates for \(\varepsilon =0.0114\), near the Andronov-Hopf point. (c) and (d): the two parsimonious Diffusion Maps coordinates for \(\varepsilon =0.4010\). (e) and (f): the two parsimonious Diffusion Maps coordinates for \(\varepsilon =0.9383\), near the turning point. Colors represent \(u_t\) ((a), (c), (e)) and \(v_t\) ((b), (d), (f))

Finally, we repeated the same steps, now using as inputs to the FNNs and RPNNs the reduced input domain obtained from the feature selection process. Table 1 summarizes the performance of the schemes on the training and test sets. Figures 9 and 10 illustrate the absolute differences between the time derivatives of both variables predicted by the FNNs and RPNNs and the actual ones.

Approximation accuracy in the test data with feature selection as obtained with the FNNs. Contour plot of the absolute values of differences in space and time, of \(\vert u_t(x,t)-{\hat{u}}_t(x,t) \vert \) ((a), (c), and (e)) and of \(\vert v_t(x,t)-{\hat{v}}_t(x,t) \vert \) ((b), (d), and (f)) for characteristic values of \(\varepsilon \): (a) and (b) \(\varepsilon =0.0114\) near the Andronov-Hopf point, (c), (d) \(\varepsilon =0.4\), (e) and (f) \(\varepsilon =0.9383\) near the turning point

Approximation accuracy in the test data with feature selection as obtained with the RPNNs. Contour plot of the absolute values of differences in space and time, of \(\vert u_t(x,t)-{\hat{u}}_t(x,t) \vert \) ((a), (c), and (e)) and of \(\vert v_t(x,t)-{\hat{v}}_t(x,t) \vert \) ((b), (d), and (f)) for different values of \(\varepsilon \): (a) and (b) \(\varepsilon =0.0114\) near the Andronov-Hopf point, (c), (d) \(\varepsilon =0.4\), (e) and (f) \(\varepsilon =0.9383\) near the turning point

Hence, as shown, the proposed feature selection approach based on parsimonious Diffusion Maps correctly revealed the structure of the embedded PDEs in the form:

where \({\hat{F}}^u\) and \({\hat{F}}^v\) are the outputs of the FNNs (or the RPNNs). The constructed bifurcation diagram with feature selection is shown in Fig. 7. Using the FNNs, we estimated the Andronov-Hopf point at \(\varepsilon \approx 0.0195\) and the turning point at \(\varepsilon \approx 0.9762\). Using the RPNNs, we estimated the Andronov-Hopf point at \(\varepsilon \approx 0.0192\) and the turning point at \(\varepsilon \approx 0.9752\).
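For reference, the relative errors of the reported estimates with respect to the finite-difference values (\(\varepsilon \approx 0.0183\) and \(\varepsilon \approx 0.9446\)) can be tabulated with a few lines of Python. All numbers are taken from the text above.

```python
ref = {"HP": 0.0183, "TP": 0.9446}          # finite-difference reference (Sect. 5.1)
estimates = {
    "FNN": {"HP": 0.0191, "TP": 0.9713},
    "RPNN": {"HP": 0.0193, "TP": 0.9696},
    "FNN, feature selection": {"HP": 0.0195, "TP": 0.9762},
    "RPNN, feature selection": {"HP": 0.0192, "TP": 0.9752},
}
# relative error |estimate - reference| / reference for each model and point
rel_err = {model: {pt: abs(val - ref[pt]) / ref[pt] for pt, val in est.items()}
           for model, est in estimates.items()}
for model, err in rel_err.items():
    print(f"{model:24s} HP: {100 * err['HP']:.1f}%  TP: {100 * err['TP']:.1f}%")
```

With these values, the turning point is recovered to within about 2.6% to 3.3% and the Andronov-Hopf point to within about 4.4% to 6.6%, across all four models.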

6 Conclusions

Building on previous efforts [35], we presented a machine learning methodology for solving the inverse problem in complex systems modelling and analysis, i.e. identifying effective PDEs from spatio-temporal data produced by Lattice Boltzmann model simulations, and for performing numerical bifurcation analysis, i.e. solving the forward problem, based on the constructed machine learning models. The proposed approach has three tiers. In the first step, we used nonlinear manifold learning, in particular Diffusion Maps, to select an appropriate set of coarse-scale observables that define the low-dimensional manifold on which the emergent dynamics evolve in the parameter space. In the second step, we learned the right-hand side of the effective PDEs with respect to the coarse-scale observables; here, we used shallow FNNs with two hidden layers and single-layer RPNNs whose basis functions were constructed using appropriately designed random sampling. Finally, based on the constructed black-box machine learning models, we constructed the coarse-grained bifurcation diagrams, exploiting the arsenal of the numerical bifurcation analysis toolkit. To demonstrate the approach, we used D1Q3 Lattice Boltzmann simulations of the FitzHugh-Nagumo PDEs and compared the bifurcation diagram constructed with machine learning with the one obtained by discretizing the PDEs with central finite differences.

The results show that the proposed machine learning framework was able to identify the “correct” set of coarse-scale variables required to model the emergent dynamics in the form of PDEs and, based on them, to systematically study the coarse-scale dynamics by constructing the emerging macroscopic bifurcation diagram. In terms of approximation accuracy of the macroscopic dynamics, both schemes (the two-hidden-layer FNNs and the single-hidden-layer RPNNs) performed equivalently for all practical purposes. However, in terms of the computational cost of the training phase, the RPNNs were 20 to 30 times faster than the two-hidden-layer FNNs. Hence, the proposed RPNN scheme is a promising alternative to deep learning for solving the inverse problem for high-dimensional PDEs from big data [1, 89, 90].

Here, we have focused on the construction of “black-box” PDEs and their numerical bifurcation analysis over the parameter space from data produced by Lattice Boltzmann model simulations. The proposed machine learning approach can be extended to deal with higher-dimensional multiscale stochastic/microscopic simulators such as particle-in-cell (see e.g. [91]), Brownian and molecular dynamics, and agent-based models (see e.g. [19, 27]). In such cases, one first has to identify an appropriate set of macroscopic observables that can parametrize a coarse-scale manifold on which the emergent dynamics evolve, using, for example, manifold learning algorithms such as Diffusion Maps (see e.g. [23, 30, 31, 38]), and then apply the proposed scheme to construct “black-box” PDEs and consequently perform coarse-grained numerical analysis. Furthermore, the proposed methodology can be extended to construct “gray-box” models by incorporating information from the physics into the machine learning architecture [1, 92]. Finally, building on previous efforts to extract normal forms of ODEs from data [14, 93], the proposed approach can be exploited to discover normal forms of high-dimensional PDEs.

Availability of Data and Material (Data Transparency)

Not applicable

References

Karniadakis, G.E., Kevrekidis, I.G., Lu, L., Perdikaris, P., Wang, S., Yang, L.: Physics-informed machine learning. Nat. Reviews Phys. 3(6), 422–440 (2021)

Schmidt, M., Lipson, H.: Distilling free-form natural laws from experimental data. Sci. 324(5923), 81–85 (2009)

Wang, S., Wang, H., Perdikaris, P.: Learning the solution operator of parametric partial differential equations with physics-informed deeponets. Sci. Adv. 7(40), 8605 (2021). https://doi.org/10.1126/sciadv.abi8605

Kovachki, N., Li, Z., Liu, B., Azizzadenesheli, K., Bhattacharya, K., Stuart, A., Anandkumar, A.: Neural operator: Learning maps between function spaces. arXiv preprint arXiv:2108.08481 (2021)

Hudson, J.L., Kube, M., Adomaitis, R.A., Kevrekidis, I.G., Lapedes, A.S., Farber, R.M.: Nonlinear signal processing and system identification: applications to time series from electrochemical reactions. Chem. Eng. Sci. 45(8), 2075–2081 (1990). https://doi.org/10.1016/0009-2509(90)80079-T

Rico-Martinez, R., Krischer, K., Kevrekidis, I., Kube, M., Hudson, J.: Discrete-vs. continuous-time nonlinear signal processing of cu electrodissolution data. Chem. Eng. Commun. 118(1), 25–48 (1992)

Krischer, K., Rico-Martinez, R., Kevrekidis, I.G., Rotermund, H., Ertl, G., Hudson, J.: Model identification of a spatiotemporally varying catalytic reaction. AIChE J. 39(1), 89–98 (1993)

Masri, S.F., Chassiakos, A.G., Caughey, T.K.: Identification of nonlinear dynamic systems using neural networks. J. Appl. Mech. 60(1), 123–133 (1993). https://doi.org/10.1115/1.2900734

Rico-Martinez, R., Anderson, J.S., Kevrekidis, I.G.: Continuous-time nonlinear signal processing: a neural network based approach for gray box identification. In: Proceedings of IEEE Workshop on Neural Networks for Signal Processing, pp. 596–605 (1994). https://doi.org/10.1109/NNSP.1994.366006

Chen, T., Chen, H.: Universal approximation to nonlinear operators by neural networks with arbitrary activation functions and its application to dynamical systems. IEEE Trans. Neural Netw. 6(4), 911–917 (1995)

Anderson, J.S., Kevrekidis, I.G., Rico-Martinez, R.: A comparison of recurrent training algorithms for time series analysis and system identification. Comput. & Chem. Eng. 20, 751–756 (1996). https://doi.org/10.1016/0098-1354(96)00133-0. (European Symposium on Computer Aided Process Engineering-6)

González-García, R., Rico-Martínez, R., Kevrekidis, I.G.: Identification of distributed parameter systems: A neural net based approach. Comput. & Chem. Eng. 22, 965–968 (1998). https://doi.org/10.1016/S0098-1354(98)00191-4. (European Symposium on Computer Aided Process Engineering-8)

Siettos, C.I., Bafas, G.V.: Semiglobal stabilization of nonlinear systems using fuzzy control and singular perturbation methods. Fuzzy Sets Syst. 129(3), 275–294 (2002)

Siettos, C.I., Bafas, G.V., Boudouvis, A.G.: Truncated chebyshev series approximation of fuzzy systems for control and nonlinear system identification. Fuzzy Sets Syst. 126(1), 89–104 (2002)

Alexandridis, A., Siettos, C., Sarimveis, H., Boudouvis, A., Bafas, G.: Modelling of nonlinear process dynamics using kohonen’s neural networks, fuzzy systems and chebyshev series. Comput. & Chem. Eng. 26(4–5), 479–486 (2002)

Bongard, J., Lipson, H.: Automated reverse engineering of nonlinear dynamical systems. Proc. Natl. Acad. Sci. 104(24), 9943–9948 (2007)

Brunton, S.L., Proctor, J.L., Kutz, J.N.: Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. 113(15), 3932–3937 (2016)

Vlachas, P.R., Arampatzis, G., Uhler, C., Koumoutsakos, P.: Multiscale simulations of complex systems by learning their effective dynamics. Nat. Mach. Intell. 4(4), 359–366 (2022)

Kevrekidis, I.G., Gear, C.W., Hyman, J.M., Kevrekidis, P.G., Runborg, O., Theodoropoulos, C.: Equation-free, coarse-grained multiscale computation: Enabling microscopic simulators to perform system-level analysis. Commun. Math. Sci. 1(4), 715–762 (2003). https://doi.org/10.4310/cms.2003.v1.n4.a5

Kevrekidis, I.G., Gear, C.W., Hummer, G.: Equation-free: The computer-aided analysis of complex multiscale systems. AIChE J. 50(7), 1346–1355 (2004). https://doi.org/10.1002/aic.10106

Makeev, A.G., Maroudas, D., Kevrekidis, I.G.: “Coarse” stability and bifurcation analysis using stochastic simulators: Kinetic Monte Carlo examples. J. Chem. Phys. 116(23), 10083–10091 (2002). https://doi.org/10.1063/1.1476929

Siettos, C.I., Graham, M.D., Kevrekidis, I.G.: Coarse brownian dynamics for nematic liquid crystals: Bifurcation, projective integration, and control via stochastic simulation. J. Chem. Phys. 118(22), 10149–10156 (2003). https://doi.org/10.1063/1.1572456

Erban, R., Frewen, T.A., Wang, X., Elston, T.C., Coifman, R., Nadler, B., Kevrekidis, I.G.: Variable-free exploration of stochastic models: a gene regulatory network example. J. Chem. Phys. 126(15), 04–618 (2007)

Vandekerckhove, C., Kevrekidis, I., Roose, D.: An efficient newton-krylov implementation of the constrained runs scheme for initializing on a slow manifold. J. Sci. Comput. 39(2), 167–188 (2009)

Samaey, G., Vanroose, W., Roose, D., Kevrekidis, I.G.: Newton-krylov solvers for the equation-free computation of coarse traveling waves. Comput. Methods Appl. Mech. Eng. 197(43–44), 3480–3491 (2008)

Samaey, G., Vanroose, W.: An analysis of equivalent operator preconditioning for equation-free newton-krylov methods. SIAM J. Numer. Anal. 48(2), 633–658 (2010)

Siettos, C.I., Gear, C.W., Kevrekidis, I.G.: An equation-free approach to agent-based computation: Bifurcation analysis and control of stationary states. EPL (Europhysics Letters) 99(4), 48007 (2012). https://doi.org/10.1209/0295-5075/99/48007

Coifman, R.R., Lafon, S., Lee, A.B., Maggioni, M., Nadler, B., Warner, F., Zucker, S.W.: Geometric diffusions as a tool for harmonic analysis and structure definition of data: Diffusion maps. Proc. Natl. Acad. Sci. 102(21), 7426–7431 (2005). https://doi.org/10.1073/pnas.0500334102

Coifman, R.R., Lafon, S.: Diffusion maps. Appl. Comput. Harmon. Anal. 21(1), 5–30 (2006). https://doi.org/10.1016/j.acha.2006.04.006. (Special Issue: Diffusion Maps and Wavelets)

Nadler, B., Lafon, S., Coifman, R.R., Kevrekidis, I.G.: Diffusion maps, spectral clustering and reaction coordinates of dynamical systems. Appl. Comput. Harmon. Anal. 21(1), 113–127 (2006)

Singer, A., Erban, R., Kevrekidis, I.G., Coifman, R.R.: Detecting intrinsic slow variables in stochastic dynamical systems by anisotropic diffusion maps. Proc. Natl. Acad. Sci. 106(38), 16090–16095 (2009)

Lee, S., Kevrekidis, I.G., Karniadakis, G.E.: A resilient and efficient cfd framework: Statistical learning tools for multi-fidelity and heterogeneous information fusion. J. Comput. Phys. 344, 516–533 (2017)

Raissi, M., Perdikaris, P., Karniadakis, G.E.: Inferring solutions of differential equations using noisy multi-fidelity data. J. Comput. Phys. 335, 736–746 (2017)

Raissi, M., Perdikaris, P., Karniadakis, G.E.: Machine learning of linear differential equations using gaussian processes. J. Comput. Phys. 348, 683–693 (2017)

Lee, S., Kooshkbaghi, M., Spiliotis, K., Siettos, C.I., Kevrekidis, I.G.: Coarse-scale pdes from fine-scale observations via machine learning. Chaos: An Interdiscip. J. Nonlinear Sci. 30(1), 013141 (2020). https://doi.org/10.1063/1.5126869

Dsilva, C.J., Talmon, R., Coifman, R.R., Kevrekidis, I.G.: Parsimonious representation of nonlinear dynamical systems through manifold learning: A chemotaxis case study. Appl. Comput. Harmon. Anal. 44(3), 759–773 (2018). https://doi.org/10.1016/j.acha.2015.06.008

Thiem, T.N., Kemeth, F.P., Bertalan, T., Laing, C.R., Kevrekidis, I.G.: Global and local reduced models for interacting, heterogeneous agents. Chaos: An Interdiscip. J. Nonlinear Sci. 31(7), 073139 (2021)

Holiday, A., Kooshkbaghi, M., Bello-Rivas, J.M., William Gear, C., Zagaris, A., Kevrekidis, I.G.: Manifold learning for parameter reduction. J. Comput. Phys. 392, 419–431 (2019). https://doi.org/10.1016/j.jcp.2019.04.015

Nadler, B., Lafon, S., Coifman, R., Kevrekidis, I.G.: Diffusion maps-a probabilistic interpretation for spectral embedding and clustering algorithms. In: Principal Manifolds for Data Visualization and Dimension Reduction, pp. 238–260. Springer (2008)

Gao, S., Mishne, G., Scheinost, D.: Nonlinear manifold learning in functional magnetic resonance imaging uncovers a low-dimensional space of brain dynamics. Hum. Brain Mapp. 42, 4510–4524 (2021). https://doi.org/10.1002/hbm.25561

Santosa, F., Symes, W.W.: Linear inversion of band-limited reflection seismograms. SIAM J. Sci. Stat. Comput. 7(4), 1307–1330 (1986). https://doi.org/10.1137/0907087

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. Roy. Stat. Soc.: Ser. B (Methodol.) 58(1), 267–288 (1996)

Gallos, I.K., Gkiatis, K., Matsopoulos, G.K., Siettos, C.: ISOMAP and machine learning algorithms for the construction of embedded functional connectivity networks of anatomically separated brain regions from resting state fMRI data of patients with Schizophrenia. AIMS Neurosci. 8(2), 295–321 (2021)

Ho, T.K.: Random decision forests. In: Proceedings of 3rd International Conference on Document Analysis and Recognition, vol. 1, pp. 278–282 (1995). https://doi.org/10.1109/ICDAR.1995.598994

Ho, T.K.: The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 20(8), 832–844 (1998). https://doi.org/10.1109/34.709601

Cybenko, G.V.: Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314 (1989)

Hornik, K., Stinchcombe, M., White, H.: Multilayer feedforward networks are universal approximators. Neural Netw. 2(5), 359–366 (1989)

Hornik, K., Stinchcombe, M., White, H.: Universal approximation of an unknown mapping and its derivatives using multilayer feedforward networks. Neural Netw. 3(5), 551–560 (1990)

Park, J., Sandberg, I.W.: Universal approximation using radial-basis-function networks. Neural Comput. 3(2), 246–257 (1991)

Leshno, M., Lin, V.Y., Pinkus, A., Schocken, S.: Multilayer feedforward networks with a nonpolynomial activation function can approximate any function. Neural Netw. 6(6), 861–867 (1993)

Dan Foresee, F., Hagan, M.T.: Gauss-Newton approximation to Bayesian learning. In: Proceedings of International Conference on Neural Networks (ICNN’97), vol. 3, pp. 1930–1935 (1997). https://doi.org/10.1109/ICNN.1997.614194

Hagan, M.T., Menhaj, M.B.: Training feedforward networks with the Marquardt algorithm. IEEE Trans. Neural Netw. 5(6), 989–993 (1994). https://doi.org/10.1109/72.329697

Barron, A.R.: Universal approximation bounds for superpositions of a sigmoidal function. IEEE Trans. Inf. Theory 39(3), 930–945 (1993)

Igelnik, B., Pao, Y.-H.: Stochastic choice of basis functions in adaptive function approximation and the functional-link net. IEEE Trans. Neural Netw. 6(6), 1320–1329 (1995)

Verstraeten, D., Schrauwen, B., d’Haene, M., Stroobandt, D.: An experimental unification of reservoir computing methods. Neural Netw. 20(3), 391–403 (2007)

Jaeger, H.: The “echo state” approach to analysing and training recurrent neural networks, with an erratum note. Bonn, Ger.: Ger. Natl. Res. Cent. Inf. Technol. GMD Tech. Rep. 148(34), 13 (2001)

Huang, G.-B., Zhu, Q.-Y., Siew, C.-K.: Extreme learning machine: theory and applications. Neurocomputing 70(1–3), 489–501 (2006)

Maass, W., Natschläger, T., Markram, H.: Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14(11), 2531–2560 (2002)

Van Der Malsburg, C.: Frank Rosenblatt: Principles of neurodynamics: Perceptrons and the theory of brain mechanisms. In: Palm, G., Aertsen, A. (eds.) Brain Theory, pp. 245–248. Springer, Berlin, Heidelberg (1986)

Fabiani, G., Calabrò, F., Russo, L., Siettos, C.: Numerical solution and bifurcation analysis of nonlinear partial differential equations with extreme learning machines. J. Sci. Comput. 89(2), 1–35 (2021)

Calabrò, F., Fabiani, G., Siettos, C.: Extreme learning machine collocation for the numerical solution of elliptic PDEs with sharp gradients. Comput. Methods Appl. Mech. Eng. 387, 114188 (2021)

Johnson, W.B., Lindenstrauss, J.: Extensions of Lipschitz mappings into a Hilbert space. Contemp. Math. 26(1), 189–206 (1984)

Achlioptas, D.: Database-friendly random projections: Johnson-Lindenstrauss with binary coins. J. Comput. Syst. Sci. 66(4), 671–687 (2003)

Dasgupta, S., Gupta, A.: An elementary proof of a theorem of Johnson and Lindenstrauss. Random Struct. & Algorithms 22(1), 60–65 (2003)

Vempala, S.S.: The Random Projection Method, vol. 65. American Mathematical Soc, United States (2005)

Wang, J.: Geometric structure of high-dimensional data. In: Geometric Structure of High-Dimensional Data and Dimensionality Reduction, pp. 51–77. Springer, Berlin, Heidelberg (2012)

Giryes, R., Sapiro, G., Bronstein, A.M.: Deep neural networks with random Gaussian weights: A universal classification strategy? IEEE Trans. Signal Process. 64(13), 3444–3457 (2016)

Schiassi, E., Furfaro, R., Leake, C., De Florio, M., Johnston, H., Mortari, D.: Extreme theory of functional connections: A fast physics-informed neural network method for solving ordinary and partial differential equations. Neurocomputing 457, 334–356 (2021)

Dwivedi, V., Srinivasan, B.: Physics informed extreme learning machine (PIELM): a rapid method for the numerical solution of partial differential equations. Neurocomputing 391, 96–118 (2020)

Dong, S., Li, Z.: Local extreme learning machines and domain decomposition for solving linear and nonlinear partial differential equations. Comput. Methods Appl. Mech. Eng. 387, 114129 (2021)

Dong, S.: Local extreme learning machines: A neural network-based spectral element-like method for computational PDEs. Bulletin of the American Physical Society (2022)

FitzHugh, R.: Impulses and physiological states in theoretical models of nerve membrane. Biophys. J. 1(6), 445–466 (1961)

Theodoropoulos, C., Qian, Y.-H., Kevrekidis, I.G.: “Coarse” stability and bifurcation analysis using time-steppers: A reaction-diffusion example. Proc. Natl. Acad. Sci. 97(18), 9840–9843 (2000). https://doi.org/10.1073/pnas.97.18.9840

Ottaviani, M., Romanelli, F., Benzi, R., Briscolini, M., Santangelo, P., Succi, S.: Numerical simulations of ion temperature gradient-driven turbulence. Phys. Fluids B 2(1), 67–74 (1990)

Succi, S.: The Lattice Boltzmann Equation: For Fluid Dynamics and Beyond. Oxford University Press, Oxford (2001)

Chikatamarla, S., Frouzakis, C., Karlin, I., Tomboulides, A., Boulouchos, K.: Lattice Boltzmann method for direct numerical simulation of turbulent flows. J. Fluid Mech. 656, 298–308 (2010)

La Rocca, M., Montessori, A., Prestininzi, P., Succi, S.: A multispeed discrete Boltzmann model for transcritical 2D shallow water flows. J. Comput. Phys. 284, 117–132 (2015)

Lai, H., Xu, A., Zhang, G., Gan, Y., Ying, Y., Succi, S.: Nonequilibrium thermohydrodynamic effects on the Rayleigh-Taylor instability in compressible flows. Phys. Rev. E 94(2), 023106 (2016)

Dorschner, B., Chikatamarla, S.S., Karlin, I.V.: Fluid-structure interaction with the entropic lattice Boltzmann method. Phys. Rev. E 97(2), 023305 (2018)

Succi, S.: Lattice Boltzmann 2038. EPL (Europhysics Letters) 109(5), 50001 (2015)

Bhatnagar, P.L., Gross, E.P., Krook, M.: A model for collision processes in gases. I. Small amplitude processes in charged and neutral one-component systems. Phys. Rev. 94, 511–525 (1954). https://doi.org/10.1103/PhysRev.94.511

Bhatnagar, P.L., Gross, E.P., Krook, M.: A model for collision processes in gases. Phys. Rev. 94(3), 511 (1954)

Otomo, H., Boghosian, B.M., Succi, S.: A kinetic generator for classical field theories with conservation laws. EPL (Europhysics Letters) 132(6), 60002 (2021)

Qian, Y.H., Orszag, S.A.: Scalings in diffusion-driven reaction \(A+B\rightarrow C\): Numerical simulations by lattice BGK models. J. Stat. Phys. 81, 237–253 (1995). https://doi.org/10.1007/BF02179978

Chan, T.F.C., Keller, H.B.: Arc-length continuation and multigrid techniques for nonlinear elliptic eigenvalue problems. SIAM J. Sci. Stat. Comput. 3(2), 173–194 (1982). https://doi.org/10.1137/0903012

Glowinski, R., Keller, H.B., Reinhart, L.: Continuation-conjugate gradient methods for the least squares solution of nonlinear boundary value problems. SIAM J. Sci. Stat. Comput. 6, 793–832 (1985)

Govaerts, W.J.: Numerical methods for bifurcations of dynamical equilibria. SIAM, Philadelphia, PA (2000)

Lehmberg, D., Dietrich, F., Köster, G., Bungartz, H.-J.: datafold: data-driven models for point clouds and time series on manifolds. J. Open Source Softw. 5, 2283 (2020). https://doi.org/10.21105/joss.02283

Raissi, M.: Deep hidden physics models: Deep learning of nonlinear partial differential equations. J. Mach. Learn. Res. 19(1), 932–955 (2018)

Raissi, M., Karniadakis, G.E.: Hidden physics models: Machine learning of nonlinear partial differential equations. J. Comput. Phys. 357, 125–141 (2018)

Toschi, F., Vignolo, P., Succi, S., Tosi, M.: Dynamics of trapped two-component Fermi gas: Temperature dependence of the transition from collisionless to collisional regime. Phys. Rev. A 67(4), 041605 (2003)

Lovelett, R.J., Avalos, J.L., Kevrekidis, I.G.: Partial observations and conservation laws: Gray-box modeling in biotechnology and optogenetics. Ind. & Eng. Chem. Res. 59(6), 2611–2620 (2019)

Yair, O., Talmon, R., Coifman, R.R., Kevrekidis, I.G.: Reconstruction of normal forms by learning informed observation geometries from data. Proc. Natl. Acad. Sci. 114(38), 7865–7874 (2017)

Funding

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement. This work was supported by the Italian program “Fondo Integrativo Speciale per la Ricerca (FISR)” - FISR2020IP 02893/ B55F20002320001. I.G.K. acknowledges partial support from the US Department of Energy and the US Air Force Office of Scientific Research.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Galaris, E., Fabiani, G., Gallos, I. et al. Numerical Bifurcation Analysis of PDEs From Lattice Boltzmann Model Simulations: a Parsimonious Machine Learning Approach. J Sci Comput 92, 34 (2022). https://doi.org/10.1007/s10915-022-01883-y

DOI: https://doi.org/10.1007/s10915-022-01883-y