Abstract

We aim to solve a structured convex optimization problem, where a nonsmooth function is composed with a linear operator. When opting for full splitting schemes, usually, primal–dual type methods are employed as they are effective and also well studied. However, under the additional assumption of Lipschitz continuity of the nonsmooth function which is composed with the linear operator we can derive novel algorithms through regularization via the Moreau envelope. Furthermore, we tackle large scale problems by means of stochastic oracle calls, very similar to stochastic gradient techniques. Applications to total variational denoising and deblurring, and matrix factorization are provided.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The problem at hand is the following structured convex optimization problem

for real Hilbert spaces \({\mathcal {H}}\) and \({\mathcal {G}}\), \(f: {\mathcal {H}}\rightarrow {{\overline{{\mathbb {R}}}}:= {\mathbb {R}}\cup \{\pm \infty \}}\) a proper, convex and lower semicontinuous function, \(g:{\mathcal {G}}\rightarrow {\mathbb {R}}\) a, possibly nonsmooth, convex and Lipschitz continuous function, and \(K: {\mathcal {H}}\rightarrow {\mathcal {G}}\) a linear continuous operator.

Our aim will be to devise an algorithm for solving (1) following the full splitting paradigm (see [5, 6, 8, 9, 15, 17, 29]). In other words, we allow only proximal evaluations for simple nonsmooth functions, but no proximal evaluations for compositions with linear continuous operators, like, for instance, for \(g \circ K\).

We will accomplish this feat by the means of a smoothing strategy, which, for the purpose of this paper, means, making use of the Moreau-Yosida approximation. The approach can be described as follows: we “smooth” g, i.e. we replace it by its Moreau envelope, and solve the resulting optimization problem by an accelerated proximal-gradient algorithm (see [3, 13, 21]). This approach is similar to those in [7, 10, 11, 20, 22], where a convergence rate of \({\mathcal {O}}\Big (\frac{\log (k)}{k}\Big )\) is proved. However, our techniques (for the deterministic case) resemble more the ones in [28], where an improved rate of \({\mathcal {O}}(\frac{1}{k})\) is shown, with the most notable difference to our work is that we use a simpler stepsize and treat the stochastic case.

The only other family of methods able to solve problems of type (1) are the so called primal–dual algorithms, first and foremost the primal–dual hybrid gradient (PDHG) introduced in [15]. In comparison, this method does not need the Lipschitz continuity of g in order to prove convergence. However, in this very general case, convergence rates can only be shown for the so-called restricted primal–dual gap function. In order to derive from here convergence rates for the primal objective function, either Lipschitz continuity of g or finite dimensionality of the problem plus the condition that g must have full domain are necessary (see, for instance, [5, Theorem 9]). This means, that for infinite dimensional problems the assumptions required by both, PDHG and our method, for deriving convergence rates for the primal objective function are in fact equal, but for finite dimensional problems the assumption of PDHG are weaker. In either case, however, we are able to prove these rates for the sequence of iterates \({(x_{k})}_{k \ge 1}\) itself whereas PDHG only has them for the sequence of so-called ergodic iterates, i.e. \({(\frac{1}{k}\sum _{i=1}^{k} x_{i})}_{k \ge 1}\), which is naturally undesirable as the averaging slows the convergence down. Furthermore, we do not show any convergence for the iterates as these are notoriously hard to obtain for accelerated method whereas PDHG gets these in the strongly convex setting via standard fixed point arguments (see e.g. [29]).

Furthermore, we will also consider the case where only a stochastic oracle of the proximal operator of g is available to us. This setup corresponds e.g. to the case where the objective function is given as

where, for \(i=1,\dots ,m\), \({\mathcal {G}}_i\) are real Hilbert spaces, \(g_i : {\mathcal {G}}_i \rightarrow {\mathbb {R}}\) are convex and Lipschitz continuous functions and \(K_i : {\mathcal {H}}\rightarrow {\mathcal {G}}_i\) are linear continuous operators, but the number of summands being large we wish to not compute all proximal operators of all \(g_i, i=1, \dots , m\), for purpose of making iterations cheaper to compute.

For the finite sum case (2), there exist algorithms of similar spirit such as those in [14, 24]. Some algorithms do in fact deal with a similar setup of stochastic gradient like evaluations, see [26], but only for smooth terms in the objective function.

In Sect. 2 we will cover the preliminaries about the Moreau-Yosida envelope as well as useful identities and estimates connected to it. In Sect. 3 we will deal with the deterministic case and prove a convergence rate of \({\mathcal {O}}(\frac{1}{k})\) for the function values at the iterates. Next up, in Sect. 4, we will consider the stochastic case as described above and prove a convergence rate of \({\mathcal {O}}\left( \frac{\log (k)}{\sqrt{k}}\right) \). Last but not least, we will look at some numerical examples in image processing in Sect. 5.

It is important to note that the proof for the deterministic setting differs surprisingly from the one for the stochastic setting. The technique for the stochastic setting is less refined in the sense that there is no coupling between the smoothing parameter and the extrapolation parameter. Where as this technique works also works for the deterministic setting it gives a worse convergence rate of \({\mathcal {O}}\left( \frac{\log {k}}{k}\right) \). The tight coupling of the two sequences of parameters, however does not work in the proof of the stochastic algorithm as it does not allow for the particular choice of the smoothing parameters needed there.

2 Preliminaries

In the main problem (1), the nonsmooth function regularizer g is supposed to be Lipschitz continuous. This assumption is necessary to ensure our main convergence results, however, many of the preliminary lemmata of this section hold true similarly if the function is only assumed to be lower semicontinuous. We will point this out in every statement of this section individually.

Definition 2.1

For a proper, convex and lower semicontinuous function \(g: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\), its convex conjugate is denoted by \(g^*\) defined as a function from \({\mathcal {H}}\) to \({\overline{{\mathbb {R}}}}\), given by

As mentioned in the introduction, we want to smooth a nonsmooth function by considering its Moreau envelope. The next definition will clarify exactly what object we are talking about.

Definition 2.2

For a proper, convex and lower semicontinuous function \(g: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\), its Moreau envelope with the parameter \(\mu \ge 0\) is defined as a function from \({\mathcal {H}}\) to \({\mathbb {R}}\), given by

From this definition, however, it is not completely evident that the Moreau envelope indeed fulfills its purpose in being a smooth representation of the original function. The next lemma will remedy this fact.

Lemma 2.1

(see [2, Proposition 12.29]) Let \(g: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\) be a proper, convex and lower semicontinuous function and \(\mu > 0\). Then its Moreau envelope is Fréchet differentiable on \({\mathcal {H}}\). In particular, the gradient itself is given by

and is \(\mu ^{-1}\)-Lipschitz continuous.

In particular, for all \(\mu > 0\), a gradient step with respect to the Moreau envelope corresponds to a proximal step

The previous lemma establishes two things. Not only does it clarify the smoothness of the Moreau envelope, but it also gives a way of computing its gradient. Obviously, a smooth representation whose gradient we would not be able to compute would not be any good.

As mentioned in the introduction, we want to smooth the nonsmooth summand of the objective function which is composed with the linear operator as this can be considered the crux of problem (1). The function \(g \circ K\) will be smoothed via considering instead \({}^{\mu _{}}g \circ K : {\mathcal {H}}\rightarrow {\mathbb {R}}\). Clearly, by the chain rule, this function is continuously differentiable with gradient given for every \(x \in {\mathcal {H}}\) by

and is thus Lipschitz continuous with Lipschitz constant \(\frac{\Vert K \Vert ^2}{\mu }\), where \(\Vert K \Vert \) denotes the operator norm of K.

Lipschitz continuity will play an integral role in our investigations, as can be seen by the following lemmas.

Lemma 2.2

(see [4, Proposition 4.4.6]) Let \(g:{\mathcal {H}}\rightarrow {\mathbb {R}}\) be a convex and \(L_g\)-Lipschitz continuous function. Then, the domain of its Fenchel conjugate is bounded, i.e.

where \(B(0,L_g)\) denotes the open ball with radius \(L_g\) around the origin.

The Moreau envelope even preserves the Lipschitzness of the original function.

Lemma 2.3

(see [18, Lemma 2.1]) Let \(g: {\mathcal {H}}\rightarrow {\mathbb {R}}\) be a convex and \(L_g\)-Lipschitz continuous function. Then its Moreau envelope \({}^{\mu _{}}g\) is \(L_g\)-Lipschitz as well, i.e.

Proof

We observe that for all \(x \in {\mathcal {H}}\)

Therefore we can bound the gradient norm

where we used in the last step that the Lipschitz continuity of g. The statement follows from the mean-value theorem. \(\square \)

The following lemmata are not new, but we provide proofs anyways in order to remain self-contained and to shed insight on how to use the Moreau envelope for the interested reader.

Lemma 2.4

(see [28, Lemma 10 (a)]) Let \(g: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\) be proper, convex and lower semicontinuous. The maximizing argument in the definition of the Moreau-Yosida envelope is given by its gradient, i.e. for \(\mu >0\) it holds that

Proof

Let \(x \in {\mathcal {H}}\) be fixed. It holds

and the conclusion follows by using Lemma 2.1. \(\square \)

Lemma 2.5

(see [28, Lemma 10 (a)]) For a proper, convex and lower semicontinuous function \(g: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\) and every \(x \in {\mathcal {H}}\) we can consider the mapping from \((0, +\infty )\) to \({\mathbb {R}}\) given by

This mapping is convex and differentiable and its derivative is given by

Proof

Let \(x \in {\mathcal {H}}\) be fixed. From the definition of the Moreau-Yosida envelope we can see that the mapping given in (4) is a pointwise supremum of functions which are linear in \(\mu \). It is therefore convex. Furthermore, since the objective function is strongly concave, this supremum is uniquely attained at \(\nabla {}^{\mu _{}} g(x) = \mathop {\mathrm {arg\, max}}\limits _{p \in {\mathcal {H}}} \left\{ \left\langle x, p \right\rangle - g^*(p) - \frac{\mu }{2}\Vert p \Vert ^2\right\} \). According to the Danskin Theorem, the function \(\mu \mapsto {}^{\mu _{}} g(x)\) is differentiable and its gradient is given by

\(\square \)

Lemma 2.6

( [28, Lemma 10 (b)]) Let \(g: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\) be proper, convex and lower semicontinuous. For \(\mu _{1}, \mu _{2} > 0 \) and every \(x \in {\mathcal {H}}\) it holds

If g is additionally \(L_{g}\)-Lipschitz and if \(\mu _{2}\ge \mu _{1} > 0\), then

Proof

Let \(x \in {\mathcal {H}}\) be fixed. Via Lemma 2.5 we know that the map \( \mu \mapsto {}^{\mu _{}} g (x)\) is convex and differentiable. We can therefore use the gradient inequality to deduce that

which is exactly the first statement of the lemma. The first inequality of (6) follows directly from the definition of the Moreau envelope and the second one from (5) and (3). \(\square \)

By applying a limiting argument it is easy to see that (6) implies that for any \(\mu >0\)

which shows that the Moreau envelope is always a lower approximation the original function.

Lemma 2.7

(see [28, Lemma 10 (c)]) Let \(g: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\) be proper, convex and lower semicontinuous. Then, for \(\mu > 0 \) and every \(x,y \in {\mathcal {H}}\) we have that

Proof

Using Lemma 2.4 and the definition of the Moreau-Yosida envelope we get that

\(\square \)

In the convergence proof of Lemma 3.3 we will need the inequality in the above lemma at the points Kx and Ky, namely

The following lemma is a standard result for convex and Fréchet differentiable functions.

Lemma 2.8

(see [23]) For a convex and Fréchet differentiable function \(h: {\mathcal {H}}\rightarrow {\mathbb {R}}\) with \(L_{h}\)-Lipschitz continuous gradient we have that

By applying Lemma 2.8 with \({}^{\mu _{}}g\), Kx and Ky instead of h, x and y respectively, we obtain

The following technical result will be used in the proof of the convergence statement.

Lemma 2.9

For \(\alpha \in (0,1)\) and every \(x,y \in {\mathcal {H}}\) we have that

3 Deterministic Method

Problem 3.1

The problem at hand reads

for a proper, convex and lower semicontinuous function \(f: {\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\), a convex and \(L_g\)-Lipschitz continuous \((L_g >0)\) function \(g:{\mathcal {G}}\rightarrow {\mathbb {R}}\), and a nonzero linear continuous operator \(K : {\mathcal {H}}\rightarrow {\mathcal {G}}\).

The idea of the algorithm which we propose to solve (1) is to smooth g and then to solve the resulting problem by means of an accelerated proximal-gradient method.

Algorithm 3.1

(Variable Accelerated SmooThing (VAST)) Let \(y_0 = x_0 \in {\mathcal {H}}, {(\mu _{k})}_{k \ge 0} \! \subseteq (0,+\infty )\), and \({(t_{k})}_{k \ge 1}\) a sequence of real numbers with \(t_1=1\) and \(t_{k} \ge 1\) for every \(k\ge 2\). Consider the following iterative scheme

Remark 3.1

The assumption \(t_{1} = 1\) can be removed but guarantees easier computation and is also in line with classical choices of \({(t_{k})}_{k \ge 1}\) in [13, 21].

Remark 3.2

The sequence \({(u_{k})}_{k \ge 1}\) given by

despite not appearing in the algorithm, will feature a prominent role in the convergence proof. Due to the convention \(t_1 = 1\) we have that

We also denote

The next theorem is the main result of this section and it will play a fundamental role when proving a convergence rate of \({\mathcal {O}}(\frac{1}{k})\) for the sequence \({(F(x_k))}_{k \ge 0}\).

Theorem 3.1

Consider the setup of Problem 3.1 and let \({(x_{k})}_{k \ge 0}\) and \({(y_{k})}_{k \ge 0}\) be the sequences generated by Algorithm 3.1. Assume that for every \(k\ge 1\)

and

Then, for every optimal solution \(x^*\) of Problem 3.1, it holds

The proof of this result relies on several partial results which we will prove as follows.

Lemma 3.1

The following statement holds for every \(z \in {\mathcal {H}}\) and every \(k\ge 0\)

Proof

Let \(k\ge 0\) be fixed. Since, by the definition of the proximal map, \(x_{k+1}\) is the minimizer of a \(\frac{1}{\gamma _{k+1}}\)-strongly convex function we know that for every \(z \in {\mathcal {H}}\)

Next we use the \(L_{k+1}\)-smoothness of \({}^{\mu _{k+1}} g \circ K\) and the fact that \(\frac{1}{\gamma _{k+1}} = L_{k+1}\) to deduce

\(\square \)

Lemma 3.2

Let \(x^*\) be an optimal solution of Problem 3.1. Then it holds

Proof

We use the gradient inequality to deduce that for every \(z \in {\mathcal {H}}\) and every \(k \ge 0\)

and plug this into the statement of Lemma 3.1 to conclude that

For \(k=0\) we get that

Now we us the fact that \(u_1 = x_1\) and \(y_0= x_0\) to obtain the conclusion. \(\square \)

Lemma 3.3

Let \(x^*\) be an optimal solution of Problem 3.1. The following descent-type inequality holds for every \(k \ge 0\)

Proof

Let \(k \ge 0\) be fixed. We apply Lemma 3.1 with \(z := \left( 1- \frac{1}{t_{k+1}} \right) x_{k} + \frac{1}{t_{k+1}} x^*\) to deduce that

Using the convexity of f gives

Now, we use (8) to deduce that

and (9) to conclude that

Combining (10), (11) and (12) gives

The first term on the right hand side is \({}^{\mu _{k+1}}g(Kx_{k})\) but we would like it to be \({}^{\mu _{k}}g(Kx_{k})\). Therefore we use Lemma 2.6 to deduce that

Next up we want to estimate all the norms of gradients by using Lemma 2.9 which says that

Now we combine the two terms containing \(\Vert \nabla {}^{\mu _{k+1}}g(Kx_{k}) \Vert ^2\) and get that

By subtracting \(F(x^*) = f(x^*) + g(Kx^*)\) on both sides we finally obtain

\(\square \)

Now we are in the position to prove Theorem 3.1.

Proof of Theorem 3.1

We start with the statement of Lemma 3.3 and use the assumption that

to make the last term in the inequality disappear for every \(k \ge 0\)

Now we use the assumption that

to get that for every \(k \ge 0\)

Let \(N \ge 2\). Summing (15) from \(k=1\) to \(N-1\) and getting rid of the nonnegative term \(\Vert u_{N} - x^* \Vert ^2\) gives

Since \(t_1=1\), the above inequality is fulfilled also for \(N=1\). Using Lemma 3.2 shows that

The above inequality, however, is still in terms of the smoothed objective function. In order to go to the actual objective function we apply (7) and deduce that

\(\square \)

Corollary 3.1

By choosing the parameters \({(\mu _{k})}_{k\ge 1}, {(t_{k})}_{k\ge 1}, {(\gamma _{k})}_{k\ge 1}\) in the following way,

and for every \(k \ge 1\)

they fulfill

and

For this choice of the parameters we have that

Proof

Since \(\gamma _{k}\) and \(\mu _{k}\) are a scalar multiple of each other (18) is equivalent to

and further to (by taking into account that \(t_{k+1} > 1\) for every \(k \ge 1\))

Our update choice in (16) for the sequence \({(\mu _{k})}_{k\ge 1}\) is exactly chosen in such a way that it satisfies this. Plugging (19) into (17) gives for every \(k \ge 1\) the condition

which is equivalent to

and further to

Plugging in \(t_{k+1} = \sqrt{t_{k}^2+2t_{k}}\) we get that this equivalent to

which is evidently fulfilled. Thus, the choices in (16) are indeed feasible for our algorithm.

Now we want to prove the claimed convergence rates. Via induction we show that

Evidently, this holds for \(t_1=1\). Assuming that (20) holds for \(k \ge 1\), we easily see that

and, on the other hand,

In the following we prove a similar estimate for the sequence \({(\mu _{k})}_{k \ge 1}\). To this end we show, again by induction, the following recursion for every \(k\ge 2\)

For \(k=2\) this follows from the definition (19). Assume now that (21) holds for \(k \ge 2\). From here we have that

Using (21) together with (20) we can check that for every \(k \ge 1\)

where we used in the last step the fact that \(t_{k+1} \le t_{k}+1\).

The last thing to check is the fact that \(\mu _{k}\) goes to zero like \(\frac{1}{k}\). First we check that for every \(k\ge 1\)

This can be seen via

By bringing \(t_{k+1}\) to the other side we get that

from which we can deduce (23) by dividing by \(t_{k+1}^2 - t_{k+1}\).

We plug in the estimate (23) in (21) and get for every \(k \ge 2\)

With the above inequalities we can to deduce the claimed convergence rates. First note that from Theorem 3.1 we have

Now, in order to obtain the desired conclusion, we use the above estimates and deduce for every \(N \ge 1\)

where we used that

as shown in (22). \(\square \)

Remark 3.3

Consider the choice (see [21])

and

Since

we see that in this setting we have to choose

Thus, the sequence of optimal function values \({(F(x_N))}_{N \ge 1}\) approaches a \(b \Vert K \Vert ^2 \frac{L_g}{2}\)-approximation of the optimal objective value \(F(x^*)\) with a convergence rate of \({\mathcal {O}}(\frac{1}{N^2})\), i.e.

4 Stochastic Method

Problem 4.1

The problem is the same as in the deterministic case

other than the fact that at each iteration we are only given a stochastic estimator of the quantity

Remark 4.1

See Algorithm 4.3 for a setting where such an estimator is easily computed.

For the stochastic quantities arising in this section we will use the following notation. For every \(k \ge 0\), we denote by \(\sigma (x_0, \dots , x_k)\) the smallest \(\sigma \)-algebra generated by the family of random variables \(\{x_0, \dots , x_k\}\) and by \({\mathbb {E}}_k(\cdot ) := {\mathbb {E}}(\cdot | \sigma (x_0, \dots , x_k))\) the conditional expectation with respect to this \(\sigma \)-algebra.

Algorithm 4.1

(stochastic Variable Accelerated SmooThing (sVAST)) Let \(y_0 = x_0 \in {\mathcal {H}}, {(\mu _{k})}_{k \ge 1}\) a sequence of positive and nonincreasing real numbers, and \({(t_{k})}_{k \ge 1}\) a sequence of real numbers with \(t_1=1\) and \(t_k \ge 1\) for every \(k \ge 2\). Consider the following iterative scheme

where we make the standard assumptions about our gradient estimator of being unbiased, i.e.

and having bounded variance

for every \(k\ge 0\).

Note that we use the same notations as in the deterministic case

Lemma 4.1

The following statement holds for every (deterministic) \(z \in {\mathcal {H}}\) and every \(k \ge 0\)

Proof

Here we have to proceed a little bit different from Lemma 3.1. Namely, we have to treat the gradient step and the proximal step differently. For this purpose we define the auxiliary variable

Let \(k \ge 1\) be fixed. From the gradient step we get

Taking the conditional expectation gives

Using the gradient inequality we deduce

and therefore

Also from the smoothness of \(({}^{\mu _{k}}g \circ K)\) we deduce via the Descent Lemma that

Plugging in the definition of \(z_{k}\) and using the fact that \(L_{k} = \frac{1}{\gamma _{k}}\) we get

Now we take the conditional expectation to deduce that

Multiplying (25) by \(\gamma _k\) and adding it to (24) gives

Now we use the assumption about the bounded variance to deduce that

Next up for the proximal step we deduce

Taking the conditional expectation and combining (26) and (27) we get

From here, using now Lemma 2.3, we get that

Now we use

to obtain that

\(\square \)

Lemma 4.2

Let \(x^*\) be an optimal solution of Problem 4.1. Then it holds

Proof

Applying the previous lemma with \(k=0\) and \(z = x^*\), we get that

Therefore, using the fact that \(y_{0} = x_{0}\) and \(u_{1} = x_{1}\),

which finishes the proof. \(\square \)

Theorem 4.1

Consider the setup of Problem 4.1 and let \({(x_{k})}_{k \ge 0}\) and \({(y_{k})}_{k \ge 0}\) denote the sequences generated by Algorithm 4.1. Assume that for all \(k\ge 1\)

Then, for every optimal solution \(x^*\) of Problem 4.1, it holds

Proof of Theorem 4.1

Let \(k \ge 0\) be fixed. Lemma 4.1 for \(z := \left( 1- \frac{1}{t_{k+1}} \right) x_{k} + \frac{1}{t_{k+1}} x^*\) gives

From here and from the convexity of \(F^{k+1}\) follows

Now, by multiplying both sides with by \(t_{k+1}^2\), we deduce

Next, by adding \(t_{k}^2(F^{k+1}(x_{k}) - F^{k+1}(x^*))\) on both sides of (28), gives

Utilizing (6) together with the assumption that \({(\mu _{k})}_{k \ge 1}\) is nonincreasing leads to

Now, using that \(t_{k}^2 \ge t_{k+1}^2 - t_{k+1}\), we get

Multiplying both sides with \(\gamma _{k+1}\) and putting all terms on the correct sides yields

At this point we would like to discard the term \(\gamma _{k+1} \rho _{k+1}(F^{k+1}(x_{k}) - F^{k+1}(x^*))\) which we currently cannot as the positivity of \(F^{k+1}(x_{k}) - F^{k+1}(x^*)\) is not ensured. So we add \(\gamma _{k+1}\rho _{k+1}\mu _{k+1} \frac{L_{g}^2}{2}\) on both sides of (29) and get

Using again (6) to deduce that

we can now discard said term from (30), giving

Last but not least we use that \(F^{k}(x_{k}) - F^{k}(x^*) + \mu _{k}\frac{L_{g}^2}{2} \ge F(x_{k})-F(x^*)\ge 0\) and \(\gamma _{k+1} \le \gamma _{k}\) to follow that

Combining (31) and (32) yields

Let \(N \ge 2\). We take the expected value on both sides (33) and sum from \(k=1\) to \(N-1\). Getting rid of the non-negative terms \(\Vert u_{N} - x^* \Vert ^2\) gives

Since \(t_1=1\), the above inequality holds also for \(N=1\). Now, using Lemma 4.2 we get that for every \(N \ge 1\)

From (7) we follow that

therefore, for every \(N \ge 1\)

By using the fact that \(\mu _k = \gamma _k \Vert K \Vert ^2\) for every \(k \ge 1\) gives

Thus,

\(\square \)

Corollary 4.1

Let

and, for \(b >0\),

Then,

Furthermore, we have that \(F(x_N)\) converges almost surely to \(F(x^*)\) as \(N \rightarrow +\infty \).

Proof

First we notice that the choice of \(t_{k+1} = \frac{1 + \sqrt{1 + 4 t_{k}^2}}{2}\) fulfills that

Now we derive the stated convergence result by first showing via induction that

Assuming that this holds for \(k \ge 1\), we have that

and

Furthermore, for every \(N \ge 1\) we have that

The statement of the convergence rate in expectation follows now by plugging in our parameter choices into the statement of Theorem 4.1, using the estimate (34) and checking that

The almost sure convergence of \({(F(x_N))}_{N \ge 1}\) can be deduced by looking at (33) and dividing by \(\gamma _{k+1}t_{k+1}^2\) and using that \(\gamma _{k+1}t_{k+1}^2 \ge \gamma _{k}t_{k}^2\) as well as \(\rho _k=0\), which gives for every \(k \ge 0\)

Plugging in our choice of parameters gives for every \(k \ge 0\)

where \(C >0\).

Thus, by the famous Robbins-Siegmund Theorem (see [25, Theorem 1]) we get that \({(F^{k+1}(x_{k+1}) - F^{k+1}(x^*) + \mu _{k+1}\frac{L_{g}^2}{2})}_{k \ge 0}\) converges almost surely. In particular, from the convergence to 0 in expectation we know that the almost sure limit must also be the constant zero. \(\square \)

Finite Sum The formulation of the previous section can be used to deal e.g. with problems of the form

for \(f:{\mathcal {H}}\rightarrow {\overline{{\mathbb {R}}}}\) a proper, convex and lower semicontinuous function, \(g_{i}:{\mathcal {G}}_{i} \rightarrow {\mathbb {R}}\) convex and \(L_{g_i}\)-Lipschitz continuous functions and \(K_{i}: {\mathcal {H}}\rightarrow {\mathcal {G}}_{i}\) linear continuous operators for \(i=1,\dots ,m\).

Clearly one could consider

with \(\Vert {\varvec{K}} \Vert ^2 = \sum _{i=1}^{m}\Vert K_{i} \Vert ^2\) and

in order to reformulate the problem as

and use Algorithm 3.1 together with the parameter choices described in Corollary 3.1 on this. This results in the following algorithm.

Algorithm 4.2

Let \(y_0 = x_0 \in {\mathcal {H}}, \mu _{1}=b \Vert {\varvec{K}} \Vert \), for \(b >0 \), and \(t_1=1\). Consider the following iterative scheme

However, Problem (35) also lends itself to be tackled via the stochastic version of our method, Algorithm 4.1, by randomly choosing a subset of the summands. Together with the parameter choices described in Corollary 4.1 which results in the following scheme.

Algorithm 4.3

Let \(y_0 = x_0 \in {\mathcal {H}}, b >0\), and \(t_1=1\). Consider the following iterative scheme

with \(\epsilon _{k} := (\epsilon _{1,k}, \epsilon _{2,k}, \dots , \epsilon _{m,k})\) a sequence of i.i.d., \({\{0,1\}}^m\) random variables and \(p_i = {\mathbb {P}}[\epsilon _{i,1} = 1]\).

Since the above two methods were not explicitly developed for this separable case and can therefore not make use of more refined estimation of the constant \(\Vert {\varvec{K}} \Vert \), as it is done in e.g. [14]. However, in the stochastic case, this fact is remedied due to the scaling of the stepsize with respect to the i-th component by \(p_{i}^{-1}\).

Remark 4.2

In theory Algorithm 4.1 could be used to treat more general stochastic problems than finite sums like (35), but in the former case it is not clear anymore how a gradient estimator can be found, so we do not discuss it here.

5 Numerical Examples

We will focus our numerical experiments on image processing problems. The examples are implemented in python using the operator discretization library (ODL) [1]. We define the discrete gradient operators \(D_1\) and \(D_2\) representing the discretized derivative in the first and second coordinate respectively, which we will need for the numerical examples. Both map from \({\mathbb {R}}^{m \times n}\) to \({\mathbb {R}}^{m \times n}\) and are defined by

and

The operator norm of \(D_1\) and \(D_2\), respectively, is 2 (where we equipped \({\mathbb {R}}^{m \times n}\) with the Frobenius norm). This yields an operator norm of \(\sqrt{8}\) for the total gradient \(D := D_1 \times D_2\) as a map from \({\mathbb {R}}^{m \times n}\) to \({\mathbb {R}}^{m \times n} \times {\mathbb {R}}^{m \times n}\), see also [12].

We will compare our methods, i.e. the Variable Accelerated SmooThing (VAST) and its stochastic counterpart (sVAST) to the Primal Dual Hybrid Gradient (PDHG) of [15] as well as its stochastic version (sPDHG) from [14]. Furthermore, we will illustrate another competitor, the method by Pesquet and Repetti, see [24], which is another stochastic version of PDHG (see also [29]).

In all examples we choose the parameters in accordance with [14]:

-

for PDHG and Pesquet&Repetti: \(\tau = \sigma _i = \frac{\gamma }{\Vert K \Vert }\)

-

for sPDHG: \(\sigma _i = \frac{\gamma }{\Vert K \Vert }\) and \(\tau = \frac{\gamma }{n \max _i \Vert K_i \Vert }\),

where \(\gamma = 0.99\).

5.1 Total Variation Denoising

The task at hand is to reconstruct an image from its noisy observation. We do this by solving

with \(\alpha >0\) as regularization parameter, in the following setting: \(f= \alpha \Vert \cdot - b \Vert _2, g_1=g_2 = \Vert \cdot \Vert _1, K_1=D_1, K_2=D_2\).

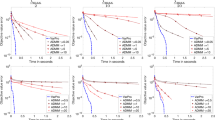

Figure 1 illustrates the images (of dimension \(m = 442\) and \(n = 331\)) used in for this example. These include the groundtruth, i.e. the uncorrupted image, as well as the data for the optimization problem b, which visualizes the level of noise. In Fig. 2 we can see that for the deterministic setting our method is as good as PDHG. For the objective function values, Fig. 2b, this is not too surprising as both algorithms share the same convergence rate. For the distance to a solution however we completely lack a convergence result. Nevertheless in Fig. 2a we can see that our method performs also well with respect to this measure.

In the stochastic setting we can see in Fig. 2 that, while sPDHG provides some benefit over its deterministic counterpart, the stochastic version of our method, although significantly increasing the variance, provides great benefit, at least for the objective function values.

Furthermore, Fig. 3, shows the reconstructions of sPDHG and our method which are, despite the different objective function values, quite comparable.

5.2 Total Variation Deblurring

For this example we want to reconstruct an image from a blurred and noisy image. We assume to know the blurring operator \(C: {\mathbb {R}}^{m \times n} \rightarrow {\mathbb {R}}^{m \times n}\). This is done by solving

for \(\alpha >0\) as regularization parameter, in the following setting: \(f=0, g_1 = \alpha \Vert \cdot - b \Vert _2, g_2=g_3 = \Vert \cdot \Vert _1, K_1=C, K_2 = D_1, K_2=D_2\).

Figure 4 shows the images used to set up the optimization problem (36), in particular Fig. 4b which corresponds to b in said problem.

In Fig. 5 we see that while PDGH performs better in the deterministic setting, in particular in the later iteration, the stochastic variable smoothing method provides a significant improvement where sPDHG method seems not to converge. It is interesting to note that in this setting even the deterministic version of our algorithm exhibits a slightly chaotic behaviour. Although neither of the two methods is monotone in the primal objective function PDHG seems here much more stable.

5.3 Matrix Factorization

In this section we want to solve a nonconvex and nonsmooth optimization problem of completely positive matrix factorization, see [16, 19, 27]. For an observed matrix \(A \in {\mathbb {R}}^{d\times d}\) we want to find a completely positive low rank factorization, meaning we are looking for \(x\in {\mathbb {R}}^{r\times d}_{\ge 0}\) with \(r \ll d\) such that \(x^{T}x=A\). This can be formulated as the following optimization problem

where \(x^{T}\) denotes the transpose of the matrix x. The more natural approach might be to use a smooth formulation where \(\Vert \cdot \Vert ^2_{2}\) is used instead of the 1-Norm we are suggesting. However, the former choice of distance measure, albeit smooth, comes with its own set of problems (mainly a non-Lipschitz gradient).

The so called Prox-Linear method presented in [18] solves the above problem (37), by linearizing the smooth (\({\mathbb {R}}^{d\times d}\)-valued) function \(x \mapsto x^{T}x\) inside the nonsmooth distance function. In particular for the problem

for a smooth vector valued function c and a convex and Lipschitz function g, [18] proposes to iteratively solve the subproblem

for a stepsize \(t \le {(L_{g}L_{D\nabla c})}^{-1}\). For our particular problem described in (37) the subproblem looks as follows

and therefore fits our general setup described in (1) with the identification \(f= \Vert \cdot - x_{k} \Vert ^2_{2} + \delta _{{\mathbb {R}}^{r\times d}_{\ge 0}}(x)\), \(g=\Vert \cdot \Vert _{1}\) and \(K = x_{k}^{T}\). Moreover, due to its separable structure, the subproblem (39) fits the special case described in (35) and can therefore be tackled by the stochastic version of our algorithm presented in Algorithm 4.3. In particular reformulating (38) for the stochastic finite sum setting we interpret the subproblem as

where A[i, : ] denotes the i-th row of the matrix A (Fig. 6).

Comparison of the evolutions of the objective function values for different starting points. We run 40 epochs with 5 iterations each. For each epoch we choose the last iterate of the previous epoch as the linearization. For the stochastic methods we fix the number of rows (batch size) which are randomly chosen in each update a priori and count d divided by this number as one iteration. For the randomly chosen initial point we use a batch size of 3 (to allow for more exploration) and for the one close to the solution we use 5 in order to give a more accuracy. The parameter b in the variable smoothing method was chosen with minimal tuning to be 0.1 for both the deterministic and the stochastic version

In comparison to Sects. 5.1 and 5.2 a new aspect becomes important when evaluating methods for solving (38). Now, it is not only relevant how well subproblem (39) is solved, but also the trajectory taken in doing so as different paths might lead to different local minima. This can be seen in Fig. 6 where PDHG gets stuck early on in bad local minima. The variable smoothing method (especially the stochastic version) is able to move further from the starting point and find better local minima. Note that in general the methods have a difficulty in finding the global minimum \(x_{true}\in {\mathbb {R}}^{3\times 60}\) (with optimal objective function value zero, as constructed \(A: = x_{true}^{T}x_{true}\in {\mathbb {R}}^{60\times 60}\) in all examples).

References

Adler, J., Kohr, H., Öktem, O.: Operator Discretization Library. https://odlgroup.github.io/odl/ (2017)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, New York (2011)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Borwein, J.M., Vanderwerff, J.D.: Convex Functions: Constructions, Characterizations and Counterexamples. Cambridge University Press, Cambridge (2010)

Boţ, R.I., Csetnek, E.R.: On the convergence rate of a forward–backward type primal–dual splitting algorithm for convex optimization problems. Optimization 64(1), 5–23 (2015)

Boţ, R.I., Csetnek, E.R., Heinrich, A., Hendrich, C.: On the convergence rate improvement of a primal–dual splitting algorithm for solving monotone inclusion problems. Math. Program. 150(2), 251–279 (2015)

Boţ, R.I., Hendrich, C.: A double smoothing technique for solving unconstrained nondifferentiable convex optimization problems. Comput. Optim. Appl. 54(2), 239–262 (2013)

Boţ, R.I., Hendrich, C.C.: A Douglas-Rachford type primal–dual method for solving inclusions with mixtures of composite and parallel-sum type monotone operators. SIAM J. Optim. 23(4), 2541–2565 (2013)

Boţ, R.I., Hendrich, C.: Convergence analysis for a primal–dual monotone+ skew splitting algorithm with applications to total variation minimization. J. Math. Imaging Vis. 49(3), 551–568 (2014)

Boţ, R.I., Hendrich, C.: On the acceleration of the double smoothing technique for unconstrained convex optimization problems. Optimization 64(2), 265–288 (2015)

Boţ, R.I., Hendrich, C.: A variable smoothing algorithm for solving convex optimization problems. TOP 23(1), 124–150 (2015)

Chambolle, A.: An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 20(1–2), 89–97 (2004)

Chambolle, A., Dossal, C.: On the convergence of the iterates of the Fast Iterative Shrinkage/Thresholding Algorithm. J. Optim. Theory Appl. 166(3), 968–982 (2015)

Chambolle, A., Ehrhardt, M.J., Richtárik, P., Schönlieb, C.B.: Stochastic primal–dual hybrid gradient algorithm with arbitrary sampling and imaging applications. SIAM J. Optim. 28(4), 2783–2808 (2018)

Chambolle, A., Pock, T.: A first-order primal–dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chen, C., Pong, T.K., Tan, L., Zeng, L.: A difference-of-convex approach for split feasibility with applications to matrix factorizations and outlier detection. J. Glob. Optim. https://doi.org/10.1007/s10898-020-00899-8 (2020)

Condat, L.: A primal–dual splitting method for convex optimization involving Lipschitzian, proximable and linear composite terms. J. Optim. Theory Appl. 158(2), 460–479 (2013)

Drusvyatskiy, D., Paquette, C.: Efficiency of minimizing compositions of convex functions and smooth maps. Math. Program. 178, 1–56 (2019)

Groetzner, P., Dür, M.: A factorization method for completely positive matrices. Linear Algebra Appl. 591, 1–24 (2020)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Nesterov, Y.: A method for unconstrained convex minimization problem with the rate of convergence \(O(1/k^2)\). Doklady Akademija Nauk USSR 269, 543–547 (1983)

Nesterov, Y.: Smoothing technique and its applications in semidefinite optimization. Math. Program. 110(2), 245–259 (2007)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Springer, New York (2013)

Pesquet, J.-C., Repetti, A.: A class of randomized primal–dual algorithms for distributed optimization. J. Nonlinear Convex Anal. 16(12), 2453–2490 (2015)

Robbins, H., Siegmund, D.: A convergence theorem for non negative almost supermartingales and some applications. In: Optimizing Methods in Statistics, Proceedings of a Symposium Held at the Center for Tomorrow, Ohio State University, June 14–16, Elsevier, pp. 233–257 (1971)

Rosasco, L., Villa, S., Vũ, B.C.: A first-order stochastic primal-dual algorithm with correction step. Numer. Funct. Anal. Optim. 38(5), 602–626 (2017)

Shi, Q., Sun, H., Songtao, L., Hong, M., Razaviyayn, M.: Inexact block coordinate descent methods for symmetric nonnegative matrix factorization. IEEE Trans. Signal Process. 65(22), 5995–6008 (2017)

Tran-Dinh, Q., Fercoq, O., Cevher, V.: A smooth primal–dual optimization framework for nonsmooth composite convex minimization. SIAM J. Optim. 28(1), 96–134 (2018)

Vũ, B.C.: A splitting algorithm for dual monotone inclusions involving cocoercive operators. Adv. Comput. Math. 38(3), 667–681 (2013)

Acknowledgements

The authors are thankful to two anonymous reviewers for comments and remarks which improved the quality of the presentation and led to the numerical experiment on matrix factorization.

Funding

Open access funding provided by Austrian Science Fund (FWF).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Research partially supported by FWF (Austrian Science Fund) project I 2419-N32. Research supported by the doctoral programme Vienna Graduate School on Computational Optimization (VGSCO), FWF (Austrian Science Fund), Project W 1260.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Boţ, R.I., Böhm, A. Variable Smoothing for Convex Optimization Problems Using Stochastic Gradients. J Sci Comput 85, 33 (2020). https://doi.org/10.1007/s10915-020-01332-8

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-020-01332-8

Keywords

- Structured convex optimization problem

- Variable smoothing algorithm

- Convergence rate

- Stochastic gradients