Abstract

Essentially non-oscillatory (ENO) and weighted ENO (WENO) methods on equidistant Cartesian grids are widely used to solve partial differential equations with discontinuous solutions. However, stable ENO/WENO methods on unstructured grids are less well studied. We propose a high-order ENO method based on radial basis functions (RBFs) to solve hyperbolic conservation laws on general two-dimensional grids. The radial basis function reconstruction offers a flexible way to deal with ill-conditioned cell constellations. We introduce a smoothness indicator based on RBFs and a stencil selection algorithm suitable for general meshes. Furthermore, we develop a stable method to evaluate the RBF reconstruction in the finite volume setting, which circumvents the stagnation of the error and keeps the condition number of the reconstruction bounded. We conclude with several challenging numerical examples in two dimensions to show the robustness of the method.


1 Introduction

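Solving systems of hyperbolic conservation laws with high-order methods continues to attract substantial interest. In two dimensions, a system of conservation laws in differential form is given as

\[
\frac{\partial u}{\partial t} + \frac{\partial f_1(u)}{\partial x_1} + \frac{\partial f_2(u)}{\partial x_2} = 0, \qquad u(\mathbf{x},0) = u_0(\mathbf{x}), \tag{1}
\]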

with the conserved variables \(u:\mathbb {R}^2\times \mathbb {R}_{+} \rightarrow \mathbb {R}^N\), the fluxes \(f_i:\mathbb {R}^N\rightarrow \mathbb {R}^N\) and the initial condition \(u_0\). A well-known approach to solving hyperbolic conservation laws is the class of finite volume methods, which is based on a discretization of the domain into control volumes and an approximation of the flux through their boundaries. Van Leer [33] introduced the MUSCL approach, which is based on a high-order approximation of the flux through the boundaries. A well-known challenge for high-order methods is that conservation laws can form discontinuities from smooth initial data [26]. Thus, solutions need to be defined in the weak (distributional) sense. To prevent stability issues caused by discontinuous solutions, Harten et al. [17] proposed the principle of essentially non-oscillatory (ENO) methods. A powerful extension of the ENO method is the weighted ENO (WENO) method [30]. Alternative methods to avoid stability problems and unphysical oscillations are based on adding artificial viscosity [34] or on the use of limiters [18]. A generalization of the finite volume method is the class of discontinuous Galerkin (DG) finite element methods [7], for which it is necessary to add limiters to ensure non-oscillatory approximations [22]. There exist several approaches that combine RBFs with finite volume methods, e.g., a high-order WENO approach based on polyharmonics [1], a high-order WENO approach based on multiquadratics [6], a high-order RBF-based CWENO method [21] and an entropy stable RBF-based ENO method [20]. However, most of these are suitable only for one-dimensional grids or are at most second-order accurate. We seek to overcome these limitations with a new RBF-ENO method on general two-dimensional grids.

In Sect. 2, we introduce the finite volume scheme based on the MUSCL approach [33] and describe the basics of RBF interpolation in Sect. 3. Sections 4 and 5 contain the main contribution. In Sect. 4 we introduce a stable evaluation method for RBF interpolation with a polynomial augmentation, which circumvents the known error stagnation. In the same section we include a general proof of the order of convergence for RBFs augmented with polynomials. In Sect. 5, we introduce a smoothness indicator for RBFs, based on the sign-stable one-dimensional approach developed in [20]. We combine these results to construct an arbitrarily high-order RBF based ENO finite volume method. In Sect. 6, we demonstrate the robustness of the numerical scheme with a variety of numerical examples, while Sect. 7 offers a few concluding remarks.

2 Finite Volume Methods

We assume a triangulation of \(\varOmega \subset \mathbb {R}^2\), consisting of triangular cells \(C_i = (x_i ,x_k, x_j)\) as illustrated in Fig. 1. The finite volume method is based on cell averages \(U_i = \frac{1}{|C_i|}\int _{C_i} u(x) \mathrm {d}x\) over the cell \(C_i\). Integrating (1) over the cell, dividing by its area \(|C_i|\) and applying the divergence theorem, we recover the semi-discrete scheme

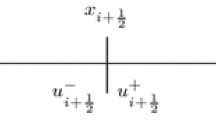

with the numerical flux \(F_{il_{e}} = F_{il_{e}}(U_i,U_{il_{e}},\mathbf{n }_{il_{e}})\) with the accuracy condition

where \(\mathbf{f} = (f_1,f_2)\), \(S_{il_{e}} = \partial C_i \cap \partial C_{il_{e}}\), \(U_{il_{e}}\) is the cell average of \(C_{il_{e}}\) and \(\mathbf{n }_{il_{e}}\) is the outward pointing normal vector. The numerical flux \(F_{il_{e}}\) can be expressed using an (approximate) Riemann solver. A common choice is the Rusanov flux

with

Here, \(\lambda _{max}(A)\) is the largest eigenvalue of \(A\) and \(\mathbf{n }_{il_{e}}\) is the normal vector to the interface \(S_{il_{e}}\).
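As an illustration, the Rusanov flux can be sketched in a few lines of Python. The sketch assumes the standard local Lax-Friedrichs form, i.e., the average of the normal fluxes minus a dissipation term scaled by the largest wave speed of the two states; the exact constants in (4) and (5) may differ. The names `rusanov_flux`, `flux` and `max_speed` are hypothetical, and the wave speed is taken as the largest absolute eigenvalue of the flux Jacobian in the normal direction.

```python
import numpy as np

def rusanov_flux(u_in, u_out, normal, flux, max_speed):
    """Rusanov (local Lax-Friedrichs) flux across an interface (assumed standard form).

    flux(u)         -> array of shape (2, N) holding the flux components f_1(u), f_2(u)
    max_speed(u, n) -> largest absolute eigenvalue of the flux Jacobian in direction n
    """
    u_in = np.atleast_1d(np.asarray(u_in, dtype=float))
    u_out = np.atleast_1d(np.asarray(u_out, dtype=float))
    n = np.asarray(normal, dtype=float)
    fn_in = np.tensordot(n, flux(u_in), axes=1)    # f(u_in) . n
    fn_out = np.tensordot(n, flux(u_out), axes=1)  # f(u_out) . n
    lam = max(max_speed(u_in, n), max_speed(u_out, n))
    return 0.5 * (fn_in + fn_out) - 0.5 * lam * (u_out - u_in)

# Example: scalar linear advection with velocity a = (1, 0)
a = np.array([1.0, 0.0])
advection_flux = lambda u: np.outer(a, u)
advection_speed = lambda u, n: abs(a @ n)
```

For linear advection with the velocity pointing out of the cell, this flux reduces to pure upwinding: the interior state alone determines the result.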

A high-order approximation of the boundary integral (3), combined with a high-order accurate (polynomial) reconstruction s of the local solution, can be used to evaluate the first-order flux \(F(U,V,{\mathbf{n }_{il_{e}}})\) at the quadrature points. This high-order flux can be written as

with the quadrature weights \(\omega _k\), the quadrature points \(\mathbf{x} _k\) for \(k = 1,\dots ,n_Q\) with \(n_Q\in \mathbb {N}\) the number of quadrature points and the high-order accurate reconstructions \(s_i\) of the solution in cell \(C_i\). The high-order reconstruction \(s_i\) is based on a stencil of cells which includes \(C_i\). In all cases, we can apply an arbitrary time discretization technique to recover a fully discrete scheme, e.g., an SSPRK method [14].
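The quadrature step can be sketched as follows. This is a minimal sketch, assuming that the weights are normalized to sum to one so that the result is the edge average of the first-order flux; whether (6) absorbs the edge length into the weights is an assumption here. The function `edge_flux` and its arguments are hypothetical names.

```python
import numpy as np

def edge_flux(x_a, x_b, s_in, s_out, normal, num_flux, n_q=2):
    """Gauss-Legendre approximation of the edge-averaged numerical flux.

    s_in, s_out : callables x -> reconstructed state on either side of the edge
    num_flux    : callable (u_in, u_out, normal) -> first-order numerical flux
    """
    x_a, x_b = np.asarray(x_a, dtype=float), np.asarray(x_b, dtype=float)
    nodes, weights = np.polynomial.legendre.leggauss(n_q)  # rule on [-1, 1]
    total = 0.0
    for t, w in zip(nodes, weights):
        x_k = 0.5 * (x_a + x_b) + 0.5 * t * (x_b - x_a)  # map node to the edge
        # weights on [-1, 1] sum to 2, so 0.5 * w sums to 1 (edge average)
        total = total + 0.5 * w * num_flux(s_in(x_k), s_out(x_k), normal)
    return total
```

With `n_q` Gauss-Legendre points the rule integrates polynomials up to degree `2 * n_q - 1` exactly along the edge, so the number of points should be matched to the order of the reconstruction.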

3 Radial Basis Functions

The use of radial basis functions for scattered data interpolation has a long history. Their mesh-free property and flexibility for high-dimensional data make them advantageous when compared to polynomials.

3.1 Standard Interpolation

Let us consider the interpolation problem \(f|_X = (f(\mathbf{x} _1),\dots ,f(\mathbf{x} _n))^T\in \mathbb {R}^n\) on the scattered set of data points \(X = (\mathbf{x} _1,\dots ,\mathbf{x} _n)^T\) with \(\mathbf{x} _i\in \mathbb {R}^d\) for \(f:\mathbb {R}^d\rightarrow \mathbb {R}\). We are seeking a function \(s_{f,X}:\mathbb {R}^d\rightarrow \mathbb {R}\) such that

\[
s_{f,X}(\mathbf{x}_i) = f(\mathbf{x}_i), \qquad i = 1,\dots ,n. \tag{7}
\]

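The general radial basis function approximation is given as

\[
s_{f,X}(\mathbf{x}) = \sum _{i=1}^n a_i \phi (\varepsilon \Vert \mathbf{x}-\mathbf{x}_i\Vert ) + \sum _{j=1}^m b_j p_j(\mathbf{x}) \tag{8}
\]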

with polynomials \(p_j\in \varPi _{l-1}(\mathbb {R}^d)\), where \(\varPi _{l-1}(\mathbb {R}^d)\) is the space of polynomials in \(\mathbb {R}^d\) of degree at most \(l-1\), \(l\in \mathbb {N}\), and \(m\in \mathbb {N}\) is its dimension, a univariate continuous function \(\phi \) (the radial basis function), the Euclidean norm \(\Vert \cdot \Vert \) and the shape parameter \(\varepsilon \). To ensure uniqueness of the coefficients \(a_i\) and \(b_j\) for all \(i= 1,\dots ,n\) and \(j=1,\dots , m\) we introduce the additional constraints

\[
\sum _{i=1}^n a_i p_j(\mathbf{x}_i) = 0, \qquad j = 1,\dots ,m. \tag{9}
\]

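To simplify the notation we write

\[
\phi (\mathbf{x}-\mathbf{x}_i) := \phi (\varepsilon \Vert \mathbf{x}-\mathbf{x}_i\Vert ). \tag{10}
\]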

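Finally, we express (7) and (9) by the system of equations

\[
\begin{pmatrix} A & P \\ P^T & 0 \end{pmatrix} \begin{pmatrix} a \\ b \end{pmatrix} = \begin{pmatrix} f|_X \\ 0 \end{pmatrix} \tag{11}
\]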

with \(A_{ij} = \phi (\mathbf{x} _i-\mathbf{x} _j)\), \(P_{ij} = p_j(\mathbf{x} _i)\), \(a = (a_1,\dots ,a_n)^T\) and \(b = (b_1,\dots ,b_m)^T\). Depending on the choice of the RBF \(\phi \) the polynomial term in (8) ensures the solvability of (11).

Definition 1

(Conditionally positive function) A function \(\phi :\mathbb {R}^d\rightarrow \mathbb {R}\) is called conditionally positive (semi-) definite of order l if, for any pairwise distinct points \(\mathbf{x} _1,\dots ,\mathbf{x} _n\in \mathbb {R}^d\) and \(c = (c_1,\dots ,c_n)^T\in \mathbb {R}^n\setminus \{0\}\) such that

\[
\sum _{i=1}^n c_i p(\mathbf{x}_i) = 0 \tag{12}
\]

for all \(p\in \varPi _{l-1}(\mathbb {R}^d)\), the quadratic form

\[
\sum _{i=1}^n \sum _{j=1}^n c_i c_j \phi (\mathbf{x}_i-\mathbf{x}_j) \tag{13}
\]

is positive (non-negative).

For a conditionally positive definite RBF \(\phi \) of order r, the system (11) has a unique solution if \(\mathbf{x} _1,\dots ,\mathbf{x} _n\) are \(\varPi _{l-1}(\mathbb {R}^d)\)-unisolvent for \(l\geqslant r\) [35]. The positive definite functions form a subclass of the conditionally positive definite functions: for them, the quadratic form (13) is positive for every \(c \ne 0\), without the side condition (12).

Since the matrix A is positive definite for a positive definite function \(\phi \), the existence of a unique solution to (11) follows immediately for all \(l\in \mathbb {N}\), provided \(\mathbf{x} _1,\dots ,\mathbf{x} _n\) are pairwise distinct.

The most commonly used RBFs are listed in Table 1.

A well-known problem with RBFs is the ill-conditioning of the interpolation matrix and the resulting stagnation (saturation) of the error under refinement [9, 24]. One way to overcome the stagnation error is the augmentation with polynomials [4, 5, 12]. In this case, the polynomials take over the role for the interpolation and the RBFs ensure solvability of (11).
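To make the structure of the augmented system (11) concrete, the following Python sketch assembles and solves it for point values in two dimensions. It is a minimal sketch under stated assumptions: the multiquadric is taken as \(\sqrt{1+(\varepsilon r)^2}\), the polynomial basis consists of plain monomials, and the helper names `multiquadric`, `poly_basis_2d`, `rbf_fit` and `rbf_eval` are hypothetical.

```python
import numpy as np

def multiquadric(r, eps):
    # First-order multiquadric, assumed in the form sqrt(1 + (eps * r)^2)
    return np.sqrt(1.0 + (eps * r) ** 2)

def poly_basis_2d(x, degree):
    """Monomials x^a y^b with a + b <= degree, ordered by total degree."""
    cols = [x[:, 0] ** (t - b) * x[:, 1] ** b
            for t in range(degree + 1) for b in range(t + 1)]
    return np.column_stack(cols)

def rbf_fit(nodes, values, eps, degree):
    """Solve the saddle-point system [[A, P], [P^T, 0]] [a; b] = [f; 0]."""
    n = nodes.shape[0]
    r = np.linalg.norm(nodes[:, None, :] - nodes[None, :, :], axis=-1)
    A = multiquadric(r, eps)
    P = poly_basis_2d(nodes, degree)
    m = P.shape[1]
    K = np.block([[A, P], [P.T, np.zeros((m, m))]])
    rhs = np.concatenate([values, np.zeros(m)])
    sol = np.linalg.solve(K, rhs)
    return sol[:n], sol[n:]

def rbf_eval(x, nodes, a, b, eps, degree):
    """Evaluate the interpolant at the rows of x."""
    r = np.linalg.norm(x[:, None, :] - nodes[None, :, :], axis=-1)
    return multiquadric(r, eps) @ a + poly_basis_2d(x, degree) @ b
```

If the data come from a polynomial in the augmentation space, the constraints force the RBF coefficients to vanish and the interpolant reproduces the polynomial, which is the mechanism by which the polynomial part takes over the approximation.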

3.2 Interpolation of Cell-Averages

The finite volume MUSCL approach is based on the interpolation of cell averages. Let us consider the interpolation problem \(f|_S = (\bar{u}_1,\dots ,\bar{u}_n)^T\in \mathbb {R}^n\) on the stencil S of cells \(C_1,\dots ,C_n\) in terms of the cell-averages \(\bar{u}_1,\dots ,\bar{u}_n\). Based on (8) we have

\[
s(\mathbf{x}) = \sum _{i=1}^n a_i\lambda _{C_i}^{\xi }\phi (\mathbf{x}-\xi ) + \sum _{j=1}^m b_j p_j(\mathbf{x}) \tag{14}
\]

with \(\lambda _{C}^{\xi } f\) being the average operator of f over the cell C with respect to the variable \(\xi \) and \( \{p_1,\dots ,p_m\}\) the polynomial basis of \(\varPi _{l-1}(\mathbb {R}^d)\) [1]. Thus, we have the interpolation problem

The solvability of (15) is ensured provided \(\phi \) is conditionally positive definite in a pointwise sense and \(\{\lambda _{C_i}\}_{i = 1}^n\) is \(\varPi _{l-1}(\mathbb {R}^d)\)-unisolvent [1].
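The averaging operator \(\lambda _{C}^{\xi }\) can be approximated numerically by a cubature rule on the triangle. The sketch below uses the edge-midpoint rule, which is exact for quadratic polynomials; this low-order rule is chosen only for illustration and is not the rule from the cubature reference used later in the paper. The function names are hypothetical, and the multiquadric form \(\sqrt{1+(\varepsilon r)^2}\) is an assumption.

```python
import numpy as np

def triangle_area(verts):
    (x0, y0), (x1, y1), (x2, y2) = verts
    return 0.5 * abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))

def cell_average(f, verts):
    """Average of f over a triangle via the edge-midpoint rule (exact for quadratics)."""
    v = np.asarray(verts, dtype=float)
    midpoints = 0.5 * (v + np.roll(v, -1, axis=0))  # midpoints of the three edges
    return sum(f(p) for p in midpoints) / 3.0

def averaged_multiquadric(x, verts, eps):
    """Approximate lambda_C^xi phi(x - xi): the kernel averaged over the cell in xi."""
    x = np.asarray(x, dtype=float)
    kernel = lambda xi: np.sqrt(1.0 + (eps * np.linalg.norm(x - xi)) ** 2)
    return cell_average(kernel, verts)
```

The entries of the cell-average interpolation matrix are then obtained by applying the averaging operator once more, in the variable \(\mathbf{x}\), to `averaged_multiquadric`.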

4 Stable RBF Evaluation for Fixed Number of Nodes

As mentioned in Sect. 3, the ill-conditioning of the RBF interpolation is a well-known challenge. However, RBFs within finite volume methods are of a slightly different nature. In general, the RBF approximation achieves exponential order of convergence for smooth functions by increasing the number of interpolation nodes in a certain domain. The setting for finite volume methods is different since the number of interpolation points remains fixed at a rather low number of nodes and only the fill-distance is reduced.

Based on [11, 12] it is known that the combination of polyharmonics and Gaussians with polynomials overcomes the stagnation error. Bayona [3] shows that under certain assumptions the order of convergence is ensured by the polynomial part.

We propose to use multiquadratic rather than polyharmonic or Gaussian RBFs to enable the use of the smoothness indicator developed in [20]. Since the RBFs are only used to ensure solvability of the linear system, we can use

\[
\varepsilon = \frac{1}{\varDelta x} \tag{16}
\]

as the shape parameter with the separation distance \(\varDelta x :=\min _{i\ne j}{\Vert \mathbf{x} _i-\mathbf{x} _j\Vert }\) for the interpolation nodes \(\mathbf{x} _1,\dots , \mathbf{x} _n\) with \(n\in \mathbb {N}\). To control the conditioning of the polynomial part we use the basis

\[
p_i(\mathbf{x}) = \tilde{p}_i\left( \varepsilon (\mathbf{x}-{\tilde{\mathbf{x}}})\right) \tag{17}
\]

for \(i = 1,\dots , m\) with \(\tilde{p}_i\in \{\mathbb {R}^d\rightarrow \mathbb {R}, \mathbf{x} \mapsto x_1^{\alpha _1}\dots x_d^{\alpha _d}| \; \sum _{i=1}^d \alpha _i < l, \alpha _i\in \mathbb {N}\}\), \(\text {deg}(\tilde{p}_i) \leqslant \text {deg}(\tilde{p}_{i+1})\) and \({\tilde{\mathbf{x}}}\in \{\mathbf{x }_1\dots ,\mathbf{x }_n\}\). The best choice for \({\tilde{\mathbf{x}}}\) would be the barycenter of the stencil. However, to use the same polynomials for different stencils in the ENO scheme we choose the central one.

Remark 1

The interpolation matrix is the same as the one with the interpolation basis \(\tilde{p}_i\) with \(i=1\dots ,m\), the RBFs with shape parameter 1, and the nodes \(\tilde{\mathbf{x }}_1,\dots , \tilde{\mathbf{x }}_n\) with \(\tilde{\mathbf{x }}_i = \varepsilon (\mathbf{x} _i-\mathbf{x} _1)\). This holds true for any \(\varDelta x \rightarrow 0\) and \(\varDelta \tilde{x} = 1\). Thus, the interpolation step in the finite volume method has the same condition number for all refinements as long as the interpolation nodes have a similar distribution.
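The invariance stated in Remark 1 can be checked numerically. The sketch below assumes the shape parameter \(\varepsilon = 1/\varDelta x\) implied by the remark, a multiquadric of the form \(\sqrt{1+(\varepsilon r)^2}\), and monomials in the scaled coordinates \(\varepsilon (\mathbf{x}-\mathbf{x}_1)\); the function `scaled_system` and the node set `base` are hypothetical.

```python
import numpy as np

def scaled_system(nodes, degree):
    """Augmented matrix for multiquadrics with eps = 1/dx and scaled, shifted monomials."""
    diff = nodes[:, None, :] - nodes[None, :, :]
    r = np.linalg.norm(diff, axis=-1)
    dx = r[r > 0].min()            # separation distance of the node set
    eps = 1.0 / dx                 # assumed form of the shape parameter
    A = np.sqrt(1.0 + (eps * r) ** 2)
    z = eps * (nodes - nodes[0])   # scaled coordinates, cf. Remark 1
    P = np.column_stack([z[:, 0] ** (t - b) * z[:, 1] ** b
                         for t in range(degree + 1) for b in range(t + 1)])
    m = P.shape[1]
    return np.block([[A, P], [P.T, np.zeros((m, m))]])

base = np.array([[0.0, 0.0], [1.0, 0.1], [0.2, 0.9],
                 [1.1, 1.0], [0.6, 0.5], [0.4, 1.6]])
for h in (1.0, 1e-2, 1e-4):
    K = scaled_system(h * base, degree=1)
    print(f"h = {h:7.0e}   cond(K) = {np.linalg.cond(K):.6e}")
```

Up to rounding, the printed condition numbers coincide for all refinement levels, because the matrix depends only on the scaled node configuration and not on \(\varDelta x\) itself.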

4.1 Stability Estimate for RBF Coefficients

In this section, we analyze the stability of the RBF interpolation based on (16) and (17) and show that the stability of the RBF coefficients depends only on the number of the interpolation nodes n. Then, for the one-dimensional case we show that the stability of the polynomial coefficients depends on n and the ratio of the maximum distance between the interpolation points Dx and the minimum distance \(\varDelta x\). For higher dimension we conjecture that a similar result holds.

From [28] it follows that

Lemma 1

(Stability estimate [28]) For (11) there holds the stability estimate

with \(\lambda _{min} := \inf _{a\ne 0, P^T a = 0} \frac{a^TA a}{a^T a}\) and \(\lambda _{max}\) the maximal eigenvalue. Further, there exists an estimate for the polynomial coefficients

with the maximal and minimal eigenvalue of \(P^TP\), \(\lambda _{max,P^TP}\), \(\lambda _{min,P^TP}\).

Thus, the stability of the method depends on the ratios

The maximal eigenvalues can be estimated by

Note that \(\lambda _{min}\) is not the smallest eigenvalue of A, but its definition is similar. Schaback [28] established the following lower bound

Lemma 2

(Lower bound of \(\lambda _{min}\) [28]) Given an even conditionally positive definite function \(\phi \) with the positive generalized Fourier transform \(\hat{\phi }\). It holds that

with the function

for \(M>0\) satisfying

or

and with

It remains to estimate \(\varphi _0(M)\) depending on the RBFs. Some estimates for the common examples in Table 1 are

Lemma 3

(Estimate of \(\varphi _0\) for multiquadratics [28]) Let \(\phi \) be the multiquadratic RBF, then

Note that the lower bound of \(\varphi _0\) of Lemma 3 is zero for \(\nu \in \mathbb {N}\).

Lemma 4

(Estimate of \(\varphi _0\) for Gaussians [35]) Let \(\phi \) be the Gaussian RBF, then

Lemma 5

(Estimate of \(\varphi _0\) for polyharmonics [35]) Let \(\phi (r) = (-1)^{k+1}r^{2k}\log (r)\) be a polyharmonic RBF, then

Corollary 1

By using the shape parameter (16) we recover

for all \(\mathbf{x} _1,\dots ,\mathbf{x} _n\), \(n\in \mathbb {N}\) and a constant C(n, d) which depends on the number of interpolation nodes n and the dimension d.

Proof

From Remark 1 we conclude

with a constant \(C(n,d,\varDelta x)\) which depends on n, d and \(\varDelta x\).

Hence, the stability of the RBF coefficients depends only on the number of interpolation nodes n. This analysis is dimension independent and it remains to estimate the ratio \( \lambda _{max,P^TP}/ \lambda _{min,P^TP}\).

4.2 Stability Estimate for Polynomial Coefficients

The analysis of the Gram matrix \(G :=P^T P\in \mathbb {R}^{m\times m}\) is more challenging. For the polynomial basis (17) we have

We note that \(P = \tilde{P}\) where \((\tilde{P})_{i,j} = \tilde{p}_j(\tilde{\mathbf{x }}_i)\) with \(\tilde{\mathbf{x }}_i = \varepsilon (\mathbf{x} _i - \mathbf{x} _1)\). In the one-dimensional case, the following estimate of the condition number holds for the Vandermonde matrix.

Lemma 6

(Conditioning of the Vandermonde matrix in one dimension, [13]) Let \(V_n\) be the Vandermonde matrix \((V_n)_{i,j} = z_j^i\) with \(z_i \ne z_j\) for \(i\ne j\) and \(z_j\in \mathbb {C}\). It holds that

Corollary 2

with \(Dx = \max _{i\ne j} |x_i-x_j|\).

Proof

We start with the estimate of \(\Vert P\Vert _{\infty }\)

To estimate the norm of \(P^{-1}\) we use Lemma 6

Furthermore, we have the standard estimate

for \(A \in \mathbb {R}^{m\times n}\). From [32] we recover

when \(n = m\). Combined, this yields

Applying Corollary 2 to uniformly distributed nodes in \(\mathbb {R}\) we obtain \(Dx/\varDelta x = n-1\) and the condition number of \(P^T P\) is uniformly bounded for all \(\varDelta x\) by

The proof of this estimate does not hold true for two-dimensional interpolation. However, we conjecture that similar bounds hold, as is confirmed in Table 2. Note that the reconstructions from (6) are based on a stencil in a grid. Thus, \(Dx/\varDelta x\) is bounded for these interpolation problems.

4.3 Approximation by RBF Interpolation Augmented with Polynomials

Considering the ansatz (8) for the interpolation problem (7), (9), Bayona shows in [3], under the assumption that A and P have full rank, that the order of convergence is at least \(\mathcal {O}(h^{l+1})\), determined by the polynomial part. With similar techniques we can relax the full-rank assumption on A by assuming \(\varphi \) to be a conditionally positive definite RBF of order \(l+1\).

Theorem 1

Let f be an analytic multivariate function and \(\varphi \) a conditionally positive definite RBF of order \(l+1\). Further, we assume the existence of a \(\varPi _{l}(\mathbb {R}^d)\)-unisolvent subset of X. It follows

Proof

Let us consider \(\mathbf {x}_0\in \mathbb {R}^d\) where \(\mathbf {x}_0\) does not have to be a node. By the assumption that f is analytic, it admits a Taylor expansion in a neighborhood of \(\mathbf {x}_0\)

with \(L_k[f(\mathbf {x}_0)]\in \mathbb {R}\) the coefficients for f around \(\mathbf {x}_0\), e.g., \(L_k[f(\mathbf {x}_0)] = \frac{1}{k!}f^{(k)}(\mathbf {x}_0)\) for univariate functions. Thus, we recover

with \(\mathbf {p}_k =(p_k(\mathbf {x}_i-\mathbf {x}_0))_{i=1}^n.\)

Note that \(\mathbf {a}_k\in \mathbb {R}^n\), \(\mathbf {b}_k\in \mathbb {R}^m\) are given by

and they satisfy

Since there exists a \(\varPi _{l}(\mathbb {R}^d)\)-unisolvent subset and by the well-posedness of (45), we have

for \(i = 1,\dots ,n\) and \(j,k = 1,\dots ,m\). This allows us to write the interpolation function as

and recover

with \(r_m(\mathbf {x}) = \sum _{k>m} L_k[f(\mathbf {x}_0)] p_k(\mathbf {x})\).

Given the estimate of DeMarchi et al. [8]

we conclude

with \(\Vert r_m\Vert _{\infty } \leqslant C h^{l+1}\). \(\square \)

4.4 Numerical Examples

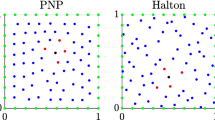

In this section, we seek to verify the results in the finite volume setup (fixed number of interpolation nodes). Let \(\varOmega = [0,1]^2\), let \(f:\varOmega \rightarrow \mathbb {R}\) be a function and let \(\delta >0\). We approximate f by dividing the domain into subdomains of size \(\delta \times \delta \) and solving in each subdomain the interpolation problem with N nodes taken from a Halton sequence [16]. Since the condition number depends on the maximal distance divided by the separation distance \(Dx/\varDelta x\), we use Halton sequences with a separation distance bigger than \(0.5\delta /\sqrt{N}\). We test the following functions

In Figs. 2 and 3 we show the error of the interpolation problem and confirm the expected order of convergence for multiquadratic interpolation of order \(k \leqslant {l}\) augmented with a polynomial of degree l. For polynomial degree \({l} = 4\) we observe that the convergence breaks down for \(\delta < 2^{-7}\). This happens at small errors \(\approx 10^{-15}\) and high condition numbers \(>10^{13}\), as shown in Table 2.

Furthermore, we verify the results from Sect. 4. Table 2 supports the conjecture that the condition number remains constant for a fixed number of interpolation nodes n and a fixed ratio \(Dx/\varDelta x\).

We also observe that the condition number stays constant for the refined grids, and it is considerably smaller for first order multiquadratics \(k=1\) than for the higher order ones.
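The node sets for such an experiment can be generated with a few lines of Python. This is a minimal sketch, assuming the usual two-dimensional Halton construction with bases 2 and 3; how the separation-distance requirement is enforced in the experiments is not specified, so only the computation of the separation distance is shown. All function names are hypothetical.

```python
import numpy as np

def radical_inverse(index, base):
    """Van der Corput radical inverse of a positive integer in the given base."""
    result, f = 0.0, 1.0 / base
    while index > 0:
        result += f * (index % base)
        index //= base
        f /= base
    return result

def halton_2d(n):
    """First n points of the 2D Halton sequence (bases 2 and 3 are an assumption)."""
    return np.array([[radical_inverse(i, 2), radical_inverse(i, 3)]
                     for i in range(1, n + 1)])

def separation_distance(points):
    """Smallest pairwise Euclidean distance within the point set."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    return d[np.triu_indices(len(points), k=1)].min()

def nodes_in_subdomain(n, origin, delta):
    # Map the unit-square Halton points into a delta x delta subdomain
    return np.asarray(origin, dtype=float) + delta * halton_2d(n)
```

A candidate node set can then be accepted or rejected by comparing `separation_distance` with the threshold \(0.5\delta /\sqrt{N}\) quoted above.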

5 RBF-ENO Method

In this section, we introduce a new RBF-ENO method on two-dimensional general grids that can be generalized to higher dimensions. The method is based on the MUSCL approach described in Sect. 2, the RBF-ENO reconstruction introduced in [20], and the evaluation technique discussed in Sect. 4.

The finite volume method relies on the high-order flux (6) based on the boundary integral of the Rusanov flux (4) which is approximated by the Gauss-Legendre quadrature [2]. For the evaluation of the high-order flux we use the RBF reconstruction (14) and to compute the cell average we use a cubature rule for triangles [10]. The ENO reconstruction (Algorithm 1) is based on the one introduced by Harten et al. [17]. Thus, we recursively add one cell to the stencil \(S_i\) and all its neighbors to a list of possible choices \(N_i\) for the next step. In each step, we add the cell in \(N_i\) which results in the stencil that has the smallest smoothness indicator IS, indicating the smoothness of the solution on a stencil. It is well-known that this strategy comes with high costs, but is also very robust. As the smoothness indicator we choose a generalization of the one-dimensional indicator introduced in [20]

for the reconstruction \(s(\mathbf{x} ) = \sum _{i=1}^n a_i\lambda _{C_i}^{\xi }\phi (\mathbf{x} -\xi ) + \sum _{j=1}^m b_j p_j(\mathbf{x} )\).
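The greedy selection described above can be sketched as follows. The sketch follows the verbal description only: the stencil starts from the central cell, the neighbors of every added cell become candidates, and in each step the candidate giving the smallest indicator is added. The callable `indicator` is a placeholder for the smoothness indicator (54), and `grow_stencil` and the one-dimensional example data are hypothetical.

```python
def grow_stencil(start, neighbors, indicator, size):
    """Greedy ENO-type stencil selection.

    neighbors : dict mapping a cell to an iterable of adjacent cells
    indicator : callable(list of cells) -> smoothness value (smaller is smoother)
    """
    stencil = [start]
    candidates = set(neighbors[start])
    while len(stencil) < size and candidates:
        # choose the candidate whose addition yields the smoothest stencil
        best = min(sorted(candidates), key=lambda c: indicator(stencil + [c]))
        stencil.append(best)
        candidates.discard(best)
        candidates.update(c for c in neighbors[best] if c not in stencil)
    return stencil

# Toy example: a chain of cells with a jump between cells 3 and 4
values = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
chain = {i: [j for j in (i - 1, i + 1) if 0 <= j < len(values)]
         for i in range(len(values))}
jump = lambda cells: max(values[c] for c in cells) - min(values[c] for c in cells)
```

In the toy example the stencil grows away from the jump on either side of it. Each step requires one indicator evaluation, and hence one reconstruction, per candidate, which is the source of the high cost mentioned above.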

It is important to choose the right degree of the polynomial for each stencil. For a polynomial of degree l we need at least \(n = \frac{(l+2)(l+1)}{2}\) cells, and thus \( l = -1.5 + \frac{1}{2}\sqrt{1 + 8n}\). To reduce the probability of \(\{\lambda _{C_{i_j}}\}_{j = 1}^n\) having no \(\varPi _{l}(\mathbb {R}^d)\)-unisolvent subset, we choose

Furthermore, we use multiquadratics with a shape parameter based on (16) and the polynomials (17). Since the order of convergence is not influenced by the order of the multiquadratics and following the observations in Sect. 4.4, we choose first order multiquadratics.
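The relation between stencil size and polynomial degree stated above can be evaluated exactly in integer arithmetic. The sketch below returns the largest degree that a stencil of n cells can support in two dimensions; it is an upper bound only and is not the more conservative choice (55) made in the method. The function names are hypothetical.

```python
from math import isqrt

def cells_for_degree(l):
    """Minimum number of cells for a 2D polynomial of degree l: (l + 1)(l + 2) / 2."""
    return (l + 1) * (l + 2) // 2

def max_degree_for_cells(n):
    """Largest l with (l + 1)(l + 2) / 2 <= n, i.e. floor(-1.5 + 0.5 * sqrt(1 + 8 n))."""
    return (isqrt(8 * n + 1) - 3) // 2
```

For instance, six cells admit at most a quadratic polynomial, and the admissible degree stays at two until the stencil reaches ten cells.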

We need to slightly adapt the evaluation method from Sect. 4 to use it for the RBF-ENO method. The coefficients \(a_i\) depend on the shape parameter. Thus, we must compare the smoothness indicator (54) with respect to the same shape parameter. By assuming approximately uniform equilateral triangles, we approximate \(\varDelta x\) as

with the radius \(r_{j,inscr}\) of the inscribed circle of the jth cell and \(|C_i|\) is the area of the ith cell. The last estimate comes from

where we assume \(C_i\) to be an equilateral triangle. Hence, we choose the shape parameter

with the polynomial basis (17).
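The geometric relation behind this estimate can be written down directly: an equilateral triangle with inradius r has area \(3\sqrt{3}\,r^2\). The sketch below uses this relation to estimate the inradius from the cell area. Taking twice the smallest inradius as the estimate of \(\varDelta x\) (the distance between the incenters of two neighboring equilateral cells) is an assumption made for illustration, and the constants in the paper's estimate may differ. The function names are hypothetical.

```python
import math

def inradius_equilateral(area):
    """Inradius of an equilateral triangle with the given area (area = 3*sqrt(3)*r^2)."""
    return math.sqrt(area / (3.0 * math.sqrt(3.0)))

def separation_estimate(areas):
    """Assumed estimate of the separation distance: twice the smallest inradius."""
    return 2.0 * min(inradius_equilateral(a) for a in areas)
```

Because the estimate depends only on the cell areas, it yields one shape parameter that is shared by all candidate stencils around a cell, which is what makes the smoothness indicators comparable.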

The advantage of RBFs over polynomials is the ability to deal with a stencil with a variable number of elements. The condition for RBFs to have a well-defined system of equations is the existence of a subset which is \(\varPi _{l}(\mathbb {R}^d)\)-unisolvent and l must be larger than the order of the RBF. Thus, we can use a bigger stencil than the dimension of \(\varPi _{l}(\mathbb {R}^d)\) to circumvent cell constellations that are ill-conditioned. To keep the stencil compact we classify each cell around the central one, depending on its distance \(d\in \mathbb {N}\), such that

and introduce \(d_{max}\) as the maximal distance. A stable configuration for second to fourth order methods is given in Table 3.

Note that (55) does not coincide with the values from Table 3. However, from numerical experiments this combination seems superior.

Summary of the RBF-ENO method

- Finite volume method with a high-order flux (6);
- The Gauss-Legendre quadrature [2] to approximate the boundary integral of the Rusanov flux (4);
- Reconstruction based on the RBF approach (14) with the polynomial basis (17);
- First order multiquadratics with shape parameter (58);
- Size n of stencil and \(d_{max}\) from Table 3 depending on the order of the method;
- Stencil selection: Algorithm 1 and smoothness indicator (54) with polynomial degree (55).

6 Numerical Results

In this section, we demonstrate the robustness of the second and third order RBF-ENO method on general grids. For the time discretization we use a third order SSPRK method [14]. The grids are generated by distmesh2d(), which is based on the Delaunay algorithm [27].
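For reference, one step of a third order SSPRK method can be sketched as below. The sketch assumes the classical three-stage scheme in Shu-Osher form; the cited reference covers a whole family of SSP schemes, so the specific variant is an assumption. The names `ssprk3_step` and `rhs` are hypothetical, with `rhs` standing for the spatial operator of the semi-discrete scheme.

```python
def ssprk3_step(u, dt, rhs):
    """One step of the three-stage, third order SSP Runge-Kutta scheme (Shu-Osher form)."""
    u1 = u + dt * rhs(u)                          # forward Euler stage
    u2 = 0.75 * u + 0.25 * (u1 + dt * rhs(u1))    # convex combination with a second Euler stage
    return u / 3.0 + 2.0 / 3.0 * (u2 + dt * rhs(u2))
```

Each stage is a convex combination of forward Euler steps, so any stability property that holds for forward Euler under a time step restriction carries over to the full step.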

6.1 Linear Advection Equation

We consider the linear advection equation in two dimensions

with wave speed \(a = 1\), \(b = 0\) and periodic boundary conditions [26]. This results in a right moving wave given by the initial condition

Figure 4 shows the error at \(T = 0.1\). We observe a drop of the order of convergence after a certain level of refinement, which is a known phenomenon [19, 31]. This arises from constantly switching the stencil. For a very smooth function we recover the right order of convergence by multiplying the smoothness indicator with a penalty term \(D^3\) which depends on the distance to the central cell

with the center \(x_{c,i}\) of cell \(C_i\). This gives preference to the central stencil.

6.2 Burgers’ Equation

Next, we consider Burgers' equation

on the domain \(\varOmega = [0,1]^2\) with the initial conditions

Burgers' equation illustrates the behavior of the scheme with a non-linear flux and its ability to deal with discontinuities. Furthermore, the results can be compared with the exact solution [15]. As in the one-dimensional case, the solution consists of shocks and rarefaction waves. To avoid boundary effects we increase the computational domain to \(\varOmega = [-1,2]^2\) and keep the initial conditions for the extended square, see Fig. 5. The solutions at time \(T=0.25\) for the 3rd and 4th order methods are as expected, see Fig. 6. There are some minor oscillations, but they remain small.

6.3 KPP Rotating Wave

We consider the two-dimensional KPP rotating wave problem

in the domain \(\varOmega = [-2,2]^2\) with periodic boundary conditions and the initial conditions

This is a complex non-convex scalar conservation law [23]. The KPP problem was designed to test various schemes for entropy violating solutions. At time \(T=1\) the solution forms a characteristic spiral, which is well resolved by the second and third order methods, as shown in Fig. 7.

6.4 Euler Equations

Let us consider the two-dimensional Euler equations

with the density \(\rho \), the mass flux \(m_1\) and \(m_2\) in x- and y-direction, the total energy E, and the pressure

The mass flux is given by \(m = \rho u\). Further, we choose \(\gamma = 1.4\) which reflects a diatomic gas such as air.
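The quantities needed to apply a Rusanov-type flux to the Euler equations can be sketched as follows. The sketch assumes the standard ideal-gas closure \(p = (\gamma -1)\left( E - \frac{m_1^2+m_2^2}{2\rho }\right) \) and the standard form of the two-dimensional Euler fluxes in the conserved variables \((\rho , m_1, m_2, E)\); the function names are hypothetical.

```python
import numpy as np

GAMMA = 1.4  # ratio of specific heats for a diatomic gas

def pressure(rho, m1, m2, E, gamma=GAMMA):
    # Ideal-gas closure (assumed): total energy minus kinetic energy, times (gamma - 1)
    return (gamma - 1.0) * (E - 0.5 * (m1 ** 2 + m2 ** 2) / rho)

def euler_flux(U, gamma=GAMMA):
    """Flux components f_1(U), f_2(U) stacked into an array of shape (2, 4)."""
    rho, m1, m2, E = U
    p = pressure(rho, m1, m2, E, gamma)
    u1, u2 = m1 / rho, m2 / rho
    f1 = np.array([m1, m1 * u1 + p, m2 * u1, (E + p) * u1])
    f2 = np.array([m2, m1 * u2, m2 * u2 + p, (E + p) * u2])
    return np.stack([f1, f2])

def max_wave_speed(U, normal, gamma=GAMMA):
    """Largest absolute eigenvalue of the normal flux Jacobian: |u . n| + c."""
    rho, m1, m2, E = U
    c = np.sqrt(gamma * pressure(rho, m1, m2, E, gamma) / rho)
    return abs((m1 * normal[0] + m2 * normal[1]) / rho) + c
```

For a gas at rest with unit density and pressure, the maximum wave speed reduces to the speed of sound \(\sqrt{\gamma }\).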

6.4.1 Isentropic Vortex

The isentropic vortex problem describes the evolution of an inviscid isentropic vortex in a free stream on the domain \(\varOmega = [-5,5]^2\). Proposed by Yee et al. [37], it is one of the few problems of the Euler equations with an exact solution. The initial conditions are

with the initial vortex strength \(\beta \), the initial vortex center \((x_c,y_c)\) and periodic boundary conditions. The pressure is initialized by \(p = \rho ^\gamma \) and \(\alpha \) prescribes the passive advection direction. The exact solution is the initial condition propagating with speed M in direction \((\cos (\alpha ),\sin (\alpha ))\). The parameters are chosen as \(M = 0.5\), \(\alpha = 0\), \(\beta = 5\) and \((x_c,y_c) = (0,0)\). We analyze the order of convergence at time \(T = 1\). In Fig. 8 we observe the same behavior as for the linear advection equation. Again, we overcome this stability issue by introducing a penalty term \(D^3\) which depends on the distance of the cell to its central one (61), and recover the optimal order of convergence.

6.4.2 Riemann Problem

The initial values for Riemann problems in two dimensions are constant in each quadrant

with the physical domain \(\varOmega = [0,1]^2\), which is enlarged to \(\varOmega = [-1,2]^2\) to reduce boundary effects. The values are chosen in such a way that only a single elementary wave appears at each interface. This results in 19 genuinely different configurations for a polytropic gas [25]. We test two of them, see Table 4.

We solve the Riemann problems until time \(T = 0.25\) on the grid shown in Fig. 9. In Fig. 10 we see that the results of the 4th configuration are well resolved with the 2nd and 3rd order methods, while keeping the oscillations small. Furthermore, Fig. 11 illustrates the convergence in h for the RBF-ENO method of order 3.

For the Riemann problem 12 at time \(T = 0.25\) the results are of a similar quality, see Figs. 12 and 13.

6.4.3 Shock Vortex Interaction

The shock vortex interaction problem was introduced to test high order methods [29]. It describes the interaction of a right-moving vortex with a left-moving shock in the domain \(\varOmega = [0,1]^2\). The initial condition is given by the shock discontinuity

with the left state superposed by the perturbation

with the temperature \(\theta = p/ \rho \), the physical entropy \(s = \log p-\gamma \log \rho \) and the distance \(r^2 = ((x-x_c)^2+(y-y_c)^2)/r_c^2\). The left state is given by \((\rho _L,u_{1,L},u_{2,L},E_L) = (1,\sqrt{\gamma },0,1)\) and the right state by

The parameters of the vortex are chosen as \(\epsilon = 0.3\), \(r_c = 0.05\), \(\beta = 0.204\) with the initial center of the vortex \((x_c,y_c) = (0.25,0.5)\). Figure 14 shows the result of the second and third order RBF-ENO method at the final time \(T = 0.35\) for \(N = 14{,}616\) cells. The higher resolution of the third order method is clear. In Fig. 15 we see the convergence of the scheme for an increasing number of cells. We observe minor oscillations for \(N = 58{,}646\), but they remain stable.

6.4.4 Double Mach Reflection

The double Mach reflection problem is a standard benchmark for Euler codes that tests their robustness in the presence of a strong shock. It was introduced by Woodward et al. [36] and consists of a Mach 10 shock propagating at an angle of \(30^\circ \) (\(\alpha = 60^\circ \)) into the ramp, see Fig. 16. The domain \(\varOmega = [0,4]\times [0,1]\) contains a ramp starting at \(x_s = 1/6\). On the left side and on the ground in front of the ramp we impose inflow boundary conditions with the post-shock values. On the ramp we use slip-wall conditions, on the top we apply the exact time-dependent shock location, and on the right we impose outflow boundary conditions with the pre-shock values. The solution is simulated until \(T = 0.2\) with the initial condition

To solve the double Mach reflection problem we must choose the multiquadratics of order l for a method of order l to get a stable solution, shown in Fig. 17. This suggests that the proposed stencil selection algorithm in [20] is more stable than just using a first order RBF in the same algorithm. To highlight the ability to deal with fully unstructured grids, we present a solution with around a quarter of the cells refined in the lower fifth of the domain, Fig. 18. The solution is based on a grid of the form of Fig. 19 with approximately six times more cells at each face. Note that the cells in the lower part have around the same size as the ones in the example from Fig. 17.

7 Conclusions

In this work, we propose a new RBF-ENO method for multi-dimensional problems on general grids. We introduce a stable evaluation method for RBFs augmented with polynomials, and a stencil selection algorithm based on [20]. We show that the algorithm preserves the expected accuracy and demonstrate its robustness for challenging test cases, including two classic Riemann problems, the shock-vortex interaction and the double Mach reflection problem.

However, it is well known that the stencil selection strategy comes at a high cost. As shown for Burgers' equation, the method also works in the 4th order setup, but due to the high number of cells in the stencil it is extremely costly.

In the future, we will combine the stable and flexible RBF-ENO method with the standard (polynomial) WENO method on structured grids, to offset some of the computational cost of the RBF-ENO scheme.

References

Aboiyar, T., Georgoulis, E.H., Iske, A.: High order weno finite volume schemes using polyharmonic spline reconstruction. In: Proceedings of the International Conference on Numerical Analysis and Approximation theory NAAT2006. Department of Mathematics. University of Leicester, Cluj-Napoca (Romania) (2006)

Abramowitz, M., Stegun, I.A.: Handbook of mathematical functions: with formulas, graphs, and mathematical tables, vol. 55. Courier Corporation. http://people.math.sfu.ca/~cbm/aands/intro.htm (1965). Accessed 06 March 2020

Bayona, V.: An insight into RBF-FD approximations augmented with polynomials. Comput. Math. Appl. 77(9), 2337–2353 (2019)

Bayona, V., Flyer, N., Fornberg, B.: On the role of polynomials in rbf-fd approximations: III. Behavior near domain boundaries. J. Comput. Phys. 380, 378–399 (2019). https://doi.org/10.1016/j.jcp.2018.12.013

Bayona, V., Flyer, N., Fornberg, B., Barnett, G.A.: On the role of polynomials in RBF-FD approximations: II. Numerical solution of elliptic PDEs. J. Comput. Phys. 332, 257–273 (2017)

Bigoni, C., Hesthaven, J.S.: Adaptive WENO methods based on radial basis function reconstruction. J. Sci. Comput. 72(3), 986–1020 (2017). https://doi.org/10.1007/s10915-017-0383-1

Cockburn, B., Shu, C.W.: TVB Runge–Kutta local projection discontinuous Galerkin finite element method for conservation laws. II. General framework. Math. Comput. 52(186), 411–435 (1989)

De Marchi, S., Schaback, R.: Stability of kernel-based interpolation. Adv. Comput. Math. 32(2), 155–161 (2010)

Driscoll, T.A., Fornberg, B.: Interpolation in the limit of increasingly flat radial basis functions. Comput. Math. Appl. 43(3), 413–422 (2002). https://doi.org/10.1016/S0898-1221(01)00295-4

Dunavant, D.: High degree efficient symmetrical Gaussian quadrature rules for the triangle. Int. J. Numer. Methods Eng. 21(6), 1129–1148 (1985)

Flyer, N., Barnett, G.A., Wicker, L.J.: Enhancing finite differences with radial basis functions: experiments on the Navier–Stokes equations. J. Comput. Phys. 316, 39–62 (2016). https://doi.org/10.1016/j.jcp.2016.02.078

Flyer, N., Fornberg, B., Bayona, V., Barnett, G.A.: On the role of polynomials in RBF-FD approximations: I. Interpolation and accuracy. J. Comput. Phys. 321, 21–38 (2016)

Gautschi, W.: How (un)stable are Vandermonde systems? Asymptot. Comput. Anal. 124, 193–210 (1990)

Gottlieb, S., Ketcheson, D.I., Shu, C.W.: High order strong stability preserving time discretizations. J. Sci. Comput. 38(3), 251–289 (2009). https://doi.org/10.1007/s10915-008-9239-z

Guermond, J.L., Pasquetti, R., Popov, B.: Entropy viscosity method for nonlinear conservation laws. J. Comput. Phys. 230(11), 4248–4267 (2011). https://doi.org/10.1016/j.jcp.2010.11.043

Halton, J.H.: On the efficiency of certain quasi-random sequences of points in evaluating multi-dimensional integrals. Numer. Math. 2(1), 84–90 (1960). https://doi.org/10.1007/BF01386213

Harten, A., Engquist, B., Osher, S., Chakravarthy, S.R.: Uniformly high order accurate essentially non-oscillatory schemes, III. J. Comput. Phys. 71(2), 231–303 (1987). https://doi.org/10.1016/0021-9991(87)90031-3

Harten, A., Zwas, G.: Self-adjusting hybrid schemes for shock computations. J. Comput. Phys. 9(3), 568–583 (1972)

Hesthaven, J.S.: Numerical methods for conservation laws: from analysis to algorithms. Soc. Ind. Appl. Math. (2017). https://doi.org/10.1137/1.9781611975109

Hesthaven, J.S., Mönkeberg, F.: Entropy stable essentially nonoscillatory methods based on RBF reconstruction. ESAIM: M2AN 53(3), 925–958 (2019). https://doi.org/10.1051/m2an/2019011

Hesthaven, J.S., Mönkeberg, F., Zaninelli, S.: RBF based CWENO method. https://infoscience.epfl.ch/record/260414 (2018). Submitted

Hesthaven, J.S., Warburton, T.: Nodal Discontinuous Galerkin Methods: Algorithms, Analysis, and Applications. Springer, Berlin (2007)

Kurganov, A., Petrova, G., Popov, B.: Adaptive semidiscrete central-upwind schemes for nonconvex hyperbolic conservation laws. SIAM J. Sci. Comput. 29(6), 2381–2401 (2007)

Larsson, E., Fornberg, B.: Theoretical and computational aspects of multivariate interpolation with increasingly flat radial basis functions. Comput. Math. Appl. 49(1), 103–130 (2005). https://doi.org/10.1016/j.camwa.2005.01.010

Lax, P.D., Liu, X.D.: Solution of two-dimensional Riemann problems of gas dynamics by positive schemes. SIAM J. Sci. Comput. 19(2), 319–340 (1998)

LeVeque, R.J.: Numerical Methods for Conservation Laws. Springer, Berlin (1992)

Persson, P.O., Strang, G.: A simple mesh generator in MATLAB. SIAM Rev. 46(2), 329–345 (2004)

Schaback, R.: Error estimates and condition numbers for radial basis function interpolation. Adv. Comput. Math. 3(3), 251–264 (1995)

Shu, C.W.: High Order ENO and WENO Schemes for Computational Fluid Dynamics, pp. 439–582. Springer, Berlin (1999)

Shu, C.W., Osher, S.: Efficient implementation of essentially non-oscillatory shock-capturing schemes. J. Comput. Phys. 77(2), 439–471 (1988)

Strang, G.: Accurate partial difference methods I: linear Cauchy problems. Arch. Ration. Mech. Anal. 12(1), 392–402 (1963)

Tyrtyshnikov, E.E.: How bad are Hankel matrices? Numer. Math. 67(2), 261–269 (1994)

Van Leer, B.: Towards the ultimate conservative difference scheme. V. A second-order sequel to Godunov’s method. J. Comput. Phys. 32(1), 101–136 (1979)

Von Neumann, J., Richtmyer, R.D.: A method for the numerical calculation of hydrodynamic shocks. J. Appl. Phys. 21(3), 232–237 (1950)

Wendland, H.: Scattered Data Approximation. Cambridge University Press, Cambridge (2004). https://doi.org/10.1017/CBO9780511617539. https://www.cambridge.org/core/books/scattered-data-approximation/980EEC9DBC4CAA711D089187818135E3

Woodward, P., Colella, P.: The numerical simulation of two-dimensional fluid flow with strong shocks. J. Comput. Phys. 54(1), 115–173 (1984)

Yee, H.C., Sandham, N.D., Djomehri, M.J.: Low-dissipative high-order shock-capturing methods using characteristic-based filters. J. Comput. Phys. 150(1), 199–238 (1999)

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work has been supported by SNSF (\(200021\_165519\)).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hesthaven, J.S., Mönkeberg, F. Two-Dimensional RBF-ENO Method on Unstructured Grids. J Sci Comput 82, 76 (2020). https://doi.org/10.1007/s10915-020-01176-2

DOI: https://doi.org/10.1007/s10915-020-01176-2