Abstract

We have implemented a self-consistent field solver for Hartree–Fock calculations, by making use of Multiwavelets and Multiresolution Analysis. We show how such a solver is inherently a preconditioned steepest descent method and therefore a good starting point for rapid convergence. A distinctive feature of our implementation is the absence of any reference to the kinetic energy operator. This is desirable when Multiwavelets are employed, because differential operators such as the Laplacian in the kinetic energy are challenging to represent correctly. The theoretical framework is described in detail and the implemented algorithm is both presented in the paper and made available as a Python notebook. Two simple examples are presented, highlighting the main features of our implementation: arbitrary predefined precision, rapid and robust convergence, absence of the kinetic energy operator.

1 Introduction

Atom-centered Gaussians have traditionally been the most common and widespread choice of basis set for molecules [1]. Several strong arguments favor this choice: the compactness of the representation, which is defined by a handful of coefficients; the ability to represent atomic orbitals well (although Slater functions are in theory superior, owing to the cusp at the nuclear position and the correct asymptotic behavior); and the simplification in the computation of molecular integrals, which are often obtained analytically (this is the weak point of Slater orbitals, which require expensive numerical evaluations). Their main disadvantage is the non-orthogonality of the basis, which can become a severe problem, especially for large bases: it leads to a computational bottleneck when orthonormalization is required or, worse, to numerical instabilities due to near linear dependency in the basis [2].

On the opposite side of the spectrum, plane waves (PWs) are ideally suited for periodic systems and are orthonormal by construction. However, a very large number of them is needed to achieve good precision, especially if one is interested in high resolution in the nuclear-core regions [3]. A popular way to circumvent the problem is to use pseudopotentials [4] in the core region, thereby reducing the number of electrons to be treated and at the same time removing the need for very high-frequency components. Lately, the use of projector augmented wave (PAW) [5] and linearized augmented plane wave (LAPW) [6] techniques has made this issue less critical for PW calculations. Another challenge for PWs is posed by non-periodic systems, which can only be dealt with by using a supercell approach [7].

Quantum chemical modeling is constantly expanding its horizons: cutting-edge research is focused on achieving good accuracy (either in energetics or molecular properties) on large non-periodic systems such as large biomolecules or molecular nanosystems. This progression is constantly exposing the weaknesses of the traditional approaches, thus rendering unconventional methods, which are free from the above-mentioned limitations, ever more attractive. One such choice is numerical, real-space, grid-based methods, which are gaining popularity in quantum chemistry as a promising strategy to deal with the Self-Consistent Field (SCF) problem of Hartree–Fock (HF) and density functional theory (DFT).

Among real-space approaches, the following strategies have been commonly employed: Finite Differences [8], Finite Elements [9], Wavelets [10, 11] and Multiwavelets (MWs) [12]. MWs are particularly well suited for all-electron calculations [12, 13]. The basis functions are localized (like Gaussian-type orbitals) yet orthonormal (like plane waves). One crucial property of MWs is the disjoint support (zero overlap) between basis functions in adjacent nodes [14], paving the way for adaptive refinement of the mesh, tailored to each given function. This is essential for an all-electron description, where varying resolution is a prerequisite for efficiency. The price to pay, to provide a representation with a given number of vanishing moments, is a basis consisting of several wavelet functions per node. The most common choice of basis functions in the MW framework is a generic orthonormal polynomial basis of order k, providing a second possibility to increase the resolution of the representation alongside the adaptive grid refinement [15]. Currently, the main drawbacks of this approach are a large memory footprint (a numerical representation of a molecular orbital requires many more coefficients than an atomic-orbital expansion) and a significant computational overhead [16, 17]. On the other hand, a localized orthonormal basis is an ideal match for modern massively parallel architectures [18], and we are confident that it is only a matter of time before real-space grid methods in general, and MWs in particular, become competitive with or even superior to traditional ones.

To achieve high precision and keep the memory footprint at a manageable level, an adaptive strategy which refines grids only where needed is necessary [19]. This choice has a profound impact on the minimization strategies that can be adopted in order to solve SCF problems such as the Roothaan–Hall equations of the Hartree–Fock (HF) method. In other words, strategies which rely upon having a fixed basis, such as the most common atomic-orbital-based methods [20], are excluded. On the other hand, only the occupied molecular orbitals are needed both in HF and DFT to describe the wavefunction/electronic density. Methods providing a direct minimization of the orbitals without requiring a fixed basis representation must therefore be considered. Additionally, using MWs on an adaptive grid generates representations with discontinuities at the nodal surfaces, which poses a challenge when differential operators are considered. As will be shown in the paper, if the Hartree–Fock equations are reformulated as coupled integral equations, it becomes possible to minimize the occupied orbitals without ever resorting to differential operators.

2 Functions and operators in the Multiwavelet framework

When defining the MW framework we think in terms of scaling spaces \(V^n\) and wavelet spaces \(W^n\). The scaling space \(V^0\) in 3D real-space is spanned by a set of orthogonal polynomials on the unit cube, and the spaces \(V^n\) for \(n>0\) are obtained recursively by splitting the intervals of \(V^{n-1}\) into \(2^3\) sub-cubes, then translating and dilating the original polynomial basis onto those intervals. This results in the ladder of scaling spaces

\[ V^0 \subset V^1 \subset \cdots \subset V^n \subset \cdots \subset L^2 \tag{1} \]

which are approaching completeness in \(L^2\). The wavelet spaces \(W^n\) are defined as the orthogonal complement of the scaling space \(V^n\) in \(V^{n+1}\)

\[ V^n \oplus W^n = V^{n+1} \tag{2} \]

which results in the following relation

\[ V^N = V^0 \oplus W^0 \oplus W^1 \oplus \cdots \oplus W^{N-1} \tag{3} \]

2.1 Functions

Functions can be approximated by a projection \(P^n\) onto the scaling space \(V^{n}\), which we denote as

where the latter sum runs over all the \(2^{{3n}}\) cubes that make up a uniform grid at length scale \(2^{{ - n}}\). Obviously, larger n means higher resolution and thus a better approximation. Importantly, these cubes completely fill the space of the unit cube, without overlapping, which means that all of them are necessary in order to get a complete description of f.

Similarly, a function projection onto the wavelet space \(W^n\) is denoted as

Here it should be noted that such a wavelet projection is not an approximation to the function, but should be regarded as a difference between two consecutive approximations. By making use of the relation in Eq. (3), we can arrive at two equivalent representations for the approximated function:

where the former can be thought of as a high-resolution representation at a uniform length scale N, while the latter is a multi-resolution representation that spans several different length scales \(n=\lbrace 0,\ldots ,N-1\rbrace\). The two representations are completely equivalent both in terms of precision and complexity (number of expansion coefficients), but the latter has one significant advantage: since it is built up as a series of corrections to the coarse approximation at scale zero, one can choose to keep only the terms that add a significant contribution [12, 21]

where \(\epsilon\) is some global precision threshold.
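The effect of this thresholding can be illustrated with a minimal one-dimensional sketch. It assumes the simplest possible basis (piecewise constants, i.e. Haar wavelets), treats function samples on a uniform dyadic grid as the finest-scale scaling coefficients, and applies a plain absolute-value test to every wavelet coefficient. The function names and the threshold rule are illustrative assumptions and do not reproduce the MRCPP implementation.

```python
import numpy as np

def haar_decompose(samples):
    """Split finest-scale scaling coefficients into one coarse coefficient
    plus wavelet (difference) coefficients at every scale, coarsest first."""
    s = np.asarray(samples, dtype=float)
    details = []
    while s.size > 1:
        even, odd = s[0::2], s[1::2]
        details.append((even - odd) / np.sqrt(2.0))  # wavelet part
        s = (even + odd) / np.sqrt(2.0)              # scaling part, one scale coarser
    return s, details[::-1]

def haar_reconstruct(coarse, details):
    """Inverse of haar_decompose: add the corrections back scale by scale."""
    s = np.asarray(coarse, dtype=float)
    for d in details:
        finer = np.empty(2 * s.size)
        finer[0::2] = (s + d) / np.sqrt(2.0)
        finer[1::2] = (s - d) / np.sqrt(2.0)
        s = finer
    return s

# A narrow Gaussian sampled on 2^10 cells of the unit interval
x = (np.arange(1024) + 0.5) / 1024
f = np.exp(-200.0 * (x - 0.5) ** 2)

eps = 1e-4
coarse, details = haar_decompose(f)
# Keep only the wavelet coefficients that contribute more than eps
truncated = [np.where(np.abs(d) > eps, d, 0.0) for d in details]
n_kept = sum(int(np.count_nonzero(d)) for d in truncated)

f_trunc = haar_reconstruct(coarse, truncated)
err = np.linalg.norm(f - f_trunc)
print(f"kept {n_kept} of {f.size - 1} wavelet coefficients, error norm {err:.2e}")
```

Because the transform is orthonormal, the error norm equals the norm of the discarded coefficients, so the precision is controlled directly by the threshold while the coefficients far from the peak are dropped.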

2.2 Convolution operators

As will be shown in the following sections, for SCF algorithms within a preconditioned steepest descent framework, the necessary operators are the Poisson operator for the Coulomb and exact exchange contributions and the bound-state Helmholtz operator for the SCF iterations. Their Green’s kernel can be written as

\[ G^{\mu}(\mathbf{r}-\mathbf{r}') = \frac{e^{-\mu |\mathbf{r}-\mathbf{r}'|}}{4\pi |\mathbf{r}-\mathbf{r}'|} \tag{8} \]

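where \(\mu > 0\) yields the bound-state Helmholtz kernel, whereas \(\mu = 0\) is the Poisson kernel. Their application is achieved by convolution of a function with the corresponding Green’s kernel

\[ [T f](\mathbf{r}) = \int G^{\mu}(\mathbf{r}-\mathbf{r}')\, f(\mathbf{r}')\, \mathrm{d}\mathbf{r}' \tag{9} \]

once an approximate separated form in terms of Gaussian functions has been computed [21,22,23]:

\[ G^{\mu}(\mathbf{r}-\mathbf{r}') \approx \sum _{\kappa =1}^{M} \alpha _{\kappa }\, e^{-\beta _{\kappa } |\mathbf{r}-\mathbf{r}'|^2} \tag{10} \]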

The non-standard [22, 24] form of the operator T is built as a telescopic expansion of the finest scale projection \(T^N = P^N T P^N\)

\[ T^N = T^0 + \sum _{n=0}^{N-1} \left( A^n + B^n + C^n \right) \tag{11} \]

where \(A^n = Q^n T Q^n\), \(B^n = Q^n T P^n\), \(C^n = P^n T Q^n\). Thanks to the vanishing moments of the MW basis, the matrix representations of \(A^n\), \(B^n\) and \(C^n\) (which contain at least one wavelet projector) are diagonally dominant for both the Poisson and bound-state Helmholtz kernels. Therefore, all terms beyond a predetermined bandwidth can be omitted in the operator application, by a screening similar to the one for functions in Eq. (7). In particular, we have previously shown that the application of the Poisson operator for the calculation of the electrostatic potential scales linearly with the size of the system [25].
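One well-known way to obtain a separated Gaussian form of the bound-state Helmholtz kernel is to discretize an integral representation of \(e^{-\mu r}/r\) with the trapezoidal rule. The sketch below follows that route for the radial kernel, leaving out the constant prefactor. The quadrature range and step are ad hoc choices made for this illustration, and the function name is hypothetical; the construction actually used in the library may differ.

```python
import numpy as np

def helmholtz_gaussian_expansion(mu, s_min=-4.0, s_max=8.0, step=0.1):
    """Weights alpha_k and exponents beta_k such that
        exp(-mu*r)/r  ~  sum_k alpha_k * exp(-beta_k * r**2),
    obtained from the trapezoidal rule applied to
        exp(-mu*r)/r = 2/sqrt(pi) * Int exp(-r^2 e^{2s} - mu^2 e^{-2s}/4 + s) ds.
    """
    s = np.arange(s_min, s_max + 0.5 * step, step)
    beta = np.exp(2.0 * s)
    alpha = 2.0 * step / np.sqrt(np.pi) * np.exp(-0.25 * mu**2 * np.exp(-2.0 * s) + s)
    return alpha, beta

mu = 1.0
alpha, beta = helmholtz_gaussian_expansion(mu)

# Compare the expansion with the exact kernel on a range of distances
r = np.linspace(0.1, 3.0, 50)
approx = (alpha[None, :] * np.exp(-np.outer(r**2, beta))).sum(axis=1)
exact = np.exp(-mu * r) / r
max_rel_err = np.max(np.abs(approx - exact) / exact)
print(f"{alpha.size} Gaussians, max relative error {max_rel_err:.1e}")
```

The fixed quadrature range limits the interval of distances on which the expansion is accurate; resolving shorter distances requires extending `s_max`, and the Poisson case \(\mu = 0\) additionally requires a lower cutoff, since the integrand then no longer decays for negative `s`.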

2.3 Derivative operators

The discontinuities in the MW basis lead to a number of problems when considering derivative operators. In contrast to the well-behaved smoothing properties of the integral operators discussed above, the application of the derivative operator will amplify the numerical noise arising from the discontinuity between adjacent intervals in the representation. In particular, since the standard construction [14] of the operator allows only for a first derivative to be defined, higher derivatives have to be computed by repeated application of the first derivative, which will effectively propagate, and further amplify, the numerical noise at every step. This prohibits the use of MWs in certain situations, such as iterative time-propagation methods involving a derivative in the propagation operator.

A new class of derivative operators was proposed recently by Anderson et al. [26], addressing some of the issues with the original construction. The idea behind the new construction is that MW representations are usually discontinuous approximations of functions that are supposed to be smooth and continuous. In these situations, a workaround can be achieved by moving to an auxiliary continuous basis, computing the derivative, and then moving back to the original (discontinuous) MW basis. Anderson et al. proposed either B-splines or bandlimited exponentials for this auxiliary basis.

It should be noted that the new operators assume that the input function is n times differentiable, even if its MW representation is clearly not. They are thus only appropriate if the function does not in fact contain any analytic discontinuities or cusps. It is well known that the non-relativistic electronic wavefunction in any point-nucleus model does contain cusps at the nuclear positions, but there are workarounds for this problem: either removing the cusps from the wavefunction analytically [27], or introducing effective core potentials.

In the following we will avoid this issue altogether, by formulating the HF equations without any reference to the kinetic energy (or derivative) operator.

3 The Hartree–Fock equations

The HF equations are indisputably the cornerstone of quantum chemistry. We will here briefly review the formalism as presented by Jensen [28]. It constitutes a concise yet formally correct derivation, which also has the advantage of being completely independent of the choice of basis.

We start by considering the energy expression of a Slater determinant:

\[ E = \langle \varPsi | {\hat{H}} | \varPsi \rangle \tag{12} \]

where \(\varPsi\) is a single determinant and \({\hat{H}}\) is the electronic Hamiltonian operator.

In terms of the spinorbitals \(\left\{ \varphi _i, i=1 \ldots N \right\}\) defining the Slater determinant, the energy expression can be written as follows:

\[ E = \sum _{i} h_i + \frac{1}{2} \sum _{ij} \left( J_{ij} - K_{ij} \right) + U_{N} \tag{13} \]

where \(h_i\) represents the one-electron part of the energy, \(J_{ij}\) is the Coulomb interaction, \(K_{ij}\) is the exchange interaction and \(U_{N}\) constitutes the inter-nuclear repulsion. They are obtained respectively as:

In the above equations, lowercase indices run over the electrons, uppercase ones run over the nuclei, Z is a nuclear charge, R is the inter-nuclear distance, r is the inter-electronic distance, \({\hat{T}}= -\nabla ^2/2\) is the kinetic energy operator, and \({\hat{V}}_N = -\sum _{I} Z_I /|{\varvec{R}}_I-{\varvec{r}}|\) is the nuclear potential. Atomic units (\(\hbar =1\), \(q_{e} = - 1\), \(m_{e} = 1\)) are used throughout. It is useful to define the effective one-electron Coulomb and Exchange operators as:

In accordance with the variational principle, the minimizer is obtained by writing the Lagrangian equations with the constraint of orthonormal occupied orbitals, and differentiating with respect to orbital variations:

where the Fock operator \({\hat{F}}\) is defined as:

\[ {\hat{F}} = {\hat{T}} + {\hat{V}}_N + {\hat{J}} - {\hat{K}} \tag{21} \]

The functional derivative of the Lagrangian with respect to an arbitrary orbital variation \(\delta \varphi _i\) and of its complex conjugate \(\delta \varphi _i^*\) can then be written as:

The Lagrange multipliers constitute a Hermitian matrix [28], which leads to the coupled HF equations:

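The Fock matrix \(F\) can be formally obtained by projecting the above equations along the directions defined by the set of occupied orbitals:

\[ F = \langle \varPhi | {\hat{F}} | \varPhi \rangle , \qquad F_{ij} = \langle \varphi _i | {\hat{F}} | \varphi _j \rangle \tag{25} \]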

In the equation above and in the rest of the paper, we make use of a shorthand notation, denoting by \(\varPhi = (\varphi _1, \ldots , \varphi _N)\) the row-vector of all occupied orbitals.

The Fock operator depends on the orbitals implicitly through \({\hat{J}}\) and \({\hat{K}}\). The equations must therefore be solved iteratively. The straightforward iteration would in practice correspond to a steepest descent minimization:

where \(\alpha\) defines the length of the step and the negative sign emphasizes that the step is in the opposite direction of the gradient. We notice also that the orbital set \(\left\{ {\tilde{\varphi }}_i^{n+1} \ ,i=1\ldots N\right\}\) is not orthonormal, because the chosen parametrization is linear and not exponential [1, 29]. Throughout the paper we will use \({\tilde{\varphi }}\) to refer to non-(ortho)normalized orbitals.

The direct minimization described above is at best lengthy and often leads to oscillations or, even worse, to divergent behavior [1]. The usual strategy to solve the HF equations consists in projecting the equations onto a given basis, and solving the so-called Roothaan–Hall equations thereby obtained with an acceleration method known as Direct Inversion of the Iterative Subspace (DIIS) [30]. The DIIS is however centered on minimizing the occupied-virtual blocks of the Fock matrix in the finite basis representation. As discussed in the previous section, this is not possible when a MW approach is employed, for two reasons: the basis set is adaptively refined for each function and should therefore be regarded as infinite, and the use of differential operators within a MW basis is problematic.

An alternative is constituted by the integral representation of the HF equations as shown in the next section.

4 Helmholtz kernel and integral formulation

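The use of an integral equation formalism to solve the Schrödinger equation was first proposed by Kalos [31]. Let us here consider the derivation for a one-electron system, for which we have the Schrödinger equation:

\[ \left( {\hat{T}} + {\hat{V}} \right) \varphi = \epsilon \varphi \tag{27} \]

Such an equation can be rewritten in an integral form by making use of a Green’s function formalism. The starting point is the Helmholtz equation

\[ \left( -\nabla ^2 + \mu ^2 \right) g(\mathbf{r}) = f(\mathbf{r}) \tag{28} \]

which admits a solution in terms of the following Green’s function

\[ g(\mathbf{r}) = \int G^{\mu }(\mathbf{r}-\mathbf{r}')\, f(\mathbf{r}')\, \mathrm{d}\mathbf{r}' , \qquad G^{\mu }(\mathbf{r}-\mathbf{r}') = \frac{e^{-\mu |\mathbf{r}-\mathbf{r}'|}}{4\pi |\mathbf{r}-\mathbf{r}'|} \tag{29} \]

By making use of this Green’s function kernel, and choosing \(\mu ^2 = -2\epsilon\), the Schrödinger equation can be written in an integral form:

\[ \varphi = -2 {\hat{G}}^{\mu } \left[ {\hat{V}} \varphi \right] \tag{30} \]

The above integral formulation does not require the explicit use of the kinetic energy operator, which has been formally inverted as follows:

\[ \left( {\hat{T}} - \epsilon \right) ^{-1} = 2 {\hat{G}}^{\mu } \tag{31} \]

The integral formulation provides also a natural starting point for efficient iterative algorithms. At each iteration n, the successive orbital \(\varphi ^{n+1}\) is obtained as:

\[ {\tilde{\varphi }}^{n+1} = -2 {\hat{G}}^{\mu ^n} \left[ {\hat{V}} \varphi ^{n} \right] \tag{32} \]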

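For a practical realization of the algorithm, it is also necessary to be able to compute the energy expectation value \(\epsilon = \langle {\varphi }\vert {\hat{H}}\vert {\varphi }\rangle\) without resorting to the explicit evaluation of the kinetic energy. Consider the expectation value at iteration \(n+1\): if \({\tilde{\varphi }}^{n+1}\) is obtained through Eq. (32), then \(({\hat{T}} - \epsilon ^{n}) {\tilde{\varphi }}^{n+1} = -{\hat{V}} \varphi ^{n}\), and it is easy to show that \(\epsilon ^{n+1}\) can be obtained as a direct update

\[ \epsilon ^{n+1} = \frac{\langle {\tilde{\varphi }}^{n+1} | {\hat{T}} + {\hat{V}} | {\tilde{\varphi }}^{n+1} \rangle }{\langle {\tilde{\varphi }}^{n+1} | {\tilde{\varphi }}^{n+1} \rangle } = \epsilon ^{n} + \frac{\langle {\tilde{\varphi }}^{n+1} | {\hat{V}} | {\tilde{\varphi }}^{n+1} - \varphi ^{n} \rangle }{\langle {\tilde{\varphi }}^{n+1} | {\tilde{\varphi }}^{n+1} \rangle } \tag{33} \]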

This shows that the expectation value of the total energy can be obtained by updating \(\epsilon ^{n}\) with the matrix element of the potential operator involving the new orbital \({\tilde{\varphi }}^{n+1}\) and the previous one \(\varphi ^{n}\). We underline that such a prescription is valid for an arbitrary form of the potential and only requires that the Helmholtz operator used in Eq. (32) is computed with \(\mu = \sqrt{-2 \epsilon ^{n}}\). It is however not required that \(\epsilon ^{n}\) be the expectation value at iteration n, which allows one to start with a predefined initial guess \(\epsilon ^{0}\) at the first iteration.
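The one-electron iteration can be tried out on a simple radial grid, without any multiwavelet machinery. The sketch below is an illustration under stated assumptions and is not the notebook accompanying the paper. It treats a spherically symmetric orbital through \(u(r) = r\varphi (r)\), for which the Helmholtz operator reduces to a one-dimensional kernel \(\sinh (\mu r_<) e^{-\mu r_>}/\mu\), and it uses a plain Riemann sum for the integrals. The energy is updated with the kinetic-free expression described above, starting from a rough guess.

```python
import numpy as np

# Radial grid; u(r) = r * phi(r) for a spherically symmetric orbital
h = 0.02
r = h * np.arange(1, 1001)            # 0 < r <= 20 bohr
V = -1.0 / r                          # nuclear potential of Hydrogen
r_lo = np.minimum.outer(r, r)
r_hi = np.maximum.outer(r, r)

def apply_helmholtz(mu, f):
    """Apply (-d^2/dr^2 + mu^2)^(-1) with u(0) = 0, using the kernel
    sinh(mu r_<) exp(-mu r_>) / mu written in an overflow-safe form."""
    g = (np.exp(-mu * (r_hi - r_lo)) - np.exp(-mu * (r_hi + r_lo))) / (2.0 * mu)
    return h * (g @ f)

def dot(a, b):
    return h * np.sum(a * b)

u = r * np.exp(-0.5 * r**2)           # Gaussian starting guess
u /= np.sqrt(dot(u, u))
eps = -0.3                            # rough initial guess, not an expectation value

for iteration in range(40):
    mu = np.sqrt(-2.0 * eps)
    u_new = -2.0 * apply_helmholtz(mu, V * u)               # orbital update
    eps += dot(u_new, V * (u_new - u)) / dot(u_new, u_new)  # kinetic-free energy update
    u = u_new / np.sqrt(dot(u_new, u_new))                  # normalization

u_exact = 2.0 * r * np.exp(-r)        # analytical 1s solution
overlap = dot(u, u_exact)
print(f"energy = {eps:.6f} Ha, overlap with exact 1s = {overlap:.6f}")
```

The accuracy of this sketch is limited by the grid spacing rather than by a controlled precision threshold, which is precisely the aspect that the adaptive multiwavelet representation is designed to handle.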

5 Integral formulation for a many-electron system

The procedure described in the previous section can be extended to a many-electron system, to compute all elements of the Fock matrix defined in Eq. (25). To simplify the notation, all occupied orbitals \(\lbrace \varphi _i\rbrace\) are collected in the row-vector \(\varPhi\). Starting from the coupled HF Eq. (26), and applying the Helmholtz operator \({\hat{G}}^{\mathbf {\mu }^{n}}\):

In the above equations the operators \({\hat{T}}\) and \({\hat{V}}\) are applied on each component of \(\varPhi\). Similarly \({\hat{G}}^{\mathbf {\mu }}\) is applied on each component of the resulting vector in the square brackets, making sure the proper \(\mu _i\) is employed for each component i. By recalling the relationship between the Helmholtz kernel and the shifted kinetic energy, one obtains:

where \(\alpha = 1\) and \(\varLambda _{ij}^n = \lambda _i^n\delta _{ij}\) is a diagonal matrix, with \(\lambda _i^n = - \frac{(\mu _i^n)^2}{2}\).

Although the integral formulation above and the differential one in Eq. (26) are formally equivalent, there is an important distinction to be made. Iterating on the differential formulation corresponds to a steepest descent algorithm, whereas the integral formulation is instead a preconditioned steepest descent algorithm, with the preconditioner \(B = (T-\varLambda _{ii})^{-1}\). The integral formulation is therefore a better starting point for optimizations.
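The relation between the two forms can be made explicit in a few lines. The following is a sketch in the notation of this section, assuming \({\hat{F}} = {\hat{T}} + {\hat{V}}\) and the formal inverse \(2{\hat{G}}^{\mu _i} = ({\hat{T}} - \lambda _i)^{-1}\) applied component-wise; it is a derivation under these assumptions rather than a reproduction of the original equations.

```latex
\begin{aligned}
\hat{F}\varPhi = \varPhi F
\quad &\Longrightarrow \quad
(\hat{T} - \varLambda)\,\varPhi
  = -\left[ \hat{V}\varPhi - \varPhi\,(F - \varLambda) \right], \\
\tilde{\varPhi}^{n+1}
  &= -2\,\hat{G}^{\mu^{n}}
     \left[ \hat{V}^{n}\varPhi^{n} - \varPhi^{n}\,(F^{n} - \varLambda^{n}) \right] \\
  &= \varPhi^{n} - (\hat{T} - \varLambda^{n})^{-1}
     \left[ \hat{F}^{n}\varPhi^{n} - \varPhi^{n} F^{n} \right].
\end{aligned}
```

The bracket in the last line is the steepest descent direction of the differential formulation, here multiplied by \(({\hat{T}} - \varLambda ^n)^{-1}\) with unit step length, which is what makes the integral iteration a preconditioned steepest descent step.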

Compared to the one-electron case, a few complications arise for the HF coupled equations:

1. The Fock operator, in contrast to the one-electron Hamiltonian, depends on the electronic orbitals. Computing the Fock matrix will therefore require the update of the potential to be taken into account.

2. The electrons are described by a set of orbitals which must be kept orthonormal in order to arrive at a true Aufbau solution of the HF equations; otherwise, a straightforward iteration of Eq. (35) would bring all orbitals to the lowest eigenfunction.

There is also an arbitrariness in the choice of the parameters \(\mu _i^{n}\) defining the Helmholtz kernels. The natural choice is to make sure that the diagonal element in the last term cancels (\(\varLambda _{ii} = F_{ii}\)). Numerical tests, performed by using a fixed \(\mu\), have shown that this choice is indeed nearly optimal in terms of the number of iterations needed to converge the orbitals.

The simplest approach to keep orthonormality would be to apply Eq. (35) for each orbital followed by a Gram–Schmidt orthogonalization in order of increasing energy. This would however lead to very slow convergence, especially for valence orbitals, as the convergence of each orbital is restrained by the convergence of lower-lying orbitals, which in turn will depend on all orbitals through the potential operator \({\hat{V}}\).

Harrison et al. [12] described how to use deflation to extract multiple eigenpairs from the Fock operator by recasting the equation for each orbital as a ground state problem. Another approach suggested in the same paper is to simply diagonalize the Fock matrix at each iteration.

5.1 Calculation of Fock matrix

The starting point is a set of orthonormal orbitals \(\varPhi ^n\) and an initial guess for the corresponding Fock matrix \(F^n\approx \langle \varPhi ^n|{\hat{F}}|\varPhi ^n\rangle\). We emphasize that such a guess need not be the exact Fock matrix for the given orbital set. The new, and now exact, Fock matrix \({\tilde{F}}^{n+1}=\langle {\tilde{\varPhi }}^{n+1}|{\hat{F}}|{\tilde{\varPhi }}^{n+1}\rangle\) in the non-orthonormal basis obtained by applying Eq. (35) can be computed without any reference to the kinetic energy operator. This is in analogy to the one-electron case discussed in Sect. 4.

As for the one-electron case, the application of \(({\hat{T}} - \lambda _i^n)\) to the new orbital will return the argument from the Helmholtz operator, provided that \(\mu _i^n = \sqrt{-2\lambda _i^n}\) was used in this operator:

We can now use the above observation to eliminate the kinetic operator from the calculation of the Fock matrix:

where in the last step we have defined four updates:

Assuming that the original orbital set is orthonormal (\(S_{ij}^n=\delta _{ij}\)), then the new Fock matrix can be obtained as an update to the previous guess:

where

For the definition of the new Fock matrix to be consistent, the two-electron contributions to the potential operator at the \(n+1\) step need to be constructed using an orthonormalized version of the corresponding orbitals \({\tilde{\varPhi }}^{n+1}\), while the matrix elements themselves are evaluated in the original non-orthonormal basis. This requires a temporary set \({\bar{\varPhi }}\), constructed e.g. through a Gram–Schmidt process, so that \(\langle {\bar{\varphi }}_i|{\bar{\varphi }}_j\rangle =\delta _{ij}\). In this basis we can define the Coulomb and Exchange operators as

which are used when computing the potential updates in Eqs. (39) and (43).

We want to emphasize that these expressions are formally exact, but they require that the orbitals of the new set \({\tilde{\varPhi }}^{n+1}\) are related to the orbitals of the old set \(\varPhi ^n\) exactly through the application of the Helmholtz operator in Eq. (35). Otherwise the application of the kinetic energy operator cannot be avoided to obtain the Fock matrix.
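The key identity of this section can be checked numerically on a toy problem. The sketch below is an illustration under several assumptions. Operators are replaced by finite matrices on a one-dimensional grid, the potential is held fixed (so there is no distinction between the potential at steps \(n\) and \(n+1\)), and the Helmholtz operator is replaced by a linear solve with \(T - \lambda _i\). The update formula used here follows directly from \((T - \lambda _j){\tilde{\varphi }}_j = -a_j\), where \(a_j\) is the argument of the Helmholtz operator; it does not reproduce the four-update bookkeeping of the text.

```python
import numpy as np

# Toy 1D model on a uniform grid (assumption for illustration only)
n, box = 200, 10.0
x = np.linspace(-box, box, n)
dx = x[1] - x[0]
T = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / (2.0 * dx**2)
V = np.diag(-5.0 * np.exp(-0.5 * x**2))      # fixed attractive well

def helmholtz_step(Phi, F):
    """One application of the shifted-kinetic inverse to each orbital.
    Returns the new (non-orthonormal) orbitals, the argument A and Lambda."""
    Lam = np.diag(np.diag(F))
    A = V @ Phi - Phi @ (F - Lam)            # off-diagonal Fock terms included
    Phi_new = np.column_stack([
        -np.linalg.solve(T - Lam[i, i] * np.eye(n), A[:, i])
        for i in range(Phi.shape[1])
    ])
    return Phi_new, A, Lam

def fock_without_kinetic(Phi_new, A, Lam):
    """Fock matrix in the new basis, using T phi_j = lambda_j phi_j - a_j."""
    S = Phi_new.T @ Phi_new
    return S @ Lam - Phi_new.T @ A + Phi_new.T @ V @ Phi_new

# Two orthonormal starting orbitals and a rough (inexact) Fock matrix guess
Phi0, _ = np.linalg.qr(np.column_stack([np.exp(-x**2), x * np.exp(-x**2)]))
F0 = np.diag([-3.0, -1.5])

Phi1, A, Lam = helmholtz_step(Phi0, F0)
F_kinfree = fock_without_kinetic(Phi1, A, Lam)
F_explicit = Phi1.T @ (T + V) @ Phi1         # reference: uses T explicitly
print(np.max(np.abs(F_kinfree - F_explicit)))
```

The two matrices agree to solver precision even though `F0` is only a guess, in line with the remark that the previous Fock matrix need not be exact as long as the new orbitals are obtained exactly through the Helmholtz step.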

5.2 Calculation of energy

The Hamiltonian and the Fock operator differ only by a factor two in the two-electron contribution. The expectation value of the Hamiltonian defined in Eq. (13) can therefore be obtained by taking the trace of the Fock matrix and subtracting the two-electron contribution

which means that the kinetic energy operator can be avoided also for the expectation value.
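Written out under the spin-orbital definitions of Sect. 3, with \(F_{ii} = h_i + \sum _j (J_{ij} - K_{ij})\) and the constant nuclear repulsion \(U_N\) included in the energy, the relation can be sketched as follows. The notation is an assumption based on those definitions and may differ from the original expression.

```latex
E \;=\; \sum_i h_i + \frac{1}{2}\sum_{ij}\left(J_{ij} - K_{ij}\right) + U_N
  \;=\; \operatorname{tr}(F) - \frac{1}{2}\sum_{ij}\left(J_{ij} - K_{ij}\right) + U_N .
```

Since the trace of the Fock matrix is available from the kinetic-free update and the two-electron terms involve only the Coulomb and exchange operators, no derivative operator enters the energy evaluation.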

5.3 Orbital orthonormalization

As already mentioned, the basic iteration of the integral operators in Eq. (35) to all orbitals \(\lbrace \varphi _i\rbrace\) does not preserve orthonormality. We thus need to explicitly enforce orthonormality in each iteration, but here it is important to avoid projective approaches like the Gram–Schmidt procedure, because these will not allow us to carry over the Fock matrix to the new orbitals. Instead, we can make use of the overlap matrix \({\tilde{S}} = \langle {\tilde{\varPhi }} | {\tilde{\varPhi }} \rangle\) in a Löwdin transformation [32]

\[ \varPhi = {\tilde{\varPhi }}\, {\tilde{S}}^{-1/2} \tag{47} \]

which allows us to employ the same transformation to the Fock matrix, thus keeping it consistent with the new orthonormal orbitals.
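A minimal sketch of the Löwdin step is given below, assuming real orbitals stored as the columns of a matrix and plain dot products as inner products. The inverse square root of the overlap matrix is built from its eigendecomposition, and the same matrix is applied on both sides of the Fock matrix. The random test data and the function name are purely illustrative.

```python
import numpy as np

def lowdin(Phi_tilde, F_tilde):
    """Symmetric orthonormalization:
    Phi = Phi~ S^(-1/2),  F = S^(-1/2) F~ S^(-1/2)."""
    S = Phi_tilde.T @ Phi_tilde
    w, U = np.linalg.eigh(S)                 # S is symmetric positive definite
    S_inv_sqrt = U @ np.diag(w ** -0.5) @ U.T
    return Phi_tilde @ S_inv_sqrt, S_inv_sqrt @ F_tilde @ S_inv_sqrt

# Illustrative data: a random symmetric "Fock operator" and three orbitals
rng = np.random.default_rng(0)
H = rng.standard_normal((50, 50))
H = 0.5 * (H + H.T)
Phi_tilde = rng.standard_normal((50, 3))
F_tilde = Phi_tilde.T @ H @ Phi_tilde

Phi, F = lowdin(Phi_tilde, F_tilde)
print(np.round(Phi.T @ Phi, 12))             # identity matrix
```

The transformed Fock matrix equals the matrix elements of the operator between the orthonormalized orbitals, so it never has to be recomputed from scratch after the orthonormalization.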

In order to speed up convergence it is useful to augment the Löwdin transformation with another rotation M that brings the orbitals to a particular form. For small systems this can be a diagonalization of the Fock matrix, but for larger systems it is often beneficial to localize the orbitals, e.g. in a Foster–Boys [33, 34] transformation that maximizes the separation between the orbitals from the functional \(L_{FB} = \sum _i \langle \varphi _i | \mathbf {r} | \varphi _i \rangle ^2\). In practice it will not be necessary to diagonalize/localize in every SCF iteration, so the matrix M can be chosen as the identity for many intermediate steps. The combined transformation matrix then becomes \({\tilde{U}}={\tilde{S}}^{-1/2} M_X\), with \(X=\lbrace C,L,I\rbrace\) for canonical, localized or identity, and the new orbital vector and Fock matrix are obtained with

\[ \varPhi ^{n+1} = {\tilde{\varPhi }}^{n+1}\, {\tilde{U}}, \qquad F^{n+1} = {\tilde{U}}^{\dagger }\, {\tilde{F}}^{n+1}\, {\tilde{U}} \tag{48} \]

6 Implementation

The general algorithm for the SCF optimization for many-electron systems is summarized in Algorithm 1. At each iteration, the input is an orbital vector \(\varPhi ^n\) with the corresponding Fock matrix \(F^n\), which may or may not be diagonal. The orbitals are used to construct the full potential operator \({\hat{V}}^n\) with updated two-electron contributions. The diagonal part of the Fock matrix is extracted into another matrix \(\varLambda ^n\), and its elements are used to construct the Helmholtz operator for the corresponding orbitals. The Helmholtz operator is applied to each orbital separately, where the argument is corrected with the off-diagonal terms of the Fock matrix, in case non-canonical orbitals are used. This results in a non-orthonormal set of orbitals \({\tilde{\varPhi }}^{n+1}\), and the convergence is judged by the norm of the difference between the input and output orbitals at this point. The Fock matrix corresponding to the new set of orbitals is computed as a pure update from the previous one, as described in Sect. 5.1. It is here important to note that the updated potential operator is computed from an orthonormalized version of the new orbitals, while the matrix elements are computed using the original non-orthonormal set. The final step of the algorithm is to perform an orbital rotation with the Löwdin orthonormalization matrix, optionally combined with another transformation that brings the orbitals to either canonical or localized form, as described in Sect. 5.3.

7 Results

To illustrate the features of the framework presented in the previous sections, we present two simple examples: the Hydrogen atom as a prototype one-electron system, and the Beryllium atom as a minimal many-electron system. We have used the Multiwavelet library MRCPP [35] through its Python interface VAMPyR [36] for the implementation, and we have prepared Jupyter Notebooks [37] that reproduce all the results presented below, which can be run freely on Binder [38]. The many-electron implementation is a fully general HF solver. Its limitations are mostly outside the scope of the present work: the missing features to extend it to larger systems are the possibility to deal with open-shell systems, a robust starting guess generator, an iteration accelerator such as the Krylov Accelerated Inexact Newton (KAIN) method [39], and the computational resources, which are invariably limited on a Binder distribution. Nevertheless, these two simple examples are sufficient to highlight the main features of our implementation. Within the Multiwavelet library, all mathematical objects are represented within a given numerical precision. This means that all functions and operators are truncated accordingly in their compressed representation, i.e. Eqs. (6) and (11), and the operators are applied with bandwidth thresholds that are consistent with the target precision. It is then expected that the obtained solution is exact with respect to the complete basis set limit up to the given precision, but not beyond, even if the equations might be converged further than this.

7.1 Hydrogen atom

Fig. 1 Convergence of the Hydrogen wavefunction and energy by direct power iteration of Eqs. (32) and (33). The wavefunction is normalized between each iteration. The plots show both the size of the updates relative to the previous iteration, as well as the error with respect to the analytical solution. The overall numerical precision is kept at \(10^{-6}\)

The Hydrogen atom is a simple one-electron system, where the Schrödinger equation can be solved by straightforward power iteration of Eqs. (32) and (33), with an additional normalization step for the wavefunction after each iteration. The potential operator \({\hat{V}}\) contains only the fixed nuclear potential in this case. It should be noted, however, that even if the potential does not depend on the wavefunction, Eq. (32) must still be solved iteratively. This is in contrast to a traditional fixed-basis approach where the corresponding matrix equation can be readily inverted in a single step.

Figure 1 shows a remarkably uniform convergence of the optimization for the Hydrogen atom: the norm of the wavefunction update is almost exactly halved between each iteration, while the energy update is divided by four. This behavior is expected, since the error in the energy should be quadratic with respect to the error of the wavefunction. The initial guess for the orbital was for convenience chosen as a simple Gaussian function, which was projected onto the numerical grid before entering the iterative procedure. The underlying numerical precision is kept at \(10^{-6}\) throughout, and we do not expect the final solution to be more accurate than this, relative to the true eigenfunction. Indeed, when comparing to the known exact solution for Hydrogen we see that the error in the orbital stabilizes just below this threshold, while the energy is several orders of magnitude more precise than the set threshold.

7.2 Beryllium atom

Fig. 2 Convergence of the Beryllium orbitals and energies by iteration of Algorithm 1. The kinetic energy is computed indirectly, by subtracting all other contributions from the total energy, which in turn was computed by the kinetic-free expression in Eq. (46). \(\varDelta E_{tot}\) represents the update in total energy w.r.t. the previous iteration, while \(\varDelta E_{exact}\) represents the difference w.r.t. the Hartree–Fock limit (\(-14.57302317\) Ha) from Thakkar et al. [40]. The overall numerical precision is kept at \(10^{-6}\)

The Beryllium atom, being a closed-shell two-orbital system, requires the general many-electron HF procedure as outlined in Algorithm 1. Figure 2 shows the convergence from a simple starting guess of two linearly independent Gaussians, with just a simple Löwdin orthonormalization between each iteration, i.e., no additional orbital rotation was performed to obtain either canonical or localized orbitals, as described in Sect. 5.3.

The convergence pattern for Beryllium is very similar to Hydrogen, until the requested numerical precision is achieved; thereafter the orbital convergence tails off. In contrast to Hydrogen, the orbital mixing caused by the orthonormalization step introduces numerical noise of the order of the truncation threshold, and the orbitals are randomly perturbed in every iteration. This in turn prevents further convergence in the norm of the orbital error. The total energy, on the other hand, again shows quadratic convergence relative to the orbital errors, as illustrated in the right-hand panel of Fig. 2. However, each energy contribution separately is just linear: it is only their combined sum which exhibits quadratic convergence. It is important to note, though, that even if the total energy converges rapidly and reaches a numerical limit at around \(10^{-12}\) (around the square of the orbital error), its accuracy relative to the HF limit is still bounded by the overall numerical precision in the calculation, in this case \(\sim 10^{-6}\). The reason for this is that in the MW framework, every component is approximated according to this precision threshold, including the nuclear, Coulomb and exchange operators. We clearly see this bound when comparing the total energy with the very precise reference from Thakkar et al. [40], displayed as \(\varDelta E_{exact}\) in the figure. In practice, this means that it is not really useful to converge the orbitals and energies all the way to their numerical limits; the SCF can be considered converged whenever the energy update drops below the truncation threshold, in this case after 10–12 iterations.

8 Conclusions

We have presented an implementation of a MW-based SCF solver for the HF equations. The formalism is general and able to deal with both closed-shell and open-shell systems. The extension to DFT [41], although not considered here, is straightforward by including the exchange and correlation potential. We note, however, that for DFT, derivative operators can be avoided only for local density approximation (LDA) functionals.

We have shown how it is possible to compute the Fock matrix and the electronic energy by exploiting the formal relation between the level-shifted Laplacian and the bound-state Helmholtz kernel, thus avoiding any reference to the kinetic energy operator. This is an advantage within a MW formalism, because differential operators are formally ill-defined [14], although recent developments have shown that good results can still be achieved [26], also for the kinetic energy expectation value.
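To make the idea concrete, the following is a minimal one-dimensional, one-particle analogue, not the three-dimensional MW algorithm of the paper. It assumes a uniform grid, the one-dimensional bound-state Helmholtz kernel \(e^{-\mu |x-y|}/(2\mu)\) with \(\mu = \sqrt{-2E}\), and a model potential \(V(x) = -\mathrm{sech}^2(x)\), whose exact ground state is \(\mathrm{sech}(x)\) with energy \(-1/2\). The function name `helmholtz_scf_1d` is hypothetical. Note that only integrals involving the potential appear in the energy update.

```python
import numpy as np

def helmholtz_scf_1d(V, x, psi, energy, n_iter=30):
    """Kinetic-energy-free fixed-point iteration for one particle in 1D."""
    h = x[1] - x[0]
    dist = np.abs(x[:, None] - x[None, :])
    psi = psi / np.sqrt(h * psi @ psi)
    for _ in range(n_iter):
        if energy >= 0.0:
            raise ValueError("bound-state kernel requires a negative energy")
        mu = np.sqrt(-2.0 * energy)
        # 1D Green's function of (-d^2/dx^2 + mu^2), sampled on the grid
        G = np.exp(-mu * dist) / (2.0 * mu)
        # Integral update: psi_new = -2 G_mu [V psi]
        psi_new = -2.0 * h * G @ (V * psi)
        # Energy update using only potential-energy integrals:
        # E <- E + <psi_new| V |psi_new - psi> / <psi_new|psi_new>
        dpsi = psi_new - psi
        energy += (psi_new @ (V * dpsi)) / (psi_new @ psi_new)
        psi = psi_new / np.sqrt(h * psi_new @ psi_new)
    return energy, psi

x = np.linspace(-15.0, 15.0, 601)
V = -1.0 / np.cosh(x) ** 2
E, psi = helmholtz_scf_1d(V, x, np.exp(-x**2), energy=-1.0)
print(f"E = {E:.4f}  (exact value for this model: -0.5)")
```

The computed energy should approach \(-1/2\) up to the discretization error of the simple grid quadrature, which here plays the role that the MW truncation threshold plays in the actual implementation.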

We have shown that we are able to obtain high-precision results (basis-set limit within an arbitrary, predefined threshold), and the robust convergence pattern is consistent with the fact that the integral formulation can be viewed as a preconditioned steepest descent [29] method, in contrast to the differential formulation which is instead a steepest descent method.
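The preconditioning argument can be summarized in one line for a single orbital. The notation below is an assumption made for this sketch and may differ from the symbols used in the earlier sections: \(\hat{T} = -\tfrac{1}{2}\nabla^2\) is the kinetic operator, \(\hat{V}\) the total potential, \(\epsilon < 0\) the current orbital energy, \(\mu = \sqrt{-2\epsilon}\), and \(\hat{G}_{\mu} = (-\nabla^2 + \mu^2)^{-1}\) the bound-state Helmholtz operator, so that \((\hat{T} - \epsilon)^{-1} = 2\hat{G}_{\mu}\).

\[
\psi^{n+1} = \psi^{n} - (\hat{T} - \epsilon)^{-1} (\hat{T} + \hat{V} - \epsilon)\, \psi^{n} = -(\hat{T} - \epsilon)^{-1} \hat{V} \psi^{n} = -2\, \hat{G}_{\mu} \big[ \hat{V} \psi^{n} \big]
\]

Here \((\hat{T} + \hat{V} - \epsilon)\psi^{n}\) is the residual, which gives the descent direction, and \((\hat{T} - \epsilon)^{-1}\) acts as the preconditioner. Replacing the preconditioner by a scalar step length would give a plain steepest descent update, in which the kinetic energy operator must be applied explicitly.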

To illustrate the theoretical framework and demonstrate its applicability we detailed the algorithm and presented two simple examples (Hydrogen and Beryllium atoms), showing that the convergence achieved is consistent with the expected behavior. In particular we have seen that convergence within the predefined threshold is achieved both for the orbital norm and for the energy. Moreover the total energy converges quadratically with respect to the norm of the orbital error. Following an open science paradigm, our algorithms are made freely and readily available for inspection and testing through the Binder platform (Table 1).

References

T. Helgaker, P. Jørgensen, J. Olsen, Molecular Electronic-Structure Theory (Wiley, New York, 2008)

D. Moncrieff, S. Wilson, Computational linear dependence in molecular electronic structure calculations using universal basis sets. Int. J. Quantum Chem. 101, 363–371 (2005)

G. Kresse, J. Furthmüller, Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996)

D. Vanderbilt, Soft self-consistent pseudopotentials in a generalized eigenvalue formalism. Phys. Rev. B 41, 7892–7895 (1990)

G. Kresse, D. Joubert, From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758–1775 (1999)

D.J. Singh, L. Nordström, Planewaves, Pseudopotentials, and the LAPW Method (Springer, New York, 2006)

G.Y. Sun et al., Performance of the Vienna ab initio simulation package (VASP) in chemical applications. J. Mol. Struct. Theochem 624, 37–45 (2003)

E. Briggs, D. Sullivan, J. Bernholc, Large-scale electronic-structure calculations with multigrid acceleration. Phys. Rev. B 52, R5471–R5474 (1995)

J.E. Pask, P.A. Sterne, Finite element methods in ab initio electronic structure calculations. Mod. Simul. Mater. Sci. Eng. 13, R71 (2005)

L. Genovese et al., Daubechies wavelets as a basis set for density functional pseudopotential calculations. J. Chem. Phys. 129, 014109 (2008)

J. Pipek, S. Nagy, The kinetic energy operator in the subspaces of wavelet analysis. J. Math. Chem. 46, 261–282 (2009)

R. Harrison et al., Multiresolution quantum chemistry: basic theory and initial applications. J. Chem. Phys. 121, 11587 (2004)

T. Yanai et al., Multiresolution quantum chemistry in multiwavelet bases: Hartree-Fock exchange. J. Chem. Phys. 121, 6680 (2004)

B. Alpert et al., Adaptive solution of partial differential equations in multiwavelet bases. J. Comput. Phys. 182, 149–190 (2002)

B.K. Alpert, A class of bases in \(L^{2}\) for the sparse representation of integral operators. SIAM J. Math. Anal. 24, 246–262 (1999)

F.A. Bischoff, R.J. Harrison, E.F. Valeev, Computing many-body wave functions with guaranteed precision: the first-order Møller-Plesset wave function for the ground state of Helium atom. J. Chem. Phys. 104103 (2012)

A. Durdek et al., Adaptive order polynomial algorithm in a multiwavelet representation scheme. Appl. Num. Math. 92, 40–53 (2015)

G. Beylkin, R.J. Harrison, K.E. Jordan, Singular operators in multi-wavelet bases. IBM J. Res. Dev. 48, 161–171 (2004)

G. Beylkin, Fast adaptive algorithms in the non-standard form for multidimensional problems. Appl. Comput. Harmon. Anal. 24, 354–377 (2008)

S. Høst et al., The augmented Roothaan-Hall method for optimizing Hartree-Fock and Kohn-Sham density matrices. J. Chem. Phys. 129, 124106 (2008)

L. Frediani et al., Fully adaptive algorithms for multivariate integral equations using the non-standard form and multiwavelets with applications to the Poisson and bound-state Helmholtz kernels in three dimensions. Mol. Phys. 111, 1143–1160 (2013)

G. Beylkin, V. Cheruvu, F. Perez, Fast adaptive algorithms in the non-standard form for multidimensional problems. Appl. Comput. Harmon. Anal. 24, 354–377 (2008)

G. Beylkin, M. Mohlenkamp, Numerical operator calculus in higher dimensions. Proc. Natl. Acad. Sci. 99, 10246 (2002)

D. Gines, G. Beylkin, J. Dunn, LU factorization of non-standard forms and direct multiresolution solvers. Appl. Comput. Harmon. Anal. 5, 156–201 (1998)

S.R. Jensen et al., Linear scaling Coulomb interaction in the multiwavelet basis, a parallel implementation. Int. J. Model Simul. Sci. Comput. 05, 1441003 (2014)

J. Anderson et al., On derivatives of smooth functions represented in multiwavelet bases. J. Comput. Phys. X 4, 100033 (2019)

F.A. Bischoff, Regularizing the molecular potential in electronic structure calculations. I. SCF methods. J. Chem. Phys. 141, 184105 (2014)

F. Jensen, Introduction to Computational Chemistry (Wiley, New York, 2013)

R. Schneider et al., Direct minimization for calculating invariant subspaces in density functional computations of the electronic structure. J. Comput. Math. 27, 360–387 (2008)

P. Pulay, Convergence acceleration of iterative sequences. The case of SCF iteration. Chem. Phys. Lett. 73, 393–398 (1980)

M.H. Kalos, Monte Carlo calculations of the ground state of three-and four-body nuclei. Phys. Rev. 128, 1791 (1962)

P.-O. Löwdin, On the non-orthogonality problem connected with the use of atomic wave functions in the theory of molecules and crystals. J. Chem. Phys. 18, 365–375 (1950)

S.F. Boys, Construction of some molecular orbitals to be approximately invariant for changes from one molecule to another. Rev. Mod. Phys. 32, 296–299 (1960)

J.M. Foster, S.F. Boys, Canonical configurational interaction procedure. Rev. Mod. Phys. 32, 300–302 (1960)

R. Bast et al., MRCPP: MultiResolution computation program package (2021). https://github.com/MRChemSoft/mrcpp/tree/release/1.4, version v1.4.0

E. Battistella et al., VAMPyR: very accurate multiresolution python routines (2021). https://github.com/MRChemSoft/vampyr/tree/v0.2rc0, version v0.2rc0

M. Bjørgve, S. R. Jensen, Kinetic-energy-free algorithms for atoms. https://github.com/MRChemSoft/Kinetic-energy-free-HF

The binder project. https://mybinder.org/

R. Harrison, Krylov subspace accelerated inexact Newton method for linear and nonlinear equations. J. Comput. Chem. 25, 328–334 (2004)

T. Koga et al., Improved Roothaan-Hartree-Fock wave functions for atoms and ions with N \(\le\) 54. J. Chem. Phys. 103, 3000–3005 (1995)

R.G. Parr, W. Yang, Density-functional theory of the electronic structure of molecules. Annu. Rev. Phys. Chem. 46, 701–728 (1995)

L. Allen et al., Publishing: credit where credit is due. Nature 508, 312–313 (2014)

A. Brand et al., Beyond authorship: attribution, contribution, collaboration, and credit. Learn. Publ. 28, 151–155 (2015)

Researchers are embracing visual tools to give fair credit for work on papers, pp. 5–3 (2021). https://www.natureindex.com/news-blog/researchers-embracing-visual-tools-contribution-matrix-give-fair-credit-authors-scientific-papers

Acknowledgements

This work has been supported by the Research Council of Norway through a Centre of Excellence Grant (Grant No. 262695) and through a FRIPRO Grant (Grant No. 324590). The simulations were performed on resources provided by Sigma2 - the National Infrastructure for High Performance Computing and Data Storage in Norway (Grant No. NN4654K). LF thanks Prof. Reinhold Schneider for fruitful discussions about the challenges of the HF minimization problem.

Funding

Open access funding provided by UiT The Arctic University of Norway (incl University Hospital of North Norway).

Contributions

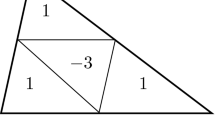

We use the CRediT taxonomy of contributor roles [42, 43]. The “Investigation” role also includes the “Methodology”, “Software”, and “Validation” roles. The “Analysis” role also includes the “Formal analysis” and “Visualization” roles. The “Funding acquisition” role also includes the “Resources” role. We visualize contributor roles in the following authorship attribution matrix, as suggested in Ref. [44].

Additional information

This article is dedicated to the memory of Prof. János Pipek. Prof. Pipek had in the latest years of his career turned his attention to Multiresolution Analysis and Wavelet methods. We honor his contribution to the field with a paper on the same subject.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jensen, S.R., Durdek, A., Bjørgve, M. et al. Kinetic energy-free Hartree–Fock equations: an integral formulation. J Math Chem 61, 343–361 (2023). https://doi.org/10.1007/s10910-022-01374-3

DOI: https://doi.org/10.1007/s10910-022-01374-3